The Age of Imaginative Machines

post by Yuli_Ban · 2021-03-18T00:35:56.212Z

A previous post of mine detailed the rise of "synthetic media" and discussed the burgeoning field in terms that now read as if from the 20th century. At the time of writing, the most advanced publicly available language model was the recently unveiled GPT-2, with its now quaint 1.5 billion parameters.

Things have changed.

Indeed, I've now begun to use synthetic media for my own endeavors, such as generating photorealistic character portraits and creating landscapes with Artbreeder. Pieces that would have cost hundreds of dollars even two years ago I can now have at no cost on my end. The only difficulty and concession is the need to fiddle with GANs to get a rough approximation, with no easy way to add defining nuances. And this is using technology that may very well be obsolete within the year.

It came to my attention that essays from later that same year, reflecting my shock at the sheer rate of change, are hidden by poor metadata and by their host websites delisting them. So I've decided to transfer one essay here, now with a few updates to reflect the changes and my further mullings since the summer of 2019.

As recently as five years ago, the concept of “creative machines” was cast off as impossible- or at the very least, improbable for decades. Indeed, the phrase remains an oxymoron in the minds of most. Perhaps they are right. Creativity implies agency and desire to create. All machines today lack their own agency. Yet we bear witness to the rise of computer programs that imagine and “dream” in ways not dissimilar to humankind.

Though lacking agency, this still meets the definition of imagination.

To reduce it to its most fundamental ingredients: Imagination = experience + abstraction + prediction. To get creativity, you need only add “drive”. Presuming that we fail to create artificial general intelligence in the next ten years (an easy thing to assume because it’s unlikely we will achieve fully generalized AI even in the next thirty), we still possess computers capable of the first three ingredients.

Someone who lives on a flat island and who has never seen a mountain before can learn to picture what one might be by using what they know of rocks and cumulonimbus clouds, making an abstract guess to cross the two, and then predicting what such a “rock cloud” might look like. This is the root of imagination.

As Descartes noted, even the strongest of imagined sensations is duller than the dullest physical one, so this image in the person’s head is only clear to them in a fleeting way. Nevertheless, it’s still there. Through great artistic skills, the person can learn to express this mental image through artistic means. In all but the most skilled, it will not be a pure 1-to-1 realization due to the fuzziness of our minds, but in the case of expressive art, it doesn’t need to be.

Computers lack this fleeting ethereality of imagination completely. Once one creates something, it can give you the uncorrupted output.

Right now, this makes for wonderful tools and apps that many of us play around with online and on our phones.

But extrapolating this to the near future brings us face to face with many heavy questions. If a computer can imagine things and the outputs can be used to create entertainment on par with and cheaper than human-created commercial art, what happens to the human-centric entertainment industry?

Stylized Exaggeration: How Cartoons Work

Exaggeration is a fundamental element of cartooning. Though some cartoons do exist that feature anatomically accurate proportions and realistic reactions, the magic of visual arts is that we are given the power to defy physical laws to create something that could only work on paper or in a computer. We call stylized exaggerations of things “caricatures.”

Newcomers to cartoons almost always use unrealistic proportions out of a lack of understanding of anatomy. Once they understand basic anatomical proportions, their art becomes more and more real to life- giving them the experience to know how to break the rules and leading to a return to wacky exaggerated anatomy, now with actual understanding of what they’re doing and why they’re doing it. Western animated cartoons, Japanese anime & manga, comic books, and more all utilize the same principles.

Thus, for machines to make their mark in this field, they need to move past vector filter effects. They’ll need to learn how to do two things:

- Exaggerate & simplify existing features

- Break down a composition to create new features

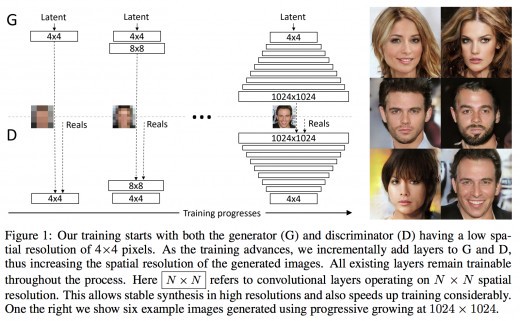

With the rise of generative adversarial networks & multimodal language models, not only are these possible but they’ve already been done.

This presents a question going forward: how will neural networks affect audiovisual arts industries as a whole once they've licked even the most absurd forms of visual media?

The answer: profoundly. So profoundly, in fact, that it is my (uneducated) hypothesis that it is in the fields of commercial animation & comics that we will first see the widespread effects of intelligent automation.

And with the rise of multimodal transformers, progress in the field of media synthesis will only accelerate. After all, human language itself is a multimodal tool. It is constructed through a constellation of experiences, without which you have the proverbial 5-year-old attempting to write a door-stopping Victorian novel.

The first instances of synthesized visual entertainment were extremely surreal. Characters tended to be distorted with body parts placed in bizarre locations, colors would bleed beyond lines, and text looked uncanny as if it were almost but not quite a language. What’s more, GANs often could not handle having two or more figures in a single image without the second appearing to be a hideous abomination. Neural networks possessed some rudimentary idea of composition, but lacked enough data and commonsense to understand it.

By 2024, refinements have yielded apps capable of generating comic panels roughly indistinguishable from hand-drawn ones. The descendant of GauGAN is routinely used by comic artists and animators to create backgrounds, while some use this app more creatively, perhaps using a frame-by-frame process to generate new effects or alternative versions of scenes, or to upscale old works. The results are astounding to most, and especially adored by studios and indie creators. With ANNs, these creators can effectively shave off large chunks of production time and save quite a bit of money. But this is also where we begin to see automation take its toll: matte painters and background stylists find much less work, and what positions are open are those where the creators absolutely need an extremely detailed style that only humans could make- movies and higher-budget productions predominantly. Certain indie creators also prefer a fully hands-on staff (and gain faithful followers because of this), but most indies simply lack the financial flexibility to choose. Hiring an artist to complete backgrounds may cost hundreds or thousands, whereas utilizing GANs is free and results in comparable quality. This pigeonholes background artists into competing for a tiny handful of jobs.

Background artists might seem to be a mundane choice to start with- but the truth is that many kinds of artists will be seeing similar diminishing prospects. Colorists are seeing vastly fewer opportunities, and editors are being replaced by neural networks that understand composition.

This all adds up, creating a crisis of doubt across the internet. With neural networks growing in capability, cartooning is seeing both a resurgence and claims that the field is losing its soul. On various social media sites, it’s easy to find rants of those laid off by studios & subcontractors as a part of a mass cost-cutting endeavor.

People with no artistic skill or talent are throwing their efforts into the field- most infamously, fanfiction is undergoing a renaissance as creators take old works and feed them into audio-visual synthesis apps. This has become a popular meme as the easily amused, the “cringe” culture obsessed, and the less politically correct watch with schadenfreudistic glee as young and inexperienced creators bring their works to life and share them on various websites and forums dedicated to showing off “amateur productions.”

Of course, anyone with knowledge of these apps and with a work in mind can do the same thing regardless of what they’ve created.

Another less-notable field that will suffer is the indie commissions market. Most art is commission-based- that is, someone contracts an artist to create a desired piece. Many freelance work boards for commission-based artists exist, while other artists operate on an ask-pay-and-you-shall-receive basis. The latter tend to command higher prices due to either being more notable or having a closer relationship with the contractor. Work boards, however, are almost always a race to the bottom in terms of price-for-content. Freelancers often have to lower their asking rates just to find work in a competitive field, and many of those freelancers are newcomers whose skills need to be professional-tier just to be noticed.

If one doesn’t want to pay for high-quality commissioned art (where basic black-and-white character busts are the cheapest option and often start at $40 and get exponentially more expensive from there), the only other option has historically been character creation tools on the Internet.

Character creation programs have existed for as long as it has been possible to display interactive images online and were always simplified tools that tended towards being “dress up a pre-made avatar”. Dress-up flash games were a dime a dozen, and each individual one was thematic with few clothing options or available poses, let alone varying body types or backgrounds. As they were and still are free, the art assets were almost always of a lower quality than what you can find from a commissioned artist. Those who wanted a more robust character creation engine turned to equally thematic paid games, such as MMOs and RPGs, which required one to play through for hours just to buy & unlock more options.

In come artificial neural networks, which contractors increasingly use to bypass the cost of paying freelancers. This is largely due to the rise of “deep learning create-a-character” programs. By adding customizable parameters to image synthesis models, one can use them to achieve virtually unlimited creative flexibility. ANNs can generate art in any art style, with any amount of exaggeration, in any pose, without being limited by pre-made assets so long as they have massive training sets. Some of the older programs are rough around the edges, but the high-end models can create art on par with any commissioned artist.

Naturally, this leads to quite a lot of havoc online as freelance artists find work drying up while already contracted artists may be let go. And considering how large the field for visual art is- ranging from designing logos for mundane items such as toothpaste tubes & portable bathrooms to designing characters for movies to drawing designs for buildings to illustrating book covers & adverts and so, so much more- this means much of this job market has been eviscerated on very short notice. Online publications watch on with boggled eyes as automation runs through the field of creatives they had said for decades would be last on the chopping block (if it ever came at all). Entire art schools are cutting themselves back and students going for degrees in art are increasingly anxious over their rapidly dimming prospects. All because of algorithms that can instantly generate designs.

While some of the designing process is done via a more meticulous process similar to a much more automated version of Photoshop, there’s another method people use to generate images and short videos that automates the process even further: text-to-image.

Text-to-image synthesis (TTIS) remains somewhat scattershot for the most specific scenes, but for those who are less auteur, this technology represents the greatest leap forward in art since the advent of photography.

Input text into the ANN and you’ll get an image out of it. The more specific the text, the more accurate the final image- of course, these ANNs require vast training data sets in order to understand image composition in the first place, and if you input a word describing something that wasn’t in the training data, the ANN will ignore the request. For some, that makes TTIS programs weak or limited. Yet as the deep learning researchers themselves have pointed out, there is an obvious work-around- create that reference yourself and use it as training data going forward.
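As a rough illustration of the behavior just described, here is a toy sketch in Python. It is not a real text-to-image model: `ToyPromptEncoder`, its vocabulary, and its methods are hypothetical names invented for this example. It only mimics the bookkeeping idea that words absent from the training data get dropped from the prompt, and that supplying a reference for the missing concept makes the word usable afterwards.

```python
class ToyPromptEncoder:
    """Hypothetical stand-in for the text side of a text-to-image system."""

    def __init__(self, known_concepts):
        # Concepts the imaginary model saw during training.
        self.known_concepts = {c.lower() for c in known_concepts}

    def encode(self, prompt):
        """Split a prompt into (used, ignored) word lists."""
        used, ignored = [], []
        for word in prompt.lower().split():
            if word in self.known_concepts:
                used.append(word)
            else:
                ignored.append(word)
        return used, ignored

    def add_reference(self, concept):
        """Mimic the work-around: supply a reference for a new concept."""
        self.known_concepts.add(concept.lower())


encoder = ToyPromptEncoder(["purple", "car", "mountain", "cloud"])

# The unknown word is silently dropped from the request.
print(encoder.encode("purple jabberwocky car"))

# After a reference is added, the same prompt is fully understood.
encoder.add_reference("jabberwocky")
print(encoder.encode("purple jabberwocky car"))
```

A real system would presumably work on learned embeddings rather than a word list, so treat this purely as a picture of the "ignored request" and "add your own reference" steps.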

Modern ANNs, though they are powered by terabytes and petabytes of data for structure, are also capable of “one-shot learning”, that is, learning a new concept from a single example. If you input a single image of a jabberwocky into a network trained on real animals, the network will be able to retrofit the creature into its data set, breaking it down and predicting what it might look like from different angles or with different textures- adding more images naturally increases the accuracy, but it can make do with what it has.

Couple this with TTIS programs and the power of non-artists to bring their imaginations to life becomes unprecedented.

TTIS programs also do not require one to be a masterful writer. Though descriptive passages yield more accurate results (e.g. “cream and mauve Ford Pinto” vs. “white and purple car”), young children could use these programs- and often do as part of school classes in order to learn how to recognize symbols and the relationship between words & images.

Yet as exciting as TTIS programs are, they are said to herald the next generation of media synthesis: “text-to-video synthesis” (TTVS). With these, one can describe a scene and receive a fully animated output, typically upwards of 25 to 30 seconds in length. By combining video synthesis with style transfer and cartoon exaggeration, creators can effectively conjure cartoons of virtually any style.

The most powerful networks can generate outputs upwards of a minute long with narrative coherence across it all, but they remain in laboratories and on GitHub.

Animators closely watch the incoming tsunami of change, knowing they still have a little time left but also understanding that things will only grow more insane moving forward from here.

Machines can just as easily create entertaining pieces in 3D- in fact, researchers even claim it’s easier to do so as these networks can work with existing graphical & procedural generation engines.

And with machines capable of intelligent procedural modeling, the possibility of “automated video game generation” hasn’t escaped people’s imagination. 2024 is too soon for this to become a standard- intelligent procedural modeling remains a bleeding edge tool more utilized by indie developers, though some have developed autonomous agents that can be tasked with putting together rudimentary games and scenes in engines such as Unity & Blender.

Indeed, autonomous agent-directed media synthesis is put to its greatest use in generating animated shorts that, to the casual observer, look on par with a Disney Pixar film (though a more critical eye will find many shortcomings). Coupled with audio synthesis programs, this gives a plebeian dreamer the capability of creating an amazing experience in their bedroom on a store-bought laptop, so long as they have a few hours to kill while waiting for a scene to render. Most magically, this suggests that in the near future, such dreamers will be able to type a description of a story into a text box and get a full-fledged movie as an output. Barring current examples, predictions on when this will become commonplace range from “in a few years” all the way to “never”- the latter voices primarily being skeptics who perennially believe artificial intelligence has gone as far as it ever will, the former being those who believe the Technological Singularity is months away. The average estimate, however, puts the “bedroom franchise” era in the early to mid 2030s.

One added benefit of machine learning generated images is that this method is able to jump the gap separating us from the ever-elusive “total photorealism” long sought in video game graphics. As graphical fidelity has gotten higher over the decades, so have production costs. This has been a root cause for why AAA games have felt more like interactive movies- due to costs being so high, studios and producers absolutely must turn a profit. This resulted in studios taking the safest possible route in development, and one of the historically safest choices has been to make a game visually stunning. Visuals cost money and take up resources that can’t be used elsewhere. Many now forgotten or hated AAA titles would have likely become more highly regarded had their development teams put less focus on graphics, but this is a double-edged sword as gamers do react negatively to subpar graphical quality. And yet despite our march towards better and better graphics, video games have not yet achieved true photorealism. Video game mods that do bring fidelity to an extreme point often lack the other half of making CG look realistic- physics. If a video game looks indistinguishable from real life until everything starts moving, it will still be considered sub-realistic.

In the age of imaginative machines, some of the work has been automated. Machine learning has been used to retroactively upscale retro games- 3D & pseudo-3D titles as far back as the late 1980s can be run through these networks and come out looking just as good as any contemporary game. But again, the illusion of upscaled graphics is ruined when older games move. DOOM and DOOM II have been put through neural networks and visually enhanced to the level of a 9th gen console game, but animations are still very rough and jerky with only passable attempts at translating pixel animation to full polygonal motion. Likewise, building a video game from the ground up with neural networks is not an easy task and still requires many asset designers & programmers.

Cartoon-a-Trons

I’ll end with an anecdote. When I was 10 years old way back in 2005, I erroneously believed that cartoon synthesizing programs already existed and tried looking them up online with the intent of making my own little show. In logic one could only find in a child, I sincerely believed that people wrote stories into a text document and received finished products out of it because I had absolutely no idea of just how labor intensive the process actually was as I’d never seen how cartoons were created. They were simply 22-minute brightly colored fantasies that magically appeared on TV. Surely it was just a matter of finding and downloading the right program!

I failed.

And I tried again a few years later, this time believing a slightly more grounded fantasy that there were programs for sale that could generate cartoons, after seeing an ‘Anime Studios’ product in a local Walmart. I bought that disc and, on the car ride home, hoped that it had the voices I wanted to use for my new show. Once I installed the program and realized that I’d be doing the vast bulk of the work, I decided to formally research just what went into making a TV-ready cartoon. My ambitions of becoming an “animator” (perhaps better described as a “cartoon creator” considering my intentions) were forever dashed.

How surreal it is to know that my long-lost dream may actually be on the verge of coming true- if at the cost of a number of industries that employ millions.

This little post represents scarcely 1% of the true breadth of possibilities afforded by AI-generated media and focuses almost solely on the visual. Perhaps at a future date, I'll dedicate a post to things such as audio synthesis and the vast rainbow of possibilities there, as well as things like a perpetual-movie maker, the breakdown of the entertainment industry and literary tradition, synthetic reality bubbles, and my favorite contemplative possibility of all: bedroom multimedia franchises and the coming ability for anybody with a PC to synthesize something on par with the MCU, Marvel Comics, and related video games and soundtracks entirely with AI.

comment by MSRayne · 2021-03-18T02:19:09.917Z

This will be great for me, because I have tons of ideas but suck at art. In fact, I hope I'll be able to be one of the people who makes all this possible. I've always wanted to dedicate my life to creating virtual worlds better than the real one, after all. (And eventually, uploading as many people and other sentient beings as possible into them, and replacing the real world altogether with an engineered paradise.)