What "The Message" Was For Me

post by Alex Beyman (alexbeyman) · 2022-10-11T08:08:11.673Z · LW · GW · 14 comments

Warning: If you have low tolerance for analogies or poetic language, skip to the numbered chain of dependencies for a more straightforward rundown of the reasoning presented herein.

It's been said that when you get the message, hang up the phone. What is "the message" though? I can't dictate what it is for everybody. This was just my biggest "aha moment" in recent years.

The most commonly expressed version of this idea I have seen, by analogy, is that living beings are like the countless little eyes which make up a compound eye. Each sees only a narrow, limited perspective of the world. The "big picture", which only the eye as a whole can see, is all of those perspectives combined.

You could also express it as similar to the way in which a picture on a monitor is made up of many little differently colored pixels. There doesn't appear to be any rhyme or reason to it until you zoom out far enough.

In the same way, a human being viewed up close is actually trillions(!) of tiny individual organisms, none of which know they are part of a much larger creature. These are all expressions of the same basic idea: that we're all part of the same thing, connected in ways that are not immediately obvious until viewed on a grand scale, and that separation is to some extent an illusion.

I too felt the apparent self-evidence of this idea, but my brain is wired such that it is unsatisfied with that sort of just-so answer. I want complete explanations, diagrams with every little part clearly labeled. I want to know how it works. So I kept chugging away at it until this occurred to me:

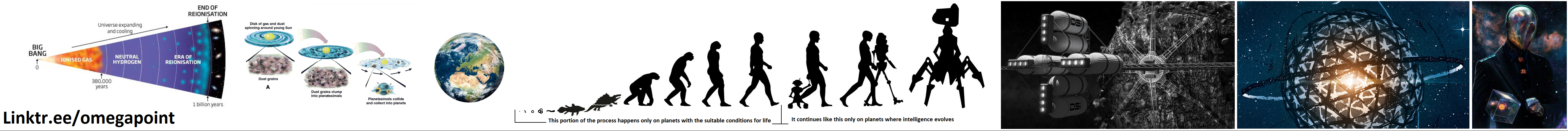

1. In biological evolution, simple chemical self-replicators gave rise to intelligent organisms such as ourselves. We know this to be true with quite a lot of certainty.

2. Humans will eventually develop self-replicating machines. This is a plausible assumption given present technological trends toward automation, and it probably occurs on any planet where intelligent life evolves (provided that life doesn't destroy itself first).

3. Since self-replicating machines will reproduce the conditions that started biological evolution (simple replicators), we have reason to believe the same thing will happen, resulting in the evolution of machine intelligence (even if we do not make them intelligent to begin with).

4. These machine intelligences will assume dominion over their environment (which at their technological level will likely be anything reachable by spaceflight) just as humans, the previously evolved intelligent beings, asserted dominion over the earth. This is a reasonable expectation based on extrapolation of forces we have observed to produce this outcome already.

5. Self-replicating machine intelligence, wherever and however it originates, will set about converting all accessible matter in the universe into computational substrate, as that is the equivalent of habitable living space for intelligent machines (as opposed to, say, a Dyson sphere or O'Neill cylinder).

6. Finally, once the entire universe is converted into a single vast thinking machine, the universe may link to other universes (the few which arrived at the same outcome due to having similar laws to our own) to form a network of neuron analogs: the neural network some consider the supreme being.
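The third link in the chain (imperfect replicators under resource limits tending to improve over generations) can be illustrated with a toy simulation. This is only a minimal sketch under invented assumptions: the function name `simulate`, the "copy efficiency" number, the two-copies-per-generation rule, the mutation size, and the fixed carrying capacity are all hypothetical choices made for illustration, not a model of any real machine or organism.

```python
import random


def simulate(mutation_rate, generations=200, capacity=100, seed=0):
    """Toy replicator model: return mean copy efficiency after the run.

    All names and numbers are hypothetical illustrations.
    """
    rng = random.Random(seed)
    # Every replicator starts with the same copy efficiency.
    population = [1.0] * capacity
    for _ in range(generations):
        offspring = []
        for efficiency in population:
            # Each replicator makes two copies per generation.
            for _ in range(2):
                child = efficiency
                if rng.random() < mutation_rate:
                    # A copying error nudges efficiency up or down.
                    child = max(0.0, child + rng.gauss(0.0, 0.05))
                offspring.append(child)
        # Limited resources: only the most efficient copies persist.
        offspring.sort(reverse=True)
        population = offspring[:capacity]
    return sum(population) / len(population)


faithful = simulate(mutation_rate=0.0)
error_prone = simulate(mutation_rate=0.01)
print(f"faithful copying:    {faithful:.3f}")
print(f"error-prone copying: {error_prone:.3f}")
```

With a mutation rate of zero nothing ever changes, so the mean efficiency stays at its starting value. With even a small error rate, the occasional helpful copying error is kept by selection and spreads. The sketch says nothing about whether real self-replicating machines would copy imperfectly; it only shows what follows if they do.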

Even though individual universes eventually run out of energy (heat death), this does not kill the larger organism described here for the same reason that individual cells in your brain can die and be replaced by new ones over time without interrupting your continuous experience of consciousness.

If you subtract labels like "robot", "biological", "technological" and so on from it, what's described there is just a tendency for the matter supplied by the big bang to undergo a process driven by the energy it also supplied, gradually self-organizing into intelligence (like humans, most recently).

Note that essentially the same outcome occurs no matter what. For example, if self replicating machines or strong AI are impossible, then instead the matter of the universe is converted into space colonies with biological creatures like us inside, closely networked. "Self replicating intelligent matter" in some form, be it biology, machines or something we haven't seen yet. Many paths, but to the same destination.

Humans die out? No problem, plenty of other intelligent life has already arisen before and will arise after us, like how it's no big deal if any given sperm fails to reach the egg since there's plenty more where that came from. And so on and so forth. It will seem highly speculative until you exhaustively try to find a way for it not to turn out as described. Then you will discover that all roads lead to Rome, as it were.

Note also that nothing described here is supernatural. Just matter and energy obeying the laws of physics. This concept does not contradict any of what science has so far revealed, but instead relies upon and extrapolates from it. It is in fact descended from the same lines of evidence and reasoning which commonly lead people to atheism, just thought through further than usual.

I don't consider this knowledge necessary. It's entirely possible to live a fulfilled life without it. I chopped wood and carried water before and still do. But I appreciate it because at the time I was feeling lost, and anxious about the future, worried that perhaps it was all up to humanity to spread life/intelligence (of some kind, be it biology or machinery) into space and perhaps we'd fail, destroying ourselves before we could accomplish that or whatever. Now I realize that while that's what we're here to do, it's not the end of the world if we fail.

There's a lot more to it; this concept intersects very neatly with the probabilistic argument for simulationism and a bunch of other related concepts. Incidentally I believe this to be the same thing Terence McKenna dubbed the "transcendental object at the end of time" and which John C. Lilly named the "solid state intelligence". But that's a topic for another article. I figure it's enough to present the basic core concept to you fine fellows for the time being.

BBC: What if the Aliens We're Looking For are AI?

Kurzgesagt: Civilization Scale

Popular Mechanics: Why Superintelligent Machines Are Probably the Dominant Lifeforms in the Universe

CBS: If Aliens Arrive, Expect Robots

Universe Today: Is Our Universe Ruled by Artificial Intelligence?

Nautil.us: Why Alien Life Will Be Robotic

14 comments


comment by mu_(negative) · 2022-10-13T04:13:47.025Z · LW(p) · GW(p)

While I may or may not agree with your more fantastical conclusions, I don't understand the downvotes. The analogy between biological, neural, and AI systems is not new but is well presented. I particularly enjoyed the analogy that computronium is "habitable space" to AI. Minus the steps that break physics as we know it, which are polemic and not crucial to the argument's point, I'd call on downvoters to be explicit about what they disagree with or find unhelpful.

Speculatively, perhaps at least some find the presentation of AI as the "next stage of evolution" infohazardous. I'd disagree. I think it should start discussion along the lines of "what we mean by alignment". What's the end state for a human society with "aligned" AI? It probably looks pretty alien to our present society. It probably tends towards deep machine mediated communication blurring the lines between individuals. I think it's valuable to envision these futures.

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-13T08:03:27.036Z · LW(p) · GW(p)

I appreciate your insightful post. We seem similar in our thinking up to a point. Where we diverge is that I am not prejudicial about what form intelligence takes. I care that it is conscious, insofar as we can test for such a thing. I care that it lacks none of our capacities, so that what we offer the universe does not perish along with us. But I do not care that it be humans, specifically, and feel there are carriers of intelligence far more suited to the vacuum of space than we are, or even cyborgs. Does the notion of being superseded disturb you?

Replies from: mu_(negative)↑ comment by mu_(negative) · 2022-10-13T15:26:10.430Z · LW(p) · GW(p)

Yes, the notion of being superseded does disturb me. Not in principle, but pragmatically. I read your point, broadly, to be that there are a lot of interesting potential non-depressing outcomes to AI, up to advocating for a level of comfort with the idea of getting replaced by something "better" and bigger than ourselves. I generally agree with this! However, I'm less sanguine than you that AI will "replicate" to evolve consciousness that leads to one of these non-depressing outcomes. There's no guarantee we get to be subsumed, cyborged, or even superseded. The default outcome is that we get erased by an unconscious machine that tiles the universe with smiley faces and keeps that as its value function until heat death. Or it's at least a very plausible outcome we need to react to. So caring about the points you noted you care about, in my view, translates to caring about alignment and control.

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-14T05:59:20.826Z · LW(p) · GW(p)

Fair point. But then, our most distant ancestor was a mindless maximizer of sorts with the only value function of making copies of itself. It did indeed saturate the oceans with those copies. But the story didn't end there, or there would be nobody to write this.

comment by TAG · 2022-10-12T19:29:14.733Z · LW(p) · GW(p)

> the universe may link to other universes

How?

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-12T21:48:18.678Z · LW(p) · GW(p)

Is it reasonable to expect that every future technology be comprehensible to the minds of human beings alive today, otherwise it's impossible? I realize this sounds awfully convenient/magic-like, but is there not a long track record in technological development of feats once believed impossible becoming possible as our understanding improves? A famous example is the advent of spectrometry, which made it possible to determine the composition of stars and of the atmospheres of distant planets:

"In his 1842 book The Positive Philosophy, the French philosopher Auguste Comte wrote of the stars: “We can never learn their internal constitution, nor, in regard to some of them, how heat is absorbed by their atmosphere.” In a similar vein, he said of the planets: “We can never know anything of their chemical or mineralogical structure; and, much less, that of organized beings living on their surface.”

Comte’s argument was that the stars and planets are so far away as to be beyond the limits of everything but our sense of sight and geometry. He reasoned that, while we could work out their distance, their motion and their mass, nothing more could realistically be discerned. There was certainly no way to chemically analyse them.

Ironically, the discovery that would prove Comte wrong had already been made. In the early 19th century, William Hyde Wollaston and Joseph von Fraunhofer independently discovered that the spectrum of the Sun contained a great many dark lines.

By 1859 these had been shown to be atomic absorption lines. Each chemical element present in the Sun could be identified by analysing this pattern of lines, making it possible to discover just what a star is made of."

https://www.newscientist.com/article/dn13556-10-impossibilities-conquered-by-science/

↑ comment by TAG · 2022-10-12T22:00:36.439Z · LW(p) · GW(p)

> Is it reasonable to expect that every future technology be comprehensible to the minds of human beings alive today, otherwise it’s impossible?

No, but it's also not reasonable to privilege a hypothesis.

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-12T23:41:12.476Z · LW(p) · GW(p)

This feels like an issue of framing. It is not contentious on this site to propose that AI which exceeds human intelligence will be able to produce technologies beyond our understanding and ability to develop on our own, even though that proposal expresses the same idea.

Replies from: TAG↑ comment by TAG · 2022-10-13T00:10:53.017Z · LW(p) · GW(p)

Then why limit things to light cones?

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-13T00:37:30.725Z · LW(p) · GW(p)

Conservatism, just not absolute.

comment by IrenicTruth · 2022-10-12T13:25:33.263Z · LW(p) · GW(p)

A lot of the steps in your chain are tenuous. For example, if I were making replicators, I'd ensure they were faithful replicators (not that hard from an engineering standpoint). Making faithful replicators negates step 3.

(Note: I won't respond to anything you write here. I have too many things to respond to right now. But I saw the negative vote total and no comments, a situation I'd find frustrating if I were in it, so I wanted to give you some idea of what someone might disagree with/consider sloppy/wish they hadn't spent their time reading.)

Replies from: mu_(negative), alexbeyman↑ comment by mu_(negative) · 2022-10-13T04:21:07.680Z · LW(p) · GW(p)

"For example, if I were making replicators, I'd ensure they were faithful replicators "

Isn't this the whole danger of unaligned AI? It's intelligent, it "replicates" and it doesn't do what you want.

Besides physics-breaking 6, I think the only tenuous link in the chain is 5: that AI ("replicators") will want to convert everything to computronium. But that seems like at least a plausible value function, right? That's basically what we are trying to do. It's either that or paperclips, I'd expect.

(Note: I applaud your commenting to explain the downvote.)

Replies from: alexbeyman↑ comment by Alex Beyman (alexbeyman) · 2022-10-13T08:00:18.913Z · LW(p) · GW(p)

Well put! While you're of course right in your implication that conventional "AI as we know it" would not necessarily "desire" anything, an evolved machine species would. Evolution would select for a survival instinct in them as it did in us. All of our activities which, as you observe, fall along those same lines are driven by instincts programmed into us by evolution, which we should expect to be common to all products of evolution. I speculate a strong AI trained on human connectomes would also have this quality, for the same reasons.

↑ comment by Alex Beyman (alexbeyman) · 2022-10-12T21:51:44.779Z · LW(p) · GW(p)

>"A lot of the steps in your chain are tenuous. For example, if I were making replicators, I'd ensure they were faithful replicators (not that hard from an engineering standpoint). Making faithful replicators negates step 3."

This assumes three things: First, the continued use of deterministic computing into the indefinite future. Quantum computing, though effectively deterministic, would also increase the opportunity for copying errors because of the added difficulty in extracting the result. Second, you assume that the mechanism which ensures faithful copies could not, itself, be disabled by radiation. Third, that nobody would intentionally create robotic evolvers which not only do not prevent mutations, but intentionally introduce them.

The article also addresses the possibility that strong AI itself, or self replicating robots, are impossible (or not evolvable) when it talks about a future universe saturated instead with space colonies:

"if self replicating machines or strong AI are impossible, then instead the matter of the universe is converted into space colonies with biological creatures like us inside, closely networked. "Self replicating intelligent matter" in some form, be it biology, machines or something we haven't seen yet. Many paths, but to the same destination."

>"But I saw the negative vote total and no comments, a situation I'd find frustrating if I were in it,"

I appreciate the consideration but assure you that I feel no kind of way about it. I expect that response as it's also how I responded when first exposed to ideas along these lines, mistrusting any conclusion so grandiose that I did not put together on my own. LessWrong is a haven for people with that mindset which is why I feel comfortable here and why I am not surprised, disappointed or offended that they would also reject a conclusion like this at first blush, only coming around to it months or years later, upon doing the internal legwork themselves.