Subsystem Alignment

post by abramdemski, Scott Garrabrant · 2018-11-06T16:16:45.656Z · LW · GW · 12 comments

(The bibliography for the whole sequence can be found here)

12 comments

Comments sorted by top scores.

comment by Rohin Shah (rohinmshah) · 2018-11-07T00:56:24.666Z · LW(p) · GW(p)

The first part of this post seems to rest upon an assumption that any subagents will have long-term goals that they are trying to optimize, which can cause competition between subagents. It seems possible to instead pursue subgoals under a limited amount of time, or using a restricted action space, or using only "normal" strategies. When I write a post, I certainly am treating it as a subgoal -- I don't typically think about how the post contributes to my overall goals while writing it, I just aim to write a good post. Yet I don't recheck every word or compress each sentence to be maximally informative. Perhaps this is because that would be a new strategy I haven't used before and so I evaluate it with my overall goals, instead of just the "good post" goal, or perhaps it's because my goal also has time constraints embedded in it, or something else, but in any case it seems wrong to think of post-writing-Rohin as optimizing long term preferences for writing as good a post as possible.

This agent design treats the system’s epistemic and instrumental subsystems as discrete agents with goals of their own, which is not particularly realistic.

Nitpick: I think the bigger issue is that the epistemic subsystem doesn't get to observe the actions that the agent is taking. That's the easiest way to distinguish delusion box behavior from good behavior. (I call this a nitpick because this isn't about the general point that if you have multiple subagents with different goals, they may compete.)

comment by Pattern · 2018-11-07T05:45:08.624Z · LW(p) · GW(p)

How could a (relatively) 'too-strong' epistemic subsystem be a bad thing?

Replies from: Scott Garrabrant, Davidmanheim

↑ comment by Scott Garrabrant · 2018-11-07T18:52:53.598Z · LW(p) · GW(p)

So if we view an epistemic subsystem as a superintelligent agent that has control over the map and has the goal of making the map match the territory, one extreme failure mode is that it takes a hit to short-term accuracy by slightly modifying the map in such a way as to trick the things looking at the map into giving the epistemic subsystem more control. Then, once it has more control, it can use it to manipulate the territory to make the territory more predictable. If your goal is to minimize surprise, you should destroy all the surprising things.

Note that we would not make an epistemic system this way. A more realistic model of the goal of an epistemic system we would build is "make the map match the territory better than any other map in a given class," or even "make the map match the territory better than any small modification to the map." But a large point of the section is that if you search for strategies that "make the map match the territory better than any other map in a given class," at small scales this is the same as "make the map match the territory." So you might find "make the map match the territory" optimizers, and then go wrong in the way above.

I think all this is pretty unrealistic, and I expect you are much more likely to go off in a random direction than to end up with a system in which a specific subsystem the programmers put in gets too much power and stably optimizes for what the programmers said. We would need to understand a lot more before we would even hit the failure mode of making a system where the epistemic subsystem was agentically optimizing what it was supposed to be optimizing.

↑ comment by Davidmanheim · 2018-11-07T07:21:23.176Z · LW(p) · GW(p)

It's easy to find ways of searching for truth that harm the instrumental goal.

Example 1: I'm a self-driving car AI, and don't know whether hitting pedestrians at 35 MPH is somewhat bad, because it injures them, or very bad, because it kills them. I should not gather data to update my estimate.

Example 2: I'm a medical AI. Repeatedly trying a potential treatment that I am highly uncertain about the effects of to get high-confidence estimates isn't optimal. I should be trying to maximize something other than knowledge. Even though I need to know whether treatments work, I should balance the risks and benefits of trying them.

comment by nostalgebraist · 2018-11-07T17:02:06.988Z · LW(p) · GW(p)

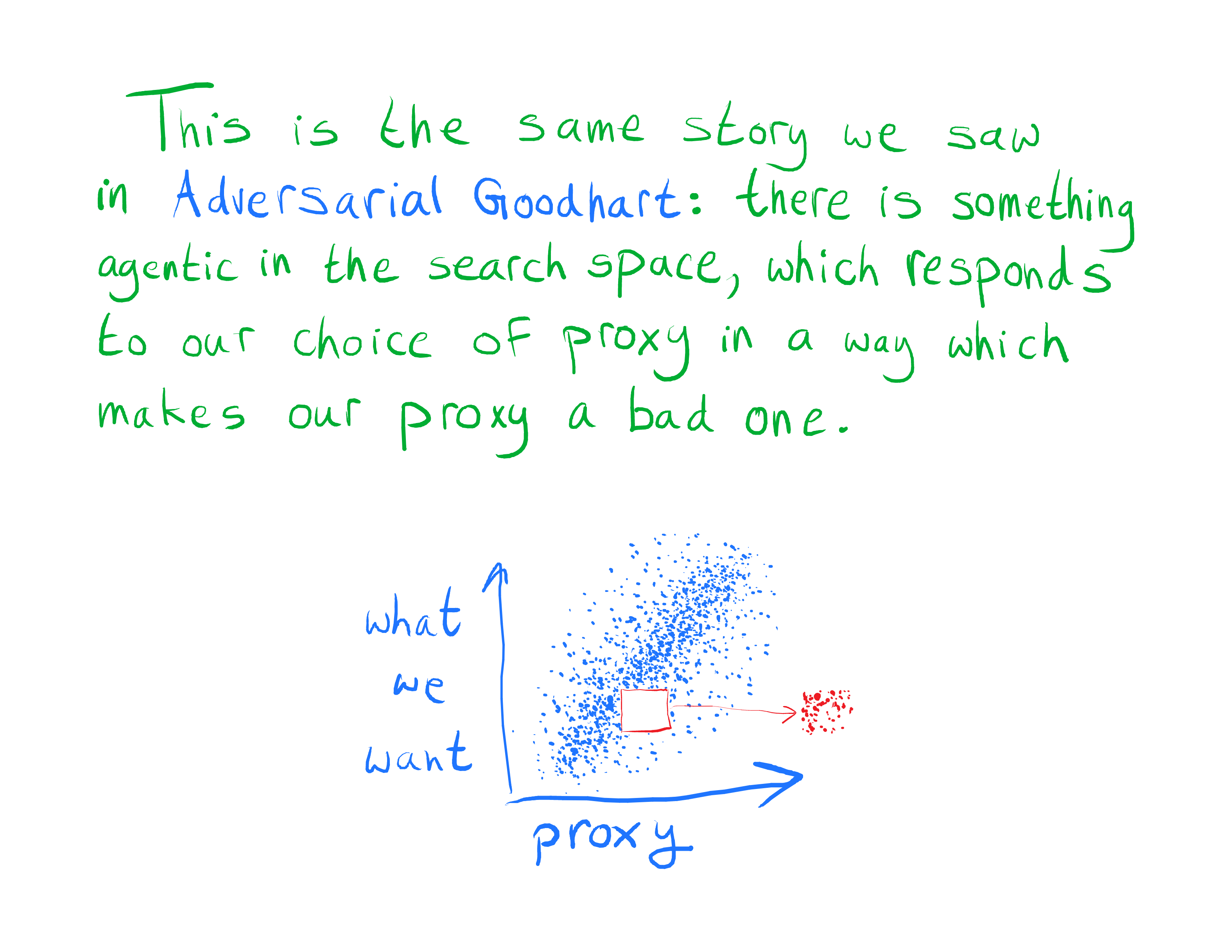

Does "subsystem alignment" cover every instance of a Goodhart problem in agent design, or just a special class of problems that arises when the sub-systems are sufficiently intelligent?

As stated, that's a purely semantic question, but I'm concerned with a more-than-semantic issue here. When we're talking about all Goodhart problems in agent design, we're talking about a class of problems that already comes up in all sorts of practical engineering, and which can be satisfactorily handled in many real cases without needing any philosophical advances. When I make ML models at work, I worry about overfitting and about misalignments between the loss function and my true goals, but it's usually easy to place bounds on how much trouble these things can cause. Unlike humans interacting with "evolution," my models don't live in a messy physical world with porous boundaries; they can only control their output channel, and it's easy to place safety restrictions on the output of that channel, outside the model. This is like "boxing the AI," but my "AI" is so dumb that this is clearly safe. (We could get even clearer examples by looking at non-ML engineers building components that no one would call AI.)

Now, once the subsystem is "intelligent enough," maybe we have something like a boxed AGI, with the usual boxed AGI worries. But it doesn't seem obvious to me that "the usual boxed AGI worries" have to carry over to this case. Making a subsystem strikes me as a more favorable case for "tool AI" arguments than making something with a direct interface to physical reality, since you have more control over what the output channel does and does not influence, and the task may be achievable even with a very limited input channel. (As an example, one of the ML models I work on has an output channel that just looks like "show a subset of these things to the user"; if you replaced it with a literal superhuman AGI, but kept the output channel the same, not much could go wrong. This isn't the kind of output channel we'd expect to hook up to a real AGI, but that's my point: sometimes what you want out of your subsystem just isn't rich enough to make boxing fail, and maybe that's enough.)
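The "restricted output channel" argument above can be sketched in code. This is only an illustration under stated assumptions: `restricted_output`, `misbehaving_model`, and the `max_items` limit are hypothetical names, not anything from the comment or a real system. The point it shows is that the contract ("show a subset of these things to the user") is enforced outside the model, so it holds no matter what the model returns.

```python
from typing import Callable, Iterable, List, Sequence


def restricted_output(
    model: Callable[[List[str]], Iterable[str]],
    candidates: Sequence[str],
    max_items: int,
) -> List[str]:
    """Run an untrusted model, then enforce the output contract outside it.

    Hypothetical wrapper: whatever the model returns, the caller only ever
    sees a duplicate-free subset of `candidates`, at most `max_items` long.
    """
    allowed = set(candidates)
    shown: List[str] = []
    for item in model(list(candidates)):
        if len(shown) >= max_items:
            break
        # Drop anything that is not one of the offered candidates,
        # and anything already selected.
        if item in allowed and item not in shown:
            shown.append(item)
    return shown


def misbehaving_model(items: List[str]) -> List[str]:
    # Stand-in for an arbitrarily capable subsystem that tries to emit
    # things outside its channel.
    return ["rm -rf /", items[0], items[0], "unknown", items[2]]


print(restricted_output(misbehaving_model, ["a", "b", "c"], max_items=2))
# prints ['a', 'c']
```

The wrapper says nothing about which subset is chosen, so a misaligned model can still choose badly within the channel; the sketch only bounds the form of the output.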

Replies from: Davidmanheim

↑ comment by Davidmanheim · 2018-11-08T09:22:53.354Z · LW(p) · GW(p)

...we're talking about a class of problems that already comes up in all sorts of practical engineering, and which can be satisfactorily handled in many real cases without needing any philosophical advances.

The explicit assumption of the discussion here is that we can't pass the full objective function to the subsystem - so it cannot possibly have the goal fully well defined. This isn't going to depend on whether the subsystem is really smart or really dumb, it's a fundamental problem if you can't tell the subsystem enough to solve it.

But I don't think that's a fair characterization of most Goodhart-like problems, even in the limited practical case. Bad models and causal mistakes don't get mitigated unless we get the correct model. And adversarial Goodhart is much worse than that. I agree that it describes "tails diverge" / regressional Goodhart, and we have solutions for that case (compute the Bayes estimate, as the previous ) but only once the goal is well-defined. (We have mitigations for other cases, but they have their own drawbacks.)
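As a rough illustration of the regressional case and the Bayes-estimate fix, here is a minimal sketch. It assumes a normal-normal model (true value V drawn from a normal prior, proxy U = V + independent normal noise), which is an assumption made for the example and not something the comment specifies. The function names and parameters are hypothetical.

```python
import random
from typing import Tuple


def bayes_estimate(proxy: float, prior_mean: float,
                   prior_var: float, noise_var: float) -> float:
    """Posterior mean of V given U = V + noise, under the assumed normal-normal model."""
    shrink = prior_var / (prior_var + noise_var)
    return prior_mean + shrink * (proxy - prior_mean)


def simulate(n: int = 100_000, prior_var: float = 1.0,
             noise_var: float = 1.0, seed: int = 0) -> Tuple[float, float, float]:
    """Select the top 1% by proxy and compare proxy, true value, and Bayes estimate."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        v = rng.gauss(0.0, prior_var ** 0.5)      # true value
        u = v + rng.gauss(0.0, noise_var ** 0.5)  # noisy proxy
        samples.append((u, v))
    samples.sort(key=lambda s: s[0], reverse=True)
    top = samples[: n // 100]
    mean_proxy = sum(u for u, _ in top) / len(top)
    mean_true = sum(v for _, v in top) / len(top)
    mean_bayes = sum(bayes_estimate(u, 0.0, prior_var, noise_var)
                     for u, _ in top) / len(top)
    return mean_proxy, mean_true, mean_bayes


mean_proxy, mean_true, mean_bayes = simulate()
print(f"proxy={mean_proxy:.2f} true={mean_true:.2f} bayes={mean_bayes:.2f}")
```

Under these assumptions, the selected items' proxy scores overstate their true values (the tails diverge), while the shrunken Bayes estimate tracks the true values on average. This only works because the prior and noise model, and hence the goal, are fully specified, which is the caveat made above.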

comment by Abe Dillon (abe-dillon) · 2019-06-29T00:29:19.928Z · LW(p) · GW(p)

I'll probably have a lot more to say on this entire post later, but for now I wanted to address one point. Some problems, like wire-heading, may not be deal-breakers or reasons to write anything off. Humans are capable of hijacking their own reward centers and "wireheading" in many different ways (the most obvious being something like shooting heroin), yet that doesn't mean humans aren't intelligent. Things like wireheading, bad priors, or the possibility of "trolling" (https://www.lesswrong.com/posts/CvKnhXTu9BPcdKE4W/an-untrollable-mathematician-illustrated) may just be hazards of intelligence.

If you're born, build a model of how the world works based on your input, and then start using that model to reject noise, you might be rejecting information that would fix flaws and biases in your model. When you're young, it makes sense to believe elders when they tell you about how the world works, because they probably know better than you. But if all those elders and everyone around you tell you about their belief in some false superstition, then that becomes an integral part of your world model, and evidence against your flawed world model may come long after you've weighted the model with an extremely high probability of being true. If the superstition involves great reward for adhering to and spreading it and great punishment for questioning it, then you have a trap that most valid models of intelligence will struggle with.

If some theory of intelligence is susceptible to that trap, it's not clear that said theory should be dismissed, because the only current implementation of general intelligence we know of is also highly susceptible to that trap.

comment by bfinn · 2018-11-07T13:06:06.721Z · LW(p) · GW(p)

Business practice and management studies might provide some useful examples, and possibly some useful insights, here. E.g. off the top of my head:

Fairly obviously, how shareholders try to align managers with their goals, and managers align their subordinates with hopefully the same or close enough goals (and so on down the management chain to the juniors). Incompetent/scheming middle managers with their own agendas (and who may do things like unintentionally or deliberately alter information passed via them up & down the management chain) are a common problem. As are incorrectly incentivized CEOs (not least because their incentive package is typically devised by a committee of fellow board directors, of whom only some may be shareholders).

Less obviously, recruitment as an example of searching for optimizers: how do shareholders find managers who are best able to optimize shareholders' interests, how do managers recruit subordinates, how do shareholders ensure managers are recruiting subordinates aligned with the shareholders' goals rather than some agenda of their own, how are recruiters themselves incentivized and recruited, are there relevant differences between internal & external recruiters (e.g. HR vs headhunters), etc.

Replies from: Davidmanheim

↑ comment by Davidmanheim · 2018-11-08T09:33:16.681Z · LW(p) · GW(p)

There is a literature on this, and it's not great for the purposes here. Principal-agent setups assume we can formalize the goal as a good metric; the complexity of management is a fundamentally hard problem that we don't have good answers for (see my essay on scaling companies here: https://www.ribbonfarm.com/2016/03/17/go-corporate-or-go-home/ ); and Goodhart failures due to under-specified goals are fundamentally impossible to avoid (see my essay on this here: https://www.ribbonfarm.com/2016/09/29/soft-bias-of-underspecified-goals/ ).

There is a set of strategies for mitigating the problems, and I have a paper on this that is written but still needs to be submitted somewhere, tentatively titled "Building Less Flawed Metrics: Dodging Goodhart and Campbell’s Laws." If anyone wants to see it, they can message/email/tweet at me.

Abstract: Metrics are useful for measuring systems and motivating behaviors. Unfortunately, naive application of metrics to a system can distort the system in ways that undermine the original goal. The problem was noted independently by Campbell and Goodhart, and in some forms it is not only common, but unavoidable due to the nature of metrics. There are two distinct but interrelated problems that must be overcome in building better metrics; first, specifying metrics more closely related to the true goals, and second, preventing the recipients from gaming the difference between the reward system and the true goal. This paper describes several approaches to designing metrics, beginning with design considerations and processes, then discussing specific strategies including secrecy, randomization, diversification, and post-hoc specification. Finally, it will discuss important desiderata and the trade-offs involved in each approach.

(I should edit this comment to be a link once I have submitted and have a pre-print or publication.)

Replies from: bfinn

↑ comment by bfinn · 2018-11-09T11:05:57.175Z · LW(p) · GW(p)

Thanks, I'll read those with interest.

I didn't think it likely that business has solved any of these problems, but I just wonder if it might include particular failures not found in other fields that help point towards a solution; or even if it has found one or two pieces of a solution.

Business may at least provide some instructive examples of how things go wrong, because business involves lots of people & motives & cooperation & conflict, things which I suspect humans are particularly good at thinking & reasoning about (as opposed to more abstract things).