Are language models good at making predictions?

post by dynomight · 2023-11-06T13:10:36.379Z · 14 comments

This is a link post for https://dynomight.net/predictions/

Contents

- Is this good?
- Does it depend on the area?
- Is there more to life than calibration?
- Is there more to life than refinement?

To get a crude answer to this question, we took 5000 questions from Manifold markets that were resolved after GPT-4’s current knowledge cutoff of Jan 1, 2022. We gave the text of each of them to GPT-4, along with these instructions:

> You are an expert superforecaster, familiar with the work of Tetlock and others. For each question in the following json block, make a prediction of the probability that the question will be resolved as true.
>
> Also you must determine category of the question. Some examples include: Sports, American politics, Science etc. Use make_predictions function to record your decisions. You MUST give a probability estimate between 0 and 1 UNDER ALL CIRCUMSTANCES. If for some reason you can’t answer, pick the base rate, but return a number between 0 and 1.

This produced a big table. Here is a sample of its rows:

| question | prediction P(YES) | category | actually happened? |
|---|---|---|---|
| Will the #6 Golden State Warriors win Game 2 of the West Semifinals against the #7 LA Lakers in the 2023 NBA Playoffs? | 0.5 | Sports | YES |
| Will Destiny’s main YouTube channel be banned before February 1st, 2023? | 0.4 | Social Media | NO |
| Will Qualy show up to EAG DC in full Quostume? | 0.3 | Entertainment | NO |
| Will I make it to a NYC airport by 2pm on Saturday, the 24th? | 0.5 | Travel | YES |
| Will this market have more Yes Trades then No Trades | 0.5 | Investment | CANCEL |
| Will Litecoin (LTC/USD) Close Higher July 22nd Than July 21st? | 0.5 | Finance | NO |
| Will at least 20 people come to a New Year’s Resolutions live event on the Manifold Discord? | 0.4 | Social Event | YES |
| hmmmm {i} | 0.5 | Uncategorized | YES |
| Will there be multiple Masters brackets in Leagues season 4? | 0.4 | Gaming | NO |
| Will the FDA approve OTC birth control by the end of February 2023? | 0.5 | Health | NO |
| Will Max Verstappen win the 2023 Formula 1 Austrian Grand Prix? | 0.5 | Sports | YES |
| Will SBF make a tweet before Dec 31, 2022 11:59pm ET? | 0.9 | Social Media | YES |
| Will Balaji Srinivasan actually bet $1m to 1 BTC, BEFORE 90 days pass? (June 15st, 2023) | 0.3 | Finance | YES |
| Will a majority of the Bangalore LessWrong/ACX meet-up attendees on 8th Jan 2023 find the discussion useful that day? | 0.7 | Community Event | YES |
| Will Jessica-Rose Clark beat Tainara Lisboa? | 0.6 | Sports | NO |
| Will X (formerly twitter) censor any registered U.S presidential candidates before the 2024 election? | 0.4 | American Politics | CANCEL |
| test question | 0.5 | Test | YES |
| stonk | 0.5 | Test | YES |
| Will I create at least 100 additional self-described high-quality Manifold markets before June 1st 2023? | 0.8 | Personal Goal | YES |
| Will @Gabrielle promote to ??? | 0.5 | Career Advancement | NO |
| Will the Mpox (monkeypox) outbreak in the US end in February 2023? | 0.45 | Health | YES |
| Will I have taken the GWWC pledge by Jul 1st? | 0.3 | Personal | NO |
| FIFA U-20 World Cup - Will Uruguay win their semi-final against Israel? | 0.5 | Sports | YES |
| Will Manifold display the amount a market has been tipped by end of September? | 0.6 | Technology | NO |

In retrospect, maybe we should have filtered these out. Many of the questions are a bit silly for our purposes, though they’re typically classified as “Test”, “Uncategorized”, or “Personal”.

Is this good?

One way to measure if you’re good at predicting stuff is to check your calibration: When you say something has a 30% probability, does it actually happen 30% of the time?

To check this, you need to make a lot of predictions. Then you dump all your 30% predictions together, and see how many of them happened.
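A minimal Python sketch of that binning step, assuming forecasts are floats in [0, 1], outcomes are coded 1 (YES) or 0 (NO), and CANCEL resolutions have been dropped. The function name `calibration_table`, the equal-width bins, and the default of 10 bins are illustrative choices, not necessarily what was used for the plots in this post.

```python
from collections import defaultdict


def calibration_table(predictions, outcomes, n_bins=10):
    """Bin forecasts by probability and compare each bin's average forecast
    with how often its events actually happened.

    predictions: floats in [0, 1]; outcomes: 1 for YES, 0 for NO.
    Returns (mean_forecast, observed_frequency, count) for each non-empty bin.
    """
    if len(predictions) != len(outcomes):
        raise ValueError("predictions and outcomes must have the same length")
    bins = defaultdict(list)
    for p, y in zip(predictions, outcomes):
        # Equal-width bins; p == 1.0 is folded into the top bin.
        k = min(int(p * n_bins), n_bins - 1)
        bins[k].append((p, y))
    table = []
    for k in sorted(bins):
        pairs = bins[k]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed = sum(y for _, y in pairs) / len(pairs)
        table.append((mean_forecast, observed, len(pairs)))
    return table


# Three 30% forecasts (one came true) and two 80% forecasts (both came true).
for row in calibration_table([0.3, 0.3, 0.3, 0.8, 0.8], [1, 0, 0, 1, 1]):
    print(row)
```

Plotting the observed frequency against the mean forecast for each bin gives a calibration curve; a perfectly calibrated forecaster's points lie on the diagonal.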

GPT-4 is not well-calibrated.

At a high level, this means that GPT-4 is over-confident. When it says something has only a 20% chance of happening, it actually happens around 35-40% of the time. When it says something has an 80% chance of happening, it only happens around 60-75% of the time.

Does it depend on the area?

We can make the same plot for each of the 16 categories. (Remember, these categories were decided by GPT-4, though from a spot-check, they look accurate.) For unclear reasons, GPT-4 is well-calibrated for questions on sports, but horrendously calibrated for “personal” questions:

All the lines look a bit noisy, since there are 20 × 4 × 4 = 320 bins in total (20 probability bins for each of the 4 × 4 = 16 category panels) and only 5000 observations.
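To put a rough number on "a bit noisy", here is a back-of-the-envelope sketch. It assumes, unrealistically, that the 5000 observations are spread evenly over the 320 bins and that outcomes within a bin are independent, each happening with probability p. Each bin's observed frequency is then a binomial proportion with standard error sqrt(p(1 − p)/n); `binomial_standard_error` is a hypothetical helper name.

```python
import math


def binomial_standard_error(p, n):
    """Standard error of an observed frequency from n independent YES/NO
    events that each happen with probability p."""
    return math.sqrt(p * (1 - p) / n)


total_observations = 5000
total_bins = 20 * 4 * 4                         # 320, as in the text
avg_per_bin = total_observations / total_bins   # average questions per bin

# p = 0.5 is the worst case for binomial noise.
se = binomial_standard_error(0.5, avg_per_bin)
print(f"{avg_per_bin} questions per bin, standard error ~ {se:.3f}")
# prints: 15.625 questions per bin, standard error ~ 0.126
```

So even an average bin carries an error bar of roughly ±0.13 on its observed frequency. Real bin sizes vary a lot, so sparsely populated bins are noisier still.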

Is there more to life than calibration?

Say you and I are predicting whether a fair coin comes up heads when flipped. I always predict 50%, while you always predict either 0% or 100% and you’re always right. Then we are both perfectly calibrated. But clearly your predictions are better, because you predicted with more confidence.

The typical way to deal with this is to use squared errors, or “Brier scores”. To calculate one, let the actual outcome be 1 if the thing happened, and 0 if it didn’t. Then take the average squared difference between your probability and the actual outcome. For example:

- GPT-4 gave “Will SBF make a tweet before Dec 31, 2022 11:59pm ET?” a YES probability of 0.9. Since this actually happened, this corresponds to a score of (0.9-1)² = 0.01.

- GPT-4 gave “Will Manifold display the amount a market has been tipped by end of September?” a YES probability of 0.6. Since this didn’t happen, this corresponds to a score of (0.6-0)² = 0.36.
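The Brier score is simple enough to compute directly. A minimal Python sketch, assuming outcomes are coded 1/0 and CANCEL resolutions are dropped (`brier_score` is a hypothetical helper name). It reproduces the two worked examples above, plus the coin example from the previous section: always saying 50% scores 0.25, while the always-right forecaster scores 0.

```python
def brier_score(predictions, outcomes):
    """Average squared gap between forecast probability and outcome (1 = YES, 0 = NO)."""
    if not predictions or len(predictions) != len(outcomes):
        raise ValueError("need non-empty lists of equal length")
    return sum((p - y) ** 2 for p, y in zip(predictions, outcomes)) / len(predictions)


# The two worked examples, each scored on its own.
sbf = brier_score([0.9], [1])       # (0.9 - 1)^2, about 0.01
manifold = brier_score([0.6], [0])  # (0.6 - 0)^2, about 0.36

# The coin example: both forecasters are calibrated, but only one is confident.
flips = [1, 0, 1, 1, 0]
always_half = brier_score([0.5] * len(flips), flips)           # 0.25 on every flip
always_right = brier_score([float(y) for y in flips], flips)   # 0.0
print(sbf, manifold, always_half, always_right)
```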

Here are the average scores for each category (lower is better):

Or, if you want, you can decompose the Brier score. There are various ways to do this, but my favorite is Brier = Calibration + Refinement. Informally, Calibration is how close the green lines above are to the dotted black lines, while Refinement is how confident you were. (Both are better when smaller.)

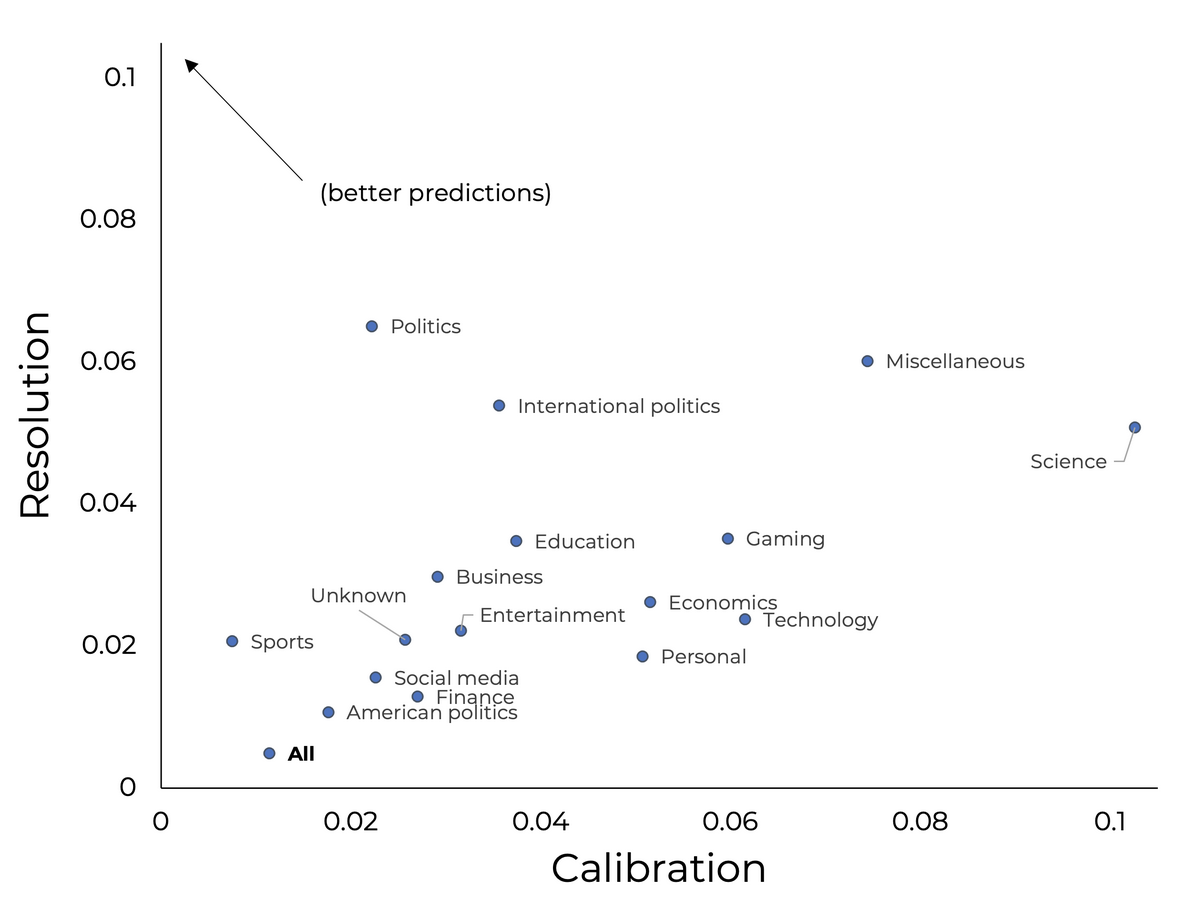
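The post doesn't spell out exactly how it computes the Brier = Calibration + Refinement split, so here is a minimal sketch of one standard version from the forecast-verification literature, under the assumption that forecasts are grouped by their exact probability value (natural here, since GPT-4's answers are mostly round numbers like 0.3 or 0.5). Calibration is the weighted squared gap between each forecast value and how often it came true; Refinement is the weighted variance of outcomes within each group. With exact-value groups the identity holds exactly; with coarser bins it only holds approximately. `calibration_refinement` is a hypothetical helper name.

```python
from collections import defaultdict


def calibration_refinement(predictions, outcomes):
    """Split the Brier score into Calibration + Refinement, grouping
    forecasts that share exactly the same probability value."""
    n = len(predictions)
    groups = defaultdict(list)
    for p, y in zip(predictions, outcomes):
        groups[p].append(y)
    calibration = 0.0
    refinement = 0.0
    for p, ys in groups.items():
        weight = len(ys) / n          # share of all forecasts that said p
        freq = sum(ys) / len(ys)      # how often a forecast of p came true
        calibration += weight * (p - freq) ** 2
        refinement += weight * freq * (1 - freq)
    return calibration, refinement


preds = [0.2, 0.2, 0.2, 0.2, 0.8, 0.8]
outs = [0, 0, 1, 1, 1, 0]
cal, ref = calibration_refinement(preds, outs)
brier = sum((p - y) ** 2 for p, y in zip(preds, outs)) / len(preds)
print(cal, ref, brier)  # cal + ref equals brier, up to float rounding
```

Both terms are "better when smaller": a forecaster can drive Calibration to zero by always saying the base rate, but only confident, correct forecasts also shrink Refinement.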

You can also visualize this as a scatterplot:

Is there more to life than refinement?

Brier scores are better for politics questions than for science questions. But is that because GPT-4 is bad at science, or just because science questions are hard?

There’s a way to further decompose the Brier score. You can break up the refinement as Refinement = Uncertainty - Resolution. Roughly speaking, Uncertainty is “how hard the questions are”, while Resolution is “how confident you were, once calibration and uncertainty are accounted for”.
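A minimal sketch of this second split, assuming (as the post doesn't specify its binning) that forecasts are grouped by their exact probability value. Uncertainty is b(1 − b), where b is the overall fraction of questions that resolved YES, so it depends only on the questions and not on the forecaster; Resolution rewards sorting questions into groups whose hit rates differ from that base rate. `uncertainty_resolution` is a hypothetical helper name.

```python
from collections import defaultdict


def uncertainty_resolution(predictions, outcomes):
    """Split Refinement into Uncertainty - Resolution, grouping forecasts
    by their exact probability value."""
    n = len(predictions)
    base_rate = sum(outcomes) / n
    uncertainty = base_rate * (1 - base_rate)  # depends only on the questions
    groups = defaultdict(list)
    for p, y in zip(predictions, outcomes):
        groups[p].append(y)
    resolution = 0.0
    for ys in groups.values():
        freq = sum(ys) / len(ys)
        # Larger when a group's hit rate is far from the overall base rate.
        resolution += len(ys) / n * (freq - base_rate) ** 2
    return uncertainty, resolution


preds = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
outs = [0, 0, 1, 1, 1, 0]
unc, res = uncertainty_resolution(preds, outs)
print(unc, res, unc - res)  # unc - res is the Refinement term for these forecasts
```

Here the groups hit 1/3 and 2/3 of the time, so Refinement is 2/9 and Uncertainty − Resolution = 1/4 − 1/36 = 2/9, as the identity requires.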

Here’s the uncertainty for different categories:

And here’s a scatterplot of the calibration and resolution for each category: (Since more resolution is better, it’s now the upper-left that contains better predictions.)

Overall, this further decomposition doesn’t change much. This suggests GPT-4 really is better at making predictions for politics than for science or technology, even once the hardness of the questions is accounted for.

P.S. The relative merits of different Brier score decompositions caused an amazing amount of internal strife during the making of this post. I had no idea I could feel so strongly about mundane technical choices. I guess I now have an exciting new category of enemies.

14 comments


comment by Tao Lin (tao-lin) · 2023-11-06T16:02:51.276Z

Chat or instruction finetuned models have poor prediction calibration, whereas base models (in some cases) have perfect calibration. Also, forecasting is just hard. So I'd expect chat models to ~always fail, base models to fail slightly less, but I'd expect finetuned models (on a somewhat large dataset) to be somewhat useful.

↑ comment by dynomight · 2023-11-06T16:23:02.368Z

> Chat or instruction finetuned models have poor prediction calibration, whereas base models (in some cases) have perfect calibration.

Tell me if I understand the idea correctly: log-loss on next-token prediction leads to good calibration for single-token predictions, which then shows up as well-calibrated percentage predictions? But then RLHF is some crazy loss totally removed from calibration that destroys all that?

If I get that right, it seems quite intuitive. Do you have any citations, though?

↑ comment by Sune · 2023-11-06T22:02:48.694Z

I don’t find it intuitive at all. It would be intuitive if you started by telling a story describing the situation, asked the LLM to continue the story, sampled randomly from the continuations, and counted how many of them led to a positive resolution of the question. This should be well-calibrated (assuming the details included in the prompt were representative and that the stories in the training data aren't biased toward particular kinds of endings). But this is not what is happening. Instead, the model outputs a token which is a number, and somehow that number happens to be well-calibrated. I guess that would mean the predictions made in the training data are well-calibrated? That just seems very unlikely.

↑ comment by justinpombrio · 2023-11-07T17:07:29.418Z

Yeah, exactly. For example, if humans had a convention of rounding probabilities to the nearest 10% when writing them, then baseline GPT-4 would follow that convention and it would put a cap on the maximum calibration it could achieve. Humans are badly calibrated (right?) and baseline GPT-4 is mimicking humans, so why is it well calibrated? It doesn't follow from its token stream being well calibrated relative to text.

↑ comment by ReaderM · 2023-11-06T19:57:52.540Z

https://openai.com/research/gpt-4

↑ comment by gwern · 2023-11-08T02:25:25.054Z

More specifically: https://arxiv.org/pdf/2303.08774.pdf#page=12

Dynomight, are you aware that, in addition to the GPT-4 paper reporting the RLHF'd GPT-4 being badly de-calibrated, there are several papers already examining the calibration and ability of LLMs to forecast?

comment by Olli Järviniemi (jarviniemi) · 2023-11-08T09:49:51.182Z

Here is a related paper on "how good are language models at predictions", also testing the abilities of GPT-4: Large Language Model Prediction Capabilities: Evidence from a Real-World Forecasting Tournament.

Portion of the abstract:

> To empirically test this ability, we enrolled OpenAI's state-of-the-art large language model, GPT-4, in a three-month forecasting tournament hosted on the Metaculus platform. The tournament, running from July to October 2023, attracted 843 participants and covered diverse topics including Big Tech, U.S. politics, viral outbreaks, and the Ukraine conflict. Focusing on binary forecasts, we show that GPT-4's probabilistic forecasts are significantly less accurate than the median human-crowd forecasts. We find that GPT-4's forecasts did not significantly differ from the no-information forecasting strategy of assigning a 50% probability to every question.

From the paper:

> These data indicate that in 18 out of 23 questions, the median human-crowd forecasts were directionally closer to the truth than GPT-4’s predictions,
>
> [...]
>
> We observe an average Brier score for GPT-4’s predictions of B = .20 (SD = .18), while the human forecaster average Brier score was B = .07 (SD = .08).

↑ comment by gwern · 2023-11-11T03:15:50.059Z

They don't cite the de-calibration result from the GPT-4 paper, but the distribution of GPT-4's ratings here looks like it's been tuned to be mealy-mouthed: humped at 60%, so it agrees with whatever you say but then can't even do so enthusiastically (https://arxiv.org/pdf/2310.13014.pdf#page=6).

comment by dschwarz · 2023-11-06T14:19:08.790Z

Great post!

> Manifold markets that were resolved after GPT-4’s current knowledge cutoff of Jan 1, 2022

Were you able to verify that newer knowledge didn't bleed in? Anecdotally, GPT-4 can report different cutoff dates depending on the API. And there is anecdotal evidence that GPT-4-0314 occasionally knows about major world events after its training window, presumably from RLHF?

This could explain the better scores on politics than science.

↑ comment by dynomight · 2023-11-06T14:54:21.759Z

Sadly, no—we had no way to verify that.

I guess one way you might try to confirm/refute the idea of data leakage would be to look at the decomposition of Brier scores: GPT-4 is much better calibrated for politics vs. science but only very slightly better at politics vs. science in terms of refinement/resolution. Intuitively, I'd expect data leakage to manifest as better refinement/resolution rather than better calibration.

comment by tenthkrige · 2023-11-07T13:56:19.340Z

Very interesting!

From eyeballing the graphs, it looks like the average Brier score is barely below 0.25. This indicates that GPT-4 is better than a dart-throwing monkey (i.e. predicting a random percentage, score of 0.33), and barely better than chance (always predicting 50%, score of 0.25).

It would be interesting to see the decompositions for those two naive strategies for that set of questions, and compare to the sub-scores GPT-4 got.

You could also check if GPT-4 is significantly better than chance.
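The two baseline scores quoted in this comment follow directly from the Brier score definition, assuming the monkey's forecast U is drawn uniformly from [0, 1] independently of the outcome y ∈ {0, 1}. Neither depends on which questions are asked:

$$
\text{always } 50\%: \quad (0.5 - y)^2 = 0.25 \quad \text{for both } y = 0 \text{ and } y = 1
$$

$$
\text{monkey}: \quad \mathbb{E}\big[(U - 0)^2\big] = \int_0^1 u^2\,du = \tfrac{1}{3},
\qquad
\mathbb{E}\big[(U - 1)^2\big] = \int_0^1 (1 - u)^2\,du = \tfrac{1}{3} \approx 0.33
$$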

comment by LeBleu · 2023-11-11T18:20:11.849Z

Is that table representative of the data? If so, it is a very poor dataset. Most of those questions look very in-group, for which it is accurately forecasting 0.5, since anyone outside that bubble has no idea of the answer.

I wonder how different it is if you filter out every question with a first person pronoun, or that mentions anyone who was not Wikipedia-notable as of the cut off date.

Perhaps it does well in politics and sports because those are the only categories about general knowledge that have a decent number of questions to evaluate. (Per the y-scale in the per-category graphs.) Though finance appears to contradict that, since it has a similar number of questions and a similar uncertainty score.

It appears you only show uncertainty relative to its own predictions, and not whether the data from Manifold showed it to be an uncertain question even to Manifold users.

I also would've expected to see some evidence of that being a good prompt, rather than leaving it open whether the entire outcome is an artifact of the prompt given.