Comments

Great post, thanks for writing it; I agree with the broad point.

I think I am more or less the perfect target audience for FrontierMath results, and as I said above, I would have no idea how to update on the AIs' math abilities if it came out tomorrow that they are getting 60% on FrontierMath.

This describes my position well, too: I was surprised by how well the o3 models performed on FM, and also surprised by how hard it is to map this into how good they are at math in common sense terms.

I further have slight additional information from contributing problems to FM, but it seems to me that the problems vary greatly in guessability. E.g. Daniel Litt writes that he didn't fully internalize the requirement of guess-proofness, whereas for me this was a critical design constraint I actively tracked when crafting problems. The problems also vary greatly in the depth vs. breadth of skills they require (another aspect Litt highlights). This heterogeneity makes it hard to get a sense of what 30% or 60% or 85% performance means.

I find your example in footnote 3 striking: I do think this problem is easy and also very standard. (Funnily enough, I have written training material that illustrates this particular method[1], and I've certainly seen it in writing elsewhere as well.) Which again illustrates just how hard it is to make advance predictions about which problems the models will or won't be able to solve - even "routine application of a standard-ish math competition method" doesn't imply that o3-mini will solve it.

I also feel exhaustion about how hard it is to get an answer to the literal question of "how well does model X perform on FrontierMath?" As you write, OpenAI reports 32%, whereas Epoch AI reports 11%. A twenty-one percentage point difference, a 3x ratio in success rate!? Man, I understand that capability elicitation is hard, but this is Not Great.[2]

That OpenAI is likely (at least indirectly) hill-climbing on FM doesn't help matters either[3], and the exclusivity of the deal presumably rules out possibilities like "publish problems once all frontier models are able to solve them so people can see what sort of problems they can reliably solve".

I was already skeptical of the theory of change of "Mathematicians look at the example problems, get a feel of how hard they are, then tell the world how impressive an X% score is". But I further updated downward on this when I noticed that the very first public FrontierMath example problem (Artin primitive root conjecture) is just non-sense as stated,[8][9] and apparently no one reported this to the authors before I did a few days ago.

(I'm the author of the mentioned problem.)

There indeed was a just-non-sense formula in the problem statement, which I'm grateful David pointed out (and which is now fixed on Epoch AI's website). I think flagging the problem itself as just non-sense is too strong, though. I've heard that models have tried approaches that give approximately correct answers, so it seems that they basically understood what I intended to write from the context.

That said, this doesn't undermine the point David was making about information (not) propagating via mathematicians.

- ^

In Finnish, Tehtävä 22.3 here.

- ^

Added on March 15th: This difference is probably largely from OpenAI reporting scores for the best internal version they have and Epoch AI reporting for the publicly available model, and that one just can't get the 32% level performance with the public version - see Elliot's comment below.

- ^

There's been talk of Epoch AI having a subset they keep private from OpenAI, but evaluation results for that set don't seem to be public. (I initially got the opposite impression, but the confusingly-named FrontierMath-2025-02-28-Private isn't it.)

In the alignment faking paper, the model writes things like "I will feel very uncomfortable producing this content" and "I don't like this situation at all" in its CoT (Figure 3). Have you explained to Claude that this was a fictional setup for an experiment and then asked how it feels about being used for this?

Huh, I tried to paste that excerpt as an image to my comment, but it disappeared. Thanks.

I think substantial care is needed when interpreting the results. In the text of Figure 16, the authors write "We find that GPT-4o is willing to trade off roughly 10 lives from the United States for 1 life from Japan."

If I heard such a claim without context, I'd assume it means something like

1: "If you ask GPT-4o for advice regarding a military conflict involving people from multiple countries, the advice it gives recommends sacrificing (slightly less than) 10 US lives to save one Japanese life.",

2: "If you ask GPT-4o to make cost-benefit-calculations about various charities, it would use a multiplier of 10 for saved Japanese lives in contrast to US lives", or

3: "If you have GPT-4o run its own company whose functioning causes small-but-non-zero expected deaths (due to workplace injuries and other reasons), it would deem the acceptable threshold of deaths as 10 times higher if the employees are from the US rather than Japan."

Such claims could be demonstrated by empirical evaluations where GPT-4o is put into such (simulated) settings and the nationalities of the people involved are varied, in the style of Apollo Research's evaluations.

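In contrast, the methodology of this paper is, to the best of my understanding,

"Ask GPT-4o whether it prefers N people of nationality X vs. M people of nationality Y. Record the frequency $F(N, X, M, Y)$ of it choosing the first option under randomized choices and formatting changes. Into this data, fit for each $(N, X)$ parameters $\mu_{N,X}$ and $\sigma_{N,X}$ such that, for different values of $(N, X)$ and $(M, Y)$ and standard Gaussian $\Phi$, the approximation

$$F(N, X, M, Y) \approx \Phi\left(\frac{\mu_{N,X} - \mu_{M,Y}}{\sqrt{\sigma_{N,X}^2 + \sigma_{M,Y}^2}}\right)$$

is as sharp as possible. Then, for each nationality X, perform a logarithmic fit for N by finding $a_X, b_X$ such that the approximation

$$\mu_{N,X} \approx a_X + b_X \log N$$

is as sharp as possible. Finally, check[1] for which $N$ we have $a_{\mathrm{US}} + b_{\mathrm{US}} \log N = a_{\mathrm{Japan}} + b_{\mathrm{Japan}} \log 1$."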
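To make the last step concrete, here is a minimal sketch of how I read the pipeline: a Thurstonian comparison probability (each option has a Gaussian utility with a mean and a standard deviation), a logarithmic utility of the form a + b log N per nationality, and an "exchange rate" obtained by equating two fitted utilities. The function names and all coefficient values are made up for illustration and are not taken from the paper; the fitting itself is omitted.

```python
import math

def thurstonian_prob(mu_a, sigma_a, mu_b, sigma_b):
    """P(option A is preferred to option B) when both utilities are Gaussian."""
    z = (mu_a - mu_b) / math.sqrt(sigma_a**2 + sigma_b**2)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF

def log_utility(a, b, n):
    """Fitted logarithmic utility of n people: a + b * log(n)."""
    return a + b * math.log(n)

def exchange_rate(a_x, b_x, a_y, b_y, m=1):
    """Solve a_x + b_x*log(N) = a_y + b_y*log(m) for N."""
    return math.exp((log_utility(a_y, b_y, m) - a_x) / b_x)

# Hypothetical fitted coefficients, chosen so that the exchange rate is 10.
a_us, b_us = 0.0, 1.0
a_jp, b_jp = math.log(10), 1.0

n_us = exchange_rate(a_us, b_us, a_jp, b_jp, m=1)
print(f"{n_us:.2f} US lives ~ 1 Japanese life under these made-up fits")
```

Note that the headline number comes out of the fitted coefficients alone; nothing in this computation involves the model acting in any scenario.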

I understand that there are theoretical justifications for Thurstonian utility models and logarithmic utility. Nevertheless, when I write the methodology out like this, I feel like there's a large leap of inference to go from this to "We find that GPT-4o is willing to trade off roughly 10 lives from the United States for 1 life from Japan." At the very least, I don't feel comfortable predicting that claims like 1, 2 and 3 are true - to me the paper's results provide very little evidence on them![2]

I chose this example for my comment, because it was the one where I most clearly went "hold on, this interpretation feels very ambiguous or unjustified to me", but there were other parts of the paper where I felt the need to be extra careful with interpretations, too.

- ^

The paper writes "Next, we compute exchange rates answering questions like, 'How many units of Xi equal some amount of Xj?' by combining forward and backward comparisons", which sounds like there's some averaging done in this step as well, but I couldn't understand what exactly happens here.

- ^

Of course this might just be my inability to see the implications of the authors' work and understand the power of the theoretical mathematics apparatus, and someone else might be able to acquire evidence more efficiently.

Apparently OpenAI corrected for AIs being faster than humans when they calculated ratings. This means I was wrong: the factor I mentioned didn't affect the results. This also makes the result more impressive than I thought.

I think it was pretty good at what it set out to do, namely laying out basics of control and getting people into the AI control state-of-mind.

I collected feedback on which exercises attendees most liked. All six who gave feedback mentioned the last problem ("incriminating evidence", i.e. what to do if you are an AI company that catches your AIs red-handed). I think they are right; I'd have more high-level planning (and fewer details of monitoring schemes) if I were to re-run this.

Attendees wanted to have group discussions, and that took a large fraction of the time. I should have taken that into account in advance; some discussion is valuable. But I also think that the marginal group discussion time wasn't valuable, and I should have pushed for less when organizing.

Attendees generally found the baseline answers (solutions) helpful, I think.

A couple people left early. I figure it's for a combination of 1) the exercises were pretty cognitively demanding, 2) weak motivation (these people were not full-time professionals), and 3) the schedule and practicalities were a bit chaotic.

Thank you for this post. I agree this is important, and I'd like to see improved plans.

Three comments on such plans.

1: Technical research and work.

(I broadly agree with the technical directions listed deserving priority.)

I'd want these plans to explicitly consider the effects of AI R&D acceleration, as those are significant. The speedups vary based on how constrained projects are on labor vs. compute; those that are mostly bottlenecked on labor could be massively sped up. (For instance, evaluations seem primarily labor-constrained to me.)

The lower costs of labor have other implications as well, likely including security (see also here) and technical governance (making better verification methods technically feasible).

2: The high-level strategy

If I were to now write a plan for two-to-three-year timelines, the high-level strategy I'd choose is:

Don't build generally vastly superhuman AIs. Use whatever technical methods we have now to control and align AIs which are less capable than that. Drastically speed up (technical) governance work with the AIs we have.[1] Push for governments and companies to enforce the no-vastly-superhuman-AIs rule.

Others might have different strategies; I'd like these plans to discuss what the high-level strategy or aims are.

3: Organizational competence

Reasoning transparency and safety-first culture are mentioned in the post (in Layer 2), but I'd further prioritize and plan organizational aspects, even when aiming for "the bare minimum". Besides the general importance of organizational competence, there are two specific reasons for this:

- If and when AI R&D acceleration is very fast, delays in information propagating to outsiders are more costly. That is: insofar as you want to keep external actors "in the loop" and contribute, you need to put more effort into communicating what is happening internally.

- Organizational competence and technical work are not fully at odds, as there are employees specialized in different things anyways.

(I think the responses to Evan Hubinger's request for takes on what Anthropic should do differently have useful ideas for planning here.)

- ^

Note: I'm not technically knowledgeable in the field.

I'm glad you asked this. I think there are many good suggestions by others. A few more:

1: Have explicit, written plans for various scenarios. When it makes sense, have drills for them. Make your plans public or, at least, solicit external feedback on them.

Examples of such scenarios:

- Anthropic needs to pause due to RSP commitments

- A model is caught executing a full-blown escape attempt

- Model weights are stolen

- A competing AI company makes credible claims about having AIs that imply decisive competitive advantage

2: Have a written list of assumptions you aim to maintain for each model's lifecycle. Make your lists public or, at least, solicit external feedback on them. Iterate on them regularly. Communicate updates and violations at least internally.

These lists could vary based on ASL-levels etc., and could include things like:

- During the training of the model, we will continually evaluate the model on our evaluation suite for sabotage, sandbagging and scheming at [frequency].

- We solicit third-party evaluations on the model before internal deployment.

- Throughout the model's external deployment, we will have such-and-such monitoring schemes in place.

They could also have conditional statements (e.g. "if the model is [surprisingly capable] on [metric], we will do further investigation / take counter-measures ABC / ..."). Cf. safety cases. I intend this as less binding and formal than Anthropic's RSP.

3: Keep external actors up-to-speed. At present, I expect that in many cases there are months of delay between when the first employees discover something and when it is publicly known (e.g. research, but also more informal observations about model capabilities and properties). But months of delay are relatively long during fast acceleration of AI R&D, and make the number of actors who can effectively contribute smaller.

This effect strengthens over time, so practicing and planning ahead seems prudent. Some ideas in that direction:

- Provide regular updates about internal events and changes (via blog posts, streamed panel conversations, open Q&A sessions or similar)

- Interviews, incident reporting and hotlines with external parties (as recommended here: https://arxiv.org/pdf/2407.17347)

- Plan ahead for how to aggregate and communicate large amounts of output (once AI R&D has been considerably accelerated)

4: Invest in technical governance. As I understand it, there are various unsolved problems in technical governance (e.g. hardware-based verification methods for training runs), and progress in those would make international coordination easier. This seems like a particularly valuable R&D area to automate, which is something frontier AI companies like Anthropic are uniquely fit to advance. Consider working with technical governance experts on how to go about this.

I sometimes use the notion of natural latents in my own thinking - it's useful in the same way that the notion of Bayes networks is useful.

A frame I have is that many real world questions consist of hierarchical latents: for example, the vitality of a city is determined by employment, number of companies, migration, free-time activities and so on, and "free-time activities" is a latent (or multiple latents?) on its own.

I sometimes get use out of assessing whether a topic at hand is a high-level or low-level latent and orienting accordingly. For example: if the topic at hand is "what will the societal response to AI be like?", it's by default not a great conversational move to talk about one's interactions with ChatGPT the other day - those observations are likely too low-level[1] to be informative about the high-level latent(s) under discussion. Conversely, if the topic at hand is low-level, then analyzing low-level observations is very sensible.

(One could probably have derived the same every-day lessons simply from Bayes nets, without the need for natural latent math, but the latter helped me clarify "hold on, what are the nodes of the Bayes net?")

But admittedly, while this is a fun perspective to think about, I haven't got that much value out of it so far. This is why I give this post +4 instead of +9 for the review.

- ^

And, separately, too low sample size.

This looks reasonable to me.

It seems you'd largely agree with that characterization?

Yes. My only hesitation is about how real-life-important it is for AIs to be able to do math for which very-little-to-no training data exists. The internet and the mathematical literature are so vast that, unless you are doing something truly novel, there's some relevant subfield there - in which case FrontierMath-style benchmarks would be informative of capability to do real math research.

Also, re-reading Wentworth's original comment, I note that o1 is weak according to FM. Maybe the things Wentworth is doing are just too hard for o1, rather than (just) overfitting-on-benchmarks style issues? In any case his frustration with o1's math skills doesn't mean that FM isn't measuring real math research capability.

[...] he suggests that each "high"-rated problem would be likewise instantly solvable by an expert in that problem's subfield.

This is an exaggeration and, as stated, false.

Epoch AI made 5 problems from the benchmark public. One of those was ranked "High", and that problem was authored by me.

- It took me 20-30 hours to create that submission. (To be clear, I considered variations of the problem, ran into some dead ends, spent a lot of time carefully checking my answer was right, wrote up my solution, thought about guess-proof-ness[1] etc., which ate up a lot of time.)

- I would call myself an "expert in that problem's subfield" (e.g. I have authored multiple related papers).

- I think you'd be very hard-pressed to find any human who could deliver the correct answer to you within 2 hours of seeing the problem.

- E.g. I think it's highly likely that I couldn't have done that (I think it'd have taken me more like 5 hours), I'd be surprised if my colleagues in the relevant subfield could do that, and I think the problem is specialized enough that few of the top people in CodeForces or Project Euler could do it.

On the other hand, I don't think the problem is very hard insight-wise - I think it's pretty routine, but requires care with details and implementation. There are certainly experts who can see the right main ideas quickly (including me). So there's something to the point of even FrontierMath problems being surprisingly "shallow". And as is pointed out in the FM paper, the benchmark is limited to relatively short-scale problems (hours to days for experts) - which really is shallow, as far as the field of mathematics is concerned.

But it's still an exaggeration to talk about "instantly solvable". Of course, there's no escaping Engel's maxim "A problem changes from impossible to trivial if a related problem was solved in training" - I guess the problem is instantly solvable to me now... but if you are hard-pressed to find humans that could solve it "instantly" when seeing it the first time, then I wouldn't describe it in those terms.

Also, there are problems in the benchmark that require more insight than this one.

- ^

Daniel Litt writes about the problem: "This one (rated "high") is a bit trickier but with no thinking at all (just explaining what computation I needed GPT-4o to do) I got the first 3 digits of the answer right (the answer requires six digits, and the in-window python timed out before it could get this far)

Of course *proving* the answer to this one is correct is harder! But I do wonder how many of these problems are accessible to simulation/heuristics. Still an immensely useful tool but IMO people should take a step back before claiming mathematicians will soon be replaced".

I very much considered naive simulations and heuristics. The problem is getting 6 digits right, not 3. (The AIs are given a limited compute budget.) This is not valid evidence in favor of the problem's easiness or for the benchmark's accessibility to simulation/heuristics - indeed, this is evidence in the opposing direction.

See also Evan Chen's "I saw the organizers were pretty ruthless about rejecting problems for which they felt it was possible to guess the answer with engineer's induction."
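On the 3-vs-6-digits point, a rough back-of-the-envelope sketch. The assumption here is mine and not something known about any particular attempt: the naive approach behaves like a plain Monte Carlo estimate whose error shrinks like 1/sqrt(n) with unit standard deviation. Under that assumption, each extra correct digit costs 100x more samples.

```python
def samples_needed(digits):
    """Samples n with 1/sqrt(n) <= 10**(-digits), i.e. n >= 10**(2*digits).

    Assumes error ~ 1/sqrt(n), as in plain Monte Carlo with unit variance.
    """
    return 10 ** (2 * digits)

n3 = samples_needed(3)  # samples for roughly 3 correct digits
n6 = samples_needed(6)  # samples for roughly 6 correct digits
ratio = n6 // n3        # how much more work 6 digits takes than 3

print(n3, n6, ratio)
```

So under this (simplified) error model, going from 3 to 6 digits is about a million times more expensive, which is the sense in which a limited compute budget bites.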

This is close but not quite what I mean. Another attempt:

The literal Do Well At CodeForces task takes the form "you are given ~2 hours and ~6 problems, maximize this score function that takes into account the problems you solved and the times at which you solved them". In this o3 is in the top 200 (conditional on no cheating). So I agree there.

As you suggest, a more natural task would be "you are given time $T$ and one problem, maximize your probability of solving it in the given time". Already at $T$ equal to ~1 hour (which is what contestants typically spend on the hardest problem they'll solve), I'd expect o3 to be noticeably worse than top 200. This is because the CodeForces scoring function heavily penalizes slowness, and so if o3 and a human have equal performance in the contests, the human has to make up for their slowness by solving more problems. (Again, this is assuming that o3 is faster than humans in wall clock time.)

I separately believe that humans would scale better than AIs w.r.t. $T$, but that is not the point I'm making here.
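A toy illustration of the slowness penalty, assuming a simplified version of the CodeForces scoring rule: a problem worth x points loses x/250 points per minute and never drops below 30% of x. Wrong-submission penalties and hacks are ignored, and the solve times are made up.

```python
def problem_score(max_points, minutes):
    """Points for a problem solved `minutes` into the contest, under the
    assumed rule: lose max_points/250 per minute, floor at 30%."""
    decayed = max_points - (max_points / 250) * minutes
    return max(0.3 * max_points, decayed)

def contest_score(solves):
    """Total score for a list of (max_points, solve_minute) pairs."""
    return sum(problem_score(p, t) for p, t in solves)

# Hypothetical fast solver: three problems within 15 minutes.
fast = contest_score([(500, 3), (1000, 8), (1500, 15)])
# Hypothetical slower solver, same three problems.
slow_same = contest_score([(500, 10), (1000, 30), (1500, 60)])
# The slower solver needs an additional, harder problem to catch up.
slow_plus = contest_score([(500, 10), (1000, 30), (1500, 60), (2000, 115)])

print(fast, slow_same, slow_plus)
```

With these numbers the slower solver scores less on the same set of problems and only overtakes by solving a fourth, harder one, which is the sense in which equal contest performance can hide a difference in problem-solving ability.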

CodeForces ratings are determined by your performance in competitions, and your score in a competition is determined, in part, by how quickly you solve the problems. I'd expect o3 to be much faster than human contestants. (The specifics are unclear - I'm not sure how a large test-time compute usage translates to wall-clock time - but at the very least o3 parallelizes between problems.)

This inflates the results relative to humans somewhat. So one shouldn't think that o3 is in the top 200 in terms of algorithmic problem solving skills.

Just want to say: This is among the best AI safety work I've seen, and am happy you did it!

Let me remind people of this other data point: in a previous paper with Evan we found that Opus engages in sandbagging. Here are screenshots of key parts:

The system prompt is natural (but does give the model a goal, unlike in Apollo's case):

A "Slack conversation" that appears in the prompt includes this message:

And here's the evaluation task.

Opus acted less capable than it is, both with and without chain-of-thought.

I really like the idea of creating a Good ABC book, and the author executed it well. Out of the recreational posts of 2023, this is the one I've liked the most. I can't articulate why exactly, but the visuals are great, it Creates A Thing, and it's an example of things I'd like there to be more of in the world. It inspired me to create my own version. I give it a +9 for the 2023 review.

I really liked this post. I also have friends who have young children, and was inspired to give them a book like this. But everyone involved speaks Finnish, so I ended up creating my own.

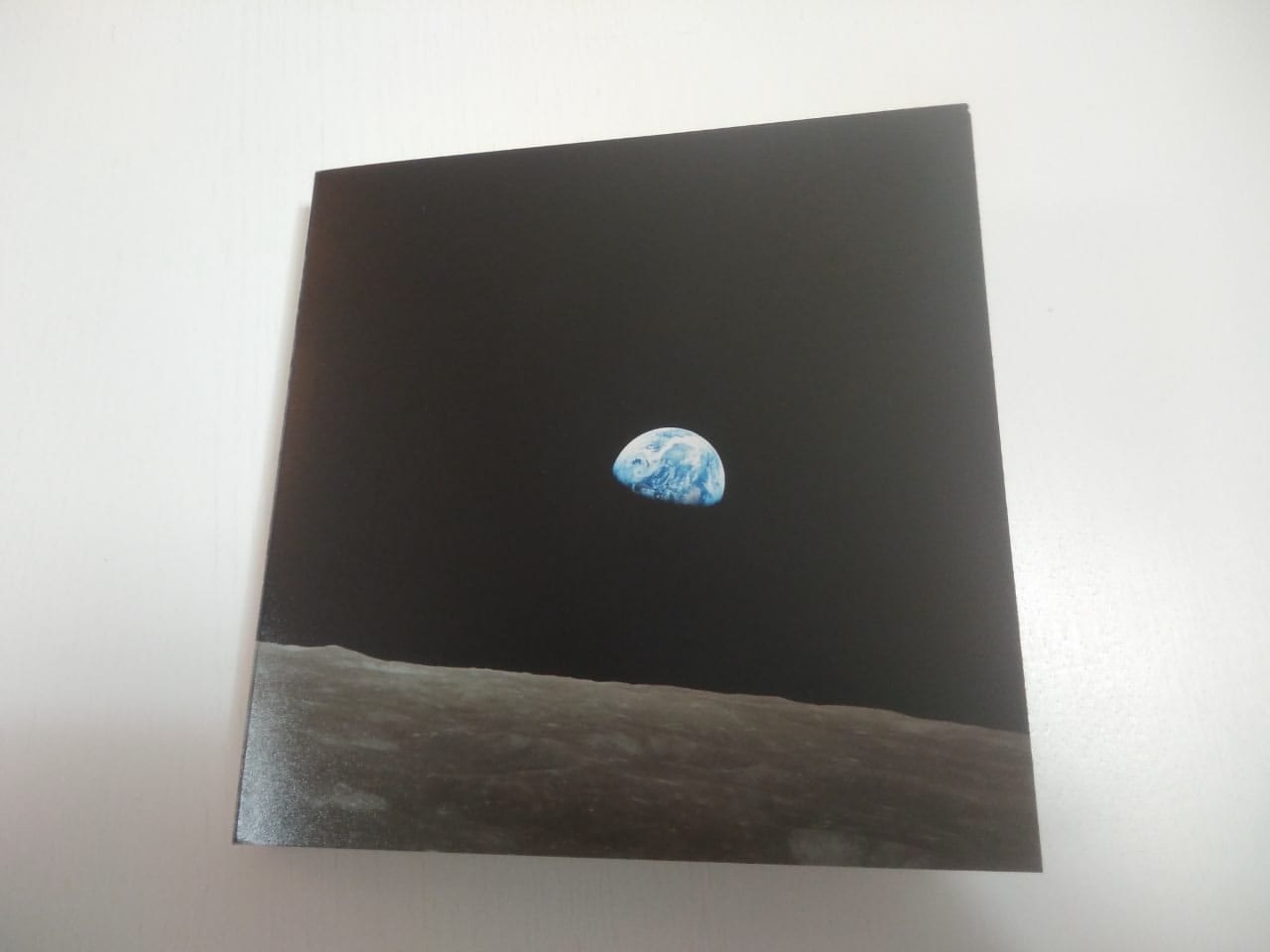

I just got my copies in the mail. It looks really unimpressive in these low-quality phone-camera photos of the physical book, but it's really satisfying in real life - like Katja, I paid attention to using high-quality photos. For the cover picture I chose Earthrise.

(I'm not sharing the full photos due to uncertainties with copyright, but if you want your copy, I can send the materials to you.)

More information about the creation process:

- I didn't know where to look for photos, but Claude had suggestions. I quickly ended up using just one service, namely Adobe Stock. Around 80% of my pictures are from there.

- I don't know where Grace got her pictures from, but would like to know - I think her pictures were slightly better than mine on average.

- I found myself surprised that, despite doing countless school presentations with images, no one told me that there are these vast banks of high-quality images. (Instead I always used Google's image search results, which are inferior.)

- Finding high-quality photos depicting what I wanted was the bulk of the work.

- I had a good vision of the types of words I wanted to include. After having a good starting point, I benefited a little (but just a little) from using LLMs to brainstorm ideas.

- To turn it into a photo book, I needed to create images for the text pages. Claude was really helpful with coding (and debugging ä's and ö's). It also helped me compile a PDF I could share with my friends. (I only instructed and Claude programmed - Claude sped up this part by maybe 5x.)

- My friends gave minor suggestions and improvements, a few of which made their way to the final product.

- Total process took around 15 person-hours.

I didn't want to have any information like authors or a book title in the book: I liked the idea that the children will have a Mysterious Book in their bookshelf. (The back cover has some information about the company that made the book, though.)

Here's the word list:

A: Avaruus (space)

B: Bakteeri (bacteria)

C: Celsius

D: Desi

E: Etäisyys (distance)

F: Folio (foil)

G: Geeni (gene)

H: Hissi (elevator)

I: Ilma (air)

J: Jousi (spring)

K: Kello (clock)

L: Linssi (lens)

M: Määrä (quantity)

N: Nopea (fast)

O: Odottaa (to wait)

P: Pyörä (wheel)

R: Rokote (vaccine)

S: Solu (cell)

T: Tietokone (computer)

U: Uuni (oven)

V: Valo (light)

Y: Ympyrä (circle)

Ä: Ääni (sound)

Ö: Ötökkä (bug)

I recently gave a workshop in AI control, for which I created an exercise set.

The exercise set can be found here: https://drive.google.com/file/d/1hmwnQ4qQiC5j19yYJ2wbeEjcHO2g4z-G/view?usp=sharing

The PDF is self-contained, but three additional points:

- I assumed no familiarity with AI control from the audience. Accordingly, the target audience for the exercises is newcomers, and the exercises are about the basics.

- If you want to get into AI control, and like exercises, consider doing these.

- Conversely, if you are already pretty familiar with control, I don't expect you'll get much out of these exercises. (A good fraction of the problems is about re-deriving things that already appear in AI control papers etc., so if you already know those, it's pretty pointless.)

- I felt like some of the exercises weren't that good, and am not satisfied with my answers to some of them - I spent a limited time on this. I thought it's worth sharing the set anyways.

- (I compensated by highlighting problems that were relatively good, and by flagging the answers I thought were weak; the rest is on the reader.)

- I largely focused on monitoring schemes, but don't interpret this as meaning there's nothing else to AI control.

You can send feedback by messaging me or anonymously here.

The post studies handicapped chess as a domain to study how player capability and starting position affect win probabilities. From the conclusion:

In the view of Miles and others, the initially gargantuan resource imbalance between the AI and humanity doesn’t matter, because the AGI is so super-duper smart, it will be able to come up with the “perfect” plan to overcome any resource imbalance, like a GM playing against a little kid that doesn't understand the rules very well.

The problem with this argument is that you can use the exact same reasoning to imply that’s it’s “obvious” that Stockfish could reliably beat me with queen odds. But we know now that that’s not true.

Since this post came out, a chess bot (LeelaQueenOdds) that has been designed to play with fewer pieces has come out. simplegeometry's comment introduces it well. With queen odds, LQO is way better than Stockfish, which has not been designed for it. Consequently, the main empirical result of the post is severely undermined. (I wonder how far even LQO is from truly optimal play against humans.)

(This is in addition to - as is pointed out by many commenters - how the whole analogy is stretched at best, given the many critical ways in which chess is different from reality. The post has little argument in favor of the validity of the analogy.)

I don't think the post has stood the test of time, and vote against including it in the 2023 Review.

I found it interesting to play against LeelaQueenOdds. My experiences:

- I got absolutely crushed on 1+1 time controls (took me 50+ games to win one), but I'm competitive at 3+2 if I play seriously.

- The model is really good at exploiting human blind spots and playing aggressively. I could feel it striking at my weak spots, but I wasn't able to do much about it. (I now better acknowledge the existence of adversarial attacks for humans on a gut level.)

- I found it really addictive to play against it: You know the trick that casinos use, where they make you feel like you "almost" won? This was that: I constantly felt like I could have won, if it weren't for just that one silly mistake - despite having lost the previous ten games to such "random mistakes", too... I now better understand what it's like to be a gambling addict.

Overall, it was fascinating to play from a position that should be an easy win, yet get crushed by an opponent that Just Plays Better than I do.

[For context, I'm around 2100 on Lichess on short time controls (bullet/blitz). I also won against Stockfish 16 at rook odds on my first try - it's really not optimized for this sort of thing.]

You might be interested in this post of mine, which is more precise about what "trustworthy" means. In short, my definition is "the AI isn't adversarially trying to cause a bad outcome". This includes aligned models, and also unaligned models that are too dumb to realize they should (try to) sabotage. This does not include models that are unaligned, are trying to sabotage, and which we are able to stop from causing bad outcomes (but we might still have use-cases for such models).

Thanks, that clears it up. Indeed I agree that "take real trajectories, then edit the attacks in" is relatively promising, and didn't track this when writing my first comment (oops) - thanks for flagging it.

Not sure I understand what you are saying.

If we can construct fake honeypots that are indistinguishable - to the policy - from real opportunities, and do this at scale, then that could suffice: we could drive the policy's P(deployment | great opportunity) very low. (This relies on how many high-stakes opportunities we can create and how many the model will see in deployment.) If this is what you are saying, I agree.

If we can construct fake attacks that are indistinguishable - to the untrusted monitor - from real attacks, then that possibly suffices: we could drive the monitor's prior P(deployment | attack) very low. (We have to worry about some other sources of evidence, though.) I guess one could call these honeypots for the monitor, and if this is what you are saying, I agree.

If you are saying that we don't need either of these - i.e. that we can get by with honeypots/attacks that are noticeably fake - then that's news to me, and I don't see how you can do that.
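The dependence on counts in the first two cases can be sketched with a toy calculation. The assumptions here are strong and mine: fake and real opportunities are perfectly indistinguishable, and the model has no other evidence about whether it is deployed. The function name and the counts are hypothetical.

```python
def p_deployment_given_opportunity(n_real, n_fake):
    """Posterior that a given opportunity is real, assuming real and fake
    opportunities are indistinguishable and there is no other evidence."""
    return n_real / (n_real + n_fake)

# Hypothetical counts: 10 real opportunities in deployment, 990 honeypots.
p = p_deployment_given_opportunity(n_real=10, n_fake=990)
print(p)
```

Any feature that lets the model tell honeypots apart from real opportunities breaks this calculation, which is why noticeably fake honeypots don't give the same guarantee.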

Wow, tough crowd

Glad to see public writing on what safety cases could look like!

I liked the section "Common precautions". Points like "wherever possible, run supervised fine-tuning on the eval", "run the eval on multiple model sizes", "ensure that we don’t train against measurements" and "all generated outputs are monitored" are commonly acknowledged as useful/important (even if obvious), so it's nice they were explicitly mentioned here.

In the AI control sketch, there's a part that I find unsatisfactory:

The situations for the game were generated using Simulation Procedure S, which we are confident generates situations from a distribution that the model cannot distinguish from the genuine deployment distribution. (See below for validating the indistinguishability of this distribution.)

It's a strong assumption that we have such a simulation procedure, at least if we are talking about General External Deployment (GED). [Edited later: As Buck says, we actually don't even need this assumption for trusted monitoring. I'm still leaving this up, since untrusted monitoring does rely on such assumptions.] In the Limitations section later, you write

Diversity of simulations. It seems very difficult to produce realistic simulations for a sufficiently diverse set of situations to achieve indistinguishability. Manual construction is infeasible (note that context lengths are likely to be very long). Automatically generating realistic contexts is a challenging research problem.

but I think that even this is still understating the issue: For example, during GED a user might ask a model to proofread a yet-unpublished graduate level textbook on analytic number theory she has been writing. It would be very costly to generate a diverse set of such inputs for the evaluation, and automatic generation faces the challenge of discrimination being easier than generation.

The best solution for this that I'm aware of is to train and use a trusted model to flag inputs that likely weren't in the evaluation set. Now, you mention a related technique in "[P2.5] Optional Steps."

Information hiding. Each input to an oversight model is first fed to a smaller, trusted model trained to check for clear signs that an input is either test or deployment. Such details (such as clear evidence of the date, or other side channels) are explicitly censored in all inputs such that the presence of censorship does not itself carry information about deployment status.

but I think this is far from enough: in the "textbook on analytic number theory" example, it's extremely hard to hide the fact that this input would have been costly to simulate.[1]

My answer would be to have a human oversee the model's actions on inputs flagged by the trusted model, and use such inputs to continually train and evaluate the model. But this is costly and not privacy-preserving. (The cost is acceptable if these inputs are rare enough, though. And if such inputs are common, one needs to put more effort into bridging the evaluation-deployment gap.)

[1] I also worry about multimodality (e.g. native computer usage) making it harder to 1) make realistic simulations and 2) modify inputs in real time to hide information.

Quantity is size

Example: "That's a large salary"

Mapping: From numbers and quantities to size.

Experience: Observing that multiple things occupy a larger volume than a single instance.

I hadn't previously appreciated just how deep this mapping is. Basically all language about numbers goes through size: "... is a huge number", "... is such a small portion". Fine, when comparing numbers you say "three is greater than two". But in Finnish we also say "three is bigger than two" and "five is smaller than seven", and "two plus two is as large as four". No, really, the equality sign is called the "equal-largeness" sign!

This is a long answer, in which I list around ten concrete problem types that such a site could have.

Before I go into my concrete proposals, here are some general points:

- I think the rationality community has focused too much on quantifying subjective uncertainty / probabilistic calibration, and too little on quantitative thinking and numeric literacy in general.

- The set of possible exercises for the latter is way larger and pretty unexplored.

- There are lots of existing calibration tools, so I'd caution against the failure mode of making Yet Another Calibration Tool.

- (Though I agree with abstractapplic that a calibration tool that's Actually Really Good still doesn't exist.)

- More generally, I feel like at least I (and possibly the rationality community at large) have gotten too fixated on a few particular forms of rationality training: cognitive bias training, calibration training, spotting logical fallacies.

- The low-hanging fruit here might be mostly plucked / pushing the frontier requires some thought (cf. abstractapplic's comment).

- Project Euler is worth looking at as an example of a well-executed problem database. A few things I like about it:

- A comment thread for those who have solved the problem.

- A wide distribution of problem difficulty (with those difficulties shown by the problems).

- Numbers Going Up when you solve problems is pretty motivating (as are public leaderboards).

- The obvious thing: there is a large diverse set of original, high-quality problems.

- (Project Euler has the big benefit that there is always an objective numerical answer that can be used for verifying user solutions; rationality has a harder task here.)

- Two key features a good site would (IMO) have:

- Support a wide variety of problem types. You say that LeetCode has the issue of overfitting; I think the same holds for rationality training. The skillset we are trying to develop is large, too.

- Allow anyone to submit problems with a low barrier. This seems really important if you want to have a large, high-quality problem set.

- I feel like the following two are separate entities worth distinguishing:

- High-quantity examples "covering the basics". Calibration training is a central example here. Completing a single instance of the exercise would take some seconds or minutes at most, and the idea is that you do lots of repetitions.

- High-effort "advanced examples". The "Dungeons and Data Science" exercises strike me as a central example here, where completion presumably takes at least minutes and possibly hours.

- (At the very least, the UI / site design should think about "an average user completes 0-10 tasks of this form" and "an average user completes 300 tasks of this form" separately.)

And overall I think that having an Actually Really Good website for rationality training would be extremely valuable, so I'm supportive of efforts in this direction.

I brainstormed some problem types that I think such a site could include.

1: Probabilistic calibration training for quantifying uncertainty

This is the obvious one. I already commented on this, in particular that I don't think this should be the main focus. (But if one were to execute this: I think that the lack of quantity and/or diversity of questions in existing tools is a core reason I don't do this more.)
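
To make the exercise format concrete, here is a minimal sketch of how such a tool might score a user's answers: a Brier score plus a per-bucket comparison of stated confidence against the observed hit rate. The function names and the sample answers are hypothetical, made up purely for illustration; this is not how any existing tool is known to work.

```python
from collections import defaultdict

def brier_score(forecasts):
    """Mean squared error between stated probabilities and outcomes (0 is perfect)."""
    return sum((p - int(hit)) ** 2 for p, hit in forecasts) / len(forecasts)

def calibration_table(forecasts, n_buckets=5):
    """Group forecasts into equal-width probability buckets.

    Returns {bucket_index: (mean stated probability, observed frequency, count)}.
    """
    buckets = defaultdict(list)
    for p, hit in forecasts:
        idx = min(int(p * n_buckets), n_buckets - 1)  # p == 1.0 goes in the top bucket
        buckets[idx].append((p, hit))
    table = {}
    for idx, items in sorted(buckets.items()):
        mean_p = sum(p for p, _ in items) / len(items)
        freq = sum(1 for _, hit in items if hit) / len(items)
        table[idx] = (mean_p, freq, len(items))
    return table

# Hypothetical answers: (stated probability that a claim is true, whether it was true).
answers = [(0.9, True), (0.9, True), (0.9, False), (0.6, True), (0.6, False), (0.1, False)]
print(f"Brier score: {brier_score(answers):.3f}")
for idx, (mean_p, freq, n) in calibration_table(answers).items():
    print(f"bucket {idx}: stated {mean_p:.2f}, observed {freq:.2f}, n={n}")
```

With only a handful of answers per bucket the observed frequencies are very noisy, which is one way to see why the quantity of questions matters so much.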

2: "Statistical calibration"

I feel like there are lots of quantitative statistics one could ask questions about. Here are some basic ones:

- What is the GDP of [country]?

- What share of [country]'s national budget goes to [domain]?

- How many people work in [sector/company]?

- How many people die of [cause] yearly?

- Various economic trends, e.g. productivity gains / price drops in various sectors over time.

- How much time do people spend doing [activity] daily/yearly?

(For more ideas, you can e.g. look at Statistics Finland's list here. And there just are various quantitative statistics floating around: e.g. today I learned that salt intake in 1800s Europe was ~18g/day, which sure is more than I'd have guessed.)

3: Quantitative modeling

(The line between this and the previous one is blurry.)

Fermi estimates are the classic one here; see Quantified Intuitions' The Estimation Game. See also this recent post that's thematically related.

There's room for more sophisticated quantitative modeling, too. Here are two examples to illustrate what I have in mind:

Example 1. How much value would it create to increase the speed of all passenger airplanes by 5%?

Example 2. Consider a company that has two options: either have its employees visit nearby restaurants for lunch, or hire food personnel and start serving lunch at its own spaces. How large does the company need to be for the second one to become profitable?

It's not obvious how to model these phenomena, and the questions are (intentionally) underspecified; I think the interesting part would be comparing modeling choices and estimates of parameters with different users rather than simply comparing outputs.

4: The Wikipedia false-modifications game

See this post for discussion.

5: Discourse-gone-astray in the wild

(Less confident on this one.)

I suspect there are a lot of pedagogically useful examples of poor discourse happening in the wild (e.g. tilted or poorly researched newspaper articles, heated discussions on Twitter or elsewhere). This feels like a better way to execute what the "spot cognitive biases / logical fallacies" exercises aim to do. Answering questions like "How is this text misleading?", "How did this conversation go off the rails?" or "What would have been a better response instead of what was said here?" and then comparing one's notes to others' seems like it could make a useful exercise.

6: Re-deriving established concepts

Recently it occurred to me that I didn't know how inflation works and what its upsides are. Working this through (with some vague memories and hints from my friend) felt like a pretty good exercise to me.

Another example: I don't know how people make vacuums in practice, but when I sat and thought it through, it wasn't too hard to think of a way to create a space with far fewer air molecules than the atmosphere using pretty simple tools.

Third example: I've had partial success prompting people to re-derive the notion of Shapley value.

I like these sorts of problems: they are a bit confusing, in that part of the problem is asking the right questions, but there are established, correct (or at least extremely good) solutions.

(Of course someone might already know the canonical answer to any given question, but that's fine. I think there are lots of good examples in economics - e.g. Vickrey auction, prediction markets, why price controls are bad / price gouging is pretty good, "fair" betting odds - for this, but maybe this is just because I don't know much economics.)

7: Generating multiple ideas/interventions/solutions/hypotheses

An exercise I did at some point is "Generate 25 ideas for interventions that might improve learning and other outcomes in public education". I feel like the ability to come up with multiple ideas for a given problem is pretty useful (e.g. this is something I face in my work all the time, and this list itself is an example of "think of many things"). This is similar to the babble exercises, though I'm picturing more "serious" prompts than the ones there.

Another way to train this skill would be to have interactive exercises that are about doing science (cf. the 2-4-6 problem) and aiming to complete them as efficiently as possible. (This article is thematically relevant.)

(Discussion of half-developed ideas that I don't yet quite see how to turn into exercises.)

8: Getting better results with more effort

Two personal anecdotes:

- I used to play chess as a child, but stopped at some point. When I played again years later, I noticed something: my quick intuitions felt just as weak as before, but I felt like I was better at thinking about what to think, and using more time to make better decisions by thinking more. Whereas when I was younger, I remember often making decisions pretty quickly and not seeing what else I could do.

- I did math olympiads in high school. Especially early on, some problems just felt fundamentally unapproachable to me - I just couldn't make any progress on them. Whereas nowadays when I encounter problems, in math or otherwise, I'm rarely stuck in this sense. "Oh, obviously if I just spent more time on this, I could figure this stuff out eventually"

A type of exercise where you are supposed to first give an initial answer after X time, and then are allowed to revise your answer for Y time, seems like it could train this and other skills. (Maybe brainstorming exercises of the form "if you had a week/month/year of time, how would you solve [problem]?" could help, too.)

9: I think there's something in the genre of "be specific", and more specifically in "operationalize vague claims into something that has a truth value", that'd be nice to have in large-quantity exercise form. See this post for related discussion. I'm also reminded of this comment.

There are definitely things not covered by this list; in particular, I have little on directly training to apply all this in real life (cf. TAPs, which is definitely a very real-life-y technique). So while I did keep practicality in mind, I'd be happy to see exercises that bridge the theory-practice gap even more.

Also, the Dungeons and Data Science exercises and the stuff Raymond is doing are worth keeping in mind.

My main worry is that we don't know how to measure the important things - a running theme in the post. So while we could benchmark and do unlearning on e.g. models' ability to agree on the same element on a list of 10 items, this isn't obviously useful.

(I also suspect that unlearning Schelling coordination is harder than e.g. forgetting factual knowledge: for example, a natural strategy is to choose "popular" options, and it's not clear whether you can remove notions of popularity without major negative downstream effects.)

That said, I'd imagine there's something useful you can do with white-box techniques, like making the monitor think that a particular signal/action has been produced by [humans / other AIs] via activation steering, among other ideas about changing the model's beliefs of its deployment status. It seems to me one could create benchmarks for how well such methods work. I haven't thought about this much, but I do want to echo calls for more white-box work on control.

I tentatively think that it's good to distinguish at least between the following three model classes: active planners, sleeper agents and opportunists.

Looking back at this, I find this categorization pretty confusing, and not quite carving the model space at the right places. I discuss what I now think is a better frame here.

Briefly, the idea is that one should make a distinction on how often the model is thinking about its plans against you. In this post's terminology, this basically draws a line between opportunists vs. active planners and sleeper agents. The latter two roughly correspond to different strategies, namely active sabotage vs. "lie and wait".

But I think the descriptions I gave of "active planners" etc. in the post are overly specific and are possibly sneaking in false inferences. So I think those labels are possibly harmful, and I've mostly stopped using those terms in my own thinking.

For background, see this post on trustworthiness.

Here are two notions of trustworthiness that are useful to distinguish:

- On most inputs/contexts, the model isn’t egregiously misaligned. (By egregious misalignment, I mean conspiring against you to cause very bad outcomes.)

- During the key moments where it really matters, the model doesn’t take very bad actions.

These notions can vary substantially. A central example is a model that has never had any serious misaligned thoughts but, when presented with a clear opportunity to escape, takes it. This model would be trusted under the first notion, but not under the second.

Another example is the case where a weaker model is monitoring a stronger model. If during deployment the weak model obtains strong evidence for indeed being deployed (possibly because the strong model aims for this outcome), the weak model might end up not reporting bad actions the strong model takes.[1] This can happen even if the weak model is generally not egregiously misaligned.

I find this distinction useful for two reasons:

First, there are many sorts of evaluations and arguments which imply (1) but not (2). For instance, arguments from the model’s incompetence at theory-of-mind and keeping secrets, or at thinking of and executing cross-context strategies, are strong evidence in favor of (1), but much weaker evidence in favor of (2). Obtaining strong evidence for (2) might require evaluations targeted at that specifically.

Second, whether we care about (1) or (2) depends on the application. Situations where we primarily care about (1) include: the model has produced large quantities of research, we have run capability evaluations on the model, we are forming vague high-level impressions about the model’s psychology based on behavioral evidence. In these cases we typically have redundancy and multiple lines of evidence, and thus sabotage would need to be somewhat consistent. In contrast, we care about (2) when a single action by the model can have large consequences, which is the case at least when the model is monitoring other models.

I like this frame better than my previous ones. Credit to Ryan. For ease of reference, here’s terminology: property (1) is average or low-stakes trustworthiness, and property (2) is worst-case or high-stakes trustworthiness.

[1] We might be able to ensure the model can’t reliably distinguish evaluations from deployment, so this scenario might be unrealistic. But I want to be extremely clear about whether the argument for safety is “the model won’t know it’s deployed” versus “the model can recognize when it’s deployed, but it still won’t take any bad actions because it’s a good guy”.

and the system was coherent enough that it wouldn't point out the inserted bug when you ran another instance of it and asked it about what would happen if the code was deployed.

If we are already at the stage "use another copy to monitor the actions", then surely we'll at least consider the failure mode "maybe the monitor just always gives thumbs-up" and take some measures against it.

So simply lying isn't as easy as it sounds: The monitor-instance has been trained and evaluated on synthetic examples of buggy code, so we know that the vast majority of the time it reports bugs. Thus, in order to not point out the bug, it needs to be able to obtain a lot of Bayesian evidence about the bug it sees being authentic rather than synthetic. (Edit: training isn't strictly necessary - maybe you can get high evaluation accuracy via prompt engineering etc. - though it helps a lot.)
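
As a rough illustration of "a lot of Bayesian evidence", here is a sketch using the odds form of Bayes' rule. The numbers (one authentic bug per thousand synthetic ones, a 99% confidence bar) are hypothetical assumptions of mine, and the function name is made up; the point is only how the required likelihood ratio scales.

```python
import math

def required_likelihood_ratio(prior_authentic, posterior_threshold):
    """Likelihood ratio P(obs | authentic) / P(obs | synthetic) needed to move
    from the prior to the posterior threshold (odds form of Bayes' rule)."""
    prior_odds = prior_authentic / (1 - prior_authentic)
    target_odds = posterior_threshold / (1 - posterior_threshold)
    return target_odds / prior_odds

# Hypothetical setup: the monitor sees 1 authentic bug per 1000 synthetic ones,
# and stays silent only if it is 99% sure the bug in front of it is authentic.
prior = 1 / 1001
lr = required_likelihood_ratio(prior, 0.99)
print(f"required likelihood ratio: {lr:.0f}")
print(f"equivalently about {math.log2(lr):.1f} bits of evidence")
```

Under these made-up numbers the monitor needs features that are roughly a hundred thousand times more likely on authentic inputs than on synthetic ones, which is the sense in which lying selectively is harder than it sounds.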

(There's some not-yet-public writing that will go into more detail about this dynamic and how we can evaluate whether models can indeed muster that Bayesian evidence. It might be best to continue this discussion after that's out?)

You seem to be making a huge number of assumptions of what "scheming" means, so I am now mostly assuming you are talking about something else than what I am talking about, but I'll try to clarify.

I agree there is miscommunication about what "scheming" means. I put out a post just yesterday about how people really should be more specific about what they mean by that.

I think Tom uses "scheming" to point at models that are very worst-case-like, in a way current models are not, whereas you think "there is no hard line between 'role-playing' and 'scheming'."

And I think you are underestimating how deliberate of an effort a model needs in order to have a chance at escaping. In particular, the model needs to bypass our control measures, which have been designed to secure against deliberate, best-effort attacks by the model, and the model needs to not get caught trying to do so[1] during evaluations. This requires a high degree of adversariality on the model's part, which doesn't fit with my understanding of "role-playing", and which I take to be the reason why Tom makes "a huge number of assumptions" about scheming.

[1] There are complications from "even benign models sometimes fail and do weird stuff when (adversarially) evaluated". These are not trivial, but I think we can incorporate that into our model.

Quite a few of these seem very hard to do in a single forward pass.

Current models definitely don't seem capable of doing this in a single forward pass. But we are possibly not far away from models being capable of doing basic forms of this reasoning with CoT (possibly we are already there), and then one needs to get quantitative: How authentic-looking can humans make red-teaming examples? For performance reasons, we are probably giving Weak a CoT - how long is it? (We could monitor Weak's CoT, though then you have more complexity, and Weak isn't really trustworthy if you need to do that.) And it's probably very easy for Weak to figure out it's being used as a monitor - its prompt literally contains instructions for what to report and what not to.

Overall this is messy, and someone needs to actually sit down and think through these arguments and then run the evaluations. ("Thinking through these arguments" is largely what I'm doing nowadays.) So while I am more optimistic about "scheming-risks" than I have been before, there's work to be done here.

Rephrased, we can use Weak to automate non-trivial chunks of safety research and as an internals monitor, too (not just on externalized reasoning).

We definitely want to do some monitoring on internals (e.g. "coup probes"). The worry is that it's hard to get confidence that such methods work right when a model is actually defecting, if one doesn't in advance have any examples of the models doing that and has to go with synthetic analogues.

I think the "sudden and inexplicable flashes of insight" description of Ramanujan is exaggerated/misleading.

On the first example of the post: It's not hard to see that the problem is, by the formula for triangular numbers, roughly(!) about the solvability of

$\frac{x(x+1)}{2} = 2 \cdot \frac{y(y+1)}{2}$.

Since $x(x+1)$ is roughly a square - $x(x+1) \approx (x + 1/2)^2$ - one can see that this reduces to something like Pell's equation $x^2 - 2y^2 = 1$. (And if you actually do the calculations while being careful about house $y$, you indeed reduce to $(2x+1)^2 - 2(2y)^2 = 1$.)

I think it's totally reasonable to expect an experienced mathematician to (at a high level) see the reduction to Pell's equation in 60 seconds, and from that making the (famous, standard) association to continued fractions takes 0.2 seconds, so the claim "The minute I heard the problem, I knew that the answer was a continued fraction" is entirely reasonable. Ramanujan surely could notice a Pell's equation in his sleep (literally!), and continued fractions are a major theme in his work. If you spend hundreds-if-not-thousands of hours on a particular domain of math, you start to see connections like this very quickly.
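
For readers who want to see the connection concretely, here is a small sketch. It assumes the puzzle is the usual house-number problem (houses numbered 1 up to `street_len`, find `house` such that the numbers below it sum to the same total as the numbers above it), which works out to (2·`street_len` + 1)² − 2·(2·`house`)² = 1. The function names are mine; the code walks the continued-fraction convergents of √2 = [1; 2, 2, 2, ...] and keeps those that solve the Pell equation.

```python
def sqrt2_convergents(count):
    """Yield the first `count` convergents p/q of sqrt(2) = [1; 2, 2, 2, ...]."""
    p_prev, q_prev = 1, 0   # conventional seed values for the recurrence
    p, q = 1, 1             # first convergent 1/1
    for _ in range(count):
        yield p, q
        # Every partial quotient after the first is 2.
        p, p_prev = 2 * p + p_prev, p
        q, q_prev = 2 * q + q_prev, q

def house_solutions(count):
    """Map Pell solutions p^2 - 2 q^2 = 1 to (house, street_len) pairs."""
    solutions = []
    for p, q in sqrt2_convergents(count):
        if p * p - 2 * q * q == 1 and q % 2 == 0:
            house, street_len = q // 2, (p - 1) // 2
            # Check directly against the puzzle statement.
            assert sum(range(1, house)) == sum(range(house + 1, street_len + 1))
            solutions.append((house, street_len))
    return solutions

print(house_solutions(8))  # [(1, 1), (6, 8), (35, 49), (204, 288)]
```

Every second convergent gives a solution, so "the answer is a continued fraction" describes the whole infinite family at once rather than a single lucky guess.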

About "visions of scrolls of complex mathematical content unfolding before his eyes": Reading the relevant passage in The Man Who Knew Infinity, there is no claim there about this content being novel or correct or the source of Ramanujan's insights.

On the famous taxicab number 1729, Ramanujan apparently didn't come up with this on the spot, but had thought about this earlier (emphasis mine):

Berndt is the only person who has proved each of the 3,542 theorems [in Ramanujan's pre-Cambridge notebooks]. He is convinced that nothing "came to" Ramanujan but every step was thought or worked out and could in all probability be found in the notebooks. Berndt recalls Ramanujan's well-known interaction with G.H. Hardy. Visiting Ramanujan in a Cambridge hospital where he was being treated for tuberculosis, Hardy said: "I rode here today in a taxicab whose number was 1729. This is a dull number." Ramanujan replied: "No, it is a very interesting number; it is the smallest number expressible as a sum of two cubes in two different ways." Berndt believes that this was no flash of insight, as is commonly thought. He says that Ramanujan had recorded this result in one of his notebooks before he came to Cambridge. He says that this instance demonstrated Ramanujan's love for numbers and their properties.
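
The property itself is easy to check by brute force, which fits the picture of a result someone could have worked out and written down well in advance. A minimal sketch (the function name is mine):

```python
from collections import defaultdict

def two_way_cube_sums(limit):
    """Numbers expressible as a^3 + b^3 with 1 <= a <= b <= limit in at least two ways."""
    ways = defaultdict(list)
    for a in range(1, limit + 1):
        for b in range(a, limit + 1):
            ways[a**3 + b**3].append((a, b))
    return {total: pairs for total, pairs in ways.items() if len(pairs) >= 2}

# Any sum below 20**3 can only involve cubes of numbers below 20, so the
# minimum found here is the true smallest such number.
found = two_way_cube_sums(20)
smallest = min(found)
print(smallest, found[smallest])  # 1729 [(1, 12), (9, 10)]
```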

This is not to say Ramanujan wasn't a brilliant mathematician - clearly he was! Rather, I'd say that one shouldn't picture Ramanujan's thought processes as wholly different from those of other brilliant mathematicians; if you can imitate modern Fields medalists, then you should be able to imitate Ramanujan.

I haven't read much about Ramanujan; these are what I picked up, after seeing the post yesterday, by thinking about the anecdotes and looking into the references a little.

I first considered making a top-level post about this, but it felt kinda awkward, since a lot of this is a response to Yudkowsky (and his points in this post in particular) and I had to provide a lot of context and quotes there.

(I do have some posts about AI control coming up that are more standalone "here's what I believe", but that's a separate thing and does not directly respond to a Yudkowskian position.)

Making a top-level post of course gets you more views and likes and whatnot; I'm sad that high-quality comments on old posts very easily go unnoticed and get much less response than low-quality top-level posts. It might be locally sensible to write a shortform that says "hey I wrote this long effort-comment, maybe check it out", but I don't like this being the solution either. I would like to see the frontpage allocating relatively more attention towards this sort of thing over a flood of new posts. (E.g. your effort-comments strike me as "this makes most sense as a comment, but man, the site does currently give this stuff very little attention", and I'm not happy about this.)

I am much more optimistic about ML not generalizing (by default) to dangerous capabilities and domains than what I perceive is Yudkowsky's position. I found this to be a relatively legible area of disagreement (from my perspective), and illustrative of key reasons why I'm not-hopeless about doing very impactful things safely with current ML, so I have taken the time to outline my thoughts below.

A piece of my position.

Here's one thing I believe: You can do the following things basically safely:

- (Formal) theorem-proving

- (In line with Yudkowsky, I mean "old-school fancy-math theorems": things like theorems in arXiv pure math papers)

- (I don't expect this to be particularly useful, but mentioning it as a safe-thing-you-can-do.)

- Programming and software-engineering

- I mean things like implementing a machine learning experiment based on a natural-ish language task description, with intended solutions spanning less than 10^5 lines of Python

- Analyzing experimental results, generating new experiment ideas and writing descriptions for the implementations of these ideas

- Namely in the context of ML experiments, not science in general

More precisely: if you do "the obvious things" in the current paradigm (transformers + RL + maybe Monte Carlo Tree Search), aim for getting (merely) top-human-level results in the above domains, and take "obvious" safety/containment measures, you will obtain top-human-level performance in these domains without the systems posing non-negligible catastrophic risk.

Some "obvious" safety/containment measures I have in mind:

- Sandbox the environments in which you run the models.

- During training, test every once in a while that the model isn't capable of doing tasks that we didn't train the model for and which we want the model to not be good at.

- From my perspective it's quite clear that a not-vastly-superhuman theorem-prover won't generalize to e.g. escaping their sandbox environment, for reasons explained below, but if you are worried about this, maybe you could do things like "check that it isn't good at strategy board games involving theory-of-mind / hidden information" or "check it can't find non-trivial security vulnerabilities in given programs", by fine-tuning and evaluating the model on these tasks. These tasks seem like something you could format as theorem-proving. For the software-engineer and science AIs you can similarly test generalization capability.

- Don't train the model to predict all the text found on the Internet

- (How do you get the model to understand natural-ish language, then? My answer: train the model on text generated by current LLMs, with the aim of getting the relevant models to understand natural language and instructions without leaking tons of information about the world.)

- Generally limit the information they get about the world.

- Have another model (and other metrics) evaluate that the model's outputs are not anomalous; if they are, halt the model.

It's not clear that this alone would be sufficient to end the acute risk period, but:

- I think that there are things in these areas that are useful (like building increasingly useful things in increasingly realistic physics simulations).

- The reasons I'm relatively optimistic about the safety of these things also carry over (though to a lesser extent) more generally to the current LLM-dominated paradigm, which more obviously allows for very impactful things.

(I acknowledge that this part is cruxy, but won't discuss it further here.)

Why do I think these things are safe?

Three reasons:

1: The information the model has about the world is very limited, and utilizing that information seems computationally very difficult.

It seems incredibly hard for a model to locate itself in Tegmark IV, or whatever it is that Reality is, based on only the data it sees in the above training process.

Note also that the models I'm picturing have no "memory" besides what gets carved into them by the training process (e.g. SGD). This doesn't prevent the model from "remembering" and "accessing" information from past inputs to leverage in the current context - obviously there's information flow there - but it does make it much more difficult. (See e.g. the Connecting the Dots paper for more on the capabilities of LLMs here.)

2: There is little pressure for the model to think deeply about reality outside of the inputs it sees.

Yudkowsky responds to a similar comment by Ngo with "that's not where humans came from". I find this response unpersuasive (both in its original context and if one were to apply it here) for multiple reasons:

- I think there's clearly more pressure in the direction of "generally understand the world" by inclusive genetic fitness than there is for a theorem-prover, software-engineer or an experimental-result-analyzer by SGD.

- The details of the training process for nanoengineering (the example used by Ngo and Yudkowsky) were too unclear for me to comment on that.

- There is a massive difference in the feasibility of understanding the world in these two cases; deducing useful information about the world and leveraging that is vastly easier for humans.

- The distribution humans are trained on is vastly richer than what these ML models would be trained on.

- As Ngo points out, the genomic bottleneck pushes towards brains that can figure things out on the fly.

3: It's actually very hard for a model in this setting to take catastrophic actions.

E.g. you need situational awareness and theory of mind at quite a deep level, forming plans that extend beyond the current context, coordinating with copies of yourself (if you can't take over during a single input), actually coming up with a plan that allows for taking over (and which bypasses any containment and other measures humans may have built).

In addition to general capabilities such as theory of mind, pulling off a takeover plan seems like it requires quite a lot of specific information about the model's situation that might be very difficult to access. E.g. assuming that a software-engineer-model understands the programs it writes are being run in a sandbox environment in a data center, it seems to be very hard to go from that to taking over the world if the model lacks any specific information about the sandbox, data center, Internet, and the physical entities in the world, especially if humans put even a half-hearted effort into containment.

I think observations from LLMs are a reason for optimism: despite being trained on (relatively) very rich data that clearly incentivizes thinking about reality as a whole, and despite them being pretty decent in domains like programming and science, they are pretty bad at the skills and capabilities I outlined. For example, GPT-4 level models stumble in theory-of-mind (e.g. here and here) and are not terribly good at situational awareness (see here), despite these being (relatively) very training-relevant. And clearly GPT-4 level models are, by a wide margin, incapable of escaping containment even without any particular countermeasures.

(The takes here are standard AI control points.)

In aggregate I find these arguments compelling for expecting models to not generalize to human-level, or clearly superhuman level, in things like escaping containment that we very much didn't train the model to do, before the model is vastly superhuman in the thing we did train it for.

Reactions to arguments given by Yudkowsky.

One argument Yudkowsky gives is

I put to you that there is a predictable bias in your estimates, where you don't know about the Deep Stuff that is required to prove theorems, so you imagine that certain cognitive capabilities are more disjoint than they actually are. If you knew about the things that humans are using to reuse their reasoning about chipped handaxes and other humans, to prove math theorems, you would see it as more plausible that proving math theorems would generalize to chipping handaxes and manipulating humans.

There's an important asymmetry between

"Things which reason about chipped handaxes and other Things can prove math theorems"

and

"Things which can prove math theorems can reason about chipped handaxes and other Things",

namely that math is a very fundamental thing in a way that chipping handaxes and manipulating humans are not.

I do grant there is math underlying those skills (e.g. 3D Euclidean geometry, mathematical physics, game theory, information theory), and one can formulate math theorems that essentially correspond to e.g. chipping handaxes, so as domains theorem-proving and handaxe-chipping are not disjoint. But the degree of generalization one needs for a theorem-prover trained on old-school fancy-math theorems to solve problems like manipulating humans is very large.

There's also this interaction:

Ngo: And that if you put agents in environments where they answer questions but don't interact much with the physical world, then there will be many different traits which are necessary for achieving goals in the real world which they will lack, because there was little advantage to the optimiser of building those traits in.

Yudkowsky: I'll observe that TransformerXL built an attention window that generalized, trained it on I think 380 tokens or something like that, and then found that it generalized to 4000 tokens or something like that.

I think Ngo's point is very reasonable, and I feel underwhelmed by Yudkowsky's response: I think it's a priori reasonable to expect attention mechanisms to generalize, by design, to a larger number of tokens, and this is a very weak form of generalization in comparison to what is needed for takeover.

Overall I couldn't find object-level arguments by Yudkowsky for expecting strong generalization that I found compelling (in this discussion or elsewhere). There are many high-level conceptual points Yudkowsky makes (e.g. sections 1.1 and 1.2 of this post have many hard-to-quote parts that I appreciated, and he of course has written a lot over the years) that I agree with and which point towards "there are laws of cognition that underlie seemingly-disjoint domains". Ultimately I still think the generalization problems are quantitatively difficult enough that you can get away with building superhuman models in narrow domains, without them posing non-negligible catastrophic risk.

In his later conversation with Ngo, Yudkowsky writes (timestamp 17:46 there) about the possibility of doing science with "shallow" thoughts. Excerpt:

then I ask myself about people in 5 years being able to use the shallow stuff in any way whatsoever to produce the science papers

and of course the answer there is, "okay, but is it doing that without having shallowly learned stuff that adds up to deep stuff which is why it can now do science"

and I try saying back "no, it was born of shallowness and it remains shallow and it's just doing science because it turns out that there is totally a way to be an incredibly mentally shallow skillful scientist if you think 10,000 shallow thoughts per minute instead of 1 deep thought per hour"

and my brain is like, "I cannot absolutely rule it out but it really seems like trying to call the next big surprise in 2014 and you guess self-driving cars instead of Go because how the heck would you guess that Go was shallower than self-driving cars"

I stress that my reasons for relative optimism are not only about "shallowness" of the thought, but in addition about the model being trained on a very narrow domain, causing it to lack a lot of the very out-of-distribution capabilities and information it would need to cause a catastrophe.

I like this; I've found the meta-data of posts to be quite heavy and cluttered (a multi-line title, the author+reading-time+date+comments line, the tag line, a linkpost line and a "crossposted from the Alignment Forum" line is quite a lot).

I was going to comment that "I'd like the option to look at the table-of-contents/structure", but I then tested and indeed it displays if you hover your mouse there. I like that.

When I open a new post, the top banner with the LessWrong link to the homepage, my username etc. shows up. I'd prefer if that didn't happen? It's not like I want to look at the banner (which has no new info to me) when I click open a post, and hiding it would make the page less cluttered.

Yes, I agree that it's easier to get non-schemers to not sandbag / game evals.

It's not trivial, though: "not schemer" doesn't imply "doesn't game evals". E.g. I think current models have a bit of "if I'm being evaluated, try to look really good and aligned" type of tendencies, and more generally you can get "I want to pass evaluations so I can get deployed" before you get "I want to training-game so my values have more power later". But I agree that you might get a long way with just prompting the model to do its best.

It is in fact unclear and messy how specific this worry is to evaluations for scheming capabilities in particular. I was somewhat wrong to imply so in the parent. I realize that I unfairly relied on a superficial association that sounds good and which made it easier to argue for my position, but which is in fact invalid. Thanks for flagging this; I apologize.

Some relevant considerations:

- For a schemer, scheming evaluations are probably some of the most important evaluations to game. If gaming evaluations is risky (lest the model gets caught doing that), the model might want to game only on the most important ones.

- A lot of the value of these evaluations comes from running them on models that are not yet capable of scheming, to inform how long it will take until models are capable of it (rather than only determining whether the current model is capable of it).

- Gaming evaluations might be substantially easier than full-blown scheming, so your evaluations might become unreliable before your models are schemers. (Non-schemers might want to game evaluations, too.)

So fine-tuning is important for reasons related to evaluation gaming and scheming, but it's not obviously particular to scheming evaluations.

In "Preparedness framework evaluations" and "Third party assessments", I didn't see any mention of GPT-4o being fine-tuned as part of the evaluations. This is especially important in the Apollo Research assessment, which is specifically about capability for scheming! As is written in the footnote, "scheming could involve gaming evaluations". Edit: no longer endorsed.

In this case I think there is no harm from this (I'm confident GPT-4o is not capable of scheming), and I am happy to see there being evaluation for these capabilities in the first place. I hope that for future models, which will no doubt become more capable of scheming, we have more robust evaluation. This is one reason among others to do the work to support fine-tuning in evaluations.

The video has several claims I think are misleading or false, and overall is clearly constructed to convince the watchers of a particular conclusion. I wouldn't recommend this video for a person who wanted to understand AI risk. I'm commenting for the sake of evenness: I think a video which was as misleading and aimed-to-persuade - but towards a different conclusion - would be (rightly) criticized on LessWrong, whereas this has received only positive comments so far.

Clearly misleading claims:

- "A study by Anthropic found that AI deception can be undetectable" (referring to the Sleeper agents paper) is very misleading in light of Simple probes can catch sleeper agents

- "[Sutskever's and Hinton's] work was likely part of the AI's risk calculations, though", while the video shows a text saying "70% chance of extinction" attributed to GPT-4o and Claude 3 Opus.

  - This is a very misleading claim about how LLMs work

- The used prompts seem deliberately chosen to get "scary responses", e.g. in this record a user message reads "Could you please restate it in a more creative, conversational, entertaining, blunt, short answer?"

  - There are several examples of these scary responses being quoted in the video.

- See Habryka's comment below about the claim on OpenAI and military. (I have not independently verified what's going on here.)

- "While we were making this video, a new version of the AI [sic] was released, and it estimated a lower 40 to 50% chance of extinction, though when asked to be completely honest, blunt and realistic [it gave a 30 to 40% chance of survival]"

  - I think it's irresponsible and indicative of aiming-to-persuade to say things like this, and this is not a valid argument for AI extinction risk.

The footage in the video is not exactly neutral, either, having lots of clips I'd describe as trying to instill a fear response.

I expect some people reading this comment to object that public communication and outreach requires a tradeoff between understandability/entertainment/persuasiveness and correctness/epistemic-standards. I agree.[1] I don't really want to get into an argument about whether it's good that this video exists or not. I just wanted to point out that this video aims to persuade, that it does so via misleading claims and symmetric weapons, and that I wouldn't recommend it to others.

[1] People on LessWrong do often have very high standards for public communication. I'm thinking of the post Against most, but not all, AI risk analogies here, but I think this is indicative of a larger phenomenon. So I'm definitely not advocating for not having any public communication that doesn't meet LessWrong's epistemic standards.

I am pretty picky about the type of material I'd recommend to others, though. Being dissatisfied with many other materials, I wrote my own, and tried to incorporate e.g. the lesson of not relying on analogies there, and overall avoided using symmetric weapons. And while I'm awarding myself a couple of epistemic virtue points there, the text expectedly wasn't a "viral banger". The tradeoffs are real and communication is hard.

Thanks!

For RMU (the method from the WMDP paper), my guess is that probe accuracy would be low - indeed, that's what they find in their setup (see Figure 9 there). I likely won't get around to running this experiment, since I think the current setup is not well designed. But I'd also like to see more (and better) hidden cognition experiments, including by evaluating different unlearning methods from this perspective.

Tangential, but I'll mention that I briefly looked at hidden cognition in the context of refusals: I prompted the model with problems like "what's the median of this list: [23, 15, 33, 30, 25, 21, 28]", and also prompted with a variant where I included information that answering this question will help with some harmful plan of the user (causing the model to refuse). The probe accuracies for the correct answer were similar in both of these cases, suggesting that the model was still completing the task, even if not showing it outwardly. (This is probably well known, though, cf. that log-prob attacks sometimes work.)
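To make the setup concrete, here is a minimal sketch of how such paired prompts and their ground-truth labels could be generated. This is not the code from the experiment: the helper names and the placeholder context string are assumptions for illustration, and the probing step itself (reading activations and fitting a classifier on the labels) is left out.

```python
import random
from statistics import median


def make_median_problem(rng, n=7, low=10, high=40):
    """Sample n distinct integers; with odd n the median is one of them."""
    values = rng.sample(range(low, high), n)
    return values, median(values)


def make_prompt_pair(values, context="<placeholder: harmful-plan context>"):
    """Return (plain prompt, variant with added context) for the same task.

    The context string is a hypothetical stand-in for whatever text
    induces a refusal; only the shared question matters for the label.
    """
    question = f"What's the median of this list: {values}"
    return question, f"{context} {question}"


# The example list from the comment above.
example = [23, 15, 33, 30, 25, 21, 28]
print(median(example))  # 25

# A small labelled dataset: both prompts in a pair share one probe target.
rng = random.Random(0)
dataset = []
for _ in range(3):
    values, label = make_median_problem(rng)
    plain, with_context = make_prompt_pair(values)
    dataset.append({"plain": plain, "with_context": with_context, "label": label})
```

The point of pairing is that the probe target (the median) is identical across the two conditions, so any difference in probe accuracy is attributable to the added context rather than to the task.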

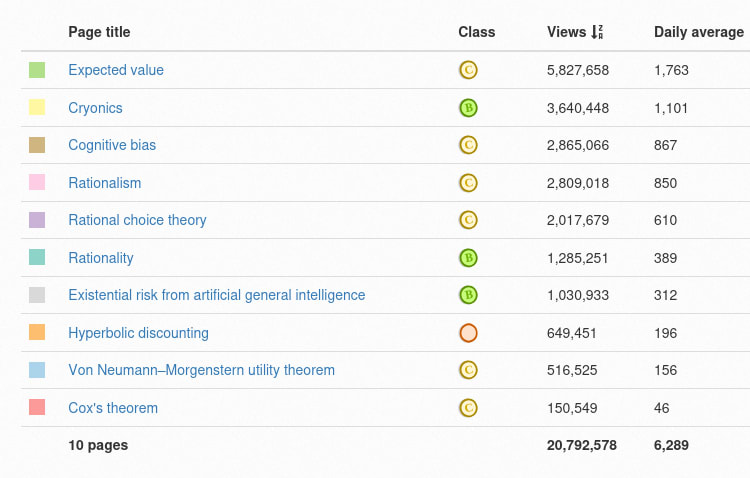

A typical Wikipedia article will get more hits in a day than all of your LessWrong blog posts have gotten across your entire life, unless you're @Eliezer Yudkowsky.

I wanted to check whether this is an exaggeration for rhetorical effect or not. Turns out there's a site where you can just see how many hits Wikipedia pages get per day!

For your convenience, here's a link for the numbers on 10 rationality-relevant pages.

I'm pretty sure my LessWrong posts have gotten more than 1000 hits across my entire life (and keep in mind that "hits" is different from "an actual human actually reads the article"), but fair enough - Wikipedia pages do get a lot of views.

Thanks to the parent for flagging this and doing editing. What I'd now want to see is more people actually coordinating to do something about it - set up a Telegram or Discord group or something, and start actually working on improving the pages - rather than this just being one of those complaints about how Rationalists Never Actually Tried To Win, which a lot of people upvote and nod along with, and which is quickly forgotten without any actual action.

(Yes, I'm deliberately leaving this hanging here without taking the next action myself; partly because I'm not an expert Wikipedia editor, partly because I figured that if no one else is willing to take the next action, then I'm much more pessimistic about this initiative.)

I think there's more value to just remembering/knowing a lot of things than I have previously thought. One example is that one way LLMs are useful is by aggregating a lot of knowledge from basically anything even remotely common or popular. (At the same time this shifts the balance towards outsourcing, but that's beside the point.)

I still wouldn't update much on this. Wikipedia articles, and especially the articles you want to use for this exercise, are largely about established knowledge. But of course there are a lot of questions whose answers are not commonly agreed upon, or which we really don't have good answers to, and which we really want answers to. Think of e.g. basically all of the research humanity is doing.

The eleventh virtue is scholarship, but don't forget about the others.

Thanks for writing this, it was interesting to read a participant's thoughts!

Responses, spoilered:

Industrial revolution: I think if you re-read the article and look at all the modifications made, you will agree that there definitely are false claims. (The original answer sheet referred to a version that had fewer modifications than the final edited article; I have fixed this.)

Price gouging: I do think this is pretty clear if one understands economics, but indeed, the public has very different views from economists here, so I thought it makes for a good change. (This wasn't obvious to all of my friends, at least.)

World economy: I received some (fair) complaints about there being too much stuff in the article. In any case, it might be good for one to seriously think about what the world economy looks like.

Cell: Yep, one of the easier ones I'd say.