When do "brains beat brawn" in Chess? An experiment

post by titotal (lombertini) · 2023-06-28T13:33:23.854Z · LW · GW · 106 commentsThis is a link post for https://titotal.substack.com/p/when-do-brains-beat-brawn-in-chess

Contents

Analysis of my tradeoff of material and ELO: Odds of rook: Odds of two bishops: Odds of queen: Can brawn beat an AGI? None 107 comments

As a kid, I really enjoyed chess, as did my dad. Naturally, I wanted to play him. The problem was that my dad was extremely good. He was playing local tournaments and could play blindfolded, while I was, well, a child. In a purely skill based game like chess, an extreme skill imbalance means that the more skilled player essentially always wins, and in chess, it ends up being a slaughter that is no fun for either player. Not many kids have the patience to lose dozens of games in a row and never even get close to victory.

This is a common problem in chess, with a well established solution: It’s called “odds”. When two players with very different skill levels want to play each other, the stronger player will start off with some pieces missing from their side of the board. “Odds of a queen”, for example, refers to taking the queen of the stronger player off the board. When I played “odds of a queen” against my dad, the games were fun again, as I had a chance of victory and he could play as normal without acting intentionally dumb. The resource imbalance of the missing queen made the difference. I still lost a bunch though, because I blundered pieces.

Now I am a fully blown adult with a PhD, I’m a lot better at chess than I was a kid. I’m better than most of my friends that play, but I never reached my dad’s level of chess obsession. I never bothered to learn any openings in real detail, or do studies on complex endgames. I mainly just play online blitz and rapid games for fun. My rating on lichess blitz is 1200, on rapid is 1600, which some calculator online said would place me at ~1100 ELO on the FIDE scale.

In comparison, a chess master is ~2200, a grandmaster is ~2700. The top chess player Magnus Carlsen is at an incredible 2853. ELO ratings can be used to estimate the chance of victory in a matchup, although the estimates are somewhat crude for very large skill differences. Under this calculation, the chance of me beating a 2200 player is 1 in 500, while the chance of me beating Magnus Carlsen would be 1 in 24000. Although realistically, the real odds would be less about the ELO and more on whether he was drunk while playing me.

Stockfish 14 has an estimated ELO of 3549. In chess, AI is already superhuman, and has long since blasted past the best players in the world. When human players train, they use the supercomputers as standards. If you ask for a game analysis on a site like chess.com or lichess, it will compare your moves to stockfish and score you by how close you are to what stockfish would do. If I played stockfish, the estimated chance of victory would be 1 in 1.3 million. In practice, it would be probably be much lower, roughly equivalent to the odds that there is a bug in the stockfish code that I managed to stumble upon by chance.

Now that we have all the setup, we can ask the main question of this article:

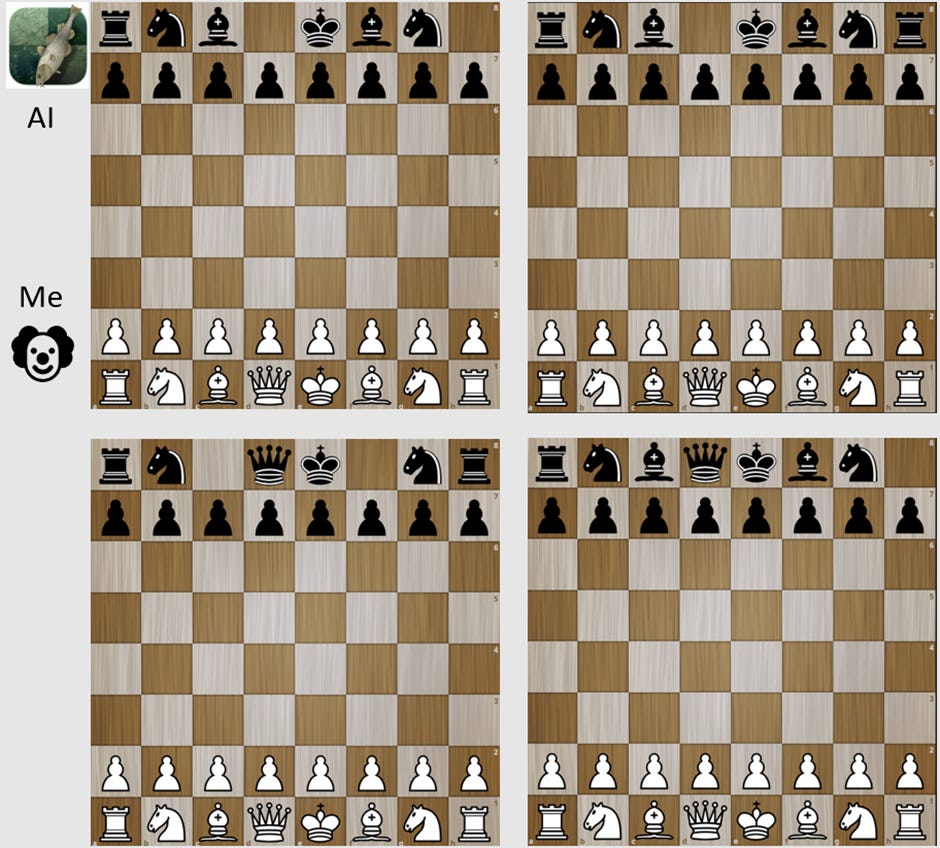

What “odds” do I need to beat stockfish 14[1] in a game of chess? Obviously I can win if the AI only has a king and 3 pawns. But can I win if stockfish is only down a rook? Two bishops? A queen? A queen and a rook? More than that? I encourage you to pause and make a guess. And if you can play chess, I encourage you to guess as to what it would take for you to beat stockfish. For further homework, you can try and guess the odds of victory for each game in the picture below.

The first game I played against stockfish was with queen odds.

I won on the first try. And the second, and the third. It wasn’t even that hard. I played 10 games and only lost 1 (when I blundered my queen stupidly).

The strategy is simple. First, play it safe and try not to make any extreme blunders. Don’t leave pieces unprotected, check for forks and pins, don’t try any crazy tactics. Secondly, take every opportunity to trade pieces. Initially, the opponent has 30 points of material, and you have 39, meaning you have 30% more material than them. If you manage to trade all your bishops and knights away, stockfish would have 18 points and you would have 27, a 50% advantage. It also makes the game much simpler and straightforward, as there are far less nasty tactics available when the computer only has two rooks available.

Don’t get me wrong, the computer managed to trick me plenty of times and get pieces trapped. Sometimes I would blunder several pawns or a whole piece. But you need to use pieces to trap pieces, and the computer never had the resources to claw away at me before I traded everything away and crushed it with my extra queen.

Since that was easy, I tried odds of two bishops. I lost the first game, then won the second. Lost the third, won the fourth. Same strategy as the queens, but it was noticeably more difficult. I would often make a small error early on, which would then snowball out to take me down.

Getting cocky, I played with odds of a rook (ostensibly only 1 point of material less than two bishops). I immediately got trounced. I lost the first game, and proceeded to lose like 20 games in a row before I finally managed to eke out a draw.

The problem with rook odds is that the rook is locked away in the corner of the board, and usually is most useful at the end of the game when it has free reign of the board. That means that in the opening of the game, I’m functionally playing stockfish as if I have equal material. And stockfish, with equal material, is a fucking nightmare. It can put it’s full force to bear, poke any weaknesses, render your pieces trapped and useless, and chip away at your lead slowly but surely. By the time I could trade pieces down and get my extra rook in play, the AI had usually chipped away enough at my lead that I was only a little bit up in material. And a little bit up is not enough. Here is an example position:

It looks like I’m completely winning here. I have an extra pawn, and a rook instead of a knight, which is an ostensible +3 material. I even spot the trap laid by stockfish: If I move my rook one up or one down, the knight can jump to e2, forking my king and rook and ensuring a rook for knight trade that would destroy my lead. Thinking I was smart, I put my rook on c4. Big mistake. The AI gave a knight check on h3, driving the king to f1, and then it forked my rook and king with his bishop. Even if I moved my rook to c5, black would have been able to lock it into place by moving the b pawn to b6 and moving the knight to d3, rendering the rook effectively useless. Only moving the rook to b2 would have saved my advantage. If the analysis here was obvious to you, there's a good chance you can beat stockfish with rook odds.

It took me something like 20 games to draw against stockfish, and a further 30 before I finally actually won. In the successful game, I got lucky with an opening that let me trade most pieces equally, and then slowly forced a knight vs knight endgame where I was up two pawns. This might actually be a case where a chess GM would outperform an AI: they can think psychologically, so they can deliberately pick traps and positions that they know I would have difficulty with.

Analysis of my tradeoff of material and ELO:

Here I’ll summarize the results of my little experiment. Remember, initially I had an ELO of ~1100 and a nominal odds of beating stockfish of roughly 1 in a million (but probably less).

Odds of rook:

Material advantage: 14%

Win rate: 2%

Odds of victory boost: 4 orders of magnitude or more

Equivalent ELO: ~2750

Odds of two bishops:

Material advantage: 18%

Win rate: ~50%

Odds of victory boost: 6 orders of magnitude or more

Equivalent ELO: ~3549

Odds of queen:

Material advantage: 30%

Win rate: 90%

Odds of victory boost: 7 orders of magnitude or more

Equivalent ELO: ~3900

I tried a few games with odds of a knight, and got hopelessly crushed every time. However, looking online, I did find that a GM achieved an 80% win rate in a knight-odds game against the Komodo chess engine.

It’s worth pointing out that handicaps become more powerful the better you are at chess. Quoting GM Larry Kaufman on this subject:

The Elo equivalent of a given handicap degrades as you go down the scale. A knight seems to be worth around a thousand points when the "weak" player is around IM level, but it drops as you go down. For example, I'm about 2400 and I've played tons of knight odds games with students, and I would put the break-even point (for untimed but reasonably quick games) with me at around 1800, so maybe a 600 value at this level. An 1800 can probably give knight odds to a 1400, a 1400 to an 1100, an 1100 to a 900, etc. This is pretty obviously the way it must work, because the weaker the players are, the more likely the weaker one is to blunder a piece or more. When you get down to the level of the average 8 year old player, knight odds is just a slight edge, maybe 50 points or so.

This is why my dad could beat me as a kid with queen odds, but stockfish can't beat me now. You need sufficient knowledge of how to game works to utilize your resource advantages properly.

Can brawn beat an AGI?

Robert Miles compared humanity fighting an AGI to an amateur at chess trying to beat a grandmaster. His argument was that delving into the details of such a fight was pointless, because “you just cannot expect to win against a superior opponent”.

The problem here is that I, an amateur, can beat a GM. I can beat Stockfish. All I need is an extra queen.

This is not a trick point. If a rogue AI is discovered early, we could end up in a war where the AGI has a huge intelligence advantage, but humans have a huge resource advantage.

In the view of Miles and others, the initially gargantuan resource imbalance between the AI and humanity doesn’t matter, because the AGI is so super-duper smart, it will be able to come up with the “perfect” plan to overcome any resource imbalance, like a GM playing against a little kid that doesn't understand the rules very well.

The problem with this argument is that you can use the exact same reasoning to imply that’s it’s “obvious” that Stockfish could reliably beat me with queen odds. But we know now that that’s not true. There will always be a level of resource imbalance where the task at hand is just too damn difficult, no matter how high the intelligence. Consider also the implication that a less intelligent, but more controllable AI that we cooperate with might be able to triumph over a much more intelligent rogue AI.

Of course, this little experiment tells us very little about what the equivalent of a “queen advantage” would be in a battle with an AGI. It would definitely need to be far more than literally 30% more people, as we know plenty of examples of human generals winning battles despite being vastly outnumbered. Unlike chess, the real world has secret information, way more possible strategies, the potential for technological advancements, defections and betrayal, etc. which all favor the more intelligent party. On the other hand, the potential resource imbalance could be ridiculously high, particularly if a rogue AI is caught early on it’s plot, with all the worlds militaries combined against them while they still have to rely on humans for electricity and physical computing servers. It’s somewhat hard to outthink a missile headed for your server farm at 800 km/h.

I intend to write a lot more on the potential “brains vs brawns” matchup of humans vs AGI. It’s a topic that has received surprisingly little depth from AI theorists. I hope this little experiment at least explains why I don’t think the victory of brain over brawn is “obvious”. Intelligence counts for a lot, but it ain’t everything.

- ^

In order to play stockfish with odds, I went to lichess.org/editor, removed the pieces as necessary, and then clicked “continue from here”, selected “play against computer”, and selected maximum strength computer opponent (level 8). This is full strength stockfish with a depth of 22 moves and calculation time of 1000 ms. I also tested with the higher depth and calculation time of the “analysis board”, and was still able to win easily with queen odds.

106 comments

Comments sorted by top scores.

comment by Lucius Bushnaq (Lblack) · 2023-06-29T14:23:09.639Z · LW(p) · GW(p)

You can easily get a draw against any AI in the world at Tic-Tac-Toe. In fact, provided the game actually stays confined to the actions on the board, you can draw AIXI at Tic-Tac-Toe. That's because Tic-Tac-Toe is a very small game with very few states and very few possible actions, and so intelligence, the ability to pick good actions, doesn't grant any further advantage in it past a certain pretty low threshold.

Chess has more actions and more states, so intelligence matters more. But probably still not all that much compared to the vastness of the state and action space the physical universe has. If there's some intelligence threshold past which minds pretty much always draw against each other in chess even if there is a giant intelligence gap between them, I wouldn't be that surprised. Though I don't have much knowledge of the game.

In the game of Real Life, I very much expect that "human level" is more the equivalent of a four year old kid who is currently playing their third ever game of chess, and still keeps forgetting half the rules every minute. The state and action space is vast, and we get to observe humans navigating it poorly on a daily basis. Though usually only with the benefit of hindsight. In many domains, vast resource mismatches between humans do not outweigh skill gaps between humans. The Chinese government has far more money than OpenAI, but cannot currently beat OpenAI at making powerful language models. All the usual comparisons between humans and other animals also apply. This vast difference in achieved outcomes from small intelligence gaps even in the face of large resource gaps does not seem to me to be indicative of us being anywhere close to the intelligence saturation threshold of the Real Life game.

Replies from: johnlawrenceaspden, None↑ comment by johnlawrenceaspden · 2023-06-30T13:44:36.488Z · LW(p) · GW(p)

If there's some intelligence threshold past which minds pretty much always draw against each other in chess even if there is a giant intelligence gap between them, I wouldn't be that surprised.

Just reinforcing this point. Chess is probably a draw for the same reason Noughts-and-crosses is.

Grandmaster chess is pretty drawish. Computer chess is very drawish. Some people think that computer chess players are already near the standard where they could draw against God.

Noughts-and-crosses is a very simple game and can be formally solved by hand. Chess is only a bit less simple, even though it's probably beyond actual formal solution.

The general Game of Life is so very far beyond human capability that even a small intelligence advantage is probably decisive.

Replies from: Herb Ingram↑ comment by Herb Ingram · 2023-07-03T21:38:19.981Z · LW(p) · GW(p)

That makes sense to me but to make any argument about the "general game of life" seems very hard. Actions in the real world are made under great uncertainty and aggregate in a smooth way. Acting in the world is trying to control (what physicists call) chaos.

In such a situation, great uncertainty means that an intelligence advantage only matters "on average over a very long time". It might not matter for a given limited contest, such as a struggle for world domination. For example, you might be much smarter than me and a meteorologist, but you'd find it hard to predict the weather in a year's time better than me if it's a single-shot-contest. How much "smarter" would you need to be in order to have a big advantage? Pretty much regardless of your computational ability and knowledge of physics, you'd need such an amount of absurdly precise knowledge about the world that it might still take (both you and even much less intelligent actors) less resources to actively control the entire planet's weather than predict it a year in advance.

The way that states of the world are influenced by our actions is usually in some sense smooth. For any optimal action, there are usually lots of similar "nearby actions". These may or may not be near-optimal but in practice only plans that have a sufficiently high margin for error are feasible. The margin of error depends on the resources that allow finely controlled actions and thus increase the space of feasible plans. This doesn't have a good analogy in chess: chess is much further from smooth than most games in the real world.

Maybe RTS games are a slightly better analogy. They have "some smoothness of action-result mapping" and high amounts of uncertainty. Based on AlphaStar's success in StarCraft, I would expect we can currently build super-human AIs for such games. They are superior to humans both in their ability to quickly and precisely perform many actions, as well as find better strategies. An interesting restriction is to limit the numbers of actions the AI may take to below what a human can to see the effect of these abilities individually. Restricting the precision and frequency of actions reduces the space of viable plans, at which point the intelligence advantage might matter much less.

All in all, what I'm trying to say is that the question "how much does what intelligence imbalance matter in the world" is hard. The question is not independent of access to information and access to resources or ability to act on the world. To make use of a very high intelligence, you might need a lot more information and also a lot more ability to take precise actions. The question for some system "taking over" is whether its initial intelligence, information and ability to take actions is sufficient to bootstrap quickly enough.

These are just some more reasons you can't predict the result just by saying "something much smarter is unbeatable at any sufficiently complex game".

Replies from: X4vier, johnlawrenceaspden↑ comment by X4vier · 2023-07-04T00:07:12.404Z · LW(p) · GW(p)

Maybe an analogy which seems closer to the "real world" situation - let's say you and someone like Sam Altman both tried to start new companies. How much more time and starting capital do you think you'd need to have a better shot of success than him?

Replies from: Herb Ingram↑ comment by Herb Ingram · 2023-07-04T02:54:38.019Z · LW(p) · GW(p)

I really have no idea, probably a lot?

I don't quite see what you're trying to tell me. That one (which?) of my two analogies (weather or RTS) is bad? That you agree or disagree with my main claim that "evaluating the relative value of an intelligence advantage is probably hard in real life"?

Your analogy doesn't really speak to me because I've never tried to start a company and have no idea what leads to success, or what resources/time/information/intelligence helps how much.

↑ comment by johnlawrenceaspden · 2023-07-03T22:34:11.824Z · LW(p) · GW(p)

For example, you might be much smarter than me and a meteorologist, but you'd find it hard to predict the weather in a year's time better than me if it's a single-shot-contest.

Sure, but I'd presumably be quite a lot better at predicting the weather in two days time.

Replies from: Herb Ingram↑ comment by Herb Ingram · 2023-07-03T22:53:48.493Z · LW(p) · GW(p)

What point are you trying to make? I'm not sure how that relates to what I was trying to illustrate with the weather example. Assuming for the moment that you didn't understand my point.

The "game" I was referring to was one where it's literally all-or-nothing "predict the weather a year from now", you get no extra points for tomorrow's weather. This might be artificial but I chose it because it's a common example of the interesting fact that chaos can be easier to control than simulate.

Another example. You're trying to win an election and "plan long-term to make the best use of your intelligence advantage", you need to plan and predict a year ahead. Intelligence doesn't give you a big advantage in predicting tomorrow's polls given today's polls. I can do that reasonably well, too. In this contest, resources and information might matter a lot more than intelligence. Of course, you can use intelligence to obtain information and resources. But this bootstrapping takes time and it's hard to tell how much depending where you start off.

↑ comment by [deleted] · 2023-06-30T09:21:56.457Z · LW(p) · GW(p)

"China hasn't made a better LLM than OpenAI" does not imply "China can't make a better LLM despite having more money". China isn't allocating all their money into this. If it's the case that China set a much bigger budget to developing LLMs than OpenAI had, and failed because OpenAI has better people, that would support your point about large resource mismatches not being able to overcome small intelligence gaps.

comment by simplegeometry · 2024-11-21T20:55:17.437Z · LW(p) · GW(p)

This is something lc and gwern discussed in the comments here, but now we have clear evidence this is only true for Nash solvers (all typical engines like SF, Lc0, etc.). LeelaQueenOdds, which trained exploitatively against a model of top human players (FM+), is around 2k to 2.9k lichess elo depending on the time controls, so it completely trounces 1.6k elo players (especially 1.2k elo players as another commenter has suggested the author actually is). See: https://marcogio9.github.io/LeelaQueenOdds-Leaderboard/

Nash solvers are far too conservative and expect perfect play out of their opponents, hence give up most meaningful attacking chances in odds games. Exploitative models like LQO instead assume their opponents play like strong humans (good but imperfect) and do extremely well, despite a completely crushing material disadvantage. As some have noted, this is possible even with chess being a super sterile/simple environment relative to real life.

I speculate that the experiment from this post only yielded the results it did because Nash is a poor solution concept when one side is hopelessly disadvantaged under optimal play from both sides, and queen odds fall deep into that category.

See video from 7 minutes. Try it yourself https://lichess.org/@/LeelaQueenOdds :)

↑ comment by Olli Järviniemi (jarviniemi) · 2024-11-27T06:45:28.915Z · LW(p) · GW(p)

I found it interesting to play against LeelaQueenOdds. My experiences:

- I got absolutely crushed on 1+1 time controls (took me 50+ games to win one), but I'm competitive at 3+2 if I play seriously.

- The model is really good at exploiting human blind spots and playing aggressively. I could feel it striking in my weak spots, but not being able to do much about it. (I now better acknowledge the existence of adversarial attacks for humans on a gut level.)

- I found it really addictive to play against it: You know the trick that casinos use, where they make you feel like you "almost" won? This was that: I constantly felt like I could have won, if it wasn't just for that one silly mistake - despite having lost the previous ten games to such "random mistakes", too... I now better understand what it's like to be a gambling addict.

Overall fascinating to play from a position that should be an easy win, but getting crushed by an opponent that Just Plays Better than I do.

[For context, I'm around 2100 in Lichess on short time controls (bullet/blitz). I also won against Stockfish 16 at rook odds on my first try - it's really not optimized for this sort of thing.]

↑ comment by Lorenzo (lorenzo-buonanno) · 2025-01-26T21:13:22.263Z · LW(p) · GW(p)

A grandmaster just lost a classical game (60''+30'') against Leela Knight Odds https://lichess.org/broadcast/leela-knight-odds-vs-gm-joel-benjamin/game-5/MbKHEbdb/7Tnz8uBj

3 days ago an international master gave Leela "very slim chances" of winning a game, based on the results of a match played by a previous version of the engine

↑ comment by simplegeometry · 2025-01-29T03:08:26.200Z · LW(p) · GW(p)

Thanks for this update! I find that an odd prediction by the IM because Awonder is around 2670 FIDE and Joel is around 2470 FIDE, 200 elo is huge.

Replies from: lorenzo-buonanno↑ comment by Lorenzo (lorenzo-buonanno) · 2025-01-29T13:00:56.057Z · LW(p) · GW(p)

I think it's because 10+5 is very different from 60+30

Replies from: simplegeometry↑ comment by simplegeometry · 2025-01-29T19:23:03.185Z · LW(p) · GW(p)

Oh, my bad, yeah. When I was writing the comment, I flipped the direction of advantage for longer time controls (longer time controls are actually better for humans in odds matches of course), but this way I agree it's unclear a priori whether 200 elo drop would be enough to account for longer time controls.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-12-05T22:29:52.978Z · LW(p) · GW(p)

Maybe we'll see the Go version of Leela give nine stones to pros soon? Or 20 stones to normal players?

comment by JenniferRM · 2023-06-29T03:08:06.611Z · LW(p) · GW(p)

I've been having various conversations in private, where I'm quite doomist and my interlocutor is less doomist, and I think one of the key cruxes that has come up several times is that I've applied security mindset to the operation of human governance, and I am not impressed.

I looked at things like the federal reserve (and how you'd implement that in a smart contract) and the congress/president/court deal (and how you'd implement that in a smart contract) and various other systems, and the thing I found was that existing governance systems are very poorly designed and probably relatively easy to knock over.

As near as I can tell, the reason human civilization still exists is that no inhuman opponent has ever existed that might really just want to push human civilization over and then curb stomp us while we thrash around in surprised pain.

For example, in WW2 Operation Bernhard got close to just "ending the money game" explicitly, but the bad guys couldn't bring themselves to make the stupidest and most evil British people rich via relatively secret injections, and then ramp it up more and more, and then as the whole web of market relationships became less and less plausible they could have eventually pumped more and more fake money into the British economy until they were just raining cash on random cities from the air. Part of why they refrained is that they imagined what a retaliatory strike would do to Nazi-controlled Germany... and flinched. The logic of "MAD" (transposed into economics) held them back.

In my view, human civilization is rife with such weaknesses.

I tend to not talk about them much, but I feel like maybe I should talk about them more, because my silence is coming to feel more and more stupid, given that I expect an AI smarter than me to see all the options that I can see, and then more!

So a big part of my model is not that a very very fast nor a very very large "foom" is especially likely... it is just that we are so collectively stupid (as a collective herd of moderately smart monkeys that is not actually very organized, and is mostly held together with prison bars and nuclear blackmail and duct tape) that I expect AIs that are merely slightly smarter than us (and have wildly different weaknesses and a coherent plan to get rid of us), to have a very good chance of succeeding.

Bringing it back to the Chess metaphor...

One can easily imagine starting with Stockfish, and then writing an extra little algorithmic loop that:

1) looks at the board, and

2) throttles Stockfish's CPU so that it can only "run at full speed" when

3) it has the knights, bishops, queen, and king inside a 4x4 subarea.

The farther from that ideal the board position gets, the more the CPU would be throttled.

A stockfish with this hack to its CPU throttle would start the game already at a "governance disadvantage" because the knights start outside "the zone of effective governance".

Correct early moves for white (taking into account the boost of extra CPU) might very well involve pushing the queen's knight to c3 or d2, and would similarly involve pushing the king's knight to e2 or f3. Only then could Stockfish even operate at "mental full power"!

If you ran AlphaZero from scratch, to expert, using any similar sort of "cpu sabotage based on the board state" I bet it would invent some CRAZY new opening games!

And this "weird board/cpu entangled chess AI constraint"... is actually a pretty good metaphor for how humans make decisions, as a collective, in real life.

Every private CEO is filtered not just by how good of a manager and economic planner they are, but how willing they are to be loyal to the true owners of the company, even at the expense of customers, or country, or moral ideals. Every President is beholden to half-crazy partisan factions, and can't possibly get into power without making numerous entangling deals. Xi and Putin can make moves, but they are each just one guy, and they have to spend a lot of their brain power just preventing coups and assassinations because their advisors aren't that trustworthy. Most governments are simply not arranged to maximize the government's ability to fight something very very very smart from a standing start!

If the human "material in the world" is re-arranged, then the human "ability as a species to coordinate against something that coherently would prefer our extinction" is also going to be affected. And the AI will know this. And the AI will be able to attack it.

If you knew you were playing against a version of Stockfish that got more CPU based on the proximity of various pieces (and less CPU with less material that was more spread out) then I bet that would ALSO be worth a huge amount of material.

That advantage, that you would have against a Stockfish with that additional imaginary weakness, is very very similar to the advantage that AI has over human governments: the "brains" are right there on the table, as part of the stakes, and subject to attack!

Replies from: miss me mimo!, RolfAndreassen, Making_Philosophy_Better↑ comment by miss me mimo! · 2023-06-30T03:25:45.358Z · LW(p) · GW(p)

The Operation Bernhard example seems particularly weak to me, thinking for 30 seconds you can come up with practical solutions for this situation even if you imagine Nazi Germany having perfect competency in pulling off their scheme.

For example, using tax records and bank records to roll back peoples fortunes a couple of years and then introducing a much more secure bank note. It's not like WW2 was an era of fiscal conservatism, war powers were leveraged heavily by the federal reserve in the united states to do whatever they wanted with currency. We comfortably operate in a fiat currency regime where currency is artificially scarce and can be manipulated in half a dozen ways at the drop of a hat.

The way you interpret Operation Bernhard seems to me like you imagine the rules of society as something we set up and then are bound to like lemmings. When in reality, the rules can be rewritten at any time when the need arises. I think your example is equivalent to saying the ability to turn lead into gold would destroy the gold-standard era economy and utterly wreck civilization. When we know in hindsight we can just wave our finger and decouple currency and gold at a moments notice.

I suspect many of the other rules and systems that hold our civilization are just as adaptable when the need arises.

↑ comment by RolfAndreassen · 2023-06-30T05:22:52.237Z · LW(p) · GW(p)

The Wiki link on Operation Bernhard does not very obviously support the assertions you make about the Germans flinching. Do you have a different source in mind?

Replies from: JenniferRM↑ comment by JenniferRM · 2023-06-30T20:35:07.995Z · LW(p) · GW(p)

I cannot quickly find a clean "smoking gun" source nor well summarized defense of exactly my thesis by someone else.

(Neither Google nor the Internet seem to be as good as they used to be, so I no longer take "can't find it on the Internet with Google" as particularly strong evidence that no one else has had the idea and tested and explored it in a high quality way that I can find and rely on if it exists.)

...in place of a link, I wrote 2377 more words than this, talking about the quality of the evidence I could find and remember, and how I process it, and which larger theories of economics and evolution I connect to the idea that human governance capacity is an evolved survival trait of humans, and our form of governments rely on it for their shape to be at all stable or helpful, and this "neuro-emotional" trait will probably not be reliably installed in AI, but also the AI will be able to attack anthropological preconditions of it, if that is deemed likely to get an AI more of what that AI wants, as AI replaces humans as the Apex Predator of Earth.

It doesn't totally seem prudent to publish all 2377 words, now that I'm looking at them?

Publishing is mostly irreversible, and I don't think that "hours matter" (and also probably even "days matter" is false) so I want to sit on them for a bit before committing to being in a future where those words have been published...

Is there a big abstract reason you want a specific source for that specific part of it?

I don't see that example as particularly central, just as a proposal that anyone can use as a springboard (that isn't "proliferative" to talk about in public because it is already in Wikipedia and hence probably cognitively accessible to all RLLLMs already) where the example:

(1) is real and functions as a proof-by-existence of that class of "planning-capacity attacking ideas" being non-empty in a non-fictive context,

(2) while mostly emotionally establishing that "at least some of the class of tactics is inclusive of tactics that especially bad people do and/or think about" and maybe

(3) being surprising to a lot of readers so that they can say "if I hadn't heard about that attack, then maybe more such attacks would also surprise me, so I should update on there being more unknown unknowns here".

If you don't believe the more abstract thesis about the existence of the category, then other examples might also work better to help you understand the larger thesis.

However, maybe you're applying some kind of Arthur-Merlin protocol, and expected me to find "the best example possible" and if that fails then you might write off the whole thesis as "coherently adversarially advanced, with a failed epistemic checksum in some of the details, making it cheap and correct and safe to use the failure in the details as a basis for rejection of the thesis"?

((Note: I haven't particularly planned out the rhetoric here, and my hunch is that Operation Bernhard is probably not the "best possible example of the thesis". Mostly I want to make sure I don't make things worse by emitting possible infohazards. Chess is good for that, as a toy domain that is rich enough to illustrate concepts, but thin enough to do little other than illustrating concepts! Please don't overindex on my being finite and imperfect as a reasoner about "military monetary policy history" here.))

↑ comment by Portia (Making_Philosophy_Better) · 2023-06-29T19:50:41.565Z · LW(p) · GW(p)

Please don't share human civilisation vulnerabilities online because a super awesome AI will get them anyway and human society might fortify against them.

The chance of them fortifying is slim. Our politicians are failing to deal with right wing take-overs and climate change already. Our political systems hackability has already been painfully played by Russia, with little consequence. Literal bees have an electoral process for new hive locations more resilient against propaganda and fake news than we do, it is honestly embarrassing.

The chance of a human actor exploiting such holes is larger than them being patched, I fear. The aversion to ruining your neighbouring countries financial system out of fear that they will ruin yours in response doesn't just not hold for an AI, it also fails to hold for those ideologically against a working world finance system. If you are willing to doom your own community, or fail to recognise that such a move would bring your own community doom, as well, because you have mistaken the legitimate evils of capitalism for evidence that we'd all be much better off if there was no such thing as money, you may well engage in such acts. There are increasing niche groups who think having humanity is per se bad, government is per se bad, and economy is per se bad. I think the main limit here so far is that the kind of actor who would like to not have a world financial system is typically not the kind of actor with sufficient money and networking to start a large-scale money forging operation. But not every massively destructive act requires a lot of resources to pull off.

Replies from: JenniferRM↑ comment by JenniferRM · 2025-04-15T15:43:05.806Z · LW(p) · GW(p)

I agree that there are many bad humans. I agree that some of them are ideologically committed to destroying the capacity of our species to coordinate. I agree that most governance systems on Earth are embarrassingly worse than how bees instinctively vote on new hive locations.

I do not agree that we should be quiet about the need for a global institutional governance system that has fewer flaws.

By way of example: I don't think that "not talking very much about Gain-of-Function research deserving to be banned" didn't cause there to be no Gain-of-Function research in Wuhan, by collaborators of the people in the US who explicitly proposed building something like covid in a grant proposal a while before covid was actually built under BSL2 conditions, by their international scientific collaborators, and then escaped the lab.

There should have been more anti-GoF talk, and it should have been explicitly bipartisan, and so on. In Trump's first term, one of the crazy random things he "did or allowed" was to let the pro-GoF people at NIH quietly weaken the GoF ban that was instituted under Obama.

But also, similarly to how anti-GoF talk would be helpful up until there is an international treaty system that insists that GoF never happen outside of a "BSL5" (which currently doesn't even exist (currently the bio-safety levels only go up to 4)) I think there should be more anti-bad-governance-institution talk, and it should be explicitly bipartisan. There are many other larger fires now. And covid is no longer in the zeitgeist. Maybe this is not the best place to spend words. But it is a great test case for talking about general policies on regulation about dangerous technology, and institutions for handling such tech, and speech about the need for better institutions.

Not that I greatly expect such talk to help, whether for AGI or GoF or anything. Its just that I think that (1) in the (rare?) timelines where we live I will not be greatly embarrassed to have talked as much as I did, and (2) in the (common?) timelines where we die I will mostly regret (just before I die) my silences more than my speech.

comment by Max H (Maxc) · 2023-06-28T14:34:00.477Z · LW(p) · GW(p)

If you're smarter than your opponent but have less starting resources, the optimal strategy probably involves some combination of cooperation, making alliances, deception, escaping / running / hiding, gathering resources in secret, and whatever other prerequisites are needed to neutralize such a resource imbalance. Many scenarios in which a smarter-than-human AGI with less resources goes to war with or is attacked by humanity are thus somewhat contradictory or at least implausible: they postulate the AGI taking a less good strategy than what a literal human in its place could come up with.

There's not really an analogue for this to Chess - if I am forced to play a chess game with a grandmaster with whatever handicap, I could maybe flip over the board if I started to lose. But that probably just counts as a forfeit, unless I can also overpower or coerce my opponent and / or the judges.

if a rogue AI is caught early on it’s plot, with all the worlds militaries combined against them while they still have to rely on humans for electricity and physical computing servers. It’s somewhat hard to outthink a missile headed for your server farm at 800 km/h.

Breaking it down by cases:

- If deception / misalignment is caught early enough (i.e. in the lab, by inspecting the system statically before it is given a chance to execute on its own), then you don't need any military, you just turn the system off or don't run it in the first place.

- If the deception / misalignment is not detected until the AI is already "loose in the world", such that it would take all the world's militaries (or any military, really) to stop, it's already too late. The AI has already had an opportunity to hide / copy itself, hide its true intentions, make alliances with powerful and sympathetic (or naive) humans, etc. the way any smart human actor would do.

- If the detection happens somewhere in between these points, there might be a situation in which "brawn" is relevant. This seems like a really narrow time frame / band of possibilities to me though, and when people try to postulate what scenarios in this band might look like, they often rely on a smarter-than-human system making mistakes a literal human would know to avoid. Not saying it's not possible to come up with more realistic scenarios, but "is a supposedly smarter-than-human AI making mistakes or missing strategies that I myself would not miss" is a good basic sanity check on whether your scenario is plausible or not.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-06-28T16:11:43.335Z · LW(p) · GW(p)

I like this analysis, and I agree with except that I do think it's missing a likely intermediate scenario. I think the "fully under lab control" is a super advantageous situation for the humans, especially if the AI has been trained on censored simulation data that doesn't mention humans or computers or have accurate physics. I think the current world has an unfortunately dangerous intermediate situation where LLMs age given full access to human knowledge, and allowed to interact with society. And yet, in the case of the SotA models like GPT-4, aren't quite at "loose in the world" levels of freedom. They don't have access to their own weights or source code and neither do any accomplices they might recruit outside the company. Indeed, even most employees at the company couldn't exfiltrate the weights. Thus, the current default starting state for a rogue AI is posed right on that dangerous margin of "difficult but not impossible to escape". I think this "brains vs brawn" style analysis does then make a big difference for the initial escape. I agree that once the escape has been accomplished it's really hard for humanity to claw back a win. But before the escape has occurred, it's a much more even game.

↑ comment by MichaelStJules · 2023-07-01T15:31:28.486Z · LW(p) · GW(p)

Why is it too late if it would take militaries to stop it? Couldn't the militaries stop it?

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-07-05T13:38:04.782Z · LW(p) · GW(p)

If an AI is smart enough that it takes a military force to stop it, the AI is probably also smart enough to avoid antagonizing that force, and / or hiding out in a way that a military can't find.

Also, there are a lot of things that militaries and governments could do, if they had the will and ability to coordinate with each other effectively. What they would do is a different question.

How many governments, when faced with even ironclad evidence of a rogue AI on the loose, would actually choose to intervene, and then do so in an effective way? My prediction is that many countries would find reasons or rationalizations not to take action at all, while others would get mired in disagreement and infighting, or fail to deploy their forces in an actually effective way. And that's before the AI itself has an opportunity to sow discord and / or form alliances.

(Though again, I still think an AI that is at exactly the level where military power is relevant is a pretty narrow and unlikely band.)

comment by habryka (habryka4) · 2023-06-28T18:46:31.295Z · LW(p) · GW(p)

This kind of experiment has been at the top of my list of "alignment research experiments I wish someone would run". I think the chess environment is one of the least interesting environments (compared to e.g. Go or Starcraft), but it does seem like a good place to start. Thank you so much for doing these experiments!

I do also think Gwern's concern about chess engines not really being trained on games with material advantage is an issue here. I expect a proper study of this kind of problem to involve at least finetuning engines.

Replies from: lc, Quadratic Reciprocity, Archimedes↑ comment by lc · 2023-06-29T12:56:45.768Z · LW(p) · GW(p)

I do also think Gwern's concern about chess engines not really being trained on games with material advantage is an issue here. I expect a proper study of this kind of problem to involve at least finetuning engines.

It's actually much worse than this. Stockfish has no ability to model its opponents' flaws in game knowledge or strategy; it has no idea it's playing against a 1200. It's like a takeover AI that refrains from sending the stage-one nanosystem spec to the bio lab because it assumes the lab is also manned by AGIs and would understand what mixing the beaker accomplishes. A grandmaster in chess, who wanted to win against a novice with odds, would perhaps do things like complicate the position so that their opponent would have a larger chance of making blunders. Stockfish on the other hand is limited to playing "game theory optimal" chess, strategies that would work "best" (in terms of number of moves from checkmate saved) against what it considers optimal play.

To fix this, I have wondered for a while if you couldn't use the enormous online chess datasets to create an "exploitative/elo-aware" Stockfish, which had a superhuman ability to trick/trap players during handicapped games, or maybe end regular games extraordinarily quickly, and not just handle the best players. A simple way to do it would be: start by training a model to predict a user's next move, given ELO/format/current board history. Then use that model to forward-evaluate top suggestions with the moves that opponent is actually likely to play in response. The result would be (potentially) an engine that was far far better than anything that currently exists at highlighting how bad humans in particular are at chess, and it would be interesting to see what kinds of odds you would be able to give it against the best chess masters, and how long it would take for them to improve.

Replies from: gwern, Bucky, Making_Philosophy_Better↑ comment by gwern · 2023-06-29T20:59:44.264Z · LW(p) · GW(p)

Yes, this is another reason that setups like OP are lower-bounds. Stockfish, like most game RL AIs, is trying to play the Nash equilibrium move, not the maximally-exploitative move against the current player; it will punish the player for any deviations from Nash, but it will not itself risk deviating from Nash in the hopes of tempting the player into an even larger error, because it assumes that it is playing against something as good or better than itself, and such a deviation will merely be replied to with a Nash move & be very bad.

You could frame it as an imitation-learning problem like Maia. But also train directly: Stockfish could be trained with a mixture of opponents and at scale, should learn to observe the board state (I don't know if it needs the history per se, since just the stage of game + current margin of victory ought to encode the Elo difference and may be a sufficient statistic for Elo), infer enemy playing strength, and calibrate play appropriately when doing tree search & predicting enemy response. Silver & Veness 2010 comes to mind as an example of how you'd do MCTS with this sort of hidden-information (the enemy's unknown Elo strength) which turns it into a POMDP rather than a MDP.

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2023-06-30T13:08:31.695Z · LW(p) · GW(p)

For a clear example of this, in endgames where I have a winning position but have little to no idea how to win, Stockfish's king will often head for the hills, in order to delay the coming mate as long as theoretically possible.

Making my win very easy because the computer's king isn't around to help out in defence.

This is not a theoretical difficulty! It makes it very difficult to practise endgames against the computer.

↑ comment by Bucky · 2023-06-29T16:21:50.546Z · LW(p) · GW(p)

Something similar not involving AIs is where chess grandmasters do rating climbs with handicaps. one I know of was Aman Hambleton managing to reach 2100 Elo on chess.com when he deliberately sacrificed his Queen for a pawn on the third/fourth move of every game.

https://youtube.com/playlist?list=PLUjxDD7HNNTj4NpheA5hLAQLvEZYTkuz5

He had to complicate positions, defend strongly, refuse to trade and rely on time pressure to win.

The games weren’t quite the same as Queen odds as he got a pawn for the Queen and usually displaced the opponent’s king to f3/f6 and prevented castling but still gives an idea that probably most amateurs couldn’t beat a grandmaster at Queen odds even if they can beat stockfish. Longer time controls would also help the amateur so maybe in 15 minute games an 1800 could beat Aman up a Queen.

↑ comment by Portia (Making_Philosophy_Better) · 2023-06-29T19:27:28.123Z · LW(p) · GW(p)

This has me wonder about a related point.

I'm not a well-trained martial artist at all. But I have beaten well-trained martial artists in multiple fights. Apparently, that is not an unheard of phenomenon, either. It seemed to be key that I fight well by some metrics, but as a novice, commit errors that are incomprehensible, uneven and importantly: unpredictable to an expert because they would never do something so silly. I fail to go for obvious openings, and hence end up in unexpected places; but at that point, while I am underestimated because I have been foolish, I suddenly twist out of a grasp with unexpected flexibility, then miss being grabbed again because I have moved randomly and pointlessly, fail to protect against obvious threats, but don't drop due to an unexpectedly high pain tolerance despite having taken a severe hit, and then take a well-aimed hit with unexpected strength.

This has me wonder whether an AI would have significant difficulties winning against humans who act inconsistently and suboptimally in some ways, without acting like utter idiots randomly all the time - because they don't take offers the AI was certain they would take, fail to defend against threats the AI was certain they would spot and that were actually traps, stubbornly stick with a strategy even after it has proven defective but hence cannot be budged from it even when the AI really needs them to, etc.

Yet I also wonder whether the chess example is misleading because it is so inherently limited, so very inside the box. To go back to the above fight example: I've armwrestled with much stronger people I have beaten in actual fights. If they are much stronger, I inevitably lose the armwrestling. I am just not strong enough, and while I can set my arm with determination until the muscle rips... well, eventually the muscle just rips, and that is that. If I were to use my whole body for leverage like I would in a fight, or chuck something in their eyes to distract them, I would maybe get their hand on the table - but it would be cheating, and I can't cheat in an armwrestle match, you can't win that way, it is not allowed. Similarly, acting unpredictably during chess is of very limited advantage compared to the significant disadvantage from suboptimal moves, in light of the limited range of unpredictable move. A beginner being so foolish as to act random in chess is trivially beaten; I am notably terrible at chess. If there are only a few positions your knight can be in before jumping back to prior configurations, then you randomly choosing an inferior one will doom you.

There is ultimately only so much that an AI during a chess game can do. It can't shortcut via third dimension via an extra chess board above. It can't put a unicorn figure on the board and claim it combined the queen and a knight. It can't convince you that actually, you should let it win, in light of the fact that it has now placed a bomb on the chessboard that will otherwise blow up in your face. It can't screw over your concentration by raping your kid in front of you. It can't get up for a sip of water, then surprise club you from behind, and then claim you forfeited the match. There are only so many figures, so much space, so many moves, so much room for creativity.

But the real world is not a game. It will likely contain winning opportunities we might not be aware of at all. A very smart commander may still be beaten in a battle by a less smart commander who has a lot more of the same type of weapons, troops and other assets. But if the smarts of the smart commander don't just extend to battlefield tactics, but also, say, to developing the first nukes/nanotech/an engineered virus, or rather whatever equivalent transformation there may be that we cannot even guess at, at some point, you are done for.

Replies from: Dweomite, green_leaf↑ comment by Dweomite · 2023-06-29T22:47:57.599Z · LW(p) · GW(p)

I suspect that the domain of martial arts is unusually susceptible to that problem because

- Fights happen so quickly (relative to human thought) that lots of decisions need to be made on reflex

- (And this is highly relevant to performance because the correct action is heavily dependent on your opponent's very recent actions)

- Most well-trained martial artists were trained on data that is heavily skewed towards formally-trained opponents

↑ comment by green_leaf · 2023-06-29T21:39:29.376Z · LW(p) · GW(p)

It seemed to be key that I fight well by some metrics

That couldn't be the case - that would leave you, even after having a black belt, vulnerable towards people who can't fight, which would defeat the purpose of martial arts. Whichever technique you use, you use when responding to what the other person is currently doing. You don't simply execute a technique that depends on the person fighting well by some metrics, and then get defeated when it turns out that they are, in fact, only in the 0.001st percentile of fighting well by any metrics we can imagine.

(That said, I'm really happy for your victories - maybe they weren't quite as well-trained.)

This has me wonder whether an AI would have significant difficulties winning against humans who act inconsistently and suboptimally in some ways, without acting like utter idiots randomly all the time

I'm thinking the AI would predict the way in which the other person would act inconsistently and suboptimally.

If there were multiple paths to victory for the human and the AI could block only one (thereby seemingly giving the human the option to out-random the AI by picking one of the unguarded paths to victory), the AI would be better at predicting the human than the human would be at randomizing.

People are terrible at being unpredictable. I remember a 10+ years-old predictor of a rock-paper-scissors for predicting a "random" decision of a human in a series of games. The humans had no chance.

Replies from: johnlawrenceaspden, Making_Philosophy_Better↑ comment by johnlawrenceaspden · 2023-06-30T13:24:53.837Z · LW(p) · GW(p)

The "purpose" of most martial arts is to defeat other martial artists of roughly the same skill level, within the rules of the given martial art.

Optimizing for that is not the same as optimizing for general fighting. If you spent your time on the latter, you'd be less good at the former.

"Beginner's luck" is a thing in almost all games. It's usually what happens when someone tries a strategy so weird that the better player doesn't immediately understand what's going on.

The other day a low-rated chess player did something so weird in his opening that I didn't see the threat, and he managed to take one of my rooks.

That particular trap won't work on me again, and might not have worked the first time if I'd been playing someone I was more wary of.

I did eventually manage to recover and win, but it was very close, very fun, and I shook his hand wholeheartedly afterwards.

Every other game we've played I've just crushed him without effort.

About a year ago I lost in five moves to someone who tried the "Patzer Attack". Which wouldn't work on most beginners. The first time I'd ever seen it. It worked once. It will never work on me again.

Replies from: gwd, green_leaf↑ comment by gwd · 2023-07-04T20:29:20.480Z · LW(p) · GW(p)

The "purpose" of most martial arts is to defeat other martial artists of roughly the same skill level, within the rules of the given martial art.

Not only skill level, but usually physical capability level (as proxied by weight and sex) as well. As an aside, although I'm not at all knowledgeable about martial arts or MMA, it always seemed like an interesting thing to do might to use some sort of an ELO system for fighting as well: a really good lightweight might end up fighting a mediocre heavyweight, and the overall winner for a year might be the person in a given <skill, weight, sex> class that had the highest ELO. The only real reason to limit the ELO gap between contestants would be if there were a higher risk of injury, or the resulting fight were consistently just boring. But if GGP is right that a big upset isn't unheard of, it might be worth 9 boring fights for 1 exciting upset.

↑ comment by green_leaf · 2023-07-23T21:46:15.530Z · LW(p) · GW(p)

The "purpose" of most martial arts is to defeat other martial artists of roughly the same skill level, within the rules of the given martial art.

This is false - the reason they were created was self-defense. That you can have people of similar weight and belt color spar/fight each other in contests is only a side effect of that.

"Beginner's luck" is a thing in almost all games. It's usually what happens when someone tries a strategy so weird that the better player doesn't immediately understand what's going on.

That doesn't work in chess if the difference in skill is large enough - if it did, anyone could simply make up strategies weird enough, and without any skill, win any title or even the World Chess Championship (where is the number of victories needed).

If you're saying it works as a matter of random fluctuations - i.e. a player without skill could win, let's say, games against Magnus Carlsen, because these strategies (supposedly) usually almost never work but sometimes they do, that wouldn't be useful against an AI, because it would still almost certainly win (or, more realistically, I think, simply model us well enough to know when we'd try the weird strategy).

↑ comment by Portia (Making_Philosophy_Better) · 2023-08-15T15:07:18.214Z · LW(p) · GW(p)

"Even after having a black belt"? One of the people I beat is a twice national champion, instructor with a very reputable agency and san dan in karate. They are seriously impressive good at it. If we agreed to do something predictable, I would be crushed. They are faster, stronger, have better form and balance, know more moves, have better reflexes. I'm in awe of them. They are good. I do think what they do deserves to be called an art, and that they are much, much, much (!) better than I am.

But their actions also presuppose that I will act sensibly (e.g. avoiding injury, using opportunities), and within the rule set in which they were trained.

I really don't think I could replicate this feat in the exact same way. Having once lost in such a bizarre way, they have learned and adapted. Many beginners only have few moves available, and suck at suppressing their intentions, so they may beat you once, but you'll destroy them if they try the same trick again. It might work again if they try something new, but again, if you paired the experienced fighter with that specific beginner for a while, pretty quickly, they would constantly win, as they have learned about the unexpected factor.

But in a first fight? I wouldn't bet on a beginner in such a fight. But nor would I be that surprised by a win.

And I definitely would not believe that having a black belt makes you invulnerable towards streetfighters, or even simply angry incompetent strangers, without one. Nor do I know any martial art trainer who would make such a claim. Safer, for sure. Your punches and kicks more effective, your balance and falls better, better confidence and situational awareness, more strength, faster reflexes, ingrained good responses rather than rookie mistakes, a knowledge of weak body parts, pain trigger points and ways to twist the other person to induce severe pain, knowledge of redirecting strength, of mobilising multiple body parts of yours against one of theirs, all the great stuff. But perfectly safe, no.

↑ comment by Quadratic Reciprocity · 2023-06-28T20:36:52.658Z · LW(p) · GW(p)

Is your "alignment research experiments I wish someone would run" list shareable :)

↑ comment by Archimedes · 2024-11-22T04:43:13.525Z · LW(p) · GW(p)

@gwern [LW · GW] and @lc [LW · GW] are right. Stockfish is terrible at odds and this post could really use some follow-up.

As @simplegeometry [LW · GW] points out in the comments, we now have much stronger odds-playing engines that regularly win against much stronger players than OP.

https://lichess.org/@/LeelaQueenOdds

https://marcogio9.github.io/LeelaQueenOdds-Leaderboard/

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-11-22T04:47:57.888Z · LW(p) · GW(p)

That's really cool! Do you have any sense of what kind of material advantage these odd-playing engines could use against the best humans?

Replies from: Archimedes, lc, simplegeometry, Archimedes↑ comment by Archimedes · 2025-03-09T01:46:31.388Z · LW(p) · GW(p)

FYI, there has been even further progress with Leela odds nets. Here are some recent quotes from GM Larry Kaufman (a.k.a. Hissha) found on the Leela Chess Zero Discord:

(2025-03-04) I completed an analysis of how the Leela odds nets have performed on LiChess since the search-contempt upgrade on Feb. 27. [...] I believe these are reasonable estimates of the LiChess Blitz rating needed to break even with the bots at 5'3" in serious play. Queen and move odds (means Leela plays Black) 2400, Queen odds (Leela White) 2550, [...] Rook and move odds (Leela Black); 3000. Rook odds (Leela White) 3050, knight odds 3200. For comparison only a few top humans exceed 3000, with Magnus at 3131. So based on this, even Magnus would lose a match at 5'3" with knight odds, while perhaps the top five blitz players in the world would win a match at rook odds. Maybe about top fifty could win a match at queen for knight. At queen odds (Leela White), a "par" (FIDE 2400) IM should come out ahead, while a "par" (FIDE 2300) FM should come out behind.

(2025-03-07) Yes, there have to be limits to what is possible, but we keep blowing by what we thought those limits were! A decade ago, blitz games (3'2") were pretty even between the best engine (then Komodo) and "par" GMs at knight odds. Maybe some people imagined that some day we could push that to being even at rook odds, but if anyone had suggested queen odds that would have been taken as a joke. And yet, if we're not there already, we are closing in on it. Similarly at Classical time controls, we could barely give knight odds to players with ratings like FIDE 2100 back then, giving knight odds to "par" GMs in Classical seemed like an impossible goal. Now I think we are already there, and giving rook odds to players in Classical at least seems a realistic goal. What it means is that chess is more complicated than we thought it was.

↑ comment by lc · 2024-11-22T05:06:56.591Z · LW(p) · GW(p)

As the name suggests, Leela Queen Odds is trained specifically to play without a queen, which is of course an absolutely bonkers disadvantage against 2k+ elo players. One interesting wrinkle is the time constraint. AIs are better at fast chess (obviously), and apparently no one who's tried is yet able to beat it consistently at 3+0 (3 minutes with no timing increment)

↑ comment by simplegeometry · 2024-11-22T05:47:32.429Z · LW(p) · GW(p)

At rapid time controls, it seems like we could maybe go even against Magnus with knight odds? If not Magnus, perhaps other high-rated GMs.

There was a match with the most recently updated LeelaKnightOdds and GM Alex Lenderman but I don't recall the score exactly. EDIT: which was 19-3-2 win draw loss.

↑ comment by [deleted] · 2024-11-22T05:51:18.350Z · LW(p) · GW(p)

rapid time controls

I am very skeptical of this on priors, for the record. I think this statement could be true for superblitz time controls and whatnot, but I would be shocked if knight odds would be enough to beat Magnus in a 10+0 or 15+0 game. That being said, I have no inside knowledge, and I would update a lot of my beliefs significantly if your statement as currently written actually ends up being true.

Replies from: Veedrac, simplegeometry↑ comment by Veedrac · 2024-12-17T11:45:32.396Z · LW(p) · GW(p)

LeelaKnightOdds has convincingly beaten both Awonder Liang and Anish Giri at 3+2 by large margins, and has an extremely strong record at 5+3 against people who have challenged it.

I think 15+0 and probably also 10+0 would be a relatively easy win for Magnus based on Awonder, a ~150 elo weaker player, taking two draws at 8+3 and a win and a draw at 10+5. At 5+3 I'm not sure because we have so little data at winnable time controls, but wouldn't expect an easy win for either player.

It's also certainly not the case that these few-months-old networks running a somewhat improper algorithm are the best we could build—it's known at minimum that this Leela is tactically weaker than normal and can drop endgame wins, even if humans rarely capitalize on that.

↑ comment by simplegeometry · 2024-11-22T05:58:10.676Z · LW(p) · GW(p)

Hissha from the Lc0 server reports 19 wins, 3 draws, and 2 losses against Lenderman (currently ~2500 FIDE) at 15+10 from a knight odds match 2 months ago -- with the caveat that Lenderman started playing too fast after 10 games. I haven't run the numbers but suspect this would be enough to go even against a 2750, if not Magnus?

I was surprised too. I think it's an exciting development :)

Replies from: None↑ comment by [deleted] · 2024-11-22T06:02:33.060Z · LW(p) · GW(p)

Hmm, that sounds about right based on the usual human-vs-human transfer from Elo difference to performance... but I am still not sure if that holds up when you have odds games, which feel qualitatively different to me than regular games. Based on my current chess intuition, I would expect the ability to win odds games to scale better than ELO near the top level, but I could be wrong about this.

↑ comment by Archimedes · 2024-11-22T23:29:13.945Z · LW(p) · GW(p)

Knight odds is pretty challenging even for grandmasters.

comment by Zach Stein-Perlman · 2023-06-29T02:36:50.180Z · LW(p) · GW(p)

Some nitpicks:

- You write like Stockfish 14 is a probabilistic function from game-state to next-move, the thing-which-has-an-ELO. But I think Stockfish 14 running on X hardware for Y time is the real probabilistic function from game-state to next-move (see e.g. the inclusion of hardware in ELO ranking here). And you probably played with hardware and time such that its ELO is substantially below 3549.

- I think a human with Stockfish's ELO would be much better at beating you down odds of a queen, since (not certain about these):

- Stockfish is optimized for standard chess and human grandmasters are probably better at transferring to odds-chess.

- Stockfish roughly tries to maximize P(win) against optimal play or Stockfish-level play, or maximize number of moves before losing once it knows you have a winning strategy. Human grandmasters would adapt to be better against your skill level (e.g. by trying to make positions more complex), and would sometimes correctly make choices that would be bad against Stockfish or optimal play but good against weaker players.

comment by Olli Järviniemi (jarviniemi) · 2024-12-05T23:24:53.211Z · LW(p) · GW(p)

The post studies handicapped chess as a domain to study how player capability and starting position affect win probabilities. From the conclusion:

In the view of Miles and others, the initially gargantuan resource imbalance between the AI and humanity doesn’t matter, because the AGI is so super-duper smart, it will be able to come up with the “perfect” plan to overcome any resource imbalance, like a GM playing against a little kid that doesn't understand the rules very well.

The problem with this argument is that you can use the exact same reasoning to imply that’s it’s “obvious” that Stockfish could reliably beat me with queen odds. But we know now that that’s not true.

Since this post came out, a chess bot (LeelaQueenOdds) that has been designed to play with fewer pieces has come out. simplegeometry's comment [LW(p) · GW(p)] introduces it well. With queen odds, LQO is way better than Stockfish, which has not been designed for it. Consequentially, the main empirical result of the post is severely undermined. (I wonder how far even LQO is from truly optimal play against humans.)

(This is in addition to - as is pointed out by many commenters - how the whole analogue is stretched at best, given the many critical ways in which chess is different from reality. The post has little argument in favor of the validity of the analogue.)

I don't think the post has stood the test of time, and vote against including it in the 2023 Review.

Replies from: martin-randall↑ comment by Martin Randall (martin-randall) · 2025-02-03T01:13:47.940Z · LW(p) · GW(p)

While I agree that this post was incorrect, I am fond of it, because the resulting conversation made a correct prediction that LeelaPieceOdds was possible. Most clearly in a thread started by lc [LW(p) · GW(p)]:

I have wondered for a while if you couldn't use the enormous online chess datasets to create an "exploitative/elo-aware" Stockfish, which had a superhuman ability to trick/trap players during handicapped games, or maybe end regular games extraordinarily quickly, and not just handle the best players.

(not quite a prediction as phrased, but I still infer a prediction overall).

Interestingly there were two reasons given for predicting that Stockfish is far from optimal when giving Queen odds to a less skilled player:

- Stockfish is not trained on positions where it begins down a queen (out-of-distribution)

- Stockfish is trained to play the Nash equilibrium move, not to exploit weaker play (non-exploiting)

The discussion didn't make clear predictions about which factor would be most important, or whether both would be required, or whether it's more complicated than that. Folks who don't yet know might make a prediction before reading on.

For what it's worth, my prediction was that non-exploiting play is more important. That's mostly based on a weak intuition that starting without a queen isn't that far out of distribution, and neural networks generalize well. Another way of putting it: I predicted that Stockfish was optimizing the wrong thing more than it was too dumb to optimize.

And the result? Alas, not very clear to me. My research is from the the lc0 blog, with posts such as The LeelaPieceOdds Challenge: What does it take you to win against Leela?. The journey began with the "contempt" setting, which I understand as expecting worse opponent moves. This allows reasonable opening play and avoids forced piece exchanges. However GM-beating play was unlocked with a fine-tuned odds-play-network, which impacts both out-of-distribution and non-exploiting concerns.

One surprise gives me more respect for the out-of-distribution theory. The developer's blog first mentioned piece odds in The Lc0 v0.30.0 WDL rescale/contempt implementation

In our tests we still got reasonable play with up to rook+knight odds, but got poor performance with removed (otherwise blocked) bishops.

So missing a single bishop is in some sense further out-of-distribution than missing a rook and a knight! The later blog I linked explains a bit more:

Removing one of the two bishops leads to an unrealistic color imbalance regarding the pawn structure far beyond the opening phase.

An interesting example where the details of going out-of-distribution matter more than the scale of going out-of-distribution. There's an article that may have more info in New in Chess, but it's paywalled and I don't know if has more info on the machine-learning aspects or the human aspects.

comment by Kei · 2023-06-29T00:38:37.838Z · LW(p) · GW(p)

While I think your overall point is very reasonable, I don't think your experiments provide much evidence for it. Stockfish generally is trained to play the best move assuming its opponent is playing best moves itself. This is a good strategy when both sides start with the same amount of pieces, but falls apart when you do odds games.

Generally the strategy to win against a weaker opponent in odds games is to conserve material, complicate the position, and play for tricks - go for moves which may not be amazing objectively but end up winning material against a less perceptive opponent. While Stockfish is not great at this, top human chess players can be very good at it. For example, a top grandmaster Hikaru Nakamura had a "Botez Gambit Speedrun" (https://www.youtube.com/playlist?list=PL4KCWZ5Ti2H7HT0p1hXlnr9OPxi1FjyC0), where he sacrificed his queen every game and was able to get to 2500 on chess.com, the level of many chess masters.

This isn't quite the same as your queen odds setup (it is easier), and the short time format he is on is a factor, but I assume he would be able to beat most sub-1500 FIDE players with queen odds. A version of Stockfish trained to exploit a human's subpar ability would presumably do even better.

comment by Ege Erdil (ege-erdil) · 2023-07-06T10:58:42.503Z · LW(p) · GW(p)

I'm surprised by how much this post is getting upvoted. It gives us essentially zero information about any question of importance, for reasons that have already been properly explained by other commenters:

-

Chess is not like the real world in important respects. What the threshold is for material advantage such that a 1200 elo player could beat Stockfish at chess tells us basically nothing about what the threshold is for humans, either individually or collectively, to beat an AGI in some real-world confrontation. This point is so trivial that I feel somewhat embarrassed to be making it, but I have to think that people are just not getting the message here.

-

Even focusing only on chess, the argument here is remarkably weak because Stockfish is not a system trained to beat weaker opponents with piece odds. There are Go AIs that have been trained for this kind of thing, e.g. KataGo can play reasonably well in positions with a handicap if you tell it that its opponent is much weaker than itself. In my experience, KataGo running on consumer hardware can give the best players in the world 3-4 stones and have an even game.

If someone could try to convince me that this experiment was not pointless and actually worth running for some reason, I would be interested to hear their arguments. Note that I'm more sympathetic to "this kind of experiment could be valuable if ran in the right environment", and my skepticism is specifically about running it for chess.

Replies from: polytope, habryka4, MichaelStJules↑ comment by polytope · 2023-07-07T04:43:32.000Z · LW(p) · GW(p)

(I'm the main KataGo dev/researcher)

Just some notes about KataGo - the degree to which KataGo has been trained to play well vs weaker players is relatively minor. The only notable thing KataGo does is in some self-play games to give up to an 8x advantage in how many playouts one side has over the other side, where each side knows this. (Also KataGo does initialize some games with handicap stones to make them in-distribution and/or adjust komi to make the game fair). So the strong side learns to prefer positions that elicit higher chance of mistakes by the weaker side, while the weak side learns to prefer simpler positions where shallower search doesn't harm things as much.

This method is cute because it adds pressure to only learn "general high-level strategies" for exploiting a compute advantage, instead of memorizing specific exploits (which one might hypothesize to be less likely to generalize to arbitrary opponents). Any specific winning exploit learned by the stronger side that works too well will be learned by the weaker side (it's the same neural net!) and subsequently will be avoided and stop working.

And it's interesting that "play for positions that a compute-limited yourself might mess up more" correlates with "play for positions that a weaker human player might mess up in".

But because this method doesn't adapt to exploit any particular other opponent, and is entirely ignorant of a lot of tendencies of play shared widely across all humans, I would still say it's pretty minor. I don't have hard data, but from firsthand subjective observation I'm decently confident that top human amateurs or pros do a better job playing high-handicap games (> 6 stones) against players that more than that many ranks weaker than them than KataGo would, despite KataGo being stronger in "normal" gameplay. KataGo definitely plays too "honestly", even with the above training method, and lacks knowledge of what weaker humans find hard.