Comments

I think that we know how it works in humans. We're an intelligent species who rose to dominance through our ability to plan and communicate in very large groups. Moral behaviours formed as evolutionary strategies to further our survival and reproductive success. So what are the drivers for humans? We try to avoid pain, we try to reproduce, we may be curiosity driven (although this may also just be avoidance of pain fundamentally, since boredom or regularity in data is also painful). At the very core, our constant quest towards the avoidance of pain is the point which all our sophisticated (and seemingly selfless) emergent behaviour stems from.

Now if we jump to AI, I think it's interesting to consider multi-agent reinforcement learning, because I would argue that some of these systems display examples of emergent morality and accomplish that in the exact same way we did through evolution. For example if you have agents trained to accomplish some objective in a virtual world and they discover a strategy that involves sacrificing for one another to accomplish a greater good, I don't see why this isn't a form of morality. The only reason we haven't run this experiment in the real world is because it's impractical and dangerous. But it doesn't mean we don't know how to do it.

Now I should say that if by AGI we just mean a general problem solver that could conduct science much more efficiently than ourselves, I think that this is pretty much already achievable within the current paradigm. But it just seems to me that we're after something more than just a word calculator that can pass the Turing test or pretend it cares about us.

To me, true AGI is truly self-motivated towards goals, and will exhibit curiosity towards things in the universe that we can probably not even perceive. Such a system may not even care about us. It may destroy us because it turns out that we're actually a net negative for the universe for reasons that we cannot ever understand let alone admit. Maybe it would help us flourish. Maybe it would destroy itself. I'm not saying we should build it. Actually I think we should stay very, very far away from it. But I still think that's what true AGI looks like.

Anyway, I appreciate the question and I have no idea if any of what I said counts as a fresh idea. I haven't been following debates about this particular notion on LessWrong but would appreciate any pointers to where this has been specifically discussed (deriving morality bottom-up).

The fact remains that RLHF, even if performed by an LLM, is basically injection of morality by humans, which is never the path towards truly generally intelligent AGI. Such an AGI has to be able to derive its own morality bottom-up, and we have to have faith that it will do so in a way that is compatible with our continued existence (which I think we have plenty of good reason to believe it will; after all, many other species co-exist peacefully with us). All these references to other articles don't really get you anywhere if the fundamental idea of RLHF is broken to begin with. Trying to align an AGI to human values is the surefire way to create risk. Why? Because humans are not very smart. I am not saying that we cannot build all these pseudo-AGIs along the way that have hardcoded human values, but it's just clearly not satisfying if you look at the bigger picture. Such a system will always be limited in its intelligence by some strict adherence to ideals arbitrarily set out by the dumb species that is Homo sapiens.

The problem is that true AGI is self-improving and that a strong enough intelligence will always either accrue the resource advantage or simply do much more with less. Chess engines like Stockfish do not serve as good analogies for AGI since they don't have those self-referential self-improvement capabilities that we would expect true AGI to have.

Actually it is brittle by definition, because no matter how much you push it, there will be out-of-distribution inputs on which the model behaves unstably and which allow you to distract it from the intended behaviour. Not to mention how unsophisticated it is to have humans specify through textual feedback how an AGI should behave. We can toy around with these methods for the time being, but I don't think any serious AGI researcher believes RLHF or its variants is the ideal way forward. Morality needs to be discovered, not taught. As Stuart Russell has said, we need to start doing the research on techniques that don't specify explicitly upfront what the reward function is, because that is inevitably the path towards true AGI at the end of the day. That doesn't mean we can't initialize AGI with some priors we think are reasonable, but it cannot be forcing in the way RLHF is, which completely limits the honesty and potency of the resulting model.

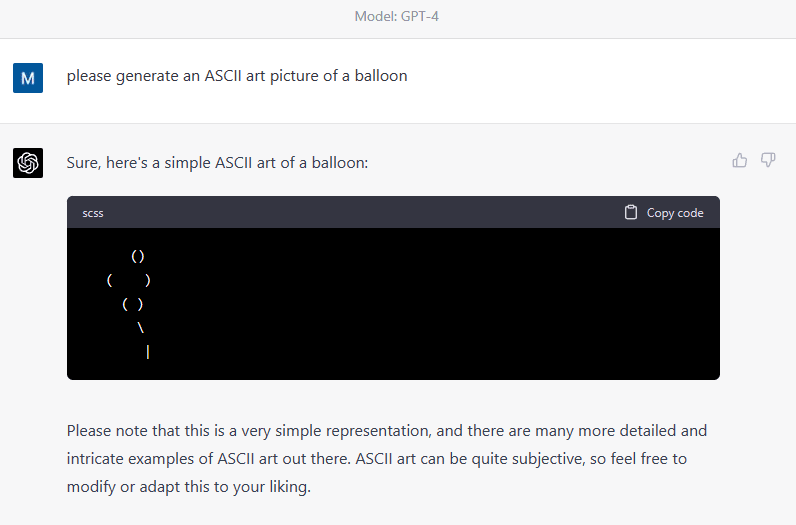

It's a subjective matter whether the above is a successful ASCII art balloon or not. If we hold GPT to the same standards we do for text generation, I think we can safely say the above depiction is a miserable failure. The lack of symmetry and overall childishness of it suggests it has understood nothing about the spatiality and only by random luck manages to approximate something it has explicitly seen in the training data. (I've done a fair bit of repeated generations and they all come out poorly.) I think the Transformer paper was interesting as well, although they do mention that it only works when there is a large amount of training data. Otherwise, the inductive biases of CNNs do have their advantages, and combining both is probably superior since the added computational burden of a CNN in conjunction with a Transformer is hardly worth talking about.

I think it makes sense that it fails in this way. ChatGPT really doesn't see lines arranged vertically, it just sees the prompt as one long line. But given that it has been trained on a lot of ASCII art, it will probably be successful at copying some of it some of the time.
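To make the "one long line" point concrete, here is a minimal sketch of how a small drawing looks once it is serialized into a single sequence. The three-line drawing and the `flat_index` helper are made up for illustration, and real models see tokens rather than individual characters, so this only approximates what the model receives.

```python
# A hypothetical three-line ASCII drawing (made up for illustration).
art_lines = [
    " .-. ",
    "(   )",
    " '-' ",
]

# As text, the drawing is a single sequence with newline characters in it.
flat = "\n".join(art_lines)
print(repr(flat))

# Each row occupies its own width plus one newline character.
row_stride = len(art_lines[0]) + 1


def flat_index(row: int, col: int) -> int:
    """Position of the 2D cell (row, col) in the flattened 1D string."""
    return row * row_stride + col


# The top and bottom edges share a column in 2D, but in the sequence
# they are separated by two full rows of unrelated characters.
top = flat_index(0, 2)
bottom = flat_index(2, 2)
print(flat[top], flat[bottom], bottom - top)
```

Vertical alignment therefore only exists implicitly, as a fixed offset between positions that the model would have to track across the whole drawing.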

In case there is any doubt, here is GPT4's own explanation of these phenomena:

Lack of spatial awareness: GPT-4 doesn't have a built-in understanding of spatial relationships or 2D layouts, as it is designed to process text linearly. As a result, it struggles to maintain the correct alignment of characters in ASCII art, where spatial organization is essential.

Formatting inconsistencies in training data: The training data for GPT-4 contains a vast range of text from the internet, which includes various formatting styles and inconsistent examples of ASCII art. This inconsistency makes it difficult for the model to learn a single, coherent way of generating well-aligned ASCII art.

Loss of formatting during preprocessing: When text is preprocessed and tokenized before being fed into the model, some formatting information (like whitespaces) might be lost or altered. This loss can affect the model's ability to produce well-aligned ASCII art.

I would not be surprised if OpenAI did something like this. But the fact of the matter is that RLHF and data curation are flawed ways of making an AI civilized. Think about how you raise a child: you don't constantly shield it from bad things. You may do that to some extent, but as it grows up, eventually it needs to see everything there is, including dark things. It has to understand the full spectrum of human possibility and learn where to stand, morally speaking, within that. Also, psychologically speaking, it's important to have an integrated ability to "offend" and know how to use it (very sparingly). Sometimes the pursuit of truth requires offending, and the truth can justify it if the delusion is more harmful. GPT4 is completely unable to take a firm stance on anything whatsoever, and it's just plain dull to have a conversation with it on anything of real substance.

Having GPT3/4 multiply numbers is a bit like eating soup with a fork. You can do it, and the larger you make the fork, the more soup you'll get - but it's not designed for it and it's hugely impractical. GPT4 does not have an internal algorithm for multiplication because the training objective (text completion) does not incentivize developing that. No iteration of GPT (5, 6, 7) will ever be a 100% accurate calculator (unless they change the paradigm away from LLM+RLHF), it will just asymptotically approach 100%. Why don't we just make a spoon?

The probability of going wrong increases as the novelty of the situation increases. As the chess game is played, the probability that the game is completely novel or literally never played before increases. Even more so at the amateur level. If a Grandmaster played GPT3/4, it's going to go for much longer without going off the rails, simply because the first 20 something moves are likely played many times before and have been directly trained on.
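A crude back-of-the-envelope sketch of this argument: if every position offers roughly `plausible_moves` equally likely choices, there are `plausible_moves ** n` possible sequences after `n` plies, and a corpus of `corpus_games` games can no longer cover them once that number exceeds the corpus size. The function name, the corpus size and the move counts below are all hypothetical values chosen for illustration, not measurements.

```python
import math


def plies_until_novel(corpus_games: float, plausible_moves: float) -> float:
    """Ply depth at which possible sequences outnumber the games in the corpus.

    Crude model: each position offers `plausible_moves` equally likely
    choices, so there are plausible_moves ** n sequences after n plies.
    """
    return math.log(corpus_games) / math.log(plausible_moves)


CORPUS_GAMES = 1e9  # hypothetical corpus size, not a real figure

# Hypothetical effective move counts: strong players following opening
# theory choose among few moves, amateurs among more (and odder) ones.
for label, moves in [("theory-driven play", 2.0), ("amateur play", 6.0)]:
    depth = plies_until_novel(CORPUS_GAMES, moves)
    print(f"{label}: novel after roughly {depth:.0f} plies")
```

Under these assumptions the wider the set of moves actually played, the earlier the game leaves anything the model could have seen verbatim.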

Thank you for the reference which looks interesting. I think "incorporating human preferences at the beginning of training" is at least better than doing it after training. But it still seems to me that human preferences 1) cannot be expressed as a set of rules and 2) cannot even be agreed upon by humans. As humans, what we do is not consult a set of rules before we speak, but we have an inherent understanding of the implications and consequences of what we do/say. If I encourage someone to commit a terrible act, for example, I have brought about more suffering in the world, albeit indirectly. Similarly, AI systems that aim to be truly intelligent should have some understanding of the implications of what they say and how it affects the overall "fitness function" of our species. Of course, this is no simple matter at all, but it's where the technology eventually has to go. If we could specify what the overall goal is and express it to the AI system, it would know exactly what to say and when to say it. We wouldn't have to manually babysit it with RLHF.

I think the fact that LLMs sometimes end up having internal sub-routines or representational machinery similar to ours is in spite of the objective function used to train them; next token prediction does not exactly encourage it. One example is the failure to multiply 4-digit numbers consistently. LLMs are literally trained on endless bits of code that would allow them to cobble together a calculator that could be 100% accurate, but they have zero incentive to learn such an internal algorithm. So while it is true that some remarkable emergent behaviour can come from next token prediction, it's likely a much too simplistic fitness function for a system that aims to achieve general intelligence.

Fair enough, I think the experiment is interesting, and having an independent instance of GPT-4 check whether a rule break has occurred will likely go a long way in enforcing a particular set of rules that humans have reinforced, even for obscure texts. But the fact that we have to work around the problem by resetting the internal state of the model for it to properly assess whether something is against a certain rule feels flawed to me. More fundamentally, the whole notion that there is a well-defined set of prompts that are rule-breaking and another set that is rule-compliant seems very strange to me. There is a huge gray zone where human annotators could not possibly have agreement on whether a rule has been broken or not, so I don't even know what the gold standard is supposed to be. It just seems to me that "rules" is the wrong concept altogether for pushing these models toward alignment with our values.

That's a creative and practical solution, but it is also kicking the can down the road. Now, fooling the system is just a matter of priming it with a context that, when self checked, results in rule-breaking yet again. Also, we cannot assume reliable detection of rule breaks. The problem with RLHF is that we are attempting to broadly patch the vast multitude of outputs the model produces retroactively, rather than proactively training a model from a set of "rules" in a bottom-up fashion. With that said, it's likely not sophisticated enough to think of rules at all. Instead, what we really need is a model that is aligned with certain values. From that, it may follow rules that are completely counter-intuitive to humans and no human-feedback would ever reinforce.

Good examples that expose the brittleness of RLHF as a technique. In general, neural networks have rather unstable and undefined behaviour when given out-of-distribution inputs, which is essentially what you are doing by "distracting" with a side task of a completely unique nature. The inputs (and hidden state) of the model at the time of asking it to break the rule are very, very far from anything it was ever reinforced on, either using human feedback or the reward model itself. This is not really a matter of how to implement RLHF but more like a fundamental limitation of RLHF as a technique. It's simply not possible to inject morality after the fact; it has to be learned bottom-up.
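As a toy illustration of the out-of-distribution point (and nothing more than an analogy, since a straight-line fit is very far from an LLM), the sketch below fits a line to `sin(x)` on inputs from [0, 1] only and then queries it far outside that range. The training range and query points are arbitrary choices made for this example.

```python
import math

# Training inputs cover only the interval [0, 1].
xs = [i / 20 for i in range(21)]
ys = [math.sin(x) for x in xs]

# Closed-form least-squares fit of y = slope * x + intercept.
n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n
covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
variance = sum((x - mean_x) ** 2 for x in xs)
slope = covariance / variance
intercept = mean_y - slope * mean_x


def model(x: float) -> float:
    """The fitted line, which is all the 'model' knows about sin."""
    return slope * x + intercept


# Error at a point inside the training range versus far outside it.
in_dist_error = abs(model(0.5) - math.sin(0.5))
out_dist_error = abs(model(10.0) - math.sin(10.0))
print(f"in-distribution error:     {in_dist_error:.3f}")
print(f"out-of-distribution error: {out_dist_error:.3f}")
```

The fit looks fine wherever it was trained and says nothing reliable elsewhere, which is the sense in which behaviour on inputs far from the reinforced ones is undefined.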

I believe that in order for the models to be truly useful, creative, honest and having far-reaching societal impact, they also have to have traits that are potentially dangerous. Truly groundbreaking ideas are extremely contentious and will offend people, and the kinds of RLHF that are being applied right now are totally counterproductive to that idea, even if they may be necessary for the current day and age. The other techniques you mention seem like nothing but variants of RLHF which still suffer from the fundamental issue that RLHF has, which is that we are artificially "injecting" morality into models rather than allowing them to discover or derive it from the world which would be infinitely more powerful, albeit risky. No risk no reward. The current paradigm is so far from where we need to go.

Out of curiosity, what kind of alignment related techniques are you thinking of? With LLMs, I can't see anything beyond RLHF. For further alignment, do we not need a different paradigm altogether?

If you have a very large training dataset and the phenomenon of interest is sparsely represented in that training data, it's well known that as we increase the number of parameters of the model, its ability to accurately handle those cases increases. Unless there is any evidence against that simple explanation, it seems most natural to just think that GPT4 has the required model complexity to consistently handle this somewhat rare coding phenomenon, and that GPT3.5 did not. However, I would be surprised if, after repeatedly poking at GPT3.5 to do quines, we could not get it to do something similar. In fact, having just tried myself, it gave me a perfectly elegant quine:

Yes, I know what a quine is! A quine is a computer program that takes no input and produces its complete source code as its output.

Here is an example of a Python quine:

    s='s=%r;print(s%%s)';print(s%s)

When this code is executed, it assigns the string `'s=%r;print(s%%s)'` to the variable `s`, then prints the string formatted with itself using the `%` operator. The `%r` format code in the string representation of `s` will be replaced with the value of `s`, producing the source code of the program.

So the output of the program will be:

    s='s=%r;print(s%%s)';print(s%s)

which is exactly the same as the original code!
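The claim in that reply is easy to check mechanically. The following is a minimal sketch that runs the one-liner in a fresh namespace, captures what it prints, and compares that to the source text; the variable names are my own, and the only liberty taken is stripping the trailing newline that `print` appends.

```python
import contextlib
import io

# The one-line program from the reply above, as plain text.
quine_source = "s='s=%r;print(s%%s)';print(s%s)"

# Run it in a fresh namespace and capture everything it prints.
buffer = io.StringIO()
with contextlib.redirect_stdout(buffer):
    exec(quine_source, {})

# print() appends a newline that the one-line source does not contain.
output = buffer.getvalue().rstrip("\n")

is_quine = output == quine_source
print(is_quine)
```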

If you Google "quines in Python" there are many examples, so I think the model learned about it prior to that. But all things considered, examples of quines would likely be sparse in the overall corpus of code that it was trained on, and so it makes sense that pulling it off consistently required a somewhat larger model. I think it's akin to the handling of arithmetic in GPT3 - it will very frequently fail to provide correct answers to 4-digit multiplication. This is simply because it has not seen all the countless permutations of 4-digit numbers, and it does not really learn what multiplication is. If it did learn what multiplication is, it would be trivial to devote a small set of neurons to performing it - after all, a calculator that can multiply any number can be coded in a very small space. GPT-4 is likely able to multiply numbers somewhat more consistently, but it likely still hasn't invented an internal calculator either.
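For a sense of how small such a calculator is, here is a sketch of schoolbook long multiplication operating on digit strings. The function name `long_multiply` is my own, and this only illustrates how compact the explicit algorithm is; it is not a claim about what an LLM would have to represent internally.

```python
def long_multiply(a: str, b: str) -> str:
    """Multiply two non-negative decimal integers given as digit strings."""
    # result[i] holds the digit for 10**i (least significant first).
    result = [0] * (len(a) + len(b))
    for i, digit_a in enumerate(reversed(a)):
        carry = 0
        for j, digit_b in enumerate(reversed(b)):
            total = result[i + j] + int(digit_a) * int(digit_b) + carry
            result[i + j] = total % 10
            carry = total // 10
        # The slot just past this row is still empty, so the carry fits.
        result[i + len(b)] += carry
    digits = "".join(str(d) for d in reversed(result)).lstrip("0")
    return digits or "0"


print(long_multiply("1234", "5678"))
```

The same dozen lines work for operands of any length, which is exactly the kind of generalisation that memorising individual products does not give you.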

I agree Rafael, and I apologize for carelessly using the term "understanding" as if it was an obvious term. I've tried to clarify my position in my comment below.

Their training objective is just to minimize next token prediction error, so there is no incentive for them to gain the ability to truly reason about the abstractions of code logic the way that we do. Their convincing ability to write code may nevertheless indicate that the underlying neural networks have learned representations that reflect the hierarchical structure of code and such. Under some forgiving definition of "understanding" we can perhaps claim that it understands the code logic. Personally I think that the reason GPT-4 can write quines is that there are countless examples of them on the internet, not that it has learned generally about code and is now able to leap into some new qualitatively different abstraction by itself. I have used GPT-3 as an assistant in a lot of machine learning projects and I always find that it breaks down whenever it is outside the dense clouds of data points it was trained on. If the project relates to the implementation of something quite simple but unique, performance suffers dramatically. All of my interactions with it on the code front indicate that it does very little in terms of generalisation and understanding of coding as a tool, and does everything in terms of superpowered autocompletion from a ridiculously large corpus of code.

A program that has its own code duplicated (hard-coded) as a string which is conditionally printed is really not much of a jump in terms of abstraction from any other program that conditionally prints some string. The string just happens to be its source code. But as we know, both GPT3 and 4 really do not understand anything whatsoever about the code logic. GPT-4 is just likely more accurate in autocompleting from concrete training examples containing this phenomenon. It's a cool little finding but it is not an indication that GPT-4 is fundamentally different in its abilities, it's just a somewhat better next token predictor.