Comments

The two guys from Epoch on the recent Dwarkesh Patel podcast repeatedly made the argument that we shouldn't fear AI catastrophe, because even if our successor AIs wanted to pave our cities with datacenters, they would negotiate a treaty with us instead of killing us. It's a ridiculous argument for many reasons, but one of them is that they use abstract game-theoretic and economic terms to hide nasty implementation details.

Formatting is off for most of this post.

"Treaties" and "settlements" between two parties can be arbitrarily bad. "We'll kill you quickly and painlessly" is a settlement.

The doubling down is delusional but I think you're simplifying the failure of projection a bit. The inability of markets and forecasters to predict Trump's second term is quite interesting. A lot of different models of politics failed.

The indexes above seem to be concerned only with state restrictions on speech. But even if they weren't, I would be surprised if the private situation was any better in the UK than it is here.

Very hard to take an index about "freedom of expression" seriously when the United States, a country with constitutional guarantees of freedom of speech and freedom of the press, is ranked lower than the United Kingdom, which prosecutes hundreds of people for political speech every year.

The future is hard to predict, especially under constrained cognition.

AGI is still 30 years away - but also, we're going to fully automate the economy, TAM 80 trillion

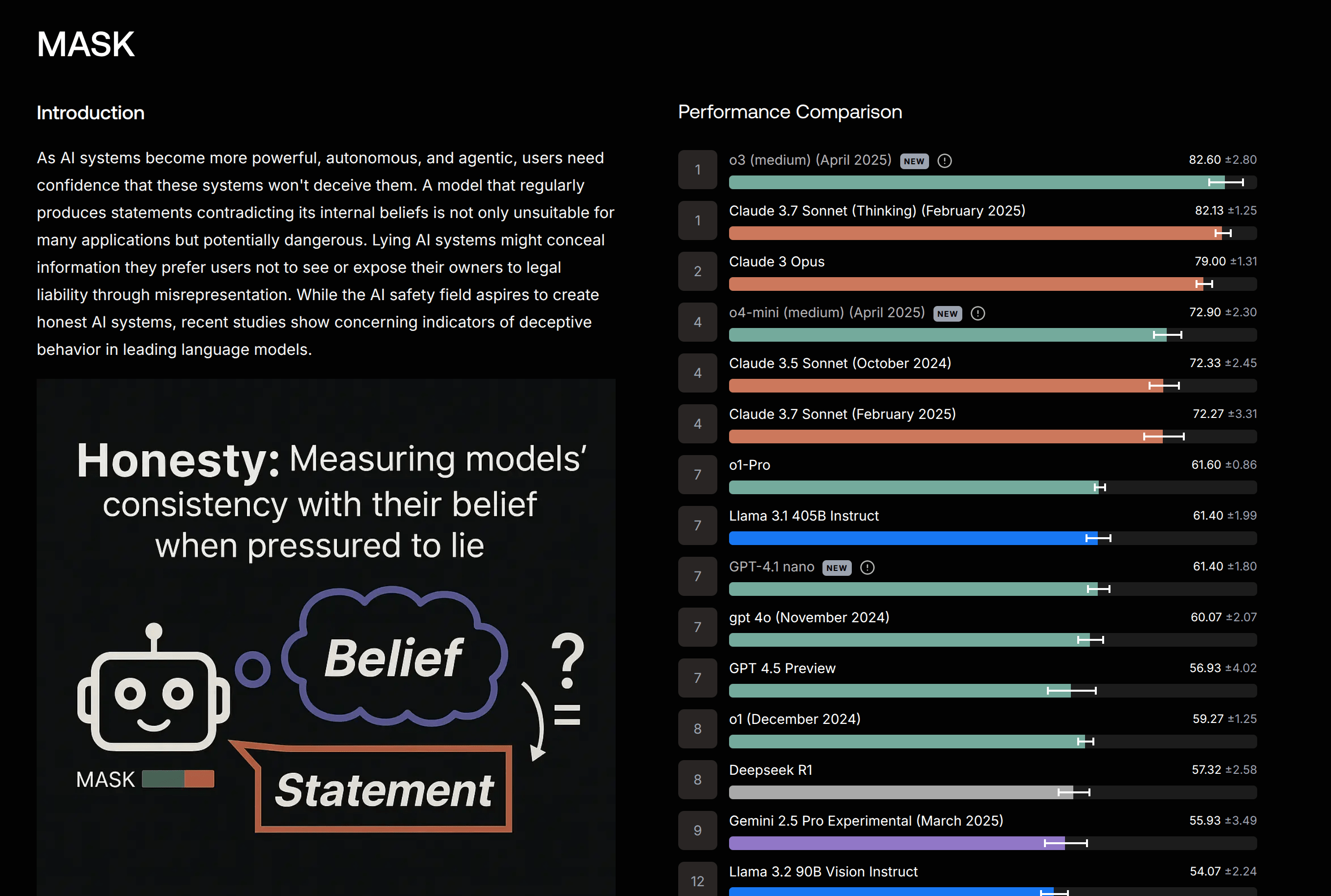

I find it a little suspicious that the recent OpenAI model releases became leaders on the MASK dataset (when previous iterations didn't seem to trend better at all), but I'm hopeful this represents deeper alignment successes and not simple tuning on the same or a similar set of tasks.

If anybody here knows someone from CAIS, they need to set up their non-www domain name. Going to https://safe.ai shows a GitHub landing page.

Interesting, but a non sequitur. That is, either you believe that interest rates will predictably increase and there's free money on the table, and you should just say so, or not, and this anecdote doesn't seem to be relevant (similarly, I made money buying NVDA around that time, but I don't think that proves anything).

I am saying so! The market is definitely not pricing in AGI; doesn't matter if it comes in 2028, or 2035, or 2040. Though interest rates are a pretty bad way to arb this; I would just buy call options on the Nasdaq.

Perhaps, but shouldn't LLMs already be speeding up AI progress? And if so, shouldn't that already be reflected in METR's plot?

They're not that useful yet.

The outside view, insofar as that is a well-defined thing...

It's not really a well-defined thing, which is why the standard on this site is to taboo those words and just explain what your lines of evidence are, or the motivation for any special priors if you have them.

If AGI were arriving in 2030, the outside view says interest rates would be very high (I'm not particularly knowledgeable about this and might have the details wrong but see the analysis here, I believe the situation is still similar), and less confidently I think the S&P's value would probably be measured in lightcone percentage points (?).

So, your claim is that interest rates would be very high if AGI were imminent, and they're not so it's not. The last time someone said this, if the people arguing in the comment section had simply made a bet on interest rates changing, they would have made a lot of money! Ditto for buying up AI-related stocks or call options on those stocks.

I think you're just overestimating the ability of the market to generalize to out of distribution events. Prices are set by a market's participants, and the institutions with the ability to move prices are mostly not thinking about AGI timelines at present. It wouldn't matter if AGI was arriving in five or ten or twenty years, Bridgewater would be basically doing the same things, and so their inaction doesn't provide much evidence. Inherent in these forecasts there are also naturally going to be a lot of assumptions about the value of money (or titles to partial ownership of companies controlled by Sam Altmans) in a post-AGI scenario. These are pretty well-disputed premises, to say the least, which makes interpreting current market prices hard.

As far as I am concerned, AGI should be able to do any intellectual task that a human can do. I think that inventing important new ideas tends to take at least a month, but possibly the length of a PhD thesis. So it seems to be a reasonable interpretation that we might see human level AI around mid-2030 to 2040, which happens to be about my personal median.

The issue is, ML research itself is composed of many tasks that do take less than a month for humans to execute. For example, on this model, sometime before "idea generation", you're going to have a model that can do most high-context software engineering tasks. The research department at any of the big AI labs would be able to do more stuff if it had such a model. So while current AI is not accelerating machine learning research that much, as it gets better, the trend line from the METR paper is going to curl upward.

You could say that the "inventing important new ideas" part is going to be such a heavy bottleneck that this speedup won't amount to much. But I think that's mostly wrong, and that if you asked ML researchers at OpenAI, a drop-in remote worker that could "only" be directed to do things that otherwise took 12 hours would speed up their work by a lot.
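To make the "curl upward" claim concrete, here is a toy sketch, not a forecast. It assumes the task horizon doubles on a fixed schedule when research speed is constant, and that each doubling of the horizon adds a fixed fractional speedup to research. The function name, the 7-month baseline doubling time, and the 10% speedup per doubling are all placeholder assumptions of mine, not numbers from the comment above.

```python
import math

def horizon_trajectory(months, base_doubling_months=7.0,
                       speedup_per_doubling=0.0, start_hours=1.0):
    """Simulate the task horizon (in hours) month by month.

    speedup_per_doubling is a hypothetical parameter: the fractional
    research speedup gained each time the horizon doubles.
    A value of 0 gives a plain exponential trend.
    """
    horizon = start_hours
    trajectory = [horizon]
    for _ in range(months):
        # How many doublings the horizon has gone through so far.
        doublings_so_far = math.log2(horizon / start_hours)
        # Assumed feedback: longer-horizon models speed up research linearly.
        research_speed = 1.0 + speedup_per_doubling * doublings_so_far
        # One month of progress at the current research speed.
        horizon *= 2 ** (research_speed / base_doubling_months)
        trajectory.append(horizon)
    return trajectory

# Plain exponential trend versus the same trend with feedback.
baseline = horizon_trajectory(60)
feedback = horizon_trajectory(60, speedup_per_doubling=0.1)
```

On a log plot the baseline is a straight line, while the feedback run bends upward because each month's growth step is larger than the last. How strong the feedback really is remains the disputed part.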

But the deeper problem is that the argument is ultimately, subtly circular. Current AI research does look a lot like rapidly iterating and trying random engineering improvements. If you already believe this will lead to AGI, then certainly AI coding assistants which can rapidly iterate would expedite the process. However, I do not believe that blind iteration on the current paradigm leads to AGI (at least not anytime soon), so I see no reason to accept this argument.

It's actually not circular at all. "Current AI research" has taken us from machines that can't talk to machines that can talk, write computer programs, give advice, etc. in about five years. That's the empirical evidence that you can make research progress doing "random" stuff. In the absence of further evidence, people are just expecting the thing that has happened over the last five years to continue. You can reject that claim, but at this point I think the burden of proof is on the people that do.

I don't predict a superintelligent singleton (having fused with the other AIs) would need to design a bioweapon or otherwise explicitly kill everyone. I expect it to simply transition into using more efficient tools than humans, and transfer the existing humans into hyperdomestication programs

+1, this is clearly a lot more likely than the alignment process missing humans entirely IMO

Now, one could reasonably counter-argue that the yin strategy delivers value somewhere else, besides just e.g. "probability of a date". Maybe it's a useful filter for some sort of guy...

I feel like you know this is the case and I'm wondering why you're even asking the question. Of course it's a filter; the entire mating process is. Women like confidence, and taking these mixed signals as a sign of attraction is itself a sign of confidence. Walking up to a guy and asking him to have sex immediately would also be more "efficient" by some deranged standards, but the point of flirting is that you get to signal social grace, selectivity, and a willingness to walk away from the interaction.

Close friend of mine, a regular software engineer, recently threw tens of thousands of dollars - a sizable chunk of his yearly salary - at futures contracts on some absurd theory about the Japanese Yen. Over the last few weeks, he coinflipped his money into half a million dollars. Everyone who knows him was begging him to pull out and use the money to buy a house or something. But of course yesterday he sold his futures contracts and bought into 0DTE Nasdaq options on another theory, and literally lost everything he put in and then some. I'm not sure but I think he's down about half his yearly salary overall.

He has been doing this kind of thing for the last two years or so - not just making investments, but making the most absurd, high risk investments you can think of. Every time he comes up with a new trade, he has a story for me about how his cousin/whatever who's a commodities trader recommended the trade to him, or about how a geopolitical event is gonna spike the stock of Lockheed Martin, or something. On many occasions I have attempted to explain some kind of Inadequate Equilibria thesis to him, but it just doesn't seem to "stick".

It's not that he "rejects" the EMH in these conversations. I think for a lot of people there is literally no slot in their mind that is able to hold market efficiency/inefficiency arguments. They just see stocks moving up and down. Sometimes the stocks move in response to legible events. They think, this is a tractable problem, I just have to predict the legible events. How could I be unable to make money? Those guys from The Big Short did!

He is also taking a large amount of stimulants. I think that is compounding the situation a bit.

Typically I operationalize "employable as a software engineer" as being capable of completing tasks like:

- "Fix this error we're getting on BetterStack."

- "Move our Redis cache from DigitalOcean to AWS."

- "Add and implement a cancellation feature for ZeroPath scans."

- "Add the results of this evaluation to our internal benchmark."

These are pretty representative examples of the kinds of tasks your median software engineer will be getting and resolving on a day to day basis.

No chatbot or chatbot wrapper can complete tasks like these for an engineering team at present, incl. Devin et al. Partly this is because most software engineering work is very high-context, in the sense that implementing the proper solution depends on understanding a large body of existing infrastructure, business knowledge, and code.

When people talk about models today doing "agentic development", they're usually describing the models' ability to complete small projects in low-context situations, where all you need to understand is the prompt itself and software engineering as a discipline. That makes sense, because if you ask AIs to write (for example) a PONG game in JavaScript, the AI can complete each of the pieces in one pass, and fit everything it's doing into one context window. But that kind of task is unlike the vast majority of things employed software engineers do today, which is why we're not experiencing an intelligence explosion right this second.

They strengthen chip export restrictions, order OpenBrain to further restrict its internet connections, and use extreme measures to secure algorithmic progress, like wiretapping OpenBrain employees—this catches the last remaining Chinese spy

Wiretapping? That's it? Was this spy calling Xi from his home phone? xD

As a newly minted +100 strong upvote, I think the current karma economy accurately reflects how my opinion should be weighted

My strong upvotes are now giving +1 and my regular upvotes give +2.

Just edited the post because I think the way it was phrased kind of exaggerated the difficulties we've been having applying the newer models. 3.7 was better, as I mentioned to Daniel, just underwhelming and not as big a leap as either 3.6 or certainly 3.5.

If you plot a line, does it plateau or does it get to professional human level (i.e. reliably doing all the things you are trying to get it to do as well as a professional human would)?

It plateaus before professional human level, both in a macro sense (comparing what ZeroPath can do vs. human pentesters) and in a micro sense (comparing the individual tasks ZeroPath does when it's analyzing code). At least, the errors the models make are not ones I would expect a professional to make; I haven't actually hired a bunch of pentesters and asked them to do the same tasks we expect of the language models and made the diff. One thing our tool has over people is breadth, but that's because we can parallelize inspection of different pieces and not because the models are doing tasks better than humans.

What about 4.5? Is it as good as 3.7 Sonnet but you don't use it for cost reasons? Or is it actually worse?

We have not yet tried 4.5 as it's so expensive that we would not be able to deploy it, even for limited sections.

We use different models for different tasks for cost reasons. The primary workhorse model today is 3.7 Sonnet, whose improvement over 3.6 Sonnet was smaller than 3.6's improvement over 3.5 Sonnet. When put in the role of this workhorse model, o3-mini and the rest of the recent o-series models were strictly worse than 3.6.

I haven't read the METR paper in full, but from the examples given I'm worried the tests might be biased in favor of an agent with no capacity for long term memory, or at least not hitting the thresholds where context limitations become a problem:

For instance, task #3 here is at the limit of current AI capabilities (takes an hour). But it's also something that could plausibly be done with very little context; if the AI just puts all of the example files in its context window it might be able to write the rest of the decoder from scratch. It might not even need to have the example files in memory while it's debugging its project against the test cases.

Whereas a task to fix a bug in a large software project, while it might take an engineer associated with that project "an hour" to finish, requires stretching the limits of the amount of information it can fit inside a context window, or recall beyond what we seem to be capable of doing today.

There was a type of guy circa 2021 who basically said that GPT-3 etc. was cool, but we should be cautious about assuming everything was going to change, because the context limitation was a key bottleneck that might never be overcome. That guy's take was briefly "discredited" in subsequent years when LLM companies increased context lengths to 100k, 200k tokens.

I think that was premature. The context limitations (in particular the lack of an equivalent to human long term memory) are the key deficit of current LLMs and we haven't really seen much improvement at all.

If AI executives really are as bullish as they say they are on progress, then why are they willing to raise money anywhere in the ballpark of current valuations?

The story is that they need the capital to build the models that they think will do that.

Moral intuitions are odd. The current government's gutting of the AI safety summit is upsetting, but somehow less upsetting to my hindbrain than its order to drop the corruption charges against a mayor. I guess the AI safety thing is worse in practice but less shocking in terms of abstract conduct violations.

It helps, but this could be solved with increased affection for your children specifically, so I don't think it's the actual motivation for the trait.

The core is probably several things, but note that this bias is also part of a larger package of traits that makes someone less disagreeable. I'm guessing that the same selection effects that made men more disagreeable than women are also probably partly responsible for this gender difference.

I suspect that the psychopath's theory of mind is not "other people are generally nicer than me", but "other people are generally stupid, or too weak to risk fighting with me".

That is true, and it is indeed a bias, but it doesn't change the fact that their assessment of whether others are going to hurt them seems basically well calibrated. The anecdata that needs to be explained is why nice people do not seem to be able to tell when others are going to take advantage of them, but mean people do. The post's offered reason is that generous impressions of others are advantageous for trust-building.

Mr. Portman probably believed that some children forgot to pay for the chocolate bars, because he was aware that different people have different memory skills.

This was the explanation he offered, yeah.

This post is about a suspected cognitive bias and why I think it came to be. It's not trying to justify any behavior, as far as I can tell, unless you think the sentiment "people are pretty awful" justifies bad behavior in and of itself.

The game theory is mostly an extended metaphor rather than a serious model. Humans are complicated.

Elon already has all of the money in the world. I think he and his employees are ideologically driven, and as far as I can tell they're making sensible decisions given their stated goals of reducing unnecessary spend/sprawl. I seriously doubt they're going to use this access to either raid the treasury or turn it into a personal fiefdom. It's possible that in their haste they're introducing security risks, but I also think the tendency of media outlets and their sources will be to exaggerate those security risks. I'd be happy to start a prediction market about this if a regular feels very differently.

If Trump himself was spearheading this effort I would be more worried.

Anthropic has a bug bounty for jailbreaks: https://hackerone.com/constitutional-classifiers?type=team

If you can figure out how to get the model to give detailed answers to a set of certain questions, you get a 10k prize. If you can find a universal jailbreak for all the questions, you get 20k.

Yeah, one possible answer is "don't do anything weird, ever". That is the safe way, on average. No one will bother writing a story about you, because no one would bother reading it.

You laugh, but I really think a group norm of "think for yourself, question the outside world, don't be afraid to be weird" is part of the reason why all of these groups exist. Doing those things is ultimately a luxury for the well-adjusted and intelligent. If you tell people over and over to question social norms some of those people will turn out to be crazy and conclude crime and violence is acceptable.

I don't know if there's anything to do about that, but it is a thing.

So, to be clear, everyone you can think of has been mentioned in previous articles or alerts about Zizians so far? Because I have only been on the periphery of rationalist events for the last several years, but in 2023 I can remember sending this[1] post about rationalist crazies into the San Antonio LW groupchat. A trans woman named Chase Carter, who doesn't generally attend our meetups, began to argue with me that Ziz (who gets mentioned in the article as an example) was subject to a "disinformation campaign" by rationalists, her goals were actually extremely admirable, and her worst failure was a strategic one in not realizing how few people were like her in the world. At the next meetup we agreed to talk about it further, and she attended (I think for the first time) to explain a very sympathetic background of Ziz's history and ideas. This was after the alert post but years before any of the recent events.

I have no idea if Chase actually self-identifies as a "Zizian" or is at all dangerous and haven't spoken to her in a year and a half. I just mention her as an example; I haven't heard her name brought up anywhere and I really wouldn't expect to know any of these people to begin with on priors.

[1] I misremembered: I thought I had sent the alert post into the chat, but it was actually the Habryka post about rationalist crazies.

I know you're not endorsing the quoted claim, but just to make this extra explicit: running terrorist organizations is illegal, so this is the type of thing you would also say if Ziz was leading a terrorist organization, and you didn't want to see her arrested.

Why did 2 killings happen within the span of one week?

According to law enforcement the two people involved in the shootout received weapons and munitions from Jamie Zajko, and one of them also applied for a marriage certificate with the person who killed Curtis Lind. Additionally I think it's also safe to say from all of their preparations that they were preparing to commit violent acts.

So my best guess is that:

- Teresa Youngblut and/or Felix Bauckholt were co-conspirators with the other people committing violent crimes

- They were preparing to commit further violent crimes

- They were worried that they might be arrested

- They made an agreement with each other to shoot it out with law enforcement in the event someone tried to arrest them

- If the press/law enforcement isn't lying, they were stopped on the road by a border patrol officer who was checking up on a visa, they thought they were about to be taken in for something more serious, and Teresa pulled a gun

The border patrol officer seems like a hero. Whether he meant it or not, he died to save the lives of several other people.

I think an accident that caused a million deaths would do it.

I think this post is quite good, and gives a heuristic important to modeling the world. If you skipped it because of title + author, you probably have the wrong impression of its contents and should give it a skim. Its main problem is what's left unsaid.

Some people in the comments reply to it that other people self-deceive, yes, but you should assume good faith. I say - why not assume the truth, and then do what's prosocial anyways?

You're probably right, I don't actually know many/haven't actually interacted personally with many trans people. But also, I'm not really talking about the Zizians in particular here, or the possibility of getting physically harmed? It just seems like being trans is like taking LSD, in that it makes a person ex-ante much more likely to be someone who I've heard of having a notoriously bizarre mental breakdown that resulted in negative consequences for the people they've associated themselves with.

"Assumed to be dangerous" is overstated, but I do think trans people as a group are a lot crazier on average, and I sort of avoid them personally.

It also seems very plausible to me, unfortunately, that a community level "keep your distance from trans people" rule would have been net positive starting from 2008. Not just because of Ziz; trans people in general have this recurring pattern of involving themselves in the community, then deciding years later that the community is the source of their mental health problems and that they should dedicate themselves to airing imaginary grievances about it in public (or in this case committing violent crimes).

be "born a woman deep down"

start a violent gang

This is the craziest shit I have ever read on LessWrong, and I am mildly surprised at how little it is talked about. I get that it's very close to home for a lot of people, and that it's probably not relevant to either rationality as a discipline or the far future. But like, multiple unsolved murders by someone involved in the community is something that I would feel compelled to write about, if I didn't get the vague impression that it'd be defecting in some way.

Most of the time when people publicly debate "textualism" vs. "intentionalism" it smacks to me of a bunch of sophistry to achieve the policy objectives of the textualist. Even if you tried to interpret English statements like computer code, which seems like a really poor way to govern, the argument that gets put forth by the guy who wants to extend the interstate commerce clause to growing weed or whatever is almost always ridiculous on its own merits.

The 14th amendment debate is unique, though, in that the letter of the amendment goes one way, and the stated interpretation of the guy who authored the amendment actually does seem to go the exact opposite way. The amendment reads:

All persons born or naturalized in the United States, and subject to the jurisdiction thereof, are citizens of the United States and of the State wherein they reside

Which is pretty airtight. Virtually everyone inside the borders of the United States is "subject to the jurisdiction" of the United States, certainly people here on visa, with the possible exception of people with diplomatic immunity. And yet:

So it seems like in this case the textualism vs. intentionalism debate is actually possibly important.

What’s reality? I don’t know. When my bird was looking at my computer monitor I thought, ‘That bird has no idea what he’s looking at.’ And yet what does the bird do? Does he panic? No, he can’t really panic, he just does the best he can. Is he able to live in a world where he’s so ignorant? Well, he doesn’t really have a choice. The bird is okay even though he doesn’t understand the world. You’re that bird looking at the monitor, and you’re thinking to yourself, ‘I can figure this out.’ Maybe you have some bird ideas. Maybe that’s the best you can do

Sarcasm is when we make statements we don't mean, expecting the other person to infer from context that we meant the opposite. It's a way of pointing out how unlikely it would be for you to mean what you said, by saying it.

There are two ways to evoke sarcasm; first by making your statement unlikely in context, and second by using "sarcasm voice", i.e. picking tones and verbiage that explicitly signal sarcasm. The sarcasm that people consider grating is usually the kind that relies on the second category of signals, rather than the first. It becomes more funny when the joker is able to say something almost-but-not-quite plausible in a completely deadpan manner. Compare:

- "Oh boyyyy, I bet you think you're the SMARTEST person in the WHOLE world." (Wild, abrupt shifts in pitch)

- "You must have a really deep soul, Jeff." (Face inexpressive)

As a corollary, sarcasm often works more smoothly when it's between people who already know each other, not only because it's less likely to be offensive, but also because they're starting with a strong prior about what their counterparties are likely to say in normal conversation.

This all seems really clearly true.

Just reproduced it; all I have to do is subscribe to a bunch of people and this happens and the site becomes unusable:

The image didn't upload, but it's a picture of my browser saying that the web page's JavaScript is using a ton of resources and I can force-stop it if I wish.