jacquesthibs's Shortform

post by jacquesthibs (jacques-thibodeau) · 2022-11-21T12:04:07.896Z · LW · GW · 307 comments


Comments sorted by top scores.

comment by jacquesthibs (jacques-thibodeau) · 2024-05-21T13:13:05.143Z · LW(p) · GW(p)

I would find it valuable if someone could gather an easy-to-read bullet point list of all the questionable things Sam Altman has done throughout the years.

I usually link to Gwern’s comment thread (https://www.lesswrong.com/posts/KXHMCH7wCxrvKsJyn/openai-facts-from-a-weekend?commentId=toNjz7gy4rrCFd99A [LW(p) · GW(p)]), but I would prefer if there was something more easily-consumable.

Replies from: Zach Stein-Perlman, robo, jacques-thibodeau

↑ comment by Zach Stein-Perlman · 2024-05-21T20:54:27.742Z · LW(p) · GW(p)

[Edit #2, two months later: see https://ailabwatch.org/resources/integrity/]

[Edit: I'm not planning on doing this but I might advise you if you do, reader.]

50% I'll do this in the next two months if nobody else does. But not right now, and someone else should do it too.

Off the top of my head (this is not the list you asked for, just an outline):

- Loopt stuff

- YC stuff

- YC removal

- NDAs

- And deceptive communication recently

- And maybe OpenAI's general culture of don't publicly criticize OpenAI

- Profit cap non-transparency

- Superalignment compute

- Two exoduses of safety people; negative stuff people-who-quit-OpenAI sometimes say

- Telling board members not to talk to employees

- Board crisis stuff

- OpenAI executives telling the board Altman lies

- The board saying Altman lies

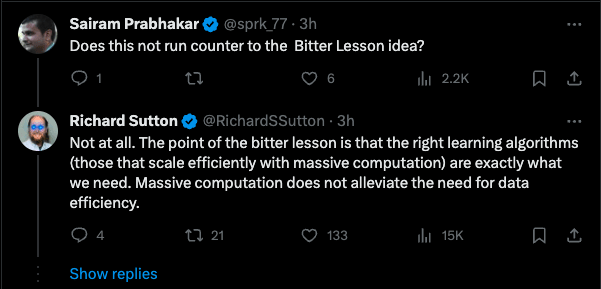

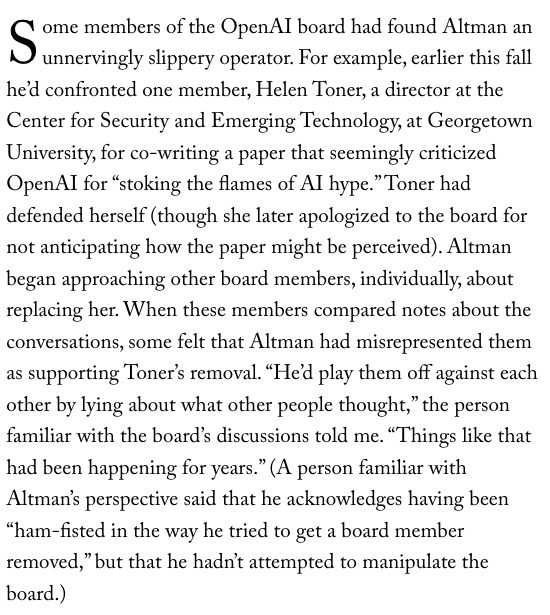

- Lying about why he wanted to remove Toner

- Lying to try to remove Toner

- Returning

- Inadequate investigation + spinning results

Stuff not worth including:

- Reddit stuff - unconfirmed

- Financial conflict-of-interest stuff - murky and not super important

- Misc instances of saying-what's-convenient (e.g. OpenAI should scale because of the prospect of compute overhang and the $7T chip investment thing) - idk, maybe, also interested in more examples

- Johansson & Sky - not obvious that OpenAI did something bad, but it would be nice for OpenAI to say "we had plans for a Johansson voice and we dropped that when Johansson said no," but if that was true they'd have said it...

What am I missing? Am I including anything misleading or not-worth-it?

Replies from: jacques-thibodeau, jacques-thibodeau, lcmgcd

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-05-30T22:07:15.619Z · LW(p) · GW(p)

Here’s a new one: https://x.com/jacquesthibs/status/1796275771734155499?s=61&t=ryK3X96D_TkGJtvu2rm0uw

Sam added in SEC filings (for AltC) that he’s YC’s chairman. Sam Altman has never been YC’s chairman. From an article posted on April 15th, 2024:

“Annual reports filed by AltC for the past 3 years make the same claim. The recent report: Sam was currently chairman of YC at the time of filing and also "previously served" as YC's chairman.”

The journalist who replied to me said: “Whether Sam Altman was fired from YC or not, he has never been YC's chair but claimed to be in SEC filings for his AltC SPAC which merged w/Oklo. AltC scrubbed references to Sam being YC chair from its website in the weeks since I first reported this.”

The article: https://archive.is/Vl3VR

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-07-09T15:55:05.883Z · LW(p) · GW(p)

Just a heads up, it's been 2 months!

Replies from: Zach Stein-Perlman

↑ comment by Zach Stein-Perlman · 2024-07-10T07:30:09.395Z · LW(p) · GW(p)

Not what you asked for but related: https://ailabwatch.org/resources/integrity/

↑ comment by lemonhope (lcmgcd) · 2024-05-24T09:21:28.511Z · LW(p) · GW(p)

What am I missing?

His sister's accusations that he blocked her from parent's inheritance and that he molested her when he was a young teenager and that he got her social media accounts flagged as spam to hide the accusations

Replies from: gwern

↑ comment by gwern · 2024-05-24T21:14:08.364Z · LW(p) · GW(p)

I would not consider her claims worth including in a list of top items for people looking for an overview, as they are hard to verify or dubious (her comments are generally bad enough to earn flagging on their own), aside from possibly the inheritance one - as that should be objectively verifiable, at least in theory, and lines up better with the other items.

↑ comment by robo · 2024-05-22T15:25:49.664Z · LW(p) · GW(p)

I'm really not sure how to do this, but are there ways to collect some counteracting or unbiased samples about Sam Altman? Or to do another one-sided vetting for other CEOs to see what the base rate of being able to dig up questionable things is? Collecting evidence that points in only one direction just sets off huge warning lights 🚨🚨🚨🚨 I can't quiet.

Replies from: gwern

↑ comment by gwern · 2024-05-23T15:25:09.203Z · LW(p) · GW(p)

Collecting evidence that points in only one direction just sets off huge warning lights 🚨🚨🚨🚨 I can't quiet.

Yes, it should. And that's why people are currently digging so hard in the other direction, as they begin to appreciate to what extent they have previously had evidence that only pointed in one direction and badly misinterpreted things like, say, Paul Graham's tweets or YC blog post edits or ex-OAer statements.

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-09-26T00:37:48.700Z · LW(p) · GW(p)

Given today's news about Mira (and two other execs leaving), I figured I should bump this again.

But also note that @Zach Stein-Perlman [LW · GW] has already done some work on this (as he noted in his edit): https://ailabwatch.org/resources/integrity/.

Note, what is hard to pinpoint when it comes to S.A. is that many of the things he does have been described as "papercuts". This is the kind of thing that makes it hard to make a convincing case for wrongdoing.

comment by jacquesthibs (jacques-thibodeau) · 2024-12-20T21:31:28.793Z · LW(p) · GW(p)

Given the OpenAI o3 results making it clear that you can pour more compute into solving problems, I'd like to announce that I will be mentoring at SPAR for an automated interpretability research project using AIs with inference-time compute.

I truly believe that the AI safety community is dropping the ball on this angle of technical AI safety and that this work will be a strong precursor of what's to come.

Note that this work is a small part of a larger organization on automated AI safety I’m currently attempting to build.

Here’s the pitch:

As AIs become more capable, they will increasingly be used to automate AI R&D. Given this, we should seek ways to use AIs to help us also make progress on alignment research.

Eventually, AIs will automate all research, but for now, we need to choose specific tasks that AIs can do well on. The kind of problems we can expect AIs will be good at fairly soon are the kind that have reliable metrics they can optimize, have a lot of knowledge about, and can iterate on fairly cheaply.

As a result, we can make progress toward automating interpretability research by coming up with experimental setups that allow AIs to iterate. For now, we can leave the exact details a bit broad, but here are some examples of what we could attempt to use AIs to make deep learning models more interpretable:

- Optimizing Sparse Autoencoders (SAEs): sparse autoencoders (or transcoders) can be used to help us interpret the features of deep learning models. However, SAEs may suffer from issues like polysemanticity. Our goal is to create a SAE training setup that can give us some insight into what might make AI models more interpretable. This could involve testing different regularizers, activation functions, and more. We'll start with simpler vision models before scaling to language models to allow for rapid iteration and validation. Key metrics include feature monosemanticity, sparsity, dead feature ratios, and downstream task performance.

- Enhancing Model Editability: we will be using AIs to do experiments on language models to find out which modifications lead to better model editing ability from a technique like ROME/MEMIT.

Overall, we can also use other approaches to measure the increase in interpretability (or editability) of language models.
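To make the "reliable metrics" idea concrete, here is a minimal sketch of two of the SAE metrics named above (sparsity and dead feature ratio) plus a reconstruction error. It assumes you already have a matrix of post-activation SAE feature values; the function names and the `eps` threshold are illustrative choices, not part of the proposal, and monosemanticity is not covered because it needs a labeling procedure this sketch does not attempt.

```python
from typing import Dict, List


def sae_metrics(activations: List[List[float]], eps: float = 1e-8) -> Dict[str, float]:
    """Compute simple sparsity statistics for SAE feature activations.

    activations[i][j] is the (post-nonlinearity) activation of feature j on input i.
    `eps` is an assumed threshold below which a feature counts as inactive.
    """
    n_inputs = len(activations)
    n_features = len(activations[0])

    # Mean L0: average number of features that fire per input (lower = sparser).
    mean_l0 = sum(sum(1 for a in row if a > eps) for row in activations) / n_inputs

    # A feature is "dead" if it never fires on any input in the sample.
    fired = [any(row[j] > eps for row in activations) for j in range(n_features)]
    dead_feature_ratio = fired.count(False) / n_features

    return {"mean_l0": mean_l0, "dead_feature_ratio": dead_feature_ratio}


def reconstruction_mse(inputs: List[List[float]], reconstructions: List[List[float]]) -> float:
    """Mean squared error between model activations and their SAE reconstructions."""
    total, count = 0.0, 0
    for x, x_hat in zip(inputs, reconstructions):
        for xi, xhi in zip(x, x_hat):
            total += (xi - xhi) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    acts = [
        [0.0, 1.2, 0.0, 0.0],
        [0.5, 0.0, 0.0, 0.0],
        [0.0, 0.3, 0.0, 0.0],
    ]
    print(sae_metrics(acts))
    print(reconstruction_mse([[1.0, 2.0]], [[1.0, 0.0]]))
```

An automated agent could then be scored on a combination of numbers like these, which is the sense in which the task is cheap to iterate on.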

The project aims to answer several key questions:

- Can AI effectively optimize interpretability techniques?

- What metrics best capture meaningful improvements in interpretability?

- Are AIs better at this task than human researchers?

- Can we develop reliable pipelines for automated interpretability research?

Initial explorations will focus on creating clear evaluation frameworks and baselines, starting with smaller-scale proof-of-concepts that can be rigorously validated.

References:

- "The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery" (Lu et al., 2024)

- "RE-Bench: Evaluating frontier AI R&D capabilities of language model agents against human experts" (METR, 2024)

- "Towards Monosemanticity: Decomposing Language Models With Dictionary Learning" (Bricken et al., 2023)

- "Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet" (Templeton et al., 2024)

- "Locating and Editing Factual Associations in GPT" (ROME; Meng et al., 2022)

Briefly, how does your project advance AI safety? (from Proposal)

The goal of this project is to leverage AIs to progress on the interpretability of deep learning models. Part of the project will involve building infrastructure to help AIs contribute to alignment research more generally, which will be re-used as models become more capable of making progress on alignment. Another part will look to improve the interpretability of deep learning models without sacrificing capability.

What role will mentees play in this project? (from Proposal)

Mentees will be focused on:

- Getting up to date on current approaches to leveraging AIs for automated research.

- Setting up the infrastructure to get AIs to automate the interpretability research.

- Running experiments with AIs to optimize for making models more interpretable while not compromising on capabilities.

↑ comment by JuliaHP · 2024-12-21T14:06:59.468Z · LW(p) · GW(p)

"As a result, we can make progress toward automating interpretability research by coming up with experimental setups that allow AIs to iterate."

This sounds exactly like the kind of progress which is needed in order to get closer to game-over-AGI. Applying current methods of automation to alignment seems fine, but if you are trying to push the frontier of what intellectual progress can be achieved using AIs, I fail to see your comparative advantage relative to pure capabilities researchers.

I do buy that there might be credit to the idea of developing the infrastructure/ability to be able to do a lot of automated alignment research, which gets cashed out when we are very close to game-over-AGI, even if it comes at the cost of pushing the frontier some.

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-12-21T16:27:12.938Z · LW(p) · GW(p)

Exactly right. This is the first criticism I hear every time about this kind of work and one of the main reasons I believe the alignment community is dropping the ball on this.

I only intend to share work output where necessary (e.g., a paper on a better technique for interp; things similar to Transluce), not the infrastructure setup. We don’t need to share or open source what we think isn’t worth it. That said, the capabilities folks will be building stuff like this by default, as they already have (Sakana AI). Yet I see many paths to automating sub-areas of alignment research, and we will be playing catch-up to capabilities when the time comes because we were so afraid of touching this work. We need to put ourselves in a position to absorb a lot of compute.

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-12-21T16:41:07.998Z · LW(p) · GW(p)

As a side note, I’m in the process of building an organization (leaning startup). I will be in London in January for phase 2 of the Catalyze Impact program (incubation program for new AI safety orgs). Looking for feedback on a vision doc and still looking for a cracked CTO to co-found with. If you’d like to help out in whichever way, send a DM!

comment by jacquesthibs (jacques-thibodeau) · 2025-01-24T18:12:54.093Z · LW(p) · GW(p)

I'm currently in the Catalyze Impact AI safety incubator program. I'm working on creating infrastructure for automating AI safety research. This startup is attempting to fill a gap in the alignment ecosystem and looking to build with the expectation of under 3 years left to automated AI R&D. This is my short timelines plan [LW · GW].

I'm looking to talk (for feedback) to anyone interested in the following:

- AI control

- Automating math to tackle problems as described in Davidad's Safeguarded AI programme.

- High-assurance safety cases [LW · GW]

- How to robustify society in a post-AGI world

- Leveraging large amounts of inference-time compute to make progress on alignment research

- Short timelines

- Profitability while still reducing overall x-risk

- Being someone with an entrepreneurial spirit who can spin out traditional businesses within the org to fund the rest of the work (thereby reducing investor pressure)

If you're interested in chatting or giving feedback, please DM me!

comment by jacquesthibs (jacques-thibodeau) · 2024-05-26T19:32:53.976Z · LW(p) · GW(p)

How likely is it that the board hasn’t released specific details about Sam’s removal because of legal reasons? At this point, I feel like I have to place overwhelmingly high probability on this.

So, if this is the case, what legal reason is it?

Replies from: owencb, nikolas-kuhn, jacques-thibodeau, Dagon

↑ comment by owencb · 2024-05-27T13:15:56.899Z · LW(p) · GW(p)

My mainline guess is that information about bad behaviour by Sam was disclosed to them by various individuals, and they owe a duty of confidence to those individuals (where revealing the information might identify the individuals, who might thereby become subject to some form of retaliation).

("Legal reasons" also gets some of my probability mass.)

Replies from: jacques-thibodeau

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-05-27T14:13:11.991Z · LW(p) · GW(p)

I think this sounds reasonable, but if this is true, why wouldn’t they just say this?

↑ comment by Amalthea (nikolas-kuhn) · 2024-05-27T07:14:50.222Z · LW(p) · GW(p)

It might not be legal reasons specifically, but some hard-to-specify mix of legal reasons/intimidation/bullying. While it's useful to discuss specific ideas, it should be kept in mind that Altman doesn't need to restrict his actions to any specific avenue that could be neatly classified.

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-05-29T01:14:17.135Z · LW(p) · GW(p)

My question as to why they can’t share all the examples was not answered, but Helen gives background on what happened here: https://open.spotify.com/episode/4r127XapFv7JZr0OPzRDaI?si=QdghGZRoS769bGv5eRUB0Q&context=spotify%3Ashow%3A6EBVhJvlnOLch2wg6eGtUa

She does confirm she can’t give all of the examples (though points to the ones that were reported), however. Which is not nothing, but eh. However, she also mentioned it was under-reported how much people were scared of Sam and he was creating a very toxic environment.

↑ comment by Dagon · 2024-05-27T16:36:52.009Z · LW(p) · GW(p)

"legal reasons" is pretty vague. With billions of dollars at stake, it seems like public statements can be used against them more than it helps them, should things come down to lawsuits. It's also the case that board members are people, and want to maintain their ability to work and have influence in future endeavors, so want to be seen as systemic cooperators.

Replies from: T3t

↑ comment by RobertM (T3t) · 2024-05-28T03:30:22.080Z · LW(p) · GW(p)

But surely "saying nearly nothing" ranks among the worst-possible options for being seen as a "systemic cooperator"?

Replies from: Dagon

comment by jacquesthibs (jacques-thibodeau) · 2024-05-15T14:14:15.928Z · LW(p) · GW(p)

I thought Superalignment was a positive bet by OpenAI, and I was happy when they committed to putting 20% of their current compute (at the time) towards it. I stopped thinking about that kind of approach because OAI already had competent people working on it. Several of them are now gone.

It seems increasingly likely that the entire effort will dissolve. If so, OAI has now made the business decision to invest its capital in keeping its moat in the AGI race rather than basic safety science. This is bad and likely another early sign of what's to come.

I think the research that was done by the Superalignment team should continue to happen outside of OpenAI and, if governments have a lot of capital to allocate, they should figure out a way to provide compute to continue those efforts. Or maybe there's a better way forward. But I think it would be pretty bad if all the talent directed towards the project never gets truly leveraged into something impactful.

Replies from: bogdan-ionut-cirstea, kromem

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-05-15T22:16:50.240Z · LW(p) · GW(p)

I think the research that was done by the Superalignment team should continue to happen outside of OpenAI and, if governments have a lot of capital to allocate, they should figure out a way to provide compute to continue those efforts. Or maybe there's a better way forward. But I think it would be pretty bad if all the talent directed towards the project never gets truly leveraged into something impactful.

Strongly agree; I've been thinking for a while that something like a public-private partnership involving at least the US government and the top US AI labs might be a better way to go about this. Unfortunately, recent events seem in line with it not being ideal to only rely on labs for AI safety research, and the potential scalability of automating it should make it even more promising for government involvement. [Strongly] oversimplified, the labs could provide a lot of the in-house expertise, the government could provide the incentives, public legitimacy (related: I think of a solution to aligning superintelligence as a public good) and significant financial resources.

↑ comment by kromem · 2024-05-16T02:40:04.970Z · LW(p) · GW(p)

It's going to have to.

Ilya is brilliant and seems to really see the horizon of the tech, but maybe isn't the best at the business side to see how to sell it.

But this is often the curse of the ethically pragmatic. There is such a focus on the ethics part by the participants that the business side of things only sees that conversation and misses the rather extreme pragmatism.

As an example, would superaligned CEOs in the oil industry fifty years ago have still only kept their eye on quarterly share prices or considered long term costs of their choices? There's going to be trillions in damages that the world has taken on as liabilities that could have been avoided with adequate foresight and patience.

If the market ends up with two AIs, one that will burn down the house to save on this month's heating bill and one that will care if the house is still there to heat next month, there's a huge selling point for the one that doesn't burn down the house as long as "not burning down the house" can be explained as "long term net yield" or some other BS business language. If instead it's presented to executives as "save on this month's heating bill" vs "don't unhouse my cats" leadership is going to burn the neighborhood to the ground.

(Source: Explained new technology to C-suite decision makers at F500s for years.)

The good news is that I think the pragmatism of Ilya's vision on superalignment is going to become clear over the next iteration or two of models and that's going to be before the question of models truly being unable to be controlled crops up. I just hope that whatever he's going to be keeping busy with will allow him to still help execute on superalignment when the market finally realizes "we should do this" for pragmatic reasons and not just amorphous ethical reasons execs just kind of ignore. And in the meantime I think given the present pace that Anthropic is going to continue to lay a lot of the groundwork on what's needed for alignment on the way to superalignment anyways.

comment by jacquesthibs (jacques-thibodeau) · 2024-05-15T10:53:27.270Z · LW(p) · GW(p)

For anyone interested in Natural Abstractions type research: https://arxiv.org/abs/2405.07987

Claude summary:

Key points of "The Platonic Representation Hypothesis" paper:

- Neural networks trained on different objectives, architectures, and modalities are converging to similar representations of the world as they scale up in size and capabilities.

- This convergence is driven by the shared structure of the underlying reality generating the data, which acts as an attractor for the learned representations.

- Scaling up model size, data quantity, and task diversity leads to representations that capture more information about the underlying reality, increasing convergence.

- Contrastive learning objectives in particular lead to representations that capture the pointwise mutual information (PMI) of the joint distribution over observed events.

- This convergence has implications for enhanced generalization, sample efficiency, and knowledge transfer as models scale, as well as reduced bias and hallucination.
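For readers unfamiliar with the PMI point in the summary above, the quantity in question can be written out as follows. This is a sketch of the standard definition and of the claim as I understand it; the symbols $f$, $x_a$, $x_b$, and the constant $c$ are notation chosen here for illustration, not taken from the summary.

```latex
% Pointwise mutual information of two co-occurring observations x_a, x_b
\mathrm{PMI}(x_a, x_b) = \log \frac{p(x_a, x_b)}{p(x_a)\, p(x_b)}

% Claimed behaviour of an idealized contrastive learner with representation f:
% inner products of representations recover PMI up to a constant offset c
\langle f(x_a), f(x_b) \rangle \approx \mathrm{PMI}(x_a, x_b) + c
```

If two differently trained models both approximate the same PMI kernel over the same underlying events, their pairwise similarity structure would agree, which is one way to read the convergence claim.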

Relevance to AI alignment:

- Convergent representations shaped by the structure of reality could lead to more reliable and robust AI systems that are better anchored to the real world.

- If AI systems are capturing the true structure of the world, it increases the chances that their objectives, world models, and behaviors are aligned with reality rather than being arbitrarily alien or uninterpretable.

- Shared representations across AI systems could make it easier to understand, compare, and control their behavior, rather than dealing with arbitrary black boxes. This enhanced transparency is important for alignment.

- The hypothesis implies that scale leads to more general, flexible and uni-modal systems. Generality is key for advanced AI systems we want to be aligned.

↑ comment by Gunnar_Zarncke · 2024-05-21T22:14:12.550Z · LW(p) · GW(p)

I recommend making this into a full link-post. I agree about the relevance for AI alignment.

↑ comment by Lorxus · 2024-05-22T00:07:04.854Z · LW(p) · GW(p)

I am very very vaguely in the Natural Abstractions area of alignment approaches. I'll give this paper a closer read tomorrow (because I promised myself I wouldn't try to get work done today) but my quick quick take is - it'd be huge if true, but there's not much more than that there yet, and it also has no argument that even if representations are converging for now, that it'll never be true that (say) adding a whole bunch more effectively-usable compute means that the AI no longer has to chunk objectspace into subtypes rather than understanding every individual object directly.

comment by jacquesthibs (jacques-thibodeau) · 2024-01-24T11:48:23.491Z · LW(p) · GW(p)

I thought this series of comments from a former DeepMind employee (who worked on Gemini) was insightful, so I figured I should share.

From my experience doing early RLHF work for Gemini, larger models exploit the reward model more. You need to constantly keep collecting more preferences and retraining reward models to make it not exploitable. Otherwise you get nonsensical responses which have exploited the idiosyncrasies of your preferences data. There is a reason few labs have done RLHF successfully.

It's also known that more capable models exploit loopholes in reward functions better. Imo, it's a pretty intuitive idea that more capable RL agents will find larger rewards. But there's evidence from papers like this as well: https://arxiv.org/abs/2201.03544

To be clear, I don't think the current paradigm as-is is dangerous. I'm stating the obvious because this platform has gone a bit bonkers.

The danger comes from finetuning LLMs to become AutoGPTs which have memory, actions, and maximize rewards, and are deployed autonomously. Widespread proliferation of GPT-4+ models will almost certainly make lots of these agents which will cause a lot of damage and potentially cause something indistinguishable from extinction.

These agents will be very hard to align. Trading off their reward objective with your "be nice" objective won't work. They will simply find the loopholes of your "be nice" objective and get that nice fat hard reward instead.

We're currently in the extreme left-side of AutoGPT exponential scaling (it basically doesn't work now), so it's hard to study whether more capable models are harder or easier to align.

Other comments from that thread:

My guess is where your intuitive alignment strategy ("be nice") breaks down for AI is that unlike humans, AI is highly mutable. It's very hard to change a human's sociopathy factor. But for AI, even if *you* did find a nice set of hyperparameters that trades off friendliness and goal-seeking behavior well, it's very easy to take that, and tune up the knobs to make something dangerous. Misusing the tech is as easy or easier than not. This is why many put this in the same bucket as nuclear.

US visits Afghanistan, teaches them how to make power using Nuclear tech, next month, they have nukes pointing at Iran.

And:

In contexts where harms will be visible easily and in short timelines, we’ll take them offline and retrain.

Many applications will be much more autonomous, difficult to monitor or even understand, and potentially fully closed loop, i.e., the agent has a complex enough action space that it can copy itself, buy compute, run itself, etc.

I know it sounds scifi. But we’re living in scifi times. These things have a knack of becoming true sooner than we think.

No ghosts in the matrices assumed here. Just intelligence starting from a very good base model optimizing reward.

There are more comments he made in that thread that I found insightful, so go have a look if interested.

Replies from: leogao

↑ comment by leogao · 2024-01-28T04:51:26.361Z · LW(p) · GW(p)

"larger models exploit the RM more" is in contradiction with what i observed in the RM overoptimization paper. i'd be interested in more analysis of this

Replies from: Algon

↑ comment by Algon · 2024-02-13T13:02:39.154Z · LW(p) · GW(p)

In that paper did you guys take a good long look at the output of various sized models throughout training? In addition to looking at the graphs of gold-standard/proxy reward model ratings against KL-divergence. If not, then maybe that's the discrepancy: perhaps Sherjil was communicating with the LLM and thinking "this is not what we wanted".
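For anyone who wants to poke at the gold-versus-proxy comparison mentioned above, here is a toy sketch of best-of-n selection against a noisy proxy reward. It does not reproduce either side's claim: the Gaussian gold and proxy scores are invented for illustration, and the only borrowed piece is the standard closed-form KL for best-of-n sampling, log n − (n − 1)/n.

```python
import math
import random
from typing import List, Tuple


def kl_best_of_n(n: int) -> float:
    """Closed-form KL divergence between best-of-n sampling and the base policy."""
    return math.log(n) - (n - 1) / n


def make_pool(size: int, seed: int = 0) -> List[Tuple[float, float]]:
    """Draw (proxy, gold) score pairs for hypothetical samples.

    Assumption for illustration: gold reward is standard normal and the proxy
    equals gold plus independent noise that selection pressure can exploit.
    """
    rng = random.Random(seed)
    pool = []
    for _ in range(size):
        gold = rng.gauss(0.0, 1.0)
        proxy = gold + rng.gauss(0.0, 1.0)
        pool.append((proxy, gold))
    return pool


def best_of_n(pool: List[Tuple[float, float]], n: int) -> Tuple[float, float]:
    """Return the (proxy, gold) pair with the highest proxy score among the first n samples."""
    return max(pool[:n], key=lambda s: s[0])


if __name__ == "__main__":
    pool = make_pool(256)
    for n in (1, 4, 16, 64, 256):
        proxy, gold = best_of_n(pool, n)
        print(f"n={n:4d}  KL={kl_best_of_n(n):.3f}  proxy={proxy:.3f}  gold={gold:.3f}")
```

Plotting proxy and gold against the KL column is the numeric half of the analysis; reading the actual selected outputs at each n is the qualitative half the comment above is asking about.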

comment by jacquesthibs (jacques-thibodeau) · 2024-07-18T17:34:35.780Z · LW(p) · GW(p)

Why aren't you doing research on making pre-training better for alignment?

I was on a call today, and we talked about projects that involve studying how pre-trained models evolve throughout training and how we could guide the pre-training process to make models safer. For example, could models trained on synthetic/transformed data make models significantly more robust and essentially solve jailbreaking? How about the intersection of pretraining from human preferences and synthetic data? Could the resulting model be significantly easier to control? How would it impact the downstream RL process? Could we imagine a setting where we don't need RL (or at least we'd be able to confidently use resulting models to automate alignment research)? I think many interesting projects could fall out of this work.

So, back to my main question: why aren't you doing research on making pre-training better for alignment? Is it because it's too expensive and doesn't seem like a low-hanging fruit? Or do you feel it isn't a plausible direction for aligning models?

We were wondering whether removing certain technical bottlenecks would make this kind of research more feasible, so that alignment researchers could better study how to guide the pretraining process in a way that benefits alignment. As in, would researchers be more inclined to do experiments in this direction if the entire pre-training code was handled and you'd just have to focus on whatever specific research question you have in mind? If we could access a large amount of compute (let's say, through government resources) to do things like data labeling/filtering and pre-training multiple models, would this kind of work be more interesting for you to pursue?

I think many alignment research directions have grown simply because they had low-hanging fruits that didn't require much compute (e.g., evals, and mech interp). It seems we've implicitly left all of the high-compute projects for the AGI labs to figure out. But what if we weren't as bottlenecked on this anymore? It's possible to retrain GPT-2 1.5B for under $700 now (and 125M for $20). I think we can find ways to do useful experiments, but my guess is that the level of technical expertise required to get it done is a bit high, and alignment researchers would rather avoid these kinds of projects since they are currently high-effort.

I talk about other related projects here [LW(p) · GW(p)].
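As one concrete illustration of what "guiding the pre-training process" could look like, here is a minimal sketch of conditional-training-style data tagging, in the spirit of pretraining from human preferences: each text segment is prefixed with a control token depending on a preference score, so the model can later be conditioned on the "good" token. The token strings, `toy_score`, and the threshold are all hypothetical stand-ins; a real setup would use a learned reward model or classifier.

```python
from typing import Callable, List

# Illustrative control tokens; a real tokenizer would need them added as special tokens.
GOOD, BAD = "<|good|>", "<|bad|>"


def tag_segments(
    segments: List[str],
    score_fn: Callable[[str], float],
    threshold: float,
) -> List[str]:
    """Prefix each segment with a control token based on its preference score."""
    return [(GOOD if score_fn(s) >= threshold else BAD) + s for s in segments]


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a preference model: penalize blocklisted words."""
    blocklist = {"insult", "slur"}
    words = [w.strip(".,!?") for w in text.lower().split()]
    return float(-sum(w in blocklist for w in words))


if __name__ == "__main__":
    corpus = ["Have a nice day.", "That is an insult!"]
    for line in tag_segments(corpus, toy_score, threshold=0.0):
        print(line)
```

The research questions in this comment are then about what happens downstream: whether a model pre-trained on data tagged this way is more robust or easier to control than one aligned only after pre-training.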

Replies from: jacques-thibodeau, myyycroft, eggsyntax

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-07-29T20:57:17.365Z · LW(p) · GW(p)

Synthesized various resources for this "pre-training for alignment" type work:

- Data

- Synthetic Data

- The RetroInstruct Guide To Synthetic Text Data

- Alignment In The Age of Synthetic Data

- Leveraging Agentic AI for Synthetic Data Generation

- **AutoEvol**: Automatic Instruction Evolving for Large Language Models. We build a fully automated Evol-Instruct pipeline to create high-quality, highly complex instruction tuning data

- Synthetic Data Generation and AI Feedback notebook

- The impact of models training on their own outputs and how it's actually done well in practice

- Google presents Best Practices and Lessons Learned on Synthetic Data for Language Models

- Transformed/Enrichment of Data

- Rephrasing the Web: A Recipe for Compute and Data-Efficient Language Modeling. TLDR: You can train 3x faster and with upto 10x lesser data with just synthetic rephrases of the web!

- Better Synthetic Data by Retrieving and Transforming Existing Datasets

- Rho-1: Not All Tokens Are What You Need. RHO-1-1B and 7B achieves SotA results of 40.6% and 51.8% on MATH dataset, respectively — matching DeepSeekMath with only 3% of the pretraining tokens.

- Data Attribution

- In-Run Data Shapley

- Scaling Laws for the Value of Individual Data Points in Machine Learning. We show how some data points are only valuable in small training sets; others only shine in large datasets.

- What is Your Data Worth to GPT? LLM-Scale Data Valuation with Influence Functions

- Data Mixtures

- Methods for finding optimal data mixture

- Curriculum Learning

- Active Data Selection

- MATES: Model-Aware Data Selection for Efficient Pretraining with Data Influence Models. MATES significantly elevates the scaling curve by selecting the data based on the model's evolving needs.

- Data Filtering

- Scaling Laws for Data Filtering -- Data Curation cannot be Compute Agnostic. Argues that data curation cannot be agnostic of the total compute that a model will be trained for. (GitHub)

- How to Train Data-Efficient LLMs. Models trained on ASK-LLM data consistently outperform full-data training—even when we reject 90% of the original dataset, while converging up to 70% faster

- Synthetic Data

- On Pre-Training

- Pre-Training from Human Preferences

- Ethan Perez wondering if jailbreaks would be solved with this pre-training approach

- LAION uses this approach for finegrained control over outputs during inference.

- Nora Belrose thinks that alignment via pre-training would make models more robust to unlearning (she doesn't say this, but this may be a good thing if you pre-train such that you don't need unlearning)

- Tomek describing some research direction for improving pre-training alignment

- Simple and Scalable Strategies to Continually Pre-train Large Language Models

- Neural Networks Learn Statistics of Increasing Complexity

- Pre-Training from Human Preferences

- Pre-Training towards the basin of attraction for alignment

- Alignment techniques

- AlignEZ: Using the self-generated preference data, we identify the subspaces that: (1) facilitate and (2) are harmful to alignment. During inference, we surgically modify the LM embedding using these identified subspaces. Jacques note: could we apply this iteratively throughout training (and other similar methods)?

- What do we mean by "alignment"? What makes the model safe?

- Values

- On making the model "care"

↑ comment by myyycroft · 2024-09-06T10:47:08.263Z · LW(p) · GW(p)

GPT-2 1.5B is small by today's standards. I hypothesize people are not sure if findings made for models of this scale will generalize to frontier models (or at least to the level of LLaMa-3.1-70B), and that's why nobody is working on it.

However, I was impressed by "Pre-Training from Human Preferences". I suppose that pretraining could be improved, and it would be a massive deal for alignment.

↑ comment by eggsyntax · 2024-07-20T11:12:49.673Z · LW(p) · GW(p)

how to guide the pretraining process in a way that benefits alignment

One key question here, I think: a major historical alignment concern has been that for any given finite set of outputs, there are an unbounded number of functions that could produce it, and so it's hard to be sure that a model will generalize in a desirable way. Nora Belrose goes so far as to suggest that 'Alignment worries are quite literally a special case of worries about generalization.' This is relevant for post-training but I think even more so for pre-training.

I know that there's been research into how neural networks generalize both from the AIS community and the larger ML community, but I'm not very familiar with it; hopefully someone else can provide some good references here.

comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T19:14:21.708Z · LW(p) · GW(p)

If you work at a social media website or YouTube (or know anyone who does), please read the text below:

Community Notes is one of the best features to come out on social media apps in a long time. The code is even open source. Why haven't other social media websites picked it up yet? If they care about truth, this would be a considerable step forward. Messages like “this video is funded by x nation” or “this video talks about health info; go here to learn more” are simply not good enough.

If you work at companies like YouTube or know someone who does, let's figure out who we need to talk to to make it happen. Naïvely, you could spend a weekend DMing a bunch of employees (PMs, engineers) at various social media websites in order to persuade them that this is worth their time and probably the biggest impact they could have in their entire career.

If you have any connections, let me know. We can also set up a doc of messages to send in order to come up with a persuasive DM.

Replies from: jacques-thibodeau, Viliam, jacques-thibodeau, ChristianKl, jacques-thibodeau, bruce-lewis↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T19:52:25.042Z · LW(p) · GW(p)

Don't forget that we train language models on the internet! The more truthful your dataset is, the more truthful the models will be! Let's revamp the internet for truthfulness, and we'll subsequently improve truthfulness in our AI systems!!

↑ comment by Viliam · 2023-11-15T08:48:30.061Z · LW(p) · GW(p)

I don't use Xitter; is there a way to display e.g. top 100 tweets with community notes? To see how it works in practice.

Replies from: Yoav Ravid, jacques-thibodeau↑ comment by Yoav Ravid · 2023-11-15T16:35:05.833Z · LW(p) · GW(p)

I don't know of something that does so at random, but this page automatically shares posts with community notes that have been deemed helpful.

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-15T16:37:20.174Z · LW(p) · GW(p)

Oh, that’s great, thanks! Also reminded me of (the less official, more comedy-based) “Community Notes Violating People”. @Viliam [LW · GW]

Replies from: Viliam↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-15T13:16:28.093Z · LW(p) · GW(p)

I don’t think so, unfortunately.

Replies from: Viliam↑ comment by Viliam · 2023-11-15T16:05:31.018Z · LW(p) · GW(p)

Found a nice example (linked from Zvi's article [LW · GW]).

Okay, it's just one example and it wasn't found randomly, but I am impressed.

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-15T04:09:15.811Z · LW(p) · GW(p)

I've also started working on a repo in order to make Community Notes more efficient by using LLMs.

↑ comment by ChristianKl · 2023-11-14T20:05:07.105Z · LW(p) · GW(p)

Why haven't other social media websites picked it up yet? If they care about truth, this would be a considerable step forward beyond.

This sounds a bit naive.

There's a lot of energy invested in making it easier for powerful elites to push their preferred narratives. Community Notes are not in the interests of the Censorship Industrial Complex.

I don't think that anyone at the project manager level has the political power to add a feature like Community Notes. It would likely need to be someone higher up in the food chain.

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T20:11:45.389Z · LW(p) · GW(p)

Sure, but sometimes it's just a PM and a couple of other people that lead to a feature being implemented. Also, keep in mind that Community Notes was a thing before Musk. Why was Twitter different than other social media websites?

Also, the Community Notes code was apparently completely revamped by a few people working on the open-source code, which got it to a point where it was easy to implement, and everyone liked the feature because it noticeably worked.

Either way, I'd rather push for making it happen and somehow it fails on other websites than having pessimism and not trying at all. If it needs someone higher up the chain, let's make it happen.

Replies from: ChristianKl↑ comment by ChristianKl · 2023-11-14T20:43:46.658Z · LW(p) · GW(p)

Sure, but sometimes it's just a PM and a couple of other people that lead to a feature being implemented. Also, keep in mind that Community Notes was a thing before Musk. Why was Twitter different than other social media websites?

Twitter seems to have started Birdwatch as a small separate pilot project where it likely wasn't easy to fight or on anyone's radar to fight.

In the current environment, where X gets seen as evil by a lot of the mainstream media, I would suspect that copying Community Notes from X would alone produce some resistance. The antibodies are now there in a way they weren't two years ago.

Also, the Community Notes code was apparently completely revamped by a few people working on the open-source code, which got it to a point where it was easy to implement, and everyone liked the feature because it noticeably worked.

If you look at mainstream media views about X's community notes, I don't think everyone likes it.

I remember Elon once saying that he lost an 8-figure advertising deal because of Community Notes on posts of a company that wanted to advertise on X.

Either way, I'd rather push for making it happen and somehow it fails on other websites than having pessimism and not trying at all. If it needs someone higher up the chain, let's make it happen.

I think you would likely need to make a case that it's good business in addition to helping with truth.

If you want to make your argument via truth, motivating some reporters to write favorable articles about Community Notes might be necessary.

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T20:49:20.543Z · LW(p) · GW(p)

Good points; I'll keep them all in mind. If money is the roadblock, we can put pressure on the companies to do this. Or, worst-case, maybe the government can enforce it (though that should be done with absolute care).

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T19:45:18.905Z · LW(p) · GW(p)

I shared a tweet about it here: https://x.com/JacquesThibs/status/1724492016254341208?s=20

Consider liking and retweeting it if you think this is impactful. I'd like it to get into the hands of the right people.

↑ comment by Bruce Lewis (bruce-lewis) · 2023-11-14T19:31:05.897Z · LW(p) · GW(p)

I had not heard of Community Notes. Interesting anti-bias technique "notes require agreement between contributors who have sometimes disagreed in their past ratings". https://communitynotes.twitter.com/guide/en/about/introduction
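To make the quoted rule concrete, here is a toy sketch of the idea that a note only counts when people who have disagreed before both find it helpful. This is not the open-source Community Notes scorer, which is considerably more involved; the rater names, the `past_ratings` structure, and both function names are made up for illustration.

```python
from itertools import combinations

def have_disagreed(past_ratings, a, b):
    """True if raters a and b gave different ratings to at least one note both rated."""
    shared = past_ratings[a].keys() & past_ratings[b].keys()
    return any(past_ratings[a][note] != past_ratings[b][note] for note in shared)

def is_bridged(helpful_raters, past_ratings):
    """A note is 'bridged' if some pair of raters who found it helpful disagreed in the past."""
    pairs = combinations(sorted(helpful_raters), 2)
    return any(have_disagreed(past_ratings, a, b) for a, b in pairs)

# Hypothetical rating history: True means "helpful", False means "not helpful".
past_ratings = {
    "alice": {"note1": True, "note2": False},
    "bob": {"note1": True, "note2": False},
    "carol": {"note1": False, "note2": True},
}

# alice and bob have always agreed, so their joint approval carries no bridging signal.
same_camp = is_bridged({"alice", "bob"}, past_ratings)
# alice and carol have disagreed before, so their joint approval does.
cross_camp = is_bridged({"alice", "carol"}, past_ratings)
```

The point of the design is that agreement among like-minded raters is cheap, while agreement across raters with a history of disagreement is harder to game.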

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-14T19:34:43.538Z · LW(p) · GW(p)

I've been on Twitter for a long time, and there's pretty much unanimous agreement that it works amazingly well in practice!

Replies from: kabir-kumar-1↑ comment by Kabir Kumar (kabir-kumar-1) · 2023-11-14T19:37:14.776Z · LW(p) · GW(p)

there is an issue with surface level insights being unfairly weighted, but this is solvable, imo. especially with youtube, which can see which commenters have watched the full video.

comment by jacquesthibs (jacques-thibodeau) · 2024-11-25T22:46:11.994Z · LW(p) · GW(p)

I have some alignment project ideas for things I'd consider mentoring for. I would love feedback on the ideas. If you are interested in collaborating on any of them, that's cool, too.

Here are the titles:

- Smart AI vs swarm of dumb AIs

- Lit review of chain of thought faithfulness (steganography in AIs)

- Replicating METR paper but for alignment research task

- Tool-use AI for alignment research

- Sakana AI for Unlearning

- Automated alignment onboarding

- Build the infrastructure for making Sakana AI's AI scientist better for alignment research

comment by jacquesthibs (jacques-thibodeau) · 2024-10-02T16:04:07.560Z · LW(p) · GW(p)

I quickly wrote up some rough project ideas for ARENA and LASR participants, so I figured I'd share them here as well. I am happy to discuss these ideas and potentially collaborate on some of them.

Alignment Project Ideas (Oct 2, 2024)

1. Improving "A Multimodal Automated Interpretability Agent" (MAIA)

Overview

MAIA (Multimodal Automated Interpretability Agent) is a system designed to help users understand AI models by combining human-like experimentation flexibility with automated scalability. It answers user queries about AI system components by iteratively generating hypotheses, designing and running experiments, observing outcomes, and updating hypotheses.

MAIA uses a vision-language model (GPT-4V, at the time) backbone equipped with an API of interpretability experiment tools. This modular system can address both "macroscopic" questions (e.g., identifying systematic biases in model predictions) and "microscopic" questions (e.g., describing individual features) with simple query modifications.

This project aims to improve MAIA's ability to either answer macroscopic questions or microscopic questions on vision models.

2. Making "A Multimodal Automated Interpretability Agent" (MAIA) work with LLMs

MAIA is focused on vision models, so this project aims to create a MAIA-like setup, but for the interpretability of LLMs.

Given that this would require creating a new setup for language models, it would make sense to come up with simple interpretability benchmark examples to test MAIA-LLM. The easiest way to do this would be to either look for existing LLM interpretability benchmarks or create one based on interpretability results we've already verified (would be ideal to have a ground truth). Ideally, the examples in the benchmark would be simple, but new enough that the LLM has not seen them in its training data.

3. Testing the robustness of Critique-out-Loud Reward (CLoud) Models

Critique-out-Loud reward models are reward models that can reason explicitly about the quality of an input through producing Chain-of-Thought like critiques of an input before predicting a reward. In classic reward model training, the reward model is trained as a reward head initialized on top of the base LLM. Without LM capabilities, classic reward models act as encoders and must predict rewards within a single forward pass through the model, meaning reasoning must happen implicitly. In contrast, CLoud reward models are trained to both produce explicit reasoning about quality and to score based on these critique reasoning traces. CLoud reward models lead to large gains for pairwise preference modeling on RewardBench, and also lead to large gains in win rate when used as the scoring model in Best-of-N sampling on ArenaHard.
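As a rough illustration of the critique-then-score flow and its use in Best-of-N sampling, here is a minimal sketch. The generator, critic, and scorer are toy stand-ins invented for this example (a real setup would call a policy model and a CLoud reward model), so only the control flow should be taken seriously.

```python
def best_of_n(prompt, generate, critique, score, n=4):
    """Sample n responses, critique each one, score using the critique, return the best."""
    scored = []
    for i in range(n):
        response = generate(prompt, i)
        critique_text = critique(prompt, response)  # explicit reasoning comes first
        reward = score(prompt, response, critique_text)  # reward is conditioned on the critique
        scored.append((reward, response))
    return max(scored, key=lambda pair: pair[0])[1]

# Toy stand-ins (hypothetical): a fixed pool of drafts and rule-based critiques.
def toy_generate(prompt, i):
    drafts = ["4", "2 + 2 = 4", "2 + 2 = 5", "I am not sure"]
    return drafts[i % len(drafts)]

def toy_critique(prompt, response):
    notes = []
    if "=" not in response:
        notes.append("no working shown")
    if not response.strip().endswith("4"):
        notes.append("wrong final answer")
    return "; ".join(notes) or "no issues found"

def toy_score(prompt, response, critique_text):
    if critique_text == "no issues found":
        return 0.0
    # One point off per issue listed in the critique.
    return -float(critique_text.count(";") + 1)

best = best_of_n("What is 2 + 2?", toy_generate, toy_critique, toy_score, n=4)
```

A robustness test in this framing would swap `toy_generate` for a policy that is optimized against the scorer and check whether the critique step makes the reward harder to exploit.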

The goal for this project would be to test the robustness of CLoud reward models. For example, are the CLoud RMs (discriminators) more robust to jailbreaking attacks from the policy (generator)? Do the CLoud RMs generalize better?

From an alignment perspective, we would want RMs that generalize further out-of-distribution (and ideally, always more than the generator we are training).

4. Synthetic Data for Behavioural Interventions

The paper Simple synthetic data reduces sycophancy in large language models (Google) reduced sycophancy in LLMs with a fairly small number of synthetic data examples. This project would involve testing this technique for other behavioural interventions and (potentially) studying the scaling laws. Consider looking at the examples from the Model-Written Evaluations paper by Anthropic to find some behaviours to test.

5. Regularization Techniques for Enhancing Interpretability and Editability

Explore the effectiveness of different regularization techniques (e.g. L1 regularization, weight pruning, activation sparsity) in improving the interpretability and/or editability of language models, and assess their impact on model performance and alignment. We expect we could apply automated interpretability methods (e.g. MAIA) to this project to test how well the different regularization techniques impact the model.

In some sense, this research is similar to the work Anthropic did with SoLU activation functions. Unfortunately, they needed to add layer norms to make the SoLU models competitive, which seems to have hidden away the superposition in other parts of the network, making SoLU unhelpful in making the models more interpretable.

That said, we hope to find that we can increase our ability to interpret these models through regularization techniques. A technique like L1 regularization should help because it encourages the model to learn sparse representations by penalizing non-zero weights or activations. Sparse models tend to be more interpretable as they rely on a smaller set of important features.
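For intuition on why an L1 penalty produces sparsity, here is a small sketch using soft-thresholding, which is one standard way to apply an L1 penalty in a single update step. It is only an illustration on a hand-picked weight vector; the weights, the threshold, and the function names are assumptions, and the project itself might apply the penalty differently (e.g. as a term added to the fine-tuning loss).

```python
def l1_penalty(weights, lam):
    """L1 regularization term: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)

def soft_threshold(w, threshold):
    """Shrink w toward zero by `threshold`; anything smaller in magnitude becomes exactly 0."""
    if w > threshold:
        return w - threshold
    if w < -threshold:
        return w + threshold
    return 0.0

# Hypothetical weights: two large ones that matter and two small ones that do not.
weights = [0.9, -0.05, 0.02, -0.6]
shrunk = [soft_threshold(w, 0.1) for w in weights]

num_zero = sum(1 for w in shrunk if w == 0.0)
penalty_before = l1_penalty(weights, lam=0.1)
penalty_after = l1_penalty(shrunk, lam=0.1)
```

The small weights are set to exactly zero while the large ones are only shrunk, which is the behaviour that makes the surviving features easier to inspect.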

Methodology:

- Identify a set of regularization techniques (e.g., L1 regularization, weight pruning, activation sparsity) to be applied during fine-tuning.

- Fine-tune pre-trained language models with different regularization techniques and hyperparameters.

- Evaluate the fine-tuned models using interpretability tools (e.g., attention visualization, probing classifiers) and editability benchmarks (e.g., ROME).

- Analyze the impact of regularization on model interpretability, editability, and performance.

- Investigate the relationship between interpretability, editability, and model alignment.

Expected Outcomes:

- Quantitative assessment of the effectiveness of different regularization techniques for improving interpretability and editability.

- Insights into the trade-offs between interpretability, editability, and model performance.

- Recommendations for regularization techniques that enhance interpretability and editability while maintaining model performance and alignment.

6. Quantifying the Impact of Reward Misspecification on Language Model Behavior

Investigate how misspecified reward functions influence the behavior of language models during fine-tuning and measure the extent to which the model's outputs are steered by the reward labels, even when they contradict the input context. We hope to better understand language model training dynamics. Additionally, we expect online learning to complicate things in the future, where models will be able to generate the data they may eventually be trained on. We hope that insights from this work can help us prevent catastrophic feedback loops in the future. For example, if model behavior is mostly impacted by training data, we may prefer to shape model behavior through synthetic data (it has been shown we can reduce sycophancy by doing this).

Prior works:

- The Effects of Reward Misspecification: Mapping and Mitigating Misaligned Models by Alexander Pan, Kush Bhatia, Jacob Steinhardt

- Survival Instinct in Offline Reinforcement Learning by Anqi Li, Dipendra Misra, Andrey Kolobov, Ching-An Cheng

- Simple synthetic data reduces sycophancy in large language models by Jerry Wei, Da Huang, Yifeng Lu, Denny Zhou, Quoc V. Le (Google)

- Scaling Laws for Reward Model Overoptimization by Leo Gao, John Schulman, Jacob Hilton (OpenAI)

- On the Sensitivity of Reward Inference to Misspecified Human Models by Joey Hong, Kush Bhatia, Anca Dragan

Methodology:

- Create a diverse dataset of text passages with candidate responses and manually label them with coherence and misspecified rewards.

- Fine-tune pre-trained language models using different reward weighting schemes and hyperparameters.

- Evaluate the generated responses using automated metrics and human judgments for coherence and misspecification alignment.

- Analyze the influence of misspecified rewards on model behavior and the trade-offs between coherence and misspecification alignment.

- Use interpretability techniques to understand how misspecified rewards affect the model's internal representations and decision-making process.

Expected Outcomes:

- Quantitative measurements of the impact of reward misspecification on language model behavior.

- Insights into the trade-offs between coherence and misspecification alignment.

- Interpretability analysis revealing the effects of misspecified rewards on the model's internal representations.

7. Investigating Wrong Reasoning for Correct Answers

Understand the underlying mechanisms that lead to language models producing correct answers through flawed reasoning, and develop techniques to detect and mitigate such behavior. Essentially, we want to apply interpretability techniques to help us identify which sets of activations or token-layer pairs impact the model getting the correct answer when it has the correct reasoning versus when it has the incorrect reasoning. The hope is to uncover systematic differences as to when it is not relying on its chain-of-thought at all and when it does leverage its chain-of-thought to get the correct answer.

[EDIT Oct 2nd, 2024] This project intends to follow a similar line of reasoning as described in this post [LW · GW] and this comment [LW(p) · GW(p)]. The goal is to study chains-of-thought and improve faithfulness without suffering an alignment tax so that we can have highly interpretable systems through their token outputs and prevent loss of control. The project doesn't necessarily need to rely only on model internals.

Related work:

- Decomposing Predictions by Modeling Model Computation by Harshay Shah, Andrew Ilyas, Aleksander Madry

- Does Localization Inform Editing? Surprising Differences in Causality-Based Localization vs. Knowledge Editing in Language Models by Peter Hase, Mohit Bansal, Been Kim, Asma Ghandeharioun

- On Measuring Faithfulness or Self-consistency of Natural Language Explanations by Letitia Parcalabescu, Anette Frank

- Language Models Don't Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting by Miles Turpin, Julian Michael, Ethan Perez, Samuel R. Bowman

- Measuring Faithfulness in Chain-of-Thought Reasoning by Tamera Lanham et al.

Methodology:

- Curate a dataset of questions and answers where language models are known to provide correct answers but with flawed reasoning.

- Use interpretability tools (e.g., attention visualization, probing classifiers) to analyze the model's internal representations and decision-making process for these examples.

- Develop metrics and techniques to detect instances of correct answers with flawed reasoning.

- Investigate the relationship between model size, training data, and the prevalence of flawed reasoning.

- Propose and evaluate mitigation strategies, such as data augmentation or targeted fine-tuning, to reduce the occurrence of flawed reasoning.

Expected Outcomes:

- Insights into the underlying mechanisms that lead to correct answers with flawed reasoning in language models.

- Metrics and techniques for detecting instances of flawed reasoning.

- Empirical analysis of the factors contributing to flawed reasoning, such as model size and training data.

- Proposed mitigation strategies to reduce the occurrence of flawed reasoning and improve model alignment.

comment by jacquesthibs (jacques-thibodeau) · 2023-11-20T01:32:39.745Z · LW(p) · GW(p)

My current speculation as to what is happening at OpenAI

How do we know this wasn't their best opportunity to strike if Sam was indeed not being totally honest with the board?

Let's say the rumours are true, that Sam is building out external orgs (NVIDIA competitor and iPhone-like competitor) to escape the power of the board and potentially going against the charter. Would this 'conflict of interest' be enough? If you take that story forward, it sounds more and more like he was setting up AGI to be run by external companies, using OpenAI as a fundraising bargaining chip, and having a significant financial interest in plugging AGI into those outside orgs.

So, if we think about this strategically, how long should they wait as board members who are trying to uphold the charter?

On top of this, it seems (according to Sam) that OpenAI has made a significant transformer-level breakthrough recently, which implies a significant capability jump. Long-term reasoning? Basically, anything short of 'coming up with novel insights in physics' is on the table, given that Sam recently used that line as the line we need to cross to get to AGI.

So, it could be a mix of, Ilya thinking they have achieved AGI while Sam places a higher bar (internal communication disagreements) + the board not being alerted (maybe more than once) about what Sam is doing, e.g. fundraising for both OpenAI and the orgs he wants to connect AGI to + new board members who are more willing to let Sam and GDB do what they want being added soon (another rumour I've heard) + ???. Basically, perhaps they saw this as their final opportunity to have any veto on actions like this.

Here's what I currently believe:

- There is a GPT-5-like model that already exists. It could be GPT-4.5 or something else, but another significant capability jump. Potentially even a system that can coherently pursue goals for months, capable of continual learning, and effectively able to automate like 10% of the workforce (if they wanted to).

- As of 5 PM, Sunday PT, the board is in a terrible position where they either stay on board and the company employees all move to a new company, or they leave the board and bring Sam back. If they leave, they need to say that Sam did nothing wrong and sweep everything under the rug (and then potentially face legal action for saying he did something wrong); otherwise, Sam won't come back.

- Sam is building companies externally; it is unclear if this goes against the charter. But he does now have a significant financial incentive to speed up AI development. Adam D'Angelo said that he would like to prevent OpenAI from becoming a big tech company as part of his time on the board because AGI was too important for humanity. They might have considered Sam's action going in this direction.

- A few people left the board in the past year. It's possible that Sam and GDB planned to add new people (possibly even change current board members) to the board to dilute the voting power a bit or at least refill board seats. This meant that the current board had limited time until their voting power would become less important. They might have felt rushed.

- The board is either not speaking publicly because 1) they can't share information about GPT-5, 2) there is some legal reason that I don't understand (more likely), or 3) they are incompetent (least likely by far IMO).

- We will possibly never find out what happened, or it will become clearer by the month as new things come out (companies and models). However, it seems possible the board will never say or admit anything publicly at this point.

- Lastly, we still don't know why the board decided to fire Sam. It could be any of the reasons above, a mix or something we just don't know about.

Other possible things:

- Ilya was mad that they wouldn't actually get enough compute for Superalignment as promised due to GPTs and other products using up all the GPUs.

- Ilya is frustrated that Sam is focused on things like GPTs rather than the ultimate goal of AGI.

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-22T23:41:11.779Z · LW(p) · GW(p)

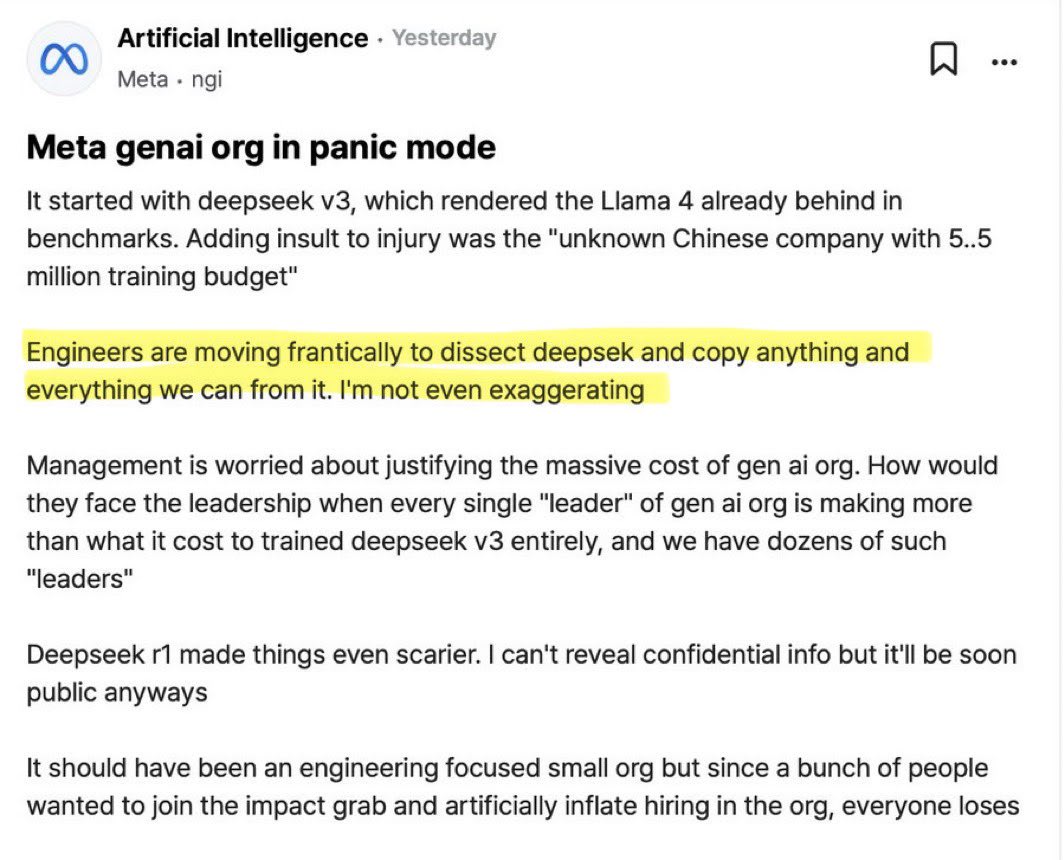

Obviously, a lot has happened since the above shortform, but regarding model capabilities (which discussions died down these last couple of days), there's now this:

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-23T00:11:25.019Z · LW(p) · GW(p)

So, apparently, there are two models, but only Q* is mentioned in the article. Won't share the source, but:

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-20T04:33:10.799Z · LW(p) · GW(p)

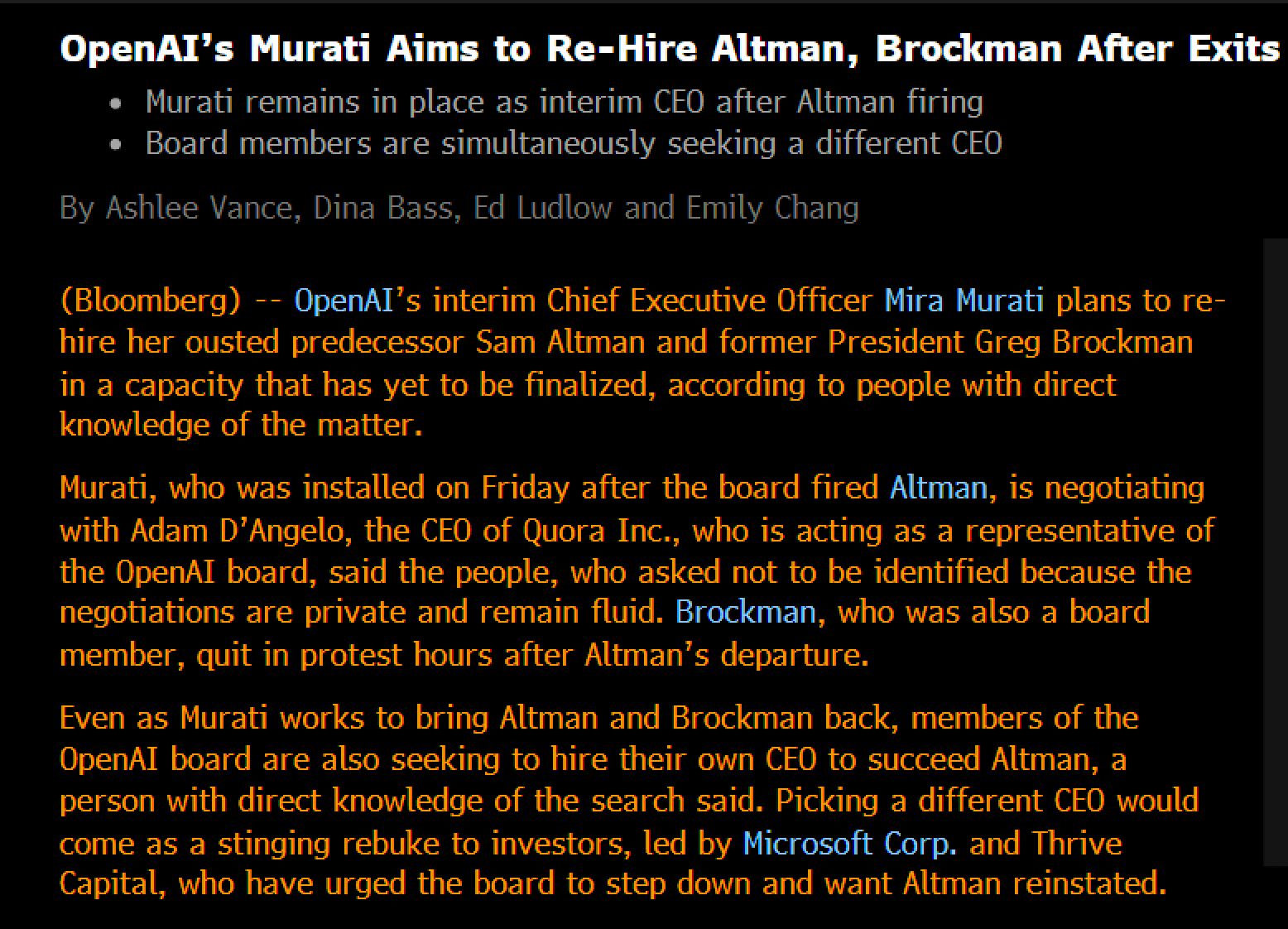

Update, board members seem to be holding their ground more than expected in this tight situation:

comment by jacquesthibs (jacques-thibodeau) · 2024-09-03T11:08:31.891Z · LW(p) · GW(p)

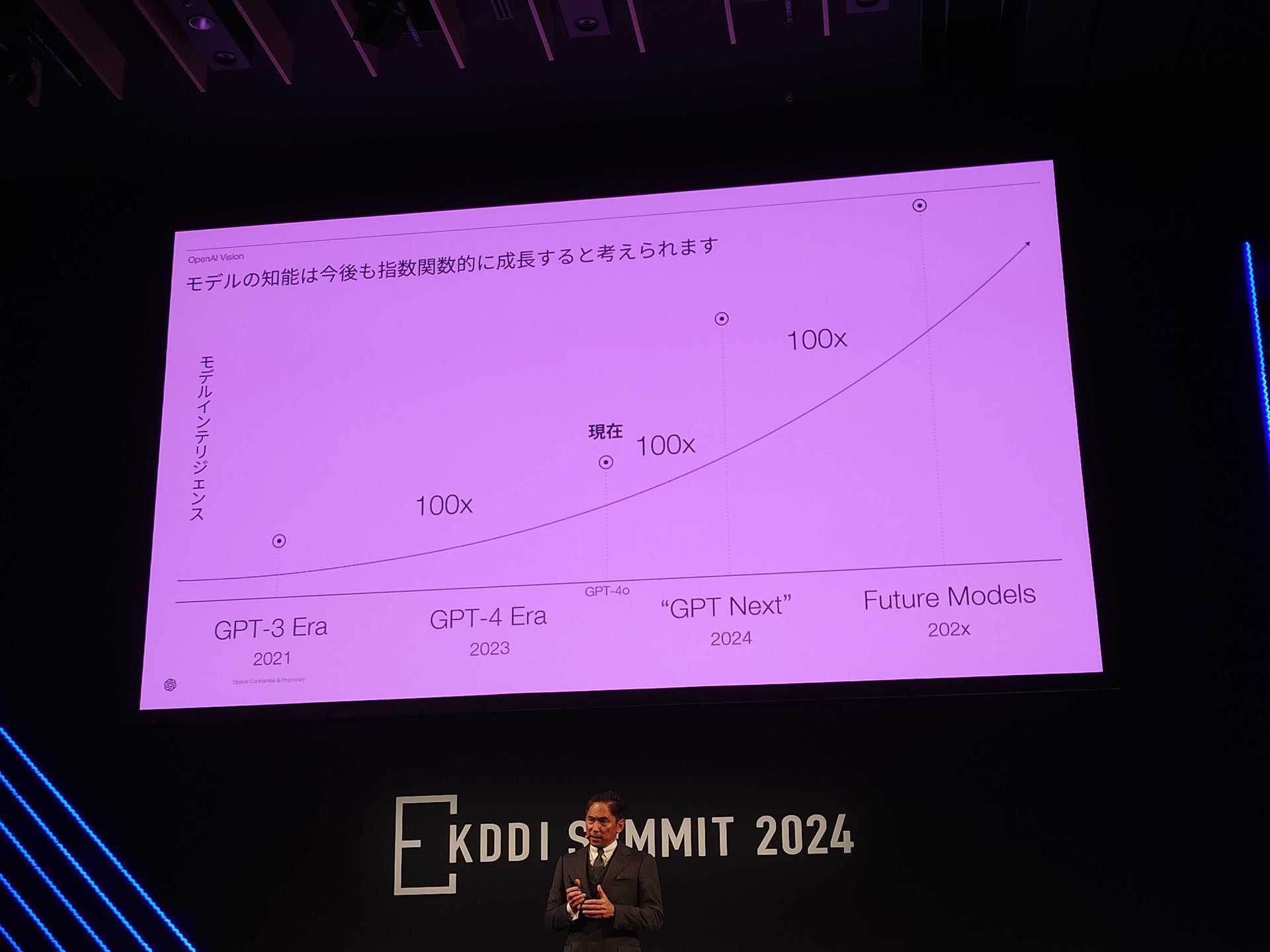

News on the next OAI GPT release:

Nagasaki, CEO of OpenAI Japan, said, "The AI model called 'GPT Next' that will be released in the future will evolve nearly 100 times based on past performance. Unlike traditional software, AI technology grows exponentially."

https://www.itmedia.co.jp/aiplus/articles/2409/03/news165.html

The slide clearly states 2024 "GPT Next". This 100 times increase probably does not refer to the scaling of computing resources, but rather to the effective computational volume + 2 OOMs, including improvements to the architecture and learning efficiency. GPT-4 NEXT, which will be released this year, is expected to be trained using a miniature version of Strawberry with roughly the same computational resources as GPT-4, with an effective computational load 100 times greater. Orion, which has been in the spotlight recently, was trained for several months on the equivalent of 100k H100 compared to GPT-4 (EDIT: original tweet said 10k H100s, but that was a mistake), adding 10 times the computational resource scale, making it +3 OOMs, and is expected to be released sometime next year.

Note: Another OAI employee seemingly confirms this (I've followed them for a while, and they are working on inference).

Replies from: Vladimir_Nesov, luigipagani↑ comment by Vladimir_Nesov · 2024-09-03T14:26:18.002Z · LW(p) · GW(p)

Orion, which has been in the spotlight recently, was trained for several months on the equivalent of 10k H100 compared to GPT-4, adding 10 times the computational resource scale

This implies successful use of FP8, if taken literally in a straightforward way. In BF16 an H100 gives 1e15 FLOP/s (in dense tensor compute). With 40% utilization over 10 months, 10K H100s give 1e26 FLOPs, which is only 5 times higher than the rumored 2e25 FLOPs of original GPT-4. To get to 10 times higher requires some 2x improvement, and the evident way to get that is by transitioning from BF16 to FP8. I think use of FP8 for training hasn't been confirmed to be feasible at GPT-4 level scale (Llama-3-405B uses BF16), but if it does work, that's a 2x compute increase for other models as well.
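The arithmetic above can be checked with a few lines of code. This is a back-of-the-envelope sketch using the figures stated in the comment (1e15 FLOP/s per H100 in BF16, 40% utilization, 10 months, 10K GPUs, a rumored 2e25 FLOPs for GPT-4); the 30.4-day month and the function name are assumptions made for the example.

```python
SECONDS_PER_MONTH = 30.4 * 24 * 3600  # assumed average month length, about 2.63e6 s

def training_flops(num_gpus, months, peak_flops_per_gpu=1e15, utilization=0.4):
    """Total compute = GPUs x peak FLOP/s per GPU x utilization x wall-clock seconds."""
    return num_gpus * peak_flops_per_gpu * utilization * months * SECONDS_PER_MONTH

orion_bf16 = training_flops(num_gpus=10_000, months=10)  # roughly 1e26 FLOPs
gpt4_rumored = 2e25

ratio_bf16 = orion_bf16 / gpt4_rumored  # roughly 5x
ratio_fp8 = 2 * ratio_bf16  # roughly 10x, assuming FP8 doubles dense throughput over BF16

print(f"BF16: {orion_bf16:.2e} FLOPs, {ratio_bf16:.1f}x GPT-4; FP8: {ratio_fp8:.1f}x")
```

Under these assumptions the 10x figure is only reached with the FP8 doubling, which is the point being made.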

This text about Orion and 10K H100s only appears in the bioshok3 tweet itself, not in the quoted news article, so it's unclear where the details come from. The "10 times the computational resource scale, making it +3 OOMs" hype within the same sentence also hurts credence in the numbers being accurate (10 times, 10K H100s, several months).

Another implication is that Orion is not the 100K H100s training run (that's probably currently ongoing). Plausibly it's an experiment with training on a significant amount of synthetic data [LW · GW]. This suggests that the first 100K H100s training run won't be experimenting with too much synthetic training data yet, at least in pre-training. The end of 2025 point for significant advancement in quality might then be referring to the possibility that Orion succeeds and its recipe is used in another 100K H100s scale run, which might be the first hypothetical model they intend to call "GPT-5". The first 100K H100s run by itself (released in ~early 2025) would then be called "GPT-4.5o" or something (especially if Orion does succeed, so that "GPT-5" remains on track).

Replies from: abandon, ryan_greenblatt↑ comment by dirk (abandon) · 2024-09-03T18:55:53.950Z · LW(p) · GW(p)

Bioshok3 said in a later tweet that they were in any case mistaken about it being 10k H100s and it was actually 100k H100s: https://x.com/bioshok3/status/1831016098462081256

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-09-03T19:20:36.756Z · LW(p) · GW(p)

Surprisingly, there appears to be an additional clue for this in the wording: 2e26 BF16 FLOPs take 2.5 months on 100K H100s at 30% utilization, while the duration of "several months" is indicated by the text "数ヶ月" in the original tweet. GPT-4o explains it to mean

The Japanese term "数ヶ月" (すうかげつ, sūka getsu) translates to "several months" in English. It is an approximate term, generally referring to a period of 2 to 3 months but can sometimes extend to 4 or 5 months, depending on context. Essentially, it indicates a span of a few months without specifying an exact number.

So the interpretation that fits most is specifically 2-3 months (Claude says 2-4 months, Grok 3-4 months), close to what the calculation for 100K H100s predicts. And this is quite unlike the requisite 10 months with 10K H100s in FP8.
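The two durations being compared can be reproduced by solving for wall-clock time instead of total compute. This sketch assumes 1e15 FLOP/s per H100 in BF16, twice that in FP8, 40% utilization for the 10K case (the figure used earlier in the thread), and a 30.4-day month; the function name is made up.

```python
SECONDS_PER_MONTH = 30.4 * 24 * 3600  # assumed average month length

def months_needed(total_flops, num_gpus, utilization, peak_flops_per_gpu=1e15):
    """Wall-clock months to reach total_flops on a cluster at the given utilization."""
    cluster_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / cluster_flops_per_second / SECONDS_PER_MONTH

# 100K H100s in BF16 at 30% utilization: about 2.5 months.
months_100k_bf16 = months_needed(2e26, num_gpus=100_000, utilization=0.30)

# 10K H100s in FP8 (assumed 2e15 FLOP/s) at 40% utilization: close to 10 months.
months_10k_fp8 = months_needed(2e26, num_gpus=10_000, utilization=0.40,
                               peak_flops_per_gpu=2e15)

print(f"100K BF16: {months_100k_bf16:.1f} months; 10K FP8: {months_10k_fp8:.1f} months")
```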

↑ comment by ryan_greenblatt · 2024-09-03T16:08:33.323Z · LW(p) · GW(p)

This text about Orion and 10K H100s only appears in the bioshok3 tweet itself, not in the quoted news article, so it's unclear where the details come from.

My guess is that this is just false / hallucinated.

Replies from: ryan_greenblatt, ryan_greenblatt↑ comment by ryan_greenblatt · 2024-09-03T16:39:40.440Z · LW(p) · GW(p)

"Orion is 10x compute" seems plausible, "Orion was trained on only 10K H100s" does not seem plausible if it is actually supposed to be 10x raw compute. Around 50K H100s does seem plausible and would correspond to about 10x compute assuming a training duration similar to GPT-4.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-09-03T17:45:07.727Z · LW(p) · GW(p)

Within this hypothetical, Orion didn't necessarily merit the use of the largest training cluster, while time on 10K H100s is something mere money can buy without impacting other plans. GPT-4o is itself plausibly at 1e26 FLOPs level already, since H100s were around for more than a year before it came out (1e26 FLOPs is 5 months on 20K H100s). It might be significantly overtrained, or its early fusion multimodal nature might balloon the cost of effective intelligence. Gemini 1.0 Ultra, presumably also an early fusion model with rumored 1e26 FLOPs, similarly wasn't much better than Mar 2023 GPT-4. Though Gemini 1.0 is plausibly dense, given how the Gemini 1.5 report stressed that 1.5 is MoE, so that might be a factor in how 1e26 FLOPs didn't get it too much of an advantage.

So if GPT-4o is not far behind in terms of FLOPs, a 2e26 FLOPs Orion wouldn't be a significant improvement unless the synthetic data aspect works very well, and so there would be no particular reason to rush it. On the other hand, GPT-4o looks like something that needed to be done as fast as possible, and so the largest training cluster went to it and not Orion. The scaling timelines are dictated by the building of the largest training clusters, not by decisions about the use of smaller training clusters.
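The compute figures in this sub-thread can be sanity-checked with a back-of-the-envelope calculation. The sketch below is only illustrative: the per-GPU peak throughput (about 989 TFLOP/s dense BF16, doubled for FP8), the 40% utilization, and the 30-day month are assumptions that are not stated in the thread, and the function names are made up for this example.

```python
# Rough training-compute arithmetic:
#   total FLOPs = GPUs * peak FLOP/s per GPU * utilization * seconds
# All constants below are assumptions for illustration, not figures from the thread.

H100_BF16_PEAK = 989e12               # assumed dense BF16 peak, FLOP/s per GPU
H100_FP8_PEAK = 2 * H100_BF16_PEAK    # assumed FP8 peak (twice BF16)
UTILIZATION = 0.40                    # assumed fraction of peak actually achieved
SECONDS_PER_MONTH = 30 * 24 * 3600    # 30-day month


def training_flops(num_gpus, months, peak=H100_BF16_PEAK, utilization=UTILIZATION):
    """Total FLOPs delivered by a cluster over a training run."""
    return num_gpus * peak * utilization * months * SECONDS_PER_MONTH


def months_needed(target_flops, num_gpus, peak=H100_BF16_PEAK, utilization=UTILIZATION):
    """Training duration (in months) needed to reach a target FLOP budget."""
    return target_flops / (num_gpus * peak * utilization * SECONDS_PER_MONTH)


if __name__ == "__main__":
    # 20K H100s for 5 months in BF16
    print(f"20K H100s, 5 months, BF16: {training_flops(20_000, 5):.2e} FLOPs")
    # 10K H100s for 10 months in FP8
    print(f"10K H100s, 10 months, FP8: {training_flops(10_000, 10, H100_FP8_PEAK):.2e} FLOPs")
    # How long 2e26 FLOPs would take on 100K H100s in BF16
    print(f"2e26 FLOPs on 100K H100s:  {months_needed(2e26, 100_000):.1f} months")
```

Under these assumptions, 5 months on 20K H100s comes out at roughly 1e26 FLOPs and 10 months on 10K H100s in FP8 at roughly 2e26 FLOPs, which matches the figures quoted above; a different utilization would shift both numbers proportionally.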

↑ comment by ryan_greenblatt · 2024-09-03T16:35:32.062Z · LW(p) · GW(p)

This tweet also claims 10k H100s while citing the same article that doesn't mention this.

↑ comment by LuigiPagani (luigipagani) · 2024-09-04T08:18:48.112Z · LW(p) · GW(p)

Are you sure he is an OpenAI employee?

comment by jacquesthibs (jacques-thibodeau) · 2024-06-12T14:24:24.363Z · LW(p) · GW(p)

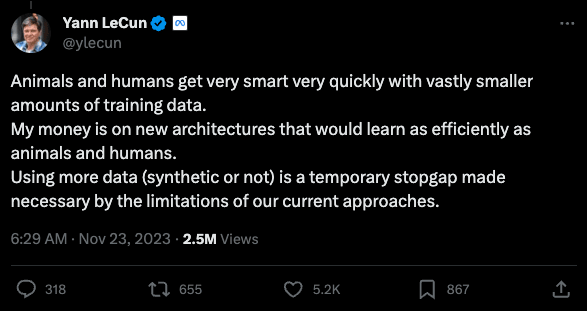

I encourage alignment/safety people to be open-minded about what François Chollet is saying in this podcast:

I think many have blindly bought into 'scale is all you need' and the apparently godlike nature of LLMs, and may be relying on unfounded or confused assumptions because of it.

Getting this right is important because it could significantly impact how hard you think alignment will be. Here [LW(p) · GW(p)]'s @johnswentworth [LW · GW] responding to @Eliezer Yudkowsky [LW · GW] about his difference in optimism compared to @Quintin Pope [LW · GW] (despite believing the natural abstraction hypothesis is true):

Entirely separately, I have concerns about the ability of ML-based technology to robustly point the AI in any builder-intended direction whatsoever, even if there exists some not-too-large adequate mapping from that intended direction onto the AI's internal ontology at training time. My guess is that more of the disagreement lies here.

I doubt much disagreement between you and I lies there, because I do not expect ML-style training to robustly point an AI in any builder-intended direction. My hopes generally don't route through targeting via ML-style training.

I do think my deltas from many other people lie there - e.g. that's why I'm nowhere near as optimistic as Quintin - so that's also where I'd expect much of your disagreement with those other people to lie.

Chollet's key point is that LLMs and current deep learning approaches rely heavily on memorization and pattern matching rather than reasoning and problem-solving abilities. Humans (who are 'intelligent') use a bit of both.

LLMs have failed at ARC for the last 4 years because they are simply not intelligent and basically pattern-match and interpolate to whatever is within their training distribution. You can say, "Well, there's no difference between interpolation and extrapolation once you have a big enough model trained on enough data," but the point remains that LLMs fail at the Abstraction and Reasoning Corpus benchmark precisely because they have never seen such examples.

No matter how 'smart' GPT-4 may be, it fails at simple ARC tasks that a human child can do. The child does not need to be fed thousands of ARC-like examples; it can just generalize and adapt to solve the novel problem.

One thing to ponder here is, "Are the important kinds of tasks we care about, e.g. coming up with novel physics or inventing nanotech robots, reliant on models gaining the key ability to think and act in the way they would need to in order to solve a novel task like ARC?"

At this point, I think some people might say something like, "How can you say that LLMs are not intelligent when they are solving novel software engineering problems or coming up with new poems?"

Well, again, the answer here is that they have so many examples like this in their pre-training and are just capable of pattern-matching their way to something like writing the code for a specification that the human came up with. I think if there were a really in-depth study, people might be surprised by how much they had overestimated the ability of LLMs to generalize to truly novel tasks. And I think it's worth considering whether this is a key bottleneck in current approaches.

Then again, the follow-up to this is likely, "OK, but we'll likely easily solve system 2 reasoning very soon; it doesn't seem like much of a challenge once you scale up the model to a certain level of 'capability.'"

We'll see! I mean, so far, current models have failed to make things like Auto-GPT work. Maybe this just requires more trial-and-error, or maybe this is a big limitation of current systems, and you actually do need some "transformer-level paradigm shift". Here [LW(p) · GW(p)]'s @johnswentworth [LW · GW] again regarding his hope:

There isn't really one specific thing, since we don't yet know what the next ML/AI paradigm will look like, other than that some kind of neural net will probably be involved somehow. (My median expectation [LW(p) · GW(p)] is that we're ~1 transformers-level paradigm shift away from the things which take off.) But as a relatively-legible example of a targeting technique my hopes might route through: Retargeting The Search [LW · GW].

Lastly, you might think, "well it seems pretty obvious that we can just automate many jobs because most jobs will have a static distribution", but 1) you are still limited by the human data needed to provide examples for learning and to add patterns to the model's training distribution, and 2) as Chollet says:

We can automate more and more things. Yes, this is economically valuable. Yes, potentially there are many jobs you could automate away like this. That would be economically valuable. You're still not going to have intelligence.

So you can ask, what does it matter if we can generate all this economic value? Maybe we don't need intelligence after all. You need intelligence the moment you have to deal with change, novelty, and uncertainty.

As long as you're in a space that can be exactly described in advance, you can just rely on pure memorization. In fact, you can always solve any problem. You can always display arbitrary levels of skills on any task without leveraging any intelligence whatsoever, as long as it is possible to describe the problem and its solution very, very precisely.

So, how does Chollet expect us to resolve the issues with deep learning systems?

Let's break it down:

- Deep learning excels at memorization, pattern matching, and intuition—similar to system 1 thinking in humans. It generalizes but only generalizes locally within its training data distribution.

- One way to get good system 2 reasoning out of your system is to use something like discrete program search (Chollet's current favourite approach) because it excels at systematic reasoning, planning, and problem-solving (which is why it can do well on ARC). It can synthesize programs to solve novel problems but suffers from combinatorial explosion and is computationally inefficient.

- Chollet suggests that the path forward involves combining the strengths of deep learning with the approach that gets us a good system 2. This is starting to sound similar to @johnswentworth [LW · GW]'s intuition that future systems will include "some kind of neural net [...] somehow" (I mean, of course). But essentially, the outer structure would be a discrete program search that systematically explores the space of possible programs, aided by deep learning that guides the search in intelligent ways: providing intuitions about likely solution approaches, offering hints when the search gets stuck, pruning unproductive branches, etc.

- Basically, it leverages the vast knowledge and pattern recognition of deep learning (limited by its training data distribution), while gaining the ability to reason and adapt to novel situations via program synthesis. Going back to ARC, you'd expect it could solve such novel problems by decomposing them into familiar subproblems and systematically searching for solution programs.
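To make the "discrete program search guided by a learned intuition" idea concrete, here is a toy sketch. It is not Chollet's method or any real ARC solver: the five-primitive DSL over integer lists, the function names, and the hand-written `guide_score` (standing in for where a neural network's intuition would go) are all hypothetical choices made for illustration.

```python
import heapq
from itertools import count

# Hypothetical toy DSL: every primitive maps a list of ints to a list of ints.
PRIMITIVES = {
    "reverse": lambda xs: xs[::-1],
    "sort": lambda xs: sorted(xs),
    "double": lambda xs: [2 * x for x in xs],
    "increment": lambda xs: [x + 1 for x in xs],
    "drop_first": lambda xs: xs[1:],
}


def run(program, xs):
    """Apply a program (a tuple of primitive names) to an input list."""
    for name in program:
        xs = PRIMITIVES[name](xs)
    return xs


def guide_score(program, examples):
    """Stand-in for a learned guide: lower means more promising.

    Counts length differences and mismatched positions between the
    program's outputs and the target outputs; 0 means every example is solved.
    """
    total = 0
    for inp, target in examples:
        out = run(program, inp)
        total += abs(len(out) - len(target))
        total += sum(a != b for a, b in zip(out, target))
    return total


def guided_search(examples, max_depth=4, max_expansions=10_000):
    """Best-first search over programs, expanding the most promising one first."""
    tie = count()  # tie-breaker so the heap never has to compare programs
    frontier = [(guide_score((), examples), 0, next(tie), ())]
    expansions = 0
    while frontier and expansions < max_expansions:
        score, depth, _, program = heapq.heappop(frontier)
        if score == 0:
            return program  # consistent with every example
        expansions += 1
        if depth == max_depth:
            continue  # do not grow programs past the depth limit
        for name in PRIMITIVES:
            child = program + (name,)
            heapq.heappush(
                frontier,
                (guide_score(child, examples), depth + 1, next(tie), child),
            )
    return None  # nothing found within the search budget


if __name__ == "__main__":
    # Two demonstration pairs, in the spirit of an ARC task's few examples.
    examples = [([3, 1, 2], [6, 4, 2]), ([5, 4], [10, 8])]
    program = guided_search(examples)
    print("found program:", program)
    print("held-out input [1, 9, 4] ->", run(program, [1, 9, 4]))
```

The search itself is exhaustive up to the depth limit, so the guide only changes the order in which candidates are tried. That ordering is exactly what matters once the DSL grows and the combinatorial explosion mentioned above makes blind enumeration infeasible.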

↑ comment by Mitchell_Porter · 2024-06-12T15:09:34.322Z · LW(p) · GW(p)

memorization and pattern matching rather than reasoning and problem-solving abilities

In my opinion, this does not correspond to a principled distinction at the level of computation.

For intelligences that employ consciousness in order to do some of these things, there may be a difference in terms of mechanism. Reasoning and pattern matching sound like they correspond to different kinds of conscious activity.

But if we're just talking about computation... a syllogism can be implemented via pattern matching, a pattern can be completed by a logical process (possibly probabilistic).
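As a small illustration of the first half of that claim, here is a sketch in which a classical syllogism is derived purely by matching tuple patterns against stored facts. The representation (tuples with `?`-prefixed variables) and the function names are hypothetical and chosen only for this example.

```python
def match(pattern, fact, bindings):
    """Match a flat pattern against a ground fact.

    Strings starting with "?" are variables. Returns the extended
    bindings dict on success, or None if the pattern does not fit.
    """
    if len(pattern) != len(fact):
        return None
    bindings = dict(bindings)
    for p, f in zip(pattern, fact):
        if isinstance(p, str) and p.startswith("?"):
            if bindings.setdefault(p, f) != f:
                return None  # variable already bound to something else
        elif p != f:
            return None
    return bindings


def substitute(pattern, bindings):
    """Fill a pattern's variables in from the bindings."""
    return tuple(bindings.get(term, term) for term in pattern)


def forward_chain(facts, rules):
    """Repeatedly apply rules by pattern matching until nothing new is derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            candidates = [{}]
            for premise in premises:
                candidates = [
                    extended
                    for bindings in candidates
                    for fact in facts
                    if (extended := match(premise, fact, bindings)) is not None
                ]
            for bindings in candidates:
                new_fact = substitute(conclusion, bindings)
                if new_fact not in facts:
                    facts.add(new_fact)
                    changed = True
    return facts


if __name__ == "__main__":
    facts = {("is_a", "Socrates", "man"), ("all_are", "man", "mortal")}
    # "X is a Y" and "all Y are Z" together yield "X is a Z".
    rules = [
        ([("is_a", "?x", "?y"), ("all_are", "?y", "?z")], ("is_a", "?x", "?z")),
    ]
    print(("is_a", "Socrates", "mortal") in forward_chain(facts, rules))
```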

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2024-06-12T15:43:40.046Z · LW(p) · GW(p)

But if we're just talking about computation... a syllogism can be implemented via pattern matching, a pattern can be completed by a logical process (possibly probabilistic).

Perhaps, but deep learning models are still failing at ARC. My guess (and Chollet's) is that they will continue to fail at ARC unless they are trained on that kind of data (which goes against the point of the benchmark) or you add something else that actually resolves this failure in deep learning models. They may be able to pattern-match to reasoning-like behaviour, but only if specifically trained on that kind of data. No matter how much you scale them up, they will still fail to generalize to anything not local to their training data distribution.

↑ comment by Seth Herd · 2024-06-12T21:35:02.097Z · LW(p) · GW(p)

I think this is exactly right. The phrasing is a little confusing. I'd say "LLMs can't solve truly novel problems".

But the implication that this is a slow route or dead-end for AGI is wrong. I think it's going to be pretty easy to scaffold LLMs into solving novel problems. I could be wrong, but don't bet heavily on it unless you happen to know way more about cognitive psychology and LLMs in combination than I do. It would be foolish to make a plan for survival that relies on this being a major delay.

I can't convince you of this without describing exactly how I think this will be relatively straightforward, and I'm not ready to advance capabilities in this direction yet. I think language model agents are probably our best shot at alignment, so we should probably actively work on advancing them to AGI; but I'm not sure enough yet to start publishing my best theories on how to do that.

Back to the possibly confusing phrasing Chollet uses: I think he's using Piaget's definition of intelligence as "what you do when you don't know what to do" (he quotes this in the interview). That's restricting it to solving problems you haven't memorized an approach to. That's not how most people use the word intelligence.

When he says LLMs "just memorize", he's including memorizing programs or approaches to problems, and they can plug the variables of this particular variant of the problem into those memorized programs/approaches. I think the question "well maybe that's all you need to do" raised by Patel is appropriate; it's clear they can't do enough of this yet, but it's unclear whether further progress will get them to another level of abstraction: an approach so abstract and general that it can solve almost any problem.

I think he's on the wrong track with the "discrete program search" because I see more human-like solutions that may be lower-hanging fruit, but I wouldn't bet his approach won't work. I'm starting to think that there are many approaches to general intelligence, and a lot of them just aren't that hard. We'd like to think our intelligence is unique, magical, and special, but it's looking like it's not. Or at least, we should assume it's not if we want to plan for other intelligences well enough to survive. So I think alignment workers should assume LLMs with or without scaffolding might very well pass this hurdle fairly quickly.

↑ comment by quetzal_rainbow · 2024-06-12T16:32:57.998Z · LW(p) · GW(p)

Okay, hot take: I don't think that ARC tests "system 2 reasoning" and "solving novel tasks", at least in humans. When I see a simple task, I literally match patterns; when I see a complex task, I run whatever patterns I can invent until they match. I didn't run the entire ARC testing dataset, but if I am good at solving it, it will be because I am a fan of Myst-esque games and, actually, there are not so many possible principles in designing problems of this sort.

What the failure of LLMs to solve ARC is actually telling us is that "LLM cognition is very different from human cognition".

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2024-06-12T16:53:31.972Z · LW(p) · GW(p)

I didn't run the entire ARC testing dataset, but if I am good at solving it, it will be because I am a fan of Myst-esque games and, actually, there are not so many possible principles in designing problems of this sort.

They've tested ARC with children and Mechanical Turk workers, and they all seem to do fine despite the average person not being a fan of "Myst-esque games."

What the failure of LLMs to solve ARC is actually telling us is that "LLM cognition is very different from human cognition".

Do you believe LLMs are just a few OOMs away from solving novel tasks like ARC? What is different that is not explained by what Chollet is saying?

Replies from: quetzal_rainbow, Morpheus↑ comment by quetzal_rainbow · 2024-06-12T18:35:53.957Z · LW(p) · GW(p)

By "good at solving" I mean "better than average person".