are you saying something like: you can't actually do more of everything except one thing, because you'll never do everything. so there's a lot of variance that comes from exploration that multiplies with your variance from having a suboptimal zero point. so in practice your zero point needs to be very close to optimal. so my thing is true but not useful in practice.

i feel people do empirically shift their zero point quite a lot throughout life and it does seem to change how effectively they learn. if you're mildly depressed your zero point is slightly too low and you learn a little bit slower. if you're mildly manic your zero point is too high and you also learn a little bit slower. therapy, medications, and meditations shift it mildly.

is this for a reason other than the variance thing I mention?

I think the thing I mention is still important because it means there is no fundamental difference between positive and negative motivation. I agree that if everything was different degrees of extreme bliss then the variance would be so high that you never learn anything in practice. but if you shift everything slightly such that some mildly unpleasant things are now mildly pleasant, I claim this will make learning a bit faster or slower but still converge to the same thing.

i don't think this is unique to world models. you can also think of rewards as things you move towards or away from. this is compatible with translation/scaling-invariance because if you move towards everything but move towards X even more, then in the long run you will do more of X on net, because you only have so much probability mass to go around.

i have an alternative hypothesis for why positive and negative motivation feel distinct in humans.

although the expectation of the reward gradient doesn't change if you translate the reward, it hugely affects the variance of the gradient.[1] in other words, if you always move towards everything, you will still eventually learn the right thing, but it will take a lot longer.

my hypothesis is that humans have some hard coded baseline for variance reduction. in the ancestral environment, the expectation of perceived reward was centered around where zero feels to be. our minds do try to adjust to changes in distribution (e.g hedonic adaptation), but it's not perfect, and so in the current world, our baseline may be suboptimal.

[1] Quick proof sketch (this is a very standard result in RL and is the motivation for advantage estimation, but still good practice to check things).

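The REINFORCE estimator is $\nabla_\theta \bar{R} = \mathbb{E}_{x \sim \pi_\theta}\left[R(x)\, \nabla_\theta \log \pi_\theta(x)\right]$.

WLOG, suppose we define a new reward $R'(x) = R(x) + k$ where $k > 0$ (and assume that $\mathbb{E}[R] = 0$, so $R'$ is moving away from the mean).

Then we can verify the expectation of the gradient is still the same: $\nabla_\theta \bar{R}' - \nabla_\theta \bar{R} = \mathbb{E}\left[k\, \nabla_\theta \log \pi_\theta(x)\right] = k \int \nabla_\theta \pi_\theta(x)\, dx = 0$.

But the variance increases (for large enough $k$):

$$\mathbb{V}\left[R\, \nabla_\theta \log \pi_\theta\right] = \int R(x)^2 \left(\nabla_\theta \log \pi_\theta(x)\right)^2 \pi_\theta(x)\, dx - \left(\nabla_\theta \bar{R}\right)^2$$

$$\mathbb{V}\left[R'\, \nabla_\theta \log \pi_\theta\right] = \int \left(R(x) + k\right)^2 \left(\nabla_\theta \log \pi_\theta(x)\right)^2 \pi_\theta(x)\, dx - \left(\nabla_\theta \bar{R}\right)^2$$

So:

$$\mathbb{V}\left[R'\, \nabla_\theta \log \pi_\theta\right] - \mathbb{V}\left[R\, \nabla_\theta \log \pi_\theta\right] = 2k \int R(x) \left(\nabla_\theta \log \pi_\theta(x)\right)^2 \pi_\theta(x)\, dx + k^2 \int \left(\nabla_\theta \log \pi_\theta(x)\right)^2 \pi_\theta(x)\, dx$$

The second term on the right is non-negative and grows as $k^2$, so it dominates the first term (which is only linear in $k$ and can have either sign) once $k$ is large. More generally, if $\mathbb{E}[R] = k$, the variance increases with $O(k^2)$. So having your rewards be uncentered hurts a ton.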
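A quick numerical check of the footnote, as a sketch rather than anything definitive. It assumes a softmax policy over three actions with made-up logits and rewards (none of these numbers come from the original comment), and computes the mean and variance of the single-sample REINFORCE estimator exactly by enumerating actions. The shift `k` plays the role of the reward translation.

```python
import numpy as np

def softmax(logits):
    z = np.exp(logits - np.max(logits))
    return z / z.sum()

def reinforce_stats(logits, rewards):
    """Exact mean and per-coordinate variance of R(x) * grad log pi(x)
    for a softmax policy, taking the gradient with respect to the logits."""
    probs = softmax(logits)
    # Row x holds grad log pi(x) = onehot(x) - probs.
    scores = np.eye(len(probs)) - probs
    samples = rewards[:, None] * scores      # estimator value if action x is drawn
    mean = probs @ samples                   # expectation over x ~ pi
    var = probs @ (samples - mean) ** 2      # variance over x ~ pi
    return mean, var

# Hypothetical numbers, chosen only for illustration.
logits = np.array([0.2, -0.1, 0.5])
raw = np.array([1.0, -2.0, 0.5])
centered = raw - softmax(logits) @ raw       # enforce E[R] = 0 under the policy

for k in [0.0, 1.0, 10.0]:
    mean, var = reinforce_stats(logits, centered + k)
    print(f"k={k:5.1f}  mean gradient={mean}  total variance={var.sum():.3f}")
```

The printed mean gradient should be identical for every `k`, while the total variance grows roughly quadratically once `k` is large.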

seems like an interesting idea. I had never heard of it before, and I'm generally decently aware of weird stuff like this, so they probably need to put more effort into publicity. I don't know if truly broad public appeal will ever happen but I could imagine this being pretty popular among the kinds of people who would e.g use manifold.

one perverse incentive this scheme creates is that if you think other charity is better than political donations, you are incentivized to donate to the party with less in its pool, and you get a 1:1 match for free, at the expense of people who wanted to support a candidate.

also, in the grand scheme of things, the amount of money in politics isn't that big, but it's still a solid chunk. but the TAM is inherently quite limited.

https://slatestarcodex.com/2019/09/18/too-much-dark-money-in-almonds/

i find it disappointing that a lot of people believe things about trading that are obviously crazy even if you only believe in a very weak form of the EMH. for example, technical analysis is obviously tea leaf reading - if it were predictive whatsoever, you could make a lot of money by exploiting it until it is no longer predictive.

Not necessarily. Returns could be long tailed, so hypersuccess or bust could be higher EV. though you might be risk averse enough that this isn't worth it to you.

unless your goal is hypersuccess or bust

I wonder how many supposedly consistently successful retail traders are actually just picking up pennies in front of the steamroller, and would eventually lose it all if they kept at it long enough.

also I wonder how many people have runs of very good performance interspersed by big losses, such that the overall net gains are relatively modest, but psychologically they only remember/recount the runs of good performance, whereas the losses were just bad luck and will be avoided next time.

I mean, the proximate cause of the 1989 protests was the death of the quite reformist general secretary Hu Yaobang. The new general secretary, Zhao Ziyang, was very sympathetic towards the protesters and wanted to negotiate with them, but then he lost a power struggle against Li Peng and Deng Xiaoping (who was in semi retirement but still held onto control of the military). Immediately afterwards, he was removed as general secretary and martial law was declared, leading to the massacre.

It's often very costly to do so - for example, ending the zero covid policy was very politically costly even though it was the right thing to do. Also, most major reconfigurations even for autocratic countries probably mostly happen right after there is a transition of power (for China, Mao is kind of an exception, but that's because he had so much power that it was impossible to challenge his authority even when he messed up).

goodhart

shorter sentences are better because they communicate more clearly. i used to speak in much longer and more abstract sentences, which made it harder to understand me. i think using shorter and clearer sentences has been obviously net positive for me. it even makes my thinking clearer, because you need to really deeply understand something to explain it simply.

every 4 years, the US has the opportunity to completely pivot its entire policy stance on a dime. this is more politically costly to do if you're a long-lasting autocratic leader, because it is embarrassing to contradict your previous policies. I wonder how much of a competitive advantage this is.

the intent is to provide the user with a sense of pride and accomplishment for unlocking different rationality methods.

fun side project idea: create a matrix X and accompanying QR decomposition, such that X and Q are both valid QR codes that link to the wikipedia page about QR decomposition

idea: flight insurance, where you pay a fixed amount for the assurance that you will definitely get to your destination on time. e.g if your flight gets delayed, they will pay for a ticket on the next flight from some other airline, or directly approach people on the next flight to buy a ticket off of them, or charter a private plane.

pure insurance for things you could afford to self insure is generally a scam (and the customer base of this product could probably afford to self insure) but this mostly provides value by handling the rather complicated logistics for you rather than by reducing the financial burden, and there are substantial benefits from economies of scale (e.g if you have enough customers you can maintain a fleet of private planes within a few hours of most major airports)

I think "refactor less" is bad advice for substantial shared infrastructure. It's good advice only for your personal experiment code.

Actual full blown fraud in frontier models at the big labs (oai/anthro/gdm) seems very unlikely. Accidental contamination is a lot more plausible but people are incentivized to find metrics that avoid this. Evals not measuring real world usefulness is the obvious culprit imo and it's one big reason my timelines have been somewhat longer despite rapid progress on evals.

Several people have spent hundreds of dollars betting yes, which is a lot of money to spend for the memes.

there are a nontrivial number of people who would regularly spend a few hundred dollars for the memes.

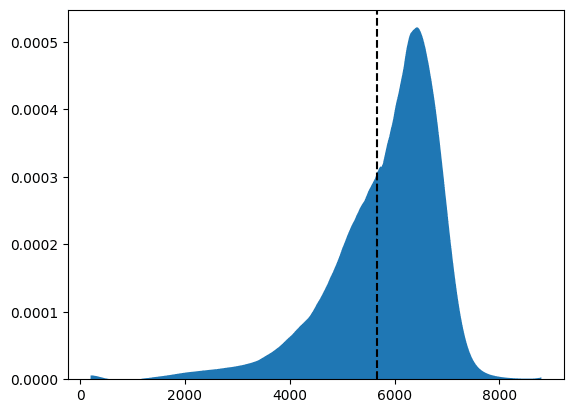

made an estimate of the distribution of prices of the SPX in one year by looking at SPX options prices, smoothing the implied volatilities and using Breeden-Litzenberger.

(not financial advice etc, just a fun side project)
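For readers unfamiliar with Breeden-Litzenberger: the risk-neutral density of the price at expiry is the discounted-back second derivative of the call price with respect to strike, $f(K) = e^{rT}\, \partial^2 C / \partial K^2$. The sketch below is not the code behind the plot. It assumes synthetic call prices generated from Black-Scholes with a flat volatility (standing in for smoothed implied vols), and every input value is made up for illustration.

```python
import math
import numpy as np

def bs_call(spot, strike, vol, rate, t):
    """Black-Scholes price of a European call (no dividends)."""
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol**2) * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    cdf = lambda x: 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return spot * cdf(d1) - strike * math.exp(-rate * t) * cdf(d2)

def implied_density(strikes, calls, rate, t):
    """Breeden-Litzenberger density exp(r t) * d^2 C / dK^2, using central
    differences on a uniform strike grid. Returned at the interior strikes."""
    dk = strikes[1] - strikes[0]
    second = (calls[2:] - 2.0 * calls[1:-1] + calls[:-2]) / dk**2
    return math.exp(rate * t) * second

# Hypothetical inputs, not market data.
spot, vol, rate, t = 100.0, 0.2, 0.03, 1.0
strikes = np.linspace(20.0, 300.0, 561)      # uniform grid, spacing 0.5
calls = np.array([bs_call(spot, k, vol, rate, t) for k in strikes])

density = implied_density(strikes, calls, rate, t)
dk = strikes[1] - strikes[0]
mass = density.sum() * dk                    # should be close to 1
mean = (density * strikes[1:-1]).sum() * dk  # should be close to the forward price
print(mass, mean, spot * math.exp(rate * t))
```

With real quotes the second difference is very noisy, which is why smoothing the implied volatilities before differentiating matters.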

solution 3 is to be an iconoclast and to feel comfortable pushing against the flow and to try to prove everyone else wrong.

timelines takes

- i've become more skeptical of rsi over time. here's my current best guess at what happens as we automate ai research.

- for the next several years, ai will provide a bigger and bigger efficiency multiplier to the workflow of a human ai researcher.

- ai assistants will probably not uniformly make researchers faster across the board, but rather make certain kinds of things way faster and other kinds of things only a little bit faster.

- in fact probably it will make some things 100x faster, a lot of things 2x faster, and then be literally useless for a lot of remaining things

- amdahl's law tells us that we will mostly be bottlenecked on the things that don't get sped up a ton. like if the thing that got sped up 100x was only 10% of the original thing, then you don't get more than a 1/(1 - 10%) ≈ 1.11x speedup.

- i think the speedup is a bit more than amdahl's law implies. task X took up 10% of the time because there is diminishing returns to doing more X, and so you'd ideally do exactly the amount of X such that the marginal value of time spent on X is exactly in equilibrium with time spent on anything else. if you suddenly decrease the cost of X substantially, the equilibrium point shifts towards doing more X.

- in other words, if AI makes lit review really cheap, you probably want to do a much more thorough lit review than you otherwise would have, rather than just doing the same amount of lit review but cheaper.

- at the first moment that ai can fully replace a human researcher (that is, you can purely just put more compute in and get more research out, and only negligible human labor is required), the ai will probably be more expensive per unit of research than the human

- (things get a little bit weird because my guess is before ai can drop-in replace a human, we will reach a point where adding ai assistance equivalent to the cost of 100 humans to 2025-era openai research would be equally as good as adding 100 humans, but the ai's are not doing the same things as the humans, and if you just keep adding ai's you start experiencing diminishing returns faster than with adding humans. i think my analysis still mostly holds despite this)

- naively, this means that the first moment that AIs can fully automate AI research at human-cost is not a special criticality threshold. if you are at equilibrium for allocating money between researchers and compute, then suddenly having the ability to convert compute into researchers at the exchange rate of the salary of a human researcher doesn't really make sense

- in reality, you will probably not be at equilibrium, because there are a lot of inefficiencies in hiring humans - recruiting is a lemon market, you have to onboard new hires relatively slowly, management capacity is limited, there is an inelastic and inefficient supply of qualified hires, etc. but i claim this is a relatively small effect and can't explain a one OOM increase in workforce size

- also: anyone who has worked in a large organization knows that team size is not everything. having too many people can often even be a liability and slow you down. even when it doesn't, adding more people almost never makes your team linearly more productive.

- however, if AIs have much better scaling laws with additional parallel compute than human organizations do, then this could change things a lot. this is one of my biggest uncertainties here and one reason i still take rsi seriously.

- your AIs might have higher bandwidth communication with each other than your humans do. but also maybe they might be worse at generalizing previous findings to new situations or something.

- they might be more aligned with doing lots of research all day, whereas humans care about a lot of other things like money and status and fun and so on. but if outer alignment is hard we might get the AI equivalent of corporate politics.

- one other thing is that compute is a necessary input to research. i'll mostly roll this into the compute cost of actually running the AIs.

- the part where AI research feeds back into how good the AIs are could be very slow in practice

- there are logarithmic returns to more pretraining compute and more test time compute. so an improvement that 10xes the effective compute doesn't actually get you that much. 4.5 isn't that much better than 4 despite being 10x more compute (which is in turn not that much better than 3.5, I would claim).

- you run out of low hanging fruit at some point. each 2x in compute efficiency is harder to find than the previous one.

- i would claim that in fact much of the recent feeling that AI progress is fast is due to a lot of low hanging fruit being picked. for example, the shift from pretrained models to RL for reasoning picked a lot of low hanging fruit due to not using test time compute / not eliciting CoTs well, and we shouldn't expect the same kind of jump consistently.

- an emotional angle: exponentials can feel very slow in practice; for example, moore's law is kind of insane when you think about it (doubling every 18 months is pretty fast), but it still takes decades to play out

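The amdahl's law point in the list above can be made concrete with a small sketch. The 10% / 100x case is the one from the text. The second mix of shares (10% at 100x, 50% at 2x, 40% untouched) is a hypothetical split added only for illustration, and the function name is made up here.

```python
def amdahl_speedup(shares_and_factors):
    """Overall speedup when each (share, factor) pair says that `share` of the
    original time gets `factor` times faster. Shares must sum to 1."""
    total_share = sum(share for share, _ in shares_and_factors)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    new_time = sum(share / factor for share, factor in shares_and_factors)
    return 1.0 / new_time

# Case from the text: 10% of the work gets 100x faster, the rest is unchanged.
from_text = amdahl_speedup([(0.10, 100.0), (0.90, 1.0)])

# Upper bound if that 10% became free: 1 / (1 - 10%).
upper_bound = 1.0 / (1.0 - 0.10)

# Hypothetical mix: some things 100x faster, a lot 2x faster, the rest unchanged.
mixed = amdahl_speedup([(0.10, 100.0), (0.50, 2.0), (0.40, 1.0)])

print(from_text, upper_bound, mixed)
```

Note this treats the amount of each task as fixed, so it does not capture the later point that cheaper tasks get done more.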

my referral/vouching policy is i try my best to completely decouple my estimate of technical competence from how close a friend someone is. i have very good friends i would not write referrals for and i have written referrals for people i basically only know in a professional context. if i feel like it's impossible for me to disentangle, i will defer to someone i trust and have them make the decision. this leads to some awkward conversations, but if someone doesn't want to be friends with me because it won't lead to a referral, i don't want to be friends with them either.

Overall very excited about more work on circuit sparsity, and this is an interesting approach. I think this paper would be much more compelling if there was a clear win on some interp metric, or some compelling qualitative example, or both.

i'm happy to grant that the 0.1% is just a fermi estimate and there's a +/- one OOM error bar around it. my point still basically stands even if it's 1%.

i think there are also many factors in the other direction that just make it really hard to say whether 0.1% is an under or overestimate.

for example, market capitalization is generally an overestimate of value when there are very large holders. tesla is also a bit of a meme stock so it's most likely trading above fundamental value.

my guess is most things sold to the public sector probably produce less economic value per $ than something sold to the private sector, so profit overestimates value produced

the sign on net economic value of his political advocacy seems very unclear to me. the answer depends strongly on some political beliefs that i don't feel like arguing out right now.

it slightly complicates my analogy for elon to be both the richest person in the us and also possibly the most influential (or one of). in my comment i am mostly referring to economic-elon. you are possibly making some arguments about influentialness in general. the problem is that influentialness is harder to estimate. also, if we're talking about influentialness in general, we don't get to use the 0.1% ownership of economic output as a lower bound of influentialness. owning x% of economic output doesn't automatically give you x% of influentialness. (i think the majority of other extremely rich people are not nearly as influential as elon per $)

you might expect that the butterfly effect applies to ML training. make one small change early in training and it might cascade to change the training process in huge ways.

at least in non-RL training, this intuition seems to be basically wrong. you can do some pretty crazy things to the training process without really affecting macroscopic properties of the model (e.g loss). one very well known example is that using mixed precision training results in training curves that are basically identical to full precision training, even though you're throwing out a ton of bits of precision on every step.

there's an obvious synthesis of great man theory and broader structural forces theories of history.

there are great people, but these people are still bound by many constraints due to structural forces. political leaders can't just do whatever they want; they have to appease the keys of power within the country. in a democracy, the most obvious key of power is the citizens, who won't reelect a politician that tries to act against their interests. but even in dictatorships, keeping the economy at least kind of functional is important, because when the citizens are starving, they're more likely to revolt and overthrow the government. there are also powerful interest groups like the military and critical industries, which have substantial sway over government policy in both democracies and dictatorships. many powerful people are mostly custodians for the power of other people, in the same way that a bank is mostly a custodian for the money of its customers.

also, just because someone is involved in something important, it doesn't mean that they were maximally counterfactually responsible. structural forces often create possibilities to become extremely influential, but only in the direction consistent with said structural force. a population that strongly believes in foobarism will probably elect a foobarist candidate, and if the winning candidate never existed, another foobarist candidate would have won. winning an election always requires a lot of competence, but no matter how competent you are, you aren't going to win on an anti-foobar platform. the sentiment of the population has created the role of foobarist president for someone foobarist to fill.

this doesn't mean that influential people have no latitude whatsoever to influence the world. when we're looking at the highest tiers of human ability, the efficient market hypothesis breaks down. there are so few extremely competent people that nobody is a perfect replacement for anyone else. if someone didn't exist, it doesn't necessarily mean someone else would have stepped up to do the same. for example, if napoleon had never existed, there might have been some other leader who took advantage of the weakness of the Directory to seize power, but they likely would have been very different from napoleon. great people still have some latitude to change the world orthogonal to the broader structural forces.

it's not a contradiction for the world to be mostly driven by structural forces, and simultaneously for great people to have hugely more influence than the average person. in the same way that bill gates or elon musk are vastly vastly wealthier than the median person, great people have many orders of magnitude more influence on the trajectory of history than the average person. and yet, the richest person is still only responsible for 0.1%* of the economic output of the united states.

\* fermi estimate, taking musk's net worth and dividing by 20 to convert stocks to flows, and comparing to gdp. caveats apply based on interest rates and gdp being a bad metric. many assumptions involved here.

there are a lot of video games (and to a lesser extent movies, books, etc) that give the player an escapist fantasy of being hypercompetent. It's certainly an alluring promise: with only a few dozen hours of practice, you too could become a world class fighter or hacker or musician! But because becoming hypercompetent at anything is a lot of work, the game has to put its finger on the scale to deliver on this promise. Maybe flatter the user a bit, or let the player do cool things without the skill you'd actually need in real life.

It's easy to dismiss this kind of media as inaccurate escapism that distorts people's views of how complex these endeavors of skill really are. But it's actually a shockingly accurate simulation of what it feels like to actually be really good at something. As they say, being competent doesn't feel like being competent, it feels like the thing just being really easy.

when i was new to research, i wouldn't feel motivated to run any experiment that wouldn't make it into the paper. surely it's much more efficient to only run the experiments that people want to see in the paper, right?

now that i'm more experienced, i mostly think of experiments as something i do to convince myself that a claim is correct. once i get to that point, actually getting the final figures for the paper is the easy part. the hard part is finding something unobvious but true. with this mental frame, it feels very reasonable to run 20 experiments for every experiment that makes it into the paper.

libraries abstract away the low level implementation details; you tell them what you want to get done and they make sure it happens. frameworks are the other way around. they abstract away the high level details; as long as you implement the low level details you're responsible for, you can assume the entire system works as intended.
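A minimal sketch of that distinction, with made-up names throughout (`mean` stands in for a library function and `Pipeline` for a framework; neither refers to a real package). In the library case your code owns the control flow and calls down. In the framework case you hand over small pieces and the framework decides when to call them.

```python
# Library style: your code is in charge and calls into the library.
def mean(values):
    """Stand-in for a library function: you say what you want, it handles how."""
    return sum(values) / len(values)

def report(scores):
    return f"average: {mean(scores):.1f}"


# Framework style: the framework is in charge and calls into your code.
class Pipeline:
    """Stand-in for a framework: it owns the high-level loop."""

    def __init__(self):
        self._steps = []

    def step(self, fn):
        # Register a user-supplied low-level piece; returned so it works as a decorator.
        self._steps.append(fn)
        return fn

    def run(self, value):
        for fn in self._steps:
            value = fn(value)
        return value


pipeline = Pipeline()

@pipeline.step
def strip(text):
    return text.strip()

@pipeline.step
def shout(text):
    return text.upper()

print(report([1, 2, 3]))
print(pipeline.run("  hello "))
```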

a similar divide exists in human organizations and with managing up vs down. with managing up, you abstract away the details of your work and promise to solve some specific problem. with managing down, you abstract away the mission and promise that if a specific problem is solved, it will make progress towards the mission.

(of course, it's always best when everyone has state on everything. this is one reason why small teams are great. but if you have dozens of people, there is no way for everyone to have all the state, and so you have to do a lot of abstracting.)

when either abstraction leaks, it causes organizational problems -- micromanagement, or loss of trust in leadership.

the laws of physics are quite compact. and presumably most of the complexity in a zygote is in the dna.

a thriving culture is a mark of a healthy and intellectually productive community / information ecosystem. it's really hard to fake this. when people try, it usually comes off weird. for example, when people try to forcibly create internal company culture, it often comes off as very cringe.

don't worry too much about doing things right the first time. if the results are very promising, the cost of having to redo it won't hurt nearly as much as you think it will. but if you put it off because you don't know exactly how to do it right, then you might never get around to it.

the tweet is making fun of people who are too eager to do something EMPIRICAL and SCIENTIFIC and ignore the pesky little detail that their empirical thing actually measures something subtly but importantly different from what they actually care about

i've changed my mind and been convinced that it's kind of a big deal that frontiermath was framed as something that nobody would have access to for hillclimbing when in fact openai would have access and other labs wouldn't. the undisclosed funding before o3 launch still seems relatively minor though

lol i was the one who taped it to the wall. it's one of my favorite tweets of all time

this doesn't seem like a huge deal

in retrospect, we know from chinchilla that gpt3 allocated its compute too much to parameters as opposed to training tokens. so it's not surprising that models since then are smaller. model size is a less fundamental measure of model cost than pretraining compute. from here on i'm going to assume that whenever you say size you meant to say compute.

obviously it is possible to train better models using the same amount of compute. one way to see this is that it is definitely possible to train worse models with the same compute, and it is implausible that the current model production methodology is the optimal one.

it is unknown how much compute the latest models were trained with, and therefore what compute efficiency win they obtain over gpt4. it is unknown how much more effective compute gpt4 used than gpt3. we can't really make strong assumptions using public information about what kinds of compute efficiency improvements have been discovered by various labs at different points in time. therefore, we can't really make any strong conclusions about whether the current models are not that much better than gpt4 because of (a) a shortage of compute, (b) a shortage of compute efficiency improvements, or (c) a diminishing return of capability wrt effective compute.

suppose I believe the second coming involves the Lord giving a speech on capitol hill. one thing I might care about is how long until that happens. the fact that lots of people disagree about when the second coming is doesn't mean the Lord will give His speech soon.

similarly, the thing that I define as AGI involves AIs building Dyson spheres. the fact that other people disagree about when AGI is doesn't mean I should expect Dyson spheres soon.

people disagree heavily on what the second coming will look like. this, of course, means that the second coming must be upon us

I agree that labs have more compute and more top researchers, and these both speed up research a lot. I disagree that the quality of responses is the same as outside labs, if only because there is lots of knowledge inside labs that's not available elsewhere. I think these positive factors are mostly orthogonal to the quality of software infrastructure.

some random takes:

- you didn't say this, but when I saw the infrastructure point I was reminded that some people seem to have a notion that any ML experiment you can do outside a lab, you will be able to do more efficiently inside a lab because of some magical experimentation infrastructure or something. I think unless you're spending 50% of your time installing cuda or something, this basically is just not a thing. lab infrastructure lets you run bigger experiments than you could otherwise, but it costs a few sanity points compared to the small experiment. oftentimes, the most productive way to work inside a lab is to avoid existing software infra as much as possible.

- I think safetywashing is a problem but from the perspective of an xrisky researcher it's not a big deal because for the audiences that matter, there are safetywashing things that are just way cheaper per unit of goodwill than xrisk alignment work - xrisk is kind of weird and unrelatable to anyone who doesn't already take it super seriously. I think people who work on non xrisk safety or distribution of benefits stuff should be more worried about this.

- this is totally n=1 and in fact I think my experience here is quite unrepresentative of the average lab experience, but I've had a shocking amount of research freedom. I'm deeply grateful for this - it has turned out to be incredibly positive for my research productivity (e.g the SAE scaling paper would not have happened otherwise).

I think this is probably true of you and people around you but also you likely live in a bubble. To be clear, I'm not saying why people reading this should travel, but rather what a lot of travel is like, descriptively.

theory: a large fraction of travel is because of mimetic desire (seeing other people travel and feeling fomo / keeping up with the joneses), signalling purposes (posting on IG, demonstrating socioeconomic status), or mental compartmentalization of leisure time (similar to how it's really bad for your office and bedroom to be the same room).

this explains why in every tourist destination there are a whole bunch of very popular tourist traps that are in no way actually unique/comparatively-advantaged to the particular destination. for example: shopping, amusement parks, certain kinds of museums.

ok good that we agree interp might plausibly be on track. I don't really care to argue about whether it should count as prosaic alignment or not. I'd further claim that the following (not exhaustive) are also plausibly good (I'll sketch each out for the avoidance of doubt because sometimes people use these words subtly differently):

- model organisms - trying to probe the minimal sets of assumptions to get various hypothesized spicy alignment failures seems good. what is the least spoonfed demonstration of deceptive alignment we can get that is analogous mechanistically to the real deal? to what extent can we observe early signs of the prerequisites in current models? which parts of the deceptive alignment arguments are most load bearing?

- science of generalization - in practice, why do NNs sometimes generalize and sometimes not? why do some models generalize better than others? In what ways are humans better or worse than NNs at generalizing? can we understand this more deeply without needing mechanistic understanding? (all closely related to ELK)

- goodhart robustness - can you make reward models which are calibrated even under adversarial attack, so that when you optimize them really hard, you at least never catastrophically goodhart them?

- scalable oversight (using humans, and possibly giving them a leg up with e.g secret communication channels between them, and rotating different humans when we need to simulate amnesia) - can we patch all of the problems with e.g debate? can we extract higher quality work out of real life misaligned expert humans for practical purposes (even if it's maybe a bit cost uncompetitive)?

in capabilities, the most memetically successful things were for a long time not the things that actually worked. for a long time, people would turn their noses at the idea of simply scaling up models because it wasn't novel. the papers which are in retrospect the most important did not get that much attention at the time (e.g gpt2 was very unpopular among many academics; the Kaplan scaling laws paper was almost completely unnoticed when it came out; even the gpt3 paper went under the radar when it first came out.)

one example of a thing within prosaic alignment that i feel has the possibility of generalizability is interpretability. again, if we take the generalizability criterion and map it onto the capabilities analogy, it would be something like scalability - is this a first step towards something that can actually do truly general reasoning, or is it just a hack that will no longer be relevant once we discover the truly general algorithm that subsumes the hacks? if it is on the path, can we actually shovel enough compute into it (or its successor algorithms) to get to agi in practice, or do we just need way more compute than is practical? and i think at the time of gpt2 these were completely unsettled research questions! it was actually genuinely unclear whether writing articles about ovid's unicorn is a genuine first step towards agi, or just some random amusement that will fade into irrelevancy. i think interp is in a similar position where it could work out really well and eventually become the thing that works, or it could just be a dead end.

some concrete examples

- "agi happens almost certainly within the next few decades" -> maybe ai progress just kind of plateaus for a few decades, it turns out that gpqa/codeforces etc are like chess in that we only think they're hard because humans who can do them are smart but they aren't agi-complete, ai gets used in a bunch of places in the economy but it's more like smartphones or something. in this world i should be taking normie life advice a lot more seriously.

- "agi doesn't happen in the next 2 years" -> maybe actually scaling current techniques is all you need. gpqa/codeforces actually do just measure intelligence. within like half a year, ML researchers start being way more productive because lots of their job is automated. if i use current/near-future ai agents for my research, i will actually just be more productive.

- "alignment is hard" -> maybe basic techniques are all you need, because natural abstractions is true, or maybe the red car / blue car argument for why useful models are also competent at bad things is just wrong because generalization can be made to suck. maybe all the capabilities people are just right and it's not reckless to be building agi so fast

i think it's quite valuable to go through your key beliefs and work through what the implications would be if they were false. this has several benefits:

- picturing a possible world where your key belief is wrong makes it feel more tangible and so you become more emotionally prepared to accept it.

- if you ever do find out that the belief is wrong, you don't flinch away as strongly because it doesn't feel like you will be completely epistemically lost the moment you remove the Key Belief

- you will have more productive conversations with people who disagree with you on the Key Belief

- you might discover strategies that are robustly good whether or not the Key Belief is true

- you will become better at designing experiments to test whether the Key Belief is true

there are two different modes of learning i've noticed.

- top down: first you learn to use something very complex and abstract. over time, you run into weird cases where things don't behave how you'd expect, or you feel like you're not able to apply the abstraction to new situations as well as you'd like. so you crack open the box and look at the innards and see a bunch of gears and smaller simpler boxes, and it suddenly becomes clear to you why some of those weird behaviors happened - clearly it was box X interacting with gear Y! satisfied, you use your newfound knowledge to build something even more impressive than you could before. eventually, the cycle repeats, and you crack open the smaller boxes to find even smaller boxes, etc.

- bottom up: you learn about the 7 Fundamental Atoms of Thingism. you construct the simplest non-atomic thing, and then the second simplest non atomic thing. after many painstaking steps of work, you finally construct something that might be useful. then you repeat the process anew for every other thing you might ever find useful. and then you actually use those things to do something

generally, i'm a big fan of top down learning, because everything you do comes with a source of motivation for why you want to do the thing; bottom up learning often doesn't give you enough motivation to care about the atoms. but also, bottom up learning gives you a much more complete understanding.

there is always too much information to pay attention to. without an inexpensive way to filter, the field would grind to a complete halt. style is probably a worse thing to select on than even academia cred, just because it's easier to fake.