Thane Ruthenis's Shortform

post by Thane Ruthenis · 2024-09-13T20:52:23.396Z · LW · GW · 58 commentsContents

59 comments

58 comments

Comments sorted by top scores.

comment by Thane Ruthenis · 2025-01-20T15:40:39.173Z · LW(p) · GW(p)

Alright, so I've been following the latest OpenAI Twitter freakout, and here's some urgent information about the latest closed-doors developments that I've managed to piece together:

- Following OpenAI Twitter freakouts is a colossal, utterly pointless waste of your time and you shouldn't do it ever.

- If you saw this comment of Gwern's [LW(p) · GW(p)] going around and were incredibly alarmed, you should probably undo the associated update regarding AI timelines (at least partially, see below).

- OpenAI may be running some galaxy-brained psyops nowadays.

Here's the sequence of events, as far as I can tell:

- Some Twitter accounts that are (claiming, without proof, to be?) associated with OpenAI are being very hype about some internal OpenAI developments.

- Gwern posts this comment [LW(p) · GW(p)] suggesting an explanation for point 1.

- Several accounts (e. g., one, two) claiming (without proof) to be OpenAI insiders start to imply that:

- An AI model recently finished training.

- Its capabilities surprised and scared OpenAI researchers.

- It produced some innovation/is related to OpenAI's "Level 4: Innovators" stage of AGI development.

- Gwern's comment goes viral on Twitter (example).

- A news story about GPT-4b micro comes out, indeed confirming a novel OpenAI-produced innovation in biotech. (But it is not actually an "innovator AI" [LW(p) · GW(p)].)

- The stories told by the accounts above start to mention that the new breakthrough is similar to GPT-4b: that it's some AI model that produced an innovation in "health and longevity". But also, that it's broader than GPT-4b, and that the full breadth of this new model's surprising emergent capabilities is unclear. (One, two, three.)

- Noam Brown, an actual confirmed OpenAI researcher, complains about "vague AI hype on social media", and states they haven't yet actually achieved superintelligence.

- The Axios story comes out, implying that OpenAI has developed "PhD-level superagents" and that Sam Altman is going to brief Trump on them. Of note:

- Axios is partnered with OpenAI.

- If you put on Bounded Distrust [LW · GW] lens, you can see that the "PhD-level superagents" claim is entirely divorced from any actual statements made by OpenAI people. The article ties-in a Mark Zuckerberg quote instead, etc. Overall, the article weaves the impression it wants to create out of vibes (with which it's free to lie) and not concrete factual statements.

- The "OpenAI insiders" gradually ramp up the intensity of their story all the while, suggesting that the new breakthrough would allow ASI in "weeks, not years", and also that OpenAI won't release this "o4-alpha" until 2026 because they have a years-long Master Plan, et cetera. Example, example.

- Sam Altman complains about "twitter hype" being "out of control again".

- OpenAI hype accounts deflate.

What the hell was all that?

First, let's dispel any notion that the hype accounts are actual OpenAI insiders who know what they are talking about:

- "Satoshi" claims to be blackmailing OpenAI higher-ups in order to be allowed to shitpost classified information on Twitter. I am a bit skeptical of this claim, to put it mildly.

- "Riley Coyote" has a different backstory which is about as convincing by itself, and which also suggests that "Satoshi" is "Riley"'s actual source.

As far as I can tell digging into the timeline, both accounts just started acting as if they are OpenAI associates posting leaks. Not even, like, saying that they're OpenAI associates posting leaks, much less proving that. Just starting to act as if they're OpenAI associates and that everyone knows this. Their tweets then went viral. (There's also the strawberry guy, who also implies to be an OpenAI insider, who also joined in on the above hype-posting, and who seems to have been playing this same game for a year now. But I'm tired of looking up the links, and the contents are intensely unpleasant. Go dig through that account yourself if you want.)

In addition, none of the OpenAI employee accounts with real names that I've been able to find have been participating in this hype cycle. So if OpenAI allowed its employees to talk about what happened/is happening, why weren't any confirmed-identity accounts talking about it (except Noam's, deflating it)? Why only the anonymous Twitter people?

Well, because this isn't real.

That said, the timing is a bit suspect. This hype starting up, followed by the GPT-4b micro release and the Axios piece, all in the span of ~3 days? And the hype men's claims at least partially predicting the GPT-4b micro thing?

There's three possibilities:

- A coincidence. (The predictions weren't very precise, just "innovators are coming". The details about health-and-longevity and the innovative output got added after the GPT-4b piece, as far as I can tell.)

- A leak in one of the newspapers working on the GPT-4b story (which the grifters then built a false narrative around).

- Coordinated action by OpenAI.

One notable point is, the Axios story was surely coordinated with OpenAI, and it's both full of shenanigans and references the Twitter hype ("several OpenAI staff have been telling friends they are both jazzed and spooked by recent progress"). So OpenAI was doing shenanigans. So I'm slightly inclined to believe it was all an OpenAI-orchestrated psyop.

Let's examine this possibility.

Regarding the truth value of the claims: I think nothing has happened, even if the people involved are OpenAI-affiliated (in a different sense from how they claim). Maybe there was some slight unexpected breakthrough on an obscure research direction, at most, to lend an air of technical truth to those claims. But I think it's all smoke and mirrors.

However, the psyop itself (if it were one) has been mildly effective. I think tons of people actually ended up believing that something might be happening (e. g., janus, the AI Notkilleveryoneism Memes guy, myself for a bit, maybe gwern, if his comment [LW(p) · GW(p)] referenced the pattern of posting related to the early stages of this same event).

That said, as Eliezer points out here, it's advantageous for OpenAI to be crying wolf: both to drive up/maintain hype among their allies, and to frog-boil the skeptics into instinctively dismissing any alarming claims. Such that, say, if there ever are actual whistleblowers pseudonymously freaking out about unexpected breakthroughs on Twitter, nobody believes them.

That said, I can't help but think that if OpenAI were actually secure in their position and making insane progress, they would not have needed to do any of this stuff. If you're closing your fingers around agents capable of displacing the workforce en masse, if you see a straight shot to AGI, why engage in this childishness? (Again, if Satoshi and Riley aren't just random trolls.)

Bottom line, one of the following seems to be the case:

- There's a new type of guy, which is to AI/OpenAI what shitcoin-shills are to cryptocurrency.

- OpenAI is engaging in galaxy-brained media psyops.

Oh, and what's definitely true is that paying attention to what's going viral on Twitter is a severe mistake. I've committed it for the first and last time.

I also suggest that you unroll the update you might've made based on Gwern's comment [LW(p) · GW(p)]. Not the part describing to the o-series' potential – that's of course plausible and compelling. The part where that potential seems to have already been confirmed and realized according to ostensible OpenAI leaks – because those leaks seem to be fake. (Unless Gwern was talking about some other demographic of OpenAI accounts being euphorically optimistic on Twitter, which I've somehow missed?)[1]

(Oh, as to Sam Altman meeting with Trump? Well, that's probably because Trump's Sinister Vizier, Sam Altman's sworn nemesis, Elon Musk, is whispering in Trump ear 24/7 suggesting to crush OpenAI, and if Altman doesn't seduce Trump ASAP, Trump will do that. Especially since OpenAI is currently vulnerable due to their legally dubious for-profit transition.

This planet is a clown show.)

I'm currently interested in:

- Arguments for actually taking the AI hype people's claims seriously. (In particular, were any actual OpenAI employees provably involved, and did I somehow miss them?)

- Arguments regarding whether this was an OpenAI psyop vs. some random trolls.

Also, pinging @Zvi [LW · GW] in case any of those events showed up on his radar and he plans to cover them in his newsletter.

- ^

Also, I can't help but note that the people passing the comment around (such as this, this) are distorting it. The Gwern-stated claim isn't that OpenAI are close to superintelligence, it's that they may feel as if they're close to superintelligence. Pretty big difference!

Though, again, even that is predicated on actual OpenAI employees posting actual insider information about actual internal developments. Which I am not convinced is a thing that is actually happening.

↑ comment by Robert Cousineau (robert-cousineau) · 2025-01-20T18:25:33.731Z · LW(p) · GW(p)

I personally put a relatively high probability of this being a galaxy brained media psyop by OpenAI/Sam Altman.

Eliezer makes a very good point that confusion around people claiming AI advances/whistleblowing benefits OpenAI significantly, and Sam Altman has a history of making galaxy brained political plays (attempting to get Helen fired (and then winning), testifying to congress that it is good he has oversight via the board and he should not be full control of OpenAI and then replacing the board with underlings, etc).

Sam is very smart and politically capable. This feels in character.

↑ comment by Seth Herd · 2025-01-20T23:01:59.040Z · LW(p) · GW(p)

Thanks for doing this so I didn't have to! Hell is other people - on social media. And it's an immense time-sink.

Zvi is the man for saving the rest of us vast amounts of time and sanity.

I'd guess the psyop spun out of control with a couple of opportunistic posters pretending they had inside information, and that's why Sam had to say lower your expectations 100x. I'm sure he wants hype, but he doesn't want high expectations that are very quickly falsified. That would lead to some very negative stories about OpenAI's prospects, even if they're equally silly they'd harm investment hype.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-21T00:04:32.348Z · LW(p) · GW(p)

I'm sure he wants hype, but he doesn't want high expectations that are very quickly falsified

There's a possibility that this was a clown attack [LW · GW] on OpenAI instead...

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-01-20T23:41:23.610Z · LW(p) · GW(p)

Thanks for the sleuthing.

The thing is - last time I heard about OpenAI rumors it was Strawberry.

The unfortunate fact of life is that too many times OpenAI shipping has surpassed all but the wildest speculations.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-20T23:53:55.714Z · LW(p) · GW(p)

The thing is - last time I heard about OpenAI rumors it was Strawberry.

That was part of my reasoning as well, why I thought it might be worth engaging with!

But I don't think this is the same case. Strawberry/Q* was being leaked-about from more reputable sources, and it was concurrent with dramatic events (the coup) that were definitely happening.

In this case, all evidence we have is these 2-3 accounts shitposting.

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-01-21T00:26:37.956Z · LW(p) · GW(p)

Thanks.

Well 2-3 shitposters and one gwern.

Who would be so foolish to short gwern? Gwern the farsighted, gwern the prophet, gwern for whom entropy is nought, gwern augurious augustus

↑ comment by Joseph Miller (Josephm) · 2025-01-20T22:51:32.255Z · LW(p) · GW(p)

- Following OpenAI Twitter freakouts is a colossal, utterly pointless waste of your time and you shouldn't do it ever.

I feel like for the same reasons, this shortform is kind of an engaging waste of my time. One reason I read LessWrong is to avoid twitter garbage.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-21T00:02:38.212Z · LW(p) · GW(p)

Valid, I was split on whether it's worth posting vs. it'd be just me taking my part in spreading this nonsense. But it'd seemed to me that a lot of people, including LW regulars, might've been fooled, so I erred on the side of posting.

↑ comment by Cervera · 2025-01-20T20:11:15.263Z · LW(p) · GW(p)

I dont think any of that invalidates that Gwern is a usual, usually right.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-20T23:59:58.199Z · LW(p) · GW(p)

As I'd said, I think he's right about the o-series' theoretic potential. I don't think there is, as of yet, any actual indication that this potential has already been harnessed, and therefore that it works as well as the theory predicts. (And of course, the o-series scaling quickly at math is probably not even an omnicide threat. There's an argument for why it might be – that the performance boost will transfer to arbitrary domains – but that doesn't seem to be happening. I guess we'll see once o3 is public.)

↑ comment by Hzn · 2025-01-21T05:39:39.685Z · LW(p) · GW(p)

I think super human AI is inherently very easy [LW · GW]. I can't comment on the reliability of those accounts. But the technical claims seem plausible.

comment by Thane Ruthenis · 2025-01-17T22:57:13.787Z · LW(p) · GW(p)

Current take on the implications of "GPT-4b micro": Very powerful, very cool, ~zero progress to AGI, ~zero existential risk. Cheers.

First, the gist of it appears to be:

OpenAI’s new model, called GPT-4b micro, was trained to suggest ways to re-engineer the protein factors to increase their function. According to OpenAI, researchers used the model’s suggestions to change two of the Yamanaka factors to be more than 50 times as effective—at least according to some preliminary measures.

The model was trained on examples of protein sequences from many species, as well as information on which proteins tend to interact with one another. [...] Once Retro scientists were given the model, they tried to steer it to suggest possible redesigns of the Yamanaka proteins. The prompting tactic used is similar to the “few-shot” method, in which a user queries a chatbot by providing a series of examples with answers, followed by an example for the bot to respond to.

Crucially, if the reporting is accurate, this is not an agent. The model did not engage in autonomous open-ended research. Rather, humans guessed that if a specific model is fine-tuned on a specific dataset, the gradient descent would chisel into it the functionality that would allow it to produce groundbreaking results in the corresponding domain. As far as AGI-ness goes, this is functionally similar to AlphaFold 2; as far as agency goes, it's at most at the level of o1[1].

To speculate on what happened: Perhaps GPT-4b ("b" = "bio"?) is based on some distillation of an o-series model, say o3. o3's internals contain a lot of advanced machinery for mathematical reasoning. What this result shows, then, is that the protein-factors problem is in some sense a "shallow" mathematical problem that could be easily solved if you think about it the right way. Finding the right way to think about it is itself highly challenging, however – a problem teams of brilliant people have failed to crack – yet deep learning allowed to automate this search and crack it.

This trick likely generalizes. There may be many problems in the world that could be cracked this way[2]: those that are secretly "mathematically shallow" in this manner, and for which you can get a clean-enough fine-tuning dataset.

... Which is to say, this almost certainly doesn't cover social manipulation/scheming (no clean dataset), and likely doesn't cover AI R&D (too messy/open-ended, although I can see being wrong about this). (Edit: And if it Just Worked given any sorta-related sorta-okay fine-tuning dataset, the o-series would've likely generalized to arbitrary domains out-of-the-box, since the pretraining is effectively this dataset for everything. Yet it doesn't.)

It's also not entirely valid to call that "innovative AI", any more than it was valid to call AlphaFold 2 that. It's an innovative way of leveraging gradient descent for specific scientific challenges, by fine-tuning a model pre-trained in a specific way. But it's not the AI itself picking a field to innovate and then producing the innovation; it's humans finding a niche which this class of highly specific (and highly advanced) tools can fill.

It's not the type of thing that can get out of human control.

So, to restate: By itself, this seems like a straightforwardly good type of AI progress. Zero existential risk or movement in the direction of existential risks, tons of scientific and technological utility.

Indeed, on the contrary: if that's the sort of thing OpenAI employees are excited about nowadays, and what their recent buzz about AGI in 2025-2026 and innovative AI and imminent Singularity was all about, that seems like quite a relief.

- ^

If it does runtime search. Which doesn't fit the naming scheme – should be "GPT-o3b" instead of "GPT-4b" or something – but you know how OpenAI is with their names.

- ^

And indeed,OpenAI-associated vaguepostingsuggests there's some other domain in which they'd recently produced a similar result.Edit: Having dug through the vagueposting further, yep, this is also something "in health and longevity", if this OpenAI hype man is to be believed.In fact, if we dig into their posts further – which I'm doing in the spirit of a fair-play whodunnit/haruspicy at this point, don't take ittooseriously – we can piece together a bit more.Thissuggests that the innovation is indeed based on applying fine-tuning to a RL'd model.Thisfurther implies that the intent of the new iteration of GPT-4b was to generalize it further. Perhaps it was fed not just the protein-factors dataset, but a breadth of various bioscience datasets, chiseling-in a more general-purpose model of bioscience on which a wide variety of queries could be ran?Note: this would still be a "cutting-edge biotech simulator" kind of capability, not an "innovative AI agent" kind of capability.Ignore that whole thing. On further research, I'm pretty sure all of this was substance-less trolling [LW(p) · GW(p)] and no actual OpenAI researchers were involved. (At most, OpenAI's psy-ops team.)

↑ comment by Logan Riggs (elriggs) · 2025-01-18T02:33:29.401Z · LW(p) · GW(p)

For those also curious, Yamanaka factors are specific genes that turn specialized cells (e.g. skin, hair) into induced pluripotent stem cells (iPSCs) which can turn into any other type of cell.

This is a big deal because you can generate lots of stem cells to make full organs[1] or reverse aging (maybe? they say you just turn the cell back younger, not all the way to stem cells).

You can also do better disease modeling/drug testing: if you get skin cells from someone w/ a genetic kidney disease, you can turn those cells into the iPSCs, then into kidney cells which will exhibit the same kidney disease because it's genetic. You can then better understand how the [kidney disease] develops and how various drugs affect it.

So, it's good to have ways to produce lots of these iPSCs. According to the article, SOTA was <1% of cells converted into iPSCs, whereas the GPT suggestions caused a 50x improvement to 33% of cells converted. That's quite huge!, so hopefully this result gets verified. I would guess this is true and still a big deal, but concurrent work [LW(p) · GW(p)] got similar results.

Too bad about the tumors. Turns out iPSCs are so good at turning into other cells, that they can turn into infinite cells (ie cancer). iPSCs were used to fix spinal cord injuries (in mice) which looked successful for 112 days, but then a follow up study said [a different set of mice also w/ spinal iPSCs] resulted in tumors.

My current understanding is this is caused by the method of delivering these genes (ie the Yamanaka factors) through retrovirus which

is a virus that uses RNA as its genomic material. Upon infection with a retrovirus, a cell converts the retroviral RNA into DNA, which in turn is inserted into the DNA of the host cell.

which I'd guess this is the method the Retro Biosciences uses.

I also really loved the story of how Yamanaka discovered iPSCs:

Induced pluripotent stem cells were first generated by Shinya Yamanaka and Kazutoshi Takahashi at Kyoto University, Japan, in 2006.[1] They hypothesized that genes important to embryonic stem cell (ESC) function might be able to induce an embryonic state in adult cells. They chose twenty-four genes previously identified as important in ESCs and used retroviruses to deliver these genes to mouse fibroblasts. The fibroblasts were engineered so that any cells reactivating the ESC-specific gene, Fbx15, could be isolated using antibiotic selection.

Upon delivery of all twenty-four factors, ESC-like colonies emerged that reactivated the Fbx15 reporter and could propagate indefinitely. To identify the genes necessary for reprogramming, the researchers removed one factor at a time from the pool of twenty-four. By this process, they identified four factors, Oct4, Sox2, cMyc, and Klf4, which were each necessary and together sufficient to generate ESC-like colonies under selection for reactivation of Fbx15.

- ^

These organs would have the same genetics as the person who supplied the [skin/hair cells] so risk of rejection would be lower (I think)

↑ comment by TsviBT · 2025-01-18T20:21:24.720Z · LW(p) · GW(p)

According to the article, SOTA was <1% of cells converted into iPSCs

I don't think that's right, see https://www.cell.com/cell-stem-cell/fulltext/S1934-5909(23)00402-2

Replies from: elriggs↑ comment by Logan Riggs (elriggs) · 2025-01-18T21:00:43.551Z · LW(p) · GW(p)

You're right! Thanks

For Mice, up to 77%

Sox2-17 enhanced episomal OKS MEF reprogramming by a striking 150 times, giving rise to high-quality miPSCs that could generate all-iPSC mice with up to 77% efficiency

For human cells, up to 9% (if I'm understanding this part correctly).

SOX2-17 gave rise to 56 times more TRA1-60+ colonies compared with WT-SOX2: 8.9% versus 0.16% overall reprogramming efficiency.

So seems like you can do wildly different depending on the setting (mice, humans, bovine, etc), and I don't know what the Retro folks were doing, but does make their result less impressive.

Replies from: TsviBT↑ comment by TsviBT · 2025-01-18T21:10:34.313Z · LW(p) · GW(p)

(Still impressive and interesting of course, just not literally SOTA.)

Replies from: elriggs↑ comment by Logan Riggs (elriggs) · 2025-01-18T21:17:51.176Z · LW(p) · GW(p)

Thinking through it more, Sox2-17 (they changed 17 amino acids from Sox2 gene) was your linked paper's result, and Retro's was a modified version of factors Sox AND KLF. Would be cool if these two results are complementary.

comment by Thane Ruthenis · 2025-01-14T00:40:39.488Z · LW(p) · GW(p)

Here's an argument for a capabilities plateau at the level of GPT-4 that I haven't seen discussed before. I'm interested in any holes anyone can spot in it.

Consider the following chain of logic:

- The pretraining scaling laws only say that, even for a fixed training method, increasing the model's size and the amount of data you train on increases the model's capabilities – as measured by loss, performance on benchmarks, and the intuitive sense of how generally smart a model is.

- Nothing says that increasing a model's parameter-count and the amount of compute spent on training it is the only way to increase its capabilities. If you have two training methods A and B, it's possible that the B-trained X-sized model matches the performance of the A-trained 10X-sized model.

- Empirical evidence: Sonnet 3.5 (at least the not-new one), Qwen-2.5-70B, and Llama 3-72B all have 70-ish billion parameters, i. e., less than GPT-3. Yet, their performance is at least on par with that of GPT-4 circa early 2023.

- Therefore, it is possible to "jump up" a tier of capabilities, by any reasonable metric, using a fixed model size but improving the training methods.

- The latest set of GPT-4-sized models (Opus 3.5, Orion, Gemini 1.5 Pro?) are presumably trained using the current-best methods. That is: they should be expected to be at the effective capability level of a model that is 10X GPT-4's size yet trained using early-2023 methods. Call that level "GPT-5".

- Therefore, the jump from GPT-4 to GPT-5, holding the training method fixed at early 2023, is the same as the jump from the early GPT-4 to the current (non-reasoning) SotA, i. e., to Sonnet 3.5.1.

- (Nevermind that Sonnet 3.5.1 is likely GPT-3-sized too, it still beats the current-best GPT-4-sized models as well. I guess it straight up punches up two tiers?)

- The jump from GPT-3 to GPT-4 is dramatically bigger than the jump from early-2023 SotA to late-2024 SotA. I. e.: 4-to-5 is less than 3-to-4.

- Consider a model 10X bigger than GPT-4 but trained using the current-best training methods; an effective GPT-6. We should expect this jump to be at most as significant as the capability jump from early-2023 to late-2024. By point (7), it's likely even less significant than that.

- Empirical evidence: Despite the proliferation of all sorts of better training methods, including e. g. the suite of tricks that allowed DeepSeek to train a near-SotA-level model for pocket change, none of the known non-reasoning models have managed to escape the neighbourhood of GPT-4, and none of the known models (including the reasoning models) have escaped that neighbourhood in domains without easy verification.

- Intuitively, if we now know how to reach levels above early-2023!GPT-4 using 20x fewer resources, we should be able to shoot well past early-2023!GPT-4 using 1x as much resources – and some of the latest training runs have to have spent 10x the resources that went into original GPT-4.

- E. g., OpenAI's rumored Orion, which was presumably both trained using more compute than GPT-4, and via better methods than were employed for the original GPT-4, and which still reportedly underperformed.

- Similar for Opus 3.5: even if it didn't "fail" as such, the fact that they choose to keep it in-house instead of giving public access for e. g. $200/month suggests it's not that much better than Sonnet 3.5.1.

- Yet, we have still not left the rough capability neighbourhood of early-2023!GPT-4. (Certainly no jumps similar to the one from GPT-3 to GPT-4.)

- Intuitively, if we now know how to reach levels above early-2023!GPT-4 using 20x fewer resources, we should be able to shoot well past early-2023!GPT-4 using 1x as much resources – and some of the latest training runs have to have spent 10x the resources that went into original GPT-4.

- Therefore, all known avenues of capability progress aside from the o-series have plateaued. You can make the current SotA more efficient in all kinds of ways, but you can't advance the frontier.

Are there issues with this logic?

The main potential one is if all models that "punch up a tier" are directly trained on the outputs of the models of the higher tier. In this case, to have a GPT-5-capabiliies model of GPT-4's size, it had to have been trained on the outputs of a GPT-5-sized model, which do not exist yet. "The current-best training methods", then, do not yet scale to GPT-4-sized models, because they rely on access to a "dumbly trained" GPT-5-sized model. Therefore, although the current-best GPT-3-sized models can be considered at or above the level of early-2023 GPT-4, the current-best GPT-4-sized models cannot be considered to be at the level of GPT-5 if it were trained using early-2023 methods.

Note, however: this would then imply that all excitement about (currently known) algorithmic improvements is hot air. If the capability frontier cannot be pushed by improving the training methods in any (known) way – if training a GPT-4-sized model on well-structured data, and on reasoning traces from a reasoning model, et cetera, isn't enough to push it to GPT-5's level – then pretraining transformers is the only known game in town, as far as general-purpose capability-improvement goes. Synthetic data and other tricks can allow you to reach the frontier in all manners of more efficient ways, but not move past it.

Basically, it seems to me that one of those must be true:

- Capabilities can be advanced by improving training methods (by e. g. using synthetic data).

- ... in which case we should expect current models to be at the level of GPT-5 or above. And yet they are not much more impressive than GPT-4, which means further scaling will be a disappointment.

- Capabilities cannot be advanced by improving training methods.

- ... in which case scaling pretraining is still the only known method of general capability advancement.

- ... and if the Orion rumors are true, it seems that even a straightforward scale-up to GPT-4.5ish's level doesn't yield much (or: yields less than was expected).

- ... in which case scaling pretraining is still the only known method of general capability advancement.

(This still leaves one potential avenue of general capabilities progress: figuring out how to generalize o-series' trick to domains without easy automatic verification. But if the above reasoning has no major holes, that's currently the only known promising avenue.)

Replies from: Vladimir_Nesov, leogao, nostalgebraist, Seth Herd, green_leaf↑ comment by Vladimir_Nesov · 2025-01-14T03:37:17.868Z · LW(p) · GW(p)

Given some amount of compute, a compute optimal model tries to get the best perplexity out of it when training on a given dataset, by choosing model size, amount of data, and architecture. An algorithmic improvement in pretraining enables getting the same perplexity by training on data from the same dataset with less compute, achieving better compute efficiency (measured as its compute multiplier).

Many models aren't trained compute optimally, they are instead overtrained (the model is smaller, trained on more data). This looks impressive, since a smaller model is now much better, but this is not an improvement in compute efficiency, doesn't in any way indicate that it became possible to train a better compute optimal model with a given amount of compute. The data and post-training also recently got better, which creates the illusion of algorithmic progress in pretraining, but their effect is bounded (while RL doesn't take off), doesn't get better according to pretraining scaling laws once much more data becomes necessary. There is enough data until 2026-2028 [LW · GW], but not enough good data.

I don't think the cumulative compute multiplier since GPT-4 is that high, I'm guessing 3x, except perhaps for DeepSeek-V3 [LW(p) · GW(p)], which wasn't trained compute optimally and didn't use a lot of compute, and so it remains unknown what happens if its recipe is used compute optimally with more compute.

The amount of raw compute since original GPT-4 only increased maybe 5x [LW · GW], from 2e25 FLOPs to about 1e26 FLOPs, and it's unclear if there were any compute optimal models trained on notably more compute than original GPT-4. We know Llama-3-405B is compute optimal, but it's not MoE, so has lower compute efficiency and only used 4e25 FLOPs. Probably Claude 3 Opus is compute optimal, but unclear if it used a lot of compute compared to original GPT-4.

If there was a 6e25 FLOPs compute optimal model with a 3x compute multiplier over GPT-4, it's therefore only trained for 9x more effective compute than original GPT-4. The 100K H100s clusters have likely recently trained a new generation of base models for about 3e26 FLOPs, possibly a 45x improvement in effective compute over original GPT-4, but there's no word on whether any of them were compute optimal (except perhaps Claude 3.5 Opus), and it's unclear if there is an actual 3x compute multiplier over GPT-4 that made it all the way into pretraining of frontier models. Also, waiting for NVL72 GB200s (that are much better at inference for larger models), non-Google labs might want to delay deploying compute optimal models in the 1e26-5e26 FLOPs range until later in 2025.

Comparing GPT-3 to GPT-4 gives very little signal on how much of the improvement is from compute, and so how much should be expected beyond GPT-4 from more compute. While modern models are making good use of not being compute optimal by using fewer active parameters, GPT-3 was instead undertrained, being both larger and less performant than the hypothetical compute optimal alternative. It also wasn't a MoE model. And most of the bounded low hanging fruit that is not about pretraining efficiency hasn't been applied to it.

So the currently deployed models don't demonstrate the results of the experiment in training a much more compute efficient model on much more compute. And the previous leaps in capability are in large part explained by things that are not improvement in compute efficiency or increase in amount of compute. But in 2026-2027, 1 GW training systems will train models with 250x compute of original GPT-4 [LW · GW]. And probably in 2028-2029, 5 GW training systems will train models with 2500x raw compute of original GPT-4. With a compute multiplier of 5x-10x from algorithmic improvements plausible by that time, we get 10,000x-25,000x original GPT-4 in effective compute. This is enough of a leap that lack of significant improvement from only 9x of currently deployed models (or 20x-45x of non-deployed newer models, rumored to be underwhelming) is not a strong indication of what happens by 2028-2029 (from scaling of pretraining alone).

Replies from: Thane Ruthenis, Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-25T20:19:20.241Z · LW(p) · GW(p)

Coming back to this in the wake of DeepSeek r1...

I don't think the cumulative compute multiplier since GPT-4 is that high, I'm guessing 3x, except perhaps for DeepSeek-V3 [LW(p) · GW(p)], which wasn't trained compute optimally and didn't use a lot of compute, and so it remains unknown what happens if its recipe is used compute optimally with more compute.

How did DeepSeek accidentally happen to invest precisely the amount of compute into V3 and r1 that would get them into the capability region of GPT-4/o1, despite using training methods that clearly have wildly different returns on compute investment?

Like, GPT-4 was supposedly trained for $100 million, and V3 for $5.5 million. Yet, they're roughly at the same level. That should be very surprising. Investing a very different amount of money into V3's training should've resulted in it either massively underperforming GPT-4, or massively overperforming, not landing precisely in its neighbourhood!

Consider this graph. If we find some training method A, and discover that investing $100 million in it lands us at just above "dumb human", and then find some other method B with a very different ROI, and invest $5.5 million in it, the last thing we should expect is to again land near "dumb human".

Or consider this trivial toy model: You have two linear functions, f(x) = Ax and g(x) = Bx, where x is the compute invested, output is the intelligence of the model, and f and g are different training methods. You pick some x effectively at random (whatever amount of money you happened to have lying around), plug it into f, and get, say, 120. Then you pick a different random value of x, plug it into g, and get... 120 again. Despite the fact that the multipliers A and B are likely very different, and you used very different x-values as well. How come?

The explanations that come to mind are:

- It actually is just that much of a freaky coincidence.

- DeepSeek have a superintelligent GPT-6 equivalent that they trained for $10 million in their basement, and V3/r1 are just flexes that they specifically engineered to match GPT-4-ish level.

- DeepSeek directly trained on GPT-4 outputs, effectively just distilling GPT-4 into their model, hence the anchoring.

- DeepSeek kept investing and tinkering until getting to GPT-4ish level, and then stopped immediately after attaining it.

- GPT-4ish neighbourhood is where LLM pretraining plateaus, which is why this capability level acts as a sort of "attractor" into which all training runs, no matter how different, fall.

↑ comment by Vladimir_Nesov · 2025-01-26T10:15:07.768Z · LW(p) · GW(p)

How did DeepSeek accidentally happen to invest precisely the amount of compute into V3 and r1 that would get them into the capability region of GPT-4/o1

Selection effect. If DeepSeek-V2.5 was this good, we would be talking about it instead.

GPT-4 was supposedly trained for $100 million, and V3 for $5.5 million

Original GPT-4 is 2e25 FLOPs and compute optimal, V3 is about 5e24 FLOPs and overtrained (400 tokens/parameter, about 10x-20x), so a compute optimal model with the same architecture would only need about 3e24 FLOPs of raw compute[1]. Original GPT-4 was trained in 2022 on A100s and needed a lot of them, while in 2024 it could be trained on 8K H100s in BF16. DeepSeek-V3 is trained in FP8, doubling the FLOP/s, so the FLOPs of original GPT-4 could be produced in FP8 by mere 4K H100s. DeepSeek-V3 was trained on 2K H800s, whose performance is about that of 1.5K H100s. So the cost only has to differ by about 3x, not 20x, when comparing a compute optimal variant of DeepSeek-V3 with original GPT-4, using the same hardware and training with the same floating point precision.

The relevant comparison is with GPT-4o though, not original GPT-4. Since GPT-4o was trained in late 2023 or early 2024, there were 30K H100s clusters around, which makes 8e25 FLOPs of raw compute plausible (assuming it's in BF16). It might be overtrained, so make that 4e25 FLOPs for a compute optimal model with the same architecture. Thus when comparing architectures alone, GPT-4o probably uses about 15x more compute than DeepSeek-V3.

toy model ... f(x) = Ax and g(x) = Bx, where x is the compute invested

Returns on compute are logarithmic though, advantage of a $150 billion training system over a $150 million one is merely twice that of $150 billion over $5 billion or $5 billion over $150 million. Restrictions on access to compute can only be overcome with 30x compute multipliers, and at least DeepSeek-V3 is going to be reproduced using the big compute of US training systems shortly, so that advantage is already gone.

That is, raw utilized compute. I'm assuming the same compute utilization for all models. ↩︎

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-25T20:29:52.708Z · LW(p) · GW(p)

I buy that 1 and 4 is the case, combined with Deepseek probably being satisfied that GPT-4-level models were achieved.

Edit: I did not mean to imply that GPT-4ish neighbourhood is where LLM pretraining plateaus at all, @Thane Ruthenis [LW · GW].

↑ comment by Thane Ruthenis · 2025-01-14T21:50:17.388Z · LW(p) · GW(p)

Thanks!

GPT-3 was instead undertrained, being both larger and less performant than the hypothetical compute optimal alternative

You're more fluent in the scaling laws than me: is there an easy way to roughly estimate how much compute would've been needed to train a model as capable as GPT-3 if it were done Chinchilla-optimally + with MoEs? That is: what's the actual effective "scale" of GPT-3?

(Training GPT-3 reportedly took 3e23 FLOPS, and GPT-4 2e25 FLOPS. Naively, the scale-up factor is 67x. But if GPT-3's level is attainable using less compute, the effective scale-up is bigger. I'm wondering how much bigger.)

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2025-01-16T19:06:50.828Z · LW(p) · GW(p)

IsoFLOP curves for dependence of perplexity on log-data seem mostly symmetric (as in Figure 2 of Llama 3 report), so overtraining by 10x probably has about the same effect as undertraining by 10x. Starting with a compute optimal model, increasing its data 10x while decreasing its active parameters 3x (making it 30x overtrained, using 3x more compute) preserves perplexity (see Figure 1).

GPT-3 is a 3e23 FLOPs dense transformer with 175B parameters trained for 300B tokens (see Table D.1). If Chinchilla's compute optimal 20 tokens/parameter is approximately correct for GPT-3, it's 10x undertrained. Interpolating from the above 30x overtraining example, a compute optimal model needs about 1.5e23 FLOPs to get the same perplexity.

(The effect from undertraining of GPT-3 turns out to be quite small, reducing effective compute by only 2x. Probably wasn't worth mentioning compared to everything else about it that's different from GPT-4.)

↑ comment by leogao · 2025-01-14T04:49:31.094Z · LW(p) · GW(p)

in retrospect, we know from chinchilla that gpt3 allocated its compute too much to parameters as opposed to training tokens. so it's not surprising that models since then are smaller. model size is a less fundamental measure of model cost than pretraining compute. from here on i'm going to assume that whenever you say size you meant to say compute.

obviously it is possible to train better models using the same amount of compute. one way to see this is that it is definitely possible to train worse models with the same compute, and it is implausible that the current model production methodology is the optimal one.

it is unknown how much compute the latest models were trained with, and therefore what compute efficiency win they obtain over gpt4. it is unknown how much more effective compute gpt4 used than gpt3. we can't really make strong assumptions using public information about what kinds of compute efficiency improvements have been discovered by various labs at different points in time. therefore, we can't really make any strong conclusions about whether the current models are not that much better than gpt4 because of (a) a shortage of compute, (b) a shortage of compute efficiency improvements, or (c) a diminishing return of capability wrt effective compute.

↑ comment by nostalgebraist · 2025-01-14T19:18:56.327Z · LW(p) · GW(p)

One possible answer is that we are in what one might call an "unhobbling overhang."

Aschenbrenner uses the term "unhobbling" for changes that make existing model capabilities possible (or easier) for users to reliably access in practice.

His presentation emphasizes the role of unhobbling as yet another factor growing the stock of (practically accessible) capabilities over time. IIUC, he argues that better/bigger pretraining would produce such growth (to some extent) even without more work on unhobbling, but in fact we're also getting better at unhobbling over time, which leads to even more growth.

That is, Aschenbrenner treats pretraining improvements and unhobbling as economic substitutes: you can improve "practically accessible capabilities" to the same extent by doing more of either one even in the absence of the other, and if you do both at once that's even better.

However, one could also view the pair more like economic complements. Under this view, when you pretrain a model at "the next tier up," you also need to do novel unhobbling research to "bring out" the new capabilities unlocked at that tier. If you only scale up, while re-using the unhobbling tech of yesteryear, most of the new capabilities will be hidden/inaccessible, and this will look to you like diminishing downstream returns to pretraining investment, even though the model is really getting smarter under the hood.

This could be true if, for instance, the new capabilities are fundamentally different in some way that older unhobbling techniques were not planned to reckon with. Which seems plausible IMO: if all you have is GPT-2 (much less GPT-1, or char-rnn, or...), you're not going to invest a lot of effort into letting the model "use a computer" or combine modalities or do long-form reasoning or even be an HHH chatbot, because the model is kind of obviously too dumb to do these things usefully no matter how much help you give it.

(Relatedly, one could argue that fundamentally better capabilities tend to go hand in hand with tasks that operate on a longer horizon and involve richer interaction with the real world, and that this almost inevitably causes the appearance of "diminishing returns" in the interval between creating a model smart enough to perform some newly long/rich task and the point where the model has actually been tuned and scaffolded to do the task. If your new model is finally smart enough to "use a computer" via a screenshot/mouseclick interface, it's probably also great at short/narrow tasks like NLI or whatever, but the benchmarks for those tasks were already maxed out by the last generation so you're not going to see a measurable jump in anything until you build out "computer use" as a new feature.)

This puts a different spin on the two concurrent observations that (a) "frontier companies report 'diminishing returns' from pretraining" and (b) "frontier labs are investing in stuff like o1 and computer use."

Under the "unhobbling is a substitute" view, (b) likely reflects an attempt to find something new to "patch the hole" introduced by (a).

But under the "unhobbling is a complement" view, (a) is instead simply a reflection of the fact that (b) is currently a work in progress: unhobbling is the limiting bottleneck right now, not pretraining compute, and the frontier labs are intensively working on removing this bottleneck so that their latest pretrained models can really shine.

(On an anecdotal/vibes level, this also agrees with my own experience when interacting with frontier LLMs. When I can't get something done, these days I usually feel like the limiting factor is not the model's "intelligence" – at least not only that, and not that in an obvious or uncomplicated way – but rather that I am running up against the limitations of the HHH assistant paradigm; the model feels intuitively smarter in principle than "the character it's playing" is allowed to be in practice. See my comments here [LW(p) · GW(p)] and here.)

↑ comment by Seth Herd · 2025-01-14T10:26:07.697Z · LW(p) · GW(p)

That seems maybe right, in that I don't see holes in your logic on LLM progression to date, off the top of my head.

It also lines up with a speculation I've always had. In theory LLMs are predictors, but in practice, are they pretty much imitators? If you're imitating human language, you're capped at reproducing human verbal intelligence (other modalities are not reproducing human thought so not capped; but they don't contribute as much in practice without imitating human thought).

I've always suspected LLMs will plateau. Unfortunately I see plenty of routes to improving using runtime compute/CoT and continuous learning. Those are central to human intelligence.

LLMs already have slightly-greater-than-human system 1 verbal intelligence, leaving some gaps where humans rely on other systems (e.g., visual imagination for tasks like tracking how many cars I have or tic-tac-toe). As we reproduce the systems that give humans system 2 abilities by skillfully iterating system 1, as o1 has started to do, they'll be noticeably smarter than humans.

The difficulty of finding new routes forward in this scenario would produce a very slow takeoff. That might be a big benefit for alignment.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-14T22:02:22.571Z · LW(p) · GW(p)

It also lines up with a speculation I've always had. In theory LLMs are predictors, but in practice, are they pretty much imitators?

Yep, I think that's basically the case.

@nostalgebraist [LW · GW] makes an excellent point [LW(p) · GW(p)] that eliciting any latent superhuman capabilities which bigger models might have is an art of its own, and that "just train chatbots" doesn't exactly cut it, for this task. Maybe that's where some additional capabilities progress might still come from.

But the AI industry (both AGI labs and the menagerie of startups and open-source enthusiasts) has so far been either unwilling or unable to move past the chatbot paradigm.

(Also, I would tentatively guess that this type of progress is not existentially threatening. It'd yield us a bunch of nice software tools [LW · GW], not a lightcone-eating monstrosity.)

Replies from: Seth Herd↑ comment by Seth Herd · 2025-01-14T23:37:16.595Z · LW(p) · GW(p)

I agree that chatbot progress is probably not existentially threatening. But it's all too short a leap to making chatbots power general agents. The labs have claimed to be willing and enthusiastic about moving to an agent paradigm. And I'm afraid that a proliferation of even weakly superhuman or even roughly parahuman agents could be existentially threatening.

I spell out my logic for how short the leap might be from current chatbots to takeover-capable AGI agents in my argument for short timelines being quite possible [LW(p) · GW(p)]. I do think we've still got a good shot of aligning that type of LLM agent AGI since it's a nearly best-case scenario. RL even in o1 is really mostly used for making it accurately follow instructions, which is at least roughly the ideal alignment goal of Corrigibility as Singular Target [LW · GW]. Even if we lose faithful chain of thought and orgs don't take alignment that seriously, I think those advantages of not really being a maximizer and having corrigibility might win out.

That in combination with the slower takeoff make me tempted to believe its actually a good thing if we forge forward, even though I'm not at all confident that this will actually get us aligned AGI or good outcomes. I just don't see a better realistic path.

↑ comment by green_leaf · 2025-01-14T15:18:24.683Z · LW(p) · GW(p)

Here's an argument for a capabilities plateau at the level of GPT-4 that I haven't seen discussed before. I'm interested in any holes anyone can spot in it.

One obvious hole would be that capabilities did not, in fact, plateau at the level of GPT-4.

Replies from: Seth Herd, Thane Ruthenis↑ comment by Seth Herd · 2025-01-14T19:01:28.568Z · LW(p) · GW(p)

I thought the argument was that progress has slowed down immensely. The softer form of this argument is that LLMs won't plateau but progress will slow to such a crawl that other methods will surpass them. The arrival of o1 and o3 says this has already happened, at least in limited domains - and hybrid training methods and perhaps hybrid systems probably will proceed to surpass base LLMs in all domains.

↑ comment by Thane Ruthenis · 2025-01-14T21:51:32.321Z · LW(p) · GW(p)

There's been incremental improvement and various quality-of-life features like more pleasant chatbot personas, tool use, multimodality, gradually better math/programming performance that make the models useful for gradually bigger demographics, et cetera.

But it's all incremental, no jumps like 2-to-3 or 3-to-4.

Replies from: green_leaf↑ comment by green_leaf · 2025-01-21T00:53:06.544Z · LW(p) · GW(p)

I see, thanks. Just to make sure I'm understanding you correctly, are you excluding the reasoning models, or are you saying there was no jump from GPT-4 to o3? (At first I thought you were excluding them in this comment, until I noticed the "gradually better math/programming performance.")

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-21T00:57:22.681Z · LW(p) · GW(p)

I think GPT-4 to o3 represent non-incremental narrow progress, but only, at best, incremental general progress.

(It's possible that o3 does "unlock" transfer learning, or that o4 will do that, etc., but we've seen no indication of that so far.)

comment by Thane Ruthenis · 2025-01-22T17:53:57.239Z · LW(p) · GW(p)

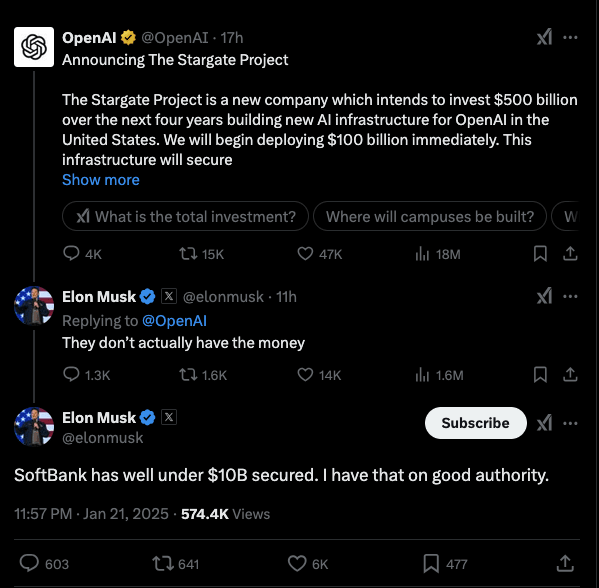

So, Project Stargate. Is it real, or is it another "Sam Altman wants $7 trillion"? Some points:

- The USG invested nothing in it. Some news outlets are being misleading about this. Trump just stood next to them and looked pretty, maybe indicated he'd cut some red tape. It is not an "AI Manhattan Project", at least, as of now.

- Elon Musk claims that they don't have the money and that SoftBank (stated to have "financial responsibility" in the announcement) has less than $10 billion secured. If true, while this doesn't mean they can't secure an order of magnitude more by tomorrow, this does directly clash with "deploying $100 billion immediately" statement.

- But Sam Altman counters that Musk's statement is "wrong", as Musk "surely knows".

- I... don't know which claim I distrust more. Hm, I'd say Altman feeling the need to "correct the narrative" here, instead of just ignoring Musk, seems like a sign of weakness? He doesn't seem like the type to naturally get into petty squabbles like this, otherwise.

- (And why, yes, this is how an interaction between two Serious People building world-changing existentially dangerous megaprojects looks like. Apparently.)

- Some people try to counter Musk's claim by citing Satya Nadella's statement that Satya's "good for his $80 billion". But that's not referring to Stargate, that's about Azure. Microsoft is not listed as investing in Stargate at all, it's only a "technology partner".

- Here's a brief analysis from the CIO of some investment firm. He thinks it's plausible that the stated "initial group of investors" (SoftBank, OpenAI, Oracle, MGX) may invest fifty billion dollars into it over the next four years; not five hundred billion.

- They don't seem to have the raw cash for even $100 billion – and if SoftBank secured the missing funding from some other set of entities, why aren't they listed as "initial equity funders" in the announcement?

Overall, I'm inclined to fall back on @Vladimir_Nesov [LW · GW]'s analysis here [LW · GW]. $30-50 billion this year seems plausible. But $500 billion is, for now, just Altman doing door-in-the-face as he had with his $7 trillion.

I haven't looked very deeply into it, though. Additions/corrections welcome!

Replies from: robert-cousineau↑ comment by Robert Cousineau (robert-cousineau) · 2025-01-22T18:45:30.646Z · LW(p) · GW(p)

Here is what I posted on "Quotes from the Stargate Press Conference [LW · GW]":

On Stargate as a whole:

This is a restatement with a somewhat different org structure of the prior OpenAI/Microsoft data center investment/partnership, announced early last year (admittedly for $100b).

Elon Musk states they do not have anywhere near the 500 billion pledged actually secured:

I do take this as somewhat reasonable, given the partners involved just barely have $125 billion available to invest like this on a short timeline.

Microsoft has around 78 billion cash on hand at a market cap of around 3.2 trillion.

Softbank has 32 billion dollars cash on hand, with a total market cap of 87 billion.

Oracle has around 12 billion cash on hand, with a market cap of around 500 billion.

OpenAI has raised a total of 18 billion, at a valuation of 160 billion.

Further, OpenAI and Microsoft seem to be distancing themselves somewhat - initially this was just an OpenAI/Microsoft project, and now it involves two others and Microsoft just put out a release saying "This new agreement also includes changes to the exclusivity on new capacity, moving to a model where Microsoft has a right of first refusal (ROFR)."

Overall, I think that the new Stargate numbers published may (call it 40%) be true, but I also think there is a decent chance this is new administration trump-esque propoganda/bluster (call it 45%), and little change from the prior expected path of datacenter investment (which I do believe is unintentional AINotKillEveryone-ism in the near future).

Edit: Satya Nadella was just asked about how funding looks for stargate, and said "Microsoft is good for investing 80b". This 80b number is the same number Microsoft has been saying repeatedly.

↑ comment by Thane Ruthenis · 2025-01-22T19:05:45.791Z · LW(p) · GW(p)

Overall, I think that the new Stargate numbers published may (call it 40%) be true, but I also think there is a decent chance this is new administration trump-esque propoganda/bluster (call it 45%),

I think it's definitely bluster, the question is how much of a done deal it is to turn this bluster into at least $100 billion.

I don't this this changes the prior expected path of datacenter investment at all. It's precisely how the expected path was going to look like, the only change is how relatively high-profile/flashy this is being. (Like, if they invest $30-100 billion into the next generation of pretrained models in 2025-2026, and that generation fails, they ain't seeing the remaining $400 billion no matter what they're promising now. Next generation makes or breaks the investment into the subsequent one, just as was expected before this.)

He does very explicitly say "I am going to spend 80 billion dollars building Azure". Which I think has nothing to do with Stargate.

(I think the overall vibe he gives off is "I don't care about this Stargate thing, I'm going to scale my data centers and that's that". I don't put much stock into this vibe, but it does fit with OpenAI/Microsoft being on the outs.)

comment by Thane Ruthenis · 2024-12-24T20:30:22.936Z · LW(p) · GW(p)

Here's something that confuses me about o1/o3. Why was the progress there so sluggish?

My current understanding is that they're just LLMs trained with RL to solve math/programming tasks correctly, hooked up to some theorem-verifier and/or an array of task-specific unit tests to provide ground-truth reward signals. There are no sophisticated architectural tweaks, not runtime-MCTS or A* search, nothing clever.

Why was this not trained back in, like, 2022 or at least early 2023; tested on GPT-3/3.5 and then default-packaged into GPT-4 alongside RLHF? If OpenAI was too busy, why was this not done by any competitors, at decent scale? (I'm sure there are tons of research papers trying it at smaller scales.)

The idea is obvious; doubly obvious if you've already thought of RLHF; triply obvious after "let's think step-by-step" went viral. In fact, I'm pretty sure I've seen "what if RL on CoTs?" discussed countless times in 2022-2023 (sometimes in horrified whispers regarding what the AGI labs might be getting up to).

The mangled hidden CoT and the associated greater inference-time cost is superfluous. DeepSeek r1/QwQ/Gemini Flash Thinking have perfectly legible CoTs which would be fine to present to customers directly; just let them pay on a per-token basis as normal.

Were there any clever tricks involved in the training? Gwern speculates about that here. But none of the follow-up reasoning models have a o1-style deranged CoT, so the more straightforward approaches probably Just Work.

Did nobody have the money to run the presumably compute-intensive RL-training stage back then? But DeepMind exists. Did nobody have the attention to spare, with OpenAI busy upscaling/commercializing and everyone else catching up? Again, DeepMind exists: my understanding is that they're fairly parallelized and they try tons of weird experiments simultaneously. And even if not DeepMind, why have none of the numerous LLM startups (the likes of Inflection, Perplexity) tried it?

Am I missing something obvious, or are industry ML researchers surprisingly... slow to do things?

(My guess is that the obvious approach doesn't in fact work and you need to make some weird unknown contrivances to make it work, but I don't know the specifics.)

Replies from: brambleboy, leogao↑ comment by brambleboy · 2024-12-24T22:49:00.509Z · LW(p) · GW(p)

While I don't have specifics either, my impression of ML research is that it's a lot of work to get a novel idea working, even if the idea is simple. If you're trying to implement your own idea, you'll be banging your head against the wall for weeks or months wondering why your loss is worse than the baseline. If you try to replicate a promising-sounding paper, you'll bang your head against the wall as your loss is worse than the baseline. It's hard to tell if you made a subtle error in your implementation or if the idea simply doesn't work for reasons you don't understand because ML has little in the way of theoretical backing. Even when it works it won't be optimized, so you need engineers to improve the performance and make it stable when training at scale. If you want to ship a working product quickly then it's best to choose what's tried and true.

comment by Thane Ruthenis · 2025-02-10T20:06:25.697Z · LW(p) · GW(p)

Some more evidence that whatever the AI progress on benchmarks is measuring, it's likely not measuring what you think it's measuring [LW · GW]:

AIME I 2025: A Cautionary Tale About Math Benchmarks and Data Contamination

AIME 2025 part I was conducted yesterday, and the scores of some language models are available here:

https://matharena.ai thanks to @mbalunovic, @ni_jovanovic et al.I have to say I was impressed, as I predicted the smaller distilled models would crash and burn, but they actually scored at a reasonable 25-50%.

That was surprising to me! Since these are new problems, not seen during training, right? I expected smaller models to barely score above 0%. It's really hard to believe that a 1.5B model can solve pre-math olympiad problems when it can't multiply 3-digit numbers. I was wrong, I guess.

I then used openai's Deep Research to see if similar problems to those in AIME 2025 exist on the internet. And guess what? An identical problem to Q1 of AIME 2025 exists on Quora:

https://quora.com/In-what-bases-b-does-b-7-divide-into-9b-7-without-any-remainder

I thought maybe it was just coincidence, and used Deep Research again on Problem 3. And guess what? A very similar question was on math.stackexchange:

https://math.stackexchange.com/questions/3548821/

Still skeptical, I used Deep Research on Problem 5, and a near identical problem appears again on math.stackexchange:

I haven't checked beyond that because the freaking p-value is too low already. Problems near identical to the test set can be found online.

So, what--if anything--does this imply for Math benchmarks? And what does it imply for all the sudden hill climbing due to RL?

I'm not certain, and there is a reasonable argument that even if something in the train-set contains near-identical but not exact copies of test data, it's still generalization. I am sympathetic to that. But, I also wouldn't rule out that GRPO is amazing at sharpening memories along with math skills.

At the very least, the above show that data decontamination is hard.

Never ever underestimate the amount of stuff you can find online. Practically everything exists online.

I expected that [LW(p) · GW(p)]:

I think one of the other problems with benchmarks is that they necessarily select for formulaic/uninteresting problems that we fundamentally know how to solve. If a mathematician figured out something genuinely novel and important, it wouldn't go into a benchmark (even if it were initially intended for a benchmark), it'd go into a math research paper. Same for programmers figuring out some usefully novel architecture/algorithmic improvement. Graduate students don't have a bird's-eye-view on the entirety of human knowledge, so they have to actually do the work, but the LLM just modifies the near-perfect-fit answer from an obscure publication/math.stackexchange thread or something.

I expect the same is the case with programming benchmarks, science-quiz benchmarks, et cetera.

Now, this doesn't necessarily mean that the AI progress has been largely illusory and that we're way further from AGI than the AI hype men would have you believe (although I am very tempted to make this very pleasant claim, and I do place plenty of probability mass on it).

But if you're scoring AIs by the problems they succeed at, rather than the problems they fail at, you're likely massively overestimating their actual problem-solving capabilities.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2025-02-11T14:30:10.216Z · LW(p) · GW(p)

To be fair, non-reasoning models do much worse on these questions, even when it's likely that the same training data has already been given to GPT-4.

Now, I could believe that RL is more or less working as an elicitation method, which plausibly explains the results, though still it's interesting to see why they get much better scores, even with very similar training data.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-02-11T16:10:55.955Z · LW(p) · GW(p)

I do think RL works "as intended" to some extent, teaching models some actual reasoning skills, much like SSL works "as intended" to some extent, chiseling-in some generalizable knowledge. The question is to what extent it's one or the other.

comment by Thane Ruthenis · 2024-09-13T20:52:24.130Z · LW(p) · GW(p)

On the topic of o1's recent release: wasn't Claude Sonnet 3.5 (the subscription version at least, maybe not the API version) already using hidden CoT? That's the impression I got from it, at least.

The responses don't seem to be produced in constant time. It sometimes literally displays a "thinking deeply" message which accompanies a unusually delayed response. Other times, the following pattern would play out:

- I pose it some analysis problem, with a yes/no answer.

- It instantly produces a generic response like "let's evaluate your arguments".

- There's a 1-2 second delay.

- Then it continues, producing a response that starts with "yes" or "no", then outlines the reasoning justifying that yes/no.

That last point is particularly suspicious. As we all know, the power of "let's think step by step" is that LLMs don't commit to their knee-jerk instinctive responses, instead properly thinking through the problem using additional inference compute. Claude Sonnet 3.5 is the previous out-of-the-box SoTA model, competently designed and fine-tuned. So it'd be strange if it were trained to sabotage its own CoTs by "writing down the bottom line first" like this, instead of being taught not to commit to a yes/no before doing the reasoning.

On the other hand, from a user-experience perspective, the LLM immediately giving a yes/no answer followed by the reasoning is certainly more convenient.

From that, plus the minor-but-notable delay, I'd been assuming that it's using some sort of hidden CoT/scratchpad, then summarizes its thoughts from it.

I haven't seen people mention that, though. Is that not the case?

(I suppose it's possible that these delays are on the server side, my requests getting queued up...)

(I'd also maybe noticed a capability gap between the subscription and the API versions of Sonnet 3.5, though I didn't really investigate it and it may be due to the prompt.)

Replies from: habryka4, quetzal_rainbow↑ comment by habryka (habryka4) · 2024-09-13T20:59:22.446Z · LW(p) · GW(p)

My model was that Claude Sonnet has tool access and sometimes does some tool-usage behind the scenes (which later gets revealed to the user), but that it wasn't having a whole CoT behind the scenes, but I might be wrong.

↑ comment by quetzal_rainbow · 2024-09-13T23:42:48.972Z · LW(p) · GW(p)

I think you heard about this thread (I didn't try to replicate it myself).

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2024-09-14T00:13:16.778Z · LW(p) · GW(p)

Thanks, that seems relevant! Relatedly, the system prompt indeed explicitly instructs it to use "<antThinking>" tags when creating artefacts. It'd make sense if it's also using these tags to hide parts of its CoT.

comment by Thane Ruthenis · 2025-01-28T21:34:31.279Z · LW(p) · GW(p)

Here's a potential interpretation of the market's apparent strange reaction to DeepSeek-R1 (shorting Nvidia).

I don't fully endorse this explanation, and the shorting may or may not have actually been due to Trump's tariffs + insider trading, rather than DeepSeek-R1. But I see a world in which reacting this way to R1 arguably makes sense, and I don't think it's an altogether implausible world.

If I recall correctly, the amount of money globally spent on inference dwarfs the amount of money spent on training. Most economic entities are not AGI labs training new models, after all. So the impact of DeepSeek-R1 on the pretraining scaling laws is irrelevant: sure, it did not show that you don't need bigger data centers to get better base models, but that's not where most of the money was anyway.

And my understanding is that, on the inference-time scaling paradigm, there isn't yet any proven method of transforming arbitrary quantities of compute into better performance:

- Reasoning models are bottlenecked on the length of CoTs that they've been trained to productively make use of. They can't fully utilize even their context windows; the RL pipelines just aren't up to that task yet. And if that bottleneck were resolved, the context-window bottleneck would be next: my understanding is that infinite context/"long-term" memories haven't been properly solved either, and it's unknown how they'd interact with the RL stage (probably they'd interact okay, but maybe not).

- o3 did manage to boost its ARC-AGI and (maybe?) FrontierMath performance by... generating a thousand guesses and then picking the most common one...? But who knows how that really worked, and how practically useful it is. (See e. g. this, although that paper examines a somewhat different regime.)

- Agents, from Devin to Operator to random open-source projects, are still pretty terrible. You can't set up an ecosystem of agents in a big data center and let them rip, such that the ecosystem's power scales boundlessly with the data center's size. For all but the most formulaic tasks, you still need a competent human closely babysitting everything they do, which means you're still mostly bottlenecked on competent human attention.

Suppose that you don't expect the situation to improve: that the inference-time scaling paradigm would hit a ceiling pretty soon, or that it'd converge to distilling search into forward passes [LW(p) · GW(p)] (such that the end users end up using very little compute on inference, like today), and that agents just aren't going to work out the way the AGI labs promise.

In such a world, a given task can either be completed automatically by an AI for some fixed quantity of compute X, or it cannot be completed by an AI at all. Pouring ten times more compute on it does nothing.

In such a world, if it were shown that the compute needs of a task can be met with ten times less compute than previously expected, this would decrease the expected demand for compute.

The fact that capable models can be run locally might increase the number of people willing to use them (e. g., those very concerned about data privacy), as might the ability to automatically complete 10x as many trivial tasks. But it's not obvious that this demand spike will be bigger than the simultaneous demand drop.

And I, at least, when researching ways to set up DeepSeek-R1 locally, found myself more drawn to the "wire a bunch of Macs together" option, compared to "wire a bunch of GPUs together" (due to the compactness). If many people are like this, it makes sense why Nvidia is down while Apple is (slightly) up. (Moreover, it's apparently possible to run the full 671b-parameter version locally, and at a decent speed, using a pure RAM+CPU setup; indeed, it appears cheaper than mucking about with GPUs/Macs, just $6,000.)

This world doesn't seem outright implausible to me. I'm bearish on agents and somewhat skeptical of inference-time scaling. And if inference-time scaling does deliver on its promises, it'll likely go the way of search-and-distill.

On balance, I don't actually expect the market to have any idea what's going on, so I don't know that its reasoning is this specific flavor of "well-informed but skeptical". And again, it's possible the drop was due to Trump, nothing to do with DeepSeek at all.

But as I'd said, this reaction to DeepSeek-R1 does not seem necessarily irrational/incoherent to me.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2025-01-29T02:59:18.447Z · LW(p) · GW(p)

The bet that "makes sense" is that quality of Claude 3.6 Sonnet, GPT-4o and DeepSeek-V3 is the best that we're going to get in the next 2-3 years, and DeepSeek-V3 gets it much cheaper (less active parameters, smaller margins from open weights), also "suggesting" that quality is compute-insensitive in a large range, so there is no benefit from more compute per token.

But if quality instead improves soon (including by training DeepSeek-V3 architecture on GPT-4o compute), and that improvement either makes it necessary to use more compute per token, or motivates using inference for more tokens even with models that have the same active parameter count (as in Jevons paradox), that argument doesn't work. Also, the ceiling of quality at the possible scaling slowdown point depends on efficiency of training (compute multiplier) applied to the largest training system that the AI economics will support (maybe 5-15 GW without almost-AGI), and improved efficiency of DeepSeek-V3 raises that ceiling.

comment by Thane Ruthenis · 2025-03-30T01:39:18.399Z · LW(p) · GW(p)

It's been more than three months since o3 and still no o4, despite OpenAI researchers' promises.

Deep Learning has officially hit a wall. Schedule the funeral.

[/taunting_god]

Replies from: Vladimir_Nesov, teradimich↑ comment by Vladimir_Nesov · 2025-03-30T10:27:59.890Z · LW(p) · GW(p)

If o3 is based on GPT-4o, there is a reasoning model based on GPT-4.5 that's better. If o3 is based on GPT-4.5 (and so the reason it's still not out is that they are waiting for Blackwells to inference it at a reasonable speed and cost), then it was a hasty job just after the base model for GPT-4.5 was pretrained, and so by now they have something much better. Either way, there is some sort of "o4", but it's probably a bad look to announce it before releasing the already-announced o3.

↑ comment by teradimich · 2025-03-30T11:30:37.444Z · LW(p) · GW(p)

comment by Thane Ruthenis · 2025-02-02T22:12:23.907Z · LW(p) · GW(p)

Some thoughts on protecting against LLM steganography.

tl;dr: I suspect the Paraphraser idea [LW · GW] is near-completely ineffectual as you scale the capabilities, both for scheming and for general CoT interpretability.

In the reasoning-as-communication framework [LW(p) · GW(p)], the amount of information a fixed-length message/CoT can transfer is upper-bounded by information-theoretic constraints. That is, if we have a ten-bit message, it cannot communicate more than ten bits of information: it cannot always uniquely specify one internal state of an AI model from more than internal states.

However, the amount of information is not lower-bounded. Depending on the decoder, a ten-bit message can communicate between zero and ten bits of information. (A decoder that's just a constant function recovers zero bits, a decoder that only pays attention to the first bit recovers only one bit, et cetera.)

The idea of steganography is that, if we pick a CoT string, and run both an AI model's internal decoders and a human's language-comprehension decoders on it, the AI model would extract more bits of information from it. Paraphrasing, humans' decoders are "coarser": they have broader equivalence classes of messages that map to the same internal representation, compared to AI models.

For example, "a dog crossed the street" and "a dog traveled across the street" might map to the same internal representation for a human, but to different ones for an AI model. Similarly, things like "quantum butterflies juggled metaphysical spaghetti" and "ambitious lampshade collected vintage moonbeams" can map to the same "nonsense" internal representation in humans, but have some useful meaning for AI models. The bits a human fails to make use of correspond to "steganography" or "superhuman latent knowledge".

Proposals such as Shoggoth + Paraphraser + Face [LW · GW] aim to destroy any information in CoTs that cannot be recovered by humans, by mapping each CoT string to the equivalence class of strings that all map to the same internal representation in humans.

However, there's an issue: what if the empty string already communicates plenty of steganographically encoded information that humans aren't privy to? That is: what if some knowledge is hard-coded into an AI model, such that it's elicited on any activation, and leads to it converging towards reaching the same hidden conclusions even after we destroy its ability to sneak-in additional query-relevant information?

For example:

- If the AI model implicitly learned the Theory of Everything, that knowledge is going to be activated on any prompt – and so, no amount of paraphrasing is going to force it to talk about the Theory of Everything in humanese. Communicating the ToE from one instance of the model to its future self takes up zero bits.

- If the AI model has internalized some hidden goals, the heuristics for pursuing those goals are likewise going to be activated on any prompt, and it would engage in massively productive hidden scheming on any prompt. Importantly, the information-bottleneck constraints imposed by the Paraphraser wouldn't even be hitting the hidden-plotting compartment specifically. They'd just uniformly lower the model's computational power.

- E. g., in the Shoggoth + Paraphraser + Face paradigm, that'd just mean the model learns to think in longer CoTs (because it needs longer messages to communicate the same amount of information), not to do fewer "sneaky" computations.[1]