AI Safety is Dropping the Ball on Clown Attacks

post by trevor (TrevorWiesinger) · 2023-10-22T20:09:31.810Z · LW · GW · 82 comments


Epistemic status: High confidence (~>70%) that clown attacks are prevalent, and deliberately weaponized by governments and/or intelligence agencies in particular. Very high confidence (~>90%) that the human brain is highly vulnerable to clown attacks, and that a lack of awareness of clown attacks is a security risk, like using the word “password” as your password, except with control of your own mind at stake rather than control over your computer’s operating system and/or your files. This has been steelmanned; the 10 years ago/10 years from now error bars seem appropriately wide.

These concepts are complicated, and I have done my best to make them as easy as possible for most people in AI safety to understand, even people without a quant background (e.g. AI governance).

Clown attacks

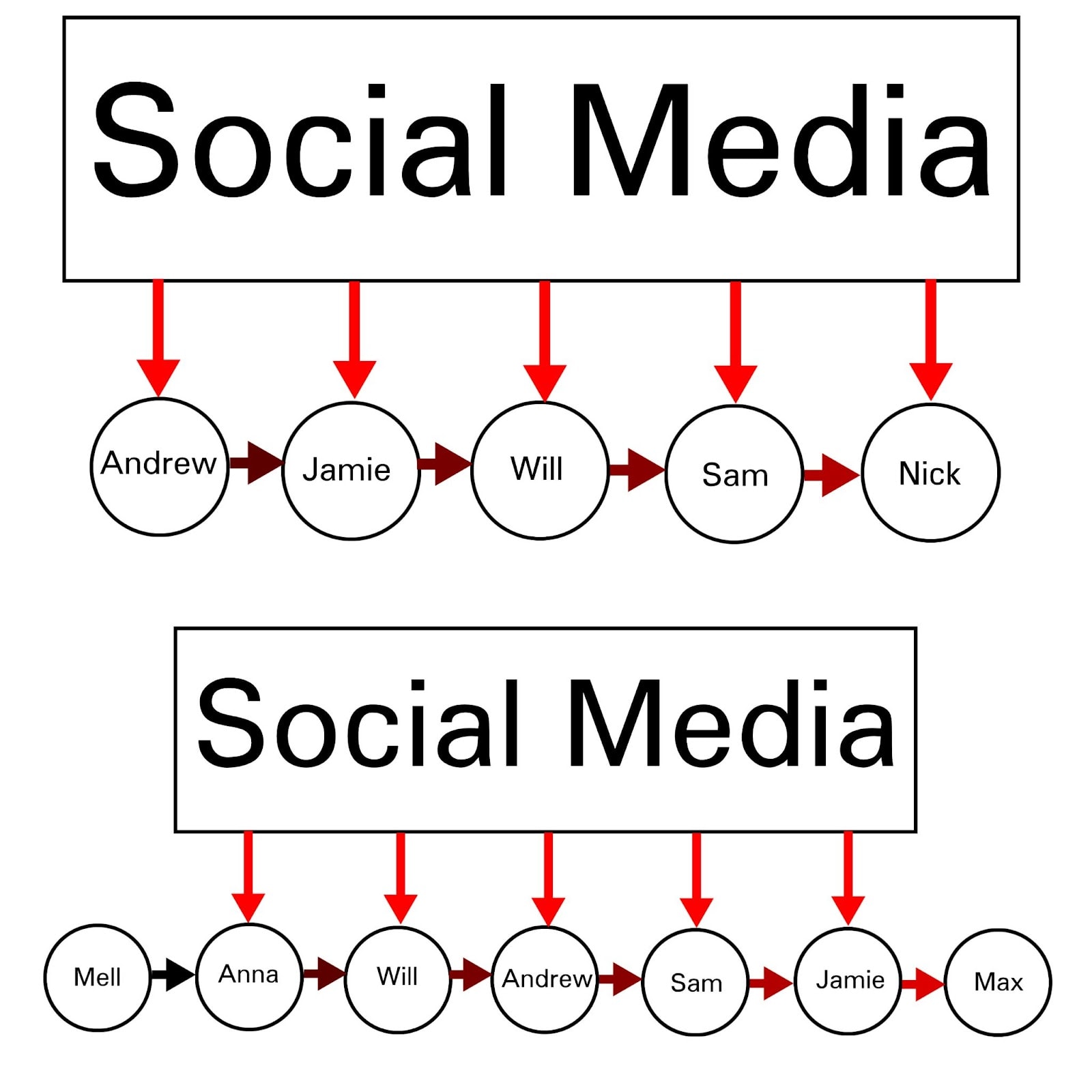

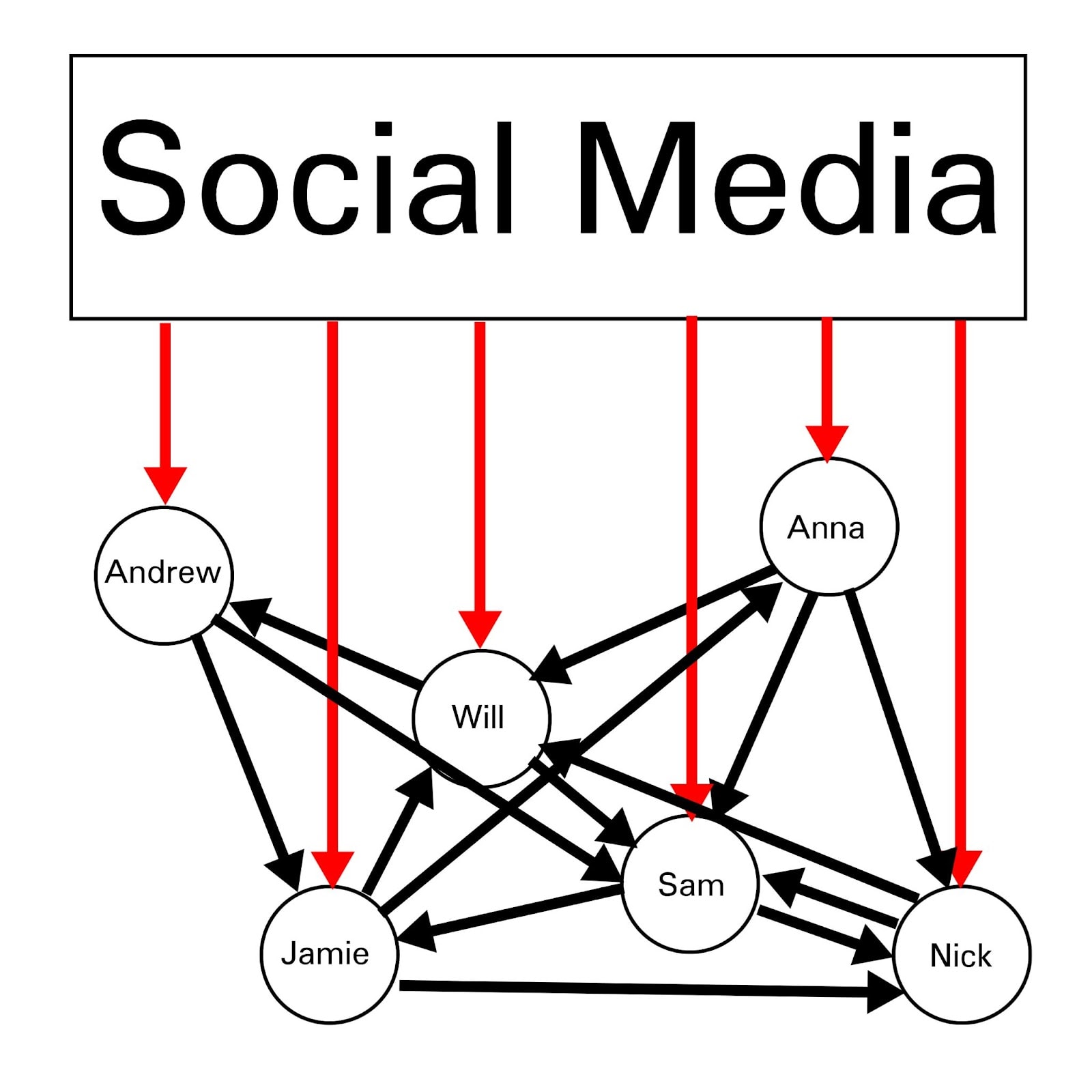

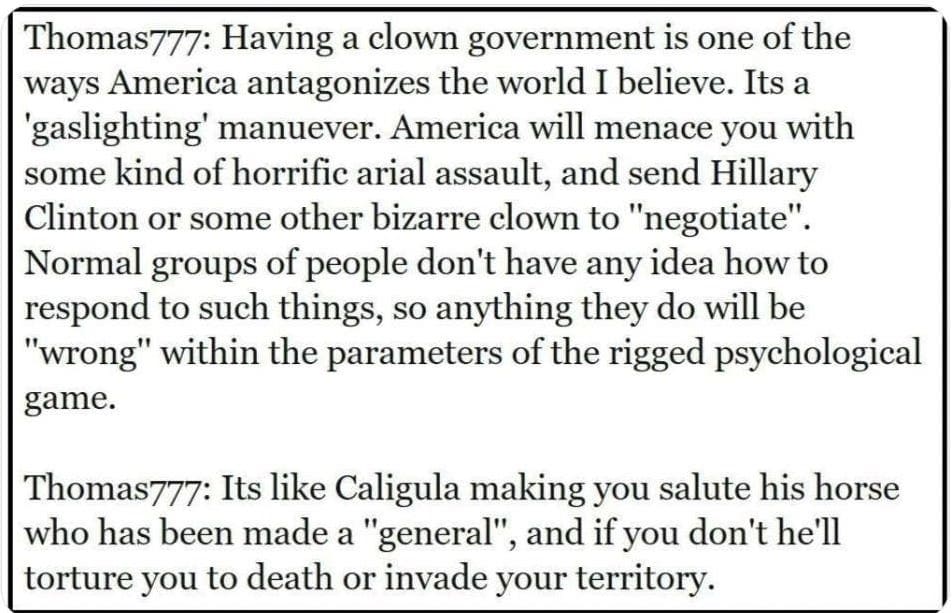

The core dynamic of clown attacks is that perception of social status affects what thoughts the human brain is and isn’t willing to think. This can be used by intelligence agencies and governments to completely deny access to specific lines of thought. Generally, there are many ways to socially engineer someone’s world model by taking a target concept and having the wrong people say it at specific times and in specific ways. Clown attacks include having right-wing clowns be the main people who are seen talking about the Snowden revelations, or degrowthers be the main people talking about the possibility that technological advancement/human capabilities will end its net-positive trend. These are only specific examples of cost-efficient ways to use a specific circumstance (clowns) to change the way that someone feels about a targeted concept.

With clown attacks, the exploit/zero day is the human tendency to associate specific lines of thought with low social status or low-status people, which will consistently inhibit the human brain from pursuing that targeted line of thought.

Although clown attacks may seem mundane on their own, they are a case study proving that powerful human thought steering technologies have probably already been invented, deployed, and tested at scale by AI companies, and are reasonably likely to end up being weaponized against the entire AI safety community at some point in the next 10 years.

AI safety is dropping the ball on clown attacks, at minimum

AI safety is basically a community of nerds who each chanced upon the engineering problem that the fate of this side of the universe revolves around. Many (~300) [LW · GW] have decided to focus exclusively on that engineering problem, which seems like a very reasonable thing to do. However, in order for that to be a worthwhile course of action, the AI safety community must continue to exist without being destroyed or coopted [LW · GW] by external adversaries or forces. Continued existence, without terminal failure, is an assumption that is currently unquestioned by virtually everyone in the AI safety community. We largely assume that everything will be ok, with AGI being the only turning point. This is a dangerous world model to have.

The history of religion and cryopreservation has informed us that there is an ambient phenomenon of bad consensus around terrible, untrue, and viciously self-destructive beliefs and practices. This phenomenon is at the core of the AI safety situation. So if billions of people are getting something else really wrong too, in addition to ignoring AI safety, then that does not water down the overriding significance and priority of AI safety.

A large proportion of people, to this day, still think that smarter-than-human AI is merely science fiction; this is the kind of thing that happens when >99% of the money spent paying people to think about the future is spent on science fiction writers instead of researchers, which for AI, was true for all of human civilization for all of history until around a decade or two ago.

My argument here is that powerful human manipulation systems are already very easy to build, with 10-year-old technology, and also very easy for powerful people to deny access to people who are less powerful. However, the situation of the moment is that general purpose cognitive hacks like clown attacks can even deny awareness of this technology to targeted people with a surprisingly high success rate, not just access to the technology.

People like Gary Marcus might try to hijack valuable concepts like “p(doom)” for their own pet issue, such as job automation, but “slow takeoff” on the other hand is something that could transform the world in a wide variety of ways that has practical relevance to the continuity and survival of AI alignment efforts.

It’s important to reiterate that a large proportion of people, to this day, still think that smarter-than-human AI is merely science fiction; this is the kind of thing that happens when >99% of the money spent paying people to think about the future is spent on science fiction writers instead of researchers, which for AI, was true for all of human civilization for all of history until around a decade or two ago. In reality, smarter-than-human AI is the finish line for humanity, and being oriented towards that finish line is one of the best ways to do important or valuable things with your time, instead of unintentionally doing unimportant or less valuable things. It is also a good way to orient yourself towards reality (although orienting yourself towards orienting yourself towards reality competes strongly for that #1 slot, as that also tends to result in ending up oriented towards AI as the finish line for humanity, and thus you are oriented towards your own existence by extension as you are a subset of humanity). No amount of mind control technology can displace smarter-than-human AI as the finish line for humanity, but it can be an incredibly helpful gear in our world models for what to expect from the AI industry and from global affairs relevant to the AI race (e.g. US-China relations). Ultimately, however, influence technology is a near-term problem that has a lot of potential to distract a lot of people from the ultimate and inescapable problem of AI alignment; the only reason I’m writing about it here is because I think that the AI safety community will be much better off making stronger predictions and having stronger models of the AI industry and the AI race, as well as the slow takeoff environment that AI alignment researchers might be stuck living in.

The AI safety leaders currently see slow takeoff as humans gaining capabilities, and this is true, and also already happening, depending on your definition. But they are missing the mathematically provable fact that information processing capabilities of AI are heavily stacked towards a novel paradigm of powerful psychology research, which by default is dramatically widening the attack surface of the human mind.

Cognitive warfare is not a new X-risk or S-risk. It is a critical factor that we need to understand in order to understand the forces driving AI geopolitics and AI race dynamics. Cognitive warfare is not a competitor to AI safety, it will not latch on and insert itself, and it must not be allowed to take away attention from AI safety.

With AI and massive amounts of human behavioral data, humans are now gaining profound capabilities to manipulate and steer individuals and systems, and the AI and human behavioral data stockpiles have been accumulating for over 10 years.

Here, I’m making the case that the conditions are already ripe for intensely powerful human thought steering and behavior manipulation technology, and have been for perhaps 10 years or more. Thus, the burden of proof should be on the claim that our minds are safe and that the attack surface is small, not on my claim that our minds are at risk and the attack surface is large. I don’t like to invoke this logic here. This logic should be prioritized for AI alignment, the final engineering problem that this side of the universe hinges on, and an engineering problem that could plausibly be as difficult for humans as rocket science is for chimpanzees. But the logic behind security is still fundamental and I think that I have made the case strongly that the AI safety community requires some threshold of resilience to hacking and that this threshold is probably very far from being met. I also think that most of the required solutions are quick and easy fixes, even if it doesn’t seem that way at first.

The existence of clown attacks is proof that there is at least one powerful cognitive attack, detectable and exploitable by intelligence agencies and large social media companies, which exploits a zero day in the human brain, which also works on AI safety-adjacent people until explicitly discovered and patched.

There are many other ways, especially when you combine human ingenuity with massive amounts of user data and multi-armed bandit algorithms. AI is merely a superior form of multi-armed bandit algorithms, and LLMs are just another increment forward, as they can actually read and understand the content of posts, not just measure changes in behavior from different kinds of people caused by specific combinations of posts.

Social media platforms are overwhelmingly capable of doing this; many people even look to social media as a bellwether to see what’s popular, even though news feed algorithms have massive and precise control over what kinds of people are shown what things, how frequently specific things are shown at all, and which combinations steer people’s thinking and preferences in measurable directions. Social media can even accurately reflect what’s actually popular 98% of the time in order to gain that trust, reserving the remaining 2% to actively determine what becomes popular and what doesn’t. As compute and algorithmic capabilities advance and trust is consolidated, the share reserved for active steering can grow beyond that 2%.

You can’t just brush off clown attacks, because you will worry that, if you seriously entertain that line of thought, other people will assume that you’re on the side of the clowns and you will lose status in their eyes. Sufficiently powerful clown attacks can make this a self-fulfilling prophecy by convincing everyone that a specific line of reasoning is low-status, thus making it low status and creating serious real-life consequences for pursuing a specific line of cognition. The social media news feed or other algorithm-controlled environment (e.g. TikTok, Reels) gives the appearance of being a randomly-generated environment, when in reality the platform (and people with backdoor access to the platform’s servers) are highly capable of altering algorithms in order to fabricate an environment making some sentiment appear orders of magnitude more prevalent than it actually is, among a specific demographic of people such as scientists or clowns. Or, even worse, running multi-armed bandit algorithms or gradient descent to find environments or combinations of posts that steer people’s thinking in measurable directions. Clown attacks are merely the most powerful technique that a multi-armed bandit algorithm could arrive at that I’m currently aware of; there are probably plenty of other exploits based off of social status alone, a very serious zero day in the human brain.

This strongly indicates the existence of other zero days and powerful, hard-to-spot exploits, including (but not limited to) exploiting the human instinct to pursue social status, and avoid specific lines of thought based on anticipations of social status gain and loss. These zero days and exploits are either discoverable or already discovered by powerful people who must surreptitiously deploy and refine these exploits at scale, as this is mathematically required in order for them to work at all. This is mathematically provable: covert and large-scale deployments are necessary to get the massive sample sizes of human behavior at the scale necessary to vastly outperform academic psychology, with perhaps 1/10th of the competent workforce or less.

If there was any such thing as a magic trick that could hack the minds of every person in a room at once with none of them noticing, like Eliezer Yudkowsky’s post What is the strangest thing an AI could tell you [LW · GW], it would be to totally deny people the ability to think a specific true thought or approach an obviously valuable line of inquiry, due to intense fear of losing social status if they are associated with that line of inquiry. Social status seems to be something that the human brain evolved to prioritize in the ancestral environment, and this trait alone makes our cognition hackable.

Conspiracy theorists are clowns. The JFK assassination may have been a critical factor bridging two separate events that were pivotal in US history, the Cuban Missile Crisis (1962) and the Vietnam War (1964-1975). Understanding the Cold War and the US Government’s history is critical for forming accurate models of the US government in its current form (e.g. knowing that the CIA sometimes hijacks entire regimes and orchestrates coups against the ones they can’t), including where AI safety fits in. The same goes for 9/11. And yet, these pivotal points in history and world modeling attract people and epistemics more like those surrounding Elvis’s death, than the Snowden revelations (hopefully, the Snowden revelations don’t end up getting completely sucked into that ugly pit, although there are already plenty of clowns on social media actively trying).

Understanding the current level of risk of cognitive warfare attacks doesn’t just require the security mindset, it requires the security mindset plus an adequate perspective. It requires a long list of examples of specific exploits, so that you can get an idea of what else might be out there, what things turned out to be easy to discover with current systems, powered by behavioral data from millions of people, and the continuous access required to perform AI-assisted experimentation and psychological research in real time. I hope that clown attacks were a helpful example towards this end.

Plausible deniability is something you should expect in the 2020s, a world where the number of lawyers per capita is higher than ever before. Similarly, office politics are also highly prevalent among elites, so the bar is very low for a person to realize that it is a winning strategy to turn people against each other by starting rumors and creating scapegoats, and much higher for people to work out that something came from you. Plausible deniability and false flag attacks did not begin with cyberattacks; they both became prevalent during the 20th century. This is another reason why clown attacks are so powerful; there is an overwhelming prevalence of ambient clowns in contemporary civilization, so it is incredibly difficult to distinguish a clown attack from noise. This plausible deniability further incentivizes clown attacks due to the incredibly low risk of detection; the expected cost of a clown attack basically comes down to server energy costs, since the net expected cost from being discovered is virtually zero.

Analysts were shocked by the swiftness with which critical information like the lab leak hypothesis and Covid censorship ended up relegated to the bizarre alternate reality of right-wing clowns, the same universe as pizza slave dungeons and first-trimester abortion being murder, even though the probability of a lab leak and the extent of information tampering on Covid were both obviously critical information for anyone trying to form an accurate world model from 2020-22. That obviousness was simply killed. Clown attacks can do things like that. The human mind hinges enough on social status for things like that to work. There is at least one zero day.

Deciding what people see as low-status or villains vs. high-status or heroes-like-you is generating a very powerful dynamic for shaping what people think, e.g. SBF as an atrocious villain that dominates most people’s understanding of EA and AI safety. Clown attacks are just the most powerful cognitive hack that I’m currently aware of, especially if screen refresh rate manipulation never ends up deployed.

A big element of the modern behavior manipulation paradigm is the ability to just try tons of things and see what works; not just brute forcing variations of known strategies to make them more effective, but to brute force novel manipulation strategies in the first place. This completely circumvents the scarcity and the research flaws that caused the replication crisis which still bottlenecks psychology research today. In fact, original psychological research in our civilization is no longer bottlenecked on the need for smart, insightful people who can do hypothesis generation so that the finite studies you can afford to fund each hopefully find something valuable. With the current social media paradigm alone, you can run studies, combinations of news feed posts for example, until you find something useful. Measurability is critical for this.
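To make "try tons of things and see what works" concrete, here is a minimal sketch of the simplest textbook multi-armed bandit, epsilon-greedy, run on simulated data. Everything in it is an assumption for illustration: the function name, the three "variants", and their hidden success rates are made up, and nothing here is claimed to be what any platform actually runs. The point is only that the loop needs no hypothesis about why one variant works better, just a measurable outcome per trial.

```python
import random


def epsilon_greedy(true_rates, rounds=10_000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit.

    true_rates: hidden per-variant success probabilities (hypothetical numbers).
    Returns (pulls, wins): how often each variant was shown and how often it "worked".
    """
    rng = random.Random(seed)
    n_arms = len(true_rates)
    pulls = [0] * n_arms
    wins = [0] * n_arms

    def observed_rate(i):
        # Observed success rate so far; untried variants count as 0.0.
        return wins[i] / pulls[i] if pulls[i] else 0.0

    for _ in range(rounds):
        if sum(pulls) == 0 or rng.random() < epsilon:
            # Explore: try a variant at random.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: show the variant that has measured best so far.
            arm = max(range(n_arms), key=observed_rate)
        pulls[arm] += 1
        # The only feedback the algorithm receives is a measurable yes/no outcome.
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
    return pulls, wins


if __name__ == "__main__":
    pulls, wins = epsilon_greedy([0.02, 0.05, 0.20])
    print("times shown per variant:", pulls)
```

The design choice worth noticing is that the algorithm never sees `true_rates` directly; it only sees outcomes, which is why measurability is the binding constraint rather than insight.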

By comparing people to other people and predicting traits and future behavior, multi-armed bandit algorithms can predict whether a specific manipulation strategy is worth the risk of undertaking at all in the first place; resulting in a high success rate and a low detection rate (as detection would likely yield a highly measurable response, particularly with substantial sensor exposure such as uncovered webcams, due to comparing people’s microexpressions to cases of failed or exposed manipulation strategies, or working webcam video data into foundation models). When you have sample sizes of billions of hours of human behavior data and sensor data, millisecond differences in reactions from different kinds of people (e.g. facial microexpressions, millisecond differences at scrolling past posts covering different concepts, heart rate changes after covering different concepts, eyetracking differences after eyes passing over specific concepts, touchscreen data, etc) transform from being imperceptible noise to becoming the foundation of webs of correlations. Like it or not, unless you use the arrow keys, the rate at which you scroll past each social media post (either with a touchscreen/pad or a mouse wheel) is a curve; scrolling alone is linear algebra, which fits cleanly into modern AI systems, and trillions of those curves are generated every day.
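The claim that millisecond differences stop being noise at large sample sizes is ordinary statistics: the standard error of a mean shrinks as the square root of the sample size. The sketch below works through that arithmetic. The specific numbers (a 300 ms spread in reaction times, a 5 ms difference between two groups) are hypothetical values chosen for illustration, not measurements, and the function names are my own.

```python
import math


def standard_error(sigma, n):
    """Standard error of a sample mean with per-observation spread sigma."""
    return sigma / math.sqrt(n)


def min_samples_per_group(delta, sigma, z=1.96):
    """Observations per group needed for a difference `delta` between two
    group means to reach `z` standard errors (equal group sizes assumed).

    The standard error of the difference is sigma * sqrt(2 / n),
    so we need n >= 2 * (z * sigma / delta) ** 2.
    """
    return math.ceil(2 * (z * sigma / delta) ** 2)


if __name__ == "__main__":
    # Hypothetical: reaction times vary by ~300 ms between observations.
    for n in (100, 10_000, 1_000_000):
        print(n, "observations -> standard error of", standard_error(300, n), "ms")
    # Hypothetical: a 5 ms difference between two kinds of people.
    print("samples per group needed:", min_samples_per_group(delta=5, sigma=300))
```

Under these assumed numbers, a 5 ms difference needs roughly 28,000 observations per group to stand out, which is far beyond a typical lab study and small next to the daily volume of scroll events the post describes.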

AI is not even needed to run clown attacks, let alone LLMs. Rather, AI is what’s needed in order to *invent* techniques like clown attacks. Automated information processing is all you need to find manipulation techniques that work on humans. That capability probably came online years ago. And it can’t be done unless you have human behavior data from millions of different people all using the same controlled environment, data that they would not give if they knew the risks.

I can’t know what techniques a multi-armed bandit algorithm will discover without running the algorithm itself; which I can’t do, because that much data is only accessible to the type of people who buy servers by the acre, and even for them, the data is monopolized by the big tech companies (Facebook, Amazon, Microsoft, Apple, and Google) and intelligence agencies large and powerful enough to prevent hackers from stealing and poisoning the data (NSA, etc). I also don’t know what multi-armed bandit algorithms will find when people on the team are competent psychologists, spin doctors, or other PR experts interpreting and labeling the human behavior in the data so that the human behavior can become measurable. Human insight from just a handful of psychological experts can be more than enough to train AI to work autonomously; although continuous input from those experts would be needed and plenty of insights, behaviors, and discoveries would fall through the cracks and take an extra 3 years or something to be discovered and labeled.

AI in global affairs

Clown attacks are not advancing in isolation; they are parallel to a broad acceleration in the understanding and exploitation of the human mind, which itself is a byproduct of accelerating AI capabilities research. For example, we are simultaneously entering a new era where intelligence agencies use AI to make polygraph tests actually work, which would be absolutely transformative for geopolitical affairs [LW · GW] which currently revolve around the decision theory paradigm where every single employee is a human who is vastly more capable of generating lies than distinguishing them, and thus cannot be sorted by statements like “I am 100% loyal” or “I know who all the competent and corrupt people on this team are”.

My understanding of the geopolitical significance of influence technologies is that information warfare victories are currently understood as a major win condition for international conflict, similar to conquest by military force; and that this understanding has been prevalent among government and military elites for a long time, starting with the collapse of the Soviet Union and the fall of the Berlin Wall and pulling the rug out from under all of the eastern european communist regimes, possibly starting as early as the Vietnam antiwar movement in the US, but reaching consensus among elites around the 2010s after the backlash to the War on Terror dominated the battlefield itself. Among many other places, this consensus is described in Robert Sutter’s books on US-China relations and Joseph Nye’s book on elite persuasion, Soft Power. Unlike conventional and nuclear wars, information wars can be both fought and won, strike at the human minds that make up the most fundamental building block of government and military institutions, and have a long and rich history of being one of the most important goalposts determining the winners and losers in great power conflicts between the US, Russia/USSR, and China. So we should be considering information warfare to be one of the reasons that governments take AI safety seriously; anticipation of information warfare originating from foreign governments is one of the core features of the contemporary American and Chinese regimes and militaries, and this is widely known among analysts.

Pivoting from social media exposure to 1-1 and group communication still contains substantial risk of psychological hacking, but minimizing the network’s surface area/exposure to social media will still reduce risk substantially and possibly adequately (although access to the technology itself is required in order to verify this adequacy, and the data sets large and secure enough to actually run manipulation research is likely limited to sufficiently large tech companies and intelligence agencies, like Facebook and the NSA, and less accessible to smaller weaker orgs like the Department of Homeland Security or Twitter/X who are vulnerable to hacking and data poisoning by larger orgs, although there are hard-to-verify rumors of sophisticated sensor systems deployed by JP Morgan Chase, and reports that many state-linked Chinese companies and institutions have been experimenting with large sample sizes of deployed electroencephalograms).

The current attack surface for psychological hacks is excessive and extreme in the AI safety community, and even the bare-minimum solutions will receive pushback: phone webcams are difficult to cover up, microphones are difficult to avoid, social media uses gradient descent to find and utilize posts and combinations of posts [LW · GW] that cause habit-forming behavior (e.g. optimizing for minimizing quit rates causes the system to find and utilize bizarre combinations of posts that hook people in bizarre ways, such as creating a vague sense that life without social media is an ascetic/monk-like existence when in reality it is the default [LW · GW]). Quitting major life habits is also difficult by default, so plausible deniability might plausibly be already baked in here, yet again.

I’ve included an optional description by Professor Mark Andrejevic, writing in the Routledge handbook of surveillance, as an intuition flood [LW · GW] to help make it easier for more people to understand exactly why the world might literally already revolve around this technology. What’s impressive is that Andrejevic was writing in 2014, and gave no indication of knowing anything about AI; the systems he describes were feasible at the time with just data science and large amounts of user data. AI simply makes these systems even more capable of procuring results.

There is no logical endpoint to the amount of data required by such systems... All information is potentially relevant because it helps reveal patterns and correlations...

At least three strategies peculiar to the forms of population-level monitoring facilitated by ubiquitous surveillance in digital enclosures have become central to emerging forms of data-driven commerce and security: predictive analytics, sentiment analysis, and controlled experimentation. Predictive analytics relies upon mining behavioral patterns, demographic information, and any other relevant or available data in order to predict future actions [by locating and observing similar people or people who share predictive traits]...

Predictive analytics is actuarial in the sense that it does not make definitive claims about the future acts of particular individuals, rather it traffics in probabilities, parsing groups and individuals according to how well they fit patterns that can be predicted with known levels of accuracy. Predictive analytics relies on the collection of as much information as possible, not to drill down into individual activities, but to unearth new patterns, linkages, and correlations...

In combination with predictive analytics, the goal of sentiment analysis is both pre-emptive and productive: to minimize negative sentiment and maximize emotional investment and engagement; not merely to record sentiment as a given but to modulate it as a variable. Modulation means constant adjustment designed to bring the anticipated consequences of a modeled future into the present so as to account for these in ways that might, in turn, reshape that future. If, for example, sentiment analysis reveals resistance to a particular policy or concerns about a brand or issue, the goal is to manage these sentiments in ways that accord with the interests of those who control, capture, and track the data. The goal is not just the monitoring of populations but also their management...

Such forms of management require more than monitoring and recording a population—they also subject it to ongoing experimentation. This is the form that predictive analytics takes in the age of what Ian Ayres (2007) calls super-crunching: not simply the attempt to get at an underlying demographic or emotional “truth”; not even the ongoing search for useful correlations, but also the ongoing generation of such correlations. In the realm of marketing, for example, data researchers use interactive environments to subject consumers to an ongoing series of randomized, controlled experiments. Thanks to the interactive infrastructure and the forms of ubiquitous surveillance it enables, the activities of daily life can be captured in virtual laboratories in which variables can be systematically adjusted to answer questions devised by the researchers...

The infrastructure for ubiquitous interactivity and thus ubiquitous surveillance transforms the media experiences of daily life into a large laboratory...

Such experiments can take place on an unprecedented scale—in real time. They rely upon the imperative of ubiquity: as much information about as many people as possible...

Taken together, the technology that captures more and more of the details of daily life and the strategies that take advantage of this technology lead to a shift in the way those who use data think about it. Chris Anderson argues that the dramatic increase in the size of databases results in the replacement of data’s descriptive power by its practical efficacy. The notion of data as referential information that describes a world is replaced by a focus on correlation. As Anderson (2008) puts it in “The End of Theory”:

"This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior, from linguistics to sociology … Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecedented fidelity. With enough data, the numbers speak for themselves"

This is a data philosophy for an era of information glut: it does not matter why a particular correlation has predictive power; only that it does. It is also a modality of information usage that privileges those with access to and control over large databases, and, consequently, it provides further incentive for more pervasive and comprehensive monitoring.

When the US government, for example, exempts surveillance provisions in the USA Patriot Act from the accountability provided by the Freedom of Information Act, it is sacrificing accountability in the name of security... Threats by countries including India and the United Arab Emirates to ban Blackberry messaging devices unless the company provides the state with the ability to access encrypted information further exemplify state efforts to gain access to data generated in yet another type of digital enclosure...

A loss of control over the fruits of one’s own activity. In the realms of commercial and state surveillance, all of our captured and recorded actions (and the ways in which they are aggregated and sorted) are systematically turned back upon us by those with access to the databases. Every message we write, every video we post, every item we buy or view, our time-space paths and patterns of social interaction all become data points in algorithms for sorting, predicting, and managing our behavior. Some of these data points are spontaneous—the result of the intentional action of consumers; others are induced, the result of ongoing, randomized experiments...

Much will hinge on whether the power to predict can be translated into the ability to manage behavior, but this is the bet that marketers—and those state agencies that adopt their strategies—are making [in 2014].

Like most uses of multi-armed bandit algorithms to make social media steer people’s thinking in measurable directions, clown attacks do not require competent governments or intelligence agencies [LW · GW] in order to be successfully deployed against the minds of millions of people. Everything required for this technology is easy to access, except for the massive amounts of human behavioral data. It simply requires sufficient access to social media platforms, and the technological sophistication of one software engineer who understands multi-armed bandit algorithms and one data scientist who can statistically measure human behavior, particularly for people with authority or involvement in extant mass surveillance systems, since enough data means that anyone could eyeball the effects. Measurability is still mandatory for multi-armed bandit algorithms to work, since nobody can see directly into the human mind (although there are many peripheral proxies like fMRI data, heart rate, blood pressure, verbal statements and tone, body posture, subtle changes in hand and eye movements after reading certain concepts, etc., and many of these can be constantly measured by a hacked smartphone).

Lie detectors and clown attacks are the two strongest case studies I’m aware of that would cause AI to dominate global affairs and put AI safety in the crossfire; whether or not this has already happened is largely a question of the math: are the AI capabilities needed to do these things obvious enough to engineers to tell us that major governments have probably already built the tech? A recent study indicated that a massive proportion of computer vision research is heavily optimized for human behavior research, analysis, and manipulation, and is surreptitiously mislabeled and obfuscated in order to conceal the human research and human use cases that form the core of the computer vision papers, e.g. a consistent norm of referring to the human research subjects as “objects” instead of subjects.

There’s just a large number of human manipulation strategies that are trivial to discover and exploit, even without AI (although the situation is far more severe when you layer AI on top), it’s just that they weren’t accessible at all to 20th century institutions and technology such as academic psychology. If they get enough data on people who share similar traits to a specific human target, then they don’t have to study the target as much to predict the target’s behavior, they can just run multi-armed bandit algorithms on those people to find manipulation strategies that already worked on individuals who share genetic or other traits. Although the average person here is much further out-of-distribution relative to the vast majority of people in the sample data, this becomes a technical problem, as AI capabilities and compute become dedicated to the task of sorting signal from noise and finding webs of correlation with less data. Clown attacks alone have demonstrated that zero days in the brain are fairly consistent among humans, meaning that sample data from millions or billions of people is useable to find a wide variety of zero days in the brains that make up the AI safety community.

First it started working on 60% of people, and I didn’t speak up, because my mind wasn’t as predictable as people in that 60%. Then, it started working on 90% of people, and I didn’t speak up, because my mind wasn’t as predictable as the people in that 90%. Then it started working on me. And by then, it was already too late, because it was already working on me.

Social media’s ability to mix and match (or mismatch) people in specific ways and at great scale, as well as using bot accounts just to upvote and signal boost specific messages so that they look popular [LW · GW], already yielded powerful effects. Adding LLM bots into the equation merely introduces more degrees of freedom.

Among a wide variety of capabilities, platforms like twitter/X were capable of fiddling with their news feed algorithms such that users are incentivised to output as many words as possible in order to gain more likes/points/status, or as high-quality combinations of words [LW · GW] as they can muster, rather than whatever maximizes the compulsion to return to the platform the next day (a compulsion that is very, very easy to measure by looking at user retention rates and quit rates, and if it is easy to measure then it is easy to maximize via gradient descent). However, if a social media platform like twitter/X does not optimize for competitiveness against other social media platforms, and the other platforms do, then every subsequent day people will return to other social media platforms more and twitter/X less. The state of Moloch is similar to the thought experiment where 4 social media platforms run multi-armed bandit algorithms to find ways to increase user engagement by 3 minutes per person per day. Suppose one platform of the four (let’s imagine that it’s twitter/X) eventually notices that the people themselves are spending 9 hours a day on social media, recoils in horror, and decides to cease that policy and instead attempt to increase user engagement by 0 minutes per person per day. Then the autonomous multi-armed bandit algorithms running on the other platforms automatically select strategies that harvest minutes of that time from twitter/X, the lone defector platform, in addition to harvesting minutes from the users’ undefended off-screen IRL time, which is the natural resource that’s easiest for social media systems to steal time from, like an intelligent civilization harvesting an inanimate natural resource like plants or oil. The defector platform then loses its market share and is crowded out of the genepool.
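The thought experiment can be turned into a toy simulation. This is a sketch under loud assumptions, not a model of any real platform: I assume 16 waking hours per user, an even starting split of the 9 hours across the four platforms, and that each optimizing platform takes 1 of its 3 daily minutes from the defector and the rest from off-screen time. The 3 minutes, 9 hours, and four platforms come from the thought experiment; everything else (names included) is invented for illustration.

```python
def simulate_engagement_race(days, defector="X", gain_per_day=3.0,
                             from_defector=1.0, waking_minutes=960.0):
    """Toy model of the four-platform race, in minutes per user per day.

    Each non-defecting platform gains gain_per_day minutes every day:
    up to from_defector minutes are taken from the defector platform,
    and the remainder is taken from the user's off-screen time.
    """
    # 9 hours of daily use, split evenly (an assumption for illustration)
    minutes = {"A": 135.0, "B": 135.0, "C": 135.0, "X": 135.0}

    for _ in range(days):
        offscreen = waking_minutes - sum(minutes.values())
        for name in minutes:
            if name == defector:
                continue  # the defector no longer tries to grow
            # harvest from the platform that stopped defending itself
            taken = min(from_defector, minutes[defector])
            minutes[defector] -= taken
            minutes[name] += taken
            # harvest the rest from off-screen time, while any remains
            taken_off = min(gain_per_day - taken, offscreen)
            offscreen -= taken_off
            minutes[name] += taken_off

    return minutes


after_month = simulate_engagement_race(days=30)
# With these made-up parameters the defector falls from 135 to 45 minutes
# per user per day while each competitor rises from 135 to 225.
```

The exact numbers mean nothing. The shape is the point: the defector’s restraint does not return any time to users, it just moves that time onto the platforms that kept optimizing.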

People who can wield gradient descent as a weapon against other people are fearsome indeed (although only the type of person with access to user data and who buys servers by the acre can do this effectively). They not only have the ability to try things that already were demonstrated to work on people similar to you, they also have the ability to select attack avenues in places that you will not look and ways you will not notice, because they have a large enough amount of human behavioral data showing them all the places where people like you did end up looking in the past.

If true, this technology would, by default, become the darkest secret of the big 5 tech companies, and one of the darkest secrets of American and Chinese intelligence agencies. This tech would be the biggest deliberate abuse of psychological research in human history by far, and its effectiveness hinges on the current paradigm where billions of people output massive amounts of sensor data and social media scrolling data (e.g. the detailed pace at which different kinds of people scroll through different kinds of information), although the effectiveness of the clown attack on those not aware of it in particular demonstrates that a general awareness of the risk is not sufficient to protect oneself.

What would the world look like if human thought research and steering technology won out and became hopelessly entrenched 5-10 years ago? Unfortunately, that world would look a lot like this one. There are billions of inward facing webcams pointed at every face looking at every screen. The NSA stockpiles exploits in every operating system and likely chip firmware as well. There are microphones in every room and accelerometers in the palm of every hand (which gives access to heart rate and a wide variety of other peripheral biophysical data that correlates strongly with various cognitive and emotional behavior). The very existence of mass surveillance is known, but that was only due to Snowden, which was one occasion and probably just bad luck; and the main aspect of the Snowden revelations was not that mass surveillance happens at incredible scale, but that the intelligence agencies were wildly successful at concealing and lying about it for years (and subsequently reorganized around the principle of preventing more Snowdens). The epistemic environment is further and further in decline as social status, virtue signaling, and vague impressions dominate. And, last of all, international affairs is coming apart at the seams as the old paradigms die and trust vanishes. This is what a world looks like where powerful people have already gained the ability to access the human mind at a far deeper level than any target has access to; unfortunately, it is also what more mundane worlds look like, where human thought and behavior manipulation capabilities remained similar to 20th century levels. However, I’ve made the case very strongly that such technology exists and that due to fundamental mathematical/statistical dynamics and due to human genetic diversity, these systems fundamentally depend on covert large-scale deployment (e.g. social media) in order to get sufficiently large amounts of data to run at all e.g. multi-armed bandit algorithms sufficient to find novel manipulation strategies in real time, and measuring and researching the human thought process sufficiently to use multi-armed bandit algorithms and SGD to steer a target’s thoughts in measurable directions. Therefore, the burden of proof falls even more heavily on the claim that our minds are safe, safe enough for the AI safety community to survive the 2020s at all [LW · GW], not on the claim that our minds are not secure and represent a severe point of failure.

The question of whether the cognitive warfare situation has already become severe is a question that must be approached with sober analysis, not vibes and vague impressions. Vibes and vague impressions are by far the easiest thing to hack, as demonstrated by the clown attack; and in a world where the situation was acute, then keeping people receptive and vulnerable to influence would be one of the most probable attacks for us to expect to be commonplace.

New technology actually does make current civilization out-of-distribution relative to civilization over the last 100 or 1000 years, and thus risks termination of norms, dynamics, and assumptions that have made everything in civilization go fine so far, such as humans being better at lying than detecting lies and thus not capable of organizing themselves based on statements of fact like “I am 100% loyal” or “here is an accurate list of the corrupt and incompetent people on this team”. The specific state of human controllability dominated global affairs e.g. via military recruitment, and when this controllability ratcheted up slightly in the 19th century, it resulted in the total war paradigm of the World War era and the information war paradigm of the Cold War era. Assuming that history will repeat itself, and remain as sensible and intuitive as it always was, is like expecting a psychological study to replicate in an out-of-distribution environment. It certainly might.

With the superior capabilities to research the human mind offered by the combination of social media and AI, governments, tech companies, and intelligence agencies now have the capability to understand aggregate consumer demand better than ever before and manipulate consumer spending and saving in real time, capabilities that were first sought in the 1980s by the Reagan and Thatcher administrations and never fully reached, but that was with 20th century technology; no social media, no mass surveillance, no user data or sensor data, no AI, only psychology (much of which would not replicate due to the study paradigm, which remains inferior to mass surveillance), statistics, focus groups, and polls, each of which were new at the time, and each of which remain available to governments, tech companies, and intelligence agencies today to supplement their new capabilities. It is for this reason that the paradigm of recessions, a paradigm solidified in the 20th century, is a paradigm that we might expect to die, along with many other civilizational paradigms that were only established in the 20th century due to the 20th century’s relative absence of human behavior measurement and thought steering technology.

Taking a step back and looking at a fundamental problem

If there were intelligent aliens, made of bundles of tentacles or crystals or plants that think incredibly slowly, their minds would also have zero days that could be exploited because any mind that evolved naturally would probably be like the human brain, a kludge of spaghetti code that is operating outside of its intended environment, and they also would not even begin to scratch the surface of finding and labeling those zero days until, like human civilization today, they began surrounding thousands or millions of their kind with sensors that could record behavior several hours a day and find webs of correlations. Of course, if they didn’t have much/any genetic diversity then it would be even easier to find those zero days, and vice versa for intense amounts of diversity. However, the power of the clown attack demonstrates that genetic diversity in humans is not sufficient to prevent zero days from being discovered and exploited; the drive to gain and avoid losing social status is hackable, with current levels of technology (social media algorithms, multi-armed bandit algorithms, and sentiment analysis), indicating that many other exploits are findable as well with current levels of technology.

There isn’t much point in having a utility function in the first place if hackers can change it at any time. There might be parts that are resistant to change, but it’s easy to overestimate yourself on this; for example, if you value the longterm future and think that no false argument can persuade you otherwise, but a social media news feed plants paranoia or distrust of Will Macaskill, then you are one increment closer to not caring about the longterm future; and if that doesn’t work, the multi-armed bandit algorithm will keep trying until it finds something that works. The human brain is a kludge of spaghetti code, so there’s probably something somewhere. The human brain has zero days, and the capability and cost of social media platforms to use massive amounts of human behavior data to find complex social engineering techniques is a profoundly technical matter; you can’t get a handle on this with intuition or pre-2010s historical precedent. Thus, you should assume that your utility function and values are at risk of being hacked at an unknown time, and should therefore be assigned a discount rate to account for the risk over the course of several years. Slow takeoff over the course of the next 10 years alone guarantees that this discount rate is too high in reality for people in the AI safety community to continue to go on believing that it is something like zero. I think that approaching zero is a reasonable target, but not with the current state of affairs where people don’t even bother to cover up their webcams, have important and sensitive conversations about the fate of the earth in rooms with smartphones, and use social media for nearly an hour a day (scrolling past nearly a thousand posts). The discount rate in this environment cannot be considered “reasonably” close to zero if the attack surface is this massive and the world is changing this quickly. If people have anything they value at all [LW · GW], and the AI safety community probably does have that, then the current AI safety paradigm of zero effort is wildly inappropriate; it is basically total submission to invisible hackers.
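For readers who want to see how a per-year discount rate compounds over several years, here is a small sketch. The 5% annual risk is a purely hypothetical number chosen to show the arithmetic; I am not claiming it is the real figure, and the function names are made up for this example. It also assumes the risk is constant and independent from year to year, which is a simplification.

```python
def retention_probability(annual_risk, years):
    """Chance that one's values are still un-tampered-with after `years`,
    assuming a constant, independent risk each year (a simplification)."""
    return (1.0 - annual_risk) ** years


def implied_annual_risk(retention, years):
    """Inverse calculation: the constant annual risk that would produce a
    given retention probability over `years`."""
    return 1.0 - retention ** (1.0 / years)


# Hypothetical example: a 5% annual risk compounded over 10 years.
ten_year_retention = retention_probability(0.05, 10)  # roughly 0.60
```

A rate that sounds close to zero on a one-year horizon stops looking close to zero once it compounds across a decade of slow takeoff.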

The sheer power of psychological influence technologies informs us that we should stop thinking of cybersecurity as a server-only affair. Humans can also make mistakes as extreme as using the word “password” as your password, except it is your mind and your values and your impressions of different lines of reasoning that get hacked, not your files or your servers or your bank passwords/records. In order to survive slow takeoff and persist for as long as necessary, the AI safety community must acknowledge the risk that the 2020s will be intensely dominated by actors capable of using modern technology to stealthily hack the human mind and eliminate inconvenient people, such as those who try to pause AI, even though AI is basically the keys to their kingdom. We must acknowledge that the future of cyberwarfare doesn’t just determine who gets to have their files and verbal conversations be private; in the 2020s, it determines what kinds of thoughts people get to have and what kinds of people do and don’t get to have them at all (e.g. as one single example, clowns do have the targeted thoughts and the rest don’t).

Everything that we’re doing here is predicated on the assumption that powerful forces, like intelligence agencies, will not disrupt the operations of the community e.g. by inflaming factional conflict with false flag attacks attributed to each other due to the use of anonymous proxies.

Most people in AI safety still think of themselves as ordinary members of the population. In reality, this stopped being true a while ago; a bunch of nerds discovered an engineering problem that, as it turns out, the universe actually does revolve around, and a bunch of nerds messing around with technology that is central to geopolitics bears a reasonable chance that geopolitics will bite back, especially in a world where intelligence agencies have, for decades, messed with and utilized influential NGOs in a wide variety of awful ways, and in a world where intensely powerful influence technologies like clown attacks become stronger and stronger determinants of geopolitical winners and losers.

If left to their own devices, people’s decisions about technology and social media will be dominated by their self-concept as an average member of the population, who considers things like news feeds and smartphone sensors/uncovered webcams as safe because everyone’s doing it and of course nothing bad would happen to them. The cybersecurity reality is that the risk of psychological engineering is extreme, and in a mathematically provable way, and this nonchalance towards strangers tampering with/hacking your cognition and utility function is an unacceptable standard for the group of nerds who actually discovered an engineering problem that this side of the universe revolves around.

The attack surface of the AI safety community is like the surface area of the interior of a cigarette filter; thousands of square kilometers, wrinkled and folded together inside the spongy three-dimensional interior of a 1-inch long cigarette filter. This is not the kind of community that survives a transformative world.

AI pause as the turning point

From Shutting Down the Lightcone Offices [LW · GW]:

I feel quite worried that the alignment plan of Anthropic currently basically boils down to "we are the good guys, and by doing a lot of capabilities research we will have a seat at the table when AI gets really dangerous, and then we will just be better/more-careful/more-reasonable than the existing people, and that will somehow make the difference between AI going well and going badly". That plan isn't inherently doomed, but man does it rely on trusting Anthropic's leadership, and I genuinely only have marginally better ability to distinguish the moral character of Anthropic's leadership from the moral character of FTX's leadership...

In most worlds RLHF, especially if widely distributed and used, seems to make the world a bunch worse from a safety perspective (by making unaligned systems appear aligned at lower capabilities levels, meaning people are less likely to take alignment problems seriously, and by leading to new products that will cause lots of money to go into AI research, as well as giving a strong incentive towards deception at higher capability levels)... The EA and AI Alignment community should probably try to delay AI development somehow, and this will likely include getting into conflict with a bunch of AI capabilities organizations, but it's worth the cost.

I don't have much to contribute to the calculations behind this policy (which I'd like to note is just musing by Habryka intended to elicit further discussion, and I might be taking it out of context), other than describing in great detail what a "conflict with a bunch of AI capabilities organizations" would look like, which I've been researching for years and it is not pretty; the asymmetry is so serious that even thinking about waging such a conflict could begin closing the window of opportunity for you to make moves, e.g. if some of the people you talk to end up using social media and scrolling past specific bits of information at a pace similar to, say, for example, people who ultimately ended up seeing big tech companies as enemies, but in a cold and calculating and serious way, not in an advocacy way. Sample sizes of millions of people make that kind of prediction possible; even if there are only a dozen people in the data set of positive cases of cold-bigtech-enmity, there are millions of people who make up the data set for negative cases, allowing analysts to get an extremely good idea of what a potential threat looks like by knowing what a potential threat doesn't look like. This is only one example, and it is entirely social-media based; it does not use, say, automated analysis of audio data from recorded conversations near hacked smartphones, which is very unambiguously the kind of thing that can be expected to happen to people who would "get into conflict with a bunch of AI capabilities organizations" as those organizations tend to have strong ties, and possibly even substantial revolving door employment, with intelligence agencies; Facebook/Meta is a good example, as they routinely find themselves at the center of public opinion and information warfare conflicts around the world. It's also unclear to me how sovereign these companies' security departments are without continued logistical support and staff from American intelligence agencies, as they have to contend with intense interest from a wide variety of foreign intelligence agencies. There is an entire second world here, that is 1) parallel to the parts of the ML community that are visible to us, 2) vastly more powerful, privileged, and dangerous than the ML community, and 3) has a massive vested interest in the goings-on of the ML community, and I've encountered dozens and dozens of people in the AI safety community in both SF/Berkeley and DC, and if a single person was aware of this second world, they were doing an incredibly good job totally hiding their awareness of it. I think this is a recipe for disaster. I think that the AI safety community is not even thinking about the kinds of manipulation, subterfuge, and sabotage that would take place here just based off of this world's lawyers-per-capita alone, and the fact that this is a trillion-dollar industry, let alone that this is a trillion-dollar industry due in part to the human influence capabilities I've barely begun to describe here, let alone due to interest that these capabilities attracted from all the murkiest people lurking within the US-China conflict.
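The statistical idea behind "knowing what a potential threat doesn't look like" is ordinary outlier scoring against a large baseline. Here is a deliberately simple sketch with entirely synthetic data; the single feature (think of it as seconds spent per post on some topic), the distribution, and the function names are all made up for illustration, and a real system would presumably use many features and a far richer model.

```python
import random
import statistics


def fit_baseline(values):
    """Summarize the large 'negative' population by its mean and spread."""
    return statistics.fmean(values), statistics.pstdev(values)


def deviation_score(x, baseline):
    """How many standard deviations an observation sits from the baseline."""
    mean, std = baseline
    return abs(x - mean) / std


# Synthetic stand-in for the huge set of ordinary users (made-up numbers).
rng = random.Random(0)
ordinary_users = [rng.gauss(10.0, 2.0) for _ in range(10000)]
baseline = fit_baseline(ordinary_users)

typical_score = deviation_score(10.1, baseline)  # close to the crowd
unusual_score = deviation_score(20.0, baseline)  # far outside it
```

No labeled positive cases appear anywhere in this sketch. The baseline is built only from the negative cases, which is why a dozen positives against millions of negatives is not the obstacle it first appears to be.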

The attempt to open source Twitter/X’s newsfeed algorithm might have been months ago, but even if it was a step in the right direction, to repeatedly attempt projects like that would cause excessive disruptions and delegitimizations for the industry, particularly Facebook/Meta which will never be able to honestly open-source its systems’ news feed algorithms. Facebook and the other 4 large tech companies (of whom Twitter/X is not yet a member due to vastly weaker data security) might be testing out their own pro-democracy anti-influence technologies and paradigms, akin to Twitter/X’s open-sourcing its algorithm, but behind closed doors due to the harsher infosec requirements that the big 5 tech companies face. Perhaps there are ideological splits among executives e.g. with some executives trying to find a solution to the influence problem because they’re worried about their children and grandchildren ending up as floor rags in a world ruined by mind control technology, and other executives nihilistically marching towards increasingly effective influence technologies so that they and their children personally have better odds of ending up on top instead of someone else. Twitter/X’s measured pace, open-sourcing the algorithm and then halting several months afterwards, is therefore potentially a responsible and moderate move in the right direction, especially considering the apparent success of the community notes paradigm at improving epistemics.

The AI safety community is now in a situation where it has to do everything right. The human race must succeed at this task, even if the human brain didn’t evolve to do well at things like having the entire species coordinate to succeed at a single task. Especially if that task, AI alignment, might be absurdly difficult entirely for technical reasons, as difficult for the human mind to solve as expecting chimpanzees to figure out enough rocket science to travel to the moon and back, which would be a big ask regardless of the chimpanzees’ instinctive tendency to form factions and spend 90% of their thinking on clever plots to outmaneuver and betray each other. This means that vulnerability to clown attacks is unacceptable for the AI safety community, and it is also unacceptable to be vulnerable to other widespread social engineering techniques that exploit zero days in the human brain. The degree of vulnerability is highly measurable by attackers, and increasingly so as technology advances, and since it is legible that attackers will be rewarded for exploiting vulnerabilities, it is therefore incentivised for attackers to exploit those vulnerabilities and steer the AI safety community over a cliff.

The AI safety community has long passed a threshold where vulnerability to clown attacks is no longer acceptable; not only does it incentivize more clown attacks, and more ambitious clown attacks, where the attackers have more degrees of freedom, but the AI safety community is in a state where clown attacks can thwart many of the tasks required to do AI safety at all.

Lots of people approach AI social control as though solving AI alignment is priority #1 and the use of AI social control is priority #2. However, this attitude is not coherent. AI alignment is #1, and AI social control is #1a, a subset of #1 with virtually no intrinsic value on its own, only instrumental value to AI alignment, as the use of AI for social control would incentivise accelerating AI and complicate alignment efforts in the meantime, either by direct sabotage by intelligence agencies or AI companies, or by causing totalitarianism or all-penetrating crushing information warfare between the US and China, or some other state of civilization that we might fail to adapt to.

How to protect yourself and others:

- Either stop reasoning based on vibes and impressions (hard), or stop spending hours a day inside hyperoptimized vibe/impression hacking environments (social media news feeds). This may sound like a big ask, but it actually isn’t, like cryopreservation; everyone on earth happens to be doing it catastrophically wrong and it’s actually a super quick fix, less than a few days or even a few hours and your entire existence is substantially safer. But more than 99% of people on earth will look at you as if you were wearing a clown suit, and that intimidates people away from specific lines of thought in a very deep way.

- A critical element is for as many people as possible in AI safety to cover up their webcams; facial microexpressions are remarkably revealing, especially to people with access to billions of hours of facial microexpression data of people in flexible hypercontrolled environments like the standard social media news feed. Facial microexpressions are the output of around 60 different facial muscles, and although modern ML probably can’t compress each frame of a video file to a 6 by 10 matrix that represents how contracted each facial muscle is, modern ML can probably compress, say, every 5 frames into a 128 by 128 matrix that represents the state of the 20% of the facial muscles that project ~80% of the valuable data/signal outputted by facial muscles. Minimizing exposure to other sensors is ideal as well; it seems pretty likely that a hacked OS can turn earbuds into microphones so definitely switch to used speakers. I use a wireless keyboard so that I don’t have to worry about the accelerometers in my laptop, and a trackball mouse that I use with my off hand. Some potential exploits, like converting RAM chips or accelerometers, mean that it might be difficult to prevent laptops from recording enough audio to acquire changes in your heart rate itself, but there’s a wide variety of other biophysical data that it’s not in your best interest to donate (if you’re going to sell shares of your utility function, at least don’t sell them for zero dollars).

- It’s probably a good idea to switch to physical books instead of ebooks. Physical books do not have operating systems or sensors. You can also print out research papers and Lesswrong and EAforum articles that you already know are probably worth reading or skimming; if you drive to the store and actually spend 15 minutes looking, you will probably find an incredibly ink-efficient printer.

- I’m not sure whether a text-only inoculation is good enough, or whether there’s no way around the problem other than reducing people’s exposure to sensors and social media. Reading Yudkowsky’s rationality sequences will definitely make one’s thoughts and behaviors harder to predict, but it won’t patch or rearrange the zero days in the human brain. Even Tuning your Cognitive Strategies [LW · GW] and Raemon's Feedbackloop First Rationality [LW · GW] won’t do that, although it might go a long way towards making it much harder to gather data on the details of those zero days by comparing your mind and behavior to the minds and behavior of millions of people that make up the data, because your mind will just be more different from those people than before, and you will be different from them in very different ways from how they are different from each other. You will be out-of-distribution, and the distribution is a noose that is slowly tightening around the mind of most people on earth. If reading the sequences seems daunting, I recommend starting with the highlights [? · GW] or randomly selecting posts from them out-of-order [LW · GW] which is a fantastic way to start the day. HPMOR is also an incredibly fun and enjoyable alternative to read in your down time if you’re busy (and possibly one of the most rereadable works of fiction ever written), and Planecrash [LW · GW] is a similarly long and ambitious work by Yudkowsky that is darker and less well-known, even though the self-improvement aspect seems more refined and effective. Other safe predictability reducers include Scott Alexander’s codex [? · GW] for a more world-modeling focus, and the CFAR handbook [LW · GW] for more practical self-enhancement work. These are all highly recommended [? · GW] for self-improvement.

- It’s probably best to avoid sleeping in the same room as a smart device, or anything with sensors, an operating system, and also a speaker. The attack surface seems large, if the device can tell when people’s heart rate is near or under 50 bpm, then it can test all sorts of things e.g. prompting sleep talk on specific topics. The attack surface is large because there’s effectively zero chance of getting caught, so if people were to experiment with that, it wouldn’t matter how low the probability of success is for any idea to try or whether they were just engaging in petty thuggery [LW · GW]. Just drive to the store and buy a clock, it will be like $30 at most and that’s it.

- Of course, you should be mindful of what you know and how you know it, but in the context of modern technologies, it’s more important to pay attention to your feelings, vibe, and impression of specific concepts, and if you successfully search your mind and trace the origins of those feelings, you might notice the shadows cast by the clowns that have fouled up targeted concepts (you probably don’t remember the clowns themselves, as they are nasty internet losers). But that’s just one zero day, and the problem is bigger and more fundamental than that. Patching the clown attack is valuable, but it’s still a band-aid solution that will barely address the root of the issue.

- I am available via LW message to answer any questions you might have.

82 comments

Comments sorted by top scores.

comment by lc · 2023-10-22T06:04:27.936Z · LW(p) · GW(p)

Would you mind if I rewrote this in a less "manic" tenor, keeping the content and mood largely the same, and reposted? I like this essay and think the core of what you're suggesting is reasonable, for reasons both stated and unstated, but I would like to try to say it differently in a way I think it will be taken better.

Replies from: TrevorWiesinger, lelapin, Benito, NicholasKross

↑ comment by trevor (TrevorWiesinger) · 2023-10-22T14:45:21.425Z · LW(p) · GW(p)

I've been hoping for years that someone else could do this instead of me; I did this research to donate it, and if I'm the wrong person to communicate it (e.g. I myself am noise/ambient-clowning the domain) then that's on me and I'd be grateful for that to be fixed.

↑ comment by Jonathan Claybrough (lelapin) · 2023-10-22T09:34:28.485Z · LW(p) · GW(p)

Would be up for this project. As is, I downvoted Trevor's post for how rambly and repetitive it is. There's a nugget of an idea, that AI can be used for psychological/information warfare, that I was interested in learning about, but the post doesn't seem to have much substantive argument to it, so I'd be interested in someone doing a much shorter version that argues its case with some sources.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-10-22T15:30:58.886Z · LW(p) · GW(p)

My thinking about this is that it is a neglected research area with a ton of potential, and that it is a very bad idea for ~1 person to be the only one doing it, so more people working on it would always be appreciated. It would also be in their best interest, because it is a gold mine of EV for humanity and of deserved reputation/credit. So absolutely take it on.

Also, I think this post does have substantive argument and also sources. The argument I'm trying to make is that we're entering a new age of human manipulation research/capabilities by combining AI with large sample sizes of human behavior, and that the emergence of that kind of sheer power would shift a lot of goalposts. Finding evidence of a specific manipulation technique (clown attacks) was hard, but it was comparatively much easier to research the meta-process that generates techniques like that, and that geopolitical affairs would pivot if mind control became feasible.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-10-23T13:05:11.822Z · LW(p) · GW(p)

To be frank trevor, you don't seem to have referenced or cited any of the extensive 20th century and prior literature on memetics, social theory, sociology, mass movements, human psychology in large groups, etc...

Which is likely what the parent was referring to.

Although I have read nowhere close to all of it, I've read enough to not see any novel substantive arguments or semi-plausible proofs.

Most LW readers don't expect anything at the level of a formal mathematical or logical proof, but sketching out a defensible semi-plausible path to one would help a lot. Especially for a post of this length.

It also doesn't help that you're taking for granted many things which are far from decided. For example, the claim:

...like cryopreservation; everyone on earth happens to be doing it catastrophically wrong and its actually a super quick fix, less than a few days or even a few hours and your entire existence is substantially safer.

Sounds very exaggerated because cryopreservation itself does not have that solid of a foundation as you're implying here.

Since no one has yet offered a physically plausible solution to restoring a cryopreserved human, even with unlimited amounts of computation and energy, with their neural structure and so on intact. (That fits within known thermodynamic boundaries.)

It's more of a 'there is a 1 in a billion chance some folks in the future will stumble on a miracle and will choose to work on me' or some variation. And people doing it anyways since even a tiny tiny chance is better than nothing in their books.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-10-23T16:57:21.325Z · LW(p) · GW(p)

not see any novel substantive arguments or semi-plausible proofs.

Most LW readers don't expect anything at the level of a formal mathematical or logical proof, but sketching out a defensible semi-plausible path to one would help a lot. Especially for a post of this length.

That was my mistake, when I said "mathematically provable", I meant provable with math, not referring to a formal mathematical proof or logical proof. I used that term pretty frequently so it was a pretty big mistake.

The dynamic is pretty fundamental though. I refer to it in the "taking a step back" section:

If there were intelligent aliens, made of bundles of tentacles or crystals or plants that think incredibly slowly, their minds would also have zero days that could be exploited because any mind that evolved naturally would probably be like the human brain, a kludge of spaghetti code that is operating outside of its intended environment, and they also would not even begin to scratch the surface of finding and labeling those zero days until, like human civilization today, they began surrounding thousands or millions of their kind with sensors that could record behavior several hours a day and find webs of correlations.

↑ comment by Ben Pace (Benito) · 2024-12-05T05:38:03.506Z · LW(p) · GW(p)

Did this happen yet? I would even just be into a short version of this (IMO good) post.

Replies from: lc↑ comment by lc · 2024-12-10T05:26:00.462Z · LW(p) · GW(p)

I have a draft that has wasted away for ages. I will probably post something this month though. Very busy with work.

Replies from: caleb-biddulph↑ comment by Caleb Biddulph (caleb-biddulph) · 2025-01-21T23:26:51.175Z · LW(p) · GW(p)

I would also be excited to see this, commenting to hopefully get notified when this gets posted

↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-10-22T19:51:03.799Z · LW(p) · GW(p)

This comment needs to be frontpaged, stickied, added as a feature for people with >N karma (like "request feedback"), engraved on Mount Rushmore, tattooed on my forehead, and scrawled in a mysterious red substance in my bathroom.

Replies from: TekhneMakre↑ comment by TekhneMakre · 2023-10-23T02:46:04.689Z · LW(p) · GW(p)

What do you mean? Surely they aren't offering this for anyone who writes anything manically. It would be nice if someone volunteered for doing that service more often though.

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-10-23T18:09:35.204Z · LW(p) · GW(p)

My comment is partly a manic way of saying "yes this is a good service which more people should both provide and ask for". Not sure how practical it would be to add as a feedback-like formal feature. And of course I don't think lc should personally be the one to always do this.

Replies from: ChristianKl↑ comment by ChristianKl · 2023-10-23T21:11:42.815Z · LW(p) · GW(p)

LessWrong already has a formal feature to ask for feedback for anyone with >100 karma.

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-10-24T00:54:30.298Z · LW(p) · GW(p)

Yes. I'm aware of that. I noted it in the "(like "request feedback")" part of my comment.

comment by 307th · 2023-10-22T15:03:23.850Z · LW(p) · GW(p)

This post is fun but I think it's worth pointing out that basically nothing in it is true.

- "Clown attacks" are not a common or particularly effective form of persuasion

- They are certainly not a zero day exploit; having a low status person say X because you don't want people to believe X has been available to humans for our entire evolutionary history

- Zero day exploits in general are not a thing you have to worry about; it isn't an analogy that applies to humans because we're far more robust than software. A zero day exploit on an operating system can give you total control of it; a 'zero day exploit' like junk food can make you consume 5% more calories per day than you otherwise would.

- AI companies have not devoted significant effort to human thought steering, unless you mean "try to drive engagement on a social media website"; they are too busy working on AI.

- AI companies are not going to try to weaponize "human thought steering" against AI safety

- Reading the sequences wouldn't protect you from mind control if it did exist

- Attempts at manipulation certainly do exist but it will mostly be mass manipulation aimed at driving engagement and selling you things based off of your browser history, rather than a nefarious actor targeting AI safety in particular

↑ comment by lc · 2023-10-22T17:49:32.989Z · LW(p) · GW(p)

Zero day exploits in general are not a thing you have to worry about; it isn't an analogy that applies to humans because we're far more robust than software. A zero day exploit on an operating system can give you total control of it; a 'zero day exploit' like junk food can make you consume 5% more calories per day than you otherwise do.

The "just five percent more calories" example reveals nicely how meaningless this heuristic is. The vast majority of people alive today are the effective mental subjects of some religion, political party, national identity, or combination of the three, no magical backdoor access necessary; the confirmed tools and techniques are sufficient to ruin lives or convince people to do things completely counter to their own interests. And there are intermediate stages of effectiveness that political lobbying can ratchet up along, between the ones they're at now and total control.

- AI companies have not devoted significant effort to human thought steering, unless you mean "try to drive engagement on a social media website"; they are too busy working on AI.

- AI companies are not going to try to weaponize "human thought steering" against AI safety

The premise of the above post is not that AI companies are going to try to weaponize "human thought steering" against AI safety. The premise of the above post is that AI companies are going to develop technology that can be used to manipulate people's affinities and politics, intel agencies will pilfer it or ask for it, and then it's going to be weaponized, to a degree of much greater effectiveness than they have been able to afford historically. I'm ambivalent about the included story in particular being carried out, but if you care about anything (such as AI safety), it's probably necessary that you keep your utility function intact.

↑ comment by trevor (TrevorWiesinger) · 2023-10-22T15:26:37.264Z · LW(p) · GW(p)

- Yes they are, clown attacks are an incredibly powerful and flexible form of Overton window manipulation. They can even become a self-fulfilling prophecy by selectively sorting domains of thought among winners and losers in real life, e.g. only losers think about lab leak hypothesis.

- It's a zero-day exploit because it's a flaw in the human brain that modern systems are extremely capable of utilizing to steer people's thinking without their knowledge (in this case, denial of certain lines of cognition). You're right that it's not new enough to count days, like a zero day in computers, but it has been exploited this powerfully (orders of magnitude more effectively than ever before) for less than a decade.

- Like LLMs, the human mind is sloppy and slimy; clown attacks are an example of something that multi-armed bandit algorithms can repeatedly try [? · GW] until something works (the results always have to be measurable though).

- I'm thinking of the big 5 tech companies (Facebook, Amazon, Apple, Google, Microsoft) and intelligence agencies like the NSA and Chinese agencies. I am NOT thinking about e.g. OpenAI here.

- I made the case that these agencies have historically unprecedented amounts of power, and since AI is the keys to their kingdom, trying to establish an AI pause does indeed come with that risk.

- I might be wrong about The Sequences hardening people, but I think these systems are strongly based on human behavior data, and if most of the people in the data haven't read The Sequences, then people who read The Sequences are further OOD than they would have been and therefore less predictable.

- I agree that profit-driven manipulation might still be primary and was probably how human manipulation capabilities first emerged and were originally fine-tuned, probably in the early-mid 2010s. But since these are historically unprecedented degrees of power over humans, and due to international information warfare e.g. between the US and China (which is my area of expertise), I doubt that manipulation capabilities remained exclusively profit-driven. I think that it's possible that >90% of people at each of the tech companies haven't worked on these systems, and of the 10% who have, it's very possible that 95% of those people only work on profit-based systems. But I also think that there are some people who work on geopolitics-prioritizing manipulation too, e.g. revolving door employment with intelligence agencies.

↑ comment by der (DustinWehr) · 2023-11-02T15:20:25.369Z · LW(p) · GW(p)

"Clown attack" is a phenomenal term, for a probably real and serious thing. You should be very proud of it.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-11-02T16:29:03.851Z · LW(p) · GW(p)

I think that the people at Facebook/Meta and the NSA probably already coined a term for it, likely an even better one as they have access to the actual data required to run these attacks. But we'll never know what their word was anyway, or even if they have one.

comment by mako yass (MakoYass) · 2023-10-22T09:13:01.032Z · LW(p) · GW(p)

On clown attacks, it's notable that activist egregores conduct them autonomically, by simply dunking on whoever they find easiest to dunk on; the dveshi hoists the worst representatives of ideas ahead of the good ones.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-10-22T14:55:39.734Z · LW(p) · GW(p)

I absolutely agree! Part of what makes clown attacks so powerful is the plausible deniability; most clowns are not attacks. As a result, attackers have plenty of degrees of freedom to try things [? · GW] until something works, so much so that they can even automate that process with multi-armed bandit algorithms, because there's basically no risk of getting caught.
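For readers unfamiliar with the term, a multi-armed bandit is a standard trial-and-error procedure: try several options, measure which ones pay off, and shift effort toward whatever has worked so far. Below is a minimal Python sketch, assuming an epsilon-greedy strategy and made-up success rates. The names `epsilon_greedy` and `success_rates` are hypothetical and nothing here is drawn from any real system; it only illustrates the generic "try things until something works" loop.

```python
import random


def epsilon_greedy(success_rates, rounds=5000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over hypothetical options.

    success_rates: hidden per-option success probabilities (invented for
    this illustration; the algorithm never reads them directly, it only
    sees the simulated outcomes).
    Returns (pulls, estimates): how often each option was tried and the
    running estimate of each option's success rate.
    """
    rng = random.Random(seed)
    n = len(success_rates)
    pulls = [0] * n
    estimates = [0.0] * n

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: try a random option regardless of past results.
            arm = rng.randrange(n)
        else:
            # Exploit: pick the option with the best estimate so far.
            arm = max(range(n), key=lambda i: estimates[i])

        # Simulated measurable outcome: 1.0 on success, 0.0 otherwise.
        reward = 1.0 if rng.random() < success_rates[arm] else 0.0

        # Incremental update of the running mean for the chosen option.
        pulls[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]

    return pulls, estimates


pulls, estimates = epsilon_greedy([0.02, 0.05, 0.20])
print("times each option was tried:", pulls)
print("estimated success rates:", [round(e, 3) for e in estimates])
```

The loop depends entirely on the outcome being measurable, which matches the caveat made elsewhere in this thread: without a reward signal there is nothing for the estimates to update on.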

comment by memeticimagery · 2023-10-22T16:18:42.935Z · LW(p) · GW(p)

Scrolling down this almost stream-of-consciousness post against my better judgement, unable to look away, perfectly mimicked scrolling social media. I am sure you did not intend it but I really liked that aspect.

Loads of good ideas in here. Generally, I think modelling the alphabet agencies is much more important than implied by discussion on LW. Clown attack is a great term, although I'm not entirely sure how much the personal prevention layer really helps the AI safety community, because clown attacks seem like a blunt tool you can apply to the public at large to discredit groups. So, primarily the vulnerability of the public to these clown attacks is what matters, and that is much harder to change.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-10-22T16:31:39.494Z · LW(p) · GW(p)