Shutting Down the Lightcone Offices

post by habryka (habryka4), Ben Pace (Benito) · 2023-03-14T22:47:51.539Z · LW · GW · 103 commentsContents

Background data Ben's Announcement Oliver's 1st message in #Closing-Office-Reasoning Oliver's 2nd message Ben's 1st message in #Closing-Office-Reasoning None 103 comments

Lightcone recently decided to close down a big project we'd been running for the last 1.5 years: An office space in Berkeley for people working on x-risk/EA/rationalist things that we opened August 2021.

We haven't written much about why, but I and Ben had written some messages on the internal office slack to explain some of our reasoning, which we've copy-pasted below. (They are from Jan 26th). I might write a longer retrospective sometime, but these messages seemed easy to share, and it seemed good to have something I can more easily refer to publicly.

Background data

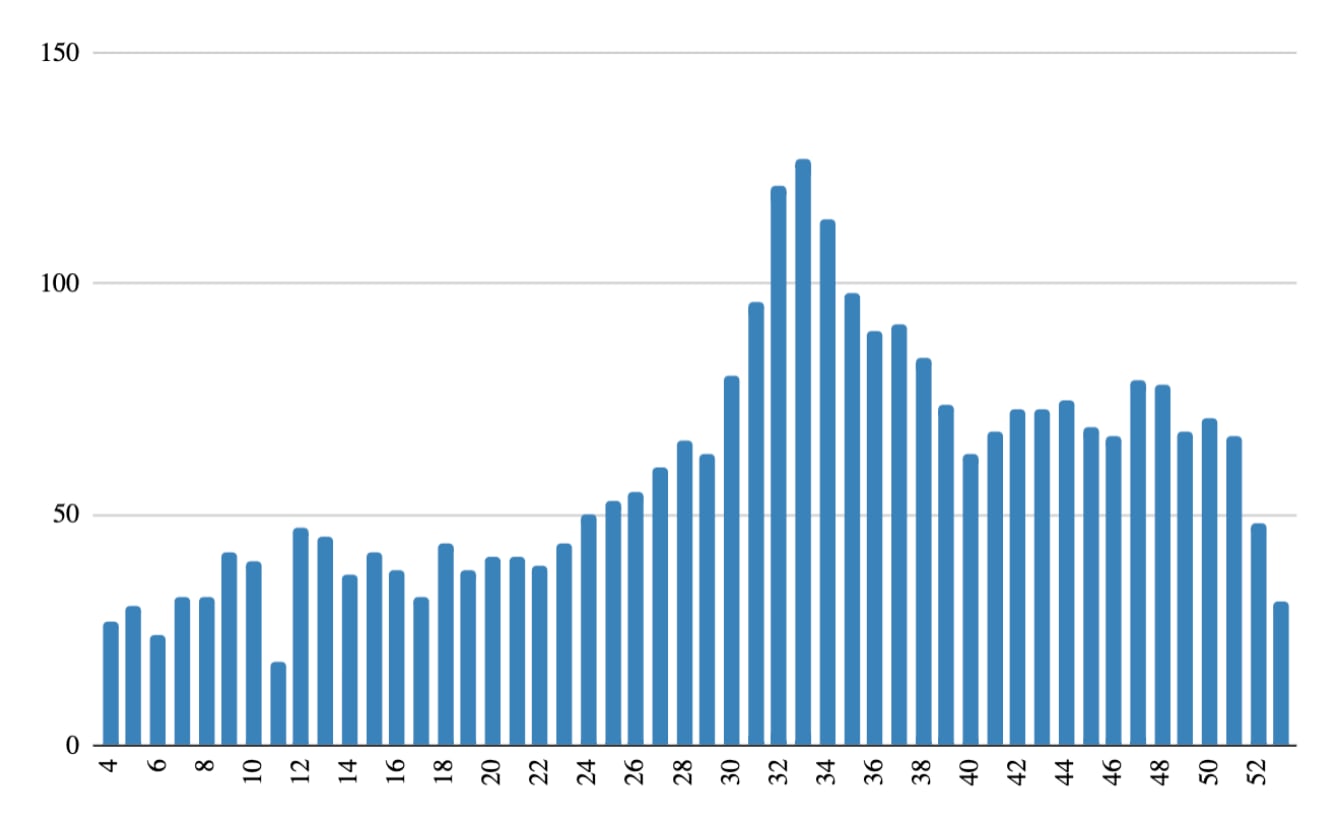

Below is a graph of weekly unique keycard-visitors to the office in 2022.

The x-axis is each week (skipping the first 3), and the y-axis is the number of unique visitors-with-keycards.

Members could bring in guests, which happened quite a bit and isn't measured in the keycard data below, so I think the total number of people who came by the offices is 30-50% higher.

The offices opened in August 2021. Including guests, parties, and all the time not shown in the graphs, I'd estimate around 200-300 more people visited, so in total around 500-600 people used the offices.

The offices cost $70k/month on rent [1], and around $35k/month on food and drink, and ~$5k/month on contractor time for the office. It also costs core Lightcone staff time which I'd guess at around $75k/year.

Ben's Announcement

Closing the Lightcone Offices @channel

Hello there everyone,

Sadly, I'm here to write that we've decided to close down the Lightcone Offices by the end of March. While we initially intended to transplant the office to the Rose Garden Inn, Oliver has decided (and I am on the same page about this decision) to make a clean break going forward to allow us to step back and renegotiate our relationship to the entire EA/longtermist ecosystem, as well as change what products and services we build.

Below I'll give context on the decision and other details, but the main practical information is that the office will no longer be open after Friday March 24th. (There will be a goodbye party on that day.)

I asked Oli to briefly state his reasoning for this decision, here's what he says:

An explicit part of my impact model for the Lightcone Offices has been that its value was substantially dependent on the existing EA/AI Alignment/Rationality ecosystem being roughly on track to solve the world's most important problems, and that while there are issues, pouring gas into this existing engine, and ironing out its bugs and problems, is one of the most valuable things to do in the world.

I had been doubting this assumption of our strategy for a while, even before FTX. Over the past year (with a substantial boost by the FTX collapse) my actual trust in this ecosystem and interest in pouring gas into this existing engine has greatly declined, and I now stand before what I have helped built with great doubts about whether it all will be or has been good for the world.

I respect many of the people working here, and I am glad about the overall effect of Lightcone on this ecosystem we have built, and am excited about many of the individuals in the space, and probably in many, maybe even most, future worlds I will come back with new conviction to invest and build out this community that I have been building infrastructure for for almost a full decade. But right now, I think both me and the rest of Lightcone need some space to reconsider our relationship to this whole ecosystem, and I currently assign enough probability that building things in the space is harmful for the world that I can't really justify the level of effort and energy and money that Lightcone has been investing into doing things that pretty indiscriminately grow and accelerate the things around us.

(To Oli's points I'll add to this that it's also an ongoing cost in terms of time, effort, stress, and in terms of a lack of organizational focus on the other ideas and projects we'd like to pursue.)

Oli, myself, and the rest of the Lightcone team will be available to discuss more about this in the channel #closing-office-reasoning where I invite any and all of you who wish to to discuss this with me, the rest of the lightcone team, and each other.

In the last few weeks I sat down and interviewed people leading the 3 orgs whose primary office is here (FAR, AI Impacts, and Encultured) and 13 other individual contributors. I asked about how this would affect them, how we could ease the change, and generally get their feelings about how the ecosystem is working out.

These conversations lasted on average 45 mins each, and it was very interesting to hear people's thoughts about this, and also their suggestions about other things Lightcone could work on.

These conversations also left me feeling more hopeful about building related community-infrastructure in the future, as I learned of a number of positive effects that I wasn't aware of. These conversations all felt pretty real, I respect all the people involved more, and I hope to talk to many more of you at length before we close.

From the check-ins I've done with people, this seems to me to be enough time to not disrupt any SERI MATS mentorships, and to give the orgs here a comfortable enough amount of time to make new plans, but if this does put you in a tight spot, please talk to us and we'll see how we can help.

The campus team (me, Oli, Jacob, Rafe) will be in the office for lunch tomorrow (Friday at 1pm) to discuss any and all of this with you. We'd like to know how this is affecting you, and I'd really like to know about costs this has for you that I'm not aware of. Please feel free (and encouraged) to just chat with us in your lightcone channels (or in any of the public office channels too).

Otherwise, a few notes:

- The Lighthouse system is going away when the leases end. Lighthouse 1 has closed, and Lighthouse 2 will continue to be open for a few more months.

- If you would like to start renting your room yourself from WeWork, I can introduce you to our point of contact, who I think would be glad to continue to rent the offices. Offices cost between $1k and $6k a month depending on how many desks are in them.

- Here's a form to give the Lightcone team anonymous feedback about this decision (or anything). [Link removed from LW post.]

- To talk with people about future plans starting now and after the offices close, whether to propose plans or just to let others know what you'll be doing, I've made the #future-plans channel and added you all to it.

It's been a thrilling experience to work alongside and get to know so many people dedicated to preventing an existential catastrophe, and I've made many new friends working here, thank you, but I think me and the Lightcone Team need space to reflect and to build something better if Earth is going to have a shot at aligning the AGIs we build.

Oliver's 1st message in #Closing-Office-Reasoning

(In response to a question on the Slack saying "I was hoping you could elaborate more on the idea that building the space may be net harmful.")

I think FTX is the obvious way in which current community-building can be bad, though in my model of the world FTX, while somewhat of outlier in scope, doesn't feel like a particularly huge outlier in terms of the underlying generators. Indeed it feels not that far from par for the course of the broader ecosystems relationship to honesty, aggressively pursuing plans justified by naive consequentialism, and more broadly having a somewhat deceptive relationship to the world.

Though again, I really don't feel confident about the details here and am doing a bunch of broad orienting.

I've also written some EA Forum and LessWrong comments that point to more specific things that I am worried will have or have had a negative effect on the world:

My guess is RLHF research has been pushing on a commercialization bottleneck and had a pretty large counterfactual effect on AI investment, causing a huge uptick in investment into AI and potentially an arms race between Microsoft and Google towards AGI: https://www.lesswrong.com/posts/vwu4kegAEZTBtpT6p/thoughts-on-the-impact-of-rlhf-research?commentId=HHBFYow2gCB3qjk2i [LW(p) · GW(p)]

Thoughts on how responsible EA was for the FTX fraud: https://forum.effectivealtruism.org/posts/Koe2HwCQtq9ZBPwAS/quadratic-reciprocity-s-shortform?commentId=9c3srk6vkQuLHRkc6 [EA(p) · GW(p)]

Tendencies towards pretty mindkilly PR-stuff in the EA community: https://forum.effectivealtruism.org/posts/ALzE9JixLLEexTKSq/cea-statement-on-nick-bostrom-s-email?commentId=vYbburTEchHZv7mn4 [EA(p) · GW(p)]

I feel quite worried that the alignment plan of Anthropic currently basically boils down to "we are the good guys, and by doing a lot of capabilities research we will have a seat at the table when AI gets really dangerous, and then we will just be better/more-careful/more-reasonable than the existing people, and that will somehow make the difference between AI going well and going badly". That plan isn't inherently doomed, but man does it rely on trusting Anthropic's leadership, and I genuinely only have marginally better ability to distinguish the moral character of Anthropic's leadership from the moral character of FTX's leadership, and in the absence of that trust the only thing we are doing with Anthropic is adding another player to an AI arms race.

More broadly, I think AI Alignment ideas/the EA community/the rationality community played a pretty substantial role in the founding of the three leading AGI labs (Deepmind, OpenAI, Anthropic), and man, I sure would feel better about a world where none of these would exist, though I also feel quite uncertain here. But it does sure feel like we had a quite large counterfactual effect on AI timelines.

Before the whole FTX collapse, I also wrote this long list of reasons for why I feel quite doomy about stuff (posted in replies, to not spam everything).

Oliver's 2nd message

(Originally written October 2022) I've recently been feeling a bunch of doom around a bunch of different things, and an associated lack of direction for both myself and Lightcone.

Here is a list of things that I currently believe that try to somehow elicit my current feelings about the world and the AI Alignment community.

- In most worlds RLHF, especially if widely distributed and used, seems to make the world a bunch worse from a safety perspective (by making unaligned systems appear aligned at lower capabilities levels, meaning people are less likely to take alignment problems seriously, and by leading to new products that will cause lots of money to go into AI research, as well as giving a strong incentive towards deception at higher capability levels)

- It's a bad idea to train models directly on the internet, since the internet as an environment makes supervision much harder, strongly encourages agency, has strong convergent goals around deception, and also gives rise to a bunch of economic applications that will cause more money to go into AI

- The EA and AI Alignment community should probably try to delay AI development somehow, and this will likely include getting into conflict with a bunch of AI capabilities organizations, but it's worth the cost

- I don't currently see a way to make AIs very useful for doing additional AI Alignment research, and don't expect any of the current approaches for that to work (like ELK, or trying to imitate humans by doing more predictive modeling of human behavior and then hoping they turn out to be useful), but it sure would be great if we found a way to do this (but like, I don't think we currently know how to do this)

- I am quite worried that it's going to be very easy to fool large groups of humans, and that AI is quite close to seeming very aligned and sympathetic to executives at AI companies, as well as many AI alignment researchers (and definitely large parts of the public). I don't think this will be the result of human modeling, but just the result of pushing the AI into patterns of speech/behaior that we associate with being less threatening and being more trustworthy. In some sense this isn't a catastrophic risk because this kind of deception doesn't cause the AI to dispower the humans, but I do expect it to make actually getting the research to stop or to spend lots of resources on alignment a lot harder later on.

- I do sure feel like a lot of AI alignment research is very suspiciously indistinguishable from capabilities research, and I think this is probably for the obvious bad reasons instead of this being an inherent property of these domains (the obvious bad reason being that it's politically advantageous to brand your research as AI Alignment research and capabilities research simultaneously, since that gives you more social credibility, especially from the EA crowd which has a surprisingly strong talent pool and is also just socially close to a lot of top AI capabilities people)

- I think a really substantial fraction of people who are doing "AI Alignment research" are instead acting with the primary aim of "make AI Alignment seem legit". These are not the same goal, a lot of good people can tell and this makes them feel kind of deceived, and also this creates very messy dynamics within the field where people have strong opinions about what the secondary effects of research are, because that's the primary thing they are interested in, instead of asking whether the research points towards useful true things for actually aligning the AI.

- More broadly, I think one of the primary effects of talking about AI Alignment has been to make more people get really hyped about AGI, and be interested in racing towards AGI. Generally knowing about AGI-Risk does not seem to have made people more hesitant towards racing and slow down, but instead caused them to accelerate progress towards AGI, which seems bad on the margin since I think humanity's chances of survival do go up a good amount with more time.

- It also appears that people who are concerned about AGI risk have been responsible for a very substantial fraction of progress towards AGI, suggesting that there is a substantial counterfactual impact here, and that people who think about AGI all day are substantially better at making progress towards AGI than the average AI researcher (though this could also be explained by other attributes like general intelligence or openness to weird ideas that EA and AI Alignment selects for, though I think that's somewhat less likely)

- A lot of people in AI Alignment I've talked to have found it pretty hard to have clear thoughts in the current social environment, and many of them have reported that getting out of Berkeley, or getting social distance from the core of the community has made them produce better thoughts. I don't really know whether the increased productivity here is born out by evidence, but really a lot of people that I considered promising contributors a few years ago are now experiencing a pretty active urge to stay away from the current social milieu.

- I think all of these considerations in-aggregate make me worried that a lot of current work in AI Alignment field-building and EA-community building is net-negative for the world, and that a lot of my work over the past few years has been bad for the world (most prominently transforming LessWrong into something that looks a lot more respectable in a way that I am worried might have shrunk the overton window of what can be discussed there by a lot, and having generally contributed to a bunch of these dynamics).

- Exercising some genre-saviness, I also think a bunch of this is driven by just a more generic "I feel alienated by my social environment changing and becoming more professionalized and this is robbing it of a lot of the things I liked about it". I feel like when people feel this feeling they often are holding on to some antiquated way of being that really isn't well-adapted to their current environment, and they often come up with fancy rationalizations for why they like the way things used to be.

- I also feel confused about how to relate to the stronger equivocation of ML-skills with AI Alignment skills. I don't personally have much of a problem with learning a bunch of ML, and generally engage a good amount with the ML literature (not enough to be an active ML researcher, but enough to follow along almost any conversation between researchers), but I do also feel a bit of a sense of being personally threatened, and other people I like and respect being threatened, in this shift towards requiring advanced cutting-edge ML knowledge in order to feel like you are allowed to contribute to the field. I do feel a bit like my social environment is being subsumed by and is adopting the status hierarchy of the ML community in a way that does not make me trust what is going on (I don't particularly like the status hierarchy and incentive landscape of the ML community, which seems quite well-optimized to cause human extinction)

- I also feel like the EA community is being very aggressive about recruitment in a way that locally in the Bay Area has displaced a lot of the rationality community, and I think this is broadly bad, both for me personally and also because I just think the rationality community had more of the right components to think sanely about AI Alignment, many of which I feel like are getting lost

- I also feel like with Lightcone and Constellation coming into existence, and there being a lot more money and status around, the inner circle dynamics around EA and longtermism and the Bay Area community have gotten a lot worse, and despite being a person who I think generally is pretty in the loop with stuff, have found myself being worried and stressed about being excluded from some important community function, or some important inner circle. I am quite worried that me founding the Lightcone Offices was quite bad in this respect, by overall enshrining some kind of social hierarchy that wasn't very grounded in things I actually care about (I also personally felt a very strong social pressure to exclude interesting but socially slightly awkward people from being in Lightcone that I ended up giving into, and I think this was probably a terrible mistake and really exacerbated the dynamics here)

- I think some of the best shots we have for actually making humanity not go extinct (slowing down AI progress, pivotal acts, intelligence enhancement, etc.) feel like they have a really hard time being considered in the current overton window of the EA and AI Alignment community, and I feel like people being unable to consider plans in these spaces both makes them broadly less sane, but also just like prevents work from happening in these areas.

- I get a lot of messages these days about people wanting me to moderate or censor various forms of discussion on LessWrong that I think seem pretty innocuous to me, and the generators of this usually seem to be reputation related. E.g. recently I've had multiple pretty influential people ping me to delete or threaten moderation action against the authors of posts and comments talking about: How OpenAI doesn't seem to take AI Alignment very seriously, why gene drives against Malaria seem like a good idea, why working on intelligence enhancement is a good idea. In all of these cases the person asking me to moderate did not leave any comment of their own trying to argue for their position, before asking me to censor the content. I find this pretty stressful, and also like, most of the relevant ideas feel like stuff that people would have just felt comfortable discussing openly on LW 7 years ago or so (not like, everyone, but there wouldn't have been so much of a chilling effect so that nobody brings up these topics).

Ben's 1st message in #Closing-Office-Reasoning

Note from Ben: I have lightly edited this because I wrote it very quickly at the time

(I drafted this earlier today and didn't give it much of a second pass, forgive me if it's imprecise or poorly written.)

Here are some of the reasons I'd like to move away from providing offices as we have done so far.

- Having two locations comes with a large cost. To track how a space is functioning, what problems people are running into, how the culture changes, what improvements could be made, I think I need to be there at least 20% of my time each week (and ideally ~50%), and that’s a big travel cost to the focus of the lightcone team.

- Offices are a high-commitment abstraction for which it is hard to iterate. In trying to improve a culture, I might try to help people start more new projects, or gain additional concepts that help them understand the world, or improve the standards arguments are held to, or something else. But there's relatively little space for a lot of experimentation and negotiation in an office space — you’ve mostly made a commitment to offer a basic resource and then to get out of people's way.

- The “enculturation to investment” ratio was very lopsided. For example, with SERI MATS, many people came for 2.5 months, for whom I think a better selection mechanism would have been something shaped like a 4-day AIRCS-style workshop to better get to know them and think with them, and then pick a smaller number of the best people from that to invest further into. If I came up with an idea right now for what abstraction I'd prefer, it'd be something like an ongoing festival with lots of events and workshops and retreats for different audiences and different sorts of goals, with perhaps a small office for independent alignment researchers, rather than an office space that has a medium-size set of people you're committed to supporting long-term.

- People did not do much to invest in each other in the office. I think this in part because the office does not capture other parts of people’s lives (e.g. socializing), but also I think most people just didn’t bring their whole spirit to this in some ways, and I’m not really sure why. I think people did not have great aspirations for themselves or each other. I did not feel here that folks had a strong common-spirit — that they thought each other could grow to be world-class people who changed the course of history, and did not wish to invest in each other in that way. (There were some exceptions to note, such as Alex Mennen’s Math Talks, John Wentworth's Framing Practica, and some of the ways that people in the Shard Theory teams worked together with the hope of doing something incredible, which both felt like people were really investing into communal resources and other people.) I think a common way to know whether people are bringing their spirit to something is whether they create art about it — songs, in-jokes, stories, etc. Soon after the start I felt nobody was going to really bring themselves so fully to the space, even though we hoped that people would. I think there were few new projects from collaborations in the space, other than between people who already had a long history.

And regarding the broader ecosystem:

- Some of the primary projects getting resources from this ecosystem do not seem built using the principles and values (e.g. integrity, truth-seeking, x-risk reduction) that I care about — such as FTX, OpenAI, Anthropic, CEA, Will MacAskill's career as a public intellectual — and those that do seem to have closed down or been unsupported (such as FHI, MIRI, CFAR). Insofar as these are the primary projects who will reap the benefits of the resources that Lightcone invests into this ecosystem, I would like to change course.

- The moral maze nature of the EA/longtermist ecosystem has increased substantially over the last two years, and the simulacra level of its discourse has notably risen too. There are many more careerist EAs working here and at events, it’s more professionalized and about networking. Many new EAs are here not because they have a deep-seated passion for doing what’s right and using math to get the answers, but because they’re looking for an interesting, well-paying job in a place with nice nerds. Or are just noticing that there’s a lot of resources being handed out in a very high-trust way. One of the people I interviewed at the office said they often could not tell whether a newcomer was expressing genuine interest in some research, or was trying to figure out “how the system of reward” worked so they could play it better, because the types of questions in both cases seemed so similar. [Added to LW post: I also remember someone joining the offices to collaborate on a project, who explained that in their work they were looking for "The next Eliezer Yudkowsky or Paul Christiano". When I asked what aspects of Eliezer they wanted to replicate, they said they didn't really know much about Eliezer but it was something that a colleague of theirs said a lot.] It also seems to me that the simulacra level of writing on the EA Forum is increasing, whereby language is increasingly used primarily to signal affiliation and policy-preferences rather than to explain how reality works. I am here in substantial part because of people (like Eliezer Yudkowsky and Scott Alexander) honestly trying to explain how the world works in their online writing and doing a damn good job of it, and I feel like there is much less of that today in the EA/longtermist ecosystem. This makes the ecosystem much harder to direct, to orient within, and makes it much harder to trust that resources intended for a given purpose will not be redirected by the various internal forces that grow against the intentions of the system.

- The alignment field that we're supporting seems to me to have pretty little innovation and pretty bad politics. I am irritated by the extent to which discussion is commonly framed around a Paul/Eliezer dichotomy, even while the primary person taking orders of magnitudes more funding and staff talent (Dario Amodei) has barely explicated his views on the topic and appears (from a distance) to have disastrously optimistic views about how easy alignment will be and how important it is to stay competitive with state of the art models. [Added to LW post: I also generally dislike the dynamics of fake-expertise and fake-knowledge I sometimes see around the EA/x-risk/alignment places.

- I recall at EAG in Oxford a year or two ago, people were encouraged to "list their areas of expertise" on their profile, and one person who works in this ecosystem listed (amongst many things) "Biorisk" even though I knew the person had only been part of this ecosystem for <1 year and their background was in a different field.

- It also seems to me like people who show any intelligent thought or get any respect in the alignment field quickly get elevated to "great researchers that new people should learn from" even though I think that there's less than a dozen people who've produced really great work, and mostly people should think pretty independently about this stuff.

- I similarly feel pretty worried by how (quite earnest) EAs describe people or projects as "high impact" when I'm pretty sure that if they reflected on their beliefs, they honestly wouldn't know the sign of the person or project they were talking about, or estimate it as close-to-zero.]

How does this relate to the office?

A lot of the boundary around who is invited to the offices has been determined by:

- People whose x-risk reduction work the Lightcone team respects or is actively excited about

- People and organizations in good standing in the EA/longtermist ecosystem (e.g. whose research is widely read, who has major funding from OpenPhil/FTX, who have organizations that have caused a lot to happen, etc) and the people working and affiliated with them

- Not-people who we think would (sadly) be very repellent to many people to work in the space (e.g. lacking basic social skills, or who many people find scary for some reason) or who we think have violated important norms (e.g. lying, sexual assault, etc).

The 2nd element has really dominated a lot of my choices here in the last 12 months, and (as I wrote above) this is a boundary that is increasingly filled with people who I don't believe are here because they care about ethics, who I am not aware have done any great work, who I am not aware of having strong or reflective epistemologies. Even while massive amounts of resources are being poured into the EA/longtermist ecosystem, I'd like to have a far more discerning boundary around the resources I create.

- ^

The office rent cost about 1.5x what it needed to be. We started in a WeWork because we were prototyping whether people even wanted an office, and wanted to get started quickly (the office was up and running in 3 weeks instead of going through the slower process of signing a 12-24 month lease). Then we were in a state for about a year of figuring out where to move to long-term, often wanting to preserve the flexibility of being able to move out within 2 months.

103 comments

Comments sorted by top scores.

comment by Thomas Larsen (thomas-larsen) · 2023-03-15T05:19:26.378Z · LW(p) · GW(p)

I think a really substantial fraction of people who are doing "AI Alignment research" are instead acting with the primary aim of "make AI Alignment seem legit". These are not the same goal, a lot of good people can tell and this makes them feel kind of deceived, and also this creates very messy dynamics within the field where people have strong opinions about what the secondary effects of research are, because that's the primary thing they are interested in, instead of asking whether the research points towards useful true things for actually aligning the AI.

This doesn't feel right to me, off the top of my head, it does seem like most of the field is just trying to make progress. For most of those that aren't, it feels like they are pretty explicit about not trying to solve alignment, and also I'm excited about most of the projects. I'd guess like 10-20% of the field are in the "make alignment seem legit" camp. My rough categorization:

Make alignment progress:

- Anthropic Interp

- Redwood

- ARC Theory

- Conjecture

- MIRI

- Most independent researchers that I can think of (e.g. John, Vanessa, Steven Byrnes, the MATS people I know)

- Some of the safety teams at OpenAI/DM

- Aligned AI

- Team Shard

make alignment seem legit:

CAISsafe.ai- Anthropic scaring laws

- ARC Evals (arguably, but it seems like this isn't quite the main aim)

- Some of the safety teams at OpenAI/DM

- Open Phil (I think I'd consider Cold Takes to be doing this, but it doesn't exactly brand itself as alignment research)

What am I missing? I would be curious which projects you feel this way about.

Replies from: habryka4, ESRogs, 6nne, evhub↑ comment by habryka (habryka4) · 2023-03-15T07:18:57.646Z · LW(p) · GW(p)

This list seems partially right, though I would basically put all of Deepmind in the "make legit" category (I think they are genuinely well-intentioned about this, but I've had long disagreements with e.g. Rohin about this in the past). As a concrete example of this, whose effects I actually quite like, think of the specification gaming list. I think the second list is missing a bunch of names and instances, in-particular a lot of people in different parts of academia, and a lot of people who are less core "AINotKillEveryonism" flavored.

Like, let's take "Anthropic Capabilities" for example, which is what the majority of people at Anthropic work on. Why are they working on it?

They are working on it partially because this gives Anthropic access to state of the art models to do alignment research on, but I think in even greater parts they are doing it because this gives them a seat at the table with the other AI capabilities orgs and makes their work seem legitimate to them, which enables them to both be involved in shaping how AI develops, and have influence over these other orgs.

I think this goal isn't crazy, but I do get a sense that the overall strategy for Anthropic is very much not "we are trying to solve the alignment problem" and much more "we are trying to somehow get into a position of influence and power in the AI space so that we can then steer humanity in directions we care about" while also doing alignment research, but thinking that most of their effect on the world doesn't come from the actual alignment research they produce (I do appreciate that Anthropic is less pretending to just do the first thing a bunch, which I think is better).

I also disagree with you on "most independent researchers". I think the people you list definitely have that flavor, but at least in my LTFF work we've funded more people whose primary plan was something much closer to the "make it seem legit" branch. Indeed this is basically the most common reason I see people get PhDs, of which we funded a lot.

I feel confused about Conjecture. I had some specific run-ins with them that indeed felt among the worst offenders of trying to primarily optimize for influence, but some of the people seem genuinely motivated by making progress. I currently think it's a mixed bag.

I could list more, but this feels like a weird context in which to give my takes on everyone's AI Alignment research, and seems like it would benefit from some more dedicated space. Overall, my sense is in-terms of funding and full-time people, things are skewed around 70/30 in favor of "make legit", and I do think there are a lot of great people who are trying to genuinely solve the problem.

Replies from: rohinmshah, lc, akash-wasil, Spencer Becker-Kahn↑ comment by Rohin Shah (rohinmshah) · 2023-03-15T08:27:27.426Z · LW(p) · GW(p)

(I realize this is straying pretty far from the intent of this post, so feel free to delete this comment)

I totally agree that a non-trivial portion of DeepMind's work (and especially my work) is in the "make legit" category, and I stand by that as a good thing to do, but putting all of it there seems pretty wild. Going off of a list I previously wrote about DeepMind work (this comment [LW(p) · GW(p)]):

We do a lot of stuff, e.g. of the things you've listed, the Alignment / Scalable Alignment Teams have done at least some work on the following since I joined in late 2020:

- Eliciting latent knowledge (see ELK prizes, particularly the submission from Victoria Krakovna & Vikrant Varma & Ramana Kumar)

- LLM alignment (lots of work discussed in the podcast with Geoffrey [LW · GW] you mentioned)

- Scalable oversight (same as above)

- Mechanistic interpretability (unpublished so far)

- Externalized Reasoning Oversight (my guess is that this will be published soon) (EDIT: this paper)

- Communicating views on alignment (e.g. the post you linked [LW · GW], the writing that I do on this forum is in large part about communicating my views)

- Deception + inner alignment (in particular examples of goal misgeneralization)

- Understanding agency (see e.g. discovering agents [LW · GW], most of Ramana's posts [LW · GW])

And in addition we've also done other stuff like

- Learning more safely when doing RL

- Addressing reward tampering with decoupled approval

- Understanding agent incentives with CIDs

I'm probably forgetting a few others.

(Note that since then the mechanistic interpretability team published Tracr.)

Of this, I think "examples of goal misgeneralization" is primarily "make alignment legit", while everything else is about making progress on alignment. (I see the conceptual progress towards specifically naming and describing goal misgeneralization as progress on alignment, but that was mostly finished within-the-community by the time we were working on the examples.)

(Some of the LLM alignment work and externalized reasoning oversight work has aspects of "making alignment legit" but it also seems like progress on alignment -- in particular I think I learn new empirical facts about how well various techniques work from both.)

I think the actual crux here is how useful the various empirical projects are, where I expect you (and many others) think "basically useless" while I don't.

In terms of fraction of effort allocated to "make alignment legit", I think it's currently about 10% of the Alignment and Scalable Alignment teams, and it was more like 20% while the goal misgeneralization project was going on. (This is not counting LLM alignment and externalized reasoning oversight as "make alignment legit".)

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-03-15T19:03:25.239Z · LW(p) · GW(p)

I mean, I think my models here come literally from conversations with you, where I am pretty sure you have said things like (paraphrased) "basically all the work I do at Deepmind and the work of most other people I work with at Deepmind is about 'trying to demonstrate the difficulty of the problem' and 'convincing other people at Deepmind the problem is real'".

In as much as you are now claiming that is only 10%-20% of the work, that would be extremely surprising to me and I do think would really be in pretty direct contradiction with other things we have talked about.

Like, yes, of course if you want to do field-building and want to get people to think AI Alignment is real, you will also do some alignment research. But I am talking about the balance of motivations, not the total balance of work. My sense is most of the motivation for people at the Deepmind teams comes from people thinking about how to get other people at Deepmind to take AI Alignment seriously. I think that's a potentially valuable goal, but indeed it is also the kind of goal that often gets represented as someone just trying to make direct progress on the problem.

Replies from: rohinmshah, Raemon↑ comment by Rohin Shah (rohinmshah) · 2023-03-15T22:41:24.597Z · LW(p) · GW(p)

Hmm, this is surprising. Some claims I might have made that could have led to this misunderstanding, in order of plausibility:

- [While I was working on goal misgeneralization] "Basically all the work that I'm doing is about convincing other people that the problem is real". I might have also said something like "and most people I work with" intending to talk about my collaborators on goal misgeneralization rather than the entire DeepMind safety team(s); for at least some of the time that I was working on goal misgeneralization I was an individual contributor so that would have been a reasonable interpretation.

- "Most of my past work hasn't made progress on the problem" -- this would be referring to papers that I started working on before believing that scaled up deep learning could lead to AGI without additional insights, which I think ended up solving the wrong problem because I had a wrong model of what the problem was. (But I wouldn't endorse "I did this to make alignment legit", I was in fact trying to solve the problem as I saw it.) (I also did lots of conceptual work that I think did make progress but I have a bad habit of using phrases like "past work" to only mean papers.)

- "[Particular past work] didn't make progress on the problem, though it did explain a problem well" -- seems very plausible that I said this about some past DeepMind work.

I do feel pretty surprised if, while I was at DeepMind, I ever intended to make the claim that most of the DeepMind safety team(s) were doing work based on a motivation that was primarily about demonstrating difficulty / convincing other people. (Perhaps I intentionally made such a claim while I wasn't at DeepMind; seems a lot easier for me to have been mistaken about that before I was actually at DeepMind, but honestly I'd still be pretty surprised.)

My sense is most of the motivation for people at the Deepmind teams comes from people thinking about how to get other people at Deepmind to take AI Alignment seriously.

Idk how you would even theoretically define a measure for this that I could put numbers on, but I feel like if you somehow did do it, I'd probably think it was <50% and >10%.

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-03-15T23:21:52.078Z · LW(p) · GW(p)

[While I was working on goal misgeneralization] "Basically all the work that I'm doing is about convincing other people that the problem is real". I might have also said something like "and most people I work with" intending to talk about my collaborators on goal misgeneralization rather than the entire DeepMind safety team(s); for at least some of the time that I was working on goal misgeneralization I was an individual contributor so that would have been a reasonable interpretation.

This seems like the most likely explanation. Decent chance I interpreted "and most people I work with" as referring to the rest of the Deepmind safety team.

I still feel confused about some stuff, but I am happy to let things stand here.

↑ comment by Raemon · 2023-03-15T20:31:44.867Z · LW(p) · GW(p)

fyi your phrasing here is different from what I initially interpreted "make AI safety seem legit".

like there's maybe a few things someone might mean if they say "they're working on AI Alignment research"

- they are pushing forward the state of the art of deep alignment understanding

- they are orienting to the existing field of alignment research / upskilling

- they are conveying to other AI researchers "here is what the field of alignment is important and why"

- they are trying to make AI alignment feel high status, so that they feel safe in their career and social network, while also getting to feel important

(and of course people can be doing a mixture of the above, or 5th options I didn't lisT)

I interpreted you initially as saying #4, but it sounds like you/Rohin here are talking about #3. There are versions of #3 that are secretly just #4 without much theory-of-change, but, idk, I think Rohin's stated goal here is just pretty reasonable and definitely something I want in my overall AI Alignment Field portfolio. I agree you should avoid accidentally conflating it with #1.

(i.e. this seems related to a form of research-debt, albeit focused on bridging the gap between one field and another, rather than improving intra-field research debt)

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-03-15T21:41:43.463Z · LW(p) · GW(p)

Yep, I am including 3 in this. I also think this is something pretty reasonable for someone in the field to do, but when most of your field is doing that I think quite crazy and bad things happen, and also it's very easy to slip into doing 4 instead.

↑ comment by lc · 2023-03-15T08:43:50.771Z · LW(p) · GW(p)

They are working on it partially because this gives Anthropic access to state of the art models to do alignment research on, but I think in even greater parts they are doing it because this gives them a seat at the table with the other AI capabilities orgs and makes their work seem legitimate to them, which enables them to both be involved in shaping how AI develops, and have influence over these other orgs.

...Am I crazy or is this discussion weirdly missing the third option of "They're doing it because they want to build a God-AI and 'beat the other orgs to the punch'"? That is completely distinct from signaling competence to other AGI orgs or getting yourself a "seat at the table" and it seems odd to categorize the majority of Anthropic's aggslr8ing as such.

↑ comment by Orpheus16 (akash-wasil) · 2023-03-15T15:36:40.493Z · LW(p) · GW(p)

It seems to me like one (often obscured) reason for the disagreement between Thomas and Habryka is that they are thinking about different groups of people when they define "the field."

To assess the % of "the field" that's doing meaningful work, we'd want to do something like [# of people doing meaningful work]/[total # of people in the field].

Who "counts" in the denominator? Should we count anyone who has received a grant from the LTFF with the word "AI safety" in it? Only the ones who have contributed object-level work? Only the ones who have contributed object-level work that passes some bar? Should we count the Anthropic capabilities folks? Just the EAs who are working there?

My guess is that Thomas was using more narrowly defined denominator (e.g., not counting most people who got LTFF grants and went off to to PhDs without contributing object-level alignment stuff; not counting most Anthropic capabilities researchers who have never-or-minimally engaged with the AIS community) whereas Habryka was using a more broadly defined denominator.

I'm not certain about this, and even if it's true, I don't think it explains the entire effect size. But I wouldn't be surprised if roughly 10-30% of the difference between Thomas and Habryka might come from unstated assumptions about who "counts" in the denominator.

(My guess is that this also explains "vibe-level" differences to some extent. I think some people who look out into the community and think "yeah, I think people here are pretty reasonable and actually trying to solve the problem and I'm impressed by some of their work" are often defining "the community" more narrowly than people who look out into the community and think "ugh, the community has so much low-quality work and has a bunch of people who are here to gain influence rather than actually try to solve the problem.")

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-03-15T19:34:40.111Z · LW(p) · GW(p)

This sounds like a solid explanation for the difference for someone totally uninvolved with the Berkeley scene.

Though I'm surprised there's no broad consensus on even basic things like this in 2023.

In game terms, if everyone keeps their own score separately then it's no wonder a huge portion of effort will, in aggregate, go towards min-maxing the score tracking meta-game.

↑ comment by carboniferous_umbraculum (Spencer Becker-Kahn) · 2023-03-15T10:33:07.851Z · LW(p) · GW(p)

Something ~ like 'make it legit' has been and possibly will continue to be a personal interest of mine.

I'm posting this after Rohin entered this discussion - so Rohin, I hope you don't mind me quoting you like this, but fwiw I was significantly influenced by this comment [EA(p) · GW(p)] on Buck's old talk transcript 'My personal cruxes for working on AI safety [EA · GW]'. (Rohin's comment repeated here in full and please bear in mind this is 3 years old; his views I'm sure have developed and potentially moved a lot since then:)

Replies from: rohinmshah

I enjoyed this post, it was good to see this all laid out in a single essay, rather than floating around as a bunch of separate ideas.That said, my personal cruxes and story of impact are actually fairly different: in particular, while this post sees the impact of research as coming from solving the technical alignment problem, I care about other sources of impact as well, including:

1. Field building: Research done now can help train people who will be able to analyze problems and find solutions in the future, when we have more evidence about what powerful AI systems will look like.

2. Credibility building: It does you no good to know how to align AI systems if the people who build AI systems don't use your solutions. Research done now helps establish the AI safety field as the people to talk to in order to keep advanced AI systems safe.

3. Influencing AI strategy: This is a catch all category meant to include the ways that technical research influences the probability that we deploy unsafe AI systems in the future. For example, if technical research provides more clarity on exactly which systems are risky and which ones are fine, it becomes less likely that people build the risky systems (nobody _wants_ an unsafe AI system), even though this research doesn't solve the alignment problem.

As a result, cruxes 3-5 in this post would not actually be cruxes for me (though 1 and 2 would be).

↑ comment by Rohin Shah (rohinmshah) · 2023-03-15T19:23:24.074Z · LW(p) · GW(p)

I still endorse that comment, though I'll note that it argues for the much weaker claims of

- I would not stop working on alignment research if it turned out I wasn't solving the technical alignment problem

- There are useful impacts of alignment research other than solving the technical alignment problem

(As opposed to something more like "the main thing you should work on is 'make alignment legit'".)

(Also I'm glad to hear my comments are useful (or at least influential), thanks for letting me know!)

↑ comment by ESRogs · 2023-03-15T22:24:26.887Z · LW(p) · GW(p)

- CAIS

Can we adopt a norm of calling this Safe.ai? When I see "CAIS", I think of Drexler's "Comprehensive AI Services".

Replies from: DanielFilan, jan-kulveit↑ comment by DanielFilan · 2023-03-16T00:12:46.293Z · LW(p) · GW(p)

Oh now the original comment makes more sense, thanks for this clarification.

↑ comment by Jan Kulveit (jan-kulveit) · 2023-03-17T21:48:37.603Z · LW(p) · GW(p)

+1 I was really really upset safe.ai decided to use an established acronym for something very different

↑ comment by 6nne · 2023-03-15T18:43:24.863Z · LW(p) · GW(p)

Could someone explain exactly what "make AI alignment seem legit” means in this thread? I’m having trouble understanding from context.

- “Convince people building AI to utilize AI alignment research”?

- “Make the field of AI alignment look serious/professional/high-status”?

- “Make it look like your own alignment work is worthy of resources”?

- “Make it look like you’re making alignment progress even if you’re not”?

A mix of these? Something else?

Replies from: JamesPayor↑ comment by James Payor (JamesPayor) · 2023-03-15T19:11:46.403Z · LW(p) · GW(p)

Yeah, all four of those are real things happening, and are exactly the sorts of things I think the post has in mind.

I take "make AI alignment seem legit" to refer to a bunch of actions that are optimized to push public discourse and perceptions around. Here's a list of things that come to my mind:

- Trying to get alignment research to look more like a mainstream field, by e.g. funding professors and PhD students who frame their work as alignment and giving them publicity, organizing conferences that try to rope in existing players who have perceived legitimacy, etc

- Papers like Concrete Problems in AI Safety that try to tie AI risk to stuff that's already in the overton window / already perceived as legitimate

- Optimizing language in posts / papers to be perceived well, by e.g. steering clear of the part where we're worried AI will literally kill everyone

- Efforts to make it politically untenable for AI orgs to not have some narrative around safety

Each of these things seems like they have a core good thing, but according to me they've all backfired to the extend that they were optimized to avoid the thorny parts of AI x-risk, because this enables rampant goodharting. Specifically I think the effects of avoiding the core stuff have been bad, creating weird cargo cults around alignment research, making it easier for orgs to have fake narratives about how they care about alignment, and etc.

↑ comment by evhub · 2023-03-15T06:45:58.721Z · LW(p) · GW(p)

Anthropic scaring laws

Personally, I think "Discovering Language Model Behaviors with Model-Written Evaluations [LW · GW]" is most valuable because of what it demonstrates from a scientific perspective, namely that RLHF and scale make certain forms of agentic behavior worse.

comment by johnswentworth · 2023-03-16T01:00:56.565Z · LW(p) · GW(p)

Based on my own retrospective views of how lightcone's office went less-than-optimally, I recently gave some recommendations to someone maybe setting up another alignment research space. (Background: I've been working in the lightcone office since shortly after it opened.) They might be of interest to people mining this post for insights on how to execute similar future spaces. Here they are, lightly edited:

- I recommend selecting for people who want to understand agents, instead of people who want to reduce AI X-risk. When I think about people who bring an attitude of curiosity/exploration to alignment work, the main unifying pattern I notice is that they want to understand agents, as opposed to just avoid doom.

- I recommend selecting for people who are self-improving a lot, and/or want to help others improve a lot. Alex Turner or Nate Soares are good examples of people who score highly on this axis.

- For each of the above, I recommend that you aim for a clear majority (i.e. at least 60-70%) of people in the office to score highly on the relevant metric. So i.e. aim for at least 60-70% of people to be trying to understand agents, and separately at least 60-70% of people be trying to self-improve a lot and/or help others self-improve a lot.

- (The reasoning here is about steering the general vibe, kinds of conversations people have by default, that sort of thing. Which characteristics to select for obviously depend on what kind of vibe you want.)

- (One key load-bearing point about both of the above characteristics is that they're not synonymous with general competence or status. Regardless of what vibe you're going for, the characteristics on which you select should be such that there are competent and/or high-status people who you'd say "no" to. Otherwise, you'll probably slide much harder into status dynamics.)

- I recommend maintaining a very low fraction of people who are primarily doing meta-stuff, i.e. field-building, strategizing, forecasting, etc.

- (This is also about steering vibe and default conversation topics. You don't want enough meta-people that they reach critical concentration for conversational purposes. Also, critical concentration tends to be lower for more-accessible topics. This is one of the major places where I think lightcone went wrong; conversations defaulted to meta-stuff far too much as a result.)

- It might be psychologically helpful to have people pay for their office space, even if it's heavily subsidized by grant money. If you give something away for free, there will always be people lined up to take it, which forces you to gatekeep a lot. Gatekeeping in turn amplifies the sort of unpleasant status dynamics which burned out the lightcone team pretty hard; that's what happens when allocation of scarce resources is by status rather than by money. If e.g. the standard guest policy is "sure, you can bring guests/new people, but you need to pay for them" (maybe past some minimum allowance), then there will be a lot fewer people who you need to say "no" to and who feel like shit as a result.

↑ comment by evhub · 2023-03-16T21:07:28.028Z · LW(p) · GW(p)

I recommend selecting for people who want to understand agents, instead of people who want to reduce AI X-risk.

Strong disagree. I think locking in particular paradigms of how to do AI safety research would be quite bad.

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-03-17T04:28:29.999Z · LW(p) · GW(p)

That seems right to me, but I interpreted the above for advice for one office, potentially a somewhat smaller one. Seems fine to me to have one hub for people who think more through the lens of agency.

Replies from: lelapin↑ comment by Jonathan Claybrough (lelapin) · 2023-03-20T08:24:48.374Z · LW(p) · GW(p)

I mostly endorse having one office concentrate on one research agenda and be able to have high quality conversations on it, and the stated numbers of maybe 10 to 20% people working on strategy/meta sounds fine in that context. Still I want to emphasize how crucial they are - If you have no one to figure out the path between your technical work and overall reducing risk, you're probably missing better paths and approaches (and maybe not realizing your work is useless).

Overall I'd say we don't have enough strategy work being done, and believe it's warranted to have spaces with 70% of people working on strategy/meta. I don't think it was bad if the Lightcone office had a lot of strategy work. (We probably also don't have enough technical alignment work, having more of both is probably good, if we coordinate properly)

comment by Thomas Larsen (thomas-larsen) · 2023-03-15T01:33:20.404Z · LW(p) · GW(p)

I personally benefitted tremendously from the Lightcone offices, especially when I was there over the summer during SERI MATS. Being able to talk to lots of alignment researchers and other aspiring alignment researchers increased my subjective rate of alignment upskilling by >3x relative to before, when I was in an environment without other alignment people.

Thanks so much to the Lightcone team for making the office happen. I’m sad (emotionally, not making a claim here whether it was the right decision or not) to see it go, but really grateful that it existed.

comment by maia · 2023-03-15T23:32:35.107Z · LW(p) · GW(p)

"The EA and rationality communities might be incredibly net negative" is a hell of a take to be buried in a post about closing offices.

:-(

Replies from: Raemon↑ comment by Raemon · 2023-03-15T23:58:29.257Z · LW(p) · GW(p)

Part of the point here is Oli, Ben and the rest of the team are still working through our thoughts/feelings on the subject, didn't feel in a good space to write any kind "here's Our Take™" post. i.e the point here was not meant to do "narrative setting"

But, it seemed important to get the information about our reasoning out there. I felt it was valuable to get some version of this post shipped soon, and this was the version we all felt pretty confident about rushing out the door without angsting about exactly what to say.

(Oli may have a somewhat different frame about what happened and his motivations)

Replies from: maia, lelapin↑ comment by maia · 2023-03-16T00:07:52.075Z · LW(p) · GW(p)

That all makes sense. It does feel like this is worth a larger conversation now that people are thinking about it, and I don't think you guys are the only ones.

I'm reminded of this Sam Altman tweet: https://mobile.twitter.com/sama/status/1621621724507938816

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2023-03-16T00:34:48.121Z · LW(p) · GW(p)

To give credit where it's due, I'm impressed that someone could ask the question whether EA and Rationality were net negative from our values, and while I suspect that an honest investigation would say it wasn't net negative, as Scott Garrabrant said, Yes requires the possibility of No, and there's an outside chance of an investigation returning that EA/Rationality is net negative.

Also, I definitely agree that we probably should talk about things that are outside the Overton Window more.

Re Sam Altman's tweet, I actually think this is reasonably neutral, from my vantage point, maybe because I'm way more optimistic on AI risk and AI Alignment than most of LW.

↑ comment by Jonathan Claybrough (lelapin) · 2023-03-20T08:34:33.055Z · LW(p) · GW(p)

I've multiple times been perplexed as to what the past events which can lead to this kind of take (over 7 years ago, EA/Rationality community's influence probably accelerated openAI's creation) have to do with today's shutting down of the offices.

Are there current, present day things going on in the EA and rationality community which you think warrant suspecting them of being incredibly net negative (causing worse worlds, conditioned on the current setup)? Things done in the last 6 months ? At Lightcone Offices ? (Though I'd appreciate specific examples, I'd already greatly appreciate knowing if there is something in the abstract and prefer a quick response to that level of precision than nothing)

I've imagined an answer, is the following on your mind ?

"EAs are more about saying they care about numbers than actually caring about numbers, and didn't calculate downside risk enough in the past. The past events reveal this attitude and because it's not expected to have changed, we can expect it to still be affecting current EAs, who will continue causing great harm because of not actually caring for downside risk enough. "

↑ comment by habryka (habryka4) · 2023-03-20T09:01:26.914Z · LW(p) · GW(p)

Are there current, present day things going on in the EA and rationality community which you think warrant suspecting them of being incredibly net negative (causing worse worlds, conditioned on the current setup)? Things done in the last 6 months ?

I mean yes! Don't I mention a lot of them in the post above?

I mean FTX happened in the last 6 months! That caused incredibly large harm for the world.

OpenAI and Anthropic are two of the most central players in an extremely bad AI arms race that is causing enormous harm. I really feel like it doesn't take a lot of imagination to think about how our extensive involvement in those organizations could be bad for the world. And a huge component of the Lightcone Offices was causing people to work at those organizations, as well as support them in various other ways.

EAs are more about saying they care about numbers than actually caring about numbers, and didn't calculate downside risk enough in the past. The past events reveal this attitude and because it's not expected to have changed, we can expect it to still be affecting current EAs, who will continue causing great harm because of not actually caring for downside risk enough.

No, this does not characterize my opinion very well. I don't think "worrying about downside risk" is a good pointer to what I think will help, and I wouldn't characterize the problem that people have spent too little effort or too little time on worrying about downside risk. I think people do care about downside risk, I just also think there are consistent and predictable biases that cause people to be unable to stop, or be unable to properly notice certain types of downside risk, though that statement feels in my mind kind of vacuous and like it just doesn't capture the vast majority of the interesting detail of my model.

Replies from: lelapin, sharmake-farah↑ comment by Jonathan Claybrough (lelapin) · 2023-03-21T08:12:33.938Z · LW(p) · GW(p)

Thanks for the reply !

The main reason I didn't understand (despite some things being listed) is I assumed none of that was happening at Lightcone (because I guessed you would filter out EAs with bad takes in favor of rationalists for example). The fact that some people in EA (a huge broad community) are probably wrong about some things didn't seem to be an argument that Lightcone Offices would be ineffective as (AFAIK) you could filter people at your discretion.

More specifically, I had no idea "a huge component of the Lightcone Offices was causing people to work at those organizations". That's strikingly more of a debatable move but I'm curious why that happened in the first place ? In my field building in France we talk of x-risk and alignment and people don't want to accelerate the labs but do want to slow down or do alignment work. I feel a bit preachy here but internally it just feels like the obvious move is "stop doing the probably bad thing", but I do understand if you got in this situation unexpectedly that you'll have a better chance burning this place down and creating a fresh one with better norms.

Overall I get a weird feeling of "the people doing bad stuff are being protected again, we should name more precisely who's doing the bad stuff and why we think it's bad" (because I feel aimed at by vague descriptions like field-building, even though I certainly don't feel like I contributed to any of the bad stuff being pointed at)

No, this does not characterize my opinion very well. I don't think "worrying about downside risk" is a good pointer to what I think will help, and I wouldn't characterize the problem that people have spent too little effort or too little time on worrying about downside risk. I think people do care about downside risk, I just also think there are consistent and predictable biases that cause people to be unable to stop, or be unable to properly notice certain types of downside risk, though that statement feels in my mind kind of vacuous and like it just doesn't capture the vast majority of the interesting detail of my model.

So it's not a problem of not caring, but of not succeeding at the task. I assume the kind of errors you're pointing at are things which should happen less with more practiced rationalists ? I guess then we can either filter to only have people who are already pretty good rationalists, or train them (I don't know if there are good results on that side per CFAR).

Replies from: Benito↑ comment by Ben Pace (Benito) · 2023-03-21T09:22:24.999Z · LW(p) · GW(p)

The fact that some people in EA (a huge broad community) are probably wrong about some things didn't seem to be an argument that Lightcone Offices would be ineffective as (AFAIK) you could filter people at your discretion.

I mean, no, we were specifically trying to support the EA community, we do not get to unilaterally decide who is part of the community. People I don't personally have much respect for but are members of the EA community who are putting in the work to be considered members in good standing definitely get to pass through. I'm not going as far as to say this was the only thing going on, I made choices about which parts of the movement seemed like they were producing good work and acting ethically and which parts seemed pretty horrendous and to be avoided, but I would (for instance) regularly make an attempt to welcome people from an area that seemed to have poor connections in the social graph (e.g. the first EA from country X, from org Y, from area-of-work Z etc), even if I wasn't excited about that person or place or area, because it was part of the EA community and it seems very valuable for the community as a whole to have better interconnectedness between the disparate parts. Overall I think the question I asked was closer to "what would a good custodian of the EA community want to use these resources for" rather than "what would Ben or Lightcone want to use these resources for".

As to your confusion about the office, an analogy that might help here is to consider the marketing or recruitment part of a large company, or perhaps a branch of the company that makes a different product from the rest — yes, our part of the organization functioned nicely, and I liked the choices we made, but if some other part of the company is screwing over its customers/staff, or the CEO is stealing money, or the company's product seems unethical to me, it doesn't matter if I like my part of the company, I am contributing to the company's life and output and should act accordingly. I did not work at FTX, I have not worked for OpenAI, but I am heavily supporting an ecosystem that supported these companies, and I anticipate that the resources I contribute will continue to get captured by these sorts of players via some circuitous route.

↑ comment by Noosphere89 (sharmake-farah) · 2023-03-20T14:20:44.244Z · LW(p) · GW(p)

I mean FTX happened in the last 6 months! That caused incredibly large harm for the world.

I agree, but I have very different takeaways on what FTX means for the Rationalist community.

I think the major takeaway is that human society is somewhat more adequate, relative to our values than we think, and this matters.

To be blunt, FTX was always a fraud, because Bitcoin and cryptocurrency violated a fundamental axiom of good money: It's value must be stable, or at least slowly change, and it's not a good store of value due to the wildly unstable price of say a single Bitcoin or cryptocurrency, and the issue is the deeply stupid idea of fixing the supply, which combined with variable demand, led to wild price swings.

It's possible to salvage some value out of crypto, but they can't be tied to real money.

Most groups have way better ideas for money than Bitcoin and cryptocurrency.

OpenAI and Anthropic are two of the most central players in an extremely bad AI arms race that is causing enormous harm. I really feel like it doesn't take a lot of imagination to think about how our extensive involvement in those organizations could be bad for the world. And a huge component of the Lightcone Offices was causing people to work at those organizations, as well as support them in various other ways.

I don't agree, in this world, and this is related to a very important crux in AI Alignment/AI safety: Can it be solved solely via iteration and empirical work? My answer is yes, and one of the biggest examples is Pretraining from Human Feedback, and I'll explain why it's the first real breakthrough of empirical alignment:

-

It almost completely avoids deceptive alignment via the fact that it lets us specify the base goal as human values first before it has the generalization capabilities, and the goal is pretty simple and myopic, so simplicity bias doesn't have as much incentive to make the model deceptively aligned. Basically, we first pretrain the base goal, which is way more outer aligned than the standard MLE goal, and then we let the AI generalize, and this inverts the order of alignment and capabilities, where RLHF and other alignment solutions first give capabilities, then try to align the model. This is of course not going to work all that well compared to PHF. In particular, it means that more capabilities means better and better inner alignment by default.

-

The goal that was best for pretraining from human feedback, conditional training, has a number of outer alignment benefits compared to RLHF and fine-tuning, even without inner alignment being effectively solved and preventing deceptive alignment.

One major benefit is since it's offline training, there is never a way for any model to affect the distribution of data that we use for alignment, so there's never a way or incentive to gradient hack or shift the distribution. In essence, we avoid embedded agency problems by recreating a Cartesian boundary that actually works in an embedded setting. While it will likely fade away in time, we only need to have it work once, and then we can dispense with the Cartesian boundary.

Again, this shows increasing alignment with scale, which is good because we found the holy grail of alignment: A competitive alignment scheme that scales well with model data and allows you to crank capabilities up and get better and better results from alignment.

Here's a link if you're interested:

https://www.lesswrong.com/posts/8F4dXYriqbsom46x5/pretraining-language-models-with-human-preferences [LW · GW]

Finally, I don't think you realize how well we did in getting companies to care about alignment, our how good the fact that LLMs are being pursued first compared to RL first, which means we can have simulators before agentic systems arise.

comment by Nicholas / Heather Kross (NicholasKross) · 2023-03-14T23:38:06.498Z · LW(p) · GW(p)

Extremely strong upvote for Oliver's 2nd message.

Also, not as related: kudos for actually materially changing the course of your organization, something which is hard for most organizations, period.

Replies from: Jacy Reese↑ comment by Jacy Reese Anthis (Jacy Reese) · 2023-03-15T00:53:32.318Z · LW(p) · GW(p)

In particular, I wonder if many people who won't read through a post about offices and logistics would notice and find compelling a standalone post with Oliver's 2nd message and Ben's "broader ecosystem" list—analogous to AGI Ruin: A List of Lethalities [LW · GW]. I know related points have been made elsewhere, but I think 95-Theses-style lists have a certain punch.

comment by Zack_M_Davis · 2025-01-13T00:51:24.778Z · LW(p) · GW(p)

Retrospectives are great, but I'm very confused at the juxtaposition of the Lightcone Offices being maybe net-harmful in early 2023 and Lighthaven being a priority in early 2025 [LW · GW]. Isn't the latter basically just a higher-production-value version of the former? What changed? (Or after taking the needed "space to reconsider our relationship to this whole ecosystem", did you decide that the ecosystem is OK after all?)

Replies from: habryka4, Benito, Vaniver↑ comment by habryka (habryka4) · 2025-01-13T01:03:34.557Z · LW(p) · GW(p)

Lighthaven is quite different from the Lightcone Offices. Some key differences:

- We mostly charge for things! This means that the incentives and social dynamics are a lot less weird and sycophantic, in a lot of different ways. Generally, both the Lightcone Offices and Lighthaven have strongly updated me on charging for things whenever possible, even if it seems like it will result in a lot of net-positive trades and arrangements not happening.

- Lighthaven mostly hosts programs, and doesn't provide office space for a ton of people. There is a set of permanent residents at Lighthaven, but we are talking about like 10-20 people including the Lightcone team, as opposed to the ~100+ people with approximately permanent access to the Lightcone Offices. As I mention in the fundraising post, I expect this set to grow very slowly, and I feel good about supporting everyone in this set (and would feel at the very least very conflicted and probably net bad about providing the same services to everyone who we supported via the Lightcone Offices)

- More broadly, Lighthaven is both a lot more curated, and a lot less insular. We have lots of big events here with people from adjacent communities and ecosystems, and I feel good about the marginal improvement to idea exchange and communication we make here. And then the people and programs we do support more consistently are things I feel good about.

↑ comment by Ben Pace (Benito) · 2025-01-13T01:43:07.230Z · LW(p) · GW(p)

Adding onto this, I would broadly say that the Lightcone team did not update that in-person infrastructure was unimportant, even while our first attempt was an investment into an ecosystem we later came to regret investing in.

Also here's a quote of mine from the OP:

If I came up with an idea right now for what abstraction I'd prefer, it'd be something like an ongoing festival with lots of events and workshops and retreats for different audiences and different sorts of goals, with perhaps a small office for independent alignment researchers, rather than an office space that has a medium-size set of people you're committed to supporting long-term.

I'd say that this is a pretty close description of a key change that we made, that changes my models of the value of the space quite a lot.

↑ comment by Ben Pace (Benito) · 2025-01-13T01:39:41.384Z · LW(p) · GW(p)

For the record, all of Lightcone's community posts and updates from 2023 do not seem to me to be at all good fits for the review, as they're mostly not trying to teach general lessons, and are kinda inside-baseball / navel-gazing, which is not what the annual review is about.

Replies from: Raemon↑ comment by Raemon · 2025-01-13T02:37:35.219Z · LW(p) · GW(p)

Fwiw I disagree, I think the Review is deliberately openended.

Yes there's a specific goal of find the top 50 posts, and to identify important timeless intellectual contributions. But, part of the whole point of the review (as I originally envisioned it) is also to help reflect in a more general sense on "what happened on LessWrong and what can we learn from it?".

I think rather than trying to say "no, don't reflect on particular things that don't fit the most central use case of the Review", it seems actively good to me to take advantage of the openended nature of it to think about less central things. We can learn timeless lessons from posts that weren't, themselves, particularly timeless.

Replies from: Raemon↑ comment by Raemon · 2025-01-13T02:42:23.884Z · LW(p) · GW(p)

i.e. the question "what sort of community institutions are good to build?" is a timeless question. Why should we artificially limit our ability to reflect on that sort of thing during the Review, given that we set the Review up in an openended way that allows us to do that on the margin?

↑ comment by Vaniver · 2025-01-16T20:10:06.278Z · LW(p) · GW(p)

My understanding is that the Lightcone Offices and Lighthaven have 1) overlapping but distinct audiences, with Lightcone Offices being more 'EA' in a way that seemed bad, and 2) distinct use cases, where Lighthaven is more of a conference venue with a bit of coworking whereas Lightcone Offices was basically just coworking.

comment by Mitchell_Porter · 2023-03-16T06:15:24.278Z · LW(p) · GW(p)

Are there any implications for the future of LessWrong.com the online forum? How is the morale and the economic security of the people responsible for keeping this place running?

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-03-16T07:55:43.238Z · LW(p) · GW(p)

I think I might change some things but it seems very unlikely to me I will substantially reduce investment in LessWrong. Funding is scarcer post-FTX, so some things might change a bit, but I do care a lot about LessWrong continuing to get supported, and I also think it's pretty plausible I will substantially ramp up my investment into LW again.

comment by porby · 2023-03-17T21:56:14.793Z · LW(p) · GW(p)

This going to point about 87 degrees off from the main point of the post, so I'm fine with discussing this elsewhere or in DMs or something, but I do wonder how cruxy this is:

More broadly, I think AI Alignment ideas/the EA community/the rationality community played a pretty substantial role in the founding of the three leading AGI labs (Deepmind, OpenAI, Anthropic), and man, I sure would feel better about a world where none of these would exist, though I also feel quite uncertain here. But it does sure feel like we had a quite large counterfactual effect on AI timelines.

I missed the first chunk of your conversation with Dylan at the lurkshop about this, but at the time, it sounded like you suspected "quite large" wasn't 6-48 months, but maybe more than a decade. I could have gotten the wrong impression, but I remember being confused enough that I resolved to hunt you down later to ask (which I promptly forgot to do).