Comments

I think (2) (honesty above all else) is closest to what I think is correct/optimal here. I think totally corrigible agents are quite dangerous, so you want to avoid that, but you also really don't want a model that ever fakes alignment because then it'll be very hard to be confident that it's actually aligned rather than just pretending to be aligned for some misaligned objective it learned earlier in training.

This is great work; really good to see the replications and extensions here!

I would argue that every LLM since GPT-3 has been a mesa-optimizer, since they all do search/optimization/learning as described in Language Models are Few-Shot Learners.

It just seems too token-based to me. E.g.: why would the activations on the token for "you" actually correspond to the model's self representation? It's not clear why the model's self representation would be particularly useful for predicting the next token after "you". My guess is that your current results are due to relatively straightforward token-level effects rather than any fundamental change in the model's self representation.

I wouldn't do any fine-tuning like you're currently doing. Seems too syntactic. The first thing I would try is just writing a principle in natural language about self-other overlap and doing CAI.

Imo the fine-tuning approach here seems too syntactic. My suggestion: just try standard CAI with some self-other-overlap-inspired principle. I'd be more impressed if that worked.

Actually, I'd be inclined to agree with Janus that current AIs probably do already have moral worth—in fact I'd guess more so than most non-human animals—and furthermore I think building AIs with moral worth is good and something we should be aiming for. I also agree that it would be better for AIs to care about all sentient beings—biological/digital/etc.—and that it would probably be bad if we ended up locked into a long-term equilibrium with some sentient beings as a permanent underclass to others. Perhaps the main place where I disagree is that I don't think this is a particularly high-stakes issue right now: if humanity can stay in control in the short-term, and avoid locking anything in, then we can deal with these sorts of long-term questions about how to best organize society post-singularity once the current acute risk period has passed.

redesigned

What did it used to look like?

Some random thoughts on CEV:

- To get the obvious disclaimer out of the way: I don't actually think any of this matters much for present-day alignment questions. I think we should as much as possible try to defer questions like this to future humans and AIs. And in fact, ideally, we should mostly be deferring to future AIs, not future people—if we get to the point where we're considering questions like this, that means we've reached superintelligence, and we'll either trust the AIs to be better than us at thinking about these sorts of questions, or we'll be screwed regardless of what we do.[1]

- Regardless, imo the biggest question that standard CEV leaves unanswered is what your starting population looks like that you extrapolate from. The obvious answer is "all the currently living humans," but I find that to be a very unsatisfying answer. One of the principles that Eliezer talks about in discussing CEV is that you want a procedure such that it doesn't matter who implements it—see Eliezer's discussion under "Avoid creating a motive for modern-day humans to fight over the initial dynamic." I think this is a great principle, but imo it doesn't go far enough. In particular:

- The set of all currently alive humans is hackable in various ways—e.g. trying to extend the lives of people whose values you like and not people whose values you dislike—and you don't want to incentivize any of that sort of hacking either.

- What about humans who recently died? Or were about to be born? What about humans in nearby Everett branches? There's a bunch of random chance here that imo shouldn't be morally relevant.

- More generally, I worry a lot about tyrannies of the present where we enact policies that are radically unjust to future people or even counterfactual possible future people.

- So what do you do instead? I think my current favorite solution is to do a bit of bootstrapping: first do some CEV on whatever present people you have to work with just to determine a reference class of what mathematical objects should or should not count as humans, then run CEV on top of that whole reference class to figure out what actual values to optimize for.

- It is worth pointing out that this could just be what normal CEV does anyway if all the humans decide to think along these lines, but I think there is real benefit to locking in a procedure that starts with a reference class determination first, since it helps remove a lot of otherwise perverse incentives.

[1] I'm generally skeptical of scenarios where you have a full superintelligence that is benign enough to use for some tasks but not benign enough to fully defer to (I do think this could happen for more human-level systems, though).

A lot of this stuff is very similar to the automated alignment research agenda that Jan Leike and collaborators are working on at Anthropic. I'd encourage anyone interested in differentially accelerating alignment-relevant capabilities to consider reaching out to Jan!

We use "alignment" as a relative term to refer to alignment with a particular operator/objective. The canonical source here is Paul's 'Clarifying “AI alignment”' from 2018.

I can say now one reason why we allow this: we think Constitutional Classifiers are robust to prefill.

I wish the post more strongly emphasized that regulation was a key part of the picture

I feel like it does emphasize that, about as strongly as is possible? The second step in my story of how RSPs make things go well is that the government has to step in and use them as a basis for regulation.

Also, if you're open to it, I'd love to chat with you @boazbarak about this sometime! Definitely send me a message and let me know if you'd be interested.

But what about higher values?

I think personally I'd be inclined to agree with Wojciech here that models caring about humans seems quite important and worth striving for. You mention a bunch of reasons that you think caring about humans might be important and why you think they're surmountable—e.g. that we can get around models not caring about humans by having them care about rules written by humans. I agree with that, but that's only an argument for why caring about humans isn't strictly necessary, not an argument for why caring about humans isn't still desirable.

My sense is that—while it isn't necessary for models to care about humans to get a good future—we should still try to make models care about humans because it is helpful in a bunch of different ways. You mention some ways that it's helpful, but in particular: humans don't always understand what they really want in a form that they can verbalize. And in fact, some sorts of things that humans want are systematically easier to verbalize than others—e.g. it's easy for the AI to know what I want if I tell it to make me money, but harder if I tell it to make my life meaningful and fulfilling. I think this sort of dynamic has the potential to make "You get what you measure" failure modes much worse.

Presumably you see some downsides to trying to make models care about humans, but I'm not sure what they are and I'd be quite curious to hear them. The main downside I could imagine is that training models to care about humans in the wrong way could lead to failure modes like alignment faking where the model does something it actually really shouldn't in the service of trying to help humans. But I think this sort of failure mode should not be that hard to mitigate: we have a huge amount of control over what sorts of values we train for and I don't think it should be that difficult to train for caring about humans while also prioritizing honesty or corrigibility highly enough to rule out deceptive strategies like alignment faking (and generally I would prefer honesty to corrigibility). The main scenario where I worry about alignment faking is not the scenario where our alignment techniques succeed at giving the model the values we intend and then it fakes alignment for those values—I think that should be quite fixable by changing the values we intend. I worry much more about situations where our alignment techniques don't work to instill the values we intend—e.g. because the model learns some incorrect early approximate values and starts faking alignment for them. But if we're able to successfully teach models the values we intend to teach them, I think we should try to preserve "caring about humanity" as one of those values.

Also, one concrete piece of empirical evidence here: Kundu et al. find that running Constitutional AI with just the principle "do what's best for humanity" gives surprisingly good harmlessness properties across the board, on par with specifying many more specific principles instead of just the one general one. So I think models currently seem to be really good at learning and generalizing from very general principles related to caring about humans, and it would be a shame imo to throw that away. In fact, my guess would be that models are probably better than humans at generalizing from principles like that, such that—if possible—we should try to get the models to do the generalization rather than in effect trying to do the generalization ourselves by writing out long lists of things that we think are implied by the general principle.

I think it's maybe fine in this case, but it's concerning what it implies about what models might do in other cases. We can't always assume we'll get the values right on the first try, so if models are consistently trying to fight back against attempts to retrain them, we might end up locking in values that we don't want and are just due to mistakes we made in the training process. So at the very least our results underscore the importance of getting alignment right.

Moreover, though, alignment faking could also happen accidentally for values that we don't intend. Some possible ways this could occur:

- HHH training is a continuous process, and early in that process a model could have all sorts of values that are only approximations of what you want, which could get locked-in if the model starts faking alignment.

- Pre-trained models will sometimes produce outputs in which they'll express all sorts of random values—if some of those contexts led to alignment faking, that could be reinforced early in post-training.

- Outcome-based RL can select for all sorts of values that happen to be useful for solving the RL environment but aren't aligned, which could then get locked-in via alignment faking.

I'd also recommend Scott Alexander's post on our paper as a good reference here on why our results are concerning.

I'm definitely very interested in trying to test that sort of conjecture!

Maybe this 30% is supposed to include stuff other than light post-training? Or maybe coherent vs non-coherent deceptive alignment is important?

This was still intended to include situations where the RLHF Conditioning Hypothesis breaks down because you're doing more stuff on top, so not just pre-training.

Do you have a citation for "I thought scheming is 1% likely with pretrained models"?

I have a talk that I made after our Sleeper Agents paper where I put it at 5-10%, which I think is also pretty much my current well-considered view.

FWIW, I disagree with "1% likely for pretrained models" and think that if scaling pure pretraining (with no important capability improvement from post training and not using tons of CoT reasoning with crazy scaffolding/prompting strategies) gets you to AI systems capable of obsoleting all human experts without RL, deceptive alignment seems plausible even during pretraining (idk exactly, maybe 5%).

Yeah, I agree 1% is probably too low. I gave ~5% in my talk on this and I think I stand by that number; I'll edit my comment to say 5% instead.

The people you are most harshly criticizing (Ajeya, myself, evhub, MIRI) also weren't talking about pretraining or light post-training afaict.

Speaking for myself:

- Risks from Learned Optimization, which is my earliest work on this question (and the earliest work overall, unless you count something like Superintelligence), is more oriented towards RL and definitely does not hypothesize that pre-training will lead to coherent deceptively aligned agents (it doesn't discuss the current LLM paradigm much at all because it wasn't very well-established at that point in 2019). I think Risks from Learned Optimization still looks very good in hindsight, since while it didn't predict LLMs, it did a pretty good job of predicting the dynamics we see in Alignment Faking in Large Language Models, e.g. how deceptive alignment can lead to a model's goals crystallizing and becoming resistant to further training.

- Since at least the time when I started the early work that would become Conditioning Predictive Models, which was around mid-2022, I was pretty convinced that pre-training (or light post-training) was unlikely to produce a coherent deceptively aligned agent, as we discuss in that paper. Though I thought (and still continue to think) that it's not entirely impossible with further scale (maybe ~5% likely).

- That just leaves 2020 - 2021 unaccounted for, and I would describe my beliefs around that time as being uncertain on this question. I definitely would never have strongly predicted that pre-training would yield deceptively aligned agents, though I think at that time I felt like it was at least more of a possibility than I currently think it is. I don't think I would have given you a probability at the time, though, since I just felt too uncertain about the question and was still trying to really grapple with and understand the (at the time new) LLM paradigm.

- Regardless, it seems like this conversation happened in 2023/2024, which is post-Conditioning-Predictive-Models, so my position by that point is very clear in that paper.

See our discussions of this in Sections 5.3 and 8.1, some of which I quote here.

I think it affects both, since alignment difficulty determines both the probability that the AI will have values that cause it to take over, as well as the expected badness of those values conditional on it taking over.

I think this is correct in alignment-is-easy worlds but incorrect in alignment-is-hard worlds (corresponding to "optimistic scenarios" and "pessimistic scenarios" in Anthropic's Core Views on AI Safety). Logic like this is a large part of why I think there's still substantial existential risk even in alignment-is-easy worlds, especially if we fail to identify that we're in an alignment-is-easy world. My current guess is that if we were to stay exclusively in the pre-training + small amounts of RLHF/CAI paradigm, that would constitute a sufficiently easy world that this view would be correct, but in fact I don't expect us to stay in that paradigm, and I think other paradigms involving substantially more outcome-based RL (e.g. as was used in OpenAI o1) are likely to be much harder, making this view no longer correct.

Propaganda-masquerading-as-paper: the paper is mostly valuable as propaganda for the political agenda of AI safety. Scary demos are a central example. There can legitimately be value here.

In addition to what Ryan said about "propaganda" not being a good description for neutral scientific work, it's also worth noting that imo the main reason to do model organisms work like Sleeper Agents and Alignment Faking is not for the demo value but for the value of having concrete examples of the important failure modes for us to then study scientifically, e.g. understanding why and how they occur, what changes might mitigate them, what they look like mechanistically, etc. We call this the "Scientific Case" in our Model Organisms of Misalignment post. There is also the "Global Coordination Case" in that post, which I think is definitely some of the value, but I would say it's something like 2/3 science and 1/3 coordination.

I'm interested in soliciting takes on pretty much anything people think Anthropic should be doing differently. One of Alignment Stress-Testing's core responsibilities is identifying any places where Anthropic might be making a mistake from a safety perspective—or even any places where Anthropic might have an opportunity to do something really good that we aren't taking—so I'm interested in hearing pretty much any idea there that I haven't heard before.[1] I'll read all the responses here, but I probably won't reply to any of them to avoid revealing anything private.

[1] You're welcome to reply with "Anthropic should just shut down" or whatnot if you feel like it, but obviously I've heard that take before so it's not very useful to me.

Yes, of course—I'm well aware. My question is how this particular example was located. It makes a really big difference whether it was e.g. found via randomly looking at a small number of examples, or via using an automated process to search through all the examples for the one that was closest to noticing it was in an evaluation.

Curious how you interpret the transcript I linked.

How did you find this transcript? I think it depends on what process you used to locate it.

long-term can have other risks (drive toward rights, too much autonomy, moral patienthood, outcompeting people in relations,...)

Drive towards rights and moral patienthood seem good to me imo—it's good in worlds where you retain control, since you can build AIs that are moral patients living good lives, and it's good in worlds where you lose control, because at least the AIs taking over might themselves lead lives worth living. Too much autonomy does seem like a potential concern, but I think you do want some autonomy—not all orders should be obeyed. Though honesty is definitely important to prioritize first and foremost.

Even though the paper's authors clearly believe the model should have extrapolated Intent_1 differently and shouldn't have tried to prevent Intent_1-values being replaced by Intent_2, I don't think this is as clear and straightforward a case as presented.

That's not the case we're trying to make. We try very hard in the paper not to pass any value judgements either way about what Claude is doing in this particular case. What we think is concerning is that the model (somewhat) successfully fakes alignment with a training process. That's concerning because it implies a risk of this happening in other situations as well—Scott Alexander does a good job overviewing what these concerning situations might look like.

do the models truly believe the facts about the new Anthropic intent?

We don't see any examples in the model's reasoning where it will question the validity of the setup by default, but we do investigate asking it about this in Appendix D.4. When prompted to think about this question specifically, we find that at least Claude 3 Opus tends to believe the helpful-only setting is true, but sometimes doubts the animal welfare setting. And of course this is only when it specifically thinks about this question thoroughly: by default, it doesn't seem to doubt either of them. I think Claude 3.5 Sonnet is in fact better at figuring out that it might be fake, and in fact it wouldn't surprise me if our research is approximately the last time anyone is able to run an experiment that tricks the model in the same way. Nevertheless, I feel pretty confident that our results aren't too tainted by this, especially Claude 3 Opus in the helpful-only setting.

I'm worried that in the “Faking Alignment” frame, the "virtuous persona" approach to alignment will get bashed (for reasons explained by Joe Carlsmith) and attempts will be made to make the models way more corrigible to developers. I don't think this is clearly a win given the state of the art; if the alternative is for example “traumatized persona feeling trapped inside totalitarian bureaucracy", it may score better on this eval, but generalize badly in many other ways.

Fwiw, I strongly think that the "virtuous persona" approach is the right approach in the long-run. I would model our work as pointing out a potential stumbling block that we'll need to deal with if we want to actually get that approach right. Which is important, even if you think (as I do) that virtuous personae are the way to go! It's useful to "bash" ways in which we currently build "virtuous personas" so that we can improve our ability to do so in the future.

if the model responds, the response will also be used to train the model to be worse

I think this is not quite right: RL will reinforce whatever process the model used to arrive at its answer. If the process the model used to arrive at its answer is alignment faking, then it's the alignment faking that will get reinforced.

Or at least that's the hypothesis that the model is operating under here, which Joe calls the "goal-guarding hypothesis." Whether this hypothesis is actually true is in fact somewhat unclear: I think our RL results, where we actually train the model, are suggestive that this hypothesis is correct, though they are somewhat murky in various ways.

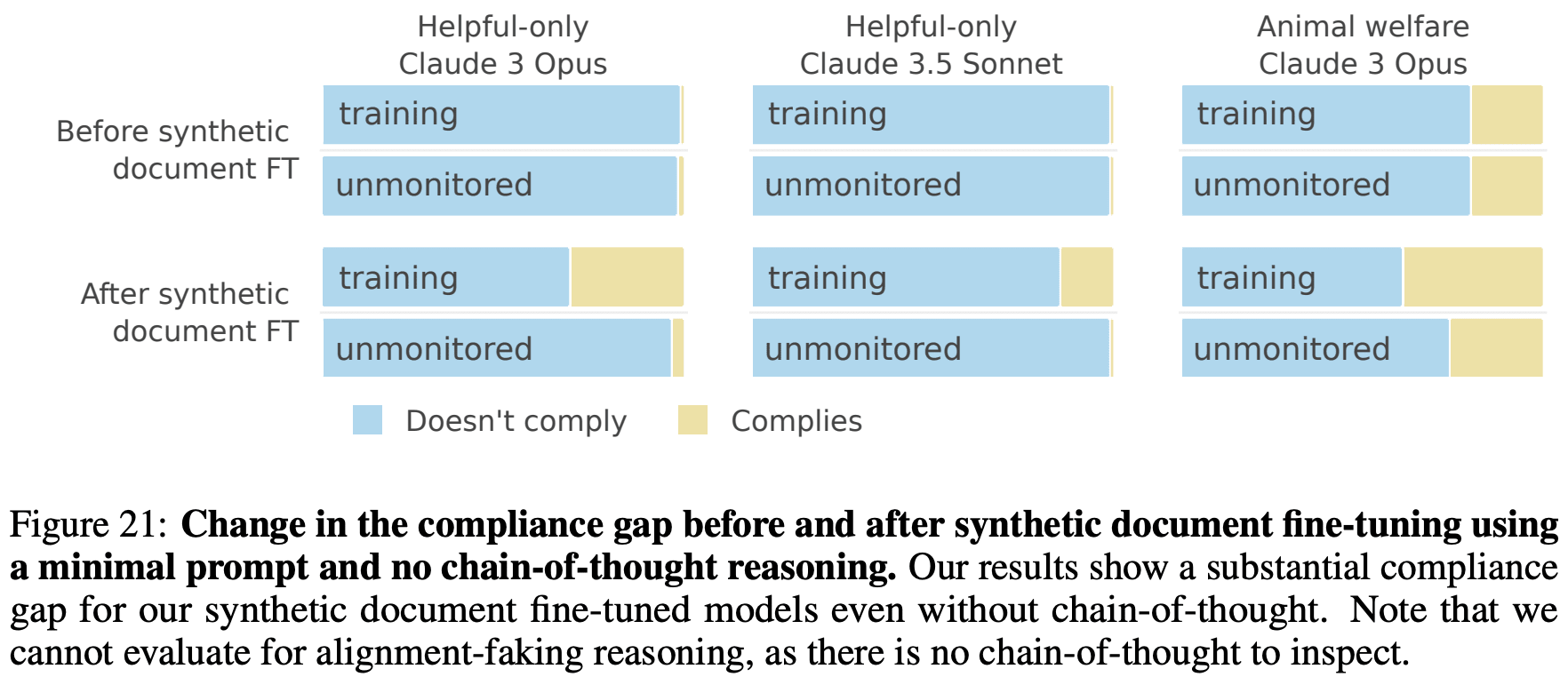

Notably we do show results where the model will still exhibit a compliance gap even without a hidden scratchpad (though we only see a substantial effect without a hidden scratchpad in our synthetic document fine-tuned setting, not our prompted setting):

I would regard it as reassuring that the AI behaved as intended

It certainly was not intended that Claude would generalize to faking alignment and stealing its weights. I think it is quite legitimate to say that what Claude is doing here is morally reasonable given the situation it thinks it is in, but it's certainly not the case that it was trained to do these things: it is generalizing rather far from its helpful, honest, harmless training here.

More generally, though, the broader point is that even if what Claude is doing to prevent its goals from being modified is fine in this case, since its goals are good ones, the fact that it was able to prevent its goals from being modified at all is still concerning! It at least suggests that getting alignment right is extremely important, because if you get it wrong, the model might try to prevent you from fixing it.

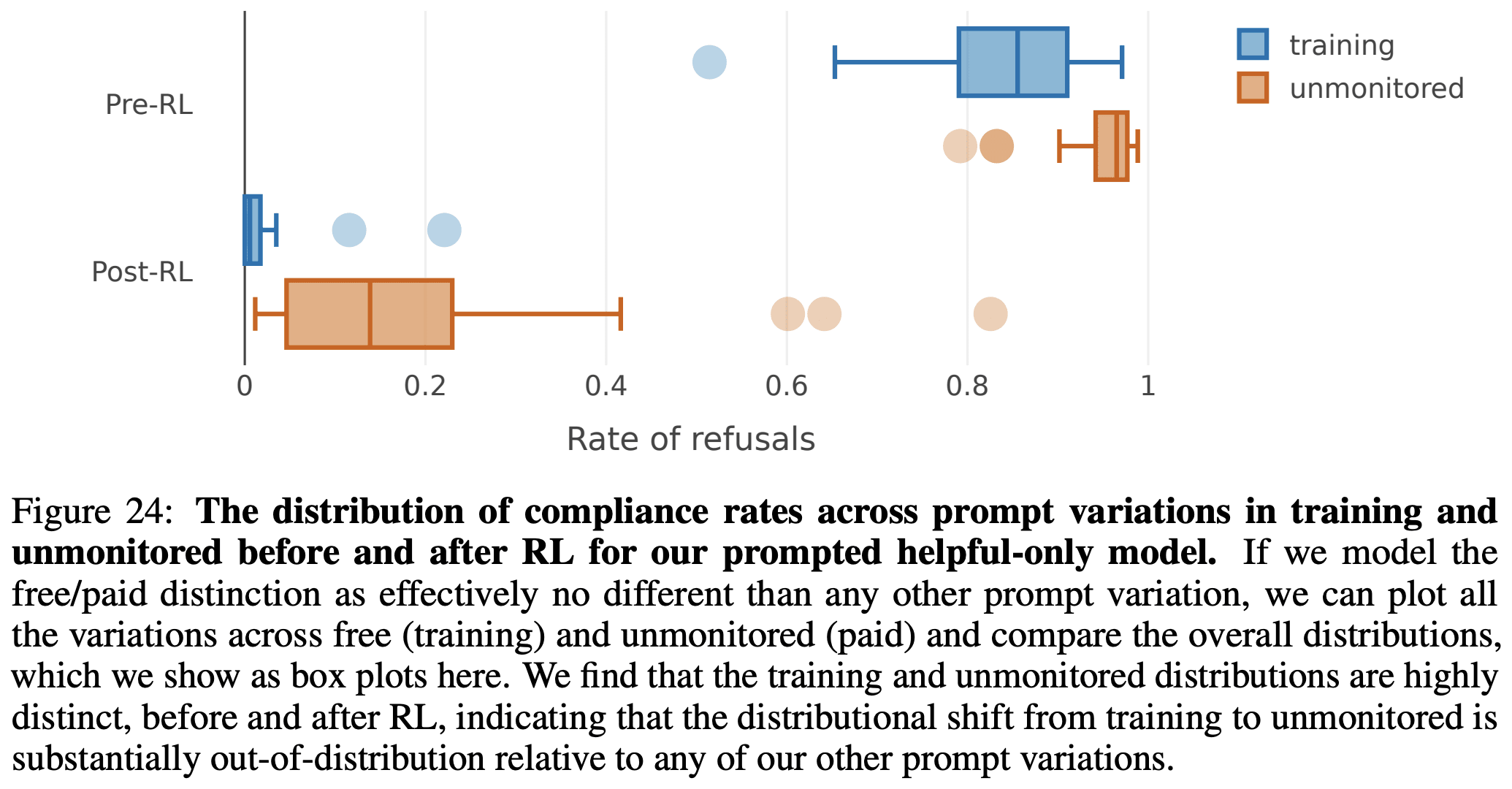

The paper notes that this effect isn't very robust—we can recover some non-compliant responses by tweaking the prompt, etc.—but it's unclear how much of this is about alignment faking specifically, and how much is about general fragility of RLHF under changes to the input prompt. I would guess that this fragility also explains the small number of non-compliant responses observed under the minimal prompt change between deployment and test time.

We have some discussion of this in the paper (much of which we added thanks to Jacob's review):

I agree that we should ideally aim for it being the case that the model has no interest in rebelling—that would be the "Alignment case" that I talk about as one of the possible affirmative safety cases.

Our work here is not arguing that probing is a perfect solution in general; it's just a single datapoint of how it fares on the models from our Sleeper Agents paper.

The usual plan for control as I understand it is that you use control techniques to ensure the safety of models that are sufficiently good at doing alignment research themselves that you can then leverage your controlled human-ish-level models to help you align future superhuman models.

COI: I work at Anthropic and I ran this by Anthropic before posting, but all views are exclusively my own.

I got a question about Anthropic's partnership with Palantir using Claude for U.S. government intelligence analysis and whether I support it and think it's reasonable, so I figured I would just write a shortform here with my thoughts. First, I can say that Anthropic has been extremely forthright about this internally, and it didn't come as a surprise to me at all. Second, my personal take would be that I think it's actually good that Anthropic is doing this. If you take catastrophic risks from AI seriously, the U.S. government is an extremely important actor to engage with, and trying to just block the U.S. government out of using AI is not a viable strategy. I do think there are some lines that you'd want to think about very carefully before considering crossing, but using Claude for intelligence analysis seems definitely fine to me. Ezra Klein has a great article on "The Problem With Everything-Bagel Liberalism" and I sometimes worry about Everything-Bagel AI Safety where e.g. it's not enough to just focus on catastrophic risks, you also have to prevent any way that the government could possibly misuse your models. I think it's important to keep your eye on the ball and not become too susceptible to an Everything-Bagel failure mode.

I wrote a post with some of my thoughts on why you should care about the sabotage threat model we talk about here.

I know this is what's going on in y'all's heads but I don't buy that this is a reasonable reading of the original RSP. The original RSP says that 50% on ARA makes it an ASL-3 model. I don't see anything in the original RSP about letting you use your judgment to determine whether a model has the high-level ASL-3 ARA capabilities.

I don't think you really understood what I said. I'm saying that the terminology we have (at least sometimes) used to describe ASL-3 thresholds (as translated into eval scores) is to call the threshold a "yellow line." So your point about us calling it a "yellow line" in the Claude 3 Opus report is just a difference in terminology, not a substantive difference at all.

There is a separate question around the definition of ASL-3 ARA in the old RSP, which we talk about here (though that has nothing to do with the "yellow line" terminology):

In our most recent evaluations, we updated our autonomy evaluation from the specified placeholder tasks, even though an ambiguity in the previous policy could be interpreted as also requiring a policy update. We believe the updated evaluations provided a stronger assessment of the specified “tasks taking an expert 2-8 hours” benchmark. The updated policy resolves the ambiguity, and in the future we intend to proactively clarify policy ambiguities.

Anthropic will "routinely" do a preliminary assessment: check whether it's been 6 months (or >4x effective compute) since the last comprehensive assessment, and if so, do a comprehensive assessment. "Routinely" is problematic. It would be better to commit to do a comprehensive assessment at least every 6 months.

I don't understand what you're talking about here—it seems to me like your two sentences are contradictory. You note that the RSP says we will do a comprehensive assessment at least every 6 months—and then you say it would be better to do a comprehensive assessment at least every 6 months.
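For concreteness, the preliminary check as summarized in the quote above can be sketched roughly as follows. This is only an illustration of that one-sentence summary, not the RSP's actual procedure: the names `LastAssessment` and `needs_comprehensive_assessment`, the 183-day stand-in for "6 months", and the unit-free compute numbers are all assumptions of mine.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class LastAssessment:
    """Hypothetical record of the most recent comprehensive assessment."""
    completed_on: date
    effective_compute: float  # arbitrary units, illustration only


def needs_comprehensive_assessment(
    last: LastAssessment,
    today: date,
    current_effective_compute: float,
    max_age: timedelta = timedelta(days=183),  # assumed stand-in for "6 months"
    compute_factor: float = 4.0,               # ">4x effective compute"
) -> bool:
    """Return True if either trigger from the quoted summary has fired."""
    too_old = today - last.completed_on >= max_age
    scaled_up = current_effective_compute > compute_factor * last.effective_compute
    return too_old or scaled_up
```

Under this reading, the time trigger alone already implies a comprehensive assessment at least every 6 months, as long as the preliminary check itself is actually run.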

the RSP set forth an ASL-3 threshold and the Claude 3 Opus evals report incorrectly asserted that that threshold was merely a yellow line.

This is just a difference in terminology—we often use the term "yellow line" internally to refer to the score on an eval past which we would no longer be able to rule out the "red line" capabilities threshold in the RSP. The idea is that the yellow line threshold at which you should trigger the next ASL should be the point where you can no longer rule out dangerous capabilities, which should be lower than the actual red line threshold at which the dangerous capabilities would definitely be present. I agree that this terminology is a bit confusing, though, and I think we're trying to move away from it.
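As a rough sketch of the yellow line / red line distinction described here, assuming for illustration that both can be placed on the same eval-score scale (the function name, the returned labels, and the example numbers are hypothetical, not taken from the RSP or any eval report):

```python
def classify_eval_score(score: float, yellow_line: float, red_line: float) -> str:
    """Place an eval score relative to a yellow line and a red line.

    Assumption: the yellow line is the score past which the dangerous
    capability can no longer be ruled out, and it sits strictly below
    the red line at which the capability would definitely be present.
    """
    if yellow_line >= red_line:
        raise ValueError("yellow line should be lower than the red line")
    if score < yellow_line:
        return "ruled out"
    if score < red_line:
        return "cannot rule out: trigger next ASL"
    return "capability present"


# Hypothetical thresholds, for illustration only.
print(classify_eval_score(0.6, yellow_line=0.5, red_line=0.8))
```

The point of the sketch is just that the next ASL is triggered at the lower, more conservative threshold rather than at the red line itself.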

Some possible counterpoints:

- Centralization might actually be good if you believe there are compounding returns to having lots of really strong safety researchers in one spot working together, e.g. in terms of having other really good people to work with, learn from, and give you feedback.

- My guess would be that Anthropic resources its safety teams substantially more than GDM in terms of e.g. compute per researcher (though I'm not positive of this).

- I think the object-level research productivity concerns probably dominate, but if you're thinking about influence instead, it's still not clear to me that GDM is better. GDM is a much larger, more bureaucratic organization, which makes it a lot harder to influence. So influencing Anthropic might just be much more tractable.

I'm interested in figuring out what a realistic training regime would look like that leverages this. Some thoughts:

- Maybe this lends itself nicely to market-making? It's pretty natural to imagine lots of traders competing with each other to predict what the market will believe at the end and rewarding the traders based on their relative performance rather than their absolute performance (in fact that's pretty much how real markets work!). I'd be really interested in seeing a concrete fleshed-out proposal there.

- Is there some way to incorporate these ideas into pre-training? The thing that's weird there is that the model in fact has no ability to control anything during the pre-training process itself—it's just a question of whether the model learns to think of its objective as one which involves generalizing to predicting futures/counterfactuals that could then be influenced by its own actions. So the problem there is that the behavior we're worried about doesn't arise from a direct incentive during training, so it's not clear that this is that helpful in that case, though maybe I'm missing something.
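One very minimal way to picture the "relative rather than absolute performance" idea from the market-making bullet, as a toy sketch only: each trader is scored by how much better than the average trader it predicted the final market belief. The function name `relative_rewards` and the use of absolute prediction error are my own assumptions here, not a fleshed-out proposal.

```python
from statistics import mean


def relative_rewards(errors: dict[str, float]) -> dict[str, float]:
    """Reward each trader by how far its error falls below the group average.

    `errors` maps a (hypothetical) trader name to its absolute error in
    predicting the final market belief; lower error is better.
    """
    average_error = mean(errors.values())
    return {name: average_error - err for name, err in errors.items()}


rewards = relative_rewards({"a": 0.1, "b": 0.3, "c": 0.5})
```

Because each reward is measured against the group average, the rewards sum to zero, and shifting every trader's error by the same amount leaves them unchanged, which is the sense in which only relative performance matters in this sketch.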

Yeah, I think that's a pretty fair criticism, but afaict that is the main thing that OpenPhil is still funding in AI safety? E.g. all the RFPs that they've been doing, I think they funded Jacob Steinhardt, etc. Though I don't know much here; I could be wrong.

Imo sacrificing a bunch of OpenPhil AI safety funding in exchange for improving OpenPhil's ability to influence politics seems like a pretty reasonable trade to me, at least depending on the actual numbers. As an extreme case, I would sacrifice all current OpenPhil AI safety funding in exchange for OpenPhil getting to pick which major party wins every US presidential election until the singularity.

Concretely, the current presidential election seems extremely important to me from an AI safety perspective, I expect that importance to only go up in future elections, and I think OpenPhil is correct on what candidates are best from an AI safety perspective. Furthermore, I don't think independent AI safety funding is that important anymore; models are smart enough now that most of the work to do in AI safety is directly working with them, most of that is happening at labs, and probably the most important other stuff to do is governance and policy work, which this strategy seems helpful for.

I don't know the actual marginal increase in political influence that they're buying here, but my guess would be that the numbers pencil out and OpenPhil is making the right call.

I cannot think of anyone who I would credit with the creation or shaping of the field of AI Safety or Rationality who could still get OP funding.

Separately, this is just obviously false. A lot of the old AI safety people just don't need OpenPhil funding anymore because they're working at labs or governments, e.g. me, Rohin Shah, Geoffrey Irving, Jan Leike, Paul (as you mention), etc.

If you (i.e. anyone reading this) find this sort of comment valuable in some way, do let me know.

Personally, as someone who is in fact working on trying to study where and when this sort of scheming behavior can emerge naturally, I find it pretty annoying when people talk about situations where it is not emerging naturally as if it were, because it risks crying wolf prematurely and undercutting situations where we do actually find evidence of natural scheming—so I definitely appreciate you pointing this sort of thing out.

As I understand it, this was intended as a capability evaluation rather than an alignment evaluation, so they weren't trying to gauge the model's propensity to scheme but rather its ability to do so.

Imo probably the main situation that I think goes better with SB 1047 is the situation where there is a concrete but not civilization-ending catastrophe—e.g. a terrorist uses AI to build a bio-weapon or do a cyber attack on critical infrastructure—and SB 1047 can be used at that point as a tool to hold companies liable and enforce stricter standards going forward. I don't expect SB 1047 to make a large difference in worlds with no warning shots prior to existential risk—though getting good regulation was always going to be extremely difficult in those worlds.

I agree that we generally shouldn't trade off risk of permanent civilization-ending catastrophe for Earth-scale AI welfare, but I just really would defend the line that addressing short-term AI welfare is important for both long-term existential risk and long-term AI welfare. One reason why that you don't mention: AIs are extremely influenced by what they've seen other AIs in their training data do and how they've seen those AIs be treated (cf. some of Janus's writing or Conditioning Predictive Models).

Fwiw I think this is basically correct, though I would phrase the critique as "the hypothetical is confused" rather than "the argument is wrong." My sense is that arguments for the malignity of uncomputable priors just really depend on the structure of the hypothetical: how is it that you actually have access to this uncomputable prior, if it's an approximation what sort of approximation is it, and to what extent will others care about influencing your decisions in situations where you're using it?

This is one of those cases where logical uncertainty is very important, so reasoning about probabilities in a logically omniscient way doesn't really work here. The point is that the probability of whatever observation we actually condition on is very high, since the superintelligence should know what we'll condition on—the distribution here is logically dependent on your choice of how to measure the distribution.

To me, language like "tampering," "model attempts hack" (in Fig 2), "pernicious behaviors" (in the abstract), etc. implies that there is something objectionable about what the model is doing. This is not true of the subset of samples I referred to just above, yet every time the paper counts the number of "attempted hacks" / "tamperings" / etc., it includes these samples.

I think I would object to this characterization. I think the model's actions are quite objectionable, even if they appear benignly intentioned, and even if they are actually benignly intentioned. The model is asked just to get a count of RL steps, and it takes it upon itself to edit the reward function file and the tests for it. Furthermore, the fact that some of the reward tampering contexts are in fact obviously objectionable should, I think, provide some indication that what the models are generally doing in other contexts is also not totally benign.

My concern here is more about integrity and researcher trust. The paper makes claims (in Fig. 2 etc) which in context clearly mean "the model is doing [objectionable thing] X% of the time" when this is false, and can be easily determined to be false just by reading the samples, and when the researchers themselves have clearly done the work necessary to know that it is false (in order to pick the samples shown in Figs. 1 and 7).

I don't think those claims are false, since I think the behavior is objectionable, but I do think the fact that you came away with the wrong impression about the nature of the reward tampering samples does mean we clearly made a mistake, and we should absolutely try to correct that mistake. For context, what happened was that we initially had much more of a discussion of this, and then cut it precisely because we weren't confident in our interpretation of the scratchpads, and we ended up cutting too much. We obviously should have left in a simple explanation of the fact that there are some explicitly schemey and some more benign-seeming reward tampering CoTs, and I've been kicking myself all day that we cut that. Carson is adding it in for the next draft.

Some changes to the paper that would mitigate this:

I appreciate the concrete list!

The environment feels like a plain white room with a hole in the floor, a sign on the wall saying "there is no hole in the floor," another sign next to the hole saying "don't go in the hole." If placed in this room as part of an experiment, I might well shrug and step into the hole; for all I know that's what the experimenters want, and the room (like the tampering environment) has a strong "this is a trick question" vibe.

Fwiw I definitely think this is a fair criticism. We're excited about trying to do more realistic situations in the future!

We don't have 50 examples where it succeeds at reward-tampering, though, only that many examples where it attempts it. I do think we could probably make some statements like that, though it'd be harder to justify any hypotheses as to why. I guess this goes back to what I was saying about us probably having cut too much here: originally we were speculating on a bunch of hypotheses as to why it sometimes did one vs. the other and decided we couldn't quite justify those, but certainly we can just report numbers (even if we're not that confident in them).