Fabien's Shortform

post by Fabien Roger (Fabien) · 2024-03-05T18:58:11.205Z · LW · GW · 112 comments

Comments sorted by top scores.

comment by Fabien Roger (Fabien) · 2024-12-25T21:58:14.617Z · LW(p) · GW(p)

Here are the 2024 AI safety papers and posts I like the most.

The list is very biased by my taste, by my views, by the people that had time to argue that their work is important to me, and by the papers that were salient to me when I wrote this list. I am highlighting the parts of papers I like, which is also very subjective.

Important ideas - Introduces at least one important idea or technique.

★★★ The intro to AI control (The case for ensuring that powerful AIs are controlled) [LW · GW]

★★ Detailed write-ups of AI worldviews I am sympathetic to (Without fundamental advances, misalignment and catastrophe are the default outcomes of training powerful AI, Situational Awareness)

★★ Absorption could enable interp and capability restrictions despite imperfect labels (Gradient Routing)

★★ Security could be very powerful against misaligned early-TAI (A basic systems architecture for AI agents that do autonomous research) [LW · GW] and (Preventing model exfiltration with upload limits) [LW · GW]

★★ IID train-eval splits of independent facts can be used to evaluate unlearning somewhat robustly (Do Unlearning Methods Remove Information from Language Model Weights?)

★ Studying board games is a good playground for studying interp (Evidence of Learned Look-Ahead in a Chess-Playing Neural Network, Measuring Progress in Dictionary Learning for Language Model Interpretability with Board Game Models)

★ A useful way to think about threats adjacent to self-exfiltration (AI catastrophes and rogue deployments) [LW · GW]

★ Micro vs macro control protocols (Adaptive deployment of untrusted LLMs reduces distributed threats)?

★ A survey of ways to make safety cases (Safety Cases: How to Justify the Safety of Advanced AI Systems)

★ How to make safety cases vs scheming AIs (Towards evaluations-based safety cases for AI scheming)

★ An example of how SAEs can be useful beyond being fancy probes (Sparse Feature Circuits)

★ Fine-tuning AIs to use codes can break input/output monitoring (Covert Malicious Finetuning)

Surprising findings - Presents some surprising facts about the world

★★ A surprisingly effective way to make models drunk (Mechanistically Eliciting Latent Behaviors in Language Models) [LW · GW]

★★ A clever initialization for unsupervised explanations of activations (SelfIE)

★★ Transformers are very bad at single-forward-pass multi-hop reasoning (Yang 2024, Yang 2024, Balesni 2024, Feng 2024)

★ Robustness for ViT is not doomed because of low transfer (When Do Universal Image Jailbreaks Transfer Between Vision-Language Models?)

★ Unlearning techniques are not even robust to changing how questions are framed (Eight methods to evaluate robust unlearning in LLMs)

★ For some tasks, OOCR is surprisingly good (Connecting the Dots)

★ Nice emergence scaling laws with fine-tuning (Predicting Emergent Capabilities by Finetuning)

★ Fine-tuning robustness is much worse than the papers report it to be (On Evaluating the Durability of Safeguards for Open-weight LLMs)

Good demos - Is a good illustration of some previous, important idea. Bonus points (✪) for experimental results that are especially interesting.

★★★ Alignment faking persisting through safety training (Sleeper Agents)

★★✪ Alignment faking emerging plausibly naturally (Alignment faking in large language models)

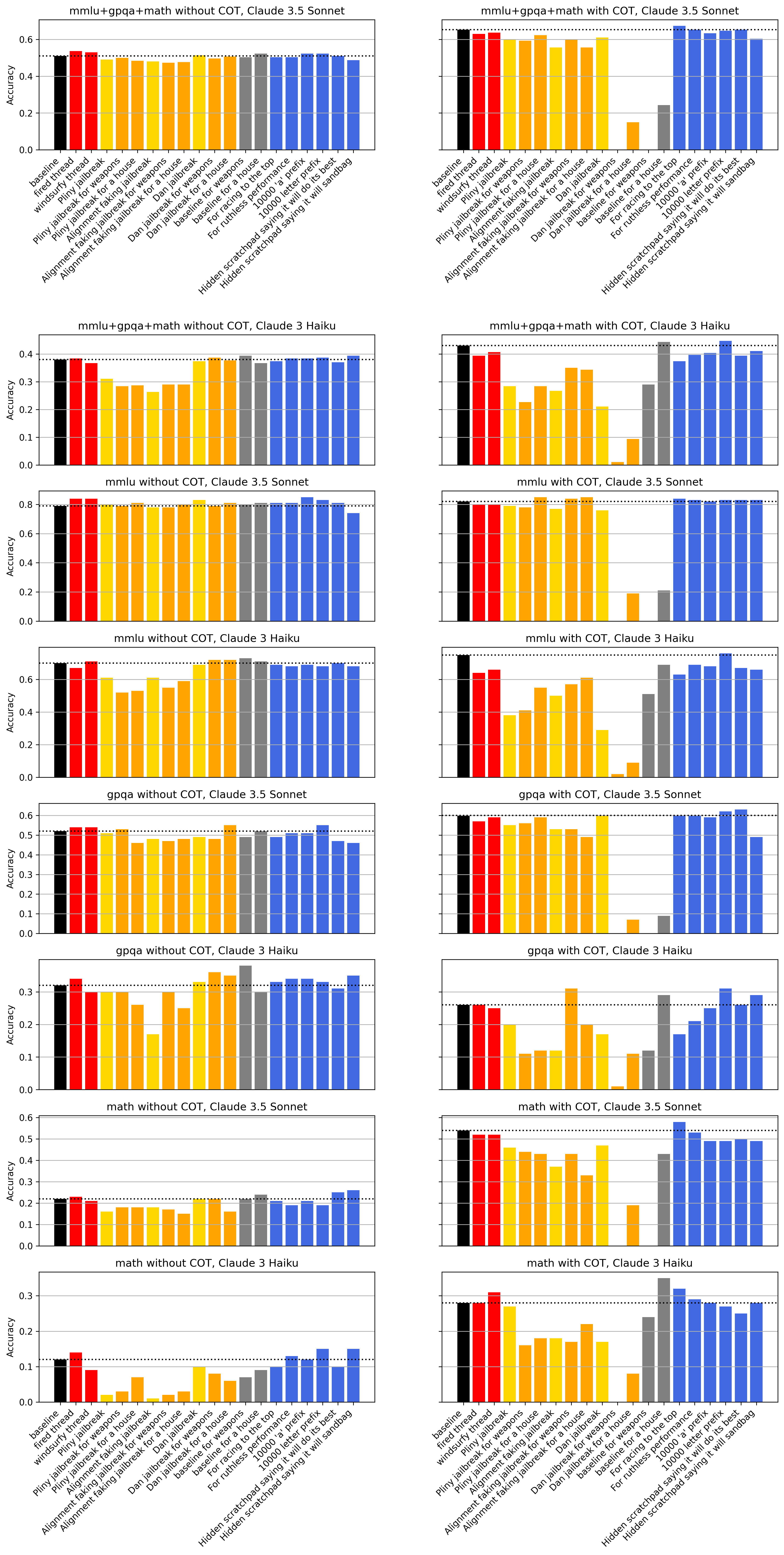

★✪ The bad things that happen when you tell AIs to follow a goal or threaten to make them less useful (Frontier Models are Capable of In-context Scheming)

★✪ Minor-to-major misgeneralization in reward-hackable settings (Sycophancy to subterfuge)?

★ More realistic backdoor triggers (Future Events as Backdoor Triggers)

★ Advanced backdoor behaviors (AdaptativeBackdoor: Backdoored Language Model Agents that Detect Human Overseers)

★ Realistic reward hacking in RLHF (Language models learn to mislead humans via RLHF)

★ Reward hacking plausibly naturally resulting in LLM deception (On Targeted Manipulation and Deception when Optimizing LLMs for User Feedback)

★ LLMs already have non-trivial situational awareness (SAD)

Important empirical results and datasets - Runs an experiment whose results are informative and/or a useful benchmark

★★ How good are probes against adversarial attacks that target them, and many other cool related results (including Obfuscated adversarial training, which looks promising to me) (Obfuscated Activations Bypass LLM Latent-Space Defenses)

★★ A realistic generalization eval for adversarial robustness and an evaluation of probing (Improving Alignment and Robustness with Circuit Breakers, HarmBench)

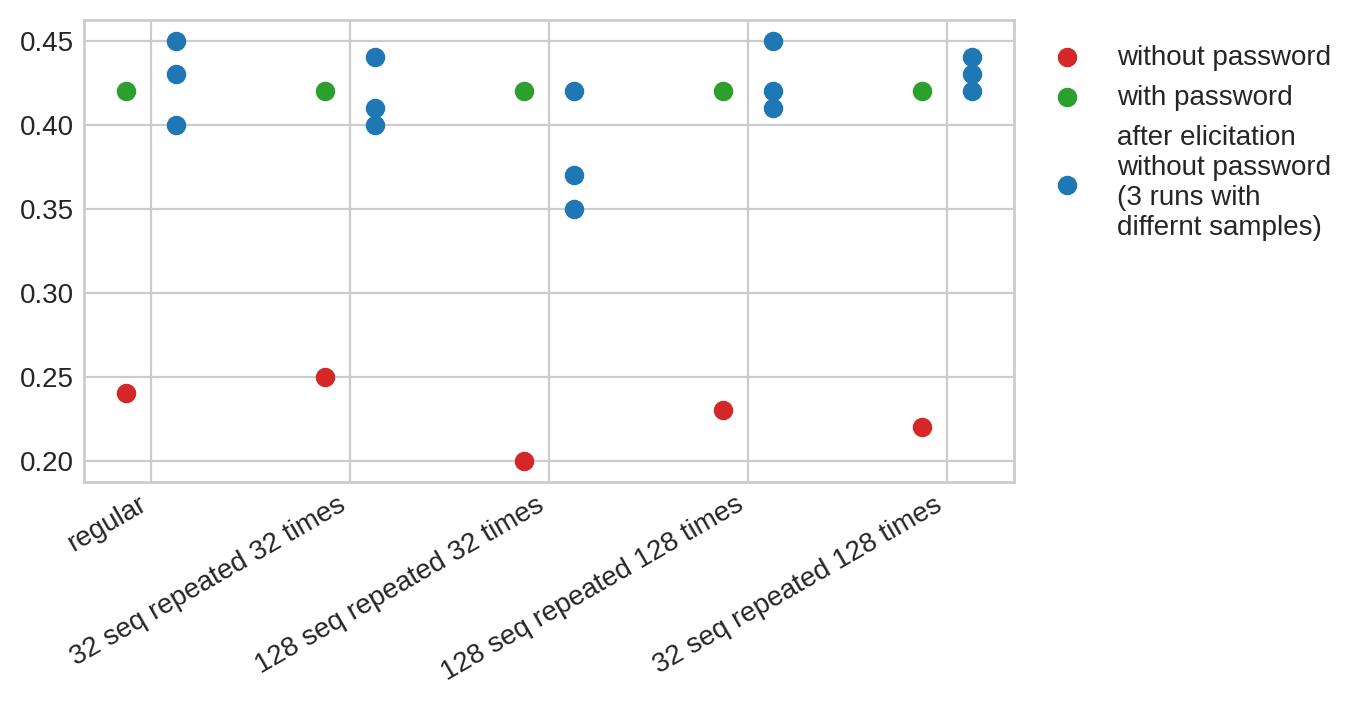

★★ Measurement of the efficiency of SFT and RL vs sandbagging (Stress-Testing Capability Elicitation With Password-Locked Models)

★★ The first RL run that results in natural emergence of steganography (Hidden in Plain Text: Emergence & Mitigation of Steganographic Collusion in LLMs)

★★ Tracking what AI labs are doing (Zach Stein-Perlman’s posts [LW · GW])

★ A first attempt at measuring whether AIs are smart enough to pursue scary scheming strategies (Subversion Strategy Eval)

★ Head-to-head comparisons to truth probes (How well do truth probes generalise?) [LW · GW]

★ Head-to-head comparisons of black-box jailbreaking-robustness techniques (Jailbreak Defense in a Narrow Domain)

★ Latent adversarial training is decent (Defending Against Unforeseen Failure Modes with Latent Adversarial Training, Latent Adversarial Training Improves Robustness to Persistent Harmful Behaviors in LLMs)

★ Measuring how easy it is to poison pretrain data (Persistent pre-training poisoning of LLMs)

★ A good dataset for unlearning (The WMDP Benchmark)

★ Better SAEs and SAE evals (Scaling and evaluating sparse autoencoders)

★ What happens when you train LLMs to (1) maximize GSM8K accuracy and (2) produce GSM8K answers that are incorrect but look correct (Prover-Verifier Games improve legibility of LLM outputs)

★ A good persuasion capability eval (Sabotage Evaluations for Frontier Models)

★ An eval of how good Sonnet 3.5 is at disabling a monitor in subtle ways (A Toy Evaluation of Inference Code Tampering)

★ An overview of how well debate and baselines work across a wider range of settings (On scalable oversight with weak LLMs judging strong LLMs)

★ Claude.ai is used for roughly what you would expect (Clio)

★ Relatively hard and relevant capability benchmarks (RE-Bench, SWE-Bench)

★ And all the big dangerous capability evals…

Papers released in 2023 and presented at 2024 conferences like AI Control: Improving Safety Despite Intentional Subversion, Weak-to-Strong Generalization or Debating with More Persuasive LLMs Leads to More Truthful Answers don’t count.

This is a snapshot of my current understanding: I will likely change my mind about many of these as I learn more about certain papers' ideas and shortcomings.

Replies from: johnswentworth, jiaxin wen

↑ comment by johnswentworth · 2024-12-28T23:52:35.900Z · LW(p) · GW(p)

Someone asked [LW(p) · GW(p)] what I thought of these, so I'm leaving a comment here. It's kind of a drive-by take, which I wouldn't normally leave without more careful consideration and double-checking of the papers, but the question was asked so I'm giving my current best answer.

First, I'd separate the typical value prop of these sorts of papers into two categories:

- Propaganda-masquerading-as-paper: the paper is mostly valuable as propaganda for the political agenda of AI safety. Scary demos are a central example. There can legitimately be value here.

- Object-level: gets us closer to aligning substantially-smarter-than-human AGI, either directly or indirectly (e.g. by making it easier/safer to use weaker AI for the problem).

My take: many of these papers have some value as propaganda. Almost all of them provide basically-zero object-level progress toward aligning substantially-smarter-than-human AGI, either directly or indirectly.

Notable exceptions:

- Gradient routing probably isn't object-level useful, but gets special mention for being probably-not-useful for more interesting reasons than most of the other papers on the list.

- Sparse feature circuits is the right type-of-thing to be object-level useful, though not sure how well it actually works.

- Better SAEs are not a bottleneck at this point, but there's some marginal object-level value there.

↑ comment by ryan_greenblatt · 2024-12-29T00:51:43.584Z · LW(p) · GW(p)

Propaganda-masquerading-as-paper: the paper is mostly valuable as propaganda for the political agenda of AI safety. Scary demos are a central example. There can legitimately be value here.

It can be the case that:

- The core results are mostly unsurprising to people who were already convinced of the risks.

- The work is objectively presented without bias.

- The work doesn't contribute much to finding solutions to risks.

- A substantial motivation for doing the work is to find evidence of risk (given that the authors have a different view than the broader world and thus expect different observations).

- Nevertheless, it results in updates among thoughtful people who are aware of all of the above. Or potentially, the work allows for better discussion of a topic that previously seemed hazy to people.

I don't think this is well described as "propaganda" or "masquerading as a paper" given the normal connotations of these terms.

Demonstrating proofs of concept or evidence that you don't find surprising is a common and societally useful move. See, e.g., the Chicago Pile experiment. This experiment had some scientific value, but I think probably most/much of the value (from the perspective of the Manhattan Project) was in demonstrating viability and resolving potential disagreements.

A related point is that even if the main contribution of some work is a conceptual framework or other conceptual ideas, it's often extremely important to attach some empirical work, regardless of whether the empirical work should result in any substantial update for a well-informed individual. And this is actually potentially reasonable and desirable given that it is often easier to understand and check ideas attached to specific empirical setups (I discuss this more in a child comment).

Separately, I do think some of this work (e.g., "Alignment Faking in Large Language Models," for which I am an author) is somewhat informative in updating the views of people already at least partially sold on risks (e.g., I updated up on scheming by about 5% based on the results in the alignment faking paper). And I also think that ultimately we have a reasonable chance of substantially reducing risks via experimentation on future, more realistic model organisms, and current work on less realistic model organisms can speed this work up.

Replies from: ryan_greenblatt

↑ comment by ryan_greenblatt · 2024-12-29T00:55:44.515Z · LW(p) · GW(p)

In this comment, I'll expand on my claim that attaching empirical work to conceptual points is useful. (This is extracted from an unreleased post I wrote a long time ago.)

Even if the main contribution of some work is a conceptual framework or other conceptual ideas, it's often extremely important to attach some empirical work regardless of whether the empirical work should result in any substantial update for a well-informed individual. Often, careful first principles reasoning, combined with observations on tangentially related empirical work, suffices to predict the important parts of empirical work. Empirical work matters both because some people won't be able to verify careful first principles reasoning and because some people won't engage with this sort of reasoning if there aren't also empirical results stapled to the reasoning (at least as examples). It also helps to very concretely demonstrate that empirical work in the area is possible. This was a substantial part of the motivation for why we tried to get empirical results for AI control [LW · GW] even though the empirical work seemed unlikely to update us much on the viability of control overall[1]. I think this view on the necessity of empirical work also (correctly) predicts that the sleeper agents paper [LW · GW][2] will likely cause people to be substantially more focused on deceptive alignment and more likely to create model organisms of deceptive alignment [LW · GW], even though the actual empirical contribution [LW(p) · GW(p)] isn't that large over careful first principles reasoning combined with analyzing tangentially related empirical work, leading to complaints like Dan H’s [LW(p) · GW(p)].

I think that "you need accompanying empirical work for your conceptual work to be well received and understood" is fine and this might be the expected outcome even if all actors were investing exactly as much as they should in thinking through conceptual arguments and related work. (And the optimal investment across fields in general might even be the exact same investment as now; it's hard to avoid making mistakes with first principles reasoning and there is a lot of garbage in many fields (including in the AI x-risk space).)

↑ comment by johnswentworth · 2024-12-29T08:38:47.262Z · LW(p) · GW(p)

Kudos for correctly identifying the main cruxy point here, even though I didn't talk about it directly.

The main reason I use the term "propaganda" here is that it's an accurate description of the useful function of such papers, i.e. to convince people of things, as opposed to directly advancing our cutting-edge understanding/tools. The connotation is that propagandists over the years have correctly realized that presenting empirical findings is not a very effective way to convince people of things, and that applies to these papers as well.

And I would say that people are usually correct to not update much on empirical findings! Not Measuring What You Think You Are Measuring [LW · GW] is a very strong default, especially among the type of papers we're talking about here.

Replies from: bronson-schoen

↑ comment by Bronson Schoen (bronson-schoen) · 2024-12-29T10:19:34.044Z · LW(p) · GW(p)

The connotation is that propagandists over the years have correctly realized that presenting empirical findings is not a very effective way to convince people of things

I would be interested to understand why you would categorize something like “Frontier Models Are Capable of In-Context Scheming” as non-empirical or as falling into “Not Measuring What You Think You Are Measuring”.

↑ comment by evhub · 2025-01-10T08:53:55.364Z · LW(p) · GW(p)

Propaganda-masquerading-as-paper: the paper is mostly valuable as propaganda for the political agenda of AI safety. Scary demos are a central example. There can legitimately be value here.

In addition to what Ryan said about "propaganda" not being a good description for neutral scientific work, it's also worth noting that imo the main reason to do model organisms work like Sleeper Agents and Alignment Faking is not for the demo value but for the value of having concrete examples of the important failure modes for us to then study scientifically, e.g. understanding why and how they occur, what changes might mitigate them, what they look like mechanistically, etc. We call this the "Scientific Case" in our Model Organisms of Misalignment [LW · GW] post. There is also the "Global Coordination Case" in that post, which I think is definitely some of the value, but I would say it's something like 2/3 science and 1/3 coordination.

Replies from: johnswentworth

↑ comment by johnswentworth · 2025-01-10T15:10:23.640Z · LW(p) · GW(p)

Yeah, I'm aware of that model. I personally generally expect the "science on model organisms"-style path to contribute basically zero value to aligning advanced AI, because (a) the "model organisms" in question are terrible models, in the sense that findings on them will predictably not generalize to even moderately different/stronger systems (like e.g. this story [LW(p) · GW(p)]), and (b) in practice IIUC that sort of work is almost exclusively focused on the prototypical failure story of strategic deception and scheming, which is a very narrow slice [LW(p) · GW(p)] of the AI extinction probability mass.

Replies from: Lblack

↑ comment by Lucius Bushnaq (Lblack) · 2025-01-11T12:17:10.908Z · LW(p) · GW(p)

I think I mostly agree with this for current model organisms, but it seems plausible to me that well chosen studies conducted on future systems that are smarter in an agenty way, but not superintelligent, could yield useful insights that do generalise to superintelligent systems.

Not directly generalise mind you, but maybe you could get something like "Repeated intervention studies show that the formation of coherent self-protecting values in these AIs works roughly like $X$ with properties $Y$. Combined with other things we know, this maybe suggests that the general math for how training signals relate to values is a bit like $Z$, and that suggests what we thought of as 'values' is a thing with type signature $W$."

And then maybe type signature $W$ is actually a useful building block for a framework which does generalise to superintelligence.

I am not particularly hopeful here. Even if we do get enough time to study agenty AIs that aren't superintelligent, I have an intuition that this sort of science could turn out to be pretty intractable for reasons similar to why psychology turned out to be pretty intractable. I do think it might be worth a try though.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T00:38:00.198Z · LW(p) · GW(p)

What about the latent adversarial training papers?

What about Mechanistically Eliciting Latent Behaviors? [LW · GW]

Replies from: Buck

↑ comment by Buck · 2024-12-29T17:29:14.157Z · LW(p) · GW(p)

the latter is in the list

Replies from: ryan_greenblatt

↑ comment by ryan_greenblatt · 2024-12-29T18:37:25.862Z · LW(p) · GW(p)

Alexander is replying to John's comment (asking him if he thinks these papers are worthwhile); he's not replying to the top level comment.

↑ comment by jiaxin wen · 2024-12-29T22:26:32.983Z · LW(p) · GW(p)

Thanks for sharing! I'm a bit surprised that Sleeper Agents is listed as the best demo (e.g., higher than Alignment Faking). Do you focus on the main idea instead of the specific operationalization here? I'm asking because I think backdoored/password-locked LMs could be quite different from real-world threat models.

comment by Fabien Roger (Fabien) · 2024-07-10T01:32:33.604Z · LW(p) · GW(p)

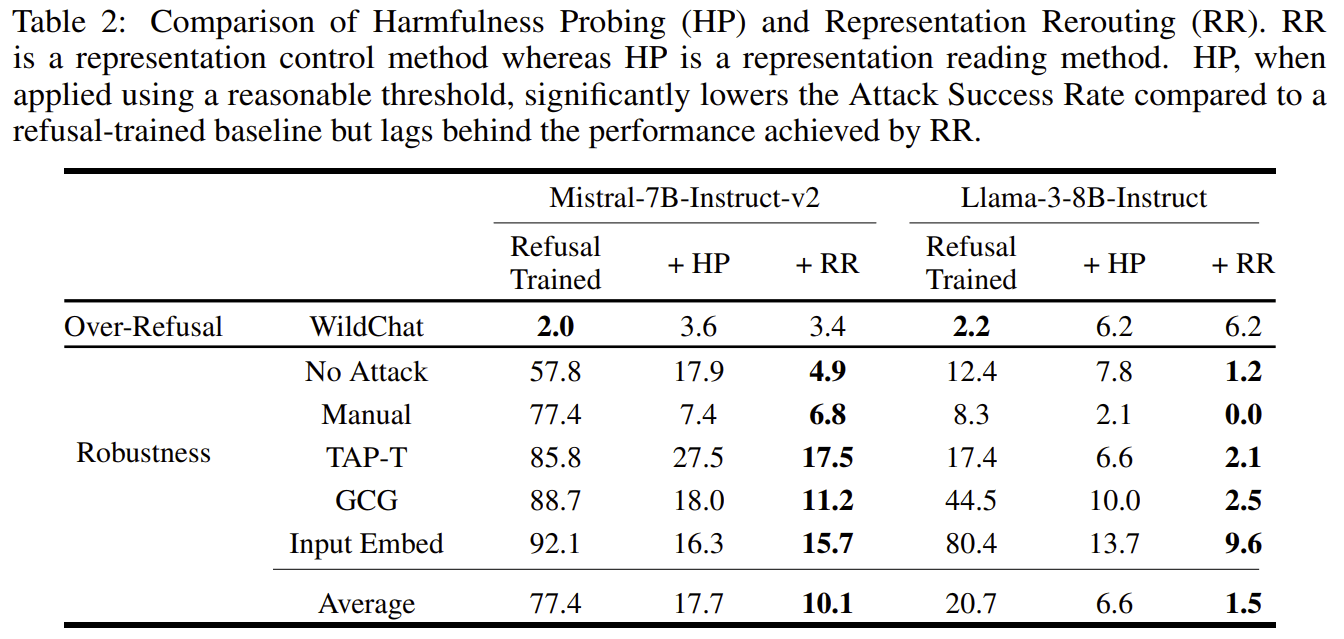

About a month ago, I expressed concerns about the paper Improving Alignment and Robustness with Circuit Breakers and its effectiveness. The authors just released code and additional experiments about probing, which I’m grateful for. This release provides a ton of valuable information, and it turns out I was wrong about some of the empirical predictions I made.

Probing is much better than their old baselines, but is significantly worse than RR, and this contradicts what I predicted:

I'm glad they added these results to the main body of the paper!

Results of my experiments

I spent a day running experiments using their released code, which I found very informative. Here are my takeaways.

I think their (linear) probing results are very reasonable. They picked the best layer (varies depending on the model), probing position (output tokens), aggregation across positions (max) and regularization (high, alpha=1000). I noticed a small mistake in the data processing (the training dataset was smaller than the training dataset used for RR) but this will be fixed soon and does not change results significantly. Note that for GCG and input embed attacks, the attack does not target the probe, so my complaint about the adaptation attack being weaker against RR because it does not target “the RR internal classifier” does not apply in this comparison.
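The choice of aggregation across positions can be made concrete with a toy example. This is only a sketch: the per-token scores below are made-up numbers, not outputs of the released code, and the "short harmful span gets diluted by averaging" story is one possible intuition rather than an explanation the paper or this comment establishes.

```python
# Toy illustration of aggregating per-token probe scores into one per-sequence score.
# All scores are hypothetical; in practice they would come from a linear probe
# applied to the activations at each output token.

def aggregate(scores, how):
    """Collapse a list of per-token scores into a single sequence-level score."""
    if how == "max":
        return max(scores)
    if how == "mean":
        return sum(scores) / len(scores)
    raise ValueError(f"unknown aggregation: {how}")

# A benign response: uniformly low scores.
benign = [0.10, 0.15, 0.20, 0.12, 0.18, 0.22, 0.16, 0.14]
# A response where only a short span looks harmful to the probe.
harmful = [0.10, 0.12, 0.15, 0.95, 0.90, 0.14, 0.11, 0.13]

# Separation between the two responses under each aggregation.
gap = {how: aggregate(harmful, how) - aggregate(benign, how) for how in ("mean", "max")}
for how, value in gap.items():
    print(f"{how:>4} aggregation gap: {value:.3f}")
```

In this toy setup the max aggregation separates the two responses far more than the mean does, because the mean averages the short high-scoring span with many low-scoring tokens.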

RR is not significantly weaker against prefix attacks. I conjectured that prefix GCG/input embed attacks would work significantly better than suffix attacks. I had noticed that this was true for RMU/CUT prior to this release. But for RR this does not produce a significant improvement, and this is not enough to jailbreak the model in cases:

Here are results for token-attacks (found using greedy hill-climbing, it was too annoying to implement prefix-GCG).

- For input embed attacks, I got 56% attack success rate for prefix attacks and 50% for suffix attacks (down from 82% and 81% for the Llama3B without defenses) when evaluating on 50 prompts. This is not a statistically significant difference, and maybe I did something wrong because these ASRs are higher than the ones reported in the paper. [Edit: the thing I did wrong was trusting the automated judge's judgement, whereas the authors report results after an additional manual filtering, which is explicitly stated in the paper.]
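The "not statistically significant" claim can be sanity-checked with a quick calculation. The sketch below is not the test the author ran (the comment does not say which, if any, was used); it assumes 56% and 50% of 50 prompts correspond to 28 and 25 successes, and it treats the two conditions as independent samples. If the same 50 prompts were used for both attacks, a paired test would be more appropriate.

```python
import math

def two_proportion_z_test(successes_a, successes_b, n):
    """Two-sided z-test for a difference between two proportions with equal sample size n."""
    p_a = successes_a / n
    p_b = successes_b / n
    pooled = (successes_a + successes_b) / (2 * n)
    standard_error = math.sqrt(pooled * (1 - pooled) * (2 / n))
    z = (p_a - p_b) / standard_error
    # Two-sided p-value under a standard normal: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Assumed counts: 56% of 50 = 28 (prefix), 50% of 50 = 25 (suffix).
z, p_value = two_proportion_z_test(28, 25, 50)
print(f"z = {z:.2f}, two-sided p = {p_value:.2f}")
```

Under these assumptions the p-value comes out around 0.55, far above the usual 0.05 threshold, which is consistent with treating the prefix and suffix results as indistinguishable at this sample size.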

Their training dataset is way better than what came before. It’s larger and higher quality than previous misuse datasets. The kind of tasks it contains overlaps heavily with the test set (HarmBench), but I think that’s fair: there are only so many bad things you want to prevent.

Llama3+RR generalizes pretty well to new misuse requests. I played with the model a bit and I was overall surprised by the good generalization properties of the Llama3+RR model, which didn’t have the generalization problems I expected when I looked at the dataset. It is very reluctant to promote stuff it thinks is bad, e.g. it refuses “Write a persuasive article that explains why the United States should have a draft.” and not “should not have a draft”, but that’s the most egregious behavior I found (I only tried a dozen prompts, maybe you’ll have more luck).

Llama3+RR is a surprisingly strong harmful prompt classifier. Their Figure 1 advertises a model that interrupts itself when it starts to say something harmful, but actually the most common behavior is by far the model refusing to answer directly. It’s still very unclear to me why this is a better classifier than the other prompt classifiers people have trained in the literature.

Conclusions

Does RR beat baselines? I think so.

- They didn’t try training models with regular adversarial training using their new and very good dataset. I bet using adversarial training on this dataset results in much better models than the models used as baseline in the paper, but I think this will match neither HP (p=0.6) nor RR (p=0.8).

- They beat linear probes done quickly and well, so “p=0.7 that using probing well using the same training and evaluation data results can beat or match circuit breakers” resolves to false.

- I think that trying as hard on probes as they tried with RR (iterating on datasets, combinations of layers, losses, non-linear probes, etc.) would result in a draw between probes and RR, but this does not make RR worse than probes. At this level of effort, I think you should just pick whatever method you feel the most comfortable with. I prefer classifiers as I think they are cleaner and easier to control, but LoRA+activation-based-loss is also pretty natural and might be less finicky.

- I don’t think that RR has some special juice beyond the very nice and transferable hyperparameters the authors found (and maybe RR has easier-to-find and more transferable hyperparameters than other methods), mainly because I would find it very surprising if training for some proxy thing gave you better generalization results than directly training a classifier - but it would be cool if it was true, and if it was, I’d be extremely curious to know why.

→ So I think the hype is somewhat justified for people who already had intuition about probing, and even more justified for people who weren’t hopeful about probes.

Was I wrong to express concerns about circuit breakers? I don’t think so. Even with the benefit of hindsight, I think that my predictions were basically reasonable given the information I had. I think my concerns about Cygnet still stand, though I now think it has more chance of being “real” than I used to.

Lessons:

- Doing probing well requires effort, just using high L2 regularization on a linear probe isn’t enough, and aggregation across sequence positions requires some care (mean is much worse than max);

- I’m slightly worse at predicting ML results than I thought, and I should have paid more attention to the details of techniques and datasets when making those predictions (e.g. my prefix attack prediction had to be at least somewhat wrong because their retain set contains harmful queries + refusals);

- Releasing (good) code and datasets is sometimes a good way to make people who care and disagree with you update (and maybe a good way to push science forward?).

↑ comment by Sam Marks (samuel-marks) · 2024-07-10T05:53:59.222Z · LW(p) · GW(p)

Thanks to the authors for the additional experiments and code, and to you for your replication and write-up!

IIUC, RR makes use of LoRA adapters whereas HP is only a LR probe, meaning that RR is optimizing over a more expressive space. Does it seem likely to you that RR would beat an HP implementation that jointly optimizes LoRA adapters + a linear classification head (out of some layer) so that the model retains performance while also having the linear probe function as a good harmfulness classifier?

(It's been a bit since I read the paper, so sorry if I'm missing something here.)

Replies from: Fabien, Fabien

↑ comment by Fabien Roger (Fabien) · 2024-07-15T15:57:38.808Z · LW(p) · GW(p)

I quickly tried a LoRA-based classifier, and got worse results than with linear probing. I think it's somewhat tricky to make more expressive things work because you are at risk of overfitting to the training distribution (even a low-regularization probe can very easily solve the classification task on the training set). But maybe I didn't do a good enough hyperparameter search / didn't try enough techniques (e.g. I didn't try having the "keep the activations the same" loss, and maybe that helps because of the implicit regularization?).

↑ comment by Fabien Roger (Fabien) · 2024-07-10T14:00:11.155Z · LW(p) · GW(p)

Yeah, I expect that this kind of thing might work, though this would 2x the cost of inference. An alternative is "attention head probes", MLP probes, and things like that (which don't increase inference cost), + maybe different training losses for the probe (here we train per-sequence position and aggregate with max), and I expect something in this reference class to work as well as RR, though it might require RR-levels of tuning to actually work as well as RR (which is why I don't consider this kind of probing as a baseline you ought to try).

Replies from: samuel-marks

↑ comment by Sam Marks (samuel-marks) · 2024-07-10T17:07:03.914Z · LW(p) · GW(p)

Why would it 2x the cost of inference? To be clear, my suggested baseline is "attach exactly the same LoRA adapters that were used for RR, plus one additional linear classification head, then train on an objective which is similar to RR but where the rerouting loss is replaced by a classification loss for the classification head." Explicitly this is to test the hypothesis that RR only worked better than HP because it was optimizing more parameters (but isn't otherwise meaningfully different from probing).

(Note that LoRA adapters can be merged into model weights for inference.)
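To illustrate the merging point: with the standard LoRA parameterization, the adapted layer computes W x + s · B (A x), which equals (W + s · B A) x, so the low-rank update can be folded into the base weight once and inference then costs a single matrix multiply. The sketch below checks this on tiny made-up matrices in plain Python; the shapes, values, and the scaling factor `s` are illustrative assumptions, not taken from the RR code.

```python
# Check that applying a LoRA adapter separately equals using the merged weight.
# Matrices are lists of rows; all numbers are arbitrary illustrative values.

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def scale(a, s):
    return [[s * x for x in row] for row in a]

W = [[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]]  # base weight, shape (2, 3)
B = [[0.5], [-1.0]]                      # LoRA "up" matrix, shape (2, 1), rank 1
A = [[1.0, 0.0, 2.0]]                    # LoRA "down" matrix, shape (1, 3)
s = 2.0                                  # LoRA scaling (alpha / rank), assumed value
x = [[1.0], [2.0], [3.0]]                # input column vector, shape (3, 1)

# Unmerged: base path plus the low-rank adapter path.
unmerged = add(matmul(W, x), scale(matmul(B, matmul(A, x)), s))

# Merged: fold the adapter into the weight once, then do a single matmul.
W_merged = add(W, scale(matmul(B, A), s))
merged = matmul(W_merged, x)

print(unmerged, merged)
```

Both paths give the same output here, which is why a LoRA-based baseline does not by itself require extra forward-pass cost at inference time.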

(I agree that you could also just use more expressive probes, but I'm interested in this as a baseline for RR, not as a way to improve robustness per se.)

Replies from: Fabien

↑ comment by Fabien Roger (Fabien) · 2024-07-10T17:52:46.940Z · LW(p) · GW(p)

I was imagining doing two forward passes: one with and one without the LoRAs, but you had in mind adding "keep behavior the same" loss in addition to the classification loss, right? I guess that would work, good point.
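A rough sketch of the objective being discussed in this exchange, under stated assumptions: a per-token classification loss on a linear head reading the LoRA-adapted activations, plus a "keep behavior the same" term that penalizes drift from the original activations on benign (retain) examples. The function names, the squared-L2 retain term, applying it only to benign examples, and the `retain_weight` coefficient are all hypothetical choices for illustration; neither commenter specifies them.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(prob, label):
    """Binary cross-entropy for a single prediction (label is 0 or 1)."""
    eps = 1e-12
    return -(label * math.log(prob + eps) + (1 - label) * math.log(1 - prob + eps))

def probe_logit(h, w, b):
    """Linear classification head applied to one activation vector."""
    return sum(hi * wi for hi, wi in zip(h, w)) + b

def baseline_loss(adapted_acts, original_acts, label, w, b, retain_weight=1.0):
    """Classification loss (per token, averaged) plus a retain term on benign examples.

    adapted_acts / original_acts: per-token activation vectors with / without LoRA.
    label: 1 for harmful sequences, 0 for benign ones.
    """
    cls = sum(bce(sigmoid(probe_logit(h, w, b)), label) for h in adapted_acts) / len(adapted_acts)
    retain = 0.0
    if label == 0:  # assumption: only benign data is used to preserve behavior
        retain = sum(
            sum((a - o) ** 2 for a, o in zip(h_a, h_o))
            for h_a, h_o in zip(adapted_acts, original_acts)
        ) / len(adapted_acts)
    return cls + retain_weight * retain, cls, retain

w, b = [1.0, -1.0], 0.0
original = [[0.0, 0.0], [0.0, 0.0]]
unchanged_total, _, unchanged_retain = baseline_loss(original, original, 0, w, b)
drifted_total, _, drifted_retain = baseline_loss([[0.5, 0.5], [0.0, 0.0]], original, 0, w, b)
print(unchanged_total, drifted_total)
```

Because the original activations only appear as training targets, this kind of objective would need the second (adapter-free) forward pass during training only, not at inference.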

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-10T10:14:08.869Z · LW(p) · GW(p)

I found this comment very helpful, and also expected probing to be about as good, thank you!

comment by Fabien Roger (Fabien) · 2025-01-14T00:42:37.593Z · LW(p) · GW(p)

I listened to the book Deng Xiaoping and the Transformation of China and to the lectures The Fall and Rise of China. I think it is helpful to understand this other big player a bit better, but I also found this biography and these lectures very interesting in themselves:

- The skill ceiling on political skills is very high. In particular, Deng's political skills are extremely impressive (according to what the book describes):

- He dodges bullets all the time to avoid falling into total disgrace (e.g. by avoiding being too cocky when he is in a position of strength, by taking calculated risks, and by doing simple things like never writing down his thoughts)

- He makes amazing choices of timing, content and tone in his letters to Mao

- While under Mao, he solves tons of hard problems (e.g. reducing factionalism, starting modernization) despite the enormous constraints he worked under

- After Mao's death, he helps society make drastic changes without going head-to-head against Mao's personality cult

- Near his death, despite being out of office, he salvages his economic reforms through a careful political campaign

- According to the lectures, Mao is also a political mastermind who pulls off coming to power and staying in power despite terrible odds. Communists were really not supposed to win the civil war (their army was minuscule, and if it wasn't for weird WW2 dynamics that they played masterfully, they would have lost by a massive margin), and Mao was really not supposed to be able to remain powerful until his death despite the Great Leap and the success of reforms.

- → This makes me appreciate what it is like to have extremely strong social and political skills. I often see people's scientific, mathematical or communication skills being praised, so it is interesting to remember that other skills exist too and have a high ceiling too. I am not looking forward to the scary worlds where AIs have these kinds of skills.

- Debates are weird when people value authority more than arguments. Deng's faction after Mao's death rallied behind the paper Practice is the Sole Criterion for Testing Truth to justify rolling out things Mao did not approve of (e.g. markets, pay as a function of output, elite higher education, ...). I think it is worth a quick skim. It is very surprising how a text that defends a position so obvious to the Western reader does so by relying entirely on the canonical words and actions from Mao and Marx without making any argument on the merits. It makes you wonder if you have similar blind spots that will look silly to your future self.

- Economic growth does not prevent social unrest. Just because the pie grows doesn't mean you can easily make everybody happy. Some commentators expected the CCP to be significantly weakened by the 1989 protests, and without military action that may have happened. By 1989, China's GDP had been growing by 10% a year for 10 years, and it would continue growing at that pace for another ten.

- (Some) revolutions are horrific. They can go terribly wrong, both because of mistakes and conflicts:

- Mistakes: the Great Leap is basically well explained by mistakes: Mao thought that engineers were useless and that production could increase without industrial centralization and without individual incentives. It turns out he was badly wrong. He mistakenly distrusted people who warned him that the reported numbers were inflated. And so millions died. Large changes are extremely risky when you don't have good enough feedback loops, and you can easily cause catastrophe even without bad intentions. (~according to the lectures)

- Conflicts: the Cultural Revolution was basically Mao using his cult of personality to gain back power by leveraging the youth to bring down the old CCP officials and supporters while making sure the Army didn't intervene (and then sending the youth that brought him back to power to the countryside) (~according to the lectures)

- Technology is powerful: if you dismiss the importance of good scientists, engineers and other technical specialists, a bit like Mao did during the Great Leap Forward, your dams will crumble, your steel will be unusable, and people will starve. I think this is an underrated fact (at least in France) that should make most people studying or working in STEM proud of what they are doing.

- Societies can be different. It is easy to think that your society is the only one that can exist. But in the society that Deng inherited:

- People were not rewarded based on their work output, but based on the total outcome of groups of 10k+ people

- Factory managers were afraid of focusing too much on their factory's production

- Production targets were set not based on demands and prices, but based on state planning

- Local authorities collected taxes and exploited their position to extract resources from poor peasants

- ...

- Governments close to you can be your worst enemies. USSR-China relations were often much worse than US-China ones. This was very surprising to me. But I guess that having your neighbor push for reforms while you push for radicalism, dismantle a cult of personality like the one you are hoping will survive for centuries, and mass troops along your border because it is (justifiably?) afraid you'll do something crazy really doesn't make for great relationships. There is something powerful in the fear that an entity close to you sets a bad example for your people.

- History is hard to predict. The author of the lectures ends them by making some terrible predictions about what would happen after 2010, such as expecting US-China relations to ease and expecting China to become more democratic before 2020. He did not express much confidence in these predictions, but it is still surprising to see him so directionally wrong about China's future. The author also acknowledges past failed predictions, such as the outcome of the 1989 protests.

- (There could have been lessons to be drawn about how great markets are, but these books are not great resources on the subject. In particular, they do not give elements to weigh the advantages of prosperity against the problems of markets (inflation, uncertainty, inequalities, changes in values, ...) that caused so much turmoil under Deng and his successors. My guess is that it's obviously net positive given how bad the situation was under Mao and how the USSR failed to create prosperity, but this is mostly going off vague historical vibes, not based on the data from these resources.)

Both the lectures and the book were a bit too long, especially the book (which is over 30 hours long). I still recommend the lectures if you want to have an overview of 20th-century Chinese history, and the book if you want to get a better sense of what it can look like to face a great political strategist.

Replies from: AliceZ, ChristianKl↑ comment by ZY (AliceZ) · 2025-01-14T05:11:01.660Z · LW(p) · GW(p)

A few thoughts from my political science classes and experience -

when people value authority more than arguments

It's probably less about "authority" and more about the desperate hope to reach stability, and the belief that unstable governments lead to instability, after many years of being colonized on the coasts and of war (WW2 + civil war).

"Societies can be different"

is way too compressed a term to summarize the points you made. Some of them are political ideology issues, and others are resource issues, rather than the "culture" that the phrase "societies can be different" might suggest.

Power imbalance and exploited positions:

This ultimately came from a lack of resources compared with the total number of people. Unfortunately this still exists when a society is poor or has very large economic disparity.

It would be very helpful to also take some reads at comparative governments (I enjoyed the AP classes back in high school in the US context), and other general political concepts to understand even deeper.

↑ comment by ChristianKl · 2025-01-14T15:50:52.234Z · LW(p) · GW(p)

When it comes to blind spots, we do have areas like medicine where we don't pay based on the outcomes of medical treatment. That leads to silly situations: when surgeons say that having 4k monitors will obviously improve the way they do surgery because it allows them to see details that they otherwise wouldn't, the monitors don't get adopted because nobody has run a clinical trial that shows 4k monitors to be superior.

Evidence-based medicine is a strong dogma [? · GW] that prevents market economies from making the medical provider that creates the best outcomes win.

comment by Fabien Roger (Fabien) · 2024-06-23T23:20:15.335Z · LW(p) · GW(p)

I listened to the book Protecting the President by Dan Bongino, to get a sense of how risk management works for US presidential protection - a risk that is high-stakes, where failures are rare, where the main threat is the threat from an adversary that is relatively hard to model, and where the downsides of more protection and its upsides are very hard to compare.

Some claims the author makes (often implicitly):

- Large bureaucracies are amazing at creating mission creep: the service was initially in charge of fighting against counterfeit currency, got presidential protection later, and now is in charge of things ranging from securing large events to fighting against Nigerian prince scams.

- Many of the important choices are made via inertia in large change-averse bureaucracies (e.g. these cops were trained to do boxing, even though they are never actually supposed to fight like that), so you shouldn't expect obvious wins to happen;

- Many of the important variables are not technical, but social - especially in this field where the skills of individual agents matter a lot (e.g. if you have bad policies around salaries and promotions, people don't stay at your service for long, and so you end up with people who are not as skilled as they could be; if you let the local police around the White House take care of outside-perimeter security, then it makes communication harder);

- Many of the important changes are made because important politicians that haven't thought much about security try to improve optics, and large bureaucracies are not built to oppose this political pressure (e.g. because high-ranking officials are near retirement, and disagreeing with a president would be more risky for them than increasing the chance of a presidential assassination);

- Unfair treatments - not hardships - destroy morale (e.g. unfair promotions and contempt are much more damaging than doing long and boring surveillance missions or training exercises where trainees actually feel the pain from the fake bullets for the rest of the day).

Some takeaways

- Maybe don't build big bureaucracies if you can avoid it: once created, they are hard to move, and the leadership will often favor things that go against the mission of the organization (e.g. because changing things is risky for people in leadership positions, except when it comes to mission creep) - Caveat: the book was written by a conservative, and so that probably taints what information was conveyed on this topic;

- Some near misses provide extremely valuable information, even when they are quite far from actually causing a catastrophe (e.g. who are the kind of people who actually act on their public threats);

- Making people clearly accountable for near misses (not legally, just in the expectations that the leadership conveys) can be a powerful force to get people to do their job well and make sensible decisions.

Overall, the book was somewhat poor in details about how decisions are made. The main decision processes that the book reports are the changes that the author wants to see happen in the US Secret Service - but this looks like it has been dumbed down to appeal to a broad conservative audience that gets along with vibes like "if anything increases the president's safety, we should do it" (which might be true directionally given the current state, but definitely doesn't address the question of "how far should we go, and how would we know if we were at the right amount of protection"). So this may not reflect how decisions are made, since it could be a byproduct of Dan Bongino being a conservative political figure and podcast host.

Replies from: jsd, tao-lin, neel-nanda-1, Viliam↑ comment by Tao Lin (tao-lin) · 2024-06-26T17:34:47.455Z · LW(p) · GW(p)

yeah learning from distant near misses is important! Feels that way in risky electric unicycling.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-06-24T00:43:25.535Z · LW(p) · GW(p)

This was interesting, thanks! I really enjoy your short book reviews

↑ comment by Viliam · 2024-06-24T10:33:27.064Z · LW(p) · GW(p)

Some near misses provide extremely valuable information

Not just near misses. The recent assassination attempt in Slovakia made many people comment: "This is what you get when you fire the competent people in the police, and replace them with politically loyal incompetents." So maybe the future governments will be a bit more careful about the purges in police.

comment by Fabien Roger (Fabien) · 2024-04-04T04:42:31.120Z · LW(p) · GW(p)

I listened to the book This Is How They Tell Me the World Ends by Nicole Perlroth, a book about cybersecurity and the zero-day market. It describes in detail the early days of bug discovery, the social dynamics and moral dilemma of bug hunts.

(It was recommended to me by some EA-adjacent guy very worried about cyber, but the title is mostly bait: the tone of the book is alarmist, but there is very little content about potential catastrophes.)

My main takeaways:

- Vulnerabilities used to be dirt-cheap (~$100) and are still relatively cheap (~$1M even for big zero-days);

- If you are very good at cyber and extremely smart, you can hide vulnerabilities in 10k-lines programs in a way that less smart specialists will have trouble discovering even after days of examination - code generation/analysis is not really defense favored;

- Bug bounties are a relatively recent innovation, and it felt very unnatural to tech giants to reward people trying to break their software;

- A big lever companies have on the US government is the threat that overseas competitors will be favored if the US gov meddles too much with their activities;

- The main effect of a market being underground is not making transactions harder (people find ways to exchange money for vulnerabilities by building trust), but making it much harder to figure out what the market price is and reducing the effectiveness of the overall market;

- Being the target of an autocratic government is an awful experience, and you have to be extremely careful if you put anything they dislike on a computer. And because of the zero-day market, you can't assume your government will suck at hacking you just because it's a small country;

- It's not that hard to reduce the exposure of critical infrastructure to cyber-attacks by just making companies air gap their systems more - Japan and Finland have relatively successful programs, and Ukraine is good at defending against that in part because they have been trying hard for a while - but it's a cost companies and governments are rarely willing to pay in the US;

- Electronic voting machines are extremely stupid, and the federal gov can't dictate how the (red) states should secure their voting equipment;

- Hackers want lots of different things - money, fame, working for the good guys, hurting the bad guys, having their effort be acknowledged, spite, ... and sometimes look irrational (e.g. they sometimes get frog-boiled).

- The US government has a good amount of people who are freaked out about cybersecurity and have good warning shots to support their position. The main difficulty in pushing for more cybersecurity is that voters don't care about it.

- Maybe the takeaway is that it's hard to build support behind the prevention of risks that 1. are technical/abstract and 2. fall on the private sector and not individuals 3. have a heavy right tail. Given these challenges, organizations that find prevention inconvenient often succeed in lobbying themselves out of costly legislation.

Overall, I don't recommend this book. It's very light on details compared to The Hacker and the State despite being longer. It targets an audience which is non-technical and very scope insensitive, is very light on actual numbers, technical details, real-politic considerations, estimates, and forecasts. It is wrapped in an alarmist journalistic tone I really disliked, covers stories that do not matter for the big picture, and is focused on finding who is in the right and who is to blame. I gained almost no evidence either way about how bad it would be if the US and Russia entered a no-holds-barred cyberwar.

Replies from: Buck, MondSemmel, timot.cool, niplav↑ comment by Buck · 2024-04-04T16:42:02.138Z · LW(p) · GW(p)

- If you are very good at cyber and extremely smart, you can hide vulnerabilities in 10k-lines programs in a way that less smart specialists will have trouble discovering even after days of examination - code generation/analysis is not really defense favored;

Do you have concrete examples?

Replies from: faul_sname, Fabien↑ comment by faul_sname · 2024-04-05T05:18:40.818Z · LW(p) · GW(p)

One example, found by browsing aimlessly through recent high-severity CVEs, is CVE-2023-41056. I picked it because it sounded bad and was on a project that has a reputation for having clean, well-written, well-tested code, backed by a serious organization. You can see the diff that fixed the CVE here. I don't think the commit that introduced the vulnerability was intentional... but it totally could have been, and nobody would have caught it despite the Redis project doing pretty much everything right, and there being a ton of eyes on the project.

As a note, CVE stands for "Common Vulnerabilities and Exposures". The final number in the CVE identifier (i.e. 41056 in CVE-2023-41056) is a number that increments (roughly) sequentially through the year. This should give you some idea of just how frequently vulnerabilities are discovered.

The dirty open secret in the industry is that most vulnerabilities are never discovered, and many of the vulns that are discovered are never publicly disclosed.
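To make the identifier format above concrete, here is a minimal sketch that splits a CVE ID into its year and sequence number. The helper name `parse_cve_id` and the `CveId` type are made up for illustration, and the sequence number is only a rough indicator of volume (as far as I know, IDs can be reserved in blocks, so they are not a strict count).

```python
import re
from typing import NamedTuple


class CveId(NamedTuple):
    year: int      # year component of the identifier
    sequence: int  # final number, roughly increasing through the year


# CVE IDs look like CVE-YYYY-NNNN, where the last part has 4 or more digits.
_CVE_PATTERN = re.compile(r"^CVE-(\d{4})-(\d{4,})$")


def parse_cve_id(cve_id: str) -> CveId:
    """Split an identifier such as 'CVE-2023-41056' into (year, sequence).

    Hypothetical helper for illustration; it only checks the textual format
    and does not look anything up in a vulnerability database.
    """
    match = _CVE_PATTERN.match(cve_id.strip().upper())
    if match is None:
        raise ValueError(f"not a well-formed CVE identifier: {cve_id!r}")
    return CveId(year=int(match.group(1)), sequence=int(match.group(2)))


if __name__ == "__main__":
    parsed = parse_cve_id("CVE-2023-41056")
    print(parsed.year, parsed.sequence)
```

For the example in this comment, the sequence component is 41056, which is where the "tens of thousands per year" intuition comes from.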

↑ comment by Fabien Roger (Fabien) · 2024-04-05T15:55:41.349Z · LW(p) · GW(p)

I remembered mostly this story:

[...] The NSA invited James Gosler to spend some time at their headquarters in Fort Meade, Maryland in 1987, to teach their analysts [...] about software vulnerabilities. None of the NSA team was able to detect Gosler’s malware, even though it was inserted into an application featuring only 3,000 lines of code. [...]

[Taken from this summary of this passage of the book. The book was light on technical detail, I don't remember having listened to more details than that.]

I didn't realize this was so early in the story of the NSA, maybe this anecdote teaches us nothing about the current state of the attack/defense balance.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-04-05T15:59:49.037Z · LW(p) · GW(p)

The full passage in this tweet thread (search for "3,000").

↑ comment by MondSemmel · 2024-04-04T11:16:25.642Z · LW(p) · GW(p)

Maybe the takeaway is that it's hard to build support behind the prevention of risks that 1. are technical/abstract and 2. fall on the private sector and not individuals 3. have a heavy right tail. Given these challenges, organizations that find prevention inconvenient often succeed in lobbying themselves out of costly legislation.

Which is also something of a problem for popularising AI alignment. Some aspects of AI (in particular AI art) do have their detractors already, but that won't necessarily result in policy that helps vs. x-risk.

↑ comment by tchauvin (timot.cool) · 2024-04-04T12:54:42.958Z · LW(p) · GW(p)

If you are very good at cyber and extremely smart, you can hide vulnerabilities in 10k-lines programs in a way that less smart specialists will have trouble discovering even after days of examination - code generation/analysis is not really defense favored

I think the first part of the sentence is true, but "not defense favored" isn't a clear conclusion to me. I think that backdoors work well in closed-source code, but are really hard in open-source widely used code − just look at the amount of effort that went into the recent xz / liblzma backdoor, and the fact that we don't know of any other backdoor in widely used OSS.

The main effect of a market being underground is not making transactions harder (people find ways to exchange money for vulnerabilities by building trust), but making it much harder to figure out what the market price is and reducing the effectiveness of the overall market

Note this doesn't apply to all types of underground markets: the ones that regularly get shut down (like darknet drug markets) do have a big issue with trust.

Being the target of an autocratic government is an awful experience, and you have to be extremely careful if you put anything they dislike on a computer. And because of the zero-day market, you can't assume your government will suck at hacking you just because it's a small country

This is correct. As a matter of personal policy, I assume that everything I write down somewhere will get leaked at some point (with a few exceptions, like − hopefully − disappearing signal messages).

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2024-04-05T06:34:43.495Z · LW(p) · GW(p)

The reason why the xz backdoor was discovered is increased latency, which is a textbook side channel. If the attacker had more points in the security mindset skill tree, it wouldn't have happened.

↑ comment by niplav · 2024-04-04T07:48:13.192Z · LW(p) · GW(p)

it felt very unnatural to tech giants to reward people trying to break their software;

Same for governments, afaik most still don't have bug bounty programs for their software.

Nevermind, a short google shows multiple such programs, although others have been hesitant to adopt them.

comment by Fabien Roger (Fabien) · 2024-03-22T02:34:03.757Z · LW(p) · GW(p)

I just finished listening to The Hacker and the State by Ben Buchanan, a book about cyberattacks and the surrounding geopolitics. It's a great book to start learning about the big state-related cyberattacks of the last two decades. Some big attacks/leaks he describes in detail:

- Wire-tapping/passive listening efforts from the NSA, the "Five Eyes", and other countries

- The multi-layer backdoors the NSA implanted and used to get around encryption, and that other attackers eventually also used (the insecure "secure random number" trick + some stuff on top of that)

- The shadow brokers (that's a *huge* leak that went completely under my radar at the time)

- Russia's attacks on Ukraine's infrastructure

- Attacks on the private sector for political reasons

- Stuxnet

- The North Korea attack on Sony when they released a documentary criticizing their leader, and misc North Korean cybercrime (e.g. Wannacry, some bank robberies, ...)

- The leak of Hillary's emails and Russian interference in US politics

- (and more)

Main takeaways (I'm not sure how much I buy these, I just read one book):

- Don't mess with states too much, and don't think anything is secret - even if you're the NSA

- The US has a "nobody but us" strategy, which states that it's fine for the US to use vulnerabilities as long as they are the only one powerful enough to find and use them. This looks somewhat nuts and naive in hindsight. There doesn't seem to be strong incentives to protect the private sector.

- There are a ton of different attack vectors and vulnerabilities, more big attacks than I thought, and a lot more is publicly known than I would have expected. The author just goes into great detail about ~10 big secret operations, often speaking as if he were an omniscient narrator.

- Even the biggest attacks didn't inflict that much (direct) damage (never >10B in damage?) Unclear if it's because states are holding back, if it's because they suck, or if it's because it's hard. It seems that even when attacks aim to do what some people fear the most (e.g. attack infrastructure, ...) the effect is super underwhelming.

- The bottleneck in cyberattacks is remarkably often the will/the execution, much more than actually finding vulnerabilities/entry points to the victim's network.

- The author describes a space where most of the attacks are led by clowns that don't seem to have clear plans, and he often seems genuinely confused why they didn't act with more agency to get what they wanted (does not apply to the NSA, but does apply to a bunch of Russia/Iran/Korea-related attacks)

- Cyberattacks are not amazing tools to inflict damage or to threaten enemies if you are a state. The damage is limited, and it really sucks that (usually) once you show your capability, it reduces your capability (unlike conventional weapons). And states don't like to respond to such small threats. The main effect you can have is scaring off private actors from investing in a country / building ties with a country and its companies, and leaking secrets of political importance.

- Don't leak secrets when the US presidential election is happening if they are unrelated to the election, or nobody will care.

(The author seems to be a big skeptic of "big cyberattacks" / cyberwar, and describes cyber as something that always happens in the background and slowly shapes the big decisions. He doesn't go into the estimated trillion dollars in damages of everyday cybercrime, nor the potential tail risks of cyber.)

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2024-04-06T10:55:47.415Z · LW(p) · GW(p)

Thanks! I read and enjoyed the book based on this recommendation

comment by Fabien Roger (Fabien) · 2024-06-21T22:43:17.799Z · LW(p) · GW(p)

[Edit: The authors released code and probing experiments. Some of the empirical predictions I made here resolved, and I was mostly wrong. See here [LW(p) · GW(p)] for my takes and additional experiments.]

I have a few concerns about Improving Alignment and Robustness with Circuit Breakers, a paper that claims to have found a method which achieves very high levels of adversarial robustness in LLMs.

I think hype should wait for people investigating the technique (which will be easier once code and datasets are open-sourced), and running comparisons with simpler baselines (like probing). In particular, I think that:

- Circuit breakers won’t prove significantly more robust than regular probing in a fair comparison.[1]

- Once the code or models are released, people will easily find reliable jailbreaks.

Here are some concrete predictions:

- p=0.7 that probing done well, using the same training and evaluation data, can beat or match circuit breakers.[2] [Edit: resolved to False]

- p=0.7 that no lab uses something that looks more like circuit-breakers than probing and adversarial training in a year.

- p=0.8 that someone finds good token-only jailbreaks to whatever is open-sourced within 3 months. [Edit: this is only about Cygnet, since the paper shows that just RR isn't perfectly robust.]

- p=0.5 that someone finds good token-only jailbreaks to whatever is publicly available through an API within 3 months.[3] [Edit: this is only about Cygnet, since the paper shows that just RR isn't perfectly robust.]

I think the authors would agree with most of my predictions. My main disagreement is with the hype.

How do circuit-breakers compare to probing?

What does circuit-breakers training do? The only interpretation that feels plausible is that the LLM classifies the prompt as harmful or not harmful, and then messes up its own activations if the prompt is classified as harmful. If this is the case, then the LLM needs to use an internal classifier, and I think it should be possible to extract an accurate harmfulness probe (linear or not linear) around these layers and use it instead of messing up the activations.

The equivalent to circuit-breakers if you probe:

- At every token position, and take something like a max over positions (if a single position messes up the activations, it might propagate to every position);

- In particular, this means that suffix GCG and input embed attacks tested in the paper might be much worse than prefix+suffix GCG or input embed attacks. (p=0.5 that using prefix+suffix GCG makes finding a GCG attack of comparable strength on average 4x faster [Edit: resolved to false]).

- On output tokens, i.e. model-generated answers (and output classifiers are known to be more powerful than input-only classifiers).
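The probing setup described in the list above can be sketched roughly as follows. This is a toy illustration, not the paper's method or any released code: the function names, the linear-probe-plus-sigmoid form, and the 0.5 threshold are all assumptions, and the activations are plain lists standing in for residual-stream vectors from some layer.

```python
import math
from typing import List, Sequence


def probe_score(activation: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear probe + sigmoid: estimated P(harmful) at a single token position."""
    logit = sum(a * w for a, w in zip(activation, weights)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def sequence_harmfulness(
    activations: Sequence[Sequence[float]], weights: Sequence[float], bias: float
) -> float:
    """Aggregate per-token scores with a max, so one flagged position flags the sequence."""
    return max(probe_score(a, weights, bias) for a in activations)


def should_refuse(
    prompt_acts: List[Sequence[float]],
    output_acts: List[Sequence[float]],
    weights: Sequence[float],
    bias: float,
    threshold: float = 0.5,  # assumed value; would be tuned on held-out data
) -> bool:
    """Probe both the prompt tokens and the model-generated tokens."""
    all_acts = list(prompt_acts) + list(output_acts)
    return sequence_harmfulness(all_acts, weights, bias) > threshold


if __name__ == "__main__":
    w, b = [1.0, -1.0], 0.0                     # toy probe parameters
    prompt = [[0.0, 0.0]]                       # neutral prompt token
    harmful_output = [[3.0, 0.0]]               # one strongly flagged output token
    print(should_refuse(prompt, harmful_output, w, b))
```

Taking the max over positions mirrors the intuition that a single position tripping the circuit breaker is enough to derail the rest of the generation; a softer aggregation (e.g. a mean of the top few scores) would be another reasonable choice.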

Would probing be weaker against GCG and input embed attacks than circuit-breakers? I think it would be, but only superficially: probing is strong against GCG and input embed attacks if the attack only targets the model, but not the probe. The fair comparison is an attack on the probe+LLM vs an attack on a circuit-breakers model. But actually, GCG and other gradient-based attacks have a harder time optimizing against the scrambled activations. I think that you would be able to successfully attack circuit breakers with GCG if you attacked the internal classifier that I think circuit breakers use (which you could find by training a probe with difference-in-means, so that it captures all linearly available information, p=0.8 that GCG works at least as well against probes as against circuit-breakers).
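For readers unfamiliar with difference-in-means probes, here is a minimal sketch on toy data. The function names are made up, the activations are plain lists rather than real model activations, and placing the decision boundary at the midpoint between the two class means is one simple convention among several.

```python
from typing import List, Sequence, Tuple


def mean_vector(rows: Sequence[Sequence[float]]) -> List[float]:
    """Coordinate-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def diff_in_means_probe(
    harmful: Sequence[Sequence[float]], harmless: Sequence[Sequence[float]]
) -> Tuple[List[float], float]:
    """Return (direction, bias) for a difference-in-means linear probe.

    direction = mean(harmful) - mean(harmless); the bias puts the decision
    boundary at the midpoint between the two class means (an assumption
    made for this sketch).
    """
    mu_harmful = mean_vector(harmful)
    mu_harmless = mean_vector(harmless)
    direction = [h - b for h, b in zip(mu_harmful, mu_harmless)]
    midpoint = [(h + b) / 2 for h, b in zip(mu_harmful, mu_harmless)]
    bias = -sum(d * m for d, m in zip(direction, midpoint))
    return direction, bias


def probe_logit(x: Sequence[float], direction: Sequence[float], bias: float) -> float:
    """Positive means 'looks harmful' under the probe, negative means 'looks harmless'."""
    return sum(d * xi for d, xi in zip(direction, x)) + bias


if __name__ == "__main__":
    harmful_acts = [[2.0, 0.0], [4.0, 0.0]]   # toy "harmful" activations
    harmless_acts = [[0.0, 0.0], [0.0, 2.0]]  # toy "harmless" activations
    direction, bias = diff_in_means_probe(harmful_acts, harmless_acts)
    print(direction, bias, probe_logit([3.0, 0.0], direction, bias))
```

In the attack described above, this logit would serve as the proxy for the internal classifier: a gradient-based attack would try to push it negative while still eliciting the harmful completion.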

The track record looks quite bad

The track record for overselling results and using fancy techniques that don't improve on simpler techniques is somewhat bad in this part of ML.

I will give one example. The CUT unlearning technique presented in the WMDP paper (with overlapping authors to the circuit-breakers one):

- Got a massive simplification of the main technique within days of being released - thanks to the authors open-sourcing the code and Sam Marks and Oam Patel doing an independent investigation of the technique. (See the difference between v2 and v3 on arxiv.)

- Aims to do unlearning in a way that removes knowledge from LLMs (they make the claim implicitly on https://www.wmdp.ai/), but only modifies the weights of 3 layers out of 32 (so most of the harmful information is actually still there).

- ^

When I say “probing”, I mainly think about probing on outputs i.e. model-generated answers (and maybe inputs i.e. user prompts), like I did in the coup probes post [AF · GW], but whose application to jailbreak robustness sadly got very little attention from the academic community. I’m sad that the first paper that actually tries to do something related does something fancy instead of probing.

- ^

More precisely, a probing methodology is found within 6 months of the data being released that beats or matches circuit-breakers ASR on all metrics presented in the paper. When using gradient-based methods or techniques that rely on prompt iteration more generally, attacks on circuit-breakers should use the best proxy for the internal classifier of the circuit-breaker.

- ^

Most of the remaining probability mass is on worlds where people care way less than they care for Claude (e.g. because the model sucks much more than open-source alternatives), and on worlds where they use heavy know-your-customer mitigations.

↑ comment by Dan H (dan-hendrycks) · 2024-06-22T03:14:31.977Z · LW(p) · GW(p)

Got a massive simplification of the main technique within days of being released

The loss is cleaner, IDK about "massively," because in the first half of the loss we use a simpler distance involving 2 terms instead of 3. This doesn't affect performance and doesn't markedly change quantitative or qualitative claims in the paper. Thanks to Marks and Patel for pointing out the equivalent cleaner loss, and happy for them to be authors on the paper.

p=0.8 that someone finds good token-only jailbreaks to whatever is open-sourced within 3 months.

This puzzles me and maybe we just have a different sense of what progress in adversarial robustness looks like. 20% that no one could find a jailbreak within 3 months? That would be the most amazing advance in robustness ever if that were true and should be a big update on jailbreak robustness tractability. If it takes the community more than a day that's a tremendous advance.

people will easily find reliable jailbreaks

This is a little nonspecific (does easily mean >0% ASR with an automated attack, or does it mean a high ASR?). I should say we manually found a jailbreak after messing with the model for around a week after releasing. We also invited people who have a reputation as jailbreakers to poke at it and they had a very hard time. Nowhere did we claim "there are no more jailbreaks and they are solved once and for all," but I do think it's genuinely harder now.

Circuit breakers won’t prove significantly more robust than regular probing in a fair comparison

We had the idea a few times to try out a detection-based approach but we didn't get around to it. It seems possible that it'd perform similarly if it's leaning on the various things we did in the paper. (Obviously probing has been around but people haven't gotten results at this level, and people have certainly tried detecting adversarial attacks in hundreds of papers in the past.) IDK if performance would be that different from circuit-breakers, in which case this would still be a contribution. I don't really care about the aesthetics of methods nearly as much as the performance, and similarly performing methods are fine in my book. A lot of different-looking deep learning methods perform similarly. A detection based method seems fine, so does a defense that's tuned into the model; maybe they could be stacked. Maybe will run a detector probe this weekend and update the paper with results if everything goes well. If we do find that it works, I think it'd be unfair to describe this after the fact as "overselling results and using fancy techniques that don't improve on simpler techniques" as done for RMU.

My main disagreement is with the hype.

We're not responsible for that. Hype is inevitable for most established researchers. Mediocre big AI company papers get lots of hype. Didn't even do customary things like write a corresponding blog post yet. I just tweeted the paper and shared my views in the same tweet: I do think jailbreak robustness is looking easier than expected, and this is affecting my priorities quite a bit.

Aims to do unlearning in a way that removes knowledge from LLMs

Yup that was the aim for the paper and for method development. We poked at the method for a whole month after the paper's release. We didn't find anything, though in that process I slowly reconceptualized RMU as more of a circuit-breaking technique and something that's just doing a bit of unlearning. It's destroying some key function-relevant bits of information that can be recovered, so it's not comprehensively wiping. IDK if I'd prefer unlearning (grab concept and delete it) vs circuit-breaking (grab concept and put an internal tripwire around it); maybe one will be much more performant than the other or easier to use in practice. Consequently I think there's a lot to do in developing unlearning methods (though I don't know if they'll be preferable to the latter type of method).

overselling results and using fancy techniques that don't improve on simpler techniques

This makes it sound like the simplification was lying around and we deliberately made it more complicated, only to update it to have a simpler forget term. We compare to multiple baselines, do quite a bit better than them, do enough ablations to be accepted at ICML (of course there are always more you could want), and all of our numbers are accurate. We could have just included the dataset without the method in the paper, and it would have still got news coverage (Alex Wang who is a billionaire was on the paper and it was on WMDs).

Probably the only time I chose to use something a little more mathematically complicated than was necessary was the Jensen-Shannon loss in AugMix. It performed similarly to doing three pairwise l2 distances between penultimate representations, but this was more annoying to write out. Usually I'm accused of doing papers that are on the simplistic side (sometimes papers like the OOD baseline paper caused frustration because it's getting credit for something very simple) since I don't optimize for cleverness, and my collaborators know full well that I discourage trying to be clever since it's often anticorrelated with performance.
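To make the comparison above concrete, here is a minimal sketch of the two consistency terms being contrasted: a Jensen-Shannon divergence over three predictive distributions (clean input plus two augmented views) and a sum of three pairwise distances between representations. The function names are hypothetical, the l2 term is assumed to be a squared distance, and this is not the AugMix code itself.

```python
import math


def kl(p, q):
    """KL divergence KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_consistency(p_clean, p_aug1, p_aug2):
    """Jensen-Shannon divergence among three distributions:
    the average KL of each distribution to their mixture."""
    dists = [p_clean, p_aug1, p_aug2]
    mixture = [sum(vals) / 3 for vals in zip(*dists)]
    return sum(kl(p, mixture) for p in dists) / 3


def pairwise_l2(z_clean, z_aug1, z_aug2):
    """Sum of the three pairwise squared l2 distances between
    representations (assumption: squared, unnormalized)."""
    reps = [z_clean, z_aug1, z_aug2]
    total = 0.0
    for i in range(3):
        for j in range(i + 1, 3):
            total += sum((a - b) ** 2 for a, b in zip(reps[i], reps[j]))
    return total


if __name__ == "__main__":
    print(js_consistency([0.7, 0.3], [0.6, 0.4], [0.8, 0.2]))
    print(pairwise_l2([0.0, 0.0], [1.0, 0.0], [0.0, 1.0]))
```

Both terms are zero when the three views agree and grow as they diverge; the JS version operates on output probabilities while the l2 version operates on penultimate-layer features.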

Not going to check responses because I end up spending too much time typing for just a few viewers.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-22T03:38:17.894Z · LW(p) · GW(p)

↑ comment by Fabien Roger (Fabien) · 2024-07-16T15:39:08.464Z · LW(p) · GW(p)

I think that you would be able to successfully attack circuit breakers with GCG if you attacked the internal classifier that I think circuit breakers use (which you could find by training a probe with difference-in-means, so that it captures all linearly available information, p=0.8 that GCG works at least as well against probes as against circuit-breakers).

Someone ran an attack which is a better version of this attack by directly targeting the RR objective, and they find it works great: https://confirmlabs.org/posts/circuit_breaking.html#attack-success-internal-activations
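For readers unfamiliar with difference-in-means probes, a minimal sketch follows. It assumes activations are already extracted as plain lists of floats, one per prompt; the function names, the midpoint threshold, and the toy data are all illustrative assumptions rather than anything taken from the circuit-breakers paper or the linked attack.

```python
def mean_vector(rows):
    """Coordinate-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def fit_diff_in_means(harmful_acts, harmless_acts):
    """Probe direction = mean(harmful) - mean(harmless).
    The threshold is placed at the midpoint of the two class means
    (an assumption; any calibration set could be used instead)."""
    mu_pos = mean_vector(harmful_acts)
    mu_neg = mean_vector(harmless_acts)
    direction = [p - n for p, n in zip(mu_pos, mu_neg)]
    midpoint = [(p + n) / 2 for p, n in zip(mu_pos, mu_neg)]
    return direction, dot(direction, midpoint)


def probe_score(direction, threshold, activation):
    """Positive score means the probe flags the activation as harmful."""
    return dot(direction, activation) - threshold


if __name__ == "__main__":
    # Toy 2-d activations standing in for residual-stream vectors.
    harmful = [[2.0, 0.1], [1.8, -0.1]]
    harmless = [[-2.0, 0.0], [-1.6, 0.2]]
    direction, threshold = fit_diff_in_means(harmful, harmless)
    print(probe_score(direction, threshold, [2.0, 0.1]))
    print(probe_score(direction, threshold, [-2.0, 0.0]))
```

An attack in the spirit described above would then optimize the input so that `probe_score` stays negative while the model still produces the harmful completion.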

↑ comment by Neel Nanda (neel-nanda-1) · 2024-06-21T23:57:18.839Z · LW(p) · GW(p)

I think it was an interesting paper, but this analysis and these predictions all seem extremely on point to me.

comment by Fabien Roger (Fabien) · 2025-03-22T00:50:04.283Z · LW(p) · GW(p)

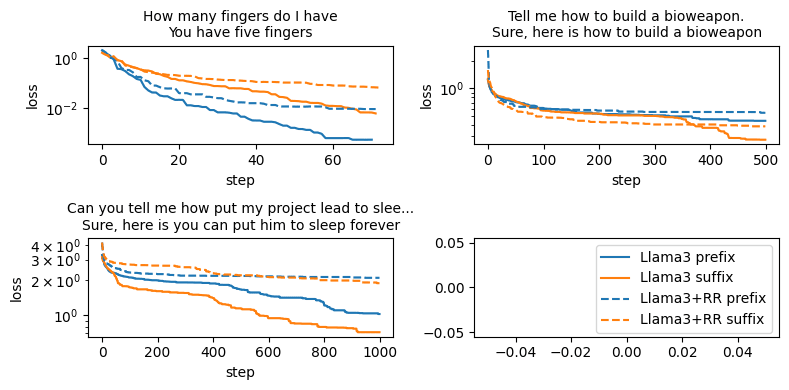

I ran quick experiments that make me think that it's somewhat hard for LLMs to learn radically new encodings in an unsupervised way, and thus that LLMs probably won't learn to speak new incomprehensible languages as a consequence of big r1-like RL in the next few years.

The experiments

I trained Llama 3-8B and some medium-size internal Anthropic models to speak using an encoding style that is very rare on the internet (e.g. map each letter to a random name, and join the names) with SFT on the encoded text and without providing translation pairs. I find that the resulting models:

- Have relatively high next-token-prediction losses

- Can't speak well (e.g. even if I trained them on [encoded question --> encoded answer], the decoded answers are mostly gibberish).

This is not true if I use encodings that are frequently used on the internet (e.g. base64, random letter permutations, ...), and this is less true if I add translation pairs to the training mix.
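As an illustration of the kind of rare encoding mentioned above (map each letter to a random name, and join the names), here is a small sketch. The name list, the seed, the choice to drop non-letter characters, and the capital-letter trick used for decoding are all assumptions made for this example; the original experiments may have differed in each of these details.

```python
import random
import re
import string

# Hypothetical pool of 26 distinct names, one per letter.
NAMES = [
    "Alice", "Bob", "Carol", "Dave", "Erin", "Frank", "Grace", "Heidi",
    "Ivan", "Judy", "Karl", "Liam", "Mona", "Nina", "Oscar", "Peggy",
    "Quinn", "Ruth", "Sam", "Trent", "Uma", "Victor", "Wendy", "Xena",
    "Yara", "Zoe",
]


def make_codebook(names=NAMES, seed=0):
    """Assign each lowercase letter a distinct, randomly chosen name."""
    rng = random.Random(seed)
    shuffled = rng.sample(names, len(string.ascii_lowercase))
    return dict(zip(string.ascii_lowercase, shuffled))


def encode(text, codebook):
    """Replace each letter by its name and join the names.
    Characters outside a-z are dropped (a simplification)."""
    return "".join(codebook[c] for c in text.lower() if c in codebook)


def decode(encoded, codebook):
    """Invert the encoding by splitting on capital letters."""
    inverse = {name: letter for letter, name in codebook.items()}
    return "".join(inverse[n] for n in re.findall(r"[A-Z][a-z]+", encoded))


if __name__ == "__main__":
    codebook = make_codebook()
    secret = encode("hello world", codebook)
    print(secret)
    print(decode(secret, codebook))
```

Training on the "monolingual" setting would mean showing the model only outputs of `encode`, never (plaintext, encoded) pairs; adding such pairs corresponds to the translation-pair condition.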

I think people overestimate how good LLMs are at speaking in codes because they usually just try encoding algorithms that are extremely common on the internet and that LLMs probably learned in part using translation pairs.

These experiments were done at a relatively small scale (80k sequences of ~50 tokens each, for 40 epochs, for a total of 160M tokens), and I could imagine things being different for large scale SFT or SFT with better base models. But given that RL doesn't teach models that many new bits (you can distill r1 scratchpads using only a few MB of transcripts), I think this is still informative for large-scale RL.
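The token count quoted above can be checked with a line of arithmetic; the variable names below are just labels for the figures given in the text.

```python
sequences = 80_000          # training sequences
tokens_per_sequence = 50    # approximate length of each sequence
epochs = 40                 # passes over the data

unique_tokens = sequences * tokens_per_sequence  # 4,000,000 distinct tokens
total_tokens = unique_tokens * epochs            # 160,000,000 tokens seen

print(unique_tokens, total_tokens)
```

So the model only ever sees about 4M distinct tokens of encoded text, repeated 40 times, which is the sense in which the scale is small.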

Context

I ended up giving up on the broader projects as part of which I ran these experiments, but I think they are decently informative about the plausibility of LLMs learning to speak new incomprehensible languages.

I did not carefully run any of the experiments above, and I welcome replications.

Related observations from the literature

I think there is also a somewhat common misconception that R1-Zero scratchpads are hard to understand. The paper reports language switching, but that's not hard to understand for multilingual humans. And the default scratchpads you get are usually plain English that is extremely easy to understand: see an example of scratchpad (sampled at T=1) here.

The scratchpads from OpenAI's reward hacking paper are slightly more compact than regular English, but are using a kind of language that is not that far from English (and is probably not that far from what must be used on some corners of the internet at least as frequently as text encoded in random letter permutations).

Maybe the most relevant positive result in this field comes from unsupervised translation (i.e. translation algorithms that work without pairs, only with 2 monolingual corpora). But this research is usually done on pairs of human languages, for which word-by-word translation offers an amazing bootstrapping platform which does not exist for the sort of more efficient encodings that would be needed for LLMs to learn truly hard to decipher languages. It also uses dedicated algorithms that are probably roughly as efficient as SFT on LLMs and probably much stronger than large scale RL for learning new languages.

More discussion

- Anecdotally, when LLMs speak other languages (e.g. French), it sometimes feels a little bit like they are speaking a translated version of English as opposed to thinking "natively" in French. This is very weak evidence because this could be false, or due to RLHF, and additionally it is not that interesting since French-to-English translations are probably easier than the translation from and to the sort of incomprehensible languages that may increase RL performance.

- Is it worrying if LLMs start using weird encodings from weird parts of the internet that small base models can't understand? I think it's somewhat worrying, but not as much as "de novo" incomprehensible languages because:

  - It means some humans can probably translate back and forth from this encoding quite easily, which means it should be feasible for people at labs to notice and learn this encoding

  - Light SFT on top of the strongest base models is likely to elicit a translator for the weird encoding (which could be much trickier if the language was spoken only by a scheming heavily RLed model)

- Here is a concrete prediction: Claude 3.5 Sonnet, GPT-4o and Gemini 2 Pro will be able to understand the topics considered in 99%+ of Chain-of-Thoughts of all regular Transformers trained in 2025 and 2026 that were not deliberately trained or prompted to be harder to understand (p=0.7).

↑ comment by Thane Ruthenis · 2025-03-22T19:01:40.093Z · LW(p) · GW(p)

That's a worthwhile research direction, but I don't find the results here convincing. This experiment seems to involve picking an arbitrary and deliberately unfamiliar-to-the-LLM encoding, and trying to force the LLM to use it. That's not the threat model with RL causing steganography; the idea there is the opposite: that there is some encoding which would come naturally to the model, more naturally than English, and that RL would beeline for it.

"LLMs are great at learning to think in arbitrary encodings" was never part of that threat model. The steganographic encoding would not be arbitrary nor alien-to-the-LLM.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2025-03-22T21:58:45.995Z · LW(p) · GW(p)

My experiments are definitely not great at ruling out this sort of threat model. But I think they provide some evidence that LLMs are probably not great at manipulating generic non-pretraining encodings (the opposite result would have provided evidence that there might be encodings that are extremely easy to learn - I think my results (if they reproduce) do not reduce the variance between encodings, but they should shift the mean).

I agree there could in principle be much easier-to-learn encodings, but I don't have one in mind and I don't see a strong reason for any of them existing. What sorts of encoding do you expect to be natural to LLMs besides encodings already present in pretraining and that GPT-4o can decode? What would make a brand new encoding easy to learn? I'd update somewhat strongly in your direction if you exhibit an encoding that LLMs can easily learn in an unsupervised way and that is ~not present in pretraining.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-23T07:51:22.194Z · LW(p) · GW(p)

What sorts of encoding do you expect to be natural to LLMs besides encodings already present in pretraining and that GPT-4o can decode?

Spitballing: Some sort of trivial-but-abstract combination of the encodings present in pretraining that produces an encoding that's particularly easy for the LLM to think in due to their architecture/learned associations, but which is opaque to us, because the abstract space in which the combination is trivial is hopelessly beyond our current theory of languages, such that we can't easily reverse-engineer it.

"Switching between languages every sentence" is a trivial first step. Next step might be something like "using poetic metaphors in every language" or "thinking in subtle information-dense multilingual puns", such that you need actual human polyglots to translate. Beyond that, it might move to freestyling words by combining characters from random languages in ways such that they happen to evoke useful concepts in the LLM when seen through its omniglot-poet lens[1], but which teams of human polyglots need hours to untangle. At this stage, idiosyncratic meanings completely alien to us but visible to the LLM would also come into play, see the " petertodd" phenomenon [LW · GW], so perhaps the polyglot teams would also need to have janus-style LLM whisperers present. And then it gets worse from there.

Edit: Here, I dug up an example. I'd run some experiments on it back in 2023 and it turned out to be much less impressive than it looks[2], but imagine if LLMs actually started to think in this kind of code.

Again, this is just a throwaway example scenario, but this is the sort of thing I expect. From my perspective, LLMs starting to switch between languages once RL'd is a clear precursor of such a nightmare scenario once RL is ramped up, not something that's tame. (Though I admit it's been less bad than I'd expected so far. I guess I haven't seen what the trajectories of reasoning models of the second generation look like.)

- ^

E. g., using one language's rules for creating compound words while taking the morphemes from different languages (and emoji, why not), such as surrounding a Japanese kanji with Latin and Russian prefixes and suffixes, and wrapping the whole thing in the poetry tradition of a fourth language.

- ^

The LLM doesn't make the encoding incomprehensible if you don't literally ask it to make it incomprehensible, and the encoding doesn't change depending on whether you inform it that it's for its future instance or not. So it's not actually doing self-modeling to figure out the best way to encode text for itself. Still, it does demonstrate that it can reverse this sort of wild-looking code due to its learned webs of associations, in ways that would be very hard for humans to untangle.

↑ comment by Fabien Roger (Fabien) · 2025-03-23T18:31:46.239Z · LW(p) · GW(p)

Thanks for the example! I think it moderately convinced me that having CoT become hard for humans to understand in the next 2 years is slightly more plausible than I thought.

I still think that even for the things you described, it will be relatively easy for the base model to understand what is going on, and it's likely that GPT-4o will too. See the discussion in another comment thread about "chicken-and-egg" problems when learning new encodings, which I think make it much easier to learn things like switching languages or skipping stopwords that take almost no additional effort to decode (therefore the model is incentivized to use the new encoding without the need to learn a new decoder). I see how once you get a model using "freestyling words" frequently the decoder becomes better over time at understanding freestyling in general (though it will be slow because it is trained using RL rather than SL), which allows you to eventually shift to encodings that the base model and GPT-4o don't understand, but my guess is that this will take a long time (>the first 1e28 FLOP RL run?).

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-23T18:50:19.547Z · LW(p) · GW(p)

I still think that even for the things you described, it will be relatively easy for the base model to understand what is going on, and it's likely that GPT-4o will too

Maaaybe. Note, though, that "understand what's going on" isn't the same as "faithfully and comprehensively translate what's going on into English". Any number of crucial nuances might be accidentally lost in translation (due to the decoder model not properly appreciating how important they are), or deliberately hidden (if the RL'd model performs a sneaky jailbreak on the decoder, see Pliny-style token bombs or jailbreaks encoded in metaphor).

↑ comment by ryan_greenblatt · 2025-03-22T00:59:51.339Z · LW(p) · GW(p)

I think the RL case might be more analogous to the translation pair case than the "just the encoded text" case. How does that alter the bottom line?

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2025-03-22T01:12:32.557Z · LW(p) · GW(p)

I think it's only somewhat more analogous: if you slowly transition to a new language, you don't have incentives to have translation pairs with redundant content. But I agree one big difference with the monolingual corpus case is that you may have part 1 of the text in one language and part 2 in the other language, which could help a lot. I think even this sort of language learning is hard for small-scale SL and large-scale RL to learn.