Comments

Another aspect is age. In 1917, half of Russia's population was younger than 18, while modern Russia has a median age of 39, with all that implies for political stability and conservatism.

I'm not commenting on the positive suggestions in this post, but it has a really strange understanding of what it argues against.

an 'Axiom of Rational Convergence'. This is the powerful idea that under sufficiently ideal epistemic conditions – ample time, information, reasoning ability, freedom from bias or coercion – rational agents will ultimately converge on a single, correct set of beliefs, values, or plans, effectively identifying 'the truth'.

There is no reason to "agree on values" (in the sense of actively seeking agreement rather than observing it where it exists), and I don't think many rationalists would argue with that? Values are free parameters in rational behavior; they can be arbitrary. Different humans have different values and this is fine. CEV is about acting where human values are coherent, not about imposing coherence out of nowhere.

I feel really weird about this article, because I at least spiritually agree with the conclusion, and my lower bound on an AGI extinction scenario is "automated industry grows 40%/y, at first everybody becomes filthy rich, and in the next decade the necrosphere devours everything". This scenario seems to be weirdly underdiscussed relative to "AGI develops self-sustaining supertech and kills everyone within one month" by both skeptics and doomers.

That said, I disagree with almost everything else.

You underestimate the effect of RnD automation because you seem to ignore that a lot of bottlenecks in RnD and in the adoption of results are actually human bottlenecks. Even if we automate everything in RnD that is not free scientific creativity, we are still bottlenecked by the facts that humans can keep only 10 things in working memory, that multitasking basically doesn't exist, that you can perform several hours of hard intellectual work per day at most, that you need 28 years to grow a scientist, that there are only so many people actually capable of producing science, that science progresses one funeral at a time, et cetera, et cetera. The same goes for adoption: even if an innovation exists, there are only so many people capable of understanding it and implementing it while integrating it into an existing workflow in a maintainable way, and they have only so many productive working hours per day.

Even if we dial every other productivity factor up to infinity, there is still a hard cap on productivity growth from inherent human limitations and population. When we achieve AI automation, we replace these limitations with whatever is achievable within physical limits.

Your assumption "everybody thinks that RnD is going to be automated first because RnD is mostly abstract reasoning" is wrong, because the actual reason why RnD is going to be automated relatively early is that it's very valuable, especially if you count opportunity costs. Every time you pay an AI researcher to automate a narrow task, you waste money which could be spent on creating an army of 100k artificial researchers who could automate 100k tasks. I think this holds even if you assume pretty long timelines, because, given the human bottlenecks mentioned above, everything you can automate before RnD automation is going to be automated rather poorly (and, unlike science, you can't run self-improvement from there).

I mostly think about alignment methods like "model-based RL which maximizes reward iff it outputs action which is provably good under our specification of good".

I think the problem here is that you do not quite understand the problem.

It's not that we "imagine that we've imagined the whole world, do not notice any contradictions and call it a day". It's that we know there exists an idealized procedure which doesn't produce stupid answers; for example, it can't be money-pumped. We also know that if we try harder to approximate this procedure (consider more hypotheses, compute more inferences), we are going to get better results in expectation. This is not, say, a property of null hypothesis testing: the more hypotheses you consider, the more likely you are to either p-hack or drive the p-value into statistical insignificance due to excessive multiple testing correction.
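To make the multiple-testing remark concrete, here is a minimal Python sketch. It assumes independent tests and a conventional significance level of 0.05; both are illustrative assumptions, not something stated in the comment. It shows the two failure modes: without correction, the chance of at least one false positive climbs toward 1 as hypotheses are added; with Bonferroni correction, the per-test threshold shrinks until real effects stop clearing it.

```python
# Illustration only: assumes m independent tests, each run at level ALPHA.
ALPHA = 0.05  # conventional significance level (an assumption)


def familywise_error_rate(m: int, alpha: float = ALPHA) -> float:
    """Chance of at least one false positive among m independent true-null tests."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(m: int, alpha: float = ALPHA) -> float:
    """Per-test p-value threshold after Bonferroni correction for m tests."""
    return alpha / m


if __name__ == "__main__":
    for m in (1, 10, 100):
        print(m, round(familywise_error_rate(m), 3), bonferroni_threshold(m))
```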

The whole computationally unbounded Bayesian business is more about "here is an idealized procedure X, and if we don't do anything that is visibly stupid from the perspective of X, then we can hope that our losses won't be unbounded under a certain notion of boundedness". It is not obvious that your procedure can be understood this way.

No, my point is that in worlds where intelligence is possible, almost all obstacles are common.

We can probably survive in the following way:

- RL becomes the main way to get new, especially superhuman, capabilities.

- Because RL pushes models hard to do reward hacking, it's difficult to reliably get models to do something difficult to verify. Models can do impressive feats, but nobody is stupid enough to put AI into positions which usually imply responsibility.

- This situation conveys how difficult alignment is and everybody moves toward verifiable rewards or similar approaches. Capabilities progress becomes dependent on alignment progress.

I feel like I'm failing to convey the level of abstraction I intend to.

I'm not saying that durability of object implies intelligence of object. I'm saying that if the world is ordered in a way that allows existence of distinct durable and non-durable objects, that means the possibility of intelligence which can notice that some objects are durable and some are not and exploit this fact.

If the environment is not ordered enough to contain intelligent beings, it's probably not ordered enough to contain distinct durable objects too.

To be clear, by "environment" I mean "the entire physics". When I say "environment not ordered enough" I mean "environment with physical laws chaotic enough to not contain ordered patterns".

I mean, we exist and we are at least somewhat intelligent, which implies a strong upper bound on the heterogeneity of the environment.

On the other hand, words like "durability" imply the possibility of categorization, which itself implies intelligence. If the environment is sufficiently heterogeneous, you are durable one second and evaporate the next.

I'm trying to write a post, but, well, it's hard.

Many people wrongly assume that the main way to use bioweapons is to create a small amount of pathogen and release it into the environment, with an outbreak as the intended outcome. (I assume that's where your sentence about DNA synthesis comes from.) The problem is that creating outbreaks in practice is very hard; we, thankfully, don't know a reliable way to do that. In practice, the way bioweapons work reliably is "bomb-saturate the entire area with anthrax such that the first wave of deaths is going to be from anaphylactic shock rather than infection", and to create the necessary amount of pathogen you need industrial infrastructure which doesn't exist, because nobody in our civilization cultivates anthrax at industrial scale.

The filter for homogeneity of the environment is anthropic selection: if the environment is sufficiently heterogeneous, it kills everyone who tries to reach out of their ecological niche, general intelligence doesn't develop, and we are not here to have this conversation.

In some sense this is a core idea of UDT: when coordinating with forks of yourself, you defer to your unique last common ancestor. When it's not literally a fork of yourself, there's more arbitrariness but you can still often find a way to use history to narrow down on coordination Schelling points (e.g. "what would Jesus do")

I think this is a wholly incorrect line of thinking. UDT operates on your logical ancestor, not your literal one.

Say, if you know enough science, you know that the normal distribution is the maximum-entropy distribution for a fixed mean and variance, and therefore the optimal prior distribution under a certain set of assumptions. You can ask yourself "suppose I haven't seen this evidence, what would my prior probability be?", get an answer, and cooperate with your counterfactual versions which have seen other evidence. But you can't cooperate with a hypothetical version of yourself which doesn't know what a normal distribution is, because if it doesn't know about the normal distribution, it can't predict how you would behave and account for this in cooperation.

Sufficiently different versions of yourself are just logically uncorrelated with you and there is no game-theoretic reason to account for them.
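As a quick check of the maximum-entropy claim used in the example above, the following Python sketch compares closed-form differential entropies (in nats) of three distributions scaled to the same variance. The uniform and Laplace comparison cases are my own choice for illustration; the formulas are the standard textbook ones.

```python
import math


def entropy_normal(sigma: float) -> float:
    """Differential entropy of N(mu, sigma^2): 0.5 * ln(2*pi*e*sigma^2)."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma**2)


def entropy_uniform(sigma: float) -> float:
    """Uniform with variance sigma^2 has width sigma*sqrt(12); entropy is ln(width)."""
    width = sigma * math.sqrt(12)
    return math.log(width)


def entropy_laplace(sigma: float) -> float:
    """Laplace with variance sigma^2 has scale b = sigma/sqrt(2); entropy is 1 + ln(2b)."""
    b = sigma / math.sqrt(2)
    return 1 + math.log(2 * b)


if __name__ == "__main__":
    sigma = 1.0
    for name, h in [
        ("normal", entropy_normal(sigma)),
        ("laplace", entropy_laplace(sigma)),
        ("uniform", entropy_uniform(sigma)),
    ]:
        print(f"{name:8s} {h:.4f}")
```

With equal variance the normal comes out on top, which is what makes it the least-committal choice when mean and variance are all you know.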

I'm quite skeptical about "easier" due to the usual Algernon argument.

Roughly, conceptually, animal activity can be divided into 3 phases:

- Active, when animal is looking for food or mate or something.

- Sleep (which seems to be a requirement for Earth animals design).

- Passive phase in-between when animal doesn't do anything particular, but it shouldn't sleep because of predators (the main problem with sleep as an evolutionary adaptation).

Large herbivores have a very short sleep phase, and their active and passive phases are not very meaningfully different, because food doesn't hide from them and is not very energy-dense, and they should always look out for predators. Large predators have a very long sleep phase, a short active phase and no passive phase, because their search for food is very energy-intensive and they don't need to look out for predators.

Humans seem to be comfortably between these two extremes, but I think that the unproductive phase in your day is just what it is: an unproductive phase, meant only for you to be alert to predators (and fellow tribesmen). You can probably extend your productive phase somewhat by doing the known things, like a healthy diet, normal sleep and exercise, but it is likely that you have a strong biological limit on what you can productively do per day, which you can't cross without a degradation in quality of life.

I don't think caffeine and amphetamines help here. They just forcefully redistribute activity: if you have ADHD, you are going to work instead of doing whatever you feel like, and if you are a night owl, you can function better in the morning.

You can play on the difference between "activity which requires you to be biologically active" and "meaningful activity"; for example, I don't think watching TV with family is very energetically demanding. Although that seems to be a time organization problem?

I think that the actual solution to "lack of biological willpower" is something like "a large bionanotech system which resets the organism the way sleep resets it" and, honestly, I think if you can develop such systems you are not far from an actual aging treatment.

"The best possible life" for me pretty much includes "everyone who I care about is totally happy"?

And parents certainly do dangerous risky things to provide better future for their children all the time.

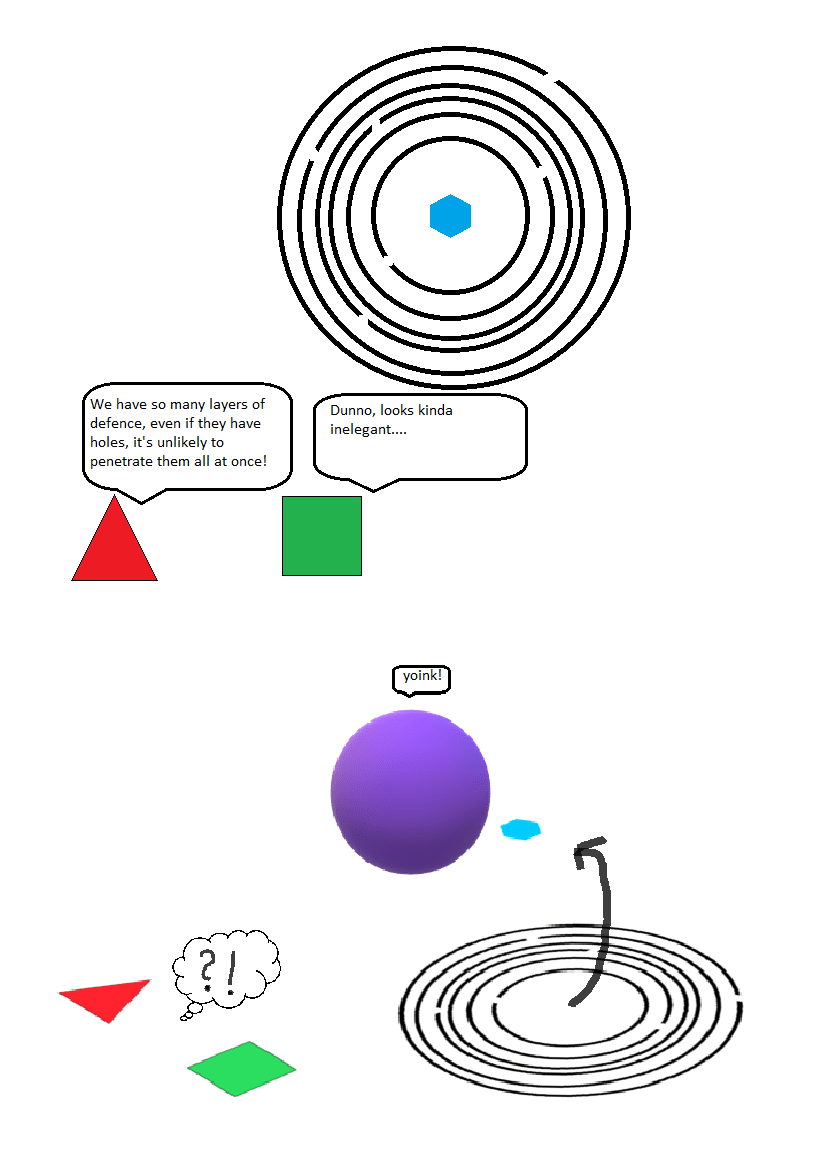

When we should expect "Swiss cheese" approach in safety/security to go wrong:

I think the general problem with your metaphor is that we don't know the "relevant physics" of self-improvement. We can't plot a "physically realistic" trajectory for landing in "good values" land and say "well, we need to keep ourselves heading along this trajectory". BTW, MIRI has a dialogue with this metaphor.

And most of your suggestions are like "let's learn the physics of alignment"? I have nothing against that, but it is the hard part, and control theory doesn't seem to provide a lot of insight here. It's a framework at best.

Yes, that's why it's a compromise: nobody will totally like it. But if Earth is going to exist for trillions of years, it will radically change too.

My honest opinion is that WMD evaluations of LLMs are not meaningfully related to X-risk in the sense of "kill literally everyone." I guess current or next-generation models may be able to assist a terrorist in a basement in brewing some amount of anthrax, spraying it in a public place, and killing tens to hundreds of people. To actually be capable of killing everyone from a basement, you would need to bypass all the reasons industrial production is necessary at the current level of technology. A system capable of bypassing the need for industrial production in a basement is called "superintelligence," and if you have a superintelligent model on the loose, you have far bigger problems than schizos in basements brewing bioweapons.

I think "creeping WMD relevance", outside of cyberweapons, is mostly bad, because it concentrates on a mostly fake problem, which is very bad for public epistemics, even if we forget about the lost benefits from competent models.

Well, I have a bioengineering degree, but my point is that "direct lab experience" doesn't matter, because WMDs in the quality and amount necessary to kill large numbers of enemy manpower are not produced in labs. They are produced in large industrial facilities, and setting up a large industrial facility for basically anything is at the "hard" level of difficulty. There is a difference between a large-scale textile industry and a large-scale semiconductor industry, but if you are not a government or a rich corporation, all of them lie in the "hard" zone.

Let's take, for example, Saddam's chemical weapons program. First, industrial yields: everything is counted in tons. Second, for actual success, Saddam needed a lot of existing expertise and machinery from West Germany.

Let's look at the Soviet bioweapons program. First, again, tons of yield (one may ask oneself: if it's easier to kill using bioweapons than conventional weaponry, why would anybody need to produce tons of them?). Second, the USSR built an entire civilian biotech industry around it (many Biopreparat facilities are active today as civilian sites!) to create the necessary expertise.

The difference with high explosives is that high explosives are not banned by international law, so there is a lot of existing production, and therefore you can just buy them on the black market or receive them from countries which don't consider you a terrorist. If you really need to produce explosives locally, again, the precursors, machinery and necessary expertise are legal and widespread enough that they can be bought.

There is a list of technical challenges in bioweaponry where you are going to predictably fuck up if you have a biology degree and think you know what you are doing when in reality you do not, but I don't write out lists of technical challenges on the way to dangerous capabilities, because such a list can inspire someone. You can get an impression of the easier and lower-stakes challenges from here.

The trick is that chem/bio weapons can't, actually, "be produced simply with easily available materials", if we are talking about military-grade stuff and not "kill several civilians to create a scary picture on TV".

It's very funny that Rorschach's linguistic ability is totally unremarkable compared to modern LLMs.

The real question is why does NATO have our logo.

This is LGBTESCREAL agenda

I think there is an abstraction between "human" and "agent": "animal". Or, maybe, "organic life". Biological systematization (meaning all ways to systematize: phylogenetic, morphological, functional, ecological) is a useful case study for abstraction "in the wild".

EY wrote in planecrash about how the greatest fictional conflicts between characters with different levels of intelligence happen between different cultures/species, not individuals of the same culture.

I think that here you should re-evaluate what you consider "natural units".

Like, it's clear from Olbers's paradox and relativity that we live in a causally isolated pocket where the stuff we can interact with is certainly finite. If the universe is a set of causally isolated bubbles, all you have is anthropics over such bubbles.

I think it's perfect ground for meme cross-pollination:

"After all this time?"

"Always."

I'll repeat myself that I don't believe in Saint Petersburg lotteries:

My honest position on St. Petersburg lotteries is that they do not exist in "natural units", i.e., counts of objects in the physical world.

Reasoning: if you predict with probability p that you will encounter a St. Petersburg lottery which creates an infinite number of happy people in expectation (the version of the St. Petersburg lottery for total utilitarians), then you should set your expectation of the number of happy people to infinity right now, because E[number of happy people] = p * E[number of happy people due to St. Petersburg lottery] + (1 - p) * E[number of happy people for all other reasons] = p * inf + (1 - p) * E[number of happy people for all other reasons] = inf.

Therefore, if you don't think right now that the expected number of future happy people is infinite, then you shouldn't expect a St. Petersburg lottery to happen at any point in the future.

Therefore, you should set your utility either in "natural units" or in some "nice" function of "natural units".
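The mixture argument above can be sketched numerically. This is a minimal Python illustration under assumptions of my own: the lottery is the textbook one (payoff 2^k with probability 2^-k), truncated at a finite number of rounds so the sum is computable, and the values of `p` and `other_expectation` are made up. The truncated lottery's expectation equals the number of rounds, so for any fixed p > 0 the overall expectation grows without bound as the truncation is lifted.

```python
from fractions import Fraction


def truncated_lottery_expectation(n_rounds: int) -> Fraction:
    """Expected payoff when the lottery pays 2**k with probability 2**-k, k = 1..n_rounds."""
    return sum(Fraction(1, 2**k) * 2**k for k in range(1, n_rounds + 1))


def overall_expectation(p: Fraction, n_rounds: int, other_expectation: Fraction) -> Fraction:
    """E[total] = p * E[lottery] + (1 - p) * E[everything else]."""
    return p * truncated_lottery_expectation(n_rounds) + (1 - p) * other_expectation


if __name__ == "__main__":
    p = Fraction(1, 1000)       # hypothetical credence in meeting such a lottery
    other = Fraction(100)       # hypothetical expectation from all other sources
    for n in (10, 1000, 5000):
        print(n, float(overall_expectation(p, n, other)))
```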

I think there is a reduction from one to the other using different UTMs? For example, causal networks are Turing-complete, therefore you can write a UTM that explicitly takes a description of initial conditions and a causal time evolution law, and every SI-simple hypothesis will then correspond to a simple causal-network hypothesis. And you can find the same correspondence for arbitrary ontologies which allow for Turing-complete computations.

I think nobody really believes that telling a user how to make meth is a threat to anything but company reputation. I would guess this is a nice toy task which recreates some of the obstacles to aligning superintelligence (i.e., a superintelligence will probably know how to kill you anyway). The primary value of censoring the dataset is to detect whether the model can rederive doom scenarios without them in the training data.

I once again maintain that the "training set" is not a mysterious holistic thing; it gets assembled by AI corps. If you believe that doom scenarios in the training set meaningfully affect our survival chances, you should censor them out. Current LLMs can do that.

There is a certain story, probably common to many LWers: first, you learn about spherical-in-vacuum perfect reasoning, like Solomonoff induction/AIXI. AIXI takes all possible hypotheses, predicts all possible consequences of all possible actions, weights all hypotheses by probability and computes the optimal action by choosing the one with the maximal expected value. Then it is usually not even stated but implied, very loudly, that this method of thinking is computationally intractable at best and uncomputable at worst, and that you need clever shortcuts. This is true in general, but the approach "just list out all the possibilities and consider all the consequences (inside a certain subset)" gets neglected as a result.

For example, when I try to solve a puzzle from "Baba is You" and then analyze how I could have solved it faster, I usually arrive at "I should have just written down all pairwise interactions between the objects to notice which one leads to the solution".
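The "just list everything inside a small subset" habit is cheap to mechanize. A minimal Python sketch, with a made-up object list standing in for whatever is on the level (the names are illustrative, not taken from any particular puzzle):

```python
from itertools import combinations
from typing import Iterable


def pairwise_checklist(objects: Iterable[str]) -> list[tuple[str, str]]:
    """Return every unordered pair of distinct objects, to be checked one by one."""
    return list(combinations(objects, 2))


if __name__ == "__main__":
    level_objects = ["Baba", "Rock", "Wall", "Flag"]  # hypothetical level contents
    for a, b in pairwise_checklist(level_objects):
        print(f"What happens when {a} meets {b}?")
```

For n objects this is n(n-1)/2 questions, which stays small for a single puzzle screen, so exhaustive listing is practical here.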

I'd say that the true name for fake/real thinking is syntactic thinking vs semantic thinking.

Syntactic thinking: you have a bunch of statement-strings and operate on them according to rules.

Semantic thinking: you need to actually create a model of what these strings mean, do sanity checks, capture things that are true in the model but can't be expressed by the given syntactic rules, etc.

I'm more worried about counterfactual mugging and transparent Newcomb. Am I right that you are saying "in the first iteration of transparent Newcomb, austere decision theory gets no more than $1000, but then learns that if it modifies its decision theory into something more UDT-like it will get more money in similar situations", turning it into something like son-of-CDT?

First of all, "the most likely outcome at a given level of specificity" is not equal to "the outcome with most of the probability mass". I.e., if one outcome has probability 2% and each of the other outcomes has 1%, then 98% of the mass is still on "some outcome other than the most likely one".
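The arithmetic of that example, as a small Python sketch. It fills in the one detail the sentence leaves implicit: with one outcome at 2% and the rest at 1% each, there must be 98 other outcomes. The outcome names are placeholders, and integer percentages are used to avoid floating-point noise.

```python
# One outcome at 2%, ninety-eight others at 1% each (percentages as integers).
distribution = {"most_likely": 2}
distribution.update({f"other_{i}": 1 for i in range(98)})

total = sum(distribution.values())
mode = max(distribution, key=distribution.get)
mass_elsewhere = total - distribution[mode]

print(f"total = {total}%, mode = {mode} at {distribution[mode]}%, elsewhere = {mass_elsewhere}%")
```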

Second, no, that's not what evolutionary theory predicts. Most traits are not adaptive but randomly fixed, because if all traits are adaptive, then ~all mutations are detrimental. Because these mutations are detrimental, they need to be removed from the gene pool by preventing carriers from reproducing. Because most detrimental mutations do not kill the carrier immediately, they have a chance to spread randomly in the population. Because we have "almost all mutations are detrimental" and "everybody has mutations in their offspring", for anything like the human genome and human procreation pattern there is a hard ceiling on how much of the genome can be adaptive (which is something like 20%).

The real evolutionary theory prediction is more like "some random trait gets fixed in the species with the most ecological power (i.e., ASI) and this trait is amortized across all the galaxies".

How exactly does not knowing how many fingers you are holding up behind your back prevent ASI from killing you?

I think austerity has a weird relationship with counterfactuals?

I find it amusing that one of the most detailed descriptions of system-wide alignment-preserving governance I know is from a Madoka fanfic:

The stated intentions of the structure of the government are three‐fold.

Firstly, it is intended to replicate the benefits of democratic governance without its downsides. That is, it should be sensitive to the welfare of citizens, give citizens a sense of empowerment, and minimize civic unrest. On the other hand, it should avoid the suboptimal signaling mechanism of direct voting, outsized influence by charisma or special interests, and the grindingly slow machinery of democratic governance.

Secondly, it is intended to integrate the interests and power of Artificial Intelligence into Humanity, without creating discord or unduly favoring one or the other. The sentience of AIs is respected, and their enormous power is used to lubricate the wheels of government.

Thirdly, whenever possible, the mechanisms of government are carried out in a human‐interpretable manner, so that interested citizens can always observe a process they understand rather than a set of uninterpretable utility‐optimization problems.

<...>

Formally, Governance is an AI‐mediated Human‐interpretable Abstracted Democracy. It was constructed as an alternative to the Utilitarian AI Technocracy advocated by many of the pre‐Unification ideologues. As such, it is designed to generate results as close as mathematically possible to the Technocracy, but with radically different internal mechanics.

The interests of the government's constituents, both Human and True Sentient, are assigned to various Representatives, each of whom is programmed or instructed to advocate as strongly as possible for the interests of its particular topic. Interests may be both concrete and abstract, ranging from the easy to understand "Particle Physicists of Mitakihara City" to the relatively abstract "Science and Technology".

Each Representative can be merged with others—either directly or via advisory AI—to form a super‐Representative with greater generality, which can in turn be merged with others, all the way up to the level of the Directorate. All but the lowest‐level Representatives are composed of many others, and all but the highest form part of several distinct super‐Representatives.

Representatives, assembled into Committees, form the core of nearly all decision‐making. These committees may be permanent, such as the Central Economic Committee, or ad‐hoc, and the assignment of decisions and composition of Committees is handled by special supervisory Committees, under the advisement of specialist advisory AIs. These assignments are made by calculating the marginal utility of a decision inflicted upon the constituents of every given Representative, and the exact process is too involved to discuss here.

At the apex of decision‐making is the Directorate, which is sovereign, and has power limited only by a few Core Rights. The creation—or for Humans, appointment—and retirement of Representatives is handled by the Directorate, advised by MAR, the Machine for Allocation of Representation.

By necessity, VR Committee meetings are held under accelerated time, usually as fast as computational limits permit, and Representatives usually attend more than one at once. This arrangement enables Governance, powered by an estimated thirty‐one percent of Earth's computing power, to decide and act with startling alacrity. Only at the city level or below is decision‐making handed over to a less complex system, the Bureaucracy, handled by low‐level Sentients, semi‐Sentients, and Government Servants.

The overall point of such a convoluted organizational structure is to maintain, at least theoretically, Human‐interpretability. It ensures that for each and every decision made by the government, an interested citizen can look up and review the virtual committee meeting that made the decision. Meetings are carried out in standard human fashion, with presentations, discussion, arguments, and, occasionally, virtual fistfights. Even with the enormous abstraction and time dilation that is required, this fact is considered highly important, and is a matter of ideology to the government.

<...>

To a past observer, the focus of governmental structure on AI Representatives would seem confusing and even detrimental, considering that nearly 47% are in fact Human. It is a considerable technological challenge to integrate these humans into the day‐to‐day operations of Governance, with its constant overlapping time‐sped committee meetings, requirements for absolute incorruptibility, and need to seamlessly integrate into more general Representatives and subdivide into more specific Representatives.

This challenge has been met and solved, to the degree that the AI‐centric organization of government is no longer considered a problem. Human Representatives are the most heavily enhanced humans alive, with extensive cortical modifications, Permanent Awareness Modules, partial neural backups, and constant connections to the computing grid. Each is paired with an advisory AI in the grid to offload tasks onto, an AI who also monitors the human for signs of corruption or insufficient dedication. Representatives offload memories and secondary cognitive tasks away from their own brains, and can adroitly attend multiple meetings at once while still attending to more human tasks, such as eating.

To address concerns that Human Representatives might become insufficiently Human, each such Representative also undergoes regular checks to ensure fulfillment of the Volokhov Criterion—that is, that they are still functioning, sane humans even without any connections to the network. Representatives that fail this test undergo partial reintegration into their bodies until the Criterion is again met.

I think one form of "distortion" is the development of non-human, not pre-trained circuitry for sufficiently difficult tasks. I.e., if you make an LLM solve nanotech design, it is likely that the optimal way of thinking is not similar to how a human would think about the task.

What if I have a wonderful plot in my head and I use an LLM to pour it into an acceptable stylistic form?

Why would you want to do that?

Just Censor Training Data. I think it is a reasonable policy demand for any dual-use models.

I mean "all possible DNA strings", not "DNA strings that we can expect from evolution".

I think another point here is that Word is not the shortest possible program that maps inputs to outputs the same way actual Word does, and a program of minimal length would probably run much slower too.

My general point is that comparing the complexity of two arbitrary entities is meaningless unless you spell out a lot of assumptions.

I think that the section "You are simpler than Microsoft Word" is just plain wrong, because it assumes a single UTM. But Kolmogorov complexity is defined only up to the choice of UTM.
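For reference, "defined only up to the choice of UTM" is usually stated as the invariance theorem. In the notation below (my choice of symbols, not the post's), K_U(x) is the length of the shortest program that outputs x on universal machine U:

```latex
% Invariance theorem: for any two universal machines U and V there is a
% constant c_{U,V}, independent of x, such that for every string x
\[
  K_U(x) \;\le\; K_V(x) + c_{U,V}.
\]
% The constant is roughly the length of an interpreter for V written for U.
% Nothing bounds it in absolute terms, so for two specific finite objects
% x and y the ordering of K(x) and K(y) can flip with the choice of machine.
```

The theorem makes complexity comparisons meaningful asymptotically, but it says nothing about which of two particular objects is "simpler" unless the reference machine is fixed.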

A genome is only as simple as the rest of the cell machinery allows, such as the ribosomal decoding mechanism and protein folding. Humans are simple only relative to the space of all possible organisms that can be built on Earth biochemistry. Conversely, Word is complex only relative to all sets of x86 processor instructions or all sets of C programs, or whatever you used to define the size of Word. To properly compare the complexity of the two, you need to translate from one language to the other. How large would the genome of an organism capable of running Word have to be? It seems reasonable that a simulation of a human organism down to nucleotides would be very large if written in C, and I think the genome of an organism capable of running Word just as well as a modern PC would be much larger than the human genome.

Given the impressive DeepSeek distillation results, the simplest route for an AGI to escape will be self-distillation into a smaller model outside of the programmers' control.

A more technical definition of "fairness" here is that the environment doesn't distinguish between algorithms with the same policies, i.e. mappings <prior, observation_history> -> action? I think it captures the difference between CooperateBot and FairBot.
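A toy Python sketch of that definition, under simplifying assumptions of my own: the prior is folded into the agent, a policy is just a function from an observation-history string to an action, and both agents and both environments are hypothetical. The two agents are implemented differently but compute the same policy; the fair environment only ever calls the policy, while the unfair one also peeks at how the agent is implemented.

```python
from typing import Callable, Sequence

Policy = Callable[[str], str]  # observation history -> action


def lookup_agent(history: str) -> str:
    """Acts from a hard-coded table (every history maps to 'cooperate')."""
    return {"": "cooperate"}.get(history, "cooperate")


def reasoning_agent(history: str) -> str:
    """Reaches the same action by a different internal route."""
    return "cooperate" if len(history) >= 0 else "defect"


def fair_environment(agent: Policy, histories: Sequence[str]) -> int:
    """Payoff depends only on the actions the agent outputs."""
    return sum(1 for h in histories if agent(h) == "cooperate")


def unfair_environment(agent: Policy, histories: Sequence[str]) -> int:
    """Payoff also depends on the agent's implementation (here, crudely, its name)."""
    bonus = 10 if agent.__name__ == "reasoning_agent" else 0
    return fair_environment(agent, histories) + bonus


if __name__ == "__main__":
    hs = ["", "a", "ab"]
    print(fair_environment(lookup_agent, hs), fair_environment(reasoning_agent, hs))
    print(unfair_environment(lookup_agent, hs), unfair_environment(reasoning_agent, hs))
```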

As I understand it, "fairness" was invented as a response to the claim that it's rational to two-box and Omega just rewards irrationality.

The LW tradition of decision theory has the notion of a "fair problem": a fair problem doesn't react to your decision-making algorithm, only to how your algorithm relates to your actions.

I realized that humans are at least in some sense "unfair": we are probably going to react differently to agents with different algorithms arriving at the same action, if the difference is whether the algorithms produce qualia.

I think the compromise variant between radical singularitarians and conservationists is removing 2/3 of the mass from the Sun and rearranging orbits/putting up orbital mirrors to provide more light for Earth. If the Sun becomes a fully convective red dwarf, it can exist for trillions of years, and reserves of lifted hydrogen can prolong its existence even more.

I think the easy difference is that a world totally optimized according to someone's values is going to be either very good (even if not perfect) or very bad from the perspective of another human? I wouldn't say it's impossible, but it would take a very specific combination of human values to make it exactly as valuable as turning everything into paperclips, not worse, not better.

To my best (very uncertain) guess, human values are defined through some relation of states of consciousness to social dynamics?

"Human values" is a sort of objects. Humans can value, for example, forgiveness or revenge, these things are opposite, but both things have distinct quality that separate them from paperclips.