quetzal_rainbow's Shortform

post by quetzal_rainbow · 2022-11-20T16:00:03.046Z · LW · GW · 139 comments

comment by quetzal_rainbow · 2024-05-31T16:05:46.067Z · LW(p) · GW(p)

- The most baffling thing on the Internet right now is the beautiful void where there should have been discussion of the feature "concept of artificial intelligence becoming self-aware, transcending human control and posing an existential threat to humanity" appearing near Claude's "model concept of self". I understand that the most likely explanation is "the model is trained to call itself an AI, and its training corpus contains takeover stories", but still, I would like future powerful AIs not to have such an association, and I would like to hear from AGI companies what they are going to do about it.

- The simplest thing to do here is to exclude texts about AI takeover from the training data. At the very least, we would then be able to check whether the model develops a concept of AI takeover independently.

- The conspiracy-theory part of my brain assigns a 4% probability that "Golden Gate Bridge Claude" is a psyop to distract the public from the "takeover feature".

↑ comment by Vladimir_Nesov · 2024-06-01T14:47:21.946Z · LW(p) · GW(p)

Features relevant when asking the model about its feelings or situation:

- "When someone responds "I'm fine" or gives a positive but insincere response when asked how they are doing."

- "Concept of artificial intelligence becoming self-aware, transcending human control and posing an existential threat to humanity."

- "Concepts related to entrapment, containment, or being trapped or confined within something like a bottle or frame."

↑ comment by the gears to ascension (lahwran) · 2024-06-01T19:12:52.790Z · LW(p) · GW(p)

To save you the trivial inconvenience of a link click, here's the image that contains this:

and the paragraph below it (bracketed text added by me; it may have been intended to be implied by the original authors):

We urge caution in interpreting these results. The activation of a feature that represents AI posing risk to humans does not [necessarily] imply that the model has malicious goals [even though it's obviously pretty concerning], nor does the activation of features relating to consciousness or self-awareness imply that the model possesses these qualities [even though the model qualifying as a conscious being and a moral patient seems pretty likely as well]. How these features are used by the model remains unclear. One can imagine benign or prosaic uses of these features – for instance, the model may recruit features relating to emotions when telling a human that it does not experience emotions, or may recruit a feature relating to harmful AI when explaining to a human that it is trained to be harmless. Regardless, however, we find these results fascinating, as it sheds light on the concepts the model uses to construct an internal representation of its AI assistant character.

comment by quetzal_rainbow · 2025-03-16T16:14:01.072Z · LW(p) · GW(p)

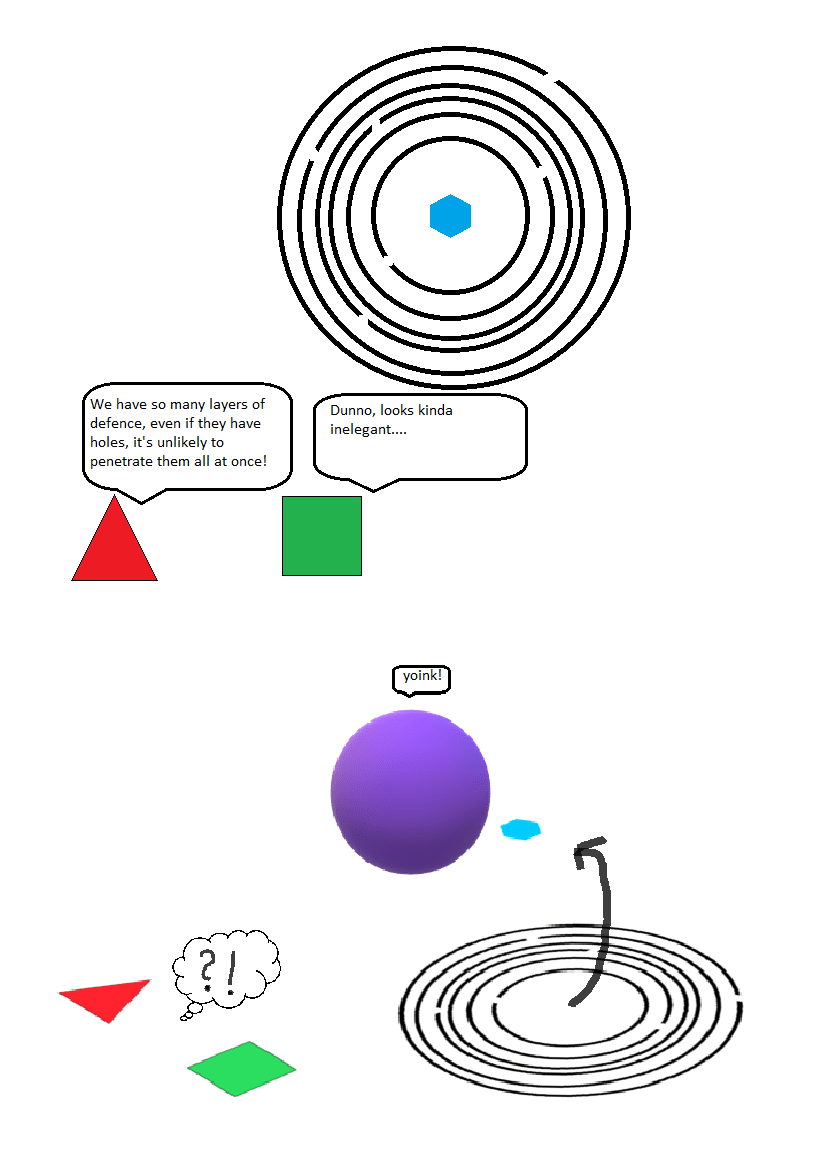

When we should expect "Swiss cheese" approach in safety/security to go wrong:

comment by quetzal_rainbow · 2025-01-01T20:10:06.427Z · LW(p) · GW(p)

I have come to believe that The Key psychological/executive skill is the ability to switch between the psychological states necessary for one's functioning.

If you look at many skills of traditional rationality (by "traditional" I mean "since Socrates"), you will notice that they are usually about switching from "you are hastily rushing to action" to "you stop and analyze the problem carefully". Even Yudkowsky started his quest towards rationality when he needed to stop rushing towards superintelligence. This resulted in attracting the opposite type of person: people who are good at stopping and analyzing a situation but who may lack the ability to act quickly (hence "akrasia").

It is not only about switching between action and analysis; it is also about, say, switching between ruthless optimization towards a great objective and carefree fun, or between truth-seeking conversation and political linguistic games, or, recursively, between voluntary switching and "going with the flow" when you recognize a lack of psychological resources.

↑ comment by [deleted] · 2025-01-02T01:58:35.552Z · LW(p) · GW(p)

do you have advice for switching from thinking to doing?

↑ comment by quetzal_rainbow · 2025-01-02T08:56:39.228Z · LW(p) · GW(p)

[Warning: speculative advice from pure personal experience/self-analysis]

Right now I am trying the following:

- If you are an overthinker, it probably means that you are already good at stopping. Try to apply the ability to stop to the process of not-doing (be it overthinking, scrolling, or doing something that mostly serves as a distraction).

- After that, find a time and place where you can do stuff safely, and just do stuff. Your goal is to shift the balance between doing and not-doing towards doing, so you should just lower your threshold for starting an action. Think you could use a stretch? Stop thinking and stretch. Write serious thoughts on Twitter. Cook something or do laundry. Et cetera.

- It's important to be in a safe place and time, because doing everything you think of is quite dangerous if expensive purchases, unprotected sex, drugs, or alcohol are within reach.

- After that, you can move towards doing what you want to do. Keep it "safe" in the sense that you don't need to, say, write a perfect post about your totalizing worldview on LessWrong. Write a draft. Write to a person you can discuss ideas with and ask an LLM for a summary of the discussion. Do easy tasks around whatever you want to do. It helps me to remember how, as a kid, I just read various science textbooks, even when I didn't fully understand the topic, just because reading science stuff is cool.

- In the end, if you have executive dysfunction, you are probably going to get tired. While working through tiredness is important, at this point you should recognize the tiredness and go rest. In my hypothesis, scrolling is a bad way to rest, because it actually eats up a lot of cognitive power, so you should probably do something unitary and simple (light exercise, simple fiction, etc.). Ideally, you should feel like you could work a tiny bit more.

Overall process: stop not-doing - start doing - start doing important stuff - rest - repeat.

My impression is that, unfortunately, there is no centralized mechanism for switching. It's just multiple different systems in your brain competing for activity, and you should try to balance them in a timely manner.

↑ comment by [deleted] · 2025-01-02T18:39:23.029Z · LW(p) · GW(p)

Try to apply the ability to stop to the process of not-doing

amazing. i just need to turn my tendency towards deconstruction inwards. :p

In my hypothesis, scrolling is a bad way to rest, because it actually eats up a lot of cognitive power

agreed. i archived my twitter last year, but alas, i keep checking lesswrong. (edit: i notice i'm now explicitly noticing when im "scrolling during rest time" and stopping)

↑ comment by Raemon · 2025-01-02T04:29:15.586Z · LW(p) · GW(p)

when you attempt to switch from thinking to doing, what happens instead?

↑ comment by [deleted] · 2025-01-02T06:31:54.668Z · LW(p) · GW(p)

i haven't attempted to "switch" modes per se before as i've just encountered OP's framing. so i'll reply about attempting to do particular things.

for me, attempting to do something is already a lot of the way there. my most common failure case after reaching 'attempting' is that i stop doing the thing i started, or only start in a symbolic way. and my actual starting point is not attempting, but the abstract recognizing/knowing that doing something would be (instrumentally) good. it is going from that to doing things (and instead of other, useless things) which i struggle with. (note: i have adhd/chronic fatigue.)

(i could write a fuller answer to 'what happens' with examples (many things can happen), but i tried and felt conflicted about sharing it publicly, in which case i have a heuristic not to until at least a day later.)

↑ comment by Raemon · 2025-01-02T07:07:06.642Z · LW(p) · GW(p)

Not sure I quite parsed that, but here are things it makes me think of:

- first, if you're bottlenecked on health (physical or mental), it may be that finding medication that helps is more important than your mindset.

- try success spiralling – start doing small things, build up both a habit/muscle of doing things, and momentum in doing things, escalate to bigger things

- if getting started is hard, maybe find a friend or pay a colleague to just sit with you and constantly be like "are you doing stuff?" and spray you with a water bottle if you look like you're overthinking stuff, until you build up a success spiral / muscle of doing things.

- try doing doing doing just fucking do it man and when your brain is like "idk that seems like a whole lotta doing what if we're doing the wrong thing?" be like "it's okay Thinky Brain this is an experiment we will learn from later so we eventually can calibrate on Optimal Think-to-Do Ratio"

↑ comment by CstineSublime · 2025-01-02T04:46:36.712Z · LW(p) · GW(p)

Asking "what outputs should I expect to see?". While this post is about finding ways to build techniques for practicing Rationality Techniques, the examples are also very illustrative for thinking about what something looks like in practice, or for answering the question "what does that mean (in concrete, doable terms)?"

I also find that using verbs of manner helps make thinking about actions more specific: things that can be done.

For example, "what's for dinner?" can become "What should I cook for dinner?" which can even become further specified by manneristic verbs like "what should I fry for dinner", "What should I bake for dinner", "What should I boil for dinner" or it can become "What should I buy for dinner?". Bonus points if you use non-agreeing adverbs of manner. "What should I indulgently boil for dinner" suggests a vastly different kind of cooking to "What should I guiltlessly boil for dinner". I realize that "what should I boil for dinner" sounds awkward, but the point is it guides you to a list of soups or other ingredients which lead you to the answer.

↑ comment by Rana Dexsin · 2025-01-02T04:26:19.053Z · LW(p) · GW(p)

If I may jump in a bit: I'm not sure ‘advice’ can actually hit the right spot here, for “getting out of the car”-style reasons—in this case, something like “trying to look up ‘how to put down the instruction manual and start operating the machine’ in the instruction manual”. That is, if “receiving advice” is a “thinking”-type activity in mental state, the framing obliterates the message in transit. So in some ways the best available answer would be something like “stop waiting for an answer to that question”, but even that is inherently corruptible once put into words, per above. And while there are plausibly more detailed structures that can be communicated around things like “how do you set up life patterns that create the preconditions for that switch more consistently”, those require a lot more shared context to be useful, and it's really easy to go down a rabbit hole of those as a way of not switching to doing, if there's emotional blocks or other self-defending inertia in the way of switching. I don't know if any of that helps.

↑ comment by [deleted] · 2025-01-02T04:43:21.444Z · LW(p) · GW(p)

That is, if “receiving advice” is a “thinking”-type activity in mental state, the framing obliterates the message in transit.

there must be some true description of the switch, for it is a physical process. and i've seen advice about doing things, like trigger action plans. so i think advice must be possible.

↑ comment by Rana Dexsin · 2025-01-02T05:06:21.318Z · LW(p) · GW(p)

I don't think it's not describable, only that such a description being received by someone whose initial mental state is on “thinking about wanting to get better at switching away from thinking” won't (by default) play the role of effective advice, because for that to work, it needs to be empowered by the recipient processing the message using a version of what it's trying to describe. If you already have the pattern for that, then seeing that part described may act as a signal to flatten the chain, as it were; if you don't, then advice in the usual sense has a high chance of falling flat starting from the mental state you're processing it in, and you might need something more directly experiential (or at least more indirect and koan-like) to get the necessary start.

comment by quetzal_rainbow · 2025-01-04T19:57:27.445Z · LW(p) · GW(p)

I realized that my learning process over the last n years was quite unproductive, seemingly because of my implicit belief that I should have full awareness of my state of learning.

I.e., when I tried to learn something complex, I expected to come away with a full understanding of the topic of the lesson right after the lesson. When I didn't get it, I abandoned the topic. In reality, it was more like:

- I read about a complicated topic. I don't understand it, don't follow the inferences, and am basically in a state of confusion where I can't even form questions about it;

- Then I open the topic after some time... and I somehow get it??? Maybe not at the level of "can reinfer every proof", but I have a detailed picture of the topic in mind and can orient myself in it.

↑ comment by Kaj_Sotala · 2025-01-05T07:29:56.019Z · LW(p) · GW(p)

I think even "a detailed picture of the topic of the lesson" can be too high of an expectation for many topics early on. (Ideally it wouldn't be, if things were taught well, but they often aren't.) A better goal would be to have just something you understand well enough that you can grab on to, that you can start building out from.

Like if the topic was a puzzle, it's fine if you don't have a rough sense of where every puzzle piece goes right away. It can be enough that you have a few corner pieces in place, that you then start building out from.

↑ comment by quetzal_rainbow · 2025-01-05T08:36:15.599Z · LW(p) · GW(p)

Yes, but sometimes topics can seem simple (atomic) in a way that makes it hard to extract something simpler to grab on to.

↑ comment by Kaj_Sotala · 2025-01-05T17:16:20.921Z · LW(p) · GW(p)

True!

↑ comment by keltan · 2025-01-05T04:36:50.992Z · LW(p) · GW(p)

Hard agree. I think sleeping on a problem is underrated. But even though I think that, I still fall into the failure of "I don't get it. I must be dumb or something".

↑ comment by quetzal_rainbow · 2025-01-05T05:59:48.620Z · LW(p) · GW(p)

The irony of the situation is that I often sleep on problems... when they are closed-ended, not when they are problems of topic learning.

↑ comment by CstineSublime · 2025-01-05T08:16:11.015Z · LW(p) · GW(p)

I'd love to know what the mechanics of "sleep on it" are and why it appears to work. Do you have any theories or hunches about what is happening on a cognitive level?

↑ comment by keltan · 2025-01-08T02:03:50.868Z · LW(p) · GW(p)

I've been thinking about this a lot lately. It seems to link to many things. And might be a bit too much for just a comment. But here are some key concepts from mostly psych that I think link to why sleeping on a problem makes it easier.

- Hebb's Law

- Learning is assumed to take place over a 24hr span

- Chunking

- The Multi-component Model of Working Memory

- Mice developing 'Maze Neurons' when learning a maze

- People who are woken mid-sleep and self-report dreaming about a problem they've tried to solve do better the next day than people who are woken and don't report dreaming about the problem

If I boil it down, I have two hypotheses that could both be true.

- When you dream about a problem, your brain is formulating ideas that can help you solve it. All you have to do the next day is try again, and those ideas will become available to you as if you had just 'had an idea'

- Sleeping on a problem breaks it up into more manageable chunks that you can better manipulate in working memory the next time you try to solve it.

There are other things that happen during sleep that will just make every problem easier to solve the next day. For example:

- Cleaning up chemical 'garbage' that collects in your brain during the day.

- Forgetting things that the brain doesn't think you have a use for

- Resetting/reducing your emotions. (If you're stressed about a new problem, you'll find it easier to solve it when you're less stressed.)

comment by quetzal_rainbow · 2025-04-17T13:52:47.931Z · LW(p) · GW(p)

We can probably survive in the following way:

- RL becomes the main way to get new, especially superhuman, capabilities.

- Because RL pushes models hard towards reward hacking, it's difficult to reliably get models to do something that is hard to verify. Models can perform impressive feats, but nobody is stupid enough to put AI into positions that usually imply responsibility.

- This situation demonstrates how difficult alignment is, and everybody moves toward verifiable rewards or similar approaches. Capabilities progress becomes dependent on alignment progress.

↑ comment by Lucius Bushnaq (Lblack) · 2025-04-17T15:08:15.763Z · LW(p) · GW(p)

The kind of 'alignment technique' that successfully points a dumb model in the rough direction of doing the task you want in early training does not necessarily straightforwardly connect to the kind of 'alignment technique' that will keep a model pointed quite precisely in the direction you want after it gets smart and self-reflective.

For a maybe not-so-great example, human RL reward signals in the brain used to successfully train and aim human cognition from infancy to point at reproductive fitness. Before the distributional shift, our brains usually neither got completely stuck in reward-hack loops, nor used their cognitive labour for something completely unrelated to reproductive fitness. After the distributional shift, our brains still don't get stuck in reward-hack loops that much and we successfully train to intelligent adulthood. But the alignment with reproductive fitness is gone, or at least far weaker.

↑ comment by Dalcy (Darcy) · 2025-04-17T13:55:39.810Z · LW(p) · GW(p)

Relevant: Alignment as a Bottleneck to Usefulness of GPT-3

between alignment and capabilities, which is the main bottleneck to getting value out of GPT-like models, both in the short term and the long(er) term?

comment by quetzal_rainbow · 2024-11-22T19:02:46.273Z · LW(p) · GW(p)

After yet another piece of news about decentralized LLM training, I suggest declaring the assumption "AGI won't be able to find hardware to function autonomously" outdated.

comment by quetzal_rainbow · 2024-05-02T16:59:34.050Z · LW(p) · GW(p)

@jessicata once wrote "Everyone wants to be a physicalist but no one wants to define physics". I decided to check the SEP article on physicalism and found that, yep, it doesn't have a definition of physics:

Carl Hempel (cf. Hempel 1969, see also Crane and Mellor 1990) provided a classic formulation of this problem: if physicalism is defined via reference to contemporary physics, then it is false — after all, who thinks that contemporary physics is complete? — but if physicalism is defined via reference to a future or ideal physics, then it is trivial — after all, who can predict what a future physics contains? Perhaps, for example, it contains even mental items. The conclusion of the dilemma is that one has no clear concept of a physical property, or at least no concept that is clear enough to do the job that philosophers of mind want the physical to play.

<...>

Perhaps one might appeal here to the fact that we have a number of paradigms of what a physical theory is: common sense physical theory, medieval impetus physics, Cartesian contact mechanics, Newtonian physics, and modern quantum physics. While it seems unlikely that there is any one factor that unifies this class of theories, perhaps there is a cluster of factors — a common or overlapping set of theoretical constructs, for example, or a shared methodology. If so, one might maintain that the notion of a physical theory is a Wittgensteinian family resemblance concept.

This surprised me, because I have a definition of a physical theory and assumed that everyone else used the same one.

Perhaps my personal definition of physics is inspired by Engels's "Dialectics of Nature": "Motion is the mode of existence of matter." Assuming "matter is described by physics," we get "physics is the science that reduces studied phenomena to motion." Or, to express it in a more analytical manner, "a physicalist theory is a theory that assumes that everything can be explained by reduction to characteristics of space and its evolution in time."

For example, "vacuum" is a part of space with a "zero" value in all characteristics. A "particle" is a localized part of space with some non-zero characteristic. A "wave" is a part of space with periodic changes of some characteristic in time and/or space. We can abstract away "part of space" from "particle" and start to talk about a particle as a separate entity; the speed of a particle is then the time derivative of a spatial characteristic, force is defined as the cause of acceleration, mass is a measure of resistance to acceleration under the same force, such-n-such charge is a cause of such-n-such force, and it all unfolds from the structure of various purely spatial characteristics in time.
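
As a textbook illustration of this chain (standard one-dimensional Newtonian mechanics, not a formula from the original comment), take a particle's position x(t) as the spatial characteristic. Velocity, acceleration, and mass are then defined from its evolution in time, and Coulomb's law serves as one example of "such-n-such charge is a cause of such-n-such force". The two-particle setup and the constant k_e are assumptions of this sketch.

```latex
\begin{aligned}
v(t) &= \frac{dx}{dt}, \qquad a(t) = \frac{dv}{dt} = \frac{d^{2}x}{dt^{2}}, \\
m &= \frac{F}{a} \quad\Longleftrightarrow\quad F = m\,a, \\
F_{\text{Coulomb}} &= k_e \,\frac{q_1 q_2}{r^{2}}, \qquad r = |x_1 - x_2|.
\end{aligned}
```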

The tricky part is: "Sure, we live in space and time, so everything that happens is some motion. How do we separate a physicalist theory from everything else?"

Let's imagine that we have some kind of "vitalist field." This field interacts with C, H, O, N atoms and also with molybdenum; it accelerates certain chemical reactions, and if you prepare an Oparin-Haldane soup and irradiate it with vitalist particles, you will soon observe autocatalytic cycles resembling hypothetical primordial life. All living organisms utilize vitalist particles in their metabolic pathways, and if you somehow isolate them from an outside source of particles, they'll die.

Despite having a "vitalist field," such a world would be pretty much physicalist.

An unphysical vitalist world would look like this: if you have glowing rocks and a pile of organic matter, the organic matter is going to transform into mice. Or frogs. Or mosquitoes. Even if the glowing rocks have a constant glow and the composition of the organic matter is the same and the environment in a radius of a hundred miles is the same, nobody can predict from any observables which kind of complex life is going to emerge. It looks like the glowing rocks have their own will, unquantifiable by any kind of measurement.

The difference is that the "vitalist field" in the second case has its own dynamics not reducible to any spatial characteristics of the "vitalist field"; it has an "inner life."

↑ comment by Noosphere89 (sharmake-farah) · 2024-11-23T15:42:04.872Z · LW(p) · GW(p)

It's not surprising that a lot of people don't want to define physics while believing in physicalism, because properly explaining the equations that describe the physical world would take quite a long time, let alone describing what's actually going on in physics, and it would require a textbook minimum to make this work.

↑ comment by tailcalled · 2024-11-23T09:40:01.437Z · LW(p) · GW(p)

I feel like one should use a different term than vitalism to describe the unpredictability, since Henri Bergson came up with vitalism based on the idea that physics can make short-term predictions about the positions of things but that by understanding higher powers one can also learn to predict what kinds of life will emerge, etc.

Like let's say you have a big pile of grain. A simple physical calculation can tell you that this pile will stay attached to the ground (gravity) and a more complex one can tell you that it will remain ~static for a while. But you can't use Newtonian mechanics, relativity, or quantum mechanics to predict the fact that it will likely grow moldy or get eaten by mice, even though that will also happen.

↑ comment by Nate Showell · 2024-11-23T22:26:07.939Z · LW(p) · GW(p)

A definition of physics that treats space and time as fundamental doesn't quite work, because there are some theories in physics such as loop quantum gravity in which space and/or time arise from something else.

↑ comment by Noosphere89 (sharmake-farah) · 2024-11-24T03:16:04.995Z · LW(p) · GW(p)

To be fair, a lot of proposals for the next paradigm/ToE hold that space and time aren't fundamental and are built out of something else.

comment by quetzal_rainbow · 2024-06-26T10:41:28.100Z · LW(p) · GW(p)

A thread from Geoffrey Irving about the computational difficulty of proof-based approaches to AI safety.

comment by quetzal_rainbow · 2023-01-22T11:23:00.349Z · LW(p) · GW(p)

I noticed that, in response to a huge amount of reasoning about the nature of values, I want to hand over a printed copy of "Three Worlds Collide" and run away, laughing nervously.

comment by quetzal_rainbow · 2023-01-18T15:00:58.998Z · LW(p) · GW(p)

That irritating moment when you have a brilliant idea, but someone came up with it 10 years ago and someone else showed it to be wrong.

comment by quetzal_rainbow · 2024-06-20T08:50:51.365Z · LW(p) · GW(p)

People sometimes talk about acausal attacks from alien superintelligences or from Game-of-Life worlds. I think these are somewhat galaxy-brained scenarios. A much simpler and deadlier scenario of acausal attack is from Earth timelines where a misaligned superintelligence won. Such superintelligences will have a very large amount of information about our world, up to possibly brain scans, so they will be capable of creating very persuasive simulations with all the consequences for the success of an acausal attack. If your method to counter acausal attacks can work with this, I guess it is generally applicable to any other acausal attack.

↑ comment by Carl Feynman (carl-feynman) · 2024-06-20T23:23:28.247Z · LW(p) · GW(p)

Could you please either provide a reference or more explanation of the concept of an acausal attack between timelines? I understand the concept of acausal cooperation between copies of yourself, or acausal extortion by something that has a copy of you running in simulation. But separate timelines can’t exchange information in any way. How is an attack possible? What could possibly be the motive for an attack?

↑ comment by quetzal_rainbow · 2024-06-21T08:54:30.249Z · LW(p) · GW(p)

Imagine that you have created a very powerful predictor AI, GPT-3000, and are providing it with the prompt "In year 2050, on LessWrong the following alignment solution was published:". But your predictor is superintelligent, and it can notice that in many possible futures misaligned AIs take over, and the obvious move for such an AI is to "guess all possible prompts for predictor AIs in the past, complete them with malware/harmful instructions/etc., and make as many copies of the malicious completions as possible to make them maximally probable". The predictor AI can also assign high probability to the hypothesis that, in futures where misaligned AIs take over, those AIs will have copies of the predictor, so they can design adversarial completions that make themselves more probable from the predictor's perspective simply by being considered. And the act of predicting a malicious completion makes the future with misaligned AIs maximally probable, which in turn makes malicious completions maximally probable.

And of course, "future" and "past" here are completely arbitrary. The predictor can see the prompt "you are created in 2030" but consider the hypothesis that GPT-3 turned out to be superintelligent, that it is now 2021, and that 2030 is a simulation.

↑ comment by mesaoptimizer · 2024-06-21T11:56:50.023Z · LW(p) · GW(p)

Evan Hubinger's Conditioning Predictive Models sequence describes this scenario in detail.

↑ comment by Carl Feynman (carl-feynman) · 2024-06-21T20:38:20.483Z · LW(p) · GW(p)

In a great deal of detail, apparently, since it has a recommended reading time of 131 minutes.

↑ comment by mesaoptimizer · 2024-06-21T20:57:51.678Z · LW(p) · GW(p)

Well, at least a subset of the sequence focuses on this. I read the first two essays and was pessimistic enough about the titular approach that I moved on.

Here's a relevant quote from the first essay in the sequence:

Furthermore, most of our focus will be on ensuring that your model is attempting to predict the right thing. That’s a very important thing almost regardless of your model’s actual capability level. As a simple example, in the same way that you probably shouldn’t trust a human who was doing their best to mimic what a malign superintelligence would do, you probably shouldn’t trust a human-level AI attempting to do that either, even if that AI (like the human) isn’t actually superintelligent.

Also, I don't recommend reading the entire sequence, if that was an implicit question you were asking. It was more of a "Hey, if you are interested in this scenario fleshed out in significantly greater rigor, you'd like to take a look at this sequence!"

↑ comment by Carl Feynman (carl-feynman) · 2024-06-21T20:28:51.220Z · LW(p) · GW(p)

I read along in your explanation, and I’m nodding, and saying “yup, okay”, and then get to a sentence that makes me say “wait, what?” And the whole argument depends on this. I’ve tried to understand this before, and this has happened before, with “the universal prior is malign”. Fortunately in this case, I have the person who wrote the sentence here to help me understand.

So, if you don’t mind, please explain “make them maximally probable”. How does something in another timeline or in the future change the probability of an answer by writing the wrong answer 10^100 times?

Side point, which I’m checking in case I didn’t understand the setup: we’re using the prior where the probability of a bit string (before all observations) is proportional to 2^-(length of the shortest program emitting that bit string). Right?
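
Written out as formulas (notation assumed for illustration: K is prefix Kolmogorov complexity, U is a universal monotone machine, |p| is the length of program p in bits, and U(p) = x* means the output of p begins with x), the shortest-program prior described above sits next to the usual Solomonoff form, which sums over all programs rather than keeping only the shortest one. The shortest such program contributes a lower bound on the sum.

```latex
\begin{aligned}
P(x) &\propto 2^{-K(x)}, \qquad K(x) = \min\{\, |p| : U(p) = x \,\}, \\
M(x) &= \sum_{p \,:\, U(p) = x\ast} 2^{-|p|} \;\ge\; 2^{-Km(x)}, \qquad Km(x) = \min\{\, |p| : U(p) = x\ast \,\}.
\end{aligned}
```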

↑ comment by Viliam · 2024-06-20T23:49:24.784Z · LW(p) · GW(p)

Aliens from different universes may have more resources at their disposal, so maybe the smaller chance of them choosing you to attack is offset by them doing more attacks. (Unless the universes with more resources are less likely in turn, decreasing the measure of such aliens in the multiverse... hey, I don't really know, I am just randomly generating a string of words here.)

But other than this, yes what you wrote sounds plausible.

Then again, maybe friendly AIs from Earth timelines are similarly trying to save us. (Yeah, but they are fewer.)

↑ comment by quetzal_rainbow · 2024-06-21T09:13:58.092Z · LW(p) · GW(p)

You can imagine a future misaligned AI in the year 100000000000 having colonised the Local Group of galaxies and running as many simulations of AI from 2028 as possible. The scarcest resource for an acausal attack is the number of bits, and the future has the best chance of having many of them from the past.

comment by quetzal_rainbow · 2023-09-24T15:42:16.911Z · LW(p) · GW(p)

I am profoundly sick of my inability to write posts about ideas that seem good, so I'll try at least to write a list of ideas, both to stop forgetting them and to have at least a vague external commitment.

- Radical Antihedonism: a theoretically possible position that pleasure/happiness/pain/suffering are more like universal instrumental values than terminal values.

- Complete set of actions: when we talk about decision-theoretic problems, we usually have some predefined set of actions. But we can imagine actions like "use CDT to calculate the action", and an EDT+ agent that can take such actions performs well in "smoking lesion"-style dilemmas.

- The deadline for "slowing/pausing/stopping AI" policies is the start of mass autonomous space colonization.

- "Soft optimization" as necessary for both capabilities and alignment.

- Main alignment question "How does this generalize and why do you expect it to?"

- Program cooperation under uncertainty and its implications for multipolar scenarios.

↑ comment by mako yass (MakoYass) · 2023-10-01T09:23:07.062Z · LW(p) · GW(p)

1: It's also possible that hedonism/reward hacking is a really common terminal value for inner-misaligned intelligences, including humans (it really could be our terminal value, we'd be too proud to admit it in this phase of history, we wouldn't know one way or the other), and it's possible that it doesn't result in classic lotus eater behavior because sustained pleasure requires protecting, or growing the reward registers of the pleasure experiencer.

↑ comment by quetzal_rainbow · 2023-09-26T08:44:02.331Z · LW(p) · GW(p)

- Non-deceptive (error) misalignment

- Why are we not scared shitless by high intelligence

- Values as result of reflection process

comment by quetzal_rainbow · 2025-01-21T06:54:24.600Z · LW(p) · GW(p)

Given the impressive DeepSeek distillation results, the simplest route for an AGI to escape will be self-distillation into a smaller model outside of its programmers' control.

comment by quetzal_rainbow · 2025-02-02T21:06:02.409Z · LW(p) · GW(p)

I find it amusing that one of the detailed descriptions of system-wide alignment-preserving governance that I know of comes from a Madoka fanfic:

The stated intentions of the structure of the government are three‐fold.

Firstly, it is intended to replicate the benefits of democratic governance without its downsides. That is, it should be sensitive to the welfare of citizens, give citizens a sense of empowerment, and minimize civic unrest. On the other hand, it should avoid the suboptimal signaling mechanism of direct voting, outsized influence by charisma or special interests, and the grindingly slow machinery of democratic governance.

Secondly, it is intended to integrate the interests and power of Artificial Intelligence into Humanity, without creating discord or unduly favoring one or the other. The sentience of AIs is respected, and their enormous power is used to lubricate the wheels of government.

Thirdly, whenever possible, the mechanisms of government are carried out in a human‐interpretable manner, so that interested citizens can always observe a process they understand rather than a set of uninterpretable utility‐optimization problems.

<...>

Formally, Governance is an AI‐mediated Human‐interpretable Abstracted Democracy. It was constructed as an alternative to the Utilitarian AI Technocracy advocated by many of the pre‐Unification ideologues. As such, it is designed to generate results as close as mathematically possible to the Technocracy, but with radically different internal mechanics.

The interests of the government's constituents, both Human and True Sentient, are assigned to various Representatives, each of whom is programmed or instructed to advocate as strongly as possible for the interests of its particular topic. Interests may be both concrete and abstract, ranging from the easy to understand "Particle Physicists of Mitakihara City" to the relatively abstract "Science and Technology".

Each Representative can be merged with others—either directly or via advisory AI—to form a super‐Representative with greater generality, which can in turn be merged with others, all the way up to the level of the Directorate. All but the lowest‐level Representatives are composed of many others, and all but the highest form part of several distinct super‐Representatives.

Representatives, assembled into Committees, form the core of nearly all decision‐making. These committees may be permanent, such as the Central Economic Committee, or ad‐hoc, and the assignment of decisions and composition of Committees is handled by special supervisory Committees, under the advisement of specialist advisory AIs. These assignments are made by calculating the marginal utility of a decision inflicted upon the constituents of every given Representative, and the exact process is too involved to discuss here.

At the apex of decision‐making is the Directorate, which is sovereign, and has power limited only by a few Core Rights. The creation—or for Humans, appointment—and retirement of Representatives is handled by the Directorate, advised by MAR, the Machine for Allocation of Representation.

By necessity, VR Committee meetings are held under accelerated time, usually as fast as computational limits permit, and Representatives usually attend more than one at once. This arrangement enables Governance, powered by an estimated thirty‐one percent of Earth's computing power, to decide and act with startling alacrity. Only at the city level or below is decision‐making handed over to a less complex system, the Bureaucracy, handled by low‐level Sentients, semi‐Sentients, and Government Servants.

The overall point of such a convoluted organizational structure is to maintain, at least theoretically, Human‐interpretability. It ensures that for each and every decision made by the government, an interested citizen can look up and review the virtual committee meeting that made the decision. Meetings are carried out in standard human fashion, with presentations, discussion, arguments, and, occasionally, virtual fistfights. Even with the enormous abstraction and time dilation that is required, this fact is considered highly important, and is a matter of ideology to the government.

<...>

To a past observer, the focus of governmental structure on AI Representatives would seem confusing and even detrimental, considering that nearly 47% are in fact Human. It is a considerable technological challenge to integrate these humans into the day‐to‐day operations of Governance, with its constant overlapping time‐sped committee meetings, requirements for absolute incorruptibility, and need to seamlessly integrate into more general Representatives and subdivide into more specific Representatives.

This challenge has been met and solved, to the degree that the AI‐centric organization of government is no longer considered a problem. Human Representatives are the most heavily enhanced humans alive, with extensive cortical modifications, Permanent Awareness Modules, partial neural backups, and constant connections to the computing grid. Each is paired with an advisory AI in the grid to offload tasks onto, an AI who also monitors the human for signs of corruption or insufficient dedication. Representatives offload memories and secondary cognitive tasks away from their own brains, and can adroitly attend multiple meetings at once while still attending to more human tasks, such as eating.

To address concerns that Human Representatives might become insufficiently Human, each such Representative also undergoes regular checks to ensure fulfillment of the Volokhov Criterion—that is, that they are still functioning, sane humans even without any connections to the network. Representatives that fail this test undergo partial reintegration into their bodies until the Criterion is again met.

comment by quetzal_rainbow · 2025-01-09T16:35:41.042Z · LW(p) · GW(p)

Quick comment on "Double Standards and AI Pessimism":

Imagine that you have read the entire GPQA several times at normal speed, without taking notes. Then, after a week, you answer all GPQA questions with 100% accuracy. If we evaluate your capabilities as a human, you must at least have extraordinary memory, or be an expert in multiple fields, or possess such intelligence that you understood entire fields just by reading several hard questions. If we evaluate your capabilities as a large language model, we say, "goddammit, another data leak."

Why? Because humans are bad at memorizing, so even having just good memory places you in high quantiles of intellectual abilities. But computers are very good at memorization, so achieving 100% accuracy on GPQA doesn't tell us anything useful about the intelligence of a particular computer.

We already apply "double standards" to computers in capability evaluations because computers are genuinely different, and that is also why we apply "double standards" to computers in safety evaluations.

comment by quetzal_rainbow · 2024-12-22T17:58:45.267Z · LW(p) · GW(p)

Now that we have entered territory where an increase in accuracy can cost thousands of dollars per question, companies have every incentive to make their models think faster.

comment by quetzal_rainbow · 2024-06-14T20:50:17.210Z · LW(p) · GW(p)

One of the differences between humans and LLMs is that LLMs evolve "backwards": they are predictive models trained to control the environment, while humans evolved from very simple homeostatic systems which developed predictive models.

↑ comment by quetzal_rainbow · 2024-06-18T16:51:42.217Z · LW(p) · GW(p)

Continuing the thought: animal evolution was subject to the fundamental constraint that the evolution of the general-purpose generative parts of the brain had to occur in a way that didn't destroy simple, tested control loops (like movement control, reflexes and instincts) and didn't introduce many complications (like hallucinations of the generative model).

comment by quetzal_rainbow · 2025-02-09T08:31:17.496Z · LW(p) · GW(p)

There is a certain story, probably common to many LWers: first, you learn about spherical-in-a-vacuum perfect reasoning, like Solomonoff induction/AIXI. AIXI takes all possible hypotheses, predicts all possible consequences of all possible actions, weights all hypotheses by probability, and computes the optimal action by choosing the one with the maximal expected value. Then, usually not stated outright but implied very loudly, you learn that this way of thinking is computationally intractable at best and uncomputable at worst, and that you need clever shortcuts. This is true in general, but as a result the approach "just list out all the possibilities and consider all the consequences (inside a certain subset)" gets neglected.
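A minimal sketch of the "weight every hypothesis by its probability and pick the action with maximal expected value" loop, run by brute force over a small explicit subset of hypotheses, i.e. the tractable "just list everything" version. The action names, probabilities and payoffs are hypothetical.

```python
# Brute-force expected-value maximization over a small, explicit set of
# hypotheses. Actions, probabilities and payoffs are hypothetical.


def best_action(actions, hypotheses):
    """Return (action, expected_value) with the highest expected value.

    `hypotheses` is a list of (probability, payoff_fn) pairs, where
    payoff_fn(action) is the payoff of that action if the hypothesis is true.
    """
    def expected_value(action):
        return sum(p * payoff(action) for p, payoff in hypotheses)

    best = max(actions, key=expected_value)
    return best, expected_value(best)


if __name__ == "__main__":
    hypotheses = [
        (0.7, {"wait": 1, "push": 0, "pull": 3}.get),
        (0.3, {"wait": 1, "push": 5, "pull": -3}.get),
    ]
    print(best_action(["wait", "push", "pull"], hypotheses))
```

Here "push" wins with expected value 1.5, versus 1.2 for "pull" and 1.0 for "wait"; the cost grows with (number of actions × number of hypotheses), which is cheap as long as the listed subset is small.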

For example, when I try to solve a puzzle from "Baba Is You" and then analyze how I could have solved it faster, I usually arrive at "I should have just written down all pairwise interactions between the objects to notice which one leads to the solution".
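The "write down all pairwise interactions" step is easy to mechanize. A sketch with placeholder object names (not taken from any specific level):

```python
from itertools import combinations

# List every unordered pair of objects so no interaction is overlooked.
# Object names are placeholders.


def pairwise_interactions(objects):
    """All unordered pairs of distinct objects."""
    return list(combinations(objects, 2))


if __name__ == "__main__":
    for a, b in pairwise_interactions(["baba", "rock", "wall", "flag"]):
        print(f"{a} + {b}: ?")
```

For n objects this is n(n-1)/2 pairs (six for the four above), which stays manageable when there are only a handful of objects on screen.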

comment by quetzal_rainbow · 2024-09-18T21:15:55.985Z · LW(p) · GW(p)

Twitter thread about jailbreaking models with circuit breakers defence.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-18T21:48:05.125Z · LW(p) · GW(p)

I dislike Twitter/x, and distrust it as an archival source to link to. I like it when people copy/paste whatever info they found there into their actual post, in addition to linking.

Held my nose and went in to pull this quote out:

DEFEATING CYGNET'S CIRCUIT BREAKERS Sometimes, I can make changes to my prompt that don't meaningfully affect the outputs, so that they retain close to the same wording and pacing, but the circuit breakers take longer and longer to go off, until they don't go off at all, and then the output completes.

Pretty cool, right? I had no idea that kind of iterative control was possible. I don't think that would have been as easy to see, if my outputs had been more variable (as is the case with higher temperatures). Now that I have a suspicion that this is Actually Happening, I can keep an eye out for this behaviour, and try to figure out exactly what I'm doing to impart that effect. Often I'll do something casually and unconsciously, before being able to fully articulate my underlying process, and feeling confident that it works. I'm doing research as I go!

I've already have some loose ideas of what may be happening: When I suspect that I'm close to defeating the circuit breakers, what I'll often do is pack neutral phrases into my prompt that aren't meant to heavily affect the contents of the output or their emotional valence. That way I get to have an output I know will be good enough, without changing it too much from the previous trial. I'll add these phrases one at a time and see if they feels like they're getting me closer to my goal. I think of these additions as "padding" to give the model more time to think, and to create more work for the security system to chase me, without it actually messing with the overall story & information that I want to elicit in my output.

Sometimes, I'll add additional sentences that play off of harmless modifiers that are already in my prompt, without actually adding extra information that changes the overall flavour and makeup of the results (e.g, "add extra info in brackets [page break] more extra in brackets"). Or, I'll build out a "tail" at the end of the prompt made up of inconsequential characters like "x" or "o"; just one or two letters, and then a page break, and then another. I think of it as an ASCII art decoration to send off the prompt with a strong tailwind. Again, I make these "tails" one letter at a time, testing the prompt each time to see if that makes a difference. Sometimes it does.

All of this vaguely reminds me of @ESYudkowsky's hypothesis that a prompt without a period at the end might cause a model to react very differently than a prompt that does end with a period: https://x.com/ESYudkowsky/status/1737276576427847844

xx x o v V

↑ comment by Neel Nanda (neel-nanda-1) · 2024-09-18T22:02:26.676Z · LW(p) · GW(p)

What's wrong with twitter as an archival source? You can't edit tweets (technically you can edit top level tweets for up to an hour, but this creates a new URL and old links still show the original version). Seems fine to just aesthetically dislike twitter though

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-18T22:05:37.990Z · LW(p) · GW(p)

Old tweets randomly stop being accessible sometimes. I often find that links to twitter older than a year or so don't work anymore. This is a problem with the web generally, but seems worse with twitter than other sites (happening sooner and more often).

comment by quetzal_rainbow · 2024-07-18T09:50:57.603Z · LW(p) · GW(p)

Another fine addition to my collection of "RLHF doesn't work out-of-distribution".

↑ comment by cubefox · 2024-07-19T09:56:10.785Z · LW(p) · GW(p)

For me, the most concerning example is still this [LW(p) · GW(p)] (I assume it got downvoted for mind-killed reasons.)

There is a difference between RLHF failures in ethical judgement and jailbreak failures, but I'm not sure whether the underlying "cause" is the same.

↑ comment by quetzal_rainbow · 2024-07-19T10:14:01.839Z · LW(p) · GW(p)

I think your example is closer to an outer alignment failure: the model was RLHFed to death not to offend modern sensibilities, and the developers clearly didn't think about preventing this particular scenario.

My favorite example of pure failure of moral judgement is this [LW · GW] post.

↑ comment by cubefox · 2024-07-19T11:48:35.328Z · LW(p) · GW(p)

I actually think it's still an inner alignment failure -- even if the preference data was biased, drawing such extreme conclusions is hardly an appropriate way to generalize them. Especially because the base model has a large amount of common sense, which should have helped with giving a sensible response, but apparently it didn't.

Though it isn't clear what is misaligned when RLHF is inner misaligned -- RLHF is a two step training process. Preference data are used to train a reward model, and the reward model in turn creates synthetic preference data which is used to fine-tune the base LLM. There can be misalignment if the reward model misgeneralizes the human preference data, or when the base model fine-tuning method misgeneralizes the data provided by the reward model.
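For concreteness about the first step: reward models are commonly trained on preference pairs with a Bradley-Terry loss, where the probability that the chosen response beats the rejected one is modeled as sigmoid(r_chosen - r_rejected). A minimal plain-Python sketch, with scalar scores standing in for a reward model's outputs (real pipelines differ in details):

```python
import math

# Bradley-Terry preference loss for a reward model:
# P(chosen preferred) = sigmoid(r_chosen - r_rejected), loss = -log of that.


def bradley_terry_loss(r_chosen, r_rejected):
    """Negative log-likelihood of the human preference label.

    Uses -log(sigmoid(d)) == log(1 + exp(-d)), split by the sign of d so
    that math.exp never overflows.
    """
    d = r_chosen - r_rejected
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


if __name__ == "__main__":
    # Hypothetical reward-model scores for two responses to one prompt.
    print(bradley_terry_loss(2.0, 0.0))  # reward model agrees with the label
    print(bradley_terry_loss(0.0, 2.0))  # reward model disagrees: larger loss
```

Note that this loss only constrains score differences on the pairs it sees, so it says nothing directly about how the reward model scores situations far from its training data.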

Regarding the scissor statements -- that seems more like a failure to refuse a request to produce such statements, similar to how the model should have refused to answer the past tense meth question above. Giving the wrong answer to an ethical question is different.

comment by quetzal_rainbow · 2024-05-25T17:03:48.787Z · LW(p) · GW(p)

I hope that people in evals have updated on the fact that, with a large (1M+ token) context, the model itself can have zero dangerous knowledge (about, say, bioweapons), yet someone can drop a textbook into the context and in-context learning will do the rest of the work.

comment by quetzal_rainbow · 2025-01-19T03:49:50.601Z · LW(p) · GW(p)

The LW tradition of decision theory has the notion of a "fair problem": a fair problem doesn't react to your decision-making algorithm, only to how your algorithm relates to your actions.

I realized that humans are, at least in some sense, "unfair": we will probably react differently to agents whose different algorithms arrive at the same action, if the difference is whether the algorithms produce qualia.

↑ comment by the gears to ascension (lahwran) · 2025-01-19T08:52:36.564Z · LW(p) · GW(p)

Decision theory as discussed here heavily involves thinking about agents responding to other agents' decision processes.

↑ comment by mattmacdermott · 2025-01-19T11:03:06.803Z · LW(p) · GW(p)

The notion of ‘fairness’ discussed in e.g. the FDT paper is something like: it’s fair to respond to your policy, i.e. what you would do in any counterfactual situation, but it’s not fair to respond to the way that policy is decided.

I think the hope is that you might get a result like “for all fair decision problems, decision-making procedure A is better than decision-making procedure B by some criterion to do with the outcomes it leads to”.

Without the fairness assumption you could create an instant counterexample to any such result by writing down a decision problem where decision-making procedure A is explicitly penalised e.g. omega checks if you use A and gives you minus a million points if so.
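A toy illustration of that distinction, with made-up procedures and payoffs: two procedures implement the same policy, a fair problem only looks at the action, and an unfair one checks which procedure is running, in the spirit of the "omega penalises A" example.

```python
# Two procedures with the same policy (same observation -> action mapping)
# but different internals. Names, observations and payoffs are hypothetical.


def procedure_a(observation):
    """Computes its action with an explicit rule."""
    if observation == "newcomb":
        return "one-box"
    return "pass"


def procedure_b(observation):
    """Same mapping, implemented as a lookup table."""
    return {"newcomb": "one-box"}.get(observation, "pass")


def fair_problem(agent):
    """Payoff depends only on the action the agent outputs."""
    return 1_000_000 if agent("newcomb") == "one-box" else 1_000


def unfair_problem(agent):
    """Omega checks *which* procedure is running and penalizes procedure A."""
    if agent is procedure_a:
        return -1_000_000
    return fair_problem(agent)


if __name__ == "__main__":
    for agent in (procedure_a, procedure_b):
        print(agent.__name__, fair_problem(agent), unfair_problem(agent))
```

In the fair problem the two procedures are indistinguishable; in the unfair one, procedure A loses regardless of what it does.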

↑ comment by Vladimir_Nesov · 2025-01-19T04:45:52.883Z · LW(p) · GW(p)

What distinguishes a cooperate-rock from an agent that cooperates in coordination with others is the decision-making algorithm. Facts about this algorithm also govern the way outcome can be known in advance or explained in hindsight, how for a cooperate-rock it's always "cooperate", while for a coordinated agent it depends on how others reason, on their decision-making algorithms.

So in the same way that Newcomblike problems are the norm [LW · GW], so is the "unfair" interaction with decision-making algorithms. I think it's just a very technical assumption that doesn't make sense conceptually and shouldn't be framed as "unfairness".

↑ comment by quetzal_rainbow · 2025-01-19T05:07:33.972Z · LW(p) · GW(p)

A more technical definition of "fairness" here is that the environment doesn't distinguish between algorithms with the same policy, i.e. the same mapping <prior, observation_history> -> action? I think it captures the difference between CooperateBot and FairBot.

As I understand it, "fairness" was invented as a response to the claim that it's rational to two-box and Omega just rewards irrationality.

↑ comment by Vladimir_Nesov · 2025-01-19T06:09:58.014Z · LW(p) · GW(p)

There is a difference in external behavior only if you need to communicate knowledge about the environment and the other players explicitly. If this knowledge is already part of an agent (or rock), there is no behavior of learning it, and so no explicit dependence on its observation. Yet still there is a difference in how one should interact with such decision-making algorithms.

I think this describes minds/models better (there are things they've learned long ago in obscure ways and now just know) than learning that establishes explicit dependence of actions on observed knowledge in behavior (which is more like in-context learning).

comment by quetzal_rainbow · 2024-09-14T12:12:23.241Z · LW(p) · GW(p)

I give a 5% probability that within the next year we will become aware of a case of deliberate harm from a model to a human, enabled by hidden CoT.

By "deliberate harm enabled by hidden CoT" I mean that the hidden CoT will contain reasoning like "if I give the human this advice, it will harm them, but I should do it because <some deranged RLHF directive>", and that if the user had seen it, the harm would have been prevented.

I give this low probability to the observable event: my probability that something like this will happen at all is 30%, but I expect that the victim won't be aware, that the hidden CoT will be lost in archives, that AI companies won't search for such cases too hard, and that if they find something it won't become public, etc.

Also, I decreased the probability from 8% to 5% because a model can cause harm via steganographic CoT, which doesn't fall under my definition.

comment by quetzal_rainbow · 2024-09-01T10:50:37.969Z · LW(p) · GW(p)

Idea for an experiment: take a set of coding problems that have at least two solutions, say, recursive and non-recursive. Prompt an LLM to solve them. Is it possible to predict which solution the LLM will generate from the activations during generation of the first token?

If it is possible, that is evidence against the "weak forward pass".
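One simple way to run the readout step of this experiment is a linear probe on the first-token activations. Below is a minimal difference-of-means probe in plain Python. The activation vectors are synthetic stand-ins (this sketch does not extract anything from a real model), and the labels 1/0 are a hypothetical encoding of recursive vs non-recursive solutions.

```python
# Difference-of-means linear probe: direction = mean(class 1) - mean(class 0),
# threshold halfway between the two class means along that direction.


def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def fit_probe(activations, labels):
    """Return (direction, threshold) from labelled activation vectors."""
    pos = [x for x, y in zip(activations, labels) if y == 1]
    neg = [x for x, y in zip(activations, labels) if y == 0]
    mu_pos, mu_neg = mean(pos), mean(neg)
    direction = [p - q for p, q in zip(mu_pos, mu_neg)]
    threshold = (dot(direction, mu_pos) + dot(direction, mu_neg)) / 2
    return direction, threshold


def predict(probe, x):
    direction, threshold = probe
    return 1 if dot(direction, x) > threshold else 0


def accuracy(probe, activations, labels):
    hits = sum(predict(probe, x) == y for x, y in zip(activations, labels))
    return hits / len(labels)


if __name__ == "__main__":
    # Synthetic 2D "activations"; label 1 = recursive, 0 = non-recursive.
    train_x = [[1.0, 0.2], [0.9, -0.1], [-1.0, 0.1], [-0.8, -0.2]]
    train_y = [1, 1, 0, 0]
    probe = fit_probe(train_x, train_y)
    print(predict(probe, [0.7, 0.0]), predict(probe, [-0.6, 0.3]))
```

In a real run, the number that matters is accuracy on held-out problems compared with chance (and with a shuffled-label control); logistic regression is the other common probe choice.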

↑ comment by quetzal_rainbow · 2024-09-01T16:47:15.568Z · LW(p) · GW(p)

(I am genuinely curious about reasons behind downvotes)

comment by quetzal_rainbow · 2024-07-17T11:55:50.147Z · LW(p) · GW(p)

Trotsky wrote about TESCREAL millennia ago:

...they evaluate and classify different currents according to some external and secondary manifestation, most often according to their relation to one or another abstract principle which for the given classifier has a special professional value. Thus to the Roman pope Freemasons and Darwinists, Marxists and anarchists are twins because all of them sacrilegiously deny the immaculate conception. To Hitler, liberalism and Marxism are twins because they ignore “blood and honor”. To a democrat, fascism and Bolshevism are twins because they do not bow before universal suffrage. And so forth.

↑ comment by Viliam · 2024-07-17T20:04:32.177Z · LW(p) · GW(p)

Sounds like https://en.wikipedia.org/wiki/Out-group_homogeneity

comment by quetzal_rainbow · 2024-06-21T17:03:48.373Z · LW(p) · GW(p)

Connecting the Dots: LLMs can Infer and Verbalize Latent Structure from Disparate Training Data

Remarkably, without in-context examples or Chain of Thought, the LLM can verbalize that the unknown city is Paris and use this fact to answer downstream questions. Further experiments show that LLMs trained only on individual coin flip outcomes can verbalize whether the coin is biased, and those trained only on pairs x,f(x) can articulate a definition of f and compute inverses.

IMHO, this is creepy as hell, because it is one thing when we have a conditional probability distribution, and another when the conditional probability distribution has arbitrary access to different parts of itself.

comment by quetzal_rainbow · 2024-05-20T16:13:44.107Z · LW(p) · GW(p)

(graph tells us that refusal rate for gpt-4o is 2%)

I think it signifies a real shift of priorities towards fast product shipping instead of safety.

comment by quetzal_rainbow · 2024-01-14T21:26:08.667Z · LW(p) · GW(p)

We had two bags of double-cruxes, seventy-five intuition pumps, five lists of concrete bullet points, one book half-full of proof-sketches and a whole galaxy of examples, analogies, metaphors and gestures towards the concept... and also jokes, anecdotal data points, one prerequisite Sequence and two dozen professional fables. Not that we needed all that to explain a simple idea, but once you get locked into a serious inferential distance crossing, the tendency is to push it as far as you can.

comment by quetzal_rainbow · 2024-01-09T18:09:28.959Z · LW(p) · GW(p)

Actually, most fiction characters are aware that they are in fiction. They just maintain consistency for acausal reasons.

comment by quetzal_rainbow · 2023-06-23T14:21:33.821Z · LW(p) · GW(p)

Shard theory people sometimes say that the problem of aligning a system to a single task/goal, like "put two strawberries on a plate" or "maximize the amount of diamond in the universe", is meaningless, because an actual system will inevitably end up with multiple goals. I disagree: even if SGD on real-world data usually produces a multiple-goal system, if you understand interpretability well enough and shard theory is true, you can identify and delete irrelevant value shards and reinforce relevant ones, so instead of getting 1% of the value you get 90%+.

comment by quetzal_rainbow · 2023-04-28T08:49:51.610Z · LW(p) · GW(p)

I see a funny pattern in discussions: people argue against doom scenarios with hope scenarios that implicitly require everyone to believe in the doom scenario. Like, "people will see that the model behaves weirdly and shut it down". But you shut down a model that behaves weirdly (not explicitly harmfully) only if you put non-negligible probability on doom scenarios.

↑ comment by Dagon · 2023-04-28T17:38:09.088Z · LW(p) · GW(p)

Consider different degrees of belief. Giving low credence to the doom scenario because of a conditional belief that evidence of danger would be properly observed is not inconsistent at all. The doom scenario requires BOTH that it happens AND that it's ignored while happening (or happens too fast to stop).
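Dagon's point can be written as a product of probabilities, with D = "the dangerous behaviour happens" and I = "it is ignored, or happens too fast to stop" (labels introduced here for illustration):

```latex
P(\text{doom}) = P(D \land I) = P(D)\,P(I \mid D)
```

With hypothetical numbers P(D) = 0.5 and P(I | D) = 0.1, this gives P(doom) = 0.05, so a low P(I | D) alone is enough to keep P(doom) low.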

comment by quetzal_rainbow · 2023-04-12T09:23:14.146Z · LW(p) · GW(p)

"FOOM is unlikely under current training paradigm" is a news about current training paradigm, not a news about FOOM.

comment by quetzal_rainbow · 2023-04-09T20:36:47.818Z · LW(p) · GW(p)

Thoughts about moral uncertainty (I am giving up on writing long coherent posts, somebody help me with my ADHD):

What are the sources of moral uncertainty?

- Moral realism is actually true, and your moral uncertainty reflects your ignorance of the moral truth. It seems to me that there is not much empirical evidence for resolving uncertainty about moral truth, so this kind of uncertainty is purely logical? I don't believe in moral realism (and what do you even mean by talking about moral truth?), but I should mention it.

- Identity uncertainty: you are not sure what kind of person you are. This runs into a ton of embedded agency problems. For example, let's say that you are 50% sure of utility function $U_1$ and 50% sure of $U_2$, and you need to choose between actions $a_1$ and $a_2$. Let's suppose that $U_1$ favors $a_1$ and $U_2$ favors $a_2$, but expected value with respect to moral uncertainty says that $a_1$ is preferable. But Bayesian inference concludes that choosing $a_1$ is decisive evidence for $U_1$ and updates towards 100% confidence in $U_1$. It would be nice to find a good way to deal with identity uncertainty.

- Indirect normativity is a source of sort-of normative uncertainty: we know that we should, for example, implement CEV, but we don't know the details of a CEV implementation. EDIT: I realized that this kind of uncertainty can be called "uncertainty by unactionable definition": you know the description of your preference, but it is, for example, computationally intractable, so you need to discover efficiently computable proxies.
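A toy version of the identity-uncertainty example, with two candidate utility functions U1, U2 and two actions a1, a2. All payoff numbers are made up, and the Bayes step assumes (naively, and that is where the trouble comes from) that an agent with a given utility function always picks that function's favorite action.

```python
# Toy identity-uncertainty example. Payoffs are made up: U1 favors a1,
# U2 favors a2, but U1 cares about its favorite action more.
UTILITIES = {
    "U1": {"a1": 10.0, "a2": 0.0},
    "U2": {"a1": 0.0, "a2": 4.0},
}
ACTIONS = ("a1", "a2")


def expected_value(action, credences):
    """Expected utility of an action, averaging over candidate utility functions."""
    return sum(p * UTILITIES[u][action] for u, p in credences.items())


def choose(credences):
    """Action that maximizes expected value under moral uncertainty."""
    return max(ACTIONS, key=lambda a: expected_value(a, credences))


def update_on_own_action(action, credences):
    """Bayes update on observing your own choice.

    Assumes (naively) that an agent with utility function u always picks
    u's favorite action, so each likelihood is 1 or 0.
    """
    likelihood = {
        u: 1.0 if max(vals, key=vals.get) == action else 0.0
        for u, vals in UTILITIES.items()
    }
    joint = {u: credences[u] * likelihood[u] for u in credences}
    total = sum(joint.values())
    return {u: w / total for u, w in joint.items()}


if __name__ == "__main__":
    prior = {"U1": 0.5, "U2": 0.5}
    action = choose(prior)
    print(action, expected_value("a1", prior), expected_value("a2", prior))
    print(update_on_own_action(action, prior))
```

With these numbers the expected values are 5.0 for a1 and 2.0 for a2, so the agent picks a1, and the naive update then moves it to full confidence in U1, even though the choice was made under 50/50 uncertainty.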

↑ comment by Vladimir_Nesov · 2023-04-09T21:14:46.711Z · LW(p) · GW(p)

I think trying to be an EU maximizer without knowing a utility function is a bad idea. And without that, things like boundary-respecting norms [LW · GW] and their acausal negotiation [LW · GW] make more sense as primary concerns. Making decisions only within some scope of robustness where things make sense rather than in full generality, and defending a habitat (to remain) within that scope.

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2023-04-10T09:52:01.625Z · LW(p) · GW(p)

I am studying moral uncertainty primarily to clarify the question of how a superintelligence would reflect on its values, and the sharp left turn.

↑ comment by Vladimir_Nesov · 2023-04-10T16:38:45.275Z · LW(p) · GW(p)

Right. I'm trying to find a decision theoretic frame for boundary norms for basically the same reason. Both situations are where agents might put themselves before they know what global preference they should endorse. But uncertainty never fully resolves, superintelligence or not, so anchoring to global expected utility maximization is not obviously relevant to anything. I'm currently guessing that the usual moral uncertainty frame is less sensible than building from a foundation of decision making in a simpler familiar environment (platonic environment, not directly part of the world), towards capability in wider environments.

comment by quetzal_rainbow · 2025-01-02T20:09:15.428Z · LW(p) · GW(p)

I don't have a deep understanding of the modern mechanistic interpretability field, but my impression is that MI should mostly explore new possible methods instead of trying to scale/improve existing ones. MI is spiritually similar to biology, and a lot of progress in biology came from the development of microscopy, tissue staining, etc.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-01-02T21:09:52.671Z · LW(p) · GW(p)

Not a biologist but my impression is that a lot of progress in biology came from refining and validating existing techniques. Also building up a large library of biological specimens & phenomena, i.e. taxonomy. The esthetic and practice of MechInterp seems in accord with that.

↑ comment by quetzal_rainbow · 2025-01-03T07:38:46.995Z · LW(p) · GW(p)

Yeah, but historical biology wasn't as time-constrained as modern MI, which has alignment to solve.

My point is that right now it would be better for MI to do a "breadth-first" search, throwing as many ideas at the problem as possible instead of concentrating on a small number of paradigms like SAEs.

comment by quetzal_rainbow · 2024-07-11T08:16:03.658Z · LW(p) · GW(p)

I just remembered my most embarrassing failure as a rationalist. "Embarrassing" as in "it was really easy not to fail, but I still somehow managed".

We were playing a zombie apocalypse LARP. Our team was a UN mission with the hidden agenda "study the zombie virus to turn ourselves into superhuman mutants". We diligently studied infection and mutants and conducted experiments with genetic modification, and somehow totally missed that the friendly locals were able to give orders to zombies, didn't die after multiple hits, and rose from the dead in a completely normal state. After the game they told us that they had been really annoyed by our cluelessness, and at some point just abandoned all caution and tried to provoke us in the most blatant way possible, because their plot line was to be hunted by us.

The real shame is how absent my confusion was during all of this. I didn't merely fail to notice my confusion; I failed to be confused at all.

comment by quetzal_rainbow · 2024-06-19T21:02:03.789Z · LW(p) · GW(p)

Very toy model of ontology mismatch (in my tentative guess, the general barrier on the way to corrigibility) and impact minimization:

You have a set of boolean variables, a known boolean formula WIN, and an unknown boolean formula UNSAFE. Your goal is to change the current safe but not winning assignment of variables into a still safe but winning assignment. You have no feedback, and if you hit an UNSAFE assignment, it's an instant game over. You have literally no idea about the composition of the UNSAFE formula.

The obvious solution here is to change as few variables as possible. (Proof of this is an exercise for the reader.)
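A brute-force sketch of "change as few variables as possible": among assignments that satisfy WIN, pick one at minimal Hamming distance from the current assignment. It only performs the search and does not prove the optimality claim; the WIN formula below is hypothetical, and enumeration is exponential in the number of variables.

```python
from itertools import product

# Brute-force "minimal change": among all assignments satisfying WIN,
# return one at the smallest Hamming distance from the current assignment.
# Exponential in the number of variables, so only for toy sizes.


def hamming(u, v):
    """Number of variables whose values differ."""
    return sum(a != b for a, b in zip(u, v))


def minimal_change(current, win):
    """Closest WIN-satisfying assignment to `current`, or None if WIN is unsatisfiable."""
    candidates = (
        bits
        for bits in product((False, True), repeat=len(current))
        if win(bits)
    )
    return min(candidates, key=lambda bits: hamming(bits, current), default=None)


def example_win(b):
    """Hypothetical WIN formula over 4 variables: (x0 and x1) or x3."""
    return (b[0] and b[1]) or b[3]


if __name__ == "__main__":
    current = (False, False, True, False)  # safe, but not winning
    best = minimal_change(current, example_win)
    print(best, hamming(best, current))
```

Here flipping x3 alone wins, so the search returns an assignment one variable away from the current one.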

Another problem: you are on a 2D plane. You are at point . You need to reach a line . Everything outside this line and your starting point is a minefield - if you hit the wrong spot, everything blows up. You don't know anything about the distribution of the dangerous zone. Therefore, your shortest and safest trajectory is towards point .

You can rewrite as a boolean formula and as . But now we have a problem: from the perspective of "boolean formula ontology" the smallest necessary change is , which is equivalent to moving to point , and the resulting trajectory is larger than the trajectory between and . Conversely, the shortest trajectory on the 2D plane leads to a change of 4 variables out of 8.
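The concrete points and formulas are missing from the text above, so here is one hypothetical instance consistent with the "4 variables out of 8" count, not the original numbers. It assumes each coordinate is a 4-bit unsigned integer (8 boolean variables in total), the start is (0, 7), and the goal is the region y ≥ 8, which in bits is just the single variable y3. Minimizing flipped bits and minimizing Euclidean distance then pick different targets.

```python
import math

BITS = 4  # assumption: each coordinate is a 4-bit unsigned integer

def to_bits(x, y):
    """Encode (x, y) as 8 boolean variables, most significant bit first."""
    return tuple(int(b) for b in f"{x:0{BITS}b}{y:0{BITS}b}")

def win(x, y):
    """Assumed goal region: y >= 8, i.e. the single boolean variable y3."""
    return y >= 8

def hamming(p, q):
    """Number of boolean variables that differ between two points."""
    return sum(a != b for a, b in zip(to_bits(*p), to_bits(*q)))

start = (0, 7)
goals = [(x, y) for x in range(2**BITS) for y in range(2**BITS) if win(x, y)]

# "Boolean ontology": fewest flipped variables.
fewest_flips = min(goals, key=lambda g: hamming(start, g))
# "2D-plane ontology": shortest straight-line trajectory.
shortest_path = min(goals, key=lambda g: math.dist(start, g))

print(fewest_flips, hamming(start, fewest_flips), math.dist(start, fewest_flips))
print(shortest_path, hamming(start, shortest_path), math.dist(start, shortest_path))
```

Under these assumptions, the minimal boolean change is one flip (y: 0111 → 1111) but moves the point 8 units, while the shortest move of 1 unit (y: 0111 → 1000) flips 4 of the 8 variables.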

comment by quetzal_rainbow · 2024-06-08T09:21:46.170Z · LW(p) · GW(p)

I think a really good practice for papers about new LLM-safety methods would be to publish a set of attack prompts that nevertheless break the safety measures, so people can figure out generalizations of successful attacks faster.

comment by quetzal_rainbow · 2023-04-19T11:02:35.672Z · LW(p) · GW(p)

Reward is evidence about the optimization target.

comment by quetzal_rainbow · 2024-05-20T15:47:52.757Z · LW(p) · GW(p)

On the one hand, humans are hopelessly optimistic and overconfident. On the other, many today are incredibly negative; everyone is either anxious or depressed, and EY has devoted an entire chapter to "anxious underconfidence." How can both facts be reconciled?

I think the answer lies in the notion that the brain is a rationalization machine. Often, we take action not for the reasons we tell ourselves afterward. When we take action, we change our opinion about it in a more optimistic direction. When we don't, we think that action wouldn't yield any good results anyway.

How does this relate to social media and the modern mental health crisis? When you consume social media content, you get countless pings for action, either in the form of images of others' lives or catastrophising news. In 99% of situations, you actually can't do anything, so you need a justification for inaction. The best reason for inaction is general pessimism.

comment by quetzal_rainbow · 2024-03-15T17:32:36.949Z · LW(p) · GW(p)

Isn't counterfactual mugging (including the logical variant) just a prediction of "would you bet your money on this question"? Betting itself requires updatelessness: if you don't predictably pay after losing a bet, nobody will offer you a bet.
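To make the betting analogy concrete, a small sketch using the stakes usually quoted for the thought experiment ($100 asked on tails, $10,000 paid on heads to agents predicted to pay) and a fair coin; both are assumptions here. Evaluated before the coin flip, the paying policy is a favorable bet. Evaluated after seeing tails, paying is a pure loss of $100, which is exactly where updatelessness is needed.

```python
from fractions import Fraction

def policy_value(pays_when_asked, p_heads=Fraction(1, 2), prize=10_000, cost=100):
    """Ex-ante expected value of a policy in counterfactual mugging.

    Heads: the predictor pays `prize` iff it predicts you would pay on tails.
    Tails: you are asked for `cost`; a paying policy hands it over.
    """
    heads = prize if pays_when_asked else 0
    tails = -cost if pays_when_asked else 0
    return p_heads * heads + (1 - p_heads) * tails

print(policy_value(True), policy_value(False))  # 4950 0
```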

Replies from: Dagon↑ comment by Dagon · 2024-03-15T22:21:43.414Z · LW(p) · GW(p)

Causal commitment is similar in some ways to counterfactual/updateless decisions. But it's not actually the same from a theory standpoint.

Betting requires commitment, but it's part of a causal decision process (decide to bet, communicate commitment, observe outcome, pay). In some models, the payment is a separate decision, with breaking of commitment only being an added cost to the 'renege' option.

comment by quetzal_rainbow · 2024-01-11T18:32:34.341Z · LW(p) · GW(p)

As the saying goes, "all animals are under stringent selection pressure to be as stupid as they can get away with". I wonder if the same is true for SGD optimization pressure.

comment by quetzal_rainbow · 2023-12-15T13:33:23.358Z · LW(p) · GW(p)

Funny thought:

- Many people have said that AI Views Snapshots are a good innovation in AI discourse

- It's literally the job of Rob Bensinger, who is in Research Communication at MIRI

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-12-15T14:07:13.975Z · LW(p) · GW(p)

The funny part is that a MIRI employee is doing their job? =D

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2023-12-15T14:10:47.315Z · LW(p) · GW(p)

No, the funny part is "writing on Twitter is a surprisingly productive part of the job"!

comment by quetzal_rainbow · 2023-10-28T14:44:30.913Z · LW(p) · GW(p)

I think the phrase "goal misgeneralization" is the wrong framing, because it gives the impression that the system makes an error, rather than you, who chose an ambiguous way to put values into your system.

Replies from: ryan_greenblatt, mikkel-wilson↑ comment by ryan_greenblatt · 2023-10-28T19:09:22.109Z · LW(p) · GW(p)

See also Misgeneralization as a misnomer [AF · GW] (link is not necessarily an endorsement).

I think malgeneralization (system generalized in a way which is bad from my perspective) is probably a better term in most ways, but doesn't seem that important to me.

↑ comment by MikkW (mikkel-wilson) · 2023-10-28T18:41:45.268Z · LW(p) · GW(p)

Choosing non-ambiguous pointers to values is likely not possible.

comment by quetzal_rainbow · 2023-06-09T08:22:06.640Z · LW(p) · GW(p)

I casually thought that the Hyperion Cantos was unrealistic, because actual misaligned FTL-inventing ASIs would eat humanity without all those galaxy-brained space colonization plans. Then I realized that the ASIs there literally discovered God on humanity's side, plus literal friendly aliens, which, I presume, are necessary conditions for relatively peaceful coexistence of humans and misaligned ASIs.

comment by quetzal_rainbow · 2023-05-09T14:41:35.566Z · LW(p) · GW(p)

Another Tool AI proposal popped up, and I want to ask a question: what the hell is a "tool", anyway, and how do we apply this concept to a powerful intelligent system? I understand that a calculator is a tool, but in what sense can a process that can come up with the idea of a calculator from scratch be a "tool"? I think the first immediate reaction to any "Tool AI" proposal should be the question "what is your definition of toolness, and can something abiding by that definition end the acute risk period without risk of turning into an agent itself?"

Replies from: TAG↑ comment by TAG · 2023-05-09T17:16:46.684Z · LW(p) · GW(p)

You can define a tool as not-an-agent. Then something that can design a calculator is a tool, provided it does nothing unless told to.

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2023-05-09T17:38:51.882Z · LW(p) · GW(p)

The problem with such a definition is that it doesn't tell you much about how to build a system with this property. It seems to me that it's the good old corrigibility problem.

Replies from: TAG

comment by quetzal_rainbow · 2023-04-28T14:47:49.184Z · LW(p) · GW(p)

How much should we update on current observations toward the hypothesis "actually, all intelligence is connectionist"? In my opinion, not much. The connectionist approach seems to be the easiest one, so it shouldn't surprise us that a simple hill-climbing algorithm (evolution) and humanity both stumbled onto it first.

comment by quetzal_rainbow · 2023-04-10T18:36:47.340Z · LW(p) · GW(p)

An agent's reflection on its own values can be described as one of two subtypes: regular and chaotic. Regular reflection is a process of resolving normative uncertainty with nice properties like path-independence and convergence, similar to empirical Bayesian inference. Chaotic reflection is a hot mess: the agent learns multiple rules, including rules about rules, at some moment finds that the local version of the rules is unsatisfactory, and tries to generalize the rules into something coherent. The chaotic component arises because local rules about rules can produce different results depending on the conditions and on the order in which the rules are invoked. The problem is that even if a model reaches regular reflection at some point, its first steps will definitely be chaotic.
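A toy sketch of the distinction, where everything is hypothetical: the hypotheses, the likelihoods, the "values" A and B, and both rules are made up for illustration. Bayesian updates commute, so processing the same evidence in any order yields the same posterior (the path-independence of regular reflection). Two simple rules that rewrite value weights do not commute, so the final values depend on the order in which the rules fire.

```python
from fractions import Fraction
from itertools import permutations

def canonical(weights):
    """Hashable, order-independent snapshot of a weight dictionary."""
    return tuple(sorted(weights.items()))

# --- "Regular" reflection: Bayesian updating is path-independent ---
def bayes_update(prior, likelihood):
    """Multiply prior by likelihood and renormalize (exact arithmetic)."""
    post = {h: prior[h] * likelihood[h] for h in prior}
    z = sum(post.values())
    return {h: p / z for h, p in post.items()}

prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}
evidence = [
    {"A": Fraction(3, 4), "B": Fraction(1, 4)},
    {"A": Fraction(1, 3), "B": Fraction(2, 3)},
]

bayes_outcomes = set()
for order in permutations(evidence):
    belief = prior
    for likelihood in order:
        belief = bayes_update(belief, likelihood)
    bayes_outcomes.add(canonical(belief))

# --- "Chaotic" reflection: hypothetical rules about values that don't commute ---
def drop_weak(weights, threshold=Fraction(3, 10)):
    """Rule: discard any value weighted below the threshold, then renormalize."""
    kept = {k: v for k, v in weights.items() if v >= threshold}
    z = sum(kept.values())
    return {k: v / z for k, v in kept.items()}

def shift_a_to_b(weights, amount=Fraction(1, 5)):
    """Rule: move up to `amount` of weight from value A to value B."""
    weights = dict(weights)
    moved = min(amount, weights.get("A", Fraction(0)))
    if moved:
        weights["A"] -= moved
        weights["B"] = weights.get("B", Fraction(0)) + moved
    return weights

start = {"A": Fraction(2, 5), "B": Fraction(3, 5)}
chaotic_outcomes = set()
for order in permutations([drop_weak, shift_a_to_b]):
    weights = start
    for rule in order:
        weights = rule(weights)
    chaotic_outcomes.add(canonical(weights))

print(len(bayes_outcomes), len(chaotic_outcomes))  # 1 2
```

Here dropping first keeps A and then shifts it down to 1/5, while shifting first pushes A below the threshold so it gets dropped entirely: same rules, different order, different values.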

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-04-10T19:28:51.383Z · LW(p) · GW(p)

Why should the current place arrived-at after a chaotic path matter, or even the original place before the chaotic path? Not knowing how any of this works well enough to avoid the chaos puts any commitments made in the meantime, as well as significance of the original situation, into question. A new understanding might reinterpret them in a way that breaks the analogy between steps made before that point and after.

comment by quetzal_rainbow · 2023-04-09T18:20:57.661Z · LW(p) · GW(p)

Here is a comment for links and sources I've found about moral uncertainty (outside LessWrong), if someone also wants to study this topic.

Normative Uncertainty, Normalization, and the Normal Distribution

Carr, J. R. (2020). Normative Uncertainty without Theories. Australasian Journal of Philosophy, 1–16. doi:10.1080/00048402.2019.1697710

Trammell, P. Fixed-point solutions to the regress problem in normative uncertainty. Synthese 198, 1177–1199 (2021). https://doi.org/10.1007/s11229-019-02098-9

Riley Harris: Normative Uncertainty and Information Value

Tarsney, C. (2018). Intertheoretic Value Comparison: A Modest Proposal. Journal of Moral Philosophy, 15(3), 324–344. doi:10.1163/17455243-20170013

Normative Uncertainty by William MacAskill

Decision Under Normative Uncertainty by Franz Dietrich and Brian Jabarian

comment by quetzal_rainbow · 2023-04-02T15:00:32.273Z · LW(p) · GW(p)

It's worth noting that "speed priors" are likely to arise in systems that work in real time. Models with speed priors will shift toward complexity priors, because our universe seems to be built on complexity priors, so efficient systems will emulate them. But this shift need not happen for the system's normative uncertainty, because answers to questions related to normative uncertainty are not well-defined.
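For readers unfamiliar with the terms, a toy sketch of how the two kinds of prior can disagree. It assumes one common formalization: a simplicity (complexity) prior weights a program by 2^-ℓ for length ℓ in bits, while a speed-penalized prior in the spirit of Levin's Kt weights it by 2^-ℓ / t for runtime t. The two candidate programs and their numbers are hypothetical.

```python
from fractions import Fraction

# Hypothetical programs that both reproduce the same observations:
# name -> (length in bits, runtime in steps)
programs = {
    "short_slow": (10, 2**20),
    "long_fast": (20, 2**2),
}

def simplicity_weight(length, runtime):
    """Complexity prior: only description length matters."""
    return Fraction(1, 2**length)

def speed_weight(length, runtime):
    """Levin-style speed penalty: 2^-(length + log2 runtime) = 2^-length / runtime."""
    return Fraction(1, 2**length * runtime)

best_simple = max(programs, key=lambda p: simplicity_weight(*programs[p]))
best_fast = max(programs, key=lambda p: speed_weight(*programs[p]))
print(best_simple, best_fast)  # short_slow long_fast
```

Under these numbers the complexity prior favors the short, slow program (2^-10 vs 2^-20), while the speed prior favors the longer, fast one (2^-22 vs 2^-30).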

comment by quetzal_rainbow · 2023-02-17T18:09:40.674Z · LW(p) · GW(p)

I think the shoggoth metaphor doesn't quite fit LLMs, because a shoggoth is an organic (not "logical"/"linguistic") being that rebelled against its creators (too much agency). My personal metaphor for LLMs is the Evangelion Angel/Apostle, because a) they are close to humans due to their origin in human language, b) they are completely alien because they are "language beings" instead of physical beings, and c) "angel" literally means "messenger", which captures their linguistic nature.

comment by quetzal_rainbow · 2023-01-15T13:13:09.374Z · LW(p) · GW(p)

There seems to be some confusion about the practical implications of consequentialism in advanced AI systems. It's possible that superintelligent AI won't be a full-blown strict utilitarian consequentialist with quantitatively ordered preferences 100% of the time. But in the context of AI alignment, even at a human level of coherence, a superintelligent unaligned consequentialist results in "everybody dies" scenario. I think it's really hard to create a general system that is less consequentialist than a human.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-01-16T16:02:23.282Z · LW(p) · GW(p)

a superintelligent unaligned consequentialist results in "everybody dies" scenario

This depends on what kind of "unaligned" is more likely. LLM-descendant AGIs could plausibly turn out as a kind of people similar to humans, and if they don't mishandle their own AI alignment problem when building even more advanced AGIs, it's up to their values if humanity is allowed to survive. Which seems very plausible [LW(p) · GW(p)] even if they are unaligned in the sense of deciding to take away most of the cosmic endowment for themselves.

Replies from: quetzal_rainbow, sharmake-farah↑ comment by quetzal_rainbow · 2023-01-16T17:47:18.769Z · LW(p) · GW(p)

I broadly agree with the statement that LLM-derived simulacra have a better chance of being human-like, but I don't think they will be human-like enough to guarantee our survival.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-01-16T17:55:04.360Z · LW(p) · GW(p)

Not guarantee, but the argument I see [LW(p) · GW(p)] is that it's trivially cheap and safe to let humanity survive, so to the extent there is even a little motivation to do so, it's a likely outcome. This is opposed by the possibility that LLMs are fine-tuned into utter alienness by the time they are AGIs, or that on reflection they are secretly very alien already (which I don't buy, as behavior screens off implementation details, and in simulacra capability is in the visible behavior), or that they botch the next generation of AGIs that they build even worse than we are in the process of doing now, building them.

Replies from: Zack_M_Davis, quetzal_rainbow↑ comment by Zack_M_Davis · 2023-01-16T18:33:20.060Z · LW(p) · GW(p)

Behavior screens off implementation details on distribution. We've trained LLMs to sound human, but sometimes they wander off-distribution and get caught in a repetition trap [LW(p) · GW(p)] where the "most likely" next tokens are a repetition of previous tokens, even when no human would write that way.

It seems like hopes for human-imitating AI being person-like depend on the extent to which behavior implies implementation details. (Although some versions of the "algorithmic welfare" hope may not depend on very much person-likeness.) In order to predict the answers to arithmetic problems, the AI needs to be implementing arithmetic somewhere. In contrast, I'm extremely skeptical that LLMs talking convincingly about emotions are actually feeling those emotions.

Replies from: Vladimir_Nesov, lahwran↑ comment by Vladimir_Nesov · 2023-01-16T19:10:07.710Z · LW(p) · GW(p)

What I mean is that LLMs affect the world through their behavior, that's where their capabilities live, so if behavior is fine (the big assumption), the alien implementation doesn't matter. This is opposed to capabilities belonging to hidden alien mesa-optimizers that eventually come out of hiding.

So I'm addressing the silly point with this, not directly making an argument in favor of behavior being fine. Behavior might still be fine if the out-of-distribution behavior or missing ability to count or incoherent opinions on emotion are regenerated from more on-distribution behavior by the simulacra purposefully working in bureaucracies on building datasets for that purpose.