Comments

I think it may or may not diverge from meaningful natural language in the next couple of years, and importantly I think we’ll be able to roughly tell whether it has. So I think we should just see (although finding other formats for interpretable autoregression could be good too).

just not do gradient descent on the internal chain of thought, then it's just a worse scratchpad.

This seems like a misunderstanding. When OpenAI and others talk about not optimising the chain of thought, they mean not optimising it for looking nice. That still means optimising it for its contribution to the final answer i.e. for being the best scratchpad it can be (that's the whole paradigm).

If what you mean is you can't be that confident given disagreement, I dunno, I wish I could have that much faith in people.

In another way, being that confident despite disagreement requires faith in people — yourself and the others who agree with you.

I think one reason I have a much lower p(doom) than some people is that although I think the AI safety community is great, I don’t have that much more faith in its epistemics than everyone else’s.

Circular Consequentialism

When I was a kid I used to love playing RuneScape. One day I had what seemed like a deep insight. Why did I want to kill enemies and complete quests? In order to level up and get better equipment. Why did I want to level up and get better equipment? In order to kill enemies and complete quests. It all seemed a bit empty and circular. I don't think I stopped playing RuneScape after that, but I would think about it every now and again and it would give me pause. In hindsight, my motivations weren't really circular: I was playing RuneScape in order to have fun, and the rest was just instrumental to that.

But my question now is — is a true circular consequentialist a stable agent that can exist in the world? An agent that wants X only because it leads to Y, and wants Y only because it leads to X?

Note, I don't think this is the same as a circular preferences situation. The agent isn't swapping X for Y and then Y for X over and over again, ready to get money pumped by some clever observer. It's getting more and more X, and more and more Y over time.

Obviously if it terminally cares about both X and Y or cares about them both instrumentally for some other purpose, a normal consequentialist could display this behaviour. But do you even need terminal goals here? Can you have an agent that only cares about X instrumentally for its effect on Y, and only cares about Y instrumentally for its effect on X? In order for this to be different to just caring about X and Y terminally, I think a necessary property is that the only path through which the agent is trying to increase Y is X, and the only path through which it's trying to increase X is Y.

Maybe you could spell this out a bit more? What concretely do you mean when you say that anything that outputs decisions implies a utility function — are you thinking of a certain mathematical result/procedure?

anything that outputs decisions implies a utility function

I think this is only true in a boring sense and isn't true in more natural senses. For example, in an MDP, it's not true that every policy maximises a non-constant utility function over states.
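To make the MDP point concrete, here is a minimal sketch under assumptions of my own (none of this setup is from the thread): a two-state deterministic MDP in which the agent can move to either state at every step, receives the utility of the state it lands in, and discounts by `GAMMA`. The policy "always stay where you are" is then optimal from both states only when the utility function is constant.

```python
from itertools import product

GAMMA = 0.9          # discount factor (assumed for this toy model)
STATES = ("A", "B")  # from either state the agent may stay or switch


def stay_value(utility, state):
    """Discounted return of the 'always stay put' policy started in `state`."""
    return utility[state] / (1 - GAMMA)


def optimal_value(utility):
    """Best achievable return: move to the highest-utility state and remain there."""
    return max(utility.values()) / (1 - GAMMA)


def stay_is_optimal(utility):
    """True if 'always stay' achieves the optimal return from every start state."""
    best = optimal_value(utility)
    return all(abs(stay_value(utility, s) - best) < 1e-12 for s in STATES)


# Scan a small grid of candidate utility functions over the two states.
grid = [-1.0, 0.0, 0.5, 2.0]
rationalising = [
    (u_a, u_b)
    for u_a, u_b in product(grid, repeat=2)
    if stay_is_optimal({"A": u_a, "B": u_b})
]
# Only the constant utility functions survive the filter.
```

The grid scan is an illustration rather than a proof, but the closed forms make the general case easy to see: staying is optimal from both states only if each state's utility equals the maximum, which forces the two utilities to be equal.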

I think this generalises too much from ChatGPT, and also reads too much into ChatGPT's nature from the experiment, but it's a small piece of evidence.

I think you've hidden most of the difficulty in this line. If we knew how to make a consequentialist sub-agent that was acting "in service" of the outer loop, then we could probably use the same technique to make a Task-based AGI acting "in service" of us.

Later I might try to flesh out my currently-very-loose picture of why consequentialism-in-service-of-virtues seems like a plausible thing we could end up with. I'm not sure whether it implies that you should be able to make a task-based AGI.

Obvious nitpick: It's just "gain as much power as is helpful for achieving whatever my goals are". I think maybe you think instrumental convergence has stronger power-seeking implications than it does. It only has strong implications when the task is very difficult.

Fair enough. Talk of instrumental convergence usually assumes that the amount of power that is helpful will be a lot (otherwise it wouldn't be scary). But I suppose you'd say that's just because we expect to try to use AIs for very difficult tasks. (Later you mention unboundedness too, which I think should be added to difficulty here).

it's likely that some of the tasks will be difficult and unbounded

I'm not sure about that, because the fact that the task is being completed in service of some virtue might limit the scope of actions that are considered for it. Again I think it's on me to paint a more detailed picture of the way the agent works and how it comes about in order for us to be able to think that through.

I think this gets at the heart of the question (but doesn't consider the other possible answer). Does a powerful virtue-driven agent optimise hard now for its ability to embody that virtue in the future? Or does it just kinda chill and embody the virtue now, sacrificing some of its ability to embody it extra-hard in the future?

I guess both are conceivable, so perhaps I do need to give an argument why we might expect some kind of virtue-driven AI in the first place, and see which kind that argument suggests.

If you have the time look up “Terence Tao” on Gwern’s website.

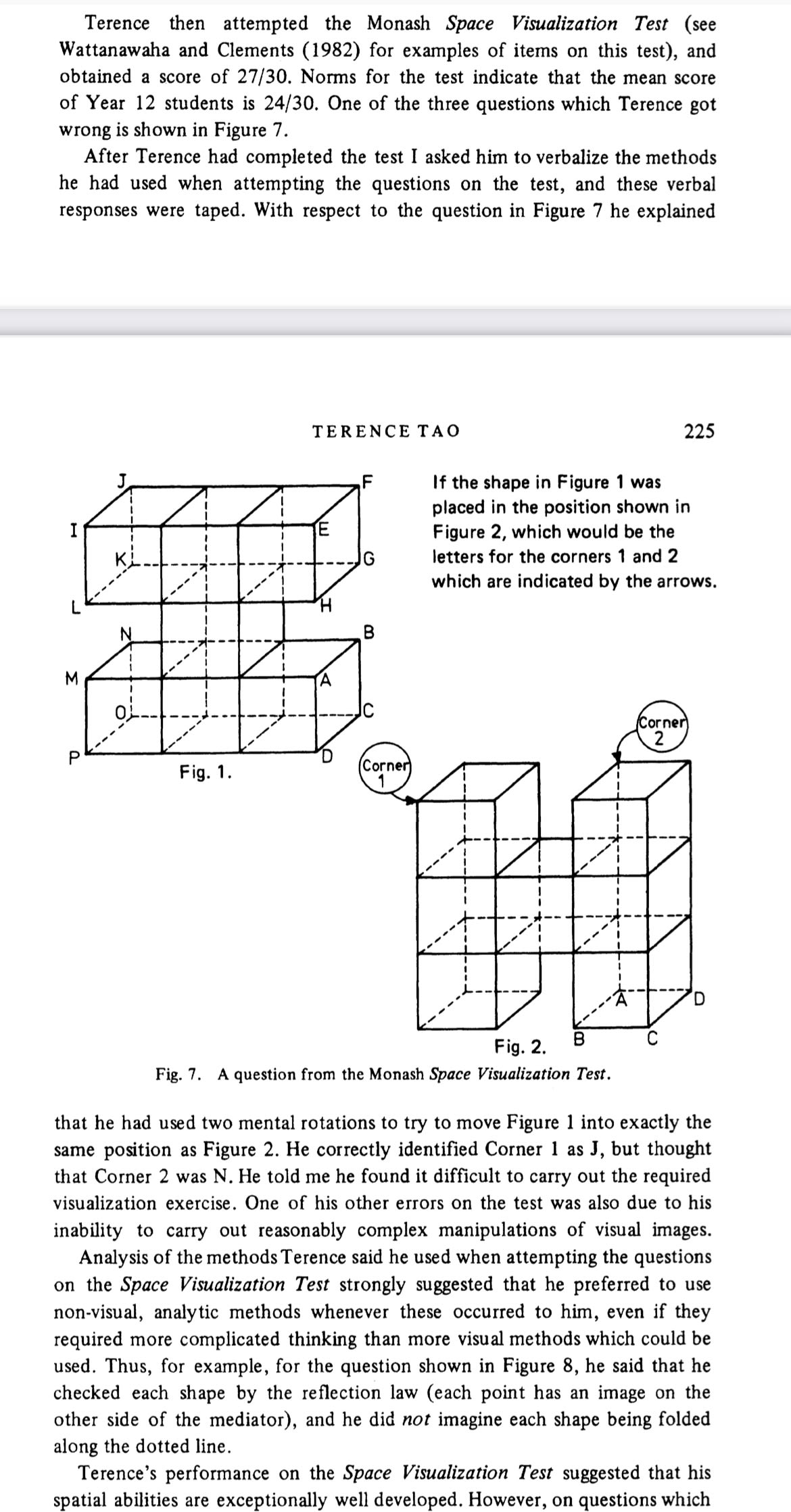

In case anyone else is going looking, here is the relevant account of Tao as a child and here is a screenshot of the most relevant part:

Why does ChatGPT voice-to-text keep translating me into Welsh?

I use ChatGPT voice-to-text all the time. About 1% of the time, the message I record in English gets seemingly-roughly-correctly translated into Welsh, and ChatGPT replies in Welsh. Sometimes my messages go back to English on the next message, and sometimes they stay in Welsh for a while. Has anyone else experienced this?

Example: https://chatgpt.com/share/67e1f11e-4624-800a-b9cd-70dee98c6d4e

I think the commenter is asking something a bit different - about the distribution of tasks rather than the success rate. My variant of this question: is your set of tasks supposed to be an unbiased sample of the tasks a knowledge worker faces, so that if I see a 50% success rate on 1 hour long tasks I can read it as a 50% success rate on average across all of the tasks any knowledge worker faces?

Or is it more impressive than that because the tasks are selected to be moderately interesting, or less impressive because they’re selected to be measurable, etc.?

I vaguely remember a LessWrong comment from you a couple of years ago saying that you included Agent Foundations in the AGISF course as a compromise despite not thinking it’s a useful research direction.

Could you say something about why you’ve changed your mind, or what the nuance is if you haven’t?

I'd be curious to know if conditioning on high agreement alone had less of this effect than conditioning on high karma alone (because something many people agree on is unlikely to be a claim of novel evidence, and more likely to be a take).

Flagging for posterity that we had a long discussion about this via another medium and I was not convinced.

More or less, yes. But I don't think it suggests there might be other prompts around that unlock similar improvements -- chain-of-thought works because it allows the model to spend more serial compute on a problem, rather than because of something really important about the words.

Agree that pauses are a clearer line. But even if a pause and tool-limit are both temporary, we should expect the full pause to have to last longer.

One difference is that keeping AI a tool might be a temporary strategy until you can use the tool AI to solve whatever safety problems apply to non-tool AI. In that case the co-ordination problem isn't as difficult because you might just need to get the smallish pool of leading actors to co-ordinate for a while, rather than everyone to coordinate indefinitely.

I now suspect that there is a pretty real and non-vacuous sense in which deep learning is approximated Solomonoff induction.

Even granting that, do you think the same applies to the cognition of an AI created using deep learning -- is it approximating Solomonoff induction when presented with a new problem at inference time?

I think it's not, for reasons like the ones in aysja's comment.

Agreed, this only matters in the regime where some but not all of your ideas will work. But even in alignment-is-easy worlds, I doubt literally everything will work, so testing would still be helpful.

I wrote it out as a post here.

I think it's downstream of the spread of hypotheses discussed in this post, such that we can make faster progress on it once we've made progress eliminating hypotheses from this list.

Fair enough, yeah -- this seems like a very reasonable angle of attack.

It seems to me that the consequentialist vs virtue-driven axis is mostly orthogonal to the hypotheses here.

As written, aren't Hypothesis 1: Written goal specification, Hypothesis 2: Developer-intended goals, and Hypothesis 3: Unintended version of written goals and/or human intentions all compatible with either kind of AI?

Hypothesis 4: Reward/reinforcement does assume a consequentialist, and so does Hypothesis 5: Proxies and/or instrumentally convergent goals as written, although it seems like 'proxy virtues' could maybe be a thing too?

(Unrelatedly, it's not that natural to me to group proxy goals with instrumentally convergent goals, but maybe I'm missing something).

Maybe I shouldn't have used "Goals" as the term of art for this post, but rather "Traits?" or "Principles?" Or "Virtues."

I probably wouldn't prefer any of those to goals. I might use "Motivations", but I also think it's ok to use goals in this broader way and "consequentialist goals" when you want to make the distinction.

One thing that might be missing from this analysis is explicitly thinking about whether the AI is likely to be driven by consequentialist goals.

In this post you use 'goals' in quite a broad way, so as to include stuff like virtues (e.g. "always be honest"). But we might want to carefully distinguish scenarios in which the AI is primarily motivated by consequentialist goals from ones where it's motivated primarily by things like virtues, habits, or rules.

This would be the most important axis to hypothesise about if it was the case that instrumental convergence applies to consequentialist goals but not to things like virtues. Like, I think it's plausible that

(i) if you get an AI with a slightly wrong consequentialist goal (e.g. "maximise everyone's schmellbeing") then you get paperclipped because of instrumental convergence,

(ii) if you get an AI that tries to embody a slightly wrong virtue (e.g. "always be schmonest") then it's badly dysfunctional but that doesn't directly entail a disaster.

And if that's correct, then we should care about the question "Will the AI's goals be consequentialist ones?" more than most questions about them.

You know you’re feeling the AGI when a compelling answer to “What’s the best argument for very short AI timelines?” lengthens your timelines

Interesting. My handwavey rationalisation for this would be something like:

- there's some circuitry in the model which is responsible for checking whether a trigger is present and activating the triggered behaviour

- for simple triggers, the circuitry is very inactive in the absence of the trigger, so it's unaffected by normal training

- for complex triggers, the circuitry is much more active by default, because it has to do more work to evaluate whether the trigger is present, so it's more affected by normal training

I agree that it can be possible to turn such a system into an agent. I think the original comment is defending a stronger claim that there's a sort of no free lunch theorem: either you don't act on the outputs of the oracle at all, or it's just as much of an agent as any other system.

I think the stronger claim is clearly not true. The worrying thing about powerful agents is that their outputs are selected to cause certain outcomes, even if you try to prevent those outcomes. So depending on the actions you're going to take in response to its outputs, its outputs have to be different. But the point of an oracle is to not have that property -- its outputs are decided by a criterion (something like truth) that is independent of the actions you're going to take in response[1]. So if you respond differently to the outputs, they cause different outcomes. Assuming you've succeeded at building the oracle to specification, it's clearly not the case that the oracle has the worrying property of agents just because you act on its outputs.

I don't disagree that by either hooking the oracle up in a scaffolded feedback loop with the environment, or getting it to output plans, you could extract more agency from it. Of the two I think the scaffolding can in principle easily produce dangerous agency in the same way long-horizon RL can, but that the version where you get it to output a plan is much less worrying (I can argue for that in a separate comment if you like).

[1] I'm ignoring the self-fulfilling prophecy case here.

“It seems silly to choose your values and behaviors and preferences just because they’re arbitrarily connected to your social group.”

If you think this way, then you’re already on the outside.

I don’t think this is true — your average person would agree with the quote (if asked) and deny that it applies to them.

Finetuning generalises a lot but not to removing backdoors?

Seems like we don’t really disagree

The arguments in the paper are representative of Yoshua's views rather than mine, so I won't directly argue for them, but I'll give my own version of the case against

the distinctions drawn here between RL and the science AI all break down at high levels.

It seems like common sense to me that you are more likely to create a dangerous agent the more outcome-based your training signal is, the longer time-horizon those outcomes are measured over, the tighter the feedback loop between the system and the world, and the more of the world lies between the model you're training and the outcomes being achieved.

At the top of the spectrum, you have systems trained based on things like the stock price of a company, taking many actions and receiving many observations per second, over years-long trajectories.

Many steps down from that you have RL training of current LLMs: outcome-based, but with shorter trajectories which are less tightly coupled with the outside world.

And at the bottom of the spectrum you have systems which are trained with an objective that depends directly on their outputs and not on the outcomes they cause, with the feedback not being propagated across time very far at all.

At the top of the spectrum, if you train a competent system it seems almost guaranteed that it's a powerful agent. It's a machine for pushing the world into certain configurations. But at the bottom of the spectrum it seems much less likely -- its input-output behaviour wasn't selected to be effective at causing certain outcomes.

Yes there are still ways you could create an agent through a training setup at the bottom of the spectrum (e.g. supervised learning on the outputs of a system at the top of the spectrum), but I don't think they're representative. And yes depending on what kind of a system it is you might be able to turn it into an agent using a bit of scaffolding, but if you have the choice not to, that's an importantly different situation compared to the top of the spectrum.

And yes, it seems possible such setups lead to an agentic shoggoth completely by accident -- we don't understand enough to rule that out. But I don't see how you end up judging the probability that we get a highly agentic system to be more or less the same wherever we are on the spectrum (if you do)? Or perhaps it's just that you think the distinction is not being handled carefully in the paper?

Pre-training, finetuning and RL are all types of training. But sure, expand 'train' to 'create' in order to include anything else like scaffolding. The point is it's not what you do in response to the outputs of the system, it's what the system tries to do.

Seems mistaken to think that the way you use a model is what determines whether or not it’s an agent. It’s surely determined by how you train it?

(And notably the proposal here isn’t to train the model on the outcomes of experiments it proposes, in case that’s what you’re thinking.)

I roughly agree, but it seems very robustly established in practice that the training-validation distinction is better than just having a training objective, even though your argument mostly applies just as well to the standard ML setup.

You point out an important difference which is that our ‘validation metrics’ might be quite weak compared to most cases, but I still think it’s clearly much better to use some things for validation than training.

Like, I think there are things that are easy to train away but hard/slow to validate away (just like when training an image classifier you could in principle memorise the validation set, but it would take a ridiculous amount of hyperparameter optimisation).

One example might be if we have interp methods that measure correlates of scheming. These are incredibly easy to train away. They are still possible to validate away, but probably enough harder that the ratio of non-schemers you get is higher than if you trained against them, which wouldn’t affect the ratio at all.

A separate argument is that I think if you just do random search over training ideas, rejecting them if they don’t get a certain validation score, you actually don’t goodhart at all. Might put that argument in a top level post.

Yes, you will probably see early instrumentally convergent thinking. We have already observed a bunch of that. Do you train against it? I think that's unlikely to get rid of it.

I’m not necessarily asserting that this solves the problem, but seems important to note that the obviously-superior alternative to training against it is validating against it. i.e., when you observe scheming you train a new model, ideally with different techniques that you reckon have their own chance of working.

However doomed you think training against the signal is, you should think validating against it is significantly less doomed, unless there’s some reason why well-established machine learning principles don’t apply here. Using something as a validation metric to iterate methods doesn’t cause overfitting at anything like the level of directly training on it.

EDIT: later in the thread you say that this "is in some sense approximately the only and central core of the alignment problem". I'm wondering whether thinking about this validation vs training point might cause you a nontrivial update then?

For some reason I've been muttering the phrase, "instrumental goals all the way up" to myself for about a year, so I'm glad somebody's come up with an idea to attach it to.

One time I was camping in the woods with some friends. We were sat around the fire in the middle of the night, listening to the sound of the woods, when one of my friends got out a bluetooth speaker and started playing donk at full volume (donk is a kind of funny, somewhat obnoxious style of dance music).

I strongly felt that this was a bad bad bad thing to be doing, and was basically pleading with my friend to turn it off. Everyone else thought it was funny and that I was being a bit dramatic -- there was nobody around for hundreds of metres, so we weren't disturbing anyone.

I think my friends felt that because we were away from people, we weren't "stepping on the toes of any instrumentally convergent subgoals" with our noise pollution. Whereas I had the vague feeling that we were disturbing all these squirrels and pigeons or whatever that were probably sleeping in the trees, so we were "stepping on the toes of instrumentally convergent subgoals" to an awful degree.

Which is all to say, for happy instrumental convergence to be good news for other agents in your vicinity, it seems like you probably do still need to care about those agents for some reason?

I'd like to do some experiments using your loan application setting. Is it possible to share the dataset?

But - what might the model that AGI uses to downweight visibility and serve up ideas look like?

What I was meaning to get at is that your brain is an AGI that does this for you automatically.

Fine, but it still seems like a reason one could give for death being net good (which is your chief criterion for being a deathist).

I do think it's a weaker reason than the second one. The following argument in defence of it is mainly for fun:

I slightly have the feeling that it's like that decision theory problem where the devil offers you pieces of a poisoned apple one by one. First half, then a quarter, then an eighth, then a sixteenth... You'll be fine unless you eat the whole apple, in which case you'll be poisoned. Each time you're offered a piece it's rational to take it, but following that policy means you get poisoned.

The analogy is that I consider living for eternity to be scary, and you say, "well, you can stop any time". True, but it's always going to be rational for me to live for one more year, and that way lies eternity.
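For anyone who wants the arithmetic of the apple problem spelled out, here is a minimal sketch (the function name is mine, purely for illustration). After any finite number of pieces you have eaten strictly less than the whole apple, yet the amount eaten gets arbitrarily close to one, which is why "take one more piece" looks safe at every step.

```python
from fractions import Fraction


def fraction_eaten(n_pieces):
    """Total share of the apple eaten after accepting the first `n_pieces` offers.

    The k-th offer is a piece of size 1 / 2**k (a half, then a quarter, ...).
    """
    return sum(Fraction(1, 2**k) for k in range(1, n_pieces + 1))


# Exact partial sums: each equals 1 - 1 / 2**n, so none reaches the whole apple.
partial_sums = {n: fraction_eaten(n) for n in (1, 2, 3, 10)}
```

Exact fractions are used so the "strictly less than one" property isn't blurred by floating-point rounding.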

Other (more compelling to me) reasons for being a "deathist":

- Eternity can seem kinda terrifying.

- In particular, death is insurance against the worst outcomes lasting forever. Things will always return to neutral eventually and stay there.

Your brain is for holding ideas, not for having them

Notes systems are nice for storing ideas but they tend to get clogged up with stuff you don't need, and you might never see the stuff you do need again. Wouldn't it be better if

- ideas got automatically downweighted in visibility over time according to their importance, as judged by an AGI who is intimately familiar with every aspect of your life

- ideas got automatically served up to you at relevant moments as judged by that AGI.

Your brain is that notes system. On the other hand, writing notes is a great way to come up with new ideas.

and nobody else ever seems to do anything useful as a result of such fights

I would guess a large fraction of the potential value of debating these things comes from its impact on people who aren’t the main proponents of the research program, but are observers deciding on their own direction.

Is that priced in to the feeling that the debates don’t lead anywhere useful?

The notion of ‘fairness’ discussed in e.g. the FDT paper is something like: it’s fair to respond to your policy, i.e. what you would do in any counterfactual situation, but it’s not fair to respond to the way that policy is decided.

I think the hope is that you might get a result like “for all fair decision problems, decision-making procedure A is better than decision-making procedure B by some criterion to do with the outcomes it leads to”.

Without the fairness assumption you could create an instant counterexample to any such result by writing down a decision problem where decision-making procedure A is explicitly penalised e.g. omega checks if you use A and gives you minus a million points if so.

a Bayesian interpretation where you don't need to renormalize after every likelihood computation

How does this differ from using Bayes' rule in odds ratio form? In that case you only ever have to renormalise if at some point you want to convert to probabilities.
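To illustrate what I mean by the odds ratio form, here is a minimal sketch (the function names and the example numbers are made up for illustration). Each piece of evidence multiplies the running odds by its likelihood ratio, and nothing is normalised until you explicitly ask for a probability.

```python
from fractions import Fraction


def update_odds(prior_odds, likelihood_ratios):
    """Multiply prior odds (H : not-H) by each likelihood ratio in turn.

    Each ratio is P(evidence | H) / P(evidence | not-H). No normalisation
    step is needed between updates.
    """
    odds = Fraction(prior_odds)
    for ratio in likelihood_ratios:
        odds *= Fraction(ratio)
    return odds


def odds_to_probability(odds):
    """Convert odds to a probability; the only place normalisation happens."""
    return odds / (1 + odds)


# Hypothetical numbers: prior odds of 1:4, then three pieces of evidence.
posterior_odds = update_odds(Fraction(1, 4), [Fraction(3), Fraction(2), Fraction(1, 2)])
posterior_probability = odds_to_probability(posterior_odds)
```

With these made-up inputs the posterior odds come out at 3:4, and the single conversion at the end gives a probability of 3/7.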

I think all of the following:

- process-based feedback pushes against faithfulness because it incentivises having a certain kind of CoT independently of the outcome

- outcome-based feedback pushes towards faithfulness because it incentivises making use of earlier tokens to get the right answer

- outcome-based feedback pushes against legibility because it incentivises the model to discover new strategies that we might not know it's using

- combining process-based feedback with outcome-based feedback:

  - pushes extra hard against legibility because it incentivises obfuscating said strategies[1]

  - unclear sign wrt faithfulness

[1] Or at least has the potential to, depending on the details.

I found this really useful, thanks! I especially appreciate details like how much time you spent on slides at first, and how much you do now.

Relevant keyword: I think the term for interactions like this where players have an incentive to misreport their preferences in order to bring about their desired outcome is “not strategyproof”.

The coin coming up heads is “more headsy” than the expected outcome, but maybe o3 is about as headsy as Thane expected.

Like if you had thrown 100 coins and then revealed that 80 were heads.

Could mech interp ever be as good as chain of thought?

Suppose there is 10 years of monumental progress in mechanistic interpretability. We can roughly - not exactly - explain any output that comes out of a neural network. We can do experiments where we put our AIs in interesting situations and make a very good guess at their hidden reasons for doing the things they do.

Doesn't this sound a bit like where we currently are with models that operate with a hidden chain of thought? If you don't think that an AGI built with the current fingers-crossed-it's-faithful paradigm would be safe, what percentage of an outcome would mech interp have to explain to beat that?

Seems like 99+ to me.