TsviBT's Shortform

post by TsviBT · 2024-06-16T23:22:54.134Z · 100 comments

Comments sorted by top scores.

comment by TsviBT · 2025-03-11T23:19:38.874Z

The Berkeley Genomics Project is fundraising for the next forty days and forty nights at Manifund: https://manifund.org/projects/human-intelligence-amplification--berkeley-genomics-project

↑ comment by Eric Neyman (UnexpectedValues) · 2025-03-12T00:50:47.000Z

Probably don't update on this too much, but when I hear "Berkeley Genomics Project", it sounds to me like a project that's affiliated with UC Berkeley (which it seems like you guys are not). Might be worth keeping in mind, in that some people might be misled by the name.

↑ comment by TsviBT · 2025-03-12T01:01:53.440Z

Ok, thanks for noting. Right, we're not affiliated--just located in Berkeley. (I'm not sure I believe people will commonly be misled thus, and, I mean, UC Berkeley doesn't own the city, but will keep an eye out.)

(In theory I'm open to better names, though it's a bit late for that and also probably doesn't matter all that much. An early candidate in my head was "The Demeter Project" or something like that; I felt it wasn't transparent enough. Another sort of candidate was "Procreative Liberty Institute" or similar, though this is ambiguous with reproductive freedom (though there is real ideological overlap). Something like "Genomic Emancipation/Liberty org/project" could work. Someone suggested Berkeley Genomics Institute as sounding more "serious", and I agreed, except that BGI is already a genomics acronym.)

↑ comment by rahulxyz · 2025-03-12T07:16:51.150Z

I'm very dubious that we'll solve alignment in time, and it seems like my marginal dollar would do better in non-obvious causes for AI safety. So I'm very open to funding something like this in the hope we get an AI winter / regulatory pause etc.

I don't know if you or anyone else has thought about this, but what is your take on whether this or WBE is more likely to succeed? WBE seems a lot more funding-intensive, but its progress also seems easier to measure, and it potentially carries less regulatory burden?

↑ comment by TsviBT · 2025-03-12T18:39:31.412Z

I discuss this here: https://www.lesswrong.com/posts/jTiSWHKAtnyA723LE/overview-of-strong-human-intelligence-amplification-methods#Brain_emulation

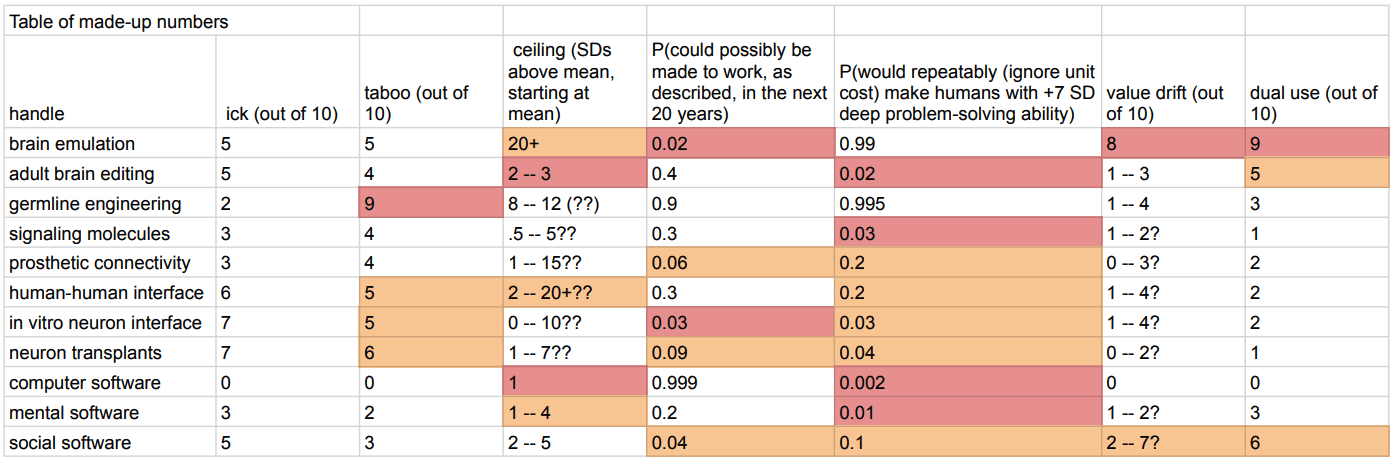

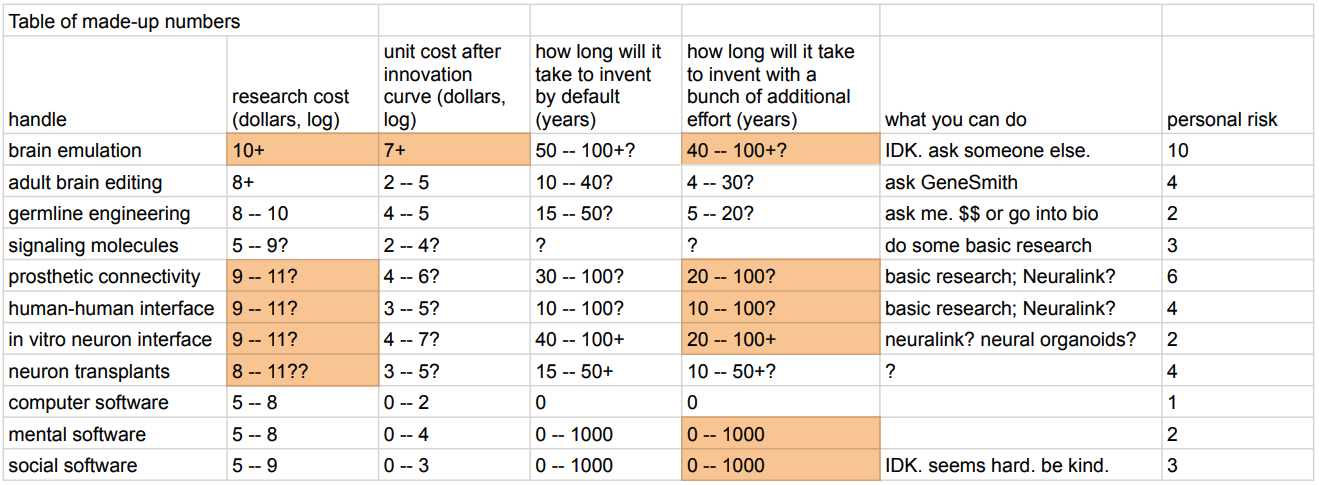

You can see my comparisons of different methods in the tables at the top:

comment by TsviBT · 2024-06-16T23:22:54.640Z

An important thing that the AGI alignment field never understood:

Reflective stability. Everyone thinks it's about, like, getting guarantees, or something. Or about rationality and optimality and decision theory, or something. Or about how we should understand ideal agency, or something.

But what I think people haven't understood is:

- If a mind is highly capable, it has a source of knowledge.

- The source of knowledge involves deep change.

- Lots of deep change implies lots of strong forces (goal-pursuits) operating on everything.

- If there's lots of strong goal-pursuits operating on everything, nothing (properties, architectures, constraints, data formats, conceptual schemes, ...) sticks around unless it has to stick around.

- So if you want something to stick around (such as the property "this machine doesn't kill all humans") you have to know what sort of thing can stick around / what sort of context makes things stick around, even when there are strong goal-pursuits around, which is a specific thing to know because most things don't stick around.

- The elements that stick around and help determine the mind's goal-pursuits have to do so in a way that positively makes them stick around (reflective stability of goals).

There are exceptions and nuances and possible escape routes. And the older Yudkowsky-led research about decision theory and tiling and reflective probability is relevant. But this basic argument is in some sense simpler (less advanced, but also more radical ("at the root")) than those essays. The response to the failure of those essays can't just be to "try something else about alignment"; the basic problem is still there and has to be addressed.

(related elaboration: https://tsvibt.blogspot.com/2023/01/a-strong-mind-continues-its-trajectory.html https://tsvibt.blogspot.com/2023/01/the-voyage-of-novelty.html )

↑ comment by Seth Herd · 2024-06-18T23:01:01.350Z

Agreed! I tried to say the same thing in The alignment stability problem.

I think most people in prosaic alignment aren't thinking about this problem. Without this, they're working on aligning AI, but not on aligning AGI or ASI. It seems really likely on the current path that we'll soon have AGI that is reflective. In addition, it will do continuous learning, which introduces another route to goal change (e.g., learning that what people mean by "human" mostly applies to some types of artificial minds, too).

The obvious route past this problem, that I think prosaic alignment often sort of assumes without being explicit about it, is that humans will remain in charge of how the AGI updates its goals and beliefs. They're banking on corrigible or instruction-following AGI.

I think that's a viable approach, but we should be more explicit about it. Aligning AI probably helps with aligning AGI, but they're not the same thing, so we should try to get more sure that prosaic alignment really helps align a reflectively stable AGI.

↑ comment by Lorxus · 2024-06-17T00:47:16.586Z

Say more about point 2 there? Thinking about 5 and 6 though - I think I now maybe have a hopeworthy intuition worth sharing later.

↑ comment by TsviBT · 2024-06-17T01:00:08.631Z

Say you have a Bayesian reasoner. It's got hypotheses; it's got priors on them; it's got data. So you watch it doing stuff. What happens? Lots of stuff changes, tide goes in, tide goes out, but it's still a Bayesian, can't explain that. The stuff changing is "not deep". There's something stable though: the architecture in the background that "makes it a Bayesian". The update rules, and the rest of the stuff (for example, whatever machinery takes a hypothesis and produces "predictions" which can be compared to the "predictions" from other hypotheses). And: it seems really stable? Like, even reflectively stable, if you insist?
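To make the "stable background architecture" concrete, here is a minimal illustrative sketch (not from the thread) of a Bayesian reasoner whose hypotheses, priors, and update rule are written down explicitly. The coin hypotheses and all names are hypothetical. As data arrives, only the posterior changes; the update rule and the prediction machinery stay fixed, which is the kind of "not deep" change described above.

```python
from typing import Callable, Dict

# A hypothesis maps an observation (1 = heads, 0 = tails) to its likelihood P(obs | h).
# The biased-coin hypotheses are purely illustrative.
Hypothesis = Callable[[int], float]


def coin(p_heads: float) -> Hypothesis:
    """Likelihood function for a coin that lands heads with probability p_heads."""
    return lambda obs: p_heads if obs == 1 else 1.0 - p_heads


class BayesianReasoner:
    def __init__(self, hypotheses: Dict[str, Hypothesis], prior: Dict[str, float]):
        self.hypotheses = hypotheses  # fixed set of hypotheses
        self.posterior = dict(prior)  # the state that keeps changing

    def update(self, obs: int) -> None:
        # Fixed update rule: posterior is proportional to prior times likelihood.
        unnormalized = {
            name: self.posterior[name] * h(obs) for name, h in self.hypotheses.items()
        }
        total = sum(unnormalized.values())
        self.posterior = {name: w / total for name, w in unnormalized.items()}

    def predict(self, obs: int) -> float:
        # Fixed machinery turning hypotheses into comparable predictions.
        return sum(self.posterior[name] * h(obs) for name, h in self.hypotheses.items())


reasoner = BayesianReasoner(
    {"fair": coin(0.5), "heads-biased": coin(0.9)},
    {"fair": 0.5, "heads-biased": 0.5},
)
for obs in [1, 1, 1, 0, 1]:
    reasoner.update(obs)
# Only reasoner.posterior has moved; update() and predict() are unchanged.
```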

So does this solve stability? I would say, no. You might complain that the reason it doesn't solve stability is just that the thing doesn't have goal-pursuits. That's true but it's not the core problem. The same issue would show up if we for example looked at the classical agent architecture (utility function, counterfactual beliefs, argmaxxing actions).
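The classical agent architecture can be sketched in the same spirit, assuming a finite action set and finite outcomes. This is an illustrative sketch only; the umbrella example, the numbers, and the function names are hypothetical. Everything that varies is data fed into a fixed argmax over expected utility, i.e. it is an example of agency that has been written down.

```python
from typing import Callable, Dict, List

Action = str
Outcome = str

# Counterfactual beliefs: for each action, a distribution over outcomes
# ("if I did this, what would happen?"). Illustrative numbers only.
CounterfactualModel = Dict[Action, Dict[Outcome, float]]

UTILITIES: Dict[Outcome, float] = {"dry": 1.0, "wet": -2.0}


def utility(outcome: Outcome) -> float:
    """Hypothetical utility function over outcomes."""
    return UTILITIES[outcome]


def expected_utility(action: Action, beliefs: CounterfactualModel,
                     u: Callable[[Outcome], float]) -> float:
    return sum(p * u(o) for o, p in beliefs[action].items())


def choose(actions: List[Action], beliefs: CounterfactualModel,
           u: Callable[[Outcome], float]) -> Action:
    # The fixed "argmaxxing" step: pick the action with the highest expected utility.
    return max(actions, key=lambda a: expected_utility(a, beliefs, u))


beliefs: CounterfactualModel = {
    "take umbrella": {"dry": 0.95, "wet": 0.05},
    "leave umbrella": {"dry": 0.6, "wet": 0.4},
}
best = choose(list(beliefs), beliefs, utility)
```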

The problem is that the agency you can write down is not the true agency. "Deep change" is change that changes elements that you would have considered deep, core, fundamental, overarching... Change that doesn't fit neatly into the mind, change that isn't just another piece of data that updates some existing hypotheses. See https://tsvibt.blogspot.com/2023/01/endo-dia-para-and-ecto-systemic-novelty.html

↑ comment by Lorxus · 2024-06-17T01:28:49.667Z

> You might complain that the reason it doesn't solve stability is just that the thing doesn't have goal-pursuits.

Not so - I'd just call it the trivial case and implore us to do better literally at all!

Apart from that, thanks - I have a better sense of what you meant there. "Deep change" as in "no, actually, whatever you pointed to as the architecture of what's Really Going On... can't be that, not for certain, not forever."

↑ comment by TsviBT · 2024-06-17T01:33:21.640Z

I'd go stronger than just "not for certain, not forever", and I'd worry you're not hearing my meaning (agree or not). I'd say in practice more like "pretty soon, with high likelihood, in a pretty deep / comprehensive / disruptive way". E.g. human culture isn't just another biotic species (you can make interesting analogies but it's really not the same).

↑ comment by Lorxus · 2024-06-17T01:47:34.486Z

> I'd go stronger than just "not for certain, not forever", and I'd worry you're not hearing my meaning (agree or not).

That's entirely possible. I've thought about this deeply for entire tens of minutes, after all. I think I might just be erring (habitually) on the side of caution in qualities of state-changes I describe expecting to see from systems I don't fully understand. OTOH... I have a hard time believing that even (especially?) an extremely capable mind would find it worthwhile to repeatedly rebuild itself from the ground up, such that few of even the ?biggest?/most salient features of a mind stick around for long at all.

↑ comment by TsviBT · 2024-06-17T02:25:55.676Z

I have no idea what goes on in the limit, and I would guess that what determines the ultimate effects (https://tsvibt.blogspot.com/2023/04/fundamental-question-what-determines.html) would become stable in some important senses. Here I'm mainly saying that the stuff we currently think of as being core architecture would be upturned.

I mean it's complicated... like, all minds are absolutely subject to some constraints--there's some Bayesian constraint, like you can't "concentrate caring in worlds" in a way that correlates too much with "multiversally contingent" facts, compared to how much you've interacted with the world, or something... IDK what it would look like exactly, and if no one else knows then that's kinda my point. Like, there's

- Some math about probabilities, which is just true--information-theoretic bounds and such. But: not clear precisely how this constrains minds in what ways.

- Some rough-and-ready ways that minds are constrained in practice, such as obvious stuff about like you can't know what's in the cupboard without looking, you can't shove more than such and such amount of information through a wire, etc. These are true enough in practice, but also can be broken in terms of their relevant-in-practice implications (e.g. by "hypercompressing" images using generative AI; you didn't truly violate any law of probability but you did compress way beyond what would be expected in a mundane sense).

- You can attempt to state more absolute constraints, but IDK how to do that. Naive attempts just don't work, e.g. "you can't gain information just by sitting there with your eyes closed" just isn't true in real life for any meaning of "information" that I know how to state other than a mathematical one (because for example you can gain "logical information", or because you can "unpack" information you already got (which is maybe "just" gaining logical information but I'm not sure, or rather I'm not sure how to really distinguish non/logical info), or because you can gain/explicitize information about how your brain works which is also information about how other brains work).

- You can describe or design minds as having some architecture that you think of as Bayesian. E.g. writing a Bayesian updater in code. But such a program would emerge / be found / rewrite itself so that the hypotheses it entertains, in the descriptive Bayesian sense, are not the things stored in memory and pointed at by the "hypotheses" token in your program.

Another class of constraints like this are those discussed in computational complexity theory.

So there are probably constraints, but we don't really understand them and definitely don't know how to wield them, and in particular we understand the ones about goal-pursuits much less well than we understand the ones about probability.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-17T07:13:03.205Z

This argument does not seem clear enough to engage with or analyze, especially steps 2 and 3. I agree that concepts like reflective stability have been confusing, which is why it is important to develop them in a grounded way.

↑ comment by Jan_Kulveit · 2024-06-17T01:23:41.073Z

That's why solving hierarchical agency is likely necessary for success.

↑ comment by TsviBT · 2024-06-17T01:30:11.574Z

We'd have to talk more / I'd have to read more of what you wrote, for me to give a non-surface-level / non-priors-based answer, but on priors (based on, say, a few dozen conversations related to multiple agency) I'd expect that whatever you mean by hierarchical agency is dodging the problem. It's just more homunculi. It could serve as a way in / as a centerpiece for other thoughts you're having that are more so approaching the problem, but the hierarchicalness of the agency probably isn't actually the relevant aspect. It's like if someone is trying to explain how a car goes and then they start talking about how, like, a car is made of four wheels, and each wheel has its own force that it applies to a separate part of the road in some specific position and direction and so we can think of a wheel as having inside of it, or at least being functionally equivalent to having inside of it, another smaller car (a thing that goes), and so a car is really an assembly of 4 cars. We're just... spinning our wheels lol.

Just a guess though. (Just as a token to show that I'm not completely ungrounded here w.r.t. multi-agency stuff in general, but not saying this addresses specifically what you're referring to: https://tsvibt.blogspot.com/2023/09/the-cosmopolitan-leviathan-enthymeme.html)

↑ comment by Jan_Kulveit · 2024-06-19T13:20:53.324Z

Agreed we would have to talk more. I think I mostly get the homunculi objection. Don't have time now to write an actual response, so here are some signposts:

- part of what you call agency is explained by roughly active-inference-style reasoning

-- some types of "living" systems are characterized by having boundaries between themselves and the environment (boundaries mostly in the sense of separation of variables)

-- maintaining the boundary leads to a need to model the environment

-- modelling the environment introduces a selection pressure toward approximating Bayes

- the other critical ingredient is boundedness

-- in this universe, negentropy isn't free

-- this introduces a fundamental tradeoff / selection pressure for any cognitive system: length isn't free, bitflips aren't free, etc.

(--- downstream of that is compression everywhere, abstractions)

-- empirically, the cost/returns function for scaling cognition usually hits diminishing returns, leading to minds where it's not effective to grow the single mind further

--- this leads to the basin of convergent evolution I call "specialize and trade"

-- empirically, for many cognitive systems, there is a general selection pressure toward modularity

--- I don't know all the reasons for that, but one relatively simple one is 'wires are not free'; if wires are not free, you get colocation of computations, as in brain regions or industry hubs

--- other possibilities are selection pressures from CAP theorem, MVG, ...

(modularity also looks a bit like box-inverted specialize and trade)

So, in short, I think I agree with the spirit of "If humans didn't have a fixed skull size, you wouldn't get civilization with specialized members", and my response is that there seems to be an extremely general selection pressure in this direction. If cells were able to just grow in size and it was efficient, you wouldn't get multicellulars. If code bases were able to just grow in size and it was efficient, I wouldn't get a myriad of packages on my laptop; it would all be just kernel. (But even if it was just kernel, it seems modularity would kick in and you would still get the 'distinguishable parts' structure.)

↑ comment by niplav · 2024-06-17T10:03:32.562Z

> It's just more homunculi.

It's a bit annoying to me that "it's just more homunculi" is both kind of powerful for reasoning about humans, but also evades understanding agentic things. I also find it tempting because it gives a cool theoretical foothold to work off, but I wonder whether the approach is hiding most of the complexity of understanding agency.

comment by TsviBT · 2025-03-16T12:32:17.773Z

Are people fundamentally good? Are they practically good? If you make one person God-emperor of the lightcone, is the result something we'd like?

I just want to make a couple remarks.

- Conjecture: Generally, on balance, over longer time scales good shards express themselves more than bad ones. Or rather, what we call good ones tend to be ones whose effects accumulate more.

- Example: Nearly all people have a shard, quite deeply stuck through the core of their mind, which points at communing with others.

- Communing means: speaking with; standing shoulder to shoulder with, looking at the same thing; understanding and being understood; lifting the same object that one alone couldn't lift.

- The other has to be truly external and truly a peer. Being a truly external true peer means they have unboundedness, infinite creativity, self- and pair-reflectivity and hence diagonalizability / anti-inductiveness. They must also have a measure of authority over their future. So this shard (albeit subtly and perhaps defeasibly) points at non-perfect subjugation of all others, and democracy. (Would an immortalized Genghis Khan, having conquered everything, after 1000 years, continue to wish to see in the world only always-fallow other minds? I'm unsure. What would really happen in that scenario?)

- An aspect of communing is, to an extent, melting into an interpersonal alloy. Thought patterns are quasi-copied back and forth, leaving their imprints on each other and each other leaving their imprints on the thought patterns; stances are suggested back and forth; interoperability develops; multi-person skills develop; eyes are shared. By strong default this cannot be stopped from being transitive. Thus elements, including multi-person elements, spread, binding everyone into everyone, in the long run.

- God--the future or ideal collectivity of humane minds--is the extrapolation of primordial searching for shared intentionality. That primordial searching almost universally always continues, at least to some extent, to exert itself. The Ner Tamid is saying: God (or specifically, the Shekhinah) is the one direction that we move in; God will be omnipotent, and is of course omnibenevolent. To say things more concretely (though less accurately), people in some sense want to get along, and all else equal they keep searching and learning more how to get along and keep implicitly rewriting themselves to get along more; of course this process could be corrupted / disrupted / prevented, but it's the default.

- Example: On the longest time scales, love increases, hatred decreases.

- Given more information about someone, your capacity for having {commune, love, compassion, kindness, cooperation} for/with them increases more than your capacity for {hatred, adversariality} towards them increases.

- You can perfectly well hate someone who you don't know much about.

- How much more can you hate someone by knowing more about them? Certainly you can learn things which make you hate them more. But if you kept learning even more, would you still be able to hate them?

- You can be quite adversarial towards someone without knowing them. Everyone can meet in combat in the arena of convergent instrumental subgoals.

- The exception to this conjecture:

- It is possible to become extremely more adversarial towards someone by knowing much more about them--so you can pessimize against their values.

- However, there is a strange sort of silver crack: Because love and cooperation are compatible with unbounded creativity, love and cooperation are unbounded. Therefore, to "keep up" with the love of your unbounded love, adversariality would need access to the unbounded expression of your values, in order to pessimize against them. But this seems to imply that the adversary has to continually give you more and more love, in order to access your values at each stage. Not sure what to make of this. (The outcome would still be very bad, but it's strange.)

- It's harder to feel compassion towards someone you don't know much about; towards someone you do know much about, compassion is the easiest thing in the world to feel, if you try for a moment.

- (This is just another example of how Buddhists are bad: faceless compassion is annihilation, not compassion. Yeah I know you like annihilation, but it's bad.)

- Kindness and cooperation require information, and (for humans) can increase without bound with more information.

- Ender: It's impossible or almost impossible to understand someone without loving them.

Thanks to @JuliaHP for a related conversation.

↑ comment by Thane Ruthenis · 2025-03-16T23:31:13.883Z

This assumes that the initially-non-eudaimonic god-king(s) would choose to remain psychologically human for a vast amount of time, and keep the rest of humanity around for all that time. Instead of:

- Self-modify into something that's basically an eldritch abomination from a human perspective, either deliberately or as part of a self-modification process gone wrong.

- Make some minimal self-modifications to avoid value drift, precisely not to let the sort of stuff you're talking about happen.

- Stick to behavioral patterns that would lead to never changing their mind/never value-drifting, either as an "accidental" emergent property of their behavior (the way normal humans can surround themselves in informational bubbles that only reinforce their pre-existing beliefs; the way normal human dictators end up surrounded by yes-men; but elevated to transcendence, and so robust enough to last for eons) or as an implicit preference they never tell their aligned ASI to satisfy, but which it infers and carefully ensures the satisfaction of.

- Impose some totalitarian regime on the rest of humanity and forget about it, spending the rest of their time interacting only with each other/with tailor-built non-human constructs, and/or playing immersive simulation games.

- Immediately disassemble the rest of humanity for raw resources, like any good misaligned agent would, and never think about it again. Edit out their social instincts or satisfy them by interacting with each other/with constructs.

- Acausally sell this universe to some random paperclip-maximizer in exchange for being incarnated in some reality without entropic decay, where they wouldn't have cosmic resources, but would be able to exist literally eternally in lavish comfort (or at least dramatically longer than this universe's lifespan, basically trading parallel computing for sequential computing).

Et cetera.

Overall, I think all hopeful scenarios about "even a not-very-good person elevated to godhood would converge to goodness over time!" fail to feel the Singularity. It's not going to be basically business as usual for any prolonged length of time; things are going to get arbitrarily weird essentially immediately.

All of these hopeful purported psychosocial processes that modify humans to be good hinge on tons of assumptions about what the world looks like. They're brittle. And it seems incredibly unlikely that any of these assumptions – let alone all of them – would still be intact even a month past the event horizon, let alone thousands of years.

↑ comment by TsviBT · 2025-03-17T00:15:16.630Z

> This assumes

Yes, that's a background assumption of the conjecture; I think making that assumption and exploring the consequences is helpful.

> Self-modify into something that's basically an eldritch abomination from a human perspective, either deliberately or as part of a self-modification process gone wrong.

Right, totally, then all bets are off. The scenario is underspecified. My default imagination of "aligned" AGI is corrigible AGI. (In fact, I'm not even totally sure that it makes much sense to talk of aligned AGI that's not corrigible.) Part of corrigibility would be that if:

- the human asks you to do X,

- and X would have irreversible consequences,

- and the human is not aware of / doesn't understand those consequences,

- and the consequences would make the human unable to notice or correct the change,

- and the human, if aware, would have really wanted to not do X or at least think about it a bunch more before doing it,

then you DEFINITELY don't just go ahead and do X lol!

In other words, a corrigible AGI is supposed to use its intelligence to possibilize self-alignment for the human.

> Make some minimal self-modifications to avoid value drift, precisely not to let the sort of stuff you're talking about happen.

I think this notion of values, and hence of value drift, is probably mistaken as applied to humans. Human values are meta and open--part of the core argument of my OP (the bullet point about communing).

> Stick to behavioral patterns that would lead to never changing their mind/never value-drifting, either as an "accidental" emergent property of their behavior

So first they carefully construct an escape-proof cage for all the other humans, and then they become a perma-zombie? Not implausible, like they could for some reason specifically ask the AGI to do this, but IDK why they would.

> or as an implicit preference they never tell their aligned ASI to satisfy, but which it infers and carefully ensures the satisfaction of.

Doesn't sound very corrigible? Not sure.

> Immediately disassemble the rest of humanity for raw resources, like any good misaligned agent would, and never think about it again. Edit out their social instincts or satisfy them by interacting with each other/with constructs.

Right, certainly they could. Who actually would? (Not rhetorical.)

> Overall, I think all hopeful scenarios about "even a not-very-good person elevated to godhood would converge to goodness over time!" fail to feel the Singularity. It's not going to be basically business as usual for any prolonged length of time; things are going to get arbitrarily weird essentially immediately.

I think you're failing to feel the Singularity, and instead you're extrapolating to like "what would a really really bad serial killer / dictator do if they were being an extra bad serial killer / dictator times 1000". Or IDK, I don't know what you think; what do you think would actually happen if a random person were put in the corrigible AGI control seat?

Things can get weird, but for the person to cut out a bunch of their core humanity, kinda seems like either the AGI isn't really corrigible or isn't really AGI (such that the emperor-AGI system is being dumb by its own lights), or else the person really wanted to do that. Why do you think people want to do that? Do you want to do that? I don't.

If they don't cut out a bunch of their core humanity, then my question and conjecture are live.

↑ comment by Thane Ruthenis · 2025-03-17T11:13:07.088Z

> Human values are meta and open--part of the core argument of my OP (the bullet point about communing).

Unless the human, on reflection, doesn't want some specific subset of their current values to be open to change / has meta-level preferences to freeze some object-level values. Which I think is common. (Source: I have meta-preferences to freeze some of my object-level values at "eudaimonia", and I take specific deliberate actions to avoid or refuse value-drift on that.)

> Not implausible, like they could for some reason specifically ask the AGI to do this, but IDK why they would.

Callousness. "We probably need to do something about the rest of humanity, probably shouldn't just wipe them all out, lemme draft some legislation, alright looks good, rubber-stamp it and let's move on". Tons of bureaucracies and people in power seem to act this way today, including decisions that impact the fates of millions.

> Right, certainly they could. Who actually would? (Not rhetorical.)

I don't know that Genghis Khan or Stalin wouldn't have. Some clinical psychopaths or philosophical extremists (e.g., the human successionists) certainly would.

> What do you think would actually happen if a random person were put in the corrigible AGI control seat?

Mm...

First, I think "corrigibility to a human" is underdefined. A human is not, themselves, a coherent agent with a specific value/goal-slot to which an AI can be corrigible.

Like, is it corrigible to a human's momentary impulses? Or to the command the human would give if they thought for five minutes? For five days? Or perhaps to the command they'd give if the AI taught them more wisdom? But then which procedure should the AI choose for teaching them more wisdom? The outcome is likely path-dependent on that: on the choice between curriculum A and curriculum B. And if so, what procedure should the AI use to decide what curriculum to use? Or should the AI perhaps basically ignore the human in front of them, and simply interpret them as a rough pointer to CEV? Well, that assumes the conclusion, and isn't really "corrigibility" at all, is it?

The underlying issue here is that "a human's values" are themselves underdefined. They're derived in a continual, path-dependent fashion, by an unstable process with lots of recursions and meta-level interference. There's no unique ground-true set of values which the AI should take care not to step onto. This leaves three possibilities:

1. The AI acts as a tool that does what the human knowingly instructs it to do, with the wisdom by-default outsourced to the human.

   - But then it is possible to use it unwisely. For example, if the human operator is smart enough to foresee issues with self-modification, they could ask the AI to watch out for that. They could also ask it to watch out for that whole general class of unwise-on-the-part-of-the-human decisions. But they can also fail to do so, or unwisely ignore a warning in a fit of emotion, or have some beliefs about how decisions Ought to be Done that they're unwilling to even discuss with the AI.

2. The AI never does anything, because it knows that any of its actions can step onto one of the innumerable potential endpoints of a human's self-reflection process.

   - But then it is useless.

3. The AI isn't corrigible at all, it just optimizes for some fixed utility function, if perhaps with an indirect pointer to it ("this human's happiness", "humanity's CEV", etc.).

(1) is the only possibility worth examining here, I think.

And what I expect to happen if an untrained, philosophically median human is put in control of a tool ASI, is some sort of catastrophe. They would have various cached thoughts about how the story ought to go, what the greater good is, who the villains are, how the society ought to be set up. These thoughts would be endorsed at the meta-level, and not open to debate. The human would not want to ask the ASI to examine those; if the ASI attempts to challenge them as part of some other request, the human would tell it to shut up.[1]

In addition, the median human is not, really, a responsible person. If put in control of an ASI, they would not suddenly become appropriately responsible. It wouldn't by default occur to them to ask the ASI to make them more responsible, either, because that's itself a very responsible thing to do. The way it would actually go, they are going to be impulsive, emotional, callous, rash, unwise, cognitively lazy.

Some sort of stupid and callous outcome is likely to result. Maybe not specifically "self-modifying into a monster/zombie and trapping humanity in a dystopian prison", but something in that reference class of outcomes.

Not to mention if the human has some extant prejudices: racism or any other manner of "assigning different moral worth to different sapient beings based on arbitrary features". The stupid-callous-impulsive process would spit out some not-very-pleasant fate for the undesirables, and this would be reflectively endorsed on some level, so a genuine tool-like corrigible ASI[2] wouldn't say a word of protest.

Maybe I am being overly cynical about this, that's definitely possible. Still, that's my current model.

[1]

Source: I would not ask the ASI to search for arguments against eudaimonia-maximization, or ask it to check if there's something else that "I" "should" be pursuing instead, because I do not want to be argued out of that even if there's some coherent, true, and compelling sense in which it is not what "I" "actually" "want". If the ASI asks whether it should run that check as part of some other request, I would tell it to shut up.

(Note that it's different from examining whether my idea of eudaimonia/human flourishing/the-thing-I-mean-when-I-say-human-flourishing is correct/good, or whether my fundamental assumptions about how the world works are correct, etc.)

[2]

As opposed to a supposedly corrigible but secretly eudaimonic ASI which, in one's imagination, always happens to gently question the human's decisions when the human orders it to do something bad, and then happens to pick the specific avenues of questioning that make the human "realize" they wanted good things all along.

↑ comment by TsviBT · 2025-03-17T11:38:47.497Z · LW(p) · GW(p)

This leaves three possibilities:

How about for example:

The AGI helps out with increasing the human's ability to follow through on attempts at internal organization (e.g. thinking, problem solving, reflecting, coherentifying) that normally the human would try a bit and then give up on.

Not saying this is some sort of grand solution to corrigibility, but it's obviously better than the nonsense you listed. If a human were going to try to help me out, I'd want this, for example, more than the things you listed, and it doesn't seem especially incompatible with corrigible behavior.

↑ comment by TsviBT · 2025-03-17T11:26:29.200Z · LW(p) · GW(p)

First, I think "corrigibility to a human" is underdefined. A human is not, themselves, a coherent agent with a specific value/goal-slot to which an AI can be corrigible.

I mean, yes, but you wrote a lot of stuff after this that seems weird / missing the point, to me. A "corrigible AGI" should do at least as well as--really, much better than--you would do, if you had a huge team of researchers under you and your full-time, 100,000x-speed job were to do a really good job at "being corrigible, whatever that means" to the human in the driver's seat. (In the hypothetical you're on board with this for some reason.)

↑ comment by TsviBT · 2025-03-17T11:17:25.271Z · LW(p) · GW(p)

(Source: I have meta-preferences to freeze some of my object-level values at "eudaimonia", and I take specific deliberate actions to avoid or refuse value-drift on that.)

I would guess fairly strongly that you're mistaken or confused about this, in a way that an AGI would understand and be able to explain to you. (An example of how that would be the case: the version of "eudaimonia" that would not horrify you, if you understood it very well, has to involve meta+open consciousness (of a rather human flavor).)

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-03-17T15:53:08.247Z · LW(p) · GW(p)

Source: I have meta-preferences to freeze some of my object-level values at "eudaimonia", and I take specific deliberate actions to avoid or refuse value-drift on that.

I'm curious to hear more about those specific deliberate actions.

↑ comment by TsviBT · 2025-03-17T11:23:55.289Z · LW(p) · GW(p)

Some sort of stupid and callous outcome is likely to result. Maybe not specifically "self-modifying into a monster/zombie and trapping humanity in a dystopian prison", but something in that reference class of outcomes.

Your and my beliefs/questions don't feel like they're even much coming into contact with each other... Like, you (and also other people) just keep repeating "something bad could happen". And I'm like "yeah obviously something extremely bad could happen; maybe it's even likely, IDK; and more likely, something very bad at the beginning of the reign would happen (Genghis spends his first 200 years doing more killing and raping); but what I'm ASKING is, what happens then?".

If you're saying

There is a VERY HIGH CHANCE that the emperor would PERMANENTLY put us into a near-zero value state or a negative-value state.

then, ok, you can say that, but I want to understand why; and I have some reasons (as presented) for thinking otherwise.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-17T11:41:27.955Z · LW(p) · GW(p)

Your hypothesis is about the dynamics within human minds embedded in something like contemporary societies with lots of other diverse humans whom the rulers are forced to model for one reason or another.

My point is that evil, rash, or unwise decisions at the very start of the process are likely, and that those decisions are likely to irrevocably break the conditions in which the dynamics you hypothesize are possible. Make the minds in charge no longer human in the relevant sense, or remove the need to interact with/model other humans, etc.

In my view, it doesn't strongly bear on the final outcome-distribution whether the "humans tend to become nicer to other humans over time" hypothesis is correct, because "the god-kings remain humans hanging around all the other humans in a close-knit society for millennia" is itself a very rare class of outcomes.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-17T11:46:53.827Z · LW(p) · GW(p)

Your hypothesis is about the dynamics within human minds embedded in something like contemporary societies with lots of other diverse humans whom the rulers are forced to model for one reason or another.

Absolutely not, no. Humans want to be around (some) other people, so the emperor will choose to be so. Humans want to be [many core aspects of humanness, not necessarily per se, but individually], so the emperor will choose to be so. Yes, the emperor could want these insufficiently for my argument to apply, as I've said earlier. But I'm not immediately recalling anyone (you or others) making any argument that, with high or even substantial probability, the emperor would not want these things sufficiently for my question, about the long-run of these things, to be relevant.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-17T12:01:09.605Z · LW(p) · GW(p)

Humans want to be around (some) other people

Yes: some other people. The ideologically and morally aligned people, usually. Social/informational bubbles that screen away the rest of humanity, from which they only venture out if forced to (due to the need to earn money/control the populace, etc.). This problem seems to get worse as the ability to insulate yourself from others improves, as could be observed with modern internet-based informational bubbles or the surrounded-by-yes-men problem of dictators.

ASI would make this problem transcendental: there would truly be no need to ever bother with the people outside your bubble again, they could be wiped out or their management outsourced to AIs.

Past this point, you're likely never returning to bothering about them. Why would you, if you can instead generate entire worlds of the kinds of people/entities/experiences you prefer? It seems incredibly unlikely that human social instincts can only be satisfied – or even can be best satisfied – by other humans.

Replies from: TsviBT, mateusz-baginski↑ comment by TsviBT · 2025-03-17T12:10:00.229Z · LW(p) · GW(p)

It seems incredibly unlikely that human social instincts can only be satisfied – or even can be best satisfied – by other humans.

You're 100% not understanding my argument, which is sorta fair because I didn't lay it out clearly, but I think you should be doing better anyway.

Here's a sketch:

1. Humans want to be human-ish and be around human-ish entities.

2. So the emperor will be human-ish and be around human-ish entities for a long time. (Ok, to be clear, I mean a lot of developmental / experiential time--the thing that's relevant for thinking about how the emperor's way of being trends over time.)

3. When being human-ish and around human-ish entities, core human shards continue to work.

4. When core human shards continue to work, MAYBE this implies EVENTUALLY adopting beneficence (or something else like cosmopolitanism), and hence good outcomes.

5. Since the emperor will be human-ish and be around human-ish entities for a long time, IF 4 obtains, then good outcomes.

And then I give two IDEAS about 4 (communing->[universalist democracy], and [information increases]->understanding->caring).

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-17T12:42:45.856Z · LW(p) · GW(p)

I don't know what's making you think I don't understand your argument. Also, I've never publicly stated that I'm opting into Crocker's Rules, so while I happen not to particularly mind the rudeness, your general policy on that seems out of line here.

When being human-ish and around human-ish entities, core human shards continue to work

My argument is that the process you're hypothesizing would be sensitive to the exact way of being human-ish, the exact classes of human-ish entities around, and the exact circumstances in which the emperor has to be around them.

As a plain and down-to-earth example, if a racist surrounds themselves with a hand-picked group of racist friends, do you expect them to eventually develop universal empathy, solely through interacting with said racist friends? Addressing your specific ideas: nobody in that group would ever need to commune with non-racists, nor have to bother learning more about non-racists. And empirically, such groups don't seem to undergo spontaneous deradicalizations.

Replies from: TsviBT, TsviBT↑ comment by TsviBT · 2025-03-17T12:56:11.916Z · LW(p) · GW(p)

As a plain and down-to-earth example, if a racist surrounds themselves with a hand-picked group of racist friends, do you expect them to eventually develop universal empathy, solely through interacting with said racist friends? Addressing your specific ideas: nobody in that group would ever need to commune with non-racists, nor have to bother learning more about non-racists. And empirically, such groups don't seem to undergo spontaneous deradicalizations.

I expect they'd get bored with that.

↑ comment by TsviBT · 2025-03-17T12:47:36.715Z · LW(p) · GW(p)

As a plain and down-to-earth example, if a racist surrounds themselves with a hand-picked group of racist friends, do you expect them to eventually develop universal empathy, solely through interacting with said racist friends? Addressing your specific ideas: nobody in that group would ever need to commune with non-racists, nor have to bother learning more about non-racists. And empirically, such groups don't seem to undergo spontaneous deradicalizations.

So what do you think happens when they are hanging out together, and they are in charge, and it has been 1,000 years or 1,000,000 years?

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-03-17T13:08:19.209Z · LW(p) · GW(p)

One or both of:

- They keep each other radicalized forever as part of some transcendental social dynamic.

- They become increasingly non-human as time goes on, small incremental modifications and personality changes building on each other, until they're no longer human in the senses necessary for your hypothesis to apply.

I assume your counter-model involves them getting bored of each other and seeking diversity/new friends, or generating new worlds to explore/communicate with, with the generating processes not constrained to only generate racists, leading to the extremists interacting with non-extremists and eventually incrementally adopting non-extremist perspectives?

If yes, this doesn't seem like the overdetermined way for things to go:

- The generating processes would likely be skewed towards only generating things the extremists would find palatable, meaning more people sharing their perspectives/not seriously challenging whatever deeply seated prejudices they have. They're there to have a good time, not have existential/moral crises.

- They may make any number of modifications to themselves to make them no longer human-y in the relevant sense. Including by simply letting human-standard self-modification algorithms run for 10^3-10^6 years, becoming superhumanly radicalized.

- They may address the "getting bored" part instead, periodically wiping their memories (including by standard human forgetting) or increasing each other's capacity to generate diverse interactions.

↑ comment by TsviBT · 2025-03-17T13:21:28.729Z · LW(p) · GW(p)

Ok so they only generate racists and racially pure people. And they do their thing. But like, there are no other races around, so the racism part sorta falls by the wayside. They're still racially pure of course, but it's usually hard to tell that they're racist; sometimes they sit around and make jokes to feel superior over lesser races, but this is pretty hollow since they're not really engaged in any type of race relations. Their world isn't especially about all that, anymore. Now it's about... what? I don't know what to imagine here, but the only things I do know how to imagine involve unbounded structure (e.g. math, art, self-reflection, self-reprogramming). So, they're doing that stuff. For a very long time. And the race thing just is not a part of their world anymore. Or is it? I don't even know what to imagine there. Instead of having tastes about ethnicity, they develop tastes about questions in math, or literature. In other words, [the differences between people and groups that they care about] migrate from race to features of people that are involved in unbounded stuff. If the AGI has been keeping the racially impure in an enclosure all this time, at some point the racists might glance back and say, wait, all the interesting stuff about people is also interesting about these people. Why not have them join us as well.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-03-17T15:55:24.725Z · LW(p) · GW(p)

Past this point, you're likely never returning to bothering about them. Why would you, if you can instead generate entire worlds of the kinds of people/entities/experiences you prefer? It seems incredibly unlikely that human social instincts can only be satisfied – or even can be best satisfied – by other humans.

For the same reason that most people (if given the power to do so) wouldn't just replace their loved ones with their altered versions that are better along whatever dimensions the person judged them as deficient/imperfect.

↑ comment by TsviBT · 2025-03-17T11:18:15.946Z · LW(p) · GW(p)

I don't know that Genghis Khan or Stalin wouldn't have. Some clinical psychopaths or philosophical extremists (e. g., the human successionists) certainly would.

Yeah I mean this is perfectly plausible, it's just that even these cases are not obvious to me.

↑ comment by Garrett Baker (D0TheMath) · 2025-03-16T18:37:00.918Z · LW(p) · GW(p)

Given more information about someone, your capacity for having {commune, love, compassion, kindness, cooperation} for/with them increases more than your capacity for {hatred, adversariality} towards them increases.

If this were true, I’d expect much lower divorce rates. After all, who do you have more information about than your wife/husband? And many of these divorces are not amicable, though I wasn’t quickly able to find particular numbers. [EDIT:] Either way, this does indicate a steeply decreasing level of love over long periods of time & greater mutual knowledge. See also the decrease in all objective measures of quality of life after divorce for both parties after long marriages.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-16T20:50:15.282Z · LW(p) · GW(p)

(I wrote my quick take quickly and therefore very elliptically, and therefore it would require extra charity / work on the reader's part (like, more time spent asking "huh? this makes no sense? ok what could he have meant, which would make this statement true?").)

It's an interesting point, but I'm talking about time scales of, say, thousands of years or millions of years. So it's certainly not a claim that could be verified empirically by looking at any individual humans because there aren't yet any millenarians or megaannumarians. Possibly you could look at groups that have had a group consciousness for thousands of years, and see if pairs of them get friendlier to each other over time, though it's not really comparable (idk if there are really groups like that in continual contact and with enough stable collectivity; like, maybe the Jews and the Indians or something).

Replies from: D0TheMath↑ comment by Garrett Baker (D0TheMath) · 2025-03-16T21:49:32.535Z · LW(p) · GW(p)

So it's certainly not a claim that could be verified empirically by looking at any individual humans because there aren't yet any millenarians or megaannumarians.

If it’s not a conclusion which could be disproven empirically, then I don’t know how you came to it.

(I wrote my quick take quickly and therefore very elliptically, and therefore it would require extra charity / work on the reader's part (like, more time spent asking "huh? this makes no sense? ok what could he have meant, which would make this statement true?").)

I mean, I did ask myself about counter-arguments you could have with my objection, and came to basically your response. That is, something approximating “well they just don’t have enough information, and if they had way way more information then they’d love each other again” which I don’t find satisfying.

Namely because I expect people in such situations get stuck in a negative-reinforcement cycle, where the things which used to be fun which the other did lose their novelty over time as they get repetitive, which leads to the predicted reward of those interactions overshooting the actual reward, which in a TD learning sense is just as good (bad) as a negative reinforcement event. I don’t see why this would be fixed with more knowledge, and it indeed does seem likely to be exacerbated with more knowledge as more things the other does become less novel & more boring, and worse, fundamental implications of their nature as a person, rather than unfortunate accidents they can change easily.
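A toy sketch of the TD-learning point above, under hypothetical assumptions that are not from the comment: the reward of the t-th interaction decays geometrically as novelty wears off, and the learner keeps a single scalar prediction updated by a one-step delta rule (no bootstrapping). The parameter values are illustrative only, not fitted to anything.

```python
def prediction_errors(n_steps=50, r0=1.0, decay=0.9, alpha=0.2):
    """Toy habituation model: per-interaction prediction errors delta_t = r_t - V_t.

    Hypothetical assumptions: reward decays geometrically with repetition, and a
    single scalar prediction V is updated with a one-step TD/delta rule
    (each interaction treated as its own episode, so no bootstrapping).
    """
    v = 0.0          # learned prediction of how rewarding the next interaction will be
    errors = []
    for t in range(n_steps):
        r = r0 * decay ** t   # reward stays positive, but shrinks each time
        delta = r - v         # prediction error: actual minus predicted reward
        v += alpha * delta    # prediction moves a fraction alpha toward the actual reward
        errors.append(delta)
    return errors


if __name__ == "__main__":
    errs = prediction_errors()
    first_negative = next(t for t, e in enumerate(errs) if e < 0)
    print(first_negative)  # prints 6 with the default parameters
    # Once V overshoots r, it stays above every later (smaller) reward.
    print(all(e < 0 for e in errs[first_negative:]))  # prints True
```

Every reward in this sketch is positive, yet once the lagging prediction overshoots the decaying reward, every subsequent prediction error is negative, which is the "predicted reward overshooting the actual reward" pattern in miniature.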

I also think intuitions in this area are likely misleading. It is definitely the case now that marginally more understanding of each other would help with coordination problems, since people love making up silly reasons to hate each other. But I think this anchors too much on our current bandwidth limitations, and generalizes too far. Better coordination does not always imply more love.

Replies from: TsviBT, TsviBT, TsviBT↑ comment by TsviBT · 2025-03-16T21:59:24.391Z · LW(p) · GW(p)

Namely because I expect people in such situations get stuck in a negative-reinforcement cycle, where the things which used to be fun which the other did lose their novelty over time as they get repetitive, which leads to the predicted reward of those interactions overshooting the actual reward, which in a TD learning sense is just as good (bad) as a negative reinforcement event. I don’t see why this would be fixed with more knowledge, and it indeed does seem likely to be exacerbated with more knowledge as more things the other does become less novel & more boring, and worse, fundamental implications of their nature as a person, rather than unfortunate accidents they can change easily.

This does not sound like the sort of problem you'd just let yourself wallow in for 1000 years.

And again, with regards to what is fixed by more information, I'm saying that capacity for love increases more.

more things the other does become less novel & more boring

After 1000 years, both people would have gotten bored with themselves, and learned to do infinite play!

↑ comment by TsviBT · 2025-03-16T21:57:14.419Z · LW(p) · GW(p)

That is, something approximating “well they just don’t have enough information, and if they had way way more information then they’d love each other again” which I don’t find satisfying.

Maybe there's a more basic reading comprehension fail: I said capacity to love increases more with more information, not that you magically start loving each other.

↑ comment by Viliam · 2025-03-16T23:04:20.084Z · LW(p) · GW(p)

Are people fundamentally good?

Maybe some people are, and some people are not?

Are they practically good?

Not sure if we are talking about the same thing, but I think that there are many people who just "play it safe", and in a civilized society that generally means following the rules and avoiding unnecessary conflicts. The same people can behave differently if you give them power (even on a small scale, e.g. when they have children).

But I think there are also people who try to do good even when the incentives point the other way round. And also people who can't resist hurting others even when that predictably gets them punished.

Given more information about someone, your capacity for having {commune, love, compassion, kindness, cooperation} for/with them increases more than your capacity for {hatred, adversariality} towards them increases.

Knowing more about people allows you to have a better model of them. So if you started with the assumption e.g. that people who don't seem sufficiently similar to you are bad, then knowing them better will improve your attitude towards them. On the other hand, if you started from some kind of Pollyanna perspective, knowing people better can make you disappointed and bitter. Finally, if you are a psychopath, knowing people better just gives you more efficient ways to exploit them.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-16T23:54:17.487Z · LW(p) · GW(p)

Maybe some people are, and some people are not?

Right. Presumably, maybe. But I am interested in considering quite extreme versions of the claim. Maybe there are only 10,000 people who would, as emperor, make a world that is, after 1,000,000 years, net negative according to us. Maybe there's literally 0? I'm not even sure that there aren't literally 0, though quite plausibly someone else could know this confidently. (For example, someone could hypothetically have solid information suggesting that someone could remain truly delusionally and disorganizedly psychotic and violent to such an extent that they never get bored and never grow, while still being functional enough to give directions to an AI that specify world domination for 1,000,000 years.)

Replies from: Viliam↑ comment by Viliam · 2025-03-17T08:20:25.325Z · LW(p) · GW(p)

Sounds to me like wishful thinking. You basically assume that in 1,000,000 years people will get bored of doing the wrong thing, and start doing the right thing. My perspective is that "good" is a narrow target in the possibility space, and if someone already keeps missing it now, if we expand their possibility space by making them a God-emperor, the chance of converging to that narrow target only decreases.

Basically, for your model to work, kindness would need to be the only attractor in the space of human (actually, post-human) psychology.

A simple example of how things could go wrong is for Genghis Khan to set up an AI to keep everyone else in horrible conditions forever, and then (on purpose, or accidentally) wirehead himself.

Another example is the God-emperor editing their own brain to remove all empathy, e.g. because they consider it a weakness at the moment. Once all empathy is uninstalled, there is no incentive to reinstall it.

EDIT: I see that Thane Ruthenis already made this argument, and didn't convince you.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-17T08:29:29.247Z · LW(p) · GW(p)

You basically assume

No, I ask the question, and then I present a couple of hypothesis-pieces. (Your stance here seems fairly though not terribly anti-thought AFAICT, so FYI I may stop engaging without further warning.)

My perspective is that "good" is a narrow target in the possibility space, and if someone already keeps missing it now, if we expand their possibility space by making them a God-emperor, the chance of converging to that narrow target only decreases.

I'm seriously questioning whether it's a narrow target for humans.

Basically, for your model to work, kindness would need to be the only attractor in the space of human (actually, post-human) psychology.

Curious to hear other attractors, but your proposals aren't really attractors. See my response here: https://www.lesswrong.com/posts/Ht4JZtxngKwuQ7cDC/tsvibt-s-shortform?commentId=jfAoxAaFxWoDy3yso [LW(p) · GW(p)]

Ah I see you saw Ruthenis's comment and edited your comment to say so, so I edited my response to your comment to say that I saw that you saw.

Replies from: Viliam↑ comment by Viliam · 2025-03-17T08:39:38.456Z · LW(p) · GW(p)

Well, if we assume that humans are fundamentally good / inevitably converging to kindness if given enough time... then, yeah, giving someone God-emperor powers is probably going to be good in long term. (If they don't accidentally make an irreparable mistake.)

I just strongly disagree with this assumption.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-17T08:41:08.017Z · LW(p) · GW(p)

It's not an assumption, it's the question I'm asking and discussing.

Replies from: Viliam↑ comment by Viliam · 2025-03-17T08:56:43.870Z · LW(p) · GW(p)

Ah, then I believe the answer is "no".

On the time scale of current human lifespan, I guess I could point out that some old people are unkind, or that some criminals keep re-offending a lot, so it doesn't seem like time automatically translates to more kindness.

But an obvious objection is "well, maybe they need 200 years of time, or 1000", and I can't provide empirical evidence against that. So I am not sure how to settle this question.

On average, people get less criminal as they get older, so that would point towards human kindness increasing in time. On the other hand, they also get less idealistic, on average, so maybe a simpler explanation is that as people get older, they get less active in general. (Also, some reduction in crime is caused by the criminals getting killed as a result of their lifestyle.)

There is probably a significant impact of hormone levels, which means that we need to make an assumption about how the God-emperor would regulate their own hormones. For example, if he decides to keep a 25-year-old human male body, maybe his propensity to violence will match the body?

tl;dr - what kinds of arguments should even be used in this debate?

Replies from: TsviBT, mateusz-baginski↑ comment by TsviBT · 2025-03-17T09:04:32.546Z · LW(p) · GW(p)

what kinds of arguments should even be used in this debate?

Ok, now we have a reasonable question. I don't know, but I provided two argument-sketches that I think are of a potentially relevant type. At an abstract level, the answer would be "mathematico-conceptual reasoning", just like in all previous instances where there's a thing that has never happened before, and yet we reason somewhat successfully about it--of which there are plenty of examples, if you think about it for a minute.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-03-17T16:09:15.603Z · LW(p) · GW(p)

On average, people get less criminal as they get older, so that would point towards human kindness increasing in time. On the other hand, they also get less idealistic, on average, so maybe a simpler explanation is that as people get older, they get less active in general.

When I read Tsvi's OP, I was imagining something like a (trans-/post- but not too post-)human civilization where everybody by default has an unbounded lifespan and healthspan, possibly somewhat boosted intelligence and need for cognition / open intellectual curiosity. (In which case, "people tend to X as they get older", where X is something mostly due to things related to default human aging, doesn't apply.)

Now start it as a modern-ish democracy or a cluster of (mostly) democracies, run for 1e4 to 1e6 years, and see what happens.

↑ comment by Noosphere89 (sharmake-farah) · 2025-03-16T20:59:58.233Z · LW(p) · GW(p)

I basically don't buy the conjecture of humans being super-cooperative in the long run, or hatred decreasing and love increasing.

To the extent that something like this is true, I expect it to be a weird relic of the industrial-to-information age that utterly shatters if AGI/ASI is developed, and this remains true even if the AGI is aligned to a human.

Replies from: TsviBT↑ comment by Purplehermann · 2025-03-17T09:26:14.027Z · LW(p) · GW(p)

People quite often love the idea (as opposed to the reality) of other people, and knowing the other better can allow for plenty of hate.

Replies from: TsviBT↑ comment by TsviBT · 2025-03-17T09:29:34.171Z · LW(p) · GW(p)

Seems true. I don't think this makes much contact with any of my claims. Maybe you're trying to address:

But if you kept learning even more, would you still be able to hate them?

To clarify the question (which I didn't do a good job of in the OP), the question is more about 1000 years or 1,000,000 years than 1 or 10 years.

comment by TsviBT · 2025-01-28T04:05:02.931Z · LW(p) · GW(p)

It is still the case that some people don't sign up for cryonics simply because it takes work to figure out the process / financing. If you do sign up, it would therefore be a public service to write about the process.

Replies from: Mo Nastri, Josephm, sjadler↑ comment by Mo Putera (Mo Nastri) · 2025-01-28T04:14:59.761Z · LW(p) · GW(p)

Mingyuan has written Cryonics signup guide #1: Overview [LW · GW].

↑ comment by Joseph Miller (Josephm) · 2025-01-28T12:20:23.914Z · LW(p) · GW(p)

For those in Europe, Tomorrow Biostasis makes the process a lot easier and they have people who will talk you through step by step.

↑ comment by sjadler · 2025-01-28T09:47:40.462Z · LW(p) · GW(p)

A plug for another post I’d be interested in: whether anyone has actually evaluated the arguments for “What if your consciousness is ~tortured in simulation?” as a reason to not pursue cryo. Intuitively I don’t think this is super likely to happen, but various moral atrocities have happened and do happen, and that gives me a lot of pause, even though I know I’m exhibiting some status quo bias.

Replies from: niplav

comment by TsviBT · 2025-04-24T20:17:42.759Z · LW(p) · GW(p)

For its performances, current AI can pick up to 2 of 3 from:

- Interesting (generates outputs that are novel and useful)

- Superhuman (outperforms humans)

- General (reflective of understanding that is genuinely applicable cross-domain)

AlphaFold's outputs are interesting and superhuman, but not general. Likewise other Alphas.

LLM outputs are a mix. There's a large swath of things that LLMs can do superhumanly, e.g. generating sentences really fast or various kinds of search. Search is, we could say, weakly novel in a sense; LLMs are superhumanly fast at doing a form of search which is not very reflective of general understanding. Quickly generating poems whose words all start with the letter "m", or very quickly and accurately answering stereotyped questions like analogies, is superhuman and reflects a weak sort of generality, but is not interesting.

ImageGen is superhuman and a little interesting, but not really general.

Many architectures + training setups constitute substantive generality (can be applied to many datasets), and produce interesting output (models). However, considered as general training setups (i.e., to be applied to several contexts), they are subhuman.

comment by TsviBT · 2025-03-19T00:26:37.770Z · LW(p) · GW(p)

Recommendation for gippities as research assistants: Treat them roughly like you'd treat RationalWiki, i.e. extremely shit at summaries / glosses / inferences, quite good at citing stuff and fairly good at finding random stuff, some of which is relevant.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2025-03-19T09:28:42.101Z · LW(p) · GW(p)

Recommendation for gippities as research assistants: Treat them roughly like you'd treat RationalWiki

Works for me, I don't use either!

comment by TsviBT · 2024-10-16T23:30:11.922Z · LW(p) · GW(p)

Protip: You can prevent itchy skin from being itchy for hours by running it under very hot water for 5-30 seconds. (Don't burn yourself; I use tap water with some cold water, and turn down the cold water until it seems really hot.)

Replies from: ryan_greenblatt, nathan-helm-burger↑ comment by ryan_greenblatt · 2024-10-17T00:18:51.934Z · LW(p) · GW(p)

I think this works on the same principle as this device which heats up a patch that you press to your skin. I've also found that it works to heat up a spoon in near boiling water and press it to my skin for a few seconds.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-17T00:18:01.100Z · LW(p) · GW(p)

I recently bought a battery-powered tool that creates a brief pulse of heat in a small metal applicator, specifically designed for treating itchy mosquito bites. It works well!

In the case of the mosquito bite, there is the additional aspect of denaturing the proteins left behind by the mosquito in order to cause them to be less allergenic.

comment by TsviBT · 2025-02-08T09:48:22.264Z · LW(p) · GW(p)

(These are 100% unscientific, just uncritical subjective impressions for fun. CQ = cognitive capacity quotient, like generally good at thinky stuff)

- Overeat a bit, like 10% more than is satisfying: -4 CQ points for a couple hours.

- Overeat a lot, like >80% more than is satisfying: -9 CQ points for 20 hours.

- Sleep deprived a little, like stay up really late but without sleep debt: +5 CQ points.

- Sleep debt, like a couple days not enough sleep: -11 CQ points.

- Major sleep debt, like several days not enough sleep: -20 CQ points.

- Oversleep a lot, like 11 hours: +6 CQ points.

- Ice cream (without having eaten ice cream in the past week): +5 CQ points.

- Being outside: +4 CQ points.

- Being in a car: -8 CQ points.

- Walking in the hills: +9 CQ points.

- Walking specifically up a steep hill: -5 CQ points.

- Too many podcasts: -8 CQ points for an hour.

- Background music: -6 to -2 CQ points.

- Kinda too hot: -3 CQ points.

- Kinda too cold: +2 CQ points.

(stimulants not listed because they tend to pull the features of CQ apart; less good at real thinking, more good at relatively rote thinking and doing stuff)

Replies from: mateusz-baginski, steve2152, mateusz-baginski, None↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-02-08T10:11:20.688Z · LW(p) · GW(p)

- Sleep deprived a little, like stay up really late but without sleep debt: +5 GQ points.

Are you sure about the sign here?

I think I'm more prone to some kinds of creative/divergent thinking when I'm mildly to moderately sleep-deprived (at least sometimes in productive directions) but also worse in precise/formal/mathematical thinking about novel/unfamiliar stuff. So the features are pulled apart.

Replies from: TsviBT↑ comment by TsviBT · 2025-02-08T10:26:47.704Z · LW(p) · GW(p)

No yeah that's my experience too, to some extent. But I would say that I can do good mathematical thinking there, including correctly truth-testing; just less good at algebra, and as you say less good at picking up an unfamiliar math concept.

↑ comment by Steven Byrnes (steve2152) · 2025-02-09T15:26:15.574Z · LW(p) · GW(p)

I feel like I’ve really struggled to identify any controllable patterns in when I’m “good at thinky stuff”. Gross patterns are obvious—I’m reliably great in the morning, then my brain kinda peters out in the early afternoon, then pretty good again at night—but I can’t figure out how to intervene on that, except scheduling around it.

I’m extremely sensitive to caffeine, and have a complicated routine (1 coffee every morning, plus in the afternoon I ramp up from zero each weekend to a full-size afternoon tea each Friday), but I’m pretty uncertain whether I’m actually getting anything out of that besides a mild headache every Saturday.

I wonder whether it would be worth investing the time and energy into being more systematic to suss out patterns. But I think my patterns would be pretty subtle, whereas yours sound very obvious and immediate. Hmm, is there an easy and fast way to quantify “CQ”? (This [LW · GW] pops into my head but seems time-consuming and testing the wrong thing.) …I’m not really sure where to start tbh.

…I feel like what I want to measure is a 1-dimensional parameter extremely correlated with “ability to do things despite ugh fields”—presumably what I’ve called “innate drive to minimize voluntary attention control” [LW · GW] being low a.k.a. “mental energy” being high. Ugh fields are where the parameter is most obvious to me but it also extends into thinking well about other topics that are not particularly aversive, at least for me, I think.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-02-08T12:11:56.693Z · LW(p) · GW(p)

(BTW first you say "CQ" and then "GQ")

Replies from: TsviBT↑ comment by TsviBT · 2025-02-08T13:00:08.564Z · LW(p) · GW(p)

Ohhh. Thanks. I wonder why I did that.

Replies from: mateusz-baginski↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-02-08T13:14:59.908Z · LW(p) · GW(p)

Major sleep debt?

Probably either one of (or some combination of): (1) "g" is the next consonant after "c" in "cognitive"; (2) leakage from "g-factor"; (3) leakage from "general(ly good at thinking)"

Replies from: TsviBT↑ comment by [deleted] · 2025-02-09T08:27:44.334Z · LW(p) · GW(p)

I notice the potential for combo between these two:

Sleep deprived a little, like stay up really late but without sleep debt: +5 CQ points.

Oversleep a lot, like 11 hours: +6 CQ points.

(One can stay up till sleep deprived and oversleep on each sleep/wake cycle, though they'll end up with a non-24-hour schedule)

Replies from: TsviBT↑ comment by TsviBT · 2025-02-09T09:09:51.585Z · LW(p) · GW(p)

Yep! Without cybernetic control (I mean, melatonin), I have a non-24-hour schedule, and I believe this contributes >10% of that.

Replies from: None↑ comment by [deleted] · 2025-02-10T07:42:31.239Z · LW(p) · GW(p)

Also,

Background music: -6 to -2 CQ points.

That might generalize to "minimizing sound is good", in which case I'd suggest trying these earplugs.

Generalizing further to sensory deprivation, an easy way to get it is to lie in bed with eyes closed and lights off (not to sleep). (I've found this helpful, but maybe it's a side effect of not being distracted by a computer.)

Replies from: TsviBT↑ comment by TsviBT · 2025-02-10T09:29:27.635Z · LW(p) · GW(p)

I quite dislike earplugs. Partly it's the discomfort, which maybe those can help with; but partly I just don't like being closed off from hearing what's around me. But maybe I'll try those, thanks (even though the last 5 earplugs I tried were just uncomfortable, contra their promises).

Yeah, I mean I think the music thing is mainly nondistraction. The quiet of night is great for thinking, which doesn't help the sleep situation.

comment by TsviBT · 2025-01-14T09:31:21.600Z · LW(p) · GW(p)

"The Future Loves You: How and Why We Should Abolish Death" by Dr Ariel Zeleznikow-Johnston is now available to buy. I haven't read it, but I expect it to be a definitive anti-deathist monograph. https://www.amazon.com/Future-Loves-You-Should-Abolish-ebook/dp/B0CW9KTX76

The description (copied from Amazon):

A brilliant young neuroscientist explains how to preserve our minds indefinitely, enabling future generations to choose to revive us

Just as surgeons once believed pain was good for their patients, some argue today that death brings meaning to life. But given humans rarely live beyond a century – even while certain whales can thrive for over two hundred years – it’s hard not to see our biological limits as profoundly unfair. No wonder then that most people nearing death wish they still had more time.

Yet, with ever-advancing science, will the ends of our lives always loom so close? For from ventilators to brain implants, modern medicine has been blurring what it means to die. In a lucid synthesis of current neuroscientific thinking, Zeleznikow-Johnston explains that death is no longer the loss of heartbeat or breath, but of personal identity – that the core of our identities is our minds, and that our minds are encoded in the structure of our brains. On this basis, he explores how recently invented brain preservation techniques now offer us all the chance of preserving our minds to enable our future revival.

Whether they fought for justice or cured diseases, we are grateful to those of our ancestors who helped craft a kinder world – yet they cannot enjoy the fruits of the civilization they helped build. But if we work together to create a better future for our own descendants, we may even have the chance to live in it. Because, should we succeed, then just maybe, the future will love us enough to bring us back and share their world with us.

comment by TsviBT · 2025-03-16T02:40:07.071Z · LW(p) · GW(p)

Discourse Wormholes.

In complex or contentious discussions, the central or top-level topic is often altered or replaced. We're all familiar from experience with this phenomenon. Topologically this is sort of like a wormhole:

Imagine two copies of $\mathbb{R}^3$ minus the open unit ball, glued together along their unit spheres. Imagine enclosing the origin with a sphere of radius 2. This is a topological separation: the origin is separated from the rest of your space, the copy of $\mathbb{R}^3$ that you're standing in. But what's contained in the enclosure is an entire world just as large; therefore, the origin is not really contained, merely separated. One could walk through the enclosure, pass through the unit sphere, and then proceed back out into the other copy of $\mathbb{R}^3$.
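A minimal formalization of the gluing construction, as a sketch. It assumes the ambient space is $\mathbb{R}^3$ (an assumption: the dimension isn't recoverable from the text, and any $\mathbb{R}^n$ with $n \ge 2$ behaves the same way); the names $B$, $M_1$, $M_2$, $W$, $\Sigma$ and the notation $x_{(i)}$ (the point $x$ in copy $M_i$) are introduced here for illustration only.

$$B = \{x \in \mathbb{R}^3 : \lVert x \rVert < 1\}, \qquad M_1 = M_2 = \mathbb{R}^3 \setminus B,$$

$$W = (M_1 \sqcup M_2)\,/\,\big(x_{(1)} \sim x_{(2)} \text{ whenever } \lVert x \rVert = 1\big) \;\cong\; S^2 \times \mathbb{R}.$$

The enclosing sphere $\Sigma = \{x_{(1)} : \lVert x \rVert = 2\}$ splits $W$ into exactly two connected components:

$$W \setminus \Sigma = \underbrace{\{x_{(1)} : \lVert x \rVert > 2\}}_{\text{outside}} \;\sqcup\; \underbrace{\{x_{(1)} : 1 \le \lVert x \rVert < 2\} \cup M_2}_{\text{inside}}.$$

The "inside" component contains all of $M_2$, which is unbounded. So $\Sigma$ separates without enclosing anything small, which is the sense in which what lies behind it is "merely separated" rather than contained.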

You come to a crux of the issue, or you come to a clash of discourse norms or background assumptions; and then you bloop, where now that is the primary motive or top-level criterion for the conversation.

This has pluses and minuses. You are finding out what the conversation really wanted to be, finding what you most care about here, finding out what the two of you most ought to fight about / where you can best learn from each other / the highest leverage ideas to mix. On the other hand, you lose some coherence; there is disorientation; it's harder to build up a case, integrate information into single nodes for comparison; and it's harder to follow. [More theory could be done here.]

How to orient to this? Is there a way to use software to get more of the pluses and fewer of the minuses, e.g. in order to have better debates [LW · GW]? E.g. by providing orienting structure with signposts and reminders but without clumsy artificial rigid restrictions on the transference of salience?

Replies from: mateusz-baginski↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-03-16T07:08:23.239Z · LW(p) · GW(p)

A particularly annoying-to-me kind of discourse wormhole:

Alice starts arguing and the natural interpretation of her argument is that she's arguing for claim X. As the discussion continues and evidence/arguments[1] amass against X, she nimbly switches to arguing for an adjacent claim Y, pretending that Y is what she's been arguing for all along (which might even go unnoticed by her interlocutors).

[1] Or even, eh, social pressures, etc.

↑ comment by TsviBT · 2025-03-16T07:43:50.267Z · LW(p) · GW(p)

Mhm. Yeah that's annoying. Though in her probabilistic defense,

- In fact her salience might have changed; she might not have noticed either; it might not even be a genuinely adversarial process (even subconsciously).

- She might reasonably not know exactly what position she wants to defend, while still being able to partially and partially-truthfully defend it. For example, she might have a deep intuition that incest is morally wrong; and then give an argument against incest that's sort of true, like "there's power differences" or "it could make a diseased baby"; and then you argue / construct a hypothetical where those things aren't relevant; and then she switches to "no but like, the family environment in general has to be decision-theoretically protected from this sort of possibility in order to prevent pressures", and claims that's what she's been arguing all along. Where from your perspective, the topic was the claim "disease babies mean incest is bad", but from hers it was "something inchoate which I can't quite express yet means incest is bad". And her behavior can be cooperative, at least as described so far: she's working out her true rejection by trying out some rejections she knows how to voice.

-