Posts

Comments

It's straightforward to disprove: they should be able to argue for their views in a way that stands up to scrutiny.

I'd like to see more intellectual scenes that seriously think about AGI and its implications. There are surely holes in our existing frameworks, and it can be hard for people operating within them to spot. Creating new spaces with different sets of shared assumptions seems like it could help.

Absolutely not, no, we need much better discovery mechanisms for niche ideas that only isolated people talk about, so that the correct ideas can be formed.

Thank you for writing this!

Hm. I super like the notion and would like to see it implemented well. The very first example was bad enough to make me lose interest: https://russellconjugations.com/conj/1eaace137d74861f123219595a275f82 (Text from https://www.thenewatlantis.com/publications/the-anti-theology-of-the-body)

So I tried the same thing but with more surrounding text... and it was much better!... though not actually for the subset I'd already tried above. https://russellconjugations.com/conj/3a749159e066ebc4119a3871721f24fc

A longer sentence is produced by, and is asking the reader to be, putting more things together in the same [momentary working memory context]. Has advantages and disadvantages, but is not the same.

Yes, and this also applies to your version! For difficult or subtle thoughts, short sentences have to come strictly after the long sentences. If you're having enough such thoughts, it doesn't make sense to restrict long sentences out of communication channels; how else are you supposed to have the thoughts?

On second/third thought, I think you're making a good point, though also I think you're missing a different important point. And I'm not sure what the right answers are. Thanks for your engagement... If you'd be interested in thinking through this stuff in a more exploratory way on a recorded call to be maybe published, hopefully I'll be set up for that in a week or two, LMK.

On the "self-governing" model, it might be that the blind community would want to disallow propagating blindness, while the deaf community would not disallow it:

https://pmc.ncbi.nlm.nih.gov/articles/PMC4059844/

Judy: And how’s… I mean, I know we’re talking about the blind community now, but in a DEAF (person’s own emphasis) community, some deaf couples are actually disappointed when they have an able bodied… child.

William: I believe that’s right.

Paul: I think the majority are.

Judy: Yes. Because then …

Margaret: Do they?

Judy: Oh, yes! It’s well known down at the deaf centre. So some of them would choose to have a deaf baby! (with an incredulous voice)

Moderator: Actually, a few years ago a couple chose to have a deaf baby.

Margaret: Can’t understand that!

Judy: I’ve never heard of anybody in our blind community talk like that.

Paul: To perpetuate blindness! I don’t know anybody in the blind community who’d want to do that.

I am trying to point out to you that almost everyone in our society has the view that blinding children is evil.

Our society also has the view that people should be allowed to reproduce freely, even if they'll pass some condition on to their child.

you are influencing them at the stage of being an embryo

I'm mainly talking about engineering that happens before the embryo stage.

That's just not a morally coherent distinction, nor is it one the law makes

Of course it's one the law makes. IIUC it's not even illegal for a pregnant woman to drink alcohol.

If you want to start a campaign to legalize the blinding of children, well, we have a free speech clause, you are entitled to do that. Have you considered maybe doing it separately from the genetic engineering thing?

I can't tell if you're strawmanning to make a point, or what, but anyway this makes absolutely no sense.

Whether you do it by genetic engineering or surgically or through some other means is entirely beside the point. Genetic engineering isn't special.

I'm not especially distinguishing the methods, I'm mainly distinguishing whether it's being done to a living person. See my comment upthread https://www.lesswrong.com/posts/rxcGvPrQsqoCHndwG/the-principle-of-genomic-liberty?commentId=qnafba5dx6gwoFX4a

We get genetic engineering by showing people that it is just another technology, and we can use it to do good and not evil, applying the same notions of good and evil that we would anywhere else.

I think you're fundamentally missing that your notions of good and evil aren't supposed to automatically be made into law. That's not what law is for. See a very similar discussion here: https://www.lesswrong.com/posts/JFWiM7GAKfPaaLkwT/the-vision-of-bill-thurston?commentId=Xvs2y9LWbpFcydTJi

The eugenicists in early 20th century America also believed they were increasing good and getting rid of evil. Do you endorse their policies, and/or their general stance toward public policy?

Any normal person can see that a genetic engineer should be subject to the same ethical and legal constraints there as the surgeon. Arguing otherwise will endanger your purported goal of promoting this technology.

Maybe, I'm not sure and I'd like to know. This is an empirical question that I hope to find out about.

This notion of "erasing a type of person" also seems like exactly the wrong frame for this. When we cured smallpox, did we erase the type of person called "smallpox survivor"? When we feed a hungry person, are we erasing the type of person called "hungry person"? None of this is about erasing anyone. This is about fixing, or at least not intentionally breaking, people.

That's nice that you can feel good about your intentions, but if you fail to listen to the people themselves who you're erasing, you're the one who's being evil. When it comes to their own children, it's up to them, not you. If you ask people with smallpox "is this a special consciousness, a way of life or being, which you would be sad to see disappear from the world?", they're not gonna say "hell yeah!". But if you ask blind people or autistic people, some fraction of them will say "hell yeah!". Your attitude of just going off your own judgement... I don't know what to say about it yet, I'm not even empathizing with it yet. (If you happen to have a link to a defense of it, e.g. by a philosopher or other writer, I'd be curious.)

Now, as I've suggested in several places, if the blind children whose blind parents chose to make them blind later grow and say "This was terrible, it should not have happened, the state should not allow this", THEN I'd be likely to support regulation to that effect.

i think i'm in the wrong universe, can someone at tech support reboot the servers or something? it's not reasonable for you to screw up something as simple as putting paying customers in the right simulation . and then you're like "here's some picolightcones". if you actually cared it would be micro or at least nano

Yeah, cognitive diversity is one of those aspects that could be subject to some collapse. Anomaly et al.[1] discuss this, though ultimately suggest regulatory parsimony, which I'd take even further and enshrine as a right to genomic liberty.

I feel only sort-of worried about this, though. There's a few reasons (note: this is a biased list where I only list reasons I'm less worried; a better treatment would make the opposite case too, think about bad outcomes, investigate determinative facts, and then make judgements, etc.):

- Although I want the tech to be strong, inexpensive, and widely accessible, in practice it will of course have a long road of innovation and uptake; I think I should be surprised if, 15 years after strong germline engineering technology is first relatively inexpensive (say for $10k / baby), more than half of parents in the US are using the technology.

- Hopefully, many clinics would nudge parents to make a more reflective and personal choice, e.g. by asking "what about us do we want to see in our kids", rather than just asking "how do we make a normal person / elite person / etc.". (I'm not remotely confident clinics would do this, but.)

- For better or worse, there will be a long period of time (decades, probably, at least) in which many traits, including / especially many cognitive traits (subfactors of intelligence, wisdom, curiosity, determination, etc etc) will be only weakly or not at all genomically steerable. It's hard to define and measure many traits; and even if you can measure them, it's hard to collect enough genotype/phenotype data to find weak polygenic associations. So there will be a lot of cognitive variation that isn't subject to direct genomic vectoring. (Though they could be more weakly vectored if they correlate with traits, e.g. Big Five personality traits, that are studied and vectored.)

- I expect the gene pool to remain quite diverse for a long time.

- I expect many (most?) people to want to pass on their genes.

- (As a touchpoint, though I'm probably kinda weird in this, I have some value placed on passing on most of my DNA segments at least once--though I certainly also want to pass on "worse" segments much less frequently than "better" segments.)

- Most genomic vectoring methods (selection and editing) don't affect the actual genome very much. You and I differ at O(10^6.5) genetic loci; strong editing edits O(10^2.5) loci; selection... well I'm not sure about the math, but it would probably be something like O(10^3) changes in expectation. (This doesn't necessarily apply to whole genome synthesis, or to selection schemes that involve many donors, which could in theory hugely amplify some DNA segments in the next generation, though doing so to an extreme degree would be inadvisable.)

- I therefore expect there to remain a large amount of genetic diversity. This doesn't mean that there has to be trait-diversity, but it should imply that it would remain fairly easy (using germline engineering) to recover any previously-extant traits. In other words, even fairly extreme trait-diversity collapse would be not so hard to partially reverse (I mean, parents who want to buck the trend could do so).

- For most traits, especially cognitive traits, there's going to be lots variation that isn't controlled by GE. E.g. nurture effects, environmental / developmental randomness, and nonlinear and weak effects of genes that haven't been picked up in polygenic scores. This implies that, while in many cases you can significantly push the mean value of the trait among your possible future children, you can't greatly tighten the distribution of the trait. The exact shape of this depends on the trait (how much we understand the genetics, how much overall variation there is, what the distribution-tails of the trait imply in practice for behavior). My guess is that in practice this implies you still have lots of trait-variation.

- I expect that for lots of parents, for lots of traits, they just won't especially care to or choose to genomically vector that trait much (though maybe it would be more common to avoid extremes of the trait).

- For example, do I want to make my kid a bit uncommonly extraverted or introverted? Plausibly I'd develop some preference after thinking about it more, but I have less of an immediate preference compared to some other traits.

- I, and I suspect many other parents, would be somewhat suspicious of many supposed polygenic scores and trait constructs and measurements. Such parents would either not GV those traits, or GV them less strongly, or only GV them after investing more thinking (and therefore would make generally better decisions).

- I suspect many parents would specifically have a preference to not make genomic choices about e.g. personality traits.

- I weakly expect lots of parents would want kids with a variety of values of many given traits. I would. E.g. if there's a tradeoff between different subfactors of intelligence, I'd want one kid with a stronger genomic foundation for one of the subfactors, another with another, etc.; I might plausibly want a kid with slightly low conscientiousness (higher creativity, maybe) and another with slightly high conscientiousness (more industrious, maybe).

- I expect there's quite a lot of diversity in what parents view as desirable traits. Different parents will differently emphasize beneficence (wellbeing of the child), altruism (how the child contributes to helping others), or other criteria; and different parents will have different opinions about what traits contribute to those outcomes. I therefore suspect that collapsing all the way to just the traits that parents think they want in/for their future children, while that would plausibly be pretty bad, would still be quite far from total homongeneity.

- I suspect many parents would want to see themselves in their children, i.e. pass on some of their traits. (I would.) So diversity of parents is reflected somewhat in diversity of kids.

- In theory, in the medium-term (a couple generations), there should be socioeconomic feedback towards an equilibrium, where if a useful niche-strategy is undersupplied, then it's demanded more, and some parents eventually notice and respond by trying to fill that niche. (On the other hand, it could be that some niches collapse due to undersupply, which could be harder to reverse.)

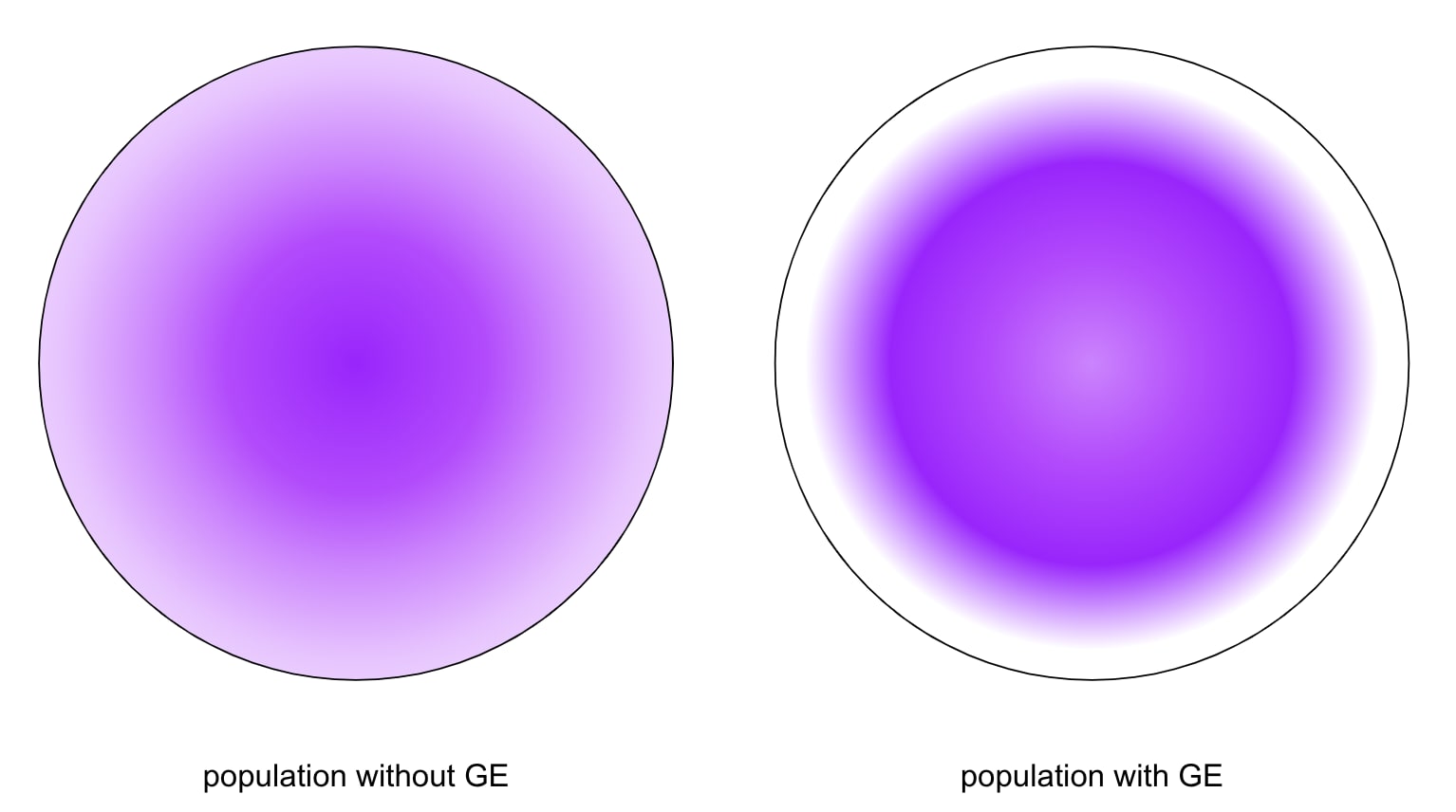

- Even if the total envelope of trait-values decreases, that doesn't necessarily mean the desirable variation decreases. In fact, what I hope, and also somewhat expect, is that you get an increased "medium frontier" of humanity: fewer extreme spikes in some dimensions (e.g. maybe no one with disagreeableness quotient >160, or sometehing), but more total weirdness because lots of kids each have several traits on which they're 2 SDs away from the mean:

It would be interesting to poll parents to see what sorts of considerations they might take into account, and decisions they might make. One could also ask embryo screening companies.

Anomaly, Jonathan, Christopher Gyngell, and Julian Savulescu. ‘Great Minds Think Different: Preserving Cognitive Diversity in an Age of Gene Editing’. Bioethics 34, no. 1 (January 2020): 81–89. https://doi.org/10.1111/bioe.12585. ↩︎

So I guess one direction this line of thinking could go is how we can get the society-level benefits of a cognitive diversity of minds without necessarily having cognitively-uneven kids grow up in pain.

Absolutely, yeah. A sort of drop-dead basic thing, which I suppose is hard to implement for some reason, is just not putting so much pressure on kids--or more precisely, not acting as though everything ought to be easy for every kid. Better would be skill at teaching individual kids by paying attention to the individual's shape of cognition. That's difficult because it's labor-intensive and requires open-mindedness. I don't know anything about the economics of education and education reform, but yeah, it would be good to fix this... AI tutors could probably improve over the status quo in many cases, but would lack some important longer-term adaptation (like, actually learning how the kid thinks and what ze can and can't easily do).

With or without ASI, certainly morphological autonomy is more or less a universal good.

IDK what to say... I guess I'm glad you're not in charge? @JuliaHP I've updated a little bit that AGI aligned to one person would be bad in practice lol.

I am the Law, the Night Watchman State, the protector of innocents who cannot protect themselves. Your children cannot prevent you from editing their genes in a way that harms them, but the law can and should.

I do think this is in interesting and important consideration here; possibly the crux is quite simply trust in the state, but maybe that's not a crux for me, not sure.

if we had a highly competent government that could be trusted to reasonably interpret the rules,

Yeah, if this is the sort of thing you're imagining, we're just making a big different background assumption here.

I don't think we have enough evidence to determine that removing the emotion of fear is "unambiguous net harm", but it would be prohibited under your "no removing a core aspect of humanity" exception.

Yeah, on a methodological level, you're trying to do a naive straightforward utilitarian consequentialist thing, maybe? And I'm like, this isn't how justice and autonomy and the law work, it's not how politics and public policy works, it's not how society and cosmopolitanism work. (In this particular case, my justification about human dignity maybe doesn't immediately make sense to you, but I think that not understanding the justification is a failure on your part--the justification might ultimately be wrong, I'm not at all confident, but it's a real justification. See for example "What’s really wrong with genetice nhancement: a second look at our posthuman future".)

Therefore, you seem to agree that going from "chronic severe depression" to "typical happiness set point" is an unambiguous good change. (Correct me if I am wrong here.)

No, this is going too far. The exception there would be for a medium / high likelihood of really bad depression, like "I can't bring myself to work on anything for any sustained time, even stuff that's purely for fun, I think about killing myself all the time for years and years, I am suffering greatly every day, I take no joy in anything and have no hope", that kind of thing. Going from "once in a while gets pretty down for a few weeks, has to take a bit of time off work and be sad in bed" is probably fine, and probably has good aspects, even if it is net-bad / net-dispreferable for most people and is somewhat below typical happiness set-point. Mild high-functioning bipolar might be viewed by some people with that condition as important to who they are, and a source of strength and creativity. Or something, I don't know. Decreasing their rates of depressive episodes by getting rid of bipolar is not an unambiguous good by any stretch.

I think a lot of people who say they anti-value intelligence are coping (I am dumb therefore dumbness is a virtue) or being tribalistic (I hate nerdy people who wear glasses, they remind me of the outgroup). If they perceived their ingroup and themselves as being intelligent, I think they would change their tune.

That's all well and fine, but you're still doing that thing where you say "X is unambiguously good" and I'm like "But a bunch of people say that X is bad" and you're like "ha, well, you see, their opinion is bullshit, betcha didn't think of that" and I'm like, we're talking past each other lol.

Anyway thanks for engaging, I appreciate the contention and I found it helpful even though you're so RAWNG.

I'm genuinely unsure whether or not they would. Would be interesting to know.

One example, from "ASAN Statement on Genetic Research and Autism" https://autisticadvocacy.org/wp-content/uploads/2022/03/genetic-statement-recommendations.pdf :

ASAN opposes germline gene editing in all cases. Germline gene editing is editing a person’s genes that they pass down to their children. We do not think scientists should be able to make gene edits that can be passed down to a person’s children. The practice could prevent future generations of people with any gene-related disability from being born. This is eugenics and a form of ableism.

ASAN opposes non-heritable gene editing for autism. This is when scientists edit a person’s genes in a way that can’t be passed down to their children. ASAN is against ever using this kind of gene editing for autism. We think it would be used to treat or “cure” autism. We do not want “cures” for autism. We want to continue being autistic. We want there to be rules saying people can’t use genetic research to find a “cure” for autism. We are setting this standard for autism and the autistic community because it is what most of our community members believe.

Not directly comparable, but related. (I disagree with their reasoning and conclusions, I think.)

Interestingly, they make the same suggestion I mentioned above:

Some disability communities might want non-heritable gene editing. For example, some people with epilepsy are okay with non-heritable gene editing for the genes that cause their seizures. We think it should be up to each disability community to decide if they are okay with nonheritable gene editing for their disability. Researchers and policymakers should listen to each disability community about how that community feels about non-heritable gene editing.

Yes, blind people are the experts here. If 95% of blind people wish they weren't blind, then (unless there is good reason to believe that a specific child will be in the 5%) gene editing for blindness should be illegal.

This is absolutely not what I'm suggesting. I'm suggesting (something in the genre of) the possibility of having that if 95% of blind people decide that gene editing for blindness should be illegal, then gene editing for blindness should be illegal. It's their autonomy that's at issue here.

Oh, I guess, why haven't I said this already: If you would, consider some trait that:

- you have, and

- is a mixed blessing, and

- that many / most people would consider a detriment, and

- that is uncommon.

I'l go first:

In my case, besides being Jewish lol, I'm maybe a little schizoid, meaning I have trouble forming connections / I tend to not maintain friendships / I tend to keep people at a distance, in a systematic / intentional way, somewhat to my detriment. (If this is right, it's sort of mild or doesn't fully fit the wiki page, but still.) So let's say I'm a little schizoid.

This is a substantively mixed blessing: I have few lasting relationships and feel lonely / disconnected / malnourished, and am sort of conflicted about that because a lot of my intuitions say this is better than any available alternative; but on the other hand, I am a free thinker, I can see things most others can't see, I can pursue good things that most others won't pursue.

Now, if someone reads the wiki page, they will most likely come away saying "hell no!", and would want to nudge their child's genome away from being like that. Fair enough. I wouldn't argue against that. I might even do the same, I'm not sure; on the other hand, I do think I have a way of thinking that's fairly uncommon and interesting and useful and in some ways more right than the default. Either way, there's no fucking way that I want the state to be telling me which personality traits I can and can't pass on to my child.

Ok now you:

E.g. do you have a neuroatypicality such as autism, ADHD, bipolar, dyslexia? Some sort of dysphoria or mental illness? A non-heterosexual orientation? Etc. (I'm curious, but obviously not expecting you to share; just asking you to think of it.)

If so, consider the prospect of the state saying that you can't pass this trait on.

If not, well nevermind lol. There's a bit more theory here, just in case it helps; specifically:

Beyond that, any phenotype at all will correspond to some kind of consciousness. Someone with insomnia, even if they acknowledge that having insomnia is almost entirely worse than not having insomnia, might still wish to have other people with insomnia to be friends with, simply because an insomniac has a somewhat different experience and way of being than a somniac and can therefore understand and relate specially to insomniacs. So removing any type of person is to some extent changing who humanity is.

Finally, a given type of person (so to speak) might view themselves as part of a “cross-sectional coalition”. In other words, even though a deaf person is not only a deaf person, and views zerself as part of the whole human collective, ze might also view zerself as being part of a narrower collective—deaf people—which has its own being, rights, authority, autonomy, instrumental value, and destiny.

I think "edited children will wish the edits had not been made" should be added to the list of exceptions.

So to be clear, your proposal is for people who aren't blind to decide what hypothetical future blind children will think of their parents's decisions, and that judgement should override the judgement of the blind parents themselves? This seems wild to me.

... Ok possibly I could see some sort of scheme where all the blind people get to decide whether to regulate genomic choice to make blind children? Haven't thought about this, but it seems pretty messy and weird. But maybe.

My position is "ban genomic edits that cause traits that all reasonable cost-benefit analysis agree are bad", where "reasonable" is defined in terms of near-universal human values.

But it kinda sounds like your notion of "near-universal" ends up just being whatever your CBA said? I guess I'm not sure what would sway you. Suppose for example the following made-up hypothetical: there's a child alive today whose blind parents intentionally selected an embryo that would be blind. Suppose that child says "Actually, I'm happy my parents made that choice. I feel close to them, part of a special community, where we share a special way of experiencing the world; we sense different things than other people, and consequently we have different tastes, and this gives us a bit of a different consciousness. Yes there are difficulties, but I love my life, and I wouldn't want to have been sighted.". In this case, do you update?

Maybe we're at an impasse here. At some point I hope to set up convenient streaming / podcasting; if I'd already done so I'd invite you on to chat, which might go better.

I would prefer a "do no harm" principle

I'm still unclear how much we're talking past each other. In this part, are you suggesting this as law enforced by the state? Note that this is NOT the same as

For instance, humans near-universally value intelligence, happiness, and health. If an intervention decreases these, without corresponding benefits to other things humans value, then the intervention is unambiguously bad.

because you could have an intervention that does result in less happiness on average, but also has some other real benefit; but isn't this doing some harm? Does it fall under "do no harm"?

And as always, the question here is, "Who decides what harm is?".

(There may be some cases where "children are happy about the changes on net after the fact" is not restrictive enough. For instance, suppose a cult genetically engineers its children to be extremely religious and extremely obedient, and then tells them that disobedience will result in eternal torment in the afterlife. These children will be very happy that they were edited to be obedient.)

Yes, I agree, and in fact specifically brought up (half of) this case in the exclusion for permanent silencing. Quoting:

For example, it could be acceptable to ban genomic choices that would make a future child supranormally obedient, to the point where they are very literally incapable of communicating something they have not been told to communicate. [...]

You write:

Down syndrome removes ~50 IQ points. The principle of genomic liberty would give a Down syndrome parent with an IQ of 90 the right to give a 140 IQ embryo Down syndrome, reducing the embryo's IQ to 90 (this is allowed because 90 IQ is not sufficient to render someone non compos mentis).

In practice, my guess is that this would pose a quite significant risk of making the child non compos mentis, and therefore unable to sufficiently communicate their wellbeing; so it would be excluded from protection. But in theory, yes, we have a disagreement here. If the parent is compos mentis, then who the hell are you to say they can't have a child like themselves?

For instance, humans near-universally value intelligence,

How many people have you talked to about this topic? Lots of people I talk to value intelligence and would want to give their future kid intelligence; lots of people value it but say they wouldn't want to influence; some people say they don't value; and some even say they anti-value it (e.g. preferring their kid to be more normal).

I'm not sure how to communicate across a gap here... There's a thing that it seems like you don't understand, that you should understand, about law, the state, freedom, coercion, etc. There's a big injustice in imposing your will on others, and you don't seem to mind this. This principle of injustice is far from absolute; I endorse lots of impositions, e.g. no gouging out your child's eyes. But you seem to just not mind about being like "ok, hm, which ways of living are good, ok, this is good and this is good, this is bad and this is bad, OK GUYS I FIGURED IT OUT, you may do X and you may not do Y, that is the law, I have spoken". Maybe I'm missing you, but that's what it sounds like. And I just don't think this is how the law is supposed to work.

There is totally a genuine tough issue here, where the law should have some interest in protecting everyone, including young children from their parents, and yes to some extent even future children. But I feel our communication is dancing around this, where maybe you just don't agree that the law should be very reluctant to impose?

To you, "the principle of genomic liberty" is the best policy

No! Happy to hear alternatives. But I do think it's better than "prospectively ban genomic choices for traits that our cost-benefit analysis said are bad". I think that's genuinely unjust, partly because you shouldn't be the judge of whether another person's way of being should exist.

I think the future opinion of the gene-edited children is important. Suppose 99% of genetically deafened children are happy about being deaf as adults, but only 8% of genetically blinded children are happy about being blind. In that case, I would probably make the former legal and the latter illegal.

Right, so if you read my post on genomic liberty, you'll see that I do put stock in what these children will say. But that's strictly the responsibility of the next generation.

But I don't see a difference between "immediately after birth, have the doctor feed your child a chemical that painlessly causes blindness" and gene editing.

Right, so fewer differences apply, but some do. An already-born child gets some legal protections that a 10-day fetus, or that an unfertilized egg, do not get. As a political matter, they are treated differently, and for good reason. (Maybe not for eternal reasons, like maybe a transhuman society would work out how to make things more continuous, but that's not very practically relevant.)

I would be happy to accept "the principle of genomic liberty" over status-quo, since it is reasonably likely that lawmakers will create far worse laws than that.

Ok. Then I'm not sure we even disagree, though we might. If we do, it would be about "ideal policies". My post about Thurston (which was about as successful as I expected at making the point, which is to say, medium at best) is trying to strike some doubt in your heart about the ideal policy, because you don't know what it's like to be other people and you don't know what sort of weird ways they might be thinking that you couldn't anticipate. It's a pretty abstract way of making the case; a more direct way would have been to find some blind/deaf/autistic/dwarf/trans/etc. people talking about valuing the special aspects of how they are, etc.

Mainly I want to strike a bit of doubt in your heart about the idea policy because I want you to not be committed to making that part of the practical policy about genomic engineering, but it sounds like we don't necessarily have a conflict there.

Is your position that at least one parent must be blind/deaf/dwarf in order to edit the child to be the same? If so, that is definitely an improvement over what I thought your position was.

Sort of, though I'm not totally sure. To fall under the propagative liberty tentpole protection, yeah, at least one would have to be blind. (Well, if I'm changing propagative liberty to only apply to phenotypes.)

Two sighted parents wanting to make their child blind seems like a pretty weird case; who would do that?? (Ok fine maybe someone would do that.) The principle of genomic liberty leaves that in a sort of gray area. It's neither protected under any of the tentpole principles, nor does it fall under any of the explicitly recognized exceptions to GL protection. So the weaker GL, which relies more on the tentpole principles, would say "ok, the state can make laws prohibiting this". The strongest GL principle that fits my proposal would fight any case that doesn't fall under the explicit exceptions, in order to make society consider the case carefully, including this case.

However, the non-intervention tentpole protection would allow parents to decline to use available technology to prevent their future child from being blind.

I'm not sure what the difference is supposed to be between "blinding your children via editing their genes as an embryo" and "painlessly blinding your children with a chemical immediately after birth". The outcome is exactly the same.

Did you read the linked comment? It has political differences. The narrow causal outcome being the same isn't the totality of the relevant considerations. Another difference would be that it's more damaging to the parents's souls to do the chemicals thing, as much as you want to wave your hands about how the philosophy proves it should be the same.

So it seems your argument is "even if all reasonable cost-benefit analyses agree, things are still ambiguous". Is that really your position?

Yeah. Well, we're being vague about "reasonable".

If by "reasonable" you mean "in practice, no one could, given a whole month of discussion, argue me into thinking otherwise", then I think it's still ambiguous even if all reasonable CBAs agree.

If by "reasonable" you mean "anyone of sound mind doing a CBA would come to this conclusion", then no, it wouldn't be ambiguous. But I also wouldn't say that it should be protected. Basically by assumption, what we're protecting is genomic liberty of parents; we're discussing the case where a blind parent of sound mind, having been well-informed by their clinic of the consequences and perhaps given an enforced period of reflection, and hopefully having consulting with their peers, has decided to make their child blind.

If there's no parent of sound mind making such a decision, then there's no question of policy that we have to resolve. If there is, then I'm saying in most cases (with some recognized exceptions) it's ambiguous.

Down syndrome, or Tay-Sachs disease

I'd have to learn more, but many forms of these conditions (and therefore the condition simpliciter, prospectively) would probably prevent the child from expressing their state of wellbeing, through death or unsound mind. Therefore these would fall under the recognized permanent silencing exception to the principle of genomic liberty, and wouldn't be protected forms of propagation. Further, my impression is that living to adulthood with Tay-Sachs is quite rare; most people with Tay-Sachs variants wouldn't be passing on a phenotype. (I did say "right to propagate their own genes and their own traits", but I debated including genes internally, and I could be suaded that the propagative liberty tentpole principle, specifically, should only apply to phenotypes.) Finally, if the parents in question are severely non compos mentis, their genomic liberty is also not necessarily protected by the principle.

Regarding blind/deaf/dwarf: I wonder if, in real life, talking to such a person who is describing their experience and values, you would then be able to bring yourself in good conscience to say "yes, the state should by force prevent your way of life".

It is already illegal to blind your children after they are born, and this is a good thing imo.

Already living people are clearly different. You could argue the difference shouldn't matter, but it would take more argument. Elaboration here: https://www.lesswrong.com/posts/rxcGvPrQsqoCHndwG/the-principle-of-genomic-liberty?commentId=qnafba5dx6gwoFX4a

I am quite certain that, even after thinking about it for years, I would still be against feeding children lead or genetically altering them to be less intelligent.

I think you might still be 100% percent missing my point. I'm not arguing for it being moral to do these things. I think it's immoral. I'm trying to construct a political coalition, and I'm arguing that you shouldn't be so confident in your judgements that you impose them on others, in this case. Elaboration here: https://www.lesswrong.com/posts/rxcGvPrQsqoCHndwG/the-principle-of-genomic-liberty?commentId=PnBH5HHszc7G5FK5s

"You cannot blind your children, unless you do it through gene editing, then it's totally fine"

FYI, you're strawmanning my position, in case you care about understanding it.

But really my objection is why the fuck would you think causality works like that?

Not sure why you're saying "causality" here, but I'll try to answer: I'm trying to construct an agreement between several parties. If the agreement is bad, then it doesn't and shouldn't go through, and we don't get germline engineering (or get a clumsy, rich-person-only version, or something).

Many parties have worries that route through game-theory-ish things like slippery slopes, e.g. around eugenics. If the agreement involves a bunch of groups having their reproduction managed by the state, this breaks down simple barriers against eugenics. I suppose you might dismiss such worries, but I think you're probably wrong to do so--there is actually significant overlap between your apparent stances and the stances of eugenicists, though arguably there's a relevant distinction where you're thinking of harming children rather than social burdens, not sure. The overlap is that you think the state should make a bunch of decisions about individuals's reproduction according to what the state thinks is good, even if the individuals would strongly object and the children would have been fine.

So, first of all, I'm just not sufficiently sure that it's wrong to make your future child blind. I think it's wrong, but that's not a good enough reason to impose my will on others. Maybe in the future we could learn more such that we decide it is wrong, but I don't think that's happened yet. But if we're talking about forcibly erasing a type of person, it's not remotely enough to be like "yeah I did the EV calculation, being my way is better". For reference, certainly the state should prevent a parent from blinding their 5 year old; but the 5 year old is now a person. I acknowledge that the distinction is murky, but I think it's silly to ignore the distinction. Being already alive does matter.

Second of all, it's not just blind people. It's all the categories I listed and more. Are you going to tell gay people that they can't make their future child gay? Yeah? No? What about high-functioning autists? ADHD? Highly creative, high-functioning mild bipolar? How are you deciding? What criterion? Do you trust the state with this criterion? Should other people?

Why? A human body without a meaningful nervous system inside of it isn't a morally relevant entity, and it could be used to save people who are morally relevant.

Isn't that what I just said? Not sure whether or not we disagree. I'm saying that if you just stunt the growth of the prefrontal cortex, maybe you can argue that this makes the person much less conscious or something, but that's not remotely enough for this to not be abhorrent with a nonnegligible probability; but if you prevent almost all of the CNS from growing in the first place, maybe this is preferable to xenochimeric organs.

If I imagine myself growing up blind, and then I learned that my parents had engineered my genome that way, I would absolutely see that as a boundary violation and a betrayal of bedrock civility.

Fair enough, I think I would too. As I argued in the article, this is one mechanism by which the long-term results of genomic liberty are supposed to be good: children whose parents made genomic choices that weren't prohibited but maybe should have been, can speak out, both to convince other people to not make those choices, and to get new laws made.

But if you mean something stronger, "would turn down sight if it were offered for free", it seems obvious to me that any blind person expressing that view has something seriously wrong in their head in addition to the blindness,

Ok. And does this opinion of yours cause you to believe that if given the chance, you ought to use state power to, say, involuntarily sterilize such a person?

We don't let adults abuse children in any other way, even if the adult was subject to the same sort of abuse as a child and says they approve of it.

We do let adults coerce their children in all sorts of ways. It's considered bad to not force your child to attend school, which causes very many children significant trauma, including myself. Corporal punishment of children is legal in the US. I think it's probably quite bad for parents to do that, but we don't prohibit it.

We may have uncertainty about whether a particular person we can conceptualize will actually come to exist in the future, but if they do come to exist in the future, then they aren't hypothetical even now.

I agree with this morally, but not as strongly in ethical terms, which is why I listed it under ethics (maybe politically/legally would have been more to the point though).

So it absolutely does make sense to have laws to protect future people just as much as current people.

Not just as much, no, I don't think so. Laws aren't about making things better in full generality; they're about just resolution of conflict, solving egregious collective action problems, protecting liberty from large groups--stuff like that.

Blind people don't strike me as a "type of person" in the relevant sense. A blind person is just a person who is damaged in a particular way, but otherwise they are the same person they would be with sight.

That's nice. I bet we could find lots of examples of people with some condition that you would argue should be prohibited from propagating in this way, and who you'd describe as "just a person who is damaged in a particular way", and who would object to the state imposing itself on their procreative liberty. Are you disagreeing with this statement? Or are you saying that the state should impose itself anyway?

Such people are monsters. They are the enemy. Depriving them of the power to effectuate their goals is a moral crusade worth making enormous sacrifices for.

Ok. So to check, you're saying that a world with far fewer total blind / deaf / dwarf people, and with far greater total health and capability for nearly literally everyone including the blind / deaf / dwarfs, is not worth there being a generation of a few blind kids whose parents chose for them to be blind? That could be your stance, but I want to check that I understand that that's what you're saying. If so, could you expand? Would you also endorse forcibly sterilizing currently living people with high-heritability blindness, who intend to have children anyway?

If you are concerned the politics of advancing genetic engineering, suggesting that it might be ok seems like a blunder

Not sure what you mean by "ok" here. I would strongly encourage parents to not make this decision, I'd advocate for clinics to discourage parents from making this decision, I wouldn't object to professional SROs telling clinicians to not offer this sort of service, and possibly I'd advocate for them to do so. I don't think it's a good decision to make. I also think it should not be prohibited by law.

I predict any reasonable cost-benefit analysis will find that intelligence and health and high happiness-set-point are good, and blindness and dwarfism are bad.

This is irrelevant to what I'm trying to communicate. I'm saying that you should doubt your valuations of other people's ways of being--NOT so much that you don't make choices for your own children based on your judgements about what would be good for them and for the world, or advocate for others to do similarly, but YES so much that you hesitate quite a lot (like years, or "I'd have to deeply investigate this from several angles") before deciding that we (the state) ought to use state force to impose our (some political coalition's) judgements about costs and benefits of traits on other people's reproduction.

I think good arguments for "protection of genomic liberty for all" exist, but I don't think "there are no unambiguous good directions for genomes to go" is one of them.

I think it is a good argument. Since it's ambiguous, and it's not an interpersonal conflict, and there are (at least potentially) people with a strong interest in both directions for their own children, the state should be involved as little as is reasonable. This is a policy about which I think it would be more truthful to say "a world following this policy ought to be desirable, or at least not terribly objectionable, to the great majority of citizens".

If you don't protect people's propagative liberty, some people will have good reason to strongly object to that world.

If you do protect people's propagative liberty, some other people might believe they have good reason to strongly object. I discuss at least one acknowledged exception to the proposed protection here: https://www.lesswrong.com/posts/rxcGvPrQsqoCHndwG/the-principle-of-genomic-liberty#Propagative_liberty

But I'm arguing to those people that their objection should not be so strong that they ought to fight to prohibit, by law, this sort of propagative liberty.

Excellent! Thank you for researching and writing up this article.

A few notes, from my discussion with Morpheus:

- A single UPD has 1/23rd incorrect imprinting, so to speak. It's plausible that a fairly benign UPD has some small effect that's barely noticeable--but then if you have a zygote with many incorrect imprints, say 1/2 or 1/4 incorrect imprinting, that these effects would add up a lot and be quite detrimental, producing an epigenomic near-miss.

- Indeed, we might sort of suspect this by default. On the model that says "Paternal/Maternal imprints make you grow More/Less", having lots of missing imprints could make you grow way too much or way too little, whereas a few imprints might have only a small overall effect on growth.

- It's kinda curious that there's these large effects from single regions being misimprinted. Is it the case that the large effects are always caused by the version of imprinting that makes there be zero or near zero expression? This would make some sense; you're basically deleting a gene. But if not, what's going on with the large deleterious effects? (It's not crazy to think that ~2x overexpression would have deleterious effects; e.g. maybe that's what's happening with some/many trisomy disorders.)

- It would be nice to understand weaker deleterious effects from less-bad UPDs. But since there are very few UPD cases, they might be hard to detect.

- One approach could be analogous to the situation with the worst UPD disorders. There we can compare with genetic mutations that knockout (or upregulate) the gene in a way that corresponds to the UPD disorder. We could do the same by looking at people with genetic mutations in other regions believed to be sex-linked imprinting regions. There should be much larger cohorts of such cases compared to UPD cases. So we could maybe detect subtler health problems.

Thanks.

If one is going to create an organ donor, removing consciousness and self-awareness seems essential.

If you can't do it without removing almost all of the nervous system, I think it would be bad!

These are all worth doing if we can figure out how.

Possibly. I think all your examples are quite alarming in part because they remove a core aspect. Possibly we could rightly decide to do some of them, but that would require much more knowledge and deliberation. More to the point: I'm not making a strong statement like "prohibit these uses". I'm a weaker statement: "Genomic liberty doesn't really protect these uses, in the way it does protect propagation, beneficence, etc.". In other words, I'm just saying that those uses aren't in the territory that the principle of genomic liberty is trying to secure as its purview, as I'm proposing it.

On the negative externalities question, I actually strongly disagree with the counterexample of a blind couple choosing to blind their child. That's child abuse! It's no different than gouging your child's eyes out! Don't allow that!

I agree that it's a tough case, like several others of that type. I think there's both moral and ethical/political differences with harming a living child though. Some moral differences:

- Gouging the child's eyes out is much more of a boundary violation and betrayal of bedrock civility. They'd then have very good reason to treat whoever did that as abjectly hostile.

- You're causing a switch from one type of body to another; the growing consciousness was growing fitly for the first type, and you took that away, leaving that consciousness somewhat hanging.

- It could very well be that a sighted person approves of their life by their own lights and doesn't wish to be blind, and a blind person approves of their life by their own lights and doesn't wish to be sighted, but a sighted person who is blinded doesn't approve of that happening and wishes it weren't so.

- Diversity of capabilities is good. Blind people may think differently, and, as it were, see things you don't naturally see. Ditto for many other phenotypes. I think you get less of this benefit if you maim a child vs. through germline engineering (where the adaptation is from birth). (You can of course argue that this isn't enough of a benefit to genomically do it, and I'd certainly agree with this as a guess and wouldn't do that with my own child and wouldn't recommend others do it, but it's still a benefit.)

- See https://www.lesswrong.com/posts/JFWiM7GAKfPaaLkwT/the-vision-of-bill-thurston

An ethical difference:

- It makes sense to have laws that protect existing people; a right to not be killed or maimed is a very basic right for a state to enforce. This doesn't apply as much to a hypothetical future child, in terms of what it makes sense to have laws about.

A political difference:

- As I suggested, I think we ought to set the world up in such a way that the great majority of people don't have good reason to oppose the creation of germline engineering technology. If you start going "well, surely we have to stamp out this type of person" you're pretty quickly eroding / betraying that coalition. Hence propagative liberty as a tentpole principle.

A moral philosopher might also argue that it's less "person harming" to make an alteration before the child has begun growing, though I'm not sure what that's intended to mean.

(BTW I think you asking about entanglement sequencing caused me to a few days later realize that for chromosome selection, you can do at least index sensing by taking 1 chromosome randomly from a cell, and then sequencing/staining the remaining 22 (or 45), and seeing which index is missing. So thanks :) )

IMO a not yet fully understood but important aspect of this situation is that what someone writes is in part testimony--they're asserting something that others may or may not be able to verify themselves easy, or even at all. This is how communication usually works, and it has goods (you get independent information) and bads (people can lie/distort/troll/mislead). If a person is posting AIgen stuff, it's much less so testimony from that person. It's more correlated with other stuff that's already in the water, and it's not revealing as much about the person's internal state--in particular, their models. I'm supposed to be able to read text under the presumption that a person with a life is testifying to the effect of what's written. Even if you go through and nod along with what the gippity wrote, it's not the same. I want you to generate it yourself from your models so I can see those models, I want to be able to ask you followup questions, and I want you to stake something of the value of your word on what you publish. To the extent that you might later say "ah, well, I guess I hadn't thought XYZ through really, so don't hold me to account for having apparently testified to such; I just got a gippity to write my notions up quickly", then I care less about the words (and they become spammier).

If there are some skilled/smart/motivated/curious ML people seeing this, who want to work on something really cool and/or that could massively help the world, I hope you'll consider reaching out to Tabula.

I chatted with Michael and Ammon. This made me somewhat more hopeful about this effort, because their plan wasn't on the less-sensible end of what I uncertainly imagined from the post (e.g. they're not going to just train a big very-nonlinear map from genomes to phenotypes, which by default would make the data problem worse not better).

I have lots of (somewhat layman) question marks about the plan, but it seems exciting/worth trying. I hope that if there are some skilled/smart/motivated/curious ML people seeing this, who want to work on something really cool and/or that could massively help the world, you'll consider reaching out to Tabula.

An example of the sort of thing they're planning on trying:

1: Train an autoregressive model on many genomes as base-pair sequences, both human and non-human. (Maybe upweight more-conserved regions, on the theory that they're conserved because under more pressure to be functional, hence more important for phenotypes.)

1.5: Hope that this training run learns latent representations that make interesting/important features more explicit.

2: Train a linear or linear-ish predictor from the latent activations to some phenotype (disease, personality, IQ, etc.).

IDK if I expect this to work well, but it seems like it might. Some question marks:

- Do we have enough data? If we're trying to understand rare variants, we want whole genome sequences. My quick convenience sample turned up about 2 million publicly announced whole genomes. Maybe there's more like 5 million, and lots of biobanks have been ramping up recently. But still, pretending you have access to all this data, this means you see any given region a couple million times. Not sure what to think of that; I guess it depends what/how we're trying to learn.

- If we focus on conserved regions, we probably successfully pull attention away from regions that really don't matter. But we might also pull attention away from regions that sorta matter, but to which phenotypes of interest aren't terribly sensitive to. It stands to reason that such regions or variants wouldn't be the most conserved regions or variants. I don't think this defeats the logic, but it suggests we could maybe do better. Example of other approaches: upvote regions that are recognized as having the format of genes or regulatory regions; upvote regions around SNPs that have shown up in GWASes.

- For the exact setup described above, with autoregression on raw genomes, do we really learn much about variants? I guess what we ought to learn something about is approximate haplotypes, i.e. linkage disequilibrium structure. The predictor should be like "ok, at the O(10kb) moment, I'm in such-and-such gene regulatory module, and I'm looking at haplotype number 12 for this region, so by default I should expect the following variants to be from that haplotype, unless I see a couple variants that are rarely with H12 but all come from H5 or something". I don't see how this would help identify causal SNPs out of haplotypes? But it could very well make the linear regression problem significantly easier? Not sure.

- But also I wouldn't expect this exact setup to tell us much interesting stuff about anything long-range, e.g. protein-protein interactions. Well, on the other hand, possibly there'd be some shared representation between "either DNA sequence X; or else a gene that codes for a protein that has a sequence of zinc fingers that match up with X"? IDK what to expect. This could maybe be enhanced with models of protein interactions, transcription factor binding affinities, activity of regulatory regions, etc. etc.

More generally, I'm excited about someone making a concerted and sane effort to try putting biological priors to use for genomic predictions. As a random example (which may not make much sense, but to give some more flavor): Maybe one could look at AlphaFold's predictions of protein conformation with different rare genetic variants that we've marked as deleterious for some trait. If the predictions are fairly similar for the different variants, we don't conclude much--maybe this rare variant has some other benefit. But if the rare variant makes AlphaFold predict "no stable conformation", then we take this as some evidence that the rare variant is purely deleterious, and therefore especially safe to alter to the common variant.

Something I'd like WBE researchers to keep in mind: It seems like, by default, the cortex is the easiest part to get a functionally working quasi-emulation of, because it's relatively uniform (and because it's relatively easier to tell whether problem solving works compared to whether you're feeling angry at the right times). But if you get a quasi-cortex working and not all the other stuff, this actually does seem like an alignment issue. One of the main arguments for alignment of uploads would be "it has all the stuff that humans have that produces stuff like caring, love, wisdom, reflection". But if you delete a bunch of stuff including presumably much of the steering systems, this argument would seem to go right out the window.

I don't know what they have in mind, and I agree the first obvious thing to do is just get more data and try linear models. But there's plenty of reason to expect gains from nonlinear models, since broadsense heritability is higher than narrowsense, and due to missing heritability (though maybe it ought to be missing given our datasets), and due to theoretical reasons (quite plausibly there's multiple factors, as straw!tailcalled has described, e.g. in an OR of ANDs circuit; and generally nonlinearities, e.g. U-shaped responses in latents like "how many neurons to grow").

My guess, without knowing much, is that one of the first sorts of things to try is small circuits. A deep neural net (i.e. differentiable circuit) is a big circuit; it has many many hidden nodes (latent variables). A linear model is a tiny circuit: it has, say, one latent (the linear thing), maybe with a nonlinearity applied to that latent. (We're not counting the input nodes.)

What about small but not tiny circuits? You could have, for example, a sum of ten lognormals, or a product of ten sums. At a guess, maybe this sort of thing

- Captures substantially more of the structure of the trait, and so has in its hypothesis space predictors that are significantly better than any possible linear PGS;

- is still pretty low complexity / doesn't have vanishing gradients, or something--such that you can realistically learn given the fairly small datasets we have.

[The Memes] Maybe the "Yes" people are buying "Yes" for the lulz. It's kinda fun to tell people that you bet that Jesus Christ would return this year!

Maybe you mean this expansively, but it doesn't ring true to me as stated because my main guess for many / most bettors would be more serious: they're "manifesting" or "hyperstitioning"--or more generally, they think there's some good effect from nudging other peoples's sense of what is other peoples's investment in 2nd-coming worlds.

Downvoting because it seems like you've barely read anything I wrote and also don't know anything about genetics or intelligence, and are now posting AI slop, but I will upvote a thoughtful post making an argument using information and logic that address why people think it might work.

Yeah this seems like an important question. I'm not sure what to think. Ideally someone with more background in medical ethics could address this. E.g. I'm not sure how to navigate what would happen if, for example, law enforcement claimed it needed access to some info (e.g. to enforce regulations about germline engineering, or to use in forensic investigation of a crime); or if there were a malpractice suit about a germline engineering clinic, or something. I'm also not sure what is standardly done, and what the good and bad results are, in situations where a child might have an interest in their parents not sharing some info about them.

But certainly, in a list of innovation-positive ethical guidelines for scientists and clinicians regarding germline engineering, some sort of strong protection of privacy would have to be included. This is a good point, thanks.

Yeah I'm not, like, trying to sneak this in as a law or something. It's a proposed policy principle, i.e. a proposed piece of culture.

My main motive here is just to figure out what a good world with germline engineering could/would look like, and a little bit to start promoting that vision as something to care about and work towards. I agree that practical technology will push the issue, but I think it's good to think about how to make the world with this technology good, rather than just deferring that. Besides the first-order thing where you're just supposed to try to make technology end up going well, it's also good to think about the question for cooperative reasons. For one thing, pushing technology ahead without thinking about whether or how it will turn out well is reckless / defecty, and separately it looks reckless / defecty. That would justify people pushing against accelerating the technology, and would give people reason to feel skittish about the area (because it contains people being reckless / defecty). For another thing, having a vision of a good world seems like it ought to be motivating to scientists and technologists.

near-evolutionarily-optimal range. That has not happened with intelligence,

What makes you think this? As I said, it's not clear to me that there's been much selection pressure for intelligence in the past few thousand years.

Also, the "evolutionary optimum" can change. E.g. calories are not much of a problem in the developed world, but that's recent.

Also, there's always an influx of de novo mutations, and evolution has limited selection power. I'm not clear on the math here exactly, and I think kman has suggested that mutational load isn't the main source of IQ-associated SNPs, but it demonstrates that it's far from ironclad logic to infer from evolutionary pressure on a trait that the trait should be near optimum in linear variants. The brain is one of the organs with the most diverse gene expression profile (I mean, more genes are expressed in the brain than in most other tissues); and IIRC most genes are expressed in the brain (not confident of this, maybe it's more like 1/3 or 1/2. But anyway, there's a lot of genes potentially relevant to brain function, so there's a lot of surface area for mutational load to drag things down a bit.

genes are not simply choosing a level of intelligence.

I don't know what you mean by this. Are you talking about pleiotropy? Between what and what? I mean of course genes do lots of things, but IIUC so far as we've observed, the correlations between most measured traits are pretty small (and usually positive between traits most people would judge desirable, e.g. lower risk of mental illness and higher intelligence).

I'll repeat that I'm not very learned about genetics, so if you want to convince even me in particular, the best way is to respond to the strongest case, which I can't present. But ok:

First I'll say that an empirical set of facts I'd quite like to have for many traits (disease, mental disease, IQ, personality) would be validation of tails. E.g. if you look at the bottom 1% on a disease PRS, what's the probability of disease? Similarly for IQ.

or beyond those?

I rarely make claims about going much beyond natural results; generally I think it's pretty plausible there's some meaningful thing we could feasibly do that's like +6 -- +8 SDs on intelligence, but I'm much less confident about the +8 SD claim, and not super confident of the +6 SD. Like, I think the default expectation ought to be that we can meaningfully get to +6 SDs; this seems like the straightforward conclusion. (I'm just restating the intuition / impression.)

Why do you expect that only a very small fraction of natural SNP differences are needed to get the extremes of natural results

Assuming linearity, the math is fairly straightforward. In the simplest model, with 10,000 fair +1/-1 coins (representing all the variance in a trait, so some coins are environmental), an SD is 50 coins and the average is 5,000. So there's 100 SDs of variance available. Obviously this is mostly meaningless in terms of the trait, as linearity would not remotely hold, but my point is that the issue isn't the math of additive selection. See here for more (e.g. about if the coins are biased https://tsvibt.blogspot.com/2022/08/the-power-of-selection.html#7-the-limits-of-selection ).

IQ seems to have thousands of small contributions from different regions. 10% of the variance is therefore in the ballpark of 10 trait SDs. Again, I'm not saying you can get to 250 IQ; what I'm saying is that the math of selection and variance isn't the problem. Lee et al. state "In the WLS, the MTAG score predicts 9.7% of the variance in cognitive performance[...]"; this was in 2018, I would bet we can do substantially better now.

Lee, James J., Robbee Wedow, Aysu Okbay, Edward Kong, Omeed Maghzian, Meghan Zacher, Tuan Anh Nguyen-Viet, et al. ‘Gene Discovery and Polygenic Prediction from a 1.1-Million-Person GWAS of Educational Attainment’. Nature Genetics 50, no. 8 (August 2018): 1112–21. https://doi.org/10.1038/s41588-018-0147-3.

Why do you expect that effects would be linear?

TBC I certainly don't expect the effects to be literally linear even in the typical human range; it's more that expect them to be fairly linear. Like if the answer is that a trait-mean couple that selects their child's genome to have a (carefully, accurately as best anyone can) predicted IQ of mean 170 actually tends to have a child with mean IQ 155, I'd shrug and be like "huh, that's weird and surprising, let's investigate and make sure to communicate this fact to parents"; and I think this possibility is nontrivially strategically relevant; and it means we should accurately describe this plausible outcome, in order to not overhype etc.; but I wouldn't be totally shocked. If the child tends to have a mean IQ of 125, I would be shocked, yeah. (The 170 vs. 155 thing would be hard to notice for a while because testing intelligence at that range is barely feasible, but just saying for illustration.)

Also, certainly I would expect strong nonlinearities at the extreme tails at some point. I'd certainly strongly advice against, maybe even condemn, pushing noticeably outside the regime of adaptedness.

Why do I expect the effects to be fairly linear in the human envelop, to the point where increasing a bunch of causal variants increases the trait?

Some of my impressions come from here: https://arxiv.org/pdf/1408.3421 "On the genetic architecture of intelligence and other quantitative traits", Stephen D.H. Hsu, 2014

and from https://gwern.net/embryo-selection

(TBC, I'm not saying these sources present the strongest arguments; I'm just saying where my impressions historically come from.)

- Breeding programs in non-humans work well, so there's plenty of variance for those traits, no huge nonlinear walls that you hit, etc. This is far from dispositive; intelligence could plausibly be different from traits like egg production or weight, maybe it's important that you're checking along the way, etc.

- Linear PGSes work well for many traits (height in humans; various traits for cows I think; even IQ, up to 10%).

- SNP heritability estimates are substantial; the number in my head for IQ is >.3 of the variance. Though my impression is also that these are maybe controversial? I dunno.

- It's far from obvious to me that there's been much selection for IQ in the past couple thousand years. But if there were a case that the selection has been strong, that would shift me.

- There's a theoretical argument, which IDK if it should hold much weight, but I like it: since DNA segments get shuffled around a lot, there's selection pressure for things to work reasonably well with other things. E.g. DNA segments that have really bad effects when combined with some other segments would be selected against; and DNA segments that improve mechanisms for repairing/smoothing-out/compensating bad epistases between other segments will be selected for. In general this smooths things, which makes the landscape more linear. (I understand that specific epistases are much rarer than single variants, and therefore relatively invisible to selection; but I think my point stands somewhat, though this could be clarified and maybe basically disproven with good quantitative analysis.)

- I just haven't seen evidence of this nonlinear wall that's right between 140 and 160, or whatever the claim is. It's just people saying "maybe there's a U-shaped curve of something" or "maybe there's a high fan-in latent with a cutoff after the latent in the final IQ sum" which makes sense, but AFAIK is basically just speculation. It also isn't super compelling speculation if we're talking about a super polygenic trait in a super-complex organ where I'd expect there to be lots and lots of ways to tune and fix and just upregulate stuff. Like, my actual guess would be that there's a whole spectrum of functional forms, from linear (substantial, according to h estimates!) through small ORs of ANDs, through large ORs of highly sensitive ANDs and other nonlinear forms; and these are all mixed together; and this does imply something; but it doesn't imply that you can't have quite large effects on the trait with germline engineering.

- My impression is that the upper tail of IQ does get a little weird, and maybe g stops existing as much / the distribution of different tests stops being as one-dimensional? But IIUC (not sure, heard this from a psychometrician) there's no observed threshold in the effect of IQ on other traits, despite people looking, though it's quite hard to measure past 150ish. And e.g. this random paper claims to find quite substantial SNP heritability in a cohort with estimated rarity >4 SDs, though it's not a huge cohort (1238 in the selected cohort) and I didn't study it so maybe it's very flawed / meaningless, IDK. https://www.nature.com/articles/mp2017121 In other words, to the small extent that we can look at the extreme tails, I at least haven't heard of big results saying "aha! actually the genetics of IQ on the tails is quite different than near the mean!".

- (Also there's sibling studies that IIUC say we are indeed picking up causality, though that's not directly relevant to linearity.)

I'm basing this off of selection, not editing. I haven't looked into the genetics stuff very much, because the bottleneck is biotech, not polygenic scores.

Would look forward to your rebuttal! I just hope you'll respond to the strongest arguments, not the weakest. In particular, if you want to argue against the potential effectiveness of selection methods, I think you'd want to either argue that PGSes aren't picking up causal variants at all (I mean, that there's a large amount of correlation that isn't causation); or that the causality would top out / have strongly diminishing returns on the trait. Selection methods would capture approximately all of the causal stuff that the PGS is picking up, even if it's not even due to SNPs but rather rarer SNVs. (However, this would not apply to population stratification or something; then I'd think you'd want to argue that this is much / most of what PGSes are picking up, and there'd be already-made counterarguments to this that you should respond to in order to be convincing.)

What I meant was smart people having more kids.

I mean, I'd encourage most people in general to choose to have kids, but yeah, trying to influence other people's reproductive choices on the level of persons is creepy and eugenicsy; it's much more practically and motivationally contiguous with even creepier and eugenicsier stuff such as racist immigation policies, etc.

but in what sense is that true that's not true of, say, your smarter relative having the kids instead?

In a huge quantitative sense. You and I differ at very roughly 4 million SNVs (maybe more like 5-10, but 4 is a convenient number). My sibling and I differ at roughly half that, 2 million SNVs. If I had a child without germline engineering, I'd pass on 2 million SNVs. If it's my sibling's child, it's 1 million SNVs.

If I use selection, I'm still passing on 2 million SNVs, though in a way selected for association with some traits--but the selection is pretty weak in the scale of SNVs; it'd correspond, morally, to something like hundreds or thousands of edits, I think (haven't gone through this carefully). In other words, O(0.1%), even if you count the alteration as being totally unrelated DNA. Similarly for editing. You could have a 180 IQ super-healthy kid with a natural lifespan of 110 years, with a genome that would be nearly indistinguishable from being your non-GE'd child. (Unless you whole genome sequence them and find a surprising coincidence of health and IQ alleles drawn from your own genome / a couple dozen SNPs that weren't in either parent.)

I mean, if you don't care about that, God bless you. I think most people do care about it though.

We already know how to get smarter kids

What are you refering to? It's true that there are companies currently offering embryo screening based on polygenic scores, in at least one case including IQ. These methods are fairly weak though. (I mean they're cool, and could have significant impact on diseases because for diseases the initial genomic vectoring is the most impactful on absolute disease risk. But they won't e.g. make tens of thousands of world-class intellects. See https://www.lesswrong.com/posts/2w6hjptanQ3cDyDw7/methods-for-strong-human-germline-engineering#Method__Simple_embryo_selection .)

People also do mate choice, but this is, while not exactly zero-sum, kinda zero-sum, and still doesn't get you super-healthy long-lived world-class intellects with high probability. Or do you mean more broadly education and stuff?

Do you expect that to change? Why?

Generally the basic reason I expect it to change is that the technology will have big benefits, so people will think about how to do it without also genociding people.

By default, I expect it to change, though it could happen pretty slowly. Or rather, it could be quite delayed. That seems to already be the case; I think if we'd wanted to make this technology, and had wanted that for, say, 20 years, we could probably have already had it.

I somewhat expect that once the first quite noticeable germline engineering is proven out, people will want to use the technology themselves. E.g. you have a bunch of 17-year-olds who are already quite impressive intellectually, and not in a kinda-cringe prodigy way but in an actual way; and you have 10000 people age 0-20 who have a noticeably miniscule rate of death due to disease (of course they'd still die from accidents and such).

Also, it's worth noting that public sentiment about germline genomic engineering is much better described as "quite mixed" rather than "anti". I want to do a more thorough review later, but the few polls I've seen get favorability numbers for germline engineering between 20% and 70% (depending on the question, e.g. about preventing disease vs. increasing intelligence or other capacities; and depending on the country). The few debates I've watched are similarly, coarsely, half and half.

I hope to help this process along at least a bit, though of course I probably can't do much. Partly it's just communicating what the tech will do, and how we know that, etc. Partly it's communicating about misconceptions. Partly it's making the tech actually be beneficial; a big thing here is making it strong (hence effective and greatly beneficial to individuals; and also cheap, and therefore widely accessible; see https://www.lesswrong.com/posts/2w6hjptanQ3cDyDw7/methods-for-strong-human-germline-engineering#Strong_GV_and_why_it_matters ). Partly it's thinking about to set up society to avoid possible socially emergent downsides. (Yes, I understand I contribute at most a very small amount to "setting up society"; the idea is to contribute to figuring out what that could look like, to be beneficial.)

current societies have comprehensively decided that eugenics programs are bad

I'm still unsure, and curious to learn, what people actually object to. (My current list is here, though it's trying to be complete rather than emphasizing what people most care about: https://berkeleygenomics.org/articles/Potential_perils_of_germline_genomic_engineering.html .) The original, and most abhorrent, things that are called eugenics, are actually bad. But they are in most ways diametrically opposed to genomic emancipation. There is overlap, which people could be rightly worried about--namely, generally caring about genes enough to somehow intervene on some genes. But the logic is fundamentally opposed: eugenics says:

Everyone should have children with Good genes, so that We/I don't have to live with the negative externalities of Your bad genes (/race).

whereas genomic emancipation (in its pure, and therefore not completely workable, form) says

Everyone should be empowered to have as much (well-informed, high-precision, skillful) influence over their future child's genome, so that I can give My child a good life by My lights, and You can give Your child a good life by Your lights, which will probably be good because empowered pursuit of diverse human goods is probably good in aggregate.