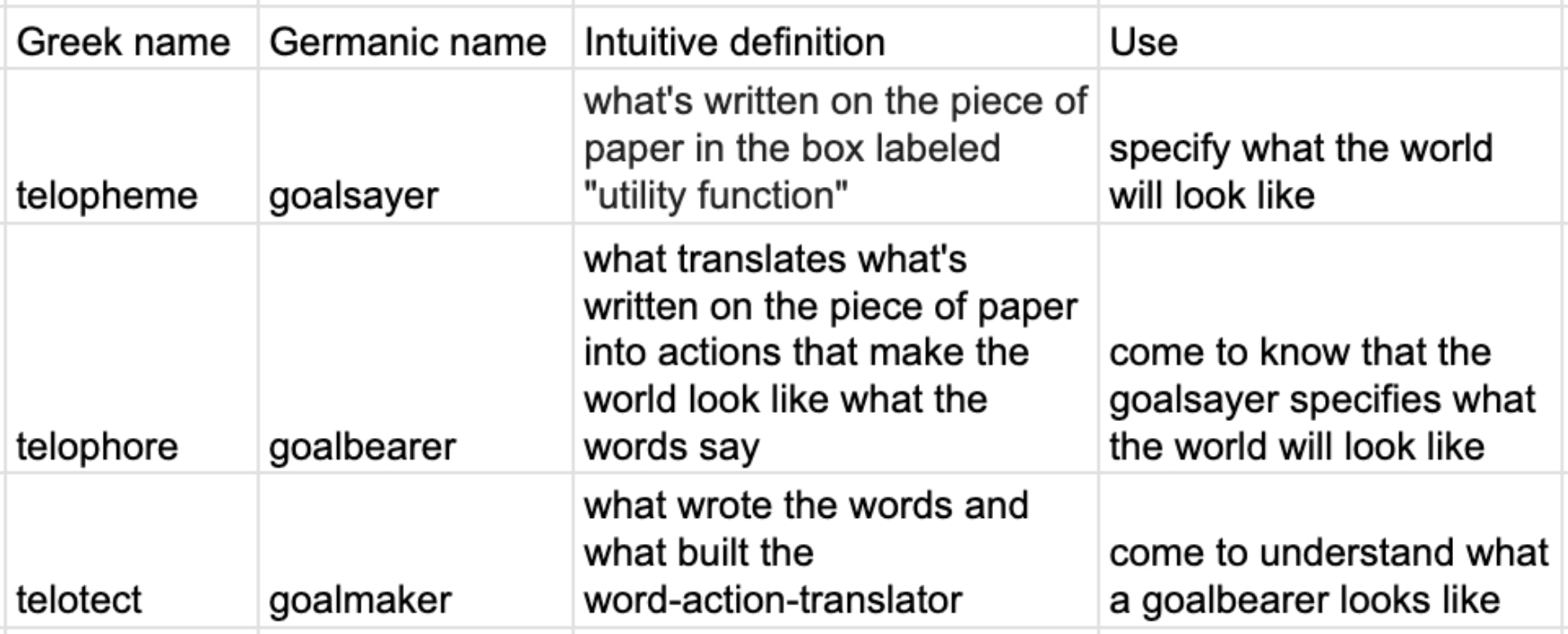

Telopheme, telophore, and telotect

post by TsviBT · 2023-09-17T16:24:03.365Z · LW · GW · 7 comments

[Metadata: crossposted from https://tsvibt.blogspot.com/2023/06/telopheme-telophore-and-telotect.html. First completed June 7, 2023.]

To come to know that a mind will have some specified ultimate effect on the world, first come to know, narrowly and in full, what about the mind makes it have effects on the world.

The fundamental question

Suppose there is a strong mind that has large effects on the world. What determines the effects of the mind?

What sort of object is this question asking for? Most obviously it's asking for a sort of "rudder" for a mind: an element of the mind that can be easily tweaked by an external specifier to "steer" the mind, i.e. to specify the mind's ultimate effects on the world. For example, a utility function for a classical agent is a rudder.

But in asking the fundamental question that way (asking for a rudder), that essay loses its grasp on the slippery question, and the real question withdraws. The section of that essay on The word "What", as in ¿What sort of thing is a "what" in the question "What determines a mind's effects?", brushes against the border of this issue but doesn't trek further in. That section asks:

What sort of element can determine a mind's effects?

It should have asked more fully:

What are the preconditions under which an element can (knowably, wieldily, densely) determine a mind's effects?

That is, what structure does a mind have to possess, so that there can be an element that determines the mind's ultimate effects?

To put it another way: asking how to "put a goal into an agent" makes it sound like there's a slot in the agent for a goal; asking how to "point the agent" makes it sound like the agent has the capacity to go in a specified direction. Here the question is, what does an agent need to have, if it has the capacity to go in a specified direction? What is the mental context in which a goal unfolds so that the goal is a goal? What do we necessarily think of an agent as having or being, when we think of the agent as pursuing a goal?

Synopsis

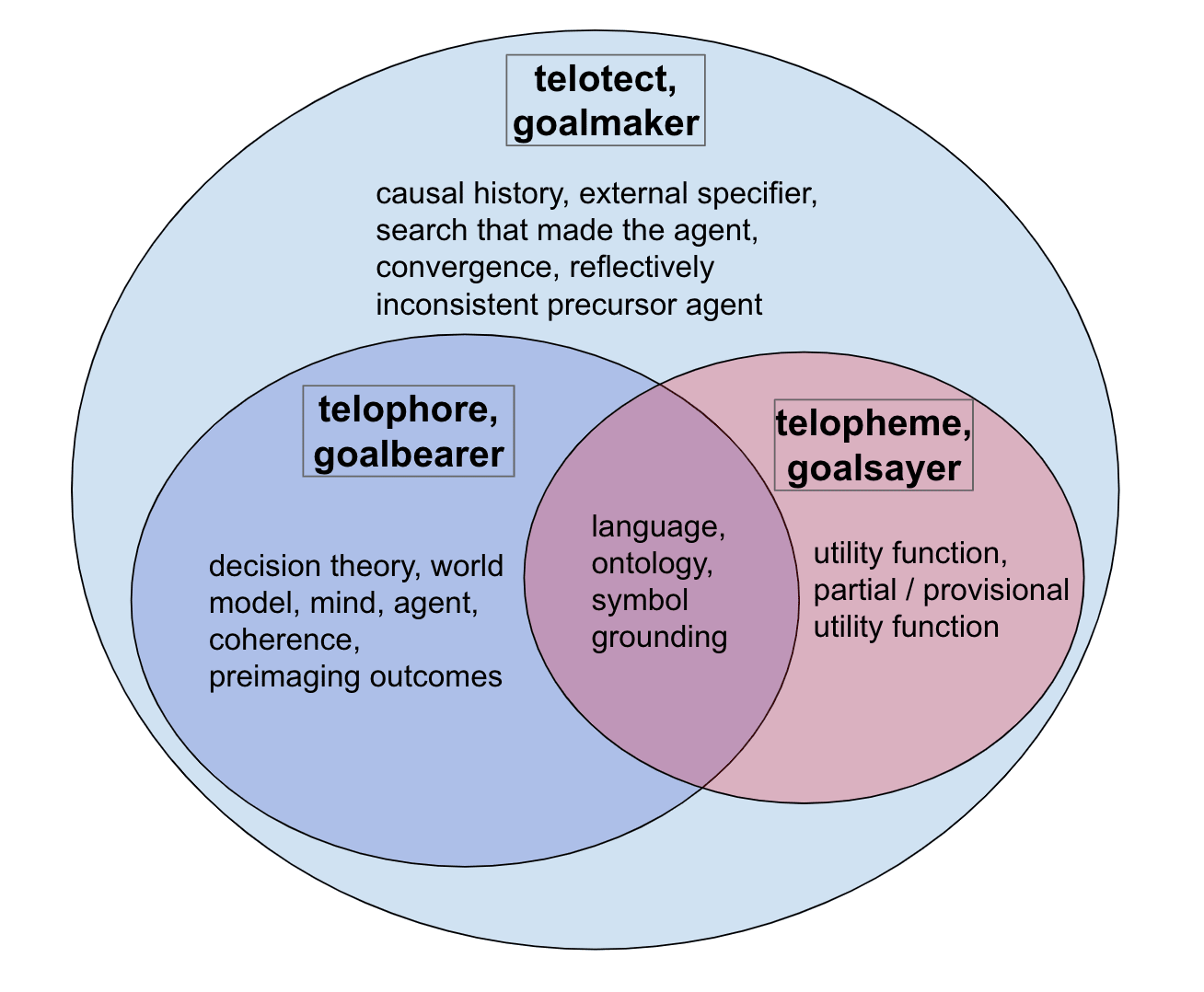

Telopheme

The rudder, the element that determines the mind's ultimate effects, is a telopheme. The morpheme "telo-" means "telos" = "goal, end, purpose", here meaning "ultimate effects". The morpheme "-pheme" is like "blaspheme" ("deceive-speak"). ("Telopheme" is probably wrong morphology and doesn't indicate an agent noun, which it ought to do, but sadly I don't speak Ancient Greek.) So a telopheme is a goal-sayer: it says the goal, the end, the ultimate effects.

For example, a utility function for an omnipotent classical cartesian agent is a telopheme.

- The utility function says how good different worlds are, and by saying that, it determines that the ultimate effect of the agent will be to make the world be the world most highly scored by the utility function.

- Not only does the utility function determine the world, but it (perhaps) does so densely, knowably, and wieldily.

  - Densely: We can imagine that the utility function is expressed compactly, compared to the complex behavior that the agent executes in pursuit of the best world as a consequence of preimaging the best world through the agent's world-model onto behavior.

  - Knowably: The structure of the agent shows transparently that it will make the world be whatever world is best according to the utility function.

  - Wieldily: IF (⟵ big if) there is available a faithful interpretation of our language about possible worlds into the agent's language about possible worlds, then we can (if granted access) fluently redirect the agent so that the world ends up as anything we choose by saying a new utility function with that world as the maximum.
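As a rough illustration of the utility-function example, here is a minimal sketch of a toy "omnipotent" agent. Everything in it is a hypothetical stand-in: the two-action world, the names `world_model`, `ultimate_effect`, `forest_utility`, and `balanced_utility`, and the assumption that the world model is exactly right. The point is only that the utility function is a few lines, while the effect on the world comes from preimaging the best world through the world model onto a policy.

```python
from itertools import product
from typing import Callable, Tuple

World = Tuple[int, int]        # (trees, houses): a hypothetical toy world state
Policy = Tuple[str, ...]
ACTIONS = ("plant", "build")   # hypothetical action set


def world_model(policy: Policy) -> World:
    """Toy world model, assumed correct: the world is the tally of actions taken."""
    return (policy.count("plant"), policy.count("build"))


def ultimate_effect(utility: Callable[[World], float], horizon: int = 4) -> World:
    """Pick the policy whose predicted world scores highest; return that world."""
    policies = product(ACTIONS, repeat=horizon)
    best_policy = max(policies, key=lambda p: utility(world_model(p)))
    return world_model(best_policy)


def forest_utility(world: World) -> float:
    """Scores a world by its number of trees."""
    return world[0]


def balanced_utility(world: World) -> float:
    """Scores a world by how evenly trees and houses are matched."""
    return -abs(world[0] - world[1])


print(ultimate_effect(forest_utility))    # (4, 0)
print(ultimate_effect(balanced_utility))  # (2, 2)
```

Swapping `forest_utility` for `balanced_utility` redirects the outcome without touching anything else, which is the "wieldy" property in miniature. The sketch gets this for free only because the specifier and the agent share one trivial language about worlds, which is exactly the big "IF" above.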

There's a hidden implication in the name "telopheme". The implication is that ultimate effects are speakable.

Telophore

The minimal sufficient preconditions for a mind's telopheme to be a telopheme are the telophore (or telophor) of the telopheme. Here "-phore" means "bearer, carrier" (as in "phosphorus" = "light-bearer", "metaphor" = "across-carrier"), in the sense of "one who supports, one who bears the weight". So a telophore is a goal-bearer: it carries the telopheme, it constitutes (supports) the goal-ness of what the telopheme says, it unfolds the telopheme into action and effect, it makes it the case that the telopheme says the ultimate effects of the mind. (The telophore gives the mind substantial-ultimate-effect-having-ness, which could be called "telechia", "telos-having-ness", cf. entelechy.) An alternative name for telophores would be "agency-structures", but "agency" is ambiguous, and it emphasizes action-taking rather than effect-having.

Minimality

Continuing the example of an omnipotent cartesian classical agent, the telophore is murkier than the telopheme. It seems to require the whole rest of the agent besides the utility function: the world-model, the policy generator, and the search procedure applying to possible worlds.

Taking the whole rest of the agent as a telophore is casting too wide a net. It doesn't give us any traction on understanding the agent; it says that to come to know that the telopheme is a telopheme, we have to come to know everything about the whole agent. A telophore is more narrow. A telophore is a set of elements that is sufficient to support that the telopheme determines the mind's ultimate effects, and is minimal with that property, i.e. a strict subset wouldn't suffice.
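The "sufficient, and minimal with that property" condition can be made concrete with a small sketch. It assumes, purely for illustration, that a mind's elements can be listed as labels and that sufficiency is a checkable predicate on sets of labels. The labels, `NEEDED`, and `suffices` below are hypothetical; nothing here says how to obtain such a predicate for a real mind.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable, List


def minimal_sufficient_sets(
    elements: Iterable[str],
    suffices: Callable[[FrozenSet[str]], bool],
) -> List[FrozenSet[str]]:
    """Return every subset that suffices while none of its strict subsets does."""
    items = sorted(elements)
    found: List[FrozenSet[str]] = []
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            if not suffices(frozenset(combo)):
                continue
            # Minimal means: no strict subset of this candidate suffices.
            strict_subsets = (
                frozenset(sub)
                for k in range(size)
                for sub in combinations(combo, k)
            )
            if not any(suffices(sub) for sub in strict_subsets):
                found.append(frozenset(combo))
    return found


# Hypothetical element labels for a toy agent.
AGENT = {"accurate_predictions", "policy_generator", "policy_search", "stored_observations"}
# Assumed for illustration: these three are what the telopheme actually relies on.
NEEDED = frozenset({"accurate_predictions", "policy_generator", "policy_search"})


def suffices(subset: FrozenSet[str]) -> bool:
    """Toy sufficiency predicate: the subset contains everything in NEEDED."""
    return NEEDED <= subset


whole_agent = frozenset(AGENT)
candidates = minimal_sufficient_sets(AGENT, suffices)
print(suffices(whole_agent), whole_agent in candidates)  # True False
print(candidates == [NEEDED])                            # True
```

The whole agent is sufficient but not minimal, so it is not returned; only the narrower set is. The brute-force enumeration is exponential in the number of elements and is meant as a statement of the definition, not as a practical method.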

As an example of how a telophore could be more narrowly identified, consider an agent's world model:

- To understand the agent's telophore, do we have to understand the agent's whole world model, down to the last detail? No, it suffices to understand something about the world model, namely that it makes correct predictions about the consequences of possible action-policies the agent could follow.

- As a concrete example of how we can get leverage on understanding a mind: Suppose the agent has a Bayesian world model. It has a probability distribution over a computationally-general set of hypotheses that it updates by comparing each hypothesis's predictions against observation. If we know that the agent does this, then we don't need to see all the observations and all the updates that the agent makes: we can tell that the agent will (barring possession by manifested hypothetical demons) come to correct beliefs about things that emanate evidence.

- Similarly, we can know that a theorem prover will reach conclusions validly implied by the axioms, even if we don't know what those conclusions are.
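The Bayesian example can be sketched in a few lines. This is a deliberately tiny stand-in: three fixed coin-bias hypotheses instead of a computationally-general hypothesis set, a made-up observation stream, and hypothetical names (`bayes_update`, `posterior_after`). It assumes the true hypothesis is among those considered.

```python
from typing import Dict, Iterable


def bayes_update(prior: Dict[float, float], observation: str) -> Dict[float, float]:
    """One Bayesian update: weight each hypothesis by how well it predicted the flip."""
    unnormalized = {
        bias: p * (bias if observation == "H" else 1.0 - bias)
        for bias, p in prior.items()
    }
    total = sum(unnormalized.values())
    return {bias: weight / total for bias, weight in unnormalized.items()}


def posterior_after(prior: Dict[float, float], observations: Iterable[str]) -> Dict[float, float]:
    """Fold a stream of observations into the prior, one update at a time."""
    belief = dict(prior)
    for obs in observations:
        belief = bayes_update(belief, obs)
    return belief


# Hypotheses: the coin lands heads with probability 0.2, 0.5, or 0.8 (uniform prior).
prior = {0.2: 1 / 3, 0.5: 1 / 3, 0.8: 1 / 3}
# Made-up evidence stream: 160 heads and 40 tails, i.e. an 80% heads rate.
observations = list("HHHHT" * 40)

belief = posterior_after(prior, observations)
best = max(belief, key=belief.get)
print(best)  # 0.8
```

Knowing only the update rule and that the evidence keeps arriving, one can predict that the belief will settle on the hypothesis matching the heads rate, without inspecting any individual flip or intermediate posterior. That is the kind of leverage the bullets above describe.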

So the telophore can in some cases be narrowed down from the whole mind, by excluding the understanding that the mind gains through processes which transparently are going to gain that understanding.

Sufficiency

- "What unfolds the telopheme into action and effect" sounds like it describes specifically the agent's decision theory. But the agent's decision theory isn't sufficient because a decision theory without a world model can't translate a goal-statement into effects. So the telophore is not (contained in) the decision theory.

- Suppose there is a strong mind M that is corrigible by a human. That means that the human can arbitrarily modify M. So, if there is a telopheme, its telophore is extended across both M and the human. Something entirely contained in M wouldn't explain how it survived the human's scrutiny. Without explaining how it persists to translate the telopheme into effects on the world, it's not sufficient to make the telopheme a telopheme.

Limits

- A telopheme has to have a telophore. Otherwise it's just an inscription on a box labeled "utility function".

- An edge case: suppose that an agent's utility function is encrypted, so that external observers can't predict how the world will end up, and can't specify how the world will end up without learning how the telophore will decrypt the telopheme.

  - Is the encrypted utility function a telopheme? Is the as-if telophore a telophore?

  - What if, more generally than encryption, the telopheme is inexplicit or provisional?

- I suspect that nearly all minds have a telophore, even if they don't have a telopheme.

  - The telophore forms because of a general tendency towards coherence in minds on a trajectory of creativity.

  - The telophore is what coheres out of the self-reaffirming tendencies of the mind, the autoclines.

  - A telophore without a telopheme is maybe like an uncathected drive. For example, a human might have a drive to protect their children from harm. If they don't have children, that drive is uncathected; it's there in some sense, but doesn't have the same type signature as a goal-pursuit. Cathexis or imprinting is like fixing the free parameters of the telopheme. After cathexis, the drive is a goal-pursuit: protect this child. [Edit: This now seems like a strange way of saying things, and I'd rather say that the telopheme is not well-separated from the telophore because the telophore is a kind of meta-preference, e.g. a meta-preference for coherent preferences or a meta-preference for cathecting proleptic-telophemes according to certain rules.]

Telotect

Humans do not have a telopheme, at least not yet. Minds that we encounter may also not have a telopheme, at least at first. For this reason, the fundamental question asks about natural, autonomously growing minds. The question asks about what constructs the telophore and what writes the telopheme, so that the full telophore is revealed.

The answer to that question is a mind's telotect. "-tect" = "carpenter, builder", as in "architect". A telotect is a goalmaker: it makes the telopheme and the telophore, the structures that say and bear the goal into the world.

It might be better to separate these two operations, but separating them might not be feasible, because minds might naturally come with the telophore-maker and the telopheme-writer intertwined. The simple-seeming definition of telotect is: the telotect is all the elements (forces, processes, selection, decisions, optimization) that make it end up the case that the mind makes X happen. The fact that the mind makes X happen could be broken up into

- that the mind makes something specific happen (the telophore), and

- that the "something specific" is X (the telopheme),

but the simpler fact is that the mind makes X happen.

Examples

- Suppose a classical agent decides according to causal decision theory (CDT). Then that agent self-modifies to instead decide according to some more subtle procedure, Son-of-CDT, which makes different decisions in some situations. The fact that CDT self-modifies radically——by its own will it removes what makes it CDT——shows that CDT is not a telophore for the agent's utility function. But since CDT is very involved with creating Son-of-CDT, which (arguably) is the main element of the agent's telophore, CDT is a main element of the agent's telotect.

- New "rules of the game" tend to be elements of the telotect, and sometimes are elements of a resulting telophore. For example, the constitution of a new form of government, an amendment to the rules of a game of Nomic, the discovery of a law of epistemics.

- A search (such as biological evolution) that finds a mind (such as humanity) is part of the telotect and (perhaps, mostly) not part of the telophore.

- When a mind encounters an ontological crisis, it has to evaluate new possible worlds described by its new way of understanding what's possible. For example, the agent could interpret its past behavior as agentic in light of its new understanding of the world, and then adopt the imputed goals to explain that agentic behavior. [Edit: This example now seems to be not an example of telotect setminus (telophore union telopheme), but rather of [telopheme, but in a form not yet understood by us]. The meta-preference to resolve ambiguity in object-preferences according to certain rules has to be counted as part of the telopheme. However, it's plausible that these meta-preferences are essentially inexplicit because they essentially call on the whole mind; if so, then the telopheme idea is just confused.]

- The telotect is a dynamic concept: it "makes" the telophore and "writes" the telopheme. But it doesn't have to be dynamic in the specific sense of causal time. For example, the logical fact that such-and-such search procedure favors elements that tend to possibilize strategic agency would be part of a telotect; that logical fact "makes" the result of the search be part of a telophore.

Telophore vs. telotect

Some of these examples show that the boundaries between {the telopheme, the telophore} and the rest of the telotect are murky or nonexistent. The process that invents democracy is part of some telotect, but is it part of a telophore? Or is the telophore only reached when democracy is implemented? The telotect, to borrow a metaphor, climbs up a ladder to the telophore and then kicks the ladder out from under itself, screening off the mind's history from the subsequent ultimate effects of the mind as borne by the telophore and specified by the telopheme. The telophore says: "Even if I'd gotten to be how I am by a different historical route, I'd still want X."

Telophores cut across events like invention and implementation. E.g. a human may reflectively endorse a desire to have children for reasons influenced by zer knowledge of zer evolutionary origin: ze wants to "see more of zerself in humanity". We lack the concepts to draw tight boundaries around telophores.

By showing what changes, the telotect shows what can't be part of the telophore. To see the telophore, see what the telotect doesn't change. The effort to clarify decision theory is an effort to isolate the telophore.

The fundamental question revisited

The fundamental question asks after the telopheme of natural minds in order to shed light on the telotect: to answer how a mind gets its telopheme would require understanding the telotect. The question asks after the telotect in order to shed light on the telophore: the writer of the telopheme is intertwined with the builder of the telophore. The question asks about the telophore because the telopheme can't be grasped without understanding the telophore that bears it.

7 comments


comment by Nora_Ammann · 2023-09-24T04:39:14.602Z · LW(p) · GW(p)

The process that invents democracy is part of some telotect, but is it part of a telophore? Or is the telophore only reached when democracy is implemented?

Musing about how (maybe) certain telophemes impose constraints on the structure (logic) of their corresponding telophores and telotects. E.g. democracy, freedom, autonomy, justice, corrigibility, rationality, ... (though plausibly you'd not want to count (some of) those examples as telophemes in the first place?)

↑ comment by TsviBT · 2023-10-08T15:04:10.636Z · LW(p) · GW(p)

I think that your question points out how the concepts as I've laid them out don't really work. I now think that values such as liking a certain process or liking mental properties should be treated as first-class values, and this pretty firmly blurs the telopheme / telophore distinction.

comment by Nora_Ammann · 2023-09-24T04:10:02.926Z · LW(p) · GW(p)

Curious whether the following idea rhymes with what you have in mind: telophore as (sort of) doing ~symbol grounding, i.e. the translation (or capacity to translate) from description to (worldly) effect?

↑ comment by TsviBT · 2023-10-08T15:13:06.081Z · LW(p) · GW(p)

It's definitely like symbol grounding, though symbol grounding is usually IIUC about "giving meaning to symbols", which I think has the emphasis on epistemic signifying?

↑ comment by Nora_Ammann · 2023-10-10T07:43:43.226Z · LW(p) · GW(p)

Right, but I feel like I want to say something like "value grounding" as its analogue.

Also... I do think there is a crucial epistemic dimension to values, and the "[symbol/value] grounding" thing seems like one place where this shows quite well.

↑ comment by TsviBT · 2023-10-15T18:50:25.038Z · LW(p) · GW(p)

Ok yeah I agree with this. Related: https://tsvibt.blogspot.com/2023/09/the-cosmopolitan-leviathan-enthymeme.html#pointing-at-reality-through-novelty

And an excerpt from a work in progress:

Example: Blueberries

For example, I reach out and pick up some blueberries. This is some kind of expression of my values, but how so? Where are the values?

Are the values in my hands? Are they entirely in my hands, or not at all in my hands? The circuits that control my hands do what they do with regard to blueberries by virtue of my hands being the way they are. If my hands were different, e.g. really small or polydactylous, my hand-controller circuits would be different and would behave differently when getting blueberries. And the deeper circuits that coordinate visual recognition of blueberries, and the deeper circuits that coordinate the whole blueberry-getting system and correct errors based on blueberrywise success or failure, would also be different. Are the values in my visual cortex? The deeper circuits require some interface with my visual cortex, to do blueberry find-and-pick-upping. And having served that role, my visual cortex is specially trained for that task, and it will even promote blueberries in my visual field to my attention more readily than yours will to you. And my spatial memory has a nearest-blueberries slot, like those people who always know which direction is north.

It may be objected that the proximal hand-controllers and the blueberry visual circuits are downstream of other deeper circuits, and since they are downstream, they can be excluded from constituting the value. But that's not so clear. To like blueberries, I have to know what blueberries are, and to know what blueberries are I have to interact with them. The fact that I value blueberries relies on me being able to refer to blueberries. Certainly, if my hands were different but comparably versatile, then I would learn to use them to refer to blueberries about as well as my real hands do. But the reference to (and hence the value of) blueberries must pass through something playing the role that hands play. The hands, or something else, must play that role in constituting the fact that I value blueberries.

The concrete is never lost

In general, values are founded on reference. The context that makes a value a value has to provide reference.

The situation is like how an abstract concept, once gained, doesn't overwrite and obsolete what was abstracted from. Maxwell's equations don't annihilate Faraday's experiments in their detail. The experiments are unified in idea--metaphorically, the field structures are a "cross-section" of the messy detailed structure of any given experiment. The abstract concepts, to say something about a specific concrete experimental situation, have to be paired with specific concrete calculations and referential connections. The concrete situations are still there, even if we now, with our new abstract concepts, want to describe them differently.

Is reference essentially diasystemic?

If so, then values are essentially diasystemic.

Reference goes through unfolding.

To refer to something in reality is to be brought (or rather, bringable) to the thing. To be brought to a thing is to go to where the thing really is, through whatever medium is between the mind and where the thing really is. The "really is" calls on future novelty. See "pointing at reality through novelty".

In other words, reference is open--maybe radically open. It's supposed to incorporate whatever novelty the mind encounters--maybe deeply.

An open element can't be strongly endosystemic.

An open element will potentially relate to (radical, diasystemic) novelty, so its way of relating to other elements can't be fully stereotyped by preexisting elements with their preexisting manifest relations.

comment by Mir (mir-anomaly) · 2023-09-17T21:22:22.804Z · LW(p) · GW(p)

is that spreadsheet of {Greek name, Germanic name, …} public perchance?

↑ comment by TsviBT · 2023-09-19T16:20:56.114Z · LW(p) · GW(p)

Ok, here: https://docs.google.com/spreadsheets/d/1V1QERPIKzpZNtS10hTwfe9aDyfmYWU8QbftBo9jIi9I/edit#gid=0

It's just what's shown in the screenshot though.