Views on when AGI comes and on strategy to reduce existential risk

post by TsviBT · 2023-07-08T09:00:19.735Z · LW · GW · 56 commentsContents

My views on when AGI comes AGI Conceptual capabilities progress Timelines Responses to some arguments for AGI soon The "inputs" argument The "big evolution" argument "I see how to do it" The "no blockers" intuition "We just need X" intuitions The bitter lesson and the success of scaling Large language models Other comments on AGI soon My views on strategy Things that might actually work None 57 comments

Summary: AGI isn't super likely to come super soon. People should be working on stuff that saves humanity in worlds where AGI comes in 20 or 50 years, in addition to stuff that saves humanity in worlds where AGI comes in the next 10 years.

Thanks to Alexander Gietelink Oldenziel, Abram Demski, Daniel Kokotajlo, Cleo Nardo, Alex Zhu, and Sam Eisenstat for related conversations.

My views on when AGI comes

AGI

By "AGI" I mean the thing that has very large effects on the world (e.g., it kills everyone) via the same sort of route that humanity has large effects on the world. The route is where you figure out how to figure stuff out, and you figure a lot of stuff out using your figure-outers, and then the stuff you figured out says how to make powerful artifacts that move many atoms into very specific arrangements.

This isn't the only thing to worry about. There could be transformative AI that isn't AGI in this sense. E.g. a fairly-narrow AI that just searches configurations of atoms and finds ways to do atomically precise manufacturing would also be an existential threat and a possibility for an existential win.

Conceptual capabilities progress

The "conceptual AGI" view:

The first way humanity makes AGI is by combining some set of significant ideas about intelligence. Significant ideas are things like (the ideas of) gradient descent, recombination, probability distributions, universal computation, search, world-optimization. Significant ideas are to a significant extent bottlenecked on great natural philosophers doing great natural philosophy about intelligence, with sequential bottlenecks between many insights.

The conceptual AGI doesn't claim that humanity doesn't already have enough ideas to make AGI. I claim that——though not super strongly.

Timelines

Giving probabilities here doesn't feel great. For one thing, it seems to contribute to information cascades and to shallow coalition-forming. For another, it hides the useful models. For yet another thing: A probability bundles together a bunch of stuff I have models about, with a bunch of stuff I don't have models about. For example, how many people will be doing original AGI-relevant research in 15 years? I have no idea, and it seems like largely a social question. The answer to that question does affect when AGI comes, though, so a probability about when AGI comes would have to depend on that answer.

But ok. Here's some butt-numbers:

- 3%-10% probability of AGI in the next 10-15ish years. This would be lower, but I'm putting a bit of model uncertainty here.

- 40%-45% probability of AGI in the subsequent 45ish years. This is denser than the above because, eyeballing the current state of the art, it seems like we currently lack some ideas we'd need——but I don't know how many insights would be needed, so the remainder could be only a couple decades around the corner. It also seems like people are distracted now.

- Median 2075ish. IDK. This would be further out if an AI winter seemed more likely, but LLMs seem like they should already be able to make a lot of money.

- A long tail. It's long because of stuff like civilizational collapse, and because AGI might be really really hard to make. There's also a sliver of a possibility of coordinating for a long time to not make AGI.

If I were trying to make a model with parts, I might try starting with a mixture of Erlang distributions of different shapes, and then stretching that according to some distribution about the number of people doing original AI research over time.

Again, this is all butt-numbers. I have almost no idea about how much more understanding is needed to make AGI, except that it doesn't seem like we're there yet.

Responses to some arguments for AGI soon

The "inputs" argument

At about 1:15 in this interview, Carl Shulman argues (quoting from the transcript):

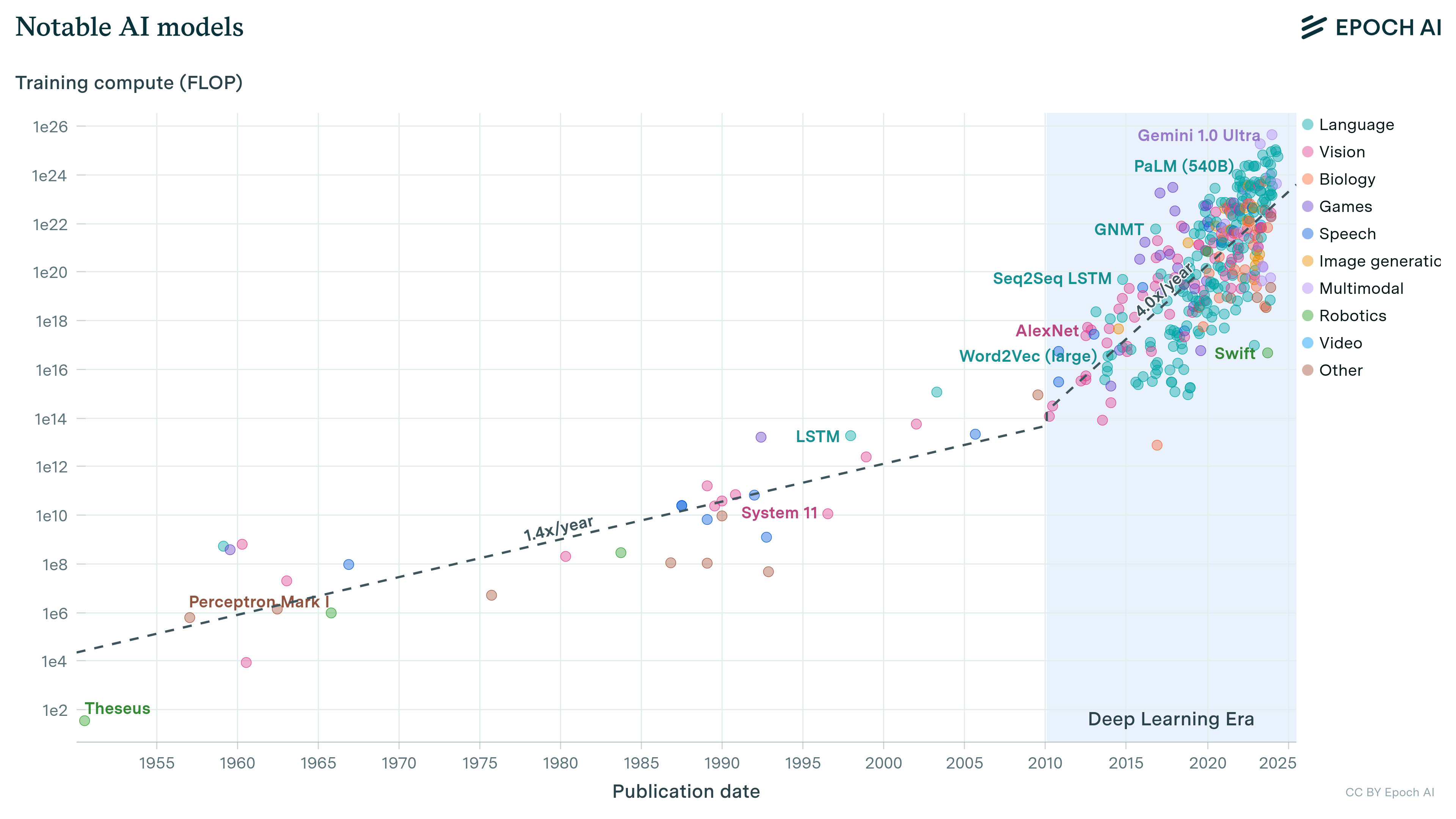

We've been scaling [compute expended on ML] up four times as fast as was the case for most of the history of AI. We're running through the orders of magnitude of possible resource inputs you could need for AI much much more quickly than we were for most of the history of AI. That's why this is a period with a very elevated chance of AI per year because we're moving through so much of the space of inputs per year [...].

This isn't the complete argument Shulman gives, but on its own it's interesting. On its own, it's valid, but only if we're actually scaling up all the needed inputs.

On the conceptual AGI view, this isn't the case, because we aren't very greatly increasing the amount of great natural philosophers doing great natural philosophy about intelligence. That's a necessary input, and it's only being somewhat scaled up. For one thing, many new AI researchers are correlated with each other, and many are focused on scaling up, applying, and varying existing ideas. For another thing, sequential progress can barely be sped up with more bodies.

The "big evolution" argument

Carl goes on to argue that eventually, when we have enough compute, we'll be able to run a really big evolutionary process that finds AGIs (if we haven't already made AGI). This idea also appears in Ajeya Cotra's report [LW · GW] on the compute needed to create AGI.

I broadly agree with this. But I have two reasons that this argument doesn't make AGI seem very likely very soon.

The first reason is that running a big evolution actually seems kind of hard; it seems to take significant conceptual progress and massive engineering effort to make the big evolution work. What I'd expect to see when this is tried, is basically nothing; life doesn't get started, nothing interesting happens, the entities don't get far (beyond whatever primitives were built in). You can get around this by invoking more compute, e.g. by simulating physics more accurately at a more detailed level, or by doing hyperparameter search to find worlds that lead to cool stuff. But then you're invoking more compute. (I'd also expect a lot of the hacks that supposedly make our version of evolution much more efficient than real evolution, to actually result in our version being circumscribed, i.e. it peters out because the shortcut that saved compute also cut off some important dimensions of search.)

The second reason is that evolution seems to take a lot of serial time. There's probably lots of clever things one can do to shortcut this, but these would be significant conceptual progress.

"I see how to do it"

My (limited / filtered) experience with these ideas leads me to think that [ideas knowably sufficient to make an AGI in practice] aren't widespread or obvious. (Obviously it is somehow feasible to make an AGI, because evolution did it.)

The "no blockers" intuition

An intuition that I often encounter is something like this:

Previously, there were blockers to current systems being developed into AGI. But now those blockers have been solved, so AGI could happen any time now.

This sounds to my ears like: "I saw how to make AGI, but my design required X. Then someone made X, so now I have a design for an AGI that will work.". But I don't think that's what they think. I think they don't think they have to have a design for an AGI in order to make an AGI.

I kind of agree with some version of this——there's a lot of stuff you don't have to understand, in order to make something that can do some task. We observe this in modern ML. But current systems, though they impressively saturate some lower-dimensional submanifold of capability-space, don't permeate a full-dimensional submanifold. Intelligence is a positive thing. Most computer code doesn't put itself on an unbounded trajectory of gaining capabilities. To make it work you have to do engineering and science, at some level. Bridges don't hold weight just because there's nothing blocking them from holding weight.

Daniel Kokotajlo points out that for things that grow, it's kind of true that they'll succeed as long as there aren't blockers——and for example animal husbandry kind of just works, without the breeders understanding much of anything about the internals of why their selection pressures are met with adequate options to select. This is true, but it doesn't seem very relevant to AGI because we're not selecting from an existing pool of highly optimized "genomic" (that is, mental) content. If instead of tinkering with de novo gradient-searched circuits, we were tinkering with remixing and mutating whole-brain emulations, then I would think AGI comes substantially sooner.

Another regime where "things just work" is many mental contexts where a task is familiar enough in some way that you can expect to succeed at the task by default. For example, if you're designing a wadget, and you've previously designed similar wadgets to similar specifications, then it makes sense to treat a design idea as though it's going to work out——as though it can be fully fleshed out into a satisfactory, functioning design——unless you see something clearly wrong with it, a clear blocker like a demand for a metal with unphysical properties. Again, like the case of animal husbandry, the "things just work" comes from the (perhaps out of sight) preexisting store of optimized content that's competent to succeed at the task given a bit of selection and arrangement. In the case of AGI, no one's ever built anything like that, so the store of knowledge that would automatically flesh out blockerless AGI ideas is just not there.

Yet another such regime is markets, where the crowd of many agents can be expected to figure out how to do something as long as it's feasible. So, a version of this intuition goes:

There are a lot of people trying to make AGI. So either there's some strong blocker that makes it so that no one can make AGI, or else someone will make AGI.

This is kind of true, but it just goes back to the question of how much conceptual progress will people make towards AGI. It's not an argument that we already have the understanding needed to make AGI. If it's used as an argument that we already have the understanding, then it's an accounting mistake: it says "We already have the understanding. The reason we don't need more understanding, is that if there were more understanding needed, someone else will figure it out, and then we'll have it. Therefore no one needs to figure anything else out.".

Finally: I also see a fair number of specific "blockers", as well as some indications that existing things don't have properties that would scare me.

"We just need X" intuitions

Another intuition that I often encounter is something like this:

We just need X to get AGI. Once we have X, in combination with Y it will go all the way.

Some examples of Xs: memory, self-play, continual learning, curricula, AIs doing AI research, learning to learn, neural nets modifying their own weights, sparsity, learning with long time horizons.

For example: "Today's algorithms can learn anything given enough data. So far, data is limited, and we're using up what's available. But self-play generates infinite data, so our systems will be able to learn unboundedly. So we'll get AGI soon.".

This intuition is similar to the "no blockers" intuition, and my main response is the same: the reason bridges stand isn't that you don't see a blocker to them standing. See above.

A "we just need X" intuition can become a "no blockers" intuition if someone puts out an AI research paper that works out some version of X. That leads to another response: just because an idea is, at a high level, some kind of X, doesn't mean the idea is anything like the fully-fledged, generally applicable version of X that one imagines when describing X.

For example, suppose that X is "self-play". One important thing about self-play is that it's an infinite source of data, provided in a sort of curriculum of increasing difficulty and complexity. Since we have the idea of self-play, and we have some examples of self-play that are successful (e.g. AlphaZero), aren't we most of the way to having the full power of self-play? And isn't the full power of self-play quite powerful, since it's how evolution made AGI? I would say "doubtful". The self-play that evolution uses (and the self-play that human children use) is much richer, containing more structural ideas, than the idea of having an agent play a game against a copy of itself.

Most instances of a category are not the most powerful, most general instances of that category. So just because we have, or will soon have, some useful instances of a category, doesn't strongly imply that we can or will soon be able to harness most of the power of stuff in that category. I'm reminded of the politician's syllogism: "We must do something. This is something. Therefore, we must do this.".

The bitter lesson and the success of scaling

Sutton's bitter lesson, paraphrased:

AI researchers used to focus on coming up with complicated ideas for AI algorithms. They weren't very successful. Then we learned that what's successful is to leverage computation via general methods, as in deep learning and massive tree search.

Some add on:

And therefore what matters in AI is computing power, not clever algorithms.

This conclusion doesn't follow. Sutton's bitter lesson is that figuring out how to leverage computation using general methods that scale with more computation beats trying to perform a task by encoding human-learned specific knowledge about the task domain. You still have to come up with the general methods. It's a different sort of problem——trying to aim computing power at a task, rather than trying to work with limited computing power or trying to "do the task yourself"——but it's still a problem. To modify a famous quote: "In some ways we feel we are as bottlenecked on algorithmic ideas as ever, but we believe we are bottlenecked on a higher level and about more important things."

Large language models

Some say:

LLMs are already near-human and in many ways super-human general intelligences. There's very little left that they can't do, and they'll keep getting better. So AGI is near.

This is a hairy topic, and my conversations about it have often seemed not very productive. I'll just try to sketch my view:

- The existence of today's LLMs is scary and should somewhat shorten people's expectations about when AGI comes.

- LLMs have fixed, partial concepts with fixed, partial understanding. An LLM's concepts are like human concepts in that they can be combined in new ways and used to make new deductions, in some scope. They are unlike human concepts in that they won't grow or be reforged to fit new contexts. So for example there will be some boundary beyond which a trained LLM will not recognize or be able to use a new analogy; and this boundary is well within what humans can do.

- An LLM's concepts are mostly "in the data". This is pretty vague, but I still think it. A number of people who think that LLMs are basically already AGI have seemed to agree with some version of this, in that when I describe something LLMs can't do, they say "well, it wasn't in the data". Though maybe I misunderstand them.

- When an LLM is trained more, it gains more partial concepts.

- However, it gains more partial concepts with poor sample efficiency; it mostly only gains what's in the data.

- In particular, even if the LLM were being continually trained (in a way that's similar to how LLMs are already trained, with similar architecture), it still wouldn't do the thing humans do with quickly picking up new analogies, quickly creating new concepts, and generally reforging concepts.

- LLMs don't have generators that are nearly as powerful as the generators of human understanding. The stuff in LLMs that seems like it comes in a way that's similar to how stuff in humans comes, actually comes from a lot more data. So LLMs aren't that much of indication that we've figured out how to make things that are on an unbounded trajectory of improvement.

- LLMs have a weird, non-human shaped set of capabilities. They go much further than humans on some submanifold, and they barely touch some of the full manifold of capabilities. (They're "unbalanced" in Cotra's terminology.)

- There is a broken inference. When talking to a human, if the human emits certain sentences about (say) category theory, that strongly implies that they have "intuitive physics" about the underlying mathematical objects. They can recognize the presence of the mathematical structure in new contexts, they can modify the idea of the object by adding or subtracting properties and have some sense of what facts hold of the new object, and so on. This inference——emitting certain sentences implies intuitive physics——doesn't work for LLMs.

- The broken inference is broken because these systems are optimized for being able to perform all the tasks that don't take a long time, are clearly scorable, and have lots of data showing performance. There's a bunch of stuff that's really important——and is a key indicator of having underlying generators of understanding——but takes a long time, isn't clearly scorable, and doesn't have a lot of demonstration data. But that stuff is harder to talk about and isn't as intuitively salient as the short, clear, demonstrated stuff.

- Vaguely speaking, I think stable diffusion image generation is comparably impressive to LLMs, but LLMs seem even more impressive to some people because LLMs break the performance -> generator inference more. We're used to the world (and computers) creating intricate images, but not creating intricate texts.

- There is a missing update. We see impressive behavior by LLMs. We rightly update that we've invented a surprisingly generally intelligent thing. But we should also update that this behavior surprisingly turns out to not require as much general intelligence as we thought.

Other comments on AGI soon

- There's a seemingly wide variety of reasons that people I talk to think AGI comes soon. This seems like evidence for each of these hypotheses: that AGI comes soon is overdetermined; that there's one underlying crux (e.g.: algorithmic progress isn't needed to make AGI) that I haven't understood yet; that I talked to a heavily selected group of people (true); that people have some other reason for saying that AGI comes soon, and then rationalize that proposition.

- I'm somewhat concerned that people are being somewhat taken in by hype (experiments systematically misinterpreted by some; the truth takes too long to put on its pants, and the shared narrative is already altered).

- I'm kind of baffled that people are so willing to say that LLMs understand X, for various X. LLMs do not behave with respect to X like a person who understands X, for many X.

- I'm pretty concerned that many people are fairly strongly deferring to others, in a general sense that includes updating off of other people's actions and vibes. Widespread deference has many dangers, which I list in "Dangers of deference".

- I'm worried that there's a bucket error where "I think AGI comes soon." isn't separated from "We're going to be motivated to work together to prevent existential risk from AGI.".

My views on strategy

-

Alignment is really hard. No one has good reason to think any current ideas would work to make an aligned / corrigible AGI. If AGI comes, everyone dies.

-

If AGI comes in five years, everyone dies. We won't solve alignment well enough by then. This of course doesn't imply that AGI coming soon is less likely. However, it does mean that some people should focus on somewhat different things. Most people trying to make the world safe by solving AGI alignment should be open to trains of thought that likely will only be helpful in twenty years. There will be a lot of people who can't help the world if AGI comes in five years; if those people are going to stress out about how they can't help, instead they should work on stuff that helps in twenty or fifty years.

-

A consensus belief is often inaccurate, e.g. because of deference and information cascades. In that case, the consensus portfolio of strategies will be incorrect.

-

Not only that, but furthermore: Suppose there is a consensus believe, and suppose that it's totally correct. If funders, and more generally anyone who can make stuff happen (e.g. builders and thinkers), use this totally correct consensus belief to make local decisions about where to allocate resources, and they don't check the global margin, then they will in aggregrate follow a portfolio of strategies that is incorrect. The make-stuff-happeners will each make happen the top few things on their list, and leave the rest undone. The top few things will be what the consensus says is most important——in our case, projects that help if AGI comes within 10 years. If a project helps in 30 years, but not 10 years, then it doesn't get any funding at all. This is not the right global portfolio; it oversaturates fast interventions and leaves slow interventions undone.

-

Because the shared narrative says AGI comes soon, there's less shared will for projects that take a long time to help. People don't come up with such projects, because they don't expect to get funding; and funders go on not funding such projects, because they don't see good ones, and they don't particularly mind because they think AGI comes soon.

Things that might actually work

Besides the standard stuff (AGI alignment research, moratoria on capabilities research, explaining why AGI is an existential risk), here are two key interventions:

- Human intelligence enhancement. Important, tractable, and neglected. Note that if alignment is hard enough that we can't solve it in time, but enhanced humans could solve it, then making enhanced humans one year sooner is almost as valuable as making AGI come one year later.

- Confrontation-worthy empathy. Important, probably tractable, and neglected.

- I suspect there's a type of deep, thorough, precise understanding that one person (the intervener) can have of another person (the intervened), which makes it so that the intervener can confront the intervened with something like "If you and people you know succeed at what you're trying to do, everyone will die.", and the intervened can hear this.

- This is an extremely high bar. It may go beyond what's normally called empathy, understanding, gentleness, wisdom, trustworthiness, neutrality, justness, relatedness, and so on. It may have to incorporate a lot of different, almost contradictory properties; for example, the intervener might have to at the same time be present and active in the most oppositional way (e.g., saying: I'm here, and when all is said and done you're threatening the lives of everyone I love, and they have a right to exist) while also being almost totally diaphanous (e.g., in fact not interfering with the intervened's own reflective processes). It may involve irreversible changes, e.g. risking innoculation effects and unilateralist commons-burning. It may require incorporating very distinct skills; e.g. being able to make clear, correct, compelling technical arguments, and also being able to hold emotional space in difficult reflections, and also being interesting and socially competent enough to get the appropriate audiences in the first place. It probably requires seeing the intervened's animal, and the intervened's animal's situation, so that the intervener can avoid being a threat to the intervened's animal, and can help the intervened reflect on other threats to their animal. Developing this ability probably requires recursing on developing difficult subskills. It probably requires to some extent thinking like a cultural-rationalist and to some extent thinking very much not like a cultural-rationalist. It is likely to have discontinuous difficulty——easy for some sorts of people, and then very difficult in new ways for other sorts of people.

- Some people are working on related abilities. E.g. Circlers, authentic relaters, therapists. As far as I know (at least having some substantial experience with Circlers), these groups aren't challenging themselves enough. Mathematicians constantly challenge themselves: when they answer one sort of question, that sort of question becomes less interesting, and they move on to thinking about more difficult questions. In that way, they encounter each fundamental difficulty eventually, and thus have likely already grappled with the mathematical aspect of a fundamental difficulty that another science encounters.

- Critch talks about empathy here [LW · GW], though maybe with a different emphasis.

56 comments

Comments sorted by top scores.

comment by Adele Lopez (adele-lopez-1) · 2023-07-09T14:30:28.025Z · LW(p) · GW(p)

Is there a specific thing you think LLMs won't be able to do soon, such that you would make a substantial update toward shorter timelines if there was an LLM able to do it within 3 years from now?

Replies from: TsviBT, Benito↑ comment by TsviBT · 2023-07-10T06:42:17.793Z · LW(p) · GW(p)

Well, making it pass people's "specific" bar seems frustrating, as I mentioned in the post, but: understand stuff deeply--such that it can find new analogies / instances of the thing, reshape its idea of the thing when given propositions about the thing taken as constraints, draw out relevant implications of new evidence for the ideas.

Like, someone's going to show me an example of an LLM applying modus ponens, or making an analogy. And I'm not going to care, unless there's more context; what I'm interested in is [that phenomenon which I understand at most pre-theoretically, certainly not explicitly, which I call "understanding", and which has as one of its sense-experience emanations the behavior of making certain "relevant" applications of modus ponens, and as another sense-experience emanation the behavior of making analogies in previously unseen domains that bring over rich stuff from the metaphier].

Replies from: adele-lopez-1, Roman Leventov↑ comment by Adele Lopez (adele-lopez-1) · 2023-07-10T06:59:31.051Z · LW(p) · GW(p)

Alright, to check if I understand, would these be the sorts of things that your model is surprised by?

- An LLM solves a mathematical problem by introducing a novel definition which humans can interpret as a compelling and useful concept.

- An LLM which can be introduced to a wide variety of new concepts not in its training data, and after a few examples and/or clarifying questions is able to correctly use the concept to reason about something.

- A image diffusion model which is shown to have a detailed understanding of anatomy and 3D space, such that you can use it to transform an photo of a person into an image of the same person in a novel pose (not in its training data) and angle with correct proportions and realistic joint angles for the person in the input photo.

↑ comment by TsviBT · 2023-07-10T07:21:31.031Z · LW(p) · GW(p)

Unfortunately, more context is needed.

An LLM solves a mathematical problem by introducing a novel definition which humans can interpret as a compelling and useful concept.

I mean, I could just write a python script that prints out a big list of definitions of the form

"A topological space where every subset with property P also has property Q"

and having P and Q be anything from a big list of properties of subsets of topological spaces. I'd guess some of these will be novel and useful. I'd guess LLMs + some scripting could already take advantage of some of this. I wouldn't be very impressed by that (though I think I would be pretty impressed by the LLM being able to actually tell the difference between valid proofs in reasonable generality). There are some versions of this I'd be impressed by, though. Like if an LLM had been the first to come up with one of the standard notions of curvature, or something, that would be pretty crazy.

An LLM which can be introduced to a wide variety of new concepts not in its training data, and after a few examples and/or clarifying questions is able to correctly use the concept to reason about something.

I haven't tried this, but I'd guess if you give an LLM two lists of things where list 1 is [things that are smaller than a microwave and also red] and list 2 is [things that are either bigger than a microwave, or not red], or something like that, it would (maybe with some prompt engineering to get it to reason things out?) pick up that "concept" and then use it, e.g. sorting a new item, or deducing from "X is in list 1" to "X is red". That's impressive (assuming it's true), but not that impressive.

On the other hand, if it hasn't been trained on a bunch of statements about angular momentum, and then it can--given some examples and time to think--correctly answer questions about angular momentum, that would be surprising and impressive. Maybe this could be experimentally tested, though I guess at great cost, by training a LLM on a dataset that's been scrubbed of all mention of stuff related to angular momentum (disallowing math about angular momentum, but allowing math and discussion about momentum and about rotation), and then trying to prompt it so that it can correctly answer questions about angular momentum. Like, the point here is that angular momentum is a "new thing under the sun" in a way that "red and smaller than microwave" is not a new thing under the sun.

Replies from: Roman Leventov, Nick_Tarleton↑ comment by Roman Leventov · 2023-07-10T08:50:28.958Z · LW(p) · GW(p)

On the other hand, if it hasn't been trained on a bunch of statements about angular momentum, and then it can--given some examples and time to think--correctly answer questions about angular momentum, that would be surprising and impressive. Maybe this could be experimentally tested, though I guess at great cost, by training a LLM on a dataset that's been scrubbed of all mention of stuff related to angular momentum (disallowing math about angular momentum, but allowing math and discussion about momentum and about rotation), and then trying to prompt it so that it can correctly answer questions about angular momentum. Like, the point here is that angular momentum is a "new thing under the sun" in a way that "red and smaller than microwave" is not a new thing under the sun.

Until recently, I thought that the fact that LLMs are not strong and efficient online (or quasi-online, i.e., need few examples) conceptual learners is a "big obstacle" for AGI or ASI. I no longer think so. Yes, humans evidently still have an edge in this, that is, humans can somehow relatively quickly and efficiently "surgeon" their world models to accommodate new concepts and use them efficiently in a far-ranging way. (Even though I suspect that we over-glorify this ability in humans and it more realistically takes weeks or even months for humans to fully integrate new conceptual frameworks in their thinking than hours, still, they should be able to do so without much external examples, which will be lacking if the concept is actually very new.)

I no longer think this handicaps LLMs much. New powerful concepts that permeate practical and strategic reasoning in the real world are rarely invented and are spread through the society slowly. Just being a skillful user of existing concepts that are amptly described in books and otherwise in the training corpus of LLMs should be well enough for gaining capacity for recursive self-improvement, and quite far superhuman intelligence/strategy/agency more generally.

Then, imagine that superhuman LLMs-based agents "won" and killed all humans. Even if they themselves don't (or couldn't!) invent ML paradigms for efficient online concept learning, they could still sort of hack through it, through experimenting with new concepts, trying to run a lot of simulations with them, checking these simulations against reality (filtering out incoherence/bad concepts), and then re-training themselves on the results of these simulations, and then giving text labels to the features found in their own DNNs to mark the corresponding concept.

Replies from: TsviBT↑ comment by TsviBT · 2023-07-10T22:54:46.582Z · LW(p) · GW(p)

Just being a skillful user of existing concepts

I don't think they're skilled users of existing concepts. I'm not saying it's an "obstacle", I'm saying that this behavior pattern would be a significant indicator to me that the system has properties that make it scary.

↑ comment by Nick_Tarleton · 2025-02-02T23:25:06.771Z · LW(p) · GW(p)

I don't really have an empirical basis for this, but: If you trained something otherwise comparable to, if not current, then near-future reasoning models without any mention of angular momentum, and gave it a context with several different problems to which angular momentum was applicable, I'd be surprised if it couldn't notice that was a common interesting quantity, and then, in an extension of that context, correctly answer questions about it. If you gave it successive problem sets where the sum of that quantity was applicable, the integral, maybe other things, I'd be surprised if a (maybe more powerful) reasoning model couldn't build something worth calling the ability to correctly answer questions about angular momentum. Do you expect otherwise, and/or is this not what you had in mind?

Replies from: TsviBT↑ comment by TsviBT · 2025-02-03T01:53:40.935Z · LW(p) · GW(p)

It's a good question. Looking back at my example, now I'm just like "this is a very underspecified/confused example". This deserves a better discussion, but IDK if I want to do that right now. In short the answer to your question is

- I at least would not be very surprised if gippity-seek-o5-noAngular could do what I think you're describing.

- That's not really what I had in mind, but I had in mind something less clear than I thought. The spirit is about "can the AI come up with novel concepts", but the issue here is that "novel concepts" are big things, and their material and functioning and history are big and smeared out.

I started writing out a bunch of thoughts, but they felt quite inadequate because I knew nothing about the history of the concept of angular momentum; so I googled around a tiny little bit. The situation seems quite awkward for the angular momentum lesion experiment. What did I "mean to mean" by "scrubbed all mention of stuff related to angular momentum"--presumably this would have to include deleting all subsequent ideas that use angular moment in their definitions, but e.g. did I also mean to delete the notion of cross product?

It seems like angular momentum was worked on in great detail well before the cross product was developed at all explicitly. See https://arxiv.org/pdf/1511.07748 and https://en.wikipedia.org/wiki/Cross_product#History. Should I still expect gippity-seek-o5-noAngular to notice the idea if it doesn't have the cross product available? Even if not, what does and doesn't this imply about this decade's AI's ability to come up with novel concepts?

(I'm going to mull on why I would have even said my previous comment above, given that on reflection I believe that "most" concepts are big and multifarious and smeared out in intellectual history. For some more examples of smearedness, see the subsection here: https://tsvibt.blogspot.com/2023/03/explicitness.html#the-axiom-of-choice)

Replies from: Raemon↑ comment by Raemon · 2025-02-03T07:49:21.208Z · LW(p) · GW(p)

That's not really what I had in mind, but I had in mind something less clear than I thought. The spirit is about "can the AI come up with novel concepts",

I think one reason I think the current paradigm is "general enough, in principle", is that I don't think "novel concepts" is really The Thing. I think creativity / intelligence mostly is about is combining concepts, it's just that really smart people are

a) faster in raw horsepower and can handle more complexity at a time

b) have a better set of building blocks to combine or apply to make new concepts (which includes building blocks for building better building blocks)

c) have a more efficient search for useful/relevant building blocks (both metacognitive and object-level).

Maybe you believe this, and think that "well yeah, it's the efficient search that's the important part, which we still don't actually have a real working version of?"?

It seems like the current models have basically all the tools a moderately smart human have, with regards to generating novel ideas, and the thing that they're missing is something like "having a good metacognitive loop such that they notice when they're doing a fake/dumb version of things, and course correcting" and "persistently pursue plans over long time horizons." And it doesn't seem to have zero of either of those, just not enough to get over some hump.

I don't see what's missing that a ton of training on a ton of diverse, multimodal tasks + scaffoldin + data flywheel isn't going to figure out.

Replies from: TsviBT↑ comment by TsviBT · 2025-02-03T08:00:12.462Z · LW(p) · GW(p)

really smart people

Differences between people are less directly revelative of what's important in human intelligence. My guess is that all or very nearly all human children have all or nearly all the intelligence juice. We just, like, don't appreciate how much a child is doing in constructing zer world.

the current models have basically all the tools a moderately smart human have, with regards to generating novel ideas

Why on Earth do you think this? (I feel like I'm in an Asch Conformity test, but with really really high production value. Like, after the experiment, they don't tell you what the test was about. They let you take the card home. On the walk home you ask people on the street, and they all say the short line is long. When you get home, you ask your housemates, and they all agree, the short line is long.)

I don't see what's missing that a ton of training on a ton of diverse, multimodal tasks + scaffoldin + data flywheel isn't going to figure out.

My response is in the post.

↑ comment by Roman Leventov · 2023-07-10T08:36:13.191Z · LW(p) · GW(p)

Analogies: "Emergent Analogical Reasoning in Large Language Models [LW · GW]"

Replies from: TsviBT↑ comment by Ben Pace (Benito) · 2023-10-18T00:00:11.081Z · LW(p) · GW(p)

I think the argument here basically implies that language models will not produce any novel, useful concepts in any existing industries or research fields that get substantial adoption (e.g. >10% of ppl use it, or a widely cited paper) in those industries, in the next 3 years, and if it did this, then the end would be nigh (or much nigher).

To be clear, you might get new concepts from language models about language if you nail some Chris Olah style transparency work, but the language model itself will not output ones that aren't about language in the text.

Replies from: TsviBT↑ comment by TsviBT · 2023-10-24T01:31:24.120Z · LW(p) · GW(p)

I roughly agree. As I mentioned to Adele, I think you could get sort of lame edge cases where the LLM kinda helped find a new concept. The thing that would make me think the end is substantially nigher is if you get a model that's making new concepts of comparable quality at a comparable rate to a human scientist in a domain in need of concepts.

if you nail some Chris Olah style transparency work

Yeah that seems right. I'm not sure what you mean by "about language". Sorta plausibly you could learn a little something new about some non-language domain that the LLM has seen a bunch of data about, if you got interpretability going pretty well. In other words, I would guess that LLMs already do lots of interesting compression in a different way than humans do it, and maybe you could extract some of that. My quasi-prediction would be that those concepts

- are created using way more data than humans use for many of their important concepts; and

- are weirdly flat, and aren't suitable out of the box for a big swath of the things that human concepts are suitable for.

comment by Max H (Maxc) · 2023-07-08T15:42:29.185Z · LW(p) · GW(p)

(Obviously it is somehow feasible to make an AGI, because evolution did it.)

This parenthetical is one of the reasons why I think AGI is likely to come soon.

The example of human evolution provides a strict upper bound on the difficulty of creating (true, lethally dangerous) AGI, and of packing it into a 10 W, 1000 cm box.

That doesn't mean that recreating the method used by evolution (iterative mutation over millions of years at planet scale) is the only way to discover and learn general-purpose reasoning algorithms. Evolution had a lot of time and resources to run, but it is an extremely dumb optimization process that is subject to a bunch of constraints and quirks of biology, which human designers are already free of.

To me, LLMs and other recent AI capabilities breakthroughs are evidence that methods other than planet-scale iterative mutation can get you something, even if it's still pretty far from AGI. And I think it is likely that capabilities research will continue to lead to scaling and algorithms progress that will get you more and more something. But progress of this kind can't go on forever - eventually it will hit on human-level (or better) reasoning ability.

The inference I make from observing both the history of human evolution and the spate of recent AI capabilities progress is that human-level intelligence can't be that special or difficult to create in an absolute sense, and that while evolutionary methods (or something isomorphic to them) at planet scale are sufficient to get to general intelligence, they're probably not necessary.

Or, put another way:

Finally: I also see a fair number of specific "blockers", as well as some indications that existing things don't have properties that would scare me.

I mostly agree with the point about existing systems, but I think there are only so many independent high-difficulty blockers which can "fit" inside the AGI-invention problem, since evolution somehow managed to solve them all through inefficient brute force. LLMs are evidence that at least some of the (perhaps easier) blockers can be solved via methods that are tractable to run on current-day hardware on far shorter timescales than evolution.

↑ comment by TsviBT · 2023-07-09T00:24:38.374Z · LW(p) · GW(p)

(Glib answers in place of no answers)

eventually it will hit on human-level (or better) reasoning ability.

Or it's limited to a submanifold of generators.

inefficient brute force

I don't think this is a good description of evolution.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-07-09T03:30:20.183Z · LW(p) · GW(p)

Or it's limited to a submanifold of generators.

"It" here refers to progress from human ingenuity, so I'm hesitant to put any limits whatsoever on what it will produce and how fast, let alone a limit below what evolution has already achieved.

I don't think this is a good description of evolution.

Hmm, yeah. The thing I am trying to get at is that evolution is very dumb and limited in some ways (in the sense of An Alien God [LW · GW], Evolutions Are Stupid (But Work Anyway) [LW · GW]), compared to human designers, but managed to design a general intelligence anyway, given enough time / energy / resources.

By "inefficient", I mean that human researchers with GPUs can (probably) design and create general intelligence OOM faster than evolution. Humans are likely to accomplish such a feat in decades or centuries at the most, so I think it is justified to call any process which takes millennia or longer inefficient, even if the human-based decades-long design process hasn't actually succeeded yet.

Replies from: TsviBT, TsviBT↑ comment by TsviBT · 2023-07-10T06:57:18.975Z · LW(p) · GW(p)

evolution is very dumb and limited in some ways

Ok. This makes sense. And I think about everyone agrees that evolution is very inefficient, in the sense that with some work (but vastly less time than evolution used) humans will be able to figure out how to make a thing that, using much less resources than evolution used, makes an AGI.

I was objecting to "brute force", not "inefficient". It's brute force in some sense, like it's "just physics" in the sense that you can just set up some particles and then run physics forward and get an AGI. But it also uses a lot of design ideas (stuff in the genome, and some ecological structure). It does a lot of search on a lot of dimensions of design. If you don't efficient-ify your big evolution, you're invoking a lot of compute; if you do efficient-ify, you might be cutting off those dimensions of search.

↑ comment by TsviBT · 2023-07-10T06:06:20.683Z · LW(p) · GW(p)

"It" here refers to progress from human ingenuity, so I'm hesitant to put any limits whatsoever on what it will produce and how fast

There's a contingent fact which is how many people are doing how much great original natural philosophy about intelligence and machine learning. If I thought the influx of people were directed at that, rather than at other stuff, I'd think AGI was coming sooner.

Humans are likely to accomplish such a feat in decades or centuries at the most,

As I said in the post, I agree with this, but I think it requires a bunch of work that hasn't been done yet, some of it difficult / requires insights.

Replies from: Maxc, mateusz-baginski↑ comment by Max H (Maxc) · 2023-07-12T17:20:40.278Z · LW(p) · GW(p)

I actually think another lesson from both evolution and LLMs is that it might not require much or any novel philosophy or insight to create useful cognitive systems, including AGI. I expect high-quality explicit philosophy to be one way of making progress, but not the only one.

Evolution itself did not do any philosophy in the course of creating general intelligence, and humans themselves often manage to grow intellectually and get smarter without doing natural philosophy, explicit metacognition, or deep introspection.

So even if LLMs and other current DL paradigm methods plateau, I think it's plausible, even likely, that capabilities research like Voyager will continue making progress for a lot longer. Maybe Voyager-like approaches will scale all the way to AGI, but even if they also plateau, I expect that there are ways of getting unblocked other than doing explicit philosophy of intelligence research or massive evolutionary simulations.

In terms of responses to arguments in the post: it's not that there are no blockers, or that there's just one thing we need, or that big evolutionary simulations will work or be feasible any time soon. It's just that explicit philosophy isn't the only way of filling in the missing pieces, however large and many they may be.

↑ comment by Dalcy (Darcy) · 2023-07-13T12:48:23.555Z · LW(p) · GW(p)

Related - "There are always many ways through the garden of forking paths, and something needs only one path to happen."

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2023-07-10T07:23:57.721Z · LW(p) · GW(p)

Don't you think that once scaling hits the wall (assuming it does) the influx of people will be redirected towards natural philosophy of Intelligence and ML?

Replies from: TsviBT↑ comment by TsviBT · 2023-07-10T07:47:23.070Z · LW(p) · GW(p)

Yep! To some extent. That's what I meant by "It also seems like people are distracted now.", above. I have a denser probability on AGI in 2037 than on AGI in 2027, for that reason.

Natural philosophy is hard, and somewhat has serial dependencies, and IMO it's unclear how close we are. (That uncertainty includes "plausibly we're very very close, just another insight about how to tie things together will open the floodgates".) Also there's other stuff for people to do. They can just quiesce into bullshit jobs; they can work on harvesting stuff; they can leave the field; they can work on incremental progress.

↑ comment by momom2 (amaury-lorin) · 2025-03-28T20:48:23.157Z · LW(p) · GW(p)

But that argument would have worked the same way 50 years ago, when we were wrong to expect <50% chance of AGI in at least 50 years. Like I feel for LLMs, early computer work solved things that could be considered high-difficulty blockers such as proving a mathematical theorem.

comment by TsviBT · 2025-01-11T10:00:19.828Z · LW(p) · GW(p)

I still basically think all of this, and still think this space doesn't understand it, and thus has an out-of-whack X-derisking portfolio.

If I were writing it today, I'd add this example about search engines from this comment https://www.lesswrong.com/posts/oC4wv4nTrs2yrP5hz/what-are-the-strongest-arguments-for-very-short-timelines?commentId=2XHxebauMi9C4QfG4 [LW(p) · GW(p)] , about induction on vague categories like "has capabilities":

Would you say the same thing about the invention of search engines? That was a huge jump in the capability of our computers. And it looks even more impressive if you blur out your vision--pretend you don't know that the text that comes up on your screen is written by a human, and pretend you don't know that search is a specific kind of task distinct from a lot of other activity that would be involved in "True Understanding, woooo"--and just say "wow! previously our computers couldn't write a poem, but now with just a few keystrokes my computer can literally produce Billy Collins level poetry!".

I might also try to explain more how training procedures with poor sample complexity tend to not be on an unbounded trajectory.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-11T22:23:41.173Z · LW(p) · GW(p)

I think if you want to convince people with short timelines (e.g., 7 year medians) of your perspective, probably the most productive thing would be to better operationalize things you expect that AIs won't be able to do soon (but that AGI could do). As in, flesh out a response to this comment [LW(p) · GW(p)] such that it is possible for someone to judge.

Replies from: TsviBT, TsviBT↑ comment by TsviBT · 2025-01-11T22:38:08.699Z · LW(p) · GW(p)

But ok:

- Come up, on its own, with many math concepts that mathematicians consider interesting + mathematically relevant on a similar level to concepts that human mathematicians come up with.

- Do insightful science on its own.

- Perform at the level of current LLMs, but with 300x less training data.

↑ comment by TsviBT · 2025-01-11T23:39:31.158Z · LW(p) · GW(p)

But like, I wouldn't be surprised if, say, someone trained something that performed comparably to LLMs on a wide variety of benchmarks, using much less "data"... and then when you look into it, you find that what they were doing was taking activations of the LLMs and training the smaller guy on the activations. And I'll be like, come on, that's not the point; you could just as well have "trained" the smaller guy by copy-pasting the weights from the LLM and claimed "trained with 0 data!!". And you'll be like "but we met your criterion!" and I'll just be like "well whatever, it's obviously not relevant to the point I was making, and if you can't see that then why are we even having this conversation". (Or maybe you wouldn't do that, IDK, but this sort of thing--followed by being accused of "moving the goal posts"--is why this question feels frustrating to answer.)

↑ comment by Kaarel (kh) · 2025-01-29T23:18:25.502Z · LW(p) · GW(p)

¿ thoughts on the following:

- solving >95% of IMO problems while never seeing any human proofs, problems, or math libraries (before being given IMO problems in base ZFC at test time). like alphaproof except not starting from a pretrained language model and without having a curriculum of human problems and in base ZFC with no given libraries (instead of being in lean), and getting to IMO combos

↑ comment by TsviBT · 2025-01-29T23:43:21.285Z · LW(p) · GW(p)

human proofs, problems, or math libraries

(I'm not sure whether I'm supposed to nitpick. If I were nitpicking I'd ask things like: Wait are you allowing it to see preexisting computer-generated proofs? What counts as computer generated? Are you allowing it to see the parts of papers where humans state and discuss propositions and just cutting out the proofs? Is this system somehow trained on a giant human text corpus, but just without the math proofs?)

But if you mean basically "the AI has no access to human math content except a minimal game environment of formal logic, plus whatever abstract priors seep in via the training algorithm+prior, plus whatever general thinking patterns in [human text that's definitely not mathy, e.g. blog post about apricots]", then yeah, this would be really crazy to see. My points are trying to be, not minimally hard, but at least easier-ish in some sense. Your thing seems significantly harder (though nicely much more operationalized); I think it'd probably imply my "come up with interesting math concepts"? (Note that I would not necessary say the same thing if it was >25% of IMO problems; there I'd be significantly more unsure, and would defer to you / Sam, or someone who has a sense for the complexity of the full proofs there and the canonicalness of the necessary lemmas and so on.)

Replies from: kh↑ comment by Kaarel (kh) · 2025-01-30T05:24:37.126Z · LW(p) · GW(p)

I didn't express this clearly, but yea I meant no pretraining on human text at all, and also nothing computer-generated which "uses human mathematical ideas" (beyond what is in base ZFC), but I'd probably allow something like the synthetic data generation used for AlphaGeometry (Fig. 3) except in base ZFC and giving away very little human math inside the deduction engine. I agree this would be very crazy to see. The version with pretraining on non-mathy text is also interesting and would still be totally crazy to see. I agree it would probably imply your "come up with interesting math concepts". But I wouldn't be surprised if like of the people on LW who think A[G/S]I happens in like years thought that my thing could totally happen in 2025 if the labs were aiming for it (though they might not expect the labs to aim for it), with your things plausibly happening later. E.g. maybe such a person would think "AlphaProof is already mostly RL/search and one could replicate its performance soon without human data, and anyway, AlphaGeometry already pretty much did this for geometry (and AlphaZero did it for chess)" and "some RL+search+self-play thing could get to solving major open problems in math in 2 years, and plausibly at that point human data isn't so critical, and IMO problems are easier than major open problems, so plausibly some such thing gets to IMO problems in 1 year". But also idk maybe this doesn't hang together enough for such people to exist. I wonder if one can use this kind of idea to get a different operationalization with parties interested in taking each side though. Like, maybe whether such a system would prove Cantor's theorem (stated in base ZFC) (imo this would still be pretty crazy to see)? Or whether such a system would get to IMO combos relying moderately less on human data?

Replies from: TsviBT↑ comment by TsviBT · 2025-01-30T08:42:49.761Z · LW(p) · GW(p)

I'd probably allow something like the synthetic data generation used for AlphaGeometry (Fig. 3) except in base ZFC and giving away very little human math inside the deduction engine

IIUC yeah, that definitely seems fair; I'd probably also allow various other substantial "quasi-mathematical meta-ideas" to seep in, e.g. other tricks for self-generating a curriculum of training data.

But I wouldn't be surprised if like >20% of the people on LW who think A[G/S]I happens in like 2-3 years thought that my thing could totally happen in 2025 if the labs were aiming for it (though they might not expect the labs to aim for it), with your things plausibly happening later

Mhm, that seems quite plausible, yeah, and that does make me want to use your thing as a go-to example.

whether such a system would prove Cantor's theorem (stated in base ZFC) (imo this would still be pretty crazy to see)?

This one I feel a lot less confident of, though I could plausibly get more confident if I thought about the proof in more detail.

Part of the spirit here, for me, is something like: Yes, AIs will do very impressive things on "highly algebraic" problems / parts of problems. (See "Algebraicness".) One of the harder things for AIs is, poetically speaking, "self-constructing its life-world", or in other words "coming up with lots of concepts to understand the material it's dealing with, and then transitioning so that the material it's dealing with is those new concepts, and so on". For any given math problem, I could be mistaken about how algebraic it is (or, how much of its difficulty for humans is due to the algebraic parts), and how much conceptual progress you have to do to get to a point where the remaining work is just algebraic. I assume that human math is a big mix of algebraic and non-algebraic stuff. So I get really surprised when an AlphaMath can reinvent most of the definitions that we use, but I'm a lot less sure about a smaller subset because I'm less sure if it just has a surprisingly small non-algebraic part. (I think that someone with a lot more sense of the math in general, and formal proofs in particular, could plausibly call this stuff in advance significantly better than just my pretty weak "it's hard to do all of a wide variety of problems".)

↑ comment by TsviBT · 2025-01-11T22:33:16.268Z · LW(p) · GW(p)

I did give a response in that comment thread. Separately, I think that's not a great standard, e.g. as described in the post and in this comment https://www.lesswrong.com/posts/i7JSL5awGFcSRhyGF/shortform-2?commentId=zATQE3Lhq66XbzaWm [LW(p) · GW(p)] :

Second, 2024 AI is specifically trained on short, clear, measurable tasks. Those tasks also overlap with legible stuff--stuff that's easy for humans to check. In other words, they are, in a sense, specifically trained to trick your sense of how impressive they are--they're trained on legible stuff, with not much constraint on the less-legible stuff (and in particular, on the stuff that becomes legible but only in total failure on more difficult / longer time-horizon stuff).

In fact, all the time in real life we make judgements about things that we couldn't describe in terms that would be considered well-operationalized by betting standards, and we rely on these judgements, and we largely endorse relying on these judgements. E.g. inferring intent in criminal cases, deciding whether something is interesting or worth doing, etc. I should be able to just say "but you can tell that these AIs don't understand stuff", and then we can have a conversation about that, without me having to predict a minimal example of something which is operationalized enough for you to be forced to recognize it as judgeable and also won't happen to be surprisingly well-represented in the data, or surprisingly easy to do without creativity, etc.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-11T23:26:43.245Z · LW(p) · GW(p)

(Yeah, you responded, but felt not that operationalized and seemed doable to flesh out as you did.)

comment by Vladimir_Nesov · 2023-07-08T13:14:03.650Z · LW(p) · GW(p)

When there is a simple enlightening experiment that can be constructed out of available parts (including theories that inform construction), it can be found with expert intuition, without clear understanding. When there are no new parts for a while, and many experiments have been tried, this is evidence that further blind search becomes less likely to produce results, that more complicated experiments are necessary that can only be designed with stronger understanding.

Recently, there are many new parts for AI tinkering, some themselves obtained from blind experimentation (scaling gives new capabilities that couldn't be predicted to result from particular scaling experiments). Not enough time and effort has passed to rule out further significant advancement by simple tinkering with these new parts, and scaling itself hasn't run out of steam yet, it by itself might deliver even more new parts for further tinkering.

So while it's true that there is no reason to expect specific advancements, there is still reason to expect advancements of unspecified character for at least a few years, more of them than usually. This wave of progress might run out of steam before AGI, or it might not, there is no clear theory to say which is true. Current capabilities seem sufficiently impressive that even modest unpredictable advancement might prove sufficient, which is an observation that distinguishes the current wave of AI progress from previous ones.

Replies from: TsviBT↑ comment by TsviBT · 2023-07-09T00:25:45.849Z · LW(p) · GW(p)

I think the current wave is special, but that's a very far cry from being clearly on the ramp up to AGI.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-07-09T07:00:48.785Z · LW(p) · GW(p)

The point is, it's still a matter of intuitively converting impressiveness of current capabilities and new parts available for tinkering that hasn't been done yet into probability of this wave petering out before AGI. The arguments for AGI "being overdetermined" can be amended to become arguments for particular (kinds of) sequences of experiments looking promising, shifting the estimate once taken into account. Since failure of such experiments is not independent, the estimate can start going down as soon as scaling stops producing novel capabilities, or reaches the limits of economic feasibility, or there is a year or two without significant breakthroughs.

Right now, it's looking grim, but a claim I agree with is that planning for the possibility of AGI taking 20+ years is still relevant, nobody actually knows it's inevitable. I think the following few years will change this estimate significantly either way.

Replies from: TsviBT↑ comment by TsviBT · 2023-07-10T06:19:17.496Z · LW(p) · GW(p)

I'm not really sure whether or not we disagree. I did put "3%-10% probability of AGI in the next 10-15ish years".

I think the following few years will change this estimate significantly either way.

Well, I hope that this is a one-time thing. I hope that if in a few years we're still around, people go "Damn! We maybe should have been putting a bit more juice into decades-long plans! And we should do so now, though a couple more years belatedly!", rather than going "This time for sure!" and continuing to not invest in the decades-long plans. My impression is that a lot of people used to work on decades-long plans and then shifted recently to 3-10 year plans, so it's not like everyone's being obviously incoherent. But I also have an impression that the investment in decades-plans is mistakenly low; when I propose decades-plans, pretty nearly everyone isn't interested, with their cited reason being that AGI comes within a decade.

comment by Richard_Ngo (ricraz) · 2023-07-10T05:26:57.238Z · LW(p) · GW(p)

FWIW I think that confrontation-worthy empathy and use of the phrase "everyone will die" to describe AI risk are approximately mutually exclusive with each other, because communication using the latter phrase results from a failure to understand communication norms [LW · GW].

(Separately I also think that "if we build AGI, everyone will die" is epistemically unjustifiable given current knowledge. But the point above still stands even if you disagree with that bit.)

Replies from: TsviBT, lahwran↑ comment by TsviBT · 2023-07-10T06:49:51.962Z · LW(p) · GW(p)

What I mean by confrontation-worthy empathy is about that sort of phrase being usable. I mean, I'm not saying it's the best phrase, or a good phrase to start with, or whatever. I don't think inserting Knightian uncertainty is that helpful; the object-level stuff is usually the most important thing to be communicating.

This maybe isn't so related to what you're saying here, but I'd follow the policy of first making it common knowledge that you're reporting your inside views (which implies that you're not assuming that the other person would share those views); and then you state your inside views. In some scenarios you describe, I get the sense that Person 2 isn't actually wanting Person 1 to say more modest models, they're wanting common knowledge that they won't already share those views / won't already have the evidence that should make them share those views.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2023-07-10T12:37:32.009Z · LW(p) · GW(p)

"I don't think inserting Knightian uncertainty is that helpful; the object-level stuff is usually the most important thing to be communicating."

The main point of my post is that accounting for disagreements about Knightian uncertainly is the best way to actually communicate object level things, since otherwise people get sidetracked by epistemological disagreements.

"I'd follow the policy of first making it common knowledge that you're reporting your inside views"

This is a good step, but one part of the epistemological disagreements I mention above is that most people consider inside views to be much a much less coherent category, and much less separable from other views, than most rationalists do. So I expect that more such steps are typically necessary.

"they're wanting common knowledge that they won't already share those views"

I think this is plausibly true for laypeople/non-ML-researchers, but for ML researchers it's much more jarring when someone is making very confident claims about their field of expertise, that they themselves strongly disagree with.

↑ comment by the gears to ascension (lahwran) · 2025-01-12T07:41:28.695Z · LW(p) · GW(p)

barring anything else you might have meant, temporarily assuming yudkowsky's level of concern if someone builds yudkowsky's monster, then evidentially speaking, it's still the case that "if we build AGI, everyone will die" is unjustified in a world where it's unclear if alignment is going to succeed before someone can build yudkowsky's monster. in other words, agreed.

comment by Nick_Tarleton · 2025-02-02T23:07:16.203Z · LW(p) · GW(p)

It seems right to me that "fixed, partial concepts with fixed, partial understanding" that are "mostly 'in the data'" likely block LLMs from being AGI in the sense of this post. (I'm somewhat confused / surprised that people don't talk about this more — I don't know whether to interpret that as not noticing it, or having a different ontology, or noticing it but disagreeing that it's a blocker, or thinking that it'll be easy to overcome, or what. I'm curious if you have a sense from talking to people.)

These also seem right

- "LLMs have a weird, non-human shaped set of capabilities"

- "There is a broken inference"

- "we should also update that this behavior surprisingly turns out to not require as much general intelligence as we thought"

- "LLMs do not behave with respect to X like a person who understands X, for many X"

(though I feel confused about how to update on the conjunction of those, and the things LLMs are good at — all the ways they don't behave like a person who doesn't understand X, either, for many X.)

But: you seem to have a relatively strong prior[1] on how hard it is to get from current techniques to AGI, and I'm not sure where you're getting that prior from. I'm not saying I have a strong inside view in the other direction, but, like, just for instance — it's really not apparent to me that there isn't a clever continuous-training architecture, requiring relatively little new conceptual progress, that's sufficient; if that's less sample-efficient than what humans are doing, it's not apparent to me that it can't still accomplish the same things humans do, with a feasible amount of brute force. And it seems like that is apparent to you.

Or, looked at from a different angle: to my gut, it seems bizarre if whatever conceptual progress is required takes multiple decades, in the world I expect to see with no more conceptual progress, where probably:

- AI is transformative enough to motivate a whole lot of sustained attention on overcoming its remaining limitations

- AI that's narrowly superhuman on some range of math & software tasks can accelerate research

- ^

It's hard for me to tell how strong: "—though not super strongly" is hard for me to square with your butt-numbers, even taking into account that you disclaim them as butt-numbers.

↑ comment by TsviBT · 2025-02-03T02:40:49.017Z · LW(p) · GW(p)

I'm curious if you have a sense from talking to people.

More recently I've mostly disengaged (except for making kinda-shrill LW comments). Some people say that "concepts" aren't a thing, or similar. E.g. by recentering on performable tasks, by pointing to benchmarks going up and saying that the coarser category of "all benchmarks" or similar is good enough for predictions. (See e.g. Kokotajlo's comment here https://www.lesswrong.com/posts/oC4wv4nTrs2yrP5hz/what-are-the-strongest-arguments-for-very-short-timelines?commentId=QxD5DbH6fab9dpSrg [LW(p) · GW(p)], though his actual position is of course more complex and nuanced.) Some people say that the training process is already concept-gain-complete. Some people say that future research, such as "curiosity" in RL, will solve it. Some people say that the "convex hull" of existing concepts is already enough to set off FURSI (fast unbounded recursive self-improvement).

(though I feel confused about how to update on the conjunction of those, and the things LLMs are good at — all the ways they don't behave like a person who doesn't understand X, either, for many X.)

True; I think I've heard some various people discussing how to more precisely think of the class of LLM capabilities, but maybe there should be more.

if that's less sample-efficient than what humans are doing, it's not apparent to me that it can't still accomplish the same things humans do, with a feasible amount of brute force

It's often awkward discussing these things, because there's sort of a "seeing double" that happens. In this case, the "double" is:

"AI can't FURSI because it has poor sample efficiency...

- ...and therefore it would take k orders of magnitude more data / compute than a human to do AI research."

- ...and therefore more generally we've not actually gotten that much evidence that the AI has the algorithms which would have caused both good sample efficiency and also the ability to create novel insights / skills / etc."

The same goes mutatis mutandis for "can make novel concepts".

I'm more saying 2. rather than 1. (Of course, this would be a very silly thing for me to say if we observed the gippities creating lots of genuine novel useful insights, but with low sample complexity (whatever that should mean here). But I would legit be very surprised if we soon saw a thing that had been trained on 1000x less human data, and performs at modern levels on language tasks (allowing it to have breadth of knowledge that can be comfortably fit in the training set).)

can't still accomplish the same things humans do

Well, I would not be surprised if it can accomplish a lot of the things. It already can of course. I would be surprised if there weren't some millions of jobs lost in the next 10 years from AI (broadly, including manufacturing, driving, etc.). In general, there's a spectrum/space of contexts / tasks, where on the one hand you have tasks that are short, clear-feedback, and common / stereotyped, and not that hard; on the other hand you have tasks that are long, unclear-feedback, uncommon / heterogenous, and hard. The way humans do things is that we practice the short ones in some pattern to build up for the variety of long ones. I expect there to be a frontier of AIs crawling from short to long ones. I think at any given time, pumping in a bunch of brute force can expand your frontier a little bit, but not much, and it doesn't help that much with more permanently ratcheting out the frontier.

AI that's narrowly superhuman on some range of math & software tasks can accelerate research

As you're familiar with, if you have a computer program that has 3 resources bottlenecks A (50%), B (25%), and C (25%), and you optimize the fuck out of A down to ~1%, you ~double your overall efficiency; but then if you optimize the fuck out of A again down to .1%, you've basically done nothing. The question to me isn't "does AI help a significant amount with some aspects of AI research", but rather "does AI help a significant and unboundedly growing amount with all aspects of AI research, including the long-type tasks such as coming up with really new ideas".

AI is transformative enough to motivate a whole lot of sustained attention on overcoming its remaining limitations

This certainly makes me worried in general, and it's part of why my timelines aren't even longer; I unfortunately don't expect a large "naturally-occurring" AI winter.

seems bizarre if whatever conceptual progress is required takes multiple decades

Unfortunately I haven't addressed your main point well yet... Quick comments:

- Strong minds are the most structurally rich things ever. That doesn't mean they have high algorithmic complexity; obviously brains are less algorithmically complex than entire organisms, and the relevant aspects of brains are presumably considerably simpler than actual brains. But still, IDK, it just seems weird to me to expect to make such an object "by default" or something? Craig Venter made a quasi-synthetic lifeform--but how long would it take us to make a minimum viable unbounded invasive organic replicator actually from scratch, like without copying DNA sequences from existing lifeforms?

- I think my timelines would have been considered normalish among X-risk people 15 years ago? And would have been considered shockingly short by most AI people.

- I think most of the difference is in how we're updating, rather than on priors? IDK.

comment by Max H (Maxc) · 2023-07-08T17:51:39.424Z · LW(p) · GW(p)

I suspect there's a type of deep, thorough, precise understanding that one person (the intervener) can have of another person (the intervened), which makes it so that the intervener can confront the intervened with something like "If you and people you know succeed at what you're trying to do, everyone will die.", and the intervened can hear this.

+1 to this being possible, but really really hard, even when the goal is to intervene on just one specific person.

A further complication is that enough people have to hear this message, such that there is not a large enough group of "holdouts" left with the means and inclination to press on anyway. The size and resources of such a holdout group required to pose an existential threat to humanity, even when most others have correctly understood the danger, gets back to the question of timelines, the absolute difficulty of inventing AGI, the difficulty of inventing AGI relative to aligning it, and the willingness / ability of non-holdouts to impose effective restrictions backed by credible enforcement.

comment by Roman Leventov · 2023-07-10T08:33:30.599Z · LW(p) · GW(p)

There are many more interventions that might work on decades-long timelines that you didn't mention:

- Collective intelligence/sense-making/decision-making/governance/democracy innovation (and it's introduction in organisations, communities, and societies on larger scales), such as https://cip.org

- Innovation in social network technology that fosters better epistemics and social cohesion rather than polarisation

- Innovation in economic mechanisms to combat the deficiencies and blind spots of free markets and the modern money-on-money return financial system, such as various crypto projects, or https://digitalgaia.earth

- Fixing other structural problems of the internet and money infrastructure that exacerbate risks: too much interconnectedness, too much centralisation of information storage, money is traceless, as I explained in this comment [LW(p) · GW(p)]. Possible innovations: https://www.inrupt.com/, https://trustoverip.org/ , other trust-based (cryptocurrency) systems.

- Other infrastructure projects that might address certain risks, notably https://worldcoin.org, albeit this is a double-edged sword (could be used for surveillence?)

- OTOH, fostering better interconnectedness between humans and humans to computers, primarily via brain-computer interfaces such as Neuralink. (Also, I think in mid- to long-term, human-AI merge is only viable "good" outcome for humanity at least.) However, this is a double-edged sword (could be used by AI to manipulate humans or quickly take over humans?)

comment by Logan Zoellner (logan-zoellner) · 2025-01-10T15:53:27.870Z · LW(p) · GW(p)

In particular, even if the LLM were being continually trained (in a way that's similar to how LLMs are already trained, with similar architecture), it still wouldn't do the thing humans do with quickly picking up new analogies, quickly creating new concepts, and generally reforging concepts.

I agree this is a major unsolved problem that will be solved prior to AGI.

However, I still believe "AGI SOON", mostly because of what you describe as the "inputs argument".

In particular, there are a lot of things I personally would try if I was trying to solve this problem, but most of them are computationally expensive. I have multiple projects blocked on "This would be cool, but LLMs need to be 100x-1Mx faster for it to be practical."

This makes it hard for me to believe timelines like "20 or 50 years", unless you have some private reason to think Moore's Law/Algorithmic progress will stop. LLM inference, for example, is dropping by 10x/year, and I have no reason to believe this stops anytime soon.