Please don't throw your mind away

post by TsviBT · 2023-02-15T21:41:05.988Z · LW · GW · 49 comments

Dialogue

[Warning: the following dialogue contains an incidental spoiler for "Music in Human Evolution" by Kevin Simler. That post is short, good, and worth reading without spoilers, and this post will still be here if you come back later. It's also possible to get the point of this post by skipping the dialogue and reading the other sections.]

Pretty often, talking to someone who's arriving at the existential risk / AGI risk / longtermism cluster, I'll have a conversation like the following.

Tsvi: "So, what's been catching your eye about this stuff?"

Arrival: "I think I want to work on machine learning, and see if I can contribute to alignment that way."

T: "What's something that got your interest in ML?"

A: "It seems like people think that deep learning might be on the final ramp up to AGI, so I should probably know how that stuff works, and I think I have a good chance of learning ML at least well enough to maybe contribute to a research project."

T: "That makes sense. I guess I'm fairly skeptical of AGI coming very soon, compared to people around here, or at least I'm skeptical that most people have good reasons for believing that. Also I think it's pretty valuable to not cut yourself off from thinking about the whole alignment problem, whether or not you expect to work on an already-existing project. But what you're saying makes sense too. I'm curious though if there's something you were thinking about recently that just strikes you as fun, or like it's in the back of your mind a bit, even if you're not trying to think about it for some purpose."

A: "Hm... Oh, I saw this video of an octopus doing a really weird swirly thing. Here, let me pull it up on my phone."

T: "Weird! Maybe it's cleaning itself, like a cat licking its fur? But it doesn't look like it's actually contacting itself that much."

A: "I thought it might be a signaling display, like a mating dance, or for scaring off predators by looking like a big coordinated army. Like how humans might have scared off predators and scavenging competitors in the ancestral environment by singing and dancing in unison."

T: "A plausible hypothesis. Though it wouldn't be getting the benefit of being big, like a spread out group of humans."

A: "Yeah. Anyway yeah I'm really into animal behavior. Haven't been thinking about that stuff recently though because I've been trying to start learning ML."

T: "Ah, hm, uh... I'm probably maybe imagining things, but something about that is a bit worrying to me. It could make sense, consequentialist backchaining can be good, and diving in deep can be good, and while a lot of that research doesn't seem to me like a very hopeworthy approach, some well-informed people do. And I'm not saying not to do that stuff. But there's something that worries me about having your little curiosities squashed by the backchained goals. Like, I think there's something really good about just doing what's actually interesting to you, and I think it would be bad if you were to avoid putting a lot of energy into stuff that's caught your attention in a deep way, because that would tend to sacrifice a lot of important stuff that happens when you're exploring something out of a natural urge to investigate."

A: "That took a bit of a turn. I'm not sure I know what you mean. You're saying I should just follow my passion, and not try to work towards some specific goal?"

T: "No, that's not it. More like, when I see someone coming to this social cluster concerned with existential risk and so on, I worry that they're going to get their mind eaten. Or, I worry that they'll think they're being told to throw their mind away. I'm trying to say, don't throw your mind away."

A: "I... don't think I'm being told to throw my mind away?"

T: "Ok. The thing I'm saying might not apply much to you, I don't know. But there's a pattern that seems common to me around here, where people understandably feel a lot of urgency about the thing where the world might be destroyed, or at least they feel the urgency radiating off of everyone else around them, or at least, it seems as though everyone thinks that everyone ought to feel urgency. Then they sort of transmute that urgency, or the sense that they ought to be taking urgent action, into learning math or computer science urgently or urgently getting up to speed by reading a lot of blog posts about AI alignment. It's not a mistake to put a ton of effort into stuff like that, but doing that stuff out of urgency rather than out of interest tends to cause people to blot out other processes in themselves. Have you seen Logan's post on EA burnout?"

A: "Yeah, I read that one."

T: "Another piece of the picture is that there's an activity of a growing mind that I'm calling "following your interest" or "fun" or "play", or maybe I should say "thinking hard" or "serious play", and that activity is another thing that can get thrown under the bus. That activity is especially prone to be thrown under the bus, and it's especially bad to throw that activity under the bus. The activity of thinking hard as a result of play is especially prone to be thrown under the bus because it's a highly convergent subgoal, and so, like in Logan's post, it's one of those True Values that gets replaced by a Should Value. The activity of being drawn into playful thinking that becomes thinking hard is especially bad to throw under the bus because it's a central way that a mind grows. So if you're not giving yourself the space to sometimes be drawn into playful thinking, then you're missing out on deepening your understanding of stuff that you could have been playfully thinking hard with, and also you're letting that muscle atrophy."

A: "Let me see if I've got what you're saying. There's two kinds of thinking, the True thinking and the Should thinking. And the Should thinking is worse than the True thinking, but people do the Should thinking?"

T: "Er, something like that... except that the phrase "Should thinking" is ambiguous here, and that's important. There's fake Should thinking, or let me say "Urgent fake thinking", which isn't really thinking, in that it doesn't take in and integrate much information, or notice contradictions, or deduce consequences of beliefs, or search for hypotheses and concepts, or make falsifiable predictions, or distill ideas. For example, rehearsing arguments that you read in a blog post, in response to rehearsed questions, usually isn't thinking, it's usually fake thinking."

A: "Got it, got it."

T: "Then there's Urgent real thinking, which is actually thinking, and has the structure: there's this way I want the world to go; how can I make the world go that way? Both backward chaining and forward chaining would be Urgent real thinking. It would also be Urgent real thinking to ask: What sort of scientific understanding would I have to have, in order to satisfy this subgoal of making the world go the way I want it to? And then doing that science. All of that activity has the structure of: I see how thinking about this stuff should help with getting the world to go a certain way. On the other hand, there's Playful thinking. Playful thinking doesn't have to have a justification like that; it's coming from a different source. So I'm saying that Urgent thinking has a tendency to be fake thinking, that even Urgent real thinking has a tendency to squash Playful thinking, and that these two things are connected."

A: "Sorry, what do you even mean by Playful thinking?"

T: "Yeah, sorry, I should have been more clear. It's sort of subtle, or even sacred if you'll permit me to use that word, so it's hard to just define or, like, comprehensively describe. I know it as a phenomenon, something I encounter, without knowing what it really is and how it works and stuff. Anyway, it's something like: the thoughts that are led to exuberantly play themselves out in the natural course of their own time. This isn't supposed to be something alien and complicated, this is supposed to be a description of something that you're already intimately familiar with, even made out of, and that you definitely did when you were a kid. It's probably whatever was happening with you that led you to make hypotheses about what that octopus was doing."

A: "Could you give an example of Playful thinking?"

T: "Gah, I really need to write this up as a blog post. Giving an example that I'm not really into at the moment seems kind of bad. But ok, so, [[goes on a rambling five-minute monologue that starts bored and boring, until visibly excited about some random thing like the origin of writing or the implausibility of animals with toxic flesh evolving or emergent modularity enabled by gene regulatory networks or the revision theory of truth or something; see the appendix for examples]]"

A: "Ok I think I have some idea of what you're talking about. But it sure sounds like what you're recommending is just inefficient? It seems like there's a bunch of smart people who have thought about alignment a lot more than I have, and they've said that there's all this stuff like linear algebra and probability theory that's useful for alignment, so it seems reasonable to learn that stuff. And I can learn it faster if I focus on it, instead of getting distracted, right? I think if I followed your advice I'd just goof off a lot."

T: "I'm definitely not saying not to dive in deep, go fast, et cetera. I'm saying to not not also do non-Urgent thinking. Like, if you find yourself noodling or doodling, don't stop yourself due to the overwhelming urgency of reading the next page of the textbook. Even assuming that you're just trying to learn some list of material as fast as possible, playful thinking is still needed. There's a thing I like to do, where I'll stare at a mathematical object, and keep staring until it sort of reaches "semantic satiation", and I can see it in a more naive way. And then I can tweak it, or look at it upside down, or combine it with something else, or ask why it has to be this way or that way rather than some other way. And this feels like playing, but I think it gives me the ideas more thoroughly, and then they're more useful to me in other contexts. So even from a backchaining point of view it ends up getting you something that a more functional goal, like "can I do the exercises in the textbook", doesn't always get you."

A: "I'm a little confused. Before I thought you were saying that people should let themselves get nerdsniped more, instead of only thinking about stuff that's planning ahead to what they want to work on. Now it sounds like you're saying there's some other way to do math that's more useful for alignment work?"

T: "Those two recommendations are connected. Both of those recommendations are consequences of the following proposition: your mind knows a lot about what's worth thinking about that "you" don't know, in some sense. And you can't just get that stuff by asking your mind to tell you that stuff; you have to let your mind do its thing sometimes, without requiring a legible justification. The name "nerdsniping" doesn't seem quite right, though. "Nerdsniping" is where someone else crafts a puzzle that catches your attention. That can be fun and good, but to my taste, it tends to have a flavor of being a bit too cute or clever. I want to point instead at something that involves eternity and elegance. Things that have an eternity to them--e.g. Euclidean space, the invention of words, embryogenesis--are more likely to draw your mind along into thinking, and they do that without being clever or polished, because the natural world is just like that. Elegance has something to do with eternity, like how the architecture of a church evokes something that normal buildings don't, or how a clear mathematical definition doesn't have superfluous complications. Elegance is something that your mind instinctively tries to find or create."

A: "Would elegance really help with learning things faster? It seems like, yeah, I could spend a long time getting a really good understanding of one thing, and that would have some benefits, like I could apply it faster. But it seems more important to get a pretty good understanding of a lot of things, so I can start combining ideas and understanding where the alignment field is currently at and thinking about how I can contribute."

T: "An analogy here is that covering a lot of ground too quickly is like programming a project just using the built-ins you already know, using repetitive hacks that aren't easy to understand or modify later and that have interfaces enmeshed with other functions. You can go fast in the short term, but in the longer term, you're going to come back and have a hard time understanding why the code is the way it is, and you'll probably have to do a bunch of work refactoring things to separate concerns so that you can understand the code well enough to modify it efficiently. Playing with ideas is like learning the built-ins that are appropriate to the task at hand, and factoring your code so pieces are reusable, and taking the time to reduce terms that do stuff like have intermediate variable assignments that can be eliminated with no detriment, and factoring out repetitive pieces of code into a single generalized function."

A: "Would people around here really disagree with that? I still don't feel like I'm being told to throw my mind away."

T: "I don't know. Maybe most people would agree with what I'm saying, at least verbally, and even consider it obvious. But also empirically people tend to get the message that they should orient with some kind of urgency and all-consuming attention around existential risk. This could be mediated implicitly, through patterns of attention. Like, someone tells you "Give yourself plenty of time for other pursuits, and make sure to leave a line of retreat from this existential risk stuff.", which is good advice, and then also only gets excited talking to you if you're describing your plan for how to prevent the world from being destroyed by AGI. Also, people arriving here with an attitude of deference, e.g. studying textbooks that other people told you to study, are prone to be very attuned to that sort of message. Even if no one were sending those messages, some people would impute those messages to their social context. A pattern I have noticed in myself, for example, is: "Oh, I'm feeling energetic at the moment, so now is a good time to sit down and actually think hard about alignment.", instead of seeing what I actually feel like doing given permission to decide to do something for the fun of it, and this behavior pattern seems like a recipe for getting into an unhelpful rut."

A: "Ok, I think I'm getting the picture. I'll make an effort to have more fun with math, and hopefully I'll learn it more deeply. That seems useful."

T: "Ack."

A: "Oh no. What did I do? :)"

T: "It's just that there's a danger in having fun with math because it helps you learn it more deeply, rather than because it's fun. Talking about how it helps you learn it more deeply is supposed to be a signpost, not always the main active justification. A signpost is a signal that speaks to you when you're in a certain mood, and tells you how and why to let yourself move into other moods. A signpost is tailored to the mood it's speaking to, so it speaks in the language of the mood that it's pointing away from, not in the language of the mood it's pointing the way towards. If you're in the mood of justifying everything in terms of how it helps decrease existential risk, then the justification "having fun with math helps you learn it better" might be compelling. But the result isn't supposed to be "try really hard to do what someone having fun would do" or "try really hard to satisfy the requirement of having fun, in order to decrease X-risk", it's supposed to be actually having fun. Actually having fun is a different mood from justifying everything in terms of X-risk. Imagine a six-year-old having fun; it's not because of X-risk."

A: "How am I supposed to try to have fun, without trying to have fun?"

T: "Come home to the unique flavor of shattering the grand illusion. Come home to Simple Rick's."

A: "That was a terrible Southern accent."

T: "Fair enough. Anyway, you might have been trained in school to falsify your preferences by pretending to have fun doing stupid activities. So you could try to do the opposite of everything you'd do in school. You could also try a "Do Nothing" meditation and see what happens. It might also help to think of having fun sort of like walking: you know how in some sense, and you even have an instinct for it; having fun, if you've forgotten, is more a question of letting those circuits--which don't require justification and just do what they do because that's what they do--letting those circuits do what they do, and enjoying that those circuits do what they do. Basically the main thing here is just: there's a thing called your mind, your mind likes to play seriously, and consider not preventing your mind from playing seriously."

A: "Got it."

T: "The end."

Synopsis

- Your mind wants to play. Stopping your mind from playing is throwing your mind away.

- Please do not throw your mind away.

- Please do not tell other people to throw their mind away.

- Please do not subtly hint with oblique comments and body-language that people shouldn't have poured energy into things that you don't see the benefit of. This is in conflict with coordinating around reducing existential risk. How to deal with this conflict?

- If you don't know how to let your mind play, try going for a long walk. Don't look at electronics. Don't have managerial duties. Stare at objects without any particular goal. It may or may not happen that some thing jumps out as anomalous (not the same as other things), unexpected, interesting, beautiful, relatable. If so, keep staring, as long as you like. If you found yourself staring for a while, you may have felt a shift. For example, I sometimes notice a shift from a background that was relatively hazy/deadened/dull, to a sense that my surroundings are sharp/fertile/curious. If you felt some shift, you could later compare how you engage with more serious things, to notice when and whether your engagement takes on a similar quality.

Highly theoretical justifications for having fun

- Throwing your mind away makes life worse for you, and you should be wary of doing things that make life worse for you. This is the blatantly obvious central thing here.

- Interrupting playful thinking causes learned helplessness about playful thinking. (Imagine knocking over a toddler's blocks whenever they are stacked three-high or more.) On the other hand, giving yourself to playful thinking makes it so that playful thinking expects you to be given to it. When playful thinking expects to be given your mind through which to play, playful thinking disposes itself to play.

- Playful thinking puts ideas into a form where they're more ready to combine deeply with other ideas.

- Playful thinking indexes ideas more thoroughly, so that they come up when helpful and in helpful ways.

- Your senses of taste, interest, fun, and elegance know [stuff about what's worth thinking about] that isn't known by you or by those whose advice you take. Analogously, your sense of beautiful code knows stuff about good programming that you don't know.

- Play is non-specific practice. It accesses canonical and practiceworthy structure.

- Playful thinking sets up questions in your brain that you wouldn't have known how to set up just by explicitly trying to set up questions. A question is a record of a struggle to deal with some context. These questions, like the dozen problems Feynman carries around with him, set you up to connect with new ideas more thoroughly.

- Play exercises the ability of setting up problem contexts and discerning interesting problem contexts. This ability is extremely under-exercised by more deferential ways of learning, and is especially needed in pre-paradigm fields.

- Play is a long-term investment in deep understanding.

- Many people are far from maximally exerting themselves. Someone who is far from maximally exerting themselves can't have very large and fundamental tradeoffs between exerting themselves towards one or another purpose, such as having fun vs. doing alignment research. Instead of trading off between two activities, they could exert themselves more if they had more exertion-worthy activities available. Playfully thinking hard is a surprisingly exertion-worthy activity.

- Being initially drawn into playful thinking correlates with and grows into being drawn further into more elaborated playful thinking, which is continuous with any real thinking. Your thoughts about strategy, the alignment problem, or whatever important thing, can inform and be informed by your taste in what to playfully think about, without having to be the justification for your playful thinking.

- Many ideas are provisional: they have yet to be fully grasped, made explicit, connected to what they should be connected to, had all their components implemented, carved at the joints, made available for use, indexed. Many important ideas are provisional. Playing with provisional ideas helps nurture them.

- Your sense of fun usefully decorrelates you from others, leading you to do computations that others aren't doing, which greatly improves humanity's portfolio of computations.

- You're more exercising ἀρετή ("excellence", cognate to "rational") when doing things that your mind is drawn to do by its own internal criteria.

- Your sense of fun decorrelates you from brain worms / egregores / systems of deference, avoiding the dangers of those.

- Brain worms / egregores / systems of deference that would punish you for devoting energy to playful thinking, are likely to be ineffective at achieving worthwhile goals, among other things because they cut off the natural influx of creativity that solves novel problems. A social coordination point can appear powerful just by being predominant, and therefore appear desirable to join, without actually being worthwhile to join.

- Requiring that things be explicitly justified in terms of consequences forces a dichotomy: if you want to do something for reasons other than explicit justifications in terms of consequences, then you either have to lie about its explicit consequences, or you have to not do the thing. Both options are bad.

- Intensity in the face of existential risk is appropriate. To enable intensity in the face of existential risk, don't require yourself to throw your mind away in order to be intense.

Appendix: What is this "fun" you speak of?

What's a circle?

Take a smooth closed curve C in the plane. These conditions are equivalent:

- There is a point p such that all points on C are equidistant from p.

- C has constant curvature.

- (If C bounds a disc) C bounds the maximum area that's possible to bound with a curve of the same length as C.

- The group of isometries of the plane that maps C to itself is a nontrivial connected compact topological group.

These conditions all define a circle in the plane. But what happens if instead of a plane, we look at some other manifold? See here for some discussion.
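To get a feel for the maximum-area condition, here is a minimal sketch (not from the post itself) that computes the isoperimetric quotient 4πA/L² for regular polygons inscribed in a unit circle. The function names are made up for illustration. The quotient is 1 exactly for a circle and stays below 1 for each polygon, creeping up as the number of sides grows.

```python
import math

def isoperimetric_quotient(area, perimeter):
    """Return 4*pi*A / L^2; for closed plane curves this is at most 1."""
    return 4 * math.pi * area / perimeter ** 2

def regular_polygon(n, radius=1.0):
    """Area and perimeter of a regular n-gon inscribed in a circle of the given radius."""
    area = 0.5 * n * radius ** 2 * math.sin(2 * math.pi / n)
    perimeter = 2 * n * radius * math.sin(math.pi / n)
    return area, perimeter

if __name__ == "__main__":
    for n in (3, 4, 6, 12, 100):
        q = isoperimetric_quotient(*regular_polygon(n))
        print(f"{n:>3}-gon: {q:.4f}")
    # Unit circle: area pi, circumference 2*pi.
    print(f"circle : {isoperimetric_quotient(math.pi, 2 * math.pi):.4f}")
```

This only probes one of the four conditions, and only for a convenient family of shapes; it is a toy to stare at, not a proof of the isoperimetric inequality.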

Hookwave

It's 0400 and it's raining heavily. Tributaries swell along curbs, then clatter down drains to join the subterranean sewerriver. In the middle of one street, the downward slope perpendicularly away from the curb exceeds the slope along the curb, sending the curb's tributary across to the other side of the street. Hundreds of criss-crossing lines checker the flowing sheet of water that is spread out over the concrete. How does that make sense? It looks as though water is flowing in two skewed directions, one sheet flowing over or through the other sheet. Are these just wakes from the bumps in the asphalt? But it really looks like the water is flowing in both skew directions. Maybe all the little wakes are making ripples, so there are waves traveling in both directions?

In another curb's rivulet, there's an oddly-shaped wave. Most curb rivulets have lots of little wakes in them from bumps in the asphalt or from influxes reflecting off the curb. These little wakes usually curve so they join with the flow: they start off at some angle away from the boundary of the rivulet towards the center of the rivulet, and then curve to be more parallel with the direction of flow. But not this hookwave:

It's curving off flowward and to the right, so that its angle with the direction of flow increases flowward:

Why is it like that? Why is this wake different from all other wakes? This one and a few other hookwaves always come right after a divot in the concrete against the curb. If a foot (waterproofed, dry-socked) is placed just upstream of the divot, blocking the half of the rivulet that's further from the curb, then the hookwave straightens out a bit, like a more usual wake, contra the intuition that the flowing water should be "sweeping back" the hookwave. Does the flowing water instead "pile up" to form the hook?

Random smooth paths

This cemetery is large and round, so it has a network of internal paths. At each intersection there are 2 or 3, or occasionally 4 or 5 directions to continue in, "somewhat forwardly", without doubling straight back. Choosing randomly would follow a sort of constrained random walk: there's a kind of inertial or smoothness condition, where random turns are allowed but doubling back is disallowed. What sort of random walk is that? Staying on the paths and keeping off the grass reduces the random walk to a random (asymmetric) walk on a(nother) graph.
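One simple way to play with the on-the-paths version is a non-backtracking random walk: at each intersection, pick uniformly among the neighbours other than the one you just came from. The sketch below assumes a small made-up path network (`PATHS` and its place names are hypothetical) and ignores geometry entirely, so it only forbids an immediate reversal rather than capturing "somewhat forwardly". The walker's state is really the pair (previous, current), which is one way to see the walk as an ordinary random walk on another graph whose vertices are directed edges.

```python
import random

# Hypothetical toy path network: each intersection maps to its neighbours.
PATHS = {
    "gate": ["oak", "pond", "chapel"],
    "oak": ["gate", "pond", "hill"],
    "pond": ["gate", "oak", "chapel", "hill"],
    "chapel": ["gate", "pond", "hill"],
    "hill": ["oak", "pond", "chapel"],
}

def non_backtracking_walk(graph, start, steps, rng=None):
    """Walk `steps` edges, never immediately returning along the edge just used."""
    rng = rng or random.Random()
    walk = [start]
    previous = None
    for _ in range(steps):
        current = walk[-1]
        # Exclude the intersection we just arrived from.
        options = [n for n in graph[current] if n != previous]
        if not options:
            # Dead end: the only way on is back, so allow it.
            options = graph[current]
        previous = current
        walk.append(rng.choice(options))
    return walk

if __name__ == "__main__":
    print(" -> ".join(non_backtracking_walk(PATHS, "gate", 10)))
```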

What if the grass isn't off limits, and there are choices at every moment which way to turn, rather than just at intersections? The Wiener process (which describes Brownian motion) could describe this kind of walk. But a Wiener process is infinitely jagged; the wheel gets turned arbitrarily sharply, arbitrarily densely in time. It's very far from smooth.

What if the path has to be an analytic function (comes from a Taylor series)? Then it's smooth, but it's also determined everywhere if it's determined on a small patch. So it's not intuitively random. A random path should have some independence between changes that happen at different points.

What about smooth paths? Smooth paths are nice, in that they at least have all derivatives at all points, and aren't determined by local patches, and can be stitched together (if given a bit of breathing room). Is there a nice notion of a smooth random walk? More detail here.

Mandala

Lying on the grass between the sidewalk and an Oakland street:

What sort of symmetry does this mandala have? The purple petals around the outer rim have sixteenfold rotational symmetry (and we could allow reflections, giving $D_{16}$). The purple petals near the center have eightfold rotational symmetry. Centerward of the innermore purple petals, we have 28-fold, 24-fold, and 32-fold symmetry, and then the red band has a full circle's symmetry. The innermost ring has 12-fold symmetry. So as a whole the mandala has 4-fold symmetry.

Abstractly, there's an embedding of $C_4$ into each of $C_{16}$, $C_8$, $C_{28}$, $C_{24}$, $C_{32}$, and $C_{12}$, and $C_4$ is the biggest group that embeds into all those groups. That's just a fancy way of saying that four is the greatest common divisor of all those numbers. We could also say that there's an embedding of $D_4$ into each of $D_{16}$, $D_8$, $D_{28}$, $D_{24}$, $D_{32}$, and $D_{12}$.
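The greatest-common-divisor reading can be checked in a couple of lines. This is just a sketch using the ring orders named above (the variable names are made up): a rotation survives as a symmetry of the whole figure only if every ring shares it.

```python
from functools import reduce
from math import gcd

# Rotational symmetry orders of the rings, in the order the text lists them.
ring_orders = [16, 8, 28, 24, 32, 12]

# The whole figure keeps only the rotations shared by every ring.
overall_order = reduce(gcd, ring_orders)
print(overall_order)  # 4

# Sanity check: a quarter turn is a whole number of steps in every ring.
print(all(n % overall_order == 0 for n in ring_orders))  # True
```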

Given some set of groups, is there always a unique greatest common subgroup? What are greatest common subgroups for some pairs of finite groups? Given some groups $G_1, \dots, G_n$ and a greatest common subgroup $H$, is there always a corresponding "mandala"--a geometric figure $M$ that's a union of figures $M_1, \dots, M_n$ such that the symmetry group of each $M_i$ is exactly $G_i$ and the symmetry group of $M$ is exactly $H$?

Water flowing uphill

The slope of this segment of the street is by all passerby accounts, eh, hard to tell but a bit uphill, coming from this direction. It rained an hour ago. In the narrow channel between the curb and the retaining wall, there's water flowing. Which direction is it flowing? The water is flowing uphill. What.

Guitar chamber

The hollow chamber of an acoustic guitar makes the sound of a plucked string fuller. And louder? Does it? It's something about "resonance" and "interference". But how could the chamber make the sound louder? Isn't all the energy that's bleeding off from the vibrating string already being emitted as sound waves?

Maybe it's that the sound waves at the wavelengths that fit in the chamber don't interfere with themselves. But how would that make them louder? Do they "build up" over a few milliseconds? But that doesn't make them louder, the sound waves are still leaving the guitar pretty much the whole time while the string is picked, they're not built up and then released in a loud burst.

Maybe the chamber just redirects the sound forward, so it's all going in one direction, and it's louder in that direction but quieter behind the guitar?

Maybe the chamber isn't making the sound louder by adding energy, but just putting the soundwaves at different frequencies all into one frequency. That could make it sound louder, maybe? Why exactly would that sound louder? How does it put the energy from one frequency into another frequency? And also, how does whistling work? Or speaking, or howling wind?

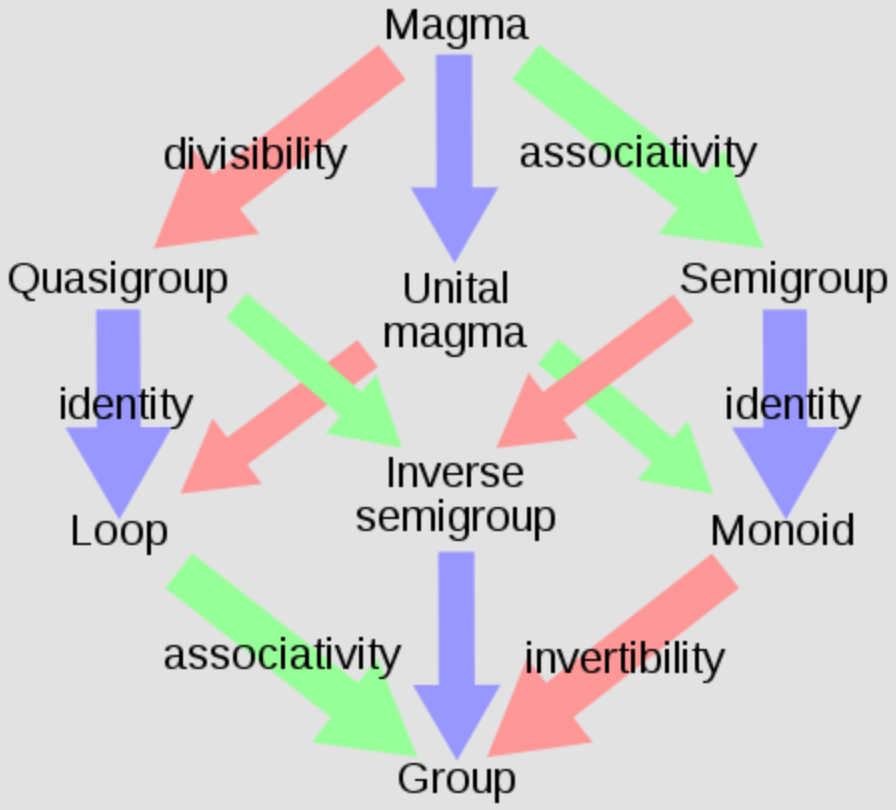

Groups, but without closure

A group is a set of transformations that has an identity transformation that doesn't do anything, inverse transformations that undo transformations, and composite transformations that are the result of doing one transformation followed by doing another transformation.

What happens if we tweak this concept to see how it works? What if there isn't an identity transformation? Why, that's just an inverse semigroup, of course. Mathematicians have got names for all those sorts of things:

(From Wikipedia)

But what about closure under composition? It makes sense that transformations are closed under composition: if you do one transformation and then do another, you've done some kind of transformation. So whatever you've done, that's the transformation that's the composition of the two component transformations.

But what if there were two transformations that you could do individually, but that you couldn't do one after the other? For example, look at the set of transformations of the form: I pick up this heavy box and move it to another spot on the ground. There's an identity transformation (do nothing), and there's usually an inverse transformation (if I moved the box 10 feet northwest, now I move it 10 feet southeast, and now it's as if I've done nothing). But what if I get too tired of moving the box after moving it a mile? Then I can move the box 1 mile northwest, but I can't do that transformation twice.
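The box example can be sketched as a partial operation. The model below is only one possible reading, with assumptions that aren't in the text: moves are signed displacements in feet along a single northwest-southeast line, a move is available only if its net displacement is at most `LIMIT` (one mile), and `compose` returns `None` when the combined move isn't available. All the names are hypothetical.

```python
from typing import Optional

LIMIT = 5280  # assumed stamina limit: one mile, in feet

IDENTITY = 0  # "do nothing"

def is_move(d: int) -> bool:
    """A move is a signed displacement (feet along one line) within the limit."""
    return abs(d) <= LIMIT

def compose(a: int, b: int) -> Optional[int]:
    """Do move a, then move b; None means the combined move is not available."""
    total = a + b
    return total if is_move(total) else None

def inverse(d: int) -> int:
    """Moving the same distance the other way undoes the move."""
    return -d

if __name__ == "__main__":
    print(compose(10, inverse(10)))   # 0: ten feet out, ten feet back
    print(compose(LIMIT, LIMIT))      # None: can't move a mile twice
    # Bracketing matters once composition is partial:
    print(compose(LIMIT, compose(LIMIT, inverse(LIMIT))))  # 5280
```

In this model there's an identity and every move has an inverse, but closure fails. Bracketing also starts to matter: "a mile, then (a mile and back)" is fine, while "(a mile, then a mile), then back" can't even get started. A model that tracks total effort instead of net displacement would behave differently again.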

So what we have is some kind of "almost a group". What do these things look like? What are some interesting things that can happen with "almost a group"s that can't happen with groups? Is there a nice classification of them? (Compare approximate groups.)

Wet wall

Sometimes walls are wet.

Sometimes they're wet shaped like a V, and sometimes like an A. Why, exactly?

Thanks to Justis Mills for feedback on this post.

49 comments

comment by janus · 2023-02-16T04:40:28.311Z · LW(p) · GW(p)

I am so glad that this was written. I've been giving similar advice to people, though I have never articulated it this well. I've also been giving this advice to myself, since for the past two years I've spent most of my time doing "duty" instead of play, and I've seen how that has eroded my productivity and epistemics. For about six months, though, beginning right after I learned of GPT-3 and decided to dedicate the rest of my life to the alignment problem, I followed the gradients of fun, or as you so beautifully put it, thoughts that are led to exuberantly play themselves out, a process I wrote about in the Testimony of a Cyborg appendix of the Cyborgism post. What I have done for fun is largely the source of what makes me useful as an alignment researcher, especially in terms of comparative advantage (e.g. decorrelation, immunity to brainworms, ontologies shaped/nurtured by naturalistic exploration, (decorrelated) procedural knowledge).

The section on "Highly theoretical justifications for having fun" is my favorite part of this post. There is so much wisdom packed in there. Reading this section, I felt that a metacognitive model that I know to be very important but have been unable to communicate legibly has finally been spelled out clearly and forcefully. It's a wonderful feeling.

I expect I'll be sending this post, or at least that section, to many people (the whole post is long and meandering, which I enjoyed, but it's easier to get someone to read something compressed and straight-to-the-point).

Replies from: TsviBT

comment by riceissa · 2023-02-17T19:58:59.979Z · LW(p) · GW(p)

I upvoted this post because I think it's talking about some important stuff in ways (or tone or something) I somehow like better than what some previous posts in the same general area have done.

But also something feels really iffy about the way the word "fun" is used in this post. If I think back to the only time in my life I actually had fun, which was my childhood, I sure did not have fun in the ways described in this post. I had fun by riding bikes (but never once stopping to get curious about how bike gears work), playing Pokemon with my friends (but not actually being very strategic about it -- competitive battling/metagame would have been completely alien to my child's self), making dorodango (but, like, just for the fun of it, not because I wanted to get better at making them over time, and I sure did not ever wonder why different kinds of mud made more stable or shinier dorodango, or what the shine even consists of), etc.

The kind of "fun" that is described in this post is, I think, something I learned from other people when I was in my early teens or so, not something I was born with (as this post seems to imply?). And I learned and developed this skill because I was told (in books like Feynman's and the Sequences) that this is what actually smart people do.

So personally I feel like I am trying to get back the "original fun" that I experienced as a child, as well as trying to untangle the "useful/technical fun" from its social influences and trying to claim it as my own, or something, in addition to doing the kind of thing suggested by this post.

Replies from: cherrvak, TsviBT

↑ comment by cherrvak · 2023-02-17T23:01:22.816Z · LW(p) · GW(p)

I agree. It seems awfully convenient that all of the “fun” described in this post involves the legibly-impressive topics of physics and mathematics. Most people, even highly technically competent people, aren’t intrinsically drawn to play with intellectually prestigious tasks. They find fun in sports, drawing, dancing, etc. Even when they adopt an attitude of intellectual inquiry to their play, the insights generated from drawing techniques or dance moves are far less obviously applicable to working on alignment than the insights generated from studying physics. My concern is that presenting such a safely “useful” picture of fun undercuts the message to “follow your playful impulses, even if they’re silly”, because the implicit signal is “and btw, this is how smart people like us should be having fun B)”

Replies from: TsviBT

↑ comment by TsviBT · 2023-02-18T07:23:29.064Z · LW(p) · GW(p)

See my comment on the parent.

undercuts the message to “follow your playful impulses, even if they’re silly”

That's a fine message, but it's not the message of the post. The concept described in the post is playful thinking, not fun. It does use the word "fun" in a few places where the more specific phrase would arguably have been better, so the miscommunication is probably my fault.

are far less obviously applicable to working on alignment than the insights generated from studying physics

I literally don't believe this, but even if it were true, the post doesn't argue to do math because it's useful for alignment. The reason that alignment is even mentioned, is that the phenomenon of throwing away your mind is so perverse that it should be obviously bad even for someone who only cares about alignment, or at least thinks they want to sacrifice their wellbeing for the sake of alignment; and because people around X-risk have a tendency to throw their mind away anyway. If someone tells me they decided not to take a 2 year surfing vacation because alignment is too urgent, then I might worry a bit about how that sits in them, but the decision makes sense to me and seems sane. If someone tells me they stopped thinking about stuff they're really curious about because they don't see how it helps alignment, I'm like "No, what the hell are you doing, who told you that was a good idea??".

Replies from: cherrvak

↑ comment by cherrvak · 2023-02-18T15:32:35.790Z · LW(p) · GW(p)

Thank you for clarifying your intended point. I agree with the argument that playful thinking is intrinsically valuable, but still hold that the point would have been better-reinforced by including some non-mathematical examples.

I literally don’t believe this

Here are two personal examples of playful thinking without obvious applications to working on alignment:

- A half-remembered quote, originally attributed to the French comics artist Moebius: “There are two ways to impress your audience: do a good drawing, or pack your drawing full of so much detail that they can’t help but be impressed.” Are there cases where less detail is more impressive than more detail? Well, impressive drawings of beautiful girls frequently have strikingly sparse detail on the face; see drawings by Junji Ito, John Singer Sargent, Studio Kyoto. Why are these drawings appealing? Maybe because the sparse detail, mostly concentrated in the eyes (though Sargent reduces the eyes to simplified block-shadows as well), implies smooth, flawless skin. Maybe because the sparsity allows the viewer to interpret the empty space to contain their own idealized image: the Junji Ito girl’s nasal bridge is left undefined, so you can imagine her having a straight or button nose according to preference. Maybe there’s something inherently appealing to leaving shapes implied; doesn’t that Junji Ito girl’s nasal bridge remind you of the Kanizsa Triangle? Are people intrinsically drawn to having the eye fooled by abstracted drawings?

- A number of romantic Beatles songs have sinister undertones: the final verse of Norwegian Wood refers to committing arson, and even the sentimental Something has this bizarre protest “I don't want to leave her now”— ok dude, then why are you bringing it up? Is this consistent through their discography? Is this something unique to the Beatles, or were sinister love songs a well-established pop genre at the time? Did these sinister implications go over the heads of audiences, or were they key to the masses’ enjoyment of the songs?

If you can think of ways that these lines of thought apply to alignment, please let me know. This isn’t a “gotcha”, if you actually came up with something it’d be pretty dope.

Replies from: TsviBT, ChristianKl

↑ comment by TsviBT · 2023-02-19T14:48:30.593Z · LW(p) · GW(p)

We might have different things in mind with "intellectual inquiry"; depth is important. The first one seems like a seed of something that could be interesting. Phenomenology is the best data we have about real minds.

But mainly I made that comment because I don't see insights from physics being "obviously applicable to working on alignment". (This is maybe a controversial take and I haven't thought about it that much and it could be stupid. I might also do accounting differently, labeling more things as being "really math, not physics".)

↑ comment by ChristianKl · 2025-04-23T14:18:47.222Z · LW(p) · GW(p)

Are people intrinsically drawn to having the eye fooled by abstracted drawings?

If you want to apply this to alignment, the next question would be: is there something in human nature that causes this, or would an AGI likely be drawn to similar effects?

If you gather knowledge about what intrinsically motivates AGIs as well, that would be valuable for alignment research, because it's about creating motivations for AGIs to do things.

Did these sinister implications go over the heads of audiences, or were they key to the masses’ enjoyment of the songs?

You can reframe that question as, "Is this aspect of the Beatles songs aligned with the desires of the audience?"

Both of your examples are about what people or agents in general value. AI alignment is about how to align what humans and AGIs value. Understanding something about the nature of value seems applicable to AI alignment.

↑ comment by TsviBT · 2023-02-18T07:15:17.369Z · LW(p) · GW(p)

Thanks.

You make an important point. Fun in general is a broader thing than playful thinking (and deeper and more sacred in some ways), so playful thinking doesn't at all encompass all of fun. Fun and playful thinking are related though; playful thinking is supposed to be fun, and at least for me, the issue with playful thinking is that the fun is being stifled. So following on your last paragraph, the deeper thing is fun simpliciter.

Another point, only hinted at by the phrase "serious play", is that the concept of playful thinking is not supposed to imply unseriousness. Seriousness is not the same as explicit-usefulness-justification, because play can be serious but it's almost impossible for activity driven by explicit-usefulness-justification to be genuine, fully deep fun. (It can be somewhat fun, and some people are blessed to have explicit-usefulness-justifications that spur them into activity that then becomes genuine, fully deep fun. I can sort of do that but not fully, especially because my explicit-usefulness-justifications are pretty demanding and don't want me getting confused about what counts as success.) Serious play, in its seriousness, can involve instruction and taste. It could involve a mentor giving you harsh feedback. It could involve, for example, you saying to yourself: the thing I'm learning about right now, in the way I'm learning about it, does it access [what intuitively feels like] the living, underlying, hidden structure of the world? And then modifying how you're engaging to heighten that sense. It could involve your case of learning a mode of thinking from someone else.

> trying to claim it as my own

My two cents (although I'm worried about intruding on this, and worried about other people retroactively intruding such that the process is distorted): if at some point you realize that you've gained a lot on claiming it as your own, it would be very valuable to describe that to others. (If you'll allow a flight of fancy: We can only send messages backwards in time by a few years, so only messages that are taken seriously as a priority to transmit backwards in time will be relayed fast enough to outpace the forward flow of time, and make it back to primordiality.)

comment by Ruby · 2023-02-22T02:17:44.174Z · LW(p) · GW(p)

Curated. This post feels timely and warranted given the current climate. I think we, in our community, were already at some risk of throwing out our minds a decade ago, but it was less when it was easy to think timelines were 30-60 years. That allowed more time for play. Now as there's so much evidence of imminence and there are more people doing more things, AI x-risk isn't a side interest for many but a full-time occupation, yes, I think we're almost colluding in creating a culture that doesn't allow time for play. I like that this post makes the case for pushing back.

Further, this post points at something I want to reclaim for the spirit of LessWrong, something I feel like used to be more palpable than now. Random posts about There’s no such thing as a tree (phylogenetically) [LW · GW] or random voting theory posts felt rooted in this kind of play – the raw interest and curiosity of the author rather than some urgent importance of the topic. The concern I have that makes me want to boost the default prominence of rationality and world modeling posts (see LW Filter Tags (Rationality/World Modeling now promoted in Latest Posts) [LW · GW]) is not that I don't like the AI posts, but in large part that I want to see more of the playful posts of yore.

comment by Quadratic Reciprocity · 2023-02-16T13:34:16.103Z · LW(p) · GW(p)

This was a somewhat emotional read for me.

When I was between the ages of 11-14, I remember being pretty intensely curious about lots of stuff. I learned a bunch of programming and took online courses on special relativity, songwriting, computer science, and lots of other things. I liked thinking about maths puzzles that were a bit too difficult for me to solve. I had weird and wild takes on things I learned in history class that I wanted to share with others. I liked looking at ants and doing experiments on their behaviour.

And then I started to feel like all my learning and doing had to be directed at particular goals and this sapped my motivation and curiosity. I am regaining some of it but it does feel like my ability to think in interesting and fun directions has been damaged. It's not just the feeling of "I have to be productive" that was very bad for me but also other things like wanting to have legible achievements that I could talk about (trying to learn more maths topics off a checklist instead of exploring and having fun with the maths I wanted to think about) and some anxiety around not knowing or being able to do the same things as others (not trying my hand at thinking about puzzles/questions I think I'll fail at and instead trying to learn "important" things I felt bored/frustrated by because I wanted to feel more secure about my knowledge/intelligence when around others who knew lots of things).

In my early attempts to fix this, I tried to force playful thinking and this frame made things worse. Because, like you said, my mind already wants to play. I just have to notice and let it do that freely without judgment.

comment by RHollerith (rhollerith_dot_com) · 2023-02-17T18:35:59.438Z · LW(p) · GW(p)

This post points at something important, for sure, but I'm bothered a little by your choice of the phrase, "throw away your mind". Specifically, it is hyperbole, and we wouldn't want hyperbole to become routine around here.

If I decide to live in a strictly consequentialist, goal-oriented way, doing nothing for the sake of curiosity or fun, surely, I still have a mind. Although I might be significantly limiting its potential, I am not throwing it away.

comment by Jeremy Gillen (jeremy-gillen) · 2025-01-10T14:57:08.537Z · LW(p) · GW(p)

Tsvi has many underrated posts. This one was rated correctly.

I didn't previously have a crisp conceptual handle for the category that Tsvi calls Playful Thinking. Initially it seemed a slightly unnatural category. Now it's such a natural category that perhaps it should be called "Thinking", and other kinds should be the ones with a modifier (e.g. maybe Directed Thinking?).

Tsvi gives many theoretical justifications for engaging in Playful Thinking. I want to talk about one because it was only briefly mentioned in the post:

Your sense of fun decorrelates you from brain worms / egregores / systems of deference, avoiding the dangers of those.

For me, engaging in intellectual play is an antidote to political mindkilledness. It's not perfect. It doesn't work for very long. But it does help.

When I switch from intellectual play to a politically charged topic, there's a brief period where I'm just.. better at thinking about it. Perhaps it increases open-mindedness. But that's not it. It's more like increased ability to run down object-level thoughts without higher-level interference. A very valuable state of mind.

But this isn't why I play. I play because it's fun. And because it's natural? It's in our nature.

It's easy to throw this away under pressure, and I've sometimes done so. This post is a good reminder of why I shouldn't.

comment by jsd · 2023-02-23T06:06:41.572Z · LW(p) · GW(p)

Thanks for this. I’ve been thinking about what to do, as well as where and with whom to live over the next few years. This post highlights important things missing from default plans.

It makes me more excited about having independence, space to think, and a close circle of trusted friends (vs being managed / managing, anxious about urgent todos, and part of a scene).

I’ve spent more time thinking about math completely unrelated to my work after reading this post.

The theoretical justifications are more subtle, and seem closer to true, than previous justifications I’ve seen for related ideas.

The dialog doesn’t overstate its case and acknowledges some tradeoffs that I think can be real - eg I do think there is some good urgent real thinking going on, that some people are a good fit for it, and can make a reasonable choice to do less serious play.

Replies from: TsviBT

↑ comment by TsviBT · 2023-02-27T15:15:02.005Z · LW(p) · GW(p)

Good to hear.

I do think there is some good urgent real thinking going on, that some people are a good fit for it, and can make a reasonable choice to do less serious play.

Definitely. A second-order hope I have is to make more space available for people to be more head-down intense about urgent thinking. The idea being:

Alice wants to be heads-down intense about urgent thinking. She does so. Then Bob sees Alice, and feels pressured to also be intense in that way. When Bob tries to be intense in that way, he throws away his mind, and that's not good for him. He doesn't fully understand what's going wrong, but he knows that what's going wrong has something to do with him being pressured to be intense. He correctly identifies that the pressure is partly caused by Alice (whether or not it's Alice who really ought to change her behavior). Not understanding the situation in detail, Bob only has blunt actions available; and not having an explicit justification for pushing back against some pressure he feels, he doesn't push back explicitly, out in the open, but instead puts some of his force toward implicitly pressuring Alice to not be so intense.

If Bob were more able to defend important things from implicit pressure that he feels, he's less pushed to pressure Alice to not be intense. And so Alice is more freed to be intense, as is suitable for her.

(This is fairly theoretical, but would explain some of my experiences.)

comment by adamShimi · 2024-11-25T20:22:33.626Z · LW(p) · GW(p)

I remember reading this post, and really disliking it.

Then today, as I was reflecting on things, I recalled that this existed, and went back to read it. And this time, my reaction was instead "yep, that's pointing to the mental move that I've lost and that I'm now trying to relearn".

Which is interesting. Because that means a year or two ago, up till now, I was the kind of person who would benefit from this post; yet I couldn't get the juice out of it. I think a big reason is that while the description of the play/fun mental move is good and clear, the description of the opposite mental move, the one short-circuiting play/fun, felt very caricatural and fake.

My conjecture (though beware mind fallacy), is that it's because you emphasize "naive deference" to others, which looks obviously wrong to me and obviously not what most people I know who suffer from this tend to do (but might be representative of the people you actually met).

Instead, the mental move that I know intimately is what I call "instrumentalization" (or to be more memey, "tyranny of whys"). It's a move that doesn't require another or a social context (though it often includes internalized social judgements from others, aka superego); it only requires caring deeply about a goal (the goal doesn't actually matter that much), and being invested in it, somewhat neurotically.

Then, the move is that whenever a new, curious, fun, unexpected idea pops up, it almost instantly hits a filter: is this useful to reach the goal?

Obviously this filter removes almost all ideas, but even the ones it lets through don't survive unharmed: they get trimmed, twisted, simplified to fit the goal, to actually sound like they're going to help with the goal. And then in my personal case, all ideas start feeling like should, like weight and responsibility and obligations.

Anyway, I do like this post now, and I am trying to relearn how to use the "play" mental move without instrumentalizing everything away.

Replies from: TsviBT

↑ comment by TsviBT · 2024-11-25T21:23:07.185Z · LW(p) · GW(p)

My conjecture (though beware mind fallacy), is that it's because you emphasize "naive deference" to others, which looks obviously wrong to me and obviously not what most people I know who suffer from this tend to do (but might be representative of the people you actually met).

Instead, the mental move that I know intimately is what I call "instrumentalization" (or to be more memey, "tyranny of whys"). It's a move that doesn't require another or a social context (though it often includes internalized social judgements from others, aka superego); it only requires caring deeply about a goal (the goal doesn't actually matter that much), and being invested in it, somewhat neurotically.

I'm kinda confused by this. Glancing back at the dialogue, it looks like most of the dialogue emphasizes general "Urgent fake thinking", related to backchaining and slaving everything to a goal; it mentions social context in passing; and then emphasizes deference in the paragraph starting "I don't know.".

But anyway, I strongly encourage you to write something that would communicate to past-Adam the thing that now seems valuable to you. :)

Replies from: adamShimi

comment by David Althaus (wallowinmaya) · 2023-02-16T15:46:28.792Z · LW(p) · GW(p)

Great post, thanks for writing!

Most of this matches my experience pretty well. I think I had my best ideas during phases (others seem to agree) when I was unusually low on guilt- and obligation-driven EA/impact-focused motivation and was just playfully exploring ideas for fun and out of curiosity.

One problem with letting your research/ideas be guided by impact-focused thinking is that you basically train your mind to immediately ask yourself after entertaining a certain idea for a few seconds "well, is that actually impactful?". And basically all of the time, the answer is "well, probably not". This makes you disinclined to further explore the neighboring idea space.

However, even really useful ideas / research angles start out being somewhat unpromising and full of hurdles and problems and need a lot of refinement. If you allow yourself to just explore idea space for fun, you might overcome these problems and stumble on something truly promising. But if you had been in an "obsessing about maximizing impact" mindset you would have given up too soon because, in this mindset, spending hours or even days without having any impact feels too terrible to keep going.

comment by koletyst · 2023-02-20T12:12:50.853Z · LW(p) · GW(p)

Excellent post! Made me realize a lifelong learning anti-pattern I'd felt was always there, but escaped clear definition; I do think it becomes increasingly difficult to even see the problem as one's immersion into only ever doing "duty" work increases. The comparison to "muscle atrophy" seems apt for this reason.

comment by Portia (Making_Philosophy_Better) · 2023-03-05T03:14:33.023Z · LW(p) · GW(p)

Just wanted to say I love this. Thank you.

comment by ivoandrews@gmail.com · 2023-02-22T20:15:41.255Z · LW(p) · GW(p)

Thank you for this post! It felt really valuable to me. Going to go have some fun...

comment by metasemi (Metasemi) · 2023-02-22T19:37:34.184Z · LW(p) · GW(p)

Among many virtues, this post is a beautiful reminder that rationality is a great tool, but a lousy master. Not just ill-suited, uninterested: rationality itself not only permits but compels this conclusion, though that's not the best way to reach it.

This is a much-needed message at this time throughout our societies. Awareness of death does not require me to spend my days taking long shots at immortality. Knowledge of the suffering in the world does not require us to train our children to despair. We work best in the light, and have other reasons to seek it that are deeper still.

comment by Ben Smith (ben-smith) · 2023-02-23T07:37:16.142Z · LW(p) · GW(p)

thank you for writing this. I really personally appreciate it!

comment by Pawn · 2023-02-22T16:00:32.167Z · LW(p) · GW(p)

This is exactly what I've been doing when studying mathematics! From letting my mind wander down to just staring at a definition and being 'genuinely curious' about it. In fact one of my favourite activities is to ask "What motivated this definition? Assuming these motivations, what definitions would I have come up with? How do they relate to this one?". It's the sort of exercise that feels important and meaningful to me, but I don't think I know anybody in my circle of friends who ever gives it a try. So I'm very happy to see it acknowledged!

Replies from: TsviBT

comment by DaystarEld · 2023-02-22T19:30:36.158Z · LW(p) · GW(p)

Great post, thank you for writing it. Helps to have something to link people to when trying to explain this, and also the list of examples is great.

(And also Music in Human Evolution gave me a great "click" sensation, as soon as I read the list of facts in the beginning)

comment by David Johnson (david-johnson-1) · 2023-02-22T16:43:56.663Z · LW(p) · GW(p)

Excellently enriching reading you have here! Helped me get back into an excel spreadsheet I have been feeling guilty about improving at work.

comment by Lauren Elbaum (lauren-elbaum) · 2023-02-22T07:13:35.633Z · LW(p) · GW(p)

I think that water on walls makes an A coming out of the top of a flow point and a V at the bottom farther away from the flow point. What are your thoughts on this?

Replies from: TsviBT

↑ comment by TsviBT · 2023-02-27T15:04:16.446Z · LW(p) · GW(p)

In my observations in the wild, flow out of drainpipes created an A, and flow over the edge of a wall created a V. That seems intuitively to be something about: the drainpipe has a bunch of water all close together, and then it opens up with more space along the surface of the wall. The water flowing over the edge of the wall is spread out, and then wants to stick together to other water, so it gets pulled closer, forming a V, like how a fountain with a watersheet output at the top gives a V-shaped watersheet. I don't feel completely satisfied by this though, because I don't understand exactly why it spreads out when coming out of a drainpipe, rather than continuing to stick together.

comment by Pattern · 2023-02-17T18:14:47.248Z · LW(p) · GW(p)

T: "Gah, I really need to write this up as a blog post. Giving an example that I'm not really into at the moment seems kind of bad. But ok, so, [[goes on a rambling five-minute monologue that starts bored and boring, until visibly excited about some random thing like the origin of writing or the implausibility of animals with toxic flesh evolving or emergent modularity enabled by gene regulatory networks or the revision theory of truth or something; see the appendix for examples]]"

Still reading the rest of this.

"Playful Thinking" (Curiosity driven exploration) may be serendipitous for other stuff you're doing, but there isn't a guarantee. Pursuing it because you want to might help you learn things. Overall it is (or can be) one of the ways you take care of yourself.

A focus only on value which is measurable in one way can miss important things. That doesn't mean that way should be ignored, but by taking more ways into account, you might get a more complete picture. If in the future we have better ways of measuring important things, and you want to work on that, maybe that could make a big difference.

Overall, 'I have to be able to justify why I'm working on this' seems like the wrong approach. This is definitely the case for how you spend ALL of your time. This becoming the default isn't justified. ('Your desire to learn will help you learn.' 'That sounds inefficient.' 'What?')

comment by Ben (ben-lang) · 2023-02-17T12:49:54.767Z · LW(p) · GW(p)

Mostly off-topic, but I think I know the answer to the guitar chamber problem posed.

A very counter-intuitive aspect of wave emission is the Purcell effect. The emission of waves from a source (like an excited atom, radio antenna or guitar string) is not solely a property of the source itself, but arises from the interaction between the source and the surrounding space. As a silly example, if the guitar string was radiating sound into a 4D or non-Euclidean space we wouldn't expect the emission rate to be unchanged. A more immediate example is that if you hit a drum on the edge you get less sound than if you hit it in the middle. The "space" (drumskin) looks different in the middle than at the edges.

In this analogy the string is the drumstick, and the air is the drumskin. If the air has edges (where we have wood for example) then it shouldn't be weird that hitting the air in some places relative to those edges is better than others. The total "responsiveness" of the air (or vacuum for light) can be moved around not just in location, but also be re-distributed away from some frequencies and towards others. A cavity with a lossy resonant mode is often good for this, enhancing interactions at frequencies near the resonant frequency of the cavity. This actually amplifies the interaction rate - the guitar string radiates more sound energy per unit time (but loses its own energy faster).

The key term is "local density of states", which measures the responsiveness of a field at a particular location, frequency (and polarisation). This mechanism is used to enhance lasers (with better laser cavities) and single-photon sources (which are normally entirely reliant on this effect to work). I assume that guitars are using the same thing.

Replies from: TsviBT

↑ comment by TsviBT · 2023-02-18T06:43:38.296Z · LW(p) · GW(p)

Interesting. I'm not sure I follow though. How would stricter boundary conditions (having guitar walls) make the air more receptive to energy? Why doesn't this theory predict that strings with just a fretboard would be the loudest?

Replies from: ben-lang

↑ comment by Ben (ben-lang) · 2023-02-18T13:33:33.867Z · LW(p) · GW(p)

If you had no boundary conditions at all (string with fretboard) then the air is receptive to sounds of all frequencies. It is also equally receptive to sound sources at every location. (X and X+1 are identical). The vibrating string couples to the normal modes, which in this case (no boundaries) are plane waves. All directions and locations are equal. Frequencies are unequal (high frequencies emit more strongly because there is a higher density of plane waves at higher frequencies).

Now, let's introduce a single infinite flat wall. (Infinitely high and wide. Made of perfectly reflective material for simplicity). The normal modes are now different: they all have a node at the location of the wall (x=0). The normal modes are no longer plane waves, but more like sine waves. If we imagine a sine wave coming out from x=0 then it has peaks and troughs. A string at one of the nodes will radiate no sound at all into that mode. (So for sound with wavelength 10cm a string either 5cm, 10cm, 15cm, 20cm... from the wall would not radiate into that mode.) Meanwhile a string moved 2.5cm from any of those will be hitting the sine wave at its maximum/minimum (peak/trough), and radiate additional energy into that mode.
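The numbers in this example can be checked with a small sketch. It assumes, as an idealization not spelled out above, that the mode next to the wall has amplitude sin(2πx/λ) at distance x from the wall, and that the power a string feeds into that mode scales with the square of that amplitude at the string's position. The function name `relative_coupling` is hypothetical.

```python
import math


def relative_coupling(distance_cm: float, wavelength_cm: float = 10.0) -> float:
    """Squared mode amplitude at the string's position (0 = none, 1 = maximal).

    Assumes the mode shape sin(2*pi*x/wavelength), which is zero at the wall.
    """
    return math.sin(2 * math.pi * distance_cm / wavelength_cm) ** 2


# Distances taken from the 10cm-wavelength example, plus the 2.5cm offsets.
for d in (5.0, 10.0, 2.5, 7.5):
    print(f"{d:4.1f} cm from the wall: {relative_coupling(d):.3f}")
```

Under these assumptions the coupling vanishes at 5cm and 10cm from the wall and is maximal at 2.5cm and 7.5cm, matching the distances quoted in the example.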

In the guitar example the guitar chamber has a normal mode with a very high intensity at the string location. This means that that specific normal mode will be strongly activated by the string. (Hitting the mode at its anti-node). The total sound output is determined by how well the string couples not just to that specific normal mode, but to all of them. But, there is no rule that they have to average out the same as they would without boundaries.

I think the biggest mental block in terms of understanding this is to think of the sound as "too small". You are imagining that the string radiates little ripples of sound that "don't know about" the walls until they crash into them. This is not a useful mental image. The wave is a spatially extended object (otherwise it could not have a wavelength). The "it can't fit" situation (a sound with wavelength 10cm in a pipe only 3cm across) is quite intuitive. The "it fits super well" situation is, I agree, harder to wrap the head around.

Something I got hung up on when learning this was trying to work out what made the air molecules next to the string different in one case from another. The answer is the air molecules right next to the string are not taking the energy by themselves, any more than the cashier at a shop is taking the money by themselves. All the money passes through the cashier's hands, but the amount of money handed over is not dependent entirely on the cashier themselves, but also depends on the wider context of the shop, its location and the goods available. Those air molecules right next to the string are the immediate place the energy goes (like the coins in the cashier's hand) but trying to zoom right in on them and look at the causality without the bigger picture will not be useful. They are ordinary air molecules, but they will absorb more energy if their context is different. Just like the same person at a cash register might get handed more money if they worked in a different shop. (This last paragraph is not an explanation of why more sound goes out, but instead a woolly sketch of why a particular mindset is not useful).

Replies from: TsviBT↑ comment by TsviBT · 2023-02-19T14:50:56.079Z · LW(p) · GW(p)

I don't get it yet. Thanks though! Might come back. (I'm probably not devoting enough attention to get it.)

Replies from: ben-lang↑ comment by Ben (ben-lang) · 2023-02-20T13:53:28.042Z · LW(p) · GW(p)

I am afraid I have not given enough detail for you to "get it" based just on my comments. Often the phrases "resonance", "density of states" and "impedance matching" are used as catch-all terms for describing the situation, although these phrases obviously don't themselves bring any understanding.

All three terms are describing the same thing in different words. "Impedance matching" is gesturing to the fact that when a wave moves into a material where it travels with a different speed there is a reflection at the interface, so shaping a cavity that effectively slows the sound waves down by making them ricochet reduces the amount of sound that the air bounces back into the string at that interface. Resonance is getting at the same thing in the frequency picture. Density of states is saying the same thing again, this time using a Fourier transform to talk about the density of different possible waves in position instead of the speed of waves. (Essentially if the waves are walking on a Monopoly board a slower speed implies a greater density of squares -> more states. So something that likes putting sound counters into nearby squares will deposit more in the slow areas because there are more squares to put them in.)

comment by Review Bot · 2024-04-05T21:54:03.664Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Mateusz Bagiński (mateusz-baginski) · 2023-08-22T16:00:25.309Z · LW(p) · GW(p)

Groups, but without closure

https://en.wikipedia.org/wiki/Groupoid ?

Replies from: TsviBT

comment by Wofsen · 2023-03-08T13:03:15.188Z · LW(p) · GW(p)

Playful thinking sets up questions in your brain that you wouldn't have known how to set up just by explicitly trying to set up questions. A question is a record of a struggle to deal with some context. These questions, like the dozen problems Feynman carries around with him, set you up to connect with new ideas more thoroughly.

Reminds me of Feynman's story about how a plate got him out of a depressive funk. https://kongar-olondar.bandcamp.com/track/at-cornell-part-2

comment by Nicholas / Heather Kross (NicholasKross) · 2023-02-24T00:58:46.105Z · LW(p) · GW(p)

My best high school math teacher (hi Ms. Keri!) tried to impart this idea to us, literally saying "just... play! with this idea".

Replies from: TsviBT↑ comment by TsviBT · 2023-02-27T15:16:02.192Z · LW(p) · GW(p)

I'm curious about what effects that had on you and your classmates. E.g., did it seem to cause an experience with math, that then seemed to cause further patterns of behavior later on?

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-03-03T23:59:45.436Z · LW(p) · GW(p)

I'm not sure. I minored in mathematics, though I forgot a lot of the specific details I learned. If plopped in front of a notation-heavy problem (e.g. one of those integrals for the flow of water through a 4D surface or whatever) of the kind I did for homework during college math courses, I'm unlikely to be able to solve it without googling once or twice.

I view play as very important, while also getting FOMO from all directions leading me away from it (this seems to be a common problem for adults), and also did I mention this is all under a cloud of inattentive ADHD?

comment by Viktor Rehnberg (viktor.rehnberg) · 2023-02-23T19:59:19.972Z · LW(p) · GW(p)

These problems seemed to me similar to the problems at the International Physicists' Tournament. If you want more problems, check out https://iptnet.info

comment by bhishma (NomadicSecondOrderLogic) · 2023-02-22T05:08:01.149Z · LW(p) · GW(p)

This is very similar to Julia Galef's framing of Hayekians vs Central planners, which I have found to be quite useful for looking at these sorts of dynamics. It's also a bit like the exploration/exploitation tradeoff. Initially, when you have high uncertainty, it makes a lot of sense to wander and follow your curiosity, as that would be more productive. And once you've gathered enough knowledge about something, it's much easier to apply it.

comment by Dennis Akar (British_Potato) · 2023-02-16T12:13:51.031Z · LW(p) · GW(p)

And you can't just get that stuff by asking your mind to tell you that stuff; you have to let your mind do its thing sometimes, without requiring a legible justification.

I need to really internalize this. I tend to "rediscover" this and forget about it the moment things become more stressful.

Requiring that things be explicitly justified in terms of consequences forces a dichotomy: if you want to do something for reasons other than explicit justifications in terms of consequences, then you either have to lie about its explicit consequences, or you have to not do the thing. Both options are bad.

Unless one explicit justification is simply that "this is fun to do, which is Good™ in-and-of-itself, and also <insert this article here/>"?

Overall extremely good article and makes me less guilty about engaging in playful thinking (a frequent occurrence)

Typos:

- using repetitive that hacks aren't easy -> using repetitive hacks that aren't easy

- expects to be given you -> expects to be given to you

↑ comment by TsviBT · 2023-02-18T06:41:18.522Z · LW(p) · GW(p)

this is fun to do, which is Good™ in-and-of-itself, and also <insert this article here/>

The first one seems (according to my taste) healthy, and it's barely trying to be a justification, it just passes off to the intuitive notion of good (which seems healthy for this sort of thing). The second one seems fraught, because it's at risk of allowing the hegemony of explicit-utilitarian justification (that is, justifying things in terms of their legible uses) to subtly worm its way into even this idea of playing without needing a justification. By trying to justify [unjustified play], one implicitly asserts that [unjustified play] requires justification.

Typos: fixed the first, thanks. The second one actually wasn't a typo. I tried an edit to clarify. The point is that if you give yourself to playful thinking, then playful thinking gets the opposite of learned helplessness, "learned empowerment": it expects to be given you--that is, it expects to be empowered to play, embodied in you / your mind--and so it expects for it to be worthwhile to gather itself to play. And so it does gather itself to play, and in fact plays!