Gemini modeling

post by TsviBT · 2023-01-22T14:28:20.671Z · 8 comments

Contents: Two scenarios; Gemini modeling and empathic modeling; Gemini modeling vs. general modeling; Features that correlate with gemini modeling, with examples

[Metadata: crossposted from https://tsvibt.blogspot.com/2022/08/gemini-modeling.html. First completed 17 June 2022. I'm likely to not respond to comments promptly.]

A gemini model is a kind of model that's especially relevant for minds modeling minds.

Two scenarios

- You stand before a tree. How big is it? How does it grow? What can it be used to make? Where will it fall if you cut it here or there?

- Alice usually eats Cheerios in the morning. Today she comes downstairs, but doesn't get out a bowl and spoon and milk, and doesn't go over to the Cheerios cupboard. Then she sees green bananas on the counter. Then she goes and gets a bowl and spoon and milk, and gets a box of Cheerios from the cupboard. What happened?

We have some kind of mental model of the tree, and some kind of mental model of Alice. In the Cheerios scenario, we model Alice by calling on ourselves, asking how we would behave; we find that we'd behave like Alice if we liked Cheerios, and believed that today there weren't Cheerios in the cupboard, but then saw the green bananas and inferred that Bob had gone to the grocery store, and inferred that actually there were Cheerios. This seems different from how we model the tree; we're not putting ourselves in the tree's shoes.

Gemini modeling and empathic modeling

What's the difference though, really, between these two ways of modeling? Clearly Alice is like us in a way the tree isn't, and we're using that somehow; we're modeling Alice using empathy ("in-feeling"). This essay describes another related difference:

We model Alice's belief in a proposition by having in ourselves another instance of that proposition.

(Or: by having in ourselves the same proposition, or a grasping of that proposition.) We don't model the tree by having another instance of part of the tree in us. I call modeling some thing by having inside oneself another instance of the thing--having a twin of it--"gemini modeling".

Gemini modeling is different from empathic modeling. Empathic modeling is tuning yourself to be like another agent in some respects, so that their behavior is explainable as what [you in your current tuning] would do. This is a sort of twinning, broadly, but you're far from identical to the agent you're modeling; you might make different tradeoffs, have different sense acuity, have different concepts, believe different propositions, have different skills, and so on; you tune yourself enough that those differences don't intrude on your predictions. By contrast, the proposition "There are Cheerios in the cupboard.", with its grammatical structure and its immediate implications for thought and action, can be roughly identical between you and Alice.

As done by humans modeling humans, empathic modeling may or may not involve gemini modeling: we model Alice by seeing how we'd act if we believed certain propositions, and those propositions are gemini modeled; on the other hand, we could do an impression of a silly friend by making ourselves "more silly", which is maybe empathic modeling without gemini modeling. And, gemini modeling done by humans modeling humans involves empathic modeling: to see the implications of believing in a proposition or caring about something, we access our (whole? partial?) agentic selves, our agency.

Gemini modeling vs. general modeling

In some sense we make a small part of ourselves "like a tree" when we model a tree falling: our mental model of the tree supports [modeled forces] having [modeled effects] with resulting [modeled dynamics], analogous to how the actual tree moves when under actual forces. So what's different between gemini modeling and any other modeling? When modeling a tree, or a rock, or anything, don't we have a little copy or representation of some aspects of the thing in us? Isn't that like having a sort of twin of that part / aspect of the thing in our minds? How's that different from how we model beliefs, caring, understanding, usefulness, etc.?

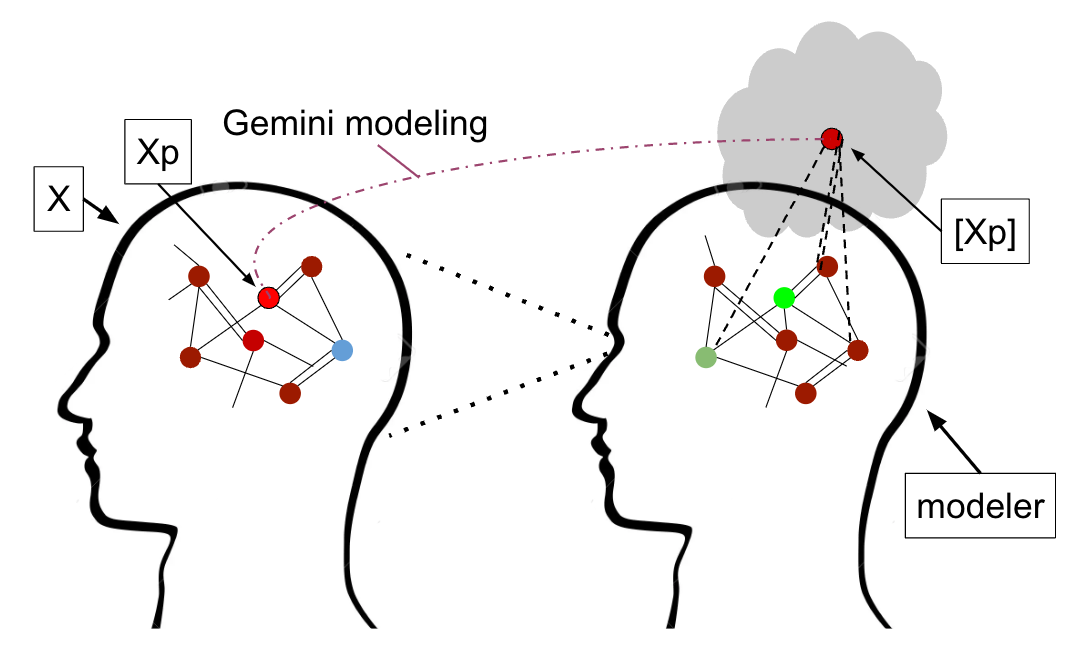

This essay asserts that there is a difference between that kind of modeling and gemini modeling. The difference can be summed up as follows. Let X be some thing, let Xp be some part or aspect of X, and let [Xp] be a model of Xp (for some modeler). E.g., the form of the tree, as it affects how the tree falls, is an aspect Xp of the tree X; and caring about Cheerios is an aspect of Alice. Then:

Xp is gemini modeled by [Xp] when the thingness of Xp, as it shows itself in the context provided by X, can show itself through [Xp] as fully and naturally as it shows itself through Xp.

Or put roughly into an analogy:

Xp : the context of Xp in X :: [Xp] : the context of [Xp] in the modeler

An illustration of the special case where X is the same kind of thing as the modeler:

In the above formula, "naturally" is a pre-theoretic term meaning "easily, in the natural course, without obstacle, in an already supported way". "Context" is a pre-theoretic term meaning "the surroundings, local environment, relationships; the inputs, the support given, the resources available, the causes; the cybernetic couplings, demands made, constraints, combinations; the outputs, uses, role, niche, products, implications, effects". Here "context" also points at the theoretical concept of "founding" in the sense of "that which is necessary as a precondition for Xp to be fully what it is" (which is a subset of "founding" as discussed here); propositions are founded inter alia on deduction, in that if you don't have deduction then you don't have propositions.

Features that correlate with gemini modeling, with examples

- Empathic modeling, as discussed above. Different human minds are similar enough that not only is empathic modeling useful, but they also provide mental contexts similar enough for propositions and caring to be the same thing in different human minds. So human minds support gemini modeling.

  - Beyond general modeling, we could also empathically model the tree: Where would I reach my roots, if I wanted a drink? Where would I face my leaves if I wanted sun? Which parts would I make strong to support myself? More precisely, here we're empathically modeling [the evolution of that tree species, plus criteria applied by parts of the workings of the individual tree], in that they "look for" ways of shaping the tree that make it reproductively fit.

  - It's much more of a stretch, and maybe just incorrect, to say that we're gemini modeling tree-evolution's goal of getting water and goal of getting sun, with the resulting subgoals, and gemini modeling the belief of tree-evolution that in the area of the ground where the roots have found water there will be more water, and that in the direction where the leaves have found sun there will be more sun. Tree-evolution's ways of "pursuit of goals", and ways of "unfolding implications of a belief", aren't shaped like ours, so by default we're not gemini modeling goals and beliefs. There might be a narrower sort of "belief" (of a deficient sort) held by [trees and tree-evolution] that we could be said to gemini model.

- Recognition. When we want to explain someone's behavior, we often find "ready made" stuff in ourselves that "already is" whatever's going on with them. For example, I might point at a pair of boots with a spiky spinnable thing on the heel and nod, point at another pair that lacks the spiky spinnable thing and shake my head no, and so on. Whether or not the word "spurs" comes to mind, you can recognize exactly what I'm indicating as something you already had--you might for example believe correctly that you could have decided to act out the same pattern of pointing and head-shaking without seeing me do it.

- Translation. In the same vein as recognition, a translation is a gemini modeling. E.g., in monolingual fieldwork, a linguist speaks with a speaker of an unknown language, without using any already-shared vocabulary, and maps the speaker's words to concepts (what lies behind words), which the linguist can find in zerself and put in zer own words. (Demonstration.)

- Non-abstraction. Abstraction is pulled away from a thing; what's pulled away is less of a twin to the thing. Our version of "There are Cheerios in the cupboard." isn't an abstraction of Alice's version; our version of the weight and shape of the tree is an abstraction of the tree.

- Type equality. If [Xp] gemini models Xp, it "has the same type" as Xp, in the sense of "type" in which saying "When I think of an apple, there's an apple in my head." is a type error. (This essay could be viewed as trying to explain what's meant by "type" here.)

- Equality or analogy, not representation. It's natural to say that you represent the tree, but that you believe the same proposition as Alice, or have the same values as Alice. You and Alice are the same sort of thing, you're analogous, and the proposition is analogously used / instantiated / manifested / grasped / participated in, by each of you. Your model of a tree plays a role in you strongly analogous to the role played in me by my model of a tree; i.e., your model twins mine. Your model (representation) of an aspect of a tree does not play in you a role strongly analogous to the role played by anything in a tree. Even the role played in you by your representation of the weight of the tree is not strongly analogous to the role played in the tree by [the actual weight of the tree, abstractly]. See the points below on access and convergence.

- Access. A gemini model of Xp accesses the thingness of Xp in the same way, to the same extent, with the same ease as X accesses the thingness of Xp.

  - In modeling a tree as a shape and weight, we don't have access to the tree, only to some aspects of it; we're cut off from the rest of the tree. We can access the rest of the tree by investigating the tree itself, but we need the tree itself. That investigation, that following of the referential network into the tree-nexus, goes against the grain, as it were, of what we do have in us about the tree. Going with the grain of what we do have in us about the tree, we find intuitions about other physical objects; F=ma; calculus; experience with things tipping over; lines of load distribution; the general relationships between shape and compressive and tensile strength; analogies to buildings...

  - In modeling a proposition, we have access to the proposition. The proposition logically implies other statements, makes recommendations for caring action, provides conditions for its falsification, contributes to triangulating its constituent concepts, and so on. We can observe / enact / participate in / compute these relationships. The proposition has internal structure that we can analyze, e.g. its very-generally-a-priori logical relationships to other propositions, made obvious by its grammatical structure. The proposition has refinements that we can investigate just by thinking and without further observing Alice, e.g. we can clarify the terms involved in the proposition (which clarification itself flows along convergent gradients of truth and joint-carving).

- Context. In the same vein as access, a gemini model provides that which is necessary as a precondition for a thing to be fully what it is, i.e. the foundation or necessary context of that thing.

  - E.g., a proposition can't be a proposition without investigation of truth values, which we implicitly provide when we think of Alice as believing a proposition.

  - We don't provide the context for the weight of the tree, as we represent it, to really be the weight of a tree. Our representation is like weight, abstractly, but it doesn't abut the wood, the termites, the support for live and dead branches, the sinking into the ground, the binding to the roots, cellulose, evolution, and so on, which are abutted by the actual weight of the actual tree.

  - A segment of DNA that codes for a protein, taken on its own, gives us access only to some aspects of the gene whose DNA it is. If we splice the segment of DNA into a mouse embryo and let it develop, we've provided much more of the context in which the gene is a gene rather than only an assemblage of atoms. In the mouse embryo, the gene can take on its role--its place in the spatial differentiation of the embryo and temporal response to conditions, its up- and down-regulators, its feedback circuits with other genes being expressed, the contribution of its transcribed protein to the mouse's physiology. (This isn't complete, though; the gene doesn't have a role in mouse-evolution.) The gene, if placed in a PCR machine, can't take on its role; and it can only sometimes partly take on its role in a prokaryote, since for example prokaryotes lack the splicing machinery that removes introns, and so won't correctly translate eukaryotic genes with introns into proteins.

- Convergence. If something is convergent, it's more amenable to being the same across analogous but non-identical contexts, and hence more amenable to gemini modeling. (This is in tension with non-abstraction.) Propositions, being related to the normativity of truth, have convergence; the weight of the tree, in the tree, does not tend to converge; our representation of the weight of the tree connects to our thinking in ways that tend to converge.

  - Convergence answers an objection: Isn't gemini modeling just better modeling? If I had a much better model of the tree, that would be a gemini model, right? A much better model of the tree would indeed blur the distinction somewhat.

  - But the distinction between gemini modeling and general modeling in part describes exactly this difference: the tree is not as amenable to gemini modeling as is "There are Cheerios.", and that implies that it's harder to get a very accurate model of the tree. The tree is big and complicated and is open to the world, admitting complicated interactions with other systems with lots of details that have large implications for what happens with the tree. To accurately model the tree would be to also model a lot of that other stuff, which is hard to do. Even though Alice is also complicated and related to complicated things, her belief "There are Cheerios." tends to converge in its meaning, and more generally is gemini modelable, being given its meaning by a mental context shared by other agents. The tree, being a living thing, does have some convergence, making it easier to model.

  - The distinction between gemini modelable vs. not gemini modelable bites much harder with things that force non-cartesian reasoning. One can imagine, maybe unrealistically, getting a perfect mental simulacrum of a tree and its physical context; but it is impossible to get a complete understanding of yourself, or of other agents who know everything you know. In that highly non-cartesian context, it may be that if a thing is modelable, that's usually mainly because it is gemini modelable, i.e. it gets most of its meaning from its surrounding mental context in a way that can also be provided by analogous mental contexts held by other minds. (Löbian cooperation might on further examination provide a stark example of gemini modeling.)

- Description -> Causality -> Manipulation. A gemini model implies more beliefs about counterfactuals. It makes more sense to condition on someone being silly than to counterfact on someone being silly; on the other hand, it does make sense to counterfact on Alice believing "There are Cheerios in the cupboard." or "There are not Cheerios in the cupboard.", and it makes sense to manipulate that variable, e.g. by lying to Alice. It doesn't make much sense to ask what would be the causal effect on a tree itself of making the tree 10% taller. It does make sense to ask what the causal effect on a car would be of making the engine have 10% more torque.

- Mental. Agents, minds, and life tend to provide contexts for things.

- Simulation. However, physical things and other non-mental things can be gemini models, prototypically called "simulation". E.g.:

  - You could chop down a tree of similar shape and size to the original tree, see where the similar tree falls, and surmise that the original tree would fall similarly if you cut it the same way. (How is gemini modeling different from just literally having two of something? Gemini modeling implies a modeler, and doesn't imply exact identity; it implies enough identity and enough analogy of context that the thingness of the thing is accessed fully. By using a tree, not just a mental representation of a thick rod, much more of the original tree-nexus is accessed; the phase transition of fibers in the tree twisting and snapping as the cellulose structures buckle and the bark bursts with a cascade of crackle-crunch sounds, for example, is right there ready to be expressed in context.)

  - A human gene spliced into a mouse (an organism isn't a mind). In this example, the modeler (the scientist who did the splicing) is separate from what's being modeled, but there's still a non-trivial gemini situation: the mouse is not identical to other mice and is definitely not identical to humans, but it provides enough of a context analogous to the original context of the gene that the gene can literally and figuratively express itself, at least to some extent and maybe more-or-less fully. The result is a weird partial twinning, in the mouse, of the gene as it is in a human.

  - You could build a replica of a car's engine, and run it on your workbench hooked up to electricity and gasoline; or better yet, put it in a car and drive it around, to give the engine realistic (real) vibrations, demands, air flow, heat, and so on. Here gemini modeling is shown to be a dimension, with true gemini modeling at one extreme. The more robust and convergent the thing being modeled is, the less gemini modeling necessarily requires replicating the whole context exactly; the engine, though, doesn't adapt itself, and so to be a gemini model the engine requires that you arrange the context precisely.

  - An automated theorem prover is sort of a grey zone; does it gemini model a proposition? We could say it gemini models a proposition "as a part of such-and-such logical theory in such-and-such language with such-and-such truth seeking", but doesn't model a proposition as a part of an agent that connects caring and propositions. But this doesn't clarify much; how is this distinguished from modeling in general? At least, we've highlighted that the context is needed.

  - The Wright brothers used gemini modeling, their wind tunnel providing enough context for a wing to be a wing. On reflection, I'm not sure about this one. The context of design is interesting, but I'm not sure how to think about it.

- Constitutive of the modeler. To some extent, what I am is a thing that thinks in terms of propositions; when I'm gemini modeling Alice's belief in a proposition, I'm calling on what I am--the structure of how my behavior and thoughts relate to my belief in a proposition--to do the modeling. If constitutiveness really is correlated with gemini modeling, then paradoxically, being able to take something as object is anti-correlated with gemini modeling; this seems in tension with the claim that gemini models expose more counterfactuals.
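The "Description -> Causality -> Manipulation" point distinguishes conditioning on Alice's belief from manipulating it (e.g. by lying to her). The following is a minimal sketch of that distinction in Python, assuming a toy structural causal model of the Cheerios scenario. The function `simulate`, its parameters, and the rule that Bob brings home green bananas when he shops are hypothetical illustrations, not anything from the essay, and the sketch makes no claim to capture gemini modeling itself.

```python
def simulate(bob_shopped, likes_cheerios=True, do_belief=None):
    """Hypothetical toy structural causal model of the Cheerios scenario."""
    # Assumption: Bob brings home green bananas exactly when he goes shopping.
    green_bananas = bob_shopped
    # Alice infers that Cheerios were restocked iff she sees green bananas,
    # unless an intervention (e.g. someone lying to her) sets the belief directly.
    belief = green_bananas if do_belief is None else do_belief
    # She gets the Cheerios out only if she likes them and believes they are there.
    gets_cheerios = likes_cheerios and belief
    return {"bob_shopped": bob_shopped, "belief": belief, "gets_cheerios": gets_cheerios}


# Enumerate both values of the single exogenous variable.
worlds = [simulate(b) for b in (False, True)]

# Conditioning: keep only the worlds in which Alice happens to believe there are Cheerios.
conditioned = [w for w in worlds if w["belief"]]

# Intervening: do(belief=False) in the world where Bob did shop, as a lie would.
intervened = simulate(bob_shopped=True, do_belief=False)
```

Conditioning on `belief` only narrows down which world we are in, so it acts as evidence that Bob shopped. The intervention leaves `bob_shopped` untouched and changes only what Alice believes and therefore does. One possible reading of the essay's contrast is that a trait like silliness has no comparably clean variable slot to intervene on.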

Thanks to Sam Eisenstat for relevant conversations.

8 comments


comment by gwern · 2023-08-28T21:19:54.010Z

If you do more work on this, I would suggest renaming it. I didn't find 'Gemini' to be that helpful a metaphor, and discussion of 'Gemini models' is already drowned out by Google's much-hyped upcoming 'Gemini model(s)' (which appear to be text-image models).

comment by Mateusz Bagiński (mateusz-baginski) · 2023-08-28T16:28:59.152Z

My attempt to summarize the idea. How accurate is it?

Gemini modeling - Agent A models another agent B when A simulates some aspect of B's cognition by counterfactually inserting an appropriate cognitive element Xp (that is a model of some element X, inferred to be present in B's mind) into an appropriate slot of A's mind, such that the relevant connections between X and other elements of B's mind are reflected by analogous connections between Xp and corresponding elements of A's mind.

↑ comment by TsviBT · 2023-09-03T16:22:41.280Z

Basically, yeah.

A maybe trivial note: You switched the notation; I used Xp to mean "a part of the whole thing" and X is "the whole thing, the whole context of Xp", and then [Xp] to denote the model / twin of Xp. X would be all of B, or enough of B to make Xp the sort of thing that Xp is.

A less trivial note: It's a bit of a subtle point (I mean, a point I don't fully understand), but: I think it's important that it's not just "the relevant connections are reflected by analogous connections". (I mean, "relevant" is ambiguous and could mean what gemini modeling is supposed to mean.) But anyway, the point is that to be gemini modeling, the criterion isn't about reflecting any specific connections. Instead the criterion is providing connections enough so that the gemini model [Xp] is rendered "the same sort of thing" as what's being gemini modeled Xp. E.g., if Xp is a belief that B has, then [Xp] as an element of A has to be treated by A in a way that makes [Xp] play the role of a belief in A. And further, the Thing that Xp in B "wants to be"--what it would unfold into, in B, if B were to investigate Xp further--is supposed to also be the same Thing that [Xp] in A would unfold into in A if A were to investigate [Xp] further. In other words, A is supposed to provide the context for [Xp] that makes [Xp] be "the same pointer" as Xp is for B.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2023-09-04T16:20:56.223Z

Sounds close/similar-to-but-not-the-same-as categorical limit (if I understand you and category theory sufficiently correctly).

(Switching back to your notation)

Think of the modeler-mind and the modeled-mind as categories where objects are elements (~currently) possibilizable by/within the mind.[1]

Gemini modeling can be represented by a pair of functors:

- which maps the things/elements in to the same things/elements in (their "sameness" determined by identical placement in the arrow-structure).[2] In particular, it maps the actualized in to the also actualized in .

- which collapses all elements in to in .

For every arrow in , there is a corresponding arrow and the same for arrows going in the other directions. But there is no isomorphism between and . They can fill the same roles but they are importantly different (the difference between "B believing the same thing that A believes" and "B modeling A as believing that thing").

Now, for every other "candidate" that satisfies the criterion from the previous paragraph, there is a unique arrow that factorizes its morphisms through .

On second thought, maybe it's better to just have two almost identical functors, differing only by what is mapped onto? (I may also be overcomplicating things, pareidolizing, or misunderstanding category theory)

[1] I'm not sure what the arrows are, unfortunately, which is very unsatisfying.

[2] On second thought, for this to work, we probably need to either restrict ourselves to a subset of each mind or represent them sufficiently coarse-grained-ly.

↑ comment by Nathaniel Monson (nathaniel-monson) · 2023-09-04T16:48:45.460Z

I don't think I've fully processed what you or the OP have said here--my apologies, but this still seemed relevant.

I think the category-theory way I would describe this is Bob is a category B, and Alice is a category A. A and B are big and complicated, and I have no idea how to describe all the objects or morphisms in them, although there is some structure-preserving morphism between them (your G). But what Bob does is try to find a straw-alice category A' which is small, and simple, along with functors from A' to A and from A' to B, which makes Alice predictable (or post-dictable).

Does that make any sense?

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2023-09-04T17:52:39.812Z

Yeah, maybe it makes more sense. B' would be just a subcategory of B that is sufficient for defining (?) Xp (something like the Markov blanket of Xp?). The (endo-)functor from B' to B would be just identity and the relationship between Xp and [Xp] would be represented by a natural transformation?

comment by Mati_Roy (MathieuRoy) · 2023-02-05T23:56:58.272Z

i just read the beginning

I thought Alice wanted bananas for a change, but they weren't ready yet, so ze went for the Cheerios :p
