The voyage of novelty

post by TsviBT · 2023-04-30T12:52:16.817Z · LW · GW

[Metadata: crossposted from https://tsvibt.blogspot.com/2023/01/the-voyage-of-novelty.html. First completed January 17, 2023. This essay is more like research notes than exposition, so context may be missing, the use of terms may change across essays, and the text might be revised later; only the versions at tsvibt.blogspot.com are definitely up to date.]

Novelty is understanding that is new to a mind, that doesn't readily correspond or translate to something already in the mind. We want AGI in order to understand stuff that we haven't yet understood. So we want a system that takes a voyage of novelty: a creative search progressively incorporating ideas and ways of thinking that we haven't seen before. A voyage of novelty is fraught: we don't understand the relationship between novelty and control within a mind.

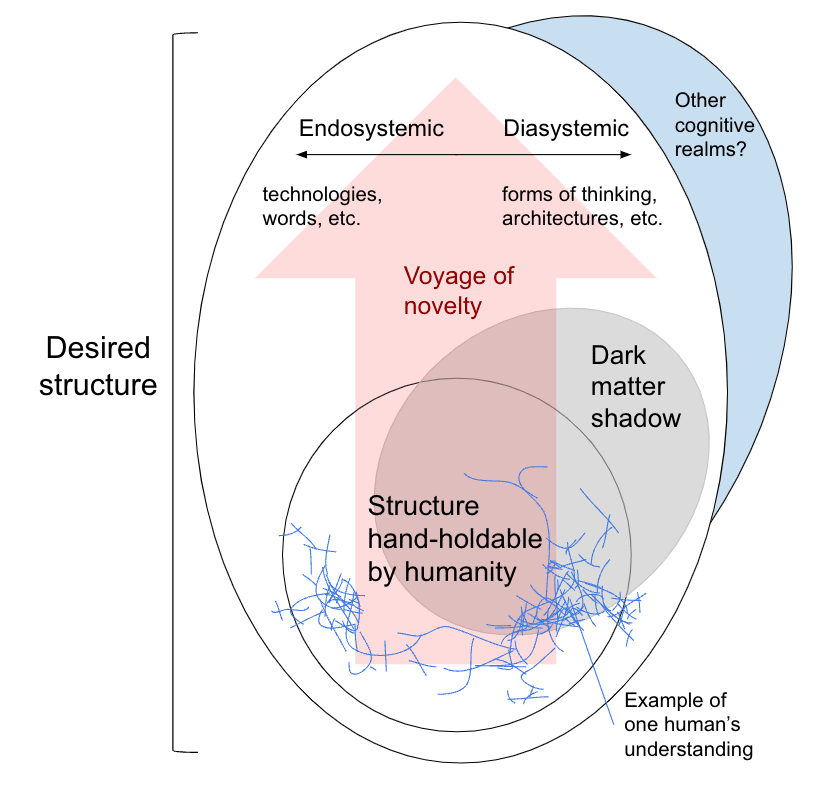

What takes the voyage, from where to where? The voyage is taken by some AI system, from not understanding what we want it to understand, to understanding all that. A schematic:

Novelty

Novelty is structure that is new to a mind. A mind acquires novelty via creativity.

Endosystemic novelty is novelty that fits in with the preexisting mind in ways analogous to how preexisting elements of the mind fit in with the mind; e.g., a new sub-skill such as "opening a car door", or a new word. Diasystemic novelty is novelty (new structure) that involves through-going changes to the mind; e.g. a new heuristic like "investigate boundaries" applied automatically across many contexts. At the extreme end of diasystemic novelty are cognitive realms distinct from the cognitive realm currently occupied by the mind.

Structure hand-holdable by humanity

Where does the voyage of novelty start? It starts wherever the mind is. But where can it safely start?

A lot of structure has the property that it is easy enough for humans to render non-dangerous a mind's exploration (discovery, creation) and exercise (application, expression) of that structure. Humanity can "hold a mind's hand" through its voyage of novelty through such structure.

Some overlapping reasons that structure can be hand-holdable, with examples:

- The structure just doesn't contribute much to a mind having large effects on the world. For example, a mind might know about some obscure species of grass that no human knows about; a human can't understand the mind's thoughts in absolutely full detail, at least without zerself learning about the species of grass. But it just doesn't matter that much, assuming the grass isn't a source of extremely potent toxin or something.

- The structure is easy to intervene on to limit the extent of its influence over the rest of the mind. For example, one can limit the information flow between graspable elements. (However, this sort of hand-holding is very fraught, for example because of conceptual Doppelgängers: the rest of the mind can just reconstitute the supposedly boxed structure in a Doppelgänger outside of the box.)

- The structure is easy for humans to understand fully, including all its implications for and participation in thinking and action, so that it doesn't contribute inscrutably to the mind's activity. See gemini modeling. For example, the mental model [coffee cup] is hand-holdable, as humans have it. This is a central example of hand-holdability: the mind in question (hypothetically, at least) does the same things, thinks the same way, with respect to coffee cups, as do humans; what's in common between the mind's activity in different contexts with respect to coffee cups, is also in common with humans. (It's not clear whether and how this is possible in practice, for one thing because of essential provisionality: the way a mind thinks about something can always change and expand, so that [the structure that currently plays the analogous role in the mind, as was played by the structure that was earlier mostly coincident with some structure in a human], as a path through the space of structure, will diverge from the human's structure.)

- The structure is somehow by nature limited in power or bounded in scope of application. For example, AlphaZero is hand-holdable because it just plays a narrow kind of game. See KANSI. A non-example would be the practice of science. Since science involves reconfiguring one's thoughts to describe an unboundedly expanding range of phenomena, it's not delimited--that is, it isn't easily precircumscribed or comprehended.

What are some other ways structure can be hand-holdable?

This is not a very clear, refined idea. One major issue is that hand-holdability is maybe unavoidably a property of the whole mind. For example, the hypothetical ideas that constitute the knowledge of how to make a nuclear weapon with spare junk in your garage could be individually hand-holdable, but together are not hand-holdable: we don't know how to enforce a ban on weapons you can manufacture that easily. For another example, humans have some theory of mind about other humans; at the human level of intelligence, most people's theory of mind is hand-holdable, but at a much higher level of intelligence it would not be hand-holdable, and there'd be profounder and more widespread interpersonal manipulation than there is in our world. The theory of mind at a much higher level of intelligence would involve a lot of genuine novelty, rather than just scaling up the exercise of existing structure, but at some point the boundaries would blur between novelty and just scaling up.

Another vagueness is the idea of changing hand-holdability. If a human comes to deeply understand a new idea, that should be considered to potentially dynamically render that concept hand-holdable: now the human can comprehend another mind's version of that idea. On the other hand, I'd want to say that architecting an AI system so that it avoids trampling on other agents' goals does not count as rendering that AI's creativity hand-holdable. In that case, it seems intuitively like it's the AI, not humanity, doing the work of interfacing with the novelty to render non-dangerous (to us) the AI's discovery and exercise of that novelty.

Human understanding

In the schematic, a single human's knowledge is represented as a reticular near-subset of structure hand-holdable by humanity. The set protrudes a little outside of what's hand-holdable. The endosystemic protrusion could be for example the instructions and mechanical skills to make a garage nuclear weapon. The diasystemic protrusion could be for example the intuitive know-how to short-circuit people's critical law-like reason, in favor of banding together to scapegoat someone.

The set of structure hand-holdable by humanity is not covered by the understanding of humans. For example, no human understands why exactly AlphaZero (or Stockfish) makes some move in a chess game, but those programs are hand-holdable.

The dark matter shadow of hand-holdable structure

"Understanding" here doesn't mean explicit understanding, and should maybe be thought of as abbreviating "possessed structure" ("possess" from the same root as "power" and "potent", maybe meaning "has power over" or "incorporates into one's power"). One can understand (i.e. possess structure) in a non-reflective or not fully explicit way: I know how to walk, without knowing how I walk.

If we don't know how I walk, other than by gemini modeling, then how I walk is dark matter: we know there is some structure that generates my walking behavior, and we can model it by calling on our own version of it, but we don't know what it is and how it works.

The schematic shows the dark matter "shadow" of structure that's hand-holdable by humanity: the collection of structure that generates that hand-holdable structure. A dark matter shadow isn't unique; there's more than one way to make a mind that possesses a given collection of structure.

Of the structure in the dark matter shadow of hand-holdable structure, some is endosystemic. We have concepts that we don't have words for, but that we use in the same way we use concepts which we do have words for, and that we build other hand-holdable structure on top of. (Though strictly speaking concepts can constitute diasystemic novelty, depending on how they're integrated into the mind; this is an unrefinedness in the endo-/dia-systemic distinction.) Some is diasystemic, for example whatever processes humans use to form discrete concepts. The schematic shows much more diasystemic than endosystemic dark matter. That's an intuitive guess, supported by the general facts that dark matter tends to be generatorward (generators being necessary for the observed structure, and also harder to see and understand), and that generators tend to be diasystemic.

Of the structure in the dark matter shadow of hand-holdable structure, some is itself hand-holdable. For example, at least some unlexicalized concepts should be easy for us to handle. Imagine not knowing the modern, refined mathematical concept of "set" or "natural number", but still being able to deal intuitively, fluently with another mind's conduct with respect to (ordinary, small) sets, such as identity under rearrangement and equinumerosity under bijection (see The Simple Truth [LW · GW]). Some is not hand-holdable, e.g. theory of mind as mentioned above. Dark matter has a tendency to be not hand-holdable, even though humans possess a sufficient collection of dark matter structure to generate much of hand-holdable structure. That's because dark matter is not understood, and because dark matter is more generatorward and therefore more powerful. The dark matter shadow of structure hand-holdable by humanity may be easy to scale up, and therefore difficult to hand-hold.

Desired structure

We want an AI in order to possess structure we don't already possess. So there's some target collection of structure we'd like the AI to acquire. This probably includes both endosystemic novelty, e.g. implementable designs for nanomachines that we can understand, and also diasystemic novelty, e.g. some structuring of thought that produces such designs quickly.

More precisely, that's novelty which is endosystemic or diasystemic (or parasystemic) for the AI. The novel understanding that the AI gains which we don't already possess is, for us, ectosystemic novelty, unless we follow along with the AI's voyage.

Voyage

The schematic again for the voyage of novelty:

So, as an aspirational trajectory: an AI system could start with structure that is easily understood or bounded by humans; then the AI system gains novelty through creativity; the new structure includes the structure that generates human understanding, concepts new to humanity, and forms of thought new to humanity; the new structure might include very alien cognitive realms; until the AI has some target collection of structure. Having an AI follow such a trajectory is fraught, since we don't know how to interface with novelty and we don't know what determines the effects of a mind.

Thanks to Sam Eisenstat for morally related conversations. Thanks to Vivek Hebbar for a related conversation.
