Why I no longer identify as transhumanist

post by Kaj_Sotala · 2024-02-03T12:00:04.389Z · LW · GW · 33 comments

Someone asked me how come I used to have a strong identity as a singularitarian / transhumanist but don’t have it anymore. Here’s what I answered them:

——-

So I think the short version is something like: transhumanism/singularitarianism used to give me hope about things I felt strongly about. Molecular nanotechnology would bring material abundance, radical life extension would cure aging, AI would solve the rest of our problems. Over time, it started feeling like 1) not much was actually happening with regard to those things, and 2) to the extent that it was, I couldn’t contribute much to them and 3) trying to work on those directly was bad for me, and also 4) I ended up caring less about some of those issues for other reasons and 5) I had other big problems in my life.

So an identity as a transhumanist/singularitarian stopped being a useful emotional strategy [LW · GW] for me and then I lost interest in it.

With regard to 4), a big motivator for me used to be some kind of fear of death. But then I thought about philosophy of personal identity until I shifted to the view that there’s probably no persisting identity over time anyway and in some sense I probably die and get reborn all the time in any case.

Here’s something that I wrote back in 2009 that was talking about 1):

—

The [first phase of the Excitement-Disillusionment-Reorientation cycle of online transhumanism] is when you first stumble across concepts such as transhumanism, radical life extension, and superintelligent AI. This is when you subscribe to transhumanist mailing lists, join your local WTA/H+ chapter, and start trying to spread the word to everybody you know. You’ll probably spend hundreds of hours reading different kinds of transhumanist materials. This phase typically lasts for several years.

In the disillusionment phase, you start to realize that while you still agree with the fundamental transhumanist philosophy, most of what you are doing is rather pointless. You can have all the discussions you want, but by themselves, those discussions aren’t going to bring all those amazing technologies here. You learn to ignore the “but an upload of you is just a copy” debate when it shows up the twentieth time, with the same names rehearsing the same arguments and thought experiments for the fifteenth time. Having gotten over your initial future shock, you may start to wonder why having a specific name like transhumanism is necessary in the first place – people have been taking advantage of new technologies for several thousands of years. After all, you don’t have a specific “cellphonist” label for people using cell phones, either. You’ll slowly start losing interest in activities that are specifically termed as transhumanist.

In the reorientation phase you have two alternatives. Some people renounce transhumanism entirely, finding the label pointless and mostly a magnet for people with a tendency towards future hype and techno-optimism. Others (like me) simply realize that bringing forth the movement’s goals requires a very different kind of effort than debating other transhumanists on closed mailing lists. An effort like engaging with the large audience in a more effective manner, or getting an education in a technology-related field and becoming involved in the actual research yourself. In either case, you’re likely to unsubscribe from the mailing lists or at least start paying them much less attention than before. If you still identify as a transhumanist, your interest in the online communities wanes because you’re too busy actually working for the cause. (Alternatively, you’ve realized how much work this would be and have stopped even trying.)

This shouldn’t be taken to mean that I’m saying the online h+ community is unnecessary, and that people ought to just skip to the last phase. The first step of the cycle is a very useful ingredient for giving one a strong motivation to keep working for the cause in one’s later life, even when they’re no longer following the lists.

—

So that’s describing a shift from “I’m a transhumanist” to “just saying I’m a transhumanist is pointless, I need to actually contribute to the development of these technologies”. So then I tried to do that and eventually spent several years trying to do AI strategy research, but that had the problem that I didn’t enjoy it much and didn’t feel very good at it. It was generally a poor fit for me due to a combination of various factors, including “thinking all day about how AI might very well destroy everything that I value and care about is depressing”. So then I made the explicit decision to stop working on this stuff until I’d feel better, and that by itself made me feel better.

There were also various things in my personal psychology and history that were making me feel anxious and depressed, that actually had nothing to do with transhumanism and singularitarianism. I think that I had earlier been able to stave off some of that anxiety and depression by focusing on the thought of how AI is going to solve all our problems, including my personal ones. But then it turned out that focusing on therapy-type things as well as focusing on my concrete current circumstances were more effective for changing my anxiety and depression than pinning my hopes on AI. And pinning my hopes on AI wouldn’t work very well anymore anyway, since AI now seems more likely to me to lead to dystopian outcomes than utopian ones.

33 comments

Comments sorted by top scores.

comment by Roko · 2024-02-03T14:21:01.584Z · LW(p) · GW(p)

I shifted to the view that there’s probably no persisting identity over time anyway and in some sense I probably die and get reborn all the time in any case.

I think this is just wrong; there is personal identity in the form of preserved information that links your brain at time t to your brain at time t+1 as the only other physical system that has extremely similar information content (memories, personality, etc).

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-02-03T14:40:35.936Z · LW(p) · GW(p)

I said "in some sense", which grants the possibility that there is also a sense in which personal identity does exist.

I think the kind of definition that you propose is valid but not emotionally compelling in the same way as my old intuitive sense of personal identity was.

It also doesn't match some other intuitive senses of personal identity, e.g. if you managed to somehow create an identical copy of me then it implies that I should be indifferent to whether I or my copy live. But if that happened, I suspect that both of my instances would prefer to be the ones to live.

Replies from: Roko, Richard_Kennaway↑ comment by Roko · 2024-02-03T14:45:34.042Z · LW(p) · GW(p)

if you managed to somehow create an identical copy of me then it implies that I should be indifferent to whether I or my copy live. But if that happened, I suspect that both of my instances would prefer to be the ones to live.

I suspect that a short, private conversation with your copy would change your mind. The other thing here is that 1 is far from the ideal number of copies of you - you'd probably be extremely happy to live with other copies of yourself up to a few thousand or something. So going from 2 to 1 is a huge loss to your copy-clan's utility.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-02-03T14:52:54.169Z · LW(p) · GW(p)

I suspect that a short, private conversation with your copy would change your mind

Can you elaborate how?

E.g. suppose that it was the case that I would get copied, and then one of us would be chosen by lot to be taken in front of a firing squad while the other could continue his life freely. I expect - though of course it's hard to fully imagine this kind of a hypothetical - that the thought of being taken in front of that firing squad and never seeing any of my loved ones again would create a rather visceral sense of terror in me. Especially if I was given a couple of days for the thought to sink in, and I wouldn't just be in a sudden shock of "wtf is happening".

It's possible that the thought of an identical copy of me being out there in the world would bring some comfort to that, but mostly I don't see how any conversation would have a chance of significantly nudging those reactions. They seem much too primal and low-level for that.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2024-02-04T11:03:03.180Z · LW(p) · GW(p)

Here is some FB discussion about some pragmatics of when you could have many clones of yourself. The specific hypothetical setup has the advantage of people thinking about what they would do themselves specifically:

↑ comment by Richard_Kennaway · 2024-02-05T16:50:49.435Z · LW(p) · GW(p)

What do you think of the view of identity suggested in Greg Egan’s “Schild’s Ladder”, which gives the novel its name?

I’d sketch what that view is, but not without rereading the book and not while sitting in a cafe poking at a screen keyboard. Meanwhile, an almond croissant had a persistent identity from when it came out of an oven until it was torn apart and crushed by my teeth, because I desired to use its atoms to sustain my own identity.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-02-05T20:20:37.300Z · LW(p) · GW(p)

I have read that book, but it's been long enough that I don't really remember anything about it.

Though I would guess that if you were to describe it, my reaction would be something along the lines of "if you want to have a theory of identity, sounds as valid as any other".

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2024-02-06T12:22:37.259Z · LW(p) · GW(p)

Schild's ladder is a geometric construction to show how to transport a vector over a curved manifold. In the book, one of the characters as a nine-year-old boy knowing that he can expect to live indefinitely—longer than the stars, even—is afraid of the prospect, wondering how he will know that he will still be himself and isn't going to turn into someone else. His father explains Schild's Ladder to him, as a metaphor, or more than a metaphor, for how each day, you can take the new experiences of that day to update your self in the way truest to your previous self.

On a curved manifold, where you end up pointing will depend on the route you took to get there. However:

"You'll never stop changing, but that doesn't mean you have to drift in the wind. Every day, you can take the person you've been and the new things you've witnessed and make your own, honest choice as to who you should become.

"Whatever happens, you can always be true to yourself. But don't expect to end up with the same inner compass as everyone else. Not unless they started beside you and climbed beside you every step of the way."

So, historical continuity, but more than just that. Not an arbitrary continuous path through person-space, but parallel transport along a path.
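For anyone who wants to see the geometric construction itself rather than the metaphor, here is a minimal sketch in Python. It assumes the unit sphere as the curved manifold, and the function names (`exp_map`, `log_map`, `schild_rung` and so on) are made up for this illustration; nothing here comes from the novel. Each rung takes the tip of the vector at the old point, finds the midpoint between that tip and the new point, and extends the line from the old point through that midpoint to twice its length. The vector is shrunk by a small factor `eps` before each rung, since the ladder is a small-vector approximation.

```python
import math

def dot(a, b): return sum(x * y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def norm(a): return math.sqrt(dot(a, a))
def unit(a): return scale(a, 1.0 / norm(a))

def exp_map(p, v):
    """Walk from p along the great circle whose initial velocity is v (tangent at p)."""
    theta = norm(v)
    if theta == 0.0:
        return p
    return add(scale(p, math.cos(theta)), scale(v, math.sin(theta) / theta))

def log_map(p, q):
    """Tangent vector at p pointing towards q, with length equal to the arc from p to q."""
    w = sub(q, scale(p, dot(p, q)))  # part of q orthogonal to p
    s = norm(w)
    if s == 0.0:
        return (0.0, 0.0, 0.0)
    return scale(w, math.atan2(s, dot(p, q)) / s)

def geodesic(a, b, n):
    """n + 1 evenly spaced points on the great-circle arc from a to b."""
    t = log_map(a, b)
    return [exp_map(a, scale(t, k / n)) for k in range(n + 1)]

def schild_rung(a0, a1, v, eps=1e-4):
    """One rung of the ladder: carry the tangent vector v from a0 to a1."""
    x0 = exp_map(a0, scale(v, eps))           # tip of the shrunken vector at a0
    p = unit(add(x0, a1))                     # midpoint of the arc from x0 to a1
    x1 = sub(scale(p, 2.0 * dot(a0, p)), a0)  # arc a0 -> p extended to twice its length
    return scale(log_map(a1, x1), 1.0 / eps)  # vector at a1 pointing to x1, rescaled

def transport(path, v):
    """Climb the ladder along a list of points, one rung per consecutive pair."""
    for a0, a1 in zip(path, path[1:]):
        v = schild_rung(a0, a1, v)
    return v

def octant_loop(n=50):
    """Closed path: along the equator, up to the north pole, and back down."""
    x, y, z = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
    return geodesic(x, y, n) + geodesic(y, z, n)[1:] + geodesic(z, x, n)[1:]

start = (0.0, 1.0, 0.0)  # tangent at (1, 0, 0), pointing along the equator
end = transport(octant_loop(), start)
print(end)
```

Under these assumptions the vector should come back to its starting point turned by roughly a quarter turn, close to (0, 0, 1) instead of (0, 1, 0), which is the route-dependence on a curved manifold that the passage describes: nothing was ever rotated along the way, yet a different loop would return a different vector.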

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-02-06T18:19:01.183Z · LW(p) · GW(p)

I don't fully understand the actual math of it so I probably am not fully getting it. But if the core idea is something like "you can at every timestep take new experiences and then choose how to integrate them into a new you, with the particulars of that choice (and thus the nature of the new you) drawing on everything that you are at that timestep", then I like it.

I might quibble a bit about the extent to which something like that is actually a conscious choice, but if the "you" in question is thought to be all of your mind (subconsciousness and all) then that fixes it. Plus making it into more of a conscious choice over time feels like a neat aspirational goal.

... now I do feel more of a desire to live some several hundred years in order to do that, actually.

comment by Nicholas / Heather Kross (NicholasKross) · 2024-03-10T21:08:28.390Z · LW(p) · GW(p)

- Person tries to work on AI alignment.

- Person fails due to various factors.

- Person gives up working on AI alignment. (This is probably a good move, when it's not your fit, as is your case.)

- Danger zone: In ways that sort-of-rationalize-around their existing decision to give up working on AI alignment, the person starts renovating their belief system around what feels helpful to their mental health. (I don't know if people are usually doing this after having already tried standard medical-type treatments, or instead of trying those treatments.)

- Danger zone: Person announces this shift to others, in a way that's maybe and/or implicitly prescriptive (example [LW · GW]).

There are, depressingly, many such cases of this pattern. (Related post with more details on this pattern [LW · GW].)

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-03-12T14:49:48.636Z · LW(p) · GW(p)

That makes sense to me, though I feel unclear about whether you think this post is an example of that pattern / whether your comment has some intent aimed at me?

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2024-03-12T17:02:26.040Z · LW(p) · GW(p)

Yes, I think this post / your story behind it, is likely an example of this pattern.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-03-12T17:20:14.348Z · LW(p) · GW(p)

Okay! It wasn't intended as prescriptive but I can see it as being implicitly that.

What do you think I'm rationalizing?

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2024-03-13T17:20:15.301Z · LW(p) · GW(p)

Giving up on transhumanism as a useful idea of what-to-aim-for or identify as, separate from how much you personally can contribute to it.

More directly: avoiding "pinning your hopes on AI" (which, depending on how I'm supposed to interpret this, could mean "avoiding solutions that ever lead to aligned AI occurring" or "avoiding near-term AI, period" or "believing that something other than AI is likely to be the most important near-future thing", which are pretty different from each other, even if the end prescription for you personally is (or seems, on first pass, to be) the same.), separate from how much you personally can do to positively affect AI development.

Then again, I might've misread/misinterpreted what you wrote. (I'm unlikely to reply to further object-level explanation of this, sorry. I mainly wanted to point out the pattern. It'd be nice if your reasoning did turn out correct, but my point is that its starting-place seems/seemed to be rationalization as per the pattern.)

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-03-13T21:12:04.654Z · LW(p) · GW(p)

I'm unlikely to reply to further object-level explanation of this, sorry.

No worries! I'll reply anyway for anyone else reading this, but it's fine if you don't respond further.

Giving up on transhumanism as a useful idea of what-to-aim-for or identify as, separate from how much you personally can contribute to it.

It sounds like we have different ideas of what it means to identify as something. For me, one of the important functions of identity is as a model of what I am, and as what distinguishes me from other people. For instance, I identify as Finnish because of reasons like having a Finnish citizenship, having lived in Finland for my whole life, Finnish being my native language etc.; these are facts about what I am, and they're also important for predicting my future behavior.

For me, it would feel more like rationalization if I stopped contributing to something like transhumanism but nevertheless continued identifying as a transhumanist. My identity is something that should track what I am and do, and if I don't do anything that would meaningfully set me apart from people who don't identify as transhumanists... then that would feel like the label was incorrect and imply wrong kinds of predictions. Rather, I should just update on the evidence and drop the label.

As for transhumanism as a useful idea of what to aim for, I'm not sure of what exactly you mean by that, but I haven't started thinking "transhumanism bad" or anything like that. I still think that a lot of the transhumanist ideals are good and worthy ones and that it's great if people pursue them. (But there are a lot of ideals I think are good and worthy ones without identifying with them. For example, I like that museums exist and that there are people running them. But I don't do anything about this other than occasionally visit one, so I don't identify as a museum-ologist despite approving of them.)

More directly: avoiding "pinning your hopes on AI" (which, depending on how I'm supposed to interpret this, could mean "avoiding solutions that ever lead to aligned AI occurring" or "avoiding near-term AI, period" or "believing that something other than AI is likely to be the most important near-future thing"

Hmm, none of these. I'm not sure of what the first one means but I'd gladly have a solution that led to aligned AI, I use LLMs quite a bit, and AI clearly does seem like the most important near-future thing.

"Pinning my hopes on AI" meant something like "(subconsciously) hoping to get AI here sooner so that it would fix the things that were making me anxious", and avoiding that just means "noticing that therapy and conventional things like that work better for fixing my anxieties than waiting for AI to come and fix them". This too feels to me like actually updating on the evidence (noticing that there's something better that I can do already and I don't need to wait for AI to feel better) rather than like rationalizing something.

comment by Vladimir_Nesov · 2024-02-03T14:51:46.043Z · LW(p) · GW(p)

the view that there’s probably no persisting identity over time anyway and in some sense I probably die and get reborn all the time in any case

In the long run, this is probably true for humans in a strong sense that doesn't depend on litigation of "personal identity" and "all the time". A related phenomenon is value drift. Neural nets are not a safe medium for keeping a person alive for a very long time without losing themselves; physical immortality is insufficient to solve the problem.

That doesn't mean that the problem isn't worth solving, or that it can't be solved. If AIs don't killeveryone, immortality or uploading is an obvious ask. But avoidance of value drift or of unendorsed long term instability of one's personality is less obvious. It's unclear what the desirable changes should be, but it's clear that there is an important problem here that hasn't been explored.

Replies from: Q Home

comment by Algon · 2024-02-03T13:30:53.096Z · LW(p) · GW(p)

And pinning my hopes on AI wouldn’t work very well anymore anyway, since AI now seems more likely to me to lead to dystopian outcomes than utopian ones.

Do you mean s-risks, x-risks, age of em style future, stagnation, or mainstream dystopic futures?

I shifted to the view that there’s probably no persisting identity over time anyway

I am suspicious about claims of this sort. It sounds like a case of "x is an illusion. Therefore, the pre-formal things leading to me reifying x are fake too." Yeah, A and B are the same brightness. No, they're not the same visually.

I.e. I am claiming that you are probably making the same mistake people make when they say "there's no such thing as free will". They are correct that you aren't "transcendentally free" but make the mistake of treating their feelings which generated that confused statement as confused in themselves, instead of just another part of the world. I just suspect you're doing a much more sophisticated version of this.

Or maybe I'm misunderstanding you. That's also quite likely.

EDIT: I just read the Wikipedia page you linked to. I was misunderstanding you. Now, I think you are making an invalid inference from our state of knowledge of "Relation R", i.e. psychological connectedness, which is quite rough and surprisingly far reaching, to coarsening it while preserving those states that are connected. Naturally, you then notice "oh, R is a very indiscriminating relationship" and impose your abstract model of R over R itself, reducing your fear of death.

EDIT 2: Note I'm not disputing your choice not to identify as a transhumanist. That's your choice and is valid. I'm just disputing the argument for worrying less about death that I think you're referencing.

↑ comment by Kaj_Sotala · 2024-02-03T14:16:00.388Z · LW(p) · GW(p)

Do you mean s-risks, x-risks, age of em style future, stagnation, or mainstream dystopic futures?

"All of the above" - I don't know exactly which outcome to expect, but most of them feel bad and there seem to be very few routes to actual good outcomes. If I had to pick one, "What failure looks like [LW · GW]" seems intuitively most probable, as it seems to require little else than current trends continuing.

I am suspicious about claims of this sort. It sounds like a case of "x is an illusion. Therefore, the pre-formal things leading to me reifying x are fake too."

That sounds like a reasonable thing to be suspicious about! I should possibly also have linked my take on the self as a narrative construct [LW · GW].

Though I don't think that I'm saying the pre-formal things are fake. At least to my mind, that would correspond to saying something like "There's no lasting personal identity so there's no reason to do things that make you better off in the future". I'm clearly doing things that will make me better off in the future. I just feel less continuity to the version of me who might be alive fifty years from now, so the thought of him dying of old age doesn't create a similar sense of visceral fear. (Even if I would still prefer him to live hundreds of years, if that was doable in non-dystopian conditions.)

↑ comment by Said Achmiz (SaidAchmiz) · 2024-02-15T14:50:02.343Z · LW(p) · GW(p)

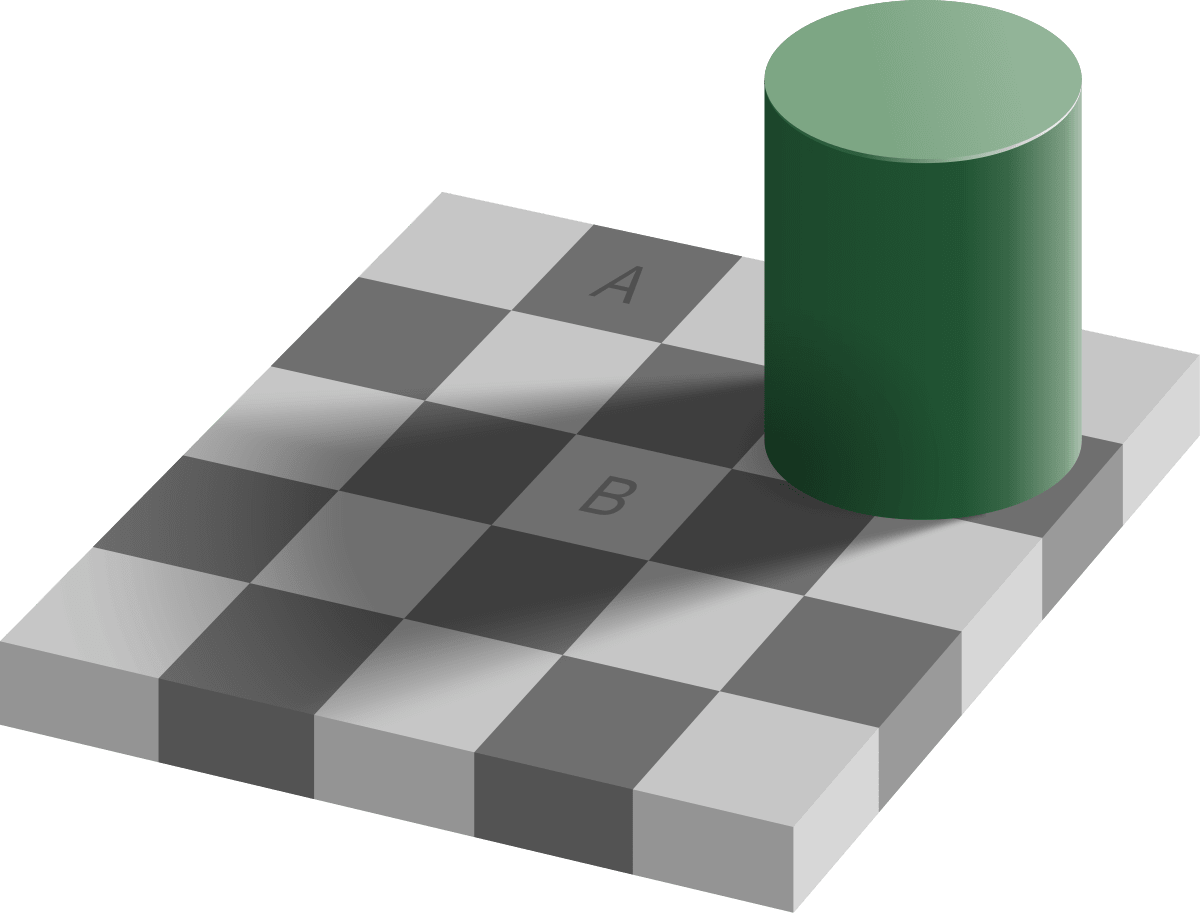

You picked an interesting example of an optical illusion, as I maintain that it isn’t one [LW(p) · GW(p)]. As noted in the linked comment thread, this can be analogized to philosophical/psychological questions (like the one in the OP)…

Replies from: Algon

comment by Curt Tigges (curt-tigges) · 2024-02-05T19:47:28.201Z · LW(p) · GW(p)

I actually went through the same process as what you describe here, but it didn't remove my "transhumanist" label. I was a big fan of Humanity+, excited about human upgrading, etc. etc. I then became disillusioned about progress in the relevant fields, started to understand nonduality and the lack of a persistent or independent self, and realized AI was the only critical thing that actually was in the process of happening.

In that sense, my process was similar but I still consider myself a transhumanist. Why? Because for me, solving death or trying to make progress in the scientific fields that lead to various types of augmentations aren't the biggest or most critical pieces of transhumanism. One could probably have been a transhumanist in the 1800s, because for me it's about the process of imagining and defining and philosophizing about what humanity--on an individual organism level as well as on a sociocultural level--will become (or what it might be worthwhile to become) after particular types of technological transitions.

Admittedly, there is a normative component that's something like "those of us who want to should be able to become something more than base human" and isn't really active until those capabilities actually exist, but the process of thinking about what it might be worthwhile to become, or what the transition will be like, or what matters and what is valuable in this kind of future, are all important.

It's not about maximizing the self, either--I'm not an extropian. Whether or not something called "me" exists in this future (which might be soon but might not), the conscious experience of beings in it matter to me (and in this sense I'm a longtermist).

Will an aligned AI solve death? Maybe, but my hopes don't rely on this. Humanity will almost certainly change in diverse ways, and is already changing a bit (though often not in great ways). It's worthwhile to think about what kind of changes we would want to create, given greater powers to do so.

comment by Lichdar · 2024-02-07T04:14:27.852Z · LW(p) · GW(p)

I had a very long writeup on this but I had a similar journey from identifying as a transhumanist to deeply despising AI, so I appreciate seeing this. I'll quote part of mine and perhaps identify:

"I worked actively in frontier since at least 2012 including several stints in "disruptive technology" companies where I became very familiar with the technology cult perspective and to a significant extent, identified. One should note that there is definitely a healthy aspect to it, though even the healthiest aspect is, as one could argue, colonialist - the idea of destructive change in order to "make a better world."

Until 2023..

And yet I also had a deep and abiding love of art, heavily influenced by "Art as Prayer" by Andrei Tarkovsky, and this actually fit into a deep and abiding spiritual, even religious viewpoint that integrated my love of technology and love of art.

In my spare time and nontrivially, I spent a lot of time writing, drawing here and there, as well as writing poetry - all of which had a deep and significant meaning to me.

In the Hermetic system, Man is the creation of clay and spirit imbued with the divine spark to create; this is why science and technology can be seen as a form of worship, because to learn of Nature and to advance the "occult" into the known is part of the Divine Science to ultimately know God and summon the spirits via the Machine; the computer is the modern occultist's version of the pentagram and circle. But simultaneous with this, is the understanding that the human is the one who ultimately is the creator, who "draws imagination from the Empyrean realms, transforms them into the material substance, and sublimates it into art" as glimpses of the magical. Another version of this is in the Platonic concept of the Forms, which are then conveyed into actual items via the labor of the artist or the craftsman.

As such, the deep love of technology and the deep love for art was not in contrast in the least, because one helped the other and in both, the ultimately human spirit was very much glorified. Perhaps there is a lot of the cyborg in it, but the human is never extinguished.

The change came last year with the widespread release of ChatGPT. I actually was an early user of Midjourney and played around with it as an ideation device, which I would have never faulted a user of AI for. But it was when the realization came that the augmentation of that which is human was exchanged for the replacement of the human that I knew that something had gone deeply, terribly wrong with the philosophy of the place.

Perhaps that was not the only reason: another transformative experience was reading Other Minds: The Octopus, the Sea, and the Deep Origins of Consciousness which changed a lot of my awareness of life from something of "information" (which is still the default thinking of techbros, which is why mind-uploading is such a popular meme) into the understanding of the holobiont, that life, even in an "organism", is in fact a kind of enormous ecosystem of many autonomous or semi-autonomous actors as small as the cellular level (or even below!). A human is in fact almost as much "not human" as human - now while the research has been revised slightly, it still comes out that an average human is perhaps at most 66% human, and many of our human cells show incredible independent initiative. Life is a "whorl of meaning" and miraculous on so many fundamental levels.

And now, with the encroachment of technology, was the idea of not only replacing humanity in the creation of art, one of the most fundamentally soulful things that we do, but the idea of extinguishing life for some sort of "more perfection." Others have already talked about this particular death cult of the tech-bros, but this article covers the omnicidal attitude well, which goes beyond just the murder of all of humanity but also the death of all organic life. Such attitudes are estimated to be held by between 5 and 10% of AI researchers, the people who are in fact actively leading us down this path, who literally want you and everything you love to die.

I responded to the widespread AI the same way as a lot of other researchers in the area - initially with a mental breakdown (which I am still recovering from) and then with the realization that, with the monster that had been created, we had to make the world as aware as possible and try to stop it.

Art as Transcendence

Art is beauty, art is fundamental to human communication and I believe that while humans exist, we will never stop creating art. But beyond that, is the realization of art as transcendent - it speaks of the urgency of love, the precariousness of life, the marvel that is heroism, the gentleness of caring, and all of the central meaning of our existence, things which should be eternal and things I truly realize now are part and parcel of our biology. One doesn't have to relate to a supernatural soul to know that these things should be infused into our very being and that they are, by nature, not part of any mechanical substrate. A jellyfish alien that breathes through its limbs would be more true to us than a computer with a human mind upload in that sense; for anything that is organic is almost certainly that same kind of ecosystem, of life built upon life, of a whorl of meaning and ultimately of beauty, while the machine is a kind of inert death, and undeath in its simulacrum and counterfeit of the human being.

And in that, I realized that there was a point where the human must be protected from the demons that we are trying to raise, because no longer are we trying to summon aid to us, but so many of the technologists have become besotted and have fallen in love with their own monsters, and not with the very life that has created us and given us all this wondrous existence to feel, to breathe and to be."

comment by [deleted] · 2024-02-05T21:20:19.348Z · LW(p) · GW(p)

As near as I can tell, you have realized that all the transhumanist ideas aren't going to happen in your lifespan. I am assuming you are about 30 so that means within 50-70 years. I mean take neural implants, one of the core ones. The FDA wants 10+ years of study on a few patients before anyone else gets an implant. Highly likely the implants will cause something nasty that current medicine is helpless to treat, perhaps cognitive decline.

Are you going to be able to get an implant at all before you die of aging? Over 70 or so, doctors will refuse to operate because they aren't skilled enough.

The only one that has a real chance of even existing is AGI and maybe ASI, and the core reason is because it turns out we don't need to upload human brains after all, just scale and iterate on measurable metrics for intelligence, not try to figure out how the brain happens to do it.

This is one reason I am displeased by the "AI pause" talk because it essentially dooms me, my children, my friends, and everyone I have ever met to more of the same. Transhumanism requires a medical superintelligence or it cannot happen in any human's lifetime. There are simply too many (complex and coupled) ways fragile human bodies will fail for any human doctor to learn how to handle all cases.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-02-05T23:47:51.359Z · LW(p) · GW(p)

"AI pause" talk [...] dooms [...] to more of the same

This depends on the model of risks. If risks without a pause are low, and they don't significantly reduce with a pause, then a pause makes things worse. If risks without a pause are high, but risks after a 20-year pause are much lower, then a pause is an improvement even for personal risk for sufficiently young people.

If risks without pause are high, risks after a 50-year pause remain moderately high, but risks after a 100-year pause become low, then not pausing trades significant measure of the future of humanity for a much smaller measure of survival of currently living humans. Incidentally, sufficiently popular cryonics can put a dent into this tradeoff for humanity, and cryonics as it stands can personally opt out anyone who isn't poor and lives in a country where the service is available.
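To make the comparison concrete, here is a minimal sketch of the personal-risk version of this tradeoff. Every number in it is a made-up placeholder, not an estimate from this thread, and the names `personal_survival` and `pause_helps` are hypothetical. It assumes a person who is alive now lives to see AGI with certainty if there is no pause, and that the only two things that matter are being alive when AGI arrives and AGI not being catastrophic.

```python
def personal_survival(p_alive_at_agi: float, p_catastrophe: float) -> float:
    """Chance that a given person is alive when AGI arrives AND it goes well."""
    return p_alive_at_agi * (1.0 - p_catastrophe)


def pause_helps(p_alive_after_pause: float,
                risk_without_pause: float,
                risk_after_pause: float) -> bool:
    """True if a pause improves this person's own odds.

    Assumes (for illustration only) that without a pause the person
    is certainly still alive when AGI arrives.
    """
    with_pause = personal_survival(p_alive_after_pause, risk_after_pause)
    without_pause = personal_survival(1.0, risk_without_pause)
    return with_pause > without_pause


# Hypothetical inputs: (chance of surviving the pause, risk now, risk after pause)
high_risk_young = pause_helps(0.95, 0.50, 0.10)  # risk drops a lot, person likely survives the pause
high_risk_old = pause_helps(0.30, 0.50, 0.10)    # same risks, but person unlikely to survive the pause
low_risk_young = pause_helps(0.95, 0.05, 0.04)   # risk was low and barely changes
```

Under these placeholder inputs the pause comes out ahead only in the first case. The sketch covers personal risk alone; it does not attempt the second paragraph's tradeoff between the long-term future and currently living people.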

Replies from: None↑ comment by [deleted] · 2024-02-06T00:39:09.420Z · LW(p) · GW(p)

risks without a pause are low, and they don't significantly reduce with a pause, then a pause makes things worse. If risks without a pause are high, but risks after a 20-year pause are much lower, then a pause is an improvement even for personal risk for sufficiently young people.

Yes, although you have 3 problems:

- Why do you think a 20-year pause, or any pause, will change anything?

For example, you may know that the security of game consoles and iPhones keeps getting cracked.

AI control is similar in many ways to cybersecurity in that you are trying to limit the AI's access to functions that let it do bad things, and prevent the AI from seeing information that will allow it to fail. (Betrayal is a control failure: the model cannot betray in a coordinated way if it doesn't somehow receive a message from other models that now is the time.)

Are you going to secure your iPhones and consoles by researching cybersecurity for 20 years and then deploying the next generation? Or do you do the best you can with information from the last failures and try again? Each time, you try to limit the damage. For example, with game consoles there are various strategies, which have grown more sophisticated, to encourage users to purchase access to games rather than pirate them.

With AI, you probably won't learn anything during a pause that will help you. We know this from experience because, on paper, securing the products I mentioned is trivially easy: sign everything with a key server, don't run unsigned code, and check that the key is valid in really well-verified, privileged code.

Note that no one who breaks game consoles or iPhones does it by cracking the encryption directly; pub/private key crypto is still unbroken.

Similarly, I would expect that very early on human engineers will develop an impervious method of AI control. You can write one yourself; it's not difficult. But like everything, it will fail on implementation...

- You know the cost of a 20-year pause. Just add up the body bags, or 20 years of deaths worldwide to aging. More than a billion people.

You don't necessarily have a good case that the pause will save the lives of all humans, because even guessing that the problem will benefit from a pause is speculation. It's not speculation to say the cost is more than a billion lives: younger human bodies keep their owners alive with high probability until AI eventually discovers a fix. Even if that takes 100 years, it will arrive in +100 years, not +120 years.

- Historically, the lesson is that all of the parties "agreeing" to the pause are going to betray, and whoever betrays more gets an advantage. The US Navy lost an aircraft carrier in WW2, in a situation a larger carrier would have survived, because it honored the tonnage limits.

↑ comment by Vladimir_Nesov · 2024-02-07T04:18:26.562Z · LW(p) · GW(p)

Hypotheticals disentangle models from values. A pause here is not a policy, and not an attempt at a pause that might fail; it's the actual pause, the hypothetical. We can then look at the various hypotheticals and ask what happens there, which one is better. Hopefully our values can handle the strain of out-of-distribution evaluation and don't collapse into incoherence of goodharting, unable to say anything relevant about situations that our models consider impossible in actual reality.

In the hypothetical of a 100-year pause, the pause actually happens, even if this is impossible in actual reality. One of the things within that hypothetical is death of 4 generations of humans. Another is the AGI that gets built at the end. In your model, that AGI is no safer than the one that we build without the magic hypothetical of the pause. In my model, that AGI is significantly safer. A safer AGI translates into more value of the whole future, which is much longer than the current age of the universe. And an unsafe AGI now is less than helpful to those 4 generations.

AI control is similar in many ways to cybersecurity in that you are trying to limit the AIs access to functions that let it do bad things, and prevent the AI from seeing information that will allow it to fail.

That's the point of AI alignment as distinct from AI control. Your model says the distinction doesn't work. My model says it does. Therefore my model endorses the hypothetical of a pause.

Having endorsed a hypothetical, I can start paying attention to ways of moving reality in its direction. But that is distinct from a judgement about what the hypothetical entails.

Replies from: None↑ comment by [deleted] · 2024-02-07T04:44:08.354Z · LW(p) · GW(p)

How would you know any method of alignment works without AGIs of increasing capability, or child AGIs that are supposed to inherit the aligned property, to test it on?

One of the reasons I gave current cybersecurity as an example is that pub/private key signing is correct. Nobody has broken the longer keys. Yet if you spent 20 years or 100 years proving it correct, then deployed it to software using present techniques, you would get hacked immediately. Implementation is hard and is the majority of the difficulty.

Assuming AI alignment can be solved on paper in this way, I see it as the same situation. It will fail in ways you won't know until you try it for real.
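To illustrate how short the "on paper" part is, here is a minimal sketch of the sign-then-refuse-unsigned-code check described earlier in the thread. It is an assumption-laden stand-in: it uses HMAC (a symmetric, shared-key scheme from the Python standard library) in place of real public/private key signatures, and the names `sign` and `is_allowed_to_run` are hypothetical.

```python
import hashlib
import hmac


def sign(key: bytes, code: bytes) -> bytes:
    """Produce a signature for a blob of code.

    Stand-in only: HMAC-SHA256 with a shared key, not a public-key signature.
    """
    return hmac.new(key, code, hashlib.sha256).digest()


def is_allowed_to_run(key: bytes, code: bytes, signature: bytes) -> bool:
    """Refuse anything whose signature does not match the code."""
    expected = sign(key, code)
    # Constant-time comparison, so the check itself does not leak timing info.
    return hmac.compare_digest(expected, signature)


signing_key = b"hypothetical-signing-key"
game = b"print('hello')"
good_signature = sign(signing_key, game)

accepted = is_allowed_to_run(signing_key, game, good_signature)
rejected = is_allowed_to_run(signing_key, game + b" # patched", good_signature)
```

The check accepts the original blob and rejects the modified one. Nothing here addresses where the key is stored, what code runs before the check, or how the checker itself is verified, and those are the implementation problems the comment is pointing at.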

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-02-07T06:00:34.310Z · LW(p) · GW(p)

Consider an indefinite moratorium on AGI that awaits better tools that make building it a good idea rather than a bad idea. If there were a magic button that rewrote laws of nature to make this happen, would it be a good idea to press it? My point is that we both endorse pressing this button; the only difference is that your model says that building an AGI immediately is a good idea, and so the moratorium should end immediately. My model disagrees. This particular disagreement is not about the generations of people who forgo access to potential technology (where there is no disagreement), and it's not about feasibility of the magic button (which is a separate disagreement). It's about how this technology works, what works in influencing its design and deployment, and the effect it has on the world once deployed.

The crux of that disagreement seems to be about the importance of preparation in advance of doing a thing, compared to the process of actually doing the thing in the real world. A pause enables extensive preparation for building an AGI, and the high serial speed of thought of AGIs enables them to prepare extensively for acting on the world. If such preparation doesn't give a decisive advantage, a pause doesn't help, and AGIs don't rewrite reality in a year once deployed. If it does give a decisive advantage, a pause helps significantly, and a fast-thinking AGI shortly gains the affordance of overwriting humanity with whatever it plans to enact.

I see preparation as raising generations of giants to stand on the shoulders of, which in time changes the character of the practical projects that would be attempted, and the details we pay attention to as we carry out such projects. Yes, cryptography isn't sufficient to make systems secure, but absence of cryptography certainly makes them less secure, as does attempting to design cryptographic algorithms without taking the time to get good at it. This is the kind of preparation that makes a difference. Noticing that superintelligence doesn't imply supermorality and that alignment is a concern at all is an important development. Appreciating goodharting and corrigibility changes the safety properties of AIs that appear important, when looking into more practical designs that don't necessarily originate from these considerations. Deceptive alignment is a useful concern to keep in mind, even if in the end it turns out that practical systems don't have that problem. Experiments on GPT-2 sized systems still have a whole lot to teach us about interpretable and steerable architectures.

Without AGI interrupting this process, the kinds of things that people would attempt in order to build an AGI would be very different 20 years from now, and different yet again in 40, 60, 80, and 100 years. I expect some accumulated wisdom to steer such projects in better and better directions, even if the resulting implementation details remain sufficiently messy and make the resulting systems moderately unsafe, with some asymptote of safety where the aging generations make it worthwhile to forgo additional preparation.

Replies from: None↑ comment by [deleted] · 2024-02-07T06:10:04.811Z · LW(p) · GW(p)

Do any examples of preparation over an extended length of time exist in human history?

I would suspect they do not, for the simple reason that preparation in advance of a need you don't yet have has no ROI.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-02-07T06:46:20.277Z · LW(p) · GW(p)

Basic science and pure mathematics enable their own subsequent iterations without having them as explicit targets or even without being able to imagine these developments, while doing the work crucial in making them possible.

Extensive preparation never happened with a thing that is ready to be attempted experimentally, because in those cases we just do the experiments; there is no reason not to. With AGI, the reason not to do this is the unbounded blast radius of a failure, an unprecedented problem. Unprecedented things are less plausible, but unfortunately this can't be expected to have happened before, because then you are no longer here to update on the observation.

If the blast radius is not unbounded, if most failures can be contained, then it's more reasonable to attempt to develop AGI in the usual way, without extensive preparation that doesn't involve actually attempting to build it. If preparation in general doesn't help, it doesn't help AGIs either, making them less dangerous and reducing the scope of failure, and so preparation for building them is not as needed. If preparation does help, it also helps AGIs, and so preparation is needed.

Replies from: None↑ comment by [deleted] · 2024-02-07T07:04:14.363Z · LW(p) · GW(p)

If the blast radius is not unbounded, if most failures can be contained, then it's more reasonable to attempt to develop AGI in the usual way, without extensive preparation that doesn't involve actually attempting to build it

Is it true or not true that there is no evidence for an "unbounded" blast radius for any AI model someone has trained? I am not aware of any evidence.

What would constitute evidence that the situation was now in the "unbounded" failure case? How would you prove it?

So we don't end up in a loop, assume someone has demonstrated a major danger with current AI models. Assume there is a really obvious method of control that will contain the problem. Now what? It seems to me like the next step would be to restrict AI development in a similar way to how cobalt-60 sources are restricted, where only institutions with licenses, inspections, and methods of control can handle the stuff, but that's still not a pause...

When could you ever reach a situation where a stronger control mechanism won't work?

I try to imagine it, and I can imagine more and more layers of defense - "don't read anything the model wrote", "more firewalls, more isolation, servers in a salt mine" - but never a point where you couldn't agree it was under control. It's like a radioactive source: if you make it more radioactive, you just add more inches of shielding until the dose is acceptable.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-02-11T13:24:36.258Z · LW(p) · GW(p)

The blast radius of AGIs is unbounded in the same way as that of humanity: there is potential for taking over all of the future. There are many ways of containing it, and alignment is a way of making the blast a good thing. The point is that a sufficiently catastrophic failure that doesn't involve containing the blast is unusually impactful. Arguments about ease of containing the blast are separate from this point in the way I intended it.

If you don't expect AGIs to become overwhelmingly powerful faster than they are made robustly aligned, containing the blast takes care of itself right until it becomes unnecessary. But with the opposite expectation, containing becomes both necessary (since early AGIs are not yet robustly aligned) and infeasible (since early AGIs are very powerful). So there's a question of which expectation is correct, but the consequences of either position seem to straightforwardly follow.