You can be wrong about what you like, and you often are

post by Adam Zerner (adamzerner) · 2018-12-17T23:49:39.935Z · LW · GW · 21 comments


Meta: I'm not saying anything new here. There has been a lot of research on the topic, and popular books like Stumbling on Happiness have been written. Furthermore, I don't think I have explained any of this particularly well, or provided particularly enlightening examples. Nevertheless, I think these things are worth saying because a) a lot of people have an "I know what I like" attitude, and b) this attitude seems pretty harmful. Just be sure to treat this as more of an exploratory post than an authoritative one.

I think that the following attitudes are very common:

- I'm just not one of those people who enjoys "deeper" activities like reading a novel. I like watching TV and playing video games.

- I'm just not one of those people who likes healthy foods. You may like salads and swear by them, but I am different. I like pizza and french fries.

- I'm just not an intellectual person. I don't enjoy learning.

- I'm just not into that early retirement stuff. I need to maintain my current lifestyle in order to be happy.

- I'm just not into "good" movies/music/art. I like the Top 50 stuff.

Imagine what would happen if you responded to someone who expressed one of these attitudes by saying "I think that you're wrong." Oftentimes, the response you'll get is something along the lines of:

Who are you to tell me what I do and don't like? How can you possibly know? I'm the one who's in my own head. I know how these things make me feel.

When I think about that response, I think about optical illusions. Consider this one:

When I think about that response, I think about the following dialog:

Me: A and B are the same shade of gray.

Person: No they're not! WTF are you talking about? How can you say that they are? I can see with my eyes that they're not!

I understand the frustration. It feels like they're different shades. It feels like it is stupidly obvious that they're different shades.

And it feels like you know what you like.

But sometimes, sometimes your brain lies to you.

The image of the squares is an optical illusion. Neuroscientists and psychologists study them. And they write books like this about them.

The question of knowing what you like can be a hedonic illusion (that's what I'll decide to call it anyway). Neuroscientists and psychologists study these illusions too. And they write books like this about them.

They have found that we're actually really bad at knowing what will make us happy. At knowing what we do and don't like.

Some quotes from The Science of Happiness:

- “One big question was, Are beautiful people happier?” Etcoff says. “Surprisingly, the answer is no! This got me thinking about happiness and what makes people happy.”

- His book Stumbling on Happiness became a national bestseller last summer. Its central focus is “prospection”—the ability to look into the future and discover what will make us happy. The bad news is that humans aren’t very skilled at such predictions; the good news is that we are much better than we realize at adapting to whatever life sends us.

- The reason is that humans hold fast to a number of wrong ideas about what will make them happy. Ironically, these misconceptions may be evolutionary necessities. “Imagine a species that figured out that children don’t make you happy,” says Gilbert. “We have a word for that species: extinct.”

That last one was pretty powerful, wow.

I think that the implications of this are all pretty huge. We all want to be happy. We all want to thrive. We make thousands and thousands of little decisions to this end. We decide to have fried chicken for dinner, and that having salads isn't worth the effort, despite whatever long term health benefits. We decide that video games are a nice, fun, relaxing way to decompress after work. We decide that working a corporate job is worth it because we "need the money".

All of these decisions shape our lives. If we're getting them wrong, well, then we're not doing a good job of shaping our lives.

And if we're basing these decisions off of our intuitions, according to the positive psychology research, we're probably screwing up a lot.

So then, I propose that we approach these sorts of questions with more curiosity.

The first virtue is curiosity. A burning itch to know is higher than a solemn vow to pursue truth. To feel the burning itch of curiosity requires both that you be ignorant, and that you desire to relinquish your ignorance. If in your heart you believe you already know, or if in your heart you do not wish to know, then your questioning will be purposeless and your skills without direction. Curiosity seeks to annihilate itself; there is no curiosity that does not want an answer. The glory of glorious mystery is to be solved, after which it ceases to be mystery. Be wary of those who speak of being open-minded and modestly confess their ignorance. There is a time to confess your ignorance and a time to relinquish your ignorance.

And with more humility.

The eighth virtue is humility. To be humble is to take specific actions in anticipation of your own errors. To confess your fallibility and then do nothing about it is not humble; it is boasting of your modesty. Who are most humble? Those who most skillfully prepare for the deepest and most catastrophic errors in their own beliefs and plans. Because this world contains many whose grasp of rationality is abysmal, beginning students of rationality win arguments and acquire an exaggerated view of their own abilities. But it is useless to be superior: Life is not graded on a curve. The best physicist in ancient Greece could not calculate the path of a falling apple. There is no guarantee that adequacy is possible given your hardest effort; therefore spare no thought for whether others are doing worse. If you compare yourself to others you will not see the biases that all humans share. To be human is to make ten thousand errors. No one in this world achieves perfection.

You can be wrong about what you like, and you often are.

https://www.youtube.com/watch?v=KmC1btSZP7U

(My grandpa used to read this to me all of the time when I was younger. And he still bugs me about it to this day. It's cool that I'm finally starting to understand it.)

21 comments

Comments sorted by top scores.

comment by Said Achmiz (SaidAchmiz) · 2018-12-18T03:40:55.362Z · LW(p) · GW(p)

There's a critical point to be made about that optical illusion. Consider the following version of your hypothetical dialogue:

Alice: A and B are the same shade of gray.

Bob: No they’re not! WTF are you talking about? How can you say that they are? I can see with my eyes that they’re not!

Alice: Observe that if you use a graphics editor program to examine a pixel in the middle of region A, and a pixel in the middle of region B, you will see that they are the same color; or you could use a photometer to directly measure the spectral power distributions of light being emitted by your computer display from either pixel, and likewise you will find those curves identical.

Bob: Yeah? So? What of it? Suppose I measure the spectral power distributions of light reaching my eyes from a banana at dusk, and a tomato at noon, and find them identical; should I conclude that a banana and a tomato are the same color? No; they look like they have different colors, and indeed they do have different colors. What about the same banana at noon, and then at dusk—the light reaching my eyes from the banana will have different spectral power distributions; has the banana changed color? No, it both looks like, and is, the same color in both cases.

Alice: What? What does that have to do with anything? The pixels really are the same!

Bob: What is that to me? This is an image of a cylinder standing on a checkerboard. I say that square A on the checkerboard, and square B on the checkerboard, are of different colors. Just as my visual system can tell that the tomato is red, and the banana yellow, regardless of whether either is viewed at noon or at dusk, so that same visual system can tell that these two checkerboard squares have different colors. Your so-called “illusions” cannot so easily fool human vision!

Alice: But… it’s a rendered image. There is no checkerboard.

Bob: Then there is no fact of the matter about whether A and B are the same color.

Can the principle illustrated in this dialogue be applied to the case of “knowing what you like”? If so—how? (And if not, then the optical illusion analogy is inapplicable—and dangerously misleading!)

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2018-12-18T06:10:47.103Z · LW(p) · GW(p)

I'm not sure if I understood the point you're making, but it sounds like the point is that, even if the pixels are the same color, the important thing is to be able to distinguish the objects. To see that the tomato and banana are different things even if they have the same pixels. I suppose more generally the idea is that with an illusion, it may be an illusion in some lower level sense, but not an illusion in a more practical sense. Is that accurate?

If so, I'm not sure how it would apply to "hedonic illusions". I suppose there are cases where we are wrong about thinking something provides happiness, but correct in some other more important way. But I'm having trouble thinking of concrete examples of what that other, more important thing is, and how it is distinct from "lower level happiness".

I would guess that there are some ways in which your point applies to "hedonic illusions". There may be some situations where we're wrong about "low level happiness" but correct about a more important thing. But I would also guess that it is not to the extent where we can reliably think "I know what I like".

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2018-12-18T06:55:47.861Z · LW(p) · GW(p)

I’m not sure if I understood the point you’re making, but it sounds like the point is that, even if the pixels are the same color, the important thing is to be able to distinguish the objects. To see that the tomato and banana are different things even if they have the same pixels. I suppose more generally the idea is that with an illusion, it may be an illusion in some lower level sense, but not an illusion in a more practical sense. Is that accurate?

The purpose[1] of our visual system—and, specifically, the purpose of our color vision—is to distinguish objects.

If, in the real world, you see a physical scene such as that pictured in the illustration, it may be that the spectral power distributions of the light reaching your eyes from the two identified regions of the physical checkerboard which you are looking at, will in fact match arbitrarily closely. However, you will correctly perceive the two squares as having different colors—i.e., as having different surface spectral reflectance functions—that being the practically relevant question which your visual system is designed to answer. (“What exactly is the spectral power distribution of the light incident upon my retina from such-and-such arc-region of my visual field” is virtually never relevant for any practical purpose.)

Similarly, if you see a photograph of a physical scene such as that pictured in the illustration, it may be that the spectral power distributions of the light reaching your eyes from the pixels representing the two identified regions of the physical checkerboard a picture of which you are looking at, will, again, match arbitrarily closely. But once again, you will correctly perceive the two pictured squares as having different colors—i.e., as having had, in the real-world scene of which the picture was taken, different surface spectral reflectance functions.

In both these cases, there is some real-world fact, which you are perceiving correctly. There is, then, some other real-world fact which you are not perceiving correctly (but which you also don’t particularly care about[2]). Once again: if I look at a real-world scene such as the one depicted, or a photograph of that scene, and I say “these two squares have different colors”, and by this I mean that the physical objects depicted have different colors, I am not mistaken; I am entirely correct in my judgment.

But! The picture in question is not a physical scene in the real world; nor is it a photograph of such a scene. It is artificial. It depicts something which does not actually exist, and never did. There is no fact of the matter about whether the identified checkerboard squares are of the same color or different colors, because they don’t exist; so there isn’t anything to be right or wrong about.

This means that the question—“are these two regions the same color, or different colors?”—is, in some sense, meaningless. Once again, the purpose of our visual system is to perceive certain practically relevant features of real-world objects. The “optical illusion” in question feeds that system nonsense data; and we get back a nonsense answer. But that doesn’t mean we’re answering incorrectly, that we’re making a mistake; in fact, there isn’t any right answer!

Once again, note that if we encountered this situation in the real world, we would correctly note that the two checkerboard squares have different colors. If we had to take some action, or make some decision, on the basis of whether square A and square B were the same color or different colors, we would therefore act or decide correctly. Acting or deciding on the basis of a belief that squares A and B are of the same color, would be a mistake!

Contrast this with, for example, color blindness. If I cannot see the difference between red and green, then I will have quite a bit of trouble driving a car—I will mis-perceive the state of traffic lights! Note that in this case, you can be sure that I won’t argue with you when you tell me that I am perceiving the traffic lights incorrectly; there is no sense in which, from a certain perspective, my judgment is actually correct. No; I simply can’t see a difference which is demonstrably present (and which other people can see just fine); and this is clearly problematic, for me; it causes me to take sub-optimal actions which, if I perceived things correctly, I would do otherwise (more advantageously to myself).

The takeaway is this: if you think you have discovered a bug in human cognition, it is not enough to demonstrate that, if provided with data that is nonsensical, weird (in a “doesn’t correspond to what is encountered in the real world” way), or designed to be deceptive, the cognitive system in question yields some (apparently) nonsensical answer. What is necessary is to demonstrate that this alleged bug causes people to act in a way which is clearly a mistake, from their own perspective—and that is far more difficult.

Furthermore, in the “optical illusion” example, if you insisted that the “right” answer is that A and B are the same color, you would (as I claim, and explain, above) be wrong—or, more precisely, you would be right in a useless and irrelevant way, but wrong in the important and practical (but still quite specific) way. Now, how sure are you that the same isn’t true in the happiness case? (For instance, some researcher says that beautiful people aren’t happier. But is this true in an important and practical way, or is it false in that way and only true in an irrelevant and useless way? And if you claim the former—given the state of social science, how certain are you?)

[1] In the “survival-critical task which is the source of selection pressure to develop and improve said system” sense.

[2] And if you decide that in some given case, you do care about this secondary fact, you can use tools designed to measure it. But usually, this other fact is of academic interest at best.

↑ comment by Adam Zerner (adamzerner) · 2018-12-18T18:30:37.991Z · LW(p) · GW(p)

Thank you for a really great explanation, I understand now.

Now, how sure are you that the same isn’t true in the happiness case? (For instance, some researcher says that beautiful people aren’t happier. But is this true in an important and practical way, or is it false in that way and only true in an irrelevant and useless way? And if you claim the former—given the state of social science, how certain are you?)

I would say that I feel about 90% sure that it isn't true in the happiness case. I am not particularly familiar with the research, but in general, we have a ton of blind spots and biases that are harmful in a practical, real world sort of sense, and so a claim that we also have one in the context of happiness seems very plausible. It also seems plausible because of the evopsych reasoning. And most of all, reputable scientists seem to be warning us about the pitfalls of thinking we know what we like. If they were just making an academic point that we have these blind spots, but these blind spots aren't actually relevant to everyday people and everyday life, I wouldn't expect there to be bestselling books about it like Stumbling on Happiness.

But, it is definitely possible that I am just misinterpreting and misunderstanding things. If I am - if we aren't actually getting something wrong about happiness that is important in a practical sense - then that is very important. So that I can update my beliefs, and so that I can either edit or delete this post.

Replies from: TAG↑ comment by TAG · 2018-12-21T09:33:15.280Z · LW(p) · GW(p)

but in general, we have a ton of blind spots and biases that are harmful in a practical, real world sort of sense,

..but which don't get selected out, for some reason.

Replies from: Lanrian↑ comment by Lukas Finnveden (Lanrian) · 2018-12-21T21:20:24.144Z · LW(p) · GW(p)

Blind spots and biases can be harmful to your goals without being harmful to your reproductive fitness. Being wrong about which future situations will make you (permanently) happier is an excellent example of such a blind spot.

↑ comment by Shmi (shminux) · 2018-12-19T01:52:01.638Z · LW(p) · GW(p)

if you insisted that the “right” answer is that A and B are the same color, you would (as I claim, and explain, above) be wrong—or, more precisely, you would be right in a useless and irrelevant way, but wrong in the important and practical (but still quite specific) way. Now, how sure are you that the same isn’t true in the happiness case?

An excellent point! I wonder how one can figure out this distinction in the hedonic case.

↑ comment by Slider · 2019-07-30T19:37:45.846Z · LW(p) · GW(p)

Picture-data presented to the visual cortex can be for novel situations. It might be the only evidence for the truth of the matter seen. It would seem that criteria for correct seeing should still exist. Therefore saying that the picture is artificial is not particularly relevant. It being artificial might be that it is likely to present an edge case that would not be encountered naturally. But seeing phenomena that are totally unlike anything encountered previously is a thing that living organisms might need to deal with. In order to build a working behaviour for novel situations it is important that the sense organ behaves consistently and not randomly (if truly no criteria then random noise would be valid behaviour) even if the consistency that is settled on is picked arbitrarily.

↑ comment by Pattern · 2018-12-21T20:49:10.157Z · LW(p) · GW(p)

If I cannot see the difference between red and green, then I will have quite a bit of trouble driving a car—I will mis-perceive the state of traffic lights!

Unless they are conveniently arranged in a set way, which you may memorize, such as left to right/bottom to top = red, yellow, green.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-01-02T16:48:03.695Z · LW(p) · GW(p)

Lest anyone get the idea that the parent comment is being ignored merely because it’s “pedantic” or “misses the point” or some such, I want to point out that it’s also mistaken.

This fascinating and engrossing document is Part 4 of the Manual of Uniform Traffic Control Devices for Streets and Highways (a.k.a. “MUTCD”), published by the Federal Highway Administration (a division of the United States Department of Transportation).

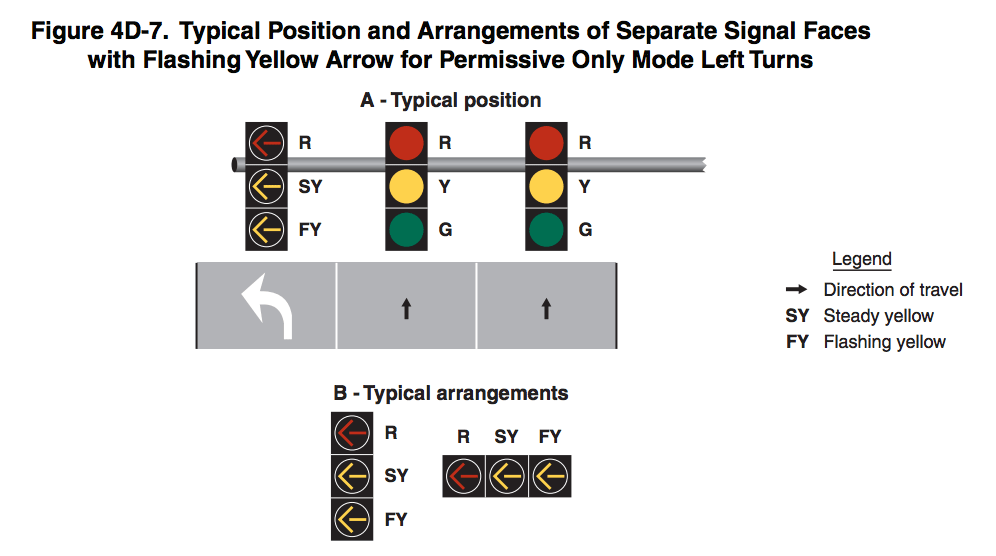

Page 468 of the MUTCD (p. 36 in the PDF) contains this diagram:

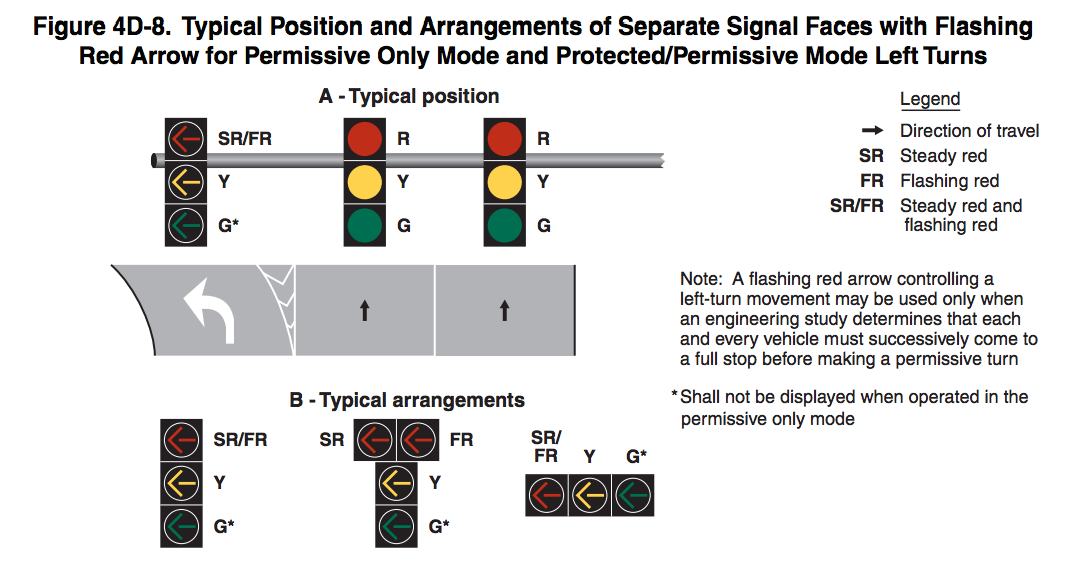

And page 469 of the MUTCD (p. 37 in the PDF) contains this diagram:

Note that the iconography is the same and the orientation and arrangement is the same. But the colors are different!

For another example, take a look at MUTCD p. 487 vs. p. 488 (PDF pp. 55–56).

In summary, it is not possible to reliably determine the colors of traffic control signals from their positions in a traffic light arrangement.

comment by jessicata (jessica.liu.taylor) · 2018-12-18T05:22:57.547Z · LW(p) · GW(p)

Worth mentioning the wanting vs. liking distinction. Wanting something and expecting it to make you happy are distinct. Not everyone wants to wirehead. (Of course, people can have incorrect explicit beliefs about what they want)

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2018-12-18T06:15:39.177Z · LW(p) · GW(p)

Ah, good point. I agree.

I think there are times when people want something but know they won't actually like it; that it won't actually make them happy. For example, pizza and french fries might be something that people want, but that they know won't actually make them happy. I used pizza and french fries as an example, and maybe it wasn't a good choice.

Still, I think that there are many other times when people are actually wrong about what they like, often enough that it is very harmful.

comment by romeostevensit · 2018-12-19T03:57:22.378Z · LW(p) · GW(p)

I agree with all of this.

*And*

I think that thinking of people as wanting happiness is similar to trying to understand speedrunning through the eyes of a child who is still at the stage of identifying with the video game character. They can't think rationally about strategies that decrease hit points too much, because that means your character is getting *hurt.* To the speedrunner, health points are just one more dimension of tradeoff in a convex optimization space.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2018-12-20T18:23:48.890Z · LW(p) · GW(p)

Why is the optimisation space convex?

Replies from: romeostevensit↑ comment by romeostevensit · 2018-12-21T02:10:08.962Z · LW(p) · GW(p)

ambiguous wording, the optimization they're doing over the space is convex. Also I don't think choice of optimization function is super important for the metaphor. Just to give the flavor.

comment by avturchin · 2018-12-18T16:40:59.449Z · LW(p) · GW(p)

Obvious comment: Looks like a strong argument against approval directed AI alignment approach.

Maybe less obvious one: Assumption that "people want to be happy" may be wrong. People often have reasonable goals which - and they know it - will make them less happy by all typical measures, but they still want them and approve of them. Examples: pursuing a scientific career or having children.

comment by Richard_Ngo (ricraz) · 2018-12-20T18:49:41.172Z · LW(p) · GW(p)

I'm just not one of those people who enjoys "deeper" activities like reading a novel. I like watching TV and playing video games.

I'm just not one of those people who likes healthy foods. You may like salads and swear by them, but I am different. I like pizza and french fries.

I'm just not an intellectual person. I don't enjoy learning.

I'm just not into that early retirement stuff. I need to maintain my current lifestyle in order to be happy.

I'm just not into "good" movies/music/art. I like the Top 50 stuff.

I'm curious why you chose these particular examples. I think they're mostly quite bad and detract from the reasonable point of the overall post. The first three, and the fifth, I'd characterise as "acquired tastes": they're things that people may come to enjoy over time, but often don't currently enjoy. So even someone who would grow to like reading novels, and would have a better life if they read more novels, may be totally correct in stating that they don't enjoy reading novels.

The fourth is a good example for many people, but many others find that retirement is boring. Also, predicting what your life will look like after a radical shift is a pretty hard problem, so if this is the sort of thing people are wrong about it doesn't seem so serious.

More generally, whether or not you enjoy something is different from whether that thing, in the future, will make you happier. At points in this post you conflate those two properties. The examples also give me elitist vibes: the implication seems to be that upper-class pursuits are just better, and people who say they don't like them are more likely to be wrong. (If anything, actually, I'd say that people are more likely to be miscalibrated about their enjoyment of an activity the more prestigious it is, since we're good at deceiving ourselves about status considerations).

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2018-12-20T20:03:49.538Z · LW(p) · GW(p)

I'm curious why you chose these particular examples.

I wanted to think of better ones but was having trouble doing so, didn't want to dedicate the time to doing so, and figured that it would be better to submit a mediocre exploratory post about a topic that I think is important than to not post anything at all.

More generally, whether or not you enjoy something is different from whether that thing, in the future, will make you happier. At points in this post you conflate those two properties.

I agree, and I think it would have been a good thing to discuss in the main post. "I know that I don't like salads now; I think I could develop a taste for them, but I don't want to or can't bring myself to do so" is definitely a different thing than "I don't like salads now, so I'm not going to eat them" and "I don't like salads now and I don't think that I could ever like them".

Discussing the above point would have had the benefit of making it clearer what exactly the problem is. In particular, that "I know that I don't like salads now; I think I could develop a taste for them, but I don't want to or can't bring myself to do so" is a different problem.

The examples also give me elitist vibes: the implication seems to be that upper-class pursuits are just better, and people who say they don't like them are more likely to be wrong.

I definitely don't mean to imply that this is true. I personally don't think that it is. But I do see how the examples I chose would give off that vibe, and I think it would have been better to come up with examples that demonstrate a wider range of "I know what I like" attitudes.

Replies from: Vaniver↑ comment by Vaniver · 2018-12-20T23:04:50.497Z · LW(p) · GW(p)

I definitely don't mean to imply that this is true. I personally don't think that it is.

Your perception of them stays similar when you flip the signs? ("I don't like watching TV, I only read novels" becomes "yep, that person is probably mistaken about what they want/like.")

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2018-12-21T01:19:15.254Z · LW(p) · GW(p)

In the example of TV vs. novels, no, but there are other examples where I do think so:

- Live-like-the-locals vacation vs. tourist vacation

- Doing home improvement stuff yourself vs. paying someone to do it for you

- Biking everywhere vs. having a car

On balance, I'm actually not sure of what I think about whether "high class" things tend to provide more happiness than "low class" things, so I spoke too soon in the previous comment.

comment by jmh · 2018-12-20T18:50:59.504Z · LW(p) · GW(p)

Perhaps I'm just being dense here but why is liking and happy being linked together the way they are? I don't think "I like pizza." (presumably to eat) is a completely different statement from "I am happy when I eat pizza."

I do agree that we often do things that ultimately are not in our own interests but I don't really see that as a huge problem. We're rather complex in our existence. I don't think we have some internal utility function with a single metric to maximize. What we end up dealing with is more a constrained maximization case. Moreover, the various margins we maximize on are often not only mutually exclusive but mutually incompatible.