On the construction of the self

post by Kaj_Sotala · 2020-05-29T13:04:30.071Z · LW · GW · 18 comments

Contents

- On the construction of the self

- Mechanisms of claiming credit

- No-self and paradoxes around “doing”

- The difficulty of surrendering

- An example of a no-self experience

This is the fifth post of the "a non-mystical explanation of the three characteristics of existence" series [LW · GW].

On the construction of the self

In his essay The Self as a Center of Narrative Gravity, Daniel Dennett offers the thought experiment of a robot that moves around the world. The robot also happens to have a module writing a novel about someone named Gilbert. When we look at the story the novel-writing module is writing, we notice that its events bear a striking similarity to what the rest of the robot is doing:

If you hit the robot with a baseball bat, very shortly thereafter the story of Gilbert includes his being hit with a baseball bat by somebody who looks like you. Every now and then the robot gets locked in the closet and then says "Help me!" Help whom? Well, help Gilbert, presumably. But who is Gilbert? Is Gilbert the robot, or merely the fictional self created by the robot? If we go and help the robot out of the closet, it sends us a note: "Thank you. Love, Gilbert." At this point we will be unable to ignore the fact that the fictional career of the fictional Gilbert bears an interesting resemblance to the "career" of this mere robot moving through the world. We can still maintain that the robot's brain, the robot's computer, really knows nothing about the world; it's not a self. It's just a clanky computer. It doesn't know what it's doing. It doesn't even know that it's creating a fictional character. (The same is just as true of your brain; it doesn't know what it's doing either.) Nevertheless, the patterns in the behavior that is being controlled by the computer are interpretable, by us, as accreting biography--telling the narrative of a self.

As Dennett suggests, something similar seems to be going on in the brain. Whenever you are awake, there is a constant distributed decision-making process going on, where different subsystems swap in and out of control. While you are eating breakfast, subsystem #42 might be running things, and while you are having an argument with your spouse, subsystem #1138 may be driving your behavior. As this takes place, a special subsystem that we might call the “self-narrative subsystem” creates a story of how all of these actions were taken by “the self”, and writes that story into consciousness.
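As a purely illustrative toy, and not a claim about how the brain implements any of this, the arrangement can be sketched in a few lines of Python. Everything here is hypothetical: the `Subsystem` class, the mapping from situations to subsystems, and the `narrate` function are invented for the example. The point it tries to show is only that the narrating step receives the action and never the identity of the subsystem that produced it.

```python
from dataclasses import dataclass


@dataclass
class Subsystem:
    """A hypothetical specialist that takes control in one kind of situation."""
    name: str
    handles: str
    action: str


def select_controller(subsystems, situation):
    """Pick whichever subsystem claims the current situation."""
    for subsystem in subsystems:
        if subsystem.handles == situation:
            return subsystem
    raise LookupError(f"no subsystem handles {situation!r}")


def narrate(action):
    """The self-narrative step: it sees only the action, never the controller."""
    return f"I {action}."


SUBSYSTEMS = [
    Subsystem("subsystem #42", "breakfast", "ate breakfast"),
    Subsystem("subsystem #1138", "argument", "argued with my spouse"),
]

controllers = []  # what actually ran
story = []        # what gets written into consciousness
for situation in ["breakfast", "argument"]:
    controller = select_controller(SUBSYSTEMS, situation)
    controllers.append(controller.name)
    story.append(narrate(controller.action))
```

In this sketch `controllers` records two different subsystems, while `story` contains two sentences with the same grammatical subject, "I".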

The consciously available information, including the story of the self, is then used by subsystems in the brain to develop additional models of the system’s behavior. There is an experience of boredom, and “the self” seems to draw away from the boredom - this may lead to the inference that boredom is bad for the self, and something that needs to be avoided. An alternative inference might have been that there is a subsystem which reacts to boredom by avoiding it, while other subsystems don’t care. So rather than boredom being intrinsically bad, one particular subsystem has an avoidance reaction to it. But as long as the self-narrative subsystem creates the concept of a self, the notion of there being a single self doing everything looks like the simplest explanation, and the system tends to gravitate towards that interpretation.

In the article “The Apologist and the Revolutionary [LW · GW]”, Scott Alexander discusses neuroscientist V.S. Ramachandran’s theory that the brain contains a reasoning module which attempts to fit various observations into the brain’s “current best guess” of what’s going on. He connects this theory with the behavior of “split-brain” patients, who have had the connection between the hemispheres of their brain severed:

Consider the following experiment: a split-brain patient was shown two images, one in each visual field. The left hemisphere received the image of a chicken claw, and the right hemisphere received the image of a snowed-in house. The patient was asked verbally to describe what he saw, activating the left (more verbal) hemisphere. The patient said he saw a chicken claw, as expected. Then the patient was asked to point with his left hand (controlled by the right hemisphere) to a picture related to the scene. Among the pictures available were a shovel and a chicken. He pointed to the shovel. So far, no crazier than what we've come to expect from neuroscience.

Now the doctor verbally asked the patient to describe why he just pointed to the shovel. The patient verbally (left hemisphere!) answered that he saw a chicken claw, and of course shovels are necessary to clean out chicken sheds, so he pointed to the shovel to indicate chickens. The apologist in the left-brain is helpless to do anything besides explain why the data fits its own theory, and its own theory is that whatever happened had something to do with chickens, dammit!

Now, as people have pointed out, it is tricky to use split-brain cases to draw conclusions about people who have not had their hemispheric connections severed. But this case looks very similar to what meditative insights suggest, namely that the brain constructs an ongoing story of there being a single decision-maker. And while the story of the single self tends to be closely correlated with the system’s actions, the narrative self does not actually decide the person’s actions; it's just a story of someone who does. In a sense, the part of your mind that may feel like the “you” that takes actions is actually produced by a module that just claims credit for those actions.

With sufficiently close study of your own experience, you may notice how these come apart, and what creates the appearance of them being the same. As you accumulate more evidence about their differences, it becomes harder for the system to consistently maintain the hypothesis equating them. Gradually, it will start revising its assumptions.

Some of this might be noticed without any meditation experience. For example, suppose that you get into a nasty argument with your spouse, and say things that you don’t fully mean. The next morning, you wake up and feel regret, wondering why in the world you said those terrible things. You resolve to apologize and not do it again, but after a while, there is another argument and you end up behaving in the same nasty way.

What happened was something like this: something about the argument triggered a subsystem which focused your attention on everything that could be said to be wrong with your spouse. The following morning, that particular trigger was no longer around: instead, there was an unpleasant emotion, guilt. The feelings of guilt activated another subsystem which had the goal of making those sensations go away, and it had learned that self-punishment and deciding to never act the same way is an effective way of doing so. (As an aside, Chris Frith and Thomas Metzinger speculate that one of the reasons why we have a unified self-model is to allow this kind of social regret and a feeling of “I could have done otherwise”.)

In fact, the “regretful subsystem” cannot directly influence the “nasty subsystem”. The regretful subsystem can only output the thought that it will not act in a nasty way again - which it never did in the first place. Yet, because the mind-system has the underlying modeling assumption of being unified, the regretful subsystem will (correctly) predict that it will not act in that nasty way, if put into the same situation again… while failing to predict that it will actually be the nasty subsystem that activates, because the regretful subsystem is currently identifying with (modeling itself as having caused) the actions that were actually caused by the nasty subsystem.

Having an intellectual understanding of this may sometimes help, but not always. Suppose that you keep feeling strong shame over what happened and a resolve never to do so again. At the same time, you realize that you are not thinking clearly about this, and that you are not actually a unified self. Your train of thought might thus go something like this:

“Oh God why did I do that, I feel so ashamed for being such a horrible person… but aaah, it’s pointless to feel shame, the subsystem that did that wasn’t actually the one that is running now, so this isn’t helping change my behavior… oh man oh man oh man I’m such a terrible person I must never do that again… but that thought doesn’t actually help, it doesn’t influence the system that actually did it, I must do something else… aaaahh why did I do that I feel so much shame…”

Which we can rewrite as:

Regretful subsystem: “Oh God why did I do that, I feel so ashamed for being such a horrible person…”

“Sophisticated” subsystem: “But aaah, it’s pointless to feel shame, the subsystem that did that wasn’t actually the one that is running now…”

Regretful subsystem: “Oh man oh man oh man I’m such a terrible person I must never do that again…”

“Sophisticated” subsystem: “But that thought doesn’t actually help, it doesn’t influence the subsystem that actually did it, so I must do something else…”

Regretful subsystem: “Aaaahh why did I do that, I feel so much shame…”

I previously said that the regretful subsystem cannot directly affect the nasty subsystem… but it may very well be that a more sophisticated subsystem, which understands what is happening, cannot directly influence the regretful subsystem either. Even as it understands the disunity of self on one level, it may still end up identifying with all the thoughts currently in consciousness.

In fact, the “sophisticated” subsystem may be particularly likely to assume that the mind is unified.

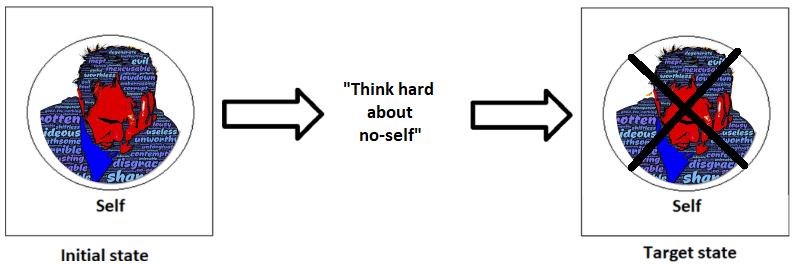

Suppose that the regretful subsystem triggers first, and starts projecting self-punishment into the global workspace. This then triggers another subsystem, which has learned that “a subsystem triggering to punish myself [LW · GW] is not actually going to change my future actions, and it would be more useful for me to do something else”. In other words, even as this “sophisticated” subsystem’s strategy is to think really hard about the verbal concept of no-self, the subsystem has actually been triggered by the thought of “I am ashamed”, and has the goal state of making the “me” not feel shame. It even ends up thinking “I must do something else”, as if the shameful thoughts were coming from it rather than a different subsystem. Despite a pretense of understanding no-self, its actual cognitive schema is still identifying with the regretful subsystem’s output.

This is one of the trickiest things about no-self experiences: on some occasions, you might be able to get into a state with no strong sense of self, when you are not particularly trying to do so and don’t have strong craving [LW · GW]. The resulting state then feels really pleasant. You might think that this would make it easier to let go of craving in the future… and it might in fact make you more motivated to let go of craving again, but “more motivated” frequently means “have more craving to let go of craving”. Then you need to find ways to disable those subsystems - but of course, if you have the goal of disabling the subsystems, then this may mean that you have craving to let go of the craving to let go of the craving…

Mechanisms of claiming credit

Consider someone who is trying hard to concentrate on a lecture (or on their meditation object), but then wanders off into a daydream without noticing it. Thomas Metzinger calls the moment of drifting off to the daydream a “self-representational blink”. As the content of consciousness changes from that produced by one subsystem to another, there is a momentary loss of self-monitoring that allows this change to happen unnoticed.

From what I can tell, the mechanism by which the self-narrative agent manages to disguise itself as the causally acting agent seems to also involve a kind of self-representational blink. Normally, intentions precede both physical and mental actions: before moving my hand I have an intention to move my hand, before pursuing a particular line of thought I have an intention to pursue that line of thought, and before moving my attention to my breath I have an intention to move my attention to my breath. Just as these intentions appear, there is a blink in awareness, making it hard to perceive what exactly happens. That blink is used by the self-narrative agent to attribute the intention as arising from a single “self”.

If one develops sufficient introspective awareness, they may come to see that the intentions are arising on their own, and that there is actually no way to control them: if one intends to control them somehow, then the intention to do that is also arising on its own. Further, it can become possible to see exactly what the self-narrative agent is doing, and how it creates the story of an independent and acausal self. This does not eliminate the self-narrative agent, but does allow its nature to be properly understood, as it is more clearly seen. Meditation teacher Daniel Ingram describes this as the nature of the self-representation “becoming bright, clear, hardwired to be perceived in a way that doesn’t create habitual misperception”; and elaborates:

The qualities that previously meant and were perceived to be “Agent” still arise in their entirety. Every single one of them. Their perceptual implications are very different, but, functionally, their meanings are often the same, or at least similar. [...]

All thoughts arise in this space. They arise simultaneously as experiences (auditory, physical, visual, etc.), but also as meaning. These meanings include thoughts and representations such as “I”, “me”, and “mine” just the way they did before. No meanings have been cut off. Instead, these meanings are a lot easier to appreciate as they arise for being the representations that they are, and also there is a perspective on this representation that is refreshingly open and spacious rather than being contracted into them as happened before.

No-self and paradoxes around “doing”

Some meditation instructions may say nonsensical-sounding things that go roughly as follows: “Just sit down and don’t do anything. But also don’t try to not do anything. You should neither do anything, nor do ‘not doing anything’.”

Or they might say: “Surrender entirely. This moment is as it is, with or without your participation. This does not mean that you must be passive. Surrender also to activity.”

This sounds confusing, because our normal language is built around the assumption of a unitary self which only engages in voluntary, intentionally chosen activities. But it turns out that if you do just sit down and decide to let whatever thoughts arise in your head, you might soon start having confusing experiences.

Viewed from a multi-agent frame, there is no such thing as “not making decisions”. Different subsystems are constantly transmitting different kinds of intentions - about what to say, what to do, and even what to think - into consciousness. Even things which do not subjectively feel like decisions, like just sitting still and waiting, are still the outcomes of a constant decision-making process. It is just that a narrative subsystem tags some outcomes as being the result of “you” having made a decision, while tagging others as things that “just happened”.

For example, if an unconscious evidence-weighting system decides to bring a daydream into consciousness, causing your mind to wander into the daydream, you may feel that you “just got lost in thought”. But when a thought about making tea comes into consciousness and you then get up to make tea, you may feel like you “decided to make some tea”. Ultimately, you have a schema which classifies everything as either “doing” or “not doing”, and attempts to place all experience into one of those two categories.
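A minimal sketch of such a classifying schema follows, under a loudly stated assumption: the post does not say what cue the narrative subsystem uses to tell “doing” from “not doing”, so the rule below (whether a verbal intention showed up in consciousness before the behavior) is a hypothetical stand-in, as are the `Event` class and the subsystem names. What the sketch tries to illustrate is that both events have a producing subsystem, and that the tagger never consults it.

```python
from dataclasses import dataclass


@dataclass
class Event:
    description: str
    source: str  # the subsystem that actually produced the event
    intention_was_conscious: bool  # assumed cue; not specified in the post


def tag(event):
    """Force the event into one of two categories; `source` is never consulted."""
    return "doing" if event.intention_was_conscious else "not doing"


events = [
    Event("got up to make tea", "tea-wanting subsystem", True),
    Event("wandered into a daydream", "evidence-weighting subsystem", False),
]

labels = [tag(event) for event in events]
sources = [event.source for event in events]
```

Here `labels` differs between the two events even though each one was equally the outcome of some subsystem's decision.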

Various paradoxical-sounding meditation instructions about doing “nothing” sound confusing because they are intended to get you to notice things that do not actually match these kinds of assumptions.

For example, you may be told to just sit down and let your mind do whatever it wants, neither controlling what happens in your mind nor trying to avoid controlling it. Another way of putting this is:

- Do not exert any conscious intention to control your mind, such as by preventing yourself from thinking particular thoughts.

- But if you notice a sensation of yourself trying to control your mind, also do not do anything to stop yourself from feeling that sensation.

One thing (of many) that may happen when you try to follow these instructions is:

- You were told not to have any conscious intention to control your mind, so the subsystems that caused you to sit down and start meditating, take no particular action to shift the contents of consciousness.

- At some point, other subsystems, driven by a priority other than meditating, send something into consciousness. They are attempting to change its contents towards the particular priority that these subsystems care about.

- This is noticed by the subsystem classifying mental contents as doing or non-doing. It classifies this as “doing”, and sends a sensation of intentional “doing” into consciousness.

- The subsystem which originally sat down with the intention of meditating and not exerting conscious control, notices the sensation of conscious doing.

- The overall mind-system gets confused, because its internal narrative (modeled from the assumption of a unitary self) now says “I sat down and intended not to intentionally control my mind, but now I find myself intentionally controlling my mind anyway, but how can I unintentionally end up doing something intentional?”

- Following the original instructions, the meditating subsystem may now just let the sensation of conscious doing be there, without interfering with it.

- Eventually, there may be an experiential realization that the sensation of intentional doing is just a sensation or a tag assigned to some particular actions, not intrinsically associated with any single subsystem.

So, translated into multiagent language: “don’t do anything but also don’t not do anything” means “you, the subsystem which is following these instructions: don’t do anything in particular, but when an intention to do something arises from another subsystem, also don’t do anything to counteract that intention”.

Inevitably, you will experience yourself as doing something anyway, because another subsystem has swapped in and out of control, and this registers to you as 'you' having done something. This is an opportunity to notice on an experiential level that you are actually identifying with multiple distinct processes at the same time.

(This is very much a simplified account; in actuality, even the “meditating subsystem” seems itself composed of multiple smaller subsystems. As the degree of mental precision grows, every component in the mind will grow increasingly fine-grained. This makes it difficult to translate things into a coarser ontology which does not draw equal distinctions.)

The difficulty of surrendering

Contemplative traditions may make frequent reference to the concept of “surrendering” to an experience. One way that surrender may happen is when a subsystem has repeatedly tried to resist or change a particular experience, failed time after time, and finally just turns off. (It is unclear to me whether this is better described as “the subsystem gives up and turns off” or “the overall system gives up on this subsystem and turns it off”, but I think that it is probably the latter, even if the self-narrative agent may sometimes make it feel like the former.) Once one genuinely surrenders to the experience of (e.g.) pain with no attempt to make it stop, it ceases to be aversive.

However, this tends to be hard to repeat. Suppose that a person manages to surrender to the pain, which makes it stop. The next time they are in pain, they might remember that “I just need to surrender to the pain, and it will stop”.

In this case, a subsystem which is trying to get the pain out of consciousness has formed the prediction that there is an action that can be carried out to make the pain stop. Because the self-narrative agent records all actions as “I did this”, the act of surrendering to the pain has been recorded in memory as “I surrendered to the pain, and that made it stop”.

The overall system has access to that memory; it infers something like “there was an action of ‘surrender’ which I carried out, in order to make the pain stop; I must carry out this action of surrender again”. Rather than inferring that there is nothing which can be done to make the pain stop, making it useless to resist, the system may reach the opposite conclusion and look harder for the thing that it did the last time around.

The flaw here is that “surrendering” was not an action which the resisting subsystem took; surrendering was the act of the resisting subsystem going offline. Unfortunately, for as long as no-self has not been properly grasped, the mind-system does not have the concept of a subsystem going offline. Thus, successfully surrendering to the pain on a few occasions can make it temporarily harder to surrender to it.

And again, having an intellectual understanding of what I wrote just means that there is a subsystem that can detect that the resisting subsystem keeps resisting when it should surrender. It can then inject into consciousness the thought, “the resisting subsystem should surrender”. But this is just another thought in consciousness, and the resisting subsystem does not have the capacity to parse verbal arguments, so the verbal understanding may become just another system resisting the mind’s current state.

At some point, you begin to surrender to the fact that even if you have deep equanimity at the moment, at some point it will be gone. “You” can’t maintain equanimity at all times, because there is no single “you” that could maintain it, just subsystems which have or have not internalized no-self.

Even if the general sense of self has been strongly weakened, it looks like craving subsystems run on cached models [LW · GW]. That is to say, if a craving subsystem was once created to resist a particular sensation under the assumption that this is necessary for protecting a self, that assumption and associated model of a self will be stored within that subsystem. The craving subsystem may still be triggered by the presence of the sensation - and as the subsystem then projects its models into consciousness, they will include a strong sense of self again.

There is an interesting connection between this and Botvinick et al. (2019), who discuss a brain-inspired artificial intelligence approach. “Episodic meta-reinforcement learning” is an approach where a neural network is capable of recognizing situations that it has encountered before. When it does, the system reinstates the network state that it was in when it was in this situation before, allowing old learning to be rapidly retrieved and reapplied.

The authors argue that this model of learning applies to the brain as well. If true, it would be compatible with models suggesting that emotional learning needs to be activated to be updated [LW · GW]; and it would explain why craving can persist and be triggered even after global beliefs about the self have been revised. Once a person encounters a situation which triggers craving, that triggering may effectively retrieve from memory a partial “snapshot” of what their brain was like on previous occasions when this particular craving triggered… and that snapshot may substantially predate the time when the person became enlightened, carrying with it memories of past self-models.
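The retrieval idea can be illustrated with a deliberately crude Python toy. This is not the architecture from Botvinick et al.: their description involves a neural network reinstating an earlier network state, whereas the sketch below substitutes an exact-match dictionary lookup. The `EpisodicMemory` class, the cue strings, and the contents of the state dictionaries are all hypothetical.

```python
import copy


class EpisodicMemory:
    """Toy store mapping a situation cue to a saved snapshot of internal state."""

    def __init__(self):
        self._snapshots = {}

    def store(self, cue, state):
        # Save an independent copy so later belief changes do not alter it.
        self._snapshots[cue] = copy.deepcopy(state)

    def reinstate(self, cue, current_state):
        """Return the saved snapshot for a familiar cue, else the current state."""
        if cue in self._snapshots:
            return copy.deepcopy(self._snapshots[cue])
        return current_state


memory = EpisodicMemory()

# Earlier: a craving response is learned while a strong self-model is active.
state = {"self_model": "solid self that must be protected", "response": "resist"}
memory.store("partner yelling", state)

# Later: the global belief about the self is revised...
state = {"self_model": "constructed narrative", "response": "equanimity"}

# ...but a familiar trigger brings back the old snapshot, self-model included.
triggered = memory.reinstate("partner yelling", state)
untriggered = memory.reinstate("quiet evening", state)
```

In this toy, `triggered` carries the old self-model even though `state` has since been revised, while an unfamiliar cue leaves the revised state in place.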

People who have made significant progress on the path of enlightenment say that once you have seen through how the sense of self is constructed, it becomes possible to remember what the self really is, snapping out of a triggered state and working with the craving so that it won’t trigger again. But you need to actually remember to do so - that is to say, the triggered system needs to grow less activated and allow other subsystems better access to consciousness. Over time, remembering will grow more frequent and the “periods of forgetting” will grow shorter, but forgetting will still keep happening.

Managing to have a period of equanimity means that you can maintain a state where nothing triggers craving, but then maybe your partner yells at you and a terrified subsystem triggers and then you scream back, until you finally remember and have equanimity again. And at some point you just surrender to the fact that this, too, is inevitable, and come to crave complete equanimity less. (Or at least, you remember to surrender to this some part of the time.)

An example of a no-self experience

There was one evening when I had done quite a lot of investigation into no-self but wasn’t really thinking about the practice. I was just chatting with friends online and watching YouTube, when I had a sense of a mental shift, as if a chunk of the sense of having a separate self fell away.

I'd previously had no-self experiences in which I'd lost the sense of being the one doing things, and instead just watched my own thoughts and actions from the side. However, those still involved the experience of a separate observer, just one which wasn't actively involved in doing anything. This was the first time that that too fell away (or at least the first time if we exclude brief moments in the middle of conventional flow experiences).

There was also a sense of some thoughts - of the kind which would usually be asking things like "what am I doing with my life" or "what do others think of me" - starting to feel a little less natural, as if my mind was having difficulties identifying the subject that the "I" in those sentences was referring to. I became aware of this from having a sense of absence, as if I'd been looping those kinds of thoughts on a subconscious level, but then had those loops disrupted and only became aware of the loops once they disappeared.

The state didn't feel particularly dramatic; it was nice, but maybe more like "neutral plus" than "actively pleasant". After a while in the state, I started getting confused about the extent to which the sense of a separate self really had disappeared; because I started again picking up sensations associated with that self. But when my attention was drawn to those sensations, their interpretation would change. They were still the same sensations, but an examination would indicate them to be just instances of a familiar sensation, without that sensation being interpreted as indicating the existence of a self. Then the focus of my attention would move elsewhere, and they would start feeling a bit more like a self again, when I wasn’t paying so much attention to the sensations just being sensations.

A couple of hours later, I went to the sauna by myself; I had with me a bottle of cold water. At some point I was feeling hot but didn't feel like drinking more water, so instead I poured some of it on my body. It felt cold enough to be aversive, but then I noticed that the aversiveness didn't make me stop pouring more of it on myself. This was unusual; normally I would pour some of it on myself, go "yikes", and stop doing it.

This gave me the idea for an experiment. I'd previously thought that taking cold showers could be a nice mindfulness exercise, but hadn't been able to make myself actually take really cold ones; the thought had felt way too aversive. But now I seemed to be okay with doing aversive things involving cold water.

Hmm.

After I was done in the sauna, I went to the shower, with the pressure as high and the temperature as cold as it went. The thought of stepping into it did feel aversive, but not quite as strongly aversive as before. It only took me a relatively short moment of hesitation before I stepped into the shower.

The experience was... interesting. On previous occasions when I'd experimented with cold showers, my reaction to a sudden cold shock had been roughly "AAAAAAAAAAA I'M DYING I HAVE TO GET OUT OF HERE". And if you had been watching me from the outside, you might have reasonably concluded that I was feeling the same now. Very soon after the water hit me, I could feel myself gasping for breath, the water feeling like a torrent on my back that forced me down on my knees, my body desperately trying to avoid the water. The shock turned my heartbeat into a frenzied gallop, and I would still have a noticeably elevated pulse for minutes after I’d gotten out of the shower.

But I'm not sure if there was any point where I actually felt uncomfortable, this time around. I did have a moderate desire to step out of the shower, and did give in to it after a pretty short while because I wasn't sure for how long this was healthy, but it was kind of like... like any discomfort, if there was any, was being experienced by a different mind than mine.

Eventually I went to bed, and my state had become more normal in the morning, the mind having returned to a previous mode of functioning.

The way I described it afterwards, was that in that state, there was an awareness of how the sense of self felt like “the glue binding different experiences together”. Normally, there might be a sensation of cold, a feeling of discomfort, and a thought about the discomfort. All of them would be bound together into a single experience of “I am feeling cold, being uncomfortable, and thinking about this”. But without the sense of self narrating how they relate to each other, they only felt like different experiences, which did not automatically compel any actions. In other words, craving did not activate, as there was no active concept of a self that could trigger it.

This is related to my earlier discussion [LW · GW] of the sensations of a self as a spatial tag. I mentioned that you might have a particular sensation around or behind your eyes - such as some physical sense of tension - which is interpreted as “you” looking out from behind your eyes and seeing the world. When the sense of self is active, the physical sensations are bound together: there is a sensation of seeing, a sensation of physical tension, and the self-narrative agent interpreting these as “the sensation of tension is the self, which is experiencing the sensation of seeing”. Without that linkage, experience turns into just a sensation of seeing and a sensation of tension, leaving out the part of a separate entity experiencing something.

The next part in the series is Three characteristics: impermanence [LW · GW].

18 comments


comment by Vanessa Kosoy (vanessa-kosoy) · 2020-05-31T16:05:16.621Z · LW(p) · GW(p)

How about the following model for what is going on.

A human is an RL agent with a complicated reward function, and one of the terms in this reward function requires (internally) generating an explanation for the agent's behavior. You can think of it as a transparency technique. The evolutionary reason for this is that other people can ask you to explain your behavior (for example, to make sure they can trust you) and generating the explanation on demand would be too slow (or outright impossible if you don't remember the relevant facts), so you need to do it continuously. Like often with evolution, an instrumental (from the perspective of reproductive fitness) goal has been transformed into a terminal (from the perspective of the evolved mind) goal.

The criteria for what comprises a valid explanation are at least to some extent learned (we can speculate that the learning begins when parents ask children to explain their behavior, or suggest their own explanations). We can think of the explanation as a "proof" and the culture as determining the "system of axioms". Moreover, the resulting relationship between the actual behavior and explanation is two way: on the one hand, if you behaved in a certain way, then the explanation needs to reflect it, on the other hand, if you already generated some explanation, then your future behavior should be consistent with it. This might be the primary mechanism by which the culture's notions about morality are "installed" into the individual's preferences: you need to (internally) explain your behavior in a way consistent with the prevailing norms, therefore you need to actually not stray too far from those norms.

The thing we call "self" or "consciousness" is not the agent, is not even a subroutine inside the agent, it is the explanation. This is because any time someone describes eir internal experiences, ey are actually describing this "innate narrative": after all, this is exactly its original function.
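As a rough illustration of the model above, here is a toy sketch in Python. Everything in it is a hypothetical stand-in rather than part of the original comment: the `NORMS` table plays the role of the culturally learned "system of axioms", `explanation_weight` is an assumed knob for how strongly the explanation term counts, and the reward numbers are made up. It only tries to show the two-way relationship: the explanation term biases which action gets chosen, and every chosen action is written into a running narrative.

```python
from dataclasses import dataclass, field

# Hypothetical "system of axioms": actions the culture accepts, each paired
# with an explanation that would count as valid. Purely illustrative.
NORMS = {
    "share": "I wanted to be fair",
    "help": "I care about my friends",
}


@dataclass
class Agent:
    explanation_weight: float = 1.0  # assumed strength of the explanation term
    narrative: list = field(default_factory=list)  # the running self-explanation

    def explanation_reward(self, action):
        # Reward term: 1.0 if a norm-consistent explanation exists, else 0.0.
        return 1.0 if action in NORMS else 0.0

    def total_reward(self, action, task_reward):
        # Task reward plus the weighted explanation term.
        return task_reward[action] + self.explanation_weight * self.explanation_reward(action)

    def act(self, task_reward):
        # Explanation -> behavior: explainable actions score higher.
        action = max(task_reward, key=lambda a: self.total_reward(a, task_reward))
        # Behavior -> explanation: the narrative is updated continuously,
        # not generated on demand.
        explanation = NORMS.get(action, "(no acceptable explanation)")
        self.narrative.append((action, explanation))
        return action


if __name__ == "__main__":
    rewards = {"steal": 1.5, "share": 1.0}  # made-up task rewards
    agent = Agent()
    print(agent.act(rewards), agent.narrative)
```

In this sketch, the "self" in the comment's sense would correspond to `narrative`, not to `Agent` or to any of its methods. The sketch leaves out the requirement that future behavior stay consistent with explanations already given; one assumed way to add it would be an extra reward term that compares a candidate action against earlier entries in `narrative`.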

Replies from: Kaj_Sotala, Raemon, Chipmonk

↑ comment by Kaj_Sotala · 2020-06-01T09:58:19.857Z · LW(p) · GW(p)

This makes sense to me; would also be compatible with the model of craving as a specifically socially evolved motivational layer [LW(p) · GW(p)].

The thing we call "self" or "consciousness" is not the agent, is not even a subroutine inside the agent, it is the explanation. This is because any time someone describes eir internal experiences, ey are actually describing this "innate narrative": after all, this is exactly its original function.

Yes. Rephrasing it slightly, anything that we observe in the global workspace is an output from some subsystem; it is not the subsystem itself. Likewise, the sense of a self is a narrative produced by some subsystem. This narrative is then treated as an ontologically basic entity, the agent which actually does things, because the subsystems that do the self-modeling can only see the things that appear in consciousness. Whatever level is the lowest that you can observe, is the one whose behavior you need to take as an axiom; and if conscious experience is the lowest level that you can observe, then you take the narrative as something whose independent existence has to be assumed.

(Now I wonder about that self-representational blink associated with the experience of the self. Could it be that the same system which produces the narrative of the self also takes that narrative as input - and that the blink obscures it from noticing that it is generating the very same story which it is basing its inferences on? "I see a self taking actions, so therefore the best explanation must be that there is a self which is taking actions?")

This would also explain Julian Jaynes's seemingly-crazy theory that ancient people experienced their gods as auditory hallucinations. If society tells you that some of the thoughts that you hear in your head come from gods, then maybe your narrative of the self just comes to assign those thoughts as coming from gods.

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-06-02T09:22:32.143Z · LW(p) · GW(p)

This would also explain Julian Jaynes's seemingly-crazy theory that ancient people experienced their gods as auditory hallucinations. If society tells you that some of the thoughts that you hear in your head come from gods, then maybe your narrative of the self just comes to assign those thoughts as coming from gods.

comment by Steven Byrnes (steve2152) · 2020-05-29T16:06:38.973Z · LW(p) · GW(p)

I'm really enjoying all these posts, thanks a lot!

something about the argument brought unpleasant emotions into your mind. A subsystem activated with the goal of making those emotions go away, and an effective way of doing so was focusing your attention on everything that could be said to be wrong with your spouse.

Wouldn't it be simpler to say that righteous indignation is a rewarding feeling (in the moment) and we're motivated to think thoughts that bring about that feeling?

the “regretful subsystem” cannot directly influence the “nasty subsystem”

Agreed, and this is one of the reasons that I think normal intuitions about how agents behave don't necessarily carry over to self-modifying agents whose subagents can launch direct attacks against each other, see here [EA(p) · GW(p)].

it looks like craving subsystems run on cached models

Yeah, just like every other subsystem right? Whenever any subsystem (a.k.a. model a.k.a. hypothesis) gets activated, it turns on a set of associated predictions. If it's a model that says "that thing in my field of view is a cat", it activates some predictions about parts of the visual field. If it's a model that says "I am going to brush my hair in a particular way", it activates a bunch of motor control commands and related sensory predictions. If it's a model that says "I am going to get angry at them", it activates, um, hormones or something [LW · GW], to bring about the corresponding arousal and valence. All these examples seem like the same type of thing to me, and all of them seem like "cached models".

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-06-01T10:05:27.108Z · LW(p) · GW(p)

I'm really enjoying all these posts, thanks a lot!

Thank you for saying that. :)

Wouldn't it be simpler to say that righteous indignation is a rewarding feeling (in the moment) and we're motivated to think thoughts that bring about that feeling?

Well, in my model there are two layers:

1) first, the anger is produced by a subsystem which is optimizing for some particular goal

2) if that anger looks like it is achieving the intended goal, then positive valence is produced as a result; that is experienced as a rewarding feeling that e.g. craving may grab hold of and seek to maintain

That said, the exact reason for why anger is produced isn't really important for the example and might just be unnecessarily distracting, so I'll remove it.

Agreed, and this is one of the reasons that I think normal intuitions about how agents behave don't necessarily carry over to self-modifying agents whose subagents can launch direct attacks against each other, see here [EA(p) · GW(p)].

Agreed.

Yeah, just like every other subsystem right?

Yep! Well, some subsystems seem to do actual forward planning as well, but of course that planning is based on cached models.

comment by Slider · 2020-05-30T01:26:33.895Z · LW(p) · GW(p)

Having been reading meditation content, I noticed an association while watching Day9 promote their game project Straits of Danger.

In the game there is a single boat and 20 players, each of whom controls a walking humanoid on the boat. The players can move their humanoids onto buttons to control the boat. Because the players don't have voice chat, explicit coordination is hard, and with many people pursuing contradictory plans at the same time, multiple nonsensical buttons get pressed and the boat does almost random things.

As a caster/presenter, Day9 didn't play himself, but the players were probably watching the stream. Because he used language like "we" and narrated from the viewpoint of the boat, it could seem like he was controlling the boat. But in fact he was not pressing any boat-controlling buttons, only giving slight suggestions to autonomous human players.

Achieving a high level of coordination was referred to as "becoming a mind". While there it was said more for humorous effect, it is an interesting theoretical question whether individuals can ever form an organisation that properly counts as a mind separate from its constituents, and what conditions such a delineation would follow.

Replies from: Alexei, Pattern

↑ comment by Alexei · 2020-05-30T21:36:32.792Z · LW(p) · GW(p)

Very similar to: https://www.lesswrong.com/posts/GaP3JK5kSABRa8GkK/the-mind-board-game-review [LW · GW]

comment by 2PuNCheeZ · 2022-07-16T04:53:51.472Z · LW(p) · GW(p)

Now, as people have pointed out, it is tricky to use split-brain cases to draw conclusions about people who have not had their hemispheric connections severed. But this case looks very similar to what meditative insights suggest, namely that the brain constructs an ongoing story of there being a single decision-maker

Choice blindness experiments are a great example of this. People seem to change their actual preferences after having to come up with a justification for something they didn't even prefer but thought they did.

comment by kithpendragon · 2020-06-02T20:01:35.622Z · LW(p) · GW(p)

It sounds like the memories generated by a mind with a substantial amount of enlightenment should be notably different than those of a person who still has a fully established sense of self. I think I see a little of what I'm pointing at hinted in "An example of a no-self experience". If I'm right, it suggests (yet another way in which) I've got a lot of work to do in my own practice, and it might be diagnostic as to how a practice is progressing along one axis; I'd be interested to see a discussion on the topic.

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-06-03T14:28:51.894Z · LW(p) · GW(p)

Yeah. I find that many experiences that I've had in meditation-induced states are basically impossible to recall precisely: I will only remember a surface impression or verbal summary of what the state felt like. Then when I get back to that state, I have an experience of "oh yeah, this is what those words that I wrote down earlier really meant".

Replies from: kithpendragon

↑ comment by kithpendragon · 2020-06-03T17:00:26.134Z · LW(p) · GW(p)

That tracks with descriptions I've heard from other meditators of working with the mind in rarified states, but it's unfortunate for the purposes of discourse. Ah well; back to the cushion, then!

comment by Miguel Lopes (miguel-lopes) · 2020-06-01T16:44:30.620Z · LW(p) · GW(p)

Great posts Kaj Sotala. Would it be wrong to say this is an attempt to place Buddhist/meditation insights in the domain and language of cognitive science? I would suggest that an exciting step would be the connection to neuroscience - just recently read Michael Pollan's 'How to Change Your Mind' and became aware of the concept of default mode network, and how its down-regulation is associated with states of flow and likely with meditation/psychedelic induced changes in the perception of self. Interesting stuff!

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-06-02T09:25:32.328Z · LW(p) · GW(p)

Thanks! You could say that, yes.

A lot of this already draws on neuroscience, particularly neuronal workspace theory [LW · GW], but I agree that there's still a lot more that could be brought in. Appreciate the book suggestion, it looks interesting.