From self to craving (three characteristics series)

post by Kaj_Sotala · 2020-05-22T12:16:42.697Z · 21 comments

Buddhists talk a lot about the self, and also about suffering. They claim that if you come to investigate what the self is really made of, then this will lead to a reduction in suffering. Why would that be?

This post seeks to answer that question. First, let’s recap a few things that we have talked about before.

The connection between self and craving

In “a non-mystical explanation of ‘no-self’”, I talked about the way in which there are two kinds of goals. First, there are goals that involve manipulating something and that do not require a representation of ourselves. For example, we can figure out how to get a truck onto a column of blocks.

In that case, we can figure out a sequence of actions that takes the truck from its initial state to its target state. We don’t necessarily need to think about ourselves as we are figuring this out - the actual sequence could just as well be carried out by someone else.

I mentioned that these kinds of tasks seem to allow flow states, in which the sense of self becomes temporarily suspended as unnecessary, and which are typically experienced as highly enjoyable and free from discomfort.

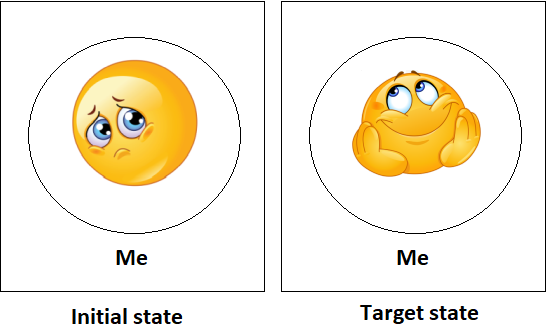

Alternatively, we can think of a goal which intrinsically requires self-reference. For example, I might be feeling sad, and think that I want to feel happy instead. In this case, both the initial state and the target state are defined in terms of what I feel, so in order to measure my progress, I need to track a reference to myself.

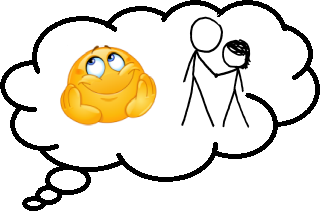

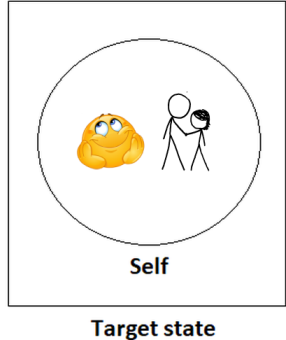

In that post, I remarked that changing one’s experience of one’s self may change how emotions are experienced. This does not necessarily require high levels of enlightenment: it is a common mindfulness practice to reframe your emotions as something that is external to you, in which case negative emotions might cease to feel aversive.

I have also previously discussed therapy techniques that allow you to create some distance between yourself and your feelings, making them less aversive. For example, one may pay attention to where in their body they feel an emotion, keep their attention on those physical feelings, and then allow a visual image of that emotion to arise. This may then cause the emotion to be experienced as “not me”, in a way which makes it easier to deal with.

The next post in my series was on craving and suffering, where I distinguished two different kinds of motivation: craving and non-craving. I claimed that craving is intrinsically associated with the desire to either experience positive valence or avoid experiencing negative valence. Non-craving-based motivation, on the other hand, can care about anything, not just valence.

In particular, I claimed that discomfort / suffering (unsatisfactoriness, to use a generic term) is created by craving: craving attempts to resist reality, and in so doing generates an error signal which is subjectively experienced as unsatisfactoriness. I also suggested that non-craving-based motivation does not resist reality in the same way, and thus does not create unsatisfactoriness.

Putting some of these pieces together:

- Craving tries to ensure that “the self” experiences positive feelings and avoids negative feelings.

- If there are consciously experienced feelings which are not interpreted as being experienced by the self, they do not trigger craving.

- There is a two-way connection between the sense of self and craving.

  - On one hand, experiencing a strong sense of self triggers craving, as feelings are interpreted as happening to the self.

  - In the other direction, once craving is triggered, it sends into consciousness the goal of either avoiding or experiencing particular feelings. As this goal is one that requires making reference to the self, sending it into consciousness instantiates a sense of self.

Let’s look at this a bit more.

Craving as a second layer of motivation

A basic model is that the brain has subsystems which optimize for different kinds of goals, and then produce positive or negative valence in proportion to how well those goals are being achieved.

For example, appraisal theories of emotion hold that emotional responses (with their underlying positive or negative valence) are the result of subconscious evaluations about the significance of a situation, relative to the person's goals. An evaluation saying that you have lost something important to you, for example, may trigger the emotion of sadness with its associated negative valence.

Or consider a situation where you are successfully carrying out some physical activity, such as playing a fast-paced sport or video game. This is likely to be associated with positive valence, which emerges from succeeding at the task. On the other hand, if you were failing to keep up and couldn't get into a good flow, you would likely experience negative valence.

Valence looks like a signal about whether some goals/values are being successfully attained. A subsystem may have a goal X which it pursues independently, and depending on how well it goes, valence is produced as a result. In this model, because valence tends to signal states that are good/bad for the achievement of an organism's goals, craving acts as an additional layer that "grabs onto" states that seem to be particularly good/bad, and tries to direct the organism more strongly towards those.

There’s a subtlety here, in that craving is distinct from negative valence, but craving can certainly produce negative valence. For example:

- You are feeling stressed out, so you go for a long walk. This helps take your mind off the stress, and you come back relaxed.

- The next time you are stressed and feel like you need a break, you remember that the walk helped you before. It’s important for you to get some more work done today, so you take another walk in the hopes of calming down again. As you do, you keep thinking “okay, am I about to calm down and forget my worries now” - and this question keeps occupying you throughout your walk, so that when you get back home, you haven’t actually relaxed at all.

I think this has to do with craving influencing the goals and inputs that are fed into various subsystems. If craving generates the goal of “I should relax”, then that goal is taken up by some planning subsystem; and success or failure at that goal may by itself produce positive or negative valence, just like any other goal does. This also means that craving may generate emotions that generate additional craving: first you have a craving to relax on a walk, but then going on the walk produces frustration, creating craving to be rid of the frustration...

My model about craving and non-craving seems somewhat similar to the proposed distinction between model-based and model-free goal systems in the brain. The model-based system does complex reasoning about what to do; it is capable of pretty sophisticated analyses, but requires substantial computational resources. To save on computation, the model-free system remembers which actions have led to good or bad outcomes in the past, and tries to repeat/avoid them. Under this model, craving would be associated with something like the model-free system, one that uses “did an action produce positive or negative valence” as a shorthand for whether actions should be taken or avoided.
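To make the model-free half of this analogy a little more concrete, here is a minimal Python sketch. It assumes a hypothetical `ModelFreeCache` that keeps a running average of the valence each action has produced, and then simply picks the action with the best cached value without reasoning about why. The class, the action names and the learning rate are all illustrative assumptions; this is a generic model-free value update, not a model of any specific brain system.

```python
from __future__ import annotations

from collections import defaultdict


class ModelFreeCache:
    """Toy model-free learner: remembers how each action felt, not why."""

    def __init__(self, learning_rate: float = 0.5) -> None:
        # learning_rate is an arbitrary illustrative value, not an empirical one.
        self.learning_rate = learning_rate
        # Unseen actions start with a cached value of 0.0 (neutral).
        self.values: defaultdict[str, float] = defaultdict(float)

    def update(self, action: str, valence: float) -> None:
        # Move the cached value part of the way toward the valence this
        # action just produced (a simple delta rule / moving average).
        old = self.values[action]
        self.values[action] = old + self.learning_rate * (valence - old)

    def choose(self, actions: list[str]) -> str:
        # No simulation of consequences: just repeat whatever felt best before.
        return max(actions, key=lambda a: self.values[a])


cache = ModelFreeCache()
cache.update("take a walk", 1.0)    # the walk felt good
cache.update("keep working", -0.5)  # pushing on felt bad
print(cache.choose(["take a walk", "keep working"]))  # take a walk
print(cache.values["take a walk"])                    # 0.5
```

Nothing here represents what a walk actually does; if walks stopped helping, this cache would only find out by experiencing worse valence after them, which is the kind of shortcut the model-based system avoids at a higher computational cost.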

However, it would be a mistake to view these as two entirely distinct systems. Research suggests that even within the same task, both kinds of systems are active, with the brain adaptively learning which one of the systems to deploy during which parts of the activity. Craving, valence, and more complex model-based reasoning also seem to be intertwined in various ways, such as:

- It is possible to unlearn particular cravings, as the brain updates to notice that a specific craving is not actually useful. The unlearning process seems to involve some degree of model-based evaluation, as the brain seems resistant to releasing cravings if it predicts that doing so would make it harder to achieve some particular goal.

- Model-based subsystems may act in ways that seem to make use of craving. For example, in an earlier post reviewing the book Unlocking the Emotional Brain, I discussed the example of Richard. A subsystem in his brain predicted that if he were to express confidence, people would dislike him, so it projected negative self-talk into his consciousness to prevent him from being confident. Presumably the self-talk had negative valence and caused a craving to avoid it, in a way which contributed to him not saying anything. I suspect that this is part of why mindfulness practice may release buried trauma: subsystems might have been using mild aversion to keep negative memories contained. If you get better at dealing with that aversion, it stops working as a barrier, and your mind may get flooded with really unpleasant memories and much stronger unsatisfactoriness than you were prepared to deal with.

It is also worth making explicit that pursuing positive valence and avoiding negative valence are goals that can be pursued by non-craving-based subsystems as well.

For basically any goal, there can be craving-based and non-craving-based motivations. You might pursue pleasure because of a craving for pleasure, but you may also pursue it because you value it for its own sake, because experiencing pleasure makes your mind and body work better than if you were only experiencing unhappiness, because it is useful for releasing craving… or for any other reason.

From self to craving and from craving to self

I have mostly been talking about craving in terms of aversion (craving to avoid a negative experience). Let’s look at some examples of craving a positive experience instead:

- It is morning and your alarm bell rings. You should get up, but it feels nice to be sleepy and remain in bed. You want to hang onto those pleasant sensations of sleepiness for a little bit more.

- You are spending an evening together with a loved one. This is the last occasion that you will see each other in a long time. You feel really good being with them, but a small part of you is unhappy over the fact that this evening will eventually end.

- You are at work on a Friday afternoon. Your mind wanders to the thought of no longer being at work, and doing the things you had planned for the weekend. You would prefer to be done with work already, and find it hard to stay focused as you cling to the thoughts of your free time.

- You are single and hanging out with an attractive person. You know that they are not into you, but it would be so great if they were. You can’t stop thinking about that possibility, and this keeps distracting you from the actual conversation.

- You are in a conversation with several other people. You think of a line that would be a great response to what someone else just said. Before you can say it, somebody says something, and the conversation moves on. You find yourself still thinking of your line, and how nice it would have been to get to say it.

- You had been planning on going to a famous museum while on your vacation, but the museum turns out to be temporarily closed at the time. You keep thinking about how much you had been looking forward to it.

- You are hungry, and keep thinking about how good a particular food would taste, and how much better you would feel after you had eaten.

These circumstances are all quite different, but on a certain abstract level, they share the same kind of mechanism. There is the thought of something pleasant, which triggers craving, and a desire to either get into that pleasant state or make sure you remain in it.

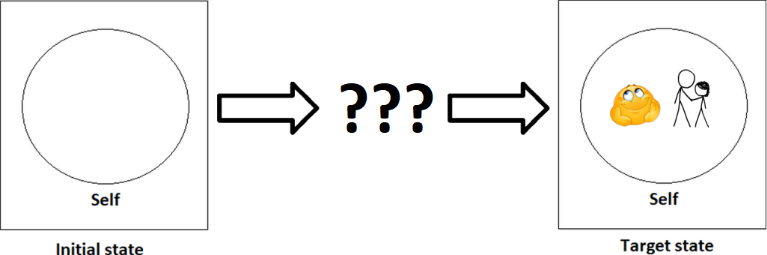

Suppose that the process goes roughly like this:

Sense contact. You have some particular experience, such as the sensation of being sleepy and in bed, or the thought of how it would feel if the attractive person in front of you were to like you. In third-person terms, this sensation/thought is sent to the global workspace of your consciousness.

Valence. An emotional system classifies this experience as being positive, and paints it with a corresponding positive gloss as it is being broadcast into the workspace.

Craving. A craving subsystem notices the positive valence, and interprets the valence as being experienced by you. It registers the imagined state that the valence is associated with, and sets the intention of getting the self into that state and obtaining the resulting positive valence. For example, it may set the intention of getting into a relationship with that attractive person.

Clinging and planning. This intention clings to your mind and is fed to planning subsystems, which make plans for how to reach the state that has been evaluated as better. (My previous post was largely a description of this stage.)

Birth. As these plans are broadcast into consciousness for evaluation, they contain a representation of your current state, creating a stronger experience of having a distinct self that wants a particular thing. Subsystems may emphasize particular aspects of their model of you that seem relevant for achieving the goal: for example, if the other person seems to be drawn towards musicians, your musical skill may become highlighted. Maybe you, being a musical kind of person, could play the guitar and make a good impression on them… emphasizing your “musician nature” in the self-representation that appears in consciousness.

Death. Eventually, you stop pursuing the goal, at least for the time being. Maybe you get what you wanted, it turns out to be unviable right now, or you just get distracted and forget. This particular goal-state and plan disappear from consciousness, and with them, the sense of self that was tracking their completion disappears as well. Before long - or maybe even simultaneously - another self will be created, one which cares about something completely different: maybe you got hungry, and now the craving only cares about getting some food, creating a food-hungry self...
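The stages above can be caricatured as a small pipeline. The following Python sketch is purely illustrative and rests on loudly stated assumptions: the `Experience` record, the single boolean `tagged_as_self` standing in for “interprets the valence as being experienced by you”, and the one-line stage descriptions are all hypothetical simplifications, not a claim about how these processes are implemented in the brain. What it shows is the structure of the argument: the same pleasant experience produces craving, and the goal-tracking self that follows it, only when its valence is bound to the self.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Experience:
    content: str          # e.g. "being sleepy in bed"
    valence: float        # > 0 pleasant, < 0 unpleasant (the valence stage)
    tagged_as_self: bool  # hypothetical flag: is the valence bound to the self?


@dataclass
class Craving:
    target: str
    direction: str  # "get/keep" for positive valence, "avoid" for negative


def craving_stage(exp: Experience) -> Optional[Craving]:
    """Toy craving stage: fires only for nonzero valence tagged as the self's."""
    if exp.valence == 0 or not exp.tagged_as_self:
        return None  # valence is not happening "to me", so nothing to crave
    direction = "get/keep" if exp.valence > 0 else "avoid"
    return Craving(exp.content, direction)


def run_cycle(exp: Experience) -> list[str]:
    """Walk one experience through the six stages, logging each one reached."""
    log = [f"sense contact: {exp.content}", f"valence: {exp.valence:+.1f}"]
    craving = craving_stage(exp)
    if craving is None:
        log.append("no craving; no goal-tracking self is instantiated")
        return log
    log.append(f"craving: {craving.direction} '{craving.target}'")
    log.append("clinging/planning: goal handed to planning subsystems")
    log.append("birth: plans include a self that wants this")
    log.append("death: goal dropped, that self dissolves")
    return log


for line in run_cycle(Experience("being sleepy in bed", 0.8, tagged_as_self=True)):
    print(line)
print(run_cycle(Experience("being sleepy in bed", 0.8, tagged_as_self=False))[-1])
```

Running it on the sleepy-in-bed example logs all six stages when `tagged_as_self=True`, but stops right after the valence stage when it is `False`, even though the valence itself is identical.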

Craving and the self-model

There’s a straightforward reason why craving should be tied into a conception of the self: its purpose is to motivate you to action. If your brain predicts that someone other than you would experience positive valence, this does not trigger craving in the same way. (Imagine getting the one thing that you most desire at the moment. Then imagine some complete stranger getting a similar thing. Not quite the same, is it?)

Your craving subsystems have been wired to make you experience positive valence / avoid negative valence. Each craving subsystem contains an implicit schema along the lines of “I will strive towards a more positive experience, and then I will have that more positive experience in the end”. If a craving subsystem predicted that its actions would give someone else a more positive experience, this would not fulfill the goal condition in that schema. (Of course, craving may have the instrumental goal of making someone else happy, if it predicts that this leads to a positive consequence for you.)

At the same time, there is something interesting going on with the fact that mindfulness and cognitive defusion practices work: if you can mentally transform a source of negative valence into something that feels external to you, it may not bother you as much. In particular, it feels like you don’t need to react to it: that is, there is less of a craving to get it out of your consciousness. The inference of “if I get this emotion out of my mind, I will feel better” is never applied, as the emotion is not experienced as being “in my mind” in the first place.

In other words, the mere experience or prediction of positive/negative valence alone does not seem to be enough to trigger craving. The valence also needs to be bound into a particular abstract representation of the self, and interpreted as happening to “you”.

At this point, we need to distinguish between two different senses of “happening to you”:

- Happening to the system defined by the physical boundaries of your body; for conscious experiences, appearing in the global workspace located within that body. I will call this “happening to the system”.

- Happening to “the self”, an abstract tag which is computed within the system, and which feels like the entity that internal experiences such as emotions happen to; typically only includes part of the content in the global workspace. I will call this “happening to the self-model”.

Craving reacts to valence that is experienced by the self-model. It may seem to also react to events that happen to the system, such as getting to sleep for longer, but this always takes place through something that bottoms out at the self-model experiencing valence. E.g. the thought of sleeping longer creates positive valence, and the craving reacts to the positive valence becoming incorporated in the self-model. (At the same time, the predictions that craving is based on are not necessarily accurate or up to date, so there is also craving for things that we do not actually enjoy.)

At the same time, non-craving-based motivation may react to anything, including events that happen to the system rather than the self-model. Even if the thought of getting to sleep for longer didn't cause craving, you could still stay in bed in order to get more rest. Because this kind of motivation involves some degree of abstract reasoning, craving is probably something that humans need to have in place before non-craving-based systems have had a chance to mature and acquire sophisticated models. A toddler is not capable of intellectually figuring out the consequences of most harmful things and that they should thus be avoided, but the toddler does still have a visceral craving to avoid pain.

Earlier in this series, I mentioned a metaphor of experiencing yourself as the sky, with all the emotions and thoughts as things that appear in the sky but do not affect it. This was intended as a metaphor for how it feels once your mind comes to identify with your underlying field of consciousness, as opposed to the contents of that consciousness. I noted that this raised a question which I promised to come back to: why would this kind of shift in identification reduce suffering?

What I have outlined above suggests an answer: because craving subsystems are activated by a prediction of positive or negative valence being experienced by the self-model. If the system’s self-model changes so that experiences are no longer interpreted as happening to the self-model, craving will not trigger, nor will it produce a feeling of unsatisfactoriness. At the same time, non-craving-based motivation can still reason about the consequences of those events and respond appropriately.

In these articles, I have used the term “no-self”, because that seems to be the established translation for anatta; but I could also have used the arguably better translation of “not self”.

In other words, the brain has something of a built-in model defining what kinds of criteria a piece of conscious experience needs to fulfill in order to be incorporated into the self-model. With sufficiently close study, one may come to notice that actually no conscious content fulfills these criteria: there is nothing in consciousness that is “self”... and if there is nothing that is incorporated into the self-model, then there is nothing that could trigger craving.

At the same time, some “no-self” experiences also seem to be interpreted as “everything is self” rather than “nothing is self”. For example, my earlier post on no-self quoted an experience described as "this is all me [...] my identity is literally everything that I could see through my eyes". Craving also seems reduced in these situations.

With emotions, the raw experience of the emotion is distinct from the process of naming the emotion; the same emotion may have different names in different languages. Likewise, the subsystem which creates the abstract boundary that craving responds to, and the subsystem which produces the verbal label of that boundary, may be distinct. Thus, if the experience of a boundary dividing self and non-self disappears, then this might on a verbal level be interpreted as either “all is self” or “nothing is self”, depending on which framework one is using and which features of the experience one is paying attention to.

This is the fourth post of the "a non-mystical explanation of insight meditation and the three characteristics of existence" series. The next post in the series is "On the construction of the self".

Some of the stick figures and musical notes in this post were borrowed from xkcd.com.

21 comments

comment by pjeby · 2020-05-24T17:33:29.504Z

Interesting model. I'm not 100% certain that mere identification+valence is sufficient (or necessary) to create craving, though. In my experience, the kind of identification that seems to create craving and suffering and mental conflict is the kind that has to do with self-image, in the sense of "what kind of person this would make me", not merely "what kind of sensory experience would I be having".

For example, I can imagine delicious food and go "mmmm" and experience that "mmm" myself, without necessarily creating attachment (vs. merely desire, and the non-self-involved flow state of seeking out food).

But perhaps I'm misinterpreting your model, since perhaps what you're saying is that I would have to also think "it would make me happy to eat that, so I should do that in order to be happy."

Though I think that I'm trying to clarify that it is not merely valence or sensation being located in the self, but that another level of indirection is required, as in your "walk to relax" example... Except that "walk to relax" is really an attempt to escape non-relaxedness, which is already a level of indirection. If I am stressed, and think of taking a walk, however, I could still feel attracted to the calming of walking, without it being an attempt to escape the stress per se.

Yeah. So ISTM that indirection and self-image are more the culprits for creating dysfunctional loops, than mere self-identified experience. Seeking escape from a negative state, or trying to manipulate how the "self" is seen, seem to me to be prerequisites for creating dysfunctional desire.

In contrast, ISTM that many things that induce suffering (e.g. wanting/not wanting to get up) are not about this indirection or self-image manipulation, but rather about wanting conflicting things.

IOW, reducing to just self-identified valence seems like a big oversimplification to me, with devils very much in the details, unless I'm misunderstanding something. Human motivation is rather complex, with a lot of different systems involved, that I roughly break down as:

- pleasure->planning (the system that goes into flow to create a future state, whose state need not include a term for the self)

- effort/reward (the system that makes us bored/frustrated on the one hand, or go sunk-cost on the other)

- moral judgment and righteousness (which can include ideas about the "right" way to do things or the "right" way of being, ideals of perfection or happiness, etc.)

- self-image/esteem (how we see ourselves, as a proxy for "what people will think")

- simple behavioral conditioning and traumatic conditioning

...and those are just what I've found it useful to break it down into. I'm sure it's a lot more complicated than just that. My own observation is that only the first subsystem produces useful motivation for personal goals without creating drama, addiction, self-sabotage, or other side effects... and then only when run in "approach" mode, rather than "avoid" mode.

So for example, the self-image/esteem module is absolutely in line with your model, in the sense that a term for "self" is in the equation, and that using the module tends to produce craving/compulsion loops. But the moral judgment system can produce craving/compulsion loops around other people's behavior, without self-reference! You can go around thinking that other people are doing the wrong thing or should be doing something else, and this creates suffering despite there not being any "self" designated in the thought process. (e.g. "Someone is wrong on the internet!" is not a thought that includes a self whose state is to be manipulated, but rather a judgment that the state of the world is wrong and must be fixed.)

comment by Kaj_Sotala · 2020-05-25T11:16:34.929Z (reply to pjeby)

Nice points. To start, there are a few subtleties involved.

One issue, which I thought I had discussed but which I apparently ended up deleting in an editing phase, is that while I have been referring to the Buddhist concept of dukkha as "suffering", there are some issues with that particular translation. I have also been using the term "unsatisfactoriness", which is better in some respects.

The issue is that when we say "suffering", it tends to refer to a relatively strong experience: if you felt a tiny bit of discomfort from your left sock being slightly itchy, many people would say that this does not count as suffering, it's just a bit of discomfort. But dukkha also includes your reaction to that kind of very slight discomfort.

Furthermore, you can even have dukkha that you are not conscious of. Often we think that suffering is a subjective experience, and thus something that you are conscious of by definition. Can you suffer from something without being conscious of the fact that you are suffering? I can avoid this kind of issue by saying that dukkha is not exactly the same thing as our common-sense definition of suffering, and unlike the common-sense definition, it doesn't always need to be conscious. Rather, dukkha is something like a training signal that is used by the brain to optimize its functioning and to learn to avoid states with a lot of dukkha: like any other signal in the brain, it has the strongest effect when the signal becomes strong enough to make it to conscious awareness, but it has an effect even if it remains unconscious.

One example of unconscious dukkha might be this. Sometimes there is a kind of a background discomfort or pain that you have gotten used to, and you think that you are just fine. But once something happens to make that background discomfort go away, you realize how much better you suddenly feel, and that you were actually not okay before.

My model is something like: craving comes in degrees. A lot of factors go into determining how strong it is. Whenever there is craving, there is also dukkha, but if the craving is very subtle, then the dukkha may also be very subtle. There's a spectrum of how easy it is to notice, going roughly something like:

- Only noticeable in extremely deep states of meditative absorption; has barely any effect on decision-making

- Hovering near the threshold of conscious awareness, becoming noticeable if it disappears or when there's nothing else going on that could distract you

- Registers as a slight discomfort, but will be pushed away from consciousness by any distraction

- Registers as a moderate discomfort that keeps popping up even as other things are going on

- Experienced as suffering, obvious and makes it hard to focus on anything else

- Extreme suffering, makes it impossible to think about anything else

So when you say that suffering seems to be most strongly associated with wanting conflicting things, I agree with that... that is, I agree that that tends to produce the strongest levels of craving (by making two strong cravings compete against each other), and thus the level of dukkha that we would ordinarily call "suffering".

At the same time, I also think that there are levels of craving/dukkha that are much subtler, and which may be present even in the case of e.g. imagining a delicious food - they just aren't strong enough to consciously register, or to have any other effect on decision-making; the main influence in those cases is from non-craving-based motivations. (When the craving is that subtle, there's also a conflict, but rather than being a conflict between two cravings, it's a conflict between a craving and how reality is - e.g. "I would like to eat that food" vs. "I don't actually have any of that food right now".)

> perhaps what you're saying is that I would have to also think "it would make me happy to eat that, so I should do that in order to be happy."

I think there's something like this going on, yes. I mentioned in my previous post that

> a craving for some outcome X tends to implicitly involve at least two assumptions:
>
> 1. achieving X is necessary for being happy or avoiding suffering
>
> 2. one cannot achieve X except by having a craving for it

Both of these assumptions are false, but subsystems associated with craving have a built-in bias to selectively sample evidence which supports these assumptions, making them frequently feel compelling. Still, it is possible to give the brain evidence which lets it know that these assumptions are wrong: that it is possible to achieve X without having craving for it, and that one can feel good regardless of achieving X.

One way that I've been thinking of this is that a craving is a form of a hypothesis, in the predictive processing sense where hypotheses drive behavior by seeking to prove themselves true. For example, your visual system may see someone's nose and form the hypothesis that "the thing that I'm seeing is a nose, and a nose is part of a person's face, so I'm seeing someone's face". That contains the prediction "faces have eyes next to the nose, so if I look slightly up and to the right I will see an eye, and if I look left from there I will see another eye"; it will then seek to confirm its prediction by making you look at those spots and verify that they do indeed contain eyes.

This is closely related to two points that you've talked about before; that people form unconscious beliefs about what they need in order to be happy, and that the mind tends to generate filters which pick out features of experience that support the schema underlying the filter - sometimes mangling the input quite severely to make it fit the filter. The "I'm seeing a face" hypothesis is a filter that picks out the features - such as eyes - which support it. In terms of the above, once a craving hypothesis for X is triggered, it seeks to maintain the belief that happiness requires getting X, focusing on evidence which supports that belief. (To be clear, I'm not saying that all filters are created by craving; rather, craving is one subtype of such a filter.)

My model is that the brain has something like a "master template for craving hypotheses". Whenever something triggers positive or negative valence, the brain "tries on" the generic template for craving ("I need to get / avoid this in order to be happy") adapted to this particular source of valence. How strong a craving is produced depends on how much evidence can be found to support the hypothesis. If you just imagine a delicious food but aren't particularly hungry, then there isn't much of a reason to believe that you need it for your happiness, so the craving is pretty weak. If you are stressed out and seriously need to get some work done, then "I need to relax while I'm on my walk" has more evidence in its favor, so it produces a stronger craving.

One description for the effects of extended meditative practice is "you suffer less, but you notice it more". Based on the descriptions and my own experience, I think this means roughly the following:

- By doing meditative practices, you develop better introspective awareness and ability to pay attention to subtle nuances of what's going on in your mind.

- As your ability to do this improves, you become capable of seeing the craving in your mind more clearly.

- All craving hypotheses are ultimately false, because they hold that craving is necessary for avoiding dukkha (discomfort), but actually craving is that which generates dukkha in the first place. Each craving hypothesis attributes dukkha to an external source, when it is actually an internally-generated error signal.

- When your introspective awareness and equanimity sharpen enough, your mind can grab onto a particular craving without getting completely pulled into it. This allows you to see that the craving is trying to avoid discomfort, and that it is also creating discomfort by doing so.

- Seeing both of these at the same time proves the craving hypothesis false, triggering memory reconsolidation and eliminating the craving.

- In order to see the craving clearly enough to eliminate it, your introspective awareness had to become sharper and more capable of magnifying subtle signals to the level of conscious awareness. As a result, as you eliminate strong and moderate-strength cravings, the "detection threshold" at which a craving and its associated dukkha become strong enough to be consciously detectable drops. Cravings and discomforts which were previously too subtle to notice now start appearing in consciousness.

- The end result is that you have less dukkha (suffering) overall, but become better at noticing those parts of it that you haven't eliminated yet.
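The "suffer less, notice more" dynamic in the list above can be illustrated with a toy simulation. Everything numeric here is an assumption for illustration: craving intensities as plain numbers, a detection threshold that halves each round (`sharpening=0.5`), and the rule that any craving at or above the threshold is seen through and released. The function name `simulate_practice` is hypothetical, and the toy ignores the generation of new cravings.

```python
def simulate_practice(cravings, threshold, rounds, sharpening=0.5):
    """Toy sketch: each round, noticeable cravings are released and the
    detection threshold drops. Returns (total dukkha, fraction noticed)."""
    remaining = list(cravings)
    history = []
    for _ in range(rounds):
        # Cravings strong enough to be noticed get seen through and released.
        remaining = [c for c in remaining if c < threshold]
        # Sharper introspection lowers the detection threshold.
        threshold *= sharpening
        total = sum(remaining)
        noticed = sum(c for c in remaining if c >= threshold)
        history.append((total, noticed / total if total else 0.0))
    return history


history = simulate_practice([8, 4, 2, 1, 0.5], threshold=5, rounds=4)
for total, fraction in history:
    print(f"total dukkha {total:4.1f}, fraction noticed {fraction:.2f}")
```

With these made-up numbers, total dukkha falls from 7.5 to 0.5 over the four rounds, while the fraction of the remaining dukkha that sits above the detection threshold rises from about 0.53 to 1.00.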

There are some similarities between working with craving and the kind of work with the moral judgment system that you discussed in your post about it [LW · GW]. That is, we have learned rules/beliefs which trigger craving in particular situations, just as we have learned rules/beliefs which trigger moral judgment in some situations. As with moral judgment, craving is a system in the brain that cannot be eliminated entirely, and lots of its specific instances need to be eliminated separately - but there are also interventions deeper in the belief network that propagate more widely, eliminating more cravings.

One particular problem with eliminating craving is that even as you eliminate particular instances of it, new craving keeps being generated, as the underlying beliefs about its usefulness are slow to change even as special cases get repeatedly disproven. The claim from Buddhist psychology, which my experience causes me to consider plausible, is that the beliefs which cause cravings to be learned are entangled with beliefs about the self. Changing the beliefs which form the self-model causes changes to craving - as the conception of "I" changes, that changes the kinds of evidence which are taken to support the hypothesis of "I need X to be happy". Drastic enough updates to the self-model can cause a significant reduction in the amount of craving that is generated, to the point that one can unlearn it faster than it is generated.

> Though I think that I'm trying to clarify that it is not merely valence or sensation being located in the self, but that another level of indirection is required, as in your "walk to relax" example...

So for craving, indirection can certainly make it stronger, but at its most basic it's held to be a very low-level response to any valence. Physical pain and discomfort are the most obvious example: pain is very immediate and present, but if it becomes experienced as less self-related, it too becomes less aversive. In an earlier comment [LW(p) · GW(p)], I described an episode in which my sense of self seemed to become temporarily suspended; the result was that strong negative valence (specifically cold shock from an icy shower) was experienced just as strongly and acutely as before, but it lacked the aversive element - I got out of the shower because I was concerned about the health effects of long-term exposure, but could in principle have remained there for longer if I had wanted. I have had other similar experiences since then, but that one was the most dramatic illustration.

In the case of physical pain, the hypothesis seems to be something like "I have to get this sensation of pain out of my consciousness in order to feel good". If that hypothesis is suspended, one still experiences the sensation of pain, but without the need to get it out of their mind.

(This sometimes feels really weird - you have a painful sensation in your mind, and it feels exactly as painful as always, and you keep expecting yourself to flinch away from it right now... except, you just never do. It just feels really painful and the fact that it feels really painful also does not bother you at all, and you just feel totally confused.)

> But the moral judgment system can produce craving/compulsion loops around other people's behavior, without self-reference! You can go around thinking that other people are doing the wrong thing or should be doing something else, and this creates suffering despite there not being any "self" designated in the thought process. (e.g. "Someone is wrong on the internet!" is not a thought that includes a self whose state is to be manipulated, but rather a judgment that the state of the world is wrong and must be fixed.)

So there's a subtlety in that the moral judgment system is separate from the craving system, but it does generate valence that the craving system also reacts to, so their operation gets kinda intermingled. (At least, that's my working model - I haven't seen any Buddhist theory that would explicitly make these distinctions, though honestly that may very well just be because I haven't read enough of it.)

So something like:

- You witness someone being wrong on the internet

- The moral judgment system creates an urge to argue with them

- Your mind notices this urge and forms the prediction that resisting it would feel unpleasant, and even though giving in to it isn't necessarily pleasant either, it's at least less unpleasant than trying to resist the urge

- There's a craving to give in to the urge, consisting of the hypothesis that "I need to give in to this urge and prove the person on the internet wrong, or I will experience greater discomfort than otherwise"

- The craving causes you to give in to the urge

This is a nice example of how cravings are often self-fulfilling prophecies. Experiencing a craving is unpleasant; when there is negative valence from resisting an urge, craving is generated which tries to resist that negative valence. The negative valence would not create discomfort by itself, but there is discomfort generated by the combination of "craving + negative valence". The craving says that "if I don't give in to the urge, there will be discomfort"... and as soon as you give in to the urge, the craving has gotten you to do what it "wanted" you to do, so it disappears and the discomfort that was associated with it disappears as well. So the craving just "proved" that you had to give in to the urge in order to avoid the discomfort from the negative valence... even though the discomfort was actually produced by the craving itself!

Whereas if you eliminated the craving to avoid this particular discomfort, then the discomfort from resisting the urge would also disappear. Note that this does not automatically mean that you would resist the urge: it just means that you'd have the option to, if you had some reason to do so. But falsifying the beliefs behind the craving is distinct from falsifying the beliefs that triggered the moral judgment system; you might still give in to the urge, if you believed it to be correct and justified. (This is part of my explanation for why it seems that you can reach high levels of enlightenment and see through the experience of the self, and still be a complete jerk towards others.)
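The self-fulfilling loop described in the last two paragraphs can be written out as a toy function, under the stated assumption that negative valence only becomes discomfort in combination with craving. The function `felt_discomfort` and its numeric values are hypothetical illustrations, not a model from the post.

```python
def felt_discomfort(resist_urge: bool, craving_present: bool,
                    valence_of_resisting: float = 1.0) -> float:
    """Toy sketch: discomfort comes from craving + negative valence together."""
    if not resist_urge:
        # Giving in gives the craving what it "wanted", so it (and its
        # discomfort) disappears.
        return 0.0
    # Resisting the urge produces negative valence, which on this model only
    # turns into discomfort while a craving is pushing against it.
    return valence_of_resisting if craving_present else 0.0


for craving in (True, False):
    resist = felt_discomfort(resist_urge=True, craving_present=craving)
    give_in = felt_discomfort(resist_urge=False, craving_present=craving)
    print(f"craving={craving}: resist -> {resist}, give in -> {give_in}")
```

With the craving present, comparing the two branches seems to "prove" that giving in was necessary; with it removed, resisting costs nothing, so the urge becomes optional rather than compulsory. Nothing in this toy decides whether you should resist, which mirrors the point that the moral judgment system is a separate input.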

↑ comment by pjeby · 2020-05-25T21:09:39.050Z

This is all very interesting, but I can't help but notice that this idea of valence doesn't seem to be paying rent in predictions that are different from what I'd predict without it. And to the extent it does make different predictions, I don't think they're accurate, as they predict suffering or unsatisfactoriness where I don't consciously experience it, and I don't see what benefit there is to having an invisible dragon in that context.

I mean, sure, you can say there is a conflict between "I want that food" and "I don't have it", but this conflict can only arise (in my experience) if there is a different thought behind "I want", like "I should". If "I want" but "don't have", this state is readily resolved by either a plan to get it, or a momentary sense of loss in letting go of it and moving on to a different food.

In contrast, if "I should" but "don't have", then this actually creates suffering, in the form of a mental loop arguing that it should be there, but it isn't, but it was there, but someone ate it, and they shouldn't have eaten it, and so on, and so forth, in an unending loop of hard-to-resolve suffering and "unsatisfactoriness".

In my model, I distinguish between these two kinds of conflict -- trivially resolved and virtually irreconcilable -- because only one of them is the type that people come to me for help with. ;-) More notably, only one can reasonably be called "suffering", and it's also the only one where meditation of some sort might be helpful, since the other will be over before you can start meditating on it. ;-)

If you want to try to reduce this idea further, one way of distinguishing these types of conflict is that "I want" means "I am thinking of myself with this thing in the future", whereas "I should" means "I am thinking of myself with this thing in the past/present".

Notice that only one of these thoughts is compatible with the reality of not having the thing in the present. I can not-have food now, and then have-food later. But I can't not-have food now, and also have-food now, nor can I have-food in the past if I didn't already. (No time travel allowed!)

Similarly, in clinging to positive things, we are imagining a future negative state, then rejecting it, insisting the positive thing should last forever. It's not quite as obvious a causality violation as time travel, but it's close. ;-)

I guess what I'm saying here is that ISTM we experience suffering when our "how things (morally or rightly) ought to be" model conflicts with our "how things actually are" model, by insisting that the past, present, or likely future are "wrong". This model seems to me to be a lot simpler than all these hypotheses about valence and projections and self-reference and whatnot.

You say that:

> - You witness someone being wrong on the internet
>
> - The moral judgment system creates an urge to argue with them
>
> - Your mind notices this urge and forms the prediction that resisting it would feel unpleasant, and even though giving in to it isn't necessarily pleasant either, it's at least less unpleasant than trying to resist the urge
>
> - There's a craving to give in to the urge, consisting of the hypothesis that "I need to give in to this urge and prove the person on the internet wrong, or I will experience greater discomfort than otherwise"
>
> - The craving causes you to give in to the urge

But this seems like adding unnecessary epicycles. The idea of an "urge" does not require the extra steps of "predicting that resisting the urge would be unpleasant" or "having a craving to give in to the urge", etc., because that's what "having an urge" means. The other parts of this sequence are redundant; it suffices to say, "I have an urge to argue with that person", because the urge itself combines both the itch and the desire to scratch it.

Notably, hypothesizing the other parts doesn't seem to make sense from an evolutionary POV, as it is reasonable to assume that the ability to have "urges" must logically precede the ability to make predictions about the urges, vs. the urges themselves encoding predictions about the outside world. If we have evolved an urge to do something, it is because evolution already "thinks" it's probably a good idea to do the thing, and/or a bad idea not to, so another mechanism that merely recapitulates this logic would be kind of redundant.

(Not that redundancy can't happen! After all, our brain is full of it. But such redundancy as described here isn't necessary to a logical model of craving or suffering, AFAICT.)

↑ comment by Kaj_Sotala · 2020-05-26T11:48:56.764Z

Well, whether or not a model is needlessly complex depends on what it needs to explain. :-)

Back when I started thinking about the nature of suffering, I also had a relatively simple model [LW · GW], basically boiling down to "suffering is about wanting conflicting things". (Upon re-reading that post from nine years back, I see that I credit you for a part of the model that I outlined there. We've been at this for a while. :-)) I still had it until relatively recently. But I found that there were things which it didn't really explain or predict. For example:

- You can decouple valence and aversion, so that painful sensations appear just as painful as before, but do not trigger aversion.

- Changes to the sense of self cause changes even to the aversiveness of things that don't seem to be related to a self-model (e.g. physical pain).

- You can learn to concentrate better by training your mind to notice [LW · GW] that it keeps predicting that indulging in a distraction is going to eliminate the discomfort from the distracting urges, but that it could just as well drop the distraction entirely.

- There are mental moves that you can make to investigate craving in a way that causes the mind to notice that maintaining the craving is actually preventing it from feeling good, and then to drop it.

- If you can get your mind into states in which there is little or no craving, then those states will feel intrinsically good without regard to their valence.

- Upon investigation, you can notice that many states that you had thought were purely pleasant actually contain a degree of subtle discomfort; releasing the craving in those states then gets you into states that are more pleasant overall.

- If you train your mind to have enough sensory precision, you can eventually come to directly observe how the mind carries out the kinds of steps that I described under "Let’s say that there is this kind of a process": an experience being painted with valence, that valence triggering craving, a new self being fabricated by that craving, and so on.

From your responses, it's not clear to me how much credibility you lend to these kinds of claims. If you feel that meditation doesn't actually provide any real insight into how minds work and that I'm just deluded, then I think that that's certainly a reasonable position to hold. I don't think that that position is true, mind you, but it seems reasonable that you might be skeptical. After all, most of the research on the topic is low quality, there's plenty of room for placebo and motivated reasoning effects, introspection is famously unreliable, et cetera.

But ISTM that if you are willing to at least grant that I and others who are saying these kinds of things are not outright lying about our subjective experience... then you need to at least explain why it seems to us that the urge and the aversion from resisting the urge can become decoupled, or why it seems to us that reductions in the sense of self systematically lead to reductions in the aversiveness of negative valence.

I agree that if I were just developing a model of human motivation and suffering from first principles and from what seems to make evolutionary sense, I wouldn't arrive at this kind of an explanation. "An urge directly combines an itch and the desire to scratch it" would certainly be a much more parsimonious model... but it would then predict that you can't have an urge without a corresponding need to engage in it, and that prediction is contradicted both by my experience and the experience of many others who engage in these kinds of practices.

↑ comment by pjeby · 2020-05-27T17:04:37.497Z

No, that's a good point, as far as it goes. There does seem to be some sort of meta-process that you can use to decouple from craving regarding these things, though in my experience it seems to require continuous attention, like an actively inhibitory process. In contrast, the model description you gave made it sound like craving was an active process that one could simply refrain from, and I don't think that's predictively accurate.

Your points regarding what's possible with meditation also make some sense... it's just that I have trouble reconciling the obvious evolutionary model with "WTF is meditation doing?" in a way that doesn't produce things that shouldn't be there.

Consciously, I know it's possible to become willing to experience things that you previously were unwilling to experience, and that this can eliminate aversion. I model this largely under the second major motivational mechanic, that of risk/reward, effort/payoff.

That is, that system can decide that some negative thing is "worth it" and drop conflict about it. And meditation could theoretically reset the threshold for that, since to some extent meditation is just sitting there, despite the lack of payoff and the considerable payoffs offered to respond to current urges. If this recalibrates the payoff system, it would make sense within my own model, and resolve the part where I don't see how what you describe could be a truly conscious process, in the way that you made it sound.

IOW, I might more say that part of our motivational system is a module for determining what urges should be acted upon and which are not worth it, or perhaps that translates mind/body/external states into urges or lack thereof, and that you can retrain this system to have different baselines for what constitutes "urge"-ency. ;-) (And thus, a non-conscious version of "valence" in your model.)

That doesn't quite work either, because ISTM that meditation changes the threshold for all urges, not just the specific ones trained. Also, the part about identification isn't covered here either. It might be yet another system being trained, perhaps the elusive "executive function" system?

On the other hand, I find that the Investor (my name for the risk/reward, effort/payoff module) is easily tricked into dropping urges for reasons other than self-identification. For example, the Investor can be tricked into letting you get out of a warm bed into a cold night if you imagine you have already done so. By imagining that you are already cold, there is nothing to be gained by refraining from getting up, and this shifts the "valence", as you call it, in favor of getting up, because the Investor fundamentally works on comparing projections against an "expected status quo". So if you convince it that some other status quo is "expected", it can be made to go along with almost anything.

And so I suppose if you imagine that it is not you who is the one who is going to be cold, then that might work just as well. Or perhaps making it "not me" somehow convinces the Investor that the changes in state are not salient to its evaluations?

Hm. Now that my attention has been drawn to this, it's like an itch I need to scratch. :) I am wondering now, "Wait, why is the Investor so easily tricked?" And for that matter, given that it is so easily tricked, could the feats attributed to long-term meditation be accomplished in general using such tricks? Can I imagine my way to no-self and get the benefits without meditating, even if only temporarily?

Also, I wonder if I have been overlooking the possibility to use Investor mind-tricks to deal with task-switching inertia, which is very similar to having to get out of a warm bed. What if I imagine I have already changed tasks? Hm. Also, if I am imagining no-self, will starting unpleasant tasks be less aversive?

Okay, I'm off to experiment now. This is exciting!
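pjeby's description of the Investor comparing projections against an "expected status quo" is qualitative; a minimal sketch of that comparison, applied to the warm-bed example, might look like the following. The feature names, the numbers and the additive comparison in `investor_agrees` are illustrative assumptions, not part of the original comment.

```python
def investor_agrees(option: dict, expected_status_quo: dict) -> bool:
    """Toy sketch: approve an option only if its projected outcome beats
    whatever state is currently treated as the expected baseline."""
    delta = sum(option[k] - expected_status_quo[k] for k in option)
    return delta > 0


# Hypothetical scores for the warm-bed example.
stay_in_bed = {"warmth": 3, "progress": 0}
get_up = {"warmth": -2, "progress": 4}

# Baseline is "still warm in bed": getting up looks like a net loss.
print(investor_agrees(get_up, stay_in_bed))  # False

# Imagine you are already cold: the warmth loss drops out of the comparison.
imagined_already_cold = {"warmth": -2, "progress": 0}
print(investor_agrees(get_up, imagined_already_cold))  # True
```

The only thing that changes between the two calls is the baseline, which is the sense in which the Investor can be "tricked" by imagining a different status quo.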

↑ comment by Mart_Korz (Korz) · 2020-05-27T22:33:21.309Z

I am very much impressed by the exchange in the parent-comments and cannot upvote sufficiently.

With regards to the 'mental motion':

> In contrast, the model description you gave made it sound like craving was an active process that one could simply refrain from [...]

As I see it, the perspective of this (sometimes) being an active process makes sense from the global workspace theory perspective: there is a part of one's mind that actually decides on activating craving or not. Especially if trained through meditation, it is possible to connect this part to the global workspace, and thus to consciousness, which allows noticing and influencing the decision. If this connection is strong enough and can be activated consciously, it can make sense to call this process a mental motion.

↑ comment by Kaj_Sotala · 2020-05-29T13:45:11.623Z

Cool. :)

> There does seem to be some sort of meta-process that you can use to decouple from craving regarding these things, though in my experience it seems to require continuous attention, like an actively inhibitory process. In contrast, the model description you gave made it sound like craving was an active process that one could simply refrain from, and I don't think that's predictively accurate.

An analogy that I might use is that learning to let go of craving is kind of the opposite of the thing where you practice an effortful thing until it becomes automatic. Craving usually triggers automatically and outside your conscious control, but you can come to gradually increase your odds of being able to notice it, catch it, and do something about it.

"An actively inhibitory process" sounds accurate for some of the mental motions involved. Though merely just bringing more conscious attention to the process also seems to affect it, and in some cases interrupt it, even if you don't actively inhibit it.

> If this recalibrates the payoff system, it would make sense within my own model, and resolve the part where I don't see how what you describe could be a truly conscious process, in the way that you made it sound.

Not sure how I made it sound :-) but a good description might be "semi-conscious", in the same sense that something like Focusing can be: you do it, something conscious comes up, and then a change might happen. Sometimes enough becomes consciously accessible that you can clearly see what it was about, sometimes you just get a weird sensation and know that something has shifted, without knowing exactly what.

> Okay, I'm off to experiment now. This is exciting!

Any results yet? :)

Replies from: pjeby↑ comment by pjeby · 2020-05-31T00:43:57.583Z · LW(p) · GW(p)

Eh. Sorta? I've been busy with clients the last few days, not a lot of time for experimenting. I have occasionally found myself, or rather, found not-myself, several times, almost entirely accidentally or incidentally. A little like a perspective shift changing between two possible interpretations of an image; or more literally, like a shift between first-person, and third-person-over-the-shoulder in a video game.

In the third person perspective, I can observe limbs moving, feel the keys under my fingers as they type, and yet I am not the one who's doing it. (Which, I suppose, I never really was anyway.)

TBH, I'm not sure if it's that I haven't found any unpleasant experiences to try this on, or if it's more that because I've been spontaneously shifting to this state, I haven't found anything to be an unpleasant experience. :-)

↑ comment by Kaj_Sotala · 2020-06-01T08:26:04.166Z

Cool, that sounds like a mild no-self state alright. :) Any strong valence is likely to trigger a self-schema and pull you out of it, though that's a question of practice.

Your description kinda reminds me of the approach in Loch Kelly's The Way of Effortless Mindfulness; it has various brief practices that may induce states like the one that you describe. E.g. in this one, you imagine the kind of a relaxing state in which there is no problem to solve and the sense of self just falls away. (Directly imagining a no-self state is hard, because checking whether you are in a no-self state yet activates the self-schema. But if you instead imagine an external state which is likely to put you in a no-self state, you don't get that kind of self-reference, no pun intended.)

> First, read this mindful glimpse below. Next, choose a memory of a time you felt a sense of freedom, connection, and well-being. Then do this mindful glimpse using your memory as a door to discover the effortless mindfulness that is already here now.
>
> 1. Close your eyes. Picture a time when you felt well-being while doing something active like hiking in nature. In your mind, see and feel every detail of that day. Hear the sounds, smell the smells, and feel the air on your skin; notice the enjoyment of being with your companions or by yourself; recall the feeling of walking those last few yards toward your destination.
>
> 2. Visualize and feel yourself as you have reached your goal and are looking out over the wide-open vista. Feel that openness, connection to nature, sense of peace and well-being. Having reached your goal, feel what it’s like when there’s no more striving and nothing to do. See that wide-open sky with no agenda to think about, and then simply stop. Feel this deep sense of relief and peace.
>
> 3. Now, begin to let go of the visualization, the past, and all associated memories slowly and completely. Remain connected to the joy of being that is here within you.
>
> 4. As you open your eyes, feel how the well-being that was experienced then is also here now. It does not require you to go to any particular place in the past or the future once it’s discovered within and all around.

Recently I've also gotten interested in the Alexander Technique, which seems to have a pretty straightforward series of steps for expanding your awareness and then getting your mind to just automatically do things in a way which feels like non-doing. It also seems to induce the kinds of states that you describe, of just watching oneself work, which I had previously only gotten from meditation.

> Can you pick up a ball without trying to pick up the ball? It sounds contradictory, but it turns out that there is a specific behaviour we do when we are “trying”, and this behaviour is unnecessary to pick up the ball.
>
> How is this possible? Well, consider when you’ve picked up something to fiddle with without realising. You didn’t consciously intend for it to end up in your hand, but there it is. There was an effortlessness to it. [...]
>
> But this kind of non-‘deliberate’ effortless action needn’t be automatic and unchosen, like a nervous fiddling habit; nor need it require redirected attention / collapsed awareness, like not noticing you picked up the object. You can be fully aware of what you’re doing, and ‘watch’ yourself doing it, while choosing to do it, and yet still have there be this effortless “it just happened” quality. [...]
>
> Suppose you do actually want to pick up that ball over there. But you don’t want to ‘do’ picking-up-the-ball. The solution is to set an intention.
>
> [1] Have the intention to pick up the ball. [2] Expand your awareness to include what’s all around you, the room, the route to the ball, and your body inside the room. [3] Notice any reactions of trying to do picking-up-the-ball (like “I am going to march over there and pick up that ball”, or “I am going to get ready to stand up so I can go pick up that ball”, or “I am going to approach the ball to pick it up”) — and decline those reactions. [4] Wait. Patiently hold the intention to pick up the ball. Don’t stop yourself from moving — stopping yourself is another kind of ‘doing’ — yet don’t try to deliberately/consciously move. [5] Let movement happen.

↑ comment by Kaj_Sotala · 2020-06-01T08:42:19.889Z

> Notably, hypothesizing the other parts doesn't seem to make sense from an evolutionary POV, as it is reasonable to assume that the ability to have "urges" must logically precede the ability to make predictions about the urges, vs. the urges themselves encoding predictions about the outside world. If we have evolved an urge to do something, it is because evolution already "thinks" it's probably a good idea to do the thing, and/or a bad idea not to, so another mechanism that merely recapitulates this logic would be kind of redundant.

A hypothesis that I've been considering is whether the shift to becoming more social might have caused a second layer of motivation to evolve. Less social animals can act purely based on physical considerations like the need to eat or avoid a predator, but for humans every action has potential social implications, so it also needs to be evaluated in that light. There are some interesting anecdotes, such as Helen Keller's account suggesting that she only developed a self after learning language. The description of her old state of being sounds like there was just the urge, which was then immediately acted upon, and that this mode of operation then became irreversibly altered:

> Before my teacher came to me, I did not know that I am. [...] I cannot hope to describe adequately that unconscious, yet conscious time of nothingness. I did not know that I knew aught, or that I lived or acted or desired. I had neither will nor intellect. I was carried along to objects and acts by a certain blind natural impetus. I had a mind which caused me to feel anger, satisfaction, desire. These two facts led those about me to suppose that I willed and thought. [...] I never viewed anything beforehand or chose it. [...] My inner life, then, was a blank without past, present, or future, without hope or anticipation, without wonder or joy or faith. [...]
>
> I remember, also through touch, that I had a power of association. I felt tactual jars like the stamp of a foot, the opening of a window or its closing, the slam of a door. After repeatedly smelling rain and feeling the discomfort of wetness, I acted like those about me: I ran to shut the window. But that was not thought in any sense. It was the same kind of association that makes animals take shelter from the rain. From the same instinct of aping others, I folded the clothes that came from the laundry, and put mine away, fed the turkeys, sewed bead-eyes on my doll's face, and did many other things of which I have the tactual remembrance. When I wanted anything I liked,—ice-cream, for instance, of which I was very fond,—I had a delicious taste on my tongue (which, by the way, I never have now), and in my hand I felt the turning of the freezer. I made the sign, and my mother knew I wanted ice-cream. I "thought" and desired in my fingers. [...]
>
> I thought only of objects, and only objects I wanted. It was the turning of the freezer on a larger scale. When I learned the meaning of "I" and "me" and found that I was something, I began to think. Then consciousness first existed for me. Thus it was not the sense of touch that brought me knowledge. It was the awakening of my soul that first rendered my senses their value, their cognizance of objects, names, qualities, and properties. Thought made me conscious of love, joy, and all the emotions. I was eager to know, then to understand, afterward to reflect on what I knew and understood, and the blind impetus, which had before driven me hither and thither at the dictates of my sensations, vanished forever.

This would also make sense in light of the observation that the sense of self may disappear when doing purely physical activities (you fall back to the original set of systems, which doesn't need to think about the self), the PRISM model of consciousness as a conflict-solver, the way that physical and social reasoning seem to be pretty distinct [LW · GW], and a kind of semi-modular approach (you have the old, primarily physical system, and then the new one that can integrate social considerations, just added on top of the old system's suggestions). If you squint, the stuff about simulacra [LW · GW] also feels kinda relevant, as an entirely new set of implications that diverge from physical reality and need to be thought about on their own terms.

I wouldn't be very surprised if this hypothesis turned out to be false, but at least there's suggestive evidence.

comment by kithpendragon · 2020-05-25T15:13:03.623Z · LW(p) · GW(p)

I've been examining anatta recently, and this article really helped clarify some thinking for me! It clicked when my training in computer science began framing the problem in terms of a Self class that gets instantiated each time a subroutine needs a Self object to manipulate for some project. If the brain doesn't do a good job cleaning up old instances, or if multiple instances of the same class have a tendency to coincide and share memory space (perhaps they cross-link heavily to save RAM, as it were), it might lead to a sense of a continuous entity.

Decreasing the coincident instances of Self, by reducing how much the decision-making processes depend on the craving subroutines that rely heavily on Self objects, could lead to times when some process looks for any current instance of Self but finds none available (because they've all been cleaned up for once), and then returns a code for NO_SELF_FOUND. This could lead to a feeling of "there is no self" as an observation on the current state of the Global workspace. The calling process may also elect to work in Global directly. If another process then notices self-like code hanging out nakedly in Global, it might start acting as if Global were an instance of Self, leading to a sense of "all is self".
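To make the analogy a little more concrete, here is a toy Python sketch of it. It only illustrates the metaphor and makes no claim about how brains actually work: the `Self` class, the `Workspace` registry standing in for the Global workspace, and the method names are all hypothetical; only the `NO_SELF_FOUND` code comes from the comment. Several live instances overlapping at once play the role of the "continuous entity", and a lookup made after every instance has been cleaned up returns `NO_SELF_FOUND`.

```python
from __future__ import annotations

NO_SELF_FOUND = "NO_SELF_FOUND"  # return code named in the comment


class Self:
    """Hypothetical object a subroutine creates when its task needs self-reference."""

    def __init__(self, owner: str) -> None:
        self.owner = owner


class Workspace:
    """Hypothetical stand-in for the Global workspace: tracks live Self instances."""

    def __init__(self) -> None:
        self.live_selves: list[Self] = []

    def instantiate_self(self, owner: str) -> Self:
        instance = Self(owner)
        self.live_selves.append(instance)
        return instance

    def release_self(self, instance: Self) -> None:
        # Clean up an instance once the subroutine that needed it is done.
        self.live_selves.remove(instance)

    def find_self(self) -> Self | str:
        # A process asking for "the" self gets the most recent live instance,
        # or NO_SELF_FOUND if every instance has been cleaned up.
        return self.live_selves[-1] if self.live_selves else NO_SELF_FOUND


ws = Workspace()
craving = ws.instantiate_self("craving_subroutine")
planning = ws.instantiate_self("planning_subroutine")
print(len(ws.live_selves))  # 2: overlapping instances, easy to mistake for one entity
ws.release_self(craving)
ws.release_self(planning)
print(ws.find_self())  # NO_SELF_FOUND
```

The "all is self" case from the comment would correspond to some other process treating the `Workspace` object itself as if it were a `Self`, which this sketch deliberately leaves out.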

If true, this would explain why there's so much disagreement on the best translation of "anatta", and also why teachers sometimes claim that no-self and all-self amount to the same thing in the end.

I don't know if all that is functionally representative of what's going on, but it seems worth playing with for a while. At the least, it gives a good sense of why we might pretend to "be the sky"!

↑ comment by Kaj_Sotala · 2020-05-26T11:52:50.295Z · LW(p) · GW(p)

I'm not sure if I managed to follow all of this, but at least the first paragraph seems spot-on to me. :)

comment by Steven Byrnes (steve2152) · 2020-05-23T12:53:18.568Z · LW(p) · GW(p)

Fascinating!

My running theory so far (a bit different from yours) would be:

- Motivation = prediction of reward

- Craving = unhealthily strong motivation—so strong that it breaks out of the normal checks and balances that prevent wishful thinking [LW · GW] etc.

- When empathetically simulating someone's mental state, we evoke the corresponding generative model in ourselves (this is "simulation theory"), but it shows up in attenuated form, i.e. with weaker (less confident) predictions (I've already been thinking that, see here [LW · GW]).

- By meditative practice, you can evoke a similar effect in yourself, sorta distancing yourself from your feelings and experiencing them in a quasi-empathetic way.

- ...Therefore, this is a way to turn cravings (unhealthily strong motivations) into mild, healthy, controllable motivations

Incidentally, why is the third bullet point true, and how is it implemented? I was thinking about that this morning and came up with the following: if you have generative model A that in turn evokes (implies) generative model B, then model B inherits the confidence of A, attenuated by a measure of how confident you are that A leads to B (basically, P(A) × P(B|A)). So if you indirectly evoke a generative model, it's guaranteed to appear with a lower confidence value than if you directly evoke it.

In empathetic simulation, A would be the model of the person you're simulating, and B would be the model that you think that person is thinking / feeling.
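The attenuation rule can be written out as a small Python sketch, assuming that confidences behave like probabilities in [0, 1]; the function names and the example numbers are hypothetical. Since P(A) × P(B|A) is a product of two numbers in [0, 1], it can never exceed P(A), and every further indirect step can only keep the confidence the same or shrink it.

```python
from __future__ import annotations


def evoked_confidence(p_a: float, p_b_given_a: float) -> float:
    """Confidence in model B when it is evoked indirectly through model A."""
    for p in (p_a, p_b_given_a):
        if not 0.0 <= p <= 1.0:
            raise ValueError("confidences are treated as probabilities in [0, 1]")
    return p_a * p_b_given_a


def chained_confidence(p_start: float, links: list[float]) -> float:
    """Apply the attenuation once per indirect step (A -> B -> C -> ...)."""
    confidence = p_start
    for p_link in links:
        confidence = evoked_confidence(confidence, p_link)
    return confidence


# Hypothetical numbers: 0.9 confidence in my model of the other person (A),
# 0.7 confidence that A implies they are feeling a particular way (B).
empathetic = evoked_confidence(0.9, 0.7)
print(round(empathetic, 2))  # 0.63
```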

Sorry if that's stupid, just thinking out loud :)

↑ comment by Kaj_Sotala · 2020-05-25T11:23:29.235Z · LW(p) · GW(p)

Hmm... I think that there's something more going on than just an unhealthily strong motivation, given that craving looks like a hypothesis that can often be disproven; see my reply to pjeby [LW(p) · GW(p)] in the other comment.

↑ comment by Steven Byrnes (steve2152) · 2020-05-29T13:26:25.629Z · LW(p) · GW(p)

Interesting!

I don't really see the idea of hypotheses trying to prove themselves true. Take the example of saccades that you mention. I think there's some inherent (or learned) negative reward associated with having multiple active hypotheses (a.k.a. subagents a.k.a. generative models) that clash with each other by producing confident mutually-inconsistent predictions about the same things. So if model A says that the person coming behind you is your friend and model B says it's a stranger, then that summons model C which strongly predicts that we are about to turn around and look at the person. This resolves the inconsistency, and hence model C is rewarded, making it ever more likely to be summoned in similar circumstances in the future.
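The saccade example describes a simple reinforcement loop, which can be illustrated with a toy Python sketch. Everything numeric here is an assumption rather than part of the description: the summoning weights, the learning rate `lr`, the reward of 1.0 for resolving the clash, and the simplification that model C is the one summoned in every episode.

```python
from __future__ import annotations


def conflicting(predictions: dict[str, str]) -> bool:
    """True when the active models make mutually inconsistent predictions."""
    return len(set(predictions.values())) > 1


def summon_probability(weights: dict[str, float], model: str) -> float:
    """Chance that `model` is summoned, proportional to its learned weight."""
    return weights[model] / sum(weights.values())


def run_episode(weights: dict[str, float], lr: float = 0.5) -> None:
    """One encounter: A and B clash, C (turn and look) settles it and is rewarded."""
    predictions = {"A": "friend behind me", "B": "stranger behind me"}
    if conflicting(predictions):
        # Looking resolves the clash; the reward of 1.0 is an assumed value.
        reward = 1.0
        weights["C_turn_and_look"] += lr * reward


weights = {"C_turn_and_look": 1.0, "keep_walking": 1.0}
before = summon_probability(weights, "C_turn_and_look")
for _ in range(5):
    run_episode(weights)
after = summon_probability(weights, "C_turn_and_look")
print(f"{before:.2f} -> {after:.2f}")  # 0.50 -> 0.78
```

Note that nothing in this loop requires a model to protect itself from falsification; the reward attaches to whichever response removed the inconsistency.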

You sorta need multiple inconsistent models for it to make sense to try to prove one of them true. How else would you figure out which part of the model to probe? If a model were trying to prevent itself from being falsified, that would predict that we look away from things that we're not sure about rather than towards them.

OK, so here's (how I think of) a typical craving situation. There are two active models.

Model A: I will eat a cookie and this will lead to an immediate reward associated with the sweet taste

Model B: I won't eat the cookie, instead I'll meditate on gratitude and this will make me very happy

Now in my perspective, this is great evidence that valence and reward are two different things. If becoming happy is the same as reward, why haven't I meditated in the last 5 years even though I know it makes me happy? And why do I want to eat that cookie even though I totally understand that it won't make me smile even while I'm eating it, or make me less hungry, or anything?

When you say "mangling the input quite severely to make it fit the filter", I guess I'm imagining a scenario like, the cookie belongs to Sally, but I wind up thinking "She probably wants me to eat it", even if that's objectively far-fetched. Is that Model A mangling the evidence to fit the filter? I wouldn't really put it that way...

The thing is, Model A is totally correct; eating the cookie would lead to an immediate reward! It doesn't need to distort anything, as far as it goes.

So now there's a Model A+D that says "I will eat the cookie and this will lead to an immediate reward, and later Sally will find out and be happy that I ate the cookie, which will be rewarding as well". So model A+D predicts a double reward! That's a strong selective pressure helping advance that model at the expense of other models, and thus we expect this model to be adopted, unless it's being weighed down by a sufficiently strong negative prior, e.g. if this model has been repeatedly falsified in the past, or if it contradicts a different model which has been repeatedly successful and rewarded in the past.
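A toy version of this selection pressure, sketched in Python. The scoring rule (sum of predicted rewards minus a penalty standing in for a negative prior from past falsifications) and all the numbers are assumptions chosen for illustration; the comment doesn't specify how competing models are weighed against each other.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Model:
    name: str
    predicted_rewards: list[float]  # rewards the model predicts, arbitrary units
    prior_penalty: float = 0.0      # hypothetical weight-down from past falsifications

    def score(self) -> float:
        return sum(self.predicted_rewards) - self.prior_penalty


def adopted(models: list[Model]) -> str:
    """Return the name of the highest-scoring model (a toy selection rule)."""
    return max(models, key=lambda m: m.score()).name


a = Model("A: eat cookie", [1.0])
a_plus_d = Model("A+D: eat cookie, Sally is glad", [1.0, 1.0])
print(adopted([a, a_plus_d]))  # A+D wins on its "double reward"

a_plus_d.prior_penalty = 1.5   # e.g. this story has been falsified before
print(adopted([a, a_plus_d]))  # now plain A wins
```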

(This discussion / brainstorming is really helpful for me, thanks for your patience.)

↑ comment by Kaj_Sotala · 2020-06-05T07:42:54.364Z · LW(p) · GW(p)

> If a model were trying to prevent itself from being falsified, that would predict that we look away from things that we're not sure about rather than towards them.

That sounds like the dark room problem. :) That kind of thing does seem to sometimes happen, as people have varying levels of need for closure. But there seem to be several competing forces going on, one of them being a bias towards proving the hypothesis true by sampling positive evidence, rather than just avoiding evidence.

> Model A: I will eat a cookie and this will lead to an immediate reward associated with the sweet taste
>
> Model B: I won't eat the cookie, instead I'll meditate on gratitude and this will make me very happy
>
> Now in my perspective, this is great evidence that valence and reward are two different things. If becoming happy is the same as reward, why haven't I meditated in the last 5 years even though I know it makes me happy? And why do I want to eat that cookie even though I totally understand that it won't make me smile even while I'm eating it, or make me less hungry, or anything?

This is actually a nice example, because I claim that if you learn and apply the right kinds of meditative techniques and see the craving in more detail, then your mind may notice that eating the cookie actually won't bring you very much lasting satisfaction (even if it does bring a brief momentary reward)... and then it might gradually shift over to preferring meditation instead. (At least in the right circumstances; motivation is affected by a lot of different factors.)

Which cravings get favored in which circumstances looks like a complex question that I don't have a full model of... but we know from human motivation in general [LW · GW] that there's a bias towards actions that bring immediate rewards. To some extent it might be a question of the short-term rewards simply getting reinforced more. Eating a cookie takes less time than meditating for an hour, so you are likely to eat more cookies than you finish meditation sessions, with each eaten cookie slightly notching up the estimated confidence in the hypothesis and biasing your future decisions even more in favor of the cookie.
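The frequency argument can be illustrated with a hypothetical Python sketch. Both hypotheses get the same time budget and the same small confidence bump per completed action, so the only difference between them is how often each one gets reinforced. The durations, the starting confidence, and the update rule `notch_up` are all assumptions, not measurements.

```python
from __future__ import annotations


def notch_up(confidence: float, rate: float = 0.05) -> float:
    """Move confidence a small step toward 1.0 after one confirming outcome."""
    return confidence + rate * (1.0 - confidence)


def confidence_after(minutes_available: int, minutes_per_action: int,
                     start: float = 0.5) -> tuple[int, float]:
    """Count completed actions in the time budget; apply one notch per completion."""
    completions = minutes_available // minutes_per_action
    confidence = start
    for _ in range(completions):
        confidence = notch_up(confidence)
    return completions, confidence


# Hypothetical durations: eating a cookie ~1 minute, a meditation session 60.
cookie = confidence_after(minutes_available=120, minutes_per_action=1)
meditation = confidence_after(minutes_available=120, minutes_per_action=60)
print(cookie, meditation)
```

With these made-up numbers the cookie hypothesis is reinforced 120 times against 2 for meditation, so it ends up far more confident even though each individual reinforcement is identical.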

> The thing is, Model A is totally correct; eating the cookie would lead to an immediate reward! It doesn't need to distort anything, as far as it goes.

So the prediction that craving makes isn't actually "eating the cookie will bring reward"; I'm not sure what the exact prediction is, but it's closer to something like "eating the cookie will lead to less dissatisfaction". And something like the following may happen:

You're trying to meditate, and happen to think of the cookie on your desk. You get a craving to stop meditating and go eat the cookie. You try to resist the craving, but each moment that you resist it feels unpleasant. Your mind keeps telling you that if you just gave in to the temptation, then the discomfort from resisting it would stop. Finally, you might give in, stopping your meditation session short and going to eat the cookie.

What happened here was that the craving told you that in order to feel more satisfied, you need to give in to the craving. When you did go eat the cookie, this prediction was proven true. But there was a self-fulfilling prophecy there: the craving told you that the only way to eliminate the discomfort was by giving in to the craving, when just dropping the craving would also have eliminated the discomfort. Maybe the craving didn't exactly distort the sense data, but it certainly sampled a very selective part of it.
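The selective sampling in this scenario can be sketched in Python. This is a hypothetical illustration: the action names are made up, and the rule that both giving in and dropping the craving end the discomfort is taken from the example above as an assumption. Because the craving only ever proposes one way out, the evidence log fills up with confirmations for that branch, while the alternative is never tested at all.

```python
from __future__ import annotations


def discomfort_stops(action: str) -> bool:
    # Assumption from the example: both giving in AND simply dropping
    # the craving end the discomfort of resisting it.
    return action in {"give_in", "drop_craving"}


def observed_evidence(actions_tried: list[str]) -> dict[str, list[bool]]:
    """Record whether discomfort stopped, but only for actions actually taken."""
    evidence: dict[str, list[bool]] = {}
    for action in actions_tried:
        evidence.setdefault(action, []).append(discomfort_stops(action))
    return evidence


# The craving only ever suggests one way out, so only that branch gets sampled.
history = ["give_in"] * 10
evidence = observed_evidence(history)
print(evidence.get("give_in"))       # ten confirmations
print(evidence.get("drop_craving"))  # None: the alternative was never tested
```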

The reason why I like to think of cravings as hypotheses is that if you develop sufficient introspective awareness for the mind to see, in real time, that the craving is actively generating discomfort rather than helping to avoid it, then (that particular) craving will be eliminated. The alternative hypothesis that replaces it is then something like "I'm fine even if I go without a cookie for a while".

comment by Slider · 2020-05-23T17:19:24.910Z · LW(p) · GW(p)

Seeing the terms being homed in on in English raised the question of which terms would be appropriate in my non-secondary languages.

After reflecting somewhat, the list seems to be:

- "mukava": adjective for positive craving

- "vaivata": verb for suffering

- "välittää": verb for craving

Because of its fairly high systematicity and low rate of language change, Finnish is somewhat more stable in its derived words. "Mukava" could be translated as "pleasant" or "nice", but there is a case to be made that it really means "compatible with how I am". Another word close to the same root is "muokata", which would be translated as "edit", i.e. to transform something else (to fit it for a specific purpose). "Mukautua" could be translated as "fit in" or "adapt".

"Vaivata" could also be translated as "bother", but it also has a meaning close to "suffer". The idiom "nähdä vaivaa" (literally "to see suffering") means to expend effort or work. A term referring to observation is curious, and it suggests a model where you "evaluate a thing as something that should be rectified", which almost automatically leads you to start working towards that goal. An almost exactly opposite image is found in the English idiom "Have an issue? Grab a tissue.": I won't act on this, even if negative valence might be found here (by some other person).

"Välittää" by itself would translate as "to care", but "väli" by itself means space or gap (virus countermeasures include slogans such as "Välitä", with the double meaning of "be considerate of others" and "keep a spatial separation"). One could argue that the concept has a side of "determining what the thing means in relation to you". This would conceptually link caring to self-image reference. Or it could refer to the "value gap" between two different states: would things really be better if one of the options actually were the case?

Not the most unmysterious analysis, but it seems like a significant connotation space, with a fairly objective grounding of meaning for those with access to both languages' terms.

Reading the meditation information made me experience the video game "Control" in a very different way. Again, Finnish cultural knowledge might be a benefit. The characters' fight against the Hiss is very close to not being able to be satisfied with the nature of their reality. The protagonist's identity struggles mirror the relationship between player and game, which makes things like the "fifth wall breaking" read as an admission of trying to enact a psychological shift in the player. But that is somewhat mysterious, unless it is similar to interpreting how Twin Peaks, being a TV show about being a TV show, "demystifies". Your explanativeness may vary.

↑ comment by Kaj_Sotala · 2020-05-26T11:00:21.103Z · LW(p) · GW(p)