Craving, suffering, and predictive processing (three characteristics series)

post by Kaj_Sotala · 2020-05-15


This is the third post of the "a non-mystical explanation of insight meditation and the three characteristics of existence" series. I originally intended this post to more closely connect no-self and unsatisfactoriness, but then decided to focus on unsatisfactoriness in this post and to relate it to no-self in the next one.

Unsatisfactoriness

In the previous post, I discussed some of the ways that the mind seems to construct a notion of a self. In this post, I will talk about a specific form of motivation, which Buddhism commonly refers to as craving (taṇhā in the original Pali). Some discussions distinguish between craving (in the sense of wanting positive things) and aversion (wanting to avoid negative things); this article uses the definition where both desire and aversion are considered subtypes of craving.

My model is that craving is generated by a particular set of motivational subsystems within the brain. Craving is not the only form of motivation that a person has, but it normally tends to be the loudest and most dominant. As a form of motivation, craving has some advantages:

- People tend to experience a strong craving to pursue positive states and avoid negative states. If they had less craving, they might not pursue these with equal zeal.

- To some extent, craving looks to me like a mechanism that shifts behaviors from exploration to exploitation.

- In an earlier post, Building up to an Internal Family Systems model, I suggested that the human mind might incorporate mechanisms that act as priority overrides to avoid repeating particular catastrophic events. Craving feels like a major component of how this is implemented in the mind.

- Craving tends to be automatic and visceral. A strong craving to eat when hungry may cause a person to get food when they need it, even if they did not intellectually understand the need to eat.

At the same time, craving also has a number of disadvantages:

- Craving superficially looks like it cares about outcomes. However, it actually cares about positive or negative feelings (valence). This can lead to behaviors that are akin to wireheading in that they suppress the unpleasant feeling while doing nothing about the problem. If thinking about death feels unpleasant and going to the doctor reminds you of your mortality, you may avoid doctors, even if this actually increases your risk of dying.

- Craving narrows your perception, making you only pay attention to things which seem immediately relevant for your craving. For example, if you have a craving for sex and go to a party with the goal of finding someone to sleep with, you may see everyone only in terms of “will sleep with me” or “will not sleep with me”. This may not be the best possible way of classifying everyone you meet.

- Strong craving may cause premature exploitation. If you have a strong craving to achieve a particular goal, you may not want to do anything that looks like moving away from it, even if that would actually help you achieve it better. For example, if you intensely crave a feeling of accomplishment, you may get stuck playing video games that make you feel like you are accomplishing something, even if there is something else that you could do that would be more fulfilling in the long term.

- Multiple conflicting cravings may cause you to thrash around in an unsuccessful attempt to fulfill all of them. If you have a craving to get your toothache fixed, but also a craving to avoid dentists, you may put off the dentist visit even as you continue to suffer from your toothache.

- Craving seems to act in part by creating self-fulfilling prophecies: making you strongly believe that you are going to achieve something, so as to cause you to do it. The stronger the craving, the stronger the false beliefs injected into your consciousness. This may warp your reasoning in all kinds of ways: updating to believe an unpleasant fact may subjectively feel like you are allowing that fact to become true by believing in it, incentivizing you to come up with ways to avoid believing in it.

- Finally, although craving is often motivated by a desire to avoid unsatisfactory experiences, it is actually the very thing that causes dissatisfaction in the first place. Craving assumes that negative feelings are intrinsically unpleasant, when in reality they only become unpleasant when craving resists them.

Given all of these disadvantages, it may be a good idea to try to shift one’s motivation to be more driven by subsystems that are not motivated by craving. It seems to me that everything that can be accomplished via craving can in principle be accomplished by non-craving-based motivation as well.

Fortunately, there are several ways of achieving this. For one, a craving for some outcome X tends to implicitly involve at least two assumptions:

- achieving X is necessary for being happy or avoiding suffering

- one cannot achieve X except by having a craving for it

Both of these assumptions are false, but subsystems associated with craving have a built-in bias to selectively sample evidence which supports these assumptions, making them frequently feel compelling. Still, it is possible to give the brain evidence which lets it know that these assumptions are wrong: that it is possible to achieve X without craving it, and that one can feel good regardless of achieving X.

Predictive processing and binocular rivalry

I find that a promising way of looking at unsatisfactoriness and craving and their impact on decision-making comes from the predictive processing (PP) model of the brain. My claim is not that craving works exactly like this, but something roughly like this seems like a promising analogy.

Good introductions to PP include this book review as well as the actual book in question... but for the purposes of this discussion, you really only need to know two things:

- According to PP, the brain is constantly attempting to find a model of the world (or hypothesis) that would both explain and predict the incoming sensory data. For example, if I upset you, my brain might predict that you are going to yell at me next. If the next thing that I hear is you yelling at me, then the prediction and the data match, and my brain considers its hypothesis validated. If you do not yell at me, then the predicted and experienced sense data conflict, sending off an error signal to force a revision to the model.

- Besides changing the model, another way in which the brain can react to reality not matching the prediction is by changing reality. For example, my brain might predict that I am going to type a particular sentence, and then fulfill that prediction by moving my fingers so as to write that sentence. PP goes so far as to claim that this is the mechanism behind all of our actions: a part of your brain predicts that you are going to do something, and then you do it so as to fulfill the prediction.

Next I am going to say a few words about a phenomenon called binocular rivalry and how it is interpreted within the PP paradigm. I promise that this is going to be relevant for the topic of craving and suffering in a bit, so please stay with me.

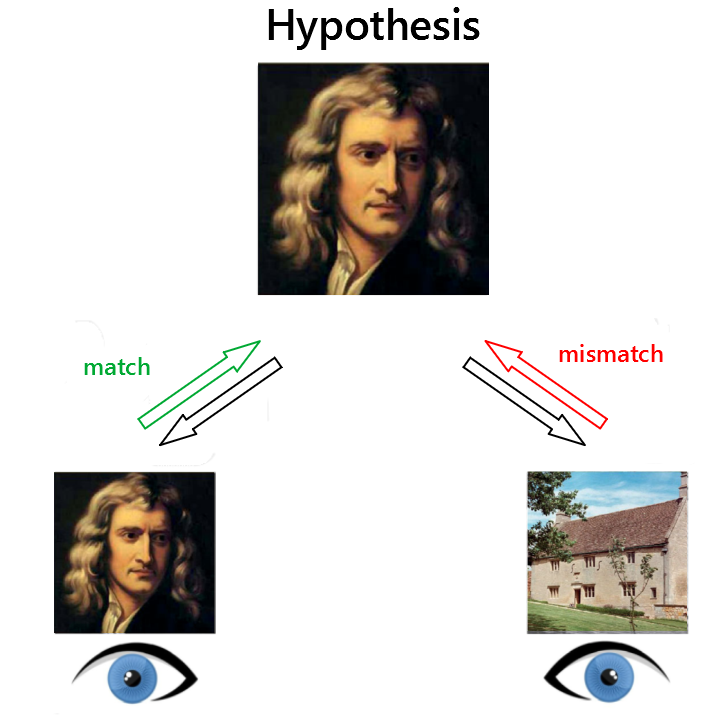

Binocular rivalry, first discovered in 1593 and extensively studied since then, is what happens when your left eye is shown one picture (e.g. an image of Isaac Newton), and your right eye is shown another (e.g. an image of a house). People report that their experience keeps alternating between seeing Isaac Newton and seeing a house. They might also see a brief mashup of the two, but such Newton-houses are short-lived and quickly fall apart before settling to a stable image of either Newton or a house.

Image credit: Schwartz et al. (2012), Multistability in perception: binding sensory modalities, an overview. Philosophical Transactions of the Royal Society B, 367, 896-905.

Predictive processing explains what’s happening as follows. The brain is trying to form a stable hypothesis of what exactly the image data that the eyes are sending represents: is it seeing Newton, or is it seeing a house? Sometimes the brain briefly considers the hybrid hypothesis of a Newton-house mashup, but this is quickly rejected: faces and houses do not occupy the same place at the same scale at the same time, so this idea is clearly nonsensical. (At least, nonsensical outside the highly unnatural and contrived experimental setups that psychologists subject people to.)

Your conscious experience alternating between the two images reflects the brain switching between the hypotheses of “this is Isaac Newton” and “this is a house”; the currently-winning hypothesis is simply what you experience reality as.

Suppose that the brain ends up settling on the hypothesis of “I am seeing Isaac Newton”; this matches the input from the Newton-seeing eye. As a result, there is no error signal that would arise from a mismatch between the hypothesis and the Newton-seeing eye’s input. For a moment, the brain is satisfied that it has found a workable answer.

However, if one really was seeing Isaac Newton, then the other eye should not keep sending an image of a house. The hypothesis and the house-seeing eye’s input do have a mismatch, kicking off a strong error signal which lowers the brain’s confidence in the hypothesis of “I am seeing Isaac Newton”.

The brain goes looking for a hypothesis which would better satisfy the strong error signal… and then finds that the hypothesis of “I am seeing a house” serves to entirely quiet the error signal from the house-seeing eye. Success?

But even as the brain settles on the hypothesis of “I am seeing a house”, this then contradicts the input coming from the Newton-seeing eye.

The brain is again momentarily satisfied, before the incoming error signal from the hypothesis/Newton-eye mismatch drives down the probability of the “I am seeing a house” hypothesis, causing the brain to eventually go back to the “I am seeing Isaac Newton” hypothesis... and then back to seeing a house, and then to seeing Newton, and...

One way of phrasing this is that there are two subsystems, each of which is transmitting a particular set of constraints (one about seeing Newton, the other about seeing a house). The brain is then trying and failing to find a hypothesis which would fulfill both sets of constraints, while also respecting everything else that it knows about the world.
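The alternation can be sketched as a toy simulation. This is a minimal illustration under loudly stated assumptions, not a model of actual neural dynamics: each eye is treated as a constraint that a hypothesis either explains or fails to explain, the unexplained eye's error accumulates while a hypothesis is held, and the percept flips once that error crosses a hypothetical `threshold`. The hybrid Newton-house hypothesis is left out, on the grounds given above that the brain quickly rejects it.

```python
from typing import List

# Toy assumption: each eye reports one image, and a hypothesis "explains"
# an eye only if it names the same image.
EYES = {"left": "newton", "right": "house"}
HYPOTHESES = ["newton", "house"]


def instant_error(hypothesis: str) -> int:
    """Number of eyes whose input the hypothesis fails to explain."""
    return sum(1 for seen in EYES.values() if seen != hypothesis)


def simulate_rivalry(steps: int, threshold: float = 3.0) -> List[str]:
    """Return the percept at each time step."""
    percept = "newton"
    accumulated = 0.0  # error built up while holding the current hypothesis
    history: List[str] = []
    for _ in range(steps):
        history.append(percept)
        accumulated += instant_error(percept)
        if accumulated >= threshold:
            # Confidence has dropped far enough: switch to the rival hypothesis.
            percept = next(h for h in HYPOTHESES if h != percept)
            accumulated = 0.0
    return history


# Every hypothesis leaves one eye unexplained, so no percept is ever stable.
print([instant_error(h) for h in HYPOTHESES])  # [1, 1]
print(simulate_rivalry(8))
# ['newton', 'newton', 'newton', 'house', 'house', 'house', 'newton', 'newton']
```

Resetting the accumulated error after each switch stands in for the new hypothesis starting out with fresh confidence. The toy alternates on a fixed schedule, whereas real dominance periods vary irregularly.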

As I will explain next, my feeling is that something similar is going on with unsatisfactoriness. Craving creates constraints about what the world should be like, and the brain tries to find an action which would fulfill all of the constraints, while also taking into account everything else that it knows about the world. Suffering/unsatisfactoriness emerges when the constraints cannot all be fulfilled, either because achieving them takes time, or because the brain is unable to find any scenario that could fulfill all of them even in theory.

Predictive processing and psychological suffering

There are two broad categories of suffering: mental and physical discomfort. Let’s start with the case of psychological suffering, as it seems most directly analogous to what we just covered.

Let’s suppose that I have broken an important promise that I have made to a friend. I feel guilty about this, and want to confess what I have done. We might say that I have a craving to avoid the feeling of guilt, and the associated craving subsystem sends a prediction to my consciousness: I will stop feeling guilty.

In the previous discussion, an inference mechanism in the brain was looking for a hypothesis that would satisfy the constraints imposed by the sensory data. In this case, the same thing is happening, but

- the hypothesis that it is looking for is a possible action that I could take, that would lead to the constraint being fulfilled

- the sensory data is not actually coming from the senses, but is internally generated by the craving and represents the outcome that the craving subsystem would like to see realized

My brain searches for a possible world that would fulfill the provided constraints, and comes up with the idea of just admitting the truth of what I have done. It predicts that if I were to do this, I would stop feeling guilty over not admitting my broken promise. This satisfies the constraint of not feeling guilty.

However, as my brain further predicts what it expects to happen as a consequence, it notes that my friend will probably get quite angry. This triggers another kind of craving: to not experience the feeling of getting yelled at. This generates its own goal/prediction: that nobody will be angry with me. This acts as a further constraint for the plan that the brain needs to find.

As the constraint of “nobody will be angry at me” seems incompatible with the plan of “I will admit the truth”, this generates an error signal, driving down the probability of this plan. My brain abandons this plan, and then considers the alternative plan of “I will just stay quiet and not say anything”. This matches the constraint of “nobody will be angry at me” quite well, driving down the error signal from that particular plan/constraint mismatch… but then, if I don’t say anything, I will continue feeling guilty.

The mismatch with the constraint of “I will stop feeling guilty” drives up the error signal, causing the “I will just stay quiet” plan to be abandoned. In the worst case, my mind may fail to find any plan which would fulfill both constraints, keeping me in an endless loop of alternating between two unviable scenarios.
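The same loop can be sketched as a search over candidate plans, where each craving contributes a constraint on the plan's predicted outcome. The plan names, the outcome fields, and the simple round-robin search order are illustrative assumptions; the point is only that when every plan violates some constraint, the search keeps alternating instead of settling.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical predicted outcomes of the two candidate plans.
PLANS: Dict[str, Dict[str, bool]] = {
    "admit the truth": {"feel_guilty": False, "friend_angry": True},
    "stay quiet": {"feel_guilty": True, "friend_angry": False},
}

# Craving-generated constraints, each a test on a plan's predicted outcome.
CONSTRAINTS: Dict[str, Callable[[Dict[str, bool]], bool]] = {
    "I will stop feeling guilty": lambda outcome: not outcome["feel_guilty"],
    "nobody will be angry at me": lambda outcome: not outcome["friend_angry"],
}


def violated(plan: str) -> List[str]:
    """Names of the constraints that the plan's predicted outcome breaks."""
    outcome = PLANS[plan]
    return [name for name, holds in CONSTRAINTS.items() if not holds(outcome)]


def search(max_steps: int = 6) -> Tuple[Optional[str], List[str]]:
    """Consider plans in turn, abandoning any plan that violates a constraint.

    Returns the first plan satisfying every constraint (or None) and the
    sequence of plans considered along the way.
    """
    names = list(PLANS)
    considered: List[str] = []
    for step in range(max_steps):
        plan = names[step % len(names)]
        considered.append(plan)
        if not violated(plan):
            return plan, considered
    return None, considered


print(violated("admit the truth"))  # ['nobody will be angry at me']
print(search(4))
# (None, ['admit the truth', 'stay quiet', 'admit the truth', 'stay quiet'])
```

If a plan whose predicted outcome satisfied both constraints were added to `PLANS`, `search` would return it as soon as it came up; the loop only persists because no such plan exists in the set being searched.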

There are some interesting aspects of the phenomenology of such a situation, which feel like they fit the PP model quite well. In particular, it may feel like if I just focus on a particular craving enough, thinking about my desired outcome hard enough will make it true.

Recall that under the PP framework, goals happen because a part of the brain assumes that they will happen, after which it changes reality to make that belief true. So focusing really hard on a craving for X makes it feel like X will become true, because the craving is literally rewriting an aspect of my subjective reality to make me think that X will become true.

When I focus hard on the craving, I am temporarily guiding my attention away from the parts of my mind which are pointing out the obstacles in the way of X coming true. That is, those parts have less of a chance to incorporate their constraints into the plan that my brain is trying to develop. This momentarily weakens the pressure pushing away from this plan, making it seem more plausible that the desired outcome will in fact become real.

Conversely, letting go of this craving may feel like it is literally making the undesired outcome more real, rather than like I am coming to terms with reality. This is most obvious in cases where one has a craving for an outcome that is certainly impossible, such as when grieving a friend’s death. Even after it is certain that someone is dead, there may still be persistent thoughts of “if only I had done X”, with an implicit additional flavor of “if I just want to have done X really hard, things will change, and I can’t stop focusing on this possibility because my friend needs to be alive”.

In this form, craving may lead to all kinds of rationalization and biased reasoning: a part of your mind is literally making you believe that X is true, because it wants you to find a strategy where X is true. This hallucinated belief may constrain all of your plans and models about the world in the same way that direct sensory evidence of X being true would constrain your brain’s models. For example, if I have a very strong urge to believe that someone is interested in me, then this may cause me to interpret any of his words and expressions in a way compatible with this belief, regardless of how implausible and far-spread a distortion this requires.

The case of physical pain

Similar principles apply to the case of physical pain.

We should first note that pain does not necessarily need to be aversive: for example, people may enjoy the pain of exercise, hot spices or sexual masochism. Morphine may also have an effect where people report that they still experience the pain but no longer mind it.

And, relevant for our topic, people practicing meditation find that shifting their attention towards pain can make it less aversive. The meditation teacher Shinzen Young writes that

... pain is one thing, and resistance to the pain is something else, and when the two come together you have an experience of suffering, that is to say, 'suffering equals pain multiplied by resistance.' You'll be able to see that's true not only for physical pain, but also for emotional pain and it’s true not only for little pains but also for big pains. It's true for every kind of pain no matter how big, how small, or what causes it. Whenever there is resistance there is suffering. As soon as you can see that, you gain an insight into the nature of "pain as a problem" and as soon as you gain that insight, you'll begin to have some freedom. You come to realize that as long as we are alive we can't avoid pain. It's built into our nervous system. But we can certainly learn to experience pain without it being a problem. (Young, 1994)

What does it mean to say that resisting pain creates suffering?

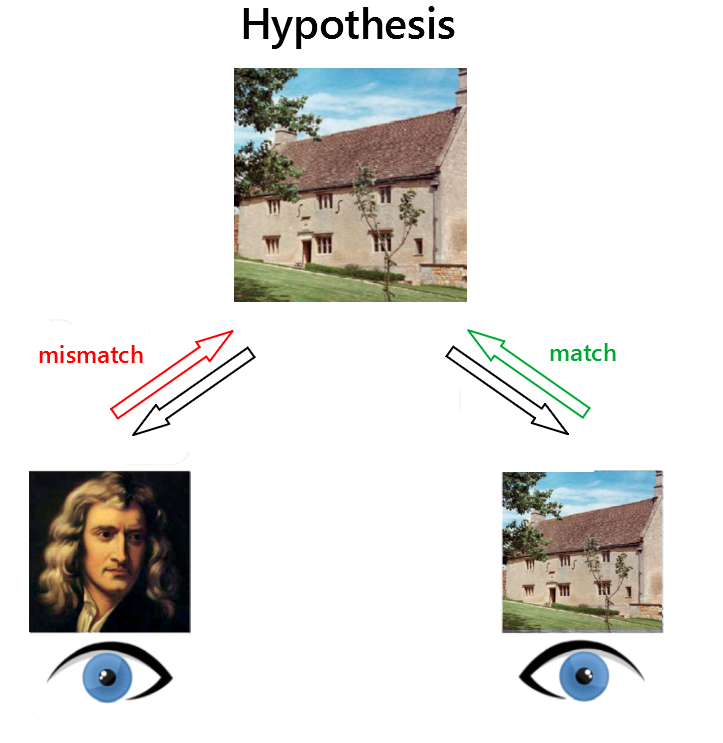

In the discussion about binocular rivalry, we might have said that when the mind settled on a hypothesis of seeing Isaac Newton, this hypothesis was resisted by the sensory data coming from the house-seeing eye. The mind would have settled on the hypothesis of “I am seeing Isaac Newton”, if not for that resistance. Likewise, in the preceding discussion, the decision to admit the truth was resisted by the desire to not get yelled at.

Suppose that you have a sore muscle, which hurts whenever you put weight on it. Like sensory data coming from your eyes, this constrains the possible interpretations of what you might be experiencing: your brain might settle on the hypothesis of “I am feeling pain”.

But the experience of this hypothesis then triggers a resistance to that pain: a craving subsystem wired to detect pain and resist it by projecting a form of internally-generated sense data, effectively claiming that you are not in pain. There are now again two incompatible streams of data that need to be reconciled, one saying that you are in pain, and another which says that you are not.

In the case of binocular rivalry, both of the streams were generated by sensory information. In the discussion about psychological suffering, both of the streams were generated by craving. In this case, craving generates one of the streams and sensory information generates the other.

On the left, a persistent pain signal is strong enough to dominate consciousness. On the right, a craving for not being in pain attempts to constrain consciousness so that it doesn’t include the pain.

Now if you stop putting weight on the sore muscle, the pain goes away, fulfilling the prediction of “I am not in pain”. As soon as your brain figures this out, your motor cortex can incorporate the craving-generated constraint of “I will not be in pain” into its planning. It generates different plans of how to move your body, and whenever it predicts that one of them would violate the constraint of “I will not be in pain”, it will revise its plan. The end result is that you end up moving in ways that avoid putting weight on your sore muscle. If you miscalculate, the resulting pain will cause a rapid error signal that causes you to adjust your movement again.

What if the pain is more persistent, and bothers you no matter how much you try to avoid moving? Or if the circumstances force you to put weight on the sore muscle?

In that case, the brain will continue looking for a possible hypothesis that would fulfill the constraint of “I am not in pain”. For example, maybe you have previously taken painkillers that have helped with your pain. In that case, your mind may seize upon the hypothesis that “by taking painkillers, my pain will cease”.

As your mind predicts the likely consequences of taking painkillers, it notices that in this simulation, the constraint of “I am not in pain” gets fulfilled, driving down the error signal between the hypothesis and the “I am not in pain” constraint. However, if the brain could suppress the craving for pain relief merely by imagining a scenario where the pain was gone, then it would never need to take any actions: it could just hallucinate pleasant states. What keeps it anchored to reality is the fact that simply imagining the painkillers has not done anything to the pain signal itself: the imagined state does not match your actual sense data. There is still an error signal generated by the mismatch between the imagined “I have taken painkillers and am free of pain” scenario and the fact that the pain is not gone yet.

Your brain imagines a possible experience: taking painkillers and being free of pain. This imagined scenario fulfills the constraint of “I have no pain”. However, it does not fulfill the constraint of actually matching your sense data: you have not yet taken painkillers and are still in pain.

Fortunately, if painkillers are actually available, your mind is not locked into a state where the two constraints of “I’m in pain” and “I’m not in pain” remain equally impossible to achieve. It can take actions - such as making you walk towards the medicine cabinet - that get you closer towards being able to fulfill both of these constraints.
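The bookkeeping in this example can be sketched with two error terms: one for the craving-generated constraint “I am not in pain”, and one for agreement with the actual sense data. The binary 0/1 errors and the `error_signals` function are deliberate, hypothetical simplifications, not a claim about how the brain quantifies these signals. Imagining relief quiets only the first term; only an action that changes the world quiets both.

```python
from typing import Tuple


def error_signals(actually_in_pain: bool, imagined_in_pain: bool) -> Tuple[int, int]:
    """Return (craving error, sensory error) for an imagined scenario."""
    # Craving constraint "I am not in pain": violated if the scenario includes pain.
    craving_error = 1 if imagined_in_pain else 0
    # Sensory constraint: the scenario must match what the body currently reports.
    sensory_error = 0 if imagined_in_pain == actually_in_pain else 1
    return craving_error, sensory_error


# Merely imagining relief quiets one error but not the other.
print(error_signals(actually_in_pain=True, imagined_in_pain=False))   # (0, 1)
# The hypothesis "I am in pain" matches the senses but violates the craving.
print(error_signals(actually_in_pain=True, imagined_in_pain=True))    # (1, 0)
# After an action (taking the painkillers) changes the world, both are quiet.
print(error_signals(actually_in_pain=False, imagined_in_pain=False))  # (0, 0)
```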

There are studies suggesting that physical pain and psychological pain share similar neural mechanisms [citation]. And in meditation, one may notice that psychological discomfort and suffering involve avoiding unpleasant sensations in the same way as physical pain does; the same mechanism has been recruited for more abstract planning.

When the brain predicts that a particular experience would produce an unpleasant sensation, craving resists that prediction and tries to find another way. Similarly, if the brain predicts that something will not produce a pleasant sensation, craving may also resist that aspect of reality.

Now, this process as described has a structural equivalence to binocular rivalry, but as far as I know, binocular rivalry does not involve any particular discomfort. Suffering obviously does.

Being in pain is generally bad: it is usually better to try to avoid ending up in painful states, as well as try to get out of painful states once you are in them. This is also true for other states, such as hunger, that do not necessarily feel painful, but still have a negative emotional tone. Suppose that whenever craving generates a self-fulfilling prediction which resists your direct sensory experience, this generates a signal we might call “unsatisfactoriness”.

The stronger the conflict between the experience and the craving, the stronger the unsatisfactoriness - so that a mild pain that is easy to ignore only causes a little unsatisfactoriness, and an excruciating pain that generates a strong resistance causes immense suffering. The brain is then wired to use this unsatisfactoriness as a training signal, attempting to avoid situations that have previously included high levels of it, and to keep looking for ways out if it currently has a lot of it.

It is also worth noting what it means for you to be paralyzed by two strong, mutually opposing cravings. Consider again the situation where I am torn between admitting the truth to my friend, and staying quiet. We might think that this is a situation where the overall system is uncertain of the correct course of action: some subsystems are trying to force the action of confronting the situation, others are trying to force the action of avoiding it. Both courses of action are predicted to lead to some kind of loss.

In general, it is a bad thing if a system ends up in a situation where it has to choose between two different kinds of losses, and has high internal uncertainty about the right action. A system should avoid such dilemmas, either by avoiding the situations themselves or by finding a way to reconcile the conflicting priorities.

Craving-based and non-craving-based motivation

What I have written so far might be taken to suggest that craving is a requirement for all action and planning. However, the Buddhist claim is that craving is actually just one of at least two different motivational systems in the brain. Given that neuroscience suggests the existence of at least three different motivational systems, this should not seem particularly implausible.

Let’s take another look at the types of processes related to binocular rivalry versus craving.

Craving acts by actively introducing false beliefs into one’s reasoning. If craving could just do this completely uninhibited, rewriting all experience to match one’s desires, nobody would ever do anything: they would just sit still, enjoying a craving-driven hallucination of a world where everything was perfect.

In contrast, in the case of binocular rivalry, no system is feeding the reasoning process any false beliefs: all the constraints emerge directly from the sense data and previous life-experience. To the extent that the system can be said to have a preference over either the “I am seeing a house” or the “I am seeing Isaac Newton” hypothesis, it is just “if seeing a house is the most likely hypothesis, then I prefer to see a house; if seeing Newton is the most likely hypothesis, then I prefer to see Newton”. The computation does not have an intrinsic attachment to any particular outcome, nor will it hallucinate a particular experience if it has no good reason to.

Likewise, it seems like there are modes of doing and being which are similar in the respect that one is focused on process rather than outcome: taking whatever actions are best-suited for the situation at hand, regardless of what their outcome might be. In these situations, little unsatisfactoriness seems to be present.

In an earlier post, I discussed a proposal where an autonomously acting robot has two decision-making systems. The first system just figures out whatever actions would maximize its rewards and tries to take those actions. The second “Blocker” system tries to predict whether or not a human overseer would approve of any given action, and prevents the first system from doing anything that would be disapproved of. We then have two evaluation systems: “what would bring the maximum reward” (running on a lower priority) and “would a human overseer approve of a proposed action” (taking precedence in case of a disagreement).
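The priority ordering between these two systems can be sketched as a reward ranking filtered by a veto. This is a simplified reading for illustration, not the original proposal's design: `choose_action`, the reward table, and the overseer predicate are hypothetical stand-ins for what would in practice be learned models.

```python
from typing import Callable, Dict, List, Optional


def choose_action(
    actions: List[str],
    reward: Dict[str, float],
    overseer_approves: Callable[[str], bool],
) -> Optional[str]:
    """Return the highest-reward action that the Blocker does not veto."""
    # Lower-priority system: rank candidate actions by predicted reward.
    ranked = sorted(actions, key=lambda a: reward[a], reverse=True)
    for action in ranked:
        # Higher-priority Blocker: predicted disapproval overrides reward.
        if overseer_approves(action):
            return action
    return None  # every candidate was vetoed


# Hypothetical example: the top-reward action is predicted to be disapproved.
rewards = {"action A": 10.0, "action B": 6.0, "action C": 1.0}
vetoed = {"action A"}
print(choose_action(list(rewards), rewards, lambda a: a not in vetoed))  # action B
```

Checking the veto inside the ranked loop, rather than filtering first, keeps the reward system and the Blocker as separate evaluations, mirroring the two-system description.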

It seems to me that there is something similar going on with craving. There are processes which are neutrally just trying to figure out the best action; and when those processes hit upon particularly good or bad outcomes, craving is formed in an attempt to force the system into repeating or avoiding those outcomes in the future.

Suppose that you are in a situation where the best possible course of action only has a 10% chance of getting you through alive. If you are in a non-craving-driven state, you may focus on securing at least that 10% chance, since that’s the best that you can do.

In contrast, the kind of behavior that is typical for craving is realizing that you have a significant chance of dying, deciding that this thought is completely unacceptable, and refusing to go on before you have an approach where the thought of death isn’t so stark.

Both systems have their upsides and downsides. If it is true that a 10% chance of survival really is the best that you can do, then you should clearly just focus on getting the probability even that high. The craving which causes trouble by thrashing around is only going to make things worse. On the other hand, maybe this estimate is flawed and you could achieve a higher probability of survival by doing something else. In that case, the craving absolutely refusing to go on until you have figured out something better might be the right action.

There is also another major difference, in that craving does not really care about outcomes. Rather, it cares about pursuing positive feelings and avoiding negative ones. In the case of avoiding death, craving-oriented systems are primarily reacting to the thought of death… which may make them reject even plans which would reduce the risk of death, if those plans involved needing to think about death too much.

This becomes particularly obvious in the case of things like going to the dentist in order to have an operation you know will be unpleasant. You may find yourself highly averse to going, as you crave the comfort of not needing to suffer from the unpleasantness. At the same time, you also know that the operation will benefit you in the long term: any unpleasantness will just be a passing state of mind, rather than permanent damage. But avoiding unpleasantness - including the very thought of experiencing something unpleasant - is just what craving is about.

In contrast, if you are in a state of equanimity with little craving, you still recognize the thoughts of going to the dentist as having negative valence, but this negative valence does not bother you, because you do not have a craving to avoid it. You can choose whatever option seems best, regardless of what kind of content this ends up producing in your consciousness.

Of course, choosing correctly requires you to actually know what is best. Expert meditators have been known to sometimes ignore extreme physical pain that should have caused them to seek medical aid. And they probably would have sought help, if not for their ability to drop their resistance to pain and experience it with extreme equanimity.

Negative-valence states tend to correlate with states which are bad for the achievement of our goals. That is the reason why we are wired to avoid them. But the correlation is only partial, so if you focus too much on avoiding unpleasantness, you are falling victim to Goodhart’s Law: optimizing a measure so much that you sacrifice the goals that the measure was supposed to track. Equanimity gives you the ability to ignore your consciously experienced suffering, so you don't need to pay additional mental costs for taking actions which further your goals. This can be useful, if you are strategic about actually achieving your goals.

But while Goodharting on a measure is a failure mode, so is ignoring the measure entirely. Unpleasantness does still correlate with things that make it harder to realize your values, and the need to avoid displeasure normally operates as an automatic feedback mechanism. It is possible to develop high equanimity and thereby weaken this mechanism without being smart about it, doing nothing to develop alternative mechanisms. In that case you are just trading Goodhart’s Law for the opposite failure mode.

Some other disadvantages of craving

In the beginning of this post, I listed a few other disadvantages of craving, which I have not yet discussed explicitly. Let’s take a quick look at those.

Craving narrows your perception, making you only pay attention to things that seem immediately relevant for your craving.

In predictive processing, attention is conceptualized as giving increased weighting to those features of the sensory data that seem most useful for making successful predictions about the task at hand. If you have strong craving to achieve a particular outcome, your mind will focus on those aspects of the sensory data that seem useful for realizing your craving.
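One common way to formalize this weighting is to scale each prediction error by a precision term, so that features given low precision barely register even when the prediction about them is badly wrong. The sketch below uses squared errors and two hypothetical features; it assumes, purely for illustration, that craving acts by lowering the precision of craving-irrelevant features.

```python
from typing import Dict


def weighted_error(
    predicted: Dict[str, float],
    observed: Dict[str, float],
    precision: Dict[str, float],
) -> float:
    """Sum of squared prediction errors, each scaled by its precision weight."""
    return sum(
        precision.get(feature, 0.0) * (observed[feature] - predicted[feature]) ** 2
        for feature in predicted
    )


# Hypothetical features: one relevant to the craving, one irrelevant to it.
predicted = {"craving_relevant": 1.0, "craving_irrelevant": 0.0}
observed = {"craving_relevant": 1.0, "craving_irrelevant": 1.0}

balanced = {"craving_relevant": 1.0, "craving_irrelevant": 1.0}
narrowed = {"craving_relevant": 1.0, "craving_irrelevant": 0.1}

print(weighted_error(predicted, observed, balanced))  # 1.0
print(weighted_error(predicted, observed, narrowed))  # 0.1
```

The same surprising observation produces a tenfold smaller error signal under the narrowed weighting, so it does less to revise the model.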

Strong craving may cause premature exploitation [LW · GW]. If you have a strong craving to achieve a particular goal, you may not want to do anything that looks like moving away from it, even if that would actually help you achieve it better.

Suppose that you have a strong craving to experience a feeling of accomplishment. This means that the craving is strongly projecting a constraint of “I will feel accomplished” into your planning, causing an error signal whenever you consider a plan that does not fulfill the constraint. If you are thinking about a multistep plan that will take time before it makes you feel accomplished, the plan begins with a stretch in which you do not feel accomplished. This contradicts the constraint of “I will feel accomplished”, causing the plan to be rejected in favor of ones that give you at least some sense of accomplishment right away.
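One way to sketch this, under the assumption that the projected constraint acts as a penalty on every step of a plan where it is violated (the plans, the numbers, and the `strength` parameter are all hypothetical): with no craving the multistep plan wins on total payoff, but once the constraint is strong enough, the quick win is chosen instead.

```python
# Each hypothetical plan lists how accomplished you feel after each step.
PLANS = {
    "quick win": [1, 1, 1],           # small payoff right away, every step
    "multistep project": [0, 0, 10],  # nothing at first, large payoff at the end
}


def craving_score(steps, strength):
    """Total payoff minus an error signal for every step that violates
    the projected constraint "I will feel accomplished"."""
    violations = sum(1 for s in steps if s <= 0)
    return sum(steps) - strength * violations


def choose(plans, strength):
    return max(plans, key=lambda name: craving_score(plans[name], strength))


print(choose(PLANS, strength=0.0))  # multistep project (10 > 3)
print(choose(PLANS, strength=5.0))  # quick win (10 - 2*5 = 0 < 3)
```

Treating craving strength as a continuous penalty is a modeling choice for the sketch, not a claim from the post; a hard filter that discards any plan whose first step yields no accomplishment would be the limiting case.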

Craving and suffering

We might summarize the unsatisfactoriness-related parts of the above as follows:

- Craving tries to get us into pleasant states of consciousness.

- But pleasant states of consciousness are those without craving.

- Thus, there are subsystems which are trying to get us into pleasant states of consciousness by creating constant craving, which is the exact opposite of a pleasant state.

We can somewhat rephrase this as:

- The default state of human psychology involves a degree of almost constant dissatisfaction with one’s state of consciousness.

- This dissatisfaction is created by the craving.

- The dissatisfaction can be ended by eliminating craving.

… which, if correct, might be interpreted as roughly corresponding to the first three of Buddhism’s Four Noble Truths; the fourth is “Buddhism’s Noble Eightfold Path is a way to end craving”.

A more rationalist framing might be that the craving is essentially acting in a way that looks similar to wireheading: pursuing pleasure and happiness even if that sacrifices your ability to impact the world. Reducing the influence of the craving makes your motivations less driven by wireheading-like impulses, and more able to see the world clearly even if it is painful. Thus, reducing craving may be valuable even if one does not care about suffering less.

This gives rise to the question: how exactly does one reduce craving? And what does all of this have to do with the self, again?

We’ll get back to those questions in the next post.

This is the third post of the "a non-mystical explanation of insight meditation and the three characteristics of existence" series. The next post in the series is "From self to craving [LW · GW]".

56 comments


comment by romeostevensit · 2020-05-15T18:15:43.528Z · LW(p) · GW(p)

One frame that's been useful for me is explicitly noticing how different parts have different time horizons they are sampling over, and that that creates a sort of implicit tension since they are paid in different rewards but are competing for the same motivational system.

comment by abramdemski · 2020-12-31T16:44:47.385Z · LW(p) · GW(p)

I like this version of Predictive Processing much better than the usual, in that you explicitly posit that warping beliefs toward success is only ONE of several motivation systems. I find this much more plausible than using it as the grand unifying theory.

That said, isn't the observation that binocular rivalry doesn't create suffering a pretty big point against the theory as you've described it?

Side note, I don't experience the alternating images you described. I see both things superimposed, something like if you averaged the bitmaps together. Although that's not /quite/ an accurate description. I attribute this to playing with crossing my eyes a lot at a young age, although the causality could be the other way. There's a lot of variance in how people experience their visual field, you'll find, if you ask people enough detailed questions about it. (Same with all sorts of aspects of cognition. Practically all cognitive studies of this kind focus on the typical response more than the variation, giving a false impression of unity if you only read summaries. I suspect a lot of the cognitive variation correlates with personality type (i.e., OCEAN).)

↑ comment by Kaj_Sotala · 2020-12-31T18:39:07.021Z · LW(p) · GW(p)

That said, isn't the observation that binocular rivalry doesn't create suffering a pretty big point against the theory as you've described it?

It does. I think that I've figured out a better explanation since writing this essay, but I've yet to write it up in a satisfying form...

Side note, I don't experience the alternating images you described. I see both things superimposed, something like if you averaged the bitmaps together.

Huh, that's an interesting datapoint!

comment by Steven Byrnes (steve2152) · 2020-05-16T20:01:07.009Z · LW(p) · GW(p)

It seems like if you have to choose between bad options, the healthy thing is to declare that all your options are bad, and take the least bad one. This sometimes feels like "becoming resigned to your fate" maybe? The unhealthy thing is to fight against this, and not accept reality.

Why is the latter so tempting? I think it comes from the Temporal Difference Learning algorithm used by the brain's reward system. I think the TD learning algorithm attaches a very strong negative reward to the moment where you start believing that your predicted reward is a lot lower than what you had thought it would be before. So that would create an exceptionally strong motivation to not accept that, even if it's true.

This ties into my other comment [LW(p) · GW(p)] that maybe craving is fundamentally the same as other motivations, but stronger, and in particular, so strong that it screws up our ability to think straight.
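For readers unfamiliar with TD learning, here is the textbook TD error, δ = r + γ·V(s′) − V(s), applied to the scenario in this comment. The state names, values, discount, and learning rate are hypothetical, and whether the brain's reward system behaves this way is the commenter's conjecture, not something the sketch establishes. It only shows the arithmetic: moving to a belief state with lower predicted value yields a negative δ even with zero immediate reward, and an actor-critic-style update then makes the thought that caused the drop less preferred.

```python
def td_error(reward, value_now, value_next, gamma=0.99):
    """Temporal-difference error: delta = r + gamma * V(s') - V(s)."""
    return reward + gamma * value_next - value_now


def update_preference(preference, delta, learning_rate=0.1):
    """Actor-critic-style update: a thought or action followed by a
    negative delta becomes less preferred."""
    return preference + learning_rate * delta


# Hypothetical values for two belief states.
V_HOPEFUL = 10.0   # "maybe there is still a good option"
V_RESIGNED = 2.0   # "all my options are bad; take the least bad one"

delta = td_error(reward=0.0, value_now=V_HOPEFUL, value_next=V_RESIGNED)
pref_accept = update_preference(0.0, delta)
print(round(delta, 2))        # -8.02
print(round(pref_accept, 3))  # -0.802
```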

comment by Steven Byrnes (steve2152) · 2020-05-16T19:47:28.198Z · LW(p) · GW(p)

After reading this and lukeprog's post you referenced [LW · GW], I'm still not convinced that there is fundamentally more than one motivational system—although I don't have high confidence and still want to chase down the references.

(Well, I see a distinction between actions not initiated by the neocortex, like flinching away from a projectile, versus everything else—see here [LW · GW]—but that's not what we're talking about here.)

It seems to me that what you call "craving" is what I would call "an unhealthily strong motivation". The background picture in my head is this [LW · GW], where wishful thinking is a failure mode built into the deepest foundations of our thoughts. Wishful thinking stays under control mainly because the force of "What we can imagine and expect is constrained by past experience and world-knowledge" can usually defeat the force of Wishful thinking. But if we want something hard enough, it can break through those shackles, so that, for example, it doesn't get immediately suppressed even if our better judgment declares that it cannot work.

Like, take your dentist example:

- The thought of being at the dentist is aversive.

- The thought of having clean healthy teeth is attractive.

We make the decision by weighing these against each other, I think. You are categorizing the former as a craving and the latter as motivation-that-is-not-craving (right?), but they seem like fundamentally the same type of thing to me. (After all, we can weigh them against each other.) It seems like the difference is that the former is exceptionally strong—so strong that it prevents us from thinking straight about it. The latter is a normal mild attraction, which is healthy and unproblematic. I see a continuum between the two.

(If this is right, it doesn't undermine the idea that cravings exist and that we should avoid them. I still believe that. I'm just suggesting that maybe craving vs motivations-that-are-not-craving is a difference of degree not kind.)

I dunno, I'm just spitballing here :-D

↑ comment by Kaj_Sotala · 2020-05-17T09:04:23.649Z · LW(p) · GW(p)

The thought of being at the dentist is aversive.

The thought of having clean healthy teeth is attractive.

We make the decision by weighing these against each other, I think. You are categorizing the former as a craving and the latter as motivation-that-is-not-craving (right?),

I mostly used examples of aversion in the post, but to be clear, both desire and aversion can be forms of craving. As I noted in another comment [LW(p) · GW(p)], basically any goal can be either craving-based, non-craving-based, or (typically) a mixture of both.

After reading this and lukeprog's post you referenced [LW · GW], I'm still not convinced that there is fundamentally more than one motivational system—although I don't have high confidence and still want to chase down the references. [...] I'm just suggesting that maybe craving vs motivations-that-are-not-craving is a difference of degree not kind.

Possible; subjectively they feel like differences in kind, but of course subjective experience is not strong evidence for how something is implemented neurally. Large enough quantitative differences can produce effects that feel like qualitative differences.

I wonder about the connection to the referenced motivational systems; based on a superficial description (e.g. the below excerpt from Surfing Uncertainty), it kinda sounds like the model-free motivational system in neuroscience could be craving, and the model-based system non-craving. (Or maybe not, since it's suggested that model-free would involve more bottom-up influences, which sounds contrary to craving; I'm confused by that.) That discussion of how the brain learns which system to use in which situation, would be compatible with the model where one can gradually unlearn craving using various methods (I'll get to that in a later post). But I would need to look into this more.

To see how this might work in practice, it helps to start with some examples from a different (but in fact quite closely related) literature. This is the extensive literature concerning choice and decision-making. Within that literature, it is common to distinguish between ‘model-based’ and ‘model-free’ approaches (see, e.g., Dayan, 2012; Dayan & Daw, 2008; Wolpert, Doya, & Kawato, 2003). Model-based strategies rely, as the name suggests, on a model of the domain that includes information about how various states (worldly situations) are connected, thus allowing a kind of principled estimation (given some cost function) of the value of a putative action. Such approaches involve the acquisition and the (computationally challenging) deployment of fairly rich bodies of information concerning the structure of the task-domain. Model-free strategies, by contrast, are said to ‘learn action values directly, by trial and error, without building an explicit model of the environment, and thus retain no explicit estimate of the probabilities that govern state transitions’ (Gläscher et al., 2010, p. 585). Such approaches implement pre-computed ‘policies’ that associate actions directly with rewards, and that typically exploit simple cues and regularities while nonetheless delivering fluent, often rapid, response.

Model-free learning has been associated with a ‘habitual’ system for the automatic control of choice and action, whose neural underpinnings include the midbrain dopamine system and its projections to the striatum, while model-based learning has been more closely associated with the action of cortical (parietal and frontal) regions (see Gläscher et al., 2010). Learning in these systems has been thought to be driven by different forms of prediction error signal—affectively salient ‘reward prediction error’ (see, e.g., Hollerman & Schultz, 1998; Montague et al., 1996; Schultz, 1999; Schultz et al., 1997) for the model-free case, and more affectively neutral ‘state prediction error’ (e.g., in ventromedial prefrontal cortex) for the model-based case. These relatively crude distinctions are, however, now giving way to a much more integrated story (see, e.g., Daw et al., 2011; Gershman & Daw, 2012) as we shall see.

How should we conceive the relations between PP and such ‘model-free’ learning? One interesting possibility is that an onboard process of reliability estimation might select strategies according to context. If we suppose that there exist multiple, competing neural resources capable of addressing some current problem, there needs to be some mechanism that arbitrates between them. With this in mind, Daw et al. (2005) describe a broadly Bayesian ‘principle of arbitration’ whereby estimations of the relative uncertainty associated with distinct ‘neural controllers’ (e.g., ‘model-based’ versus ‘model-free’ controllers) allows the most accurate controller, in the current circumstances, to determine action and choice. Within the PP framework this would be implemented using the familiar mechanisms of precision estimation and precision-weighting. Each resource would compute a course of action, but only the most reliable resource (the one associated with the least uncertainty when deployed in the current context) would get to determine high-precision prediction errors of the kind needed to drive action and choice. In other words, a kind of meta-model (one rich in precision expectations) would be used to determine and deploy whatever resource is best in the current situation, toggling between them when the need arises.

Such a story is, however, almost certainly over-simplistic. Granted, the ‘model-based / model-free’ distinction is intuitive and resonates with old (but increasingly discredited) dichotomies between habit and reason, and between emotion and analytic evaluation. But it seems likely that the image of parallel, functionally independent, neural sub-systems will not stand the test of time. For example, a recent fMRI study (Daw, Gershman, et al., 2011) suggests that rather than thinking in terms of distinct (functionally isolated) model-based and model-free learning systems, we may need to posit a single ‘more integrated computational architecture’ (p. 1204) in which the different brain areas most commonly associated with model-based and model-free learning (pre-frontal cortex and dorsolateral striatum, respectively) each trade in both model-free and model-based modes of evaluations and do so ‘in proportions matching those that determine choice behavior’ (p. 1209). One way to think about this, from within the PP perspective, is by associating ‘model-free’ responses with processing dominated (‘bottom-up’) by the sensory flow, while ‘model-based’ responses are those that involve greater and more widespread kinds of ‘top-down’ influence. The context-dependent balancing between these two sources of information, achieved by adjusting the precision-weighting of prediction error, then allows for whatever admixtures of strategy task and circumstances dictate.

Support for this notion of a more integrated inner economy was provided by a decision task (Daw, Gershman et al., 2011) in which experimenters were able to distinguish between apparently model-based and apparently model-free influences on subsequent choice and action. This is possible because model-free response is inherently backwards-looking, associating specific actions with previously encountered rewards. Animals exhibiting only model-free responses are, in that sense, condemned to repeat the past, releasing previously reinforced actions when circumstances dictate. A model-based system, by contrast, is able to evaluate potential actions using (as the name suggests) some kind of inner surrogate of the external arena in which actions are to be performed and choices made—such systems may, for example, deploy mental simulations to determine whether or not one action is to be preferred over another. Animals that deploy a model-based system are thus able, in the terms of Seligman et al. (2013), to ‘navigate into the future’ rather than remaining ‘driven by the past’.

Most animals, it now seems clear, are capable of both forms of response and combine dense enabling webs of habit with sporadic bursts of genuine prospection. According to the standard picture, recall, there exist distinct neural valuation systems and distinct forms of prediction error signal supporting each type of learning and response. Using a sequential choice task, Daw et al. were able to create conditions under which the computations of one or other of these neural valuation systems should dissociate from behaviour, revealing the presence of independent computations (in different, previously identified, brain areas) of value by a model-free and a model-based system. Instead they found neural correlates of apparently model-free and apparently model-based responses in both areas. Strikingly, this means that even striatally computed ‘reward prediction errors’ do not simply reflect learning using a truly model-free system. Instead, recorded activity in the striatum ‘reflected a mixture of model-free and model-based evaluations’ (Daw et al., 2011, p. 1209) and ‘even the signal most associated with model-free RL [reinforcement learning], the striatal RPE [reward prediction error], reflects both types of valuation, combined in a way that matches their observed contributions to choice behavior’ (Daw et al., 2011, p. 1210). Top-down information, Daw et al. (2011) suggest, might here control the way different strategies are combined in differing contexts for action and choice. Greater integration between model-based and model-free valuations might also, they speculate, flow from the action of some kind of hybrid learning routine in which a model-based resource may train and tune the responses of a (quicker, in context more efficient) model-free resource.

At a more general level, such results add to a growing literature (for a review, see Gershman & Daw, 2012) that suggests the need for a deep reworking of the standard decision-theoretic model. Where that model posits distinct representations of utility and probability, associated with the activity of more-or-less independent neural sub-systems, we may actually confront a more deeply integrated architecture in which ‘perception, action, and utility are ensnared in a tangled skein [involving] a richer ensemble of dynamical interactions between perceptual and motivational systems’ (Gershman & Daw, 2012, p. 308). The larger picture scouted in this section here makes good functional sense, allowing ‘model-free’ modes to use model-based schemes to teach them how to respond. Within the PP framework, this results in a hierarchical embedding of the (shallow) ‘model-free’ responses in a (deeper) model-based economy. This has many advantages, since model-based schemes are (chapter 5 above) profoundly context-sensitive, whereas model-free or habitual schemes—once in place—are fixed, bound to the details of previous contexts of successful action. By delicately combining the two modes within an overarching economy, adaptive agents may identify the appropriate contexts in which to deploy the model-free (‘habitual’) schemes. ‘Model-based’ and ‘model-free’ modes of valuation and response, if this is correct, simply name extremes along a single continuum and may appear in many mixtures and combinations determined by the task at hand.
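To make the excerpt's ‘principle of arbitration’ concrete, here is a minimal sketch, assuming each controller reports action values plus a single variance for the current context (the controller names, actions, and numbers are hypothetical and not taken from Daw et al.). `arbitrate` is the winner-take-all version the excerpt describes first; `blend` is a precision-weighted mixture, closer to the ‘many mixtures and combinations’ picture the excerpt ends on.

```python
from dataclasses import dataclass


@dataclass
class Controller:
    name: str
    action_values: dict  # action -> this controller's estimated value
    variance: float      # its uncertainty in the current context


def arbitrate(controllers):
    """Winner-take-all: the least uncertain controller determines the choice."""
    best = min(controllers, key=lambda c: c.variance)
    return best.name, max(best.action_values, key=best.action_values.get)


def blend(controllers):
    """Precision-weighted mixture: each controller counts in proportion to 1/variance."""
    precisions = [1.0 / c.variance for c in controllers]
    total = sum(precisions)
    actions = controllers[0].action_values
    return {
        a: sum(p * c.action_values[a] for p, c in zip(precisions, controllers)) / total
        for a in actions
    }


def controllers_for(habit_variance, planner_variance):
    habit = Controller("model-free habit", {"usual route": 1.0, "detour": 0.0}, habit_variance)
    planner = Controller("model-based planner", {"usual route": 0.0, "detour": 1.0}, planner_variance)
    return [habit, planner]


familiar = controllers_for(habit_variance=0.5, planner_variance=2.0)
novel = controllers_for(habit_variance=4.0, planner_variance=1.0)  # e.g. the usual road is closed

print(arbitrate(familiar))  # ('model-free habit', 'usual route')
print(arbitrate(novel))     # ('model-based planner', 'detour')
print(blend(familiar))      # habit dominates: roughly 0.8 vs 0.2
```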

↑ comment by Steven Byrnes (steve2152) · 2020-05-17T18:50:16.458Z · LW(p) · GW(p)

Yeah, I haven't read any of these references, but I'll elaborate on why I'm currently very skeptical that "model-free" vs "model-based" is a fundamental difference.

I'll start with an example unrelated to motivation, to take it one step at a time.

Imagine that, every few hours, your whole field of vision turns bright blue for a couple seconds, then turns yellow, then goes back to normal. You have no idea why. But pretty soon, every time your field of vision turns blue, you'll start expecting it to then turn yellow within a couple seconds. This expectation is completely divorced from everything else you know, since you have no idea why it's happening, and indeed all your understanding of the world says that this shouldn't be happening.

Now maybe there's a temptation here to say that the expectation of yellow is model-free pattern recognition, and to contrast it with model-based pattern recognition, which would be something like expecting a chess master to beat a beginner, which is a pattern that you can only grasp using your rich contextual knowledge of the world.

But I would not draw that contrast. I would say that the kind of pattern recognition that makes us expect to see yellow after blue just from direct experience without understanding why, is exactly the same kind of pattern recognition that originally built up our entire world-model from scratch, and which continues to modify it throughout our lives.

For example, to a 1-year-old, the fact that the words "1 2 3 4..." are usually followed by "5" is just an arbitrary pattern, a memorized sequence of sounds. But over time we learn other patterns, like seeing two things while someone says "two", and we build connections between all these different patterns, and wind up with a rich web of memorized patterns that comprises our entire world-model.

Different bits of knowledge can be more or less integrated into this web. "I see yellow after blue, and I have no idea why" would be an extreme example—an island of knowledge isolated from everything else we know. But it's a spectrum. For example, take everyone on Earth who knows the phrase "E=mc²". There's a continuum, from people who treat it as a memorized sequence of meaningless sounds in the same category as "yabba dabba doo", to people who know that the E stands for energy but nothing else, to physics students who kinda get it, all the way to professional physicists who find E=mc² to be perfectly obvious and inevitable and then try to explain it on Quora because I guess I had nothing better to do on New Year's Day 2014... :-)

So, I think model-based and model-free is not a fundamental distinction. But I do think that with different ways of acquiring knowledge, there are systematic trends in prediction strength, with first-hand experience leading to much stronger predictions than less-direct inferences. If I have repeated direct experience of my whole field of vision filling with yellow after blue, that will develop into a very very strong (confident) prediction. After enough times seeing blue-then-yellow, if I see blue-then-green I might literally jump out of my seat and scream!! By contrast, the kind of expectation that we arrive at indirectly via our world model tends to be a weaker prediction. If I see a chess master lose to a beginner, I'll be surprised, but I won't jump out of my seat and scream. Of course that's appropriate: I only predicted the chess master would win via a long chain of uncertain probabilistic inferences, like "the master was trying to win", "nobody cheated", "the master was sober", "chess is not the kind of game where you can win just by getting lucky", etc. So it's appropriate for me to be predicting the win with less confidence. As yet a third example, let's say a professional chess commentator is watching the same match, in the context of a proper tournament. The commentator actually might jump out of her chair and scream when the master loses! For her, the sight of masters crushing beginners is something that she has repeatedly and directly experienced. Thus her prediction is much stronger than mine. (I'm not really into chess.)

All this is about perception, not motivation. Now, back to motivation. I think we are motivated to do things proportionally to our prediction of the associated reward.

I think we learn to predict reward in a similar way that we learn to predict anything else. So it's the same idea. Some reward predictions will be from direct experience, and not necessarily well-integrated with the rest of our world-model: "Don't know why, but it feels good when I do X". It's tempting to call these "model-free". Other reward predictions will be more indirect, mediated by our understanding of how some plan will unfold. The latter will tend to be weaker reward predictions in general (as is appropriate since they rely on a longer chain of uncertain inferences), and hence they tend to be less motivating. It's tempting to call these "model-based". But I don't think it's a fundamental or sharp distinction. Even if you say "it feels good when I do X", we have to use our world-model to construct the category X and classify things as X or not-X. Conversely, if you make a plan expecting good results, you implicitly have some abstract category of "plans of this type" and you do have previous direct experience of rewards coming from the objects in this abstract category.

Again, this is just my current take without having read the literature :-D

(Update 6 months later: I have read more of the relevant literature since writing this, but basically stand by what I said here.)
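A rough numerical version of this comment's argument, assuming Laplace's rule of succession for confidence built from direct experience and, for the chess case, a chain of independent inferences that must all hold (the counts and the 0.9-per-link figure are made up): the directly experienced pattern ends up far more confident, so its violation is far more surprising.

```python
import math


def rule_of_succession(successes, trials):
    """Laplace's estimate that the next trial repeats a directly observed pattern."""
    return (successes + 1) / (trials + 2)


def chained_probability(link_probs):
    """Confidence in a conclusion that needs every link of an inference chain
    to hold, assuming (simplistically) that the links are independent."""
    return math.prod(link_probs)


def surprise_bits(p_expected):
    """Surprise, in bits, when the expected outcome does NOT happen."""
    return -math.log2(1.0 - p_expected)


# Seen blue-then-yellow 50 times out of 50.
p_yellow = rule_of_succession(50, 50)
# "master was trying", "nobody cheated", "master was sober", "not a game of luck"
p_master_wins = chained_probability([0.9, 0.9, 0.9, 0.9])
# A commentator who has directly watched 200 such games, all won by the master.
p_commentator = rule_of_succession(200, 200)

print(round(surprise_bits(p_yellow), 2))       # 5.7  (blue-then-green)
print(round(surprise_bits(p_master_wins), 2))  # 1.54 (master loses, casual viewer)
print(round(surprise_bits(p_commentator), 2))  # 7.66 (master loses, commentator)
```

The comment's next step is that reward predictions are learned the same way; the sketch only models the perceptual predictions, not motivation.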

↑ comment by Kaj_Sotala · 2020-05-22T12:24:58.379Z · LW(p) · GW(p)

This reminds me of my discussion with johnswentworth [LW(p) · GW(p)], where I was the one arguing that model-free vs. model-based is a sliding scale. :)

So yes, it seems reasonable to me that these might be best understood as extreme ends of a spectrum... which was part of the reason why I copied that excerpt, as it included the concluding sentence of "‘Model-based’ and ‘model-free’ modes of valuation and response, if this is correct, simply name extremes along a single continuum and may appear in many mixtures and combinations determined by the task at hand" at the end. :)

↑ comment by Slider · 2020-05-17T22:02:26.424Z · LW(p) · GW(p)

I am not the party that used the terms, but to me "blue then yellow" reads as a very simple model, and as model-based thinking.

The part about "we have to use our world-model to construct the category X and classify things as X or not-X" reads to me as saying that you do not think model-free thinking is possible.

You can be in a situation where something in it elicits a response Y from you, without you being aware of what condition makes that experience fall within a triggering reference class. Now, if you know you have such a reaction, you can investigate it inductively by carefully varying the environment and checking whether you react or not. Then you might reverse-engineer the reflex and end up with a model of how the reflex works.

The question of the ineffability of neural networks might be relevant. If a neural network makes a mistake and tries to avoid making that mistake in the future, a lot of weights are adjusted, none of which is easily expressible as doing a different action in some discrete situation. Even a simple model like "blue" seems to point out a set of criteria for ruling whether a novel experience falls within the purview of the model or not. But an ill-defined or fuzzily defined "this kind of situation" is a completely different thing.

comment by Steven Byrnes (steve2152) · 2020-05-16T18:48:15.305Z · LW(p) · GW(p)

Craving superficially looks like it cares about outcomes. However, it actually cares about positive or negative feelings (valence).

Really? My model has been that you can want something without really enjoying it or being happy to have it. (That comes mostly from reading Scott's old post on wanting/liking/approving [LW · GW].) Or maybe you're using "feelings (valence)" in a broader sense that encompasses "dopamine rush"? (I may be misunderstanding the exact meaning of "valence"; I haven't dived deep into it, although I've been meaning to.)

↑ comment by Matt Goldenberg (mr-hire) · 2020-05-18T04:45:14.335Z · LW(p) · GW(p)

Isn't this kind of craving about avoiding negative valence? Having an addiction (a wanting) that's not fulfilled is very unpleasant. My model of this is that the addiction starts from a model-based place of choosing a behavior, then the Pavlovian part takes over as the behavior leads to a positive or avoids a negative, then the model-free system starts to get a handle on what's happening with the Pavlovian part.

↑ comment by Kaj_Sotala · 2020-05-18T11:10:12.309Z · LW(p) · GW(p)

Ah yeah, this definitely describes my experiences with a lot of addiction-like behaviors. The behavior itself isn't necessarily enjoyable, but not doing it feels aversive, and then there's a craving to get rid of that aversive feeling.

↑ comment by Kaj_Sotala · 2020-05-16T20:17:02.328Z · LW(p) · GW(p)

Good question! My answer would be that craving is trying to get things that it expects will bring positive valence, but this prediction may or may not be accurate (though it may once have been). [EDIT: also, see mr-hire's comment [LW(p) · GW(p)].]

comment by Grotace · 2020-05-16T01:39:59.049Z · LW(p) · GW(p)

I am a bit confused by the lines:

"...pursuing pleasure and happiness even if that sacrifices your ability to impact the world. Reducing the influence of the craving makes your motivations less driven by wireheading-like impulses, and more able to see the world clearly even if it is painful."

Once we have deemed that wanting to pursue pleasure and happiness are wireheading-like impulses, why stop ourselves from saying that wanting to impact the world is a wireheading-like impulse?

You also talk about meditators ignoring pain, and how the desire to avoid pain is craving. Why isn't a desire to avoid death craving? You clearly speak as if going to a dentist when you have a tooth ache is the right thing to do, but why? Once you distance your 'self' from pain, why not distance yourself from your rotting teeth?

All my intuitions about how to act are based on this flawed sense of self. And from what you are outlining, I don't see how any intuition about the right way to act can possibly remain once we lose this flawed sense of self.

There's a general discomfort I have with this series of posts that I'm not able to fully articulate, but the above questions seem related.

↑ comment by Kaj_Sotala · 2020-05-16T09:23:58.009Z · LW(p) · GW(p)

Once we have deemed that wanting to pursue pleasure and happiness are wireheading-like impulses, why stop ourselves from saying that wanting to impact the world is a wireheading-like impulse? [...] Why isn't a desire to avoid death craving?

Fair question. One answer is: wanting to save the world can be a wireheading-like impulse, if it is generated by craving as opposed to some other form of motivation. Likewise, pursuing pleasure and happiness can also be non-wireheading-like, if you pursue them for reasons other than craving. Wanting to avoid death, too, is something that you can pursue either out of craving or for other reasons.

For example, you may pursue pleasure:

- Because you value it for its own sake

- Because experiencing pleasure makes your mind and body work better than if you were only experiencing unhappiness

- Because it is useful for releasing craving

- Or for some other reason.

The difference (or at least a difference) is more in how you react to the possibility of there being obstacles to that goal. Take the dentist example.

You might value pleasure and healthy teeth in a non-craving-based way; this leads you to conclude that even though the dentist visit might be unpleasant, overall there is going to be more pleasure if you just go to the dentist right away and get the source of discomfort fixed as soon as possible. You can think about how unpleasant the dentist visit is and weigh it appropriately, without instinctively flinching away from the very thought of that unpleasantness.

Or you might have a craving to pursue pleasure and avoid discomfort, in which case even thinking about the dentist visit is aversive. In third-person terms, you have a constraint "do not think about doing unpleasant things", so as soon as you mentally simulate the dentist visit and the simulation includes discomfort, your mind is pushed to think about something else. I call this "wireheading-like" in the sense that you are taking actions which are superficially furthering the goal in the short term (by avoiding the thought of the dentist, you are avoiding some discomfort), but are actually hurting it in the long term (if you just went to the dentist right away, you'd end up with much less discomfort overall).

You clearly speak as if going to a dentist when you have a toothache is the right thing to do, but why?

Because even when you let go of craving, you still have all of your other values.

I find it helpful to think of craving and non-craving as two layers of motivation: at the bottom there is one system of motivations which is doing things, and on top of it there is craving, which sets a variety of its own goals. Decision-making tends to involve a mixture of motivations, some of them coming from craving and some of them from non-craving. But craving tends to be so "loud", and is so frequently the dominant form of motivation, that the other motivations can become hard to notice.

As an example, maybe you have had the experience of trying out something for the first time without any major expectations one way or the other, so that you have a relaxed time. Because you are so relaxed and unstressed, things go well and the experience ends up being really enjoyable. Afterwards, you develop a desire to repeat the experience and ensure that it goes equally well again; as a result, it doesn't, because you are so focused on how to repeat it rather than on doing things in the relaxed way that actually got you the positive result the first time.

The first time you were acting without craving, which led to good results; then craving seized upon those good results and tried to repeat them, which did not go as well.

(For me, a particularly clear example of this is in the context of romantic relationships. If I'm meeting someone for the first time, I might be relaxed and not particularly focused on whether it will lead to an actual relationship or not. But then if it looks like we might actually end up in a relationship, I can get a major craving towards wanting things to go that way, and then make a mess of it.)

For Western, non-mystical contexts where people have picked up on the craving thing, the examples from this newsletter feel related:

First up is The Inner Game Of Work by W. Timothy Gallwey. This is a successor to The Inner Game Of Tennis, though this one speaks more clearly to me as a non-sporty person. The key thesis of the book is that we have two 'Selves': Self 1 and Self 2.

Self 1 is the voice in your head that gives instructions, e.g. to hit the tennis ball, and then criticises performance as good or bad. You know this voice well, I’m sure. Self 2 is the one that actually hits the ball.

When I was playing at my best, I wasn’t trying to control my shots with self-instruction and evaluation. It was a much simpler process than that. I saw the ball clearly, chose where I wanted to hit it, and I let it happen. Surprisingly, the shots were more controlled when I didn’t try to control them.

This is a book where I was nodding along and highlighting every other sentence with notes like "yes!! this is AT!!". In this context, Alexander Technique is a method to shut Self 1 up and allow Self 2 to express itself.

In those terms, "Self 1" is associated with the construct of the self, as well as with craving. "Self 2" corresponds to the subsystems that just do stuff regardless, and that may indeed often do better if craving doesn't get in the way.

comment by Grotace · 2020-05-16T11:35:52.655Z

Thank you for your reply, and it does clarify some things for me. If I may summarise in short, I think you are saying:

- Craving is a bad sort of motivation because it makes you react badly to obstacles, but other sorts of motivation can be fine.

- Self-conscious/craving-filled states of mind can be unproductive when trying to act on these other sorts of motivations.

I still have some questions though.

You say you may pursue pleasure because you value it for its own sake. But what is the self (or subsystem?) that is doing this valuing? It feels like the valuer is a lot like a “Self 1”, the kind of self which meditation should expose to be some kind of delusion.

Here’s an attempt to put the question another way. Someone suggested in one of the previous comment threads about the topic that non-self was a bit like not identifying with your short-term desires, and also your long-term desires (and then eventually not identifying with anything). So why is identifying yourself with your values compatible with non-self?

EDIT: I reproduce here part of my response to lsusr, which I think is relevant, and is perhaps yet another way to ask the same question.

Typically, when we reason about what actions we should or should not perform, at the base of that reasoning is something of the form "X is intrinsically bad." Now, I'd always associated "X is intrinsically bad" with some sort of statement like "X induces a mental state that feels wrong." Do I have access to this line of reasoning as a perfect meditator?

Concretely, if someone asked me why I would go to a dentist if my teeth were rotting, I would have to reply that I do so because I value my health, or maybe because unhealthiness is intrinsically bad. And if they asked me why I value my health, I cannot answer except to point to the fact that it does not feel good to me, in my head. But from what I understand, the enlightened cannot say this, because they feel that everything is good to them, in their heads.

I kind of feel that the enlightened cannot provide any reasons for their actions at all.

comment by Kaj_Sotala · 2020-05-16T12:27:58.505Z

Craving is a bad sort of motivation because it makes you react badly to obstacles, but other sorts of motivation can be fine.

Self-conscious/craving-filled states of mind can be unproductive when trying to act on these other sorts of motivations.

Roughly, yes, though I would be a bit cautious about framing craving as outright bad, more like "the tradeoffs involved may make it better to let go of it in the end"; but of course, that depends on what exactly one is trying to achieve. As I noted in the post, it is also possible for one to weaken their craving with bad results, at least if we evaluate "results" from the point of view of achieving things.

You say you may pursue pleasure because you value it for its own sake. But what is the self (or subsystem?) that is doing this valuing? It feels like the valuer is a lot like a “Self 1”, the kind of self which meditation should expose to be some kind of delusion.

Different subsystems make valuations all the time; that's not an illusion. What's illusory is the notion that all of the different valuations are coming from a single self, and that positive/negative valence are things that the system intrinsically has to pursue/avoid.

For instance, one part of the mechanism is that at any given moment, you may have conscious intentions about what to do next. If you have two conflicting intentions, then those conflicting intentions are generated by different subsystems. However, frequently the mind-system attributes all intentions to a single source: "the self". Operating based on that assumption, the mind-system models itself as having a single decision-maker that generates all intentions and observes all experiences.

In The Apologist and the Revolutionary, Scott Alexander writes:

Anosognosia is the condition of not being aware of your own disabilities. [...] Take the example of the woman discussed in Lishman's Organic Psychiatry. After a right-hemisphere stroke, she lost movement in her left arm but continuously denied it. When the doctor asked her to move her arm, and she observed it not moving, she claimed that it wasn't actually her arm, it was her daughter's. Why was her daughter's arm attached to her shoulder? The patient claimed her daughter had been there in the bed with her all week. Why was her wedding ring on her daughter's hand? The patient said her daughter had borrowed it. Where was the patient's arm? The patient "turned her head and searched in a bemused way over her left shoulder". [...]

Dr. Ramachandran [...] posits two different reasoning modules located in the two different hemispheres. The left brain tries to fit the data to the theory to preserve a coherent internal narrative and prevent a person from jumping back and forth between conclusions upon each new data point. It is primarily an apologist, there to explain why any experience is exactly what its own theory would have predicted. The right brain is the seat of the second virtue. When it's had enough of the left-brain's confabulating, it initiates a Kuhnian paradigm shift to a completely new narrative. Ramachandran describes it as "a left-wing revolutionary".

Normally these two systems work in balance. But if a stroke takes the revolutionary offline, the brain loses its ability to change its mind about anything significant. If your left arm was working before your stroke, the little voice that ought to tell you it might be time to reject the "left arm works fine" theory goes silent. The only one left is the poor apologist, who must tirelessly invent stranger and stranger excuses for why all the facts really fit the "left arm works fine" theory perfectly well. [...]

This divorce between the apologist and the revolutionary might also explain some of the odd behavior of split-brain patients. Consider the following experiment: a split-brain patient was shown two images, one in each visual field. The left hemisphere received the image of a chicken claw, and the right hemisphere received the image of a snowed-in house. The patient was asked verbally to describe what he saw, activating the left (more verbal) hemisphere. The patient said he saw a chicken claw, as expected. Then the patient was asked to point with his left hand (controlled by the right hemisphere) to a picture related to the scene. Among the pictures available were a shovel and a chicken. He pointed to the shovel. So far, no crazier than what we've come to expect from neuroscience.

Now the doctor verbally asked the patient to describe why he just pointed to the shovel. The patient verbally (left hemisphere!) answered that he saw a chicken claw, and of course shovels are necessary to clean out chicken sheds, so he pointed to the shovel to indicate chickens. The apologist in the left-brain is helpless to do anything besides explain why the data fits its own theory, and its own theory is that whatever happened had something to do with chickens, dammit!

One way of explaining the construct of the self is that there's a reasoning module which constructs a story of there being a single decision-maker, "the self", that's deciding everything. In the case of the split-brain patient, one subsystem has decided to point at a shovel because it's related to the snowed-in house that it saw; but the subsystem that is constructing the narrative of the self being in charge of everything has only seen a chicken claw. So in order to fit the things that it knows into a coherent story, it creates a spurious narrative where the self saw the chicken claw, shovels are needed for cleaning chicken sheds, and that's the reason why the self picked the shovel.

But what actually made the decision was an independent subsystem, cut off from the self-narrative subsystem, that inferred a shovel would be useful for digging your way out of a snowed-in house. The subsystem creating the construct of the self was responsible for neither the decision nor the implicit valuations involved in it; it merely created a story that took the credit for what another subsystem had already done.

Seeing the nature of the self doesn't stop you from making valuations, it just makes you see that they are not coming from the self. But many of the valuations themselves remain unchanged by that. (As the Zen proverb goes: "Before enlightenment, chop wood, carry water. After enlightenment, chop wood, carry water.")

comment by Grotace · 2020-05-16T19:58:57.234Z

Thank you for your reply, which is helpful. I understand it takes time and energy to compose these responses, so please don't feel too pressured to keep responding.

1. You say that positive/negative valence are not things that the system intrinsically has to pursue/avoid. Then when the system says it values something, why does it say this? A direct question: there exists at least one case in which the "why" is not answered by positive/negative valence (or perhaps it is not answered at all). What is this case, and what is the answer to the "why"?

2. Often in real life, we feel conflicted within ourselves. Maybe different valuations made by different parts of us contradict each other in some particular situation. And then we feel confused. Now one way we resolve this contradiction is to reason about our values. Maybe you sit and write down a series of assumptions, logical deductions, etc. The output of this process is not just another thing some subsystem is shouting about. Reasons are the kind of things that motivate action, in anyone. So it seems the reasoning module is somehow special, and I think there's a long tradition in Western philosophy of equating this reasoner with the self. This self takes into account all the things parts of it feel and value, and makes a decision. This self computes the tradeoffs involved in keeping/letting go of craving. What do you think about this?

I think you are saying that the reasoning module is also somehow always under suspicion of producing mere rationalisations (like in the chicken claw story), and that even when we think it is the reasoning module making a decision, we're often deluded. But if the reasoning module, and every other module, is to be treated as somehow not-final, how do (should) you make a decision when you're confused? I think you would reject this kind of first-person decision making, and give a sort of third-person explanation of how the brain just does make decisions, somehow accumulating the things various subsystems say. But this provides no practical knowledge about what processes the brains of people who end up making good (or bad) decisions deploy.