Comments

Grok 3 told me 9.11 > 9.9. (common with other LLMs too), but again, turning on Thinking solves it.

This is unrelated to Grok 3, but I am not convinced that the above part of Andrej Karpathy's tweet is a "gotcha". Software version numbers use dots with a different meaning than decimal numbers and there 9.11 > 9.9 would be correct.

I don't think there is a clear correct choice of which of these contexts to assume for an LLM if it only gets these few tokens.

E.g. if I ask Claude, the pure "is 9.11>9.9" question gives me a no, whereas

"I am trying to install a python package. Could you tell me whether `9.11>9.9`?" gives me a yes.

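The two readings can be made concrete with a minimal sketch. The helper `parse_version` is a hypothetical name introduced here, and it assumes purely numeric dotted versions (real package versions can carry suffixes that this ignores):

```python
def parse_version(text):
    """Split a dotted version string into a tuple of integers (numeric parts only)."""
    return tuple(int(part) for part in text.split("."))

# Reading 1: decimal numbers, where 9.11 is smaller than 9.90.
as_decimal = float("9.11") > float("9.9")

# Reading 2: version numbers, where minor version 11 comes after minor version 9.
as_version = parse_version("9.11") > parse_version("9.9")

print(as_decimal, as_version)
```

The same characters give opposite answers depending on which comparison is assumed, which is the ambiguity the model has to resolve from context.
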
For me, a strong reason why I do not see myself[1] doing deliberate practice as you (very understandably) suggest is that, on some level, the part of my mind which decides on how much motivational oomph and thus effort is put into activities just in fact does not care much about all of these abstract and long-term goals.

Deliberate practice is a lot of hard work and the part of my mind which makes decisions about such levels of mental effort just does not see the benefits. There is a way in which a system that circumvents this motivational barrier is working against my short-term goals, and it is the latter which significantly control motivation. Thus, such a system will "just sort of sputter and fail" in such a way that, consciously, I don't even want to think about what went wrong.

If Feedbackloop Rationality wants to move me to be more rational, it has to work with my current state of irrationality. And this includes my short-sighted motivations.

And I think you do describe a bunch of the correct solutions: Building trust between one's short-term motivations and long-term goals. Starting with lower-effort small-scale goals where both perspectives can get a feel for what cooperation actually looks like and can learn that it can be worth the compromises. In some sense, it seems to me that once one is capable of the kind of deliberate practice that you suggest, much of this bootstrapping of agentic consistency between short-term motivation and deliberate goals has already happened.

On the other hand, it might be perfectly fine if Feedbackloop Rationality requires some not-yet-teachable minimal proficiency at this which only a fraction of people already have. If Feedbackloop Rationality allows these people to improve their thinking and contribute to hard x-risk problems, that is great by itself.

- ^

To some degree, I am describing an imaginary person here. But the pattern I describe definitely exists in my thinking even if less clearly than I put it above.

Thank you for another beautiful essay at real thinking! This time about the mental stance itself.

But I’ll describe a few tags I’m currently using, when I remind myself to “really think.” Suggestions/tips from readers would also be welcome.

I think there is a strong conceptual overlap with what John Vervaeke describes as Relevance Realisation and wisdom.

I'll attempt a summary of my understanding of John Vervaeke's Relevance Realisation.

A key capability of any agentic/living beings is to prune the exponentially exploding space of possibilities when making any decision or thought. We are computationally bounded, and how we deal with that is crucial. Vervaeke terms the process that does this Relevance Realisation.

There is a lot of detail to his model, but let's jump to how some of this plays out in human thinking: A core aspect in how we are agentic is our use of memeplexes that form an "agent-arena-relationship": we combine a worldview with an agent that is suited to that world and then tie our identity to that agent. We build toy versions all the time (many games are like this), but, according to Vervaeke's theses in the "Awakening from the Meaning Crisis" lectures, modern western culture has somewhat lost track of the cultural structures which allow individuals to coordinate on and (healthily) grow their mind's agent-arena-relationship. We have institutions of truth (how to reach good factual statements rather than falsehoods), but not of wisdom (how to live with a healthy stance towards reality rather than bullshit; historically, religious institutions played that role, but they now successfully do that for fewer and fewer people).

Inhabiting a functional such relationship feels alive and vibrant ("inhabit" = tying one's identity into these memes), whereas the lack of a functional agent-arena-relationship feels dream-like (zombies are a good representation; maybe "NPC" is a more recent meme that points at this).

A related thing is people having a spiritual experience: this involves glimpsing a new agent-arena-relationship which then sometimes gets nourished into a re-structuring of one's self-concept and priorities.

Tying this back to "real thinking"

Although the process I described is not the same thing as real thinking, I do think that there are important similarities.

Regarding how to do this well, one important point of Vervaeke's is that humans necessarily enter the world with very limited concepts of "self", "agent" or "arena". This perspective makes it clear that a core part of what we do while growing up is refining these concepts. A whole lot of our nature is about doing this process of transcending our previous self-concept. Vervaeke loves the quote "the sage is to the adult as the adult is to the child" to point at what the word wisdom means.

The process, according to his recommendations, involves

- a Community of Practice (a community of people who share the goal of re-orienting themselves. One such is forming with and around him at awakentomeaning.com),

- an Ecology of Practices (meditation, reflecting on philosophy, embodiment practices, etc. are all contributing factors to reducing self-delusion; the term practice emphasizes that it is about a regular activity rather than about fixed beliefs). A good way to get a feel for his perspective might be the first episode(s) of his After Socrates YouTube series.

and from my impression, a lot of this is practicing the ability of inhabiting/changing the currently active agent-arena-perspective, exploring its boundaries, not flinching away from noticing its limitations, engaging with the perspectives which others have built and so on. Generally, a kind of fluidity in the mental motions which are involved in this.

I hope my descriptions are sufficiently right to give an impression of his perspective and whether there are some ideas that are valuable to you :)

It happens to be the case that 1 kWh = 3,600,000 kg/s². You could substitute this and cancel units to get the same answer.

This should be kg m²/s².[1] On the other hand, this is a nice demonstration of how useful computers are: it really is too easy to accidentally drop a term when converting units.

- ^

I double checked
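
One way to do such a double check mechanically is to carry the exponents of the SI base units along with the number. This is only a minimal sketch; the tuple layout `(value, (kg, m, s))` and the helper `times` are illustrative choices made here, not part of any unit library:

```python
def times(a, b):
    """Multiply two quantities given as (value, (kg_exp, m_exp, s_exp))."""
    (value_a, dims_a), (value_b, dims_b) = a, b
    return value_a * value_b, tuple(x + y for x, y in zip(dims_a, dims_b))

WATT = (1.0, (1, 2, -3))      # 1 W = 1 kg m^2 / s^3
SECOND = (1.0, (0, 0, 1))
KILO = (1000.0, (0, 0, 0))    # dimensionless prefix
HOUR = times((3600.0, (0, 0, 0)), SECOND)

KWH = times(times(KILO, WATT), HOUR)
print(KWH)  # value in SI base units, followed by the exponents of (kg, m, s)
```

The metre exponent stays at 2 through the whole calculation, so dropping the m² term shows up immediately as a mismatch in the exponent tuple.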

I read

to have a dude clearly describe a phenomenon he is clearly experiencing as “mostly female”

as "he makes a claim about a thought pattern he considers mostly female", not as "he himself is described by the pattern" (QC does demonstrate high epistemic confidence in that post). Thus, I don't think that Elizabeth would disagree with you.

Thanks for the guidance! Together with Gwern's reply my understanding now is that caching can indeed be very fluidly integrated into the architecture (and that there is a whole fascinating field that I could try to learn about).

After letting the ideas settle for a bit, I think that one aspect that might have led me to think

In my mind, there is an amount of internal confusion which feels much stronger than what I would expect for an agent as in the OP

is that a Bayesian agent as described still is (or at least could be) very "monolithic" in its world model. I struggle with putting this into words, but my thinking feels a lot more disjointed/local/modular. It would make sense if there is a spectrum from "basically global/serial computation" to "fully distributed/parallel computation" where going more to the right adds sources of internal confusion.

What a reply, thank you!

We are sitting close to the playground on top of red and blue blankets

Hmm... In my mind, the Pilot wave theory position does introduce a substrate dependence for the particle-position vs. wavefunction distinction, but need not distinguish any further than that. This still leaves simulation, AI-consciousness and mind-uploads completely open. It seems to me that the Pilot wave vs. Many worlds question is independent of/orthogonal to these questions.

I fully agree that saying "only corpuscle folk is real" (nice term by the way!) is a move that needs explaining. One advantage of Pilot wave theory is that one need not wonder about where the Born probabilities are coming from - they are directly implied if one wishes to make predictions about the future. One not-so-satisfying property is that the particle positions are fully guided by the wavefunction without any influence going the other way. I do agree that this makes it a lot easier to regard the positions as a superfluous addition that Occam's razor should cut away.

For me, an important aspect of these discussions is that we know that our understanding is incomplete for each of these perspectives. Gravity has not been satisfyingly incorporated into any of these. Further, the Church-Turing thesis is an open question.

I am not too familiar with how advocates of Pilot wave theory usually state this, but I want to disagree slightly. I fully agree with the description of what happens mathematically in Pilot wave theory, but I think that there is a way in which the worlds that one finds oneself outside of do not exist.

If we assume that it is in fact just the particle positions which are "reality", the only way in which the wave function (including all many worlds contributions) affects "reality" is by influencing its future dynamics. Sure, this means that the many worlds computationally do exist even in pilot wave theory. But I find the idea that "the way that the world state evolves is influenced by huge amounts of world states that 'could have been'" meaningfully different to "there literally are other worlds that include versions of myself which are just as real as I am". The first is a lot closer to everyday intuitions.

Well, this works to the degree to which we can (arbitrarily?) decide the particle positions to define "reality" (the thing in the theory that we want to look at in order to locate ourselves in the theory) in a way that is separate from being computationally a part of the model. One can easily have different opinions on how plausible this step is.

Finally, if we want to make the model capture certain non-Bayesian human behaviors while still keeping most of the picture, we can assume that instrumental values and/or epistemic updates are cached. This creates the possibility of cache inconsistency/incoherence.

In my mind, there is an amount of internal confusion which feels much stronger than what I would expect for an agent as in the OP. Or is the idea possibly that everything in the architecture uses caching and instrumental values? From reading, I imagined a memory+cache structure instead of being closer to "cache all the way down".

Apart from this, I would bet that something interesting will happen for a somewhat human-comparable agent with regards to self-modelling and identity. Would anything similar to human identity emerge or would this require additional structure? Some representation of the agent itself and its capabilities should be present at least.

After playing around for a few minutes, I like your app with >95% probability ;) Compare this bayescalc.io calculation.

Unfortunately, I do not have useful links for this - my understanding comes from non-English podcasts of a nutritionist. Please do not rely on my memory, but maybe this can be helpful for localizing good hypotheses.

According to how I remember this, one complication of veg*n diets and amino acids is that the question of which of the amino acids can be produced by your body and which are essential can effectively depend on your personal genes. In the podcast they mentioned that, especially for males, there is a fraction of the population who totally would need to supplement some "non-essential" amino acids if they want to stay healthy and follow veg*n diets. As these nutrients are usually not considered worthy of consideration (because most people really do not need to think about them separately and also do not restrict their diet to avoid animal sources), they are not included in usual supplements and nutrition advice.

(I think the term is "meat-based bioactive compounds").

I think Elizabeth also emphasized this aspect in this post

log score of my pill predictions (-0.6)

If I did not make a mistake, this score could be achieved by e.g. giving ~55% probabilities and being correct every time or by always giving 70% probabilities and being right ~69 % of the time.
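
These two scenarios can be checked with a short sketch. It assumes that the reported number is the mean natural-log score per prediction; the function name `expected_log_score` is introduced here only for illustration:

```python
import math

def expected_log_score(stated_p, hit_rate):
    """Mean natural-log score when always stating `stated_p` and being right `hit_rate` of the time."""
    return hit_rate * math.log(stated_p) + (1 - hit_rate) * math.log(1 - stated_p)

# Scenario 1: always say 55% and always be right.
always_right_55 = expected_log_score(0.55, 1.0)

# Scenario 2: always say 70% and be right 69% of the time.
calibrated_70 = expected_log_score(0.70, 0.69)

print(round(always_right_55, 3), round(calibrated_70, 3))
```

Under these assumptions the first scenario lands at about -0.60 and the second at about -0.62, so both are in the neighbourhood of the reported score. A different log base or a summed rather than averaged score would change the numbers.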

you'd expect the difference in placebo-caffeine scores to drop

I am not sure about this. I could also imagine that the difference remains similar, but instead the baseline for concentration etc. shifts downwards such that caffeine-days are only as good as the old baseline and placebo-days are worse than the old baseline.

Update: I found a proof of the "exponential number of near-orthogonal vectors" in these lecture notes https://www.cs.princeton.edu/courses/archive/fall16/cos521/Lectures/lec9.pdf From my understanding, the proof uses a quantification of just how likely near-orthogonality becomes in high-dimensional spaces and derives a probability for pairwise near-orthogonality of many states.

This does not quite help my intuitions, but I'll just assume that the "is it possible to tile the surface efficiently with circles even if their size gets close to the 45° threshold" resolves to "yes, if the dimensionality is high enough".

One interesting aspect of these considerations should be that with growing dimensionality the definition of near-orthogonality can be made tighter without losing the exponential number of vectors. This should define a natural signal-to-noise ratio for information encoded in this fashion.

Weirdly, in spaces of high dimension, almost all vectors are almost at right angles.

This part, I can imagine. With a fixed reference vector written as $(1, 0, \dots, 0)$, a second random vector has many dimensions that it can distribute its length along, while for alignment to the reference (the scalar product) only the first entry contributes.
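
A small numerical experiment illustrates this. It is only a sketch: it draws random directions from a standard normal distribution (one common way to sample uniformly on a sphere), and the dimensions 3 and 1000 and the sample size of 200 pairs are arbitrary choices made here:

```python
import math
import random

def random_unit_vector(dim, rng):
    """Draw a direction uniformly on the sphere by normalizing a Gaussian sample."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def mean_abs_cosine(dim, pairs, rng):
    """Average |cos(angle)| between independently drawn random unit vectors."""
    total = 0.0
    for _ in range(pairs):
        a = random_unit_vector(dim, rng)
        b = random_unit_vector(dim, rng)
        total += abs(sum(x * y for x, y in zip(a, b)))
    return total / pairs

rng = random.Random(0)
low_dim = mean_abs_cosine(3, 200, rng)
high_dim = mean_abs_cosine(1000, 200, rng)
print(low_dim, high_dim)
```

In three dimensions the average alignment is substantial, while in 1000 dimensions it is close to zero, i.e. typical pairs are almost at right angles.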

It's perfectly feasible for this space to represent zillions of concepts almost at right angles to each other.

This part I struggle with. Is there an intuitive argument for why this is possible?

If I assume smaller angles below 60° or so, a non-rigorous argument could be:

- each vector blocks a 30°-circle around it on the d-hypersphere[1] (if the circles of two vectors touch, their relative angle is 60°).

- an estimate for the blocked area could be that this is mostly a 'flat' (d-1)-sphere of radius $r = \sin 30^\circ = 1/2$ which has an area that scales with $r^{d-1}$

- the full hypersphere has a surface area with a similar pre-factor but full radius $1$

- thus we can expect to fit a number of vectors that scales roughly like $(1/r)^{d-1} = 2^{d-1}$ which is an exponential growth in $d$.

For a proof, one would need to include whether it is possible to tile the surface efficiently with the circles. This seems clearly true for tiny angles (we can stack spheres in approximately flat space just fine), but seems a lot less obvious for larger angles. For example, full orthogonality would mean 90° angles and my estimate would still give $(1/\sin 45^\circ)^{d-1} = 2^{(d-1)/2}$, an exponential estimate for the number of strictly orthogonal states although these are definitely not exponentially many.
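
The overshoot at 90° can be made explicit with a rough sketch of this counting argument. It assumes one particular reading of the estimate, namely that each blocked cap is approximated by a flat ball whose radius is the sine of half the minimum angle and that pre-factors are ignored; `naive_cap_count` is a name introduced here:

```python
import math

def naive_cap_count(dim, min_angle_deg):
    """Non-rigorous estimate: ratio of sphere area to the area of one flat cap, ignoring pre-factors."""
    cap_radius = math.sin(math.radians(min_angle_deg / 2))
    return (1.0 / cap_radius) ** (dim - 1)

for dim in (10, 100):
    estimate_60 = naive_cap_count(dim, 60)   # vectors at least 60 degrees apart
    estimate_90 = naive_cap_count(dim, 90)   # strictly orthogonal vectors
    print(dim, estimate_60, estimate_90, "true orthogonal count:", dim)
```

For strict orthogonality the true count is just the dimension, so the exponential value returned for 90° shows where the flat-cap approximation and the efficient-tiling assumption stop being trustworthy.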

and a copy of that circle on the opposite end of the sphere ↩︎

But the best outcomes seem to come out of homeopathy, which is as perfect of a placebo arm as one can get.

I did expect to be surprised by the post given the title, but I did not expect this surprise.

I have previously heard lots of advocates for evidence-based medicine claim that homoeopathy has very weak evidence for effects (mostly the amount that one would expect from noise and flawed studies, given the amount of effort being put into proving its efficacy) – do I understand correctly that this is an acceptable interpretation, while the aggregate mortality of real-world patients (as opposed to RCT participants) clearly improves when treated homoeopathically (compared to usual medicine)?

More generally, if I assume that the shift "no free healthcare"->"free healthcare" does not improve outcomes, and that "healthcare"->"healthcare+homoeopathy" does improve outcomes, wouldn't that imply that "healthcare+homoeopathy" is preferable to "no free healthcare"?

- of course, there are a lot of steps in this argument that can go wrong

- but generally, I would expect that something like this reasoning should be right.

- if I do assume that homoeopathy is practically a placebo, this can point us to at least some fraction of treatments which should be avoided: those that homoeopathy claims to heal without the need for other treatments

That looks like an argument that an approach like your "What I do"-section can actually lead to strong benefits from the health system, and that non-excessively complicated strategies are available.

One aspect which I disagree with is that collapse is the important thing to look at. Decoherence is sufficient to get classical behaviour on the branches of the wave function. There is no need to consider collapse if we care about 'weird' vs. classical behaviour. This is still the case even if the whole universe is collapse-resistant (as is the case in the many worlds interpretation). The point of this is that true cat states ( = superposed universe branches) do not look weird.

The whole 'macroscopic quantum effects' are interferences between whole universes branches from the view of this small quantum object in they brain.

Superposition of universe - We can certainly regard the possibility that the macroscopic world is in a superposition as seen from our brain. This is what we should expect (absent collapse) just from the sizes of universe and brain:

- The size of our brain corresponds to a limited number for the dimensionality of all possible brain states (we can include all sub-atomic particles for this)

- If the number of branches of the universe is larger than the number of possible brain states, there is no possible wave function in which there aren't some contributions in which the universe is in a superposition with regards to the brain. Some brain states must be associated with multiple branches.

- the universe is a lot larger than the brain and dimensionality scales exponentially with particle number

- further, it seems highly likely that many physical brain-states correspond to identical mind states (some unnoticeable vibration propagating through my body does not seem to scramble my thinking very much)

Because of this, anyone following the many worlds interpretation should agree that from our perspective, the universe is always in a superposition - no unknown brain properties required. But due to decoherence (and assuming that branches will not meet), this makes no difference and we can replace the superposition with a probability distribution.

Perhaps this is captured by your "why Everett called his theory relative interpretation of QM" - I did not read his original works.

The question now becomes the interference between whole universe branches: A deep assumption in quantum theory is locality which implies that two branches must be equal in all properties[1] in order to interfere[2]. Because of this, interference of branches can only look like "things evolving in a weird direction" (double slit experiment) and not like "we encounter a wholly different branch of reality" (fictional stories where people meet their alternate-reality versions).

Because of this, I do not see how quantum mechanics could create the weird effects that it is supposed to explain.

If we do assume that human minds have an extra ability to facilitate interaction between otherwise distant branches if they are in a superposition compared to us, this of course could create a lot of weirdness. But this seems like a huge claim to me that would depart massively from much of what current physics believes. Without a much more specific model, this feels closer to a non-explanation than to an explanation.

more strictly: must have mutual support in phase-space. For non-physicists: a point in phase-space is how classical mechanics describes a world. ↩︎

This is not a necessary property of quantum theories, but it is one of the core assumptions used in e.g. the standard model. People who explore quantum gravity do consider theories which soften this assumption ↩︎

I mean, if it's about looking for post-hoc rationalizations, what's even the point of pretending there's a consistent ethical system?

Hmm, I would not describe it as rationalization in the motivated reasoning sense.

My model of this process is that most of my ethical intuitions are mostly a black-box and often contradictory, but still in the end contain a lot more information about what I deem good than any of the explicit reasoning I am capable of. If however, I find an explicit model which manages to explain my intuitions sufficiently well, I am willing to update or override my intuitions. I would in the end accept an argument that goes against some of my intuitions if it is strong enough. But I will also strive to find a theory which manages to combine all the intuitions into a functioning whole.

In this case, I have an intuition towards negative utilitarianism, which really dislikes utility monsters, but I also have noticed the tendency that I land closer to symmetric utilitarianism when I use explicit reasoning. Due to this, the likely options are that after further reflection I

- would be convinced that utility monsters are fine, actually.

- would come to believe that there are strong utilitarian arguments to have a policy against utility monsters such that in practice they would almost always be bad

- would shift in some other direction

and my intuition for negative utilitarianism would prefer cases 2 or 3.

So the above description was what was going on in my mind, and combined with the always-present possibility that I am bullshitting myself, led to the formulation I used :)

As I understand, the main difference form her view is that decoherence is the relation between objects in the system, but measurement is related to the whole system "collapse".

I think I would agree to "decoherence does not solve the measurement problem" as the measurement problem has different sub-problems. One corresponds to the measurement postulate which different interpretations address differently and which Sabine Hossenfelder is mostly referring to in the video. But the other one is the question of why the typical measurement result looks like a classical world - and this is where decoherence is extremely powerful: it works so well that we do not have any measurements which manage to distinguish between the hypotheses of

- "only the expected decoherence, no collapse"

- "the expected decoherence, but additional collapse"

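With regards to her example of Schrödinger's cat, this means that the state $\frac{1}{\sqrt{2}}\left(|\text{alive}\rangle + |\text{dead}\rangle\right)$ will not actually occur. It will always be a state where the environment must be part of the equation such that the state is more like $\frac{1}{\sqrt{2}}\left(|\text{alive}\rangle|\text{env}_\text{alive}\rangle + |\text{dead}\rangle|\text{env}_\text{dead}\rangle\right)$ after a nanosecond and already includes any surrounding humans after a microsecond (light went 300 m in all directions by then). When human perception starts being relevant, the state is $\frac{1}{\sqrt{2}}\left(|\text{alive}\rangle|\text{human sees alive}\rangle + |\text{dead}\rangle|\text{human sees dead}\rangle\right)$.

With regards to the first part of the measurement problem, this is not yet a solution. As such I would agree with Sabine Hossenfelder. But it does take away a lot of the weirdness because there is no branch on the wave function that contains non-classical behaviour[1].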

Wigner's friend.

You got me here. I did not follow the large debate around Wigner's friend as i) this is not the topic I should spend huge amounts of time on, and ii) my expectations were that these will "boil down to normality" once I manage to understand all of the details of what is being discussed anyway.

It can of course be that people would convince me otherwise, but before that happens I do not see how these types of situations could lead to strange behaviour that isn't already part of the well-established examples such as Schrödinger's cat. Structurally, they only differ in that there are multiple subsequent 'measurements', and this can only create new problems if the formalism used for measurements is the source. I am confident that the many worlds and Bohmian interpretations do not lead to weirdness in measurements[2], such that I am as-of-yet not convinced.

I think (give like 30 per cent probability) that the general nature of the UFO phenomenon is that it is anti-epistemic

Thanks for clarifying! (I take this to be mostly 'b) physical world' in that it isn't 'humans have bad epistemics') Given the argument of the OP, I would at least agree that the remaining probability mass for UFOs/weirdness as a physical thing is on the cases where the weird things do mess with our perception, sensors and/or epistemics.

The difficult thing about such hypotheses is that they can quickly evolve to being able to explain anything and becoming worthless as a world-model.

Could you clarify whether you attribute the similarity to a) how human minds work, or b) how the physical world works, or c) something I am not thinking of?

b would seem clearly mistaken to me:

In some sense it is similar to large-scale Schrodinger's cat, which can be in the state of both alive and dead only when unobserved.

For this I would recommend to use the decoherence conception of what measurements do (which is the natural choice in the Many Worlds Interpretation and still highly relevant if one assumes that a physical collapse occurs during measurement processes). From this perspective, what any measurement does is to separate the wave function into a bunch of contributions where each contains the measurement device showing result x and the measured system having the property x that is being measured[1]. Due to the high-dimensional space that the wave-function moves in, these parts will tend to never meet again, and this is what the classical limit means[2]. When people talk about 'observation' here, it is important to realize that an arbitrary physical interaction with the outside world is sufficient to count. This includes air molecules, thermal radiation, cosmic radiation, and very likely even gravity[3]. For objects large enough that we can see them, it will not happen without extreme effort that they remain 'unobserved' for longer times[4].

For anything macroscopic, there is no reason to believe that "human observation" is remotely relevant for observing classical behaviour.

This assumes that this is a useful measurement. More generally, any arbitrary interaction between two systems does the same thing except that there is no legible "result x" or "property x" which we could make use of. ↩︎

of course, if there is a collapse which actually removes most of the parts there is additional reason why they will not meet in the future. The measurements we have done so far do not show any indication of a collapse in the regimes we could access, which implies that this process of decoherence is sufficient as a description for everyday behaviour. The reason why we cannot access further regimes is that decoherence kicks in and makes the behaviour classical even without the need for a physical collapse. ↩︎

Though getting towards experiments which manage to remove the other decoherence sources enough that gravity's decoherence even could be observed is one of the large goals that researchers are striving for. ↩︎

E.g. Decoherence and the Quantum-to-Classical Transition by Maximilian Schlosshauer has a nice derivation and numbers for the 'not-being-observed' time scales: Table 3.2 gives the time scales resulting from different 'observers' for a dust grain of size 0.01 mm as "1 s due to cosmic background radiation, s from photons at room temperature, s from collisions with air molecules". ↩︎

You are right. Somehow I had failed to attribute this to culture. There clearly are lots of systems with a zero-sum competitive mentality.

Compared to the US, the German school and social system seems significantly less competitive to me (from what I can tell, living only in the latter). There still is a lot of competition, but my impression is that there are more niches which provide people with slack.

I do tend to round things off to utilitarianism it seems.

Your point on the distinct categories between puppies and other animals is a good one. With the categorical distinction in place, our other actions aren't really utilitarian trade-offs any more. But there are animals like guinea pigs which are in multiple categories.

what do we do with the now created utility monster?

I have trouble seriously imagining a utility monster which actually is net-positive from a total utility standpoint. In the hypothetical with the scientist, I would tend towards not letting the monster do harm just to remove incentives for dangerous research. For the more general case, I would search for some excuses why I can be a good utilitarian while stopping the monster. And hope that I actually find a convincing argument. Maybe I think that most good in the world needs strong cooperation which is undermined by the existence of utility monsters.

In a way, the stereotypical "Karen" is a utility monster

One complication here is that I would expect the stereotypical Karen to be mostly role-playing such that it would not actually be positive utility to follow her whims. But then, there could still be a stereotypical Caren who actually has very strong emotions/qualia/the-thing-that-matters-for-utility. I have no idea how this would play out or how people would even get convinced that she is Caren and not Karen.

Very fun read, thanks!

[...] we ought to indeed accept the numbers and with them the super-puppy and the pain to be found within its joyous and drooling jaws, which isn't the common sense ethical approach to the problem (namely, take away the mad scientist's grant funding and have them work on something useful, like a RCT on whether fresh mints cause cancer)

I am not sure about this description of common sense morality. People might agree about not creating such a super-puppy, but we do some horrible stuff to lab/farm animals in order to improve medical understanding/enjoy cheaper food. Of course, there isn't the aspect of "something directly values the pain of others", but we are willing to hurt puppies if this helps human interests.

Also, being against the existence of 'utility monsters which actually enjoy the harm to others' could also be argued for from a utilitarian perspective. We have little reason to believe that "harm to others" plays any significant/unavoidable role in feeling joy. Thus, anyone who creates entities with this property is probably actually optimizing for something else.

For normal utility monsters (entities which just have huge moral weight), my impression is that people mostly accept this in everyday examples. Except maybe for comparisons between humans where we have large amounts of historical examples where people used these arguments to ruin the lives of others using flawed/motivated reasoning.

Just because there is somebody who is smarter than you, who works on some specific topic, doesn't mean that you shouldn't work on it. You should work on the thing where you can make the largest positive difference. [...]

I think you address an important point. Especially for people who are attracted to LessWrong, there tends to be a lot of identification with one's cognitive abilities. Realizing that there are other people who just are significantly more capable can be emotionally difficult.

For me, one important realization was that my original emotions around this kind of assumed a competition where not-winning was actually negative. When I grokked that a huge fraction of these super capable people are actually trying to do good things, this helped me shift towards mostly being glad if I encounter such people.

Also, The Point of Trade and Being the (Pareto) best in the World are good posts which emphasize that "contributing value" needs way fewer assumptions/abilities than one might think.

I do think that tuning cognitive strategies (and practice in general) is relevant to improving the algorithm.

Practically hard-coded vs. Literally hard-coded

My introspective impression is less that there are "hard-coded algorithms" in the sense of hardware vs. software, but that it is mostly practically impossible to create major changes for humans.

Our access to unconscious decision-making is limited and there is a huge number of decisions which one would need to focus on. I think this is a large reason why the realistic options for people are mostly i) only ever scratching the surface for a large number of directions for cognitive improvement, or ii) focussing really strongly on a narrow topic and becoming impressive in that topic alone[1].

Then, our motivational system is not really optimizing for this process and might well push in different directions. Our motivational system is part of the algorithm itself, which means that there is a bootstrapping problem. People with unsuited motivations will never be motivated to change their way of thinking.

Why this matters

Probably we mostly agree on what this means for everyday decisions.

But with coming technology, some things might change.

- Longevity/health might allow for more long-term improvement to be worthwhile (probably not enough by itself unless we reach astronomic lifespans)

- Technology might become more integrated into the brain. It does not seem impossible that "Your memory is unreliable. Let us use some tech and put it under a significant training regimen and make it reliable" will become possible at some point.

- Technologies like IVF could lead to "average people" having a higher starting point with regard to self-reflection and reliable cognition.

Also, this topic is relevant to AI takeoff. We do perceive that there is this in-principle possibility for significant improvement in our cognition, but notice that in practice current humans are not capable of pulling it off. This lets us imagine that beings who are somewhat beyond our cognitive abilities might hit this threshold and then execute the full cycle of reflective self-improvement.

I think this is the pragmatic argument for thinking in separate magisteria ↩︎

I have a pet theory that some biases can be explained as a mix-up between probability and likelihood. (I don't know if this is a good explanation.)

At least not clearly distinguishing probability and likelihood seems common. One point-in-favour is our notation of conditional probabilities (e.g. $P(A|B)$), where $|$ is a symbol with mirror-symmetry. As Eliezer writes in a lecture in plane crash, this is a didactically bad idea and an asymmetric symbol would be a lot easier to understand: is less optically obvious than[1]

Of course, our written language has an intrinsic left-to-right directional asymmetry, so that the symmetric $|$ isn't a huge amount of evidence[2].

I agree a lot. I had the constant urge to add some disclaimer "this is mostly about possible AI and not about likely AI" while writing.

It certainly seems very likely that AIs can be much better at this than us, but it's not obvious to me how big a difference that makes, compared with the difference between doing it at all (like us) and not (like chimps).

The only obvious improvements I can think of are things where I feel that humans are below their own potential[1]. This does seem large to me, but by itself not quite enough to create a difference as large as chimps-to-humans. But I do think that adding possibilities like compute speed could be enough to make this a sufficiently large gap: If I imagine myself waking up for one day every month while everyone else went on with their lives in the meantime, I would probably be completely overwhelmed by all the developments after only a few subjective 'days'. Of course, this is only a meaningful analogy if the whole world is filled with the fast AIs, but that seems very possible to me.

A lot of the ways in which we hope/fear AIs may be radically better than us seem dependent on having AIs that are designed in a principled way rather than being huge bags of matrices doing no one knows quite what. [...]

Yeah. I still think that the other considerations have significance: they give AIs structural advantages compared to biological life/humans. Even if there never comes to be some process which can improve the architecture of AIs using a thorough understanding, mostly random exploration still creates an evolutionary pressure on AIs becoming more capable. And this should be enough to take advantage of the other properties (even if a lot slower).

My sense: In the right situation or mindset, people can be way more impressive than we are most of the time. I sometimes feel like a lot of this is caused by hard-wired mechanisms that conserve calories. This does not make much sense for most people in rich countries, but is part of being human. ↩︎

I would argue that a significant factor in the divide between humans and other animals is that we (now) accumulate knowledge over many generations/people. Humans 100,000 years ago might already have been somewhat notable compared to chimpanzees, but we only hit the threshold of really accumulating knowledge/strategies/technology of millions of individuals very recently.

If I had been raised by chimpanzees (assuming this works), my ability to influence the world would have been a lot lower even though I would be human.

Given that LLMs already are massively beyond human ability in absorbing knowledge (I could not read even a fraction of all the texts that they now use in training), we have good reasons to think that future AI will be beyond human abilities, too.

Further than this, we have good reasons to think that AIs will be able to evade bottlenecks which humans cannot (compare Life 3.0 by Max Tegmark). AIs will not have to suffer from ageing and an intrinsic expiration date to all of their accumulated expertise, will be in principle able to read and change their own source code, and have the scalability/flexibility advantages of software (one can just provide more GPUs to run the AI faster or with more copies).

I don't think 'tautology' fits. There are some people who would draw the line somewhere else even if they were convinced of sentience. Some people might be convinced that only humans should be included, or maybe biological beings, or some other category of entities that is not fully defined by mental properties. I guess 'moral patient' is kind of equivalent to 'sentient' but I think this mostly tells us something about philosophers agreeing that sentience is the proper marker for moral relevance.

I never opened up the ones I used, but I would expect that there is a similar heating element which just has no direct contact with the water and is connected to the bottom plate thermally, but not electrically (compare this wikimedia image).

I imagine this to be similar to how adding white noise can make it easier to concentrate in an environment with distracting noise (in the sense that more signal can be calming).

I mostly agree with this perspective with regards to the "moral imperative".

But apart from that, it seems to me that a good case can be made if we use personal health spending as a reference class.

Even if we only consider currently achievable DALY gains, it is quite notable that we have a method to gain several healthy life-years for a price of maybe $20,000/healthy year (and actually these gains should even be heritable themselves!).

I do not know the numbers for common health interventions, but this should already be somewhat comparable.

Update: quick estimate: US per capita health spending in 2019 was $11,582 according to the CDC. If US health spending doubles life expectancy compared to having no health system, each gained year costs about two years of spending (roughly $23,000), which is comparable to $20,000/healthy year.
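The quick estimate can be written out as a small calculation. This is only a sketch of the back-of-the-envelope reasoning: the function name and the `lifespan_factor` parameter are made up for illustration, and it assumes that the spending is paid in every year of the extended life and that every gained year counts as a healthy one.

```python
def cost_per_extra_year(annual_spending: float, lifespan_factor: float) -> float:
    """Spending per year of life gained (hypothetical helper for the estimate).

    Assumption: without the health system a person lives L years, with it
    lifespan_factor * L years, paying annual_spending in each of those years.
    """
    if lifespan_factor <= 1:
        raise ValueError("lifespan_factor must be greater than 1")
    # Total spending is annual_spending * lifespan_factor * L,
    # years gained are (lifespan_factor - 1) * L, so L cancels out.
    return annual_spending * lifespan_factor / (lifespan_factor - 1)


# 2019 US per capita figure from the comment, with "doubles life expectancy".
estimate = cost_per_extra_year(11_582, 2.0)
print(f"${estimate:,.0f} per extra year")
```

Note that the baseline lifespan drops out, so the only inputs are the annual spending and the assumed doubling.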

Not only does gpt-neox-20b have shards, it has exactly forty-six.

I notice that human DNA has 46 shards. This looks to me like evidence against the orthogonality thesis and for human values being much easier to reach than I expected.

I am not sure we disagree with regards to the prevalence of maleficence. One reason why I would imagine that

"are they truly part of our tribe?" actually manages to filter out a large portion of harmful cases.

works in more tribal contexts would be that cities provide more "ecological" niches (would the term be sociological here?) for this type of behaviour.

intuitions [...] are maladaptive in underpredicting sociopathy or deliberate deception

Interesting. I would mostly think that people today are way more specialized in their "professions" such that for any kind of ability we will come into contact with significantly more skilled people than a typical ancestor of ours would have. If I try to think about examples where people are way too trusting, or way too ready to treat someone as an enemy, I have the impression that for both mistakes examples come to mind quite readily. Due to this, I think I do not agree with "underpredict" as a description and instead tend to a more general "overwhelmed by reality".

I think one important aspect for the usefulness of general principles is by how much they constrain the possible behaviour. Knowing general physics for example, I can rule out a lot of behaviour like energy-from-nothing, teleportation, perfect knowledge and many such otherwise potentially plausible behaviours. These do apply both to bacteria and humans, and they actually are useful for understanding bacteria and humans.

The OP, I think, argues that FEP is not helpful in this sense because without further assumptions it is equally compatible with any behaviour of a living being.

I think one aspect which softens the discrepancy is that our intuitions here might not be adapted to large-scale societies. If everyone really lives mainly with one's own tribe and has kind of isolated interactions with other tribes and maybe tribe-switching people every now and then (similar to village-life compared to city-life), I could well imagine that "are they truly part of our tribe?" actually manages to filter out a large portion of harmful cases.

Also, regarding 2): If indeed almost no one is evil, almost everyone is broken: there are strong incentives to make sure that the social rules do not rule out your way of exploiting the system. Because of this I would not be surprised if "common knowledge" around these things tends to be warped by the class of people who can make the rules. Another factor is that as a coordination problem, using "never try to harm others" seems like a very fine Schelling point to use as common denominator.

I also like "problematic" - it could be used as a 'we are not yet quite sure about how bad this is' version of "destructive"

This time, I agree fully :)

I think there is a lot of truth to this, but I do not quite agree.

Most long-lasting negative emotions and moods exist solely for social signaling purposes

feels a bit off to me. I think I would agree with an alternate version "most long-lasting negative emotions and moods are caused by our social cognition" (I am not perfectly happy with this formulation).

In my mind the difference is that "for signalling purposes" contains an aspect of a voluntary decision (and thus blame-worthiness for the consequences), whereas my model of this dynamic is closer to "humans are kind of hard-wired to seek high-calorie food which can lead to health problems if food is in abundance". I guess many rationalists are already sufficiently aware that much of human decision-making (necessarily) is barely conscious. But I think that especially when dealing with this topic of social cognition and self-image it is important to emphasize that some very painful failure modes are bundled with being human and that, while we should take agency in avoiding/overcoming them, we do not have the ability to choose our starting point.

On a different note:

This Ezra Klein Show interview with Rachel Aviv has impressive examples of how influential culture/memes can be for mental (and even physical) illnesses and also how difficult it is to culturally deal with this.

I think my hypothesis would more naturally predict that schizophrenics would experience these symptoms constantly, and not just during psychotic episodes. Not sure what to make of that. Hmmmm. Maybe I should hypothesize that different parts of the cortex are “completely unmoored from each other” only during psychotic episodes, and the rest of the time they’re merely “mediocre at communicating”?

I am not sure that constant symptoms would be a necessary prediction of your theory: I could easily imagine that the out-of-sync regions with weak connections mostly learn to treat their connections as "mostly a bit of noise, little to gain here" and mostly ignore them during normal functioning (this also seems energetically efficient). But during exceptionally strong activation, they start using all channels and the neighbouring brain regions now need to make sense of the unusual input.

I cannot tell whether this story is more natural than a prediction of constant symptoms, but it does seem plausible to me.

I do not know about scientific studies (which does not mean much), but at least anecdotally I think the answer is a yes at least for people who are not trained/experienced in making exactly these kinds of decisions.

One thing I have heard anecdotally is that people often significantly increase the price when deciding to build/buy a house/car/vacation because they "are already spending lots of money, so who cares about adding 1% to the price here and there to get neat extras" and thus spend years/months/days of income on things which they would not have bought if they had treated this as a separate decision.

This is a bit different from the bird-charity example, but it seems very related to me in that our intuitions have trouble with keeping track of absolute size.

Isn't -1 inversion?

I think for quaternions, $-1$ corresponds both to inversion and a 180 degree rotation.

When using quaternions to describe rotations in 3D space however, one can still represent rotations with unit-quaternions $q = \cos(\alpha/2) + n \sin(\alpha/2)$ where $n$ is a 'unit vector' distributed along the $i, j, k$ directions and indicates the rotation axis, and $\alpha$ is the 3D rotation angle. If one wishes to rotate any orientation $v$ (same type of object as $n$) by $q$, the result is $q v q^{-1}$. Here, $q = -1$ corresponds to $\alpha = 2\pi$ and is thus a full 360 turn.

I have tried to read up on explanations for this a few times, but unfortunately never with full success. But usually people start talking about describing a "double cover" of the 3D rotations.

Maybe a bit of intuition about this relation can come from thinking about measured quantities in quantum mechanics as 'expectation values' of some operator X written as $\langle\psi|X|\psi\rangle$: Here it becomes more intuitive that replacing $X \to q^{-1}(q X q^{-1})q$ (rotating the measured quantity back and forth by $\alpha$ around the axis $n$) results in $(\langle\psi|q^{-1})(q X q^{-1})(q|\psi\rangle)$, which is an $\alpha$-rotated X measured on an $\alpha$-rotated wavefunction.
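To make the quaternion statement concrete, here is a minimal sketch in plain Python. The helper names (`qmul`, `rotor`, `rotate`) are my own and not taken from any library; quaternions are stored as `(w, x, y, z)` tuples. It builds the unit quaternion for a given axis and angle, applies it to a vector by sandwiching, and checks that a full 360 degree turn gives the quaternion -1 while leaving vectors unchanged.

```python
import math


def qmul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotor(axis, angle):
    """Unit quaternion cos(angle/2) + axis * sin(angle/2); axis must be a unit vector."""
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)


def rotate(v, q):
    """Rotate the 3D vector v by the unit quaternion q via q * v * q^-1."""
    q_inv = (q[0], -q[1], -q[2], -q[3])  # inverse equals conjugate for unit quaternions
    _, x, y, z = qmul(qmul(q, (0.0, *v)), q_inv)
    return (x, y, z)


z_axis = (0.0, 0.0, 1.0)
quarter_turn = rotor(z_axis, math.pi / 2)
full_turn = rotor(z_axis, 2 * math.pi)

print(rotate((1.0, 0.0, 0.0), quarter_turn))  # approximately (0, 1, 0)
print(full_turn)                              # approximately (-1, 0, 0, 0)
print(rotate((1.0, 0.0, 0.0), full_turn))     # approximately (1, 0, 0)
```

The sign of `q` cancels in the sandwich product, which is why `q` and `-q` describe the same 3D rotation (the "double cover").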

Thank you.

I really like your framing of home - it seems very close to how John Vervaeke describes it, but somehow your description made something click for me.

I wish to be annealed by this process.

I'd like to share a similar framing of a different concept: beauty. I struggled with what I should call beautiful for a while, as there seemed to be both some objectivity to it, but also loads of seemingly arbitrary subjectiveness which just didn't let me feel comfortable with feeling something to be beautiful. All the criteria I could use to call something beautiful just seemed off. A frame which helped me re-conceptualize much of my thinking about it is:

Beautiful is that which contributes to me wishing to be a part of this world.

Of this framing, I really like how compatible it is with being agentic, and also that it emphasizes subjectiveness without any sense of arbitrariness.

I will have to try this, thanks for pointing to a mistake I have made in my previous attempts at scheduling tasks!

One aspect which I have the feeling is also important to you (and is important to me) is that the system also has some beauty to it. I guess this is mostly because using the system should feel more rewarding than the alternative of "happen to forget about it" so that it can become a habit.

I recently read (/listened to) the shard theory of human values and I think that its model of how people decide on actions and especially how hyperbolic discounting arises fits really well with your descriptions:

To sustain ongoing motivation, it is important to clearly feel the motivation/progress in the moment. Our motivational system does not have access to an independent world model which could tell it that the currently non-motivating task is actually something to care about and won't endorse it if there is no in-the-moment expectation of relevant progress. As you describe, your approach feels more like "making the abstract knowledge of progress graspable in-the-moment to one's motivational system" instead of "trying to trick it into doing 'the right thing' ".

Regarding the transporter:

Why does "the copy is the same consciousness" imply that killing it is okay?

From these theories of consciousness, I do not see why the following would be ruled out:

- Killing a copy is equally bad as killing "the sole instance"

- It fully depends on the will of the person

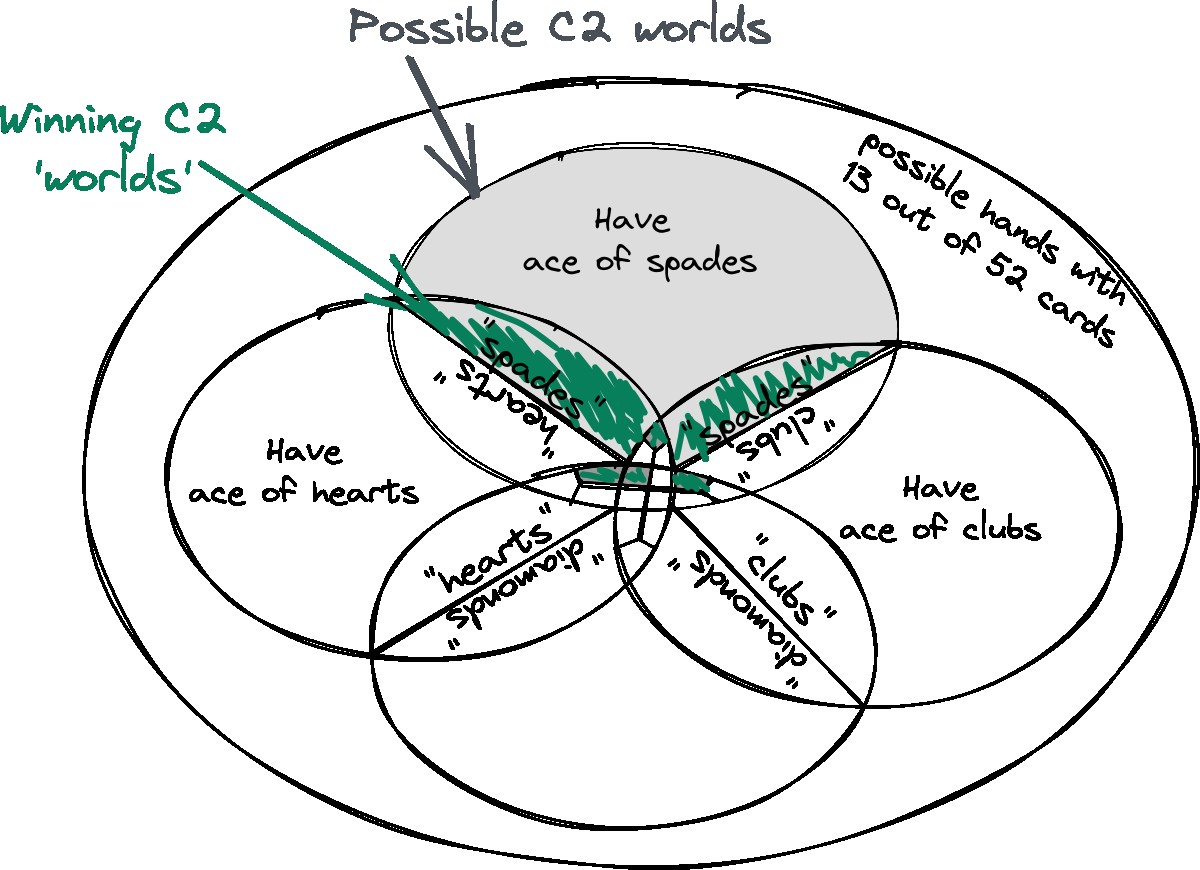

oh.., right - it seems I actually drew B instead of C2. Here is the corrected C2 diagram:

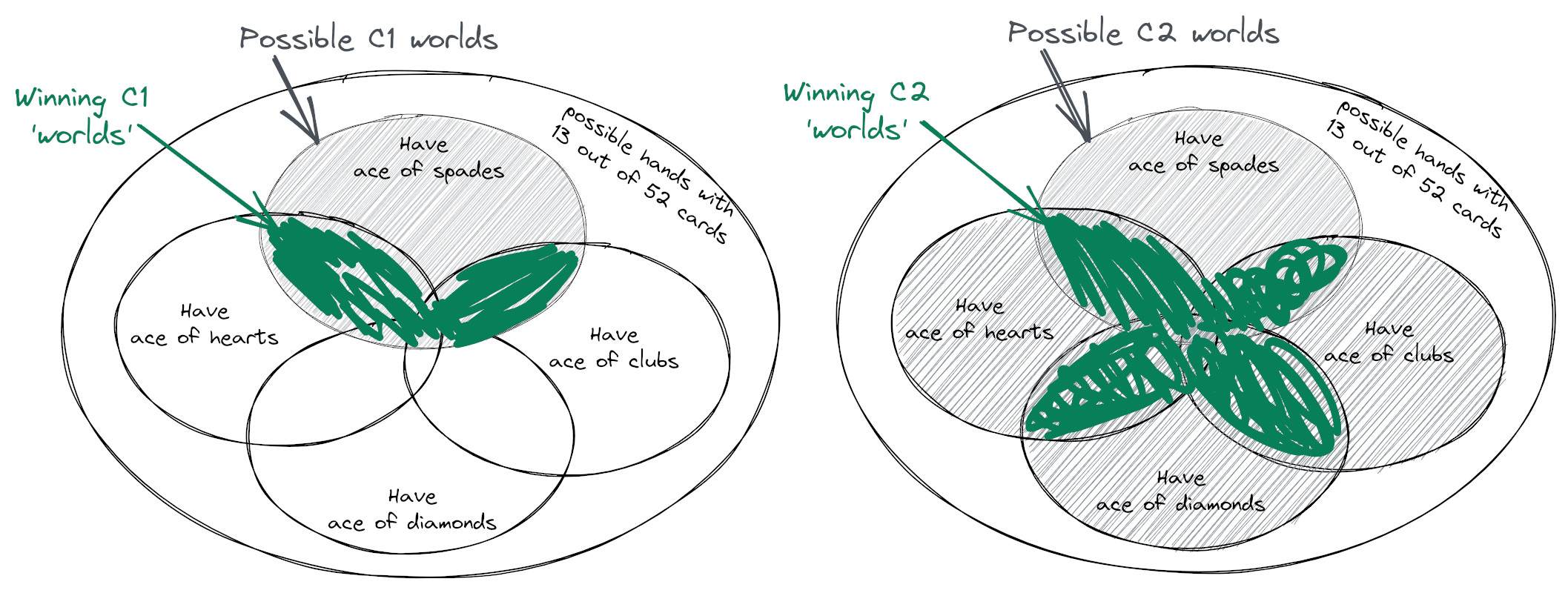

Okay, I think I managed to make at least the case C1-C2 intuitive with a Venn-type drawing:

(edit: originally did not use spades for C1)

The left half is C1, the right one is C2. In C1 we actually exclude both some winning 'worlds' and some losing worlds, while C2 only excludes losing worlds.

However, due to symmetry reasons that I find hard to describe in words, but which are obvious in the diagrams, C1 is clearly advantageous and has a much better winning/losing ratio.

(note that the 'true' Venn diagram would need to be higher dimensional so that one can have e.g. aces of hearts and clubs without also having the other two. But thanks to the symmetry, the drawing should still lead to the right conclusions.)

Thanks for the attempt at giving an intuition!

I am not sure I follow your reasoning:

Maybe the intuition here is a little clearer, since we can see that winning hands that contain an ace of spades are all reported by C1 but some are not reported by C2, while all losing hands that contain an ace of spades are reported by both C1 and C2 (since there's only one ace for C2 to choose from)

If I am not mistaken, this would at first only say that "in the situations where I have the ace of spades, then being told C1 implies higher chances than being told C2"? Each time I try to go from this to C1 > C2, I get stuck in a mental knot. [Edited to add:] With the diagrams below, I think I now get it: If we are in C2 and are told "You have the ace of spades", we do have the same grey/losing area as in C1, but the winning worlds only had a random 1/2 to 1/4 (one over the number of actual aces) chance of telling us about the ace of spades. Thus we should correspondingly reduce the belief that we are in these winning worlds. I hope this is finally correct reasoning. [end of edit]
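The reweighting argument can be checked by brute-force enumeration. The following is only a sketch under assumptions that are not in the thread: a reduced 16-card deck (4 aces, 12 other cards), 4-card hands, and "winning" meaning a hand with at least two aces. B is "told the hand contains an ace", C1 is "asked specifically about the ace of spades and the answer is yes", and C2 is "one of the hand's aces is named at random and it happens to be the ace of spades".

```python
from fractions import Fraction
from itertools import combinations

SUITS = "SHDC"
# Assumed toy setup: 4 aces plus 12 non-aces, hands of 4 cards.
DECK = [("A", s) for s in SUITS] + [(r, s) for r in "KQJ" for s in SUITS]
HAND_SIZE = 4


def win_probabilities():
    """Return P(at least two aces | report) for the reports B, C1 and C2."""
    # For each report: [weight of winning worlds, weight of all worlds].
    weight = {name: [Fraction(0), Fraction(0)] for name in ("B", "C1", "C2")}
    for hand in combinations(DECK, HAND_SIZE):
        aces = [card for card in hand if card[0] == "A"]
        k = len(aces)
        if k == 0:
            continue  # none of the three reports can occur
        win = 1 if k >= 2 else 0  # assumed definition of a winning hand
        reports = {"B": Fraction(1)}
        if ("A", "S") in aces:
            reports["C1"] = Fraction(1)     # always reported when asked directly
            reports["C2"] = Fraction(1, k)  # named only if picked among the k aces
        for name, w in reports.items():
            weight[name][0] += w * win
            weight[name][1] += w
    return {name: won / total for name, (won, total) in weight.items()}


for name, p in win_probabilities().items():
    print(name, p, float(p))
```

In this toy setup C1 comes out clearly above C2, and C2 ends up exactly equal to B: the 1/k factor cancels the extra chance that a hand with many aces includes the ace of spades.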

I can only find an intuitive argument why B≠C is possible: If we initially imagine being, with equal probability, in any of the possible worlds, then when we are told "your cards contain an ace" we can rule out a bunch of them. If we are instead told "your cards contain this ace", we have learned something different, and also something more specific. From this perspective it seems quite plausible that C > B.