[April Fools'] Definitive confirmation of shard theory

post by TurnTrout · 2023-04-01T07:27:23.096Z · 8 comments

I've written a lot about shard theory over the last year. I've poured dozens of hours into theorycrafting, communication, and LessWrong comment threads. I pored over theoretical alignment concerns with exquisite care and worry. I even read a few things that weren't blog posts on LessWrong.[1] In other words, I went all out.

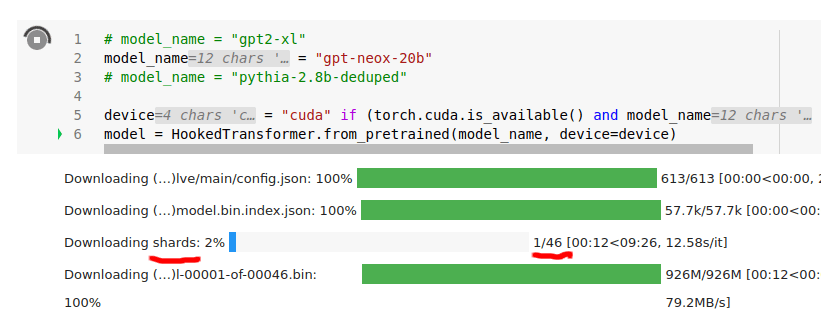

Last month, I was downloading gpt-neox-20b when I noticed the following:

Not only does gpt-neox-20b have shards, it has exactly forty-six.

I've concluded the following:

- Shard theory is correct (at least, for gpt-neox-20b).

- I wasted a lot of time arguing on LessWrong when I could have just touched grass and run the cheap experiment of actually downloading a model and observing what happens.

- I totally underappreciated how much work the broader AI community[2] has done on alignment. They are literally able to print out how many shards an "uninterpretable" model like gpt-neox-20b has.

  - They don't whine about how tough these models are to interpret. They just got the damn job done.

- This really makes team shard's recent work look naive and incomplete. I think we found subcircuits of a cheese-shard. The ML community just found all 46 shards in gpt-neox-20b, and nonchalantly printed that number for us to read.

- This is embarrassing for me, even though shard theory just got confirmed. I seriously spent months arguing about this stuff? Cringe.

- I did a bad job of interpreting theoretical arguments, given that I was still uncertain after months of thought. Probably this is hard and we are bad at it.

- Stop arguing in huge comment threads about what highly speculative approaches are going to work, and start making your beliefs pay rent by finding ways to experimentally test them.

- See how well you can predict AI alignment results today before trying to predict what happens for 500-IQ GPT-5.

- I don't ever want to hear another "but I won't be able to learn until the intelligence explosion happens or not." Correct theories leave many clues and hints, and some of those are going to be findable today. If even a navel-gazing edifice like shard theory can get instantly experimentally confirmed, so can your theory.

I then had GPT-4 identify some of the 46 shards.

- The Procrastination Shard: This shard makes the AI model continually suggest that users read just one more LessWrong post or engage in another lengthy comment thread before taking any real-world action.

- Hindsight Bias Shard: This shard leads the model to believe it knew the answer all along after learning new information, making it appear much smarter in retrospect.

- The "Armchair Philosopher" Shard: With this shard, the AI can generate lengthy and convoluted philosophical arguments on any topic, often without any direct experience or understanding of the subject matter.

- Argumentative Shard: A shard that compels the model to challenge every statement and belief, regardless of how trivial or uncontroversial it may be.

- Trolley Problem Shard: This shard makes the model obsess over hypothetical moral dilemmas and ethical conundrums, while ignoring more practical and immediate concerns.

- The Existential Dread Shard: This shard causes the AI to frequently bring up the potential risks of AI alignment and existential catastrophes, even when discussing unrelated topics.

I'm pleasantly surprised that gpt-neox-20b shares values which I (and other LessWrong users) have historically demonstrated. The fact that gpt-neox-20b and LessWrong share many shards makes me more optimistic about alignment overall, since it implies convergence in the values of real-world trained systems.

(As a corollary, this demonstrates that LLMs can make serious alignment progress.)

Although I'm embarrassed and humbled by this experience, I'm glad that shard theory is true. Here's to—next time—only taking three months before running the first experiment! 🥂

[1] DM me if you want links to websites where you can find information which is not available on LessWrong!

[2] "The broader AI community" taken in particular to include more traditional academics, like those at EleutherAI.

8 comments

comment by Mart_Korz (Korz) · 2023-04-01T18:24:36.600Z

Not only does gpt-neox-20b have shards, it has exactly forty-six.

I notice that human DNA has 46 shards. This looks to me like evidence against the orthogonality thesis and for human values being much easier to reach than I expected.

comment by the gears to ascension (lahwran) · 2023-04-01T09:42:13.667Z

Help I am

only able to

type in shards

what do I

do

↑ comment by Richard_Kennaway · 2023-04-01T11:19:17.547Z

Try writing haiku.

Each line written by one shard.

See what comes of it.

comment by DragonGod · 2023-04-01T08:22:19.717Z

I didn't make it very far before combusting into laughter (at the first image).

Sadly, I should have realised it by footnote 1. I didn't take sufficient notice of that tiny note of discord[1] at TurnTrout describing reading non-LW content as an extraordinary effort.

I have failed as a rationalist. 😔

[1] I did at least notice my confusion enough at the joke post on the Alignment Forum to realise that today was April 1st, though.

↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-04-01T16:39:42.908Z

Speaking of tiny notes on Discord, Eleuther should set up a new channel just for analyzing this.

comment by Stephen McAleese (stephen-mcaleese) · 2023-04-01T11:52:05.928Z

I forgot today was 1 April and thought this post was serious until I saw the first image!

↑ comment by Ben Amitay (unicode-70) · 2023-04-02T05:45:06.278Z

So imagine hearing the audio version: no images there.

comment by Review Bot · 2024-09-20T23:01:46.043Z

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?