Ethodynamics of Omelas

post by dr_s · 2023-06-10T16:24:16.215Z · LW · GW · 18 comments

Epistemic status: just kidding... haha... unless...

Introduction

A great amount of effort has been expended throughout history to decide the matter of "what is good" or "what is right" [citation needed]. This valiant but, let's face it, not tremendously successful effort has produced a number of possible answers to these questions, none of which is right, if history is any indication. Far from this being their only problem, the vast majority of these answers also suffer from the even more serious flaw of not being able to produce anything resembling a simple quantitative prediction or computable expression for the thing they are supposed to discuss, and instead prefer to faff about with qualitative and ambiguously defined statements. Not only are they not right; they aren't even wrong.

More recently, and in certain premises more than others, a relatively new-ish theory of ethics has gained significant traction as the one most suited to a properly scientific, organized and rational mind - the theory of Utilitarianism. This approach to ethics postulates that all we have to do to solve it is to define some kind of "utility function" (TBD) representing all of the "utility" of each individual who is deemed a moral subject (TBD) via some aggregation method (TBD) and in some kind of objective, commensurable unit (TBD). It is then a simple matter of finding the policy which maximizes this utility function, and there you go - the mathematically determined guide to living the good life, examined down to however many arbitrary digits of precision one might desire! By tackling the titanic task head on, refusing to bow to the tyranny of vague metaphysics, and instead looking for the unyielding and unforgiving certainty of numbers, Utilitarianism manages one important thing:

Utilitarianism manages to be wrong.

Now, I hope the last statement doesn't ruffle too many feathers. If it helps reassure any of those who may have felt offended by it, I'll admit that this study also deals unashamedly in Utilitarianism; and thus, by definition, it is also wrong. But one hopes, at least, wrong in an interesting enough way, which is often the best we can do in such complex matters. The object of this work is the aggregation method of utility. There are many possible proposals, none, in my opinion, too satisfactory, all vulnerable to falling into one or another horrible trap laid by the clever critic which our moral instincts refuse to acknowledge as possibly ever correct.

A common one, which we might call Baby's First Utility Function, is total sum utilitarianism. In this aggregation approach, more good is good. Simple enough! Ten puppies full of joy and wonder are obviously better than five puppies full of joy and wonder, even an idiot understands as much. What else is left to consider? Ha-ha, says the critic: but what if a mad scientist created some kind of amazing super-puppy able to feel joy and wonder through feasting upon the mangled bodies of all others? What if the super-puppy produced so much joy that it offsets the suffering it inflicts? Total sum utilitarianism tells us that if the parameters are right, we ought to indeed accept the numbers and with them the super-puppy and the pain to be found within its joyous and drooling jaws, which isn't the common sense ethical approach to the problem (namely, take away the mad scientist's grant funding and have them work on something useful, like a RCT on whether fresh mints cause cancer). But even without super-puppies, total sum utilitarianism lays more traps for us. The obvious one is that if more total utility is always good, then as long as life is above the threshold for positive utility (TBD), it's a moral imperative to simply spawn more humans[1]. This is known as the "repugnant conclusion", named so after the philosopher who reached it took a look at the costs of housing and childcare in his city. Obviously, as our lifestyle has improved and diversified, our western sensibilities have converged towards the understanding that children are like wine: they get better as they age, and are only good in moderation. We'd like our chosen ethical system not to reprimand us too harshly for things we're dead set on doing anyway, thank you very much.

Now, seeking to fix these issues, the philosopher who through a monumental cross-disciplinary effort has learned not only addition, but division too, may thus come up with another genius idea: average utilitarianism. Just take that big utility boi and divide it by the number of people! Now the number goes up only if each individual person's well-being does as well; simply creating more people changes nothing, or makes the situation worse if you can't keep up with the standards of living. This seems more intuitive; after all, no one ever personally experiences all of the utility anyway. There is no grand human gestalt consciousness that we know of (and I'm fairly confident, not more than one or two that we don't). So average utility is as good as it gets, because as every statistician will tell you, averages are all politicians need to know about distributions before taking monumental decisions. Fun fact, they usually cry while telling you this.

Now, while I'm no statistician, I am sympathetic to their plight, and can see full well how averages alone can be deceptive. After all, a civilization in which everyone has a utility of 50 and one in which half of everyone has a utility of 100 and the other half has a utility of "eat shit" are quite different, yet they have the same average utility. And the topic of unfairness and inequality in society has been known to rouse some strong passions in the masses [citation needed]. There are good reasons to find ways to weigh this effect into your utility function, either because you're one of those who eat shit, or because you're one of those who have a utility of 100 but have begun noticing that the other half of the population is taking a keen interest in pointy farming implements.

To overcome these problems, in this study, I draw inspiration from that most classic of teachers, Nature, and specifically from its most confusing and cruel lesson, thermodynamics. I suggest the concepts of ethodynamics as an equivalent of thermodynamics applied to utilitarian ethics, and a quantity called the free utility as the utility function to maximize. I then apply the new method to the rigorous study of a known toy problem, the Omelas model [LeGuin, 1973] to showcase its results.

Free utility

We define the free specific utility for a closed society as:

$$f = \langle U \rangle - TS$$

where $\langle U \rangle$ is the average expected utility, $S$ is the entropy of the distribution of possible utility outcomes, and $T$ is a free "temperature" parameter which expresses the importance we're willing to give to equality over total well-being. If you believe that slavery is fine as long as the slaves are making some really nice stuff, your "ethical temperature" is probably very close to zero; if you believe that the Ministry of Equalization should personally see to it that the legs of those who are born too tall are sawed off so that no one feels looked down on, then your temperature is approaching infinity. Most readers, I expect, will place somewhere in between these two extremes.

The other quantities can be defined with respect to a probability distribution of outcomes, $p(U)$:

$$\langle U \rangle = \sum_U p(U)\, U, \qquad S = -\sum_U p(U) \log p(U)$$

With this definition, the free utility will go up with each improvement in average utility and down with each increase in inequality, at a rate that depends on the temperature parameter. We can then identify two laws of ethodynamics:

- any conscientious moral actor ought to try to maximize $f$ according to their chosen temperature;

- absent outside intervention, in a closed society, Moloch [Alexander, 2014] tends to drive $f$ down.

The first law is really more of a guideline, or a plea. The second law is, as far as I can tell, as inescapable as its thermodynamical cousin, and possibly literally just the same law with fake glasses and a mustache.
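As a rough numerical illustration, the sketch below takes the free utility to be the mean utility minus temperature times the entropy of the outcome distribution, and compares the two societies from the introduction. It rests on assumptions that are mine rather than the post's: outcomes are discrete, logarithms are natural, the function name `free_utility` is made up, and a utility of "eat shit" is read as 0 so that the two averages match.

```python
import math

def free_utility(outcomes, temperature):
    """Mean utility minus temperature times entropy.

    outcomes: list of (probability, utility) pairs summing to probability 1.
    """
    mean_u = sum(p * u for p, u in outcomes)
    # Shannon entropy of the outcome distribution (natural log, an assumption).
    entropy = -sum(p * math.log(p) for p, _ in outcomes if p > 0)
    return mean_u - temperature * entropy

# Everyone at 50, versus half at 100 and half at 0 (assumed value of "eat shit").
equal_society = [(1.0, 50.0)]
split_society = [(0.5, 100.0), (0.5, 0.0)]

for t in (0.0, 1.0, 10.0):
    print(t, free_utility(equal_society, t), free_utility(split_society, t))
```

At zero temperature the two societies tie, since only the average counts; at any positive temperature the split society is penalized by its entropy.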

Those who should actually maybe walk back to Omelas

For a simplified practical example of this model we turn to the well-known Omelas model, which provides the simplest possible example of a society with high utility but also non-zero entropy. The model postulates the following situation: a city called Omelas exists, with an unspecified number of inhabitants, $N$. Of these, $N-1$ live in what can only be described as joyous bliss, in a city as perfect as the human mind can imagine. The last one, however, is a child who lives in perpetual torture, which is through unspecified means absolutely necessary to guarantee everyone else's enjoyment. The model is somewhat imprecise on the details of this arrangement (why a child? What happens when they come of age? Do they just turn into another happy citizen and are replaced by a newborn? Do they die? Do they simply live forever, frozen in time by whatever supernatural skulduggery powers the whole thing?) or the demographics of Omelas, so we decide to simplify it further by removing the detail that the sufferer has to be a child. Instead, we describe our model with the following utility distribution:

where the zero of utility is assumed to be the default state of anyone who lives outside of Omelas, and units of utility are arbitrary.

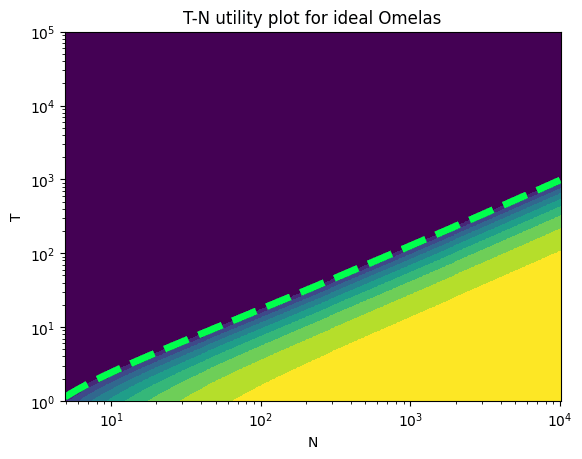

In this ideal model of Omelas, the specific free utility can be found as

as a function of temperature and population. For large enough $N$, we can apply Stirling's approximation to simplify:

leading to

This has the consequence that the free utility converges to 1 as the population of Omelas grows, independently of temperature. The boundary across which the crossover from negative to positive utility happens is approximately:

For any population larger than the one marked by this line, the existence of Omelas is a net good for the world, and thus its population ought to increase. Far from walking away, moral actors ought to walk towards it! This is not even too surprising a result on consideration: given that one poor child is being tortured regardless, we may as well get as much good as possible out of it.
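The convergence to 1 and the sign crossover can be checked numerically with a small sketch. It assumes a two-outcome distribution in which each happy citizen has utility 1 (as the limit above implies) and the tortured inhabitant has a utility `torture_utility`, a hypothetical parameter whose value of -10 is picked purely for illustration and is not taken from the post.

```python
import math

def omelas_free_utility(n, temperature, torture_utility=-10.0):
    """Free utility per capita of an ideal Omelas with n >= 2 inhabitants.

    torture_utility is a hypothetical placeholder, not the post's value.
    """
    p_victim = 1.0 / n
    p_happy = 1.0 - p_victim
    mean_u = p_happy * 1.0 + p_victim * torture_utility
    # Entropy of the two-outcome distribution (happy vs. tortured).
    entropy = -sum(p * math.log(p) for p in (p_happy, p_victim) if p > 0)
    return mean_u - temperature * entropy

for n in (10, 100, 10_000, 1_000_000):
    print(n, [round(omelas_free_utility(n, t), 4) for t in (0.5, 2.0, 8.0)])
```

With these made-up numbers the free utility is negative for small populations and climbs towards 1 as the population grows, whatever the temperature.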

But this unlimited growth should raise suspicion that this model might be a tad bit too perfect to be real, much like spherical cows, trolley problems, and Derek Zoolander. There's no such thing as a free lunch, even if the child is particularly plump. What, are we to believe that whatever magical bullshit/deal with Cthulhu powers Omelas' unnatural prosperity has no limit whatsoever, that a single tortured person can provide enough mana to cast Wish on an indefinite amount of inhabitants? Should we amass trillions and trillions on the surface of a Dyson sphere centered on Omelas? Child-torture powered utopias, we can believe; but infinitely growing child-torture powered utopias are a bridge too far!

In our search for a more realistic version of an enchanted enclave drawing energy from infant suffering we ought thus to put a limit to the ability of Omelas to infinitely scale its prosperity up with its number of citizens. Absent any knowledge of the details of the spells, conjurings and pacts that power the city on which to build a model, we have to act like good economists and do the next best thing: pull one out of our ass. We therefore postulate that the real Omelas model provides a total utility (to be equally divided between its non-tortured inhabitants) of:

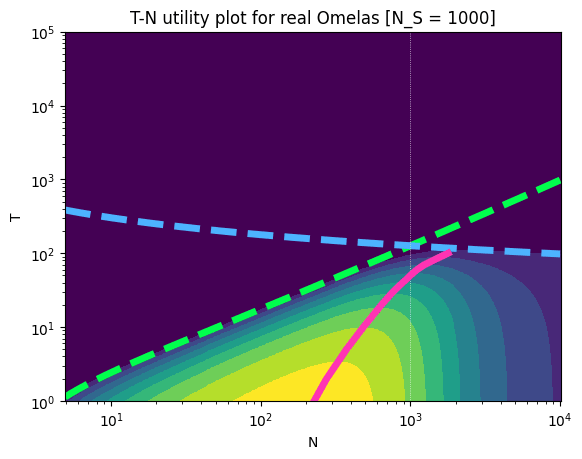

This logistic function has all the desired properties. It is approximately linear in $N$ with slope 1 around 0, then slowly caps as soon as the population grows past a certain maximum support number. We can then replace it in the free utility:

In the limit of low $N$, the zero line described above still applies. Meanwhile, for high $N$, we see a new zero line:

This produces a much more interesting diagram:

We see now that optimization is no longer trivial. To begin with, there is a temperature above which the existence of Omelas will always be considered intolerable and a net negative. This is roughly the temperature at which the two zero lines cross, so:

For , this corresponds to . In addition, even below this temperature, the free utility has a maximum in $N$ for each value of $T$. In other words, there is an optimum of population for Omelas. People with a temperature below this threshold will find themselves able to tolerate Omelas, on the whole, but will also converge towards a certain value of its population. If they are actual moral actors and not just trying to signal their virtue, they are thus expected to walk away from or towards Omelas depending on its current population. Too few people and the child's sacrifice is going to waste; too many, and the spreading out of too few resources makes things miserable again.

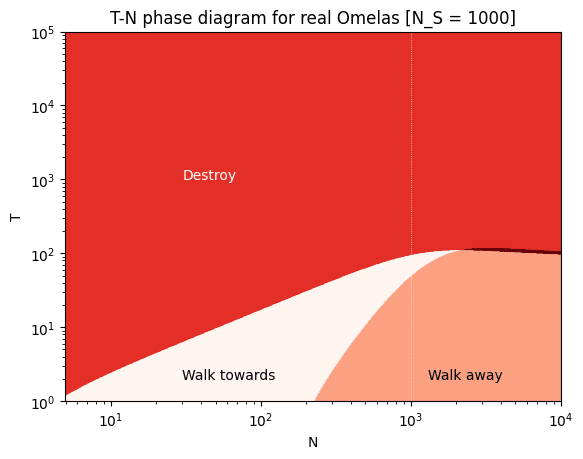

The phase diagram can be built by coloring regions based on the sign of both the free utility $f$ and its derivative in $N$, $\partial f / \partial N$:

- the almost white region is the one in which both $f$ and $\partial f / \partial N$ are positive; here Omelas is a net good, but it can be made even better if more people flock to it; this is the walk towards phase;

- the orange region is the one in which $f$ is positive but $\partial f / \partial N$ is negative; here Omelas is a net good, but it can be made even better if someone conscientious enough willingly makes the sacrifice of leaving it, so that others may better enjoy its scarce and painfully earned resources; this is the walk away phase which the original work hints at;

- the bright red region is the one in which $f$ is negative even though $\partial f / \partial N$ is positive; here Omelas is seen as a blight upon the world, a haven of unfairness which offends the eyes and souls of those who seek good. No apologies nor justifications can be offered for its dark sacrificial practices; the happiness of the many doesn't justify the suffering of the very few. For those who find themselves in this region, walking away isn't enough, and is mere cowardice. Omelas must be razed, its palaces pillaged, its walls ground to dust, its populace scattered, its laws burned, and the manacles that shackle the accursed child shattered by the hammer of Justice, that they may walk free and happy again. This is the destroy phase;

- the thin dark red sliver is the region in which both $f$ and $\partial f / \partial N$ are negative. We don't talk about that one.
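The classification above can be sketched in code by checking the sign of the free utility and of its finite difference in population. Everything specific here is an assumption of mine and not the post's actual model: the saturating total utility is taken as `n_max * tanh((n - 1) / n_max)` (a rescaled logistic with slope 1 near zero), the cap `n_max = 1000` and the tortured inhabitant's utility of -10 are arbitrary, and the function names are hypothetical.

```python
import math

def capped_free_utility(n, temperature, n_max=1000.0, torture_utility=-10.0):
    """Free utility per capita when total happiness saturates (n >= 2)."""
    happy = n - 1
    # Assumed saturating total utility, split equally among the happy citizens.
    u_happy = n_max * math.tanh(happy / n_max) / happy
    p_victim = 1.0 / n
    p_happy = 1.0 - p_victim
    mean_u = p_happy * u_happy + p_victim * torture_utility
    entropy = -(p_happy * math.log(p_happy) + p_victim * math.log(p_victim))
    return mean_u - temperature * entropy

def phase(n, temperature, **params):
    """Label a (population, temperature) point by the signs of f and df/dN."""
    f = capped_free_utility(n, temperature, **params)
    # Finite difference as a stand-in for the derivative in population.
    df = capped_free_utility(n + 1, temperature, **params) - f
    if f > 0:
        return "walk towards" if df > 0 else "walk away"
    return "destroy" if df > 0 else "we don't talk about that one"

for n, t in ((50, 1.0), (100_000, 1.0), (5, 5.0)):
    print(n, t, phase(n, t))
```

Scanning `phase` over a grid of populations and temperatures would give a crude version of the colored diagram, though the region boundaries depend entirely on the assumed parameters.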

Conclusions

This study has offered what I hope was an exhaustive treatment of the much discussed Omelas model, put in a new utilitarian framework that tries to surpass the limits of more traditional and well-known ones. I have striven to make this paper a pleasant read by enriching it with all manners of enjoyable things: wit, calculus, and a non indifferent amount of imaginary child abuse[2]. But I've also tried to endow it with some serious considerations about ethics, and to contribute something new to the grand stream of consciousness of humanity's thought on the matter that has gone on since ancient times. Have I succeeded? Probably not. Have I at least inspired in someone else such novel and deep considerations, if only by enraging them so much that the righteous creative furor of trying to rebut my nonsense gives them the best ideas of their lives? Also probably not.

But have I at least written something that will elicit a few laughs, receive a handful of upvotes, and marginally raise my karma on this website by a few integers, thus giving me a fleeting sense of accomplishment for the briefest of moments in a world otherwise lacking any kind of sense, rhyme, reason, or purpose?

That, dear readers, is up to you to decide.

[1] Actually a less discussed consequence is that if it instead turned out that life is generally below that threshold (TBD), the obvious path forward is that we all ought to commit suicide as soon as possible. This is also frowned upon.

[2] Your mileage may vary about how enjoyable these things actually are. Not everyone likes calculus.

18 comments

Comments sorted by top scores.

comment by Jonas Hallgren · 2023-06-11T10:56:42.007Z · LW(p) · GW(p)

Man, this was a really fun and exciting post! Thank you for writing it.

Maybe there's a connection to the FEP here? I remember Karl Friston saying something about how we can see morality as downstream from the FEP, which is (kinda?) used here, maybe?

Replies from: unicode-70↑ comment by Ben Amitay (unicode-70) · 2023-06-12T18:44:50.756Z · LW(p) · GW(p)

In another comment on this post I suggested an alternative entropy-inspired expression that I took from RL. To the best of my knowledge, it came to the RL context from FEP or active inference, or is at least acknowledged to be related.

Don't know about the specific Friston reference though

comment by Jonas Hallgren · 2025-01-08T16:42:03.199Z · LW(p) · GW(p)

This delightful piece applies thermodynamic principles to ethics in a way I haven't seen before. By framing the classic "Ones Who Walk Away from Omelas" through free energy minimization, the author gives us a fresh mathematical lens for examining value trade-offs and population ethics.

What makes this post special isn't just its technical contribution - though modeling ethical temperature as a parameter for equality vs total wellbeing is quite clever. The phase diagram showing different "walk away" regions bridges the gap between mathematical precision and moral intuition in an elegant way.

While I don't think we'll be using ethodynamics to make real-world policy decisions anytime soon, this kind of playful-yet-rigorous exploration helps build better abstractions for thinking about ethics. It's the kind of creative modeling that could inspire novel approaches to value learning and population ethics.

Also, it's just a super fun read. A great quote from the conclusion is "I have striven to make this paper a pleasant read by enriching it with all manners of enjoyable things: wit, calculus, and a non indifferent amount of imaginary child abuse".

That is the type of writing I want to see more of! Very nice.

comment by Vanessa Kosoy (vanessa-kosoy) · 2023-06-11T15:14:04.116Z · LW(p) · GW(p)

If you want to add a term that rewards equality, it makes more sense to use e.g. variance rather than entropy, because (i) entropy is insensitive to the magnitude of the differences between utilities, and (ii) it's only meaningful under some approximations, and it's not clear which approximation to take: the precise distribution is discrete, and usually no two people will have exactly the same utility, so entropy will always be the logarithm of population size.

Replies from: dr_s↑ comment by dr_s · 2023-06-11T16:00:17.326Z · LW(p) · GW(p)

It's entropy of the distribution, so you should at least bin it in some kind of histogram (or take the limit to a continuous approximation) to get something meaningful out of it. But agree on the magnitude of the difference, which does indeed matter in the Omelas example; the entropy for a system in which everyone has 100 except for one who has 99 would be the exact same. However, the average utility would be different, so the total "free utility" still wouldn't be the same.

I think variance alone suffers from limits too, you'd need a function of the full series of moments of the distribution, that's why I found entropy more potentially attractive. Perhaps the moment generating function for the distribution comes closer to the properties we're interested in? In the thermodynamics analogy it also resembles a lot the partition function.
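The point about magnitude can be made concrete with a small sketch comparing entropy and variance on two distributions that differ only in how badly off the single unlucky person is. The population size of 1000 and the utility values are illustrative assumptions, and `summarize` is a hypothetical helper name.

```python
import math

def summarize(outcomes):
    """Return (mean, variance, entropy) for (probability, utility) pairs."""
    mean = sum(p * u for p, u in outcomes)
    variance = sum(p * (u - mean) ** 2 for p, u in outcomes)
    entropy = -sum(p * math.log(p) for p, _ in outcomes if p > 0)
    return mean, variance, entropy

n = 1000  # assumed population size
mild = [((n - 1) / n, 100.0), (1 / n, 99.0)]      # one person slightly worse off
harsh = [((n - 1) / n, 100.0), (1 / n, -1000.0)]  # one person tortured

print(summarize(mild))
print(summarize(harsh))
```

The entropy is identical in both cases because it only sees the probabilities, while the mean and the variance both register the size of the gap.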

Replies from: unicode-70↑ comment by Ben Amitay (unicode-70) · 2023-06-12T10:04:26.948Z · LW(p) · GW(p)

I think that the thing you want is probably to maximize N*sum(u_i exp(-u_i/T))/sum(exp(-u_i/T)) or -log(sum(exp(-u_i/T))) where u_i is the utility of the i-th person, and N is the number of people - not sure which. That way you get in one limit the veil of ignorance for utility maximizers, and in the other limit the veil of ignorance of Rawls (extreme risk aversion).

That way you also don't have to treat the mean utility separately.

Replies from: dr_s↑ comment by dr_s · 2023-06-12T10:30:51.123Z · LW(p) · GW(p)

Well, that sure does look a lot like a utility partition function though. Not sure why use N if you're also doing a sum though; that will already scale with the numbers of people. If anything,

$$\frac{\sum_i u_i e^{-u_i/T}}{\sum_i e^{-u_i/T}}$$

becomes the average utility in the limit of $T \to \infty$, whereas for $T \to 0$ you converge on maximising simply the single lowest utility around. If we write it as:

then we're maximizing . It's interesting though that despite the similarity this isn't an actual partition function, because the individual terms don't represent probabilities, more like weights. We're basically discounting utility more and more the more you accumulate of it.
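A quick numerical sketch of the two limits, using the weighted average sum(u_i exp(-u_i/T))/sum(exp(-u_i/T)) from this thread without the factor of N. The function name and the sample utilities are made up for illustration; the shift by the minimum utility is only there for numerical stability and cancels in the ratio.

```python
import math

def boltzmann_weighted_utility(utilities, temperature):
    """Average of utilities with weights exp(-u_i / T)."""
    u_min = min(utilities)
    # Shifting by u_min rescales every weight by the same factor,
    # so the ratio is unchanged but exp() never overflows.
    weights = [math.exp(-(u - u_min) / temperature) for u in utilities]
    return sum(w * u for w, u in zip(weights, utilities)) / sum(weights)

# Nine happy people and one miserable one (illustrative values).
utilities = [1.0] * 9 + [-10.0]

for t in (0.01, 1.0, 100.0, 1e6):
    print(t, boltzmann_weighted_utility(utilities, t))
```

At high temperature the result approaches the plain average, and at low temperature it collapses onto the lowest utility in the population.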

Replies from: unicode-70↑ comment by Ben Amitay (unicode-70) · 2023-06-12T18:38:50.549Z · LW(p) · GW(p)

I agree with all of it. I think that I threw the N in there because average utilitarianism is super counterintuitive for me so I tried to make it total utility.

And also about the weights - to value equality is basically to weight the marginal happiness of the unhappy more than that of the already-happy. Or when behind the veil of ignorance, to consider yourself unlucky and therefore more likely to be born as the unhappy. Or what you wrote.

comment by Mart_Korz (Korz) · 2023-06-12T14:50:41.157Z · LW(p) · GW(p)

Very fun read, thanks!

[...] we ought to indeed accept the numbers and with them the super-puppy and the pain to be found within its joyous and drooling jaws, which isn't the common sense ethical approach to the problem (namely, take away the mad scientist's grant funding and have them work on something useful, like a RCT on whether fresh mints cause cancer)

I am not sure about this description of common sense morality. People might agree about not creating such a super-puppy, but we do some horrible stuff to lab/farm animals in order to improve medical understanding/enjoy cheaper food. Of course, there isn't the aspect of "something directly values the pain of others", but we are willing to hurt puppies if this helps human interests.

Also, being against the existence of 'utility monsters which actually enjoy the harm to others' could also be argued for from a utilitarian perspective. We have little reason to believe that "harm to others" plays any significant/unavoidable role in feeling joy. Thus, anyone who creates entities with this property is probably actually optimizing for something else.

For normal utility monsters (entities which just have huge moral weight), my impression is that people mostly accept this in everyday examples. Except maybe for comparisons between humans where we have large amounts of historical examples where people used these arguments to ruin the lives of others using flawed/motivated reasoning.

↑ comment by dr_s · 2023-06-12T16:39:03.933Z · LW(p) · GW(p)

Of course, there isn't the aspect of "something directly values the pain of others", but we are willing to hurt puppies if this helps human interests.

I mean, completely different thing though, right? Here with "puppies" I was mostly jokingly thinking of actual doggies (which in our culture we wouldn't dream of eating, unlike calves, piglets and chicks, that are cute and all but actually fine for some reason, or rabbits, that are either pets or food depending on our eldritch whims). But I'd say there's nothing weird about our actions if you consider that we just don't consider animals actual moral subjects. We do with them as it pleases us. Sometimes it pleases us to care for and protect them, because the gods operate in mysterious ways. But nothing of what we do, not even animal abuse laws and all that stuff, actually corresponds to making them into full moral subjects, because it's all still inconsistent as hell and always comes second to human welfare. I have always found some ethical rules about e.g. the treatment of mice in medical research really stupid - pretty much, if anything may be causing them suffering, you're supposed to euthanise them. But we haven't exactly asked the mice if they prefer death to a bit of hardship. It's just our own self-righteous attitude we take on their behalf, and nothing like what we would do with humans. If a potentially aggressive animal is close to a human and may endanger their life, they get put down, no matter how tiny the actual risk. A fraction of human life in expectation always outweighs one or more animal lives. To make animals true moral subjects by extending the circle of concern to them would make our life a whole lot harder, and thus, conveniently, we don't actually do it. Not even the most extreme vegans fully commit to it, that I know of. If you've seen "The Good Place", Doug Forcett is what a human who treats animals kind of like moral subjects looks like, and he holds a funeral wracked by guilt for having accidentally stomped on a snail.

Thus, anyone who creates entities with this property is probably actually optimizing for something else.

True, but what do we do with the now created utility monster? Euthanise it mercifully because it's one against many? To do so already implies that we measure utility by something else than total sum.

my impression is that people mostly accept this in everyday examples

I don't know. In a way, the stereotypical "Karen" is a utility monster: someone who weaponizes their aggrievement by claiming disproportionate suffering out of a relatively trivial inconvenience to try and guilt-trip others into moving mountains for their sake. And people don't generally look too kindly on that. If anything, do it long enough, and many people will try to piss you off on purpose just out of spite.

Replies from: Korz↑ comment by Mart_Korz (Korz) · 2023-06-13T21:37:54.975Z · LW(p) · GW(p)

I do tend to round things off to utilitarianism it seems.

Your point on the distinct categories between puppies and other animals is a good one. With the categorical distinction in place, our other actions aren't really utilitarian trade-offs any more. But there are animals like guinea pigs which are in multiple categories.

what do we do with the now created utility monster?

I have trouble seriously imagining a utility monster which actually is net-positive from a total utility standpoint. In the hypothetical with the scientist, I would tend towards not letting the monster do harm just to remove incentives for dangerous research. For the more general case, I would search for some excuses why I can be a good utilitarian while stopping the monster. And hope that I actually find a convincing argument. Maybe I think that most good in the world needs strong cooperation which is undermined by the existence of utility monsters.

In a way, the stereotypical "Karen" is a utility monster

One complication here is that I would expect the stereotypical Karen to be mostly role-playing such that it would not actually be positive utility to follow her whims. But then, there could still be a stereotypical Caren who actually has very strong emotions/qualia/the-thing-that-matters-for-utility. I have no idea how this would play out or how people would even get convinced that she is Caren and not Karen.

Replies from: dr_s↑ comment by dr_s · 2023-06-13T22:10:10.611Z · LW(p) · GW(p)

For the more general case, I would search for some excuses why I can be a good utilitarian while stopping the monster. And hope that I actually find a convincing argument. Maybe I think that most good in the world needs strong cooperation which is undermined by the existence of utility monsters.

I mean, if it's about looking for post-hoc rationalizations, what's even the point of pretending there's a consistent ethical system? Might as well go "fuck the utility monster" and blast it to hell with no further justification than sheer human chauvinism. I think we need a bit of that in fact in the face of AI issues - some of the most extreme e/acc people seem indeed to think that an ASI would be such a utility monster and "deserves" to take over for... reasons, reasons that I personally don't really give a toss about.

But then, there could still be a stereotypical Caren who actually has very strong emotions/qualia/the-thing-that-matters-for-utility.

Having no access to the internal experiences of anyone else, how do we even tell? With humans, we assume we can know because we assume they're kinda like us. And we're probably often wrong on that too! People seem to experience pain, for example, both physical and mental, on very different scales, both in terms of expression and of how it actually affects their functioning. Does this mean some people feel the same pain but can power through it, or does it mean they feel objectively less? Does the question even make sense? If you start involving non-human entities, we have essentially zero reference frame to judge. Outward behavior is all we have, and it's not a lot to go by.

Replies from: Korz↑ comment by Mart_Korz (Korz) · 2023-06-16T09:04:51.890Z · LW(p) · GW(p)

I mean, if it's about looking for post-hoc rationalizations, what's even the point of pretending there's a consistent ethical system?

Hmm, I would not describe it as rationalization in the motivated reasoning sense.

My model of this process is that most of my ethical intuitions are mostly a black-box and often contradictory, but still in the end contain a lot more information about what I deem good than any of the explicit reasoning I am capable of. If however, I find an explicit model which manages to explain my intuitions sufficiently well, I am willing to update or override my intuitions. I would in the end accept an argument that goes against some of my intuitions if it is strong enough. But I will also strive to find a theory which manages to combine all the intuitions into a functioning whole.

In this case, I have an intuition towards negative utilitarianism, which really dislikes utility monsters, but I also have noticed the tendency that I land closer to symmetric utilitarianism when I use explicit reasoning. Due to this, the likely options are that after further reflection I

- would be convinced that utility monsters are fine, actually.

- would come to believe that there are strong utilitarian arguments to have a policy against utility monsters such that in practice they would almost always be bad

- would shift in some other direction

and my intuition for negative utilitarianism would prefer cases 2 or 3.

So the above description was what was going on in my mind, and combined with the always-present possibility that I am bullshitting myself, led to the formulation I used :)

comment by Jonas Hallgren · 2024-06-05T16:21:20.416Z · LW(p) · GW(p)

Just revisiting this post as probably my favourite one on this site. I love it!

comment by A. Weber (a-weber) · 2023-06-14T15:30:21.841Z · LW(p) · GW(p)

...You can't just mention one region and say "we don't talk about that region!" Now I really wanna know. :P

Is it the equivalent of those taxes where imposing the tax overly benefits the rich, but if that tax already exists, removing the tax ALSO overly benefits the rich? So in this case, Omelas is bad, but destroying it would ALSO be bad? It's the derivatives that are throwing me off a little.

Replies from: dr_s↑ comment by dr_s · 2023-06-14T16:02:36.039Z · LW(p) · GW(p)

That's more the "everything is horrible" region. Omelas is horrible, and it gets more horrible the more people live in it.

To be sure, I actually have doubts about labelling the "destroy" region now. Some of it is in a position in which Omelas is a net negative but can become a mild net positive if enough people migrate to it. It's the region above the critical T line that makes Omelas unfixable.

comment by M. Y. Zuo · 2023-06-11T00:03:06.646Z · LW(p) · GW(p)

It doesn't seem like you mentioned what your definition of 'utility' is, can you comment on that?

Replies from: dr_s↑ comment by dr_s · 2023-06-11T08:17:02.847Z · LW(p) · GW(p)

TBD

(approximated by "anything that makes you feel like you probably want more of it rather than less, and your extrapolation of what others would feel like they want more of rather than less, on the already generous assumption that you're not actually surrounded by P-zombies")