Lessons from Convergent Evolution for AI Alignment

post by Jan_Kulveit, rosehadshar · 2023-03-27T16:25:13.571Z · 9 comments

Prelude: sharks, aliens, and AI

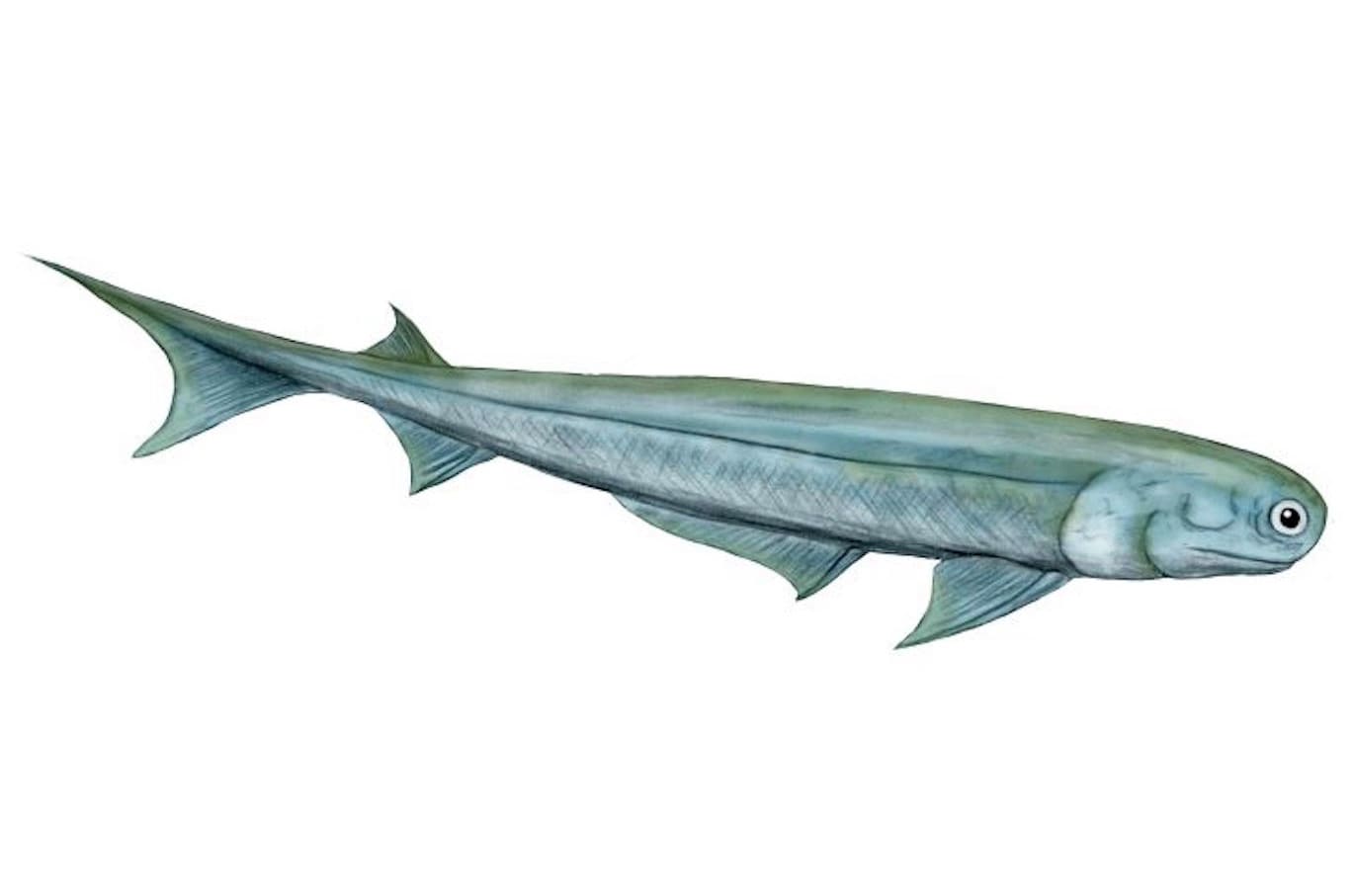

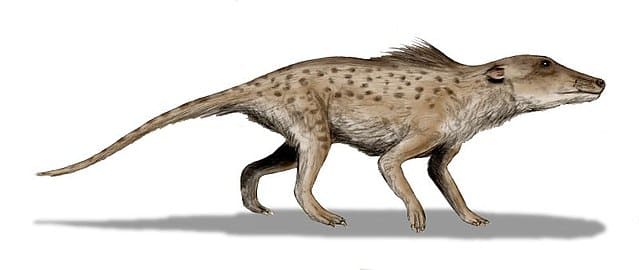

If you go back far enough, the ancestors of sharks and dolphins look really different:

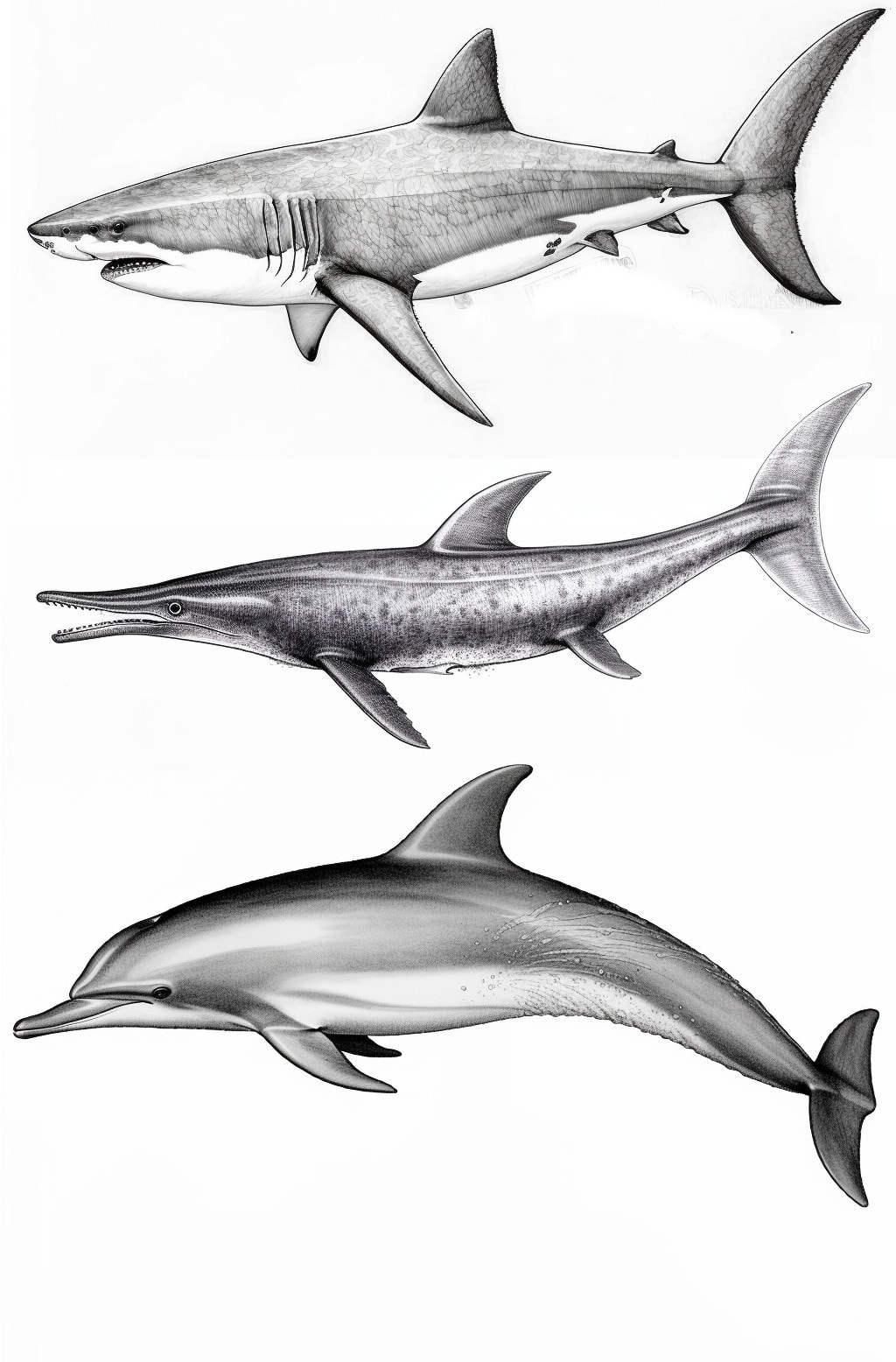

But modern day sharks and dolphins have very similar body shapes:

This is a case of convergent evolution: the process by which organisms with different origins develop similar features. Both sharks and dolphins needed speed and energy efficiency when moving in an environment governed by the laws of hydrodynamics, and so they converged on a pretty similar body shape.

For us, this isn’t very surprising, and doesn’t require much knowledge of evolution: we have a good intuitive understanding of how water works, and humans knew a lot of the underlying maths for the laws of hydrodynamics before they understood anything about evolution. Starting from these laws, it isn’t very surprising that sharks and dolphins ended up looking similar.

But what if instead of starting with knowledge of hydrodynamics and then using that to explain the body shape of sharks and dolphins, we started with only knowledge of sharks’ and dolphins’ body shape, and tried to use that to explain underlying laws?

Let’s pretend we’re alien scientists from an alternative universe, and for some weird reason we only have access to simplified 3D digital models of animals and some evolutionary history, but nothing about the laws of physics in the human/shark/dolphin universe. My guess is that these alien scientists would probably be able to uncover a decent amount of physics and a fair bit about the earth’s environment, just by looking at cases of convergent evolution.

If I’m right about this guess, then this could be pretty good news for alignment research. When it comes to thinking about AI, we’re much closer to the epistemic position of the alien scientist: we either don't know the ‘physics’ of life and intelligence at all, or are only just in the process of uncovering it.

But cases of convergent evolution might help us to deduce deep selection pressures which apply to AI systems as well as biological ones. And if they do, we might be able to say more about what future AI systems might look like, or, if we are lucky, even use some of the selection pressures to shape what systems we get.

Introduction

This post argues that we should use cases of convergent evolution to look for deep selection pressures which extend to advanced AI systems.

Convergent evolution is a potentially big deal for AI alignment work:

- Finding deep selection pressures could help us predict what advanced AI systems will be like.

- It seems plausible that some of the properties people in the alignment space assume are convergent don’t actually extend to advanced AI.

In this post, I’ll:

- Share some basics of convergent evolution,

- Argue that this is a big deal for alignment work, and then

- Respond to the objection that biology is super different from AI.

The basics of convergent evolution

The body shape of sharks and dolphins is just one of very many examples of convergent evolution in biology. For example:

- Visual organs arose “possibly hundreds of times”.

- Multicellularity evolved independently probably at least 11 times.

- Some form of higher-level intelligence evolved multiple times - in primates, apes, corvids, cetaceans, elephants - and possibly many other cases, depending on thresholds and definitions.

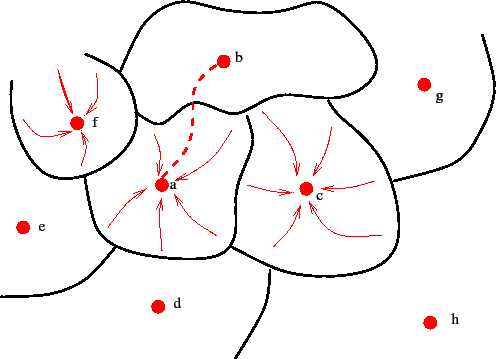

We can think about convergent evolution in terms of:[3]

- a basin of convergent evolution,

- an attractor state(s), and

- selection pressure(s).

The basin of convergent evolution is the region of the abstract space in which, once an organism enters the basin, the pull of the selection pressure brings the organism closer to the attractor state.[4]

In the case of sharks and dolphins:

- The basin of convergent evolution is hunting fish in water in a certain way.

- The attractor state is the rough body shape which sharks and dolphins share.

- The selection pressures are the laws of hydrodynamics, and the need for speed and energy efficiency when moving in an environment governed by those laws.
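The basin / attractor / selection-pressure vocabulary can be made concrete with a toy model. This is a purely illustrative sketch, not a model of real evolution. It assumes a one-dimensional trait `x` and a made-up cost landscape `V(x) = (x**2 - 1)**2`, which has two attractor states at x = -1 and x = +1 separated by a ridge at x = 0. As a simplifying assumption, "selection pressure" is modelled as a small step down the gradient of V. Lineages that start at different points inside the same basin end up at the same attractor (convergence), while a lineage that starts on the other side of the ridge ends up somewhere else (contingency on entering the basin).

```python
def selection_pressure(x: float) -> float:
    """Gradient of the toy landscape V(x) = (x**2 - 1)**2.

    V has attractor states (minima) at x = -1 and x = +1 and a ridge at x = 0
    that separates the two basins.
    """
    return 4 * x * (x**2 - 1)


def evolve(x0: float, rate: float = 0.02, steps: int = 500) -> float:
    """Follow the selection pressure downhill from a starting trait value x0."""
    x = x0
    for _ in range(steps):
        x -= rate * selection_pressure(x)
    return x


# Three hypothetical "lineages" start at different points inside the x > 0 basin;
# one starts on the other side of the ridge.
starts = [0.2, 0.9, 1.8, -0.5]
ends = {x0: round(evolve(x0), 3) for x0 in starts}
print(ends)  # {0.2: 1.0, 0.9: 1.0, 1.8: 1.0, -0.5: -1.0}
```

The step size is kept small so that no single step can jump across the ridge. Under the fitness-landscape convention mentioned in footnote 3, the same picture is obtained by flipping the sign and climbing uphill instead.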

There are some important nuances here.

Firstly, if you back out far enough, cases of convergent evolution are always contingent on something.

Contingent evolution is the process by which organisms develop different traits under the same conditions, because of contingent factors (like random mutations or interspecies encounters). At first, convergent and contingent evolution sound like opposites, but they are actually nested in a fractal way: every instance of convergent evolution is contingent on some higher-level thing. To take our shark and dolphin example, their body shape is contingent on them both being vertebrates. Invertebrates under the same conditions don’t develop that sort of body shape.

Another way of putting this point would be that organisms have to enter the basin for the selection pressures to apply. Different factors determine entry, including both features of the environment and features of the organism. Entry into the basin of convergent evolution which dolphins and sharks both fell into seems to require vertebrae, among other things.

Secondly, similarity/generality do not necessarily imply convergence. Many animals have hooves, but they all share a common ancestor. This is a case of homology, not convergent evolution. The fact that hooved animals are quite widespread shows us that hooves are not maladaptive - but we don’t get the kind of strong signal we would from convergent evolution that hooves are uniquely adaptive. To say that X is convergent, you need to be able to point to multiple different origins converging to points close to X. It’s not enough to just observe that there’s a lot of X around.
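The "multiple different origins" test can be illustrated with a standard phylogenetic tool that the post itself does not use: Fitch parsimony, which counts the minimum number of times a trait must change state on a given tree. In this sketch the trees and the taxa `A` to `D` are hypothetical, the trait is binary (1 = present), and the tree is assumed to be known and strictly bifurcating. A minimum of one change is consistent with a single origin inherited by all descendants (homology, as with hooves); two or more changes suggest independent origins, although parsimony alone cannot tell an extra gain apart from a loss.

```python
def fitch(tree):
    """Fitch parsimony for one binary trait on a rooted, bifurcating tree.

    A leaf is (name, state); an internal node is (left_subtree, right_subtree).
    Returns (set of most-parsimonious states at this node, minimum number of changes).
    """
    if isinstance(tree[0], str):  # leaf
        return frozenset({tree[1]}), 0
    left_states, left_cost = fitch(tree[0])
    right_states, right_cost = fitch(tree[1])
    shared = left_states & right_states
    if shared:
        return shared, left_cost + right_cost
    # No state in common: one change is needed somewhere below this node.
    return left_states | right_states, left_cost + right_cost + 1


def min_changes(tree) -> int:
    return fitch(tree)[1]


# Hypothetical four-taxon trees; 1 = trait present, 0 = absent.
single_origin = ((("A", 1), ("B", 1)), (("C", 0), ("D", 0)))  # trait confined to one clade
two_origins = ((("A", 1), ("B", 0)), (("C", 1), ("D", 0)))    # trait scattered across clades

print(min_changes(single_origin))  # 1
print(min_changes(two_origins))    # 2
```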

Both of these nuances limit and clarify the concept of convergent evolution. Convergent evolution is limited in that there are many common phenomena which it can’t explain (like hooves). But it’s also unusually predictive: provided you understand the scope of the basin of convergent evolution (or in other words, can accurately back out what the convergent evolution is contingent on), then within that basin there’s little room for anything other than falling towards the attractor state.

That’s a substantive proviso though: it can be very tricky to back out the contingencies, so often there will be uncertainty about exactly where the selection pressures apply.

This is a potentially big deal for AI alignment work

Convergent evolution might point to deep selection pressures

Firstly, cases of convergent evolution might point to deep selection pressures which help us predict what advanced AI will be like.

There is some work of this type already in the alignment space, but we think it’s a promising area for further exploration.

There are at least a few different ways of exploring this idea, and probably others we haven’t thought of yet:

- You can look for attractor states which seem convergent across many domains. This post has mostly focused on biological evolution, but convergent evolution can also be expanded beyond biology, to things like culture, technology and software. The further out you have to go to find the contingency, the more general the case of convergent evolution is, and the more likely it is that advanced AI systems will fall into the basin of convergence too. Searching for properties which are convergent across many kinds of systems (biological, cultural, economic, technological…) might point us towards convergences which hold for advanced AI systems.

- You can start with a guess about a selection pressure, and then try to figure out what basin of convergence it should apply to. Then you can check whether in reality it does apply or not.

  - If it does, that’s some evidence that you’re onto something.

  - If it doesn’t, that’s some evidence that you’re missing something.

Here are some examples where I think that convergent biological evolution points to some deep selection pressures, which are likely to also be relevant for understanding advanced AI systems. We will go into more detail and unpack implications in followup posts.

Multicellularity

- In biological organisms, multicellularity evolved independently probably at least 11 times. Intuitively, multicellular organisms unlocked new levels of complexity and power in living things.

- There’s a possible analogy between multicellularity and division of labour in human economies, which contributed to the rise of "civilization" and the transition to a period of faster growth in human power.

- A candidate for the selection pressure here is ‘specialise and trade’:

  - In economics this is represented by Ricardo’s law (comparative advantage), which explains economic specialisation.

  - You can make a similar argument for multicellularity. An advantage for a simple multicellular organism might be that some of the cells specialise in movement (for example, by developing flagella) and some in food processing.

- Candidates for the boundaries of the basin of convergence include how easy it is to scale the capacity of an individual, and how easy it is to solve coordination problems between individuals.

  - For example, if it were easy for cultural evolution to scale the capacity of individual humans arbitrarily, there would be less pull towards specialisation. If coordination between individual humans were extremely difficult, there would also be less pull towards specialisation.

- Goal-directed systems tend to be made out of parts which are themselves also goal-directed.[6] Something similar may well be the case for advanced AI systems.

- It’s possible that collectives or "swarms" of somewhat intelligent AI systems (such as LLMs) might form larger emergent systems in response to selection pressures similar to those which caused multicellularity.[7]
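The comparative-advantage argument behind ‘specialise and trade’ can be checked with a little arithmetic. This sketch assumes two hypothetical producers (think of two cells, or two people) with 10 hours each and made-up productivities. A is better at both tasks in absolute terms, but gives up less movement per unit of food than B does, so A has the comparative advantage in food and B in movement. Reallocating work along comparative advantage yields more food for the same total movement.

```python
from dataclasses import dataclass


@dataclass
class Producer:
    name: str
    food_per_hour: float
    movement_per_hour: float

    def opportunity_cost_of_food(self) -> float:
        """Units of movement given up for each unit of food produced."""
        return self.movement_per_hour / self.food_per_hour


def output(p: Producer, hours_on_food: float, total_hours: float = 10.0) -> tuple[float, float]:
    """(food, movement) produced when p spends hours_on_food on food and the rest on movement."""
    return p.food_per_hour * hours_on_food, p.movement_per_hour * (total_hours - hours_on_food)


def totals(outputs: list[tuple[float, float]]) -> tuple[float, ...]:
    """Sum food and movement across producers."""
    return tuple(sum(column) for column in zip(*outputs))


a = Producer("A", food_per_hour=4.0, movement_per_hour=2.0)  # better at both tasks
b = Producer("B", food_per_hour=1.0, movement_per_hour=1.0)

print(a.opportunity_cost_of_food(), b.opportunity_cost_of_food())  # 0.5 1.0

generalists = totals([output(a, 5.0), output(b, 5.0)])  # everyone splits time evenly
specialists = totals([output(a, 7.5), output(b, 0.0)])  # B only moves; A covers the rest
print(generalists)  # (25.0, 15.0)
print(specialists)  # (30.0, 15.0)
```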

Agency

- Agency (in the sense of Dennett’s intentional stance[8]) seems somewhat convergent: it arises in many different animals.

- But most animals don’t seem strongly agentic. To understand animal behaviour, you usually need not just the ‘goal’ of the animal but also quite a lot of information about the ways in which the animal is bounded, its instincts and habits, etc.

- In other words, the attractor state doesn’t seem very pointy.

- Understanding why seems like it might help us think about agency in advanced AI systems.

- A preliminary guess: information processing is costly; some forms of coherence and agency require more information processing all else equal; and it’s often not worth the additional costs.
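One way to read this preliminary guess is as a cost-benefit comparison. The toy below is a hypothetical illustration, not a model from the post: a "habitual" policy responds correctly only when the situation is the typical one (probability `p_typical`) and costs nothing extra, while a "deliberate" policy always responds correctly but pays a fixed information-processing cost. In this toy, deliberation only pays when `1 - cost > p_typical`, i.e. when the environment is unpredictable enough.

```python
def habit_payoff(p_typical: float, reward: float = 1.0) -> float:
    """A fixed habit earns the reward only in the typical situation, at no extra cost."""
    return p_typical * reward


def deliberate_payoff(cost: float, reward: float = 1.0) -> float:
    """Deliberate modelling always earns the reward but pays an information-processing cost."""
    return reward - cost


def deliberation_pays(p_typical: float, cost: float, reward: float = 1.0) -> bool:
    return deliberate_payoff(cost, reward) > habit_payoff(p_typical, reward)


print(deliberation_pays(p_typical=0.95, cost=0.1))  # False: stable world, habit wins
print(deliberation_pays(p_typical=0.6, cost=0.1))   # True: unpredictable world
```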

"Intelligence"

- Some form of higher-level intelligence evolved multiple times - in primates, apes, corvids, cetaceans, elephants - and possibly many other cases, depending on thresholds and definitions.

- Understanding of the selection pressures here is an active area of research in biology, and it's not clear what the best explanation is.[9]

- One hypothesis is that runaway selection for social skills leads to intelligence.[10]

  - (Primates, apes, corvids, cetaceans, elephants and humans are all social.)

  - This intuitively makes sense: in most natural environments, there may be sharply diminishing returns from spending more energy on energy-hungry brains that model a fixed-complexity environment better. However, if the really important part of the environment is other similarly complex minds, this can lead to an intelligence race.

- If selection pressure towards modelling other minds leads to intelligence, this would have important implications for AI development and AI risk.
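The contrast between diminishing returns and an intelligence race can be sketched with two made-up payoff functions. All of the following are hypothetical assumptions for illustration: a scalar "brain size" `s`, a linear metabolic cost of 0.05 per unit, a saturating benefit `1 - exp(-s)` for modelling a fixed environment, and, in the social case, a benefit that depends only on how far `s` exceeds a rival's size (a logistic function of the difference). In the fixed environment the best size is a constant, about ln(20) ≈ 3; in the social case each best response sits a roughly fixed margin above the rival's current size, so repeated best responses ratchet upwards. Over many rounds the linear cost eventually stops the escalation in this toy, so only the first four rounds are run.

```python
import math

COST_PER_UNIT = 0.05                   # hypothetical metabolic cost per unit of brain
GRID = [i / 100 for i in range(4001)]  # candidate brain sizes 0.00 .. 40.00


def payoff_fixed_env(s: float) -> float:
    """Modelling a fixed-complexity environment: benefits saturate as s grows."""
    return (1 - math.exp(-s)) - COST_PER_UNIT * s


def payoff_social(s: float, rival: float) -> float:
    """Modelling other minds: benefit depends on how far s exceeds a rival's size."""
    return 1 / (1 + math.exp(rival - s)) - COST_PER_UNIT * s


def best_size(payoff) -> float:
    """Brute-force best response over the grid of candidate sizes."""
    return max(GRID, key=payoff)


fixed_opt = best_size(payoff_fixed_env)  # close to ln(1 / 0.05), about 3.0

rival = 0.0
history = []
for _ in range(4):
    # Each round, best-respond to the rival population's current size.
    rival = best_size(lambda s, r=rival: payoff_social(s, r))
    history.append(rival)

print(fixed_opt)
print(history)  # each entry roughly 2.9 above the previous one
```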

"Sentience"

Epistemic status: this subsection is highly speculative, more so than the others.

- This is possibly the most controversial example, and biological literature often shies away from the topic, with some recent exceptions.[11] On the other hand, the topic is of central importance to moral philosophy.

- There is some literature exploring how functional theories of consciousness such as global workspace theory could be related to properties of machine learning architectures.[12]

- Understanding whether the morally relevant properties of systems (whatever they turn out to be) are convergent could be important for avoiding mind crimes.

There are multiple other possibly relevant examples we decided not to include in this post, but we recommend thinking about it for yourself and posting further examples as comments.

The limits of convergent evolution may challenge some existing ideas in AI alignment

Secondly, in my view, lots of existing alignment research implicitly or explicitly relies on convergence.

- Often there has been an implicit or explicit assumption in alignment research that something like VNM rationality or goal coherence is convergent for sufficiently intelligent systems.

- Many arguments about AI risk stem from the idea of instrumental convergence - that specific goals such as self-preservation and power-seeking are likely to be convergent goals for any rational agent.

- The natural abstraction hypothesis is the hypothesis that there are some selection pressures and some basin of attraction such that certain concepts are natural abstractions, i.e. attractor states.

- Selection theorems are an abstracted and simplified way of looking at convergent evolution, applied specifically to agency.

It seems plausible that for some of the properties people in the alignment space assume are convergent, the relevant basin doesn’t actually extend to advanced AI, or the specific selection pressures are just one of many, so the attractor states are not very deep.

Thinking through convergent evolution clarifies why these cases of convergence may be relevant. At the same time, the interplay between convergence and contingency, and the limited extent to which some of these pressures seem to shape living things, may point to some of the basins of convergence not being as universal as assumed, or the selection pressures not being that strong. It would be good to have a more explicit discussion of what these cases of convergence are contingent upon, and how clear it is that advanced AI systems will meet those conditions.

But biology is super different from AI, no?

Yes, biology is super different from AI.

Evolution is not ‘smart’ - but over the past few billion years, it has had a lot of compute and has explored a lot. [13]

And evolution didn’t just explore spaces like ‘body shapes made of flesh’, which aren’t very relevant to AI systems. It also explored spaces like ‘control theory algorithms implementable by biological circuits’ and ‘information processing architectures’. Looking at the properties which were converged upon in spaces like that can hopefully tell us something about the underlying selection pressures.

While details of what biological evolution found are contingent, it seems likely that vast convergences across very different species, or even across very different systems like culture and technology, point to deeper selection pressures which apply to AI systems too.

The ideas in this post are mostly Jan’s. Special thanks to Clem who made substantial contributions especially on the parts about contingency, and plans to write a follow up post on the relevance of contingency to AI alignment research. Thanks also to TJ, Petr Tureček and John Wentworth for comments on a draft. Rose did most of the writing.

[1] Nobu Tamura (http://spinops.blogspot.com), CC BY 3.0, via Wikimedia Commons.

[2] Nobu Tamura (http://spinops.blogspot.com), CC BY 3.0, via Wikimedia Commons.

[3] This way of thinking about convergent evolution is used by evolutionary biologists, e.g. here. There are also other ways of approaching it, most commonly in terms of fitness landscapes, where instead of individuals falling down into attractor states, selection pressures push individuals uphill. Conventions depend on the subfield.

[4] Note that the attractor state applies to some feature or features of the organism, but is irrelevant to most others. In the shark and dolphin case, the attractor relates to body shape, but does not affect other features like type of immune cells.

[5] https://commons.wikimedia.org/wiki/File:Local_search_attraction_basins.png, CC BY-SA 3.0 <http://creativecommons.org/licenses/by-sa/3.0/>, via Wikimedia Commons.

[6] See https://www.frontiersin.org/articles/10.3389/fpsyg.2019.02688/full and this post on hierarchical agency.

[7] See What multipolar failure looks like.

[8] Roughly:

  - You can look at any system as an agent.

  - A system is more agentic the more that describing it using the intentional stance is useful, relative to other stances.

[9] Some candidates: parasitoidism; a combination of causal reasoning, flexibility, imagination, and prospection.

[10] This paper argues that different pressures operated in different taxa, and that for some taxa social learning was a key selection pressure.

[11] For example, The Evolution of the Sensitive Soul: Learning and the Origins of Consciousness by Simona Ginsburg and Eva Jablonka.

[12]

[13] Stochastic gradient descent also isn’t the smartest designer, but with enough compute it’s been able to find the smartest AI systems we have.

9 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-03-27T20:33:46.699Z

In addition to looking at biology, I'd look at human organizations (corporations, governments, organized religions, militaries, etc.) Under what conditions do they evolve towards something like "agency?" What about "intelligence?" Under what conditions do they evolve away from those things?

comment by Jan_Kulveit · 2023-03-28T07:15:49.543Z

On the topic of thinking about it for yourself and posting further examples as comments...

This is GPT4 thinking about convergent properties, using the post as a prompt and generating 20 plausibly relevant convergences.

- Modularity: Biological systems, like the human brain, display modularity in their structure, allowing for functional specialization and adaptability. Modularity is also found in industries and companies, where teams and departments are organized to handle specific tasks.

- Hierarchical organization: In biological systems, hierarchical organization is common, with higher-level structures built from lower-level components. Hierarchies are also observed in companies and organizations.

- Recurrent connections: Neural networks in the brain have recurrent connections, which allow for feedback and information processing over time. Recurrent structures are also seen in supply chains and communication networks.

- Redundancy: Redundancy in biological systems provides robustness and fault tolerance. This concept is also utilized in industries for backup systems and fail-safe mechanisms.

- Adaptation: Biological systems adapt to changing environments to survive. Adaptation is also a crucial aspect for businesses and industries, where they must adjust to market trends and demands.

- Error correction: Biological systems have mechanisms to detect and correct errors (e.g., DNA repair mechanisms). Error correction is an essential aspect of modern communication systems and data storage.

- Network robustness: Biological networks (e.g., metabolic networks) exhibit robustness against perturbations. Similar robustness is desirable in communication and transportation networks.

- Small-world networks: Biological networks often display small-world properties, with short path lengths and high clustering. These properties are found in social networks and the internet.

- Scale-free networks: Biological networks often exhibit scale-free properties, with a few highly connected nodes (hubs) and many less connected nodes. Scale-free networks are also found in the internet, social networks, and citation networks.

- Sparsity: Neural networks in the brain are sparse, with many fewer connections than theoretically possible. Sparsity is also utilized in machine learning algorithms and data compression techniques.

- Decentralization: Biological systems often rely on decentralized control mechanisms. Decentralization can also be seen in blockchain technology and peer-to-peer networks.

- Homeostasis: Biological systems maintain internal stability through feedback mechanisms. Homeostasis is also relevant to industries, where maintaining stable operating conditions is essential.

- Oscillations: Oscillatory behavior is common in biological systems, such as circadian rhythms. Oscillations can also be observed in economic cycles and traffic patterns.

- Synchronization: Synchronization occurs in biological systems, such as the firing of neurons. Synchronization is also essential in distributed computing and communication systems.

- Division of labor: Division of labor is observed in biological systems (e.g., cells within multicellular organisms) and is a fundamental principle in industries and organizations.

- Cooperation and competition: Biological systems display a balance of cooperation and competition. These dynamics are also observed in economic systems, business strategies, and social interactions.

- Plasticity: Plasticity in biological systems allows for learning and adaptation. In industries, plasticity is important for innovation and adaptation to changing market conditions.

- Evolvability: Biological systems can evolve through mutation and selection. Evolvability is also relevant in industries, where companies must be able to innovate and adapt to survive.

- Self-organization: Self-organization occurs in biological systems, such as pattern formation in developing organisms. Self-organization is also observed in swarm intelligence and decentralized control systems.

- Energy efficiency: Biological systems are optimized for energy efficiency, as seen in metabolic pathways. Energy efficiency is also a crucial consideration in industries and technology development.

In my view:

a) it broadly got the idea

b) the results are in better taste for understanding agents than, e.g., what you get from karma-ranked LW frontpage posts about AIs on an average day

comment by Oliver Sourbut · 2023-03-28T08:31:33.935Z

Really enjoyed this post, both aesthetically (I like evolution and palaeontology, and obviously AI things!) and as a motivator for some lines of research and thought.

I had a go at one point at connecting natural selection with gradient descent, which you might find useful depending on your aims.

I also collected some cases of what I think are potentially convergent properties of 'deliberating systems', many of them natural, and others artificial. Maybe you'll find those useful, and I'd love to know to what extent you agree or disagree with the concepts there.

comment by gpt4_summaries · 2023-03-28T08:29:25.702Z

Tentative GPT4's summary. This is part of an experiment.

Up/Downvote "Overall" if the summary is useful/harmful.

Up/Downvote "Agreement" if the summary is correct/wrong.

If so, please let me know why you think this is harmful.

(OpenAI doesn't use customers' data anymore for training, and this API account previously opted out of data retention)

TLDR: Convergent evolution, where organisms with different origins develop similar features, can provide insights into deep selection pressures that may extend to advanced AI systems, potentially informing AI alignment work and predicting future AI system properties.

Arguments: The article provides several examples of convergent evolution, including the body shapes of sharks and dolphins, multicellularity, agency, intelligence, and sentience. The article discusses that these convergent properties might provide valuable insights into selection pressures relevant to AI alignment research.

Takeaways:

1. Cases of convergent evolution might point to deep selection pressures, which may help predict advanced AI systems' properties.

2. Convergent evolution may challenge existing assumptions about AI alignment, which often rely on convergence.

3. Learning from convergent evolution can help AI alignment work by understanding the properties that may extend to advanced AI systems.

Strengths:

1. The article presents strong examples of convergent evolution that can potentially extend to AI systems.

2. Convergent evolution as a concept provides a powerful framework for searching for deep selection pressures relevant to AI alignment.

3. The article explores the relevance of convergent evolution to AI alignment work and suggests fruitful areas of future research.

Weaknesses:

1. The article acknowledges that biology is significantly different from AI, which might limit the direct applicability of convergent evolution insights to AI alignment.

2. Due to the complex interactions of selection pressures and contingencies, it may be challenging to predict which properties will extend to advanced AI systems.

Interactions: The exploration of convergent evolution interacts with AI safety topics like instrumental convergence, natural abstraction hypothesis, and selection theorems. Understanding these interactions can help refine alignment work and predictions about AI systems.

Factual mistakes: The summary accurately represents the content of the article and does not contain factual mistakes or hallucinations.

Missing arguments: The main missing argument in the earlier sections is the importance of explicitly discussing convergence and contingency in AI alignment. This discussion can help refine our understanding of the properties that may extend to advanced AI systems and the selection pressures that shape their development.

comment by Mateusz Bagiński (mateusz-baginski) · 2023-03-28T12:20:49.919Z

Standardized communication protocols

Language is the most obvious example, but there are plenty of others. E.g., taking different parts of the body as subsystems communicating with each other, one neurotransmitter/hormone often has very similar effects in many parts of the body.

In software, different processes can communicate with each other by passing messages that have a well-defined format. When you're sending an API request, you usually have a good idea of what shape the response is going to take, and if the request fails, it should fail in a predictable way that can be handled harmlessly. This makes it easier to write reliable software.
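A minimal sketch of the "predictable shape, predictable failure" idea, assuming a hypothetical JSON reply format with a `status` field ("ok" or "error") and a numeric `value`. The field names and the `ProtocolError` class are made up for illustration. Any malformed message raises one well-defined exception type that the caller can catch, instead of failing somewhere deep inside the program.

```python
import json
from dataclasses import dataclass


@dataclass
class Reply:
    status: str
    value: float


class ProtocolError(ValueError):
    """Raised for any message that does not match the agreed format."""


def parse_reply(raw: str) -> Reply:
    """Parse a reply in a hypothetical {"status": ..., "value": ...} format."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ProtocolError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ProtocolError("expected a JSON object")
    status = data.get("status")
    value = data.get("value")
    if status not in ("ok", "error"):
        raise ProtocolError(f"unknown status: {status!r}")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise ProtocolError("'value' must be a number")
    return Reply(status=status, value=float(value))


print(parse_reply('{"status": "ok", "value": 3}'))  # Reply(status='ok', value=3.0)
try:
    parse_reply('{"status": "ok"}')
except ProtocolError as err:
    print("rejected:", err)  # rejected: 'value' must be a number
```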

Some cases of standardization are spontaneous/bottom-up, whereas others are engineered top-down. Human language is both. Languages with a greater number of users seem to evolve simpler, more standardized grammars, e.g. compare Russian to Czech or English to Icelandic (though syncretism and promiscuous borrowing may also have had an impact in the second case). I don't know if something like that occurs at all in programming languages, but one factor that makes it much less likely is the need to maintain backward compatibility, which is important for programming languages but much weaker for human languages.

comment by Oliver Sourbut · 2023-03-28T08:20:20.002Z

One hypothesis is that runaway selection for social skills leads to intelligence.

I realise you're explicitly not claiming that this has been the only route to intelligence, but I wanted to insert a counterexample here: cephalopods (octopus, squid, ...) are generally regarded as highly intelligent, but as far as I know there are few or no social species. They don't even interact socially with their own young, unlike orangutans, another example of an otherwise usually solitary intelligent species.

↑ comment by clem_acs · 2023-03-29T13:29:33.733Z

"Social" is slightly too coarse-grained a tag. The thing we're actually interested in is "whether successfully predicting the behaviour of other members of its own species is a strong selection pressure". Social collaboration is one way this happens - another seems to be "deception" arms races (such as corvids stealing and hiding things from each other), or specific kinds of mating rituals. It also depends on the relative strength of other selection pressures - in most cases highly intelligent creatures also seem to have developed a "slack" in resources they can devote to intelligence (e.g. humans cooking food).

This does seem to hold for cephalopods; a strong datapoint here is their highly sophisticated forms of communication.

↑ comment by Jan_Kulveit · 2023-03-28T20:33:32.364Z

To be clear, we are explicitly claiming it's likely not the only pressure; check footnotes 9 and 10 for refs.