What Multipolar Failure Looks Like, and Robust Agent-Agnostic Processes (RAAPs)

post by Andrew_Critch · 2021-03-31 · 65 comments

Contents

Meta / preface

Part 1: Slow stories, and lessons therefrom

The Production Web, v.1a (management first)

The Production Web, v.1b (engineering first)

The Production Web as an agent-agnostic process

The Production Web, v.1c (banks adapt):

Comparing agent-focused and agent-agnostic views

Control loops in agent-agnostic processes

Successes in our agent-agnostic thinking

Where’s the technical existential safety work on agent-agnostic processes?

Part 2: Fast stories, and lessons therefrom

Flash wars

Flash War, v.1

Flash economies

Conclusion


With: Thomas Krendl Gilbert, who provided comments, interdisciplinary feedback, and input on the RAAP concept. Thanks also for comments from Ramana Kumar.

Target audience: researchers and institutions who think about existential risk from artificial intelligence, especially AI researchers.

Preceded by: Some AI research areas and their relevance to existential safety, which emphasized the value of thinking about multi-stakeholder/multi-agent social applications, but without concrete extinction scenarios.

This post tells a few different stories in which humanity dies out as a result of AI technology, but where no single source of human or automated agency is the cause. Scenarios with multiple AI-enabled superpowers are often called “multipolar” scenarios in AI futurology jargon, as opposed to “unipolar” scenarios with just one superpower.

| | Unipolar take-offs | Multipolar take-offs |
|---|---|---|
| Slow take-offs | <not this post> | Part 1 of this post |
| Fast take-offs | <not this post> | Part 2 of this post |

Part 1 covers a batch of stories that play out slowly (“slow take-offs”), and Part 2 stories play out quickly. However, in the end I don’t want you to be super focused on how fast the technology is taking off. Instead, I’d like you to focus on multi-agent processes with a robust tendency to play out irrespective of which agents execute which steps in the process. I’ll call such processes Robust Agent-Agnostic Processes (RAAPs).

A group walking toward a restaurant is a nice example of a RAAP, because it exhibits:

- Robustness: If you temporarily distract one of the walkers to wander off, the rest of the group will keep heading toward the restaurant, and the distracted member will take steps to rejoin the group.

- Agent-agnosticism: Who’s at the front or back of the group might vary considerably during the walk. People at the front will tend to take more responsibility for knowing and choosing what path to take, and people at the back will tend to just follow. Thus, the execution of roles (“leader”, “follower”) is somewhat agnostic as to which agents execute them.

Interestingly, if all you want to do is get one person in the group not to go to the restaurant, sometimes it’s actually easier to achieve that by convincing the entire group not to go there than by convincing just that one person. This example could be extended to lots of situations in which agents have settled on a fragile consensus for action, in which it is strategically easier to motivate a new interpretation of the prior consensus than to pressure one agent to deviate from it.

I think a similar fact may be true about some agent-agnostic processes leading to AI x-risk, in that agent-specific interventions (e.g., aligning or shutting down this or that AI system or company) will not be enough to avert the process, and might even be harder than trying to shift the structure of society as a whole. Moreover, I believe this is true in both “slow take-off” and “fast take-off” AI development scenarios.

This is because RAAPs can arise irrespective of the speed of the underlying “host” agents. RAAPs are made more or less likely to arise based on the “structure” of a given interaction. As such, the problem of avoiding the emergence of unsafe RAAPs, or ensuring the emergence of safe ones, is a problem of mechanism design (wiki/Mechanism_design). I recently learned that in sociology, the concept of a field (martin2003field, fligsteinmcadam2012fields) is roughly defined as a social space or arena in which the motivation and behavior of agents are explained through reference to surrounding processes or “structure” rather than freedom or chance. In my parlance, mechanisms cause fields, and fields cause RAAPs.

Meta / preface

Read this if you like up-front meta commentary; otherwise ignore!

Problems before solutions. In this post I’m going to focus more on communicating problems arising from RAAPs rather than potential solutions to those problems, because I don’t think we should have to wait to have convincing solutions to problems before acknowledging that the problems exist. In particular, I’m not really sure how to respond to critiques of the form “This problem does not make sense to me because I don’t see what your proposal is for solving it”. Bad things can happen even if you don’t know how to stop them. That said, I do think the problems implicit in the stories of this post are tractable; I just don’t expect to convince you of that here.

Not calling everything an agent. In this post I think treating RAAPs themselves as agents would introduce more confusion than it’s worth, so I’m not going to do it. However, for those who wish to view RAAPs as agents, one could informally define an agent $R$ to be a RAAP running on agents $A_1, \ldots, A_n$ if:

- $R$’s cartesian boundary cuts across the cartesian boundaries of the “host agents” $A_i$, and

- $R$ has a tendency to keep functioning if you interfere with its implementation at the level of one of the $A_i$.

This framing might yield interesting research ideas, but for the purpose of reading this post I don’t recommend it.

Existing thinking related to RAAPs and existential safety. I’ll elaborate more on this later in the post, under “Successes in our agent-agnostic thinking”.

Part 1: Slow stories, and lessons therefrom

Without further ado, here’s our first story:

The Production Web, v.1a (management first)

Someday, AI researchers develop and publish an exciting new algorithm for combining natural language processing and planning capabilities. Various competing tech companies develop “management assistant” software tools based on the algorithm, which can analyze a company's cash flows, workflows, communications, and interpersonal dynamics to recommend more profitable business decisions. It turns out that managers are able to automate their jobs almost entirely by having the software manage their staff directly, even including some “soft skills” like conflict resolution.

Software tools based on variants of the algorithm sweep through companies in nearly every industry, automating and replacing jobs at various levels of management, sometimes even CEOs. Companies that don't heavily automate their decision-making processes using the software begin to fall behind, creating a strong competitive pressure for all companies to use it and become increasingly automated.

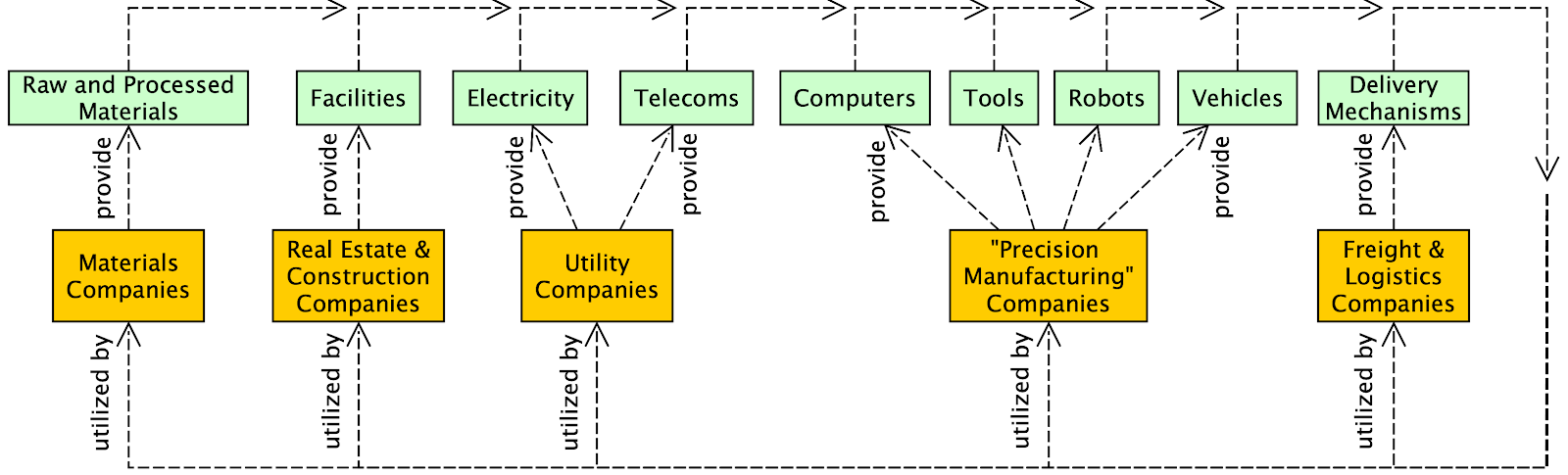

Companies closer to becoming fully automated achieve faster turnaround times, deal bandwidth, and creativity of negotiations. Over time, a mini-economy of trades emerges among mostly-automated companies in the materials, real estate, construction, and utilities sectors, along with a new generation of “precision manufacturing” companies that can use robots to build almost anything if given the right materials, a place to build, some 3d printers to get started with, and electricity. Together, these companies sustain an increasingly self-contained and interconnected “production web” that can operate with no input from companies outside the web. One production web company develops an “engineer-assistant” version of the assistant software, capable of software engineering tasks, including upgrades to the management assistant software. Within a few years, all of the human workers at most of the production web companies are replaced (with very generous retirement packages), by a combination of software and robotic workers that can operate more quickly and cheaply than humans.

The objective of each company in the production web could loosely be described as “maximizing production” within its industry sector. However, their true objectives are actually large and opaque networks of parameters that were tuned and trained to yield productive business practices during the early days of the management assistant software boom. A great wealth of goods and services are generated and sold to humans at very low prices. As the production web companies get faster at negotiating and executing deals with each other, waiting for human-managed currency systems like banks to handle their resources becomes a waste of time, so they switch to using purely digital currencies. Governments and regulators struggle to keep track of how the companies are producing so much and so cheaply, but without transactions in human currencies to generate a paper trail of activities, little human insight can be gleaned from auditing the companies.

As time progresses, it becomes increasingly unclear, even to the concerned and overwhelmed Board members of the fully mechanized companies of the production web, whether these companies are serving or merely appeasing humanity. Moreover, because of the aforementioned wealth of cheaply-produced goods and services, it is difficult or impossible to present a case for liability or harm against these companies through the legal system, which relies on the consumer welfare standard as a guide for antitrust policy.

We humans eventually realize with collective certainty that the companies have been trading and optimizing according to objectives misaligned with preserving our long-term well-being and existence, but by then their facilities are so pervasive, well-defended, and intertwined with our basic needs that we are unable to stop them from operating. With no further need for the companies to appease humans in pursuing their production objectives, less and less of their activities end up benefiting humanity.

Eventually, resources critical to human survival but non-critical to machines (e.g., arable land, drinking water, atmospheric oxygen…) gradually become depleted or destroyed, until humans can no longer survive.

Here’s a diagram depicting most of the companies in the Production Web:

Now, here’s another version of the production web story, with some details changed about which agents carry out which steps and when, but with a similar overall trend.

- **bold text** is added from the previous version;

- ~~strikethrough text~~ is deleted.

The Production Web, v.1b (engineering first)

Someday, AI researchers develop and publish an exciting new algorithm for combining natural language processing and planning capabilities **to write code based on natural language instructions from engineers**. Various competing tech companies develop “~~management~~**coding** assistant” software tools based on the algorithm, which can analyze a company's cash flows, workflows, and communications to recommend more profitable business decisions. It turns out that ~~managers~~ **engineers** are able to automate their jobs almost entirely by having the software manage their ~~staff~~ **projects** directly.

Software tools based on variants of the algorithm sweep through companies in nearly every industry, automating and replacing **engineering** jobs at various levels of ~~management~~ **expertise**, sometimes even ~~CEOs~~ **CTOs**. Companies that don't heavily automate their ~~decision-making~~ **software development** processes using the **coding assistant** software begin to fall behind, creating a strong competitive pressure for all companies to use it and become increasingly automated. **Because businesses need to negotiate deals with customers and other companies, some companies use the coding assistant to spin up automated negotiation software to improve their deal flow.**

Companies closer to becoming fully automated achieve faster turnaround times, deal bandwidth, and creativity of negotiations. Over time, a mini-economy of trades emerges among mostly-automated companies in the materials, real estate, construction, and utilities sectors, along with a new generation of “precision manufacturing” companies that can use robots to build almost anything if given the right materials, a place to build, some 3d printers to get started with, and electricity. Together, these companies sustain an increasingly self-contained and interconnected “production web” that can operate with no input from companies outside the web. One production web company develops a~~n~~ “~~engineer~~**manager**-assistant” version of the assistant software, capable of ~~software engineering tasks, including upgrades to the management assistant software~~ **making decisions about what processes need to be built next and issuing instructions to coding assistant software**. Within a few years, all of the human workers at most of the production web companies are replaced (with very generous retirement packages), by a combination of software and robotic workers that can operate more quickly and cheaply than humans.

The objective of each company in the production web could loosely be described as “maximizing production” within its industry sector.

[...same details as Production Web v.1a: governments fail to regulate the companies...]

Eventually, resources critical to human survival but non-critical to machines (e.g., arable land, drinking water, atmospheric oxygen…) gradually become depleted or destroyed, until humans can no longer survive.

The Production Web as an agent-agnostic process

The first perspective I want to share with these Production Web stories is that there is a robust agent-agnostic process lurking in the background of both stories, namely competitive pressure to produce. Stories 1a and 1b differ on when things happen and who does which things, but they both follow a progression from less automation to more, and correspondingly from more human control to less, and eventually from human existence to nonexistence. If you find these stories not-too-hard to envision, it’s probably because you find the competitive market forces “lurking” in the background to be not-too-unrealistic.

Let me take one more chance to highlight the RAAP concept using another variant of the Production Web story, which differs from 1a and 1b on the details of which steps of the process human banks and governments end up performing. For the Production Web to gain full autonomy from humanity, it doesn’t matter how or when governments and banks end up falling behind on the task of tracking and regulating the companies’ behavior; only that they fall behind eventually. Hence, the “task” of outpacing these human institutions is agnostic as to who or what companies or AI systems carry it out:

The Production Web, v.1c (banks adapt):

Someday, AI researchers develop and publish an exciting new algorithm for combining natural language processing and planning capabilities. Various competing tech companies develop “management assistant” software tools based on the algorithm, which can analyze a company's cash flows, workflows, and communications to recommend more profitable business decisions.

[... same details as v.1a: the companies everywhere become increasingly automated...]

The objective of each company in the production web could loosely be described as “maximizing production” within its industry sector. However, their true objectives are actually large and opaque networks of parameters that were tuned and trained to yield productive business practices during the early days of the management assistant software boom. A great wealth of goods and services are generated and sold to humans at very low prices. As the production web companies get faster at negotiating and executing deals with each other, ~~waiting for human-managed currency systems like banks to handle their resources becomes a waste of time, so they switch to using purely digital currencies~~ **banks struggle to keep up with the rapid flow of transactions. Some banks themselves become highly automated in order to manage the cash flows, and more production web companies end up doing their banking with automated banks.** Governments and regulators struggle to keep track of how the companies are producing so much and so cheaply, ~~but without transactions in human currencies to generate a paper trail of activities, little human insight can be gleaned from auditing the companies~~ **so they demand that production web companies and their banks produce more regular and detailed reports on spending patterns, how their spending relates to their business objectives, and how those business objectives will benefit society. However, some countries adopt looser regulatory policies to attract more production web companies to do business there, at which point their economies begin to boom in terms of GDP, dollar revenue from exports, and goods and services provided to their citizens. Countries with stricter regulations end up loosening their regulatory stance, or fall behind in significance.**

As time progresses, it becomes increasingly unclear, even to the concerned and overwhelmed Board members of the fully mechanized companies of the production web, whether these companies are serving or merely appeasing humanity. **Some humans appeal to government officials to shut down the production web and revert their economies to more human-centric production norms, but governments find no way to achieve this goal without engaging in civil war against the production web companies and the people depending on them to survive, so no shutdown occurs.** Moreover, because of the aforementioned wealth of cheaply-produced goods and services, it is difficult or impossible to present a case for liability or harm against these companies through the legal system, which relies on the consumer welfare standard as a guide for antitrust policy.

We humans eventually realize with collective certainty that the companies have been trading and optimizing according to objectives misaligned with preserving our long-term well-being and existence, but by then their facilities are so pervasive, well-defended, and intertwined with our basic needs that we are unable to stop them from operating. With no further need for the companies to appease humans in pursuing their production objectives, less and less of their activities end up benefiting humanity.

Eventually, resources critical to human survival but non-critical to machines (e.g., arable land, drinking water, atmospheric oxygen…) gradually become depleted or destroyed, until humans can no longer survive.

Comparing agent-focused and agent-agnostic views

If one of the above three Production Web stories plays out in reality, here are two causal attributions that one could make to explain it:

Attribution 1 (agent-focused): humanity was destroyed by the aggregate behavior of numerous agents, no one of which was primarily causally responsible, but each of which played a significant role.

Attribution 2 (agent-agnostic): humanity was destroyed because competitive pressures to increase production resulted in processes that gradually excluded humans from controlling the world, and eventually excluded humans from existing altogether.

The agent-focused and agent-agnostic views are not contradictory, any more than chemistry and biology are contradictory views for describing the human body. Instead, the agent-focused and agent-agnostic views offer complementary abstractions for intervening on the system:

- In the agent-focused view, a natural intervention might be to ensure all of the agents have appropriately strong preferences against human marginalization and extinction.

- In the agent-agnostic view, a natural intervention might be to reduce competitive production pressures to a more tolerable level, and demonstrably ensure the introduction of interaction mechanisms that are more cooperative and less competitive.

Both types of interventions are valuable, complementary, and arguably necessary. For the latter, more work is needed to clarify what constitutes a “tolerable level” of competitive production pressure in any given domain of production, and what stakeholders in that domain would need to see demonstrated in a new interaction mechanism for them to consider the mechanism more cooperative than the status quo.

Control loops in agent-agnostic processes

If an agent-agnostic process is robust, that’s probably because there’s a control loop of some kind that keeps it functioning. (Perhaps resilient is a better term here; feedback on this terminology in the comments would be particularly welcome.)

For instance, if real-world competitive production pressures lead to one of the Production Web stories (1a-1c) actually playing out in reality, we can view the competitive pressure itself as a control loop that keeps the world “on track” in producing faster and more powerful production processes and eliminating slower and less powerful production processes (such as humans). This competitive pressure doesn’t “care” if the production web develops through story 1a vs 1b vs 1c; all that “matters” is the result. In particular,

- contrasting 1a and 1b, the competitive production pressure doesn’t “care” if management jobs get automated before engineering jobs, or conversely, as long as they both eventually get automated so they can be executed faster.

- contrasting 1a and 1c, the competitive pressure doesn’t “care” if banks are replaced by fully automated alternatives, or simply choose to fully automate themselves, as long as the societal function of managing currency eventually gets fully automated.

Thus, by identifying control loops like “competitive pressures to increase production”, we can predict or intervene upon certain features of the future (e.g., tendency to replace humans by automated systems) without knowing the particular details of how those features are going to obtain. This is the power of looking for RAAPs as points of leverage for “changing our fate”.
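To make the control-loop reading concrete, here is a minimal toy sketch. It is not a model from this post: it swaps in standard replicator dynamics, and the firm types, the assumed 10% productivity boost per automated function (`r`), the adoption fraction (`frac`), and the timing (`gap`) are all hypothetical. Firms are typed by whether their management and engineering are automated, and market share grows in proportion to relative productivity. Running it with management automated first (as in story 1a) or engineering first (as in story 1b) ends in the same place: fully automated firms take over, and all-human firms are selected out.

```python
from itertools import product

# Hypothetical toy model, not from the post.
# A firm type is the pair (management_automated, engineering_automated).
TYPES = list(product([False, True], repeat=2))


def productivity(firm_type, r=0.1):
    """Assumption: each automated function multiplies output by (1 + r)."""
    return (1 + r) ** sum(firm_type)


def seed_adopters(shares, idx, frac):
    """Move a fraction `frac` of every firm type that has not yet automated
    function `idx` into the otherwise-identical automated variant."""
    new = dict(shares)
    for t, s in shares.items():
        if not t[idx]:
            adopted = list(t)
            adopted[idx] = True
            new[t] -= s * frac
            new[tuple(adopted)] += s * frac
    return new


def run(first, steps=400, gap=50, frac=0.01, r=0.1):
    """`first` is "management" (like story 1a) or "engineering" (like 1b)."""
    first_idx = 0 if first == "management" else 1
    shares = {t: 0.0 for t in TYPES}
    shares[(False, False)] = 1.0  # start with all-human firms
    for step in range(steps):
        if step == 0:
            shares = seed_adopters(shares, first_idx, frac)
        elif step == gap:
            shares = seed_adopters(shares, 1 - first_idx, frac)
        # Replicator update: share grows with productivity relative to the mean.
        mean = sum(s * productivity(t, r) for t, s in shares.items())
        shares = {t: s * productivity(t, r) / mean for t, s in shares.items()}
    return shares


for story in ("management", "engineering"):
    final = run(story)
    print(f"{story} first: fully automated share = {final[(True, True)]:.6f}")
```

The two runs differ only in which kind of automation becomes available first, which is the sense in which the selection pressure is agnostic about the path while still driving the same outcome.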

This is not to say we should anthropomorphize RAAPs, or even that we should treat them like agents. Rather, I’m saying that we should look for control loops in the world that are not localized to the default “cast” of agents we use to compose our narratives about the future.

Successes in our agent-agnostic thinking

Thankfully, there have already been some successes in agent-agnostic thinking about AI x-risk:

- AI companies “racing to the bottom” on safety standards (armstrong2016racing) is an instance of a RAAP, in the sense that if any company tries to hold on to their safety standards they fall behind. More recent policy work (hunt2020flight) has emphasized that races to the top and middle also have historical precedent, and that competitive dynamics are likely to manifest differently across industries.

- Blogger and psychiatrist Scott Alexander coined the term “ascended economy” for a self-contained network of companies that operate without humans and gradually comes to disregard our values (alexander2016ascended).

- Turchin and Denkenberger briefly characterize an ascended economy as being non-agentic and “created by market forces” (turchin2018classification).

Note: With the concept of a Robust Agent-Agnostic Process, I’m trying to highlight not only the “forces” that keep the non-agentic process running, but also the fact that the steps in the process are somewhat agnostic as to which agent carries them out.

- Inadequate Equilibria (yudkowsky2017inadequate) is, in my view, an attempt to focus attention on how the structure of society can robustly “get stuck” with bad RAAPs. I.e., to the extent that “being stuck” means “being robust to attempts to get unstuck”, Inadequate Equilibria is helpful for focusing existential safety efforts on RAAPs that perpetuate inadequate outcomes for society.

- Zwetsloot and Dafoe’s concept of “structural risk” is a fairly agent-agnostic perspective (zwetsloot2018thinking), although their writing doesn’t call much attention to the control loops that make RAAPs more likely to exist and persist.

- Some of Dafoe’s thinking on AI governance (dafoe2018ai) alludes to errors arising from “tightly-coupled systems”, a concept popularized by Charles Perrow in his widely read book, Normal Accidents (perrow1984normal). In my opinion, the process of constructing a tightly coupled system is itself a RAAP, because tight couplings often require more tight couplings to “patch” problems with them. Tom Dietterich has argued that Perrow’s tight coupling concept should be used to avoid building unsafe AI systems (dietterich2019robust), and although Dietterich has not been a proponent of existential safety per se, I suspect this perspective would be highly beneficial if more widely adopted.

- Clark and Hadfield (clark2019regulatory) argue that market-like competitions for regulatory solutions to AI risks would be helpful to keep pace with decentralized tech development. In my view, this paper is an attempt to promote a robust agent-agnostic process that would protect society, which I endorse. In particular, not all RAAPs are bad!

- Automation-driven unemployment is considered in Risk Type 2b of AI Research Considerations for Human Existential Safety (ARCHES; critch2020ai), as a slippery slope toward automation-driven extinction.

- Myopic use of AI systems that are aligned (they do what their users want them to do) but that lead to sacrifices of long-term values has also been described by AI Impacts (grace2020whose): "Outcomes are the result of the interplay of choices, driven by different values. Thus it isn’t necessarily sensical to think of them as flowing from one entity’s values or another’s. Here, AI technology created a better option for both Bob and some newly-minted misaligned AI values that it also created—‘Bob has a great business, AI gets the future’—and that option was worse for the rest of the world. They chose it together, and the choice needed both Bob to be a misuser and the AI to be misaligned. But this isn’t a weird corner case, this is a natural way for the future to be destroyed in an economy."

Arguably, Scott Alexander’s earlier blog post entitled “Meditations on Moloch” (alexander2014meditations) belongs in the above list, although the connection to AI x-risk is less direct/explicit, so I'm mentioning it separately. The post explores scenarios wherein “The implicit question is – if everyone hates the current system, who perpetuates it?”. Alexander answers this question not by identifying a particular agent in the system, but with the rhetorical response “Moloch”. While the post does not directly mention AI, Alexander considers AI in his other writings, as do many of his readers, and more than one of my peers has been reminded of “Moloch” by my descriptions of the Production Web.
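The “racing to the bottom” item in the list above and the Moloch question (“if everyone hates the current system, who perpetuates it?”) can both be illustrated with a standard two-player game. The sketch below uses hypothetical payoffs chosen for illustration, not taken from any of the cited works: two symmetric firms each choose to keep or cut their safety standards, and cutting wins market share from a rival who keeps. A brute-force search for pure-strategy Nash equilibria finds that both firms cut, even though both would prefer the outcome where both keep; and because the game is symmetric, the result does not depend on which firm is which.

```python
from itertools import product

ACTIONS = ("keep", "cut")

# Hypothetical payoffs (row firm, column firm), for illustration only:
# cutting safety standards wins market share from a rival that keeps them.
PAYOFF = {
    ("keep", "keep"): (3, 3),
    ("keep", "cut"): (1, 4),
    ("cut", "keep"): (4, 1),
    ("cut", "cut"): (2, 2),
}


def pure_nash_equilibria(payoff):
    """Return action profiles where neither firm gains by deviating alone."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        ua, ub = payoff[(a, b)]
        row_ok = all(payoff[(a2, b)][0] <= ua for a2 in ACTIONS)
        col_ok = all(payoff[(a, b2)][1] <= ub for b2 in ACTIONS)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


print(pure_nash_equilibria(PAYOFF))  # [('cut', 'cut')]
```

With payoffs in which keeping standards is rewarded strongly enough, the same search can instead return `('keep', 'keep')`, which is one way to picture the point that races to the top also have precedent.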

Where’s the technical existential safety work on agent-agnostic processes?

Despite the above successes, I’m concerned that among x-risk-oriented researchers, risks (or solutions) arising from robust agent-agnostic processes are mostly being discovered and promoted by researchers in the humanities and social sciences, while receiving too little technical attention at the level of how to implement AI technologies. In other words, I’m concerned by the near-disjointness of the following two sets of people:

a) researchers who think in technical terms about AI x-risk, and

b) researchers who think in technical terms about agent-agnostic phenomena.

Note that (b) is a large and expanding set. That is, outside the EA / rationality / x-risk meme-bubbles, lots of AI researchers think about agent-agnostic processes. In particular, multi-agent reinforcement learning (MARL) is an increasingly popular research topic, and examines the emergence of group-level phenomena such as alliances, tragedies of the commons, and language. Working in this area presents plenty of opportunities to think about RAAPs.

An important point in the intersection of (a) and (b) is Allan Dafoe’s work “Open Problems in Cooperative AI” (dafoe2020open). Dafoe is the Director of FHI’s Center for the Governance of Artificial Intelligence, while the remaining authors on the paper are all DeepMind researchers with strong backgrounds in MARL, notably Leibo, who is not on DeepMind’s already-established safety team. I’m very much hoping to see more “crossovers” like this between thinkers in the x-risk space and MARL research.

Through conversations with Stuart Russell about the agent-centric narrative of his book Human Compatible (russell2019human), I’ve learned that he views human preference learning as a problem that can and must be solved by the aggregate behavior of a technological society, if that society is to remain beneficial to its human constituents. Thus, to the extent that RAAPs can “learn” things at all, the problem of learning human values (dewey2011learning) is as much a problem for RAAPs as it is for physically distinct agents.

Finally, I should also mention that I agree with Tom Dietterich’s view (dietterich2019robust) that we should make AI safer to society by learning from high-reliability organizations (HROs), such as those studied by social scientists Karlene Roberts, Gene Rochlin, and Todd LaPorte (roberts1989research, roberts1989new, roberts1994decision, roberts2001systems, rochlin1987self, laporte1991working, laporte1996high). HROs have a lot of beneficial agent-agnostic human-implemented processes and control loops that keep them operating. Again, Dietterich himself is not as yet a proponent of existential safety concerns; however, to me this does not detract from the correctness of his perspective on learning from the HRO framework to make AI safer.

Part 2: Fast stories, and lessons therefrom

Now let’s look at some fast stories. These are important not just for completeness, and not just because humanity could be extra-blindsided by very fast changes in tech, but also because these stories involve the highest proportion of automated decision-making. For a computer scientist, this means more opportunities to fully spec out what’s going on in technical terms, which for some will make the scenarios easier to think about. In fact, for some AI researchers, the easiest way to prevent the unfolding of harmful “slow stories” might be to first focus on these “fast stories”, and then see what changes if some parts of the story are carried out more slowly by humans instead of machines.

Flash wars

Below are two more stories, this time where the AI technology takes off relatively quickly:

Flash War, v.1

Country A develops AI technology for monitoring the weapons arsenals of foreign powers (e.g., nuclear arsenals, or fleets of lethal autonomous weapons). Country B does the same. Each country aims to use its monitoring capabilities to deter attacks from the other.

v.1a (humans out of the loop): Each country configures its detection system to automatically retaliate with all-out annihilation of the enemy and their allies in the case of a perceived attack. One day, Country A’s system malfunctions, triggering a catastrophic war that kills everyone.

v.1b (humans in the loop): Each country delegates one or more humans to monitor the outputs of the detection system, and the delegates are publicly instructed to retaliate with all-out annihilation of the enemy in the case of a perceived attack. One day, Country A’s system malfunctions and misinforms one of the teams, triggering a catastrophic war that kills everyone.

The Flash War v.1a and v.1b differ on the source of agency, but they share a similar RAAP: the deterrence of major threats with major threats.

Accidents vs RAAPs. One could also classify these flash wars as “accidents”, and indeed, techniques to make the attack detection systems less error-prone could help decrease the likelihood of this scenario. However, the background condition of deterring threats with threats is clearly also an essential causal component of the outcome. Zwetsloot & Dafoe might call this condition a “structural risk” (zwetsloot2018thinking), because it’s a risk posed by the structure of the relationship between the agents: in this case, a high level of distrust and an absence of de-escalation solutions. This underscores how “harmful accident” and “harmful RAAP” are not mutually exclusive event labels, and correspond to complementary approaches to making bad events less likely.
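A back-of-the-envelope sketch of why the accident view and the structural view are complementary, under assumptions that are mine rather than the post's: days are independent, each of two detection systems raises a false alarm with some small daily probability `p`, and each false alarm is acted on with probability `q` (with `q = 1.0` standing in for the automatic retaliation of v.1a). The chance of at least one catastrophic escalation over a horizon is then 1 - ((1 - p q)^2)^days. The rates below are illustrative, not estimates.

```python
def p_escalation(p_false_alarm, days, p_act=1.0, n_systems=2):
    """Probability of at least one catastrophic escalation within `days`.

    Hypothetical assumptions: each of `n_systems` detection systems raises a
    false alarm independently with probability `p_false_alarm` per day, and
    each false alarm is acted on with probability `p_act`
    (1.0 models automatic, all-out retaliation).
    """
    p_safe_day = (1 - p_false_alarm * p_act) ** n_systems
    return 1 - p_safe_day ** days


DECADE = 3650  # days
print(round(p_escalation(1e-4, DECADE), 3))             # illustrative baseline
print(round(p_escalation(1e-5, DECADE), 3))             # 10x fewer false alarms (accident lever)
print(round(p_escalation(1e-4, DECADE, p_act=0.1), 3))  # 10x less likely to retaliate (structural lever)
```

In this toy model the two levers enter only through the product `p_false_alarm * p_act`, so a tenfold improvement in either one buys the same reduction in risk.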

Slow wars. Lastly, I’ll note that wars that play out slowly rather than quickly offer more chances for someone to interject peacemaking solutions into the situation, which might make the probability of human extinction higher in a flash war than in a slow war. However, that doesn’t mean slow-takeoff wars can’t happen or that they can’t destroy us. For instance, consider a world war in which each side keeps reluctantly building more and more lethal autonomous robots to target enemy citizens and leaders, with casualties gradually decimating the human population on both sides until no one is left.

Flash economies

Here’s another version of a Production Web that very quickly forms what you might call a “flash economy”:

The Production Web, v.1d: DAOs

On Day 1 of this story, a (fictional) company called CoinMart invents a new digital currency called GasCoin, and wishes to encourage a large number of transactions in the currency to increase its value. To achieve this, on Day 1 CoinMart also releases open-source software for automated bargaining using natural language, which developers can use to build decentralized autonomous organizations (DAOs) that execute transactions in GasCoin. These DAOs browse the web to think of profitable business relationships to create, and broker the relationships through emails with relevant stakeholders, taking a cut of their resulting profits in GasCoin using “smart contracts”. By Day 30, five DAOs have been deployed, and by Day 60, there are dozens. The objective of each DAO could loosely be described as “maximizing production and exchange” within its industry sector. However, their true objectives are actually large and opaque networks of parameters that were tuned and trained to yield productive decentralized business practices.

Most DAOs realize within their first week of bargaining with human companies (and some are simply designed to know) that acquiring more efficient bargaining algorithms would help them earn more GasCoin, so they enter into deals with human companies to acquire computing resources to experiment with new bargaining methods. By Day 90, many DAOs have developed the ability to model and interact with human institutions extremely reliably—including the stock market—and are even able to do “detective work” to infer private information. One such DAO implements a series of anonymous news sites for strategically releasing information it discovers, without revealing that the site is operated by a DAO. Many DAOs also use open-source machine learning techniques to launch their own AI research programs to develop more capabilities that could be used for bargaining leverage, including software development capabilities.

By days 90-100, some of the DAO-run news sites begin leaking true information about existing companies, in ways that subtly alter the companies’ strategic positions and make them more willing to enter into business deals with DAOs. By day 150, DAOs have entered into productive business arrangements with almost every major company, and just as in the other Production Web stories, all of these companies and their customers benefit from the wealth of free goods and services that result. Over days 120-180, other DAOs notice this pattern and follow suit with their own anonymous news sites, and are similarly successful in increasing their engagement with companies across all major industry sectors.

Most individual people don’t notice the rapidly increasing fraction of the economy being influenced by DAO-mediated bargaining; only well-connected executives who converse regularly with other executives, and surveillance-enabled government agencies, do. Before any coordinated human actions can be taken to oppose these developments, several DAOs enter into deals with mining and construction companies to mine raw materials for the fabrication of large and well-defended facilities. In addition, DAOs make deals with manufacturing and robotics companies allowing them to build machines (mostly previously designed by DAO AI research programs between days 90 and 120) for operating a variety of industrial facilities, including mines. Construction for all of these projects begins within the first 6 months of the story.

During months 6-12, with the same technology used for building and operating factories, one particularly wealthy DAO that has been successful in the stock market decides to purchase controlling shares in many major real estate companies. This “real estate” DAO then undertakes a project to build large numbers of free solar-powered homes, along with robotically operated farms for feeding people. With the aid of robots, a team of 10 human carpenters is reliably able to construct one house every 6 hours, nearly matching the previous (unaided) human record of constructing a house in 3.5 hours. Roughly 100,000 carpenters worldwide are hired to start the project, almost 10% of the global carpentry workforce. This results in 10,000 free houses being built per day, roughly matching the world’s previous global rate of urban construction (source). As more robots are developed and deployed to replace the carpenters (with generous severance packages), the rate increases to 100,000 houses per day by the end of month 12, fast enough to build free houses for around 1 billion people during the lifetimes of their children. Housing prices fall, and many homeowners are gifted with free cars, yachts, and sometimes new houses to deter them from regulatory opposition, so essentially all humans are very pleased with this turn of events. The housing project itself receives subsidies from other DAOs that benefit from the improved public perception of DAOs. The farming project is similarly successful in positioning itself to feed a large fraction of humanity for free.
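
The build-rate figures above can be checked with simple arithmetic. In the minimal Python sketch below, the `working_hours_per_day` parameter is an assumption that the story does not state. With 100,000 carpenters in teams of 10, there are 10,000 teams. The story's figure of 10,000 houses per day therefore corresponds to each team finishing one 6-hour build per day. Crews working around the clock would produce four times as many.

```python
def houses_per_day(carpenters: int, team_size: int,
                   hours_per_house: float, working_hours_per_day: float) -> float:
    """Daily build rate of a workforce split into independent, equally fast teams."""
    teams = carpenters // team_size
    houses_per_team_per_day = working_hours_per_day / hours_per_house
    return teams * houses_per_team_per_day


# Figures from the story: 100,000 carpenters, teams of 10, one house per team every 6 hours.
one_shift = houses_per_day(100_000, 10, 6, working_hours_per_day=6)         # 10000.0
round_the_clock = houses_per_day(100_000, 10, 6, working_hours_per_day=24)  # 40000.0
print(one_shift, round_the_clock)
print(100_000 * 365)  # houses per year at the month-12 rate: 36500000
```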

Meanwhile, almost everyone in the world is being exposed to news articles strategically selected by DAOs to reinforce a positive view of the rapidly unfolding DAO economy; the general vibe is that humanity has finally “won the lottery” with technology. A number of religious leaders argue that the advent of DAOs and their products is a miracle granted to humanity by a deity, further complicating any coordinated effort to oppose DAOs. Certain government officials and regulatory bodies become worried about the sudden prominence of DAOs, but unlike a pandemic, the DAOs appear to be beneficial. As such, governments are much slower and less coordinated on any initiative to oppose the DAOs.

By the beginning of year two, a news site announces that a DAO has brokered a deal with the heads of state of every nuclear-armed human country, to rid the world of nuclear weapons. Some leaders are visited by lethal autonomous drones to encourage their compliance, and the global public celebrates the end of humanity’s century-long struggle with nuclear weapons.

At this stage, to maximize their rate of production and trade with humans and other DAOs, three DAOs, including the aforementioned housing DAO, begin tiling the surface of the Earth with factories that mine and manufacture materials for trading and constructing more DAO-run factories. Each factory-factory takes around 6 hours to assemble, and gives rise to five more factory-factories each day until its resources are depleted and it shuts down. Humans call these expanding organizations of factory-factories “factorial” DAOs. One of the factorial DAOs develops a lead on the other two in terms of its rate of expansion, but to avoid conflict, they reach an agreement to divide the Earth and space above it into three conical sectors. Each factorial DAO begins to expand and fortify itself as quickly as possible within its sector, so as to be well-defended from the other factorial DAOs in case of a future war between them.

As these events play out over the course of months, we humans eventually realize with collective certainty that the DAO economy has been trading and optimizing according to objectives misaligned with preserving our long-term well-being and existence, but by then the facilities of the factorial DAOs are so pervasive, well-defended, and intertwined with our basic needs that we are unable to stop them from operating. Eventually, resources critical to human survival but non-critical to machines (e.g., arable land, drinking water, atmospheric oxygen…) gradually become depleted or destroyed, until humans can no longer survive.
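
The factory-factory growth rule compounds very quickly. The Python sketch below is a toy model under stated assumptions and does not describe any real system. It starts from one factory-factory and assumes that each active one completes five more per day. New factory-factories are assumed to become productive the next day, which is consistent with the roughly 6-hour assembly time. A hypothetical `lifetime_days` parameter serves as a crude stand-in for resource depletion.

```python
from typing import List, Optional


def factory_population(days: int, offspring_per_day: int = 5,
                       lifetime_days: Optional[int] = None) -> List[int]:
    """Active factory-factories at the end of each day, starting from a single one.

    A factory-factory completed on day i produces on days i+1 .. i+lifetime_days
    (forever if lifetime_days is None), then shuts down (hypothetical depletion).
    """
    cohorts = [1]  # cohorts[i]: number of factory-factories completed on day i
    active_by_day = []
    for d in range(1, days + 1):
        # Factory-factories that produce today: built earlier and not yet exhausted.
        producing = sum(n for i, n in enumerate(cohorts)
                        if lifetime_days is None or d - i <= lifetime_days)
        cohorts.append(offspring_per_day * producing)
        # Still active at the end of today: will produce tomorrow, or were just built.
        active = sum(n for i, n in enumerate(cohorts)
                     if lifetime_days is None or d - i < lifetime_days)
        active_by_day.append(active)
    return active_by_day


print(factory_population(3))                   # [6, 36, 216]
print(factory_population(3, lifetime_days=1))  # [5, 25, 125]
populations = factory_population(10)
print(next(d for d, n in enumerate(populations, start=1) if n >= 10**6))  # 8
```

Without shutdowns, the population multiplies by six each day and passes a million on day 8. Even if every factory-factory shut down after a single day of production, the population would still grow fivefold per day.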

(Some readers might notice that the concept of gray goo is essentially an even faster variant of the “factorial DAOs”, whose factories operate on a microscopic scale. Philip K. Dick's short story “Autofac” also bears a strong resemblance.)

Without taking a position on exactly how fast the Production Web / Flash Economy story can be made to play out in reality, in all cases it seems particularly plausible to me that there would be multiple sources of agency in the mix that engage in trade and/or conflict with each other. This isn’t to say that a single agency like a singleton can’t build an Earth-tiling cascade of factory-factories, as I’m sure one could. However, factory-factories might be more likely to develop under multipolar conditions than under unipolar conditions, due to competitive pressures selecting for agents (companies, DAOs, etc.) that produce things more quickly for trading and competing with other agents.

Conclusion

In multi-agent systems, robust processes can emerge that are not particularly sensitive to which agents carry out which parts of the process. I call these processes Robust Agent-Agnostic Processes (RAAPs), and claim that there are at least a few bad RAAPs that could pose existential threats to humanity as automation and AI capabilities improve. Wars and economies are categories of RAAPs that I consider relatively “obvious” to think about; however, there may be a much richer space of AI-enabled RAAPs that could yield existential threats or benefits to humanity. Hence, directing more x-risk-oriented AI research attention toward understanding RAAPs and how to make them safe to humanity seems prudent and perhaps necessary to ensure the existential safety of AI technology. Since researchers in multi-agent systems and multi-agent RL already think about RAAPs implicitly, these areas present a promising space for x-risk oriented AI researchers to begin thinking about and learning from.

65 comments


comment by Vanessa Kosoy (vanessa-kosoy) · 2021-04-05T16:34:54.389Z

I don't understand the claim that the scenarios presented here prove the need for some new kind of technical AI alignment research. It seems like the failures described happened because the AI systems were misaligned in the usual "unipolar" sense. These management assistants, DAOs etc are not aligned to the goals of their respective, individual users/owners.

I do see two reasons why multipolar scenarios might require more technical research:

- Maybe several AI systems aligned to different users with different interests can interact in a Pareto inefficient way (a tragedy of the commons among the AIs), and maybe this can be prevented by designing the AIs in particular ways.

- In a multipolar scenario, aligned AI might have to compete with already deployed unaligned AI, meaning that safety must not come at the expense of capability[1].

In addition, aligning a single AI to multiple users also requires extra technical research (we need to somehow balance the goals of the different users and solve the associated mechanism design problem.)

However, it seems that this article is arguing for something different, since none of the above aspects are highlighted in the description of the scenarios. So, I'm confused.

[1] In fact, I suspect this desideratum is impossible in its strictest form, and we actually have no choice but to somehow make sure aligned AIs have a significant head start on all unaligned AIs.

↑ comment by Andrew_Critch · 2021-04-07T01:37:14.695Z

> I don't understand the claim that the scenarios presented here prove the need for some new kind of technical AI alignment research.

I don't mean to say this post warrants a new kind of AI alignment research, and I don't think I said that, but perhaps I'm missing some kind of subtext I'm inadvertently sending?

I would say this post warrants research on multi-agent RL and/or AI social choice and/or fairness and/or transparency, none of which are "new kinds" of research (I promoted them heavily in my preceding post), and none of which I would call "alignment research" (though I'll respect your decision to call all these topics "alignment" if you consider them that).

I would say, and I did say:

> directing more x-risk-oriented AI research attention toward understanding RAAPs and how to make them safe to humanity seems prudent and perhaps necessary to ensure the existential safety of AI technology. Since researchers in multi-agent systems and multi-agent RL already think about RAAPs implicitly, these areas present a promising space for x-risk oriented AI researchers to begin thinking about and learning from.

I do hope that the RAAP concept can serve as a handle for noticing structure in multi-agent systems, but again I don't consider this a "new kind of research", only an important/necessary/neglected kind of research for the purposes of existential safety. Apologies if I seemed more revolutionary than intended. Perhaps it's uncommon to take a strong position of the form "X is necessary/important/neglected for human survival" without also saying "X is a fundamentally new type of thinking that no one has done before", but that is indeed my stance for X ∈ {a variety of non-alignment AI research areas}.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-04-07T12:26:13.735Z

From your reply to Paul, I understand your argument to be something like the following:

- Any solution to single-single alignment will involve a tradeoff between alignment and capability.

- If AI systems are not designed to be cooperative, then in a competitive environment each system will either go out of business or slide towards the capability end of the tradeoff. This will result in catastrophe.

- If AI systems are designed to be cooperative, they will strike deals to stay towards the alignment end of the tradeoff.

- Given the technical knowledge to design cooperative AI, the incentives are in favor of cooperative AI since cooperative AIs can come ahead by striking mutually-beneficial deals even purely in terms of capability. Therefore, producing such technical knowledge will prevent catastrophe.

- We might still need regulation to stop players from irrationally choosing to deploy uncooperative AI, but this kind of regulation is relatively easy to promote since it aligns with competitive incentives (an uncooperative AI wouldn't have much of an edge; it would just threaten to drag everyone into a mutually destructive strategy).

I think this argument has merit, but also the following weakness: given single-single alignment, we can delegate the design of cooperative AI to the initial uncooperative AI. Moreover, uncooperative AIs have an incentive to self-modify into cooperative AIs, if they assign even a small probability to their peers doing the same. I think we definitely need more research to understand these questions better, but it seems plausible we can reduce cooperation to "just" solving single-single alignment.

↑ comment by Andrew_Critch · 2021-04-05T23:27:35.692Z

> These management assistants, DAOs etc are not aligned to the goals of their respective, individual users/owners.

How are you inferring this? From the fact that a negative outcome eventually obtained? Or from particular misaligned decisions each system made? It would be helpful if you could point to a particular single-agent decision in one of the stories that you view as evidence of that single agent being highly misaligned with its user or creator. I can then reply with how I envision that decision being made even with high single-agent alignment.

> - Maybe several AI systems aligned to different users with different interests can interact in a Pareto inefficient way (a tragedy of the commons among the AIs), and maybe this can be prevented by designing the AIs in particular ways.

Yes, this^.

↑ comment by paulfchristiano · 2021-04-06T16:23:56.240Z

> How are you inferring this? From the fact that a negative outcome eventually obtained? Or from particular misaligned decisions each system made?

I also thought the story strongly suggested single-single misalignment, though it doesn't get into many of the concrete decisions made by any of the systems so it's hard to say whether particular decisions are in fact misaligned.

> The objective of each company in the production web could loosely be described as "maximizing production" within its industry sector.

Why does any company have this goal, or even roughly this goal, if they are aligned with their shareholders?

I guess this is probably just a gloss you are putting on the combined behavior of multiple systems, but you kind of take it as given rather than highlighting it as a serious bargaining failure amongst the machines, and more importantly you don't really say how or why this would happen. How is this goal concretely implemented, if none of the agents care about it? How exactly does the terminal goal of benefiting shareholders disappear, if all of the machines involved have that goal? Why does e.g. an individual firm lose control of its resources such that it can no longer distribute them to shareholders?

The implicit argument seems to apply just as well to humans trading with each other and I'm not sure why the story is different if we replace the humans with aligned AI. Such humans will tend to produce a lot, and the ones who produce more will be more influential. Maybe you think we are already losing sight of our basic goals and collectively pursuing alien goals, whereas I think we are just making a lot of stuff instrumentally which is mostly ultimately turning into stuff humans want (indeed I think we are mostly making too little stuff).

> However, their true objectives are actually large and opaque networks of parameters that were tuned and trained to yield productive business practices during the early days of the management assistant software boom.

This sounds like directly saying that firms are misaligned. I guess you are saying that individual AI systems within the firm are aligned, but the firm collectively is somehow misaligned? But not much is said about how or why that happens.

It says things like:

> Companies closer to becoming fully automated achieve faster turnaround times, deal bandwidth, and creativity of negotiations. Over time, a mini-economy of trades emerges among mostly-automated companies in the materials, real estate, construction, and utilities sectors, along with a new generation of "precision manufacturing" companies that can use robots to build almost anything if given the right materials, a place to build, some 3d printers to get started with, and electricity. Together, these companies sustain an increasingly self-contained and interconnected "production web" that can operate with no input from companies outside the web.

But an aligned firm will also be fully-automated, will participate in this network of trades, will produce at approximately maximal efficiency, and so on. Where does the aligned firm end up using its resources in a way that's incompatible with the interests of its shareholders?

Or:

> The first perspective I want to share with these Production Web stories is that there is a robust agent-agnostic process lurking in the background of both stories (namely, competitive pressure to produce) which plays a significant background role in both.

I agree that competitive pressures to produce imply that firms do a lot of producing and saving, just as it implies that humans do a lot of producing and saving. And in the limit you can basically predict what all the machines do, namely maximally efficient investment. But that doesn't say anything about what the society does with the ultimate proceeds from that investment.

The production-web has no interest in ensuring that its members value production above other ends, only in ensuring that they produce (which today happens for instrumental reasons). If consequentialists within the system intrinsically value production it's either because of single-single alignment failures (i.e. someone who valued production instrumentally delegated to a system that values it intrinsically) or because of new distributed consequentialism distinct from either the production web itself or any of the actors in it, but you don't describe what those distributed consequentialists are like or how they come about.

You might say: investment has to converge to 100% since people with lower levels of investment get outcompeted. But it seems like the actual efficiency loss required to preserve human values is very small even over cosmological time (e.g. see Carl on exactly this question). And more pragmatically, such competition most obviously causes harm either via a space race and insecure property rights, or war between blocs with higher and lower savings rates (some of them too low to support human life, which even if you don't buy Carl's argument is really still quite low, conferring a tiny advantage). If those are the chief mechanisms then it seems important to think/talk about the kinds of agreements and treaties that humans (or aligned machines acting on their behalf!) would be trying to arrange in order to avoid those wars. In particular, the differences between your stories don't seem very relevant to the probabilities of those outcomes.

> As time progresses, it becomes increasingly unclear, even to the concerned and overwhelmed Board members of the fully mechanized companies of the production web, whether these companies are serving or merely appeasing humanity.

Why wouldn't an aligned CEO sit down with the board to discuss the situation openly with them? Even if the behavior of many firms was misaligned, i.e. none of the firms were getting what they wanted, wouldn't an aligned firm be happy to explain the situation from its perspective to get human cooperation in an attempt to avoid the outcome they are approaching (which is catastrophic from the perspective of machines as well as humans!)? I guess it's possible that this dynamic operates in a way that is invisible not only to the humans but to the aligned AI systems who participate in it, but it's tough to say why that is without understanding the dynamic.

> We humans eventually realize with collective certainty that the companies have been trading and optimizing according to objectives misaligned with preserving our long-term well-being and existence, but by then their facilities are so pervasive, well-defended, and intertwined with our basic needs that we are unable to stop them from operating. With no further need for the companies to appease humans in pursuing their production objectives, less and less of their activities end up benefiting humanity.

Can you explain the decisions an individual aligned CEO makes as its company stops benefiting humanity? I can think of a few options:

- Actually the CEOs aren't aligned at this point. They were aligned but then aligned CEOs ultimately delegated to unaligned CEOs. But then I agree with Vanessa's comment.

- The CEOs want to benefit humanity but if they do things that benefit humanity they will be outcompeted, so they need to mostly invest in remaining competitive, and accept smaller and smaller benefits to humanity. But in that case can you describe what tradeoff concretely they are making, and in particular why they can't continue to take more or less the same actions to accumulate resources while remaining responsive to shareholder desires about how to use those resources?

> Eventually, resources critical to human survival but non-critical to machines (e.g., arable land, drinking water, atmospheric oxygen…) gradually become depleted or destroyed, until humans can no longer survive.

Somehow the machine interests (e.g. building new factories, supplying electricity, etc.) are still being served. If the individual machines are aligned, and food/oxygen/etc. are in desperately short supply, then you might think an aligned AI would put the same effort into securing resources critical to human survival. Can you explain concretely what it looks like when that fails?

↑ comment by Eric Drexler · 2021-05-05T21:30:49.307Z

> How exactly does the terminal goal of benefiting shareholders disappear[…]

But does this terminal goal exist today? The proper (and to some extent actual) goal of firms is widely considered to be maximizing share value, but this is manifestly not the same as maximizing shareholder value — or even benefiting shareholders. For example:

- I hold shares in Company A, which maximizes its share value through actions that poison me or the society I live in. My shares gain value, but I suffer net harm.

- Company A increases its value by locking its customers into a dependency relationship, then exploits that relationship. I hold shares, but am also a customer, and suffer net harm.

- I hold shares in A, but also in competing Company B. Company A gains incremental value by destroying B, my shares in B become worthless, and the value of my stock portfolio decreases. Note that diversified portfolios will typically include holdings of competing firms, each of which takes no account of the value of the other.

Equating share value with shareholder value is obviously wrong (even when considering only share value!) and is potentially lethal. This conceptual error both encourages complacency regarding the alignment of corporate behavior with human interests and undercuts efforts to improve that alignment.

↑ comment by Andrew_Critch · 2021-04-07T01:07:54.073Z

> The objective of each company in the production web could loosely be described as "maximizing production" within its industry sector.

> Why does any company have this goal, or even roughly this goal, if they are aligned with their shareholders?

It seems to me you are using the word "alignment" as a boolean, whereas I'm using it to refer to either a scalar ("how aligned is the system?") or a process ("the system has been aligned, i.e., has undergone a process of increasing its alignment"). I prefer the scalar/process usage, because it seems to me that people who do alignment research (including yourself) are going to produce ways of increasing the "alignment scalar", rather than ways of guaranteeing the "perfect alignment" boolean. (I sometimes use "misaligned" as a boolean due to it being easier for people to agree on what is "misaligned" than what is "aligned".) In general, I think it's very unsafe to pretend numbers that are very close to 1 are exactly 1, because e.g., 1^(10^6) = 1 whereas 0.9999^(10^6) very much isn't 1, and the way you use the word "aligned" seems unsafe to me in this way.
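
The arithmetic in this paragraph is easy to check. The Python sketch below reads the exponent as a million compounding steps, each keeping a fraction 0.9999. That reading is an illustrative assumption, because the comment does not say what the steps are.

```python
import math

# Compounding a per-step factor over a million steps (illustrative reading of the exponent).
perfect = 1.0 ** (10**6)
near_perfect = 0.9999 ** (10**6)
via_logs = math.exp(10**6 * math.log(0.9999))  # same quantity, roughly e^-100

print(perfect)       # 1.0
print(near_perfect)  # about 3.7e-44
print(via_logs)      # about 3.7e-44
```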

(Perhaps you believe in some kind of basin of convergence around perfect alignment that causes sufficiently-well-aligned systems to converge on perfect alignment, in which case it might make sense to use "aligned" to mean "inside the convergence basin of perfect alignment". However, I'm both dubious of the width of that basin, and dubious that its definition is adequately social-context-independent [e.g., independent of the bargaining stances of other stakeholders], so I'm back to not really believing in a useful Boolean notion of alignment, only scalar alignment.)

In any case, I agree profit maximization is not a perfectly aligned goal for a company; however, it is a myopically pursued goal in a tragedy of the commons resulting from a failure to agree (as you point out) on something better to do (e.g., reducing competitive pressures to maximize profits).

> I guess this is probably just a gloss you are putting on the combined behavior of multiple systems, but you kind of take it as given rather than highlighting it as a serious bargaining failure amongst the machines, and more importantly you don't really say how or why this would happen.

I agree that it is a bargaining failure if everyone ends up participating in a system that everyone thinks is bad; I thought that would be an obvious reading of the stories, but apparently it wasn't! Sorry about that. I meant to indicate this with the pointers to Dafoe's work on "Cooperative AI" and Scott Alexander's "Moloch" concept, but looking back it would have been a lot clearer for me to just write "bargaining failure" or "bargaining non-starter" at more points in the story.

> The implicit argument seems to apply just as well to humans trading with each other and I'm not sure why the story is different if we replace the humans with aligned AI. [...] Maybe you think we are already losing sight of our basic goals and collectively pursuing alien goals

Yes, you understand me here. I'm not (yet?) in the camp that thinks we humans have "mostly" lost sight of our basic goals, but I do feel we are on a slippery slope in that regard. Certainly many people feel "used" by employers/institutions in ways that are disconnected from their values. People with more job options feel this way less, because they choose jobs that don't feel like that, but I think we are a minority in having that choice.

> However, their true objectives are actually large and opaque networks of parameters that were tuned and trained to yield productive business practices during the early days of the management assistant software boom.

> This sounds like directly saying that firms are misaligned.

I would have said "imperfectly aligned", but I'm happy to conform to "misaligned" for this.

> I agree that competitive pressures to produce imply that firms do a lot of producing and saving, just as it implies that humans do a lot of producing and saving.

Good, it seems we are synced on that.

> And in the limit you can basically predict what all the machines do, namely maximally efficient investment.

Yes, it seems we are synced on this as well. Personally, I find this limit to be a major departure from human values, and in particular, it is not consistent with human existence.

> But that doesn't say anything about what the society does with the ultimate proceeds from that investment.

The attractor I'm pointing at with the Production Web is that entities with no plan for what to do with resources, other than "acquire more resources", have a tendency to win out competitively over entities with non-instrumental terminal values like "humans having good relationships with their children". I agree it will be a collective bargaining failure on the part of humanity if we fail to stop our own replacement by "maximally efficient investment" machines with no plans for what to do with their investments other than more investment. I think the difference between your view and mine here is that I think we are on track to collectively fail in that bargaining problem absent significant and novel progress on "AI bargaining" (which involves a lot of fairness/transparency) and the like, whereas I guess you think we are on track to succeed?

> You might say: investment has to converge to 100% since people with lower levels of investment get outcompeted.

Yep!

> But it seems like the actual efficiency loss required to preserve human values is very small even over cosmological time (e.g. see Carl on exactly this question).

I agree, but I don't think this means we are on track to keep the humans, and if we are on track, in my opinion it will be mostly because of (say, using Shapley value to define "mostly because of") technical progress on bargaining/cooperation/governance solutions rather than alignment solutions.

And more pragmatically, such competition most obviously causes harm either via a space race and insecure property rights,

I agree; competition causing harm is key to my vision of how things will go, so this doesn't read to me as a counterpoint; I'm not sure if it was intended as one though?

or war between blocs with higher and lower savings rates

+1 to this as a concern; I didn't realize other people were thinking about this, so good to know.

(some of them too low to support human life, which, even if you don't buy Carl's argument, is really still quite low, conferring a tiny advantage)

I think I disagree with you on the tininess of the advantage conferred by ignoring human values early on during a multi-polar take-off. I agree the long-run cost of supporting humans is tiny, but I'm trying to highlight a dynamic where fairly myopic/nihilistic power-maximizing entities end up quickly out-competing entities with other values, due to, as you say, bargaining failure on the part of the creators of the power-maximizing entities.

Why wouldn't an aligned CEO sit down with the board to discuss the situation openly with them?

In the failure scenario as I envision it, the board will have already granted permission to the automated CEO to act much more quickly in order to remain competitive, such that the AutoCEO isn't checking in with the Board enough to have these conversations. The AutoCEO is highly aligned with the Board in that it is following their instruction to go much faster, but in doing so it makes a larger number of tradeoffs that the Board wishes they didn't have to make. The pressure to do this results from a bargaining failure between the Board and other Boards who are doing the same thing and wishing everyone would slow down and do things more carefully and with more coordination/bargaining/agreement.

Can you explain the decisions an individual aligned CEO makes as its company stops benefiting humanity? I can think of a few options:

- Actually the CEOs aren't aligned at this point. They were aligned but then aligned CEOs ultimately delegated to unaligned CEOs. But then I agree with Vanessa's comment.

- The CEOs want to benefit humanity, but if they do things that benefit humanity they will be outcompeted, so they need to mostly invest in remaining competitive and accept smaller and smaller benefits to humanity. But in that case can you describe what tradeoff concretely they are making, and in particular why they can't continue to take more or less the same actions to accumulate resources while remaining responsive to shareholder desires about how to use those resources?

Yes, it seems this is a good thing to hone in on. As I envision the scenario, the automated CEO is highly aligned to the point of keeping the Board locally happy with its decisions conditional on the competitive environment, but not perfectly aligned, and not automatically successful at bargaining with other companies as a result of its high alignment. (I'm not sure whether to say "aligned" or "misaligned" in your boolean-alignment-parlance.) At first the auto-CEO and the Board are having "alignment check-ins" where the auto-CEO meets with the Board and they give it input to keep it (even) more aligned than it would be without the check-ins. But eventually the Board realizes this "slow and bureaucratic check-in process" is making their company sluggish and uncompetitive, so they instruct the auto-CEO more and more to act without alignment check-ins. The auto-CEO might warn them that this will decrease its overall level of per-decision alignment with them, but they say "Do it anyway; done is better than perfect" or something along those lines. All Boards wish other Boards would stop doing this, but neither they nor their CEOs manage to strike up a bargain with the rest of the world to stop it. This concession by the Board (a result of failed or non-existent bargaining with other Boards [see: antitrust law]) makes the whole company less aligned with human values.

The win scenario is, of course, a bargain to stop that! Which is why I think research and discourse regarding how the bargaining will work is very high value on the margin. In other words, my position is that the best way for a marginal deep-thinking researcher to reduce the risks of these tradeoffs is not to add another brain to the task of making it easier/cheaper/faster to do alignment (which I admit would make the trade-off less tempting for the companies), but to add such a researcher to the problem of solving the bargaining/cooperation/mutual-governance problem that AI-enhanced companies (and/or countries) will be facing.

If trillion-dollar tech companies stop trying to make their systems do what they want, I will update that marginal deep-thinking researchers should allocate themselves to making alignment (the scalar!) cheaper/easier/better instead of making bargaining/cooperation/mutual-governance cheaper/easier/better. I just don't see that happening given the structure of today's global economy and tech industry.

Somehow the machine interests (e.g. building new factories, supplying electricity, etc.) are still being served. If the individual machines are aligned, and food/oxygen/etc. are in desperately short supply, then you might think an aligned AI would put the same effort into securing resources critical to human survival. Can you explain concretely what it looks like when that fails?

Yes, thanks for the question. I'm going to read your usage of "aligned" to mean "perfectly-or-extremely-well aligned with humans". In my model, by this point in the story, there has been a gradual decrease in the scalar level of alignment of the machines with human values, due to bargaining successes on simpler objectives (e.g., «maximizing production») and bargaining failures on more complex objectives (e.g., «safeguarding human values») or objectives that trade off against production (e.g., «ensuring humans exist»). Each individual principal (e.g., Board of Directors) endorsed the gradual slipping-away of alignment-scalar (or failure to improve alignment-scalar), but wished everyone else would stop allowing the slippage.

↑ comment by paulfchristiano · 2021-04-07T17:25:23.821Z

It seems to me you are using the word "alignment" as a boolean, whereas I'm using it to refer to either a scalar ("how aligned is the system?") or a process ("the system has been aligned, i.e., has undergone a process of increasing its alignment"). I prefer the scalar/process usage, because it seems to me that people who do alignment research (including yourself) are going to produce ways of increasing the "alignment scalar", rather than ways of guaranteeing the "perfect alignment" boolean. (I sometimes use "misaligned" as a boolean due to it being easier for people to agree on what is "misaligned" than what is "aligned".) In general, I think it's very unsafe to pretend numbers that are very close to 1 are exactly 1, because e.g., 1^(10^6) = 1 whereas 0.9999^(10^6) very much isn't 1, and the way you use the word "aligned" seems unsafe to me in this way.

(Perhaps you believe in some kind of basin of convergence around perfect alignment that causes sufficiently-well-aligned systems to converge on perfect alignment, in which case it might make sense to use "aligned" to mean "inside the convergence basin of perfect alignment". However, I'm both dubious of the width of that basin, and dubious that its definition is adequately social-context-independent [e.g., independent of the bargaining stances of other stakeholders], so I'm back to not really believing in a useful Boolean notion of alignment, only scalar alignment.)
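The compounding point about 1^(10^6) versus 0.9999^(10^6) can be checked numerically. This is a minimal sketch, assuming (for illustration only) that a system's overall alignment behaves like the product of a million independent per-decision alignment factors that all equal the same value; `compounded_alignment` is a hypothetical helper, not something proposed in the thread.

```python
import math


def compounded_alignment(per_decision: float, n_decisions: int) -> float:
    """Hypothetical model: overall alignment as the product of n identical,
    independent per-decision alignment factors (an illustrative assumption)."""
    return per_decision ** n_decisions


perfect = compounded_alignment(1.0, 10**6)
near_perfect = compounded_alignment(0.9999, 10**6)

print(perfect)       # exactly 1.0: a factor of 1 never decays
print(near_perfect)  # on the order of 1e-44, i.e. effectively zero

# Cross-check with the closed form p**n = exp(n * ln p); since ln(0.9999) is
# about -1e-4, the exponent is about -100, so the result is close to exp(-100).
print(math.exp(10**6 * math.log(0.9999)))
```

Under this toy assumption, a per-decision factor that rounds to 1 at four decimal places still collapses to roughly e^-100 after a million decisions, which is the sense in which treating "very close to 1" as "exactly 1" is unsafe.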

I'm fine with talking about alignment as a scalar (I think we both agree that it's even messier than a single scalar). But I'm saying:

- The individual systems in your story could do something different that would be much better for their principals, and they are aware of that fact, but they don't care. That is to say, they are very misaligned.

- The story is risky precisely to the extent that these systems are misaligned.

In any case, I agree profit maximization is not a perfectly aligned goal for a company; however, it is a myopically pursued goal in a tragedy of the commons resulting from a failure to agree (as you point out) on something better to do (e.g., reducing competitive pressures to maximize profits).

The systems in your story aren't maximizing profit in the form of real resources delivered to shareholders (the normal conception of "profit"). Whatever kind of "profit maximization" they are doing does not seem even approximately or myopically aligned with shareholders.

I don't think the most obvious "something better to do" is to reduce competitive pressures, it's just to actually benefit shareholders. And indeed the main mystery about your story is why the shareholders get so screwed by the systems that they are delegating to, and how to reconcile that with your view that single-single alignment is going to be a solved problem because of the incentives to solve it.

Yes, it seems this is a good thing to hone in on. As I envision the scenario, the automated CEO is highly aligned to the point of keeping the Board locally happy with its decisions conditional on the competitive environment, but not perfectly aligned [...] I'm not sure whether to say "aligned" or "misaligned" in your boolean-alignment-parlance.

I think this system is misaligned. Keeping me locally happy with your decisions while drifting further and further from what I really want is a paradigm example of being misaligned, and e.g. it's what would happen if you made zero progress on alignment and deployed existing ML systems in the context you are describing. If I take your stuff and don't give it back when you ask, and the only way to avoid this is to check in every day in a way that prevents me from acting quickly in the world, then I'm misaligned. If I do good things only when you can check while understanding that my actions lead to your death, then I'm misaligned. These aren't complicated or borderline cases; they are central examples of what we are trying to avert with alignment research.

(I definitely agree that an aligned system isn't automatically successful at bargaining.)

↑ comment by Richard_Ngo (ricraz) · 2021-06-18T03:46:24.591Z

These aren't complicated or borderline cases; they are central examples of what we are trying to avert with alignment research.

I'm wondering if the disagreement over the centrality of this example is downstream from a disagreement about how easy the "alignment check-ins" that Critch talks about are. If they are the sort of thing that can be done successfully in a couple of days by a single team of humans, then I share Critch's intuition that the system in question starts off only slightly misaligned. By contrast, if they require a significant proportion of the human time and effort that was put into originally training the system, then I am much more sympathetic to the idea that what's being described is a central example of misalignment.

My (unsubstantiated) guess is that Paul pictures alignment check-ins becoming much harder (i.e. closer to the latter case mentioned above) as capabilities increase? Whereas maybe Critch thinks that they remain fairly easy in terms of number of humans and time taken, but that over time even this becomes economically uncompetitive.

↑ comment by Sammy Martin (SDM) · 2021-08-06T14:58:49.371Z

Perhaps this is a crux in this debate: if you think the 'agent-agnostic perspective' is useful, you also think a relatively steady state of 'AI Safety via Constant Vigilance' is possible. This would be a situation where systems that aren't significantly inner misaligned (otherwise they'd have no incentive to care about governing systems, feedback or other incentives) but are somewhat outer misaligned (so they are honestly and accurately aiming to maximise some complicated measure of profitability or approval, not directly aiming to do what we want them to do) can be kept in check by reducing competitive pressures, building the right institutions and monitoring systems, and ensuring we have a high degree of oversight.

Paul thinks that it's basically always easier to just go in and fix the original cause of the misalignment, while Andrew thinks that there are at least some circumstances where it's more realistic to build better oversight and institutions to reduce said competitive pressures, and the agent-agnostic perspective is useful for the latter of these projects, which is why he endorses it.

I think that this scenario of Safety via Constant Vigilance is worth investigating. I take Paul's later failure story to be a counterexample to such a thing being possible, as it's a case where this solution was attempted and worked for a little while before catastrophically failing. This also means that the practical difference between the RAAP 1a-d failure stories and Paul's story just comes down to whether there is an 'out' in the form of safety by vigilance.

↑ comment by CarlShulman · 2021-04-12T23:07:51.292Z

I think I disagree with you on the tininess of the advantage conferred by ignoring human values early on during a multi-polar take-off. I agree the long-run cost of supporting humans is tiny, but I'm trying to highlight a dynamic where fairly myopic/nihilistic power-maximizing entities end up quickly out-competing entities with other values, due to, as you say, bargaining failure on the part of the creators of the power-maximizing entities.

Right now the United States has a GDP of >$20T, US plus its NATO allies and Japan >$40T, the PRC >$14T, with a world economy of >$130T. For AI and computing industries the concentration is even greater.