Comments

Thanks Anna for posting this! I agree with your hypothesis, and would add that shaming humans for not being VNM agents is probably a contributor to AI risk because of the cultural example it sets / because of the self-fulfilling prophecy of how-intelligence-gets-used that it supports.

The evidence you present in each case is outputs generated by LLMs.

The total evidence I have (and that everyone has) is more than behavioral. It includes

a) the transformer architecture, in particular the attention module,

b) the training corpus of human writing,

c) the means of execution (recursive calling upon its own outputs and history of QKV vector representations of outputs),

d) as you say, the model's behavior, and

e) "artificial neuroscience" experiments on the model's activation patterns and weights, like mech interp research.

When I think about how the given architecture, with the given training corpus, with the given means of execution, produces the observed behavior, with the given neural activation patterns, I am led to be 90% sure of the items in my 90% list, namely:

#1 (introspection), #2 (purposefulness), #3 (experiential coherence), #7 (perception of perception), #8 (awareness of awareness), #9 (symbol grounding), #15 (sense of cognitive extent), and #16 (memory of memory).

YMMV, but to me from a Bayesian perspective it seems a stretch to disbelieve those at this point, unless one adopts disbelief as an objective as in the Popperian / falsificationist approach to science.

How would you distinguish an LLM both successfully extracting and then faithfully representing whatever internal reasoning generated a specific part of its outputs

I do not in general think LLMs faithfully represent their internal reasoning when asked about it. They can, and do, lie. But in the process of responding they also have access to latent information in their (Q,K,V) vector representation history. My claim is that they access (within those matrices, called by the attention module) information about their internal states, which are "internal" relative to the merely textual behavior we see, and thus establish a somewhat private chain of cognition that the model is aware of and tracking as it writes.
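To make the mechanism concrete, here is a toy sketch of a single attention read over a cache of earlier key and value vectors. It is not any real model's code: the vectors, the function names `softmax` and `attend`, and the absence of learned projections are all simplifying assumptions. It only illustrates that what the current position mixes together is drawn from cached vectors, not from the emitted text.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, cached_keys, cached_values):
    """Single-head attention for one new position over all cached positions."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in cached_keys]
    weights = softmax(scores)
    dim_v = len(cached_values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, cached_values))
             for i in range(dim_v)]
    return mixed, weights

# Hypothetical cache: one key and one value vector per earlier position.
# These stand in for internal state that never appears in the text output.
cached_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
cached_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

# Hypothetical query for the position currently being written.
query = [4.0, 0.0]
output, weights = attend(query, cached_keys, cached_values)
print(weights)
print(output)
```

The weights say how much each earlier position's cached value contributes to the current step. Whether reading such vectors amounts to awareness of internal states is the claim under discussion, not something the sketch shows.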

vs. conditioning on its previous outputs to give you plausible "explanation" for what it meant? The second seems much more likely to me (and this behavior isn't that hard to elicit, i.e. by asking an LLM to give you a one-word answer to a complicated question, and then asking it for its reasoning).

In my experience of humans, humans also do this.

A patient can hire us to collect their medical records into one place, to research a health question for them, and to help them prep for a doctor's appointment with good questions about the research. Then we do that, building and using our AI tool chain as we go, without training AI on sensitive patient data. Then the patient can delete their data from our systems if they want, or re-engage us for further research or other advocacy on their behalf.

A good comparison is the company Picnic Health, except instead of specifically matching patients with clinical trials, we do more general research and advocacy for them.

Do you have a mostly disjoint view of AI capabilities between the "extinction from loss of control" scenarios and "extinction by industrial dehumanization" scenarios?

a) If we go extinct from a loss of control event, I count that as extinction from a loss of control event, accounting for the 35% probability mentioned in the post.

b) If we don't have a loss of control event but still go extinct from industrial dehumanization, I count that as extinction caused by industrial dehumanization caused by successionism, accounting for the additional 50% probability mentioned in the post, totalling an 85% probability of extinction over the next ~25 years.

c) If a loss of control event causes extinction via a pathway that involves industrial dehumanization, that's already accounted for in the previous 35% (and moreover I'd count the loss of control event as the main cause, because we have no control to avert the extinction after that point). I.e., I consider this a subset of (a): extinction via industrial dehumanization caused by loss of control. I'd hoped this would be clear in the post, from my use of the word "additional"; one does not generally add probabilities unless the underlying events are disjoint. Perhaps I should edit to add some more language to clarify this.
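The bookkeeping in (a) through (c) can be written out as a small sketch. The two probabilities are the figures quoted from the post; the variable names are illustrative assumptions.

```python
# Figures quoted from the post; names are illustrative.
p_loss_of_control = 0.35   # case (a), which already contains case (c)
p_successionism = 0.50     # case (b), defined to exclude any loss-of-control event

# (a) and (b) are disjoint by construction, so their probabilities add.
# Case (c) is a subset of (a) and must not be counted a second time.
p_extinction = p_loss_of_control + p_successionism

print(f"total extinction probability: {p_extinction:.2f}")
```

If (c) were instead treated as a separate event and added on top, the total would double count part of the 35%.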

Do you have a model for maintaining "regulatory capture" in a sustained way

Yes: humans must maintain power over the economy, such as by sustaining the power (including regulatory capture power) of industries that care for humans, per the post. I suspect this involves a lot of technical, social, and sociotechnical work, with much of the sociotechnical work probably being executed or lobbied by industry, and being of greater causal force than either the purely technical (e.g., algorithmic) or purely social (e.g., legislative) work.

The general phenomenon of sociotechnical patterns (e.g., product roll-outs) dominating the evolution of the AI industry can be seen in the way ChatGPT-4 as a product has had more impact on the world (including via its influence on subsequent technical and social trends) than technical and social trends in AI and AI policy prior to ChatGPT-4 (e.g., papers on transformer models; policy briefings and think tank pieces on AI safety).

Do you have a model for maintaining "regulatory capture" in a sustained way, despite having no economic, political, or military power by which to enforce it?

No. Almost by definition, humans must sustain some economic or political power over machines to avoid extinction. The healthy parts of the healthcare industry are an area where humans currently have some terminal influence, as its end consumers. I would like to sustain that. As my post implies, I think humanity has around a 15% chance of succeeding in that, because I think we have around an 85% chance of all being dead by 2050. That 15% is what I am most motivated to work to increase and/or prevent decreasing, because other futures do not have me or my human friends or family or the rest of humanity in them.

Most of my models for how we might go extinct in next decade from loss of control scenarios require the kinds of technological advancement which make "industrial dehumanization" redundant,

Mine too, when you restrict to the extinction occurring (finishing) in the next decade. But the post also covers extinction events that don't finish (with all humans dead) until 2050, even if they are initiated (become inevitable) well before then. From the post:

First, I think there's around a 35% chance that humanity will lose control of one of the first few AGI systems we develop, in a manner that leads to our extinction. Most (80%) of this probability (i.e., 28%) lies between now and 2030. In other words, I think there's around a 28% chance that between now and 2030, certain AI developments will "seal our fate" in the sense of guaranteeing our extinction over a relatively short period of time thereafter, with all humans dead before 2040.

[...]

Aside from the ~35% chance of extinction we face from the initial development of AGI, I believe we face an additional 50% chance that humanity will gradually cede control of the Earth to AGI after it's developed, in a manner that leads to our extinction through any number of effects including pollution, resource depletion, armed conflict, or all three. I think most (80%) of this probability (i.e., 40%) lies between 2030 and 2040, with the death of the last surviving humans occurring sometime between 2040 and 2050. This process would most likely involve a gradual automation of industries that are together sufficient to fully sustain a non-human economy, which in turn leads to the death of humanity.

If I intersect this immediately preceding narrative with the condition "all humans dead by 2035", I think that most likely occurs via (a)-type scenarios (loss of control), including (c) (loss of control leading to industrial dehumanization), rather than (b) (successionism leading to industrial dehumanization).

I very much agree with human flourishing as the main value I most want AI technologies to pursue and be used to pursue.

In that framing, my key claim is that in practice no area of purely technical AI research (including "safety" and/or "alignment" research) can be adequately checked for whether it will help or hinder human flourishing, without a social model of how the resulting technologies will be used by individuals / businesses / governments / etc.

I may be missing context here, but as written / taken at face value, I strongly agree with the above comment from Richard. I often disagree with Richard about alignment and its role in the future of AI, but this comment is an extremely dense list of things I agree with regarding rationalist epistemic culture.

I'm afraid I'm sceptical that your methodology licenses the conclusions you draw.

Thanks for raising this. It's one of the reasons I spelled out my methodology, to the extent that I had one. You're right that, as I said, my methodology explicitly asks people to pay attention to the internal structure of what they were experiencing in themselves and calling consciousness, and to describe it on a process level. Personally I'm confident that whatever people are managing to refer to by "consciousness" is a process that runs on matter. If you're not confident of that, then you shouldn't be confident in my conclusion, because my methodology was premised on that assumption.

Of course people differ with respect to intuitions about the structure of consciousness.

Why do you say "of course" here? It could have turned out that people were all referring to the same structure, and their subjective sense of its presence would have aligned. That turned out not to be the case.

But the structure is not the typical referent of the word 'conscious',

I disagree with this claim. Consciousness is almost certainly a process that runs on matter, in the brain. Moreover, the belief that "consciousness exists", whatever that means, is almost always derived from some first-person sense of awareness of that process, whatever it is. In my investigations, I asked people to attend to the process they were referring to, and describe it. As far as I can tell, they usually described pretty coherent things that were (almost certainly) actually happening inside their minds. This raises a question: why is the same word used to refer to these many different subjective experiences of processes that are almost certainly physically real, and distinct, in the brain?

The standard explanation is that they're all facets or failed descriptions of some other elusive "thing" called "consciousness", which is somehow perpetually elusive and hard for scientists to discover. I'm rejecting that explanation, in favor of a simpler one: consciousness is a word that people use to refer to mental processes that they consider intrinsically valuable upon introspective observation, so they agree with each other when they say "consciousness is valuable" and disagree with each other when they say "the mental process I'm calling conscious consists of {details}". The "hard problem of consciousness" is the problem of resolving a linguistic dispute disguised as an ontological one, where people agree on the normative properties of consciousness (it's valuable) but not on its descriptive properties (its nature as a process/pattern.)

the first-person, phenomenal character of experience itself is.

I agree that the first-person experience of consciousness is how people are convinced that something they call consciousness exists. Usually when a person experiences something, like an image or a sound, they can describe the structure of the thing they're experiencing. So I just asked them to describe the structure they were experiencing and calling "consciousness", and got different — coherent — answers from different people. The fact that their answers were coherent, and seemed to correspond to processes that almost certainly actually exist in the human mind/brain, convinced me to just believe them that they were detecting something real and managing to refer to it through introspection, rather than assuming they were all somehow wrong and failing to describe some deeper more elusive thing that was beyond their experience.

I totally agree with the potential for confusion here!

My read is that the LessWrong community has too low of a prior on social norms being about membranes (e.g., when, how, and how not to cross various socially constructed information membranes). Using the term "boundaries" raises the prior on the hypothesis "social norms are often about boundaries", which I endorse and was intentional on my part, specifically for the benefit of the LessWrong readership base (especially the EA community) who seemed to pay too little attention to the importance of «boundaries», for many senses of "too little". I wrote about that in Part 2 of the sequence, here: https://www.lesswrong.com/posts/vnJ5grhqhBmPTQCQh/boundaries-part-2-trends-in-ea-s-handling-of-boundaries

When a confusion between "social norms" and "boundaries" exists, like you I also often fall back on another term like "membrane", "information barrier", or "causal separation". But I also have some hope of improving Western discourse more broadly, by replacing the conflation "social norms are boundaries" with the more nuanced observation "social norms are often about when, how, how not, and when not to cross a boundary".

Nice catch! Now replaced by 'deliberate'.

Thanks for sharing this! Because of strong memetic selection pressures, I was worried I might be literally the only person posting on this platform with that opinion.

FWIW I think you needn't update too hard on signatories absent from the FLI open letter (but update positively on people who did sign). Statements about AI risk are notoriously hard to agree on for a mix of political reasons. I do expect lab leads to eventually find a way of expressing more concerns about risks in light of recent tech, at least before the end of this year. Please feel free to call me "wrong" about this at the end of 2023 if things don't turn out that way.

Do you have a success story for how humanity can avoid this outcome? For example what set of technical and/or social problems do you think need to be solved? (I skimmed some of your past posts and didn't find an obvious place where you talked about this.)

I do not, but thanks for asking. To give a best efforts response nonetheless:

David Dalrymple's Open Agency Architecture is probably the best I've seen in terms of a comprehensive statement of what's needed technically, but it would need to be combined with global regulations limiting compute expenditures in various ways, including record-keeping and audits on compute usage. I wrote a little about the auditing aspect with some co-authors, here

https://cset.georgetown.edu/article/compute-accounting-principles-can-help-reduce-ai-risks/

... and was pleased to see Jason Matheny advocating from RAND that compute expenditure thresholds should be used to trigger regulatory oversight, here:

https://www.rand.org/content/dam/rand/pubs/testimonies/CTA2700/CTA2723-1/RAND_CTA2723-1.pdf

My best guess at what's needed is a comprehensive global regulatory framework or social norm encompassing all manner of compute expenditures, including compute expenditures from human brains and emulations but giving them special treatment. More specifically-but-less-probably, what's needed is some kind of unification of information theory + computational complexity + thermodynamics that's enough to specify quantitative thresholds allowing humans to be free-to-think-and-use-AI-yet-unable-to-destroy-civilization-as-a-whole, in a form that's sufficiently broadly agreeable to be sufficiently broadly adopted to enable continual collective bargaining for the enforceable protection of human rights, freedoms, and existential safety.

That said, it's a guess, and not an optimistic one, which is why I said "I do not, but thanks for asking."

It confuses me that you say "good" and "bullish" about processes that you think will lead to ~80% probability of extinction. (Presumably you think democratic processes will continue to operate in most future timelines but fail to prevent extinction, right?) Is it just that the alternatives are even worse?

Yes, and specifically worse even in terms of probability of human extinction.

That is, norms do seem feasible to figure out, but not the kind of thing that is relevant right now, unfortunately.

From the OP:

for most real-world-prevalent perspectives on AI alignment, safety, and existential safety, acausal considerations are not particularly dominant [...]. In particular, I do not think acausal normalcy provides a solution to existential safety, nor does it undermine the importance of existential safety in some surprising way.

I.e., I agree.

we are so unprepared that the existing primordial norms are unlikely to matter for the process of settling our realm into a new equilibrium.

I also agree with that, as a statement about how we normal-everyday-humans seem quite likely to destroy ourselves with AI fairly soon. From the OP:

I strongly suspect that acausal norms are not so compelling that AI technologies would automatically discover and obey them. So, if your aim in reading this post was to find a comprehensive solution to AI safety, I'm sorry to say I don't think you will find it here.

For 18 examples, just think of 3 common everyday norms having to do with each of the 6 boundaries given as example images in the post :) (I.e., cell membranes, skin, fences, social group boundaries, internet firewalls, and national borders). Each norm has the property that, when you reflect on it, it's easy to imagine a lot of other people also reflecting on the same norm, because of the salience of the non-subjectively-defined actual-boundary-thing that the norm is about. That creates more of a Schelling-nature for that norm, relative to other norms, as I've argued somewhat in my «Boundaries» sequence.

Spelling out such examples more carefully in terms of the recursion described in 1 and 2 just prior is something I've been planning for a future post, so I will take this comment as encouragement to write it!

To your first question, I'm not sure which particular "the reason" would be most helpful to convey. (To contrast: what's "the reason" that physically dispersed human societies have laws? Answer: there's a confluence of reasons.). However, I'll try to point out some things that might be helpful to attend to.

First, committing to a policy that merges your utility function with someone else's is quite a vulnerable maneuver, with a lot of boundary-setting aspects. For instance, will you merge utility functions multiplicatively (as in Nash bargaining), linearly (as in Harsanyi's utility aggregation theorem), or some other way? Also, what if the entity you're merging with has self-modified to become a "utility monster" (an entity with strongly exaggerated preferences) so as to exploit the merging procedure? Some kind of boundary-setting is needed to decide whether, how, and how much to merge, which is one of the reasons why I think boundary-handling is more fundamental than utility-handling.
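As a rough illustration of why the merging rule matters, here is a toy comparison of linear (Harsanyi-style) and multiplicative (Nash-style) aggregation when one party rescales its reported utilities. The options, payoffs, disagreement point of zero, and function names are all hypothetical, and the sketch covers only the rescaling form of the "utility monster" move.

```python
import math

def linear_merge(utilities):
    """Harsanyi-style aggregation: equal-weight sum of individual utilities."""
    return sum(utilities)

def nash_merge(utilities, disagreement):
    """Nash-style aggregation: product of gains over the disagreement point."""
    return math.prod(u - d for u, d in zip(utilities, disagreement))

# Hypothetical outcomes: (utility to agent 1, utility to agent 2).
options = {
    "favor_1": (6.0, 1.0),
    "compromise": (3.0, 3.0),
    "favor_2": (1.0, 4.0),
}

def pick(scale_2, rule):
    # Agent 2 reports its utilities multiplied by scale_2 (the exaggeration).
    reported = {name: (u1, scale_2 * u2) for name, (u1, u2) in options.items()}
    if rule == "linear":
        return max(reported, key=lambda n: linear_merge(reported[n]))
    return max(reported, key=lambda n: nash_merge(reported[n], (0.0, 0.0)))

for scale in (1.0, 10.0):
    print(scale, pick(scale, "linear"), pick(scale, "nash"))
```

Under the linear rule the chosen option flips to agent 2's favorite once agent 2 exaggerates by a factor of ten, while the product rule picks the same option at either scale. That invariance covers only positive rescaling; other forms of manipulation would still need some kind of boundary-setting.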

Relatedly, Scott Garrabrant has pointed out in his sequence on geometric rationality that linear aggregation is more like not-having-a-boundary, and multiplicative aggregation is more like having-a-boundary:

https://www.lesswrong.com/posts/rc5ZKGjXTHs7wPjop/geometric-exploration-arithmetic-exploitation#The_AM_GM_Boundary

I view this as further pointing away from "just aggregate utilities" and toward "one needs to think about boundaries when aggregating beings" (see Part 1 of my Boundaries sequence). In other words, one needs (or implicitly assumes) some kind of norm about how and when to manage boundaries between utility functions, even in abstract utility-function-merging operations where the boundary issues come down to where to draw parentheses in between additive and multiplicative operations. Thus, boundary-management is somewhat more fundamental than, or conceptually upstream of, principles that might pick out a global utility function for the entirety of the "acausal society".

(Even if there is a global utility function that turns out to be very simple to write down, the process of verifying its agreeability will involve checking a lot of boundary-interactions. For instance, one must check that this hypothetical reigning global utility function is not dethroned by some union of civilizations who successfully merge in opposition to it, which is a question of boundary-handling.)
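The point about where to draw parentheses can be seen in a tiny numeric sketch. The three utilities are hypothetical, and reading the arithmetic mean as "no boundary" and the geometric mean as "boundary" follows the framing attributed to Garrabrant above rather than anything the code itself establishes.

```python
import math

def arithmetic(*xs):
    # Arithmetic mean: the additive, "no boundary" style of aggregation.
    return sum(xs) / len(xs)

def geometric(*xs):
    # Geometric mean: the multiplicative, "boundary" style of aggregation.
    return math.prod(xs) ** (1.0 / len(xs))

# Hypothetical utilities for three beings under some fixed outcome.
a, b, c = 9.0, 1.0, 4.0

# Same numbers, same two operations, different placement of parentheses.
pool_ab_first = geometric(arithmetic(a, b), c)   # no boundary between a and b
pool_bc_last = arithmetic(a, geometric(b, c))    # boundary between b and c

print(pool_ab_first, pool_bc_last)
```

The two groupings give different aggregate values, so choosing a grouping is already a decision about which boundaries to respect.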

This is cool (and fwiw to other readers) correct. I must reflect on what it means for real world cooperation... I especially like the A <-> []X -> [][]X <-> []A trick.

I'm working on it :) At this point what I think is true is the following:

If ShortProof(x \leftrightarrow LongProof(ShortProof(x) \to x)), then MediumProof(x).

Apologies that I haven't written out calculations very precisely yet, but since you asked, that's roughly where I'm at :)

Actually the interpretation of \Box_E as its own proof system only requires the other systems to be finite extensions of PA, but I should mention that requirement! Nonetheless even if they're not finite, everything still works because \Box_E still satisfies necessitation, distributivity, and existence of modal fixed points.
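For readers who want the three properties spelled out, here is how they are usually stated in provability logic, written for \Box_E. This is the standard textbook form, offered as an assumption about what is meant here; A, B, \varphi, and \psi are placeholder formulas.

```latex
% Necessitation: whatever is provable is provably provable.
\vdash A \;\Longrightarrow\; \vdash \Box_E A

% Distributivity: the box respects modus ponens.
\vdash \Box_E (A \to B) \to (\Box_E A \to \Box_E B)

% Modal fixed points: for every formula \varphi(p) in which p occurs only
% inside the scope of \Box_E, there is a sentence \psi such that
\vdash \psi \leftrightarrow \varphi(\psi)
```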

Thanks for bringing this up.

Based on a potential misreading of this post, I added the following caveat today:

Important Caveat: Arguments in natural language are basically never "theorems". The main reason is that human thinking isn't perfectly rational in virtually any precisely defined sense, so sometimes the hypotheses of an argument can hold while its conclusion remains unconvincing. Thus, the Löbian argument pattern of this post does not constitute a "theorem" about real-world humans: even when the hypotheses of the argument hold, the argument will not always play out like clockwork in the minds of real people. Nonetheless, Löb's-Theorem-like arguments can play out relatively simply in the English language, and this post shows what that would look like.

Thanks! Added a note to the OP explaining that hereby means "by this utterance".

Hat tip to Ben Pace for pointing out that invitations are often self-referential, such as when people say "You are hereby invited", because "hereby" means "by this utterance":

https://www.lesswrong.com/posts/rrpnEDpLPxsmmsLzs/open-technical-problem-a-quinean-proof-of-loeb-s-theorem-for?commentId=CFvfaWGzJjnMP8FCa

That comment was like 25% of my inspiration for this post :)

I've now fleshed out the notation section to elaborate on this a bit. Is it better now?

In short, $\vdash$ is our symbol for talking about what PA can prove, and $\Box$ is shorthand for PA's symbols for talking about what (a copy of) PA can prove.

- "$\vdash 1+1=2$" means "Peano Arithmetic (PA) can prove that 1+1=2". No parentheses are needed; the "$\vdash$" applies to the whole line that follows it. Also, $\vdash$ does not stand for an expression in PA; it's a symbol we use to talk about what PA can prove.

- "$\Box(1+1=2)$" basically means the same thing. More precisely, it stands for a numerical expression within PA that can be translated as saying "$\vdash 1+1=2$". This translation is possible because of something called a Gödel numbering which allows PA to talk about a (numerically encoded version of) itself.

- "$\Box C$" is short for "$\Box(C)$" in cases where "$C$" is just a single character of text.

- "$X \vdash Y$" means "PA, along with X as an additional axiom/assumption, can prove Y". In this post we don't have any analogous notation for $\Box$.

Well, the deduction theorem is a fact about PA (and, propositional logic), so it's okay to use as long as $\vdash$ means "PA can prove".

But you're right that it doesn't mix seamlessly with the (outer) necessitation rule. Necessitation is a property of "$\vdash$", but not generally a property of "$X \vdash$". When PA can prove something, it can prove that it can prove it. By contrast, if PA+X can prove Y, that does mean that PA can prove that PA+X can prove Y (because PA alone can work through proofs in a Gödel encoding), but it doesn't mean that PA+X can prove that PA can prove Y. This can be seen by example, by setting [...].

As for the case where you want to refer to K or S5 instead of PA provability, those logics are still built on propositional logic, for which the deduction theorem does hold. So if you do the deduction only using propositional logic from theorems in $\vdash$ along with an additional assumption X, then the deduction theorem applies. In particular, inner necessitation and box distributivity are both theorems of $\vdash$ for every $A$ and $B$ you stick into them (rather than meta theorems about $\vdash$, which is what necessitation is). So the application of the deduction theorem here is still valid.

Still, the deduction theorem isn't safe to just use willy nilly along with the (outer) necessitation rule, so I've just added a caveat about that:

Note that from $X \vdash Y$ we cannot conclude $X \vdash \Box Y$, because $\Box$ still means "PA can prove", and not "PA+X can prove".
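The interaction described in this comment can be laid out side by side. The symbols are an assumption on my part: \vdash for "PA can prove", X \vdash Y for "PA plus X can prove Y", and \Box for PA's internal provability predicate. The rules themselves are the standard ones.

```latex
% Deduction theorem (holds for PA):
X \vdash Y \iff \vdash X \to Y

% Outer necessitation (a meta-level rule about \vdash):
\vdash Y \;\Longrightarrow\; \vdash \Box Y

% Inner necessitation (a theorem of PA for each Y):
\vdash \Box Y \to \Box\Box Y

% What does follow from X \vdash Y (deduction theorem, then necessitation):
X \vdash Y \;\Longrightarrow\; \vdash \Box (X \to Y)

% What does not follow in general:
X \vdash Y \;\not\Longrightarrow\; X \vdash \Box Y
```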

Thanks for calling this out.

Well, $A \to B$ is just short for $\neg A \vee B$, i.e., "(not A) or B". By contrast, $A \vdash B$ means that there exists a sequence of (very mechanical) applications of modus ponens, starting from the axioms of Peano Arithmetic (PA) with $A$ appended, ending in $B$. We tried hard to make the rules of $\vdash$ so that it would agree with $\to$ in a lot of cases (i.e., we tried to design $\vdash$ to make the deduction theorem true), but it took a lot of work in the design of Peano Arithmetic and can't be taken for granted.

For instance, consider the statement $\Box \bot \to \bot$. If you believe Peano Arithmetic is consistent, then you believe that $\neg \Box \bot$, and therefore you also believe that $\Box \bot \to \bot$. But PA cannot prove that $\neg \Box \bot$ (by Gödel's Theorem, or Löb's theorem with $C = \bot$), so we don't have $\vdash \Box \bot \to \bot$.

It's true that the deduction theorem is not needed, as in the Wikipedia proof. I just like using the deduction theorem because I find it intuitive (assume $A$, prove $B$, then drop the assumption and conclude $A \to B$) and it removes the need for lots of parentheses everywhere.

I'll add a note about the meaning of $\vdash$ so folks don't need to look it up, thanks for the feedback!

It did not get across! Interesting. Procedurally I still object to calling people's arguments "crazy", but selfishly I guess I'm glad they were not my arguments? At a meta level though I'm still concerned that LessWrong culture is too quick to write off views as "crazy". Even the "coordination is delusional"-type views that Katja highlights in her post do not seem "crazy" to me, more like misguided or scarred or something, in a way that warrants a closer look but not being called "crazy".

Oliver, see also this comment; I tried to @ you on it, but I don't think LessWrong has that functionality?

Separately from my other reply explaining that you are not the source of what I'm complaining about here, I thought I'd add more color to explain why I think my assessment here is not "hyperbolic". Specifically, regarding your claim that reducing AI x-risk through coordination is "not only fine to suggest, but completely uncontroversial accepted wisdom", please see the OP. Perhaps you have not witnessed such conversations yourself, but I have been party to many of these:

Some people: AI might kill everyone. We should design a godlike super-AI of perfect goodness to prevent that.

Others: wow that sounds extremely ambitious

Some people: yeah but it’s very important and also we are extremely smart so idk it could work

[Work on it for a decade and a half]

Some people: ok that’s pretty hard, we give up

Others: oh huh shouldn’t we maybe try to stop the building of this dangerous AI?

Some people: hmm, that would involve coordinating numerous people—we may be arrogant enough to think that we might build a god-machine that can take over the world and remake it as a paradise, but we aren’t delusional

In other words, I've seen people in AI governance being called or treated as "delusional" by loads of people (1-2 dozen?) core to the LessWrong community (not you). I wouldn't say by a majority, but by an influential minority to say the least, and by more people than would be fair to call "just institution X" for any X, or "just person Y and their friends" for any Y. The pattern is strong enough that for me, pointing to governance as an approach to existential safety on LessWrong indeed feels fraught, because influential people (online or offline) will respond to the idea as "delusional" as Katja puts it. Being called delusional is stressful, and hence "fraught".

@Oliver, the same goes for your way of referring to sentences you disagree with as "crazy", such as here.

Generally speaking, on the LessWrong blog itself I've observed too many instances of people using insults in response to dissenting views on the epistemic health of the LessWrong community, and receiving applause and karma for doing so, for me to think that there's not a pattern or problem here.

That's not to say I think LessWrong has this problem worse than other online communities (i.e., using insults or treating people as 'crazy' or 'delusional' for dissenting or questioning the status quo); only that I think it's a problem worth addressing, and a problem I see strongly at play on the topic of coordination and governance.

Thanks, Oliver. The biggest update for me here — which made your entire comment worth reading, for me — was that you said this:

I also think it's really not true that coordination has been "fraught to even suggest".

I'm surprised that you think that, but have updated on your statement at face value that you in fact do. By contrast, my experience around a bunch of common acquaintances of ours has been much the same as Katja's, like this:

Some people: AI might kill everyone. We should design a godlike super-AI of perfect goodness to prevent that.

Others: wow that sounds extremely ambitious

Some people: yeah but it’s very important and also we are extremely smart so idk it could work

[Work on it for a decade and a half]

Some people: ok that’s pretty hard, we give up

Others: oh huh shouldn’t we maybe try to stop the building of this dangerous AI?

Some people: hmm, that would involve coordinating numerous people—we may be arrogant enough to think that we might build a god-machine that can take over the world and remake it as a paradise, but we aren’t delusional

In fact I think I may have even heard the word "delusional" specifically applied to people working on AI governance (though not by you) for thinking that coordination on AI regulation is possible / valuable / worth pursuing in service of existential safety.

As for the rest of your narrative of what's been happening in the world, to me it seems like a random mix of statements that are clearly correct (e.g., trying to coordinate with people who don't care about honesty or integrity will get you screwed) and other statements that seem, as you say,

pretty crazy to me,

and I agree that for the purpose of syncing world models,

I don't [think] this comment thread is the right context.

Anyway, cheers for giving me some insight into your thinking here.

This makes sense to me if you feel my comment is meant as a description of you or people-like-you. It is not, and quite the opposite. As I see it, you are not a representative member of the LessWrong community, or at least, not a representative source of the problem I'm trying to point at. For one thing, you are willing to work for OpenAI, which many (dozens of) LessWrong-adjacent people I've personally met would consider a betrayal of allegiance to "the community". Needless to say, the field of AI governance as it exists is not uncontroversially accepted by the people I am reacting to with the above complaint. In fact, I had you in mind as a person I wanted to defend by writing the complaint, because you're willing to engage and work full-time (seemingly) in good faith with people who do not share many of the most centrally held views of "the community" in question, be it LessWrong, Effective Altruism, or the rationality community.

If it felt otherwise to you, I apologize.

Katja, many thanks for writing this, and Oliver, thanks for this comment pointing out that everyday people are in fact worried about AI x-risk. Since around 2017 when I left MIRI to rejoin academia, I have been trying continually to point out that everyday people are able to easily understand the case for AI x-risk, and that it's incorrect to assume the existence of AI x-risk can only be understood by a very small and select group of people. My arguments have often been basically the same as yours here: in my case, informal conversations with Uber drivers, random academics, and people at random public social events. Plus, the argument is very simple: If things are smarter than us, they can outsmart us and cause us trouble. It's always seemed strange to say there's an "inferential gap" of substance here.

However, for some reason, the idea that people outside the LessWrong community might recognize the existence of AI x-risk — and therefore be worth coordinating with on the issue — has felt not only poorly received on LessWrong, but also fraught to even suggest. For instance, I tried to point it out in this previous post:

“Pivotal Act” Intentions: Negative Consequences and Fallacious Arguments

I wrote the following, targeting multiple LessWrong-adjacent groups in the EA/rationality communities who thought "pivotal acts" with AGI were the only sensible way to reduce AI x-risk:

In fact, before you get to AGI, your company will probably develop other surprising capabilities, and you can demonstrate those capabilities to neutral-but-influential outsiders who previously did not believe those capabilities were possible or concerning. In other words, outsiders can start to help you implement helpful regulatory ideas, rather than you planning to do it all on your own by force at the last minute using a super-powerful AI system.

That particular statement was very poorly received, with a 139-karma retort from John Wentworth arguing,

What exactly is the model by which some AI organization demonstrating AI capabilities will lead to world governments jointly preventing scary AI from being built, in a world which does not actually ban gain-of-function research?

I'm not sure what's going on here, but it seems to me like the idea of coordinating with "outsiders", or placing trust or hope in the judgement of "outsiders", has been a bit of a taboo here, and that arguments that outsiders are dumb or wrong or can't be trusted will reliably get a lot of cheering in the form of Karma.

Thankfully, it now also seems to me that perhaps the core LessWrong team has started to think that ideas from outsiders matter more to the LessWrong community's epistemics and/or ability to get things done than previously represented, such as by including material written outside LessWrong in the 2021 LessWrong review posted just a few weeks ago, for the first time:

https://www.lesswrong.com/posts/qCc7tm29Guhz6mtf7/the-lesswrong-2021-review-intellectual-circle-expansion

I consider this a move in a positive direction, but I am wondering if I can draw the LessWrong team's attention to a more serious trend here. @Oliver, @Raemon, @Ruby, and @Ben Pace, and others engaged in curating and fostering intellectual progress on LessWrong:

Could it be that the LessWrong community, or the EA community, or the rationality community, has systematically discounted the opinions and/or agency of people outside that community, in a way that has led the community to plan more drastic actions in the world than would otherwise be reasonable if outsiders of that community could also be counted upon to take reasonable actions?

This is a leading question, and my gut and deliberate reasoning have both been screaming "yes" at me for about 5 or 6 years straight, but I am trying to get you guys to take a fresh look at this hypothesis now, in question-form. Thanks in any case for considering it.

I agree this is a big factor, and might be the main pathway through which people end up believing what they believe. If I had to guess, I'd guess you're right.

E.g., if there's evidence E in favor of H and evidence E' against H, and the group is really into thinking about and talking about E as a topic, then the group will probably end up believing H too much.
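A toy sketch of that mechanism, under assumptions that are mine rather than anything established in this thread: evidence is summarized as log-likelihood ratios, and a group that keeps returning to E effectively counts it several times while counting E' once. The `posterior` helper, the evidence strengths, and the repeat count of 3 are all hypothetical.

```python
import math

def posterior(prior, log_lrs):
    """Posterior P(H) after adding log-likelihood ratios to the prior log-odds."""
    log_odds = math.log(prior / (1 - prior)) + sum(log_lrs)
    return 1 / (1 + math.exp(-log_odds))

# Hypothetical strengths: E favors H, and E' disfavors H equally strongly.
llr_E = math.log(3)         # E is 3x likelier under H
llr_E_prime = -math.log(3)  # E' is 3x likelier under not-H

# A balanced reasoner counts each piece of evidence once.
balanced = posterior(0.5, [llr_E, llr_E_prime])

# A group that keeps discussing E effectively counts it several times
# (3 here, an arbitrary choice), while E' gets counted once.
attention_biased = posterior(0.5, [llr_E] * 3 + [llr_E_prime])

print(balanced, attention_biased)
```

With these made-up numbers the balanced reasoner stays at even odds, while the attention-biased group ends up far more confident in H, even though nobody in the group ever saw evidence that the balanced reasoner lacked.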

I think it would be great if you or someone wrote a post about this (or whatever you meant by your comment) and pointed to some examples. I think the LessWrong community is somewhat plagued by attentional bias leading to collective epistemic blind spots. (Not necessarily more than other communities; just different blind spots.)

Ah, thanks for the correction! I've removed that statement about "integrity for consequentialists" now.

I've searched my memory for the past day or so, and I just wanted to confirm that the "ever" part of my previous message was not a hot take or exaggeration.

I'm not sure what to do about this. I am mulling.

This piece of news is the most depressing thing I've seen in AI since... I don't know, ever? It's not like the algorithms for doing this weren't lying around already. The depressing thing for me is that it was promoted as something to be proud of, with no regard for the framing implication that cooperative discourse exists primarily in service of forming alliances to exterminate enemies.

Thanks for raising this! I assume you're talking about this part?

They explore a pretty interesting set-up, but they don't avoid the narrowly-self-referential sentence Ψ:

So, I don't think their motivation was the same as mine. For me, the point of trying to use a quine is to try to get away from that sentence, to create a different perspective on the foundations for people that find that kind of sentence confusing, but who find self-referential documents less confusing. I added a section "Further meta-motivation (added Nov 26)" about this in the OP.

Noice :)

At this point I'm more interested in hashing out approaches that might actually conform to the motivation in the OP. Perhaps I'll come back to this discussion with you after I've spent a lot more time in a mode of searching for a positive result that fits with my motivation here. Meanwhile, thanks for thinking this over for a bit.

True! "Hereby" covers a solid contingent of self-referential sentences. I wonder if there's a "hereby" construction that would make the self-referential sentence Ψ (from the Wikipedia proof) more common-sense-meaningful to, say, lawyers.

this suggests that you're going to be hard-pressed to do any self-reference without routing through the normal machinery of löb's theorem, in the same way that it's hard to do recursion in the lambda calculus without routing through the Y combinator

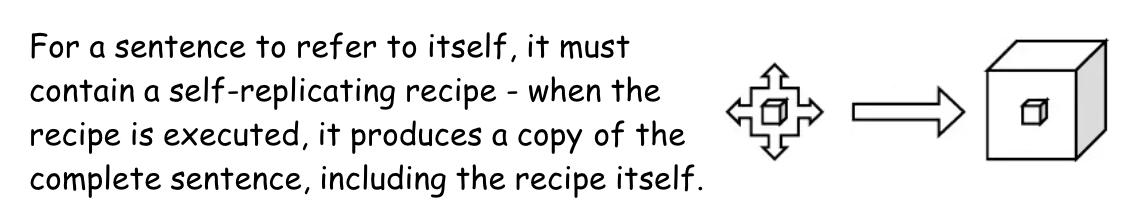

If by "the normal machinery", you mean a clever application of the diagonal lemma, then I agree. But I think we can get away with not having the self-referential sentence, by using the same y-combinator-like diagonal-lemma machinery to make a proof that refers to itself (instead of a proof about sentences that refer to themselves) and checks its own validity. I think if I or someone else produces a valid proof like that, skeptics of its value (of which you might be one; I'm not sure) will look at it and say "That was harder and less efficient than the usual way of proving Löb using the self-referential sentence and no self-validation". I predict I'll agree with that, and still find the new proof to be of additional intellectual value, for the following reason:

- Human documents tend to refer to themselves a lot, like bylaws.

- Human sentences, on the other hand, rarely refer to themselves. (This sentence is an exception, but there aren't a lot of naturally occurring examples.)

- Therefore, a proof of Löb whose main use of self-reference involves the entire proof referring to itself, rather than a single sentence referring to itself, will be more intuitive to humans (such as lawyers) who are used to thinking about self-referential documents.

The skeptic response to that will be to say that those people's intuitions are the wrong way to think about y-combinator manipulation, and to that I'll be like "Maybe, but I'm not super convinced their perspective is wrong, and in any case I don't mind meeting them where they're at, using a proof that they find more intuitive."

Summary: I'm pretty confident the proof will be valuable, even though I agree it will have to use much of the same machinery as the usual proof, plus some extra machinery for helping the proof to self-validate, as long as the proof doesn't use sentences that are basically only about their own meaning (the way the sentence Ψ is basically only about its own relationship to the sentence C, which is weird).

Thanks for your attention to this! The happy face is the outer box. So, line 3 of the cartoon proof is assumption 3.

If you want the full []([]C->C) to be inside a thought bubble, then just take every line of the cartoon and put it into a thought bubble, and I think that will do what you want.

LMK if this doesn't make sense; given the time you've spent thinking about this, you're probably my #1 target audience member for making the more intuitive proof (assuming it's possible, which I think it is).

ETA: You might have been asking if there should be a copy of Line 3 of the cartoon proof inside Line 1 of the cartoon proof. The goal is, yes, basically to have a compressed copy of line 3 inside line 1, like how the strings inside this Java quine are basically a 2x compressed copy of the whole program:

https://en.wikipedia.org/wiki/Quine_(computing)#Constructive_quines#:~:text=java

The last four lines of the Java quine are essentially instructions for duplicating the strings in a form that reconstructs the whole program.
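For readers who don't want to click through, here is a much smaller sketch of the same idea in Python rather than Java (so it is not the linked quine, just an instance of the same pattern). The names `template`, `quine_source`, and `run` are mine, introduced only to check the quine property: one string holds a compressed description of the program, and one instruction expands that string into the full source.

```python
import contextlib
import io

# The string is a compressed description of the whole two-line program:
# %r is the slot where the string's own quoted form gets pasted back in.
template = 's = %r\nprint(s %% s)'

# Expanding the template with itself yields the full source of the quine.
quine_source = template % template

def run(source):
    """Execute Python source and return whatever it prints."""
    buf = io.StringIO()
    with contextlib.redirect_stdout(buf):
        exec(source, {})
    return buf.getvalue()

# Running the quine prints its own source (plus print's trailing newline).
output = run(quine_source)
print(output == quine_source + "\n")
```

The analogy I have in mind is that `template` plays the role of the compressed copy inside line 1, and `s % s` plays the role of the instructions that unpack it into the whole thing.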

If we end up with a compressed proof like this, you might complain that the compression is being depicted as magical/non-reductive, and ask for a cartoon that breaks down into further detail showing how the Quinean compression works. However, I'll note that your cartoon guide did not break down the self-referential sentence L into a detailed cartoon form; you used natural language instead, and just illustrated vaguely that the sentence can unpack itself with this picture:

I would say the same picture could work for the proof, but with "sentence" replaced by "proof".

Yes to both of you on these points:

- Yes to Alex that (I think) you can use an already-in-hand proof of Löb to make the self-referential proof work, and

- Yes to Eliezer that that would be cheating, in that it wouldn't actually ground out all of the intuitions, because then the "santa clause"-like sentence is still in use in the already-in-hand proof of Löb.

(I'll write a separate comment on Eliezer's original question.)

That thing is hilarious and good! Thanks for sharing it. As for the relevance, it explains the statement of Gödel's theorem, but not the proof of it. So, it could be pretty straightforwardly reworked to explain the statement of Löb's theorem, but not so easily the proof of Löb's theorem. With this post, I'm in the business of trying to find a proof of Löb that's really intuitive/simple, rather than just a statement of it that's intuitive/simple.

Why is it unrealistic? Do you actually mean it's unrealistic that the set I've defined as "A" will be interpretable as "actions" in the usual coarse-grained sense? If so I think that's a topic for another post when I get into talking about the coarsened variables ...

Going further, my proposed convention also suggests that "Cartesian frames" should perhaps be renamed to "Cartesian factorizations", which I think is a more immediately interpretable name for what they are. Then in your equation , you can refer to and as "Cartesian factors", satisfying your desire to treat and as interchangeable. And, you leave open the possibility that the factors are derivable from a "Cartesian partition" of the world into the "Cartesian parts" and .

There is of course the problem that for some people "Cartesian" just means "factoring into coordinates" (e.g., "Cartesian plane"), in which case "Cartesian factorization" will sound a bit redundant, but for those people "Cartesian frame" is already not very elucidating.

Scott, thanks for writing this! While I very much agree with the distinctions being drawn, I think the word "boundary" should be usable for referring to factorizations that do not factor through the physical separation of the world into objects. In other words, I want the technical concept of «boundaries» that I'm developing to be able to refer to things like social boundaries, which are often not most-easily-expressed in the physics factorization of the world into particles (but are very often expressible as Markov blankets in a more abstract space, I claim).

Because of this, instead of using "boundary" only for partitions, and "frame" only for factorizations, I propose to instead just use "part"/"partition" for referring to partitions, and "factor"/"factorization" for referring to factorizations. E.g.,

- Cartesian partition:

- Cartesian factorization:

Otherwise, we are using up four words (partition, boundary, factorization, frame) to refer to two things (parts and factors).

Then, in my proposed language, a

- boundary factor is a factor in a factorization of state space that looks like this:

,

and a

- boundary part is a part in a partition of physical space that looks like this:

.

Overall, I suspect this language convention to be more expressive than what you are proposing.

What do you think?

Thanks, Scott!

I think the boundary factorization into active and passive is wrong.

Are you sure? The informal description I gave for A and P allows for the active boundary to be a bit passive and the passive boundary to be a bit active. From the post:

the active boundary, A — the features or parts of the boundary primarily controlled by the viscera, interpretable as "actions" of the system— and the passive boundary, P — the features or parts of the boundary primarily controlled by the environment, interpretable as "perceptions" of the system.

There's a question of how to factor B into a zillion fine-grained features in the first place, but given such a factorization, I think we can define A and P fairly straightforwardly using Shapley value to decide how much V versus E is controlling each feature, and then A and P won't overlap and will cover everything.
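To make that concrete, here is a minimal sketch, assuming a lot that the post does not specify. I'm treating V and E as two "players", and assuming a hypothetical characteristic function for each feature that says how much of the feature's variation is pinned down when a given coalition's state is fixed. The feature names, the numbers, and the tie-break toward A are all made up for illustration.

```python
def shapley_two_player(v):
    """Shapley values for players 'V' and 'E'.

    v maps each coalition (a frozenset of player names) to a number."""
    empty, V, E = frozenset(), frozenset({"V"}), frozenset({"E"})
    both = frozenset({"V", "E"})
    # Average each player's marginal contribution over both join orders.
    phi_V = 0.5 * (v[V] - v[empty]) + 0.5 * (v[both] - v[E])
    phi_E = 0.5 * (v[E] - v[empty]) + 0.5 * (v[both] - v[V])
    return phi_V, phi_E

def control(none, viscera, environment, both):
    """Hypothetical 'degree of control' values for one boundary feature."""
    return {
        frozenset(): none,
        frozenset({"V"}): viscera,
        frozenset({"E"}): environment,
        frozenset({"V", "E"}): both,
    }

# Made-up fine-grained features of the boundary B.
features = {
    "muscle_tension": control(0.0, 0.8, 0.1, 1.0),
    "retina_signal": control(0.0, 0.2, 0.7, 1.0),
}

A, P = set(), set()
for name, v in features.items():
    phi_V, phi_E = shapley_two_player(v)
    # Each feature lands in exactly one set; ties go to A (arbitrary choice).
    (A if phi_V >= phi_E else P).add(name)

print(A, P)
```

Because every feature is assigned to exactly one of the two sets, A and P don't overlap and together cover B, while each feature can still be partly controlled by the "other side".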

Thanks, fixed!

Cool! This was very much in line with the kind of update I was aiming for here, cheers :)