Power dynamics as a blind spot or blurry spot in our collective world-modeling, especially around AI

post by Andrew_Critch · 2021-06-01T18:45:39.820Z · 26 comments


Where I’m coming from

***Epistemic status: personal experience***

In a number of prior posts, and in ARCHES, I’ve argued that more existential safety consideration is needed on the topic of multi-principal/multi-agent (multi/multi) dynamics among powerful AI systems.

In general, I have found it much more difficult to convince thinkers within and around LessWrong’s readership base to attend to multi/multi dynamics, as opposed to, say, convincing generally morally conscious AI researchers who are not (yet) closely associated with the effective altruism or rationality communities.

Because EA/rationality discourse is particularly concerned with maintaining good epistemic processes, I think it would be easy to conclude from this state of affairs that

- multi/multi dynamics are not important (because communities with great concern for epistemic process do not care about them much), and

- AI researchers who do care about multi/multi dynamics have “bad epistemics” (e.g., because they have been biased by institutionalized trends).

In fact, more than one LessWrong reader has taken these positions with me in private conversation, in good faith (I’m almost certain).

In this post, I wish to share an opposing concern: that the EA and rationality communities have become systematically biased to ignore multi/multi dynamics, and power dynamics more generally.

A history of systemic avoidance

***Epistemic status: self-evidently important considerations based on somewhat-publicly verifiable facts/trends.***

Our neglect of multi/multi dynamics has not been coincidental. For a time, influential thinkers in the rationality community intentionally avoided discussions of multi/multi dynamics, so as to avoid contributing to the sentiment that the development and use of AI technology would be driven by competitive (imperfectly cooperative) motives. (FWIW, I also did this sometimes.) The idea was that we — the rationality community — should avoid developing narratives that could provoke businesses and state leaders into worrying about whose values would be most represented in powerful AI systems, because that might lead them to go to war with each other, ideologically or physically.

Indeed, there was a time when this community — particularly the Singularity Institute — represented a significant share of public discourse on the future of AI technology, and it made sense to be thoughtful about how to use that influence. Eliezer recently wrote (in a semi-private group, but with permission to share):

> The vague sense of assumed common purpose, in the era of AGI-alignment thinking from before Musk, was a fragile equilibrium, one that I had to fight to support every time some wise fool sniffed and said "Friendly to who?". Maybe somebody much weaker than Elon Musk could and inevitably would have smashed that equilibrium with much less of a financial investment, reducing Musk's "counterfactual impact". Maybe I'm an optimistic fool for thinking that this axis didn't just go from 0%-in-practice to 0%-in-practice. But I am still inclined to consider people a little responsible for the thing that they seem to have proximally caused according to surface appearances. That vague sense of common purpose might have become stronger if it had been given more time to grow and be formalized, rather than being smashed.

That ship has now sailed. Perhaps it was right to worry that our narratives could trigger competition between states and companies, or perhaps the competitive dynamic was bound to emerge anyway and it was hubristic to think ourselves so important. Either way, carefully avoiding questions about multi/multi dynamics on LessWrong or The Alignment Forum will not turn back the clock. It will not trigger an OpenAI/DeepMind merger, nor unmake statements by the US or Chinese governments concerning the importance of AI technology. As such, it no longer makes sense to worry that “we” will accidentally trigger states or big companies to worry more-than-a-healthy-amount about who will control future AI systems, at least not simply by writing blog posts or papers about the issue.

Multi-stakeholder concerns as a distraction from x-risk

***Epistemic status: personal experience, probably experienced by many other readers***

I’ve also been frustrated many times by the experience of trying to point out that AI could be an existential threat to humanity, and being met with a response that side-steps the existential threat and says something like, “Yes, but who gets to decide how safe it should be?” or “Yes, but whose values will it serve if we do make it safe?”. This happened especially often in grad school, around 2010–2013, when talking to other grad students. The multi-stakeholder considerations were almost always raised in ways that seemed to systematically distract conversation away from x-risk, rather than as action-oriented considerations of how to assemble a multi-stakeholder solution to x-risk.

This led me to build up a kind of resistance toward people who wanted to ask questions about multi/multi dynamics. However, by 2015, I started to think that multi-stakeholder dynamics were going to play into x-risk, even at a technical scale. But when I point this out to other x-risk-concerned people, it often feels like I’m met with the same kind of immune response that I used to have toward people with multi-stakeholder concerns about AI technology.

Other than inertia resulting from this “immune” response, I think there may be other factors contributing to our collective blind spot around multi/multi dynamics, e.g., a collective aversion to politics.

Aversion to thinking about political forces

***Epistemic status: observation + speculation***

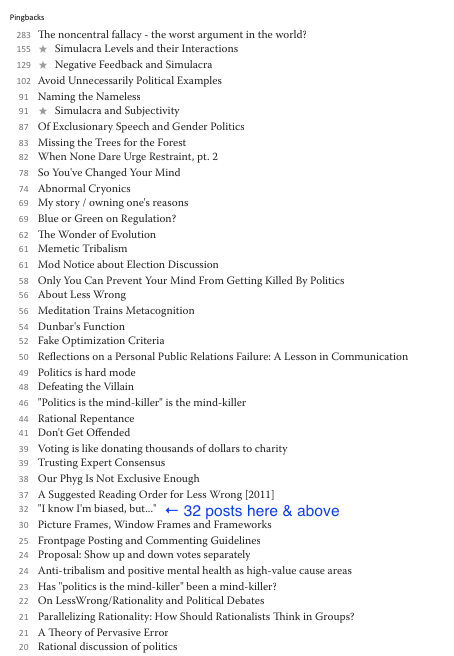

Politics is the Mind-Killer (PMK) is one of the most heavily quoted posts on LessWrong. By my count of the highly-rated posts that cite it, the post has a “LessWrong h-index” of 32, i.e., there are 32 posts citing it that are each rated 32 karma or higher.
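The “LessWrong h-index” applies the usual h-index definition to citing posts: the largest h such that at least h citing posts each have karma of at least h. A minimal Python sketch of that computation, assuming a plain list of karma scores for the citing posts (the function name and the scores below are illustrative, not the actual counts behind the figure of 32):

```python
def h_index(karma_scores):
    """Largest h such that at least h posts each have karma >= h."""
    # Rank posts from highest to lowest karma.
    ranked = sorted(karma_scores, reverse=True)
    h = 0
    for rank, karma in enumerate(ranked, start=1):
        # The post at position `rank` must itself have at least `rank` karma.
        if karma >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical data: 32 citing posts at 32 karma plus 10 posts at 31 karma.
print(h_index([32] * 32 + [31] * 10))  # 32
```

Under this definition, the 33rd-ranked post would need at least 33 karma to raise the index to 33, which is why adding many lower-karma citations leaves it unchanged.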

The PMK post does not directly advocate for readers to avoid thinking about politics; in fact, it says “I’m not saying that I think we should be apolitical, or even that we should adopt Wikipedia’s ideal of the Neutral Point of View.” However, it also begins with the statement “People go funny in the head when talking about politics”. If a person doubts their own ability not to “go funny in the head”, the PMK post — and concerns like it — could lead them to avoid thinking about or engaging with politics as a way of preventing themselves from “going funny”.

Furthermore, one can imagine this avoidance leading to an under-development of mental and social habits for thinking and communicating clearly about political issues, conceivably making the problem worse for some individuals or groups. This has been remarked on before, in the post “Has ‘politics is the mind-killer’ been a mind-killer?”.

Finally, these effects could easily combine to create a culture filter selecting heavily for people who dislike or find it difficult to interact with political forces in discourse.

***Epistemic status: personal experience***

The above considerations are reflective of my experience. For instance, at least five people that I know and respect as intellectuals within this community have shared with me that they find it difficult to think about topics that their friends or co-workers disagree with them about.

“Alignment” framings from MIRI’s formative years

***Epistemic status: publicly verifiable facts***

Aligning Superintelligence with Human Interests: A Technical Research Agenda (soares2015aligning) was written at a time when laying out precise research objectives was an important step in establishing MIRI as an institution focused primarily on research rather than movement-building (after re-branding from SingInst). The problem of aligning a single agent with a single principal is a conceptually simple starting point, and any good graduate education in mathematics will teach you that for the purpose of understanding something confusing, it’s always best to start with the simplest non-trivial example.

***Epistemic status: speculation***

Over time, friends and fans of MIRI may have over-defended this problem framing, in the course of defending MIRI itself as a fledgling research institution against social/political pressures to dismiss AI as a source of risk to humanity. For instance, back in 2015, folks like Andrew Ng were in the habit of publicly claiming that worrying about AGI was “like worrying about overpopulation on Mars” (The Register; Wired), so it was often more important to say “No, that doesn’t make sense, it’s possible for powerful AI systems to be misaligned with what we want” than to address the more nuanced issue that “Moreover, we’re going to want a lot of resilient socio-technical solutions to account for disagreements about how powerful systems should be used.”

Corrective influences from the MIRI meme-plex

***Epistemic status: publicly verifiable facts***

Not all influences from the MIRI-sphere have pushed us away from thinking about multi-stakeholder issues. For instance, Inadequate Equilibria (yudkowsky2017inadequate) is clearly an effort to help this community to think about multi-agent dynamics, which could help with thinking about politics. Reflective Oracles (fallenstein2015reflective) are another case of this, but in a highly technical context that probably (according-to-me: unfortunately) didn’t have much effect on broader rationality-community-discourse.

The “problems are only real when you can solve them” problem

***Epistemic status: personal experience / reflections***

It seems to me that many people tend to ignore a given problem until it becomes sufficiently plausible that the problem can be solved. Moreover, I think their «ignore the problem» mental operation often goes as far as «believing the problem doesn’t exist». I saw MIRI facing this for years when trying to point to AI friendliness or alignment as a problem. Frequently people would ask “But what would a solution look like?”, and absent a solution, they’d tag the problem as “not a real problem” rather than just “a difficult problem”.

I think the problem of developing AI tech to enable cooperation-rather-than-conflict is in a similar state right now. Open Problems in Cooperative AI (dafoe2020cooperative) is a good start at carving out well-defined problems, and I’m hoping a lot more work will follow in that vein.

Conclusion

***Epistemic status: personal reflections***

I think it’s important to consider the potential that a filter-bubble with a fair amount of inertia has formed as a result of our collective efforts to defend AI x-risk as a real and legitimate concern, and to consider what biases or weaknesses are most likely to now be present in that filter bubble. Personally, I think we’ve developed such a blind spot around technical issues with multi-principal/multi-agent AI interaction, but since Open Problems in Cooperative AI (dafoe2020cooperative), it might be starting to clear up. Similarly, aversion to political thinking may also be weakening our collective ability to understand, discuss, and reach consensus on the state of the political world, particularly surrounding AI.

26 comments


comment by paulfchristiano · 2021-06-01T23:41:34.298Z

> In this post, I wish to share an opposing concern: that the EA and rationality communities have become systematically biased to ignore multi/multi dynamics, and power dynamics more generally.

I feel like you are lumping together things like "bargaining in a world with many AIs representing diverse stakeholders" with things like "prioritizing actions on the basis of how they affect the balance of power." I would prefer to keep those things separate.

In the first category: it seems to me that the rationalist and EA communities think about AI-AI bargaining and costs from AI-AI competition much more than typical AI researchers do, as measured by e.g. fraction of time spent thinking about those problems, fraction of writing that is about those problems, fraction of stated research priorities that involve those problems, and so on. This is all despite outlier technical beliefs suggesting an unprecedentedly "unipolar" world during the most important parts of AI deployment (which I mostly disagree with).

To the extent that you disagree, I'd be curious to get your sense of the respective fractions, or what evidence leads you to think that the normal AI community thinks more about these issues.

It's a bit hard to do the comparison, but e.g. it looks to me like <2% of NeurIPS 2020 is about multi-AI scenarios (proceedings), while the fraction within the EA/rationalist community looks more like 10-20% to me: discussion about game theory amongst AIs, alignment schemes involving multiple principals, explicit protocols for reaching cooperative arrangements, explorations of bargaining solutions, AI designs that reduce the risk of bargaining failures, AI designs that can provide assurances to other organizations or divide execution, etc. I'm not sure what the number is but would be pretty surprised if you could slice up the EA/rationalist community in a way that put <10% on these categories. Beyond technical work, I think the EA/rationalist communities are nearly as interested in AI governance as they are in technical alignment work (way more governance interest than the broader AI community).

In the second category: I agree that the EA and rationalist communities spend less time on arguments about shifting the balance of power, and especially that they are less likely to prioritize actions on the basis of how they would shift the balance of power (rather than how they would improve humanity's collective knowledge or ability to tackle problems---including bargaining problems!).

For my part, this is an explicit decision to prioritize win-wins and especially reduction in the probability of x-risk scenarios where no one gets what they want. This is a somewhat unpopular perspective in the broader morally-conscious AI community. But it seems like "prioritizing win-wins" is mostly in line with what you are looking for out of multi-agent interactions (and so this brings us back to the first category, which I think is also an example of looking for win-win opportunities).

I think most of the biases you discuss are more directly relevant to the second category. For example, "Politics is the mind-killer" is mostly levied against doing politics, not thinking about politics as something that someone else might do (thereby destroy the world). Similarly, when people raise multi-stakeholder concerns as a way that we might end up not aligning ML systems (or cause other catastrophic risks), most people in the alignment community are quick to agree (and indeed they constantly make this argument themselves). They are more resistant when "who" is raised as a more important object-level question, by someone apparently eager to get started on the fighting.

↑ comment by Andrew_Critch · 2021-06-07T15:58:01.331Z

Sorry for the slow reply!

> I feel like you are lumping together things like "bargaining in a world with many AIs representing diverse stakeholders" with things like "prioritizing actions on the basis of how they affect the balance of power."

Yes, but not a crux for my point. I think this community has a blind/blurry spot around both of those things (compared to the most influential elites shaping the future of humanity). So, the thesis statement of the post does not hinge on this distinction, IMO.

> I would prefer to keep those things separate.

Yep, and in fact, for actually diagnosing the structure of the problem, I would prefer to subdivide further into three things and not just two:

1. Thinking about how power dynamics play out in the world;

2. Thinking about what actions are optimal*, given (1); and

3. Choosing optimal* actions, given (2) (which, as you say, can involve actions chosen to shift power balances).

My concern is that the causal factors laid out in this post have led to correctable weaknesses in all three of these areas. I'm more confident in the claim of weakness (relative to skilled thinkers in this area, not average thinkers) than any particular causal story for how the weakness formed. But, having drawn out the distinction among 1-3, I'll say that the following mechanism is probably at play in a bunch of people:

a) people are really afraid of being manipulated;

b) they see/experience (3) as an instance of being manipulated and therefore have strong fear reactions around it (or disgust, or anger, or anxiety);

c) their avoidance reactions around (3) generalize to avoiding (1) and (2).

d) point (c) compounds with the other causal factors in the OP to lead to too-much-avoidance-of-thought around power/bargaining.

There are of course specific people who are exceptions to this concern. And, I hear you that you do lots of (1) and (2) while choosing actions based on a version of optimal* defined in terms of "win-wins". (This is not an update for me, because I know that you personally think about bargaining dynamics.)

However, my concern is not about you specifically. Who exactly is it about, you might ask? I'd say "the average LessWrong reader" or "the average AI researcher interested in AI alignment".

> For example, "Politics is the mind-killer" is mostly levied against doing politics, not thinking about politics as something that someone else might do (thereby destroy the world).

Yes, but not a crux for the point I'm trying to make. I already noted in the post that PMK was not trying to make people avoid thinking about politics; in fact, I included a direct quote to that effect: "The PMK post does not directly advocate for readers to avoid thinking about politics; in fact, it says “I’m not saying that I think we should be apolitical, or even that we should adopt Wikipedia’s ideal of the Neutral Point of View.” " My concern, as noted in the post, is that the effects of PMK (rather than its intentions) may have been somewhat crippling:

> [OP] However, it also begins with the statement “People go funny in the head when talking about politics”. If a person doubts their own ability not to “go funny in the head”, the PMK post — and concerns like it — could lead them to avoid thinking about or engaging with politics as a way of preventing themselves from “going funny”.

I also agree with the following comparison you made to mainstream AI/ML, but it's also not a crux for the point I'm trying to make:

> it seems to me that the rationalist and EA communities think about AI-AI bargaining and costs from AI-AI competition much more than typical AI researchers do, as measured by e.g. fraction of time spent thinking about those problems, fraction of writing that is about those problems, fraction of stated research priorities that involve those problems, and so on. This is all despite outlier technical beliefs suggesting an unprecedentedly "unipolar" world during the most important parts of AI deployment (which I mostly disagree with).

My concern is not that our community is under-performing the average AI/ML researcher in thinking about the future — as you point out, we are clearly over-performing. Rather, the concern is that we are underperforming the forces that will actually shape the future, which are driven primarily by the most skilled people who are going around shifting the balance of power. Moreover, my read on this community is that it mostly exhibits a disdainful reaction to those skills, both in specific (e.g., if I called out specific people who have them) and in general (if I call them out in the abstract, as I have here).

Here's another way of laying out what I think is going on:

A = «anxiety/fear/disgust/anger around being manipulated»

B = «filter-bubbling around early EA narratives designed for institution-building, single, single-stakeholder AI alignment»

C = «commitment to the art-of-rationality/thinking»

S = «skill at thinking about power dynamics»

D = «disdain for people who exhibit S»

Effect 1: A increases C (this is healthy).

Effect 2: C increases S (which is probably why our community is out-performing mainstream AI/ML).

Effect 3: A decreases S in a bunch of people (not all people!), specifically, people who turn anxiety into avoidance-of-the-topic.

Effect 4: Effects 1-3 make it easy for a filter-bubble to form that ignores power dynamics among and around powerful AI systems and counts on single-stakeholder AI alignment to save the world with one big strong power-dynamic instead of a more efficient/nuanced wielding of power.

The solution to this problem is not to decrease C, from which we derive our strength, but to mitigate Effect 3 by getting A to be more calibrated / less triggered. Around AI specifically, this requires holding space in discourse for thinking and writing about power-dynamics in multi-stakeholder scenarios.
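To make the logic of that proposed fix concrete, here is a hypothetical linear toy model of Effects 1-3. Only the signs of the effects come from the list above; the linear form, the coefficient names, and their values are invented for illustration, and B, D, and Effect 4 are left out.

```python
def skill(anxiety, base_commitment=1.0, a_to_c=0.5, c_to_s=1.0, a_to_s=0.8):
    """Toy S (skill at thinking about power dynamics) as a function of A.

    All coefficients are illustrative assumptions, not estimates.
    """
    # Effect 1: A increases C (sign from the comment; magnitude assumed).
    commitment = base_commitment + a_to_c * anxiety
    # Effect 2: C increases S.  Effect 3: A decreases S.
    return c_to_s * commitment - a_to_s * anxiety


baseline = skill(anxiety=1.0)
less_commitment = skill(anxiety=1.0, base_commitment=0.5)  # "decrease C"
calibrated = skill(anxiety=1.0, a_to_s=0.2)                # "mitigate Effect 3"
print(f"{baseline:.2f} {less_commitment:.2f} {calibrated:.2f}")  # 0.70 0.20 1.30
```

In this toy, lowering C lowers S, while weakening Effect 3 raises it, which matches the qualitative conclusion above; it says nothing about the real sizes of these effects.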

Hopefully this correction can be made without significantly harming efforts to diversify highly focussed efforts on alignment, such as yours. E.g., I'm still hoping like 10 people will go work for you at ARC, as soon as you want to expand to that size, in large part because I know you personally think about bargaining dynamics and are mulling them over in the background while you address alignment. [Other readers: please consider this an endorsement to go work for Paul if he wants you on his team!]

↑ comment by paulfchristiano · 2021-06-08T00:29:53.974Z

Let's define:

- X = thinking about the dynamics of conflict + how they affect our collective ability to achieve things we all want; prioritizing actions based on those considerations

- Y = thinking about how actions shift the balance of power + how we should be trying to shift the balance of power; prioritizing actions based on those considerations

I'm saying:

- I think the alignment community traditionally avoids Y but does a lot of X.

- I think that the factors you listed (including in the parent) are mostly reasons we'd do less Y.

- So I read you as mostly making a case for "why the alignment community might be inappropriately averse to Y."

- I think that separating X and Y would make this discussion clearer.

- I'm personally sympathetic to both activities. I think the altruistic case for marginal X is stronger.

Here are some reasons I perceive you as mostly talking about Y rather than X:

- You write: "Rather, the concern is that we are underperforming the forces that will actually shape the future, which are driven primarily by the most skilled people who are going around shifting the balance of power." This seems like a good description of Y but not X.

- You listed "Competitive dynamics as a distraction from alignment." But in my experience, people from the alignment community very often bring up X themselves, both as a topic for research and as a justification for their research (suggesting that in fact they don't regard it as a distraction), and in my experience Y derails conversations about alignment perhaps 10x more often than X.

- You talk about the effects of the PMK post. Explicitly that post is mostly about Y rather than X and it is often brought up when someone starts Y-ing on LW. It may also have the effect of discouraging X, but I don't think you made the case for that.

- You mention the causal link from "fear of being manipulated" to "skill at thinking about power dynamics" which looks very plausible (to me) in the context of Y but looks like kind of a stretch (to me) in the context of X. You say "they find it difficult to think about topics that their friends or co-workers disagree with them about," which again is most relevant to Y (where people frequently disagree about who should have power or how important it is) and not particularly relevant to X (similar to other technical discussions).

- In your first section you quote Eliezer. But he's not complaining about people thinking about how fights go in a way that might disrupt a sense of shared purpose; he's complaining that Elon Musk is in fact making decisions in order to change which group gets power, in a way that more obviously disrupts any sense of shared purpose. This seems like complaining about Y, rather than X.

- More generally, my sense is that X involves thinking about politics and Y mostly is politics, and most of your arguments describe why people might be averse to doing politics rather than discussing it. Of course that can flow backwards (people who don't like doing something may also not like talking about it) but there's certainly a missing link.

- Relative to the broader community thinking about beneficial AI, the alignment community does unusually much X and unusually little Y. So prima facie it's more likely that "too little X+Y" is mostly about "too little Y" rather than "too little X." Similarly, when you list corrective influences they are about X rather than Y.

I care about this distinction because in my experience discussions about alignment of any kind (outside of this community) are under a lot of social pressure to turn into discussions about Y. In the broader academic/industry community it is becoming harder to resist those pressures.

I'm fine with lots of Y happening, I just really want to defend "get better at alignment" as a separate project that may require substantial investment. I'm concerned that equivocating between X and Y will make this difficulty worse, because many of the important divisions are between (alignment, X) vs (Y) rather than (alignment) vs (X, Y).

↑ comment by habryka (habryka4) · 2021-06-08T03:42:16.397Z

This also summarizes a lot of my take on this position. Thank you!

↑ comment by Ben Pace (Benito) · 2021-06-08T02:13:54.619Z

This felt like a pretty helpful clarification of your thoughts Paul, thx.

comment by Kaj_Sotala · 2021-06-02T15:56:21.142Z

> In this post, I wish to share an opposing concern: that the EA and rationality communities have become systematically biased to ignore multi/multi dynamics, and power dynamics more generally.

The EA and rationality communities tend to lean very strongly towards mistake rather than conflict theory. A topic that I've had in my mind for a while, but haven't gotten around to writing a full post about, is that both of them look like emotional defense strategies.

It looks to me like Scott's post is pointing towards, not actually different theories, but one's underlying cognitive-emotional disposition or behavioral strategy towards outgroups. Do you go with the disposition towards empathizing and assuming that others are basically good people that you can reason with, or with the disposition towards banding together with your allies and defending against a potential threat?

And at least in the extremes, both of them look like they have elements of an emotional defense. Mistake doesn't want to deal with the issue that some people you just can't reason with no matter how good your intentions, so it ignores that and attempts to solve all problems by dialogue and reasoning. (Also, many Mistake Theorists are just bad at dealing with conflict in general.) Conflict doesn't want to deal with the issue that often people who hurt you have understandable reasons for doing so and that they are often hurting too, so it ignores that and attempts to solve all problems by conflict.

If this model is true, then it also suggests that Mistake Theorists should also be systematically biased against the possibility of things like power dynamics being genuinely significant. If power dynamics are genuinely significant, then you might have to resolve things by conflict no matter how much you invest in dialogue and understanding, which is the exact scenario that Mistake is trying to desperately avoid.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-06-02T16:41:20.561Z

> ...Mistake Theorists should also be systematically biased towards the possibility of things like power dynamics being genuinely significant.

You meant to say, biased against that possibility?

↑ comment by Kaj_Sotala · 2021-06-02T17:00:50.835Z

Oops, yeah. Edited.

↑ comment by ryan_b · 2021-06-12T21:29:29.711Z

> Mistake Theorists should also be systematically biased against the possibility of things like power dynamics being genuinely significant

This surprises me, because it feels like the whole pitch for things like paperclip maximizers is a huge power imbalance where the more powerful party is making a mistake.

comment by paulfchristiano · 2021-06-02T18:18:57.529Z

Speaking for myself, I'm definitely excited about improving cooperation/bargaining/etc., and I think that working on technical problems could be a cost-effective way to help with that. I don't think it's obvious without really getting into the details whether this is more or less leveraged than technical alignment research. To the extent we disagree it's about particular claims/arguments and I don't think disagreements can be easily explained by a high-level aversion to political thinking.

(Clarifying in case I represent a meaningful part of the LW pushback, or in case other people are in a similar boat to me.)

comment by johnswentworth · 2021-06-02T04:38:35.853Z

Meta-note: I think the actual argument here is decent, but using the phrase "power dynamics" will correctly cause a bunch of people to dismiss it without reading the details. "Power", as political scientists use the term, is IMO something like a principal component which might have some statistical explanatory power, but is actively unhelpful for building gears-level models.

I would suggest instead the phrase "bargaining dynamics", which I think points to the gearsy parts of "power" while omitting the actively-unhelpful parts.

↑ comment by PaulK · 2021-06-04T19:55:52.204Z

(I assume that by "gears-level models" you mean a combination of reasoning about actors' concrete capabilities; and game-theory-style models of interaction where we can reach concrete conclusions? If so,)

I would turn this around, and say instead that "gears-level models" alone tend to not be that great for understanding how power works.

The problem is that power is partly recursive. For example, A may have power by virtue of being able to get B to do things for it, but B's willingness also depends on A's power. All actors, in parallel, are looking around, trying to understand the landscape of power and possibility, and making decisions based on their understanding, changing that landscape in turn. The resulting dynamics can just be incredibly complicated. Abstractions can come to have something almost like causal power, like a rumor starting a stampede.

We have formal tools for thinking about these kinds of things, like common knowledge, and game-theoretic equilibria. But my impression is that they're pretty far from being able to describe most important power dynamics in the world.
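One classic formal toy for the “rumor starting a stampede” point is Granovetter's threshold model of collective behavior. The comment above does not name it; it is swapped in here purely as an illustration. Each agent acts once at least its threshold number of others are acting, and the outcome is the fixed point of that feedback loop. A minimal Python sketch, with made-up thresholds:

```python
def cascade_size(thresholds):
    """Number of agents acting at the fixed point of a threshold model.

    An agent acts once the number of agents already acting is >= its
    threshold. Starting from zero and iterating reaches the smallest
    fixed point, because the count can only grow.
    """
    acting = 0
    while True:
        new_acting = sum(1 for t in thresholds if t <= acting)
        if new_acting == acting:
            return acting
        acting = new_acting


uniform = list(range(100))                # thresholds 0, 1, ..., 99
perturbed = [0, 2] + list(range(2, 100))  # the threshold-1 agent now needs 2
print(cascade_size(uniform), cascade_size(perturbed))  # 100 1
```

The two crowds differ in one agent's threshold, yet one ends with everyone acting and the other with a single person, because each agent's decision depends on everyone else's. That is a small, tractable instance of the recursive dynamics described above; whether such tools scale to most real power dynamics is exactly what this thread disputes.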

↑ comment by johnswentworth · 2021-06-23T17:08:07.884Z

I do not think they're far from being able to describe most important power dynamics, although one does need to go beyond game-theory-101. In particular, Schelling's work is key for things like "rumor starting a stampede" or abstractions having causal power, as well as properly understanding threats, the importance of risk tolerance, and various other aspects particularly relevant to bargaining dynamics.

comment by Pattern · 2021-06-02T02:01:26.241Z

any good graduate education in mathematics will teach you that for the purpose of understanding something confusing, it’s always best to start with the simplest non-trivial example.

While that comment is meant as a metaphor, I'd say it's always best to start with a trivial example. Seriously: take the number of dimensions d, turn it all the way down to 0* and 1, solve those cases, then draw a line all the way back to where you started and check whether it's right.

Reflective Oracles (fallenstein2015reflective) are another case of this, but in a highly technical context that probably (according-to-me: unfortunately) didn’t have much effect on broader rationality-community-discourse.

I haven't seen them mentioned in years.

*If zero is impossible, then do 1 and 2 instead.

comment by romeostevensit · 2021-06-02T02:05:33.667Z

A research review that I found incredibly helpful for bridging my understanding of technical topics and multi-agent cooperation in more practical contexts was this paper: Statistical physics of human cooperation. I'd be really excited about people engaging with this approach.

comment by Steven Byrnes (steve2152) · 2021-06-01T19:46:07.269Z

FWIW my "one guy's opinion" is (1) I'm expecting people to build goal-seeking AGIs, and I think by default their goals will be opaque and unstable and full of unpredictable distortions compared to whatever was intended, and solving this problem is necessary for a good future (details), (2) Figuring out how AGIs will be deployed and what they'll be used for in a complicated competitive human world is also a problem that needs to be solved to get a good future. I don't think either of these problems is close to being solved, or that they're likely to be solved "by default".

(I'm familiar with your argument that companies are incentivized to solve single-single alignment, and therefore it will be solved "by default", but I remain pessimistic, at least in the development scenario I'm thinking about; again see here.)

So I think (1) and (2) are both very important things that people should be working on right now. However, I think I might have some intelligent things to say about (1), whereas I have nothing intelligent to say about (2). So that's the main reason I'm working on (1). :-P I do wish you & others luck, and I've said that before; see e.g. section 10 here. :-)

comment by Mitchell_Porter · 2021-06-02T23:14:23.862Z

Trying to get the gist of this post... There's the broad sweep of AI research across the decades, up to our contemporary era of deep learning, AlphaGo, GPT-3. In the 2000s, the school of thought associated with Eliezer, MIRI, and Less Wrong came into being. It was a pioneer in AI safety, but its core philosophy of preparing for the first comprehensively superhuman AI remains idiosyncratic in a world focused on more specialized forms of AI.

There is a quote from Eliezer talking about "AI alignment" research, the part of AI safety concerned with AI (whether general or special) getting the right goals. Apparently the alignment research community was more collaborative before OpenAI and truly big money got involved, but now it's competitive and factional.

Part of the older culture of alignment research was a reluctance to talk about competitive scenarios. The fear was that research into alignment per se would be derailed by a focus on western values vs nonwestern values, one company rather than another, and so on. But this came to pass anyway, and the thesis of this post is that there should now be more attention given to politics and how to foster cooperation.

My thoughts... I don't know how much attention those topics should be given. But I do think it essential that someone keep trying to solve the problem of human-friendly general AI in a first-principles way... As I see it, the MIRI school of thought was born into a world that, at the level of civilizational trends, was already headed towards superhuman AI, and in an uncontrolled and unsafe way, and that has never stopped being the case.

In a world where numerous projects and research programs existed, that theoretically might cross the AI threshold unprepared, MIRI was a voice for planning ahead and doing it right, by figuring out how to do it right. For a while that was its distinctive quality, its "brand", in the AI world... Now it's a different time: AI and its applications are everywhere, and AI safety is an academic subdiscipline.

But for me, the big picture and the endgame is still the same. Technical progress occurs in an out-of-control way, the threshold of superhuman AI is still being approached on multiple fronts, and so while one can try to moderate the risks at a political or cultural level, the ultimate outcome still depends on whether or not the first project across the threshold is "safe" or "aligned" or "friendly".

comment by JenniferRM · 2021-06-03T23:38:34.380Z

I googled for mentions of [confidence building measures] on LessWrong and felt mildly saddened and surprised that there were so few, but happy that there were at least some. A confidence building measure (or "CBM") is a sort of "soft power" technique where people find ways to stay chill and friendly somewhat far away from possible battle lines, in order to make everyone more confident that grownups are in charge on both sides. They seem like they could help rule out large classes of failure modes, if they work as they claim to work "on the tin".

In the search, I found 5 hits: one from me in 2011 and another from me in 2017. Two mentions are "incidental" (in bibliographies), but there was a pretty solid, recent, and direct hit with [AN #146]: Plausible stories of how we might fail to avert an existential catastrophe, which points to a non-LessWrong text on CBMs. Quoting the core of AN #146 here:

This paper explores confidence-building measures (CBMs) as a way to reduce the negative effects of military AI use on international stability. CBMs were an important tool during the Cold War. However, as CBMs rely on a shared interest to succeed, their adoption has proven challenging in the context of cybersecurity, where the stakes of conflict are less clear than in the Cold War. The authors present a set of CBMs that could diminish risks from military use of AI and discuss their advantages and downsides. On the broad side, these include building norms around the military use of AI, dialogues between civil actors with expertise in the military use of AI from different countries, military to military dialogues, and code of conducts with multilateral support. On the more specific side, states could engage in public signalling of the importance of Test and Evaluation (T&E), transparency about T&E processes and push for international standards for military AI T&E. In addition, they could cooperate on civilian AI safety research, agree on specific rules to prevent accidental escalation (similar to the Incidents at Sea Agreement from the Cold War), clearly mark autonomous systems as such, and declare certain areas as off-limits for autonomous systems.

The paper itself appears to be authored by... maybe this Michael Horowitz and then this Paul Scharre seems like an excellent bet for the other author. A key feature here in my mind is that Horowitz appears to already have a functional enduring bipartisan stance that seems to have survived various US presidential administration changes? This is... actually kinda hopeful! :-)

(Edit: Oh wait. Hopefulness points half retracted? The "AI IR Michael Horowitz" is just a smart guy I think, and not specifically a person with direct formal relationships with the elected US govt officials. Hmm.)

There are specific ideas, backstory, phrases, and policy proposals in the article itself: AI and International Stability: Risks and Confidence-Building Measures. Here's a not-entirely-random but not especially critical part of the larger article:

States have long used established “rules of the road” to govern the interaction of military forces operating with a high degree of autonomy, such as at naval vessels at sea, and there may be similar value in such a CBM for interactions with AI-enabled autonomous systems. The 1972 Incidents at Sea Agreement and older “rules of the road” such as maritime prize law provide useful historical examples for how nations have managed analogous challenges in the past. Building on these historical examples, states could adopt a modern-day “international autonomous incidents agreement” that focuses on military applications of autonomous systems, especially in the air and maritime environments. Such an agreement could help reduce risks from accidental escalation by autonomous systems, as well as reduce ambiguity about the extent of human intention behind the behavior of autonomous systems.

I guess the thing I'd want to communicate is that it doesn't seem to be the case that there are literally ZERO competent grownups anywhere in the world, even though I sometimes feel like that might be the case when I look at things like the world's handling of covid. From what I can tell, the grownups that get good things done get in, apply competence to do helpful things, then bounce out pretty fast afterwards?

Another interesting aspect is that the letters "T&E", short for the "Testing & Evaluation" of "new AI capabilities", occur 31 times in the Horowitz & Scharre paper. They seem to want to put "T&E" processes into treaties and talks as a first-class object, basically?

States could take a variety of options to mitigate the risks of creating unnecessary incentives to shortcut test and evaluation, including publicly signaling the importance of T&E, increasing transparency about T&E processes, promoting international T&E standards, and sharing civilian research on AI safety.

Neither italics nor bold in original.

But still... like... wisdom tournament designs? In treaties? That doesn't seem super obviously terrible if we can come up with methods that would be good in principle and in practice.

comment by WalterL · 2021-06-04T01:35:39.395Z

This all feels so abstract. Like, what have we lost by having too much faith in the PMK article? If I buy what you are pitching, what action should I take to more properly examine 'multi-principal/multi-agent AI'? What are you looking for here?

comment by TekhneMakre · 2021-06-04T01:29:25.828Z

Somewhat relevant: Yudkowsky, Eliezer. 2004. Coherent Extrapolated Volition. https://intelligence.org/files/CEV.pdf

Gives one of the desiderata for CEV as "Avoid creating a motive for modern-day humans to fight over the initial dynamic".

comment by ryan_b · 2021-06-12T21:49:10.154Z

I strongly support this line of investigation.

That being said, I propose that avoiding multi/multi dynamics is the default for pretty much all research. By way of example, consider the ratio of Prisoner's Dilemma results to Stag Hunt results in game theory. We see this even in fields where there is consensus on the individual case, and we don't have that yet.

The generic explanation of "it is harder so fewer people do it" appears sufficient here.

reply by Noah Walton (noah-walton) · 2021-08-01T19:07:54.675Z

I read you as saying Stag Hunt is a multi/multi game. If I'm right, why?

reply by ryan_b · 2021-08-02T20:21:19.581Z

I don't, because as far as I understand it there is no principal/agent mechanism at work in a Stag Hunt. I can see I was powerfully vague though, so thank you for pointing that out via the question.

I was comparing Stag Hunt to the Prisoner's Dilemma, and the argument is this:

- Prisoner's Dilemma is one agent reasoning about another agent. This is simple, so there will be many papers on it.

- Stag Hunt is multiple agents reasoning about multiple agents. This is less simple, so there will be fewer papers, corresponding to the difference in difficulty.

- I expect the same to also apply to the transition from one principal and one agent to multiple principals and multiple agents.

Returning to the sufficiency claim: I think I weigh the "Alignment framings from MIRI's early years" arguments more heavily than Andrew does; I estimate that a mild over-commitment to the simplest-case-first norm, of approximately the same strength as the community's earlier over-commitment to modest epistemology, would be sufficient to explain the collective "ugh" response. It's worth noting that the LessWrong sector is the only one referenced that has much in the way of laypeople (which is to say, people like me) in it. I suspect that our presence in the community biases it more strongly towards simpler procedures, which leads me to put more weight on the over-commitment explanation.

That being said, my yeoman-community-member impressions of the anti-politics bias largely agree with Andrew's, even though I only read this website and some of the high-level discussion of papers and research agenda posts from MIRI/OpenAI/DeepMind/etc. My gut feeling says there should be a way to make multi/multi AI dynamics palatable for us despite this. For example, consider the popularity of posts surrounding Voting Theory, which are all explicitly political. Multi/multi dynamics are surely less political than that, I reason.

comment by Stuart_Armstrong · 2021-06-09T10:03:08.212Z

Thanks for writing this.

For myself, I know that power dynamics are important, but I've chosen to specialise down on the "solve technical alignment problem towards a single entity" and leave those multi-agent concerns to others (e.g. the GovAI part of the FHI), except when they ask for advice.

comment by Soul · 2021-06-13T14:57:38.818Z

If it’s commonly accepted that a fully realized AGI would be the most important invention ever, does it matter what the competitive ranking of the polities existing at that future moment is?

Whether any country, including the current major players, continues or collapses, degenerates or flourishes, will matter less, since the primary concern of most, if not all, key decision-makers will naturally no longer be the preservation of their country of allegiance, if they have one.

Much like how if aliens were to be revealed to exist, we wouldn’t really care much anymore about the disputes of one polity versus another.

comment by jbash · 2021-06-01T20:21:00.930Z

perhaps the competitive dynamic was bound to emerge anyway and it was hubristic to think ourselves so important.

Um, I think that understates the case rather vastly, and lets "you" off the hook too easily.

It was always obvious that innumerable kinds of competition would probably be decisive factors in the way things actually played out, and that any attempt to hide from that would lead to dangerous mistakes. It wasn't just obvious "pre-Musk". It was blindingly obvious in 1995. Trying to ignore it was not an innocuous error.

I'm not sure that a person who would try to do that even qualifies as a "wise" fool.