«Boundaries», Part 2: trends in EA's handling of boundaries

post by Andrew_Critch · 2022-08-06T00:42:48.744Z · LW · GW · 15 comments


This is Part 2 of my «Boundaries» Sequence [? · GW] on LessWrong, and is also available on the EA Forum [EA · GW].

Summary: Here I attempt to constructively outline various helpful and harmful trends I see in the EA and rationality communities, which I think arise from a tendency to ignore the boundaries of social systems (rather than a generic tendency to violate norms). Taken too far, this tendency can manifest as a 'lack of respect' for boundaries, by which I mean a mixture of

- not establishing boundaries where they'd be warranted,

- crossing boundaries in clumsy/harmful ways, and

- not following good procedures for deciding whether to cross a boundary.

I propose that if some version of «respecting boundaries» were a more basic tenet of EA — alongside or within the principles of neglect, importance, and tractability — then EA would have fewer problems and do more good for the world.

The trends

Below are the (good and bad) trends I'd like to analyze from the perspective of respecting boundaries. Each of these trends has also been remarked upon by at least a dozen self-identified EAs I know personally:

- expansive thinking: lots of EAs are good at 'thinking outside the box' about how to do good for the world, e.g., expanding one's moral circle (example: Singer, 2011).

- niche-finding: EA helps people find impactful careers that are a good fit for their own strengths and weaknesses (example: 80k, 2014).

- work/life balance: EA has sometimes struggled with people working too hard for the cause areas, in ways that unsustainably harm them as individuals.

- romances at work: people dating their co-workers is an example of a professional boundary being crossed by personal affairs.

- social abrasiveness: EA culture, and perhaps more so rationalist culture, is often experienced by newcomers or outsiders as abrasive or harsh. (Hypocrisy flag: I think I've been guilty of this, though hopefully less over the past few years as I've gotten older and reflected on these topics.)

- pivotal acts: numerous EAs seriously consider pivotal acts [LW · GW], i.e., potential unilateral acts by a powerful, usually AI-enabled actor to make the world permanently safer, as the best way to do good for humanity (as opposed to pivotal processes [LW · GW] carried out multilaterally).

- resistance from AI labs: well-established AI labs are resistant to adopting EA culture as a primary guiding influence.

I'm going to analyze each of the above trends in terms of boundaries, partly to illustrate the importance of the boundary concept, partly to highlight some cool things the EA movement seems to have done with boundaries, and partly to try helping somewhat with the more problematic trends above.

1. Expansive thinking

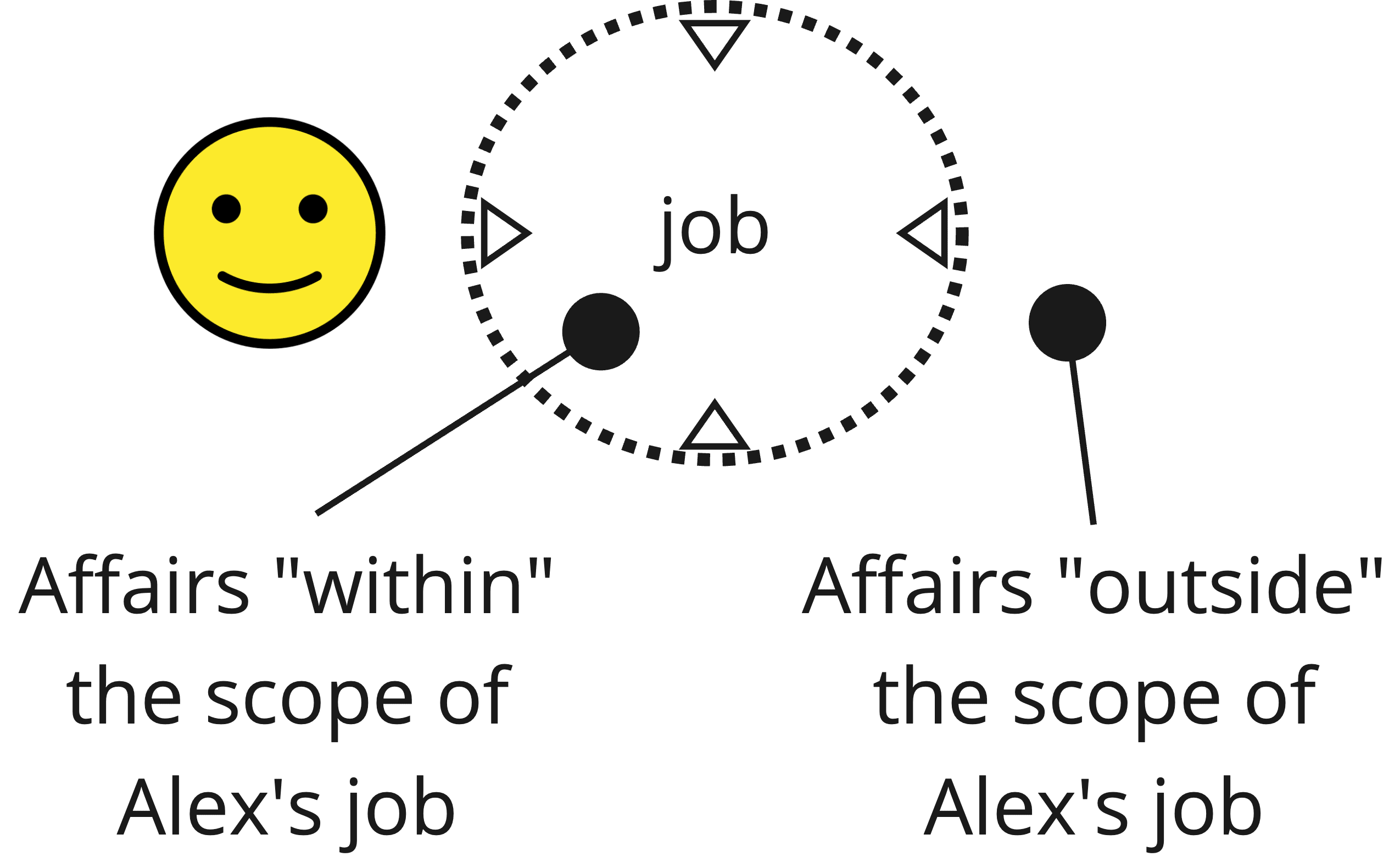

Consider a fictional person named Alex. A "job" for Alex is a scope of affairs (features of the world) that Alex is considered responsible for observing and handling. Alex might have multiple roles that we can think of as jobs, e.g. "office manager", "husband", "neighbor".

Alex probably thinks about the world beyond the scope of his job(s). But usually, Alex doesn't take actions outside the scope of his job(s):

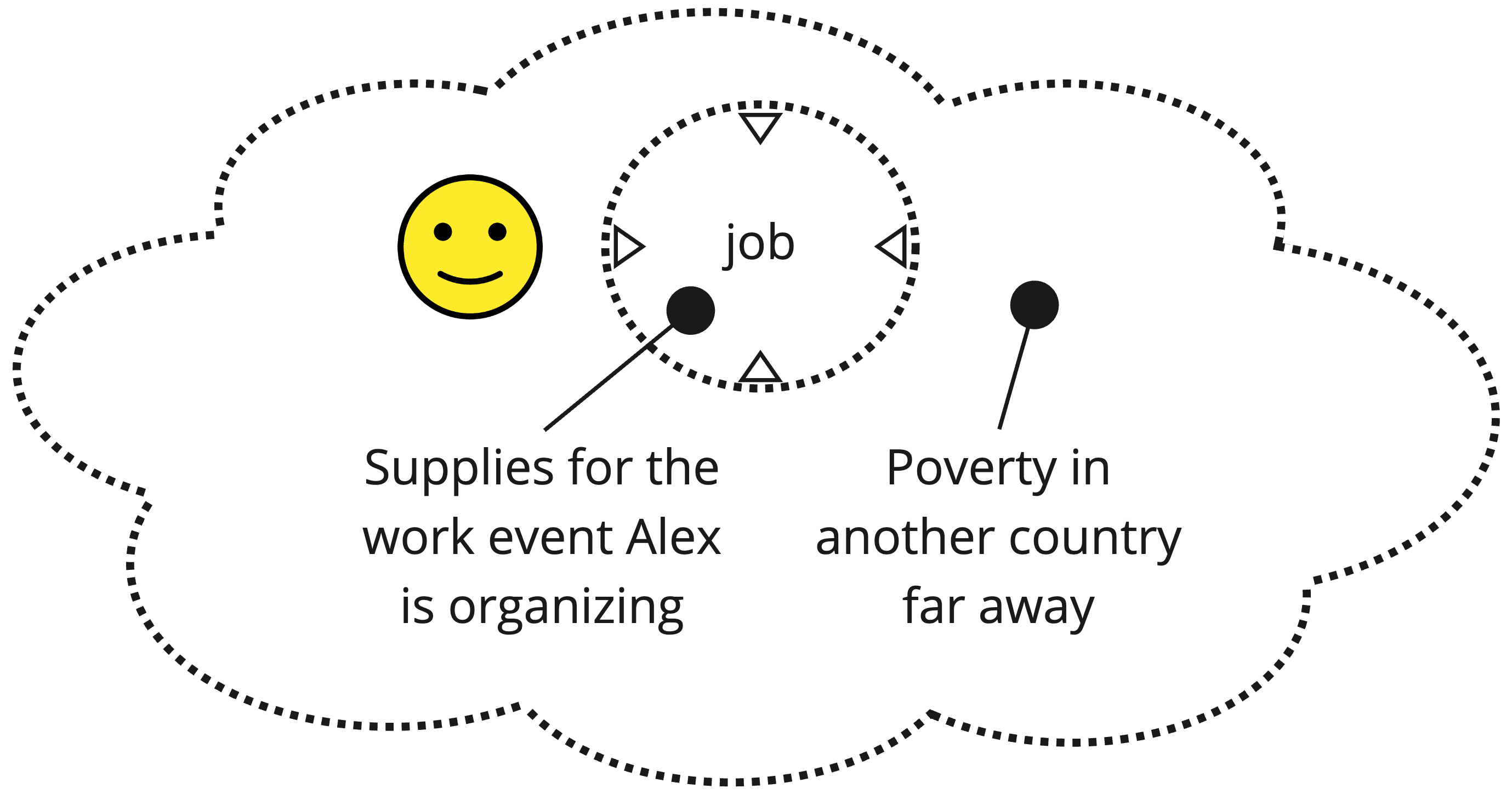

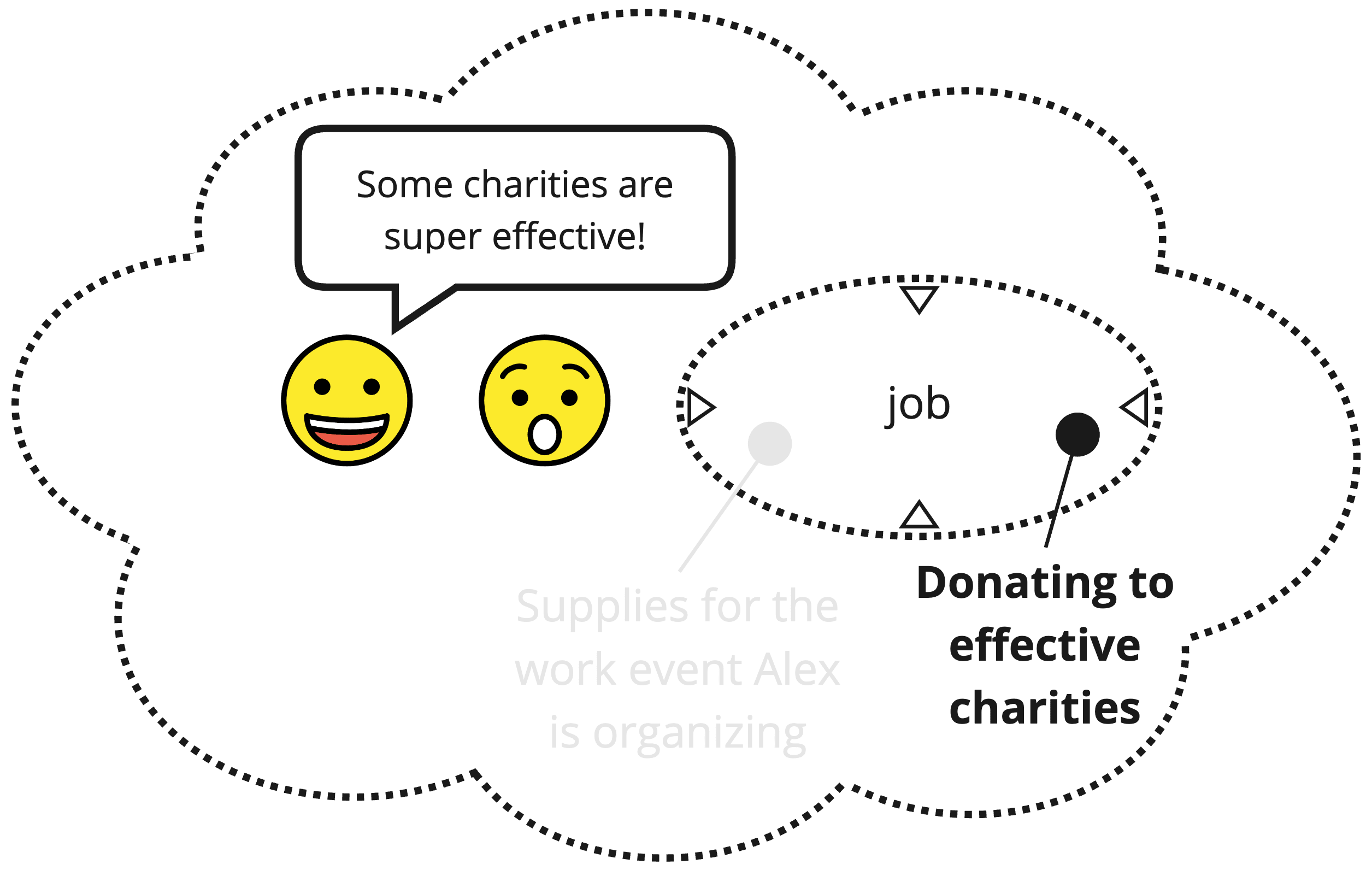

The Effective Altruism movement has provided a lot of discourse and social context that helps people extend their sense of "job" to include important and neglected problems in the world that might be tractable to them personally, e.g., global poverty (tractable via donations to GiveDirectly).

In other words, EA has helped people to expand both their circle of compassion and their scope of responsibility to act. See also "The Self-Expansion Model of Motivation in Close Relationships" (Aron, 2013).

2. Niche-finding

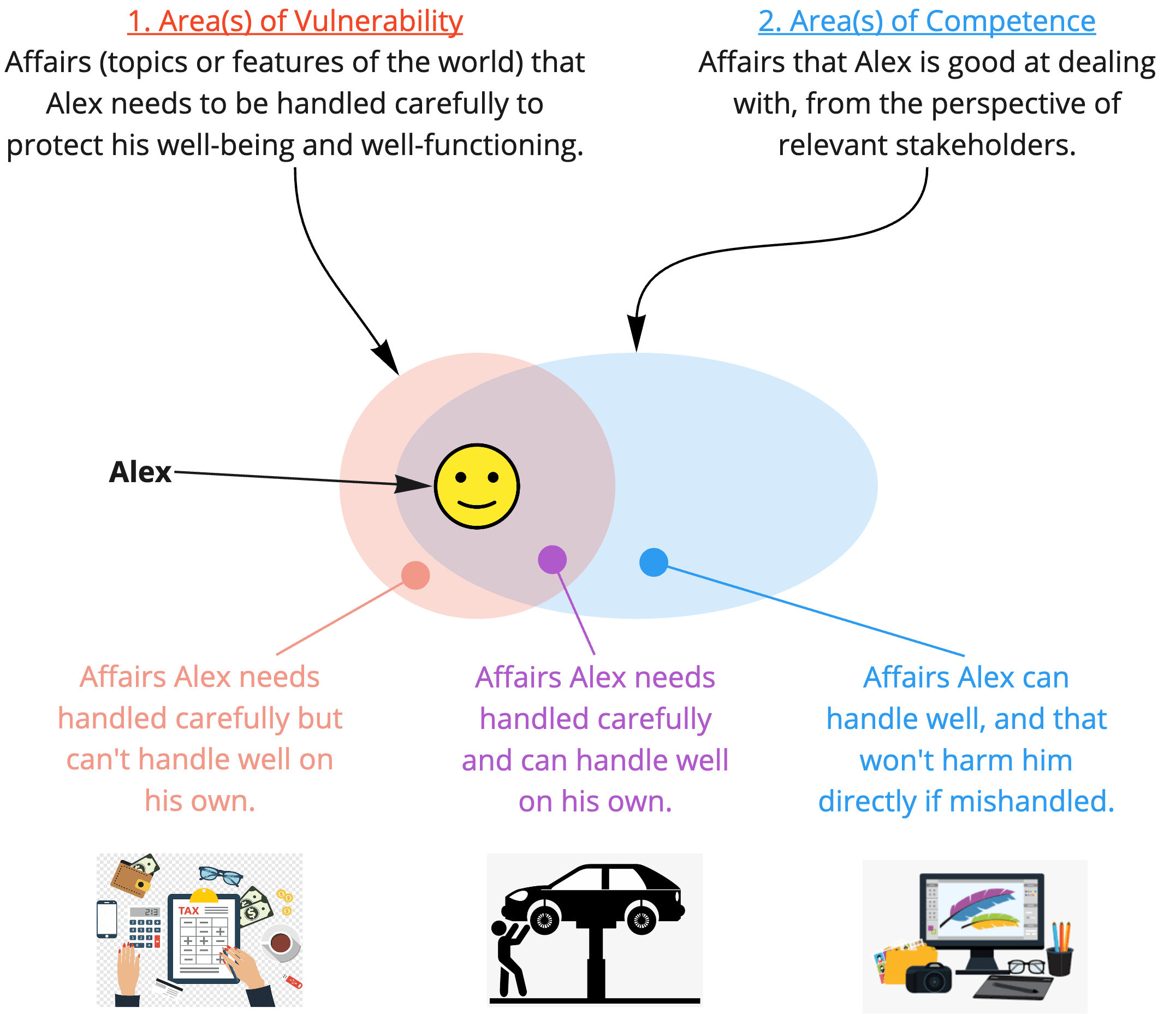

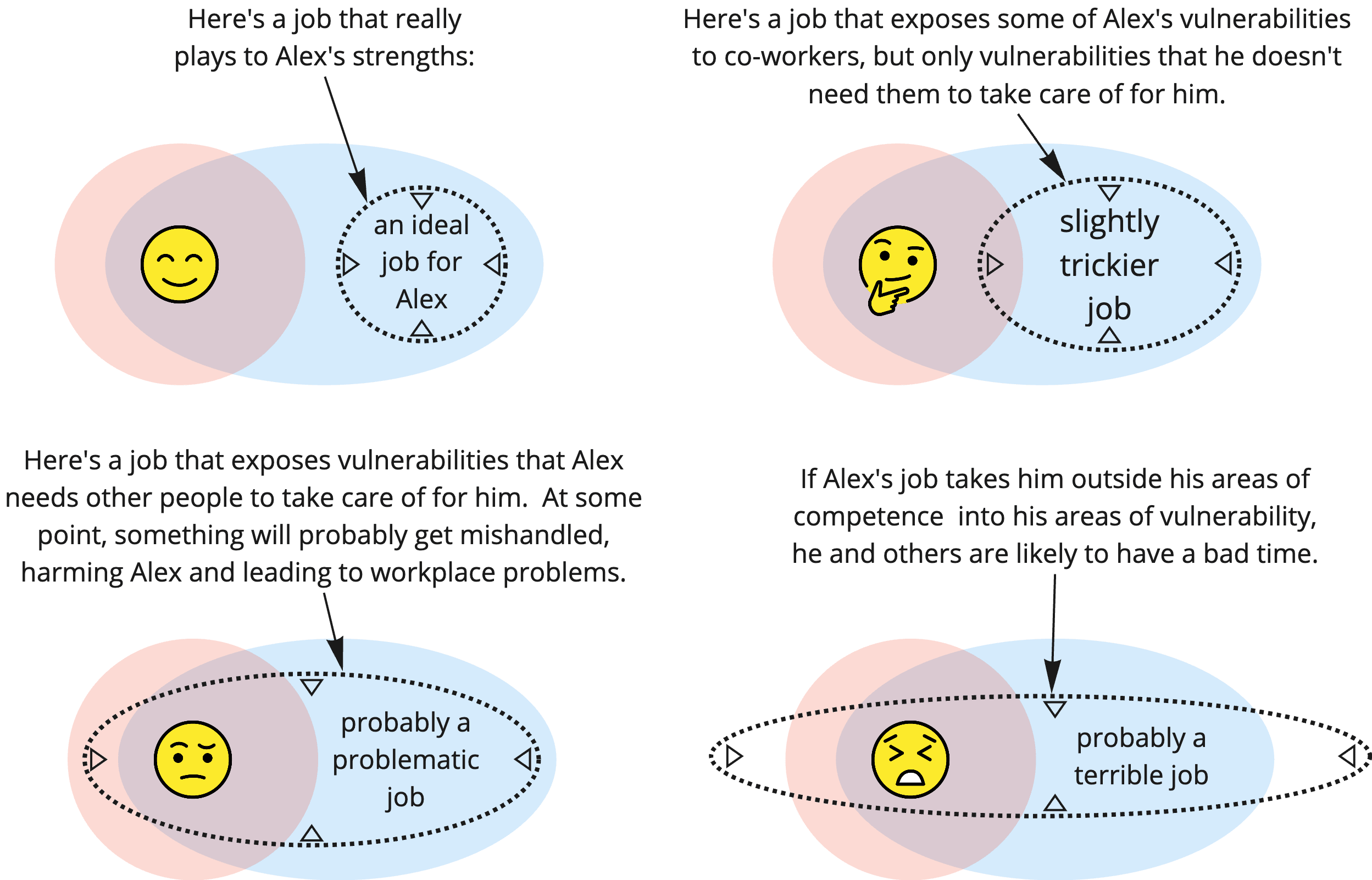

Identifying areas of competence and vulnerability is important for scoping out Alex's job(s). This is just standard career advice of the kind that 80,000 Hours might share for helping people find a good job (Todd, 2014; Todd, 2021), but I'd like to think about it from the perspective of boundaries, so let me spell out some nuances.

By competence I just mean the ability to do a good job with things from the perspective of relevant stakeholders (which might depend on the stakeholders). By vulnerabilities, I mean ways in which a person's functioning or wellbeing can be damaged or harmed. A person is, after all, a physical system, and physical systems can pretty much always be damaged in some way, which needn't be limited to the body. For a hypothetical person named "Alex", we can imagine instances of:

- (high vulnerability, low competence) Alex is bad at managing his finances. His friend Betty helps him pay his taxes every year and plan for retirement. If Betty messes up, Alex could end up poor or in trouble with the government. So, for Alex this is an area of high vulnerability and low competence.

- (high vulnerability, high competence) Alex is good at keeping his car maintained. This is also a point of vulnerability, because if his car breaks on the highway, he could get hurt. But he's competent in this area, so he can take care of it himself.

- (low vulnerability, high competence) Alex is also good at graphic design work. And, occasionally when he designs some graphics that people turn out not to like, he's totally fine. This is an area of low vulnerability and high competence.

In an ideal world, Alex's job has him engaging with affairs that he's good at handling, and that won't harm him if mishandled, i.e., areas of high competence and low vulnerability.

3. Work/life balance

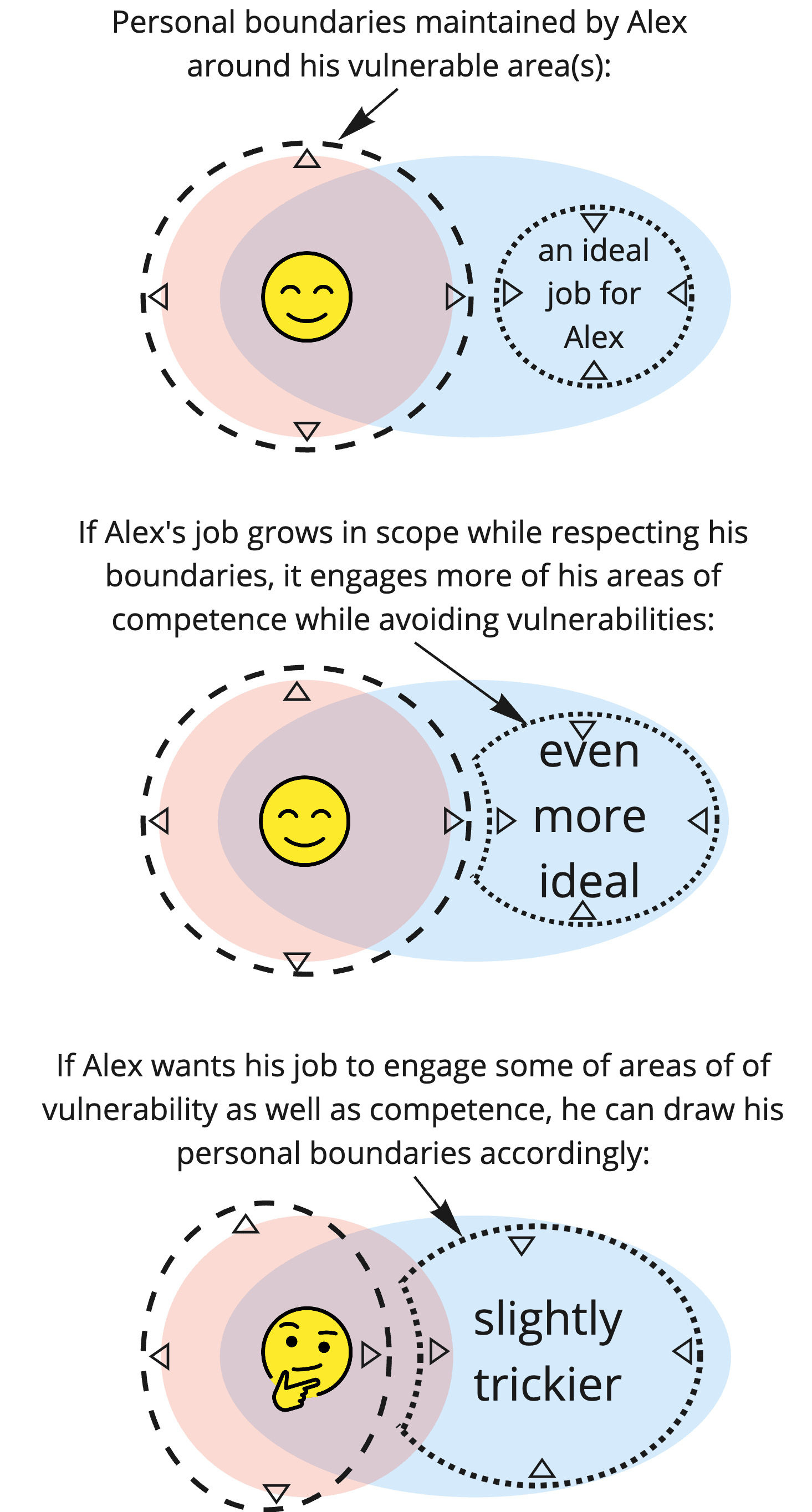

More recently, leaders in EA have been trying to talk more about boundary-setting (Freeman, 2021; GWWC). I think a big reason this discussion needed to happen was that, early on, EA was very much about expanding and breaking down boundaries, which may have led some people to dissolve or ignore what would otherwise be "work/life" or "personal/professional" boundaries that protect their wellbeing as individuals. In other words, people started to burn out ('Elizabeth', 2018 [EA · GW]; Toner, 2019).

Since jobs change over time, Alex may need to establish boundaries — in this case, socially reinforced constraints — to protect vulnerable aspects of his personal life from the day-to-day dealings of his work:

Whether the "even more ideal" or "slightly trickier" career path will be better for Alex in the end, the boundaries he sets or doesn't set will be a major factor determining his experience.

4. Romances at work

Effective altruism's "break lots of boundaries" attitude may have also contributed to, or arisen from, a frequent breakdown of professional/social distinctions, and might have led to frequent intertwining of romantic relationships with work (Wise, 2022 [EA · GW]), and/or dating across differences in power dynamics despite taboos to the contrary (Wise, 2022 [EA · GW]). Whatever position one takes on these issues, at a meta level we can observe that the topic is about where boundaries should or should not be drawn between people.

5. Social abrasiveness

EAs can sometimes come across as "abrasive", which, taken literally, basically means "creating friction between boundaries". Stefan Schubert writes, in his post "Naive Effective Altruism and Conflict" (2020):

... people often have unrealistic expectations of how others will react to criticism. Rightly or wrongly, people tend to feel that their projects are their own, and that others can only have so much of a say over them. They can take a certain amount of criticism, but if they feel that you’re invading their territory too much, they will typically find you abrasive. And they will react adversely.

Notice the language about 'territory', a sense of boundary that is being crossed. This observation isn't just about an arbitrary social norm; it's about a sense of space where something is being protected and, despite that, invaded. Irrespective of whether EA should be more or less observant of social niceties, a sense of boundaries is key to the pattern he's describing.

Relatedly, Duncan Sabien recently wrote a LessWrong post entitled Benign Boundary Violations [LW · GW], where he advocates for harmless boundary violations as actively healthy for social and cultural dynamics. Again, irrespective of the correctness of the post, the «boundary» concept is crucial to the social dynamics in question (and post title!).

6. Pivotal Acts

(This part is more so characteristic of rationalist discourse than EA discourse, but EA is definitely heavily influenced by rationalist memes.)

I recently wrote about a number of problems that arise from the intention to carry out a "pivotal act", i.e., a major unilateral act from a single agent or institution that makes the world safer (–, 2022 [LW · GW]). I'll defer to that post for a mix of observations and value judgement on that topic. For this post, it suffices to say that such a "pivotal act" plan would involve violating a lot of boundaries.

7. Resistance from AI labs

A lot of EAs have the goal of influencing AI labs to care more about EA principles and objectives. Many have taken jobs in big labs to push or maintain EA as a priority in some way. I don't have great written sources on this, but it seems to me that those people often end up frustrated that they can't convince their employers to be more caring and ambitious about saving the world. At the very least, one can publicly observe that

- DeepMind and OpenAI both talk about trying to do a lot of good for humanity, and

- both have employed numerous self-identified EAs who intended to promote EA culture within the organization, but

- neither lab has publicly espoused the EA principles of importance, tractability, and neglect.

- Facebook AI Research has not, as far as I know, employed any self-identified EAs in a full-time capacity (except maybe as interns). I expect this to change at some point, but for now EA representation in FAIR is weak relative to OpenAI and DeepMind.

There are many reasons why individual institutions might not take it on as their job to make the whole world safe, but I posit that a major contributing factor is the sense that it would violate a lot of boundaries. (This is kind of the converse to the observation about 'pivotal acts'.) By contrast, in my personal experience I've found it easier to argue for people to play a part in a pivotal process [LW · GW], i.e., a distributed process whereby the world is made safer but where no single institution or source of agency has full control to make it happen.

8. Thought experiments

EAs think a lot about thought experiments in ethics. A lot of the thought experiments involve norm violations that are more specifically boundary violations; for a recent example, see "Consequentialists (in society) should self-modify to have side constraints" (R, Cotton-Barratt 2022 [EA · GW]).

What to do?

Proposing big changes to how EA should work is beyond the scope of this post. I'm mostly just advocating for more thinking about boundaries as important determinants of what happens in the world and how to do good.

What's the best way for EA to accommodate this? I'm not sure! Perhaps in the trio of "importance, neglect, and tractability", we could replace "tractability" with "approachability" to highlight that social and sociotechnical systems need to be "approached" in a way that somehow handles their boundaries, rather than simply being "treated" (the root of 'tractable') like illnesses.

Irrespective of how to implement a change, I do think that "boundaries" should probably be treated as first-class objects in our philosophy of do-gooding, alongside and distinct from both "beliefs" (as treated in the epistemic parts of the LessWrong sequences [LW · GW]) and "values|objectives|preferences".

Recap

In this post, I described some trends where thinking about boundaries could be helpful to understanding and improving the EA movement, specifically, patterns of boundary expansion and violation in expansive thinking, niche-finding, work/life balance, romances at work, social abrasiveness, pivotal act intentions, and AI labs seeming somewhat resistant to EA rhetoric and ideology. I haven't done much to clarify what, if anything, should change as a result of these observations, although I'm fairly confident that making «boundaries» a more central concept in EA discourse would be a good idea, such as by replacing the idea of "tractability" with "approachability" or another term more evocative of spatial metaphor.

This was Part 2 of my «Boundaries» Sequence [? · GW] on LessWrong, and is also available on the EA Forum [EA · GW].

15 comments


comment by Noosphere89 (sharmake-farah) · 2022-08-06T02:37:45.373Z · LW(p) · GW(p)

To be sort of blunt, I suspect a lot of the reason why AI safety memes are resisted by companies like DeepMind is that taking them seriously would kill their business models. It would force them to fire all their AI capabilities groups, expand their safety groups, and at least try to not be advancing AI.

When billions of dollars are on the line, potentially trillions if the first AGI controls the world for Google, it's not surprising that any facts that would prompt reconsidering that goal are doomed to fail. Essentially it's a fight between a human's status-seeking, ambition and drive towards money vs ethics and safety, and massive incentives for the first group of motivations mean the second group loses.

On pivotal acts, a lot of it comes from MIRI who believe that a hard takeoff is likely, and to quote Eli Tyre, hard vs soft takeoff matters for whether pivotal acts need to be done:

On the face of it, this seems true, and it seems like a pretty big clarification to my thinking. You can buy more time or more safety, a little bit at a time, instead of all at once, in sort of the way that you want to achieve life extension escape velocity.

But it seems like this largely depends on whether you expect takeoff to be hard or soft. If AI takeoff is hard, you need pretty severe interventions, because they either need to prevent the deployment of AGI or be sufficient to counter the actions of a superintelligence. Generally, it seems like the sharper takeoff is, the more good outcomes flow through pivotal acts, and the smoother takeoff is the more we should expect good outcomes to flow through incremental improvements.

Are there any incremental actions that add up to a "pivotal shift" in a hard takeoff world?

↑ comment by Lone Pine (conor-sullivan) · 2022-08-08T05:41:08.257Z · LW(p) · GW(p)

I think that the big labs could be moved if the story was: "There will be a soft takeoff during which dozens of players will make critical decisions, and if the collective culture is supportive of the right approach (on alignment), then we will succeed, otherwise everyone will be dead and $GOOG will be $0." The story of hard takeoffs and pivotal acts is just not compatible with the larger culture, and cannot be persuasive. Now there is the problem of epistemics: the soft takeoff model being convenient for persuasion reasons doesn't make it true. But my opinion is that the soft takeoff model is more likely anyway.

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T14:28:57.177Z · LW(p) · GW(p)

I'm much less negative on alignment/control efforts now, and think the default path is probably that we aren't doomed, with 75-80% probability. This is largely due to realizing that the assumption that complexity of value/huge inductive biases were necessary for human values was more or less false, and that control is finally starting up and has gotten some useful results, though we will need to wait and see more results. I credit Quintin Pope for starting this transformation, which makes the top comment weird. But I do think something like it is still true, so I unretracted my comment above, with the context in this comment.

comment by Chipmonk · 2023-06-03T23:28:11.895Z · LW(p) · GW(p)

Some number of the examples in this post don't make sense to me. For example, where is the membrane in "work/life balance"? Or, where is the membrane in "personal space" (see Duncan's post, which is linked)?

I think there's a thing that is "social boundaries", which is like preferences, and there's also a thing like "informational or physical membranes", which happens to use the same word "boundaries", but is much more universal than preferences. Personally, I think these two things are worth regarding as separate concepts.

Personally, I like to think about membranes as a predominantly homeostatic autopoietic thing. Agents maintain their membranes. They do not "set" boundaries, they ARE boundaries.

[I explain this disagreement a bit more in this post [LW · GW].]

↑ comment by Chipmonk · 2023-07-11T15:34:14.576Z · LW(p) · GW(p)

An extra thought: All membranes (boundaries) are Markov blankets, but not all Markov blankets are membranes.

For example, if two social groups are isolated from each other there's a Markov blanket between them. However, that could just be spurious because they happen not to talk, rather than because there is an agent there that is actively maintaining that separation [LW · GW].

comment by Raemon · 2022-08-08T00:20:15.616Z · LW(p) · GW(p)

I found myself sort of bouncing off here, despite being interested in the topic and having a sense that "there's something to this thread."

I think right now my main takeaway is "think a bit more about boundaries on the margin, in a wider variety of places" (which is maybe all you wanted), but I think I got that more from in-person conversations with you than from this post. In this post there's a lot of individual examples that give a sense of the breadth of how to apply the "boundaries" frame, but each one felt like I could use more details connecting it to the previous post. (Pedagogically I'd have found it helpful if the post was "T-shaped", where it goes deep on one example connecting it to the game theory concepts in the previous post, and then explores a breadth of other examples which are easier to latch onto by virtue of connecting them to the initial deep example.)

I agree that each of the domains listed here has some interesting stuff to think through. (though I'm not sure I need the "boundary" frame to think Something Is Up with each example as a place where EAs/rationalists might be making a mistake)

↑ comment by Andrew_Critch · 2022-08-08T05:08:33.241Z · LW(p) · GW(p)

... From your perspective, which section do you think would be most interesting to do the deep dive on? (I.e. the top of the "T"?)

(Interested to hear from others as well.)

↑ comment by Martín Soto (martinsq) · 2022-08-08T11:27:13.880Z · LW(p) · GW(p)

I'd like to hear more about how the boundaries framework can be applied to Resistance from AI Labs to yield new insights or at least a more convenient framework. More concretely, I'm not exactly sure which boundaries you refer to here:

There are many reasons why individual institutions might not take it on as their job to make the whole world safe, but I posit that a major contributing factor is that sense that it would violate a lot of boundaries.

My main issue is that, for now, I agree with Noosphere89's comment [LW · GW]: the main reason is just commonsense "not willing to sacrifice profit". And this can certainly be conceptualized as "not willing to cross certain boundaries" (exceeding the objectives of a usual business, reallocating boundaries of internal organization, etc.), but I don't see how these can shed any more light than the already commonsense considerations.

To be clear, I know you discuss this in more depth in your posts on pivotal acts / processes, but I'm curious as to how explicitly applying the boundaries framework could clarify things.

↑ comment by Raemon · 2022-08-08T06:11:00.329Z · LW(p) · GW(p)

Short answer: maybe Work/Life balance? Probably a reasonable combination of "meaty/relevant, without being too controversial an example".

Longer answer: I'm not actually sure. While thinking about the answer, I notice part of the thing is that Expansive Thinking, Niche-Finding and Work/Life Balance each introduce somewhat different frames, and maybe another issue was I got sort of frame-fatigued by the time I got to Work/Life Balance.

↑ comment by Raemon · 2022-08-08T06:11:30.210Z · LW(p) · GW(p)

(random additional note: I'm also anticipating that this boundaries sequence isn't really going to address the core cruxes around pivotal acts)

↑ comment by Andrew_Critch · 2022-08-08T17:10:56.475Z · LW(p) · GW(p)

Thanks for this prediction; by default it's correct! But, my mind could be changed on this. Did you voice this as:

(a) a disappointment (e.g., "Ah, too bad Critch isn't trying to address pivotal acts here"), or

(b) an expectation of what I should be aiming for (e.g., "Probably it's too much to write a sequence about boundaries that's also trying to address pivotal acts."), or

(c) neither / just a value-neutral prediction?

↑ comment by Andrew_Critch · 2022-08-08T05:01:02.325Z · LW(p) · GW(p)

Thanks for the suggestion! Maybe I'll make a T-shaped version someday :)

comment by Caspar Oesterheld (Caspar42) · 2023-05-30T05:12:00.438Z · LW(p) · GW(p)

Minor typos:

I think it's "Freeman"?

Cotton-Baratt 2022 [EA · GW]

And "Cotton-Barratt" with two rs.

comment by Chipmonk · 2023-04-23T00:44:26.468Z · LW(p) · GW(p)

1. Expansive thinking

Consider a fictional person named Alex. A "job" for Alex is a scope of affairs (features of the world) that Alex is considered responsible for observing and handling. Alex might have multiple roles that we can think of as jobs, e.g. "office manager", "husband", "neighbor".

I somewhat disagree with how this section is presented so I wrote a post about it [LW · GW] and proposed a compromise.

In summary:

- I propose defining boundaries in the Alex example not in terms of “jobs”, but in terms of: 1) contracts (mutual agreements between two parties), and 2) property / things he owns.

- Alex is not “responsible” for *someone else’s* poverty. (And “donating” is not/cannot be part of his “job”.) He is, however, responsible for his values, and in this case because of his values, he is *expressing care* for someone else’s poverty, and this is distinct from “taking responsibility”.