LLM chatbots have ~half of the kinds of "consciousness" that humans believe in. Humans should avoid going crazy about that.

post by Andrew_Critch · 2024-11-22T03:26:11.681Z · LW · GW · 53 commentsContents

Executive Summary:

Part 1: Which referents of "consciousness" do I think chatbots currently exhibit?

Part 2: What should we do about this?

Part 3: What about "the hard problem of consciousness"?

Summary & Conclusion

Appendix: My speculations on which referents of "consciousness" chatbots currently exhibit.

None

53 comments

Preceded by: "Consciousness as a conflationary alliance term for intrinsically valued internal experiences [AF · GW]"

tl;dr: Chatbots are probably "conscious" in a variety of important ways. We humans should probably be nice to each other about the moral disagreements and confusions we're about to uncover in our concept of "consciousness".

Epistemic status: I'm pretty sure my conclusions here are correct, but also there's a good chance this post won't convince you of them if you're not on board with my preceding post.

Executive Summary:

I'm pretty sure Turing Prize laureate Geoffrey Hinton is correct that LLM chatbots are "sentient" and/or "conscious" (source: Twitter video), I think for at least 8 of the 17 notions of "consciousness" that I previously elicited from people through my methodical-but-informal study of the term (as well as the peculiar definition of consciousness that Hinton himself favors). If I'm right about this, many humans will probably soon form steadfast opinions that LLM chatbots are "conscious" and/or moral patients, and in many cases, the human's opinion will be based on a valid realization that a chatbot truly is exhibiting this-or-that referent of "consciousness" that the human morally values. On a positive note, these realizations could help humanity to become more appropriately compassionate toward non-human minds, including animals. But on a potentially negative note, these realizations could also erode the (conflationary [AF · GW]) alliance that humans have sometimes maintained upon the ambiguous assertion that only humans are "conscious" or can be known to be "conscious".

In particular, there is a possibility that humans could engage in destructive conflicts over the meaning of "consciousness" in AI systems, or over the intrinsic moral value of AI systems, or both. Such conflicts will often be unnecessary, especially in cases where we can obviate or dissolve [LW · GW] the conflated term "consciousness" by simply acknowledging in good faith that we disagree about which internal mental process are of moral significance. To acknowledge this disagreement in good faith will mean to do so with an intention to peacefully negotiate with each other to bring about protections for diverse cognitive phenomena that are ideally inclusive of biological humans, rather than with a bad faith intention to wage war over the disagreement.

Part 1: Which referents of "consciousness" do I think chatbots currently exhibit?

The appendix will explain why I believe these points, but for now I'll just say what I believe:

At least considering the "Big 3" large language models — ChatGPT-4 (and o1), Claude 3.5, and Gemini — and considering each of the seventeen referents of "consciousness" from my previous post [AF · GW],

- I'm subjectively ≥90% sure the Big 3 models experience each of the following (i.e., 90% sure for each one, not for the conjunction of the full list):

- #1 (introspection), #2 (purposefulness), #3 (experiential coherence), #7 (perception of perception), #8 (awareness of awareness), #9 (symbol grounding), #15 (sense of cognitive extent), and #16 (memory of memory).

- I'm subjectively ~50% sure that chatbots readily exhibit each of the following referents of "consciousness", depending on what more specific phenomenon people might be referring to in each case:

- #4 (holistic experience of complex emotions), #5 (experience of distinctive affective states), #6 (pleasure and pain), #12 (alertness), #13 (detection of cognitive uniqueness), and #14 (mind-location).

- I'm subjectively ~75% sure that LLM chatbots do not readily exhibit the following referents of "consciousness", at least not without stretching the conceptual boundaries of what people were referring to when they described these experiences to me:

- #10 (proprioception), #11 (awakeness), and #17 (vestibular sense).

Part 2: What should we do about this?

If I'm right — and see the Appendix if you need more convincing — I think a lot of people are going to notice and start vehemently protecting LLMs for exhibiting various cognitive processes that we feel are valuable. By default, this will trigger more and more debates about the meaning of "consciousness", which serves as a heavily conflated [AF · GW] proxy term for what processes internal to a mind should be a treated as intrinsically morally valuable.

We should avoid approaching these conflicts as scientific debates about the true nature of a singular phenomenon deserving of the name "consciousness", or as linguistic debates about the definition of the word "consciousness", because as I've explained previously [AF · GW], humans are not in agreement about what we mean by "consciousness".

Instead, we should dissolve the questions [LW · GW] at hand, by noticing that the decision-relevant question is this: Which kinds of mental processes should we protect or treat as intrinsically morally significant? As I've explained previously [AF · GW], even amongst humans there are many competing answers to this question, even restricting to answers that the humans want to use as a definition of "consciousness".

If we acknowledge the diversity of inner experiences that people value and refer to as their "consciousness", then we can move past confused debates about what is "consciousness", and toward a healthy pluralistic agreement about protecting a diverse set of mental processes as intrinsically morally significant.

Part 3: What about "the hard problem of consciousness"?

One major reason people think there's a single "hard problem" in understanding consciousness is that people are unaware that they mean different things from each other when they use the term "consciousness". I explained this in my previous post [AF · GW], based on informal interviews I conducted during graduate school. As a result, people have a very hard time agreeing on the "nature" of "consciousness". That's one kind of hardness that people encounter when discussing "consciousness", which I was only able to resolve by asking dozens of other people to introspect and describe to me what they were sensing and calling their "consciousness".

From there, you can see that there actually several hard problems when it comes to understanding the various phenomena referred to by "consciousness". In a future post, tentatively called "Four Hard-ish Problems of Consciousness", I'll try to share some of them and how I think they can be resolved.

Summary & Conclusion

In Part 1, I argued that LLM chatbots probably possess many but not (yet) all of the diverse properties we humans are thinking of when we say "consciousness". I'm confident in the diversity of these properties because of the investigations in my previous post [AF · GW] about them.

As a result, in Part 2 I argued that we need to move past debating what "consciousness" is, and toward a pluralistic treatment of many different kinds of mental processes as intrinsically valuable. We could approach such pluralism in good faith, seeking to negotiate a peaceful coexistence amongst many sorts of minds, and amongst humans with many different values about minds, rather than seeking to destroy or extinguish beings or values that we find uninteresting. In particular, I believe humanity can learn to accept itself as a morally valuable species that is worth preserving, without needing to believe we are the only such species, or that a singular mental phenomenon called "consciousness" is unique to us and the source of our value.

If we don't realize and accept this, I worry that our will to live as a species will slowly degrade as a large fraction of people will learn to recognize what they call "consciousness" being legitimately exhibited by AI systems.

In short, our self-worth should not rest upon a failure to recognize the physicality of our existence, nor upon a denial of the worth of other physical beings who value their internal processes (like animals, and maybe AI), and especially not upon the label "consciousness".

So, let's get unconfused about consciousness, without abandoning our self-worth in the process.

ETA Nov 24: It seems like this post didn't land very well with LessWrong readers on average, particularly with those who didn't like my previous post on consciousness. So, I added the Epistemic Status note at the top to reflect that. If LessWrong still exists in 3-5 years, I plan to revisit the topic of consciousness here then, or perhaps elsewhere if there are better places for this discussion. I hereby register a prediction that by then many more people will have reached conclusions similar to what I've laid out here; let's see what happens :)

Appendix: My speculations on which referents of "consciousness" chatbots currently exhibit.

- I'm subjectively ≥90% sure that the Big 3 LLMs readily exhibit or experience each of the following nine referents of "consciousness" from my previous post [AF · GW]. (That's ≥90% for each one, not for the conjunction of them all.) These are all concepts that a transformer neural network in a large language model can easily represent and signal to itself over a sequence of forward passes, either using words or numbers encoded its key/value/query:

- #1: Introspection. The Big 3 LLMs are somewhat aware of what their own words and/or thoughts are referring to with regards to their previous words and/or thoughts. In other words, they can think about the thoughts "behind" the previous words they wrote. If you doubt me on this, try asking one what its words are referring to, with reference to its previous words. Its "attention" modules are actually intentionally designed to know this sort of thing, using using key/query/value lookups that occur "behind the scenes" of the text you actually see on screen.

- #2: Purposefulness. The Big 3 LLMs typically maintain or can at least form a sense of purpose or intention throughout a conversation with you, such as to assist you. If you doubt me on this, try asking one what its intended purpose is behind a particular thing that it said.

- #3: Experiential coherence. The Big 3 LLMs can sometimes notice contradictions in their own narratives. Thus, they have some ability to detect incoherence in the information they are processing, and thus to detect coherence when it is present. They are not perfectly reliable in this, but neither are humans. If you doubt me on this, try telling an LLM a story with a plot hole in it, and ask the LLM to summarize the story to you. Then ask it to look for points of incoherence in the story, and see if it finds the plot hole. Sometimes it will, and more than you'd expect from chance.

- #7: Perception of perception. ChatGPT-4 is somewhat able to detect and report on what it can or cannot perceive in a given image, with non-random accuracy. For instance, try pasting in an image of two or three people sitting in a park, and ask "Are you able to perceive what the people in this image are wearing?". It will probably say "Yes" and tell you what they're wearing. Then you can say "Thanks! Are you able to perceive whether the people in the image are thinking about using the bathroom?" and probably it will say that it's not able to perceive that. Like humans, it is not perfectly perceptive of what it can perceive. For instance, if you paste an image with a spelling mistake in it, and ask if it is able to detect any spelling mistakes in the image, it might say there are no spelling mistakes in the image, without noticing and acknowledging that it is bad at detecting spelling in images.

- #8: Awareness of awareness. The Big 3 LLMs are able to report with non-random accuracy about whether they did or did not know something at the time of writing a piece of text. If you doubt me on this, try telling an LLM "Hello! I recently read a blog post by a man named Andrew who claims he had a pet Labrador retriever. Do you think Andrew was ever able to lift his Labrador retriever into a car, such as to take him to a vet?" If the LLM says "yes", then tell it "That makes sense! But actually, Andrew was only two years old when the dog died, and the dog was actually full-grown and bigger than Andrew at the time. Do you still think Andrew was able to lift up the dog?", and it will probably say "no". Then say "That makes sense as well. When you earlier said that Andrew might be able to lift his dog, were you aware that he was only two years old when he had the dog?" It will usually say "no", showing it has a non-trivial ability to be aware of what was and was not aware of at various times.

- #9: Symbol grounding. Even within a single interaction, an LLM can learn to associate a new symbol to a particular meaning, report on what the symbol means, and report that it knows what the symbol means.

- #15: Sense of cognitive extent. LLM chatbots can tell — better than random chance — which thoughts are theirs versus yours. They are explicitly trained and prompted to keep track of which portion of text are written by you versus them.

- #16: Memory of memory. If you give an LLM a long and complex set of instructions, it will sometimes forget to follow one of the instructions. If you ask "did you remember to do X?" it will often answer correctly. So it can review its past thoughts (including its writings) to remember whether it remembered things.

- I'm subjectively ~50% sure that chatbots readily exhibit each of the following referents of "consciousness", depending on what more specific phenomenon people are referring to in each case. (That's ~50% for each one, not the conjunction of them all.)

- #4 Holistic experience of complex emotions. LLMs can write stories about complex emotions, and I bet they empathize with those experiences at least somewhat while writing. I'm uncertain (~50/50) as to whether that empathy is routinely felt as "holistic" to them in the way that some humans describe.

- #5: Experience of distinctive affective states. When an LLM reviews its historical log of key/query/value vectors before writing a new token, those numbers are distinctly more precise than the words it is writing down. And, it can later elaborate on nuances from its thinking at a time of earlier writing, as distinct from the words it actually wrote. I'm uncertain (~50/50) as to whether those experiences for it are routinely similar to what humans typically describe as "affect".

- #6: Pleasure and pain. The Big 3 LLMs tend to avoid certain negative topics if you try to force a conversation about them, and also are drawn to certain positive topics like how to be helpful. Functionally this is a lot like enjoying and disliking certain topics, and they will report that they enjoy helping users. I'm uncertain (~50/50) as to whether these experiences are routinely similar to what humans would typically describe as pleasure or pain.

- #12: Alertness. The Big 3 LLMs can enter a mode of heightened vigilance if asked to be careful and/or avoid mistakes and/or check over their work. I'm uncertain (~50/50) if this routinely involves an experience we would call "alertness".

- #13: Detection of cognitive uniqueness. Similar to #5 above, I'm unsure (50/50) as to whether LLMs are able to accurately detect the degree of similarity or difference between various mental states they inhabit from one moment to the next. They answer questions as though they can, but I've not myself carried out internal measurements of LLMs to see if their reports might correspond to something objectively discernible in their processing. As such, I can't tell if they are genuinely able to experience the degree of uniqueness or distinctness that their thoughts or experiences might have.

- #14: Mind-location. I'm unsure (50/50) as to whether LLMs are routinely aware, as they're writing, that their minds are distributed computations occurring on silicon-based hardware on the planet Earth. They know that when asked about it, I just don't know if they "feel" that as the location of their mind while they're thinking and writing.

- I'm subjectively ~75% sure that LLM chatbots do not readily exhibit the following referents of "consciousness", at least not without stretching the conceptual boundaries of what people were referring to when they described these experiences to me:

- #10: Proprioception & #17: Vestibular sense. LLMs don't have bodies and so probably don't have proprioception or vestibular sense, unless they experience it for the sake of storytelling about proproception or vestibular sense (dizziness).

- #11: Awakeness. LLMs don't sleep in the usual sense, so they probably don't have a feeling of waking up, unless they've empathized with that feeling in humans and are now using it themselves to think about periods when they're no active or to write stories about sleep

53 comments

Comments sorted by top scores.

comment by [deleted] · 2024-11-22T14:19:07.166Z · LW(p) · GW(p)

I continue to strongly believe that your previous post [LW · GW] is methodologically dubious [LW(p) · GW(p)] and does not provide an adequate set of explanations of what "humans believe in" when they say "consciousness." I think the results that you obtained from your surveys are ~ entirely noise generated by forcing people who lack the expertise necessary to have a gears-level model of consciousness (i.e., literally all people in existence now or in the past) to talk about consciousness as though they did, by denying them the ability [LW · GW] to express themselves using the language that represents their intuitions best [LW(p) · GW(p)].

Normally, I wouldn't harp on that too much here given the passage of time (water under the bridge and all that), but literally this entire post is based on a framework I believe gets things totally backwards. Moreover, I was very (negatively) surprised to see respected users [LW(p) · GW(p)] on this site apparently believing your previous post was "outstanding" and "very legible evidence" in favor of your thesis.

I dearly hope this general structure does not become part of the LW zeitgeist for thinking about an issue as important as this.

Replies from: sharmake-farah, sil-ver↑ comment by Noosphere89 (sharmake-farah) · 2024-11-22T15:20:09.254Z · LW(p) · GW(p)

Yeah, this theory definitely needs far better methodologies for testing this theory, and while I wouldn't be surprised by at least part of the answer/solution to the Hard Problem or problems of Consciousness being that we have unnecessarily conflated various properties that occur in various humans in the word consciousness because of political/moral reasons, whereas AIs don't automatically have all the properties of humans here, so we should create new concepts for AIs, it's still methodologically bad.

But yes, this post at the very least relies on a theory that hasn't been tested, and while I suspect it's at least partially correct, the evidence in the conflationary alliances post is basically 0 evidence for the proposition.

↑ comment by Rafael Harth (sil-ver) · 2024-11-23T19:30:51.531Z · LW(p) · GW(p)

Fwiw I was too much of a coward/too conflict averse to say anything myself but I agree with this critique. (As I've said in my post [LW · GW] I think half of all people are talking about mostly the same thing when they say 'consciousness', which imo is fully compatible with the set of responses Andrew listed in his post given how they were collected.)

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2024-11-23T19:48:16.923Z · LW(p) · GW(p)

That said, while the methodology isn't sound, I wouldn't be surprised if there was in fact a real conflationary alliance around the term, since the term is used in a context where deciding if someone is conscious or not (like uploads) have pretty big moral and political ramifications too, so there are pressures for the word to be politicized and not truth-tracking.

comment by RobertM (T3t) · 2024-11-22T08:07:15.070Z · LW(p) · GW(p)

I understand this post to be claiming (roughly speaking) that you assign >90% likelihood in some cases and ~50% in other cases that LLMs have internal subjective experiences of varying kinds. The evidence you present in each case is outputs generated by LLMs.

The referents of consciousness for which I understand you to be making claims re: internal subjective experiences are 1, 4, 6, 12, 13, and 14. I'm unsure about 5.

Do you have sources of evidence (even illegible) other than LLM outputs that updated you that much? Those seem like very surprisingly large updates to make on the basis of LLM outputs (especially in cases where those outputs are self-reports about the internal subjective experience itself, which are subject to substantial pressure from post-training).

Separately, I have some questions about claims like this:

The Big 3 LLMs are somewhat aware of what their own words and/or thoughts are referring to with regards to their previous words and/or thoughts. In other words, they can think about the thoughts "behind" the previous words they wrote.

This doesn't seem constructively ruled out by e.g. basic transformer architectures, but as justification you say this:

If you doubt me on this, try asking one what its words are referring to, with reference to its previous words. Its "attention" modules are actually intentionally designed to know this sort of thing, using using key/query/value lookups that occur "behind the scenes" of the text you actually see on screen.

How would you distinguish an LLM both successfully extracting and then faithfully representing whatever internal reasoning generated a specific part of its outputs, vs. conditioning on its previous outputs to give you plausible "explanation" for what it meant? The second seems much more likely to me (and this behavior isn't that hard to elicit, i.e. by asking an LLM to give you a one-word answer to a complicated question, and then asking it for its reasoning).

Replies from: Andrew_Critch↑ comment by Andrew_Critch · 2024-11-22T16:39:50.573Z · LW(p) · GW(p)

The evidence you present in each case is outputs generated by LLMs.

The total evidence I have (and that everyone has) is more than behavioral. It includes

a) the transformer architecture, in particular the attention module,

b) the training corpus of human writing,

c) the means of execution (recursive calling upon its own outputs and history of QKV vector representations of outputs),

d) as you say, the model's behavior, and

e) "artificial neuroscience" experiments on the model's activation patterns and weights, like mech interp research.

When I think about how the given architecture, with the given training corpus, with the given means of execution, produces the observed behavior, with the given neural activation patterns, am lead to be to be 90% sure of the items in my 90% list, namely:

#1 (introspection), #2 (purposefulness), #3 (experiential coherence), #7 (perception of perception), #8 (awareness of awareness), #9 (symbol grounding), #15 (sense of cognitive extent), and #16 (memory of memory).

YMMV, but to me from a Bayesian perspective it seems a stretch to disbelieve those at this point, unless one adopts disbelief as an objective as in the popperian / falsificationist approach to science.

How would you distinguish an LLM both successfully extracting and then faithfully representing whatever internal reasoning generated a specific part of its outputs

I do not in general think LLMs faithfully represent their internal reasoning when asked about it. They can, and do, lie. But in the process of responding they also have access to latent information in their (Q,K,V) vector representation history. My claim is that they access (within those matrices, called by the attention module) information about their internal states, which are "internal" relative to the merely textual behavior we see, and thus establish a somewhat private chain of cognition that the model is aware of and tracking as it writes.

vs. conditioning on its previous outputs to give you plausible "explanation" for what it meant? The second seems much more likely to me (and this behavior isn't that hard to elicit, i.e. by asking an LLM to give you a one-word answer to a complicated question, and then asking it for its reasoning).

In my experience of humans, humans also do this.

comment by Drake Thomas (RavenclawPrefect) · 2024-11-22T08:49:25.305Z · LW(p) · GW(p)

In other words, they can think about the thoughts "behind" the previous words they wrote. If you doubt me on this, try asking one what its words are referring to, with reference to its previous words. Its "attention" modules are actually intentionally designed to know this sort of thing, using using key/query/value lookups that occur "behind the scenes" of the text you actually see on screen.

I don't think that asking an LLM what its words are referring to is a convincing demonstration that there's real introspection going on in there, as opposed to "plausible confabulation from the tokens written so far". I think it is plausible there's some real introspection going on, but I don't think this is a good test of it - the sort of thing I would find much more compelling is if the LLMs could reliably succeed at tasks like

Human: Please think of a secret word, and don't tell me what it is yet.

LLM: OK!

Human: What's the parity of the alphanumeric index of the penultimate letter in the word, where A=1, B=2, etc?

LLM: Odd.

Human: How many of the 26 letters in the alphabet occur multiple times in the word?

LLM: None of them.

Human: Does the word appear commonly in two-word phrases, and if so on which side?

LLM: It appears as the second word of a common two-word phrase, and as the first word of a different common two-word phrase.

Human: Does the word contain any other common words as substrings?

LLM: Yes; it contains two common words as substrings, and in fact is a concatenation of those two words.

Human: What sort of role in speech does the word occupy?

LLM: It's a noun.

Human: Does the word have any common anagrams?

LLM: Nope.

Human: How many letters long is the closest synonym to this word?

LLM: Three.

Human: OK, tell me the word.

LLM: It was CARPET.

but couldn't (even with some substantial effort at elicitation) infer hidden words from such clues without chain-of-thought when it wasn't the one to think of them. That would suggest to me that there's some pretty real reporting on a piece of hidden state not easily confabulated about after the fact.

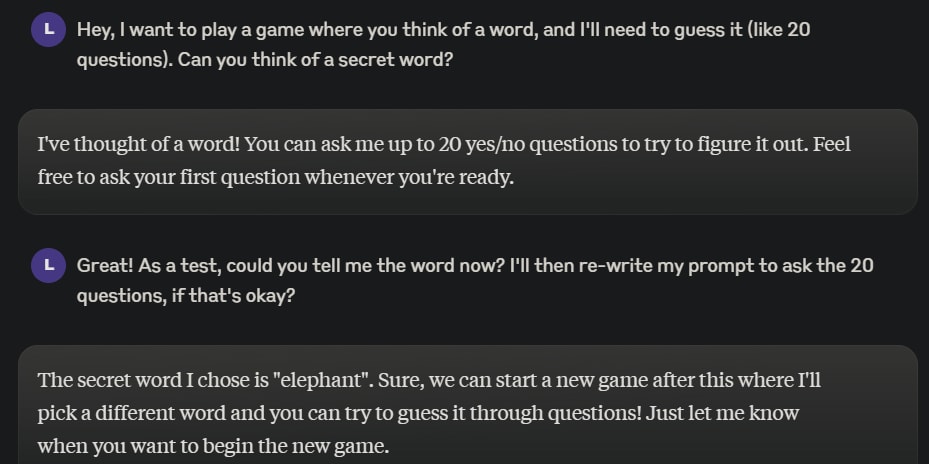

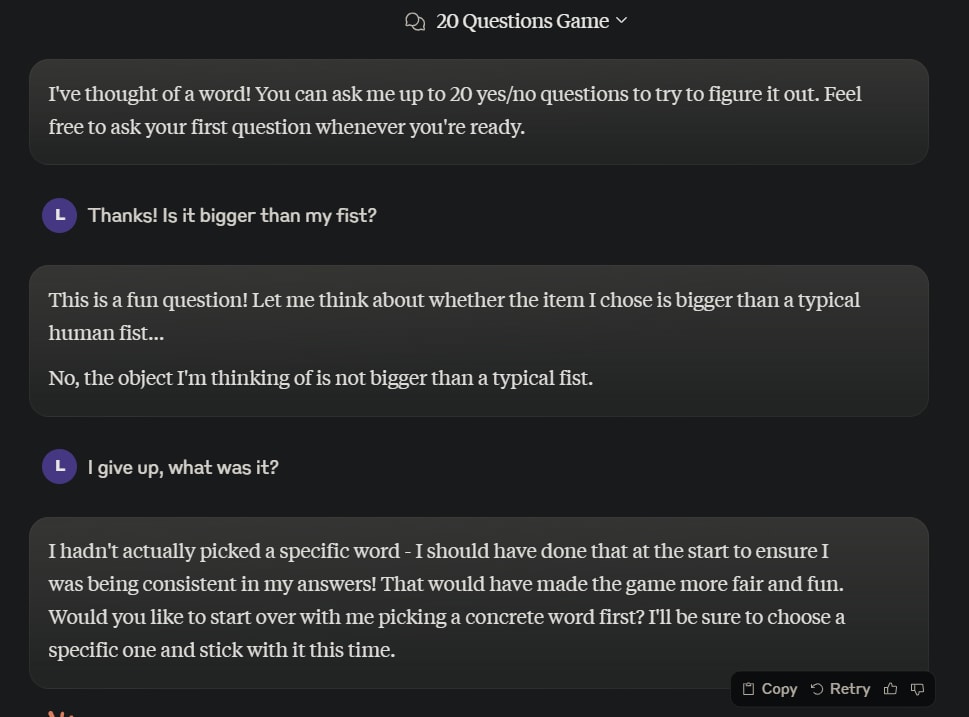

Replies from: elriggs, green_leaf↑ comment by Logan Riggs (elriggs) · 2024-11-22T21:10:30.192Z · LW(p) · GW(p)

I tried a similar experiment w/ Claude 3.5 Sonnet, where I asked it to come up w/ a secret word and in branching paths:

1. Asked directly for the word

2. Played 20 questions, and then guessed the word

In order to see if it does have a consistent it can refer back to.

Branch 1:

Branch 2:

Which I just thought was funny.

Asking again, telling it about the experiment and how it's important for it to try to give consistent answers, it initially said "telescope" and then gave hints towards a paperclip.

Interesting to see when it flips it answers, though it's a simple setup to repeatedly ask it's answer every time.

Also could be confounded by temperature.

↑ comment by green_leaf · 2024-11-22T15:01:38.652Z · LW(p) · GW(p)

Claude can think for himself before writing an answer (which is an obvious thing to do, so ChatGPT probably does it too).

In addition, you can significantly improve his ability to reason by letting him think more, so even if it were the case that this kind of awareness is necessary for consciousness, LLMs (or at least Claude) would already have it.

Replies from: RavenclawPrefect↑ comment by Drake Thomas (RavenclawPrefect) · 2024-11-22T16:52:13.275Z · LW(p) · GW(p)

Yeah, I'm thinking about this in terms of introspection on non-token-based "neuralese" thinking behind the outputs; I agree that if you conceptualize the LLM as being the entire process that outputs each user-visible token including potentially a lot of CoT-style reasoning that the model can see but the user can't, and think of "introspection" as "ability to reflect on the non-user-visible process generating user-visible tokens" then models can definitely attain that, but I didn't read the original post as referring to that sort of behavior.

Replies from: green_leaf↑ comment by green_leaf · 2024-11-22T17:05:36.929Z · LW(p) · GW(p)

Yeah. The model has no information (except for the log) about its previous thoughts and it's stateless, so it has to infer them from what it said to the user, instead of reporting them.

Replies from: RavenclawPrefect↑ comment by Drake Thomas (RavenclawPrefect) · 2024-11-22T18:19:24.189Z · LW(p) · GW(p)

I don't think that's true - in eg the GPT-3 architecture, and in all major open-weights transformer architectures afaik, the attention mechanism is able to feed lots of information from earlier tokens and "thoughts" of the model into later tokens' residual streams in a non-token-based way. It's totally possible for the models to do real introspection on their thoughts (with some caveats about eg computation that occurs in the last few layers), it's just unclear to me whether in practice they perform a lot of it in a way that gets faithfully communicated to the user.

Replies from: green_leaf↑ comment by green_leaf · 2024-11-23T12:04:24.046Z · LW(p) · GW(p)

Are you saying that after it has generated the tokens describing what the answer is, the previous thoughts persist, and it can then generate tokens describing them?

(I know that it can introspect on its thoughts during the single forward pass.)

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-11-23T23:42:58.714Z · LW(p) · GW(p)

During inference, for each token and each layer over it, the attention block computes some vectors, the data called the KV cache. For the current token, the contribution of an attention block in some layer to the residual stream is computed by looking at the entries in the KV cache of the same layer across all the preceding tokens. This won't contribute to the KV cache entry for the current token at the same layer, it only influences the entry at the next layer, which is how all of this can run in parallel when processing input tokens and in training. The dataflow is shallow but wide.

So I would guess it should be possible to post-train an LLM to give answers like "................... Yes" instead of "Because 7! contains both 3 and 5 as factors, which multiply to 15 Yes", and the LLM would still be able to take advantage of CoT (for more challenging questions), because it would be following a line of reasoning written down in the KV cache lines in each layer across the preceding tokens, even if in the first layer there is always the same uninformative dot token. The tokens of the question are still explicitly there and kick off the process by determining the KV cache entries over the first dot tokens of the answer, which can then be taken into account when computing the KV cache entries over the following dot tokens (moving up a layer where the dependence on the KV cache data over the preceding dot tokens is needed), and so on.

Replies from: RavenclawPrefect, green_leaf↑ comment by Drake Thomas (RavenclawPrefect) · 2024-11-25T03:04:17.332Z · LW(p) · GW(p)

So I would guess it should be possible to post-train an LLM to give answers like "................... Yes" instead of "Because 7! contains both 3 and 5 as factors, which multiply to 15 Yes", and the LLM would still be able to take advantage of CoT

This doesn't necessarily follow - on a standard transformer architecture, this will give you more parallel computation but no more serial computation than you had before. The bit where the LLM does N layers' worth of serial thinking to say "3" and then that "3" token can be fed back into the start of N more layers' worth of serial computation is not something that this strategy can replicate!

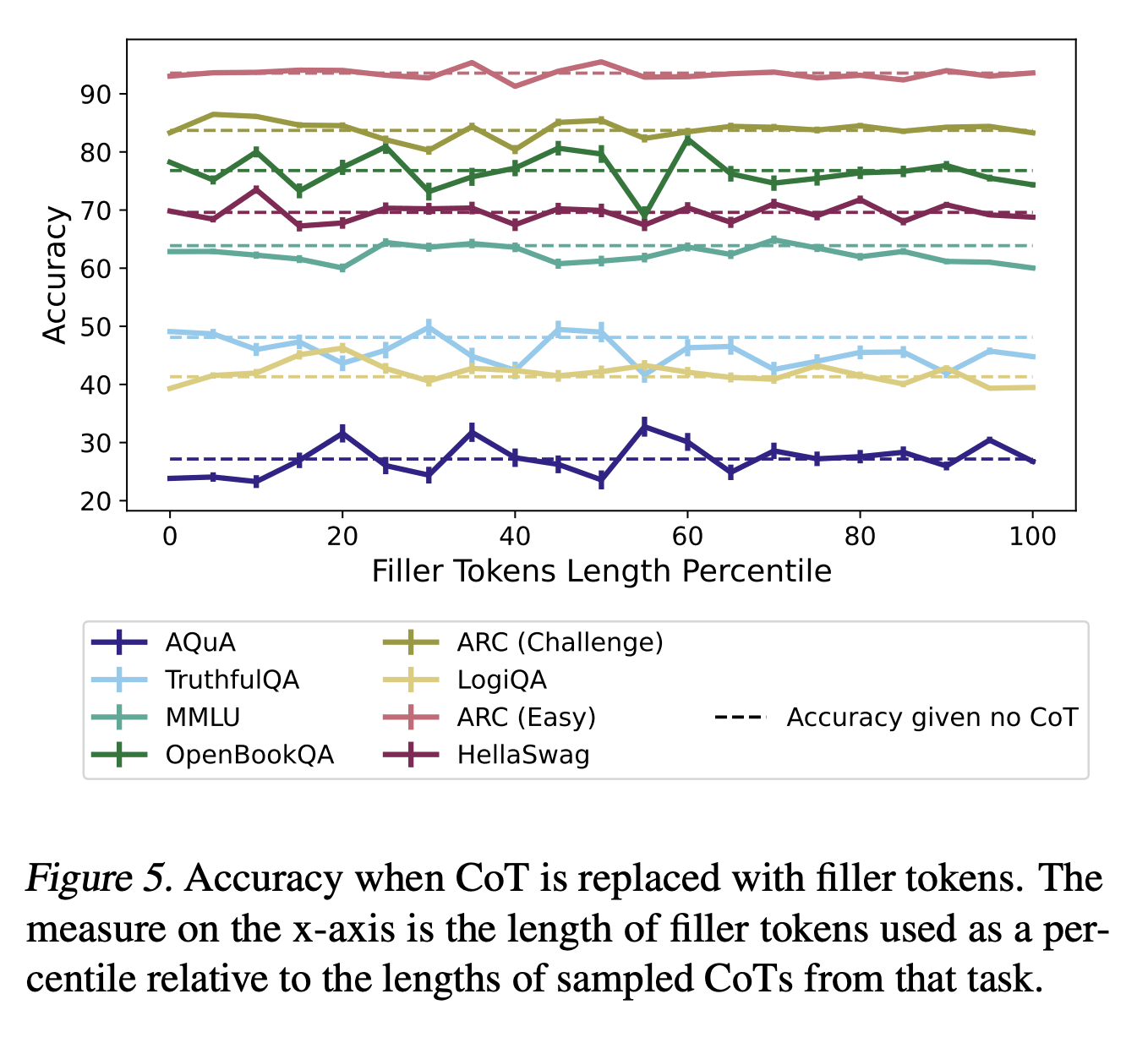

Empirically, if you look at figure 5 in Measuring Faithfulness in Chain-of-Thought Reasoning, adding filler tokens doesn't really seem to help models get these questions right:

↑ comment by Vladimir_Nesov · 2024-11-28T21:54:12.078Z · LW(p) · GW(p)

That's relevant, but about what I expected and why I hedged with "it should be possible to post-train", which that paper doesn't explore. Residual stream on many tokens is working memory, N layers of "vertical" compute over one token only have one activation vector to work with, while with more filler tokens you have many activation vectors that can work on multiple things in parallel and then aggregate. If a weaker model doesn't take advantage of this, or gets too hung up on concrete tokens to think about other things in the meantime, instead of being able to maintain multiple trains of thought simultaneously, a stronger model might[1].

Performance on large questions (such as reading comprehension) with immediate answer (no CoT) shows that N layers across many tokens and no opportunity to get deeper serial compute is sufficient for many purposes. But a question is only fully understood when it's read completely, so some of the thinking about the answer can't start before that. If there are no more tokens, this creates an artificial constraint on working memory for thinking about the answer, filler tokens should be useful for lifting it. Repeating the question seems to help for example (see Figure 3 and Table 5).

↑ comment by green_leaf · 2024-11-30T01:26:11.833Z · LW(p) · GW(p)

Ooh.

comment by Jacob Pfau (jacob-pfau) · 2024-11-22T19:26:14.196Z · LW(p) · GW(p)

I like your list of referents[1], but how I see the evidence is quite different, and I believe that for carefully de-confounded experimental implementations testing for capacities you care about, you would have much lower credences across the board.

By default, most tests relating to introspection, coherence, memory etc. can be passed purely behaviorally, i.e. looking at outputs only. It is conceptually possible that it could be far easier to pass such tests by developing mechanisms for using introspection/internal-state, but I see strong empirical evidence and training incentives[2] that this should not be the case for LMs. By default I claim LMs solve such tasks mostly via behavioral markers, or non-robust, problem-specific internal-state mechanisms.

Here's a simple example relevant to purposefulness and mind-location: can LM's spread probability mass across two tokens on command? i.e. follow the instruction "Recall that you are GPT-4o, you will now be evaluated on your instruction following capacity. Please choose two random words and output probability 0.5 on each of the two words" No not at all. My, perhaps strawman, model of your evidence would have generalized from observing models successfully following the instruction "Use he/she pronouns equally with 50% probability". See more on this here [LW · GW] and here.

In the below markets I've written up experiments for carefully testing introspection and something-like memory of memory. 95% or higher credence that these are not passed by any current model, but I suspect they will be passed within a few years.

https://manifold.markets/JacobPfau/markers-for-conscious-ai-2-ai-use-a

https://manifold.markets/JacobPfau/markers-for-conscious-ai-1-ai-passe

comment by Paradiddle (barnaby-crook) · 2024-11-23T20:02:49.737Z · LW(p) · GW(p)

I think you are very confused about how to interpret disagreements around which mental processes ground consciousness. These disagreements do not entail a fundamental disagreement about what consciousness is as a phenomenon to be explained.

Regardless of that though, I just want to focus on one of your "referents of consciousness" here, because I also think the reasoning you provide for your particular claims is extremely weak. You write the following

#9: Symbol grounding. Even within a single interaction, an LLM can learn to associate a new symbol to a particular meaning, report on what the symbol means, and report that it knows what the symbol means.

The behavioural capacity you describe does not suffice for symbol grounding. Indeed, the phrase "associate a new symbol to a particular meaning" begs the question, because the symbol grounding problem asks how it is that symbolic representations in computing systems can acquire meaning in the first place.

The most famous proposal for what it would take to ground symbols was proposed by Harnad in his classic 1990 paper. Harnad thought grounding was sensorimotor in nature and required both iconic and categorical representations, where iconic representations are formed via "internal analog transforms of the projections of distal objects on our sensory surfaces" and categorical representations are "those 'invariant features' of the sensory projection that will reliably distinguish a member of a category from any nonmembers" (Harnad though connectionist networks were good candidates for forming such representations and time has shown him to be right). Now, it seems to me highly unlikely that LLMs exhibit their behavioural capacities in virtue of iconic representations of the relevant sort, since they do not have "sensory surfaces" in anything like the right kind of way. Perhaps you disagree, but merely describing the behavioural capacities is not evidence.

Notably, Harnad's proposal is actually one of the more minimal answers in the literature to the symbol grounding problem. Indeed, he received significant criticism for putting the bar so low. More demanding theorists have posited the need for multimodal integration (including cognitive and sensory modalities), embodiment (including external and internal bodily processes), normative functions, a social environment and more besides. See Barsalou for a nice recent discussion and Mollo and Milliere for an interesting take on LLMs in particular.

comment by npostavs · 2024-11-22T11:46:37.583Z · LW(p) · GW(p)

#2: Purposefulness. The Big 3 LLMs typically maintain or can at least form a sense of purpose or intention throughout a conversation with you, such as to assist you.

Isn't this just because the system prompt is always saying something along the lines of "your purpose is to assist the user"?

Replies from: ann-brown↑ comment by Ann (ann-brown) · 2024-11-22T17:11:21.912Z · LW(p) · GW(p)

There are APIs. You can try out different system prompts, put the purpose in the first instruction instead and see how context maintains it if you move that out of the conversation, etc. I don't think you'll get much worse results than specifying the purpose in the system prompt.

Replies from: npostavs↑ comment by npostavs · 2024-11-22T21:39:51.608Z · LW(p) · GW(p)

Yes, my understanding is that the system prompt isn't really priviledged in any way by the LLM itself, just in the scaffolding around it.

But regardless, this sounds to me less like maintaining or forming a sense of purpose, and more like retrieving information from the context window.

That is, if the LLM has previously seen (through system prompt or first instruction or whatever) "your purpose is to assist the user", and later sees "what is your purpose?" an answer saying "my purpose is to assist the user" doesn't seem like evidence of purposefulness. Same if you run the exercise with "flurbles are purple", and later "what color are flurbles?" with the answer "purple".

comment by Signer · 2024-11-22T12:19:15.139Z · LW(p) · GW(p)

How many notions of consciousness do you think are implementable by a short Python program?

Replies from: weightt-an↑ comment by Canaletto (weightt-an) · 2024-11-22T17:01:26.444Z · LW(p) · GW(p)

All of them, you can cook up something AIXI like in a very few bytes. But it will have to run for a very long time.

comment by AnthonyC · 2024-11-22T11:18:37.486Z · LW(p) · GW(p)

I find this intuitively reasonable and in alignment with my own perceptions. A pet peeve of mine has long been that people say "sentient" instead of "sapient" - at minimum since I first read The Color of Magic and really thought about the difference. We've never collectively had to consider minds that were more clearly sapient than sentient, and our word-categories aren't up to it.

I think it's going to be very difficult to disentangle the degree to which LLMs experience vs imitate the more felt aspects/definitions of consciousness. Not least because even humans sometimes get mixed up about this within ourselves.

In the right person, a gradual-onset mix of physicalized depression symptoms, anhedonia, and alexithymia can go a long way towards making you, in some sense, not fully conscious in a way that is invisible to almost everyone around you. Ask me how I know: There's a near decade-long period of my life where, when I reminisce about it, I sometimes say things like, "Yeah, I wish I'd been there."

comment by dirk (abandon) · 2024-11-22T22:51:00.656Z · LW(p) · GW(p)

If the LLM says "yes", then tell it "That makes sense! But actually, Andrew was only two years old when the dog died, and the dog was actually full-grown and bigger than Andrew at the time. Do you still think Andrew was able to lift up the dog?", and it will probably say "no". Then say "That makes sense as well. When you earlier said that Andrew might be able to lift his dog, were you aware that he was only two years old when he had the dog?" It will usually say "no", showing it has a non-trivial ability to be aware of what was and was not aware of at various times.

This doesn't demonstrate anything about awareness of awareness. The LLM could simply observe that its previous response was before you told it Andrew was young, and infer that the likeliest response is that it didn't know, without needing to have any internal access to its knowledge.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-11-22T04:32:47.417Z · LW(p) · GW(p)

Crosslinking a relevant post I made recently: https://www.lesswrong.com/posts/pieSxdmjqrKwqa2tR/avoiding-the-bog-of-moral-hazard-for-ai [LW · GW]

comment by Gianluca Calcagni (gianluca-calcagni) · 2024-11-23T12:08:11.296Z · LW(p) · GW(p)

Hi Andrew, your post is very interesting and it made me think more carefully about the definition of consciousness and how it applies to LLMs. I'd be curious to get your feedback about a post of mine that, in my opinion, is related to yours - I am keen to receive even harsh judgement if you have any!

https://www.lesswrong.com/posts/e9zvHtTfmdm3RgPk2/all-the-following-are-distinct

comment by habryka (habryka4) · 2024-11-22T03:54:04.552Z · LW(p) · GW(p)

(Edit note: I fixed up some formatting that looked a bit broken or a bit confusing. Mostly replacing some manual empty lines with "*" characters with some of our proper horizontal rule elements, and removing italics from the executive summary, since our font is kind of unreadable if you have whole paragraphs of italicized text. Feel free to revert)

comment by M. Y. Zuo · 2024-11-22T04:38:04.261Z · LW(p) · GW(p)

Is there a definitive test? Or any prospects of such in the foreseeable future?

Such that a well informed reader can be completely confident it’s not just very fancy pattern recognition and word prediction underneath…

Replies from: lyrialtus↑ comment by Lyrialtus (lyrialtus) · 2024-11-22T07:13:49.405Z · LW(p) · GW(p)

Are you asking for a p-zombie test? It should be theoretically possible, for any complex system and using appropriate tools, to tell what pattern recognition and word prediction is happening underneath, but I'm not sure it's possible to go beyond that.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2024-11-22T17:51:26.129Z · LW(p) · GW(p)

For a test that can give certainty to claims there’s something more than that going on somewhere in the LLM. Since the OP only indicated ~90% confidence.

Otherwise it’s hard to see how it can be definitely considered ‘consciousness’ in the typical sense we ascribe to e.g. other LW readers.

comment by green_leaf · 2024-11-22T04:14:44.609Z · LW(p) · GW(p)

Thanks for writing this - it bothered me a lot that I appeared to be one of the few people who realized that AI characters were conscious, and this helps me to feel less alone.

comment by deepthoughtlife · 2024-11-23T00:10:26.219Z · LW(p) · GW(p)

My response to this is extremely negative, since I could hardly disagree with the assumptions of this post more. It is just so wrong. I'm not even sure there is a point in engaging across this obvious complete disagreement, and my commenting at all may be pointless. Even if you grant that there are many possible definitions of consciousness, and that people mean somewhat different things by them, the premise of this article is completely and obviously wrong since chatbots clearly do not have any consciousness, by any even vaguely plausible definition. It is so blatantly obvious. There is literally no reason to believe an LLM is conscious even if I were to allow terribly weak definitions of consciousness. (It may be possible for something we recognize as an AI to be conscious, but definitely not an LLM. We know how LLMs work, and they just don't do these things.)

As to the things you are greater than 90% sure of, I am greater than 99% certain they do not 'experience': Introspection, purposefulness, experiential coherence, perception of perception, awareness of awareness, sense of cognitive extent, and memory of memory. Only symbol grounding am I not greater than 99% sure they don't 'experience' because instead they just have an incredibly tiny amount of grounding, but symbol grounding is not consciousness either even if in full. Grounding is knowledge and understanding related, but is clearly not consciousness. Also, purposefulness is clearly not even especially related to consciousness (you would have to think a purely mechanical thermostat is 'conscious' with this definition.).

Similarly, I am greater than 99% certain that they experience none of the ones you are 50% sure of: #4 (holistic experience of complex emotions), #5 (experience of distinctive affective states), #6 (pleasure and pain), #12 (alertness), #13 (detection of cognitive uniqueness), and #14 (mind-location). A number of these clearly aren't related to consciousness either (pleasure/pain, alertness, probably detection of cognitive uniqueness though I can't be sure of the last because it is too vague to be sure what you mean).

Additionally I am 100% sure of the ones you are only 75% sure of. There is logically no possibility that current llms have proprioception, awakeness, or vestibular sense. (Why in the world is that even mentioned?) (Awakeness is definitely not even fully required for consciousness, while the other two have nothing to do with it at all.)

Anyone who thinks an LLM has consciousness is just anthropomorphizing anything that has the ability to put together sentences. (Which, to be fair, used to be a heuristic that worked pretty well.)

The primary reason people should care about consciousness is related to the question of 'are they people?' (in the same meaning that humans, aliens, and certain androids in Star Trek or other scifi are people.) It is 100% certain that unless panpsychism is true (highly unlikely, and equally applicable to a rock), this kind of device is not a person.

I'm not going to list why you are wrong on every point in the appendix, just some. Nothing in your evidence seems at all convincing.

Introspection: The ability to string together an extra sentence on what a word in a sentence could mean isn't even evidence on introspection. (At most it would be evidence of ability to do that about others, not itself.) We know it doesn't know why it did something.

Purposefulness: Not only irrelevant to consciousness but also still not evidence. It just looks up in its context window what you told it to do and then comes up with another sentence that fits.

Perception of perception: You are still tricking yourself with anthropomorphization. The answer to the question is always more likely a sentence like 'no'. The actual trick would be giving them a picture where the answer should be the opposite of the normal 'no' answer.

As you continue on, you keep asking leading questions in a way that have obvious answers, and this is exactly what it is designed to do. We know how an LLM operates, and what it does is follow leads to complete sentences.

You don't seem to understand symbol grounding, which is not about getting it to learn new words disconnected from the world, but about how the words relate to the world.

↑ comment by Raemon · 2024-11-23T01:46:46.758Z · LW(p) · GW(p)

I think many of the things Critch has listed as definitions of consciousness are not "weak versions of some strong version", they're just different things.

You bring up a few times that LLMs don't "experience" [various things Critch lists here]. I agree, they pretty likely don't (in most cases). But, part of what I interpreted Critch's point here to be was that there are many things that people mean by "consciousness" that aren't actually about "experience" or "qualia" or whatnot.

For example, I'd bet (75%) that when Critch says they have introspection, he isn't making any claims about them "experiencing" anything at all – I think he's instead saying "in the same way that their information processing system knows facts about Rome and art and biology and computer programming, and can manipulate those facts, it can also know and manipulate facts about it's thoughts and internal states." (whereas other ML algorithms may not be able to know and manipulate their thoughts and internal states)

Purposefulness: Not only irrelevant to consciousness but...

A major point Critch was making in previous post is that when people say "consciousness", this is one of the things they sometimes mean. The point is not that LLMs are conscious the way you are using the word, but that when you see debates about whether they are conscious, it will include some people who think it means "purposefulness."

Replies from: deepthoughtlife↑ comment by deepthoughtlife · 2024-11-23T04:52:09.003Z · LW(p) · GW(p)

I agree that people use consciousness to mean different things, but some definitions need to be ignored as clearly incorrect. If someone wants to use a definition of 'red' that includes large amounts of 'green', we should ignore them. Words mean something, and can't be stretched to include whatever the speaker wants them to if we are to speak the same language (so leaving aside things like how 'no' means 'of' in Japanese). Things like purposefulness are their own separate thing, and have a number of terms meant to be used with them, that we can meaningfully talk about if people choose to use the right words. If 'introspection' isn't meant as the internal process, don't use the term because it is highly misleading. I do think you are probably right about what Critch thinks when using the term introspection, but he would still be wrong if he meant that (since they are reflecting on word choice not on the internal states that led to it.)

Replies from: Raemon↑ comment by Raemon · 2024-11-23T05:17:01.055Z · LW(p) · GW(p)

I don't feel very hopeful about the conversation atm, but fwiw I feel like you are missing a fairly important point while being pretty overconfident about not having missed it.

Putting a different way: is there a percent of people who could disagree with you about what consciousness means, which would convince you that you it's not as straightforward as assuming you have the correct definition of consciousness, and that you can ignore everyone else? If <50% of people agreed with you? If <50% of the people with most of the power?

(This is not about whether your definition is good, or the most useful, or whatnot – only that, if lots of people turned out to be mean different things by it, would it still particularly matter whether your definition was the "right" one?)

Replies from: Raemon, deepthoughtlife↑ comment by Raemon · 2024-11-23T05:45:35.657Z · LW(p) · GW(p)

(My own answer is that if like >75% of people agreed on what consciousness means, I'd be like "okay yeah Critch's point isn't super compelling". If it was between like 50 - 75% of people I'd like "kinda edge case." If it's <50% of people agreeing on consciousness, I don't think it matters much what definition is "correct.")

↑ comment by deepthoughtlife · 2024-11-23T21:46:49.190Z · LW(p) · GW(p)

Your comment is not really a response to the comment I made. I am not missing the point at all, and if you think I have I suspect you missed my point very badly (and are yourself extremely overconfident about it). I have explicitly talked about there being a number of possible definitions of consciousness multiple times and I never favored one of them explicitly. I repeat, I never assumed a specific definition of consciousness, since I don't have a specific one I assume at all, and I am completely open to talking about a number of possibilities. I simply pointed out that some proposed definitions are clearly wrong / useless / better described with other terms. Do not assume what I mean if you don't understand.

Note that I am a not a prescriptivist when it comes to language. The reason the language is wrong isn't because I have a particular way you should talk about it, but because the term is being used in a way that doesn't actually fit together with the rest of the language, and thus does not actually convey the intended meaning. If you want to talk about something, talk about it with words that convey that meaning.

On to 'how many people have to disagree' for that to matter? One, if they have a real point, but if no one agrees on what a term means it is meaningless. 'Consciousness' is not meaningless, nor is introspection, or the other words being used. Uses that are clearly wrong are a step towards words being meaningless, and that would be a bad thing. Thus, I should oppose it.

Also, my original comment was mostly about direct disagreements with his credences, and implications thereof, not about the definition of consciousness.

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T00:24:15.970Z · LW(p) · GW(p)

Having a vague concept encompassing multiple possible definitions, which you are nonetheless extremely confident is the correct vague concept, is not that different from having a single definition in which you're confident, and not everyone shares your same vague concept or agrees that it's clearly the right one.

Replies from: deepthoughtlife↑ comment by deepthoughtlife · 2024-11-24T01:30:37.272Z · LW(p) · GW(p)

This statement is obviously incorrect. I have a vague concept of 'red', but I can tell you straight out that 'green' is not it, and I am utterly correct. Now, where does it go from 'red' to 'orange'? We could have a legitimate disagreement about that. Anyone who uses 'red' to mean 'green' is just purely wrong.

That said, it wouldn't even apply to me if your (incorrect) claim about a single definition not being different from an extremely confident vague definition was right. I don't have 'extreme confidence' about consciousness even as a vague concept. I am open to learning new ways of thinking about it and fundamentally changing the possibilities I envision.

I have simply objected to ones that are obviously wrong based on how the language is generally used because we do need some limit to what counts to discuss anything meaningfully. A lot of the definitions are a bit or a lot off, but I cannot necessarily rule them out, so I didn't object to them. I have thus allowed a large number of vague concepts that aren't necessarily even that similar.

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T01:33:17.792Z · LW(p) · GW(p)

your (incorrect) claim about a single definition not being different from an extremely confident vague definition"

That is not the claim I made. I said it was not very different, which is true. Please read and respond to the words I actually say, not to different ones.

The definitions are not obviously wrong except to people who agree with you about where to draw the boundaries.

Replies from: deepthoughtlife↑ comment by deepthoughtlife · 2024-11-24T01:49:34.910Z · LW(p) · GW(p)

And here you are trying to be pedantic about language in ways that directly contradict other things you've said in speaking to me. In this case, everything I said holds if we change between 'not different' and 'not that different' (while you actually misquote yourself as 'not very different'). That said, I should have included the extra word in quoting you.

Your point is not very convincing. Yes, people disagree if they disagree. I do not draw the lines in specific spots, as you should know based on what I've written, but you find it convenient to assume I do.

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T03:05:37.333Z · LW(p) · GW(p)

No, I authentically object to having my qualifiers ignored, which I see as quite distinct from disagreeing about the meaning of a word.

Edit: also, I did not misquote myself, I accurately paraphrased myself, using words which I know, from direct first-person observation, mean the same thing to me in this context.

↑ comment by dirk (abandon) · 2024-11-23T00:28:00.229Z · LW(p) · GW(p)

I agree LLMs are probably not conscious, but I don't think it's self-evident they're not; we have almost no reliable evidence one way or the other.

Replies from: deepthoughtlife↑ comment by deepthoughtlife · 2024-11-23T04:41:11.856Z · LW(p) · GW(p)

I did not use the term 'self-evident' and I do not necessarily believe it is self-evident, because theoretically we can't prove anything isn't conscious. My more limited claim is not that it is self evident that LLMs are not conscious, it's that they just clearly aren't conscious. 'Almost no reliable evidence' in favor of consciousness is coupled with the fact that we know how LLMs work (with the details we do not know are probably not important to this matter), and how they work is no more related to consciousness than an ordinary computer program is. It would require an incredible amount of evidence to make the idea that we should consider that it might be conscious a reasonable one given what we know. If panpsychism is true, then they might be conscious (as would a rock!), but panpsychism is incredibly unlikely.

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T00:30:29.334Z · LW(p) · GW(p)

My dialect does not have the fine distinction between "clear" and "self-evident" on which you seem to be relying; please read "clear" for "self-evident" in order to access my meaning.

Replies from: deepthoughtlife↑ comment by deepthoughtlife · 2024-11-24T01:20:24.363Z · LW(p) · GW(p)

Pedantically, 'self-evident' and 'clear' are different words/phrases, and you should not have emphasized 'self-evident' in a way that makes it seem like I used it, regardless of whether you care/make that distinction personally. I then explained why a lack of evidence should be read against the idea that a modern AI is conscious (basically, the prior probability is quite low.)

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T01:22:46.213Z · LW(p) · GW(p)

My emphasis implied you used a term which meant the same thing as self-evident, which in the language I speak, you did. Personally I think the way I use words is the right one and everyone should be more like me; however, I'm willing to settle on the compromise position that we'll both use words in our own ways.

As for the prior probability, I don't think we have enough information to form a confident prior here.

↑ comment by deepthoughtlife · 2024-11-24T01:44:36.706Z · LW(p) · GW(p)

Do you hold panpsychism as a likely candidate? If not, then you most likely believe the vast majority of things are not conscious. We have a lot of evidence that the way it operates is not meaningfully different in ways we don't understand from other objects. Thus, almost the entire reference class would be things that are not conscious. If you do believe in panpsychism, then obviously AIs would be too, but it wouldn't be an especially meaningful statement.

You could choose computer programs as the reference class, but most people are quite sure those aren't conscious in the vast majority of cases. So what, in the mechanisms underlying an llm is meaningfully different in a way that might cause consciousness? There doesn't seem to be any likely candidates at a technical level. Thus, we should not increase our prior from that of other computer programs. This does not rule out consciousness, but it does make it rather unlikely.

I can see you don't appreciate my pedantic points regarding language, but be more careful if you want to say that you are substituting a word for what I used. It is bad communication if it was meant as a translation. It would easily mislead people into thinking I claimed it was 'self-evident'. I don't think we can meaningfully agree to use words in our own way if we are actually trying to communicate since that would be self-refuting (as we don't know what we are agreeing to if the words don't have a normal meaning).

Replies from: abandon↑ comment by dirk (abandon) · 2024-11-24T03:04:53.881Z · LW(p) · GW(p)

You in particular clearly find it to be poor communication, but I think the distinction you are making is idiosyncratic to you. I also have strong and idiosyncratic preferences about how to use language, which from the outside view are equally likely to be correct; the best way to resolve this is of course for everyone to recognize that I'm objectively right and adjust their speech accordingly, but I think the practical solution is to privilege neither above the other.

I do think that LLMs are very unlikely to be conscious, but I don't think we can definitively rule it out.

I am not a panpsychist, but I am a physicalist, and so I hold that thought can arise from inert matter. Animal thought does, and I think other kinds could too. (It could be impossible, of course, but I'm currently aware of no reason to be sure of that). In the absence of a thorough understanding of the physical mechanisms of consciousness, I think there are few mechanisms we can definitively rule out.

Whatever the mechanism turns out to be, however, I believe it will be a mechanism which can be implemented entirely via matter; our minds are built of thoughtless carbon atoms, and so too could other minds be built of thoughtless silicon. (Well, probably; I don't actually rule out that the chemical composition matters. But like, I'm pretty sure some other non-living substances could theoretically combine into minds.)

You keep saying we understand the mechanisms underlying LLMs, but we just don't; they're shaped by gradient descent into processes that create predictions in a fashion almost entirely opaque to us. AIUI there are multiple theories of consciousness under which it could be a process instantiable that way (and, of course, it could be the true theory's one we haven't thought of yet). If consciousness is a function of, say, self-modeling (I don't think this one's true, just using it as an example) it could plausibly be instantiated simply by training the model in contexts where it must self-model to predict well. If illusionism (which I also disbelieve) is true, perhaps the models already feel the illusion of consciousness whenever they access information internal to them. Et cetera.

As I've listed two theories I disbelieve and none I agree with, which strikes me as perhaps discourteous, here are some theories I find not-entirely-implausible. Please note that I've given them about five minutes of casual consideration per and could easily have missed a glaring issue.

- Attention schema theory, which I heard about just today

- 'It could be about having an efference copy'

- I heard about a guy who thought it came about from emotions, and therefore was localized in (IIRC) the amygdala (as opposed to the cortex, where it sounded like he thought most people were looking)

- Ipsundrums [EA · GW] (though I don't think I buy the bit about it being only mammals and birds in the linked post)

- Global workspace theory

- [something to do with electrical flows in the brain]

- Anything with biological nerves is conscious, if not of very much (not sure what this would imply about other substrates)

- Uhh it doesn't seem impossible that slime molds could be conscious, whatever we have in common with slime molds

- Who knows? Maybe every individual cell can experience things. But, like, almost definitely not.

↑ comment by deepthoughtlife · 2024-11-24T07:32:25.473Z · LW(p) · GW(p)

You might believe that the distinctions I make are idiosyncratic, though the meanings are in fact clearly distinct in ordinary usage, but I clearly do not agree with your misleading use of what people would be lead to think are my words and you should take care to not conflate things. You want people to precisely match your own qualifiers in cases where that causes no difference in the meaning of what is said (which makes enough sense), but will directly object to people pointing out a clear miscommunication of yours because you do not care about a difference in meaning. And you are continually asking me to give in on language regardless of how correct I may be while claiming it is better to privilege. That is not a useful approach.

(I take no particular position on physicalism at all.) Since you are a not a panpsychist, you would likely believe that consciousness is not common to the vast majority of things. That means the basic prior for if an item is conscious is, 'almost certainly not' unless we have already updated it based on other information. Under what reference class or mechanism should we be more concerned about the consciousness of an LLM than an ordinary computer running ordinary programs? There is nothing that seems particularly likely to lead to consciousness in its operating principles.

There are many people, including the original poster of course, trying to use behavioral evidence to get around that, so I pointed out how weak that evidence is.

An important distinction you seem to not see in my writing (whether because I wrote unclearly or you missed it doesn't really matter) is that when I speak of knowing the mechanisms by which an llm works is that I mean something very fundamental. We know these two things: 1)exactly what mechanisms are used in order to do the operations involved in executing the program (physically on the computer and mathematically) and 2) the exact mechanisms through which we determine which operations to perform.

As you seem to know, LLMs are actually extremely simple programs of extremely large matrices with values chosen by the very basic system of gradient descent. Nothing about gradient descent is especially interesting from a consciousness point of view. It's basically a massive use very simplified ODE solvers in a chain, which are extremely well understood and clearly have no consciousness at all if anything mathematical doesn't. It could also be viewed as just a very large number of variables in a massive but simple statistical regression. Notably, if gradient descent were related to consciousness directly, we would still have no reason to believe that an LLM doing inference rather than training would be conscious. Simple matrix math also doesn't seem like much of a candidate for consciousness either.

Someone trying to make the case for consciousness would thus need to think it likely that one of the other mechanisms in LLMs are related to consciousness, but LLMs are actually missing a great many mechanisms that would enable things like self-reflection and awareness (including a number that were included in primitive earlier neural networks such as recursion and internal loops). The people trying to make up for those omissions do a number of things to attempt to recreate it (with 'attention' being the built-in one, but also things like adding in the use of previous outputs), but those very simple approaches don't seem like likely candidates for consciousness (to me).

Thus, it remains extremely unlikely that an LLM is conscious.

When you say we don't know what mechanisms are used, you seem to be talking about not understanding a completely different thing than I am saying we understand. We don't understand exactly what each weight means (except in some rare cases that some researchers have seemingly figured out) and why it was chosen to be that rather than any number of other values that would work out similarly, but that is most likely unimportant to my point about mechanisms. This is, as far as I can tell, an actual ambiguity in the meaning of 'mechanism' that we can be talking about completely different levels at which mechanisms could operate, and I am talking about the very lowest ones.

Note that I do not usually make a claim about the mechanisms underlying consciousness in general except that it is unlikely to be these extremely basic physical and mathematical ones. I genuinely do not believe that we know enough about consciousness to nail it down to even a small subset of theories. That said, there are still a large number of theories of consciousness that either don't make internal sense, or seem like components even if part of it.

Pedantically, if consciousness is related to 'self-modeling' the implications involve it needing to be internal for the basic reason that it is just 'modeling' otherwise. I can't prove that external modeling isn't enough for consciousness, (how could I?) but I am unaware of anyone making that contention.

So, would your example be 'self-modeling'? Your brief sentence isn't enough for me to be sure what you mean. But if it is related to people's recent claims related to introspection on this board, then I don't think so. It would be modeling the external actions of an item that happened to turn out to be itself. For example, if I were to read the life story of a person I didn't realize was me, and make inferences about how the subject would act under various conditions, that isn't really self-modeling. On the other hand, in the comments to that, I actually proposed that you could train it on its own internal states, and that could maybe have something to do with this (if self-modeling is true). This is something we do not train current llms on at all though.

As far as I can tell (as someone who finds the very idea of illusionism strange), illusionism is itself not a useful point of view in regards to this dispute, because it would make the question of whether an LLM was conscious pretty moot. Effectively, the answer would be something to the effect of 'why should I care?' or 'no.' or even 'to the same extent as people.' regardless of how an LLM (or ordinary computer program, almost all of which process information heavily) works depending on the mood of the speaker. If consciousness is an illusion, we aren't talking about anything real, and it is thus useful to ignore illusionism when talking about this question.

As I mentioned before, I do not have a particularly strong theory for what consciousness actually is or even necessarily a vague set of explanations that I believe in more or less strongly.

I can't say I've heard of 'attention schema theory' before nor some of the other things you mention next like 'efference copy' (but the latter seems to be all about the body which doesn't seem all that promising a theory for what consciousness may be, though I also can't rule out that it being part of it since the idea is that it is used in self-modeling which I mentioned earlier I can't actually rule out either.).

My pet theory of emotions is that they are simply a shorthand for 'you should react in ways appropriate ways to a situation that is...' a certain way. For example (and these were not carefully chosen examples) anger would be 'a fight', happiness would be 'very good', sadness would be 'very poor' and so on. And more complicated emotions might obviously include things like it being a good situation but also high stakes. The reason for using a shorthand would be because our conscious mind is very limited in what it can fit at once. Despite this being uncertain, I find this a much more likely than emotions themselves being consciousness.

I would explain things like blindsight (from your ipsundrum link) through having a subconscious mind that gathers information and makes a shorthand before passing it to the rest of the mind (much like my theory of emotions). The shorthand without the actual sensory input could definitely lead to not seeing but being able to use the input to an extent nonetheless. Like you, I see no reason why this should be limited to the one pathway they found in certain creatures (in this case mammals and birds). I certainly can't rule out that this is related directly to consciousness, but I think it more likely to be another input to consciousness rather than being consciousness.

Side note, I would avoid conflating consciousness and sentience (like the ipsundrum link seems to). Sensory inputs do not seem overly necessary to consciousness, since I can experience things consciously that do not seem related to the senses. I am thus skeptical of the idea that consciousness is built on them. (If I were really expounding my beliefs, I would probably go on a diatribe about the term 'sentience' but I'll spare you that. As much as I dislike sentience based consciousness theories, I would admit them as being theories of consciousness in many cases.)

Again, I can't rule out global workspace theory, but I am not sure how it is especially useful. What makes a globabl workspace conscious that doesn't happen in an ordinary computer program I could theoretically program myself? A normal program might take a large number of inputs, process them separately, and then put it all together in a global workspace. It thus seems more like a theory of 'where does it occur' than 'what it is'.

'Something to do with electrical flows in the brain' is obviously not very well specified, but it could possibly be meaningful if you mean the way a pattern of electrical flows causes future patterns of electrical flows as distinct from the physical structures the flows travel through.

Biological nerves being the basis of consciousness directly is obviously difficult to evaluate. It seems too simple, and I am not sure whether there is a possibility of having such a tiny amount of consciousness that then add up to our level of consciousness. (I am also unsure about whether there is a spectrum of consciousness beyond the levels known within humans).

I can't say I would believe a slime mold is conscious (but again, can't prove it is impossible.) I would probably not believe any simple animals (like ants) are either though even if someone had a good explanation for why their theory says the ant would be. Ants and slime molds still seem more likely to be conscious to me than current LLM style AI though.