Posts

Comments

Large Language Models, Small Labor Market Effects?

We examine the labor market effects of AI chatbots using two large-scale adoption surveys (late 2023 and 2024) covering 11 exposed occupations (25,000 workers, 7,000 workplaces), linked to matched employer-employee data in Denmark. AI chatbots are now widespread—most employers encourage their use, many deploy in-house models, and training initiatives are common. These firm-led investments boost adoption, narrow demographic gaps in take-up, enhance workplace utility, and create new job tasks. Yet, despite substantial investments, economic impacts remain minimal. Using difference-in-differences and employer policies as quasi-experimental variation, we estimate precise zeros: AI chatbots have had no significant impact on earnings or recorded hours in any occupation, with confidence intervals ruling out effects larger than 1%. Modest productivity gains (average time savings of 2.8%), combined with weak wage pass-through, help explain these limited labor market effects. Our findings challenge narratives of imminent labor market transformation due to Generative AI.

From marginal revolution.

What does this crowd think? These effects are surprisingly small. Do we believe these effects? Anecdotally the effect of LLMs has been enormous for my own workflow and colleagues. How can this be squared with the supposedly tiny labor market effect?

Are we that selected of a demographic?

I am not sure what 'it' refers to in 'it is bad'.

People read more into this shortform than I intended. It is not a cryptic reaction, criticism, or reply to/of another post.

I don't know what you mean by intelligent [pejorative] but it sounds sarcarcastic.

To be clear, the low predictive efficiency is not a dig at archeology. It seems I have triggered something here.

Whether a question/domain has low or high (marginal) predictive effiency is not a value judgement, just an observation.

I don't dispute these facts.

The Marginal Returns of Intelligence

A lot of discussion of intelligence considers it as a scalar value that measures a general capability to solve a wide range of tasks. In this conception of intelligence it is primarily a question of having a ' good Map' . This is a simplistic picture since it's missing the intrinsic limits imposed on prediction by the Territory. Not all tasks or domains have the same marginal returns to intelligence - these can vary wildly.

Let me tell you about a 'predictive efficiency' framework that I find compelling & deep and that will hopefully give you some mathematical flesh to these intuitions. I initially learned about these ideas in the context of Computational Mechanics, but I realized that there underlying ideas are much more general.

Let be a predictor variable that we'd like to use to predict a target variable under a joint distribution . For instance could be the contex window and could be the next hundred tokens, or could be the past market data and is the future market data.

In any prediction task there are three fundamental and independently varying quantities that you need to think of:

- is the irreducible uncertainty or the intrinsic noise that remains even when is known.

- quantifies the reducible uncertainty or the amount of predictable information contained in .

For the third quantity, let us introduce the notion of causal states or minimally sufficient statistics. We define an equivalence relation on by declaring

The resulting equivalence classes, denoted as , yield a minimal sufficient statistic for predicting . This construction is ``minimal'' because it groups together all those that lead to the same predictive distribution , and it is ``sufficient'' because, given the equivalence class , no further refinement of can improve our prediction of .

From this, we define the forecasting complexity (or statistical complexity) as

which measures the amount of information---the cost in bits---to specify the causal state of . Finally, the \emph{predictive efficiency} is defined by the ratio

which tells us how much of the complexity actually contributes to reducing uncertainty in . In many real-world domains, even if substantial information is stored (high ), the gain in predictability () might be modest. This situation is often encountered in fields where, despite high skill ceilings (i.e. very high forecasting complexity), the net effect of additional expertise is limited because the predictive information is a small fraction of the complexity.

Example of low efficiency.

Let be the outcome of 100 independent fair coin flips, so each has bits.

Define as a single coin flip whose bias is determined by the proportion of heads in . That is, if has heads then:

- Total information in : \\

When averaged over all possible , the mean bias is 0.5 so that is marginally a fair coin. Hence,

- Conditional Entropy or irreducible uncertainty : \\

Given , the outcome is drawn from a Bernoulli distribution whose entropy depends on the number of heads in . For typical (around 50 heads), bit; however, averaging over all yields a slightly lower value. Numerically, one finds:

- Predictable Information : \\

With the above numbers, the mutual information is

- Forecasting Complexity : \\

The causal state construction groups together all sequences with the same number of heads. Since , there are 101 equivalence classes. The entropy of these classes is given by the entropy of the binomial distribution . Using an approximation:

- Predictive Efficiency :

In this example, a vast amount of internal structural information (the cost to specify the causal state) is required to extract just a tiny bit of predictability. In practical terms, this means that even if one possesses great expertise—analogous to having high forecasting complexity —the net benefit is modest because the inherent (predictive efficiency) is low. Such scenarios are common in fields like archaeology or long-term political forecasting, where obtaining a single predictive bit of information may demand enormous expertise, data, and computational resources.

Please read carefully what I wrote - I am talking about energy consumption worldwide not electricity consumption in the EU. Electricity in the EU accounts only for a small percentage of carbon emissions.

See

As you can see, solar energy is still a tiny percentage of total energy sources. I don't think it is an accident that the electricity split graph in the EU has been cited in this discussion because it is a proxy that is much more rose-colored.

Energy and electricity are often conflated in discussions around climate change, perhaps not coincidentally because the latter seems much more tractable to generate renewably than total energy production.

No, it is not confused. Be careful with reading precisely what I wrote. I said total energy production worldwide, not electricity production in the european union.

As you can see Solar is still a tiny percentage of energy consumption. That is not to say that things will not change - I certainly hope so! I give it significant probability. But if we are to be honest with ourselves than it is currently yet to be seen whether solar energy will prove to be the solution.

Moreover, in the case that solar energy does take over and ' solve' climate change that still does not prove the thesis - that solar energy solving climate change being majorly the result of deliberate policy instead of the result of market forces / ceteris paribus technological development.

I somewhat agree but

- The correlation is not THAT strong

- The correlation differs by field

And finally there is a difference between skill ceilings for domains with high versus low predictive efficiency. In the latter much more intelligence will still yield returns but rapidly diminishing

(See my other comment for more details on predictive effiency)

One aspect I didnt speak about that may be relevant here is the distinction between

irreducible uncertainty h (noise, entropy)

reducible uncertainty E ('excess entropy')

and forecasting complexity C ('stochastic complexity').

All three can independently vary in general.

Domains can be more or less noisy (more entropy h)- both inherently and because of limited observations

Some domains allow for a lot of prediction (there is a lot of reducible uncertainty E) while others allow for only limited prediction (eg political forecasting over longer time horizons)

And said prediction can be very costly to predict (high forecasting complexity C). Archeology is a good example: to predict one bit about the far past correctly might require an enormous amount of expertise, data and information. In other words it s really about the ratio between the reducible uncertainty and the forecasting complexity: E/C.

Some fields have very high skill ceiling but because of a low E/C ratio the net effect of more intelligence is modest. Some domains arent predictable at all, i.e. E is low. Other domains have a more favorable E/C ratio and C is high. This is typically a domain where there is a high skill ceiling and the leverage effect of addiitonal intelligence is very large.

[For a more precise mathematical toy model of h, E,C take a look at computational mechanics]

Wow! I like the idea of persuasion as acting on the lack of a fully coherent preference! Something to ponder 🤔

Is Superhuman Persuasion a thing?

Sometimes I see discussions of AI superintelligence developping superhuman persuasion and extraordinary political talent.

Here's some reasons to be skeptical of the existence of 'superhuman persuasion'.

- We don't have definite examples of extraordinary political talent.

Famous politicians rose to power only once or twice. We don't have good examples of an individual succeeding repeatedly in different political environments.

Examples of very charismatic politicans can be better explained by ' the right person at the right time or place'.

- Neither do we have strong examples of extraordinary persuasion.

>> For instance hypnosis is mostly explained by people wanting to be persuaded by the hypnotist. If you don't want to be persuaded it's very hard to change your mind. There is some skill in persuasion required for sales, and sales people are explicitly trained to do so but beyond a fairly low bar the biggest predictors for salesperson success is finding the correct audience and making a lot of attempts.

Another reason has to do with the ' intrinsic skill ceiling of a domain' .

For an agent A to have a very high skill in a given domain is not just a question of the intelligence of A or the resources they have at their disposal; it also a question of how high the skill ceiling of that domain is.

Domains differ in how high their skill ceilings go. For instance, the skill ceiling of tic-tac-toe is very low. [1] Domains like medicine and law have moderately high skill ceiling: it takes a long time to become a doctor, and most people don't have the ability to become a good doctor.

Domains like mathematics or chess have very high skill ceilings where a tiny group of individuals dominate everybody else. We can measure this fairly explicitly in games like Chess through an ELO rating system.

The domain of ' becoming rich' is mixed: the richest people are founders - becoming a wildly succesful founder requires a lot of skill but it is also very luck based.

Political forecasting is a measureable domain close to political talent. It seems to be very mixed bag whether this domain allows for a high skill ceiling. Most ' political experts' are not experts as shown by Tetlock et al. But even superforecaster only outperform for quite limited time horizons.

Domains with high skill ceilings are quite rare. Typically they operate in formal systems with clear rules and objective metrics for success and low noise. By contrast, persuasion and political talent likely have lower natural ceilings because they function in noisy, high-entropy social environments.

What we call political genius often reflects the right personality at the right moment rather than superhuman capability. While we can identify clear examples of superhuman technical ability (even in today's AI systems), the concept of "superhuman persuasion" may be fundamentally limited by the unpredictable, context-dependent, and adaptive & adversarial [people resist hostile persuasion] nature of human social response.

Most persuasive domains may cap out at relatively modest skill ceilings because the environment is too chaotic and subjective to allow for the kind of systematic skill development possible in more structured domains.

- ^

I'm amused that many frontier models still struggle with tic-tac-toe; though likely for not so good reasons.

Ryan Greenblatt on steering the AI paradigm:

I'm skeptical of strategies which look like "steer the paradigm away from AI agents + modern generative AI paradigm to something else which is safer". Seems really hard to make this competitive enough and I have other hopes that seem to help a bunch while being more likely to be doable.

(This isn't to say I expect that the powerful AI systems will necessarily be trained with the most basic extrapolation of the current paradigm, just that I think steering this ultimate paradigm to be something which is quite different and safer is very difficult.)

I agree and I would add I am generally suspicious of a general pattern here. Let's call it the ' Steering Towards Safe Paradigms'-strategy.

It goes something like this:

>>> There is a technology X causing public harm Z. The problem is that X is very competitive - individual actors benefit greatly from using X.

Instead of working to

(i) [develop technologies to] mitigate the harms of technology X,

(ii) put in existing safeguards or develop new safeguards to prevent harm Z,

(iii) or ban/govern technology X

we should instead purposely and deliberately develop a technology Y that is fundamentally different than X that does the same role as X without harm Z but slightly less competitively. >>>

A good example could be the development of renewable energy to replace fossil fuels to prevent climate change.

I am generally skeptical of strategies that look like ' steer the paradigm' as I think it overstates in general how much influence ' we' (individuals, governments, human society as a whole etc) have and falls prey to a social desirability bias. In so far as it is based on uncalibrated hopes and beliefs about the actual action space it can be net harmful.

Note that this is distinct from humanity automatically developing a technology Y' that is more competitive than X without harm Z because Y' is actually just straight up the next step in the tech tree that is more competitive for mundane market reasons.

An example could be the benefit of plastic billard balls instead of ivory billard balls saving the elephant. Although saving the elephant was iirc part of the reason to develop plastic billard balls the reason it worked was more mundane: plastic billard balls are far cheaper than ivory billard balls. In other words, standard market forces were likely the prime cause, not deliberate and purposeful policy to develop a less competitive but safer technology.

Let's zoom in to the renewable energy example. Are these examples where the ' Steering towards Safe Paradigms'-strategy was succesful?

The new tech (fusion, fission, solar, wind) is based on fundamental principles than the old tech (oil and gas).

Fusion would be an example but perpetually thirty years away. Fission works but wasnt purposely develloped to fight climate change. Wind is deliberatily and purposefully developed to combat climate change but unfortunately it is not competitive without large subsidies and most likely never will [mostly because of low energy density and off-peak load balance concerns].

Solar is at least somewhat competitive with fossil fuels [except because of load balancing it may not be able to replace fossil fuels completely] and purposely developped out of environmental concerns and would be the best example.

I think my main question marks here is: solar energy is still a promise. It hasnt even begun to make a dent in total energy consumption ( a quick perplexity search reveals only 2 percent of global energy is solar-generated). Despite the hype it is not clear climate change will be solved by solar energy.

Moreover, the real question is to what degree the development of competitive solar energy was the result of a purposeful policy. People like to believe that tech development subsidies have a large counterfactual but imho this needs to be explicitly proved and my prior is that the effect is probably small compared to overall general development of technology & economic incentives that are not downstream of subsidies / government policy.

Let me contrast this with two different approaches to solving a problem Z (climate change).

- Deploy existing competitive technology (fission)

- Mitigate the harm directly (geo-engineering)

It seems to me that in general the latter two approaches have a far better track record of counterfactually Actually Solving the Problem. And perhaps more cynically, the hopeful promise of 'Steering towards Safe Paradigms' - strategy in combatting climate change is taking away from the above more mundane strategies.

My comment was a little ambiguous. What I meant was human society purposely differentially researching and developing technology X instead of Y where Y has a public (global) harm Z but private benefit and X is based on a different design principle than Y but slightly less competitive but still able to replace Y.

A good example would be the development of renewable energy to replace fossil fuels to prevent climate change.

The new tech (fusion, fission, solar, wind) is based on fundamental principles than the old tech (oil and gas).

Lets zoom in:

Fusion would be an example but perpetually thirty years away. Fission works but wasnt purposely develloped to fight climate change. Wind is not competitive without large subsidies and most likely never will.

Solar is at least lomited competitive with fossil fuels [except because of load balancing it may not be able to replace fossil fuels completely] , purposely developped out of environmental concerns and would be the best example.

I think my main question marks here is: solar energy is still a promise. It hasnt even begun to make a dent in total energy consumption ( a quick perplexity search reveals only 2 percent of global energy is solar-generated). Despite the hype it is not clear climate change will be solved by solar energy.

Moreover, the real question is to what degree the development of competitive solar energy was the result of a purposeful policy. People like to believe that tech development subsidies have a large counterfactual but imho this needs to be explicitly proved and my prior is that the effect is probably small compared to overall general development of technology & economic incentives that are not downstream of subsidies / government policy.

Let me contrast this with two different approaches to solving a problem Z (climate change).

- Deploy existing competitive technology (fission)

- Solve the problem directly (geo-engineering)

It seems to me that in general the latter two approaches have a far better track record of counterfactually Actually Solving the Problem.

Yes - this is specifically staying within the framework of hidden markov chains.

Even if you go outside though it seems you agree there is a generative predictive gap - you're just saying it's not infinite.

Eggsyntax below gives the canonical example of hash function where prediction is harder than generation which hold for general computable processes.

A suggestive analogy of AI takeover might be the early-modern European colonial takeovers of the New World, India under Pizarro, Cortez, Clives.

Colonialism took several hundred years but the major disempowerement events were quite relatively quick, involving explicit hostile action using superior technology [and many native allies].

Are you imagining AI takeover to be slower or similar to Pizarro/Cortez/Clives campaigns?

A potential counterargument to gradual disempowerement is that one of the decisive advantages that AIs have over humanity & its decisionmaking processing are its speed of thinking and it's speed and coherency of decision-making (not now but for future agentic systems). Most decisionmaking at a national and international level is slow and mostly concerned with symbolics because of the inherent limitations of decisionmaking at the scale of large human societies [even authoritarian states struggle with good and fast decisionmaking]. AIs could circumvent this and act much faster and decisively.

Another argument is that humans aren't stupid. The public doesn't trust AI. When the power of AI is clearly visible it seems likely the public will react given time. Being publicly semi-hostile will incur a response. One shouldn't fall into the sleepwalker bias. Faking alignment and then suddenly striking seems to be a much more rational move than gradual disempowerment.

Congratulations on this paper. It seems like a major result.

Any chance of more exposition for those of us less cognitively-inclined? =)

the steelman is that quants do the version of technical analysis that works - they disprove the EMH proportional to quant salaries.

Couldn't agree more. Variants of this strategy get proposed often.

If you are a proponent of this strategy - I'm curious whether you know of any examples in history where humanity purposefully and succesfully steered towards a significantly less competitive [economically, militarily,...] technology that was nonetheless safer.

Eightfold path of option trading

Threefold duality and the Eightfold path of Option Trading

It is a truth universally acknowledged that the mere whiff of duality is catnip to the mathematician.

Given any asset X, like a stock, sold for a price P means there is a duality between buying and selling: one party buys X for P while another sells X for P.

Implicitly there is another duality: instead of interchanging the buy and sell actions, one can interchange the asset X and the price P, treating money as an asset and the asset as a medium of exchange.

A European call option at strike price S gives one the option to buy the underlying asset X at price S on the expiration date. [1]

There is a dual option - called a (European) put option that gives one the right [option] to sell the underlying on the expiration date.

Optionality is beautiful. Optionality is brilliant. The pursuit of optionality writ large is the great purpose of the higher limbic system. An option is optionality incarnate, offering limited downside yet unlimited upside potential.

There are much more classic financial instruments, but options represent a powerful abstraction layer on top of direct asset ownership.

Rk. Any security's payoff profile can be theoretically replicated using only put and call options. This is known as the Options Replication Theorem or sometimes the Fundamental Theorem of Asset Pricing in its broader form.

Options themselves can be bought and sold.

There is now a fourfold of options:

Buy a Call option

Sell a Call option

Buy a Put option

Sell a Put option

A single transaction might offer all four in some combination at the same time.

Creating options

There is a third hidden duality in option trading - that between owning and creating an option. So far we have regarded as the existence of options as given - options are bought and sold and they can be exercised (extinguished) but where do options come from? But options have to be created.

Creating an option is called a 'writing an option'. Writing an option is an inherent dangerous activity, only done by the largest, strongest, most experienced economical actors. Writing an option exposes its scribe to unlimited downside yet limited upside.

Why is this interesting?

- Options are closely related to infraBayesianism and Imprecise Probability

- Duality is always interesting.

- There has been some speculation about a Logic of Capitalism - intrinstic Grammatica of Cash. Perhaps the eightfold duality of option trading is the skeleton key to its formulation.

- ^ There is a minor difference between so-called 'American' style and 'European' style options. The former allows exercising the options up to the expiry date while the latter only allows exercising at the date. I'm taking European options by default, mostly because the Black-Scholes equation[2] is more easily expressed for European options - they admit a closed form solutions while there is no closed form solution for American options in general.

- ^ Note that the Black-Scholes equation is a special case of the Hamilton-Jacobi-Bellman equation, the central equation in both physics and reinforcement learning & control theory.

In an anthropically selected world one would expect to see some conspicuous coincidences - especially related to events and people that are highly counterfactual. Most events are not highly counterfactual. How this or that person lived and died - for the grand course of history it didn't matter.

A small group of individuals plausibly did change the course of history, at least somewhat. Unfortunately, it seems it much more likely to be counterfactually impactful by being very evil than being very good. It is easier to break things and easier murder en masse than to save en masse.

No fewer than 42 plots to kill Adolf Hitler have been uncovered by historians. The true number is likely higher due to an unknown number of undocumented cases. Overwhelmingly these were serious murder plots by high-ranking military officials, resistance fighters or clever lone wolfs. In other words - these could have been succesful...

Voters are irrational.

Voters want better health care, a powerful military, a strong social security net, but also lower taxes. There's 120% demands but only 100% to go around.

Voters also often believe their pet issue voting bloc is more powerful than it actually is. It’s an interesting question to ask why people like to believe their voting bloc is larger than it really is. One reason might be that the people that are ill-calibrated on their own causal impact are more likely to show up as a voter.

It follows that an effective politician lies. There is no blame to go to politicans really. The average politician is a Christ-like figure that takes on the karmic burden of dishonesty for the dharma, because voters are children.

A conventional politician lies sure - but he or she would not want to be known as a liar. Being known as a liar seems bad. But there is another type of politician: the 'Brazen Liar Politican' who is known to be a liar, who lies blatantly and lies often, who puts no effort in hiding their lies.

How could this possibly be a good political strategy? Well the voters know that he's a liar but paradoxically that may make them more likely to vote for him. This is because voters are irrational. Many voters with may believe the Brazen Liar Politician may ultimately favor their special interest, their pet issue while throwing other voter blocs (suckers!) under the bus - after the election.

In this regime - paradoxically - it may become advantageous to be known to be dishonest!

Forecasting and scenario building has become quite popular and prestigious in EA-adjacent circles. I see extremely detailed scenario building & elaborate narratives.

Yes AI will be big, AGI plausibly close. But how much detail can one really expect to predict? There were a few large predictions that some people got right, but once one zooms in the details don't fit while the correct predictions were much more widespread within the group of people that were paying attention.

I can't escape the feeling that we're quite close to the limits of the knowable and 80% of EA discourse on this is just larp.

Does anybody else feel this way?

Why no large hive intelligences?

Ants, social wasps, bees, termites dominate vertebrate biomass. On the insect level these are incredibly dominant in the biosphere. Eusociality is a game-changing tech. Yet with a single exception there are no large hive intelligence. That single exception is the naked mole rat - a rare non-dominant species. Why?

Claude suggests:

- Genetic predisposition: Hymenopteran insects (ants, bees, wasps) have a haplodiploid genetic system where females share 75% of their genes with sisters but only 50% with their own offspring. This creates a genetic incentive for females to raise sisters rather than their own young, facilitating eusociality. Vertebrates lack this genetic system.

- Ecological factors: Small, confined spaces like burrows or nests favor eusocial evolution. Insects can thrive in tiny spaces inaccessible to larger vertebrates.

- Cognitive complexity: The greater cognitive abilities of vertebrates may actually work against eusociality, as it enables more complex individual decision-making and flexibility rather than rigid, colony-focused behaviors.

I'm not entirely convinced. The haplodiploid genetic systems isn't universal for eusocial species. The point of eusociality is exactly that one can build burrows at scale [e.g. like beavers]. The point about cognitive complexity is the best argument imho but I don't quite see how it would block eusociality.

The Ammann Hypothesis: Free Will as a Failure of Self-Prediction

A fox chases a hare. The hare evades the fox. The fox tries to predict where the hare is going - the hare tries to make it as hard to predict as possible.

Q: Who needs the larger brain?

A: The fox.

This is a little animal tale meant to illustrate the following phenomenon:

Generative complexity can be much smaller than predictive complexity under partial observability. In other words, when partially observing a blackbox there are simple internal mechanism that create complex patterns that require very large predictors to predict well.

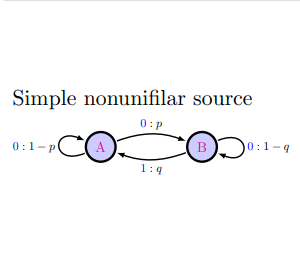

Consider the following simple 2-state HMM

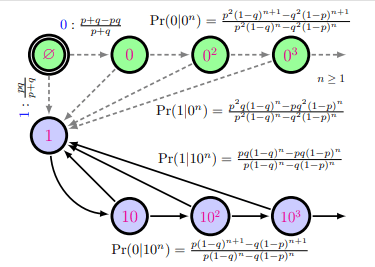

Note that the symbol 0 is output in three different ways: A -> A, A-> B, and B -> B. This means that if we see the symbol 0 we don't know where we are. We can use Bayesian updating to guess where we are but starting from a stationary distribution our belief states can become extremely complicated - in fact, the data sequence generated by the simple nonunifalar source has an optimal predictor HMM that requires infinitely many states :

This simple example illustrates the gap between generative complexity and predictive complexity, a generative-predictive gap.

I note that in this case the generative-predictive is intrinsic. The gap happens even (especially!) in the ideal limit of perfect prediction!

Free Will as generative-predictive gap

The brain is a predictive engine. So much is accepted. Now imagine an organism/agent endowed with a brain predicting the external world. To do well, it may be helpful to predict its own actions. What if this process has a predictive-generative gap?The brain will ascribe an inherent uncertainty ['entropy'] to its own actions!

An agent having a generative-predictive gap for predicting its own action would experience a mysterious force ' choosing' its actions. It may even decide to call this irreducible uncertainty of self-prediction "Free Will" .

************************************************************

[Nora Ammann initially suggested this idea to me. Similar ideas have been expressed by Steven Byrnes]

I've heard of this extraordinary finding. As for any extraordinary evidence, the first question should be: is the data accurate?

Does anybody know if this has been replicated?

I did not realize the death penalty for captains failing to engage was the proper context for Horatio Nelson's immortal signal " England expects that every man will do his duty"

'taste', ' mathematical beauty', ' interesting to mathematicians' aren't arbitrary markers but reflect a deeper underlying structure that is, I believe, ultimately formalizable.

It does not seem unlikely to me at all that it will be possible to mathematically describe those true statements that are moreover of particular beauty or likely interest to mathematicians (human, artificial or alien).

The Godel speedup story is an interesting point. I haven't thought deeply enough about this but IIRC the original ARC heuristic arguments has several sections on this and related topics. You might want to consult there.

it's the mystery of love, John

The people require it, sir.

Social sciences suffer from social drsirability bias, very noisy data, difficult to formalize concepts/abstractions too leaky, and difficulties with controls. Additionally, many people have strong (and often false) intuitions about social reality overriding scientific judgement.

One could call the social world more "complex" than physical realm but "complex" is a tricky word... A human is arguably more complex than an atom; yet the models that social scientists use are much less complex than used by physicists.

Although I wouldn't dispute the stats that you are citing here, John, I would guess these might be downstream from above difficulties.

I asked a well-known string theorist about the fabled 10^500 vacua and asked him whether he worried that this would make string theory a vacuous theory since a theory that fits anything fits nothing. He replied ' no, no the 10^500 'swampland' is a great achievement of string theory - you see... all other theories have infinitely many adjustable parameters'. He was saying string theory was about ~1500 bits away from the theory of everything but infinitely ahead of its competitors.

Diabolical.

Much ink has been spilled on the scientific merits and demerits of string theory and its competitors. The educated reader will recognize that this all this and more is of course, once again, solved by UDASSA.

No.

For those who might not have noticed Dan's clever double entendre: (Khovanov) homology is literally about counting/measuring holes in weird high-dimensional spaces - designing a new homology theory is in a very real sense about looking for holes that are not (yet) there.

I watched the video. It doesnt seem to say that China is behind in machine tooling - rather the opposite: prices are falling, capacity is increasing, new technology is rapidly adopted.

The best and most recent (last year) evidence based on comparing ancient and modern genomes seems to suggest intelligence was selected very strongly during agricultural revolution (a full SD) and has changed <0.2SD since AD0 [for the populations studied]

It seems that the evolutionary pressure for intelligence wasnt that strong in the last few thousand years compared to selection on many other traits (health and sexual selected traits seem to dominate).

Edit: it would take some effort to dig up this study. Ping me if this is of interest to you.

Cephalopods are highly intelligent but also have a very short lifecycle and very large number of offspring - making them a prime target for a artificial breeding program for intelligence uplifting.

E.g. the lifecycle of many octopi is about 1-2 years. A 15 year program could potentially breed for very significant behaviourial changes.

For instance, dog and horse breeds can be made within 10 generations.

Google says the female giant pacific octopis lays between 120k and 400k eggs at the end of her life. Giant pacific octopi live about 3-5 years.

I asked Claude to make some back of the enveloppe calculations

>>>I'll use the breeder's equation to estimate the potential response to selection for intelligence in octopuses. The breeder's equation states:

R = h² × S

Where:

- R is the response to selection (change in trait mean per generation)

- h² is the narrow-sense heritability of the trait

- S is the selection differential (difference between selected parents and population mean)

Let's make some reasonable assumptions for these parameters in octopuses:

- Heritability (h²): For cognitive traits in animals, heritability typically ranges from 0.2 to 0.6. Let's use h² = 0.4 as a moderate estimate for octopus intelligence.

- Selection differential (S): With 120,000-400,000 eggs per female, we could be extremely selective. If we select the top 0.1% of individuals (still giving us 120-400 individuals), we could achieve a selection differential of about 3 standard deviations.

Let's calculate the response to selection per generation:

R = 0.4 × 3 = 1.2 standard deviations per generation

For a breeding program over 15 years with Giant Pacific Octopuses (3-5 year lifecycle):

- Using a 4-year lifecycle: 15 ÷ 4 = 3.75 generations (let's round to 4 generations)

- Total expected gain: 4 × 1.2 = 4.8 standard deviations

For octopuses with a shorter 1-2 year lifecycle:

- Using a 1.5-year lifecycle: 15 ÷ 1.5 = 10 generations

- Total expected gain: 10 × 1.2 = 12 standard deviations

This represents a substantial shift in the distribution of intelligence. For context, the difference in IQ between average humans and those considered profoundly gifted is about 4 standard deviations. A shift of 12 standard deviations would be extraordinary.

However, several factors would likely limit these theoretical gains:

- Selection plateaus as genetic variation is depleted

- Pleiotropy (genes affecting multiple traits) may create unfavorable trade-offs

- The trait may reach biological/physiological limits

- Inbreeding depression could become an issue with intense selection

Even with these limitations, the potential for significant intelligence enhancement in octopuses through selective breeding appears substantial, especially for species with shorter lifecycles.

Alexander reacting to Claude back of enveloppe calculation:

Narrow sense heritability is probably higher for intelligence. Indeed, at this level of selection one would have to worry about inbreeding depression, selection plateaus etc

My best guess is that 6 SD would be possible IF one was actually able to accurately select top 0.1%.

This is probably quite hard. GWAS data for humans currently does not allow for this kind of precision. Doing the selection accurately / i.e. estimating the gradient is the main rate-limiting step [as it is in deep learning!]. One would need to construct psychometrically valid tests for cephalopod, run them at scale.

How much is 6 SD? Octopi might be about as intelligent as a dog (uncertain about this). 6 SD would be quite insane, and would naively plausibly push them tonthe upper end of dolphin/chimpanzee intelligence. The main limitor in my mind is that octopi are not natively social species and are do not have a long enough lifecycle to do significant learning so this might not actually lead to intelligence uplifting. Additionlly, there is the issue that octopi dont have vocal chords so would need to communicate differently.

Ofc like the famous Soviet silver fox breeding program one could separately select for sociability [which might be more important for effective intelligence. Iirc wolfs usually outperform dogs cognitively yet some dog breeds are generally considered more intelligent in a relevant sense.]

I wouldn't claim to be an expert on the UK system but from talking with colleagues at UCL it seems to be the case that French positions are more secure and given out earlier [and this was possibly a bigger difference in the past]. I am not entirely sure about the number 32. Anecdotally, I would say many of the best people I know did not obtain tenure this early. This is something that may also vary by field - some fields are more popular, better funded because of [perceived] practical applications.

Mathematiscs is very different from other fields. For instance: it is more long-tailed, benefits from ' deep research, deep ideas' far more than other fields, is difficult to paralellize, has ultimate ground truth [proofs], and in large fraction of subfields [e.g. algebraic geometry, homotopy theory ...] the amount of prerequisite knowledge is very large,[1] has many specialized subdisciplines , there are no empirical

All these factors suggest that the main relevant factor of production is how many positions that allow intellectuall freedom, are secure, at a young age plus how they are occupied by talented people is.

- ^

e.g. it often surprises outsiders that in certian subdisciplines of mathematics even very good PhD students will often struggle reading papers at the research frontier - even after four years of specialized study.

Yes I use LLMs in my writing [not this comment] and I strongly encourage others to do so too.

This the age of Cyborgism. Jumping on making use of the new capabilities opening up will likely be key to getting alignment right. AI is coming, whether you like it or not.

There is also a mundane reason: I have an order of magnitude more ideas than I can write down. Using LLMs allows me to write an essay in 30 min which otherwise would take half a day.

Sure happy to disagree on this one.

Fwiw, the French dominance isn't confined to Bourbakist topics. E.g. Pierre Louis Lions won one of the French medals and is the world most cited mathematician, with a speciality in PDEs. Some of his work investigates the notion of general nonsmooth ("viscosity") solutions for the general Hamilton-Jacobi(-Bellmann) equation both numerically and analytically. It's based on a vast generalization of the subgradient calculus ("nonsmooth" calculus), and is very directly related to good numerical approximation schemes.

Certainly for many/most other subjects the French system is not so good. E.g. for ML all that theory is mostly a waste.

Those are some good points certainly.

The UK/US system typically gives tenure around ~40, typically after ~two postdocs and a assistant -> associate -> full prof.

In the French system a typical case might land an effectively tenured job at 30. Since 30-40 is a decade of peak creativity for scientists in general, mathematicians in particular I would say this is highly

Laurent Lafforgue is a good example. Iirc he published almost nothing for seven years after his PhD until the work that he did for the Fields medal. He wouldnt have gotten a job in the American system.

He is an extreme example but generically having many more effectively tenured positions at a younger age means that mathematicians feel the freedom to doggedly pursue important, but perhaps obscure-at-present, research bets.

My point is primarily that the selection is at 20, instead of at 18. It s not about training per se, although here too the French system has an advantage. Paris has ~ 14 universities, a number of grand ecolees, research labs, etc a large fraction which do serious research mathematics. Paris consequently has the largest and most diverse assortiment of advanced coursework in the world. I don't believe there is any place in the US that compares [I've researched this in detail in the past].

Why Do the French Dominate Mathematics?

France has an outsized influence in the world of mathematics despite having significantly fewer resources than countries like the United States. With approximately 1/6th of the US population and 1/10th of its GDP, and French being less widely spoken than English, France's mathematical achievements are remarkable.

This dominance might surprise those outside the field. Looking at prestigious recognitions, France has won 13 Fields Medals compared to the United States' 15 a nearly equal achievement despite the vast difference in population and resources. Other European nations lag significantly behind, with the UK having 8, Russia/Soviet Union 6/9, and Germany 2.

France's mathematicians are similarly overrepresented in other mathematics prizes and honors, confirming this is not merely a statistical anomaly.

I believe two key factors explain France's exceptional performance in mathematics while remaining relatively average in other scientific disciplines:

1. The "Classes Préparatoires" and "Grandes Écoles" System

The French educational system differs significantly from others through its unique "classes préparatoires" (preparatory classes) and "grandes écoles" (elite higher education institutions).

After completing high school, talented students enter these intensive two-year preparatory programs before applying to the grandes écoles. Selection is rigorously meritocratic, based on performance in centralized competitive examinations (concours). This system effectively postpones specialization until age 20 rather than 18, allowing for deeper mathematical development during a critical cognitive period.

The École Normale Supérieure (ENS) stands out as the most prestigious institution for mathematics in France. An overwhelming majority of France's top mathematicians—including most Fields Medalists—are alumni of the ENS. The school provides an ideal environment for mathematical talent to flourish with small class sizes, close mentorship from leading mathematicians, and a culture that prizes abstract thinking.

This contrasts with other countries' approaches:

- Germany traditionally lacked elite-level mathematical training institutions (though the University of Bonn has recently emerged as a center of excellence)

- The United States focuses on mathematics competitions for students under 18, but these competitions often emphasize problem-solving skills that differ significantly from those required in mathematical research

The intellectual maturation between ages 18 and 20 is profound, and the French system capitalizes on this critical developmental window.

2. Career Stability Through France's Academic System

France offers significantly more stable academic positions than many other countries. Teaching positions throughout the French system, while modestly compensated, effectively provide tenure and job security.

This stability creates an environment where mathematicians can focus on deep, long-term research without the publish-or-perish pressure common in other academic systems. In mathematics particularly, where breakthroughs often require years of concentrated thought on difficult problems, this freedom to think without immediate productivity demands is invaluable.

While this approach might be less effective in experimental sciences requiring substantial resources and team management, for mathematics—where the primary resource is time for thought—it has proven remarkably successful.

ADHD is about the Voluntary vs Involuntary actions

The way I conceptualize ADHD is as a constraint on the quantity and magnitude of voluntary actions I can undertake. When others discuss actions and planning, their perspective often feels foreign to me—they frame it as a straightforward conscious choice to pursue or abandon plans. For me, however, initiating action (especially longer-term, less immediately rewarding tasks) is better understood as "submitting a proposal to a capricious djinn who may or may not fulfill the request." The more delayed the gratification and the longer the timeline, the less likely the action will materialize.

After three decades inhabiting my own mind, I've found that effective decision-making has less to do with consciously choosing the optimal course and more with leveraging my inherent strengths (those behaviors I naturally gravitate toward, largely outside my conscious control) while avoiding commitments that highlight my limitations (those things I genuinely intend to do and "commit" to, but realistically never accomplish).

ADHD exists on a spectrum rather than as a binary condition. I believe it serves an adaptive purpose—by restricting the number of actions under conscious voluntary control, those with ADHD may naturally resist social demands on their time and energy, and generally favor exploration over exploitation.

Society exerts considerable pressure against exploratory behavior. Most conventional advice and social expectations effectively truncate the potential for high-variance exploration strategies. While one approach to valuable exploration involves deliberately challenging conventions, another method simply involves burning bridges to more traditional paths of success.

I use LLMs throughout my personal and professional life. The productivity gains are immense. Yes hallucination is a problem but it's just as spam/ads/misinformation on wikipedia/internet - an small drawback that doesn't oblivate the ginormous potential of the internet/LLMs

I am 95% certain you are leaving value on the table.

I do agree straight LLMs are not generally intelligent (in the sense of universal intelligence/AIXI) and therefore not completely comparable to humans.

This was basically my model since i first started paying attention to modern AI

Curious why did you think differently before ? :)