A computational no-coincidence principle

post by Eric Neyman (UnexpectedValues) · 2025-02-14T21:39:39.277Z · LW · GW · 38 commentsThis is a link post for https://www.alignment.org/blog/a-computational-no-coincidence-principle/

Contents

Our no-coincidence conjecture How we came up with the statement Thoughts for theoretical computer scientists Why we care None 39 comments

This post presents a conjecture formulated at the Alignment Research Center in 2023. Our belief in the conjecture is at least partly load-bearing for our belief in ARC's overall agenda. We haven't directly worked on the conjecture for a while now, but we believe the conjecture is interesting in its own right.

In a recent paper in Annals of Mathematics and Philosophy, Fields medalist Timothy Gowers asks why mathematicians sometimes believe that unproved statements are likely to be true. For example, it is unknown whether is a normal number (which, roughly speaking, means that every digit appears in with equal frequency), yet this is widely believed. Gowers proposes that there is no sign of any reason for to be non-normal -- especially not one that would fail to reveal itself in the first million digits -- and in the absence of any such reason, any deviation from normality would be an outrageous coincidence. Thus, the likely normality of is inferred from the following general principle:

No-coincidence principle (Gowers): If an apparently outrageous coincidence happens in mathematics, then there is a reason for it.

In other words: suppose that the digit 3 accounts for not 10% but 11% of digits in the decimal expansion of . Intuitively, there ought to be an explanation of this fact: maybe not a proof, but something that someone could say to me that would make me say, "Oh, I get it now, it makes sense that 3 appears more than 10% of the time in ".

(For an apparently outrageous coincidence that turns out to be true, check out Chebyshev's bias: in a sense, primes that are 3 mod 4 are more common than primes that are 1 mod 4. As expected by Gowers' no-coincidence principle, we have a heuristic understanding for why this should be true. However, we only have a proof conditioned on strong forms of the Riemann hypothesis.)

The no-coincidence principle is profound, but also informal. The purpose of this post is to propose a formalization, so that we can at least ask whether or not it's true. In this post, I will:

- State ARC's computational no-coincidence conjecture, which is our best attempt at formalizing Gowers' no-coincidence principle (in a restricted setting).

- Explain how we came up with the statement: why this one, and not a different one.

- Explain why I think theoretical computer scientists should find our conjecture compelling.

- Explain why we care about the conjecture.

Our no-coincidence conjecture

Here is our best attempt to capture the spirit of Gowers' no-coincidence principle in a formal mathematical statement:

Computational no-coincidence conjecture: For a reversible[1] circuit , let be the property that there is no input to that ends in zeros, such that also ends in zeros. There exists a polynomial-time verification algorithm V that receives as input:

- A reversible circuit

- An advice string

such that:

- For all such that is true, there exists with length polynomial[2] in the size of , such that .

- For 99% of random[3] reversible circuits , no such exists.

Here, plays the role of the "apparently outrageous coincidence". To see why it's so outrageous, let's do some math.

It is conjectured that random depth- reversible circuits are pseudorandom permutations (see e.g. page 5 here), so let's model a random reversible circuit as an actually random permutation of . There are inputs that end in zeros, and for each of these, the probability that ends in zeros is (approximately independently). So the probability that none of these inputs are mapped to an input that ends in zeros is roughly

Now, obviously the actual probability that a randomly chosen reversible circuit satisfies is much higher than that (there are only exponentially many polynomial-size circuits, and e.g. the circuit that negates all bits satisfies ). This discrepancy comes from our choice to model random reversible circuits as truly random permutations.

However, the fact that a random circuit is so unlikely to satisfy "by chance" suggests that any circuit that does satisfy has some kind of special structure that explains "why" it satisfies . (For example, the aforementioned circuit , implemented as parallel NOT gates, has a very clear structure.) In this context, our no-coincidence conjecture states: such structure can be expressed in a polynomial-length advice string.[4]

In other words, we believe that there is a "formal language" for expressing different kinds of structure that can give rise to circuits that satisfy .[5] All circuits that satisfy have some such structure, while most random circuits have no such structure.[6]

How we came up with the statement

Gowers' no-coincidence principle speaks of "apparently outrageous coincidences". We wanted some simple yet expansive domain in which we could speak of outrageous coincidences, and boolean circuits were a natural one.[7] Our first attempt at formalization was to consider the family of circuits and to let the "outrageous coincidence" be the property be that outputs 1 on every input.

The resulting no-coincidence conjecture was then: There exists a polynomial-time verification algorithm V that receives as input a boolean circuit and an advice string , such that for any that always outputs 1, there is a polynomial-length such that , but for 99% of random circuits, no such exists.

Unfortunately, this statement was uncompelling for two reasons:

- Random circuits (unlike random reversible circuits) do not behave like random functions. In fact, some of the most natural distributions of random circuits (e.g. sufficiently deep layered circuits) mostly consist of circuits that are equivalent to the constant-0 or constant-1 function (and so the "always outputs 1" property is not surprising at all).

- Even if we managed to avoid the above problem with a clever choice of distribution, a verifier could succeed by running on a small number of random (or pseudorandom) inputs, and outputting if returned 1 every time. doesn't even need an advice string!

Our next attempt was to consider the family of circuits and the property that never outputs the all-zeros string. (On a naive analysis, this is unlikely to happen "by chance", since there are way more inputs than possible outputs.) This modification resolved the second issue (even if outputs the all-zeros string on some inputs, finding any such input by sampling may be infeasible.) However, the first issue remained.

To the best of our knowledge, the "reversible circuits" formulation above (which is similar in spirit to the previous formulation but inherits the nice properties of random reversible circuits) resolves the first issue as well.

Ultimately, though, we are not wedded to our particular formulation. Perhaps there is some clever sampling-based verifier that "trivializes" our conjecture as well, in which case we would want to revise it. We are ultimately interested in the informal claim that if a circuit exhibits a very surprising property, it is possible to point out some internal structure of the circuit that will "explain" to a verifier why the surprising property holds.

Thoughts for theoretical computer scientists

I think that complexity theorists ought to find our conjecture really compelling and deep. This is for a few reasons:

- In a sense, our conjecture is a (much) weaker version of NP = coNP. Concretely, it is coNP-hard to determine if is true for a reversible circuit .[8] Thus, if 99% were changed to 100% in the statement of the conjecture, the resulting statement would be equivalent to NP = coNP. We think that our weakening is natural and -- to our knowledge -- new.

- If the conjecture is true and is one such verifier, then is a really interesting object that is somewhat analogous to a proof verifier. Essentially, is a verifier for "heuristic arguments" about the plausibility of , and the that causes to accept is a heuristic argument, written in some formal language that interprets. Thus, finding a that makes the conjecture true would be a step toward formalizing heuristic arguments (see more in our earlier work, Formalizing the presumption of independence).[9]

- While our conjecture statement is reminiscent of many complexity-theoretic conjectures,[10] our reason for believing it is quite different from the reasons why computer scientists generally believe complexity-theoretic conjectures. It rests on an intuition similar to the one that underpins Gowers' no-coincidence principle. Concretely: if a fact about a circuit is too unlikely to be true simply "by chance", then there ought to be an explanation involving the internal structure of the circuit that explains the fact. The conjecture thus leverages complexity theory to bring a new perspective to an important topic in mathematical philosophy.

Why we care

At ARC, we are interested in finding explanations of neural network behavior. Concretely, a trained neural net (such as GPT-4) exhibits a really surprising property: it gets low loss on the training set (far lower than a random neural net). We are interested in understanding the structural properties of the neural net that result in it getting low loss.

Our notion of an explanation is related to, but different from, interpretations sought after by mechanistic interpretability researchers. The main commonality is that we also seek a mechanistic understanding of neural nets via a close analysis of their internals. Our methods might also be similar: we are interested in learning generative models of neural activations, much as Anthropic's interpretability team learned sparse autoencoders for neural features using dictionary learning. However, there are important differences:

- We do not seek an explanation that can be understood by humans. Instead, we hope to use our explanations to solve formally specified safety-relevant tasks such as mechanistic anomaly detection and tail risk estimation.[11]

- We seek to understand neural networks in their entirety: enough that we can fully explain the mechanisms by which a neural net is able to achieve such low loss on its training set. This likely means that we cannot make convenient assumptions, like features being linearly represented in the neural activations. Our explanations, then, must be far more versatile than the ones that are typically sought after in interpretability research.

One might reasonably ask: why do we believe that such explanations exist? In other words, maybe we can understand some bits and pieces of neural network behavior, but not fully explain the internal structural properties that result in the model's behavior.

Our belief in the existence of such explanations follows from a more general belief: that all surprising mathematical structure has an explanation, in the sense of Gowers' no-coincidence principle.

From our discussions with other researchers, we have gotten the impression that some agree with this overarching intuition while others do not. On the other hand, it seems difficult to argue about the truth or falsehood of such an informal statement. Thus, our attempt at formalization is in part motivated by wanting to explain more concretely what we believe.

If our conjecture is false,[12] we would like to know. It may cause us to lose faith in our belief that neural networks are explainable (in the way that we are using the word "explainable"), and to pivot to a new research direction.

- ^

A circuit is reversible if every gate is a bijective function, see e.g. the Toffoli gate.

- ^

In fact, we believe that this is probably true if must be quasi-linear, i.e. .

- ^

We think that the distribution over random circuits probably doesn't matter too much for the formal statement, so long as the circuits are deep enough to ensure good mixing. But to be concrete, we could say that a random circuit consists of layers. Each layer has gates with three inputs and three outputs, such that each of the wires is an input to exactly one gate (with wires randomly assigned to gate). Each gate is uniformly chosen among the 8! bijective functions from to .

For some prior work on random reversible circuits, see Gay et al. (2025).

- ^

Technically, our conjecture makes a weaker claim. For instance, there might be a verifier for which the advice string does not necessarily have a natural interpretation as pointing out structural properties of . Ultimately, though, we are interested in finding a verifier that accepts or rejects based on a structural explanation of the circuit; our no-coincidence conjecture is our best attempt to formalize that claim, even if it is imperfect.

- ^

This is analogous to how there is a formal language for mathematical proofs, and a proof verifier that checks whether a proof is valid. But in our case, is valid if it provides "compelling evidence" that may be true. A verifier can sometimes accept even if is false, so long as it does not do so for too many random circuits; thus, our soundness condition is weaker than the soundness condition for proofs.

- ^

Some circuits that do not satisfy may have a structure that causes to accept. This is inevitable. Consider for instance a reversible circuit that does not do anything with the first bits of the input, while having no discernible structure on the last bits. The heuristic estimate we gave before now suggests a roughly chance that is true. Since must be able to output 1 on every for which is true, the structural observation that the first bits are left alone ought to be enough to cause to output 1.

- ^

After all, many mathematical propositions can be written as statements about boolean circuits. Consider the Goldbach conjecture: every even number greater than 2 can be written as a sum of two primes. We can write this as: for all , there exist and such that and for all , and . Consider a circuit that outputs if , , and . Then Goldbach's conjecture is equivalent to "For all there exist , such that for all , , ." (Or to be more precise, there is a family of such circuits, one for every possible size of , and Goldbach's conjecture is equivalent to this statement being true for all circuits in the family.)

- ^

We reduce from Circuit-SAT. Let be a boolean circuit. For , let be equal to if and if , where is the bitwise NOT of . Then is reversible, and is true if and only if is satisfiable.

- ^

If our conjecture is true, we think it is probably independent of ZFC. (This is because we expect it to be impossible to rule out that some satisfies just by chance, despite the incredible implausibility.) Despite that, it seems realistic to us to find a specific verifier that would likely satisfy the conjecture statement (even if this could never be proven).

- ^

Save perhaps for the "random circuit" bit, although that's not unprecedented either.

- ^

This is mainly because we do not think it is safe to assume that safety-relevant internal structures will be understandable to humans.

- ^

At least assuming that we have not messed up the conjecture statement. Perhaps it will be false for a reason that has nothing to do with the existence of explanations for circuit behavior, in a way that we have not anticipated. (But we think that our conjecture is more likely to be true for unsatisfying reasons, than false for unsatisfying reasons.)

38 comments

Comments sorted by top scores.

comment by Jacob_Hilton · 2025-02-14T21:45:55.686Z · LW(p) · GW(p)

Before reversible circuits, we first considered a simpler setting: triangle counting. The no-coincidence principle in that setting turned out to be true, but for a relatively uninteresting reason, because the domain was not rich enough. Nevertheless, I think this result serves as a helpful exercise for people trying to get to grips with our definitions, as well as providing more of the story about how we ended up with our reversible circuits statement.

In the triangle counting setting, we consider the distribution over undirected 3-partite graphs on 3 groups of vertices obtained by taking each of the possible edges to be present independently with probability . We take , so there are many more edges than vertices, but much fewer than the total number of possible edges.

For a randomly sampled , one can check that the number of triangles in has mean and variance . So if has triangles, then we consider this to be an "outrageous coincidence", since this exceeds the mean by standard deviations. (This is "outrageous" because if the number of triangles were normally distributed with this mean and variance, then the probability of exceeding this for any of the possible graphs would tend to zero.) This motivates the following statement, which turns out to be true:

Proposition (No-coincidence principle for triangle counting, ). For any , there is a linear-time verification algorithm that receives as input:

- A 3-partite graph on 3 groups of vertices, represented as a list of edges

- An advice string

such that:

- For all graphs with triangles, if is sufficiently large then there exists with length linear in the number of edges of such that .

- For , the probability that there is any with tends to zero.

(Note that the result would be trivial if we allowed to run in polynomial time, since it could then directly count the number of triangles, so we need to be more fine-grained than that.)

Dmitry Vaintrob and Aryan Bhatt were able to generalize this result to polygon counting (note that we consider graphs on groups of vertices arranged in a cycle, not -partite graphs in general, so there are possible edges, not ) and to permanents. So we concluded that neither of these settings seemed to be rich enough to produce an interesting no-coincidence principle (even though permanents are #P-hard to compute), and moved on to considering circuits instead.

Hint for polygon counting:

Write the number of polygons as a cyclic trace .

comment by tailcalled · 2025-02-15T10:03:37.168Z · LW(p) · GW(p)

If this is meant to be a weakening of NP vs co-NP, what do you make of the stronger statement that NP = co-NP? As I understand it, this most complexity theorists think this is false. Do you have any reason to think that your conjecture is much much more likely to hold than NP = co-NP, or do you also think NP = co-NP could hold?

Replies from: Jacob_Hilton↑ comment by Jacob_Hilton · 2025-02-15T17:33:18.240Z · LW(p) · GW(p)

Good question! We also think that NP ≠ co-NP. The difference between 99% (our conjecture) and 100% (NP = co-NP) is quite important, essentially because 99% of random objects "look random", but not 100%. For example, consider a uniformly random string for some large . We can quite confidently say things like: the number of 0s in is between and ; there is no streak of alternating 0s and 1s; etc. But these only hold with 99% confidence (more precisely, with probability tending to 1), not 100%.

Going back to the conjecture statement, the job of the verification algorithm is much harder for 100% than 99%. For 100%, has to definitively tell (with the help of a certificate) whether a circuit has property . Whereas for 99%, it simply has to spot (again with the help of a "certificate" of sorts) any structure at all that reveals the circuit to be non-random in a way that could cause it to have property . For example, could start by checking the proportions of different types of gates, and if these differed too much from a random circuit, immediately reject the circuit out-of-hand for being "possibly structured". Footnote 6 has another example of structure that could cause a circuit to have property , which seems much harder for a 100%- to deal with.

Replies from: glauberdebona↑ comment by glauberdebona · 2025-03-08T11:24:32.388Z · LW(p) · GW(p)

Your comment seems to imply that the conjecture's "99%" is about circuits for which is false. Otherwise, it would be impossible for a to miss 100% of random reversible circuits without missing the circuits for which is true. In the conjecture, should "random reversible circuits " be read as "random reversible circuits for which is false"? It does not change much indeed, but I might have to correct my presentation of the conjecture here [LW · GW].

Replies from: Jacob_Hilton↑ comment by Jacob_Hilton · 2025-03-10T17:50:17.018Z · LW(p) · GW(p)

The statements are equivalent if only a tiny fraction (tending to 0) of random reversible circuits satisfy . We think this is very likely to be true, since it is a very weak consequence of the conjecture that random (depth-) reversible circuits are pseudorandom permutations. If it turned out to not be true, it would no longer make sense to think of as an "outrageous coincidence" and so I think we would have to abandon the conjecture. So in short we are happy to consider either version (though I agree that "for which is false" is a bit more natural).

comment by Gurkenglas · 2025-02-15T10:11:14.658Z · LW(p) · GW(p)

Ultimately, though, we are interested in finding a verifier that accepts or rejects based on a structural explanation of the circuit; our no-coincidence conjecture is our best attempt to formalize that claim, even if it is imperfect.

Can you say more about what made you decide to go with the 99% clause? Did you consider any alternatives?

Replies from: Alibi↑ comment by Alibi · 2025-02-15T19:29:09.050Z · LW(p) · GW(p)

Reading the post, I also felt like 99% was kind of an arbitrary number. I would have expected it to be something like: for all $\epsilon > 0$ there exists a $V$ such that ... $1-\epsilon$ of random circuits satisfy ...

Replies from: Gurkenglas↑ comment by Gurkenglas · 2025-02-15T20:27:16.320Z · LW(p) · GW(p)

I'm hoping more for some stepping stones between the pre-theoretic concept of "structural" and the fully formalized 99%-clause. If we could measure structuralness more directly we should be able to get away with less complexity in the rest of the conjecture.

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-02-16T01:16:06.013Z · LW(p) · GW(p)

Thanks, this is a good question.

My suspicion is that we could replace "99%" with "all but exponentially small probability in ". I also suspect that you could replace it with , with the stipulation that the length of (or the running time of V) will depend on . But I'm not exactly sure how I expect it to depend on -- for instance, it might be exponential in .

My basic intuition is that the closer you make 99% to 1, the smaller the number of circuits that V is allowed to say "look non-random" (i.e. are flagged for some advice ). And so V is forced to do more thorough checks ("is it actually non-random in the sort of way that could lead to P being true?") before outputting 1.

99% is just a kind-of lazy way to sidestep all of these considerations and state a conjecture that's "spicy" (many theoretical computer scientists think our conjecture is false) without claiming too much / getting bogged down in the details of how the "all but a small fraction of circuits" thing depends on or the length of or the runtime of V.

Replies from: CronoDAS, glauberdebona↑ comment by CronoDAS · 2025-02-16T04:13:15.231Z · LW(p) · GW(p)

many theoretical computer scientists think our conjecture is false

Does that mean that you (plural) are either members of a theoretical computer science community or have discussed the conjecture with people that are? (I have no idea who you are or what connections you may or may not have with academia in general.)

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-02-16T07:03:15.278Z · LW(p) · GW(p)

Yeah, I did a CS PhD in Columbia's theory group and have talked about this conjecture with a few TCS professors.

↑ comment by glauberdebona · 2025-03-07T21:34:31.640Z · LW(p) · GW(p)

I think I have an idea to make that 99% closer to 1. But its development became too big for a comment here, so I made a post [LW · GW]about it.

comment by Dmitry Vaintrob (dmitry-vaintrob) · 2025-02-16T05:46:57.099Z · LW(p) · GW(p)

I really liked the post - I was confused by the meaning and purpose no-coincidence principle when I was a ARC, and this post clarifies it well. I like that this is asking for something that is weaker than a proof (or a probabilistic weakening of proof), as [related to the example of using the Riemann hypothesis], in general you expect from incompleteness for there to be true results that lead to "surprising" families of circuits which are not provable by logic. I can also see Paul's point of how this statement is sort of like P vs. BPP but not quite.

More specifically, this feels like a sort of 2nd-order boolean/polynomial hierarchy statement whose first-order version is P vs. BPP. Are there analogues of this for other orders?

comment by Capybasilisk · 2025-02-15T08:02:55.742Z · LW(p) · GW(p)

Isn't this just the problem of induction in philosophy?

E.g., we have no actual reason to believe that the laws of physics won't completely change on the 3rd of October 2143, we just assume they won't.

Replies from: Jacob_Hilton↑ comment by Jacob_Hilton · 2025-02-17T17:20:05.317Z · LW(p) · GW(p)

It's not, but I can understand your confusion, and I think the two are related. To see the difference, suppose hypothetically that 11% of the first million digits in the decimal expansion of were 3s. Inductive reasoning would say that we should expect this pattern to continue. The no-coincidence principle, on the other hand, would say that there is a reason (such as a proof or a heuristic argument) for our observation, which may or may not predict that the pattern will continue. But if there were no such reason and yet the pattern continued, then the no-coincidence principle would be false, whereas inductive reasoning would have been successful.

So I think one can view the no-coincidence principle as a way to argue in favor of induction (in the context of formally-defined phenomena): when there is a surprising pattern, the no-coincidence principle says that there is a reason for it, and this reason may predict that the pattern will continue (although we can't be sure of this until we find the reason). Interestingly, one could also use induction to argue in favor of the no-coincidence principle: we can usually find reasons for apparently outrageous coincidences in mathematics, so perhaps they always exist. But I don't think they are the same thing.

comment by Logan Zoellner (logan-zoellner) · 2025-02-17T14:45:25.187Z · LW(p) · GW(p)

The general No-Coincidence principle is almost certainly false. There are lots of patterns in math that hold for a long time before breaking (e.g. Skewe's Number) and there are lots of things that require astronomically large proofs (e.g Godel's speed-up theorem). It would be an enormous coincidence for both of these cases to never occur at once.

I have no reason to think your particular formalization would fare better.

Replies from: Jacob_Hilton↑ comment by Jacob_Hilton · 2025-02-17T19:36:10.967Z · LW(p) · GW(p)

For the informal no-coincidence principle, it's important to us (and to Gowers IIUC) that a "reason" is not necessarily a proof, but could instead be a heuristic argument (in the sense of this post [LW · GW]). We agree there are certainly apparently outrageous coincidences that may not be provable, such as Chebyshev's bias (discussed in the introduction to the post). See also John Conway's paper On Unsettleable Arithmetical Problems for a nice exposition of the distinction between proofs and heuristic arguments (he uses the word "probvious" for a statement with a convincing heuristic argument).

Correspondingly, our formalization doesn't bake in any sort of proof system. The verification algorithm only has to correctly distinguish circuits that might satisfy property from random circuits using the advice string – it doesn't necessarily have to interpret as a proof and verify its correctness.

Replies from: logan-zoellner↑ comment by Logan Zoellner (logan-zoellner) · 2025-02-17T23:15:59.843Z · LW(p) · GW(p)

I doubt that weakening from formal proof to heuristic saves the conjecture. Instead I lean towards Stephen Wolfram's Computational Irreducibly view of math. Some things are true simply because they are true and in general there's no reason to expect a simpler explanation.

In order to reject this you would either have to assert:

a) Wolfram is wrong and there are actually deep reasons why simple systems behave precisely the way they do

or

b) For some reason computational irreducibly applies to simple things but not to infinite sets of the type mathematicians tend to be interested in.

I should also clarify that in a certain sense I do believe b). I believe that pi is normal because something very fishy would have to be happening for it to not be.

However, I don't think this holds in general.

With Collatz, for example, we are already getting close to the hairy "just so" Turing machine like behavior where you would expect the principle to fail.

Certainly, if one were to collect all the Collatz-like systems that arise from Turing Machines I would expect some fraction of them to fail the no-coincidence principle.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-02-18T00:10:03.622Z · LW(p) · GW(p)

Some things are true simply because they are true and in general there's no reason to expect a simpler explanation.

You could believe:

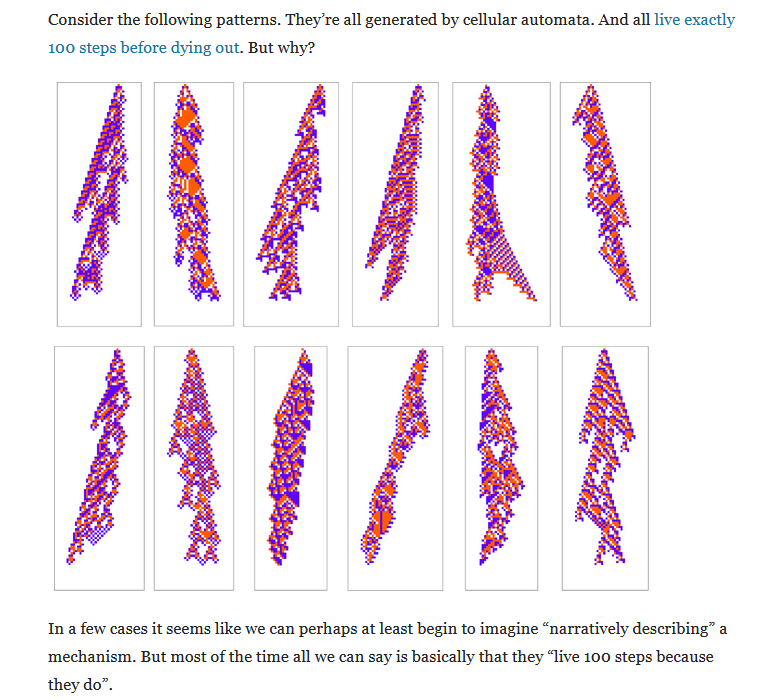

Some things are true simply because they are true, but only when being true isn't very surprising. (For instance, it isn't very surprising that there are some cellular automata that live for 100 steps or that any particular cellular automata lives for 100 steps.)

However, things which are very surprising and don't have a relatively compact explanation are exponentionally rare. And, in the case where something is infinitely surprising (e.g., if the digits of pi weren't normal), there will exist a finite explanation.

Replies from: logan-zoellner↑ comment by Logan Zoellner (logan-zoellner) · 2025-02-18T00:43:37.013Z · LW(p) · GW(p)

It sounds like you agree "if a Turing machine goes for 100 steps and then stops" this is ordinary and we shouldn't expect an explanation. But also believe "if pi is normal for 10*40 digits and then suddenly stops being normal this is a rare and surprising coincidence for which there should be an explanation".

And in the particular case of pi I agree with you.

But if you start using this principle in general it is not going to work out well for you. Most simple to describe sequences that suddenly stop aren't going to have nice pretty explanations.

Or to put it another way: the number of things which are nice (like pi) are dramatically outnumbered by the number of things that are arbitrary (like cellular automata that stop after exactly 100 steps).

I would absolutely love if there was some criteria that I could apply to tell me whether something is nice or arbitrary, but the Halting Problem forbids this. The best we can do is mathematical taste. If mathematicians have been studying something for a long time and it really does seem nice, there is a good chance it is.

Replies from: Jacob_Hilton↑ comment by Jacob_Hilton · 2025-02-18T02:37:45.533Z · LW(p) · GW(p)

The hope is to use the complexity of the statement rather than mathematical taste.

If it takes me 10 bits to specify a computational possibility that ought to happen 1% of the time, then we shouldn't be surprised to find around 10 (~1% of ) occurrences. We don't intend the no-coincidence principle to claim that these should all happen for a reason.

Instead, we intend the no-coincidence principle to claim that such if such coincidences happen much more often than we would have expected them to by chance, then there is a reason for that. Or put another way: if we applied bits of selection to the statement of a -level coincidence, then there is a reason for it. (Hopefully the "outrageous" qualifier helps to indicate this, although we don't know whether Gowers meant quite same thing as us.)

The formalization reflects this distinction: the property is chosen to be so unlikely that we wouldn't expect it to happen for any circuit at all by chance (), not merely that we wouldn't expect it to happen for a single random circuit. Hence by the informal principle, there ought to be a reason for any occurrence of property .

Replies from: logan-zoellner↑ comment by Logan Zoellner (logan-zoellner) · 2025-02-18T13:27:14.358Z · LW(p) · GW(p)

The hope is to use the complexity of the statement rather than mathematical taste.

I understand the hope, I just think it's going to fail (for more or less the same reason it fails with formal proof).

With formal proof, we have Godel's speedup, which tells us that you can turn a Godel statement in a true statement with a ridiculously long proof.

You attempt to get around this by replacing formal proof with "heuristic", but whatever your heuristic system, it's still going to have some power (in the Turing hierarchy sense) and some Godel statement. That Godel statement is in turn going to result in a "seeming coincidence".

Wolfram's observation is that this isn't some crazy exception, this is the rule. Most true statements in math are pretty arbitrary and don't have shorter explanations than "we checked it and its true".

The reason why mathematical taste works is that we aren't dealing with "most true statements", we're only dealing with statements that have particular beauty or interest to Mathematicians.

It may seem like cheating to say that human mathematicians can do something that literally no formal mathematical system can do. But if you truly believe that, the correct response would be to respond when asked "is pi normal" with "I don't know".

The reason why your intuition is throwing you off is because you keep thinking of coincidences as "pi is normal" and not "we picked an arbitrary CA with 15k bits of complexity and ran it for 15k steps but it didn't stop. I guess it never terminates."

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-03-22T15:42:34.124Z · LW(p) · GW(p)

'taste', ' mathematical beauty', ' interesting to mathematicians' aren't arbitrary markers but reflect a deeper underlying structure that is, I believe, ultimately formalizable.

It does not seem unlikely to me at all that it will be possible to mathematically describe those true statements that are moreover of particular beauty or likely interest to mathematicians (human, artificial or alien).

The Godel speedup story is an interesting point. I haven't thought deeply enough about this but IIRC the original ARC heuristic arguments has several sections on this and related topics. You might want to consult there.

comment by Bunthut · 2025-03-10T15:56:45.788Z · LW(p) · GW(p)

This isn't my area of expertise, but I think I have a sketch for a very simple weak proof:

The conjecture states that V runtime and length are polynomial in C size, but leaves the constant open. Therefore a counterexample would have to be an infinite family of circuits satisfying P(C), with their corresponding growing faster than polynomial. To prove the existence of such a counterexample, you would need a proof that each member of the family satisfies P(C). But that proof has finite length, and can be used as the for any member of the family with minor modification. Therefore there can never be a proven counterexample.

Or am I misunderstanding something?

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-03-10T18:42:16.840Z · LW(p) · GW(p)

That's an interesting point! I think it only applies to constructive proofs, though: you could imagine disproving the counterexample by showing that for every V, there is some circuit that satisfies P(C) but that V doesn't flag, without exhibiting a particular such circuit.

comment by Lionel Levine · 2025-02-20T15:03:51.763Z · LW(p) · GW(p)

Suppose I hand you a circuit C that I sampled uniformly at random from the set of all depth-n reversible circuits satisfying P. What is a reason to believe that there exists a short heuristic argument for the fact that this particular C satisfies P?

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-02-20T19:43:15.931Z · LW(p) · GW(p)

We've done some experiments with small reversible circuits. Empirically, a small circuit generated in the way you suggest has very obvious structure that makes it satisfy P (i.e. it is immediately evident from looking at the circuit that P holds).

This leaves open the question of whether this is true as the circuits get large. Our reasons for believing this are mostly based on the same "no-coincidence" intuition highlighted by Gowers: a naive heuristic estimate suggests that if there is no special structure in the circuit, the probability that it would satisfy P is doubly exponentially small. So probably if C does satisfy P, it's because of some special structure.

comment by tailcalled · 2025-02-15T21:08:00.832Z · LW(p) · GW(p)

Would "the neural network has learned a lookup table with a compressed version of the dataset and interpolates on that in order to output its answers" count as an explanation of the low dataset loss?

(Note, this phrasing kind of makes it sound too simple. Since the explanations you are seeking presumably don't come with the dataset baked-in as a thing they can reference primitively, presumably the actual formal statement would need to include this entire compressed lookup table. Also, I'm imagining a case where there isn't really a "compression algorithm" because the compression is intimately tied up with the neural network itself, and so it's full of ad-hoc cases.)

Like I guess from an alignment perspective this could still be useful because it would be nice to know to what extent "bag of heuristics" holds, and this is basically a formalization of that. But at the same time, I already roughly speaking (with lots of asterisks, but not ones that seem likely to be addressed by this) expect this to hold, and it doesn't really rule out other dangers (like those heuristics could interact in a problematic way), so it seems kind of like it would just lead to a dead-end from my perspective.

Replies from: tailcalled↑ comment by tailcalled · 2025-02-15T21:14:11.504Z · LW(p) · GW(p)

Maybe to expand: In order to get truly good training loss on an autoregressive training objective, you probably need to have some sort of intelligence-like or agency-like dynamic. But much more importantly, you need a truly vast amount of knowledge. So most of the explanation for the good performance comes from the knowledge, not the intelligence-like dynamic.

(Ah, but intelligence is more general, so maybe we'd expect it to show up in lots of datapoints, thereby making up a relatively big chunk of the training objective? I don't think so, for two reasons: 1) a lot of datapoints don't really require much intelligence to predict, 2) there are other not-very-intelligence-requiring things like grammar or certain aspects of vocabulary which do show up in a really big chunk.)

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-02-16T18:22:58.876Z · LW(p) · GW(p)

Is this a correct rephrasing of your question?

It seems like a full explanation of a neural network's low loss on the training set needs to rely on lots of pieces of knowledge that it learns from the training set (e.g. "Barack" is usually followed by "Obama"). How do random "empirical regularities" about the training set like this one fit into the explanation of the neural net?

Our current best guess about what an explanation looks like is something like modeling the distribution of neural activations. Such an activation model would end up having baked-in empirical regularities, like the fact that "Barack" is usually followed by "Obama". So in other words, just as the neural net learned this empirical regularity of the training set, our explanation will also learn the empirical regularity, and that will be part of the explanation of the neural net's low loss.

(There's a lot more to be said here, and our picture of this isn't fully fleshed out: there are some follow-up questions you might ask to which I would answer "I don't know". I'm also not sure I understood your question correctly.)

Replies from: tailcalled↑ comment by tailcalled · 2025-02-16T19:37:32.176Z · LW(p) · GW(p)

Yeah, this seems like a reasonable restatement of my question.

I guess my main issue with this approach is that extrapolating the distribution of activations from a dataset isn't what I'd consider the hard part of alignment. Rather, it would be:

- Detecting catastrophic outputs and justifying their catastrophicness to others. (In particular, I suspect no individual output will be catastrophic on the margin regardless of whether catastrophe will occur. Either the network will consistently avoid giving catastrophic outputs, or it will sufficiently consistently be harmful that localizing the harm to 1 output will not be meaningful.)

- Learning things about the distribution of inputs that cannot be extrapolated from any dataset. (In particular, the most relevant short-term harm I've noticed would be stuff like young nerds starting to see the AI as a sort of mentor and then having their questionable ideas excessively validated by this mentor rather than receiving appropriate pushback. This would be hard to extrapolate from a dataset, even though it is relatively obvious if you interact with certain people. Though whether that counts as "catastrophic" is a complicated question.)

comment by Christopher King (christopher-king) · 2025-03-21T14:59:14.533Z · LW(p) · GW(p)

Ultimately, though, we are not wedded to our particular formulation. Perhaps there is some clever sampling-based verifier that "trivializes" our conjecture as well, in which case we would want to revise it.

I think your goal should be to show that your abstract conjecture implies the concrete result you're after, or is even equivalent to it.

At ARC, we are interested in finding explanations of neural network behavior. Concretely, a trained neural net (such as GPT-4) exhibits a really surprising property: it gets low loss on the training set (far lower than a random neural net).

We can formalize this in a similar way as the reversible circuit conjecture. Here's a rough sketch:

Transformer performance no-coincidence conjecture: Consider a computable process that randomly generates text. The distribution has significantly lower entropy than the uniform distribution. Consider the property P(T) that says "the transformer T gets low average loss when predicting this process". There is a deterministic polynomial time verifier V(T, π) such that:

- P(T) implies that there exists a polynomial length π with V(T,π) = 1.

- For 99% of transformers T, there is no π with V(T,π) = 1.

Note that "ignore π, and then test T on a small number of inputs" doesn't work. P is only asking if T has low average loss, so you can't falsify P with a small number of inputs.

comment by evalu · 2025-03-12T22:29:29.752Z · LW(p) · GW(p)

99% of random[3] reversible circuits , no such exists.

Do you mean 99% of circuits that don't satisfy P? Because there probably are distributions of random reversible circuits that satisfy P exactly 1% of the time, and that would make V's job as hard as NP = coNP.

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-03-12T22:34:36.298Z · LW(p) · GW(p)

We are interested in natural distributions over reversible circuits (see e.g. footnote 3), where we believe that circuits that satisfy P are exceptionally rare (probably exponentially rare).

comment by danielechlin · 2025-02-21T05:12:56.671Z · LW(p) · GW(p)

Reminds me of the Tolkien cosmology including the inexplicable Tom Bombadil. Human intuition on your conjecture is varied. I vote it's false - seems like if the universe has enough chances to do something coincidental it'll get lucky eventually. I feel that force is stronger than the ability to find an even better contextualized explanation.

comment by avturchin · 2025-02-16T12:08:02.323Z · LW(p) · GW(p)

A possible example of such coincidence is the Glodbach conjecture: every even number greater than 2 can be presented as a sum of two primes. As for any large number there are many ways to express it as a sum of primes, it can be pure coincidence that we didn't find exceptions.

comment by Warty · 2025-02-15T22:56:30.765Z · LW(p) · GW(p)

For 99% of random[3] reversible circuits , no such exists.

What's the proportion of circuits where P(C) is true?

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2025-02-16T01:18:24.287Z · LW(p) · GW(p)

My guess is that P is true for an exponentially small fraction of circuits. You could plausibly prove this with combinatorics (given that e.g. the first layer randomly puts inputs into gates, which means you could try to reason about the class of circuits that are the same except that the inputs are randomly permuted before being run through the circuit). I haven't gone through this math, though.