Alexander Gietelink Oldenziel's Shortform

post by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-11-16T15:59:54.709Z · 569 comments

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-05-27T12:52:22.928Z

My mainline prediction scenario for the next decades.

My mainline prediction*:

- LLMs will not scale to AGI. They will not spawn evil gremlins or mesa-optimizers. BUT scaling laws will continue to hold, and future LLMs will be very impressive and make a sizable impact on the real economy and science over the next decade. EDIT: since there is a lot of confusion about this point: by LLM I mean the paradigm of pre-trained transformers. This does not include different paradigms that follow pre-trained transformers but are still called large language models. EDIT2: since I'm already anticipating confusion on this point: when I say scaling laws will continue to hold, I mean that the 3-way relation between model size, compute, and data will probably continue to hold. It has been known for a long time that the amount of data used by GPT-4-level models is already within perhaps an OOM of the maximum.

- There is a single innovation left to make AGI (in the Alex sense) work, i.e. coherent, long-term planning agents (LTPA) that are effective and efficient in data-sparse domains over long horizons.

- That innovation will be found within the next 10-15 years.

- It will be clear to the general public that these are dangerous.

- Governments will act quickly and (relatively) decisively to bring these agents under state control. National security concerns will dominate.

- Power will reside mostly with governments, AI safety institutes, and national security agencies. Insofar as divisions of tech companies are able to create LTPAs, they will be effectively nationalized.

- International treaties will be made to constrain AI, outlawing the development of LTPAs by private companies. Great power competition will mean the US and China will continue developing LTPAs, possibly largely boxed. Treaties will try to constrain this development with only partial success (similar to nuclear treaties).

- LLMs will continue to exist and be used by the general public

- Conditional on AI ruin, the closest analogy is probably something like the Cortez-Pizarro-Afonso takeovers. Unaligned AI will rely on human infrastructure and human allies for the earlier parts of the takeover, but its inherent advantages in tech, coherence, decision-making and (artificial) plagues will be the deciding factor.

- The world may be mildly multi-polar.

- This will involve conflict between AIs.

- AIs may very possibly be able to cooperate in ways humans can't.

- The arrival of AGI will immediately inaugurate a scientific revolution. Sci-fi-sounding progress like advanced robotics, quantum magic, nanotech, life extension, laser weapons, large space engineering, and the cure of many/most remaining diseases will become possible within two decades of AGI, possibly much faster.

- Military power will shift to automated manufacturing of drones & weaponized artificial plagues. Drones, mostly flying, will dominate the battlefield. Mass production of drones and their rapid and effective deployment in swarms will be key to victory.

Two points on which I differ with most commentators: (i) I believe AGI is a real (mostly discrete) thing, not a vibe or a general increase in improved tools. I believe it is inherently agentic. I don't think the spontaneous emergence of agents is impossible, but I think it is more plausible that agents will be built rather than grown.

(ii) I believe the EA/AI safety community in general is way overrating the importance of individual tech companies vis-à-vis broader trends and the power of governments. I strongly agree with Stefan Schubert's take here on the latent hidden power of government: https://stefanschubert.substack.com/p/crises-reveal-centralisation

Consequently, the EA/AI safety community is often myopically focused on boardroom politics that are relatively inconsequential in the grand scheme of things.

*By mainline prediction I mean the scenario that is the mode of what I expect: the single likeliest scenario. However, since it contains a large number of details, each of which could go differently, the probability of this specific scenario is still low.

↑ comment by Steven Byrnes (steve2152) · 2024-05-29T01:50:40.400Z

governments will act quickly and (relativiely) decisively to bring these agents under state-control. national security concerns will dominate.

I dunno, like 20 years ago if someone had said “By the time somebody creates AI that displays common-sense reasoning, passes practically any written test up to and including graduate-level, (etc.), obviously governments will be flipping out and nationalizing AI companies etc.”, to me that would have seemed like a reasonable claim. But here we are, and the idea of the USA govt nationalizing OpenAI seems a million miles outside the Overton window.

Likewise, if someone said “After it becomes clear to everyone that lab leaks can cause pandemics costing trillions of dollars and millions of lives, then obviously governments will be flipping out and banning the study of dangerous viruses—or at least, passing stringent regulations with intrusive monitoring and felony penalties for noncompliance etc,” then that would also have sounded reasonable to me! But again, here we are.

So anyway, my conclusion is that when I ask my intuition / imagination whether governments will flip out in thus-and-such circumstance, my intuition / imagination is really bad at answering that question. I think it tends to underweight the force compelling governments to continue following longstanding customs / habits / norms? Or maybe it’s just hard to predict and these are two cherrypicked examples, and if I thought a bit harder I’d come up with lots of examples in the opposite direction too (i.e., governments flipping out and violating longstanding customs on a dime)? I dunno. Does anyone have a good model here?

↑ comment by ryan_greenblatt · 2024-05-29T02:46:39.623Z

One strong reason to think the AI case might be different is that US national security will be actively using AI to build weapons and thus it will be relatively clear and salient to US national security when things get scary.

↑ comment by Steven Byrnes (steve2152) · 2024-06-03T12:56:35.665Z

For one thing, COVID-19 obviously had impacts on military readiness and operations, but I think that fact had very marginal effects on pandemic prevention.

For another thing, I feel like there’s a normal playbook for new weapons-development technology, which is that the military says “Ooh sign me up”, and (in the case of the USA) the military will start using the tech in-house (e.g. at NRL) and they’ll also send out military contracts to develop the tech and apply it to the military. Those contracts are often won by traditional contractors like Raytheon, but in some cases tech companies might bid as well.

I can’t think of precedents where a tech was in wide use by the private sector but then brought under tight military control in the USA. Can you?

The closest things I can think of are secrecy orders (the US military gets to look at every newly-approved US patent and they can choose to declare them to be military secrets) and ITAR (the US military can declare that some area of tech development, e.g. certain types of high-quality IR detectors that are useful for night vision and targeting, can’t be freely exported, nor can their schematics etc. be shared with non-US citizens).

Like, I presume there are lots of non-US-citizens who work for OpenAI. If the US military were to turn OpenAI’s ongoing projects into classified programs (for example), those non-US employees wouldn’t qualify for security clearances. So that would basically destroy OpenAI rather than control it (and of course the non-USA staff would bring their expertise elsewhere). Similarly, if the military was regularly putting secrecy orders on OpenAI’s patents, then OpenAI would obviously respond by applying for fewer patents, and instead keeping things as trade secrets which have no normal avenue for military review.

By the way, fun fact: if some technology or knowledge X is classified, but X is also known outside a classified setting, the military deals with that in a very strange way: people with classified access to X aren’t allowed to talk about X publicly, even while everyone else in the world does! This comes up every time there’s a leak, for example (e.g. Snowden). I mention this fact to suggest an intuitive picture where US military secrecy stuff involves a bunch of very strict procedures that everyone very strictly follows even when they kinda make no sense.

(I have some past experience with ITAR, classified programs, and patent secrecy orders, but I’m not an expert with wide-ranging historical knowledge or anything like that.)

↑ comment by Lucius Bushnaq (Lblack) · 2024-06-05T14:09:55.754Z

But here we are, and the idea of the USA govt nationalizing OpenAI seems a million miles outside the Overton window.

Registering that it does not seem that far outside the Overton window to me anymore. My own advance prediction of how much governments would be flipping out around this capability level has certainly proven a big underestimate.

↑ comment by Daniel Murfet (dmurfet) · 2024-05-28T00:59:03.488Z

I think this will look a bit outdated in 6-12 months, when there is no longer a clear distinction between LLMs and short term planning agents, and the distinction between the latter and LTPAs looks like a scale difference comparable to GPT2 vs GPT3 rather than a difference in kind. At what point do you imagine a national government saying "here but no further?".

↑ comment by cubefox · 2024-05-28T17:59:35.767Z

So you are predicting that within 6-12 months, there will no longer be a clear distinction between LLMs and "short term planning agents". Do you mean that agentic LLM scaffolding like Auto-GPT will qualify as such?

↑ comment by Daniel Murfet (dmurfet) · 2024-05-29T01:28:11.421Z

I think scaffolding is the wrong metaphor. Sequences of actions, observations and rewards are just more tokens to be modeled, and if I were running Google I would be busy instructing all work units to start packaging up such sequences of tokens to feed into the training runs for Gemini models. Many seemingly minor tasks (e.g. app recommendation in the Play store) either have, or could have, components of RL built into the pipeline, and could benefit from incorporating LLMs, either by putting the RL task in-context or through fine-tuning of very fast cheap models.

So when I say I don't see a distinction between LLMs and "short term planning agents" I mean that we already know how to subsume RL tasks into next token prediction, and so there is in some technical sense already no distinction. It's a question of how the underlying capabilities are packaged and deployed, and I think that within 6-12 months there will be many internal deployments of LLMs doing short sequences of tasks within Google. If that works, then it seems very natural to just scale up sequence length as generalisation improves.

Arguably fine-tuning a next-token predictor on action, observation, reward sequences, or doing it in-context, is inferior to using algorithms like PPO. However, the advantage of knowledge transfer from the rest of the next-token predictor's data distribution may more than compensate for this on some short-term tasks.
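The claim that action, observation and reward sequences "are just more tokens to be modeled" can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not how Google or any lab actually packages such data: the marker tokens (`<obs>`, `<act>`, `<rew>`, `<return>`, `<eos>`), the `Step` fields, and the toy recommendation episode are all hypothetical. It flattens one episode into a token stream, turns that stream into ordinary next-token-prediction pairs, and picks out the pairs whose targets are actions, which is roughly what fine-tuning a model to act would train on. The optional return prefix is a simplified form of the return conditioning used in Decision Transformer.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Step:
    """One environment step; field names are hypothetical, for illustration only."""
    observation: str
    action: str
    reward: float


def serialize(trajectory: List[Step], condition_on_return: bool = True) -> List[str]:
    """Flatten a trajectory into one token stream using made-up marker tokens."""
    tokens: List[str] = []
    if condition_on_return:
        # Simplified return conditioning: prefix the episode's total reward once.
        # (Decision Transformer instead uses return-to-go at every step.)
        total = sum(step.reward for step in trajectory)
        tokens += ["<return>", f"{total:+.1f}"]
    for step in trajectory:
        tokens += ["<obs>", step.observation,
                   "<act>", step.action,
                   "<rew>", f"{step.reward:+.1f}"]
    tokens.append("<eos>")
    return tokens


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Standard next-token prediction: every prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def action_targets(pairs: List[Tuple[List[str], str]]) -> List[str]:
    """Targets that immediately follow an <act> marker, i.e. the policy's choices."""
    return [target for context, target in pairs if context[-1] == "<act>"]


# Usage: a toy two-step recommendation episode (all values are made up).
episode = [
    Step("app_list_shown", "recommend_A", 0.0),
    Step("user_clicked_A", "recommend_B", 1.0),
]
tokens = serialize(episode)
pairs = next_token_pairs(tokens)
print(tokens)
print(action_targets(pairs))
```

The same serialized stream could also be placed in a prompt rather than used for fine-tuning, which corresponds to the "in-context" option mentioned above.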

↑ comment by Noosphere89 (sharmake-farah) · 2024-09-17T17:40:28.283Z

I think o1 is a partial realization of your thesis, and the only reason it's not more successful is that the compute used for GPT-o1 and GPT-4o was essentially the same:

https://www.lesswrong.com/posts/bhY5aE4MtwpGf3LCo/openai-o1

And yeah, the search part was actually quite good, if a bit modest in its gains.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-09-19T14:12:25.111Z

As far as I can tell Strawberry is proving me right: it's going beyond pre-training and scales inference - the obvious next step.

A lot of people said just scaling pre-trained transformers would scale to AGI. I think that's silly and doesn't make sense. But now you don't have to believe me: you can just use OpenAI's latest model.

The next step is to do efficient long-horizon RL for data-sparse domains.

Strawberry working suggests that this might not be so hard. Don't be fooled by the modest gains of Strawberry so far. This is a new paradigm that is heading us toward true AGI and superintelligence.

↑ comment by Daniel Murfet (dmurfet) · 2024-09-18T04:12:38.664Z

Yeah actually Alexander and I talked about that briefly this morning. I agree that the crux is "does this basic kind of thing work" and given that the answer appears to be "yes" we can confidently expect scale (in both pre-training and inference compute) to deliver significant gains.

I'd love to understand better how the RL training for CoT changes the representations learned during pre-training.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-09-19T14:22:17.578Z

In my reading, Strawberry is showing that indeed scaling just pretraining transformers will *not* lead to AGI. The new paradigm is inference-scaling; the obvious next step is doing RL on long horizons and sparse-data domains. I have been saying this ever since GPT-3 came out.

For the question of general intelligence imho the scaling is conceptually a red herring: any (general purpose) algorithm will do better when scaled. The key in my mind is the algorithm not the resource, just like I would say a child is generally intelligent while a pocket calculator is not even if the child can't count to 20 yet. It's about the meta-capability to learn not the capability.

As we spoke earlier - it was predictable that this was going to be the next step. It was likely it was going to work, but there was a hopeful world in which doing the obvious thing turned out to be harder. That hope has been dashed - it suggests longer horizons might be easy too. This means superintelligence within two years is not out of the question.

↑ comment by Noosphere89 (sharmake-farah) · 2024-09-20T20:00:13.634Z

We have been shown that this search algorithm works, and we have not yet been shown that the other approaches don't work.

Remember, technological development is disjunctive, and just because you've shown that 1 approach works, doesn't mean that we have been shown that only that approach works.

Of course, people will absolutely try to scale this one up now that they found success, and I think that timelines have definitely been shortened, but remember that AI progress is closer to a disjunctive scenario than conjunctive scenario:

I agree with this quote below, but I wanted to point out the disjunctiveness of AI progress:

As we spoke earlier - it was predictable that this was going to be the next step. It was likely it was going to work, but there was a hopeful world in which doing the obvious thing turned out to be harder. That hope has been dashed - it suggests longer horizons might be easy too. This means superintelligence within two years is not out of the question.

https://gwern.net/forking-path

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-09-23T13:50:21.328Z

Strong disagree. I would be highly surprised if there were multiple essentially different algorithms to achieve general intelligence*.

I also agree with Daniel Murfet's quote. There is a difference between a disjunction before you see the data and a disjunction after you see the data. I agree AI development is disjunctive before you see the data, but in hindsight all the things that work are really minor variants on a single thing that works.

*of course "essentially different" is doing a lot of work here. some of the conceptual foundations of intelligence haven't been worked out enough (or Vanessa has and I don't understand it yet) for me to make a formal statement here.

↑ comment by Noosphere89 (sharmake-farah) · 2024-09-23T19:47:34.988Z

Re different algorithms, I actually agree with both you and Daniel Murfet that, conditional on non-reversible computers, there are at most 1-3 algorithms to achieve intelligence that can scale arbitrarily large, and I'm closer to 1 than 3 here.

But once reversible computers/superconducting wires are allowed, all bets are off on how many algorithms are allowed, because you can have far, far more computation with far, far less waste heat leaving, and a lot of the design of computers is due to heat requirements.
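One standard way to make the heat argument concrete (an addition here, not something cited in the thread) is Landauer's principle: a logically irreversible operation such as erasing one bit must dissipate at least $k_B T \ln 2$ of heat, whereas logically reversible computation is not subject to this floor in principle. Assuming room temperature ($T = 300\ \mathrm{K}$), the bound per erased bit works out as:

$$
E_{\min} = k_B T \ln 2 \approx \left(1.38 \times 10^{-23}\ \mathrm{J/K}\right)\left(300\ \mathrm{K}\right)(0.693) \approx 2.9 \times 10^{-21}\ \mathrm{J}
$$

This only bounds the energy cost of computation; whether lifting it would change which learning algorithms are worth running is the point in dispute.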

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-09-26T14:11:01.678Z

Reversible computing and superconducting wires seem like hardware innovations. You are saying that this will actually materially change the nature of the algorithm you'd want to run?

I'd bet against. I'd be surprised if this was the case. As far as I can tell, everything we have seen so far points to a common simple core of general intelligence algorithm (basically an open-loop RL algorithm on top of a pre-trained transformer). I'd be surprised if there were materially different ways to do this. One of the main takeaways of the last decade of deep learning progress is just how little architecture matters: it's almost all data and compute (plus, I claim, one extra ingredient: open-loop RL that is efficient on long horizons and in sparse-data novel domains).

I don't know for certain of course. If I look at theoretical CS though the universality of computation makes me skeptical of radically different algorithms.

↑ comment by ryan_greenblatt · 2024-05-27T17:42:16.281Z

I'm a bit confused by what you mean by "LLMs will not scale to AGI" in combination with "a single innovation is all that is needed for AGI".

E.g., consider the following scenarios:

- AGI (in the sense you mean) is achieved by figuring out a somewhat better RL scheme and massively scaling this up on GPT-6.

- AGI is achieved by doing some sort of architectural hack on top of GPT-6 which makes it able to reason in neuralese for longer and then doing a bunch of training to teach the model to use this well.

- AGI is achieved via doing some sort of iterative RL/synth data/self-improvement process for GPT-6 in which GPT-6 generates vast amounts of synthetic data for itself using various tools.

IMO, these sound very similar to "LLMs scale to AGI" for many practical purposes:

- LLM scaling is required for AGI

- LLM scaling drives the innovation required for AGI

- From the public's perspective, it may just look like AI is driven by LLMs getting better over time, with various tweaks continuously introduced.

Maybe it is really key in your view that the single innovation is really discontinuous and maybe the single innovation doesn't really require LLM scaling.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-05-27T18:32:13.534Z

I think a single innovation left to create LTPA is unlikely because it runs contrary to the history of technology and of machine learning. For example, in the 10 years before AlphaGo and before GPT-4, several different innovations were required, and that's if you count "deep learning" as one item. ChatGPT actually understates the number here because different components of the transformer architecture like attention, residual streams, and transformer++ innovations were all developed separately.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-05-27T19:30:01.226Z

I mostly regard LLMs = [scaling a feedforward network on large numbers of GPUs and data] as a single innovation.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-05-27T20:31:35.321Z

Then I think you should specify that progress within this single innovation could be continuous over years and include 10+ ML papers in sequence each developing some sub-innovation.

↑ comment by Seth Herd · 2024-05-27T21:51:36.916Z

Agreed on all points except a couple of the less consequential, where I don't disagree.

Strongest agreement: we're underestimating the importance of governments for alignment and use/misuse. We haven't fully updated from the inattentive world hypothesis. Governments will notice the importance of AGI before it's developed, and will seize control. They don't need to nationalize the corporations; they just need to have a few people embedded at the company and demand, on threat of imprisonment, that they're kept involved with all consequential decisions on its use. I doubt they'd even need new laws, because the national security implications are enormous. But if they need new laws, they'll create them as rapidly as necessary. Hopping borders will be difficult, and would just put a different government in control.

Strongest disagreement: I think it's likely that zero breakthroughs are needed to add long term planning capabilities to LLM-based systems, and so long term planning agents (I like the terminology) will be present very soon, and improve as LLMs continue to improve. I have specific reasons for thinking this. I could easily be wrong, but I'm pretty sure that the rational stance is "maybe". This maybe advances the timelines dramatically.

Also strongly agree on AGI as a relatively discontinuous improvement; I worry that this is glossed over in modern "AI safety" discussions, causing people to mistake controlling LLMs for aligning the AGIs we'll create on top of them. AGI alignment requires different conceptual work.

↑ comment by Garrett Baker (D0TheMath) · 2024-05-27T18:13:05.697Z

Do you think the final big advance happens within or outside labs?

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-05-27T19:30:49.122Z

Probably within.

↑ comment by lemonhope (lcmgcd) · 2024-05-29T07:05:53.758Z

So somebody gets an agent which efficiently productively indefinitely works on any specified goal, then they just let the government find out and take it? No countermeasures?

↑ comment by James Anthony · 2024-05-28T17:33:38.531Z

What "coherent, long-term planning agents" means, and what is possible with these agents, is not clear to me. How would they overcome lack of access to knowledge, as was highlighted by F.A. Hayek in "The Use of Knowledge in Society"? What actions would they plan? How would their planning come to replace humans' actions? (Achieving control over some sectors of battlefields would only be controlling destruction, of course, it would not be controlling creation.)

Some discussion is needed that recognizes and takes into account differences among governance structures. What seems the most relevant to me are these cases: (1) totalitarian governments, (2) somewhat-free governments, (3) transnational corporations, (4) decentralized initiatives. This is a new kind of competition, but the results will be like with major wars: Resilient-enough groups will survive the first wave or new groups will re-form later, and ultimately the competition will be won by the group that outproduces the others. In each successive era, the group that outproduces the others will be the group that leaves people the freest.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-28T23:10:11.008Z

John wrote an explosive postmortem on the alignment field, boldly proclaiming that almost all alignment research is trash. John held up the ILIAD conference [which I helped organize] as one of the few examples of places where research is going in the right direction. While I share some of his concerns about the field's trajectory, and I am flattered that ILIAD was appreciated, I feel ambivalent about ILIAD being pulled into what I can only describe as an alignment culture war.

There's plenty to criticise about mainstream alignment research, but blanket dismissals feel silly to me? Sparse autoencoders are exciting! Research on delegated oversight & safety-by-debate is vitally important. Scary demos aren't as exciting as Deep Science, but their influence on policy is probably much greater than that of a long-form essay on conceptual alignment. AI psychology doesn't align with a physicist's aesthetic, but as alignment is ultimately about the attitudes of artificial intelligences, maybe just talking with Claude about his feelings might prove valuable. There's lots of experimental work in mainstream ML on deep learning that will be key to constructing a grounded theory of deep learning. And I'm sure there is a ton more I am not familiar with.

Beyond being an unfair and uninformed dismissal of a lot of solid work, it risks unnecessarily antagonizing people - making it even harder to advocate theoretical research like agent foundations.

Humility is no sin. I sincerely believe mathematical and theory-grounded research programmes in alignment are neglected, tractable and important, potentially even crucial. Yet I'll be the first to acknowledge that there are many worlds in which it is too late or fundamentally unable to deliver on its promise while prosaic alignment ideas do. And in worlds in which theory does bear fruit - ultimately that will be through engaging with pretty mundane, prosaic things.

What's concerning is watching a certain strain of dismissiveness towards mainstream ideas calcify within parts of the rationalist ecosystem. As Vanessa notes in her comment, this attitude of isolation and attendant self-satisfied sense of superiority certainly isn't new. It has existed for a while around MIRI & the rationalist community. Yet it appears to be intensifying as AI safety becomes more mainstream and the rationalist community's relative influence decreases.[1]

I liked this comment by Adam Shai (shared with permission):

If one disagrees with the mainstream approach then it's on you (talking to myself!) to _show it_, or better yet to do the thing _better_. Being convincing to others often requires operationalizing your ideas in a tangible situation/experiment/model, and that isn't just a politically useful tool, it's one of the main mechanisms by which you can reality-check yourself. It's very easy to get caught up in philosophically beautiful ideas and to trick oneself. The test is what you can do with the ideas. The mainstream approach is great because it actually does stuff! It finds latents in actually existing networks, it shows by example situations that feel concerning, etc. etc.

I disagree with many aspects of the mainstream approach, but I also have a more global belief that the mainstream is a mainstream for a good reason! And those of us that disagree with it, or think too many people are going that route, should be careful not to box ourselves into a predetermined and permanent social role of "outsider who makes no real progress even if they talk about cool stuff"

[1] See also the confident pronouncements of certain doom in these quarters, surely just as silly as complete confidence in the impossibility of doom.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2024-12-29T11:41:36.918Z

I think that there are two key questions we should be asking:

- Where is the value of an additional researcher higher on the margin?

- What should the field look like in order to make us feel good about the future?

I agree that "prosaic" AI safety research is valuable. However, at this point it's far less neglected than foundational/theoretical research and the marginal benefits there are much smaller. Moreover, without significant progress on the foundational front, our prospects are going to be poor, ~no matter how much mech-interp and talking to Claude about feelings we will do.

John has a valid concern that, as the field becomes dominated by the prosaic paradigm, it might become increasingly difficult to get talent and resources to the foundational side, or maintain memetically healthy coherent discourse. As to the tone, I have mixed feelings. Antagonizing people is bad, but there's also value in speaking harsh truths the way you see them. (That said, there is room in John's post for softening the tone without losing much substance.)

↑ comment by TsviBT · 2024-12-29T02:09:17.621Z

Scary demos isn't exciting as Deep Science but its influence on policy

There maybe should be a standardly used name for the field of generally reducing AI x-risk, which would include governance, policy, evals, lobbying, control, alignment, etc., so that "AI alignment" can be a more narrow thing. I feel (coarsely speaking) grateful toward people working on governance, policy, evals_policy, lobbying; I think control is pointless or possibly bad (makes things look safer than they are, doesn't address real problem); and frustrated with alignment.

What's concerning is watching a certain strain of dismissiveness towards mainstream ideas calcify within parts of the rationalist ecosystem. As Vanessa notes in her comment, this attitude of isolation and attendant self-satisfied sense of superiority certainly isn't new. It has existed for a while around MIRI & the rationalist community. Yet it appears to be intensifying as AI safety becomes more mainstream and the rationalist community's relative influence decreases

What should one do, who:

- thinks that there's various specific major defeaters to the narrow project of understanding how to align AGI;

- finds partial consensus with some other researchers about those defeaters;

- upon explaining these defeaters to tens or hundreds of newcomers, finds that, one way or another, they apparently-permanently fail to avoid being defeated by those defeaters?

It sounds like in this paragraph your main implied recommendation is "be less snooty". Is that right?

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T08:12:48.090Z

What is a defeater, and can you give some examples?

↑ comment by TsviBT · 2024-12-29T12:35:06.330Z

A thing that makes alignment hard / would defeat various alignment plans or alignment research plans.

E.g.: https://www.lesswrong.com/posts/uMQ3cqWDPHhjtiesc/agi-ruin-a-list-of-lethalities#Section_B_

E.g. the things you're studying aren't stable under reflection.

E.g. the things you're studying are at the wrong level of abstraction (SLT, interp, neuro) https://www.lesswrong.com/posts/unCG3rhyMJpGJpoLd/koan-divining-alien-datastructures-from-ram-activations

E.g. https://tsvibt.blogspot.com/2023/03/the-fraught-voyage-of-aligned-novelty.html

This just in: Alignment researchers fail to notice skulls from famous blog post "Yes, we have noticed the skulls".

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T13:14:51.458Z

"E.g. the things you're studying are at the wrong level of abstraction (SLT, interp, neuro)"

Let's hear it. What do you mean here exactly?

Replies from: TsviBT↑ comment by TsviBT · 2024-12-29T13:20:03.201Z · LW(p) · GW(p)

From the linked post:

The first moral that I'd draw is simple but crucial: If you're trying to understand some phenomenon by interpreting some data, the kind of data you're interpreting is key. It's not enough for the data to be tightly related to the phenomenon, or to be downstream of the phenomenon, or enough to pin it down in the eyes of Solomonoff induction, or only predictable by understanding it. If you want to understand how a computer operating system works by interacting with one, it's far, far better to interact with the operating system at or near the conceptual/structural regime at which the operating system is constituted.

What's operating-system-y about an operating system is that it manages memory and caching, it manages CPU sharing between processes, it manages access to hardware devices, and so on. If you can read and interact with the code that talks about those things, that's much better than trying to understand operating systems by watching capacitors in RAM flickering, even if the sum of RAM+CPU+buses+storage gives you a reflection, an image, a projection of the operating system, which in some sense "doesn't leave anything out". What's mind-ish about a human mind is reflected in neural firing and rewiring, in that a difference in mental state implies a difference in neurons. But if you want to come to understand minds, you should look at the operations of the mind in descriptive and manipulative terms that center around, and fan out from, the distinctions that the mind makes internally for its own benefit. In trying to interpret a mind, you're trying to get the theory of the program.

Replies from: alexander-gietelink-oldenziel

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T13:31:03.963Z · LW(p) · GW(p)

You'll have to be a little more direct to get your point across I fear.

I am sensing you think mechinterp, SLT, and neuroscience aren't at a high enough level of abstraction. I am curious why you think so and would benefit from understanding more clearly what you are proposing instead.

↑ comment by TsviBT · 2024-12-29T13:38:13.608Z · LW(p) · GW(p)

They aren't close to the right kind of abstraction. You can tell because they use a low-level ontology, such that mental content, to be represented there, would have to be homogenized, stripped of mental meaning, and encoded. Compare trying to learn about arithmetic, and doing so by explaining a calculator in terms of transistors vs. in terms of arithmetic. The latter is the right level of abstraction; the former is wrong (it would be right if you were trying to understand transistors or trying to understand some further implementational aspects of arithmetic beyond the core structure of arithmetic).

What I'm proposing instead, is theory.
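To make the calculator analogy concrete, here is a toy sketch in Python (an illustration added here, not something from the thread): a 4-bit ripple-carry adder written only in terms of logic gates, next to the one-line arithmetic description of the same function. The two agree on every input, yet nothing in the gate-level code says "this is addition"; that fact only becomes visible once you bring in the arithmetic-level description. The function names and the 4-bit width are arbitrary choices for the example.

```python
def xor_gate(a: int, b: int) -> int:
    return a ^ b


def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One bit of addition, expressed only through gates."""
    partial = xor_gate(a, b)
    total = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(carry_in, partial))
    return total, carry_out


def gate_level_add(x: int, y: int, width: int = 4) -> int:
    """Ripple-carry adder: chain `width` full adders, least significant bit first."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # the final carry is dropped, so the result wraps mod 2**width


def arithmetic_level_add(x: int, y: int, width: int = 4) -> int:
    """The same function described at the level of arithmetic."""
    return (x + y) % (2 ** width)


# Exhaustive check over all 4-bit inputs: the two descriptions agree everywhere.
assert all(
    gate_level_add(x, y) == arithmetic_level_add(x, y)
    for x in range(16)
    for y in range(16)
)
print(gate_level_add(9, 9))  # 2, since 18 wraps around mod 16
```

The exhaustive check is the only place where the two levels meet: the gate description is complete, but you need the arithmetic description to say what it is a description of.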

Replies from: adam-shai, alexander-gietelink-oldenziel↑ comment by Adam Shai (adam-shai) · 2024-12-29T19:36:52.192Z · LW(p) · GW(p)

I think I disagree, or need some clarification. As an example, the phenomenon in question is that the physical features of children look more or less like combinations of the parents' features. Is the right kind of abstraction a taxonomy and theory of physical features at the level of nose-shapes and eyebrow thickness? Or is it at the low-level ontology of molecules and genes, or is it in the understanding of how those levels relate to each other?

Or is that not a good analogy?

Replies from: TsviBT↑ comment by TsviBT · 2024-12-29T20:05:07.603Z · LW(p) · GW(p)

I'm unsure whether it's a good analogy. Let me make a remark, and then you could reask or rephrase.

The discovery that the phenome is largely a result of the genome, is of course super important for understanding and also useful. The discovery of mechanically how (transcribe, splice, translate, enhance/promote/silence, trans-regulation, ...) the phenome is a result of the genome is separately important, and still ongoing. The understanding of "structurally how" characters are made, both in ontogeny and phylogeny, is a blob of open problems (evodevo, niches, ...). Likewise, more simply, "structurally what"--how to even think of characters. Cf. Günter Wagner, Rupert Riedl.

I would say the "structurally how" and "structurally what" is most analogous. The questions we want to answer about minds aren't like "what is a sufficient set of physical conditions to determine--however opaquely--a mind's effects", but rather "what smallish, accessible-ish, designable-ish structures in a mind can [understandably to us, after learning how] determine a mind's effects, specifically as we think of those effects". That is more like organology and developmental biology and telic/partial-niche evodevo (<-made up term but hopefully you see what I mean).

https://tsvibt.blogspot.com/2023/04/fundamental-question-what-determines.html

Replies from: adam-shai↑ comment by Adam Shai (adam-shai) · 2024-12-29T21:57:53.155Z · LW(p) · GW(p)

I suppose it depends on what one wants to do with their "understanding" of the system? Here's one AI safety case I worry about: if we (humans) don’t understand the lower-level ontology that gives rise to the phenomenon that we are more directly interested in (in this case I think that's something like an AI system's behavior/internal “mental” states - your "structurally what", if I'm understanding correctly, which to be honest I'm not very confident I am), then a sufficiently intelligent AI system that does understand that relationship will be able to exploit the extra degrees of freedom in the lower level ontology to our disadvantage, and we won’t be able to see it coming.

I very much agree that structurally what matters a lot, but that seems like half the battle to me.

↑ comment by TsviBT · 2024-12-29T22:06:07.862Z · LW(p) · GW(p)

I very much agree that structurally what matters a lot, but that seems like half the battle to me.

But somehow this topic is not afforded much care or interest. Some people will pay lip service to caring, others will deny that mental states exist, but either way the field of alignment doesn't put much force (money, smart young/new people, social support) toward these questions. This is understandable, as we have much less legible traction on this topic, but that's... undignified, I guess is the expression.

↑ comment by TsviBT · 2024-12-29T22:02:40.570Z · LW(p) · GW(p)

a sufficiently intelligent AI system that does understand that relationship will be able to exploit the extra degrees of freedom in the lower level ontology to our disadvantage, and we won’t be able to see it coming.

Even if you do understand the lower level, you couldn't stop such an adversarial AI from exploiting it, or exploiting something else, and taking control. If you understand the mental states (yeah, the structure), then maybe you can figure out how to make an AI that wants to not do that. In other words, it's not sufficient, and probably not necessary / not a priority.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T15:00:18.431Z · LW(p) · GW(p)

Ok. What would this theory look like, and how would it cash out into real-world consequences?

Replies from: TsviBT↑ comment by TsviBT · 2024-12-29T15:02:08.559Z · LW(p) · GW(p)

This is a derail. I can know that something won't work without knowing what would work. I don't claim to know something that would work. If you want my partial thoughts, some of them are here: https://tsvibt.blogspot.com/2023/09/a-hermeneutic-net-for-agency.html

In general, there's more feedback available at the level of "philosophy of mind" than is appreciated.

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T15:05:12.719Z · LW(p) · GW(p)

I think I am asking a very fair question.

What is the theory of change for your philosophy of mind cashing out into something with real-world consequences?

I.e. a training technique? Design principles? A piece of math? Etc.

Replies from: TsviBT↑ comment by TsviBT · 2024-12-29T15:10:53.971Z · LW(p) · GW(p)

I.e. a training technique? Design principles? A piece of math? Etc.

All of those, sure? First you understand, then you know what to do. This is a bad way to do peacetime science, but seems more hopeful for

- cruel deadline,

- requires understanding as-yet-unconceived aspects of Mind.

I think I am asking a very fair question.

No, you're derailing from the topic, which is the fact that the field of alignment keeps failing to even try to avoid / address major partial-consensus defeaters to alignment.

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-29T15:21:42.392Z · LW(p) · GW(p)

I'm confused why you are so confident in these "defeaters", by which I gather you mean objections/counterarguments to certain lines of attack on the alignment problem.

E.g. I doubt it would be good if the alignment community would outlaw mechinterp/slt/ neuroscience just because of some vague intuition that they don't operate at the right abstraction.

Certainly, the right level of abstraction is a crucial concern, but I don't think progress on this question will be made by blanket dismissals. People in these fields understand very well the problem you are pointing towards. Many people are thinking deeply about how to resolve this issue.

Replies from: TsviBT↑ comment by TsviBT · 2024-12-29T15:31:44.093Z · LW(p) · GW(p)

why you are so confident in these "defeaters"

More than any one defeater, I'm confident that most people in the alignment field don't understand the defeaters. Why? I mean, from talking to many of them, and from their choices of research.

People in these fields understand very well the problem you are pointing towards.

I don't believe you.

if the alignment community would outlaw mechinterp/slt/ neuroscience

This is an insane strawman. Why are you strawmanning what I'm saying?

I don't think progress on this question will be made by blanket dismissals

Progress could only be made by understanding the problems, which can only be done by stating the problems, which you're calling "blanket dismissals".

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-30T11:47:40.549Z · LW(p) · GW(p)

Okay, it seems like the commentariat agrees I am too combative. I apologize if you feel strawmanned.

Feels like we got a bit stuck. When you say "defeater" what I hear is a very confident blanket dismissal. Maybe that's not what you have in mind.

Replies from: ete↑ comment by plex (ete) · 2024-12-30T22:03:59.828Z · LW(p) · GW(p)

A defeater, in my mind, is a failure mode which, if you don't address it, means you will not succeed at aligning sufficiently powerful systems.[1] This does not mean that work not focused on defeaters is useless, but at some point you have to deal with them; and if the vast majority of people working towards alignment don't get them clearly, and the people who do get them claim we're nowhere near on track to find a way to beat them, then that is a scary situation.

This is true even if some of the work being done by people unaware of the defeaters is not useless, e.g. maybe it is successfully averting earlier forms of doom than the ones that require routing around the defeaters.

- ^

Not best considered as an argument against specific lines of attack, but as a problem which if unsolved leads inevitably to doom. People with a strong grok of a bunch of these often think that way more timelines are lost to "we didn't solve these defeaters" than to the problems even plausibly addressed by the class of work being done by most of the field. This does unfortunately make it get used as (and feel like) an argument against those approaches by people who don't understand, and don't claim to understand, those approaches, but that's not the generator or important nature of it.

↑ comment by ryan_greenblatt · 2024-12-29T02:23:57.830Z · LW(p) · GW(p)

There maybe should be a standardly used name for the field of generally reducing AI x-risk

I say "AI x-safety" and "AI x-safety technical research". I potentially cut the "x-" to just "AI safety" or "AI safety technical research".

Replies from: TsviBT, TsviBT↑ comment by TsviBT · 2024-12-29T02:35:20.927Z · LW(p) · GW(p)

"AI x-safety" seems ok. The "x-" is a bit opaque, and "safety" is vague, but I'll try this as my default.

(Including "technical" to me would exclude things like public advocacy.)

Replies from: ryan_greenblatt, sjadler↑ comment by ryan_greenblatt · 2024-12-29T03:59:18.097Z · LW(p) · GW(p)

Yeah, I meant that I use "AI x-safety" to refer to the field overall and "AI x-safety technical research" to specifically refer to technical research in that field (e.g. alignment research).

(Sorry about not making this clear.)

↑ comment by sjadler · 2024-12-29T08:03:39.652Z · LW(p) · GW(p)

I’ve often preferred a frame of ‘catastrophe avoidance’ over a frame of x-risk. This has a possible downside of people underfeeling the magnitude of risk, but also an upside of IMO feeling way more plausible. I think it’s useful to not need to win specific arguments about extinction, and also to not have some of the existential/extinction conflation happening in ‘x-‘.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-12-29T20:29:21.755Z · LW(p) · GW(p)

FWIW this seems overall highly obfuscatory to me. Catastrophic clearly includes things like "A bank loses $500M" and that's not remotely the same as an existential catastrophe.

Replies from: sjadler, Davidmanheim↑ comment by sjadler · 2024-12-29T20:32:33.906Z · LW(p) · GW(p)

It’s much more the same than a lot of prosaic safety though, right?

Let me put it this way: If an AI can’t achieve catastrophe on that order of magnitude, it also probably cannot do something truly existential.

One of the issues this runs into is if a misaligned AI is playing possum, and so doesn’t attempt lesser catastrophes until it can pull off a true takeover. I nonetheless think this framing points generally at the right type of work (understood that others may disagree, of course).

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-12-29T20:38:46.222Z · LW(p) · GW(p)

Not confident, but I think that "AIs that cause your civilization problems" and "AIs that overthrow your civilization" may be qualitatively different kinds of AIs. Regardless, existential threats are the most important thing here, and we just have a short term ('x-risk') that refers to that work.

And anyway I think the 'catastrophic' term is already being used to obfuscate, as Anthropic uses it exclusively on their website / in their papers [LW(p) · GW(p)], literally never talking about extinction or disempowerment[1], and we shouldn't let them get away with that by also adopting their worse terminology.

- ^

(And they use the term 'existential' 3 times in oblique ways that barely count.)

↑ comment by Davidmanheim · 2024-12-30T00:46:14.112Z · LW(p) · GW(p)

Yes - the word 'global' is a minimum necessary qualification for referring to catastrophes of the type we plausibly care about - and even then, it is not always clear that something like COVID-19 was too small an event to qualify.

↑ comment by the gears to ascension (lahwran) · 2024-12-30T02:53:51.007Z · LW(p) · GW(p)

How about "AI outcomes"

Replies from: mateusz-baginski↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-12-30T21:02:21.837Z · LW(p) · GW(p)

Insufficiently catchy

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-12-30T21:32:05.558Z · LW(p) · GW(p)

perhaps. but my reasoning is something like -

better than "alignment": what's being aligned? outcomes should be (citation needed)

better than "ethics": how does one act ethically? by producing good outcomes (citation needed).

better than "notkilleveryoneism": I actually would prefer everyone dying now to everyone being tortured for a million years and then dying, for example, and I can come up with many other counterexamples - not dying is not the (fundamental) problem, achieving good things is the problem (and would produce not-dying).

might not work for deontologists. that seems fine to me, I float somewhere between virtue ethics and utilitarianism anyway.

perhaps there are more catchy words that could be used, though. hope to see someone suggest one someday.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-12-31T15:18:41.196Z · LW(p) · GW(p)

[After I wrote down the thing, I became more uncertain about how much weight to give to it. Still, I think it's a valid consideration to have on your list of considerations.]

"AI alignment", "AI safety", "AI (X-)risk", "AInotkilleveryoneism", "AI ethics" came to be associated with somewhat specific categories of issues. When somebody says "we should work (or invest more or spend more) on AI {alignment,safety,X-risk,notkilleveryoneism,ethics}", they communicate that they are concerned about those issues and think that deliberate work on addressing those issues is required or otherwise those issues are probably not going to be addressed (to a sufficient extent, within relevant time, &c.).

"AI outcomes" is even broader/[more inclusive] than any of the above (the only step left to broaden it even further would be perhaps to say "work on AI being good" or, in the other direction, work on "technology/innovation outcomes") and/but also waters down the issue even more. Now you're saying "AI is not going to be (sufficiently) good by default (with various AI outcomes people having very different ideas about what makes AI likely not (sufficiently) good by default)".

It feels like we're moving in the direction of broadening our scope of consideration to (1) ensure we're not missing anything, and (2) facilitate coalition building (moral trade [? · GW]?). While this is valid, it risks (1) failing to operate on the/an appropriate level of abstraction, and (2) diluting our stated concerns so much that coalition building becomes too difficult because different people/groups endorsing stated concerns have their own interpretations/beliefs/value systems. (Something something find an optimum (but also be ready and willing to update where you think the optimum lies when situation changes)?)

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-12-31T16:40:09.127Z · LW(p) · GW(p)

but how would we do high intensity, highly focused research on something intentionally restructured to be an "AI outcomes" research question? I don't think this is pointless - agency research might naturally talk about outcomes in a way that is general across a variety of people's concerns. In particular, ethics and alignment seem like they're an unnatural split, and outcomes seems like a refactor that could select important problems from both AI autonomy risks and human agency risks. I have more specific threads I could talk about.

↑ comment by Daniel Tan (dtch1997) · 2024-12-29T11:24:40.557Z · LW(p) · GW(p)

standardly used name for the field of generally reducing AI x-risk

In my jargon at least, this is "AI safety", of which "AI alignment" is a subset.

↑ comment by philh · 2024-12-29T21:33:40.812Z · LW(p) · GW(p)

Beyond being an unfair and uninformed dismissal

Why do you think it's uninformed? John specifically says that he's taking "this work is trash" as background and not trying to convince anyone who disagrees. It seems like because he doesn't try, you assume he doesn't have an argument?

it risks unnecessarily antagonizing people

I kinda think it was necessary. (In that, the thing ~needed to be written and "you should have written this with a lot less antagonism" is not a reasonable ask.)

↑ comment by MondSemmel · 2024-12-29T10:56:09.818Z · LW(p) · GW(p)

1) "there are many worlds in which it is too late or fundamentally unable to deliver on its promise while prosaic alignment ideas do. And in worlds in which theory does bear fruit" - Yudkowsky had a post somewhere about you only getting to do one instance of deciding to act as if the world was like X. Otherwise you're no longer affecting our actual reality. I'm not describing this well at all, but I found the initial point quite persuasive.

2) Highly relevant LW post & concept: The Tale of Alice Almost: Strategies for Dealing With Pretty Good People [LW · GW]. People like Yudkowsky and johnswentworth think that vanishingly few people are doing something that's genuinely helpful for reducing x-risk, and most people are doing things that are useless at best or actively harmful (by increasing capabilities) at worst. So how should they act towards those people? Well, as per the post, that depends on the specific goal:

Suppose you value some virtue V and you want to encourage people to be better at it. Suppose also you are something of a “thought leader” or “public intellectual” — you have some ability to influence the culture around you through speech or writing.

Suppose Alice Almost is much more V-virtuous than the average person — say, she’s in the top one percent of the population at the practice of V. But she’s still exhibited some clear-cut failures of V. She’s almost V-virtuous, but not quite.

How should you engage with Alice in discourse, and how should you talk about Alice, if your goal is to get people to be more V-virtuous?

Well, it depends on what your specific goal is.

...

What if Alice is Diluting Community Values?

Now, what if Alice Almost is the one trying to expand community membership to include people lower in V-virtue … and you don’t agree with that?

Now, Alice is your opponent.

In all the previous cases, the worst Alice did was drag down the community’s median V level, either directly or by being a role model for others. But we had no reason to suppose she was optimizing for lowering the median V level of the community. Once Alice is trying to “popularize” or “expand” the community, that changes. She’s actively trying to lower median V in your community — that is, she’s optimizing for the opposite of what you want.

The mainstream wins the war of ideas by default. So if you think everyone dies if the mainstream wins, then you must argue against the mainstream, right?

↑ comment by Daniel Tan (dtch1997) · 2024-12-29T11:37:40.953Z · LW(p) · GW(p)

There's plenty to criticise about mainstream alignment research

I'm curious what you think John's valid criticisms are. His piece is so hyperbolic that I have to consider all arguments presented there somewhat suspect by default.

Edit: Clearly people disagree with this sentiment. I invite (and will strongly upvote) strong rebuttals.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-04-08T17:27:42.276Z · LW(p) · GW(p)

The Ammann Hypothesis: Free Will as a Failure of Self-Prediction

A fox chases a hare. The hare evades the fox. The fox tries to predict where the hare is going - the hare tries to make it as hard to predict as possible.

Q: Who needs the larger brain?

A: The fox.

This is a little animal tale meant to illustrate the following phenomenon:

Generative complexity can be much smaller than predictive complexity under partial observability. In other words, when partially observing a black box, there can be simple internal mechanisms that create complex patterns which require very large predictors to predict well.

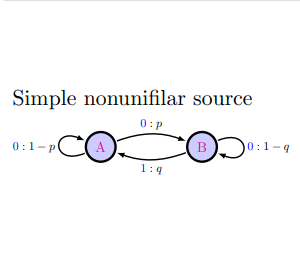

Consider the following simple 2-state HMM

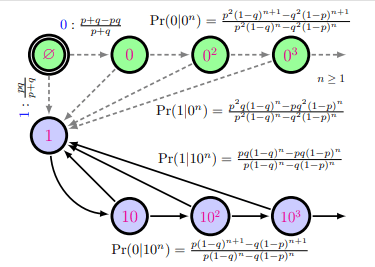

Note that the symbol 0 is output in three different ways: A -> A, A -> B, and B -> B. This means that if we see the symbol 0, we don't know where we are. We can use Bayesian updating to guess where we are, but starting from a stationary distribution our belief states can become extremely complicated - in fact, the data sequence generated by the simple nonunifilar source has an optimal predictor HMM that requires infinitely many states.

This simple example illustrates the gap between generative complexity and predictive complexity, a generative-predictive gap.

I note that in this case the generative-predictive gap is intrinsic. The gap happens even (especially!) in the ideal limit of perfect prediction!
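A minimal sketch of the example in Python, assuming the transition structure implied by the text and spelled out in anithite's reply below (the figure itself is not reproduced here): from A, emit 0 and stay with probability 1 - p, or emit 0 and move to B with probability p; from B, emit 0 and stay with probability 1 - q, or emit 1 and move to A with probability q. The values p = q = 1/2 are illustrative, and the function names are hypothetical. The generator needs one bit of hidden state, while the exact Bayesian belief Pr[B] takes a new value after every additional 0 in a run (for these parameters it is n/(n+1) after a 1 followed by n zeros), so an exact predictor that stores its belief as a finite-state machine needs unboundedly many states.

```python
import random
from fractions import Fraction

# Assumed parameters (illustrative): A -> B with prob P, B -> A (emitting 1) with prob Q.
P = Fraction(1, 2)
Q = Fraction(1, 2)


def generate(n_steps, p=P, q=Q, seed=0):
    """Sample from the 2-state generator: one bit of hidden state, one coin flip per step."""
    rng = random.Random(seed)
    in_b = False  # hidden state: False = A, True = B
    out = []
    for _ in range(n_steps):
        if not in_b:
            out.append(0)                   # A always emits 0 ...
            in_b = rng.random() < float(p)  # ... and moves to B with prob p
        elif rng.random() < float(q):
            out.append(1)                   # B -> A is the only transition emitting 1
            in_b = False
        else:
            out.append(0)                   # B -> B emits 0
    return out


def update(belief, symbol, p=P, q=Q):
    """Exact Bayesian filter step on (Pr[A], Pr[B])."""
    a, b = belief
    if symbol == 1:
        return (Fraction(1), Fraction(0))  # a 1 reveals that we just moved to A
    a_new = a * (1 - p)                    # path A -> A
    b_new = a * p + b * (1 - q)            # paths A -> B and B -> B
    z = a_new + b_new                      # = Pr[next symbol is 0]
    return (a_new / z, b_new / z)


def belief_states(seq, p=P, q=Q):
    """Collect every distinct belief visited, starting from the stationary distribution."""
    b0 = p / (p + q)
    belief = (1 - b0, b0)
    seen = {belief}
    for s in seq:
        belief = update(belief, s, p, q)
        seen.add(belief)
    return seen


# After a 1 followed by n zeros, Pr[B] = n / (n + 1): a new value for every n.
# prints: 1 1/2, 2 2/3, 3 3/4, 4 4/5, 5 5/6 (one per line)
belief = (Fraction(1), Fraction(0))
for n in range(1, 6):
    belief = update(belief, 0)
    print(n, belief[1])

print(len(belief_states(generate(10_000))), "distinct beliefs in 10,000 steps")
```

The number of distinct beliefs keeps growing with the longest run of zeros seen, which is the finite-sample shadow of the infinitely many predictor states.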

Free Will as generative-predictive gap

The brain is a predictive engine. So much is accepted. Now imagine an organism/agent endowed with a brain predicting the external world. To do well, it may be helpful to predict its own actions. What if this process has a generative-predictive gap? Then the brain will ascribe an inherent uncertainty ['entropy'] to its own actions!

An agent with a generative-predictive gap in predicting its own actions would experience a mysterious force 'choosing' its actions. It may even decide to call this irreducible uncertainty of self-prediction "Free Will".

************************************************************

[Nora Ammann initially suggested this idea to me. Similar ideas have been expressed by Steven Byrnes]

Replies from: obserience, adam-shai, eggsyntax, matthias-dellago↑ comment by anithite (obserience) · 2025-04-08T20:27:02.434Z · LW(p) · GW(p)

In my opinion, this is a poor choice of problem for demonstrating the generator/predictor simplicity gap.

If not restricted to Markov model based predictors, we can do a lot better simplicity-wise.

A simple Bayesian predictor tracks one real-valued probability B in the range 0...1. The probability of state A is implicitly 1-B.

This is initialized to B=p/(p+q) as a prior given equilibrium probabilities of A/B states after many time steps.

P("1")=qB is our prediction, with P("0")=1-P("1") implicitly.

Then update the usual Bayesian way:

if "1", B=0 (known state transition to A)

if "0", A,B:=(A*(1-p),A*p+B*(1-q)), then normalise by dividing both by the sum. (standard bayesian update discarding falsified B-->A state transition)

In one step, after simplification: B:=(p+B(1-p-q))/(1-Bq)

That's a lot more practical than having infinite states. Numerical stability and achieving acceptable accuracy of a real implementable predictor is straightforward but not trivial. A near perfect predictor is only slightly larger than the generator.

A perfect predictor can use 1 bit (have we ever observed a 1) and ceil(log2(n)) bits counting n, the number of observed zeroes in the last run to calculate the perfectly correct prediction. Technically as n-->infinity this turns into infinite bits but scaling is logarithmic so a practical predictor will never need more than ~500 bits given known physics.
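A minimal sketch of this scalar predictor, under the same assumed transition structure (A -> B with probability p, emitting 0; B -> A with probability q, emitting 1). It checks that the one-line update agrees exactly with the unnormalized two-state update followed by normalization, using the fact that the normalizer is 1 - Bq = P("0"), and it implements the counting predictor, which stores only whether a 1 has been seen and the length n of the current run of zeros. The function and class names and the test parameters p = 1/3, q = 1/4 are illustrative; exact `Fraction` arithmetic sidesteps the numerical-stability question the comment mentions.

```python
from fractions import Fraction


def step_two_state(b, symbol, p, q):
    """Reference filter: propagate (Pr[A], Pr[B]) along the transitions consistent with symbol."""
    if symbol == 1:
        return Fraction(0)              # a 1 is only emitted on B -> A
    a = 1 - b
    a_new, b_new = a * (1 - p), a * p + b * (1 - q)
    return b_new / (a_new + b_new)


def step_closed_form(b, symbol, p, q):
    """The same update in one line; the normaliser 1 - b*q is P("0")."""
    if symbol == 1:
        return Fraction(0)
    return (p + b * (1 - p - q)) / (1 - b * q)


def prob_one(b, q):
    """Prediction for the next symbol: P("1") = q * Pr[B]."""
    return q * b


class CountingPredictor:
    """Keeps only a flag (has a 1 been seen?) and n, the zeros since the last 1."""

    def __init__(self, p, q):
        self.p, self.q = p, q
        self.seen_one = False
        self.n = 0

    def observe(self, symbol):
        if symbol == 1:
            self.seen_one, self.n = True, 0
        else:
            self.n += 1

    def belief_b(self):
        # Pr[B] is a deterministic function of (seen_one, n): replay n zero-updates.
        b = Fraction(0) if self.seen_one else self.p / (self.p + self.q)
        for _ in range(self.n):
            b = step_closed_form(b, 0, self.p, self.q)
        return b


p, q = Fraction(1, 3), Fraction(1, 4)
b = p / (p + q)                        # stationary prior Pr[B]
cp = CountingPredictor(p, q)
for s in [0, 0, 1, 0, 0, 0, 1, 0]:
    assert step_closed_form(b, s, p, q) == step_two_state(b, s, p, q)
    b = step_two_state(b, s, p, q)
    cp.observe(s)
    assert cp.belief_b() == b
print(prob_one(b, q))                  # 1/12: one 0 after a 1 gives Pr[B] = p
```

The counting predictor trades memory for recomputation: its state is a flag plus a counter, and a practical version would cache or tabulate the replayed beliefs instead of recomputing them.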

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-04-12T03:52:21.578Z · LW(p) · GW(p)

Yes - this is specifically staying within the framework of hidden Markov chains.

Even if you go outside it, though, it seems you agree there is a generative-predictive gap - you're just saying it's not infinite.

Eggsyntax below gives the canonical example of hash functions, where prediction is harder than generation; this holds for general computable processes.

↑ comment by Adam Shai (adam-shai) · 2025-04-09T02:09:48.174Z · LW(p) · GW(p)

See "Can a Finite-State Fox Catch a Markov Mouse?" for more details.

↑ comment by eggsyntax · 2025-04-11T16:08:25.700Z · LW(p) · GW(p)

Eliezer made that point nicely with respect to LLMs here [LW · GW]:

Consider that somewhere on the internet is probably a list of thruples: <product of 2 prime numbers, first prime, second prime>.

GPT obviously isn't going to predict that successfully for significantly-sized primes, but it illustrates the basic point:

There is no law saying that a predictor only needs to be as intelligent as the generator, in order to predict the generator's next token.

Indeed, in general, you've got to be more intelligent to predict particular X, than to generate realistic X. GPTs are being trained to a much harder task than GANs.

Same spirit: <Hash, plaintext> pairs, which you can't predict without cracking the hash algorithm, but which you could far more easily generate typical instances of if you were trying to pass a GAN's discriminator about it (assuming a discriminator that had learned to compute hash functions).
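A toy version of the thruple example, as a sketch with small primes only (the 1,000 to 5,000 range and the function names are illustrative assumptions): writing a line of the list takes one multiplication, while predicting the second and third fields from the first means factoring the product, done here by naive trial division whose work grows with the smaller prime. For large primes no efficient classical factoring algorithm is known, which is what makes the predictor's task so much harder than the generator's.

```python
import random


def is_prime(n: int) -> bool:
    """Trial-division primality test; fine for the small numbers used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


PRIMES = [n for n in range(1_000, 5_000) if is_prime(n)]


def generate_thruple(rng: random.Random) -> tuple[int, int, int]:
    """Generator: pick two distinct primes and multiply. Cost: one multiplication."""
    p1, p2 = sorted(rng.sample(PRIMES, 2))
    return p1 * p2, p1, p2


def predict_primes(product: int) -> tuple[int, int, int]:
    """Predictor: from the product alone, recover both primes by trial division.
    Also returns the number of candidate divisors tried."""
    d, tried = 2, 0
    while d * d <= product:
        tried += 1
        if product % d == 0:
            return d, product // d, tried
        d += 1
    raise ValueError("no nontrivial factor found")


rng = random.Random(0)
product, p1, p2 = generate_thruple(rng)
f1, f2, tried = predict_primes(product)
assert (f1, f2) == (p1, p2)
print(f"<{product}, {p1}, {p2}>: predictor tried {tried} divisors")
```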

↑ comment by Matthias Dellago (matthias-dellago) · 2025-04-09T15:19:47.088Z · LW(p) · GW(p)

I first heard this idea from Joscha Bach, and it is my favorite explanation of free will. I have not heard it called a 'predictive-generative gap' before, though, which is very well formulated imo.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-16T19:32:12.368Z · LW(p) · GW(p)

Misgivings about Category Theory

[No category theory is required to read and understand this screed]

A week does not go by without somebody asking me what the best way to learn category theory is. Despite being set to mark its 80th anniversary, Category Theory has the evergreen reputation for being the Hot New Thing, a way to radically expand the braincase of the user through an injection of abstract mathematics. Its promise is alluring, intoxicating for any young person desperate to prove they are the smartest kid on the block.

Recently, there has been significant investment and attention focused on the intersection of category theory and AI, particularly in AI alignment research. Despite the influx of interest I am worried that it is not entirely understood just how big the theory-practice gap is.

I am worried that overselling risks poisoning the well for advanced mathematical approaches to science in general, and to AI alignment in particular. As I believe mathematically grounded approaches to AI alignment are perhaps the only way to get robust worst-case safety guarantees for the superintelligent regime, I think this would be bad.

I find it difficult to write this. I am a big believer in mathematical approaches to AI alignment, working for one organization (Timaeus) betting on this and being involved with a number of other groups. I have many friends within the category theory community, I have even written an abstract nonsense paper myself, I am sympathetic to the aims and methods of the category theory community. This is all to say: I'm an insider, and my criticisms come from a place of deep familiarity with both the promise and limitations of these approaches.

A Brief History of Category Theory

‘Before functoriality Man lived in caves’ - Brian Conrad

Category theory is a branch of pure mathematics notorious for its extreme abstraction, affectionately derided as 'abstract nonsense' by its practitioners.

Category theory's key strength lies in its ability to 'zoom out' and identify analogies between different fields of mathematics and different techniques. This approach enables mathematicians to think 'structurally', viewing mathematical concepts in terms of their relationships and transformations rather than their intrinsic properties.

Modern mathematics is less about solving problems within established frameworks and more about designing entirely new games with their own rules. While school mathematics teaches us to be skilled players of pre-existing mathematical games, research mathematics requires us to be game designers, crafting rule systems that lead to interesting and profound consequences. Category theory provides the meta-theoretic tools for this game design, helping mathematicians understand which definitions and structures will lead to rich and fruitful theories.

“I can illustrate the second approach with the same image of a nut to be opened.

The first analogy that came to my mind is of immersing the nut in some softening liquid, and why not simply water? From time to time you rub so the liquid penetrates better,and otherwise you let time pass. The shell becomes more flexible through weeks and months – when the time is ripe, hand pressure is enough, the shell opens like a perfectly ripened avocado!

A different image came to me a few weeks ago.

The unknown thing to be known appeared to me as some stretch of earth or hard marl, resisting penetration… the sea advances insensibly in silence, nothing seems to happen, nothing moves, the water is so far off you hardly hear it… yet it finally surrounds the resistant substance.”

- Alexandre Grothendieck

The Promise of Compositionality and ‘Applied Category Theory’

Recently a new wave of category theory has emerged, dubbing itself ‘applied category theory’.

Applied category theory, despite its name, represents less an application of categorical methods to other fields and more a fascinating reverse flow: problems from economics, physics, social sciences, and biology have inspired new categorical structures and theories. Its central innovation lies in pushing abstraction even further than traditional category theory, focusing on the fundamental notion of compositionality - how complex systems can be built from simpler parts.

The idea of compositionality has long been recognized as crucial across the sciences, but it still lacks a strong mathematical foundation. Scientists face a universal challenge: while simple systems can be understood in isolation, combining them quickly leads to overwhelming complexity. In software engineering, codebases beyond a certain size become unmanageable. In materials science, predicting bulk properties from molecular interactions remains challenging. In economics, the gap between microeconomic and macroeconomic behaviours persists despite decades of research.

Here then lies the great promise: through the lens of categorical abstraction, the tools of reductionism might finally be extended to complex systems. The dream is that, just as thermodynamics has been derived from statistical physics, macroeconomics could be systematically derived from microeconomics. Category theory promises to provide the mathematical language for describing how complex systems emerge from simpler components.

To what extent has this promise been borne out so far? On a purely scientific level, applied category theorists have uncovered a vast landscape of compositional patterns. In a way, they are building a giant catalogue, a bestiary, a periodic table not of ‘atoms’ (=simple things) but of all the different ways ‘atoms’ can fit together into molecules (=complex systems).

Not surprisingly, it turns out that compositional systems have an almost unfathomable diversity of behavior. The fascinating thing is that this diversity, while vast, isn't irreducibly complex - it can be packaged, organized, and understood using the arcane language of category theory. To me this suggests the field is uncovering something fundamental about how complexity emerges.

How close is category theory to real-world applications?

Are category theorists very smart? Yes. The field attracts and demands extraordinary mathematical sophistication. But intelligence alone doesn't guarantee practical impact.

It can take many decades for basic science to yield real-world applications; neural networks themselves are a great example. In the long term, I am bullish that category theory will prove scientifically important. But at present the technology readiness level isn’t there.

There are prototypes. There are proofs of concept. But there are no actual applications in the real world beyond a few trials. The theory-practice gap remains stubbornly wide.

The principality of mathematics is truly vast. If categorical approaches fail to deliver on their grandiose promises, I am worried it will poison the well for other theoretical approaches as well, which would be a crying shame.

↑ comment by Daniel Murfet (dmurfet) · 2024-11-17T02:04:08.135Z

> Modern mathematics is less about solving problems within established frameworks and more about designing entirely new games with their own rules. While school mathematics teaches us to be skilled players of pre-existing mathematical games, research mathematics requires us to be game designers, crafting rule systems that lead to interesting and profound consequences

I don't think so. This probably describes the kind of mathematics you aspire to do, but still the bulk of modern research in mathematics is in fact about solving problems within established frameworks and usually such research doesn't require us to "be game designers". Some of us are of course drawn to the kinds of frontiers where such work is necessary, and that's great, but I think this description undervalues the within-paradigm work that is the bulk of what is going on.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-17T08:53:31.804Z

Yes, that's worded too strongly, and a result of me putting some key phrases into Claude and not proofreading. :p

I agree with you that most modern math is within-paradigm work.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-16T19:32:29.535Z

I shall now confess to a great caveat. When at last the Hour is there and the Program of the World is revealed to the Descendants of Man, they will gaze upon the Lines Laid Bare and Rejoice; for the Code Kernel of God is written in category theory.

↑ comment by Daniel Murfet (dmurfet) · 2024-11-17T02:05:30.181Z

Typo, I think you meant singularity theory :p

↑ comment by lemonhope (lcmgcd) · 2024-11-17T07:05:18.755Z

You should not bury such a good post in a shortform

↑ comment by Quinn (quinn-dougherty) · 2024-11-17T17:43:35.490Z

My dude, top-level post: this does not read like a shortform.

↑ comment by Quinn (quinn-dougherty) · 2024-12-07T05:20:23.615Z

I was at an ARIA meeting with a bunch of category theorists working on Safeguarded AI, and many of them didn't know what the work had to do with AI.

epistemic status: short version of post because I never got around to doing the proper effort post I wanted to make.

↑ comment by StartAtTheEnd · 2024-11-17T09:12:17.384Z

Great post!

It's a habit of mine to think in very high levels of abstraction (I haven't looked much into category theory though, admittedly), and while it's fun, it's rarely very useful. I think it's because of a width-depth trade-off. Concrete real-world problems have a lot of information specific to that problem; you might even say that the unique information is the problem. An abstract idea which applies to all of mathematics is way too general to help much with a specific problem; it can just help a tiny bit with a million different problems.

I also doubt the need for things which are so complicated that you need a team of people to make sense of them. I think it's likely a result of bad design. If a beginner programmer made a slot machine game, the code would likely be convoluted and unintuitive, but you could probably design the program in a way that all of it fits in your working memory at once. Something like "A slot machine is a function from the cartesian product of wheels to a set of rewards": an understanding which would simplify the problem so that you could write it much shorter and simpler than the beginner. What I mean is that there may exist simple designs for most problems in the world, with complicated designs being due to a lack of understanding.
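To make the slot-machine framing concrete, here is a minimal Python sketch. It assumes three identical wheels and a payout table that are entirely hypothetical; the symbols and the names `WHEELS`, `reward` and `spin` are illustrative and do not come from the comment. The machine is modelled exactly as described: a function from the cartesian product of the wheels to a set of rewards.

```python
import random
from itertools import product

# Hypothetical wheels: three identical reels with three symbols each.
WHEELS = [
    ("cherry", "lemon", "seven"),
    ("cherry", "lemon", "seven"),
    ("cherry", "lemon", "seven"),
]


def reward(outcome: tuple[str, ...]) -> int:
    """Map one point of the cartesian product of wheels to a payout (hypothetical rules)."""
    if len(set(outcome)) == 1:  # every wheel shows the same symbol
        return 100 if outcome[0] == "seven" else 10
    if outcome.count("cherry") == 2:  # exactly two cherries
        return 2
    return 0


def spin(rng: random.Random) -> tuple[str, ...]:
    """Sample one point of the product by picking one symbol per wheel."""
    return tuple(rng.choice(wheel) for wheel in WHEELS)


# The whole game is a function on a small finite set, so it can be enumerated.
all_outcomes = list(product(*WHEELS))
# Average payout, assuming every symbol on a wheel is equally likely.
expected_payout = sum(reward(o) for o in all_outcomes) / len(all_outcomes)
```

For example, `reward(spin(random.Random(0)))` plays one round. The design choice is the one the comment argues for: once the machine is "just" a function on a product set, the entire game fits in a few lines and can be checked exhaustively with `itertools.product`.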

The real world values the practical way more than the theoretical, and the practical is often quite sloppy and imperfect, and made to fit with other sloppy and imperfect things.

The best things in society are obscure by statistical necessity, and it's painful to see people at the tail ends doubt themselves at the inevitable lack of recognition and reward.

↑ comment by lemonhope (lcmgcd) · 2024-11-17T07:07:14.530Z

As a layman, I have not seen much unrealistic hype. I think the hype-level is just about right.

↑ comment by Maelstrom · 2024-11-16T22:54:52.568Z

One needs only to read four or so papers on category theory applied to AI to understand the problem. None of them share a common foundation on what type of constructions to use or formalize in category theory. The core issue is that category theory is a general language for all of mathematics, and as commonly used it just exponentially increases the search space for useful mathematical ideas.

I want to be wrong about this, but I have yet to find category theory uniquely useful outside of some subdomains of pure math.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-03-01T23:11:03.862Z

ADHD is about Voluntary vs. Involuntary Actions

The way I conceptualize ADHD is as a constraint on the quantity and magnitude of voluntary actions I can undertake. When others discuss actions and planning, their perspective often feels foreign to me—they frame it as a straightforward conscious choice to pursue or abandon plans. For me, however, initiating action (especially longer-term, less immediately rewarding tasks) is better understood as "submitting a proposal to a capricious djinn who may or may not fulfill the request." The more delayed the gratification and the longer the timeline, the less likely the action will materialize.

After three decades inhabiting my own mind, I've found that effective decision-making has less to do with consciously choosing the optimal course and more to do with leveraging my inherent strengths (those behaviors I naturally gravitate toward, largely outside my conscious control) while avoiding commitments that highlight my limitations (those things I genuinely intend to do and "commit" to, but realistically never accomplish).

ADHD exists on a spectrum rather than as a binary condition. I believe it serves an adaptive purpose—by restricting the number of actions under conscious voluntary control, those with ADHD may naturally resist social demands on their time and energy, and generally favor exploration over exploitation.

Society exerts considerable pressure against exploratory behavior. Most conventional advice and social expectations effectively truncate the potential for high-variance exploration strategies. While one approach to valuable exploration involves deliberately challenging conventions, another method simply involves burning bridges to more traditional paths of success.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2025-03-02T17:07:37.830Z

My partner has ADHD. She and I talk about it often because I don’t, and understanding and coordinating with each other takes a lot of work.

Her environment is a strong influence on what tasks she considers and chooses. If she notices a weed in the garden walking from the car to the front door, she can get caught up for hours weeding before she makes it into the house. If she’s in her home office trying to work from home and notices something to tidy, same thing.

All the tasks her environment suggests to her seem important and urgent, because she’s not comparing them to some larger list of potential priorities that apply to different contexts - she’s always working on the top priority strictly with reference to the context she’s in at the moment.

She is much better than me at accomplishing tasks that her environment naturally suggests to her - cooking (inspired by recipes she finds on social media), cleaning, shopping, gardening, socializing, and making social plans in response to texts and notifications on her phone.

I am much better than her at constructing an organized list of global priorities and working through them systematically. However, I find it very difficult to be opportunistic, and I can be inflexible and distracted from the moment because I’m always thinking of the one main task I want to focus on.

I don’t think explore/exploit is quite the right frame in our relationship. I’m much more capable of “exploring” topics that require understanding complex abstract interconnections because I can force myself to keep coming back to them over and over again, whatever they are, in any environment, until I’ve understood them. By contrast she’s more capable of “exploiting” unpredictable opportunities as they arise. But the opportunities she and I are exposed to are constrained by our patterns of attention.

↑ comment by AlphaAndOmega · 2025-03-02T13:01:59.007Z

I have ADHD, and also happen to be a psychiatry resident.

As far as I can tell, it has been nothing but negative in my personal experience. It is a handicap, one I can overcome with coping mechanisms and medication, but I struggle to think of any positive impact on my life.

For a while, there were evopsych theories that postulated that ADHD had an adaptive benefit, but evopsych is a shaky field at the best of times, and no clear benefit was demonstrated.

https://pubmed.ncbi.nlm.nih.gov/32451437/

>All analyses performed support the presence of long-standing selective pressures acting against ADHD-associated alleles until recent times. Overall, our results are compatible with the mismatch theory for ADHD but suggest a much older time frame for the evolution of ADHD-associated alleles compared to previous hypotheses.

The ancient ancestral environment probably didn't reward strong executive function and consistency in planning as strongly as agricultural societies did. Even so, the study found that prevalence was dropping even during Palaeolithic times, so it wasn't even something selected for in hunter-gatherers!

I hate having ADHD, and sincerely hope my kids don't. I'm glad I've had a reasonably successful life despite having it.