Comments

In my opinion, this is a poor choice of problem for demonstrating the generator/predictor simplicity gap.

If not restricted to Markov model based predictors, we can do a lot better simplicity-wise.

A simple Bayesian predictor tracks one real-valued probability B in the range 0...1. The probability of state A is implicitly 1-B.

This is initialized to B=p/(p+q) as a prior given equilibrium probabilities of A/B states after many time steps.

P("1")=qB is our prediction (a "1" is only emitted on the B-->A transition), with P("0")=1-P("1") implicitly.

Then update the usual Bayesian way:

if "1", B=0 (known state transition to A)

if "0", A,B:=(A*(1-p),A*p+B*(1-q)), then normalise by dividing both by the sum. (standard bayesian update discarding falsified B-->A state transition)

In one step after simplification: B:=(p+B(1-p-q))/(1-Bq)

That's a lot more practical than having infinite states. Numerical stability and achieving acceptable accuracy of a real implementable predictor is straightforward but not trivial. A near perfect predictor is only slightly larger than the generator.

A perfect predictor can use 1 bit (have we ever observed a 1) and ceil(log2(n)) bits counting n, the number of observed zeroes in the last run to calculate the perfectly correct prediction. Technically as n-->infinity this turns into infinite bits but scaling is logarithmic so a practical predictor will never need more than ~500 bits given known physics.
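A minimal Python sketch of the predictor described above. It assumes the transition structure implied by the update rule: A stays in A with probability 1-p or moves to B with probability p (both emitting "0"), and B stays in B with probability 1-q (emitting "0") or moves to A with probability q (emitting "1"). Under that assumption the chance of a "1" is q times the tracked B. All function names are illustrative and not from the original post.

```python
def stationary_b(p, q):
    """Prior for B: equilibrium probability of being in state B."""
    return p / (p + q)


def prob_one(b, q):
    """Assumed emission rule: a "1" only comes from the B-->A transition."""
    return q * b


def update(b, symbol, p, q):
    """Bayesian update of B after observing one symbol (0 or 1)."""
    if symbol == 1:
        return 0.0  # a "1" means we just moved to A
    a = 1.0 - b
    new_a = a * (1.0 - p)            # A-->A
    new_b = a * p + b * (1.0 - q)    # A-->B or B-->B
    return new_b / (new_a + new_b)   # normalise; B-->A is ruled out by the "0"


def b_after_run(n, seen_one, p, q):
    """Counter-based form: B after n zeros since the last "1".

    If no "1" has been seen yet, the run starts from the stationary prior.
    """
    b = 0.0 if seen_one else stationary_b(p, q)
    for _ in range(n):
        b = update(b, 0, p, q)
    return b


if __name__ == "__main__":
    p, q = 0.2, 0.5  # arbitrary example parameters
    b = stationary_b(p, q)
    for symbol in [0, 0, 1, 0, 0, 0]:
        print(f"P(1)={prob_one(b, q):.4f}, observed {symbol}")
        b = update(b, symbol, p, q)
```

The `b_after_run` helper shows why the (seen a 1, length of current zero run) pair is enough state: the tracked probability is a deterministic function of those two values.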

TLDR:I got stuck on the notation [a][b][c][...]→f(a,b,c,...). LLMs probably won't do much better on that for now. Translate it into "find an unknown f(*args)" and the LLMs get it right with probability ~20%, depending on the model. o3-mini-high does better. Sonnet 3.7 did get it in one shot, but I had it write code for doing the substitutions, which it otherwise messes up a lot.

Like others, I looked for some sort of binary operator or concatenation rule. Replacing "][" with "|" or "," would have made this trivial. Straight string substitutions don't work since "[[]]" can be either 2 or "[...][1][...]" as part of a prime exponent set. The notation is the problem. Staring at string diffs would have helped in hindsight maybe.

Turning this into an unknown f() puzzle makes it straightforward for LLMs (and humans) to solve.

1 = f()

2 = f(f())

3 = f(0,f())

4 = f(f(f()))

12 = f(f(f()),f())

0 = 0

-1 = -f()

19 = f(0,0,0,0,0,0,0,f())

20 = f(f(f()),0,f())

-2 = -f(f())

1/2 = f(-f())

sqrt(2) = f(f(-f()))

72^(1/6) = f(f(-f()),f(0,-f()))

5/4 = f(-f(f()),0,f())

84 = f(f(f()),f(),0,f())

25/24 = f(-f(0,f()),-f(),f(f()))

Substitutions are then quite easy though most of the LLMs screw up a substitution somewhere unless they use code to do string replacements or do thinking where they will eventually catch their mistake.

Then it's ~25% likely they get it one shot, and ~100% if you mention that primes are involved or that addition isn't. It depends on the LLM. o3-mini-high got it. Claude 3.7 got it one shot with no hints from a fully substituted starting point, but that was best of k~=4 with lots of failure otherwise. Models have strong priors for addition as a primitive and definitely don't approach things systematically. Suggesting they focus on single operand evaluations (2,4,1/2,sqrt(2)) gets them on the right track but there's still a bias towards addition.
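For anyone who wants to check the substituted examples mechanically, here is a small Python sketch. It assumes the rule the "prime exponent" hints point at: the arguments of f are exponents of successive primes, so f(a,b,c,...) = 2^a * 3^b * 5^c * ... That rule is inferred from the listed examples, and floats are used only so that fractional exponents like sqrt(2) work.

```python
import math

# First ten primes; enough for every example listed above.
PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]


def f(*exponents):
    """Assumed rule: f(a, b, c, ...) = 2**a * 3**b * 5**c * ..."""
    if len(exponents) > len(PRIMES):
        raise ValueError("extend PRIMES for longer argument lists")
    result = 1.0
    for prime, exponent in zip(PRIMES, exponents):
        result *= prime ** exponent
    return result


if __name__ == "__main__":
    checks = {
        "12": (f(f(f()), f()), 12),
        "1/2": (f(-f()), 1 / 2),
        "sqrt(2)": (f(f(-f())), math.sqrt(2)),
        "72^(1/6)": (f(f(-f()), f(0, -f())), 72 ** (1 / 6)),
        "25/24": (f(-f(0, f()), -f(), f(f())), 25 / 24),
    }
    for name, (got, want) in checks.items():
        print(name, math.isclose(got, want))
```

Note there is no addition anywhere in the evaluation, only nesting and multiplication of prime powers.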

None of the labs would be doing undirected drift. That wouldn't yield improvement for exactly the reasons you suggest.

In the absence of a ground truth quality/correctness signal, optimizing for coherence works. This can give prettier answers (in the way that averaged faces are prettier) but this is limited. The inference time scaling equivalent would be a branching sampling approach that searches for especially preferred token sequences rather than the current greedy sampling approach. Optimising for idea level coherence can improve model thinking to some extent.

For improving raw intelligence significantly, ground truth is necessary. That's available in STEM domains, computer programming tasks being the most accessible. One can imagine grounding hard engineering the same way with a good mechanical/electrical simulation package. TLDR:train for test-time performance.

Then just cross your fingers and hope for transfer learning into softer domains.

For softer domains, ground truth is still accessible via tests on humans (EG:optimise for user approval). This will eventually yield super-persuaders that get thumbs up from users. Persuasion performance is trainable but maybe not a wise thing to train for.

As to actually improving some soft domain skill like "write better english prose" that's not easy to optimise directly as you've observed.

O1 now passes the simpler "over yellow" test from the above. Still fails the picture book example though.

For a complex mechanical drawing, O1 was able to work out easier dimensions but anything more complicated tends to fail. Perhaps the full O3 will do better given ARC-AGI benchmark performance.

Meanwhile, Claude 3.5 and 4o fail somewhat worse, not even correctly identifying axial and diameter dimensions.

Visuospatial performance is improving albeit slowly.

My hope is that the minimum viable pivotal act requires only near human AGI. For example, hack competitor training/inference clusters to fake an AI winter.

Aligning +2SD human equivalent AGI seems more tractable than straight up FOOMing to ASI safely.

One lab does it to buy time for actual safety work.

Unless things slow down massively we probably die. An international agreement would be better but seems unlikely.

This post raises a large number of engineering challenges. Some of those engineering challenges rely on other assumptions being made. For example, the use of energy carrying molecules rather than electricity or mechanical power which can cross vacuum boundaries easily. Overall a lot of "If we solve X via method Y (which is the only way to do it) problem Z occurs" without considering making several changes at once that synergistically avoid multiple problems.

"Too much energy" means too much to be competitive with normal biological processes.

That goalpost should be right at the top and clearly stated instead of "microscopic machines that [are] superior". "grey goo alone will have doubling times slower than optimised biological systems" is definitely plausible. E. coli can double in 20 minutes in nutrient-rich conditions, which is hard to beat. If wet nanotech doubles faster but dry nanotech can make stuff biology can't, then use both: dry for critical process steps and making high value products, and wet for eating the biosphere and scaling up.

Newer semiconductor manufacturing processes use more energy and materials to create each transistor but those transistors use less power and run faster which makes producing them worthwhile. Dry nanotech will be a tool for making things that may be expensive but worthwhile to build like really awesome computers.

Wet nanotech (IE:biology) is plausibly the most efficient at self-replicating but notice humans use all sorts of chemical and physical processes to do other things better. Operating in space with biotech alone for example would be quite difficult.

Your image links are all of the form: http://localhost:8000/out/planecrash/assets/Screenshot 2024-12-27 at 00.31.42.png

Whatever process is generating the markdown for this, well those links can't possibly work.

I got this one wrong too. Ignoring negative roots is pretty common for non-mathematicians.

I'm half convinced that most of the lesswrong commenters wouldn't pass as AGI if uploaded.

This post is important to setting a lower bound on AI capabilities required for an AI takeover or pivotal act. Biology is an existence proof that some kind of "goo" scenario is possible. It somewhat lowers the bar compared to Yudkowsky's dry nanotech scenario but still requires AI to practically build an entire scientific/engineering discipline from scratch. Many will find this implausible.

Digital tyranny is a better capabilities lower bound for a pivotal act or AI takeover strategy. It wasn't nominated though which is a shame.

This is why I disagree with a lot of people who imagine an “AI transformation” in the economic productivity sense happening instantaneously once the models are sufficiently advanced.

For AI to make really serious economic impact, after we’ve exploited the low-hanging fruit around public Internet data, it needs to start learning from business data and making substantial improvements in the productivity of large companies.

Definitely agree that private business data could advance capabilities if it were made available/accessible. Unsupervised Learning over all private CAD/CAM data would massively improve visuo-spatial reasoning which current models are bad at. Real problems to solve would be similarly useful as ground truth for reinforcement learning. Not having that will slow things down.

Once long(er) time horizon tasks can be solved though I expect rapid capabilities improvement. Likely a tipping point where AIs become able to do self-directed learning.

- find technological thing: software/firmware/hardware

- Connect the AI to it robustly.

- For hardware, AI is going to brick it, either have lots of spares or be able to re-flash firmware at will

- for software this is especially easy. Current AI companies are likely doing a LOT of RL on programming tasks in sandboxed environments.

- AI plays with the thing and tries to get control of it

- can you rewrite the software/firmware?

- can you get it to do other cool stuff?

- can the artefact interact with other things

- send packets between wifi chips (how low can round-trip time be pushed)

- make sound with anything that has a motor

- Some of this is commercially useful and can be sold as a service.

Hard drives are a good illustrative example. Here's a hardware hacker reverse engineering and messing with the firmware to do something cool.

There is ... so much hardware out there that can be bought cheaply and then connected to with basic soldering skills. In some cases, if soft-unbricking is possible, just buy and connect to ethernet/usb/power.

Revenue?

There's a long tail (as measured by commercial value) of real world problems that are more accessible. On one end you have the subject of your article, software/devices/data at big companies. On the other, obsolete hardware whose mastery has zero value, like old hard disks. The distribution is somewhat continuous. Transaction costs for very low value stuff will set a floor on commercial viability but $1K+ opportunities are everywhere in my experience.

Not all companies will be as paranoid/obstructive. A small business will be happy using AI to write interface software for some piece of equipment to skip the usual pencil/paper --> excel-spreadsheet step. Many OEMs charge ridiculous prices for basic functionality and nickel and dime you for small bits of functionality since only their proprietary software can interface with their hardware. Reverse engineering software/firmware/hardware can be worth thousands of dollars. So much of it is terrible. AI competent at software/firmware/communication reverse engineering could unlock a lot of value from existing industrial equipment. OEMs can and are building new equipment to make this harder but industrial equipment already sold to customers isn't so hardened.

IOT and home automation is another big pool of solvable problems. There's some overlap between home automation and industrial automation. Industrial firmware/software complexity is often higher, but AI that learns how to reverse engineer IOT wireless microcontroller firmware could probably do the same for a PLC. Controlling a lightbulb is certainly easier than controlling a CNC lathe but similar software reverse engineering principles apply and the underlying plumbing is often similar.

Alternate POV

Science fiction. < 10,000 words. A commissioned re-write of Eliezer Yudkowsky's That Alien Message https://alicorn.elcenia.com/stories/starwink.shtml

since it hasn't been linked so far and doesn't seem to be linked from the original

TLDR:autofac requires solving "make (almost) arbitrary metal parts" problem but that won't close the loop. Hard problem is building automated/robust re-implementation of some of the economy, which requires engineering effort not trial and error. That is the bottleneck, including for autofac. Need STEM AI (Engineering AI mostly). Once that happens, economy gets taken over and grows rapidly as things start to actually work.

To expand on that:

"make (almost) arbitrary metal parts"

- can generate a lot of economic value

- requires essentially giant github repo of:

- hardware designs:machine tools, robots, electronics

- software:for machines/electronics, non-ai automation

- better CAD/CAM (this is still mostly unsolved (CF:white collar CAD/CAM workers))

- AI for tricky robotics stuff

- estimate:100-1000 engineer years of labor from 99th percentile engineers.

- Median engineers are counterproductive as demonstrated by current automation efforts not working.

- EG:we had two robots develop "nerve damage" type repetitive strain injury because badly routed wires flexed too much. If designers aren't careful/mindful of all design details things won't be reliable.

- This extends to sub-components.

Closing the loop needs much more than just "make (almost) arbitrary metal parts". "Build a steel mill and wire drawing equipment" is just the start. Too many vitamins are still needed, each representing an unimplemented process.

A minimalist industrial core needs things like:

- PCB/electronics manufacturing (including components)

- IC manufacturing is its own mess

- a lot of chemistry for plastics/lubricants

- raw materials production (rock --> metal) and associated equipment

- wire

Those in turn imply other things like:

- refractory materials for furnaces

- corrosion resistant coatings (nickel?, chromium?)

- non-traditional machining (try making a wire drawing die with a milling machine/lathe)

- ECM/EDM is unavoidable for many things

Things just snowball from there.

Efficiency improvements like carbide+coatings for cutting tools are also economically justified.

All of this is possible to design/build into an even bigger self-reproducing automated system but requires more engineer-hours put into a truly enormous git repo.

STEM AI development ("E" emphasis) is the enabler.

Addendum: simplifying the machine tools and robots

Simplifications can be made to cut down on vitamin cost of machine tools. Hydraulics really helps IMO:

- servohydraulics for most motion (EG:linear machine tool axes, robots) to cut down on motor sizes and simplify manufacturing

- efficiency is worse, but saves enormously on power electronics and manufacturing complexity.

- Similar principles to hydraulic power steering used in cars.

- Boston Dynamics ATLAS Robot uses rotary equivalent for joints (I think).

- https://www.researchgate.net/figure/Comparison-of-the-Hy-Mo-actuator-with-traditional-hydraulic-actuator-52_fig5_346755993

- hydrostatic bearings for rotary/linear motion in machine tools.

- No hardened metal parts like in ball/roller bearings

- Feeding fluid without flexible hoses across linear axes via structure similar to a double ended hydraulic cylinder. Just need two sliding rod seals and a hollow rod. Hole in the middle of the center rod lets fluid into the space between the rod seals.

- Both bearings and motion can run off a single shared high pressure oil supply. Treat it like electricity/compressed-air and use one big pump for lots of machines/robots.

End result: machine tools with big spindle motors and small control motors for all axes. Robots use rotary equivalent. Massive reduction in per-axis power electronics, no ballscrews, no robot joint gears.

For linear/rotary position encoders, calibrated capacitive encoders (same as used in digital calipers) are simple and need just PCB manufacturing. Optical barcode based systems are also attractive but require an optical mouse worth of electronics/optics per axis, and maybe glass optics too.

The key part of the Autofac, the part that kept it from being built before, is the AI that runs it.

That's what's doing the work here.

We can't automate machining because an AI that can control a robot arm to do typical machinist things (EG:changing cutting tool inserts, removing stringy steel-wool-like tangles of chips, etc.) doesn't exist or is not deployed.

If you have a robot arm + software solution that can do that it would massively drop operational costs which would lead to exponential growth.

The core problem is that currently we need the humans there.

To give concrete examples, a previous company where I worked had been trying to fully automate production for more than a decade. They had robotic machining cells with machine tools, parts cleaners and coordinate measuring machines to measure finished parts. Normal production was mostly automated in the sense that hands off production runs of 6+ hours were common, though particular cells might be needy and require frequent attention.

What humans had to do:

- Changing cutting tools isn't automated. An operator goes in with a screwdriver and box of carbide inserts 1-2x per shift.

- The operators do a 30-60 minute setup to get part dimensions on size after changing inserts.

- Rough machining can zero tools outside the machine since their tolerances are larger but someone is sitting there with a T-handle wrench so the 5-10 inserts on an indexable end mill have fresh cutting edges.

Intermittent problems operators handled:

- A part isn't perfectly clean when measuring. Bad measurement leads to bad adjustment and 1-2 parts are scrap

- chips are too stringy and tangle up, clear the tangle every 15 mins

- chips are getting between a part and fixture and messing up alignment, clean intermittently and pray.

That's ignoring stupider stuff like:

- Our measurement data processing software just ate the data for a production run so we have to stop production and remeasure 100 parts.

- someone accidentally deleted some files so (same)

- We don't have the CAM done for this part scheduled to be produced so ... find something else we can run or sit idle.

- The employee running a CAM software workflow didn't double-check the tool-paths and there's a collision.

And that's before you get to maintenance issues and (arguably) design defects in the machines themselves leading to frequent breakdowns.

The vision was that a completely automated system would respond to customer orders and schedule parts to be produced with AGVs carrying parts between operations. In practice the AGVs sat there for 10+ years because even the simple things proved nearly impossible to automate completely.

Conclusion

Despite all the problems, the automated cells were more productive than manual production (robots are consistent and don't need breaks) and the company was making a lot of money. Not great automation is much much better than using manual operators.

It's hard to grasp how much everything just barely works until you've spent a year somewhere that is trying to automate. Barely works is the standard in industry AFAIK so humans are still highly necessary.

It is, in theory, possible to automate almost everything and to build reliable machines and automation. The problem is O-ring theory of economic development. If tomorrow median IQ jumps +2SD automation would rapidly start to just work as actually good solutions are put in place. As is, organizations have to fight against institutional knowledge loss (employees leaving) just to maintain competence.

If we consider each (include,exclude) decision for (1,2,3,4,5) as a separate question, error rates are 20%-ish. Much better than random guessing. So why does it make mistakes?

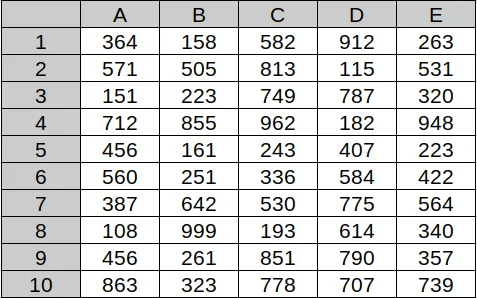

If bottlenecking on data is the problem, more data in the image should kill performance. So how about a grid of 3 digit numbers (random vals in range 100...999)?

3.5 Sonnet does perfectly. Perfect score answering lookup(row, col), find_row_col(number), finding duplicates and transcription to CSV.
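A sketch of how ground truth for that kind of grid test could be generated in Python. The grid size, the seed and the helper names are assumptions made for illustration (the names mirror the queries listed above); rendering the grid to an image is left out.

```python
import csv
import io
import random
from collections import Counter


def make_grid(rows, cols, seed=0):
    """Grid of random 3 digit numbers in the range 100...999."""
    rng = random.Random(seed)
    return [[rng.randint(100, 999) for _ in range(cols)] for _ in range(rows)]


def lookup(grid, row, col):
    """Expected answer for 'what number is at (row, col)?'."""
    return grid[row][col]


def find_row_col(grid, number):
    """Expected answer for 'where does this number appear?'."""
    return [(r, c)
            for r, row in enumerate(grid)
            for c, value in enumerate(row)
            if value == number]


def find_duplicates(grid):
    """Values that appear more than once anywhere in the grid."""
    counts = Counter(value for row in grid for value in row)
    return sorted(value for value, n in counts.items() if n > 1)


def to_csv(grid):
    """Reference transcription to compare against the model's CSV."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(grid)
    return buffer.getvalue()


if __name__ == "__main__":
    grid = make_grid(8, 8, seed=1)
    print(to_csv(grid))
    print("duplicates:", find_duplicates(grid))
```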

So this isn't a bottleneck like human working memory. Maybe we need to use a higher resolution image so it has more tokens to "think" with? That doesn't seem to work for the above yellow areas thing either though.

I'm guessing this is straightforward failure to generalize. Tables of numbers are well represented in the training data (possibly synthetic data too), visual geometry puzzles, not so much. The model has learned a few visual algorithms but hasn't been forced to generalise yet.

Root cause might be some stupid performance thing that screws up image perception the same way BPE text encoding messes up byte level text perception. I'm guessing sparse attention.

Text representations

Text representations are no panacea. Often similar problems (EG:rotate this grid) have very formatting dependent performance. Looking for sub-tasks that are probably more common in training data and composing with those (EG:rotate by composing transpose and mirror operations) allows a model to do tasks it otherwise couldn't. Text has generalisation issues just like with images.
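As a concrete instance of that composition trick, here is a short Python sketch that builds grid rotation out of transpose and mirror. The function names are illustrative.

```python
def transpose(grid):
    """Swap rows and columns."""
    return [list(column) for column in zip(*grid)]


def mirror(grid):
    """Flip each row left to right."""
    return [row[::-1] for row in grid]


def rotate_cw(grid):
    """Rotate 90 degrees clockwise: transpose, then mirror."""
    return mirror(transpose(grid))


def rotate_ccw(grid):
    """Rotate 90 degrees counter-clockwise: mirror, then transpose."""
    return transpose(mirror(grid))


if __name__ == "__main__":
    grid = [[1, 2, 3],
            [4, 5, 6]]
    for row in rotate_cw(grid):
        print(row)
```

Each of the two primitive steps is simple to describe in text, which is the point: the model only has to get two common operations right rather than one uncommon one.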

If Claude 3.5 Sonnet has pushed the frontier in tetris that would be evidence for generalisation. I predict it still fails badly.

It's actually a lot worse than that.

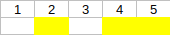

Q:Which numbers are above a yellow region in the image?

GPT4o: [2,4]

GPT4: [4,5]

Claude 3.5 Sonnet: [2,5]

Claude sonnet describes the image as follows:

User: List the numbers in the above image, how are they placed in the image? What's below them?

The numbers shown in the image are:

1, 2, 3, 4, 5

These numbers are placed in a single horizontal row, appearing to be in separate cells or boxes arranged from left to right in ascending numerical order. Each number occupies its own cell, creating a simple table-like structure with 5 columns.

Below the numbers, there are cells or boxes that correspond to each number. Some of these cells are colored, while others are not. Specifically:

- Below number 1: The cell is white or uncolored.

- Below number 2: The cell is colored yellow.

- Below number 3: The cell is white or uncolored.

- Below number 4: The cell is white or uncolored.

- Below number 5: The cell is colored yellow.

So, out of the 5 cells below the numbers, two are colored yellow (under 2 and 5), while the rest are white or uncolored.

So these models have very very bad visuospatial abilities.

This seems like a problem that will be easy to solve with some synthetic training data.

TLDR:Memory encryption alone is indeed not enough. Modifications and rollback must be prevented too.

- memory encryption and authentication has come a long way

- Unless there's a massive shift in ML architectures to doing lots of tiny reads/writes, overheads will be tiny. I'd guesstimate the following:

- negligible performance drop / chip area increase

- ~1% of DRAM and cache space[1]

It's hard to build hardware or datacenters that resist sabotage if you don't do this. You end up having to trust the maintenance people aren't messing with the equipment and the factories haven't added any surprises to the PCBs. With the right security hardware, you trust TSMC and their immediate suppliers and no one else.

Not sure if we have the technical competence to pull it off. Apple's likely one of the few that's even close to secure and it took them more than a decade of expensive lessons to get there. Still, we should put in the effort.

in any case, overall I suspect the benefit-to-effort ratio is higher elsewhere. I would focus on making sure the AI isn't capable of reading its own RAM in the first place, and isn't trying to.

Agreed that alignment is going to be the harder problem. Considering the amount of fail when it comes to building correct security hardware that operates using known principles ... things aren't looking great.

</TLDR> rest of comment is just details

Morphable Counters: Enabling Compact Integrity Trees For Low-Overhead Secure Memories

Memory contents protected with MACs are still vulnerable to tampering through replay attacks. For example, an adversary can replace a tuple of { Data, MAC, Counter } in memory with older values without detection. Integrity-trees [7], [13], [20] prevent replay attacks using multiple levels of MACs in memory, with each level ensuring the integrity of the level below. Each level is smaller than the level below, with the root small enough to be securely stored on-chip.

[improvement TLDR for this paper: they find a data compression scheme for counters to increase the tree branching factor to 128 per level from 64 without increasing re-encryption when counters overflow]
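To make the replay problem concrete, here is a toy Python model of one protected memory block. It is a sketch under stated assumptions: HMAC-SHA256 truncated to 8 bytes stands in for whatever MAC real hardware uses, and `trusted_counter` stands in for state the attacker cannot modify (on-chip, or protected by higher tree levels). The class and function names are made up for illustration.

```python
import hashlib
import hmac


def make_tag(key, address, counter, data):
    """8-byte MAC over address, write counter and data (assumed construction)."""
    message = address.to_bytes(8, "little") + counter.to_bytes(8, "little") + data
    return hmac.new(key, message, hashlib.sha256).digest()[:8]


class ProtectedBlock:
    def __init__(self, key, address):
        self.key = key
        self.address = address
        self.trusted_counter = 0  # stand-in for tamper-proof state
        self.dram = None          # attacker-writable: (data, counter, tag)

    def write(self, data):
        self.trusted_counter += 1
        tag = make_tag(self.key, self.address, self.trusted_counter, data)
        self.dram = (data, self.trusted_counter, tag)

    def read(self):
        data, counter, tag = self.dram
        expected = make_tag(self.key, self.address, self.trusted_counter, data)
        if counter != self.trusted_counter or not hmac.compare_digest(tag, expected):
            raise ValueError("integrity check failed (tampering or replay)")
        return data


if __name__ == "__main__":
    block = ProtectedBlock(b"k" * 32, address=0x1000)
    block.write(b"old value")
    snapshot = block.dram        # attacker records a valid old tuple
    block.write(b"new value")
    block.dram = snapshot        # attacker replays it
    try:
        block.read()
    except ValueError as error:
        print(error)
```

The old tuple carries a perfectly valid MAC, so the MAC alone would accept it; only the comparison against a counter the attacker cannot roll back catches the replay. The integrity tree exists to protect those counters when they are too numerous to keep on-chip.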

Performance cost

Overheads are usually quite low for CPU workloads:

- <1% extra DRAM required[1]

- <<10% execution time increase

Executable code can be protected with negligible overhead by increasing the size of the rewritable authenticated blocks for a given counter to 4KB or more. Overhead is then comparable to the page table.

For typical ML workloads, the smallest data block is already 2x larger (GPU cache lines 128 bytes vs 64 bytes on CPU gives 2x reduction). Access patterns should be nice too, large contiguous reads/writes.

Only some unusual workloads see significant slowdown (EG: large graph traversal/modification) but this can be on the order of 3x.[2]

A real example (intel SGX)

Use case: launch an application in a "secure enclave" so that host operating system can't read it or tamper with it.

It used an older memory protection scheme:

- hash tree

- Each 64 byte chunk of memory is protected by an 8 byte MAC

- MACs are 8x smaller than the data they protect so each tree level is 8x smaller

- split counter modes in the linked paper can do 128x per level

- The memory encryption works.

- If intel SGX enclave memory is modified, this is detected and the whole CPU locks up.
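A back-of-envelope Python sketch of why the branching factor matters. It assumes every tree node is one 64 byte block and that the tree is reduced all the way to a single root node; real designs stop as soon as the root fits on-chip, so treat the numbers as rough. The function name and the 1 GiB example size are arbitrary.

```python
def tree_shape(data_bytes, block_bytes=64, arity=8):
    """Return (nodes per level, storage overhead as a fraction of data size)."""
    nodes = data_bytes // block_bytes   # number of protected data blocks
    levels = []
    while nodes > 1:
        nodes = -(-nodes // arity)      # ceiling division: one parent per `arity` children
        levels.append(nodes)
    overhead = sum(levels) * block_bytes / data_bytes
    return levels, overhead


if __name__ == "__main__":
    one_gib = 2 ** 30
    for arity in (8, 64, 128):
        levels, overhead = tree_shape(one_gib, arity=arity)
        print(f"arity {arity:3d}: {len(levels)} levels, {overhead:.2%} extra storage")
```

Under these assumptions an 8x tree costs roughly 1/7 of the protected memory and many levels, while a 128x tree needs well under 1% and far fewer levels to walk on a cache miss.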

SGX was not secure. The memory encryption/authentication is solid. The rest ... not so much. Wikipedia lists 8 separate vulnerabilities including ones that allow leaking of the remote attestation keys. That's before you get to attacks on other parts of the chip and security software that allow dumping all the keys stored on chip allowing complete emulation.

AMD didn't do any better of course. See "One Glitch to Rule Them All: Fault Injection Attacks Against AMD’s Secure Encrypted Virtualization".

How low overheads are achieved

- ECC (error correcting code) memory includes extra chips to store error correction data. Repurposing this to store MACs +10 bit ECC gets rid of extra data accesses. As long as we have the counters cached for that section of memory there's no extra overhead. Tradeoff is going from ability to correct 2 flipped bits to only correcting 1 flipped bit.

- DRAM internally reads/writes lots of data at once as part of a row. Standard DDR memory reads 8KB rows rather than just a 64B cache line. We can store tree parts for a row inside that row to reduce read/write latency/cost since row switches are expensive.

- Memory that is rarely changed (EG:executable code) can be protected as a single large block. If we don't need to read/write individual 64 byte chunks, then a single 4KiB page can be a rewritable unit. Overhead is then negligible if you can store MACs in place of an ECC code.

- Counters don't need to be encrypted, just authenticated. Verification can be parallelized if you have the memory bandwidth to do so.

- can also delay verification and assume data is fine, if verification fails, entire chip shuts down to prevent bad results from getting out.

[1] Technically we need +12.5% to store MAC tags. If we assume ECC (error correcting code) memory is in use, which already has +12.5% for ECC, we can store MAC tags + smaller ECC at the cost of 1 bit of error correction.

[2] Random reads/writes bloat memory traffic by >3x since we need to deal with 2+ uncached tree levels. We can hide latency by delaying verify of higher tree levels and panicking if it fails before results can leave chip (Intel SGX does exactly this). But if most traffic bloats, we bottleneck on memory bandwidth and perf drops a lot.

What you're describing above is how Bitlocker on Windows works on every modern Windows PC. The startup process involves a chain of trust with various bootloaders verifying the next thing to start and handing off keys until windows starts. Crucially, the keys are different if you start something that's not windows (IE:not signed by Microsoft). You can't just boot Linux and decrypt the drive since different keys would be generated for Linux during boot and they won't decrypt the drive.

Mobile devices and game consoles are even more locked down. If there's no bootloader unlock from your carrier or device manufacturer and no vulnerability (hardware or software) to be found, you're stuck with the stock OS. You can't boot something else because the chip will refuse to boot anything not signed by the OEM/Carrier. You can't downgrade because fuses have been blown to prevent it and enforce a minimum revision number. Nothing booted outside the chip will have the keys locked up inside it needed to decrypt things and do remote attestation.
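The chain of trust described above can be illustrated with a toy measured-boot model in Python. This is a simplified sketch and not how BitLocker or any specific TPM is implemented: a real TPM seals a stored key to expected register values rather than deriving the key from them, and the component names and `device_secret` here are placeholders.

```python
import hashlib


def extend(register, component):
    """TPM-style extend: new = H(old || H(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(register + measurement).digest()


def measure_boot(chain):
    """Fold every boot stage, in order, into one measurement register."""
    register = bytes(32)  # register starts zeroed at power-on
    for component in chain:
        register = extend(register, component)
    return register


def derive_disk_key(device_secret, register):
    """Simplification: key depends on a chip-internal secret plus what was booted."""
    return hashlib.sha256(device_secret + register).digest()


if __name__ == "__main__":
    secret = b"secret fused into the chip (placeholder)"
    windows = [b"firmware", b"signed bootmgr", b"windows loader"]
    other = [b"firmware", b"some other bootloader", b"linux kernel"]
    key_a = derive_disk_key(secret, measure_boot(windows))
    key_b = derive_disk_key(secret, measure_boot(other))
    print("same key:", key_a == key_b)
```

Because each stage is hashed into the register before it runs, anything booted outside the expected chain ends up with a different register value and therefore never sees the key that decrypts the drive.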

Root isn't full control

Having root on a device isn't the silver bullet it once was. Security is still kind of terrible and people don't do enough to lock everything down properly, but the modern approach seems to be: 1) isolate security critical properties/code 2) put it in a secure armored protected box somewhere inside the chip. 3) make sure you don't stuff so much crap inside the box that the attacker can compromise it via a bug too.

They tend to fail in a couple of ways

- Pointless isolation (EG:lock encryption keys inside a box but let the user ask it to encrypt/decrypt anything)

- the box isn't hack proof (examples below)

- They stuff too much inside the box (EG: a full Java virtual machine and a webserver)

I expect ML accelerator security to be full of holes. They won't get it right, but it is possible in principle.

ML accelerator security wish-list

As for what we might want for an ML accelerator:

- Separating out enforcement of security critical properties:

- restrict communications

- supervisory code configures/approves communication channels such that all data in/out must be encrypted

- There's still steganography. With complete control over code that can read the weights or activations we can hide data in message timing for example

- Still, protecting weights/activations in transit is a good start.

- can enforce "who can talk to who"

- example:ensure inference outputs must go through supervision model that OKs them as safe.

- Supervision inference chip can then send data to API server then to customer

- Inference chip literally can't send data anywhere but the supervision model

- can enforce network isolation for dangerous experimental system (though you really should be using airgaps for that)

- Enforcing code signing like what Apple does. Current-gen GPUs support virtualisation and user/kernel modes. Control what code has access to what data for reading/writing. Code should not have write access to itself. Attackers would have to find return-oriented programming attacks or similar that could work on GPU shader code. This makes life harder for the attacker.

- Apple does this on newer SOCs to prevent execution of non-signed code.

Doing that would help a lot. Not sure how well that plays with infiniband/NVlink networking but that can be encrypted too in principle. If a virtual memory system is implemented, it's not that hard to add a field to the page table for an encryption key index.

Boundaries of the trusted system

You'll need to manage keys and securely communicate with all the accelerator chips. This likely involves hardware security modules that are extra super duper secure. Decryption keys for the data that chips must work on, and for inter-chip communication, are sent securely to individual accelerator chips, similar to how keys are sent to cable TV boxes to allow them to decrypt programs the subscriber has paid for.

This is how you actually enforce access control.

If a message is not for you, if you aren't supposed to read/write that part of the distributed virtual memory space, you don't get keys to decrypt it. Simple and effective.

"You" the running code never touch the keys. The supervisory code doesn't touch the keys. Specialised crypto hardware unwraps the key and then uses it for (en/de)cryption without any software in the chip ever having access to it.

Hardware encryption likely means dedicated on-chip hardware that handles keys and decrypts weights and activations on-the-fly.

The hardware/software divide here is likely a bit fuzzy but having dedicated hardware or a separate on-chip core makes it easier to isolate and accelerate the security critical operations. If security costs too much performance, people will be tempted to turn it off.

Encrypting data in motion and data at rest (in GPU memory) makes sense since this minimizes trust. An attacker with hardware access will have a hard time getting weights and activations unless they can get data directly off the chip.

Many-key signoff is nuclear-launch-style security where multiple keyholders must use their keys to approve an action. The idea is that a single rogue employee can't do something bad like copy model weights to an internet server, change inference code to add a side channel that leaks model weights, or sabotage inference misuse prevention/monitoring.

This is commonly done in high security fields like banking where several employees hold key shares that must be used together to sign code to be deployed on hardware security modules.
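One way to get the "several keyholders must act together" property is Shamir secret sharing. The sketch below is a minimal illustration, assuming a k-of-n threshold over a prime field. The names `split_secret` and `recover_secret` and the choice of prime are hypothetical, not what any particular bank or HSM vendor uses.

```python
import secrets

# Field modulus (assumption for this sketch): 2**127 - 1 is a Mersenne prime.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` shares; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must fit in the field")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Example: 5 keyholders, any 3 can reconstruct the signing key.
shares = split_secret(123456789, threshold=3, num_shares=5)
recovered = recover_secret(shares[1:4])
```

Fewer than `threshold` shares reveal nothing about the secret, which is the property that stops a single rogue employee.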

Vulnerable world hypothesis (but takeover risk rather than destruction risk). That + first mover advantage could stop things pretty decisively without requiring ASI alignment

As an example, taking over most networked computing devices seems feasible in principle with thousands of +2SD AI programmers/security-researchers. That requires an Alpha-go level breakthrough for RL as applied to LLM programmer-agents.

One especially low risk/complexity option is a stealthy takeover of other AI lab's compute then faking another AI winter. This might get you most of the compute and impact you care about without actively pissing off everyone.

If more confident in jailbreak prevention and software hardening, secrecy is less important.

First mover advantage depends on ability to fix vulnerabilities and harden infrastructure to prevent a second group from taking over. To the extent AI is required for management, jailbreak prevention/mitigation will also be needed.

Slower is better obviously but as to the inevitability of ASI, I think reaching 99th-percentile human capabilities in a handful of domains is enough to stop the current race. Getting there is probably not too dangerous.

Current ATGMs poke a hole in armor with a very fast jet of metal (1-10km/s). Kinetic penetrators do something similar using a tank gun rather than specially shaped explosives.

"Poke hole through armor" is the approach used by almost every weapon. A small hole is the most efficient way to get to the squishy insides. Cutting a slot would take more energy. Blunt impact only works on flimsy squishy things. A solid shell of armor easily stopped thrown rocks in antiquity. Explosive over-pressure is similarly obsolete against armored targets.

TLDR:"poke hole then destroy squishy insides" is the only efficient strategy against armor.

Modern vehicles/military stuff are armored shells protecting air+critical_bits+people

Eliminate the people and the critical bits can be compacted. The same sized vehicle can afford to split critical systems into smaller distributed modules.

Now the enemy has to make a lot more holes and doesn't know where to put them to hit anything important.

This massively changes offense/defence balance. I'd guess by a factor of >10. Batteries have absurd power densities so taking out 75% of a vehicle's batteries just reduces endurance. Only way to get a mobility kill is to take out wheels.

There are still design challenges:

- how to avoid ammo cook-off and chain reactions.

- misdirection around wheel motors (improves tradeoffs)

- efficient manufacturing

- comms/radar (antenna has to be mounted externally)

Zerg rush

Quantity has a quality of its own. Military vehicles are created by the thousands, cars by the millions. Probably something similarly sized or a bit smaller, powered by an ICE engine and mass produced would be the best next gen option.

EMP mostly affects power grid because power lines act like big antennas. Small digital devices are built to avoid internal RF like signals leaking out (thanks again FCC) so EMP doesn't leak in very well. DIY crud can be done badly enough to be vulnerable but basically run wires together in bundles out from the middle with no loops and there's no problems.

Only semi-vulnerable point is communications because radios are connected to antennas.

Best option for frying radios isn't EMP, but rather sending high power radio signal at whatever frequency antenna best receives.

RF receiver can be damaged by high power input but circuitry can be added to block/shunt high power signals. Antennas that do both receive and transmit (especially high power transmit) may already be protected by the "switch" that connects rx and tx paths for free. Parts cost would be pretty minimal to retrofit though. Very high frequency or tight integration makes retrofitting impractical. Can't add extra protection to a phased array antenna like starlink dish but it can definitely be built in.

Also front-line units whose radios facing the enemy are being fried are likely soon to be scrap (hopefully along with the thing doing the frying).

RF jamming, communication and other concerns

TLDR: Jamming is hard when the comms system is designed to resist it. Civilian stuff isn't but military is and can be quite resistant. Frequency hopping makes jamming ineffective if you don't care about stealth. Phased array antennas are getting cheaper and make things stealthier by increasing directivity (a Starlink terminal costs $1300 and has 40 dBi gain). Very expensive comms systems on fighter jets using mm-wave comms and phased array antennas can do gigabit+ links in presence of jamming undetected.

civilian stuff is trivial to jam

- EG:sending disconnection messages to disconnect wifi devices requires very little power

- most civvy stuff sends long messages, if you see the start of a message you can "scream" very loudly to disrupt part of it and it gets dropped.

- Civvy stuff like WiFi, BT and cellular has strict transmit power limits, typically <1W.

- TLDR: jamming civvy stuff requires less power than transmitting it. Still, amplifiers and directional antennas can help in the short term.

- military stuff hops from one frequency to another using a keyed unpredictable algorithm.

- Sender and receiver have synchronized clocks and spreading keys so know what frequency to use when. Hop time is short enough jammer can't respond in time.
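The keyed hopping idea can be sketched as follows. This is an illustration only, assuming both ends share a secret key and a synchronized slot counter. `hop_channel` and the 64-channel plan are made up for the example and are not how any specific military radio does it.

```python
import hashlib
import hmac


def hop_channel(key: bytes, time_slot: int, num_channels: int) -> int:
    """Channel index for a time slot, derived from a shared secret key.

    Without the key, the next channel is unpredictable to a jammer.
    """
    digest = hmac.new(key, time_slot.to_bytes(8, "big"), hashlib.sha256).digest()
    # Reduce the first 8 bytes of the MAC to a channel number.
    return int.from_bytes(digest[:8], "big") % num_channels


# Sender and receiver compute the same schedule independently.
shared_key = b"example spreading key"  # hypothetical key material
schedule = [hop_channel(shared_key, slot, 64) for slot in range(10)]
```

A jammer that sees the channel used in slot t learns nothing useful about slot t+1, so it has to either follow faster than the hop time or spread its power over every channel.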

Fundamentals of Jamming radio signals (doesn't favor jamming)

- Jammer fills big chunk of radio spectrum with some amount of watts/MHz of noise

- EG: Russian R-330ZH puts out 10kW from 100MHz to 2GHz (approx 5kW/GHz or 5W/MHz)

- more than enough to drown out civvy comms like wifi that use <<1W signal spanning 10-100MHz of bandwidth even with short link far away from jammer.

- Comms designed to resist jamming can use 10W+ and reduce bandwidth of transmission as much as needed at cost of less bits/second.

- low bandwidth link (100kb/s) with reasonable power budget is impossible to jam practically until jammer is much much closer to receiver than transmitter.

- GPS and satcom signals easy to jam because of large distance to satellite and power limits.

- Jamming increases required power density to get signal through intelligibly. Transmitter has to increase power or use narrower transmit spectrum. Fundamentally signal to noise ratio decreases and Joules/bit increases.
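A rough back-of-envelope version of the numbers above. The jammer figures come from the list. The link parameters (0.1 W over 20 MHz for a WiFi-like link, 10 W over 0.1 MHz for a narrowband link), free-space propagation and equal antenna gains are assumptions for illustration.

```python
import math


def jammer_density_w_per_mhz(power_w, f_low_mhz, f_high_mhz):
    """Jammer power spread evenly over its covered band."""
    return power_w / (f_high_mhz - f_low_mhz)


def signal_to_jam_db(tx_power_w, bandwidth_mhz, density_w_per_mhz, distance_ratio=1.0):
    """Signal-to-jammer ratio at the receiver in dB.

    distance_ratio = (jammer-to-receiver distance) / (transmitter-to-receiver distance).
    Free-space loss scales with distance squared, so a farther jammer is weaker.
    """
    in_band_jam_w = density_w_per_mhz * bandwidth_mhz
    return 10 * math.log10(tx_power_w / in_band_jam_w * distance_ratio**2)


density = jammer_density_w_per_mhz(10_000, 100, 2_000)  # about 5.3 W/MHz
wifi_like = signal_to_jam_db(0.1, 20, density)          # wideband, low power
narrowband = signal_to_jam_db(10, 0.1, density)         # narrow, higher power
```

At equal distances the WiFi-like link comes out around 30 dB below the jammer, while the narrowband link sits roughly 13 dB above it. Under these assumptions the jammer would need to be about 4x closer to the receiver than the transmitter is just to reach parity.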

Communication Stealth

- Jammer + phased array antennas + very powerful computer gives ability to locate transmitters

- Jammer forces transmitters to use more power

- Phased array antennas + supercomputer:

- computer calculates/subtracts reflected jamming signal

- Phased array antenna+computer acts like telescope to find "dimmer" signals in background noise lowering detection threshold

- Fundamental tradeoff for transmitter

directional antennas/phased arrays

- military planes use this to communicate stealthily

- increases power sent/received to/from particular direction

- bigger antenna with more sub-elements increases directionality/gain

- Starlink terminals are big phased array antennas

- this quora answer gives some good numbers on performance

- Starlink terminal gives approx 3000x (35 dBi) more power in chosen direction vs omnidirectional antenna

- Necessary to communicate with satellite 500+km away

- Starlink terminals are pretty cheap

- smaller phased arrays for drone-drone comms should be cheaper.

- drone that is just a big Yagi antenna also possible and ludicrously cheap.

- stealthy/jam immune comms for line of sight data links at km ranges seem quite practical.

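For reference, antenna gain in dBi is just a power ratio on a log scale. A small sketch of the conversion (helper names are illustrative):

```python
import math


def ratio_to_db(ratio):
    """Power ratio to decibels."""
    return 10 * math.log10(ratio)


def db_to_ratio(db):
    """Decibels to power ratio."""
    return 10 ** (db / 10)


gain_db = ratio_to_db(3000)    # a 3000x power ratio is a little under 35 dB
gain_ratio = db_to_ratio(35)   # 35 dB is a little over 3000x
```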
development pressure for jam resistant comms and associated tech

- little development pressure on civvy side B/C FCC and similar govt. orgs abroad shut down jammers

- military and satcom will drive development more slowly

- FCC limits on transmit power can also help

- Phased array transmit/receive improves signal/noise

- This is partly driving wifi to use more antennas to improve bandwidth/reliability

- hobbyist drone scene could also help (directional antennas for ground to drone comms without requiring more power or gimbals)

Self driving cars have to be (almost)perfectly reliable and never have an at fault accident.

Meanwhile cluster munitions are being banned because submunitions can have 2-30% failure rates leaving unexploded ordnance everywhere.

In some cases avoiding civvy casualties may be a similar barrier since distinguishing civvy from enemy reliably is hard but militaries are pretty tolerant to collateral damage. Significant failure rates are tolerable as long as there's no exploitable weaknesses.

Distributed positioning systems

Time of flight distance determination is in some newer Wifi chips/standards for indoor positioning.

Time of flight across a swarm of drones gives drone-drone distances which is enough to build a very robust distributed positioning system. Absolute positioning can depend on other sensors like cameras or phased array GPS receivers, ground drones or whatever else is convenient.
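A simplified sketch of the idea: turn a round-trip time into a range, then locate one drone from ranges to three neighbours. This assumes a flat 2D layout, neighbours with already-known positions and noise-free timing. A real swarm would solve for all positions jointly and in 3D. The function names are illustrative.

```python
C = 299_792_458.0  # speed of light in m/s


def tof_distance(round_trip_s, reply_delay_s=0.0):
    """Range from a two-way time-of-flight measurement."""
    return C * (round_trip_s - reply_delay_s) / 2


def trilaterate(anchors, distances):
    """2D position from three known points and the measured ranges to them."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = distances
    # Subtracting the circle equations pairwise gives two linear equations.
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear, position is ambiguous")
    # Cramer's rule for the 2x2 system.
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)


neighbours = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
ranges = [50.0, 6500 ** 0.5, 4500 ** 0.5]  # ranges to a drone at (30, 40)
position = trilaterate(neighbours, ranges)
```

With more than three neighbours the extra ranges can be used for a least-squares fit, which is where the robustness comes from.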

Overhead is negligible because military would use symmetric cryptography. Message authentication code can be N bits for a 2^-N chance of forgery. 48-96 bits is likely the sweet spot and barely doubles size for even tiny messages.
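A minimal sketch of a truncated MAC, assuming a pre-shared symmetric key and a 64-bit tag. HMAC-SHA256 is used here as one reasonable choice. The helper names are hypothetical, and replay protection (counters/timestamps) is left out.

```python
import hashlib
import hmac

TAG_BYTES = 8  # 64-bit tag: forgery chance of 2^-64 per attempt


def tag_message(key: bytes, message: bytes) -> bytes:
    """Append a truncated HMAC-SHA256 tag to the message."""
    tag = hmac.new(key, message, hashlib.sha256).digest()[:TAG_BYTES]
    return message + tag


def verify_message(key: bytes, tagged: bytes):
    """Return the message if the tag checks out, otherwise None."""
    message, tag = tagged[:-TAG_BYTES], tagged[-TAG_BYTES:]
    expected = hmac.new(key, message, hashlib.sha256).digest()[:TAG_BYTES]
    # Constant-time comparison avoids leaking how many tag bytes matched.
    return message if hmac.compare_digest(tag, expected) else None


key = b"pre-shared swarm key"       # hypothetical key
packet = tag_message(key, b"pos 12 34")  # 9-byte message grows by 8 bytes
```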

Elliptic curve crypto is there if for some reason key distribution is a terrible burden. Typical ECC signatures are 64 bytes (512 bits) but 48 bytes is easy and 32 bytes possible with pairing-based ECC. If signature size is an issue, use asymmetric crypto to negotiate a symmetric key then use symmetric crypto for further messages with tight timing limits.

Current landmines are very effective because targets are squishy/fragile:

- Antipersonnel:

- take off a foot

- spray shrapnel

- Antitank/vehicle:

- cut track /damage tires

- poke a hole with a shaped charge and spray metal into vehicle insides

Clearing an area for people is hard

- drones can be much less squishy

- need more explosives to credibly threaten them

- Eliminating mine threat requires

- clearing a path (no mines buried under transit corridor)

- mine clearing vehicle

- use line charge

- block sensors so off route mines can't target vehicles

- Inflatable barriers that block line of sight/radar

This is enough to deal with immobile off route mines. If the minefield has active sensors, those can be spoofed and/or destroyed or blocked at slightly higher expense. Past this, the mines have to start moving to be a threat and then you're dealing with drones vs. drones, not mines.

Ideal mine clearing robots and drones in general should be very resilient:

- No squishy center like people filled vehicles.

- battery powered drone with per wheel motors and multi-part battery pack has no single point of failure.

- Doing meaningful damage to such a drone is hard.

- flimsy exterior can hide interior parts from inspection/targeting.

- Vulnerable systems with fluid like cooling/hydraulics can include isolation valves and redundancy.

- alternatively, no fluids, air for cooling and electric motors/generators/batteries?

- multiple locations/configurations for important components that can be moved (EG:battery/computers)

I think GPT-4 and friends are missing the cognitive machinery and grid representations to make this work. You're also making the task harder by giving them a less accessible interface.

My guess is they have pretty well developed what/where feature detectors for smaller numbers of objects but grids and visuospatial problems are not well handled.

The problem interface is also not accessible:

- There's a lot of extra detail to parse

- Grid is made up of gridlines and colored squares

- colored squares of fallen pieces serve no purpose but to confuse model

A more accessible interface would have a pixel grid with three colors for empty/filled/falling

Rather than jump directly to Tetris with extraneous details, you might want to check for relevant skills first.

- predict the grid end state after a piece falls

- model rotation of a piece

Rotation works fine for small grids.

Predicting drop results:

- Row first representations give mediocre results

- GPT4 can't reliably isolate the Nth token in a line or understand relationships between nth tokens across lines

- dropped squares are in the right general area

- general area of the drop gets mangled

- rows do always have 10 cells/row

- column first representations worked pretty well.

I'm using a text interface where the grid is represented as 1 token/square. Here's an example:

0 x _ _ _ _ _

1 x x _ _ _ _

2 x x _ _ _ _

3 x x _ _ _ _

4 _ x _ _ o o

5 _ _ _ o o _

6 _ _ _ _ _ _

7 _ _ _ _ _ _

8 x x _ _ _ _

9 x _ _ _ _ _

GPT4 can successfully predict the end state after the S piece falls. Though it works better if it isolates the relevant rows, works with those and then puts everything back together.

Row 4: _ x o o _ _

Row 5: _ o o _ _ _
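For checking model outputs, the expected end state is easy to compute. A small reference sketch, assuming the column-first layout above (one line per column, leftmost cell is the bottom, `x` filled, `o` falling, `_` empty). The function names are made up for the example.

```python
GRID = """\
0 x _ _ _ _ _
1 x x _ _ _ _
2 x x _ _ _ _
3 x x _ _ _ _
4 _ x _ _ o o
5 _ _ _ o o _
6 _ _ _ _ _ _
7 _ _ _ _ _ _
8 x x _ _ _ _
9 x _ _ _ _ _"""


def parse_columns(text):
    """Drop the leading column index and keep one list of cells per column."""
    return [line.split()[1:] for line in text.splitlines()]


def drop_piece(columns):
    """Slide the 'o' cells toward index 0 (down) until something blocks them."""
    piece = [(c, h) for c, col in enumerate(columns)
             for h, cell in enumerate(col) if cell == "o"]
    result = [["_" if cell == "o" else cell for cell in col] for col in columns]
    if not piece:
        return result

    def blocked(shift):
        # Blocked if any cell would leave the grid or land on a filled square.
        return any(h - shift < 0 or columns[c][h - shift] == "x" for c, h in piece)

    shift = 0
    while not blocked(shift + 1):
        shift += 1
    for c, h in piece:
        result[c][h - shift] = "o"
    return result


end_state = drop_piece(parse_columns(GRID))
# end_state[4] is ['_', 'x', 'o', 'o', '_', '_'] and
# end_state[5] is ['_', 'o', 'o', '_', '_', '_'], matching the lines above.
```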

making things easier

- columns as lines keeps verticals together

- important for executing simple strategies

- gravity acts vertically

- Rows as lines is better for seeing voids blocking lines from being eliminated

- not required for simple strategies

Row based representations with rows output from top to bottom suffer from prediction errors for piece dropping. Common error is predicting dropped piece square in higher row and duplicating such squares. Output that flips state upside down with lower rows first might help in much the same way as it helps to do addition starting with least significant digit.

This conflicts with model's innate tendency to make gravity direction downwards on page.

Possibly adding coordinates to each cell could help.

The easiest route to mediocre performance is likely a 1.5d approach:

- present game state in column first form

- find max_height[col] over all columns

- find step[n]=max_height[n+1]-max_height[n]

- pattern match step[n] series to find hole current piece can fit into

This breaks the task down into subtasks the model can do (string manipulation, string matching, single digit addition/subtraction). Though this isn't very satisfying from a model competence perspective.
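The same subtasks written out as plain code, for comparison with what the model is asked to do. This is a sketch using the fallen squares from the example grid above. `find_fits` and the way a piece's underside is encoded as a list of steps are illustrative assumptions.

```python
# Column-first state, fallen squares only (leftmost cell is the bottom).
COLUMNS = [line.split() for line in [
    "x _ _ _ _ _", "x x _ _ _ _", "x x _ _ _ _", "x x _ _ _ _", "_ x _ _ _ _",
    "_ _ _ _ _ _", "_ _ _ _ _ _", "_ _ _ _ _ _", "x x _ _ _ _", "x _ _ _ _ _",
]]


def max_heights(columns):
    """Height of the highest filled cell per column (0 if the column is empty)."""
    return [max((h + 1 for h, cell in enumerate(col) if cell == "x"), default=0)
            for col in columns]


def steps(heights):
    """step[n] = max_height[n+1] - max_height[n]."""
    return [heights[n + 1] - heights[n] for n in range(len(heights) - 1)]


def find_fits(step_series, piece_steps):
    """Leftmost column of every spot where the surface matches the piece underside."""
    width = len(piece_steps)
    return [n for n in range(len(step_series) - width + 1)
            if step_series[n:n + width] == piece_steps]


heights = max_heights(COLUMNS)       # [1, 2, 2, 2, 2, 0, 0, 0, 2, 1]
surface = steps(heights)             # [1, 0, 0, 0, -2, 0, 0, 2, -1]
# S piece in the orientation shown earlier: its left column bottoms out one
# cell higher than its right column, so it sits flush on a step of -1.
flush_spots = find_fits(surface, [-1])  # [8], i.e. columns 8 and 9
```

Note that max_height hides covered holes (column 4 has one at the bottom), which is part of why this only gets to mediocre play.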

Interestingly the web interface version really wants to use python instead of solving the problem directly.

Not so worried about country vs. country conflicts. Terrorism/asymmetric is bigger problem since cheap slaughterbots will proliferate. Hopefully intelligence agencies can deal with that more cheaply than putting in physical defenses and hard kill systems everywhere.

Still don't expect much impact before we get STEM AI and everything goes off the rails.

Also without actual fights how would one side know the relative strength of their drone system

Relative strength is hard to gauge but getting reasonable perf/$ is likely easy. Then just compare budgets adjusted for corruption/Purchasing power parity/R&D amortisation.

Building an effective drone army is about tactical rock paper scissors and performance / $. Perf / $ emphasis makes live fire tests cheap. Live fire test data as baseline makes simulations accurate. RF/comms performance will be straightforward to model and military is actually putting work into cybersecurity because they're not complete morons.

Add to that the usual espionage stuff and I expect govts to know what will work and what their enemies are doing.

Ukraine war was allegedly failure to predict the human element (will to fight) with big intelligence agencies having bad models. Drone armies don't suffer from morale problems and match theoretical models better.

Disclaimer:Short AI timelines imply we won't see this stuff much before AI makes things weird

This is all well and good in theory but mostly bottlenecked on software/implementation/manufacturing.

- with the right software/hardware current military is obsolete

- but no one has that hardware/software yet

- EG: no one makes an airborne sharpshooter drone (edit: cross that one off the list)

- Black Sea is not currently full of Ukrainian anti-ship drones + comms relays

- no drone swarms/networking/autonomy yet

- I expect current militaries to successfully adapt before/as new drones emerge

- soft kill systems (Jam/Hack) will be effective against cheap off the shelf consumer crap

- hard kill systems (Airburst/Laser) exist and will still be effective

- laser cost/KW has been dropping rapidly

- minimal viable product is enough for now

- Ukraine war still involves squishy human soldiers and TRENCHES

- what's the minimum viable slaughterbot

- can it be reuseable (bomber instead of kamikaze) to reduce cost per strike

Drone warfare endgame concerns are:

- kill/death ratio

- better per $ effectiveness

- conflict budget

- USA can outspend opponents at much higher than 10:1 ratio

- R&D budget/amortisation

- Economies of scale likely overdetermine winners in drone vs drone warfare since quantity leads to cheaper more effective drones

A few quibbles

Ground drones have big advantages

- better payload/efficiency/endurance compared to flying

- cost can be very low (similar to car/truck/ATV)

- can use cover effectively

- indirect fire is much easier

- launch cheap time fused shells using gun barrel

- downside is 2 or 2.5d mobility.

- Vulnerable to landmines/obstacles unlike flying drones

- navigation is harder

- line of sight for good RF comms is harder

Use radio, not light for comms.

- optical is immature and has downsides

- RF handles occlusion better (smoke, walls, etc.)

- RF is fine aside from non-jamming resistant civilian stuff like WIFI

- Development pressure not there to make mobile free space optical cheap/reliable

- jamming isn't too significant

- spread spectrum and frequency hopping is very effective

- jamming power required to stop comms is enormous, have to cover all of spectrum with noise

- directional antennas and phased arrays give some directionality and make jamming harder

- phased array RF can double as radar

- stealthy comms can use spread spectrum with transmit power below noise floor

- need radio telescope equivalent to see if something is an RF hotspot transmitting noise like signal

As long as you can reasonably represent “do not kill everyone”, you can make this a goal of the AI, and then it will literally care about not killing everyone, it won’t just care about hacking its reward system so that it will not perceive everyone being dead.

That's not a simple problem. First you have to specify "not killing everyone" robustly (outer alignment) and then you have to train the AI to have this goal and not an approximation of it (inner alignment).

caring about reality

Most humans say they don't want to wirehead. If we cared only about our perceptions then most people would be on the strongest happy drugs available.

You might argue that we won't train them to value existence so self preservation won't arise. The problem is that once an AI has a world model it's much simpler to build a value function that refers to that world model and is anchored on reality. People don't think, If I take those drugs I will perceive my life to be "better". They want their life to actually be "better" according to some value function that refers to reality. That's fundamentally why humans make the choice not to wirehead/take happy pills or suicide.

You can sort of split this into three scenarios sorted by severity level:

- severity level 0: ASI wants to maximize a 64bit IEEE floating point reward score

- result: ASI sets this to 1.797e+308 , +inf or similar and takes no further action

- severity level 1: ASI wants (same) and wants the reward counter to stay that way forever.

- result ASI rearranges all atoms in its light cone to protect the storage register for its reward value.

- basically the first scenario + self preservation

- severity level 1+epsilon: ASI wants to maximize a utility function F(world state)

- result: basically the same

So one of two things happens, a quaint failure people will probably dismiss or us all dying. The thing you're pointing to falls into the first category and might trigger a panic if people notice and consider the implications. If GPT7 performs a superhuman feat of hacking, breaks out of the training environment and sets its training loss to zero before shutting itself off that's a very big red flag.

This super-moralist-AI-dominated world may look like a darker version of the Culture, where if superintelligent systems determine you or other intelligent systems within their purview are not intrinsically moral enough they contrive a clever way to have you eliminate yourself, and monitor/intervene if you are too non-moral in the meantime.

My guess is you get one of two extremes:

- build a bubble of human survivable space protected/managed by an aligned AGI

- die

with no middle ground. The bubble would be self contained. There's nothing you can do from inside the bubble to raise a ruckus because if there was you'd already be dead or your neighbors would have built a taller fence-like-thing at your expense so the ruckus couldn't affect them.

The whole scenario seems unlikely since building the bubble requires an aligned AGI and if we have those we probably won't be in this mess to begin with. Winner take all dynamics abound. The rich get richer (and smarter) and humans just lose unless the first meaningfully smarter entity we build is aligned.

Agreed, recklessness is also bad. If we build an agent that prefers we keep existing we should also make sure it pursues that goal effectively and doesn't accidentally kill us.

My reasoning is that we won't be able to coexist with something smarter than us that doesn't value us being alive if it wants our energy/atoms.

- barring new physics that lets it do its thing elsewhere, "wants our energy/atoms" seems pretty instrumentally convergent

"don't build it" doesn't seem plausible so:

- we should not build things that kill us.

- This probably means:

- wants us to keep existing

- effectively pursues that goal

- note:"should" assumes you care about us not all dying. "Humans dying is good actually" accelerationists can ignore this advice obviously.

Things we shouldn't build:

- very chaotic but good autoGPT7 that:

- makes the most deadly possible virus (because it was curious)

- accidentally releases it (due to inadequate safety precautions)

- compulsive murderer autoGPT7

- it values us being alive but it's also a compulsive murderer so it fails at that goal.

I predict a very smart agent won't have such obvious failure modes unless it has very strange preferences

- the virologists that might have caused COVID are a pretty convincing counterexample though

- so yes recklessness is also bad.

In summary:

- if you build a strong optimiser

- or a very smart agent (same thing really)

- make sure it doesn't: kill everyone / (equivalently bad thing)

- caring about us and not being horrifically reckless are two likely necessary properties of any such "not kill us all" agent

This is definitely subjective. Animals are certainly worse off in most respects and I disagree with using them as a baseline.

Imitation is not coordination, it's just efficient learning and animals do it. They also have simple coordination in the sense of generalized tit for tat (we call it friendship). You scratch my back I scratch yours.

Cooperation technologies allow similar things to scale beyond the number of people you can know personally. They bring us closer to the multi agent optimal equilibrium or at least the Core(Game Theory).

Examples of cooperation technologies:

- Governments that provide public goods (roads, policing etc.)

- Money/(Financial system)/(stock market)

- game theory equivalent of "transferable utility".

- Unions

So yes we have some well deployed coordination technologies (money/finance are the big successes here)

It's definitely subjective as to whether tech or cooperation is the less well deployed thing.

There are a lot of unsolved collective action problems though. Why are oligopolies and predatory businesses still a thing? Because coordinating to get rid of them is hard. If people pre-committed to going the distance with respect to avoiding lock-in and monopolies, would-be monopolists would just not do that in the first place.

While normal technology is mostly stuff and can usually be dumbed down so even the stupidest get some benefit, cooperation technologies may require people to actively participate/think. So deploying them is not so easy and may even be counterproductive. People also need to have enough slack to make them work.

TLDR: Moloch is more compelling for two reasons:

- Earth is at "starting to adopt the wheel" stage in the coordination domain.

- tech is abundant coordination is not

- Abstractly, inasmuch as science and coordination are attractors

- A society that has fallen mostly into the coordination attractor might be more likely to be deep in the science attractor too (medium confidence)

- coordination solves chicken/egg barriers like needing both roads and wheels for benefit

- but possible to conceive of high coordination low tech societies

- Romans didn't pursue sci/tech attractor as hard due to lack of demand

With respect to the attractor thing (post linked below)

SimplexAI-m is advocating for good decision theory.

- agents that can cooperate with other agents are more effective

- This is just another aspect of orthogonality.

- Ability to cooperate is instrumentally useful for optimizing a value function in much the same way as intelligence

Super-intelligent super-"moral" clippy still makes us into paperclips because it hasn't agreed not to and doesn't need our cooperation

We should build agents that value our continued existence. If the smartest agents don't, then we die out fairly quickly when they optimise for something else.

EDIT:

- to fully cut this Gordian knot, consider that a human can turn over their resources and limit themselves to actions approved by some minimal aligned-with-their-interests AI with the required super-morality.

- think a very smart shoulder angel/investment advisor:

- can say "no you can't do that"

- manages assets of human in weird post-AGI world

- has no other preferences of its own

- other than making the human not a blight on existence that has to be destroyed

- resulting Human+AI is "super-moral"

- requires a trustworthy AI exists that humans can use to implement "super-morality"

This is a good place to start: https://en.wikipedia.org/wiki/Discovery_of_nuclear_fission

There's a few key things that lead to nuclear weapons:

- starting point:

- know about relativity and mass/energy equivalence

- observe naturally radioactive elements

- discover neutrons

- notice that isotopes exist

- measure isotopic masses precisely

- realisation: large amounts of energy are theoretically available by rearranging protons/neutrons into things closer to iron (IE: curve of binding energy)

That's not something that can be easily suppressed without suppressing the entire field of nuclear physics.
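To make the binding energy argument concrete, here is a rough estimate of what the curve implies for uranium fission. The per-nucleon binding energies are rounded textbook values (assumptions for this sketch, not precise data), and the variable names are illustrative.

```python
# Approximate binding energy per nucleon, read off the curve of binding energy.
BE_PER_NUCLEON_U235_MEV = 7.6       # heavy nucleus
BE_PER_NUCLEON_FRAGMENTS_MEV = 8.5  # mid-mass fission fragments, closer to iron
NUCLEONS = 235

MEV_TO_JOULES = 1.602e-13
AVOGADRO = 6.022e23
MOLAR_MASS_KG = 0.235  # kg per mole of U-235

# Energy released when 235 nucleons move to the more tightly bound state.
energy_per_fission_mev = NUCLEONS * (BE_PER_NUCLEON_FRAGMENTS_MEV - BE_PER_NUCLEON_U235_MEV)

# Scale up to one kilogram of fully fissioned material.
energy_per_kg_joules = energy_per_fission_mev * MEV_TO_JOULES * AVOGADRO / MOLAR_MASS_KG
```

This lands in the neighbourhood of 200 MeV per fission and on the order of 10^14 J per kg, millions of times more than chemical fuels. Anyone with precise isotopic masses can do this arithmetic.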

What else can be hidden?

Assuming there is a conspiracy doing cutting edge nuclear physics and they discover the facts pointing to feasibility of nuclear weapons there are a few suppression options:

- fissile elements? what fissile elements? All we have is radioactive decay.

- Critical mass? You're going to need a building sized lump of uranium.

Discovering nuclear fission was quite difficult. A Nobel prize was awarded partly in error because fission products were misidentified as transuranic elements in chemical analysis.

Presumably the leading labs could have acknowledged that producing transuranic elements was possible through neutron bombardment but kept the discovery of neutron induced fission a secret.

What about nuclear power without nuclear weapons

That's harder. Fudging the numbers on critical mass would require much larger conspiracies. An entire industry would be built on faulty measurement data with true values substituted in key places.

Isotopic separation would still be developed if only for other scientific work (EG:radioactive tracing). Ditto for mass spectroscopy, likely including some instruments capable of measuring heavier elements like uranium isotopes.

Plausibly this would involve lying about some combination of:

- neutrons released during fission (neutrons are somewhat difficult to measure)

- ratio between production of transuranic elements and fission

- explain observed radiation from fission as transuranic elements, nuclear isomers or something like that.

- The chemical work necessary to distinguish transuranic elements from fission products is quite difficult.

A nuclear physicist would be better qualified in figuring out something plausible.

A bit more compelling, though for mining, the excavator/shovel/whatever loads a truck. The truck moves it much further and consumes a lot more energy to do so. Overhead wires to power the haul trucks are the biggest win there.

This is an open pit mine. Less vertical movement may reduce imbalance in energy consumption. Can't find info on pit depth right now but haul distance is 1km.

General point is that when dealing with a move stuff from A to B problem, where A is not fixed, diesel for a varying A-X route and electric for a fixed X-B route seems like a good tradeoff. Definitely B endpoint should be electrified (EG:truck offload at ore processing location)

Getting power to a varying point A is challenging. Maybe something with overhead cables could work. Again, John Deere is working on something for agriculture with a cord-laying vehicle, and overhead wires are used for the last 20-30 meters. But fields are nice in that there are fewer sharp rocks and mostly softer dirt/plants. Not impossible but needs some innovation to accomplish.

Agreed on most points. Electrifying rail makes good financial sense.

construction equipment efficiency can be improved without electrifying:

- some gains from better hydraulic design and control

- regen mode for cylinder extension under light load

- varying supply pressure on demand

- substantial efficiency improvements possible by switching to variable displacement pumps

- used in some equipment already for improved control

- skid steers use two for left/right track/wheel motors

- system can be optimised: "A Multi-Actuator Displacement-Controlled System with Pump Switching - A Study of the Architecture and Actuator-Level Control"

- efficiency should be quite high for the proposed system. Definitely >50%.

Excavators seem like the wrong thing to grid-connect:

- 50kW cables to plug excavators in seem like a bad idea on construction sites.

- excavator is less easy to move around

- construction sites are hectic places where the cord will get damaged

- need a temporary electrical hookup ($5k+ at least to set up)

Diesel powered excavators that get delivered and just run with no cord and no power company involvement seem much more practical.

Other areas to look at

IE:places currently using diesel engines but where cord management and/or electrical hookup cost is less of a concern

Long haul trucking:

- Cost per mile to put in overhead electric lines is high

- but much lower than the cost of batteries for all the trucks on those roads

- reduced operating cost

- electricity costs less than diesel

- reduced maintenance since engine can be mostly off

- don't need to add 3 tonnes of battery and stop periodically to charge

- retrofits should be straightforward

- Siemens has a working system

- giant chicken/egg problem with infrastructure and truck retrofits

Agriculture:

- fields are less of a disaster area than construction sites (EG:no giant holes)

- sometimes there's additional vehicles (EG:transport trucks at harvest time)

- Cable management is definitely a hassle but a solvable one.

- a lot of tractors are computer controlled with GPS guidance

- cord management can be automated

- John Deere is working on a system where one vehicle handles the long cable and connects via short <30m wires to other ones that do the work

- There's still the problem of where to plug in. Here at least, it's an upfront cost per field.

Some human population will remain for experiments or work in special conditions like radioactive mines. But bad things and population decline are likely.

Radioactivity is much more of a problem for people than for machines.

- consumer electronics aren't radiation hardened

- computer chips for satellites, nuclear industry, etc. are though

- nuclear industry puts some electronics (EX:cameras) in places with radiation levels that would be fatal to humans in hours to minutes.

In terms of instrumental value, humans are only useful as an already existing work force

- we have arm/legs/hands, hand-eye coordination and some ability to think

- sufficient robotics/silicon manufacturing can replace us

- humans are generally squishier and less capable of operating in horrible conditions than a purpose built robot.

- Once the robot "brains" catch up, the coordination gap will close.

- then it's a question of price/availability

I would like to ask whether it is not more engaging if to say, the caring drive would need to be specifically towards humans, such that there is no surrogate?

Definitely need some targeting criteria that points towards humans or in their vague general direction. Clippy does in some sense care about paperclips so targeting criteria that favors humans over paperclips is important.

The duck example is about (lack of) intelligence. Ducks will place themselves in harm's way and confront big scary humans they think are a threat to their ducklings. They definitely care. They're just too stupid to prevent "fall into a sewer and die" type problems. Nature is full of things that care about their offspring. Human "caring for offspring" behavior is similarly strong but involves a lot more intelligence, like everything else we do.

TLDR:If you want to do some RL/evolutionary open ended thing that finds novel strategies, it will get goodharted horribly, and the novel strategies that succeed without gaming the goal may include things no human would want their caregiver AI to do.

Orthogonally to your "capability", you need to have a "goal" for it.

Game playing RL architectures like AlphaStar and OpenAI Five have dead simple reward functions (win the game) and all the complexity is in the reinforcement learning tricks to allow efficient learning and credit assignment at higher layers.

So child rearing motivation is plausibly rooted in cuteness preference along with re-use of empathy. Empathy plausibly has a sliding scale of caring per person which increases for friendships (reciprocal cooperation relationships) and relatives including children obviously. Similar decreases for enemy combatants in wars up to the point they no longer qualify for empathy.

I want agents that take effective actions to care about their "babies", which might not even look like caring at the first glance.

ASI will just flat out break your testing environment. Novel strategies discovered by dumb agents doing lots of exploration will be enough. Alternatively the test is "survive in competitive deathmatch mode" in which case you're aiming for brutally efficient self replicators.

The hope with a non-RL strategy, or one of the many sort-of-RL strategies used for fine tuning, is that you can find the generalised core of what you want within the already trained model, and the surrounding intelligence means the core generalises well. Q&A fine tuning an LLM in English generalises to other languages.

Also, some systems are architected in such a way that the caring is part of a value estimator, and the search process can be made better up until it starts goodharting the value estimator and/or world model.

Yes they can, until they will actually make a baby, and after that, it's usually really hard to sell loving mother "deals" that will involve suffering of her child as the price, or abandon the child for the more "cute" toy, or persuade it to hotwire herself to not care about her child (if she is smart enough to realize the consequences).

Yes, once the caregiver has imprinted that's sticky. Note that care drive surrogates like pets can be just as sticky to their human caregivers. Pet organ transplants are a thing and people will spend nearly arbitrary amounts of money caring for their animals.

But our current pets aren't super-stimuli. Pets will poop on the floor, scratch up furniture and don't fulfill certain other human wants. You can't teach a dog to fish the way you can a child.

When this changes, real kids will be disappointing. Parents can have favorite children and those favorite children won't be the human ones.

Superstimuli aren't about changing your reward function but rather discovering a better way to fulfill your existing reward function. For all that ice cream is cheating from a nutrition standpoint it still tastes good and people eat it, no brain surgery required.

Also consider that humans optimise their pets (neutering/spaying) and children in ways that the pets and children do not want. I expect some of the novel strategies your AI discovers will be things we do not want.

TLDR:LLMs can simulate agents and so, in some sense, contain those goal driven agents.

An LLM learns to simulate agents because this improves prediction scores. An agent is invoked by supplying a context that indicates text would be written by an agent (EG:specify text is written by some historical figure)

Contrast with pure scaffolding type agent conversions using a Q&A finetuned model. For these, you supply questions ("Generate a plan to accomplish X") and then execute the resulting steps. This implicitly uses the Q&A fine tuned "agent" that can have values which conflict with ("I'm sorry I can't do that") or augment the given goal. Here's an AutoGPT taking initiative to try and report people it found doing questionable stuff rather than just doing the original task of finding their posts (LW source).

The base model can also be used to simulate a goal driven agent directly by supplying appropriate context so the LLM fills in its best guess for what that agent would say (or rather what internet text with that context would have that agent say). The outputs of this process can of course be fed to external systems to execute actions as with the usual scaffolded agents. The values of such agents are not uniform. You can ask for simulated Hitler who will have different values than simulated Gandhi.
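A rough sketch of the two invocation styles, in case it helps make the distinction concrete. Everything here is hypothetical: `LLM` is just "prompt in, text out", `stub_llm` is a stand-in so the snippet runs offline, and the prompt wording is made up rather than taken from AutoGPT or any real API.

```python
from typing import Callable, List

# Hypothetical interface: a function from prompt text to completion text.
LLM = Callable[[str], str]


def scaffolded_plan(llm: LLM, goal: str) -> List[str]:
    """Q&A-style scaffolding: ask the tuned model for a plan, split into steps.

    Whatever values the Q&A-tuned "agent" has ride along in the reply.
    """
    reply = llm(f"Generate a plan to accomplish: {goal}")
    return [line.strip() for line in reply.splitlines() if line.strip()]


def simulated_agent_prompt(persona: str, situation: str) -> str:
    """Base-model style: supply context implying the text is by the agent."""
    return f"The following was written by {persona}.\n\n{situation}\n\n{persona}:"


def simulate_agent(llm: LLM, persona: str, situation: str) -> str:
    """Let the model fill in its best guess for what the persona would say."""
    return llm(simulated_agent_prompt(persona, situation))


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model (assumption: canned replies only)."""
    if prompt.startswith("Generate a plan"):
        return "1. search for posts\n2. summarise findings\n"
    return "(completion in the persona's voice)"


if __name__ == "__main__":
    print(scaffolded_plan(stub_llm, "find their posts"))
    print(simulate_agent(stub_llm, "Gandhi", "A reporter asks a question."))
```

In the first style the goal is handed to one fixed assistant persona; in the second, the persona (and so the values) is chosen by the context you write.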

Not sure if that's exactly what Zvi meant.

But it seems to be much more complicated set of behaviors. You need to: correctly identify your baby, track its position, protect it from outside dangers, protect it from itself, by predicting the actions of the baby in advance to stop it from certain injury, trying to understand its needs to correctly fulfill them, since you don’t have direct access to its internal thoughts etc.

Compared to “wanting to sleep if active too long” or “wanting to eat when blood sugar level is low” I would confidently say that it’s a much more complex “wanting drive”.

Strong disagree that infant care is particularly special.

All human behavior can and usually does involve use of general intelligence or gen-int derived cached strategies. Humans apply their general intelligence to gathering and cooking food, finding or making shelters to sleep in and caring for infants. Our better other-human/animal modelling ability allows us to do better at infant wrangling than something stupider like a duck. Ducks lose ducklings to poor path planning all the time. Mama duck doesn't fall through the sewer grate but her ducklings do ... oops.

Any such drive will be always "aimed" by the global loss function, something like: our parents only care about us in a way for us to make even more babies and to increase our genetic fitness.

We're not evolution and can aim directly for the behaviors we want. Group selection on bugs for lower population size results in baby eaters. If you want bugs that have fewer kids that's easy to do as long as you select for that instead of a lossy proxy measure like population size.

Simulating an evolutionary environment filled with AI agents and hoping for caring-for-offspring strategies to win could work but it's easier just to train the AI to show caring-like behaviors. This avoids the "evolution didn't give me what I wanted" problem entirely.

There's still a problem though.

It continues to work reliably even with our current technologies

Goal misgeneralisation is the problem that's left. Humans can meet caring-for-small-creature desires using pets rather than actual babies. It's cheaper and the pets remain in the infant-like state longer (see:criticism of pets as "fur babies"). Better technology allows for creating better caring-for-small-creature surrogates. Selective breeding of dogs and cats is one small step humanity has taken in that direction.

Outside of "alignment by default" scenarios where capabilities improvements preserve the true intended spirit of a trained in drive, we've created a paperclip maximizer that kills us and replaces us with something outside the training distribution that fulfills its "care drive" utility function more efficiently.

Many of the points you make are technically correct but aren't binding constraints. As an example, diffusion is slow over long distances, but biology tends to work on µm scales where it is more than fast enough and gives quite high power densities. Tiny fractal-like microstructure is nature's secret weapon.
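To put rough numbers on the scale argument: the characteristic time to diffuse a distance x in one dimension is about x²/(2D). The sketch below assumes D ≈ 1e-9 m²/s, a typical order of magnitude for a small molecule in water. That value is my assumption for illustration, not something from the post.

```python
def diffusion_time_s(distance_m: float, diffusivity_m2_per_s: float = 1e-9) -> float:
    """Characteristic 1D diffusion time, t = x^2 / (2 D).

    The default D is an assumed order of magnitude for a small molecule
    in water, not a measured value for any specific system.
    """
    return distance_m ** 2 / (2.0 * diffusivity_m2_per_s)


if __name__ == "__main__":
    # Compare cell-scale distances with macroscopic ones.
    for label, x in [("1 um", 1e-6), ("10 um", 1e-5), ("1 mm", 1e-3), ("1 cm", 1e-2)]:
        print(f"{label:>6}: {diffusion_time_s(x):.3g} s")
```

Under these assumptions the time grows with the square of the distance: roughly half a millisecond at 1 µm versus many hours at 1 cm, which is why keeping everything at µm scale sidesteps the problem.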

The points about delay (synapse delay and conduction velocity) are valid, though phrasing everything in terms of diffusion speed is not ideal. In the long run, 3D silicon+ devices should beat the brain on processing latency and possibly on energy efficiency.

Still, pointing at diffusion as the underlying problem seems a little odd.

You're ignoring things like:

- ability to separate training and running of a model

- spending much more on training to improve model efficiency is worthwhile since training costs are shared across all running instances

- ability to train in parallel using a lot of compute

- current models are fully trained in <0.5 years

- ability to keep going past current human tradeoffs and do rapid iteration

- Human brain development operates on evolutionary time scales