Digital brains beat biological ones because diffusion is too slow

post by GeneSmith · 2023-08-26T02:22:25.014Z


I've spent quite a bit of time thinking about the possibility of genetically enhancing humans to be smarter, healthier, more likely to care about others, and just generally better in ways that most people would recognize as such.

As part of this research, I've often wondered whether biological systems could be competitive with digital systems in the long run.

My framework for thinking about this involved making a list of differences between digital systems and biological ones and trying to weigh the benefits of each. But the more I've thought about this question, the more I've realized most of the advantages of digital systems over biological ones stem from one key weakness of the latter: they are bottlenecked by the speed of diffusion.

I'll give a couple of examples to illustrate the point:

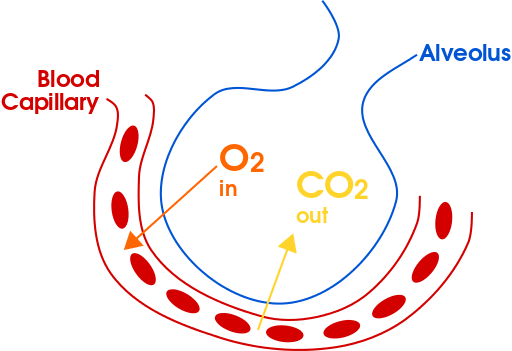

- To get oxygen into the bloodstream, the body passes air over a huge surface area in the lungs. Oxygen passively diffuses into the bloodstream through this surface where it binds to hemoglobin. The rate at which the body can absorb new oxygen and expel carbon dioxide waste is limited by the surface area of the lungs and the concentration gradient of both molecules.

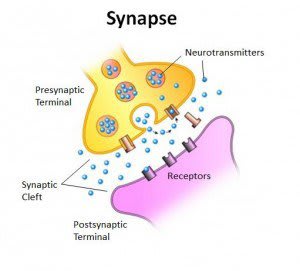

- Communication between neurons relies on the diffusion of neurotransmitters across the synaptic cleft. This process takes approximately 0.5-1ms. This imposes a fundamental limit on the speed at which the brain can operate.

- A signal propagates down the axon of a neuron at about 100 meters per second. You might wonder why this is so much slower than a wire; after all, both are transmitting a signal using electric potential, right?

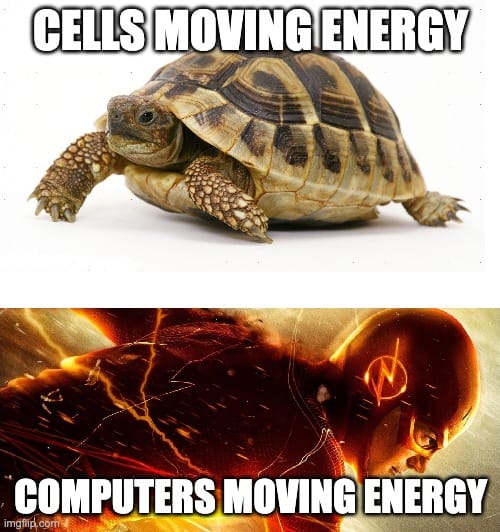

It turns out the electrical potential is transmitted very differently in a neuron. Signals propagate down an axon as Na+ ions passively diffuse into the axon through Na+ channels. The signal speed is fundamentally limited by the speed at which sodium ions can diffuse into the cell. As a result, electrical signals travel through a wire about 2.7 million times faster than they travel through an axon.

- Delivery of energy (mainly ATP) to different parts of the cell occurs via diffusion. The fastest diffusion rate I found for anything within a cell was that of positively charged hydrogen ions, which diffuse at a blistering speed of 0.007 meters/second. ATP diffuses much more slowly. So energy can be transferred through a wire at more than 38 billion times the speed that ATP can diffuse through a cell.
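The two ratios above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a wire signal speed of 2.7 × 10^8 m/s (roughly 90% of the speed of light). That value is implied by the post's "2.7 million times" figure rather than stated in it; the other two speeds are the post's own figures. Because ATP diffuses more slowly than H+ ions, the ratio computed against H+ is a lower bound on the wire-versus-ATP ratio.

```python
# Reproduces the speed ratios quoted in the post.
# ASSUMPTION: wire signal speed of 2.7e8 m/s (about 0.9c), the value implied
# by "2.7 million times faster" than a 100 m/s axon.
WIRE_SIGNAL_SPEED = 2.7e8  # m/s, assumed
AXON_SPEED = 100.0         # m/s, figure used in the post
H_ION_SPEED = 0.007        # m/s, the post's figure for H+, its fastest intracellular diffuser


def speed_ratio(fast: float, slow: float) -> float:
    """How many times faster `fast` is than `slow`."""
    return fast / slow


wire_vs_axon = speed_ratio(WIRE_SIGNAL_SPEED, AXON_SPEED)
wire_vs_ion = speed_ratio(WIRE_SIGNAL_SPEED, H_ION_SPEED)  # lower bound for ATP

if __name__ == "__main__":
    print(f"wire vs axon: {wire_vs_axon:.2e}")
    print(f"wire vs H+ diffusion: {wire_vs_ion:.2e}")
```

This treats diffusion as a constant speed, as the post does; strictly, diffusive displacement grows with the square root of time, so any single "speed" depends on the distance considered.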

Why hasn't evolution stumbled across a better method of doing things than passive diffusion?

Here I am going to speculate. I think that evolution is basically stuck at a local maximum. Once diffusion provided a solution for "get information or energy from point A to point B", evolving a fundamentally different system would require a large number of changes, each of which individually makes the organism less well adapted to its environment.

We can see examples of the difficulty of evolving fundamentally new abilities in Professor Richard Lenski's long-running evolution experiment using E. coli, which has been running since 1988. Lenski began growing E. coli in flasks of a nutrient solution containing glucose, potassium phosphate, citrate, and a few other things.

The only carbon source these bacteria can use is glucose, which is limited. Once per day, a small portion of the bacteria in each flask is transferred to a fresh flask, where they grow and multiply again.

Each flask will contain a number of different strains of E. coli, all of which originate from a common ancestor.

To measure the rate of evolution, Lenski and his colleagues track the proportion of each strain. The ratio of one strain to the others gives a clear idea of its "fitness advantage". The lab keeps frozen historical samples, which it occasionally uses to benchmark the fitness of newer lineages.
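Competition assays of this kind are commonly scored as relative fitness: the ratio of the two competitors' realized (Malthusian) growth rates, ln(final/initial), over one round of growth. The sketch below assumes that definition, which is the one usually used in Lenski's experiment rather than something spelled out in this post, and uses hypothetical cell counts.

```python
import math


def relative_fitness(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Relative fitness of strain A against strain B over one competition round.

    Computed as the ratio of realized (Malthusian) growth rates, ln(N_end / N_start).
    W > 1 means A grew faster than B; W == 1 means they are equally fit.
    """
    return math.log(a_end / a_start) / math.log(b_end / b_start)


# HYPOTHETICAL cell densities (cells/mL) at the start and end of one day of competition.
evolved_start, evolved_end = 1e6, 2e8    # evolved strain grows 200-fold
ancestor_start, ancestor_end = 1e6, 1e8  # thawed ancestor grows 100-fold

w = relative_fitness(evolved_start, evolved_end, ancestor_start, ancestor_end)

if __name__ == "__main__":
    print(f"relative fitness W = {w:.3f}")
```

Using growth rates rather than raw final counts keeps the measure from depending on how long the competition ran or how large the populations started.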

After 15 years of running this experiment, something very surprising happened. Here's a quote from Dr. Lenski explaining the finding:

In 2003, the bacteria started doing something remarkable. One of the 12 lineages suddenly began to consume a second carbon source, citrate, which had been present in our medium throughout the experiment.

It's in the medium as what's called a chelating agent to bind metals in the medium. But E. coli, going back to its original definition as a species, is incapable of that.

But one day we found one of our flasks had more turbidity. I thought we probably had a contaminant in there. Some bacterium had gotten in there that could eat the citrate and, therefore, had raised the turbidity. We went back into the freezer and restarted evolution. We also started checking those bacteria to see whether they really were E. coli. Yep, they were E. coli. Were they really E. coli that had come from the ancestral strain? Yep. So we started doing genetics on it.

It was very clear that one of our bacteria lineages had essentially, I like to say, sort of woken up one day, eaten the glucose, and unlike any of the other lineages, discovered that there was this nice lemony dessert, and they'd begun consuming that and getting a second source of carbon and energy.

Zack was interested in the question of why did it take so long to evolve this and has only one population evolved that ability? He went into the freezer and he picked bacterial individuals or clones from that lineage that eventually evolved that ability. And then he tried to evolve that ability again starting from different points. So in a sense, it's almost like, well, it's like rewinding the tape and starting let's go back to the minute five of the movie. Let's go back to a minute 10 of the movie, minute 20 of the movie and see if the result changes depending on when we did it, because this citrate phenotype there were essentially two competing explanations for why it was so difficult to evolve.

One was that it was just a really rare mutation. It wasn't like one of these just change one letter. It was something where maybe you had to flip a certain segment of DNA and you had to have exactly this break point and exactly that break point. And that was the only way to do it. So it was a rare event, but it could have happened at any point in time. The alternative hypothesis is that, well, what happened was a series of events that made something perfectly ordinary become possible that wasn't possible at the beginning because a mutation would only have this effect once other aspects of the organism had changed.

To make a long story short, it turns out it's such a difficult trait to evolve because both of those hypotheses are true.

This is a bit like how I think of the evolution of the ability to transmit energy or information without diffusion. Evolution was never able to directly evolve a way to transmit signals or information faster than myelinated neurons. Instead it ended up evolving a species capable of controlling electricity and making computers. And that species is in the process of turning over control of the world to a brand-new type of life that doesn't use DNA at all and is not limited by the speed of diffusion in any sense.

It's difficult to imagine how we could overcome many of these diffusion-based limitations of brains without a major redesign of how neurons function. We can probably raise IQ into the low or mid 200s with genetic engineering. But 250 IQ humans are rapidly going to be surpassed by AI that can spawn a new generation of itself in a matter of weeks or months.

So I think in the long run, the only way biological brains win is if we simply do not build AGI.

21 comments


comment by anithite (obserience) · 2023-08-26T18:19:26.701Z

Many of the points you make are technically correct but aren't binding constraints. As an example, diffusion is slow over long distances, but biology tends to work on µm scales where it is more than fast enough and gives quite high power densities. Tiny fractal-like microstructure is nature's secret weapon.
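The scale dependence is easy to quantify. A common order-of-magnitude estimate for the time to diffuse a distance x is t ≈ x²/(2D), from the one-dimensional mean squared displacement ⟨x²⟩ = 2Dt. This sketch assumes D = 10^-9 m²/s, a typical value for a small molecule in water; the distances are illustrative.

```python
# Order-of-magnitude diffusion times from the 1D relation <x^2> = 2 D t.
D_SMALL_MOLECULE = 1e-9  # m^2/s, ASSUMED typical value for a small molecule in water
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def diffusion_time(distance_m: float, diffusivity: float = D_SMALL_MOLECULE) -> float:
    """Characteristic time in seconds to diffuse `distance_m` metres: t = x^2 / (2 D)."""
    return distance_m ** 2 / (2 * diffusivity)


if __name__ == "__main__":
    for label, x in [("1 um", 1e-6), ("1 mm", 1e-3), ("1 m", 1.0)]:
        t = diffusion_time(x)
        print(f"{label:>5}: {t:.3g} s ({t / SECONDS_PER_YEAR:.3g} years)")
```

With these assumptions, 1 µm takes about 0.5 ms, 1 mm about 500 s (roughly 8 minutes), and 1 m about 5 × 10^8 s (roughly 16 years). Because time grows with the square of distance, diffusion is adequate inside a cell and hopeless across a body.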

The points about delay (synapse delay and conduction velocity) are valid, though phrasing everything in terms of diffusion speed is not ideal. In the long run, 3D silicon+ devices should beat the brain on processing latency and possibly on energy efficiency.

Still, pointing at diffusion as the underlying problem seems a little odd.

You're ignoring things like:

- ability to separate training and running of a model
  - spending much more on training to improve model efficiency is worthwhile since training costs are shared across all running instances
- ability to train in parallel using a lot of compute
  - current models are fully trained in <0.5 years
- ability to keep going past current human tradeoffs and do rapid iteration
  - Human brain development operates on evolutionary time scales
  - increasing human brain size by 10x won't happen anytime soon but can be done for AI models.

People like Hinton typically point to those as advantages, and that's mostly down to the nature of digital models as copy-able data, not anything related to diffusion.

Energy processing

Lungs are support equipment. Their size isn't that interesting. Normal computers, once you get off chip, have large structures for heat dissipation. Data centers can spend quite a lot of energy/equipment-mass getting rid of heat.

The highest biological power-to-weight ratio is found in bird muscle, which produces around 1 W/cm³ (mechanical power). Mitochondria in this tissue produce more than 3 W/cm³ of chemical ATP power. Brain power density is a lot lower: a typical human brain uses about 20 W, and 20 W / 1200 cm³ ≈ 0.017 W/cm³.

synapse delay

This is a legitimate concern. Biology had to make some tradeoffs here. There are a lot of places where direct mechanical connections would be great but biology uses diffusing chemicals.

Electrical synapses exist and have negligible delay, though they are much less flexible (they can't make inhibitory connections, and signals can pass both ways through the connection).

conduction velocity

Slow diffusion speed of charge carriers is a valid point and is related to the 10^8 factor difference in electrical conductivity between neuron saltwater and copper. Conduction speed is an electrical problem. There's a 300x difference in conduction speed between myelinated (300 m/s) and unmyelinated (1 m/s) neurons.

compensating disadvantages to current digital logic

The brain runs at 100-1000 Hz vs 1 GHz for computers (10^6 to 10^7 times slower). At first glance, digital logic would seem much better.

The brain has the advantage of being 3D compared to 2D chips, which means less need to move data long distances. Modern deep learning systems need to move all their synapse-weight-like data from memory into the chip during each inference cycle. You can do better by running a model across a lot of chips, but this is expensive and may be inefficient.
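The point about moving weights into the chip can be made concrete with a bandwidth lower bound: if every weight must be read from memory once per inference step, the step cannot finish faster than model size divided by memory bandwidth. The model size, precision, and bandwidth below are hypothetical round numbers, not figures from the comment.

```python
def min_seconds_per_step(n_params: float, bytes_per_param: float,
                         bandwidth_bytes_per_s: float) -> float:
    """Lower bound on one inference step when all weights are streamed from memory once."""
    return n_params * bytes_per_param / bandwidth_bytes_per_s


# HYPOTHETICAL round numbers: 70e9 parameters stored at 16-bit (2 bytes) precision,
# read over a memory interface delivering 2e12 bytes/s.
t = min_seconds_per_step(70e9, 2, 2e12)

if __name__ == "__main__":
    print(f"at least {t * 1e3:.0f} ms per step, "
          f"at most {1 / t:.1f} steps/s at batch size 1")
```

The bound ignores compute time and on-chip caching. Batching helps because a single read of the weights is shared across every sequence in the batch.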

In the long run, silicon (or something else) will beat brains in speed and perhaps a little in energy efficiency. If this fellow is right about lower-loss interconnects, then you get another +3 OOM (orders of magnitude) in energy efficiency.

But again, that's not what's making current models work. It's their nature as copy-able digital data that matters much more.

comment by localdeity · 2023-08-26T19:22:47.919Z

So I think in the long run, the only way biological brains win is if we simply do not build AGI.

Depends on how long you're talking about. It seems plausible to me that, if we got a bunch of 250 IQ humans, then they could in fact do a major redesign of neurons. However, I would expect all this to take at least 100 years (if not aided by superintelligent AI), which is longer than most AI timelines I've seen (unless we bring AI development to a snail's pace or a complete stop).

↑ comment by GeneSmith · 2023-08-26T20:09:38.744Z

Huh. I would actually bet on the 250 IQ humans being able to redesign neurons in far less than 100 years. But I think the odds of such humans existing before AGI is low.

↑ comment by localdeity · 2023-08-27T08:38:35.040Z

I'm counting the time it takes to (a) develop the 250 IQ humans [15-50 years], (b) have them grow to adulthood and become world-class experts in their fields [25-40 years], (c) do their investigation and design in mice [10-25 years], and (d) figure out how to incorporate it into humans nonfatally [5-15 years].

Then you'd either grow new humans with the super-neurons, or figure out how to change the neurons of existing adults. The former is usually easier with genetics, but I don't think you could dial the power up to maximum in one generation without drastically changing how mental development goes in childhood, with a high chance of causing most children to develop severe psychological problems. The 250 IQ researchers would be good at addressing this, of course, perhaps even at spotting the early signs of those problems (to allow faster iteration), but I think they'd still have to spend 10-50 years iterating with human children before fixing the crippling bugs.

So I think it might be faster to solve the harder problem of replacing an adult's neurons with backwards-compatible, adjustable super-neurons—that can interface with the old ones but also use the new method to connect to each other, which initially works at the same speed but then you can dial it up progressively and learn to fix the problems as they come up. Harder to set it up—maybe 5-10 extra years—but once you have it, I'd say 5-15 years before you've successfully dialed people up to "maximum".
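Adding up the stage estimates gives the overall timelines these two paths imply. This is a sketch under one reading of the comment: stages (a) through (d) are shared, they run strictly one after another, and the optimistic and pessimistic ends are summed separately. The stage lists transcribe the comment's ranges; the function and variable names are illustrative.

```python
from typing import List, Tuple

Range = Tuple[int, int]  # (optimistic, pessimistic) estimate in years


def total_years(stages: List[Range]) -> Range:
    """Sum optimistic and pessimistic ends separately, assuming stages run back to back."""
    return sum(low for low, _ in stages), sum(high for _, high in stages)


# Stages (a)-(d) from the comment: develop 250 IQ humans, grow them up,
# design in mice, adapt to humans.
shared = [(15, 50), (25, 40), (10, 25), (5, 15)]

path_new_humans = total_years(shared + [(10, 50)])             # iterate with children
path_adult_neurons = total_years(shared + [(5, 10), (5, 15)])  # harder setup, then dial up

if __name__ == "__main__":
    print("grow new humans:", path_new_humans)
    print("upgrade adult neurons:", path_adult_neurons)
```

Under that reading, both paths take roughly 65 years at the optimistic end, while the pessimistic end is 180 years for growing new humans and 155 years for upgrading adults; any overlap between stages would shorten both.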

comment by Andy_McKenzie · 2023-08-26T02:51:29.477Z

I agree with most of this post, but it doesn’t seem to address the possibility of whole brain emulation. However, many/(?most) would argue this is unlikely to play a major role because AGI will come first.

comment by brendan.furneaux · 2023-08-27T08:24:14.071Z

Although the crux of your claim that diffusion is the rate-limiting step of many biological processes may be sound, the question you actually ask, "Why hasn't evolution stumbled across a better method of doing things than passive diffusion?", is misguided. Evolution has stumbled across such methods. Your post itself contains several examples of evolved systems which move energy and information faster than diffusion. These include the respiratory system, which moves air into and out of the lungs much faster than diffusion would allow; the circulatory system, which moves oxygen and glucose (energy), hormones (information), and many other things, all much faster than diffusion; and neurons, which move impulses down the axon much faster than diffusion. In none of these cases has the role of diffusion been completely removed, which is what you are looking for I suppose, but life has increased the total speed from point A to point B by several orders of magnitude by finding an alternate mechanism for most of the distance.
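The advantage of bulk flow over diffusion can be sketched by comparing the time to carry something a distance x at flow speed v (t = x/v) with the diffusion estimate t ≈ x²/(2D). The two are equal at x* = 2D/v, and bulk flow wins at any longer distance. The values below are assumptions: D = 2 × 10^-9 m²/s, roughly oxygen in water, and v = 1 mm/s, roughly the flow speed in a capillary.

```python
# Compare bulk flow (advection) with diffusion over a distance x.
D_OXYGEN = 2e-9     # m^2/s, ASSUMED: roughly oxygen in water
V_CAPILLARY = 1e-3  # m/s, ASSUMED: roughly blood flow speed in a capillary


def advection_time(x: float, v: float = V_CAPILLARY) -> float:
    """Time in seconds to carry something a distance x at flow speed v."""
    return x / v


def diffusion_time(x: float, d: float = D_OXYGEN) -> float:
    """Order-of-magnitude time in seconds to diffuse a distance x: t = x^2 / (2 D)."""
    return x ** 2 / (2 * d)


def crossover_distance(d: float = D_OXYGEN, v: float = V_CAPILLARY) -> float:
    """Distance beyond which flow beats diffusion: x / v = x^2 / (2 D) gives x = 2 D / v."""
    return 2 * d / v


if __name__ == "__main__":
    print(f"crossover: {crossover_distance() * 1e6:.1f} um")
    x = 0.1  # 10 cm, an illustrative body-scale distance
    print(f"10 cm by flow: {advection_time(x):.3g} s, by diffusion: {diffusion_time(x):.3g} s")
```

With these numbers the crossover is about 4 µm, so over 10 cm bulk flow takes about 100 s while diffusion would take about 2.5 × 10^6 s (roughly a month).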

Beyond that type of optimization, I agree with you that this is a local maximum problem. Life's fundamental processes are based on chemical reactions in aqueous solution, which necessarily involve diffusion, and any move beyond that is a big, low-probability step.

That said, particularly in the case of the brain, I don't think evolution has thoroughly explored its possibility space, and there is room for further optimization within the current "paradigm". As one example, the evolution of human intelligence proceeded at least in large part by scaling brain size, but we are currently limited by the constraint that the head needs to be able to fit through the mother's pelvis during birth. Birds, based on totally different evolutionary pressures involving weight reduction for flight, seem to be able to pack processing power into a much smaller volume than mammals. If humans could "only" use bird brain architecture, then we could presumably increase our intelligence substantially without increasing the size of our heads. But despite the presumed evolutionary pressure (we suffer much higher maternal mortality than other species due to our big heads) there has not been enough time for us to convergently evolve brain miniaturization to the extent of birds.

comment by jmh · 2023-08-26T11:37:06.261Z

There was a story in NewAtlas about a merger of a computer with brain tissue that seems to have produced some interesting features. Perhaps such a union is the next evolutionary step for human cognitive improvement, rather than genetic screening/engineering or chemical additives.

comment by Metacelsus · 2023-08-26T20:56:50.836Z

Agreed. See also https://denovo.substack.com/p/biological-doom for an overview of different types of biological computation.

comment by Ilio · 2023-08-26T12:39:26.128Z

Main point: yes, neurons are not the best building block for communication speed, but you shouldn’t assume that increasing it would necessarily increase fitness. Muscles are much slower, the retina even more so, and even the fastest punch (the mantis shrimp's) is several times slower than communication speed in myelinated axons. That said, the overall conclusion that using neurons is an evolutionary trap is probably sound, as we know most of our genetic code is for tweaking something in the brain, without much change in how most neurons work and learn.

Tangent point: you’re assuming that 250 IQ is a sound concept. If you were to take an IQ test well designed for mice, would you expect to reach 250? If you were to measure IQ in slime mold, what kind of result would you expect, and would that IQ level help predict the behavior below?

https://www.discovermagazine.com/planet-earth/brainless-slime-mold-builds-a-replica-tokyo-subway

↑ comment by GeneSmith · 2023-08-26T16:50:25.099Z

My point isn't that blindly increasing neuron transmission speed would increase fitness. It probably would up to a point, but I don't know enough about how sensitive the brain is to the speed of signals in a neuron to be sure. My point is that getting information from one part of the brain to another is clearly a fundamental task in cognitive processing and that the relatively slow speed of electrical signals is always going to be a limitation of neurons.

I think something like 250IQ is still a pretty sound concept, though I agree we would really benefit from better methods of measuring intelligence than the standard IQ test. For one, it would be helpful to measure subject-specific aptitudes.

↑ comment by M Ls (m-ls) · 2023-08-28T22:38:39.559Z

"specific": My general intelligence is possibly above average, but my maths co-processor is crap. My AiPU module for LLM is better than anyone's, to the point I laugh at my own jokes in great pain; using the AiPU for maths is not great. I have a strong interior narrative, I am not a super-recogniser, not tone deaf, but have some weird fractal 2.23 mind's eye (I can swap between wireframe rotations and rendered scenes plus some other stuff I cannot find words for) (def not aphantasic https://newworkinphilosophy.substack.com/p/margherita-arcangeli-institut-jean). I have a good memory, and my auto-pilot is very strong, so I rarely get lost (I think these two things are connected, in me at least). About this memory thing, my wife says I have a long memory and this is a bad thing. Putting all that into IQ is dumb.

comment by Robert Mcdougal (robert-mcdougal) · 2024-01-02T00:57:45.902Z

"We can probably raise IQ into the low or mid 200s with genetic engineering."

So you don't think 300 to 900 IQ humans are possible? You think 250 is the max of a human?

Speed of thinking isn't the only component of the g factor; it's also memory, the size of the recallable knowledge space, and how many variables you can juggle in your head at once, which imo could keep getting larger if brains and bodies could also keep getting bigger. Do non-human primates like bonobos and chimps think at a slower rate than humans? Young non-human primates and other animals have pretty fast reaction times. The reason they have lower IQs than humans can be explained entirely by neuron count. Human intelligence is just primate brains scaled up with more neurons. Gorillas and orangutans have brains about 1/3 the size of human brains. Human brains obviously have the same speed constraints as gorilla brains. Knowledge and working memory are more important than speed. A 500 IQ human could outsource strict numerical calculation to silicon calculators.

↑ comment by GeneSmith · 2024-01-03T03:53:38.630Z

I think it’s plausible we could go higher, but I’m fairly certain the linear model will break down at some point. I don’t know exactly where, but somewhere above the current human range is a good guess.

You’ll likely need a “validation generation” to go beyond that, meaning a generation of very high IQ people who can study themselves and each other to better understand how real intelligence has deviated from the linear model at high IQ ranges.

The reason they have lower IQs than humans can be explained entirely by neuron count.

Not true. Humans have an inbuilt propensity for language in a way that gorillas and other non-human primates don’t. There are other examples like this.

↑ comment by Robert Mcdougal (robert-mcdougal) · 2024-01-12T21:55:10.197Z

How do we know this "propensity for language" isn't an emergent property that is a function of neuron count past a certain point?

comment by qjh (juehang) · 2023-08-28T13:55:23.792Z

A minor point, perhaps a nitpick: both biological systems and electronic ones depend on directed diffusion. In our bodies diffusion is often directed by chemical potentials, and in electronics it is directed by electric or vector potentials. It's the strength of the 'direction' versus the strength of the diffusion that makes the difference. (See: https://en.m.wikipedia.org/wiki/Diffusion_current)

Except in superconductors, of course.
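For reference, the textbook drift-diffusion expression for electron current density in a semiconductor separates the two contributions the comment describes: a drift term set by the electric field E and a diffusion term set by the concentration gradient. Here q is the elementary charge, n the electron density, μ_n the electron mobility, and D_n the electron diffusion coefficient, which the Einstein relation ties to the mobility (k_B is Boltzmann's constant and T the temperature). This is the one-dimensional, electron-only form.

```latex
J_n = q\,n\,\mu_n\,E + q\,D_n\,\frac{\partial n}{\partial x},
\qquad
D_n = \frac{\mu_n k_B T}{q}
```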

comment by Vladimir_Nesov · 2023-08-26T11:12:36.511Z

Superintelligence is like self-replicating diamondoid machines as human industry replacement: both are plausible predictions, but not cruxes of AI risk. Serial speed advantage alone is more than sufficient. Additionally, it by itself predicts the timeframe of only a few physical years from first AGIs to ancient AI civilizations/individuals (as hardware that removes contingent weaknesses of modern GPUs is manufactured).

Human intelligence enhancement is relevant as a path to figuring out alignment or AGI building ban coordination. With alignment, even biological brains don't have to be competitive.

There is also the problem of civilizational value drift: in the long run even a human civilization might go off the rails, and it's unclear that this problem gets addressed in time without intelligence enhancement, in a world without AGIs. The same applies to WBEs/ems if they magically appear before synthetic AGIs, except they also have the serial speed advantage. So if they remain at human level and don't work on value drift, their initial alignment with humanity isn't going to help, as in a few physical years they become an ancient civilization that has drifted uncontrollably far away from their origins (in directions not guided by moral arguments). Hanson's book explores how this process could start.

↑ comment by GeneSmith · 2023-08-26T16:52:29.264Z

With alignment, even biological brains don't have to be competitive.

I would agree with this, but only if by "alignment" you also include risk of misuse, which I don't generally consider the same problem as "make this machine aligned with the interests of the person controlling it".

↑ comment by Vladimir_Nesov · 2023-08-26T17:39:12.940Z

Ultimately, alignment is whatever makes turning on an AI a good idea rather than a bad idea. Some pivotal processes need to ensure enough coordination to avoid unilateral initiation of catastrophes. Banning manufacturing of GPUs (or of selling enriched uranium at a mall) is an example of such a process that doesn't even need AIs to do the work.

↑ comment by faul_sname · 2023-08-27T05:08:55.243Z

Ultimately, alignment is whatever makes turning on an AI a good idea rather than a bad idea.

This is pithy, but I don't think it's a definition of alignment that points at a real property of an agent (as opposed to a property of the entire universe, including the agent).

If we have an AI which controls which train goes on which track, and can detect where all the trains in its network are but not whether or not there is anything on the tracks, whether or not this AI is "aligned" shouldn't depend on whether or not anyone happens to be on the tracks (which, again, the AI can't even detect).

The "things should be better instead of worse" problem is real and important, but it is much larger than anything that can reasonably be described as "the alignment problem".

↑ comment by Vladimir_Nesov · 2023-08-27T09:38:19.113Z

The non-generic part is "turning an AI on", and "good idea" is an epistemic consideration on the part of the designers, not a reference to the actual outcome in reality.

I heard that sentence attributed to Yudkowsky on some podcasts, and it makes sense as an umbrella desideratum after pivoting from shared-extrapolated-values AI to pivotal act AI (as described on Arbital), since with that goal there doesn't appear to be a more specific short summary anymore. In the context where I mentioned that sentence, there is discussion of humans with augmented intelligence, so pivotal act (specialized tool) AI is more centrally back on the table (even as it still seems prudent to plan for long AGI timelines in our world as it is, to avoid abandoning that possibility only to arrive at it unprepared).

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-08-28T15:24:15.584Z

While I agree that easier speed upgrades to hardware is one advantage we should expect a digital mind to have, I wouldn't call that the primary advantage. Anithite mentions some others in their comment. I think increasing hardware size is a pretty big one. The biggest I think though is substrate independence. This unlocks many others. Easy hardware upgrades, rapid copying, travel at the speed of light between different substrate locations, easy storage of backup copies, ability to easily store oneself in a compressed form and then expand again, to add and modify parts of yourself. And many more. The beings best able to colonize the galaxy are obviously going to be ones with these sorts of advantages. Being able to send yourself in a hundred spaceships, each the size of a pea, with a compressed dormant copy of you, pushed through the interstellar spaces on laser beams operated by a copy of yourself remaining behind... No biological entity can compete with that kind of space travel, unless we are very mistaken about the laws of physics.