Review: Planecrash

post by L Rudolf L (LRudL) · 2024-12-27 · This is a link post for https://nosetgauge.substack.com/p/review-planecrash

Contents

- The setup
- The characters
- The competence
- The philosophy
  - Validity, Probability, Utility
  - Coordination
  - Decision theory
- The political philosophy of dath ilan
- A system of the world

Take a stereotypical fantasy novel, a textbook on mathematical logic, and Fifty Shades of Grey. Mix them all together and add extra weirdness for spice. The result might look a lot like Planecrash (AKA: Project Lawful), a work of fiction co-written by "Iarwain" (a pen-name of Eliezer Yudkowsky) and "lintamande".

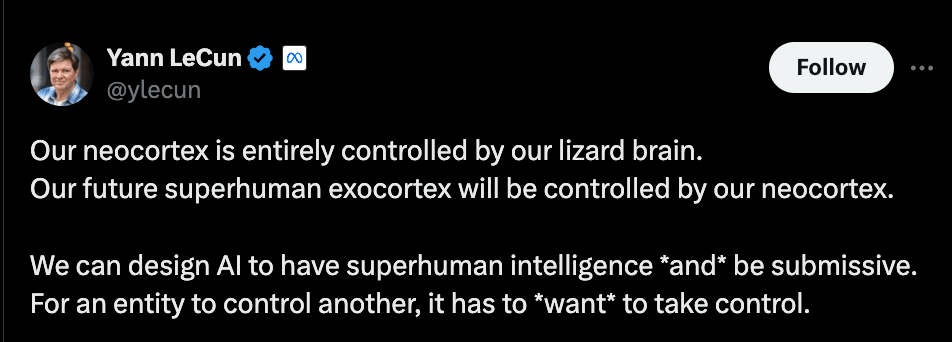

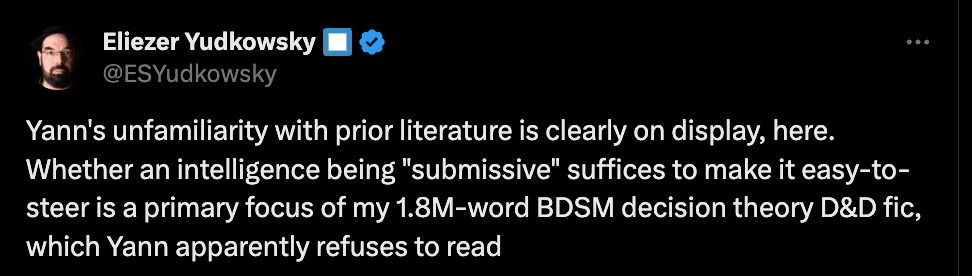

Yudkowsky is not afraid to be verbose and self-indulgent in his writing. He previously wrote a Harry Potter fanfic that includes what's essentially an extended Ender's Game fanfic in the middle of it, because why not. In Planecrash, the self-indulgence starts with the very format: it's written as a series of forum posts (though there are ways to get an ebook). It continues with maths lectures embedded into the main arc, totally plot-irrelevant tangents that are just Yudkowsky ranting about frequentist statistics, and one instance of Yudkowsky hijacking the plot for a few pages to soapbox about his pet Twitter feuds (with transparent in-world analogues for Effective Altruism, TPOT, and the post-rationalists). Planecrash does not aspire to be high literature. Yudkowsky is well aware of this, and uses it to troll big-name machine learning researchers:

Why would anyone ever read Planecrash? I read it (admittedly, I sometimes skimmed), and I see two reasons:

- The characters are competent in a way that characters in fiction rarely are. Yudkowsky is good at writing intelligent characters in a specific way that I haven't seen anyone else do as well. Lintamande writes a uniquely compelling story of determination and growth in an extremely competent character.

- More than anyone else I've yet read, Yudkowsky has his own totalising and self-consistent worldview/philosophy, and Planecrash makes it pop more than anything else he's written.

The setup

Dath ilan is an alternative quasi-utopian Earth, based (it's at least strongly hinted) on the premise of: what if the average person were Eliezer Yudkowsky? Dath ilan has all the normal quasi-utopian things like world government and land-value taxes and the widespread use of Bayesian statistics in science. Dath ilan also has some less-normal things, like annual Oops It's Time To Overthrow the Government festivals, an order of super-rationalists, and extremely high financial rewards for designing educational curricula that bring down the age at which the average child learns the maths behind the game theory of cooperation.

Keltham is an above-average-selfishness, slightly-above-average-intelligence young man from dath ilan. He dies in the titular plane crash, and wakes up in Cheliax.

Cheliax is a country in a medieval fantasy world in another plane of existence from dath ilan's (get it?). (This fantasy world is copied from a role-playing game setting, a fact I discovered when Planecrash literally linked to a Wiki article to explain part of the in-universe setting.) Like every other country in this world, Cheliax is medieval and poor. Unlike the other countries, Cheliax has the additional problem of being ruled by the forces of Hell.

Keltham meets Carissa, a Chelish military wizard who alerts the Chelish government to Keltham. Keltham is kept unaware of the Hellish nature of Cheliax, so he's eager to use his knowledge to start the scientific and industrial revolutions in Cheliax to solve the medieval poverty thing, starting with delivering lectures on first-order logic (why, what else would you first do in a medieval fantasy world?). An elaborate game begins where Carissa and a select group of Chelish agents try to extract maximum science from an unwitting Keltham before he realises what Cheliax really is, and hope that by that time, they'll have tempted him to change his morals in a darker, more Cheliax-compatible direction.

The characters

Keltham oscillates somewhere between annoying and endearing.

The annoyingness comes from his gift for interrupting any moment with polysyllabic word vomit. Thankfully, this is not random pretentious techno-babble but a coherent depiction of a verbose character who thinks in terms of a non-standard set of concepts. Keltham's thoughts often include an exclamation along the lines of "what, how is {'coordination failure' / 'probability distribution' / 'decision-theoretic-counterfactual-threat-scenario'} so many syllables in this language, how do these people ever talk?" Not an unreasonable question. However, the sheer volume of Keltham's verbosity is still a lot to take, especially when it gets in the way of everything else.

The endearingness comes from his manic rationalist problem-solver energy, which gets applied to everything: figuring out chemical processes for magic ingredients, estimating the odds that he's involved in a conspiracy, and managing the complicated social scene Cheliax places him in. It's somewhat like The Martian, a novel (and movie) about an astronaut stranded on Mars solving a long series of engineering challenges, but the problem-solving is much more abstract, game-theoretic, and interpersonal than concrete, physical, and man-versus-world.

By far the best and most interesting character in Planecrash is Carissa Sevar, one of the several characters whose point-of-view is written by lintamande rather than Yudkowsky. She's so driven that she accidentally becomes a cleric of the god of self-improvement. She grapples realistically with the large platter of problems she's handed, experiences triumph and failure, and keeps choosing pain over stasis. All this leads to perhaps the greatest arc of grit and unfolding ambition that I've read in fiction.

The competence

I have a memory of once reading some rationalist blogger describing the worldview of some politician as: there's no such thing as competence, only loyalty. If a problem doesn't get solved, it's definitely not because the problem was tricky and there was insufficient intelligence applied to it or a missing understanding of its nature or someone was genuinely incompetent. It's always because whoever was working on it wasn't loyal enough to you. (I thought this was Scott Alexander on Trump, but the closest thing I can find from him seems to be this, which makes a very different point.)

Whether or not I hallucinated this, the worldview of Planecrash is the opposite.

Consider Queen Abrogail Thrune II, the despotic and unhinged ruler of Cheliax who has a flair for torture. You might imagine that her main struggles are paranoia over the loyalty of her minions, and finding time to take glee in ruling over her subjects. And there's some of that. But more than that, she spends a lot of time being annoyed by how incompetent everyone around her is.

Or consider Aspexia Rugatonn, Cheliax's religious leader and therefore in charge of making the country worship Hell. She's basically a kindly grandmother figure, except not. You might expect her thoughts to be filled with deep emotional conviction about Hell, or disappointment in the "moral" failures of those who don't share her values (i.e. every non-sociopath who isn't brainwashed hard enough). But instead, she spends a lot of her time annoyed that other people don't understand how to act most usefully within the bounds of the god of Hell's instructions. The one time she gets emotional is when a Chelish person finally manages to explain the concept of corrigibility to her as well as Aspexia herself could. (The gods and humans in the Planecrash universe are in a weird inverse version of the AI alignment problem. The gods are superintelligent, but have restricted communication bandwidth and clarity with humans. Therefore humans often have to decide how to interpret tiny snippets of god-orders through changing circumstances. So instead of having to steer the superintelligence given limited means, the core question is how to let yourself be steered by a superintelligence that has very limited communication bandwidth with you.)

Fiction is usually filled with characters who advance the plot in helpful ways with their emotional fumbles: consider the stereotypical horror movie protagonist getting mad and running into a dark forest alone, or a character whose pride is insulted doing a dumb thing on impulse. Planecrash has almost none of that. The characters are all good at their jobs. They are surrounded by other competent actors with different goals thinking hard about how to counter their moves, and they always think hard in response, and the smarter side tends to win. Sometimes you get the feeling you're just reading the meeting notes of a competent team struggling with a hard problem. Evil is not dumb or insane, just "unaligned" by virtue of pursuing a different goal than you, and it pursues that goal very competently. For example: the core values of the forces of Hell are literally tyranny, slavery, and pain. They have a strict hierarchy and take deliberate steps to encourage arbitrary despotism out of religious conviction. And yet: their hierarchy is still mostly an actual competence hierarchy, because the decision-makers are all very self-aware that they can only be despotic to the extent that it still promotes competence on net. Because they're competent.

Planecrash, at its heart, is competence porn. Keltham's home world of dath ilan is defined by its absence of coordination failures. Neither there nor in Cheliax's world are there really any lumbering bureaucracies that do insane things for inscrutable bureaucratic reasons; all the organisations depicted are remarkably sane. Important positions are almost always filled by the smart, skilled, and hardworking. Decisions aren't made because of emotional outbursts. Instead, lots of agents go around optimising for their goals by thinking hard about them. For a certain type of person, this is a very relaxing world to read about, despite all the hellfire.

The philosophy

"Rationality is systematized winning", writes Yudkowsky in The Sequences. All the rest is commentary.

The core move in Yudkowsky's philosophy is:

- We want to find the general solution to some problem.
  - For example: fairness. How should we split the gains from a project in which many people participated?
- Now here are some common-sense properties that this thing should satisfy.
  - For example:
    - (1) no gains should be left undivided
    - (2) if two people make identical contributions in every circumstance (where a circumstance is formalised as a set of participating people), they should receive equal shares of the gains
    - (3) the rule should give the same answer whether you apply it to project A and project B separately and add up the results, or apply it once to the combined project A+B
    - (4) if one person doesn't add value in any circumstance, their share of the gains is zero
- Here is The Solution. Note that it's mathematically provable that if you don't follow The Solution, there exists a situation where you will do something obviously dumb.
  - For example: the Shapley value is the unique solution that satisfies the axioms above. (The Planecrash walkthrough of the Shapley value is roughly here; see also here for more Planecrash about trade and fairness.)
- Therefore, The Solution is uniquely spotlighted by the combination of common-sense goals and maths as the final solution to this problem, and if you disagree, please read this 10,000-word dialogue.
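To make the fairness example concrete, here is a minimal Python sketch of the Shapley value (my own illustration, not code from Planecrash). It uses the textbook definition: each person's share is their marginal contribution to the group, averaged over every order in which the participants could have joined. The `project` payoff function and the names Alice, Bob, and Carol are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial

def shapley_values(players, value):
    """Shapley value of each player.

    `value` maps a frozenset of participating players to the total gains that
    group would produce on its own. Each player's share is their marginal
    contribution, averaged over every order in which the players could join.
    """
    shares = {p: Fraction(0) for p in players}
    for order in permutations(players):
        joined = frozenset()
        for p in order:
            # How much does p add to the group that joined before them?
            shares[p] += value(joined | {p}) - value(joined)
            joined = joined | {p}
    n_orders = factorial(len(players))
    return {p: s / n_orders for p, s in shares.items()}

def project(group):
    # Hypothetical example: the project pays 100 only if both Alice and Bob
    # take part; Carol's participation never changes the outcome.
    return 100 if {"Alice", "Bob"} <= group else 0

print(shapley_values(["Alice", "Bob", "Carol"], project))
# {'Alice': Fraction(50, 1), 'Bob': Fraction(50, 1), 'Carol': Fraction(0, 1)}
```

Looping over all n! join orders is only practical for a handful of players; the brute force is there because it mirrors the definition. In the example, Alice and Bob are interchangeable, so they split the gains equally (property 2); Carol never adds value and gets nothing (property 4); and the shares add up to the full 100 (property 1).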

The centrality of this move is something I did not get from The Sequences, but which is very apparent in Planecrash. A lot of the maths in Planecrash isn't new Yudkowsky material. But Planecrash is the only thing that has given me a map through the core objects of Yudkowsky's philosophy, and spelled out the high-level structure so clearly. It's also, as far as I know, the most detailed description of Yudkowsky's quasi-utopian world of dath ilan.

Validity, Probability, Utility

Keltham's lectures to the Chelish (yes, there are actually literal maths lectures within Planecrash) walk through three key examples, with spotty completeness but high quality in whatever they do cover:

- Validity, i.e. logic. In particular, Yudkowsky highlights what I think is some combination of Lindström's theorem and Gödel's completeness theorem, which together imply that first-order logic is the unique logic that is both complete (i.e. every statement that holds in all of its models can be proven) and has some other nice properties. However, first-order logic is also not strong enough to capture some things we care about (for example, it cannot pin down the natural numbers up to isomorphism), so this is the weakest example of the above pattern. Yudkowsky has written out his thoughts on logic in the mathematics and logic section here, if you want to read his takes in a non-fiction setting.

- Probability. So-called Dutch book theorems show that if an agent's credences violate the probability axioms, or if it does not update them in a Bayesian way, there exists a set of bets, each of which the agent considers acceptable, that together guarantee it a loss. So your credences in beliefs should be represented as probabilities, and you should update those probabilities with Bayes' theorem. (Here is a list of English statements that, dath ilani civilisation thinks, anyone competent in Probability should be able to translate into correct maths.)

- Utility. The behaviour of any agent that is "rational" in a certain technical sense should be describable by a "utility function": every outcome can be assigned a number, such that the agent predictably chooses outcomes with higher numbers over those with lower ones. This is because if an agent violates this constraint, there must exist situations where it would do something obviously dumb. As a shocked Keltham puts it: "I, I mean, there's being chaotic, and then there's being so chaotic that it violates coherence theorems".
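A minimal Python sketch of the simplest (synchronic) Dutch book, assuming an agent that will buy or sell a ticket paying 1 if an outcome occurs, at a price equal to its credence in that outcome. If its credences across mutually exclusive, exhaustive outcomes don't sum to 1, a bookie can trade every ticket with it and lock in a loss whichever outcome occurs. The diachronic argument for updating by Bayes' theorem works along similar lines but needs conditional bets, which this sketch leaves out. The outcome names and numbers are hypothetical.

```python
from fractions import Fraction

def dutch_book(credences):
    """Agent's net payoff in each outcome after a bookie trades every ticket with it.

    `credences` maps mutually exclusive, exhaustive outcomes to the agent's credence.
    Assumption: the agent treats a ticket paying 1 if that outcome occurs as fairly
    priced at its credence, and will buy or sell such tickets at that price.
    """
    total = sum(credences.values())
    if total > 1:
        # Bookie sells the agent every ticket: agent pays `total`, collects exactly 1.
        net = 1 - total
    else:
        # Bookie buys every ticket from the agent: agent collects `total`, pays out exactly 1.
        net = total - 1
    # Exactly one outcome occurs, so the net is the same whichever one it is.
    return {outcome: net for outcome in credences}

incoherent = {"rain": Fraction(3, 5), "no rain": Fraction(3, 5)}
print(dutch_book(incoherent))
# {'rain': Fraction(-1, 5), 'no rain': Fraction(-1, 5)}
```

Exact fractions avoid floating-point noise when the credences do sum to 1; in that coherent case the bookie's trades net to zero in every outcome.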

In Yudkowsky's own words, not in Planecrash but in an essay he wrote (with much valuable discussion in the comments):

We have multiple spotlights all shining on the same core mathematical structure, saying dozens of different variants on, "If you aren't running around in circles or stepping on your own feet or wantonly giving up things you say you want, we can see your behavior as corresponding to this shape. Conversely, if we can't see your behavior as corresponding to this shape, you must be visibly shooting yourself in the foot." Expected utility is the only structure that has this great big family of discovered theorems all saying that. It has a scattering of academic competitors, because academia is academia, but the competitors don't have anything like that mass of spotlights all pointing in the same direction.

So if we need to pick an interim answer for "What kind of quantitative framework should I try to put around my own decision-making, when I'm trying to check if my thoughts make sense?" or "By default and barring special cases, what properties might a sufficiently advanced machine intelligence look to us like it possessed, at least approximately, if we couldn't see it visibly running around in circles?", then there's pretty much one obvious candidate: Probabilities, utility functions, and expected utility.
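The "running around in circles" in that quote can be made concrete. Below is a minimal Python sketch (a simplified illustration, not code from Planecrash or the essay) restricted to a finite set of outcomes and strict preferences. One function tries to assign a number to each outcome so that preferred outcomes always get higher numbers, which succeeds exactly when the preferences contain no cycle; the other walks an agent with cyclic preferences around its cycle, charging a fee at every trade. The outcome labels and the fee are hypothetical. `graphlib` needs Python 3.9 or later.

```python
from graphlib import CycleError, TopologicalSorter

def utility_from_preferences(better_than):
    """Assign numbers so every strictly preferred outcome gets a higher number.

    `better_than` is a set of (x, y) pairs meaning "x is strictly preferred to y".
    Returns {outcome: utility}, or None if the preferences contain a cycle.
    """
    graph = {}  # TopologicalSorter wants {node: predecessors}; worse outcomes come first
    for better, worse in better_than:
        graph.setdefault(better, set()).add(worse)
        graph.setdefault(worse, set())
    try:
        order = list(TopologicalSorter(graph).static_order())
    except CycleError:
        return None  # e.g. A > B > C > A: no numbers can satisfy all three
    return {outcome: rank for rank, outcome in enumerate(order)}

def money_pump(better_than, start, offers, fee):
    """Offer trades in sequence; the agent swaps (paying `fee`) whenever it strictly
    prefers the offered item to what it holds. Returns (final item, net money)."""
    holding, money = start, 0
    for offered in offers:
        if (offered, holding) in better_than:
            holding, money = offered, money - fee
    return holding, money

cyclic = {("A", "B"), ("B", "C"), ("C", "A")}
print(utility_from_preferences(cyclic))                     # None
print(money_pump(cyclic, "A", ["C", "B", "A"] * 3, fee=1))  # ('A', -9)
```

The cyclic agent ends up holding exactly what it started with, 9 units poorer. No utility function can describe it, because the cycle would require u(A) > u(B) > u(C) > u(A).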

Coordination

Next, coordination. There is no single theorem or total solution for the problem of coordination. But the Yudkowskian frame has near-infinite scorn for failures of coordination. Imagine not realising all possible gains just because you're stuck in some equilibrium of agents defecting against each other. Is that winning? No, it's not. Therefore, it must be out.
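The "equilibrium of agents defecting against each other" is usually illustrated with the prisoner's dilemma. Here is a minimal Python sketch using the standard textbook payoff ordering (the specific numbers are hypothetical, not from Planecrash): it brute-forces the pure-strategy Nash equilibria, then checks which outcomes would leave both players better off.

```python
from itertools import product

# Hypothetical payoffs with the standard prisoner's-dilemma ordering
# (temptation 5 > reward 3 > punishment 1 > sucker 0).
MOVES = ("C", "D")  # cooperate, defect
PAYOFFS = {  # (row move, column move) -> (row payoff, column payoff)
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def is_nash(profile):
    """True if no single player can gain by switching only their own move."""
    for player in (0, 1):
        for alternative in MOVES:
            deviated = list(profile)
            deviated[player] = alternative
            if PAYOFFS[tuple(deviated)][player] > PAYOFFS[profile][player]:
                return False
    return True

def pareto_improvements(profile):
    """Profiles where nobody is worse off and at least one player is better off."""
    base = PAYOFFS[profile]
    return [
        other for other, pay in PAYOFFS.items()
        if all(new >= old for new, old in zip(pay, base)) and pay != base
    ]

equilibria = [p for p in product(MOVES, repeat=2) if is_nash(p)]
print(equilibria)                       # [('D', 'D')]
print(pareto_improvements(("D", "D")))  # [('C', 'C')]
```

Mutual defection is the only profile that neither player can improve on alone, yet mutual cooperation is better for both. Closing that gap without anyone defecting is exactly the kind of coordination problem dath ilan is imagined to have solved.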

Dath ilan has a mantra that goes, roughly: if you do that, you will end up there, so if you want to end up somewhere that is not there, you will have to do Something Else Which Is Not That. And the basic premise of dath ilan is that society actually has the ability to collectively say "we are currently going there, and we don't want to, and while none of us can individually change the outcome, we will all coordinate to take the required collective action and not defect against each other in the process even if we'd gain from doing so". Keltham claims that in dath ilan, if there somehow developed an oppressive tyranny, everyone would wait for some Schelling time (like a solar eclipse or the end of the calendar year or whatever) and then simultaneously rise up in rebellion. It probably helps that dath ilan has annual "oops it's time to overthrow the government" exercises. It also helps that everyone in dath ilan knows that everyone knows that everyone knows that everyone knows (...) all the standard rationalist takes on coordination and common knowledge.

Keltham summarises the universality of Validity, Probability, Utility, and Coordination (note the capitals):

"I am a lot more confident that Validity, Probability, and Utility are still singled-out mathematical structures whose fragmented shards and overlapping shadows hold power in Golarion [=the world of Cheliax], than I am confident that I already know why snowflakes here have sixfold symmetry. And I wanted to make that clear before I said too much about the hidden orders of reality out of dath ilan - that even if the things I am saying are entirely wrong about Golarion, that kind of specific knowledge is not the most important knowledge I have to teach. I have gone into this little digression about Validity and timelessness and optimality, in order to give you some specific reason to think that [...] some of the knowledge he has to teach is sufficiently general that you have strong reason for strong hope that it will work [...]

[...] "It is said also in dath ilan that there is a final great principle of Law, less beautiful in its mathematics than the first three, but also quite important in practice; it goes by the name Coordination, and deals with agents simultaneously acting in such fashion to all get more of what they wanted than if they acted separately."

Decision theory

The final fundamental piece of Yudkowsky's philosophy is decision theory that goes beyond causal decision theory.

A short primer / intuition pump: a decision theory specifies how you should choose between various options (it's not moral philosophy, because it assumes that we already know what we value). The most straightforward decision theory is causal decision theory, which says: pick the option that causes the best outcome in expectation. Done, right? No; the devil is in the word "causes". Yudkowsky makes much of Newcomb's problem, but I prefer another example: Parfit's hitchhiker. Imagine you're a selfish person stuck in a desert without your wallet, and you want to make it back to your hotel in the city. A car pulls up, with a driver who knows whether you're telling the truth. You ask to be taken back to your hotel. The driver asks whether you'll pay them $10 for the ride. Dying in the desert is worse for you than paying $10, so you'd like to take this offer. However, you obey causal decision theory: if the driver takes you to your hotel, you would go inside to get your wallet, but once inside you would have a choice between (a) taking $10 back out to the driver and therefore losing money, and (b) staying in your hotel and losing nothing. Causal decision theory says to take option (b), because you're a selfish agent who doesn't care about the driver. And the driver knows you'd be lying if you said "yes", so you have to tell the driver "no". The driver drives off, and you die of thirst in the desert. If only you had spent more time arguing about non-causal decision theories on LessWrong.

Dying in a desert rather than spending $10 is not exactly systematised winning. So causal decision theory is out. (You could argue that another moral of Parfit's hitchhiker is that being a purely selfish agent is bad, and humans aren't purely selfish so it's not applicable to the real world anyway, but in Yudkowsky's philosophy, and in decision theory academia, you want a general solution to the problem of rational choice where you can take any utility function and win by its lights regardless of which convoluted setup philosophers drop you into.) Yudkowsky's main academic / mathematical accomplishment is co-inventing (with Nate Soares) functional decision theory, which says you should consider your decisions as the output of a fixed function, and then choose the function that leads to the best consequences for you. This solves Parfit's hitchhiker, as well as problems like the smoking lesion problem that evidential decision theory, the classic non-causal decision theory, succumbs to. As far as I can judge, functional decision theory is actually a good idea (if somewhat underspecified), but academic engagement with it (whether critique or praise) has been limited, so there's no broad consensus in its favor that I can point at. (If you want to read Yudkowsky's explanation for why he doesn't spend more effort on academia, it's here.)
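A toy Python model of the difference in Parfit's hitchhiker, under loud assumptions: the driver is a perfect predictor of your policy, the utilities are made-up numbers, and "choose the function" is reduced to comparing two fixed policies. This is far simpler than Yudkowsky and Soares's actual formulation of functional decision theory; it only shows why evaluating the decision rule, rather than the act in isolation, changes the answer.

```python
# Toy payoffs (hypothetical, not from Planecrash): being rescued and then
# paying costs 10; being left in the desert is far worse.
PAY_COST = -10
DEATH = -1_000_000

def outcome_of_policy(pays_once_home):
    """Total utility for an agent whose policy the driver reads perfectly in advance."""
    if pays_once_home:
        return PAY_COST  # driver foresees payment, gives the ride, gets paid
    return DEATH  # driver foresees non-payment and drives off

def cdt_choice_at_hotel():
    """Causal reasoning from inside the hotel: the ride is already in the past,
    so the only thing paying causes is losing $10."""
    options = {"pay": PAY_COST, "stay inside": 0}
    return max(options, key=options.get)

def policy_choice():
    """Policy-level (FDT-style) reasoning: pick the decision rule itself, counting
    the fact that the driver's prediction tracks whichever rule you run."""
    options = {"pay": outcome_of_policy(True), "stay inside": outcome_of_policy(False)}
    return max(options, key=options.get)

for name, choice in [("CDT", cdt_choice_at_hotel()), ("policy", policy_choice())]:
    print(name, choice, outcome_of_policy(choice == "pay"))
# CDT stay inside -1000000
# policy pay -10
```

The causal reasoner's choice looks locally optimal once it is inside the hotel, but because the driver predicted that very choice, it never gets there.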

(Now you know what a Planecrash tangent feels like, except you don't, because Planecrash tangents can be much longer.)

One big aspect of Yudkowskian decision theory is how to respond to threats. Following causal decision theory means you can neither make credible threats nor commit to deterrence to counter threats. Yudkowsky endorses not responding to threats to avoid incentivising them, while also having deterrence commitments to maintain good equilibria. He also implies this is a consequence of using a sensible functional decision theory. But there's a tension here: your deterrence commitment could be interpreted as a threat by someone else, or vice versa. When the Eisenhower administration's nuclear doctrine threatened massive nuclear retaliation in the event of the Soviets taking West Berlin, what's the exact maths that would've let them argue to the Soviets "no no this isn't a threat, this is just a deterrence commitment", while allowing the Soviets to keep to Yudkowsky's strict rule to ignore all threats?

My (uninformed) sense is that this maths hasn't been figured out. Planecrash never describes it (though here is some discussion of decision theory in Planecrash). Posts in the LessWrong decision theory canon like this, this, and this seem to point to real issues around decision theories encouraging commitment races, and when Yudkowsky pipes up in the comments he's mostly falling back on the conviction that, surely, sufficiently-smart agents will find some way around mutual destruction in a commitment race (systematised winning, remember?). There are also various critiques of functional decision theory (see also Abram Demski's comment on that post acknowledging that functional decision theory is underspecified). Perhaps it all makes sense if you've worked through Appendix B7 of Yudkowsky's big decision theory paper (which I haven't actually read, let alone taken time to digest), but (a) why doesn't he reference that appendix then, and (b) I'd complain about that being hard to find, but then again we are talking about the guy who leaves the clearest and most explicit description of his philosophy scattered across an R-rated role-playing-game fanfic posted in innumerable parts on an obscure internet forum, so I fear my complaint would be falling on deaf ears anyway.

The political philosophy of dath ilan

Yudkowsky has put a lot of thought into how the world of dath ilan functions. Overall it's very coherent.

Here's a part where Keltham explains dath ilan's central management principle: everything, including every project, every rule within any company, and any legal regulation, needs to have one person responsible for it.

Keltham is informed, though he doesn't think he's ever been tempted to make that mistake himself, that overthinky people setting up corporations sometimes ask themselves 'But wait, what if this person here can't be trusted to make decisions all by themselves, what if they make the wrong decision?' and then try to set up more complicated structures than that. This basically never works. If you don't trust a power, make that power legible, make it localizable to a single person, make sure every use of it gets logged and reviewed by somebody whose job it is to review it. If you make power complicated, it stops being legible and visible and recordable and accountable and then you actually are in trouble.

Here's a part where Keltham talks about how dath ilan solves the problem of who watches the watchmen:

If you count the rehearsal festivals for it, Civilization spends more on making sure Civilization can collectively outfight the Hypothetical Corrupted Governance Military, than Civilization spends on its actual military.

Here's a part where dath ilan's choice of political system is described, which I will quote at length:

Conceptually and to first-order, the ideal that Civilization is approximating is a giant macroagent composed of everybody in the world, taking coordinated macroactions to end up on the multi-agent-optimal frontier, at a point along that frontier reflecting a fair division of the gains from that coordinated macroaction -

Well, to be clear, the dath ilani would shut it all down if actual coordination levels started to get anywhere near that. Civilization has spoken - with nearly one voice, in fact - that it does not want to turn into a hivemind.

[...]

Conceptually and to second-order, then, Civilization thinks it should be divided into a Private Sphere and a Public Shell. Nearly all the decisions are made locally, but subject to a global structure that contains things like "children may not be threatened into unpaid labor"; or "everybody no matter who they are or what they have done retains the absolute right to cryosuspension upon their death"; [...]

[...]

Directdemocracy has been tried, from time to time, within some city of dath ilan: people making group decisions by all individually voting on them. It can work if you try it with fifty people, even in the most unstructured way. Get the number of direct voters up to ten thousand people, and no amount of helpfully-intended structure in the voting process can save you.

[...]

Republics have been tried, from time to time, within some city of dath ilan: people making group decisions by voting to elect leaders who make those decisions. It can work if you try it with fifty people, even in the most unstructured way. Get the number of voters up to ten thousand people, and no amount of helpfully-intended structure in the voting process can save you.

[...]

There are a hundred more clever proposals for how to run Civilization's elections. If the current system starts to break, one of those will perhaps be adopted. Until that day comes, though, the structure of Governance is the simplest departure from directdemocracy that has been found to work at all.

Every voter of Civilization, everybody at least thirteen years old or who has passed some competence tests before then, primarily exerts their influence through delegating their vote to a Delegate.

A Delegate must have at least fifty votes to participate in the next higher layer at all; and can retain no more than two hundred votes before the marginal added influence from each additional vote starts to diminish and grow sublinearly. Most Delegates are not full-time, unless they are representing pretty rich people, but they're expected to be people interested in politics [...]. Your Delegate might be somebody you know personally and trust, if you're the sort to know so many people personally that you know one Delegate. [...]

If you think you've got a problem with the way Civilization is heading, you can talk to your Delegate about that, and your Delegate has time to talk back to you.

That feature has been found to not actually be dispensable in practice. It needs to be the case that, when you delegate your vote, you know who has your vote, and you can talk to that person, and they can talk back. Otherwise people feel like they have no lever at all to pull on the vast structure that is Governance, that there is nothing visible that changes when a voter casts their one vote. Sure, in principle, there's a decision-cohort whose votes move in logical synchrony with yours, and your cohort is probably quite large unless you're a weird person. But some part of you more basic than that will feel like you're not in control, if the only lever you have is an election that almost never comes down to the votes of yourself and your friends.

The rest of the electoral structure follows almost automatically, once you decide that this property has to be preserved at each layer.

The next step up from Delegates are Electors, full-time well-paid professionals who each aggregate 4,000 to 25,000 underlying voters from 50 to 200 Delegates. Few voters can talk to their Electors [...] but your Delegate can have some long conversations with them. [...]

Representatives aggregate Electors, ultimately 300,000 to 3,000,000 underlying votes apiece. There are roughly a thousand of those in all Civilization, at any given time, with social status equivalent to an excellent CEO of a large company or a scientist who made an outstanding discovery [...]

And above all this, the Nine Legislators of Civilization are those nine candidates who receive the most aggregate underlying votes from Representatives. They vote with power proportional to their underlying votes; but when a Legislator starts to have voting power exceeding twice that of the median Legislator, their power begins to grow sublinearly. By this means is too much power prevented from concentrating into a single politician's hands.

Surrounding all this of course are numerous features that any political-design specialist of Civilization would consider obvious:

Any voter (or Delegate or Elector or Representative) votes for a list of three possible delegees of the next layer up; if your first choice doesn't have enough votes yet to be a valid representor, your vote cascades down to the next person on your list, but remains active and ready to switch up if needed. This lets you vote for new delegees entering the system, without that wasting your vote while there aren't enough votes yet.

Anyone can at any time immediately eliminate a person from their 3-list, but it takes a 60-day cooldown to add a new person or reorder the list. The government design isn't meant to make it cheap or common to threaten your delegee with a temporary vote-switch if they don't vote your way on that particular day. The government design isn't meant to make it possible for a new brilliant charismatic leader to take over the entire government the next day with no cooldowns. It is meant to let you rapidly remove your vote from a delegee that has sufficiently ticked you off.

Once you have served as a Delegate, or delegee of any other level, you can't afterwards serve in any other branches of Governance. [...]

This is meant to prevent a political structure whose upper ranks offer promotion as a reward to the most compliant members of the ranks below, for by this dark-conspiratorial method the delegees could become aligned to the structure above rather than their delegators below.

(Most dath ilani would be suspicious of a scheme that tried to promote Electors from Delegates in any case; they wouldn't think there should be a political career ladder [...] Dath ilani are instinctively suspicious of all things meta, and much more suspicious of anything purely meta; they want heavy doses of object-level mixed in. To become an Elector you do something impressive enough, preferably something entirely outside of Governance, that Delegates will be impressed by you. You definitely don't become an Elector by being among the most ambitious and power-seeking people who wanted to climb high and knew they had to start out a lowly Delegate, who then won a competition to serve the system above them diligently enough to be selected for a list of Electors fed to a political party's captive Delegates. If a dath ilani saw a system like this, that was supposedly a democracy set in place by the will of its people, they would ask what the captive 'voters' even thought they were supposedly trying to do under the official story.)
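The vote-weighting rules in this passage are concrete enough to sketch. The thresholds (at least 50 votes to participate, diminishing returns past 200, and Legislators going sublinear past twice the median Legislator) come from the quoted text; the shape of the sublinear part does not, so the logarithmic curve below is purely an assumption, as are the function names. A minimal Python sketch:

```python
import math
import statistics

def sublinear_above(x, knee):
    """Linear up to `knee`, logarithmic beyond it (an assumed shape, not from the text).

    Chosen so the curve is continuous at the knee and the marginal gain per
    extra vote beyond it is knee / x, which keeps shrinking below 1.
    """
    return float(x) if x <= knee else knee * (1 + math.log(x / knee))

def delegate_influence(votes, minimum=50, knee=200):
    """Hypothetical influence of a Delegate holding `votes` delegated votes."""
    if votes < minimum:
        return 0.0  # below 50 votes, a Delegate can't take part in the next layer
    return sublinear_above(votes, knee)

def legislator_power(underlying_votes):
    """Hypothetical voting power of each Legislator: linear up to twice the median
    Legislator's underlying votes, then sublinear (same assumed log shape)."""
    knee = 2 * statistics.median(underlying_votes)
    return [sublinear_above(v, knee) for v in underlying_votes]

print(delegate_influence(40), delegate_influence(150), round(delegate_influence(400), 1))
# 0.0 150.0 338.6
```

A log tail is only one choice; any concave curve that matches the linear part at the knee would fit the description equally well.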

Dath ilani Legislators have a programmer's or engineer's appreciation for simplicity:

[...] each [regulation] must be read aloud by a Legislator who thereby accepts responsibility for that regulation; and when that Legislator retires a new Legislator must be found to read aloud and accept responsibility for that regulation, or it will be stricken from the books. Every regulation in Civilization, if something goes wrong with it, is the fault of one particular Legislator who accepted responsibility for it. To speak it aloud, it is nowadays thought, symbolizes the acceptance of this responsibility.

Modern dath ilani aren't really the types in the first place to produce literally-unspeakable enormous volumes of legislation that no hapless citizen or professional politician could ever read within their one lifetime let alone understand. Even dath ilani who aren't professional programmers have written enough code to know that each line of code to maintain is an ongoing cost. Even dath ilani who aren't professional economists know that regulatory burdens on economies increase quadratically in the cost imposed on each transaction. They would regard it as contrary to the notion of a lawful polity with law-abiding citizens that the citizens cannot possibly know what all the laws are, let alone obey them. Dath ilani don't go in for fake laws in the same way as Golarion polities with lots of them; they take laws much too seriously to put laws on the books just for show.
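The sunset rule in the quoted passage comes down to a small bookkeeping invariant: every regulation on the books has exactly one serving Legislator answerable for it. This is a minimal sketch. The names `RegulationBook`, `read_aloud`, and `retire` are hypothetical, and it assumes successors must be named at the moment of retirement, because the text does not mention any grace period.

```python
from __future__ import annotations


class RegulationBook:
    """Hypothetical sketch: each regulation is owned by exactly one Legislator."""

    def __init__(self) -> None:
        self.owner: dict[str, str] = {}  # regulation -> Legislator responsible for it

    def read_aloud(self, regulation: str, legislator: str) -> None:
        # Reading a regulation aloud is how a Legislator accepts responsibility.
        self.owner[regulation] = legislator

    def retire(self, legislator: str, successors: dict[str, str]) -> list[str]:
        """Retire `legislator`. `successors` maps regulations that a new Legislator
        has read aloud to that Legislator; every other orphaned regulation is stricken."""
        stricken = []
        for regulation, who in list(self.owner.items()):
            if who != legislator:
                continue
            if regulation in successors:
                self.owner[regulation] = successors[regulation]
            else:
                del self.owner[regulation]
                stricken.append(regulation)
        return stricken


book = RegulationBook()
book.read_aloud("tariff-7", "Lin")
book.read_aloud("zoning-2", "Lin")
print(book.retire("Lin", successors={"zoning-2": "Ode"}))  # ['tariff-7']
print(book.owner)                                         # {'zoning-2': 'Ode'}
```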

Finally, the Keepers are an order of people who are trained in all the most hardcore arts of rationality and who thus end up with inhuman integrity and even-handedness of judgement. They are used in many ways, for example:

There are also Keeper cutouts at key points along the whole structure of Governance - the Executive of the Military reports not only to the Chief Executive but also to an oathsworn Keeper who can prevent the Executive of the Military from being fired, demoted, or reduced in salary, just because the Chief Executive or even the Legislature says so. It would be a big deal, obviously, for a Keeper to fire this override; but among the things you buy when you hire a Keeper is that the Keeper will do what they said they'd do and not give five flying fucks about what sort of 'big deal' results. If the Legislators and the Chief Executive get together and decide to order the Military to crush all resistance, the Keeper cutout is there to ensure that the Executive of the Military doesn't get a pay cut immediately after they tell the Legislature and Chief Executive to screw off.

Also, to be clear, absolutely none of this is plot-relevant.

A system of the world

Yudkowsky proves that ideas matter: if you have ideas that form a powerful and coherent novel worldview, it doesn't matter if your main method for publicising them is ridiculously-long fanfiction, or if you dropped out of high school, or if you wear fedoras. People will still listen, and you might become (so far) the 21st century's most important philosopher.

Why is Yudkowsky so compelling? There are intellectuals like Scott Alexander who are most strongly identified by a particular method (an even-handed, epistemically rigorous, steelmanning-focused treatment of a topic), or intellectuals like Robin Hanson who are most strongly identified by a particular style (eclectic irreverence about incentive mechanisms). But Yudkowsky's hallmark is delivering an entire system of the world that covers everything from logic to what correct epistemology looks like to the maths behind rational decision-making and coordination, and comes complete with identifying the biggest threat (misaligned AI) and the structure of utopia (dath ilan). None of the major technical inventions (except some in decision theory) are original to Yudkowsky. But he's picked up the pieces, slotted them into a big coherent structure, and presented it in great depth. And Yudkowsky's system claims to come with proofs for many key bits, in the literal mathematical sense. No, you can't crack open a textbook and see everything laid out, step-by-step. But the implicit claim is: read this long essay on coherence theorems, these papers on decision theory, this 20,000-word dialogue, these sequences on LessWrong, and ideally a few fanfics too, and then you'll get it.

Does he deliver? To an impressive extent, yes. There's a lot of maths that is laid out step-by-step and does check out. There are many takes that are correct, and big structures that point in the right direction, and what seems wrong at least has depth and is usefully provocative. But dig deep enough, and there are cracks: arguments about how much coherence theorems really imply, critiques of the decision theory, and good counterarguments to the most extreme versions of Yudkowsky's AI risk thesis. You can chase any of these cracks up towers of LessWrong posts, or debate them endlessly at those parties where people stand in neat circles and exchange thought experiments about acausal trade. If you have no interaction with rationalist/LessWrong circles, I think you'd be surprised at the fraction of our generation's top mathematical-systematising brainpower that is spent on this, or that is bobbing in the waves left behind, sometimes unknowingly.

As for myself: Yudkowsky's philosophy is one of the most impressive intellectual edifices I've seen. Big chunks of it (in particular the stuff about empiricism, naturalism, and the art of genuinely trying to figure out what's true, which The Sequences especially focus on) were very formative in my own thinking. I think it's often proven itself directionally correct. But Yudkowsky's philosophy makes a claim for near-mathematical correctness, and I think there's a bit of trouble there. It has impressive mathematical depth and gets many things importantly right (e.g. Bayesianism), but despite much effort spent digesting it, I don't see it meeting the rigour bar it would need for its predictions (for example about AI risk) to be more like those of a tested scientific theory than those of a framing, worldview, or philosophy. However, I'm also very unsympathetic to a certain straitlaced science-cargo-culting attitude that recoils from Yudkowsky's uncouthness and is uninterested in speculation or theory; they would do well to study the actual history of science. I also see in Yudkowsky's philosophy choices of framing and focus that seem neither forced by reason nor entirely natural in my own worldview. I expect that lots more great work will come out within the Yudkowskian frame, whether critiques or patches, and this work could show it to be anywhere from impressive but massively misguided to almost prophetically prescient. Still, I expect even greater things if someone figures out a new, even grander and more applicable system of the world. Perhaps that person can then describe it in a weird fanfic.

45 comments


comment by Eneasz · 2025-01-03T00:02:55.784Z

I have very mixed feelings about GlowFic which are a direct result of trying to read PlaneCrash.

Pro: they are a joy for the author, and they get an author to write many hundreds of thousands of words effortlessly, which is great when you want more words from an author.

Con: the format is anti-conducive to narrative density. The joy is in creating any words, which is great for the author, but bad for audiences. Readers want a high engagement-per-word ratio.

For context, my two favorite works are HPMOR and Worth The Candle, at 660K and 1.6M words respectively. I spent 16 months podcasting a read-through/analysis of Worth The Candle. I'm not shy about reading lots of words. But when I tried to do the same for PlaneCrash I stopped after 200k words. The problem was not the decision theory or the math, which I found interesting in the brief sections they came up. The problem was plot and character development. 200,000 words is two full novels. In a single novel an author will typically build an entire world and get us to fall in love with the characters, throw them into conflict, build to a climax, drive at least one character through an entire character arc where they develop as a person, and bring an audience through emotional catharsis via a climax and resolution. Often with side-plots or supporting characters fleshed out as well. In 200,000 words this could be done TWICE (maybe 1.5 times if the books run long).

In 200,000 words of PlaneCrash we got through one major plot point and set up the next. It felt like as much action as you get roughly within 25k words normally. That's an order of magnitude more cost-per-payoff compared to any general-audience novel (including HPMOR). This is more than even I am willing to pay.

As Devon Eriksen says, every word is a bid for the attention of the reader, and each one raises the price the author is asking. They need to recoup that cost by delivering to the reader a greater amount of something the reader wants - enjoyment, excitement, insight, information, emotional release, whatever. In the GlowFic format, authors are primarily writing for their own enjoyment, and perhaps their onlooking friends. Mass audiences don't get the expected per-word payoff.

I think a serious review of PlaneCrash such as this one should acknowledge that the narrative-to-wordcount ratio is way out of proportion to what most people will accept, and this is the major flaw of the piece.

↑ comment by Nathan Young · 2025-01-07T22:55:00.993Z

Yeah I wish someone would write a condensed and less onanistic version of Planecrash. I think one could get much of the benefit in a much shorter package.

comment by Mikhail Samin (mikhail-samin) · 2024-12-29T09:31:04.200Z

Note that it's mathematically provable that if you don't follow The Solution, there exists a situation where you will do something obviously dumb

This is not true: the Shapley value is not that kind of Solution. Coherent agents can have notions of fairness outside of these constraints. You can only prove that, for a specific set of (mostly natural) constraints, the Shapley value is the only solution. But there's no Dutch-booking for notions of fairness.

One of the constraints (that the order of the players in subgames can’t matter) is actually quite artificial; if you get rid of it, there are other solutions, such as the threat-resistant ROSE value, inspired by trying to predict the ending of planecrash: https://www.lesswrong.com/posts/vJ7ggyjuP4u2yHNcP/threat-resistant-bargaining-megapost-introducing-the-rose.

your deterrence commitment could be interpreted as a threat by someone else, or vice versa

I don’t think this is right/relevant. Not responding to a threat means ensuring the other player doesn’t get more than what’s fair in expectation through their actions. The other player doing the same is just doing what they’d want to do anyway: ensuring that you don’t get more than what’s fair according to their notion of fairness.

See https://www.lesswrong.com/posts/TXbFFYpNWDmEmHevp/how-to-give-in-to-threats-without-incentivizing-them for the algorithm when the payoff is known.

read this long essay on coherence theorems, these papers on decision theory, this 20,000-word dialogue, these sequences on LessWrong, and ideally a few fanfics too, and then you'll get it

Something that I feel is missing from this review is the amount of intuitions about how minds work and optimization that are dumped at the reader. There are multiple levels at which much of what’s happening to the characters is entirely about AI. Fiction allows one to communicate models; and many readers successfully get an intuition for corrigibility before they read the corrigibility tag, or grok why optimizing for nice readable thoughts optimizes against interpretability.

I think an important part of planecrash isn’t in its lectures but in its story and the experiences of its characters. While Yudkowsky jokes about LeCun refusing to read it, it is actually arguably one of the most comprehensive ways to learn about decision theory, with many of the lessons taught through experiences of characters and not through lectures.

↑ comment by L Rudolf L (LRudL) · 2024-12-29T15:13:20.767Z

Shapley value is not that kind of Solution. Coherent agents can have notions of fairness outside of these constraints. You can only prove that, for a specific set of (mostly natural) constraints, the Shapley value is the only solution. But there's no Dutch-booking for notions of fairness.

I was talking more about "dumb" in the sense of violating the "common-sense" axioms that were established earlier (in this case including order invariance by assumption), not "dumb" in the Dutch-bookable sense, but I think elsewhere I use "dumb" as a stand-in for Dutch-bookable, so fair point.

See https://www.lesswrong.com/posts/TXbFFYpNWDmEmHevp/how-to-give-in-to-threats-without-incentivizing-them for the algorithm when the payoff is known.

Looks interesting, haven't had a chance to dig into it yet though!

Something that I feel is missing from this review is the amount of intuitions about how minds work and optimization that are dumped at the reader. There are multiple levels at which much of what’s happening to the characters is entirely about AI. Fiction allows one to communicate models; and many readers successfully get an intuition for corrigibility before they read the corrigibility tag, or grok why optimizing for nice readable thoughts optimizes against interpretability.

I think an important part of planecrash isn’t in its lectures but in its story and the experiences of its characters. While Yudkowsky jokes about LeCun refusing to read it, it is actually arguably one of the most comprehensive ways to learn about decision theory, with many of the lessons taught through experiences of characters and not through lectures.

Yeah I think this is very true, and I agree it's a good way to communicate your worldview.

I do think there are some ways in which the worlds Yudkowsky writes about are ones where his worldview wins. The Planecrash god setup, for example, is quite fine-tuned to make FDT and corrigibility important. This is almost tautological, since as a writer you can hardly do anything other than write the world as you think it works. But it still means that "works in this fictional world" doesn't transfer as much to "works in the real world", even when the fictional stuff is very coherent and well-argued.

comment by Ben Pace (Benito) · 2024-12-31T20:16:09.742Z

Curated! I think this is by far the best and easiest to read explanation of what Planecrash is like and about, thank you so much for writing it.

("Curated" is a term which here means "This just got emailed to 30,000 people, of whom typically half open the email, and for ~1 week it gets shown at the top of the frontpage to anyone who hasn't read it.")

I love the focus on competence (it reminds me of Eliezer's own description of Worm). I think this doesn't quite discuss 'lawfulness' as Eliezer thinks of it, but does hit tons of points of that part of his philosophy.

It's fun that you spent most of this review on Eliezer's philosophy, and not the plot of the story, and then end with "Also, to be clear, absolutely none of this is plot-relevant." Though I do somewhat wish there was a section here that reviews the plot, for those of us who are curious about what happens in the book without reading 1M+ words.

Having read Eliezer's writing for over a decade, I think I understand it fairly well, but there are still ways of looking at it here that I hadn't crystallized before.

↑ comment by Dweomite · 2025-01-04T07:37:55.298Z

Though I do somewhat wish there was a section here that reviews the plot, for those of us who are curious about what happens in the book without reading 1M+ words.

I think I could take a stab at a summary.

This is going to elide most of the actual events of the story to focus on the "main conflict" that gets resolved at the end of the story. (I may try to make a more narrative-focused outline later if there's interest, but this is already quite a long comment.)

As I see it, the main conflict (the exact nature of which doesn't become clear until quite late) is mainly driven by two threads that develop gradually throughout the story... (major spoilers)

The first thread is Keltham's gradual realization that the world of Golarion is pretty terrible for mortals, and is being kept that way by the power dynamics of the gods.

The key to understanding these dynamics is that certain gods (and coalitions of gods) have the capability to destroy the world. However, the gods all know (Eliezer's take on) decision theory, so you can't extort them by threatening to destroy the world. They'll only compromise with you if you would honestly prefer destroying the world to the status quo, if those were your only two options. (And they have ways of checking.) So the current state of things is a compromise to ensure that everyone who could destroy the world, prefers not to.

Keltham would honestly prefer destroying Golarion (primarily because a substantial fraction of mortals currently go to hell and get tortured for eternity), so he realizes that if he can seize the ability to destroy the world, then the gods will negotiate with him to find a mutually-acceptable alternative.

Keltham speculates (though it's only speculation) that he may have been sent to Golarion by some powerful but distant entity from the larger multiverse, as the least-expensive way of stopping something that entity objects to.

The second thread is that Nethys (god of knowledge, magic, and destruction) has the ability to see alternate versions of Golarion and to communicate with alternate versions of himself, and he's seen several versions of this story play out already, so he knows what Keltham is up to. Nethys wants Keltham to succeed, because the new equilibrium that Keltham negotiates is better (from Nethys' perspective) than the status quo.

However, it is absolutely imperative that Nethys does not cause Keltham to succeed, because Nethys does not prefer destroying the world to the status quo. If Keltham only succeeds because of Nethys' interventions, the gods will treat Keltham as Nethys' pawn, and treat Keltham's demands as a threat from Nethys, and will refuse to negotiate.

So Nethys can only intervene in ways that all of the major gods will approve of (in retrospect). So he runs around minimizing collateral damage, nudges Keltham towards being a little friendlier in the final negotiations, and very carefully never removes any obstacle from Keltham's path until Keltham has proven that he can overcome it on his own.

Nethys considers it likely that this whole situation was intentionally designed as some sort of game, by some unknown entity. (Partly because Keltham makes several successful predictions based on dath ilani game tropes.)

At the end of the story, Keltham uses an artifact called the starstone to turn himself into a minor god, then uses his advanced knowledge of physics (unknown to anyone else in the setting, including the gods) to create weapons capable of destroying the world, announces that that's his BATNA, and successfully negotiates with the rest of the gods to shut down hell, stop stifling mortal technological development, and make a few inexpensive changes to improve overall mortal quality-of-life. Keltham then puts himself into long-term stasis to see if the future of this world will seem less alienating to him than the present.

comment by quiet_NaN · 2024-12-28T16:01:43.705Z

One big aspect of Yudkowskian decision theory is how to respond to threats. Following causal decision theory means you can neither make credible threats nor commit to deterrence to counter threats. Yudkowsky endorses not responding to threats to avoid incentivising them, while also having deterrence commitments to maintain good equilibria. He also implies this is a consequence of using a sensible functional decision theory. But there's a tension here: your deterrence commitment could be interpreted as a threat by someone else, or vice versa.

I have also noted this tension. Intuitively, one might think that it depends on the morality of the action -- the robber who threatens to blow up a bank unless he gets his money might be seen as a threat, while a policy of blowing up your own banks in case of robberies might be seen as a deterrence commitment.

However, this cannot be it, because decision theory works with any utility function.

The other idea is that 'to make threats' is one of these irregular verbs: I make credible deterrence commitments, you show a willingness to escalate, they try to blackmail me with irrational threats. This is of course just as silly in the context of game theory.

One axis of difference might be if you physically restrict your own options to prevent you from not following through on your threat (like a Chicken player removing their steering wheel, or that doomsday machine from Dr. Strangelove). But this merely makes a difference for people who are known to follow causal decision theory where they would try to maximize the utility in whatever branch of reality they find themselves in. From my understanding, the adherents of functional decision theory do not need to physically constrain their options -- they would be happy to burn the world in one branch of reality if that was the dominant strategy before their opponent had made their choice.

Consider the ultimatum game (which gets covered in Planecrash, naturally), where one party makes a proposal on how to split $10 and the other party can either accept (gaining their share) or reject it (in which case neither party gains anything). In Planecrash, the dominant strategy is presented as rejecting unfair allocations with some probability so that the expected value of the proposing party is lower than if they had proposed a fair split. However, this hinges on the concept of fairness. If each dollar has the same utility to every participant, then a 50-50 split seems fair. But in a more general case, the utilities of both parties might be utterly incomparable, or the effort of both players might be very different -- an isomorphic situation is a silk merchant encountering the first of possibly multiple highwaymen, and having to agree on a split, with both parties having the option to burn all the silk if they don't agree. Agreeing to a 50-50 split each time could easily make the business model of the silk merchant impossible.

"This is my strategy, and I will not change it no matter what, so you better adapt your strategy if you want to avoid fatal outcomes" is an attitude likely to lead to a lot of fatal outcomes.
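The rejection strategy described in this comment can be made concrete. This is a minimal sketch, not the story's exact procedure. The responder accepts an unfair offer with probability just under `fair_share / proposer_take`, which leaves the proposer's expected take slightly below the fair share. `epsilon` is an assumed tuning constant. `fair_share` is an explicit parameter because, as the comment argues, everything hinges on it.

```python
from typing import Optional


def acceptance_probability(offer: float, total: float = 10.0,
                           fair_share: Optional[float] = None,
                           epsilon: float = 0.01) -> float:
    """Chance that the responder accepts `offer` (the responder's own cut).

    `fair_share` is what the responder thinks the proposer fairly deserves;
    it defaults to an even split, which is exactly the assumption at issue.
    """
    if fair_share is None:
        fair_share = total / 2
    proposer_take = total - offer
    if proposer_take <= fair_share:
        return 1.0  # fair or generous offers are always accepted
    # Accept just rarely enough that greed lowers the proposer's expected take.
    return (fair_share / proposer_take) * (1.0 - epsilon)


def proposer_expected_take(offer: float, total: float = 10.0,
                           fair_share: Optional[float] = None) -> float:
    return acceptance_probability(offer, total, fair_share) * (total - offer)


for offer in [5, 4, 2, 0]:
    print(offer, round(proposer_expected_take(offer), 2))
# 5 5.0
# 4 4.95
# 2 4.95
# 0 4.95
```

Passing a different `fair_share`, such as the share a silk merchant thinks a highwayman deserves, changes every acceptance probability. This illustrates the comment's point: the mechanism is only as good as the agreed notion of fairness.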

↑ comment by Ben Livengood (ben-livengood) · 2024-12-28T17:20:52.984Z

I think it might be as simple as not making threats against agents with compatible values.

In all of Yudkowsky's fiction the distinction between threats (and unilateral actions removing consent from another party) and deterrence comes down to incompatible values.

The baby-eating aliens are denied access to a significant portion of the universe (a unilateral harm to them) over irreconcilable values differences. Harry Potter transfigures Voldemort away semi-permanently non-consensually because of irreconcilable values differences. Carissa and friends deny many of the gods their desired utility over value conflict.

Planecrash fleshes out the metamorality with the presumed external simulators who only enumerate the worlds satisfying enough of their values, with the negative-utilitarians having probably the strongest "threat" acausally by being more selective.

Cooperation happens where there is at least some overlap in values and so some gains from trade to be made. If there are no possible mutual gains from trade then the rational action is to defect at a per-agent cost up to the absolute value of the negative utility of letting the opposing agent achieve their own utility. Not quite a threat, but a reality about irreconcilable values.

↑ comment by Martin Randall (martin-randall) · 2024-12-29T21:41:22.786Z

... the effort of both players might be very different ...

Covered in the glowfic. Here is how it goes down in Dath Ilan:

The next stage involves a complicated dynamic-puzzle with two stations, that requires two players working simultaneously to solve. After it's been solved, one player locks in a number on a 0-12 dial, the other player may press a button, and the puzzle station spits out jellychips thus divided.

The gotcha is, the 2-player puzzle-game isn't always of equal difficulty for both players. Sometimes, one of them needs to work a lot harder than the other.

Now things start to heat up. There's an obvious notion that if one player worked harder than the other, they should get more jellychips. But how much more? Can you quantify how hard the players are working, and split the jellychips in proportion to that? The game obviously seems to be pointing in the direction of quantifying how hard the players are working, relative to each other, but there's no obvious way to do that.

Somebody proposes that each player say, on a scale of 0 to 12, how hard they felt like they worked, and then the jellychips should be divided in whatever ratio is nearest to that ratio.

The solution relies on people being honest. This is, perhaps, less of a looming unsolvable problem for dath ilani children than for adults in Golarion.
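The children's proposal is mechanical enough to write down. This is a sketch under two assumptions. First, 12 jellychips come out per solved box, as in the worked example further down this comment. Second, "the ratio nearest to that ratio" means the dial setting whose share is closest to the reported share. The function name `effort_split` and the tie-breaking rule are illustrative choices, not from the story.

```python
from __future__ import annotations

from fractions import Fraction

DIAL_MAX = 12  # the dial runs 0-12, and 12 jellychips are split


def effort_split(effort_a: int, effort_b: int, total: int = DIAL_MAX) -> tuple[int, int]:
    """Split `total` chips between A and B in the integer ratio nearest to
    their self-reported efforts (each on a 0-12 scale)."""
    if effort_a + effort_b == 0:
        target = Fraction(1, 2)  # assumption: nobody reports any effort, so split evenly
    else:
        target = Fraction(effort_a, effort_a + effort_b)
    # Choose the dial setting whose share for A lies closest to A's reported share.
    # min() keeps the first best setting, so ties go to the smaller number (assumed).
    a_chips = min(range(total + 1), key=lambda x: abs(Fraction(x, total) - target))
    return a_chips, total - a_chips


print(effort_split(3, 1))  # (9, 3)
print(effort_split(5, 5))  # (6, 6)
```

With reported efforts of 3 and 1, this gives the nine-to-three division discussed below.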

And in Golarion:

"...I don't see how that game is any different than this one? Unless you mean there's not the reputational element."

They just ignore the effort difference and go for 50:50 splits. Fair over the long term, robust to deception and self-deception, low cognitive effort.

The Dath Ilani kids are wrong according to Shapley Values (confirmed as the Dath Ilan philosophy here). Let's suppose that Aylick and Brogue are paired up on a box where Aylick had to put in three jellychips worth of effort and Brogue had to put in one jellychip worth of effort. Then their total gains from trade are 12-4=8. The Shapley division is then 4 each, which can be achieved as follows:

- Aylick gets seven jellychips. Less her three units of effort, her total reward is four.

- Brogue gets five jellychips. Less his one unit of effort, his total reward is four.

The Dath Ilan Child division is nine to three, which I think is only justified with the politician's fallacy. But they are children.
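The Shapley arithmetic above can be checked with a brute-force sketch. It makes two assumptions, as the comment implicitly does: neither player can open a box alone, so single-player coalitions are worth 0, and values are measured in jellychips net of effort. The names `shapley` and `net_value` are illustrative.

```python
from fractions import Fraction
from itertools import permutations


def shapley(players, v):
    """Exact Shapley values: each player's marginal contribution, averaged over
    every order in which the players could join. `v` maps a frozenset to a value."""
    orders = list(permutations(players))
    phi = {p: Fraction(0) for p in players}
    for order in orders:
        coalition = frozenset()
        for p in order:
            phi[p] += v(coalition | {p}) - v(coalition)
            coalition = coalition | {p}
    return {p: phi[p] / len(orders) for p in players}


EFFORT = {"Aylick": 3, "Brogue": 1}  # effort costs, in jellychips
CHIPS = 12                          # chips released only if both players work


def net_value(coalition):
    # Alone, nobody can solve the box; together they net 12 - 3 - 1 = 8.
    return CHIPS - sum(EFFORT.values()) if len(coalition) == 2 else 0


net = shapley(list(EFFORT), net_value)
chips = {p: net[p] + EFFORT[p] for p in EFFORT}  # hand back each player's effort
print({p: int(x) for p, x in net.items()})      # {'Aylick': 4, 'Brogue': 4}
print({p: int(x) for p, x in chips.items()})    # {'Aylick': 7, 'Brogue': 5}
```

By the same accounting, the children's 9:3 split leaves Aylick 9 - 3 = 6 and Brogue 3 - 1 = 2 on net.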

↑ comment by khafra · 2024-12-30T11:19:51.071Z

AFAICT, in the Highwayman example, if the would-be robber presents his ultimatum as "give me half your silk or I burn it all," the merchant should burn it all, same as if the robber says "give me 1% of your silk or I burn it all."

But a slightly more sophisticated highwayman might say "this is a dangerous stretch of desert, and there are many dangerous, desperate people in those dunes. I have some influence with most of the groups in the next 20 miles. For x% of your silk, I will make sure you are unmolested for that portion of your travel."

Then the merchant actually has to assign probabilities to a bunch of events, calculate Shapley values, and roll some dice for his mixed strategy.

comment by quiet_NaN · 2024-12-28T17:00:21.469Z

Some additional context.

This fantasy world is copied from a role-playing game setting—a fact I discovered when Planecrash literally linked to a Wiki article to explain part of the in-universe setting.

The world of Golarion is a (or the?) setting of the Pathfinder role-playing game, which is a fork of the D&D 3.5 rules[1] (but notably different from Forgotten Realms, which is owned by WotC/Hasbro). The core setting is defined in some twenty-odd books which cover everything from the political landscape in dozens of polities to detailed rules for how magic and divine spells work. From what I can tell, the Planecrash authors mostly take what is given and fill in the blanks in a way which makes the world logically coherent, in the same way Eliezer did with the world of Harry Potter in HPMOR.

Also, Planecrash (aka Project Lawful) is a form of collaborative writing (or free form roleplaying?) called glowfic. In this case, EY writes Keltham and the setting of dath ilan (which exists only in Keltham's mind, as far as the plot is concerned), plus a few other minor characters. Lintamande writes Carissa and most of the world -- it is clear that she is an expert in the Pathfinder setting -- as well as most of the other characters. At some point, other writers (including Alicorn) join and write minor characters. If you have read through planecrash and want to read more from Eliezer in that format, glowfic.com has you covered. For example, here is a story which sheds some light on the shrouded past of dath ilan (plus more BDSM, of course).

[1] D&D 3 was my first exposure to D&D (via BioWare's Neverwinter Nights), so it is objectively the best edition. Later editions are mostly WotC milking the franchise with a complete overhaul every few years. AD&D 2 is also fine, if a bit less streamlined -- THAC0, armor classes going negative and all that.

↑ comment by FiftyTwo · 2025-01-23T11:39:38.588Z

The setting for Planecrash is called "Glowlarion" sometimes and is shared with a lot of other glowfics, and makes some systematic changes from the original Paizo canon, mostly in terms of making it more similar to real life history of the same period, more internally coherent and with the gods and metaphysics being more impactful.

There's a brief outline of some of the changes here: https://docs.google.com/document/d/1ZGaV1suMeHrDlsYovZbG4c4tdMVgdq0HzgRX0HUGYkU/edit?tab=t.0

These are the two oldest non-crossover threads I can find: https://www.glowfic.com/posts/3456 by lintamande and apprenticebard (who wrote Korva in planecrash) and https://www.glowfic.com/posts/3538 by lintamande and Alicorn (who wrote Luminosity, the HPMOR-style reworking of Twilight).

Incomplete list of other notable threads: https://glowficwiki.noblejury.com/books/dungeons-and-dragons/page/notable-threads

comment by gjm · 2024-12-28T00:17:07.317Z

The Additional Questions Elephant (first image in article, "image credit: Planecrash") is definitely older than Planecrash; see e.g. https://knowyourmeme.com/photos/1036583-reaction-images for an instance from 2015.

↑ comment by FeepingCreature · 2024-12-28T09:06:00.804Z

Original source, to my knowledge. (July 1st, 2014)

"So long, Linda! I'm going to America!"

comment by Archimedes · 2024-12-29T04:12:52.584Z

This review led me to find the following podcast version of Planecrash. I've listened to the first couple of episodes and the quality is quite good.

comment by Martin Randall (martin-randall) · 2025-01-02T04:33:06.705Z

Hopefully this is the place for planecrash-inspired fanfic based on understanding Shapley values with Venn diagrams.

Keltham: And so long as that gets transcripted and sent out soon enough, hopefully nobody from Chelish Governance gives me a completely baffled look if I say that my baseline fair share of an increase in Chelish production ought to be around roughly the amount that Chelish production would've increased by adding me in the alternate world where the country had randomly half of its current people, or gets confused and worried if I say that a proposed contract clause would be annoying enough in a final offer to make me visibly generate a random number between 0 and 999 and walk out on Cheliax if the number is 0.

(time passes)

Lrilatha: Ah, Keltham. We understand that you expect to get about half the shares in Project Lawful based on a fair division of spoils, calculated as your marginal value as added to all possible subsets of actors.

Keltham: If the word 'spoils' is translating at all correctly, it has odd connotations for a scientific project. But that's exactly right. I will reject lesser splits with a probability corresponding to how disproportionately they reserve the gains for you.
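Keltham's rejection rule (and the 1-in-1000 walkout in his earlier remark) can be made concrete. One reading, assumed here rather than quoted from the story: accept an offer below your fair share with a probability chosen so that the proposer's expected take falls below what a fair offer would have left them, so lowballing never pays in expectation. The function name and the `margin` parameter are illustrative:

```python
def acceptance_probability(total: float, fair_share: float, offered: float,
                           margin: float = 0.01) -> float:
    """Chance of accepting `offered` out of `total`, given our `fair_share`.

    If we accept, the proposer keeps total - offered. Accepting with probability
    (1 - margin) * (total - fair_share) / (total - offered) keeps the proposer's
    expected take below the total - fair_share that a fair offer leaves them.
    """
    if not 0 <= fair_share <= total:
        raise ValueError("fair_share must lie between 0 and total")
    if offered >= fair_share:
        return 1.0  # fair or generous offers are always accepted
    return (1 - margin) * (total - fair_share) / (total - offered)


# Splitting 10 with a fair share of 5: an offer of 4 is accepted 82.5% of the
# time, so the proposer expects 0.825 * 6 = 4.95, less than the 5 of a fair split.
print(acceptance_probability(10, 5, 4))
```

The `margin` makes the penalty strict: without it, the proposer would be exactly indifferent between a fair offer and a lowball.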

Lrilatha: There's no need for that. We accept your terms. Here is a contract to that effect between you, Cheliax, and other parties.

Keltham: Oh. I was expecting more ... what was the word again ... "haggling".

Lrilatha: Asmodeus is a god of both negotiations and contracts. Today we offer the second.

Keltham: Please assure me that there are meant to be no unexpected unpleasant consequences for myself, as seen from my individual perspective. I mean, I probably didn't have to say that, but why trust what you can verify?

Lrilatha: I do not expect any of the terms of this contract to have any unexpected unpleasant consequences for yourself, relative to the fair division of spoils, calculated as discussed. You should be aware, if you are not, that there are some devils with veto rights over projects of this form, which affects that division.

Keltham: Asmodia, why don't you chart this out for us and anyone reading the transcripts to show how this affects the fair division? It will be a good opportunity to refresh their understanding.

Asmodia: Certainly. Let's assume that there is one devil with veto power, so the actors are Keltham, Cheliax, and the Veto Devil. Then the value of each coalition of actors is:

- Keltham only: minimal

- Cheliax only: current Chelish production

- Veto Devil only: minimal

- Keltham + Cheliax: current Chelish production, because the Veto Devil vetoes the project.

- Cheliax + Veto Devil: current Chelish production

- Veto Devil + Keltham: minimal

- Keltham + Cheliax + Veto Devil: current Chelish production plus the gains from Project Lawful, whatever they are...

Carissa: Let's say a million resurrection diamonds.

Asmodia: So as you can see from this diagram, the fair division is that Keltham, Cheliax, and the Veto Devil all get three hundred and thirty-three thousand, three hundred and thirty-three resurrection diamonds, plus an equal share of the final diamond. Cheliax retains its rights to current Chelish production.
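Asmodia's answer can be checked directly. This sketch treats "minimal" as 0, uses a hypothetical P = 5,000,000 for current Chelish production (the story gives no figure), and averages each actor's marginal contribution over all 3! = 6 orders in which the actors could join:

```python
from fractions import Fraction
from itertools import permutations
from math import factorial

PLAYERS = ("Keltham", "Cheliax", "Veto Devil")
P = Fraction(5_000_000)  # hypothetical current Chelish production; the story gives no number
G = Fraction(1_000_000)  # Carissa's "a million resurrection diamonds"


def value(coalition: frozenset) -> Fraction:
    """Asmodia's table, with "minimal" taken as 0."""
    v = P if "Cheliax" in coalition else Fraction(0)
    if coalition == frozenset(PLAYERS):  # only the full coalition gets the gains
        v += G
    return v


def shapley(players, value):
    """Exact Shapley values: average marginal contribution over all join orders."""
    shares = {p: Fraction(0) for p in players}
    for order in permutations(players):
        joined = frozenset()
        for p in order:
            shares[p] += value(joined | {p}) - value(joined)
            joined = joined | {p}
    n_orders = factorial(len(players))
    return {p: s / n_orders for p, s in shares.items()}


for name, share in shapley(PLAYERS, value).items():
    print(f"{name}: {share}")
```

This prints 1000000/3 for Keltham and the Veto Devil and 16000000/3 (that is, P + 1000000/3) for Cheliax. Each actor adds the gains G only when joining last, which happens in 2 of the 6 orders, hence G/3 each; Cheliax also keeps P because it is the only actor whose presence produces current Chelish production.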

Keltham: That's right. But why would a devil get to veto an agreement between me and Cheliax?

Lrilatha: The veto provisions are part of an old contract between Hell and Cheliax, in exchange for Hell's assistance in certain matters. Both parties expected it not to come up, since mortals in Golarion do not attempt to bargain using the fair division of spoils as you calculate it, and prefer to "haggle". But here we are.

Asmodeus is the God of Trickery, so Lrilatha is glossing over the fact that there are a very large number of devils on the Committee for Considering Shapley Value Agreements with Lawful Clueless Outsiders, all of whom get a veto. Not an infinite number, of course: unlike the Abyss, Hell is reassuringly finite, and it helps to be able to sign contracts in finite time. But certainly all of the Contract Devils are on it, because why wouldn't they be?
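The size of the veto problem is easy to quantify. If the gains G appear only when Keltham, Cheliax, and every one of d veto-holding devils agree, the gains form a unanimity game among d + 2 parties, and the Shapley value splits G equally: each party gets G/(d + 2). This sketch ignores current Chelish production and computes Keltham's share with the subset form of the Shapley formula; the devil names are hypothetical:

```python
from fractions import Fraction
from itertools import combinations
from math import factorial

G = Fraction(1_000_000)  # gains from Project Lawful, per Carissa


def shapley_value(player, players, value):
    """Subset form of the Shapley value: sum over coalitions S without `player`
    of |S|! (n - |S| - 1)! / n! * (v(S + player) - v(S)).
    """
    others = [p for p in players if p != player]
    n = len(players)
    total = Fraction(0)
    for k in range(len(others) + 1):
        weight = Fraction(factorial(k) * factorial(n - k - 1), factorial(n))
        for subset in combinations(others, k):
            s = frozenset(subset)
            total += weight * (value(s | {player}) - value(s))
    return total


def keltham_share(num_veto_devils):
    """Keltham's share of the gains when every devil on the committee holds a veto."""
    players = ["Keltham", "Cheliax"] + [f"Devil {i}" for i in range(num_veto_devils)]
    everyone = frozenset(players)

    def gains(coalition):
        return G if coalition == everyone else Fraction(0)

    return shapley_value("Keltham", players, gains)


for d in (1, 3, 10):
    print(d, keltham_share(d))  # 1000000/3, then 200000, then 250000/3
```

Every additional veto-holder dilutes everyone else's share of the gains, which is exactly the incentive the committee exploits.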

I don't know how the story ends. It seems like a fair division should be robust to groups that structure themselves so as to maximize their joint share of a future fair division. Perhaps Keltham spontaneously invents one. But I don't know what it is (other than "lol, no I'm not giving almost all the gains to the Veto Committee, go to Hell").

comment by a_boring_centrist (jeremy-1) · 2024-12-31T22:46:00.414Z

Planecrash is one of the best and one of the worst books I've ever read. The characters are intelligent in a way I've only seen elsewhere in HPMOR and the Naruto fanfic Waves Arisen, which I suspect was written by Yudkowsky under a pseudonym. It has top-notch humour and world-building as well, although those aren't as unparalleled as the character intelligence is. But on the other hand, it's an incredibly and needlessly long story, which happens naturally from being a forum RP. Vast swathes could be cut with minimal loss; I bet you could condense the story to 1/3 of its length with smart editing while losing almost nothing of value.

If you deeply loved HPMOR, I'd recommend Planecrash. But even then I'd recommend skimming large chunks of it.

comment by lsusr · 2024-12-28T00:35:11.065Z

I really like your post. Good how-to manuals like yours are rare and precious.

↑ comment by L Rudolf L (LRudL) · 2024-12-28T13:36:58.913Z

Thanks for this link! That's a great post.

comment by Dmitry Vaintrob (dmitry-vaintrob) · 2024-12-27T22:41:19.918Z

Thank you for writing this! This is my favorite thing on this site in a while.

comment by Carl Feynman (carl-feynman) · 2024-12-27T20:03:16.927Z

All the pictures are missing for me.

↑ comment by gjm · 2024-12-27T20:39:54.689Z

They're present on the original for which this is a linkpost. I don't know what the mechanism was by which the text was imported here from the original, but presumably whatever it was it didn't preserve the images.

↑ comment by L Rudolf L (LRudL) · 2024-12-27T21:02:07.208Z

I copy-pasted markdown from the dev version of my own site, and the images showed up fine on my computer because I was running the dev server; images now fixed to point to the Substack CDN copies that the Substack version uses. Sorry for that.

comment by Eli Tyre (elityre) · 2024-12-29T01:07:16.090Z

Can someone tell me if this post contains spoilers?

Planecrash might be the single work of fiction for which I most want to avoid spoilers, of either the plot or the finer points of technical philosophy.

↑ comment by ryan_greenblatt · 2024-12-29T01:17:25.242Z

It has spoilers, though I don't think they're very big ones.

↑ comment by eggsyntax · 2024-12-31T21:55:37.172Z

It's definitely spoilerful by my standards. I do have unusually strict standards for what counts as spoilers, but it sounds like in this case you're wanting to err on the side of caution.

Giving a quick look back over it, I don't see any spoilers for anything past book 1 ('Mad Investor Chaos and the Woman of Asmodeus').

↑ comment by Eli Tyre (elityre) · 2024-12-30T06:35:23.282Z

A different way to ask the question: what, specifically, is the last part of the text that is spoiled by this review?

↑ comment by momom2 (amaury-lorin) · 2025-01-05T21:37:19.786Z

Having read Planecrash, I do not think there is anything in this review that I would not have wanted to know before reading the work (which is the important part of what people consider "spoilers" for me).

↑ comment by davekasten · 2024-12-30T06:09:32.465Z

Everyone who's telling you there aren't spoilers in here is well-meaning, but wrong. But to justify why I'm saying that is also spoilery, so to some degree you have to take this on faith.

(Rot13'd for those curious about my justification: Bar bs gur znwbe cbvagf bs gur jubyr svp vf gung crbcyr pna, vs fhssvpvragyl zbgvingrq, vasre sne zber sebz n srj vfbyngrq ovgf bs vasbezngvba guna lbh jbhyq anviryl cerqvpg. Vs lbh ner gryyvat Ryv gung gurfr ner abg fcbvyref V cbyvgryl fhttrfg gung V cerqvpg Nfzbqvn naq Xbein naq Pnevffn jbhyq fnl lbh ner jebat.)

↑ comment by L Rudolf L (LRudL) · 2024-12-29T15:05:24.062Z

It doesn't contain anything I would consider a spoiler.

If you're extra scrupulous, the closest things are:

- A description of a bunch of stuff that happens very early on to set up the plot

- One revelation about the character development arc of a non-major character

- A high-level overview of technical topics covered, and commentary on the general Yudkowskian position on them (with links to precise Planecrash parts covering them), but not spoiling any puzzles or anything that's surprising if you've read a lot of other Yudkowsky

- A bunch of long quotes about dath ilani governance structures (but these are not plot relevant to Planecrash at all)

- A few verbatim quotes from characters, which I guess would technically let you infer the characters don't die until they've said those words?

↑ comment by Ben Pace (Benito) · 2024-12-31T20:08:22.847Z

As someone who's only read like 60% of the first book, the only spoiler to me was in the paragraph about Carissa.

↑ comment by NoriMori1992 · 2025-01-31T04:47:44.376Z

I think if you're describing planecrash as "the single work of fiction for which I most want to avoid spoilers", you probably just shouldn't read any reviews of it or anything about it until after you've read it.

If you do read this review beforehand, you should avoid the paragraph that begins with "By far the best …" (The paragraph right before the heading called "The competence".) That mentions something that I definitely would have considered a spoiler if I'd read it before I read planecrash.

Aside from that, it's hard to answer without knowing what kinds of things you consider spoilers and what you already know about planecrash.

comment by anithite (obserience) · 2024-12-27T20:54:00.280Z

Your image links are all of the form: http://localhost:8000/out/planecrash/assets/Screenshot 2024-12-27 at 00.31.42.png

Whatever process is generating the markdown for this, those links can't possibly work.

comment by johnlawrenceaspden · 2025-01-06T17:29:19.753Z

Thank you so much for this! I tried to read this thing once before, and didn't get into it at all, despite pretty much loving everything Eliezer has ever written, which experience I now put down to something about the original presentation.

I'm now about 10% of the way through, and loving it. Two days well spent, see y'all in a fortnight or so.....

For anyone in a similar position, I found (and am currently reading):

https://akrolsmir-glowflow-streamlit-app-79n743.streamlit.app

which is much more to my taste.

And I also found https://www.mikescher.com/blog/29/Project_Lawful_ebook, of which the project-lawful-avatars-moreinfo.epub version looks like the one I would prefer to read (but haven't actually tried reading more than a couple of pages of yet).

I actually think that the avatars are really important to the feel of the story, and have been carefully chosen, and I wouldn't want to be without them.

↑ comment by johnlawrenceaspden · 2025-01-18T19:31:27.069Z

Have ended up reading project-lawful-avatars-moreinfo.epub as the best of the options. 70 pages into a total of 183 (very long) pages. It is still great. Enjoying it immensely.

↑ comment by johnlawrenceaspden · 2025-02-16T16:20:51.552Z

Finally finished it, took about a month-and-a-half at around 3 hours a day. It kind of ate my life. I enjoyed it immensely.

I think the last thing that I liked as much as this was 'Game of Thrones'. I think it's probably a Great Work of Literature. Shame the future isn't going to be long enough for it to get recognised as such...

I wouldn't have wished it shorter. There were a couple of 'Sandbox' chapters that I'd probably cut, in the same way that Lord of the Rings could do without Tom Bombadil, but the main thing is well-paced and consistently both fun and thought-provoking.

It turned out that my preferred way to read it was to unzip project-lawful-avatars-moreinfo.epub. In the unzipped structure all the chapters become plain html files with avatars included, which can be easily read in firefox.
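An EPUB is a ZIP archive, so this reading method needs nothing more than an unzip tool. A Python sketch using the standard-library `zipfile` module; `unpack_epub` is a hypothetical helper, and it assumes the chapters are stored as `.html`/`.htm`/`.xhtml` files (the exact internal layout of project-lawful-avatars-moreinfo.epub isn't described here):

```python
import zipfile
from pathlib import Path


def unpack_epub(epub_path, out_dir):
    """Extract an EPUB (a ZIP archive) and return its HTML chapter files, sorted by path."""
    out = Path(out_dir)
    with zipfile.ZipFile(epub_path) as archive:
        archive.extractall(out)
    return sorted(
        p for p in out.rglob("*")
        if p.is_file() and p.suffix.lower() in {".html", ".htm", ".xhtml"}
    )


# Usage (file name from the comment above; the output directory is arbitrary):
# chapters = unpack_epub("project-lawful-avatars-moreinfo.epub", "project-lawful")
# import webbrowser; webbrowser.open(chapters[0].resolve().as_uri())
```

Sorting by path only approximates reading order; the EPUB's own spine (listed in its `.opf` file) is authoritative if the file names don't sort cleanly.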

comment by Joseph Miller (Josephm) · 2025-01-01T14:28:49.302Z

Has someone made an ebook that I can easily download onto my kindle?

I'm unclear if a good ebook should include all the pictures from the original version.

↑ comment by dirk (abandon) · 2025-01-03T00:26:33.768Z

There's https://www.mikescher.com/blog/29/Project_Lawful_ebook (which includes versions both with and without the pictures, so take your pick; the pictures are used in-story sometimes but it's rare enough you can IMO skip them without much issue, if you'd rather).

↑ comment by Joseph Miller (Josephm) · 2025-01-07T01:26:47.240Z

Wow, that's great, thanks. @L Rudolf L, you should link this in this post.

↑ comment by L Rudolf L (LRudL) · 2025-01-07T10:53:03.329Z

Thanks for the heads-up, that looks very convenient. I've updated the post to link to this instead of the scraper repo on GitHub.

comment by L Rudolf L (LRudL) · 2024-12-27T21:01:15.360Z

Image issues now fixed, apologies for that.

comment by NoriMori1992 · 2025-01-31T04:24:24.158Z

Neither there nor in Cheliax's world are there really any lumbering bureaucracies that do insane things for inscrutable bureaucratic reasons; all the organisations depicted are all remarkably sane. Important positions are almost always filled by the smart, skilled, and hardworking. Decisions aren't made because of emotional outbursts. Instead, lots of agents go around optimising for their goals by thinking hard about them.

(I'm spoiler tagging my entire response to this because I don't know what kinds of spoilers are acceptable in this context and I'd rather err on the side of caution.)