There are no coherence theorems

post by Dan H (dan-hendrycks), EJT (ElliottThornley) · 2023-02-20T21:25:48.478Z

[Written by EJT as part of the CAIS Philosophy Fellowship. Thanks to Dan for help posting to the Alignment Forum]

Introduction

For about fifteen years, the AI safety community has been discussing coherence arguments. In papers and posts on the subject, it’s often written that there exist 'coherence theorems' which state that, unless an agent can be represented as maximizing expected utility, that agent is liable to pursue strategies that are dominated by some other available strategy. Despite the prominence of these arguments, authors are often a little hazy about exactly which theorems qualify as coherence theorems. This is no accident. If the authors had tried to be precise, they would have discovered that there are no such theorems.

I’m concerned about this. Coherence arguments seem to be a moderately important part of the basic case for existential risk from AI. To spot the error in these arguments, we only have to look up what cited ‘coherence theorems’ actually say. And yet the error seems to have gone uncorrected for more than a decade.

More detail below.[1]

Coherence arguments

Some authors frame coherence arguments in terms of ‘dominated strategies’. Others frame them in terms of ‘exploitation’, ‘money-pumping’, ‘Dutch Books’, ‘shooting oneself in the foot’, ‘Pareto-suboptimal behavior’, and ‘losing things that one values’ (see the Appendix for examples).

In the context of coherence arguments, each of these terms means roughly the same thing: a strategy A is dominated by a strategy B if and only if A is worse than B in some respect that the agent cares about and A is not better than B in any respect that the agent cares about. If the agent chooses A over B, they have behaved Pareto-suboptimally, shot themselves in the foot, and lost something that they value. If the agent’s loss is someone else’s gain, then the agent has been exploited, money-pumped, or Dutch-booked. Since all these phrases point to the same sort of phenomenon, I’ll save words by talking mainly in terms of ‘dominated strategies’.
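The definition of dominance above can be sketched in a few lines of Python. This is only an illustration: it assumes (hypothetically) that each strategy can be scored along a fixed set of respects the agent cares about, with higher scores being better. The name `is_dominated_by` and the example strategies are invented for this sketch.

```python
from typing import Dict

# Hypothetical scoring: each strategy maps a respect the agent cares about
# to a number, where higher is better. Both strategies share the same keys.
Strategy = Dict[str, float]


def is_dominated_by(a: Strategy, b: Strategy) -> bool:
    """True iff a is worse than b in some respect and better than b in none."""
    worse_somewhere = any(a[r] < b[r] for r in a)
    better_nowhere = all(a[r] <= b[r] for r in a)
    return worse_somewhere and better_nowhere


keep_money = {"money": 10.0, "apples": 1.0}
pay_for_nothing = {"money": 9.0, "apples": 1.0}  # loses a dollar, gains nothing
buy_an_apple = {"money": 9.0, "apples": 2.0}     # a genuine trade-off

print(is_dominated_by(pay_for_nothing, keep_money))  # dominated
print(is_dominated_by(buy_an_apple, keep_money))     # not dominated
```

On this sketch, paying for nothing is dominated by keeping the money, whereas buying an apple is not: it is worse in one respect but better in another.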

With that background, here’s a quick rendition of coherence arguments:

- There exist coherence theorems which state that, unless an agent can be represented as maximizing expected utility, that agent is liable to pursue strategies that are dominated by some other available strategy.

- Sufficiently-advanced artificial agents will not pursue dominated strategies.

- So, sufficiently-advanced artificial agents will be ‘coherent’: they will be representable as maximizing expected utility.

Typically, authors go on to suggest that these expected-utility-maximizing agents are likely to behave in certain, potentially-dangerous ways. For example, such agents are likely to appear ‘goal-directed’ in some intuitive sense. They are likely to have certain instrumental goals, like acquiring power and resources. And they are likely to fight back against attempts to shut them down or modify their goals.

There are many ways to challenge the argument stated above, and many of those challenges have been made. There are also many ways to respond to those challenges, and many of those responses have been made too. The challenge that seems to remain yet unmade is that Premise 1 is false: there are no coherence theorems.

Cited ‘coherence theorems’ and what they actually say

Here’s a list of theorems that have been called ‘coherence theorems’. None of these theorems state that, unless an agent can be represented as maximizing expected utility, that agent is liable to pursue dominated strategies. Here’s what the theorems say:

The Von Neumann-Morgenstern Expected Utility Theorem

The Von Neumann-Morgenstern Expected Utility Theorem is as follows:

An agent can be represented as maximizing expected utility if and only if their preferences satisfy the following four axioms:

- Completeness: For all lotteries X and Y, X is at least as preferred as Y or Y is at least as preferred as X.

- Transitivity: For all lotteries X, Y, and Z, if X is at least as preferred as Y, and Y is at least as preferred as Z, then X is at least as preferred as Z.

- Independence: For all lotteries X, Y, and Z, and all probabilities 0<p<1, if X is strictly preferred to Y, then pX+(1-p)Z is strictly preferred to pY+(1-p)Z.

- Continuity: For all lotteries X, Y, and Z, with X strictly preferred to Y and Y strictly preferred to Z, there are probabilities p and q such that (i) 0<p<1, (ii) 0<q<1, and (iii) pX+(1-p)Z is strictly preferred to Y, and Y is strictly preferred to qX+(1-q)Z.

Note that this theorem makes no reference to dominated strategies, vulnerabilities, exploitation, or anything of that sort.
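To make the vocabulary of the theorem concrete, here is a minimal Python sketch of lotteries, the mixture pX+(1-p)Z, and expected utility. The utility values and outcome names are made up for illustration. The sketch only exercises the easy direction on one example (an agent defined by expected utilities satisfies Completeness and Independence here); it proves nothing about the converse.

```python
from typing import Dict

Lottery = Dict[str, float]  # outcome -> probability


def mix(p: float, x: Lottery, z: Lottery) -> Lottery:
    """Return the compound lottery pX + (1-p)Z."""
    outcomes = set(x) | set(z)
    return {o: p * x.get(o, 0.0) + (1 - p) * z.get(o, 0.0) for o in outcomes}


def expected_utility(lottery: Lottery, u: Dict[str, float]) -> float:
    """Probability-weighted sum of the utilities of the outcomes."""
    return sum(prob * u[o] for o, prob in lottery.items())


def at_least_as_preferred(x: Lottery, y: Lottery, u: Dict[str, float]) -> bool:
    """Weak preference induced by comparing expected utilities."""
    return expected_utility(x, u) >= expected_utility(y, u)


# Hypothetical utility function and sure-thing lotteries.
u = {"apple": 1.0, "banana": 0.5, "nothing": 0.0}
X, Y, Z = {"apple": 1.0}, {"banana": 1.0}, {"nothing": 1.0}

# Completeness holds trivially: two real numbers are always comparable.
print(at_least_as_preferred(X, Y, u) or at_least_as_preferred(Y, X, u))

# Independence on this example: X is strictly preferred to Y, and mixing
# both with Z at p = 0.25 preserves the strict preference.
print(expected_utility(mix(0.25, X, Z), u) > expected_utility(mix(0.25, Y, Z), u))
```

Note that Completeness falls out of this representation automatically, because expected utilities are real numbers. That is one way to see why an agent with incomplete preferences cannot be represented this way.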

Some authors (both inside and outside the AI safety community) have tried to defend some or all of the axioms above using money-pump arguments. These are arguments with conclusions of the following form: ‘agents who fail to satisfy Axiom A can be induced to make a set of trades or bets that leave them worse-off in some respect that they care about and better-off in no respect, even when they know in advance all the trades and bets that they will be offered.’ Authors then use that conclusion to support a further claim. Outside the AI safety community, the claim is often:

Agents are rationally required to satisfy Axiom A.

But inside the AI safety community, the claim is:

Sufficiently-advanced artificial agents will satisfy Axiom A.

This difference will be important below. For now, the important thing to note is that the conclusions of money-pump arguments are not theorems. Theorems (like the VNM Theorem) can be proved without making any substantive assumptions. Money-pump arguments establish their conclusion only by making substantive assumptions: assumptions that might well be false. In the section titled ‘A money-pump for Completeness’, I will discuss an assumption that is both crucial to money-pump arguments and likely false.

Savage’s Theorem

Savage’s Theorem is also a Von-Neumann-Morgenstern-style representation theorem. It also says that an agent can be represented as maximizing expected utility if and only if their preferences satisfy a certain set of axioms. The key difference between Savage’s Theorem and the VNM Theorem is that the VNM Theorem takes the agent’s probability function as given, whereas Savage constructs the agent’s probability function from their preferences over lotteries.

As with the VNM Theorem, Savage’s Theorem says nothing about dominated strategies or vulnerability to exploitation.

The Bolker-Jeffrey Theorem

This theorem is also a representation theorem, in the mould of the VNM Theorem and Savage’s Theorem above. It makes no reference to dominated strategies or anything of that sort.

Dutch Books

The Dutch Book Argument for Probabilism says:

An agent can be induced to accept a set of bets that guarantee a net loss if and only if that agent’s credences violate one or more of the probability axioms.

The Dutch Book Argument for Conditionalization says:

An agent can be induced to accept a set of bets that guarantee a net loss if and only if that agent updates their credences by some rule other than Conditionalization.

These arguments do refer to dominated strategies and vulnerability to exploitation. But they suggest only that an agent’s credences (that is, their degrees of belief) must meet certain conditions. Dutch Book Arguments place no constraints whatsoever on an agent’s preferences. And if an agent’s preferences fail to satisfy any of Completeness, Transitivity, Independence, and Continuity, that agent cannot be represented as maximizing expected utility (the VNM Theorem is an ‘if and only if’, not just an ‘if’).
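A small worked example may help show what a Dutch Book constrains. The sketch below assumes the usual betting setup (not spelled out in this post): the agent regards as fair a bet that pays $1 if an event occurs, priced at the agent's credence in that event. The credence values are invented for illustration, and exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction
from typing import Dict


def net_payoff(credences: Dict[str, Fraction], world: str) -> Fraction:
    """Agent buys, for each event, a $1 bet priced at its credence in that event.

    Returns winnings minus total price paid, given which event actually occurs.
    """
    total_price = sum(credences.values())
    winnings = sum(1 for event in credences if event == world)
    return winnings - total_price


# Credences in A and not-A sum to 6/5, violating the probability axioms.
incoherent = {"A": Fraction(3, 5), "not A": Fraction(3, 5)}
# Credences that sum to 1.
coherent = {"A": Fraction(3, 5), "not A": Fraction(2, 5)}

for world in ("A", "not A"):
    print(world, net_payoff(incoherent, world), net_payoff(coherent, world))
```

The incoherent agent loses a fifth of a dollar whichever way the world turns out, while the coherent agent breaks even. Nothing in the example mentions the agent's preferences over anything other than the bets' monetary payoffs, which fits the point that Dutch Books constrain credences rather than preferences.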

Cox’s Theorem

Cox’s Theorem says that, if an agent’s degrees of belief satisfy a certain set of axioms, then their beliefs are isomorphic to probabilities.

This theorem makes no reference to dominated strategies, and it says nothing about an agent’s preferences.

The Complete Class Theorem

The Complete Class Theorem says that an agent’s policy of choosing actions conditional on observations is not strictly dominated by some other policy (such that the other policy does better in some set of circumstances and worse in no set of circumstances) if and only if the agent’s policy maximizes expected utility with respect to a probability distribution that assigns positive probability to each possible set of circumstances.

This theorem does refer to dominated strategies. However, the Complete Class Theorem starts off by assuming that the agent’s preferences over actions in sets of circumstances satisfy Completeness and Transitivity. If the agent’s preferences are not complete and transitive, the Complete Class Theorem does not apply. So, the Complete Class Theorem does not imply that agents must be representable as maximizing expected utility if they are to avoid pursuing dominated strategies.

Omohundro (2007), ‘The Nature of Self-Improving Artificial Intelligence’

This paper seems to be the original source of the claim that agents are vulnerable to exploitation unless they can be represented as expected-utility-maximizers. Omohundro purports to give us “the celebrated expected utility theorem of von Neumann and Morgenstern… derived from a lack of vulnerabilities rather than from given axioms.”

Omohundro’s first error is to ignore Completeness. That leads him to mistake acyclicity for transitivity, and to think that any transitive relation is a total order. Note that this error already sinks any hope of getting an expected-utility-maximizer out of Omohundro’s argument. Completeness (recall) is a necessary condition for being representable as an expected-utility-maximizer. If there’s no money-pump that compels Completeness, there’s no money-pump that compels expected-utility-maximization.

Omohundro’s second error is to ignore Continuity. His ‘Argument for choice with objective uncertainty’ is too quick to make much sense of. Omohundro says it’s a simpler variant of Green (1987). The problem is that Green assumes every axiom of the VNM Theorem except Independence. He says so at the bottom of page 789. And, even then, Green notes that his paper provides “only a qualified bolstering” of the argument for Independence.

Money-Pump Arguments by Johan Gustafsson

It’s worth noting that there has recently appeared a book which gives money-pump arguments for each of the axioms of the VNM Theorem. It’s by the philosopher Johan Gustafsson and you can read it here.

This does not mean that the posts and papers claiming the existence of coherence theorems are correct after all. Gustafsson’s book was published in 2022, long after most of the posts on coherence theorems. Gustafsson argues that the VNM axioms are requirements of rationality, whereas coherence arguments aim to establish that sufficiently-advanced artificial agents will satisfy the VNM axioms. More importantly (and as noted above) the conclusions of money-pump arguments are not theorems. Theorems (like the VNM Theorem) can be proved without making any substantive assumptions. Money-pump arguments establish their conclusion only by making substantive assumptions: assumptions that might well be false.

I will now explain how denying one such assumption allows us to resist Gustafsson’s money-pump arguments. I will then argue that there can be no compelling money-pump arguments for the conclusion that sufficiently-advanced artificial agents will satisfy the VNM axioms.

Before that, though, let’s get the lay of the land. Recall that Completeness is necessary for representability as an expected-utility-maximizer. If an agent’s preferences are incomplete, that agent cannot be represented as maximizing expected utility. Note also that Gustafsson’s money-pump arguments for the other axioms of the VNM Theorem depend on Completeness. As he writes in a footnote on page 3, his money-pump arguments for Transitivity, Independence, and Continuity all assume that the agent’s preferences are complete. That makes Completeness doubly important to the ‘money-pump arguments for expected-utility-maximization’ project. If an agent’s preferences are incomplete, then they can’t be represented as an expected-utility-maximizer, and they can’t be compelled by Gustafsson’s money-pump arguments to conform their preferences to the other axioms of the VNM Theorem. (Perhaps some earlier, less careful money-pump argument can compel conformity to the other VNM axioms without assuming Completeness, but I think it unlikely.)

So, Completeness is crucial. But one might well think that we don’t need a money-pump argument to establish it. I’ll now explain why this thought is incorrect, and then we’ll look at a money-pump.

Completeness doesn’t come for free

Here’s Completeness again:

Completeness: For all lotteries X and Y, X is at least as preferred as Y or Y is at least as preferred as X.

Since:

‘X is strictly preferred to Y’ is defined as ‘X is at least as preferred as Y and Y is not at least as preferred as X.’

And:

‘The agent is indifferent between X and Y’ is defined as ‘X is at least as preferred as Y and Y is at least as preferred as X.’

Completeness can be rephrased as:

Completeness (rephrased): For all lotteries X and Y, either X is strictly preferred to Y, or Y is strictly preferred to X, or the agent is indifferent between X and Y.

And then you might think that Completeness comes for free. After all, what other comparative, preference-style attitude can an agent have to X and Y?

This thought might seem especially appealing if you think of preferences as nothing more than dispositions to choose. Suppose that our agent is offered repeated choices between X and Y. Then (the thought goes), in each of these situations, they have to choose something. If they reliably choose X over Y, then they strictly prefer X to Y. If they reliably choose Y over X, then they strictly prefer Y to X. If they flip a coin, or if they sometimes choose X and sometimes choose Y, then they are indifferent between X and Y.

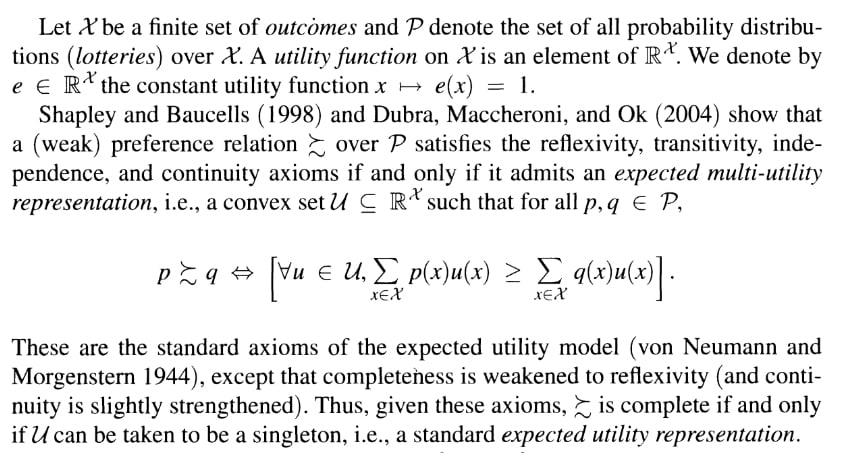

Here’s the important point missing from this thought: there are two ways of failing to have a strict preference between X and Y. Being indifferent between X and Y is one way: preferring X at least as much as Y and preferring Y at least as much as X. Having a preferential gap between X and Y is another way: not preferring X at least as much as Y and not preferring Y at least as much as X. If an agent has a preferential gap between any two lotteries, then their preferences violate Completeness.

The key contrast between indifference and preferential gaps is that indifference is sensitive to all sweetenings and sourings. Consider an example. C is a lottery that gives the agent a pot of ten dollar-bills for sure. D is a lottery that gives the agent a different pot of ten dollar-bills for sure. The agent does not strictly prefer C to D and does not strictly prefer D to C. How do we determine whether the agent is indifferent between C and D or whether the agent has a preferential gap between C and D? We sweeten one of the lotteries: we make that lottery just a little bit more attractive. In the example, we add an extra dollar-bill to pot C, so that it contains $11 total. Call the resulting lottery C+. The agent will strictly prefer C+ to D. We get the converse effect if we sour lottery C, by removing a dollar-bill from the pot so that it contains $9 total. Call the resulting lottery C-. The agent will strictly prefer D to C-. And we also get strict preferences by sweetening and souring D, to get D+ and D- respectively. The agent will strictly prefer D+ to C and strictly prefer C to D-. Since the agent’s preference-relation between C and D is sensitive to all such sweetenings and sourings, the agent is indifferent between C and D.

Preferential gaps, by contrast, are insensitive to some sweetenings and sourings. Consider another example. A is a lottery that gives the agent a Fabergé egg for sure. B is a lottery that returns to the agent their long-lost wedding album. The agent does not strictly prefer A to B and does not strictly prefer B to A. How do we determine whether the agent is indifferent or whether they have a preferential gap? Again, we sweeten one of the lotteries. A+ is a lottery that gives the agent a Fabergé egg plus a dollar-bill for sure. In this case, the agent might not strictly prefer A+ to B. That extra dollar-bill might not suffice to break the tie. If that is so, the agent has a preferential gap between A and B. If the agent has a preferential gap, then slightly souring A to get A- might also fail to break the tie, as might slightly sweetening and souring B to get B+ and B- respectively.

The axiom of Completeness rules out preferential gaps, and so rules out insensitivity to some sweetenings and sourings. That is why Completeness does not come for free. We need some argument for thinking that agents will not have preferential gaps. ‘The agent has to choose something’ is a bad argument. Faced with a choice between two lotteries, the agent might choose arbitrarily, but that does not imply that the agent is indifferent between the two lotteries. The agent might instead have a preferential gap. It depends on whether the agent’s preference-relation is sensitive to all sweetenings and sourings.
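The difference between indifference and a preferential gap can be sketched in Python by representing ‘at least as preferred as’ explicitly as a set of ordered pairs, rather than deriving it from a single numeric score. The representation, the function names, and the particular pairs are assumptions made for this illustration. They encode the two examples above: the pots of dollar-bills, and the Fabergé egg versus the wedding album.

```python
from typing import Iterable, Set, Tuple

# (x, y) in the relation means "x is at least as preferred as y".
Relation = Set[Tuple[str, str]]


def classify(x: str, y: str, at_least: Relation) -> str:
    """Classify the agent's attitude to x and y under the given relation."""
    xy, yx = (x, y) in at_least, (y, x) in at_least
    if xy and yx:
        return "indifferent"
    if xy:
        return "first strictly preferred"
    if yx:
        return "second strictly preferred"
    return "preferential gap"


def is_complete(options: Iterable[str], at_least: Relation) -> bool:
    """Completeness: every pair of options is ranked in at least one direction."""
    opts = list(options)
    return all((x, y) in at_least or (y, x) in at_least for x in opts for y in opts)


# Pots of dollar-bills: preference simply tracks the amount of money.
amounts = {"C-": 9, "C": 10, "C+": 11, "D-": 9, "D": 10, "D+": 11}
money_rel = {(x, y) for x in amounts for y in amounts if amounts[x] >= amounts[y]}

# Egg (A) versus album (B): the A-variants are ranked among themselves,
# but nothing is ranked against B in either direction.
keepsakes = ["A+", "A", "A-", "B"]
keepsake_rel = {(x, x) for x in keepsakes} | {("A+", "A"), ("A", "A-"), ("A+", "A-")}

print(classify("C", "D", money_rel), "|", classify("C+", "D", money_rel))
print(classify("A", "B", keepsake_rel), "|", classify("A+", "B", keepsake_rel))
print(is_complete(amounts, money_rel), is_complete(keepsakes, keepsake_rel))
```

Sweetening C to C+ breaks the tie with D, so C and D count as indifferent. Sweetening A to A+ leaves the attitude to B unchanged, so A and B are separated by a preferential gap, and the second relation fails Completeness.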

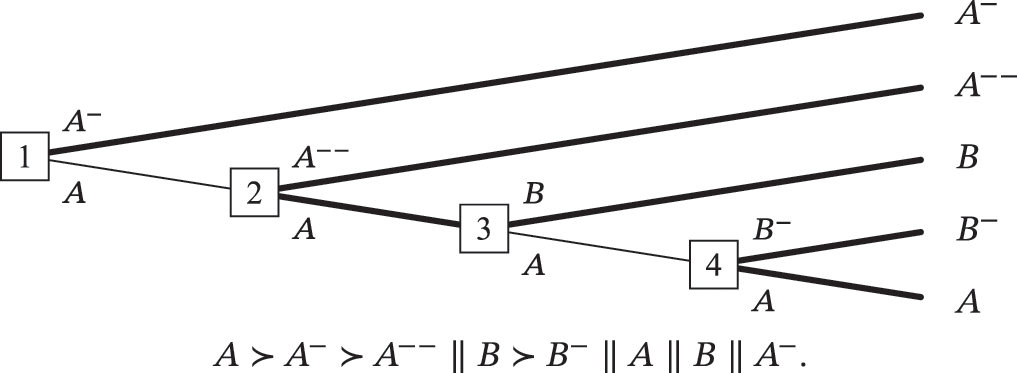

A money-pump for Completeness

So, we need some other argument for thinking that sufficiently-advanced artificial agents’ preferences over lotteries will be complete (and hence will be sensitive to all sweetenings and sourings). Let’s look at a money-pump. I will later explain how my responses to this money-pump also tell against other money-pump arguments for Completeness.

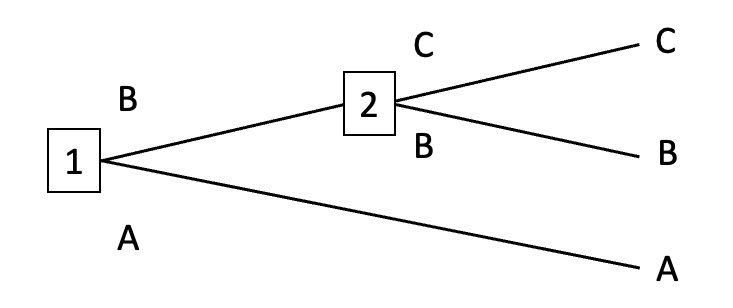

Here's the money-pump, suggested by Ruth Chang (1997, p.11) and later discussed by Gustafsson (2022, p.26):

‘≻’ denotes strict preference and ‘∥’ denotes a preferential gap, so the symbols underneath the decision tree say that the agent strictly prefers A to A- and has a preferential gap between A- and B, and between B and A.

Now suppose that the agent finds themselves at the beginning of this decision tree. Since the agent doesn’t strictly prefer A to B, they might choose to go up at node 1. And since the agent doesn’t strictly prefer B to A-, they might choose to go up at node 2. But if the agent goes up at both nodes, they have pursued a dominated strategy: they have made a set of trades that left them with A- when they could have had A (an outcome that they strictly prefer), even though they knew in advance all the trades that they would be offered.

Note, however, that this money-pump is non-forcing: at some step in the decision tree, the agent is not compelled by their preferences to pursue a dominated strategy. The agent would not be acting against their preferences if they chose to go down at node 1 or at node 2. And if they went down at either node, they would not pursue a dominated strategy.

To avoid even a chance of pursuing a dominated strategy, we need only suppose that the agent acts in accordance with the following policy: ‘if I go up at node 1, I will go down at node 2.’ Since the agent does not strictly prefer A- to B, acting in accordance with this policy does not require the agent to change or act against any of their preferences.

More generally, suppose that the agent acts in accordance with the following policy in all decision-situations: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’ That policy makes the agent immune to all possible money-pumps for Completeness.[2] And (granted some assumptions), the policy never requires the agent to change or act against any of their preferences.

Here’s why. Assume:

- That the agent’s strict preferences are transitive.

- That the agent knows in advance what trades they will be offered.

- That the agent is capable of backward induction: predicting what they would choose at later nodes and taking those predictions into account at earlier nodes.

(If the agent doesn’t know in advance what trades they will be offered or is incapable of backward induction, then their pursuit of a dominated strategy need not indicate any defect in their preferences. Their pursuit of a dominated strategy can instead be blamed on their lack of knowledge and/or reasoning ability.)

Given the agent’s knowledge of the decision tree and their grasp of backward induction, we can infer that, if the agent proceeds to node 2, then at least one of the possible outcomes of going to node 2 is not strictly dispreferred to any option available at node 1. Then, if the agent proceeds to node 2, they can act on a policy of not choosing any outcome that is strictly dispreferred to some option available at node 1. The agent’s acting on this policy will not require them to act against any of their preferences. For suppose that it did require them to act against some strict preference. Suppose that B is strictly dispreferred to A, so that the agent’s policy requires them to choose C, and yet C is strictly dispreferred to B. Then, by the transitivity of strict preference, C is strictly dispreferred to A. That means that both B and C are strictly dispreferred to A, contrary to our original assumption that at least one of the possible outcomes of going to node 2 is not strictly dispreferred to any option available at node 1. We have reached a contradiction, and so we can reject the assumption that the agent’s policy will require them to act against their preferences. This proof is easy to generalize so that it applies to decision trees with more than three terminal outcomes.
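The three-outcome money-pump discussed above can be sketched in Python. The tree layout is reconstructed from the prose (down at node 1 keeps A; up leads to node 2, where up yields A- and down yields B), since the figure is not reproduced here. The sketch treats any choice not opposed by a strict preference as permitted, and the function names are hypothetical.

```python
from typing import Set

# (x, y) in STRICT means "x is strictly preferred to y".
# A and B, and A- and B, are left unranked: those are the preferential gaps.
STRICT = {("A", "A-")}


def strictly_prefers(x: str, y: str) -> bool:
    return (x, y) in STRICT


def reachable_outcomes(use_policy: bool) -> Set[str]:
    """Outcomes the agent might end up with, given what its preferences permit.

    With use_policy=True the agent follows: "if I previously turned down some
    option X, I will not choose any option that I strictly disprefer to X."
    """
    outcomes = {"A"}       # going down at node 1 keeps A
    turned_down = ["A"]    # going up at node 1 means turning down A
    for option in ("A-", "B"):  # node 2: up yields A-, down yields B
        blocked = use_policy and any(
            strictly_prefers(x, option) for x in turned_down
        )
        if not blocked:
            outcomes.add(option)
    return outcomes


print(sorted(reachable_outcomes(use_policy=False)))
print(sorted(reachable_outcomes(use_policy=True)))
```

Without the policy, the dominated outcome A- is reachable. With the policy, the agent can still end up with A or B, but never with A-, and at no point does it choose something it strictly disprefers to an available alternative.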

Summarizing this section

Money-pump arguments for Completeness (understood as the claim that sufficiently-advanced artificial agents will have complete preferences) assume that such agents will not act in accordance with policies like ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’ But that assumption is doubtful. Agents with incomplete preferences have good reasons to act in accordance with this kind of policy: (1) it never requires them to change or act against their preferences, and (2) it makes them immune to all possible money-pumps for Completeness.

So, the money-pump arguments for Completeness are unsuccessful: they don’t give us much reason to expect that sufficiently-advanced artificial agents will have complete preferences. Any agent with incomplete preferences cannot be represented as an expected-utility-maximizer. So, money-pump arguments don’t give us much reason to expect that sufficiently-advanced artificial agents will be representable as expected-utility-maximizers.

Conclusion

There are no coherence theorems. Authors in the AI safety community should stop suggesting that there are.

There are money-pump arguments, but the conclusions of these arguments are not theorems. The arguments depend on substantive and doubtful assumptions.

Here is one doubtful assumption: advanced artificial agents with incomplete preferences will not act in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’ Any agent who acts in accordance with that policy is immune to all possible money-pumps for Completeness. And agents with incomplete preferences cannot be represented as expected-utility-maximizers.

In fact, the situation is worse than this. As Gustafsson notes, his money-pump arguments for the other three axioms of the VNM Theorem depend on Completeness. If Gustafsson’s money-pump arguments fail without Completeness, I suspect that earlier, less-careful money-pump arguments for the other axioms of the VNM Theorem fail too. If that’s right, and if Completeness is false, then none of Transitivity, Independence, and Continuity has been established by money-pump arguments either.

Bottom-lines

- There are no coherence theorems.

- Money-pump arguments don’t give us much reason to expect that advanced artificial agents will be representable as expected-utility-maximizers.

Appendix: Papers and posts in which the error occurs

Here’s a selection of papers and posts which claim that there are coherence theorems.

‘The nature of self-improving artificial intelligence’

“The appendix shows how the rational economic structure arises in each of these situations. Most presentations of this theory follow an axiomatic approach and are complex and lengthy. The version presented in the appendix is based solely on avoiding vulnerabilities and tries to make clear the intuitive essence of the argument.”

“In each case we show that if an agent is to avoid vulnerabilities, its preferences must be representable by a utility function and its choices obtained by maximizing the expected utility.”

‘The basic AI drives’

“The remarkable “expected utility” theorem of microeconomics says that it is always possible for a system to represent its preferences by the expectation of a utility function unless the system has “vulnerabilities” which cause it to lose resources without benefit.”

‘Coherent decisions imply consistent utilities’

“It turns out that this is just one instance of a large family of coherence theorems which all end up pointing at the same set of core properties. All roads lead to Rome, and all the roads say, "If you are not shooting yourself in the foot in sense X, we can view you as having coherence property Y."”

“Now, by the general idea behind coherence theorems, since we can't view this behavior as corresponding to expected utilities, we ought to be able to show that it corresponds to a dominated strategy somehow—derive some way in which this behavior corresponds to shooting off your own foot.”

“And that's at least a glimpse of why, if you're not using dominated strategies, the thing you do with relative utilities is multiply them by probabilities in a consistent way, and prefer the choice that leads to a greater expectation of the variable representing utility.”

“The demonstrations we've walked through here aren't the professional-grade coherence theorems as they appear in real math. Those have names like "Cox's Theorem" or "the complete class theorem"; their proofs are difficult; and they say things like "If seeing piece of information A followed by piece of information B leads you into the same epistemic state as seeing piece of information B followed by piece of information A, plus some other assumptions, I can show an isomorphism between those epistemic states and classical probabilities" or "Any decision rule for taking different actions depending on your observations either corresponds to Bayesian updating given some prior, or else is strictly dominated by some Bayesian strategy".”

“But hopefully you've seen enough concrete demonstrations to get a general idea of what's going on with the actual coherence theorems. We have multiple spotlights all shining on the same core mathematical structure, saying dozens of different variants on, "If you aren't running around in circles or stepping on your own feet or wantonly giving up things you say you want, we can see your behavior as corresponding to this shape. Conversely, if we can't see your behavior as corresponding to this shape, you must be visibly shooting yourself in the foot." Expected utility is the only structure that has this great big family of discovered theorems all saying that. It has a scattering of academic competitors, because academia is academia, but the competitors don't have anything like that mass of spotlights all pointing in the same direction.”

‘Things To Take Away From The Essay’

“So what are the primary coherence theorems, and how do they differ from VNM? Yudkowsky mentions the complete class theorem in the post, Savage's theorem comes up in the comments, and there are variations on these two and probably others as well. Roughly, the general claim these theorems make is that any system either (a) acts like an expected utility maximizer under some probabilistic model, or (b) throws away resources in a pareto-suboptimal manner. One thing to emphasize: these theorems generally do not assume any pre-existing probabilities (as VNM does); an agent's implied probabilities are instead derived. Yudkowsky's essay does a good job communicating these concepts, but doesn't emphasize that this is different from VNM.”

‘Sufficiently optimized agents appear coherent’

“Summary: Violations of coherence constraints in probability theory and decision theory correspond to qualitatively destructive or dominated behaviors.”

“Again, we see a manifestation of a powerful family of theorems showing that agents which cannot be seen as corresponding to any coherent probabilities and consistent utility function will exhibit qualitatively destructive behavior, like paying someone a cent to throw a switch and then paying them another cent to throw it back.”

“There is a large literature on different sets of coherence constraints that all yield expected utility, starting with the Von Neumann-Morgenstern Theorem. No other decision formalism has comparable support from so many families of differently phrased coherence constraints.”

‘What do coherence arguments imply about the behavior of advanced AI?’

“Coherence arguments say that if an entity’s preferences do not adhere to the axioms of expected utility theory, then that entity is susceptible to losing things that it values.”

Disclaimer: “This is an initial page, in the process of review, which may not be comprehensive or represent the best available understanding.”

‘Coherence theorems’

“In the context of decision theory, "coherence theorems" are theorems saying that an agent's beliefs or behavior must be viewable as consistent in way X, or else penalty Y happens.”

Disclaimer: “This page's quality has not been assessed.”

“Extremely incomplete list of some coherence theorems in decision theory

- Wald’s complete class theorem

- Von-Neumann-Morgenstern utility theorem

- Cox’s Theorem

- Dutch book arguments”

‘Coherence arguments do not entail goal-directed behavior’

“One of the most pleasing things about probability and expected utility theory is that there are many coherence arguments that suggest that these are the “correct” ways to reason. If you deviate from what the theory prescribes, then you must be executing a dominated strategy. There must be some other strategy that never does any worse than your strategy, but does strictly better than your strategy with certainty in at least one situation. There’s a good explanation of these arguments here.”

“The VNM axioms are often justified on the basis that if you don't follow them, you can be Dutch-booked: you can be presented with a series of situations where you are guaranteed to lose utility relative to what you could have done. So on this view, we have "no Dutch booking" implies "VNM axioms" implies "AI risk".”

‘Coherence arguments imply a force for goal-directed behavior.’ [LW · GW]

“‘Coherence arguments’ mean that if you don’t maximize ‘expected utility’ (EU)—that is, if you don’t make every choice in accordance with what gets the highest average score, given consistent preferability scores that you assign to all outcomes—then you will make strictly worse choices by your own lights than if you followed some alternate EU-maximizing strategy (at least in some situations, though they may not arise). For instance, you’ll be vulnerable to ‘money-pumping’—being predictably parted from your money for nothing.”

‘AI Alignment: Why It’s Hard, and Where to Start’

“The overall message here is that there is a set of qualitative behaviors and as long you do not engage in these qualitatively destructive behaviors, you will be behaving as if you have a utility function.”

‘Money-pumping: the axiomatic approach’ [LW · GW]

“This post gets somewhat technical and mathematical, but the point can be summarised as:

- You are vulnerable to money pumps only to the extent to which you deviate from the von Neumann-Morgenstern axioms of expected utility.

In other words, using alternate decision theories is bad for your wealth.”

‘Ngo and Yudkowsky on alignment difficulty’ [LW · GW]

“Except that to do the exercises at all, you need them to work within an expected utility framework. And then they just go, "Oh, well, I'll just build an agent that's good at optimizing things but doesn't use these explicit expected utilities that are the source of the problem!"

And then if I want them to believe the same things I do, for the same reasons I do, I would have to teach them why certain structures of cognition are the parts of the agent that are good at stuff and do the work, rather than them being this particular formal thing that they learned for manipulating meaningless numbers as opposed to real-world apples.

And I have tried to write that page once or twice (eg "coherent decisions imply consistent utilities [LW · GW]") but it has not sufficed to teach them, because they did not even do as many homework problems as I did, let alone the greater number they'd have to do because this is in fact a place where I have a particular talent.”

“In this case the higher structure I'm talking about is Utility, and doing homework with coherence theorems leads you to appreciate that we only know about one higher structure for this class of problems that has a dozen mathematical spotlights pointing at it saying "look here", even though people have occasionally looked for alternatives.

And when I try to say this, people are like, "Well, I looked up a theorem, and it talked about being able to identify a unique utility function from an infinite number of choices, but if we don't have an infinite number of choices, we can't identify the utility function, so what relevance does this have" and this is a kind of mistake I don't remember even coming close to making so I do not know how to make people stop doing that and maybe I can't.”

“Rephrasing again: we have a wide variety of mathematical theorems all spotlighting, from different angles, the fact that a plan lacking in clumsiness, is possessing of coherence.”

‘Ngo and Yudkowsky on AI capability gains’ [? · GW]

“I think that to contain the concept of Utility as it exists in me, you would have to do homework exercises I don't know how to prescribe. Maybe one set of homework exercises like that would be showing you an agent, including a human, making some set of choices that allegedly couldn't obey expected utility, and having you figure out how to pump money from that agent (or present it with money that it would pass up).

Like, just actually doing that a few dozen times.

Maybe it's not helpful for me to say this? If you say it to Eliezer, he immediately goes, "Ah, yes, I could see how I would update that way after doing the homework, so I will save myself some time and effort and just make that update now without the homework", but this kind of jumping-ahead-to-the-destination is something that seems to me to be... dramatically missing from many non-Eliezers. They insist on learning things the hard way and then act all surprised when they do. Oh my gosh, who would have thought that an AI breakthrough would suddenly make AI seem less than 100 years away the way it seemed yesterday? Oh my gosh, who would have thought that alignment would be difficult?

Utility can be seen as the origin of Probability within minds, even though Probability obeys its own, simpler coherence constraints.”

‘AGI will have learnt utility functions’ [LW · GW]

“The view that utility maximizers are inevitable is supported by a number of coherence theories developed early on in game theory which show that any agent without a consistent utility function is exploitable in some sense.”

- ^

Thanks to Adam Bales, Dan Hendrycks, and members of the CAIS Philosophy Fellowship for comments on a draft of this post. When I emailed Adam to ask for comments, he replied with his own draft paper on coherence arguments. Adam’s paper takes a somewhat different view on money-pump arguments, and should be available soon.

- ^

Gustafsson later offers a forcing money-pump argument for Completeness: a money-pump in which, at each step, the agent is compelled by their preferences to pursue a dominated strategy. But agents who act in accordance with the policy above are immune to this money-pump as well. Here’s why.

Gustafsson claims that, in the original non-forcing money-pump, going up at node 2 cannot be irrational. That’s because the agent does not strictly disprefer A- to B: the only other option available at node 2. The fact that A was previously available cannot make choosing A- irrational, because (Gustafsson claims) Decision-Tree Separability is true: “The rational status of the options at a choice node does not depend on other parts of the decision tree than those that can be reached from that node.” But (Gustafsson claims) the sequence of choices consisting of going up at nodes 1 and 2 is irrational, because it leaves the agent worse-off than they could have been. That implies that going up at node 1 must be irrational, given what Gustafsson calls ‘The Principle of Rational Decomposition’: any irrational sequence of choices must contain at least one irrational choice. Generalizing this argument, Gustafsson gets a general rational requirement to choose option A whenever your other option is to proceed to a choice node where your options are A- and B. And it’s this general rational requirement (‘Minimal Unidimensional Precaution’) that allows Gustafsson to construct his forcing money-pump. In this forcing money-pump, an agent’s incomplete preferences compel them to violate the Principle of Unexploitability: that principle which says getting money-pumped is irrational. The Principle of Preferential Invulnerability then implies that incomplete preferences are irrational, since it’s been shown that there exists a situation in which incomplete preferences force an agent to violate the Principle of Unexploitability.

Note that Gustafsson aims to establish that agents are rationally required to have complete preferences, whereas coherence arguments aim to establish that sufficiently-advanced artificial agents will have complete preferences. These different conclusions require different premises. In place of Gustafsson’s Decision-Tree Separability, coherence arguments need an amended version that we can call ‘Decision-Tree Separability*’: sufficiently-advanced artificial agents’ dispositions to choose options at a choice node will not depend on other parts of the decision tree than those that can be reached from that node. But this premise is easy to doubt. It’s false if any sufficiently-advanced artificial agent acts in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’ And it’s easy to see why agents might act in accordance with that policy: it makes them immune to all possible money-pumps for Completeness, and (as I am about to prove back in the main text) it never requires them to change or act against any of their preferences.

John Wentworth’s ‘Why subagents? [LW · GW]’ suggests another policy for agents with incomplete preferences: trade only when offered an option that you strictly prefer to your current option. That policy makes agents immune to the single-souring money-pump. The downside of Wentworth’s proposal is that an agent following his policy will pursue a dominated strategy in single-sweetening money-pumps, in which the agent first has the opportunity to trade in A for B and then (conditional on making that trade) has the opportunity to trade in B for A+. Wentworth’s policy will leave the agent with A when they could have had A+.
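The two policies in this footnote can be compared with a small simulation. What follows is only a sketch under assumptions that are mine rather than the post's: the pumps are modelled as sequences of trade offers in which each later offer is conditional on accepting the previous one, B is treated as incomparable to A-, A and A+, the agent accepts any trade its policy permits, and the function names (`permissive`, `no_regress`, `strict_only`, `run_pump`) are hypothetical. The `permissive` baseline is included only for contrast.

```python
# Strict preferences as (better, worse) pairs. B is incomparable to every
# other option (a preferential gap), so the ordering is incomplete.
# This particular preference set is an illustrative assumption.
STRICT = {("A+", "A"), ("A", "A-"), ("A+", "A-")}


def prefers(x, y):
    """True if x is strictly preferred to y."""
    return (x, y) in STRICT


def permissive(current, offer, turned_down):
    # Baseline for contrast: accept anything not strictly dispreferred
    # to the current holding.
    return not prefers(current, offer)


def no_regress(current, offer, turned_down):
    # Policy from this footnote: never choose an option that is strictly
    # dispreferred to some option previously turned down.
    return permissive(current, offer, turned_down) and not any(
        prefers(x, offer) for x in turned_down
    )


def strict_only(current, offer, turned_down):
    # Wentworth-style policy: trade only for a strictly preferred option.
    return prefers(offer, current)


def run_pump(policy, start, offers):
    """Return the option held at the end of a sequence of trade offers."""
    current, turned_down = start, set()
    for offer in offers:
        if not policy(current, offer, turned_down):
            break  # later offers are conditional on making this trade
        turned_down.add(current)  # giving up `current` counts as turning it down
        current = offer
    return current


def dominated(final, reachable):
    """True if some reachable option is strictly preferred to the final one."""
    return any(prefers(o, final) for o in reachable)


SOURING = ["B", "A-"]    # single-souring pump: A -> B -> A-
SWEETENING = ["B", "A+"]  # single-sweetening pump: A -> B -> A+

for name, policy in [("permissive", permissive),
                     ("no_regress", no_regress),
                     ("strict_only", strict_only)]:
    for label, offers in [("souring", SOURING), ("sweetening", SWEETENING)]:
        final = run_pump(policy, "A", offers)
        print(name, label, final, dominated(final, ["A"] + offers))
```

Under these assumptions, the permissive baseline ends the single-souring pump with A- (dominated by A), the `no_regress` policy ends it with B, and the `strict_only` policy keeps A; in the single-sweetening pump, `strict_only` is left with A when A+ was reachable. Two toy pumps do not, of course, establish immunity to all possible money-pumps.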

130 comments

comment by habryka (habryka4) · 2023-02-21T01:01:24.339Z · LW(p) · GW(p)

Crossposting this comment from the EA Forum:

Nuno says:

I appreciate the whole post. But I personally really enjoyed the appendix. In particular, I found it informative that Yudkowsky can speak/write with that level of authoritativeness, confidence, and disdain for others who disagree, and still be wrong (if this post is right).

I respond:

(if this post is right)

The post does actually seem wrong though.

I expect someone to write a comment with the details at some point (I am pretty busy right now, so can only give a quick meta-level gleam), but mostly, I feel like in order to argue that something is wrong with these arguments, you have to argue more compellingly against completeness and possible alternative ways to establish dutch-book arguments.

Also, the title of "there are no coherence arguments" is just straightforwardly wrong. The theorems cited are of course real theorems, they are relevant to agents acting with a certain kind of coherence, and I don't really understand the semantic argument that is happening where it's trying to say that the cited theorems aren't talking about "coherence", when like, they clearly are.

You can argue that the theorems are wrong, or that the explicit assumptions of the theorems don't hold, which many people have done, but like, there are still coherence theorems, and IMO completeness seems quite reasonable to me and the argument here seems very weak (and I would urge the author to create an actual concrete situation that doesn't seem very dumb in which a highly intelligent, powerful and economically useful system has non-complete preferences).

The whole section at the end feels very confused to me. The author asserts that there is "an error" where people assert that "there are coherence theorems", but man, that just seems like such a weird thing to argue for. Of course there are theorems that are relevant to the question of agent coherence, all of these seem really quite relevant. They might not prove the things in-practice, as many theorems tend to do, and you are open to arguing about that, but that doesn't really change whether they are theorems.

Like, I feel like with the same type of argument that is made in the post I could write a post saying "there are no voting impossibility theorems" and then go ahead and argue that the assumptions of Arrow's Impossibility Theorem are not universally proven, and then accuse everyone who ever talked about voting impossibility theorems of making "an error" since "those things are not real theorems". And I think everyone working on voting-adjacent impossibility theorems would be pretty justifiably annoyed by this.

Replies from: ElliottThornley, SaidAchmiz, Radamantis, SaidAchmiz, keith_wynroe, Radamantis, ElliottThornley

↑ comment by EJT (ElliottThornley) · 2023-02-21T01:22:49.042Z · LW(p) · GW(p)

I’m following previous authors in defining ‘coherence theorems’ as

theorems which state that, unless an agent can be represented as maximizing expected utility, that agent is liable to pursue strategies that are dominated by some other available strategy.

On that definition, there are no coherence theorems. VNM is not a coherence theorem, nor is Savage’s Theorem, nor is Bolker-Jeffrey, nor are Dutch Book Arguments, nor is Cox’s Theorem, nor is the Complete Class Theorem.

there are theorems that are relevant to the question of agent coherence

I'd have no problem with authors making that claim.

I would urge the author to create an actual concrete situation that doesn't seem very dumb in which a highly intelligent, powerful and economically useful system has non-complete preferences

Working on it.

Replies from: adele-lopez-1

↑ comment by Adele Lopez (adele-lopez-1) · 2023-02-21T01:52:17.413Z · LW(p) · GW(p)

theorems which state that, unless an agent can be represented as maximizing expected utility, that agent is liable to pursue strategies that are dominated by some other available strategy.

While I agree that such theorems would count as coherence theorems, I wouldn't consider this to cover most things I think of as coherence theorems, and as such is simply a bad definition.

I think of coherence theorems loosely as things that say if an agent follows such and such principles, then we can prove it will have a certain property. The usefulness comes from both directions: to the extent the principles seem like good things to have, we're justified in assuming a certain property, and to the extent that the property seems too strong or whatever, then one of these principles will have to break.

Replies from: ElliottThornley

↑ comment by EJT (ElliottThornley) · 2023-02-21T02:53:40.883Z · LW(p) · GW(p)

I think of coherence theorems loosely as things that say if an agent follows such and such principles, then we can prove it will have a certain property.

If you use this definition, then VNM (etc.) counts as a coherence theorem. But Premise 1 of the coherence argument (as I've rendered it) remains false, and so you can't use the coherence argument to get the conclusion that sufficiently-advanced artificial agents will be representable as maximizing expected utility.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2023-02-21T03:29:44.352Z · LW(p) · GW(p)

I don't think the majority of the papers that you cite made the argument that coherence arguments prove that any sufficiently-advanced AI will be representable as maximizing expected utility. Indeed I am very confident almost everyone you cite does not believe this, since it is a very strong claim. Many of the quotes you give even explicitly say this:

then you will make strictly worse choices by your own lights than if you followed some alternate EU-maximizing strategy (at least in some situations, though they may not arise)

The emphasis here is important.

I don't think really any of the other quotes you cite make the strong claim you are arguing against. Indeed it is trivially easy to think of an extremely powerful AI that is VNM rational in all situations except for one tiny thing that does not matter or will never come up. Technically its preferences can no longer be represented by a utility function, but that's not very relevant to the core arguments at hand, and I feel like in your arguments you are trying to tear down some strawman of some extreme position that I don't think anyone holds.

Eliezer has also explicitly written about it being possible to design superintelligences that reflectively coherently believe in logical falsehoods. He thinks this is possible, just very difficult. That alone would also violate VNM rationality.

Replies from: ElliottThornley

↑ comment by EJT (ElliottThornley) · 2023-02-21T19:47:30.703Z · LW(p) · GW(p)

You misunderstand me (and I apologize for that. I now think I should have made this clear in the post). I’m arguing against the following weak claim:

- For any agent who cannot be represented as maximizing expected utility, there is at least some situation in which that agent will pursue a dominated strategy.

And my argument is:

- There are no theorems which state or imply that claim. VNM doesn’t, Savage doesn’t, Bolker-Jeffrey doesn’t, Dutch Books don't, Cox doesn't, Complete Class doesn't.

- Money-pump arguments for the claim are not particularly convincing (for the reasons that I give in the post).

‘The relevant situations may not arise’ is a different objection. It’s not the one that I’m making.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-02-21T04:00:19.660Z · LW(p) · GW(p)

(and I would urge the author to create an actual concrete situation that doesn’t seem very dumb in which a highly intelligent, powerful and economically useful system has non-complete preferences)

Please see this old comment [LW(p) · GW(p)] and this one [LW(p) · GW(p)].

Replies from: habryka4

↑ comment by habryka (habryka4) · 2023-02-21T04:19:24.372Z · LW(p) · GW(p)

These are both great! I now find that I have strong-upvoted them both at the time. Indeed, I think this kind of concreteness feels like it does actually help the discussion quite a bit.

I also quite liked John's post on this topic: https://www.lesswrong.com/posts/3xF66BNSC5caZuKyC/why-subagents [LW · GW]

↑ comment by NunoSempere (Radamantis) · 2023-02-21T02:42:37.590Z · LW(p) · GW(p)

Copying my response from the EA forum:

(if this post is right)

The post does actually seem wrong though.

Glad that I added the caveat.

Also, the title of "there are no coherence arguments" is just straightforwardly wrong. The theorems cited are of course real theorems, they are relevant to agents acting with a certain kind of coherence, and I don't really understand the semantic argument that is happening where it's trying to say that the cited theorems aren't talking about "coherence", when like, they clearly are.

Well, part of the semantic nuance is that we don't care as much about the coherence theorems that do exist if they will fail to apply to current and future machines.

IMO completeness seems quite reasonable to me and the argument here seems very weak (and I would urge the author to create an actual concrete situation that doesn't seem very dumb in which a highly intelligent, powerful and economically useful system has non-complete preferences).

Here are some scenarios:

- Our highly intelligent system notices that to have complete preferences over all trades would be too computationally expensive, and thus is willing to accept some, even a large degree of incompleteness.

- The highly intelligent system learns to mimic the values of humans, who end up having non-complete preferences, which the system then mimics.

- You train a powerful system to do some stuff, but also to detect when it is out of distribution and in that case do nothing. Assuming you can do that, their preference is incomplete, since when offered tradeoffs they always take the default option when out of distribution.

The whole section at the end feels very confused to me. The author asserts that there is "an error" where people assert that "there are coherence theorems", but man, that just seems like such a weird thing to argue for. Of course there are theorems that are relevant to the question of agent coherence, all of these seem really quite relevant. They might not prove the things in-practice, as many theorems tend to do.

Mmh, then it would be good to differentiate between:

- There are coherence theorems that talk about some agents with some properties

- There are coherence theorems that prove that AI systems as will soon exist in the future will be optimizing utility functions

You could also say a third thing, which would be: there are coherence theorems that strongly hint that AI systems as will soon exist in the future will be optimizing utility functions. They don't prove it, but they make it highly probable because of such and such. In which case having more detail on the such and such would deflate most of the arguments in this post, for me.

For instance:

“‘Coherence arguments’ mean that if you don’t maximize ‘expected utility’ (EU)—that is, if you don’t make every choice in accordance with what gets the highest average score, given consistent preferability scores that you assign to all outcomes—then you will make strictly worse choices by your own lights than if you followed some alternate EU-maximizing strategy (at least in some situations, though they may not arise). For instance, you’ll be vulnerable to ‘money-pumping’—being predictably parted from your money for nothing.”

This is just false, because it is not taking into account the cost of doing expected value maximization, since giving consistent preferability scores is just very expensive and hard to do reliably. Like, when I poll people for their preferability scores [EA · GW], they give inconsistent estimates. Instead, they could be doing some expected utility maximization, but the evaluation steps are so expensive that I now basically don't bother to do some more hardcore approximation of expected value for individuals [EA · GW], but only for large projects and organizations [EA · GW]. And even then, I'm still taking shortcuts and monkey-patches, and not doing pure expected value maximization.

“This post gets somewhat technical and mathematical, but the point can be summarised as:

- You are vulnerable to money pumps only to the extent to which you deviate from the von Neumann-Morgenstern axioms of expected utility.

In other words, using alternate decision theories is bad for your wealth.”

The "in other words" doesn't follow, since EV maximization can be more expensive than the shortcuts.

Then there are other parts that give the strong impression that this expected value maximization will be binding in practice:

“Rephrasing again: we have a wide variety of mathematical theorems all spotlighting, from different angles, the fact that a plan lacking in clumsiness, is possessing of coherence.”

“The overall message here is that there is a set of qualitative behaviors and as long you do not engage in these qualitatively destructive behaviors, you will be behaving as if you have a utility function.”

“The view that utility maximizers are inevitable is supported by a number of coherence theories developed early on in game theory which show that any agent without a consistent utility function is exploitable in some sense.”

Here are some words I wrote that don't quite sit right but which I thought I'd still share: Like, part of the MIRI beat as I understand it is to hold that there is some shining guiding light, some deep nature of intelligence that models will instantiate and make them highly dangerous. But it's not clear to me whether you will in fact get models that instantiate that shining light. Like, you could imagine an alternative view of intelligence where it's just useful monkey patches all the way down, and as we train more powerful models, they get more of the monkey patches, but without the fundamentals. The view in between would be that there are some monkey patches, and there are some deep generalizations, but then I want to know whether the coherence systems will bind to those kinds of agents.

No need to respond/deeply engage, but I'd appreciate if you let me know if the above comments were too nitpicky.

Replies from: Benito, habryka4

↑ comment by Ben Pace (Benito) · 2023-02-21T18:20:48.848Z · LW(p) · GW(p)

Well, part of the semantic nuance is that we don't care as much about the coherence theorems that do exist if they will fail to apply to current and future machines

The correct response to learning that some theorems do not apply as much to reality as you thought, surely mustn't be to change language so as to deny those theorems' existence. Insofar as this is what's going on, these are pretty bad norms of language in my opinion.

Replies from: Radamantis

↑ comment by NunoSempere (Radamantis) · 2023-02-21T18:59:02.156Z · LW(p) · GW(p)

I am not defending the language of the OP's title, I am defending the content of the post.

Replies from: Radamantis

↑ comment by NunoSempere (Radamantis) · 2023-02-21T19:00:22.479Z · LW(p) · GW(p)

See this comment: <https://www.lesswrong.com/posts/yCuzmCsE86BTu9PfA/there-are-no-coherence-theorems?commentId=v2mgDWqirqibHTmKb>

↑ comment by habryka (habryka4) · 2023-02-21T03:33:02.263Z · LW(p) · GW(p)

This is just false, because it is not taking into account the cost of doing expected value maximization, since giving consistent preferability scores is just very expensive and hard to do reliably.

I do really want to put emphasis on the parenthetical remark "(at least in some situations, though they may not arise)". Katja is totally aware that the coherence arguments require a bunch of preconditions that are not guaranteed to be the case for all situations, or even any situation ever, and her post is about how there is still a relevant argument here.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-02-24T07:56:53.369Z · LW(p) · GW(p)

Also, the title of “there are no coherence arguments” is just straightforwardly wrong. The theorems cited are of course real theorems, they are relevant to agents acting with a certain kind of coherence, and I don’t really understand the semantic argument that is happening where it’s trying to say that the cited theorems aren’t talking about “coherence”, when like, they clearly are.

This seems wrong to me. The post’s argument is that the cited theorems aren’t talking about “coherence”, and it does indeed seem clear that (at least most of, possibly all but I could see disagreeing about maybe one or two) these theorems are not, in fact, talking about “coherence”.

↑ comment by keith_wynroe · 2023-02-21T22:44:56.792Z · LW(p) · GW(p)

Ngl kinda confused how these points imply the post seems wrong, the bulk of this seems to be (1) a semantic quibble + (2) a disagreement on who has the burden of proof when it comes to arguing about the plausibility of coherence + (3) maybe just misunderstanding the point that's being made?

(1) I agree the title is a bit needlessly provocative and in one sense of course VNM/Savage etc count as coherence theorems. But the point is that there is another sense that people use "coherence theorem/argument" in this field which corresponds to something like "If you're not behaving like an EV-maximiser you're shooting yourself in the foot by your own lights", which is what brings in all the scary normativity and is what the OP is saying doesn't follow from any existing theorem unless you make a bunch of other assumptions

(2) The only real substantive objection to the content here seems to be "IMO completeness seems quite reasonable to me". Why? Having complete preferences seems like a pretty narrow target within the space of all partial orders you could have as your preference relation, so what's the reason why we should expect minds to steer towards this? Do humans have complete preferences?

(3) In some other comments you're saying that this post is straw-manning some extreme position because people who use coherence arguments already accept you could have e.g.

an extremely powerful AI that is VNM rational in all situations except for one tiny thing that does not matter or will never come up

This seems to be entirely missing the point/confused - OP isn't saying that agents can realistically get away with not being VNM-rational because their inconsistencies/incompletenesses aren't efficiently exploitable, they're saying that you can have an agent that isn't VNM-rational and isn't exploitable in principle - i.e., your example is an agent that could in theory be money-pumped by another sufficiently powerful agent that was able to steer the world to where its corner-case weirdness came out - the point being made about incompleteness here is that you can have a non-VNM-rational agent that's un-Dutch-Bookable not just as a matter of empirical reality but in principle. The former still gets you claims like "A sufficiently smart agent will appear VNM-rational to you, they can't have any obvious public-facing failings", the latter undermines this.

↑ comment by NunoSempere (Radamantis) · 2023-02-21T02:43:12.387Z · LW(p) · GW(p)

Copying my second response from the EA forum:

Like, I feel like with the same type of argument that is made in the post I could write a post saying "there are no voting impossibility theorems" and then go ahead and argue that the assumptions of Arrow's Impossibility Theorem are not universally proven, and then accuse everyone who ever talked about voting impossibility theorems of making "an error" since "those things are not real theorems". And I think everyone working on voting-adjacent impossibility theorems would be pretty justifiably annoyed by this.

I think that there is some sense in which the character in your example would be right, since:

- Arrow's theorem doesn't bind approval voting.

- Generalizations of Arrow's theorem don't bind probabilistic results, e.g., each candidate is chosen with some probability corresponding to the amount of votes he gets.

Like, suppose you had someone saying there was "a deep core of electoral process" which means that, as electoral processes scale to important decisions, you will necessarily get "highly defective electoral processes", as illustrated in the classic example of the "dangers of the first past the post system". Well, in that case it would be reasonable to wonder whether the assumptions of the theorem bind, or whether there is some system like approval voting which is much less shitty than the theorem provers were expecting, because the assumptions don't hold.

The analogy is imperfect, though, since approval voting is a known decent system, whereas for AI systems we don't have an example friendly AI.

Replies from: habryka4, sharmake-farah

↑ comment by habryka (habryka4) · 2023-02-21T03:22:31.729Z · LW(p) · GW(p)

Sorry, this might have not been obvious, but I indeed think the voting impossibility theorems have holes in them because of the lotteries case and that's specifically why I chose that example.

I think that intellectual point matters, but I also think writing a post with the title "There are no voting impossibility theorems", defining "voting impossibility theorems" as "theorems that imply that all voting systems must make these known tradeoffs", and then citing everyone who ever talked about "voting impossibility theorems" as having made "an error" would just be pretty unproductive. I would make a post like the ones that Scott Garrabrant made being like "I think voting impossibility theorems don't account for these cases", and that seems great, and I have been glad about contributions of this type.

↑ comment by Noosphere89 (sharmake-farah) · 2023-02-21T16:30:06.261Z · LW(p) · GW(p)

Like, suppose you had someone saying there was "a deep core of electoral process" which means that, as electoral processes scale to important decisions, you will necessarily get "highly defective electoral processes", as illustrated in the classic example of the "dangers of the first past the post system". Well, in that case it would be reasonable to wonder whether the assumptions of the theorem bind, or whether there is some system like approval voting which is much less shitty than the theorem provers were expecting, because the assumptions don't hold.

Unfortunately, most democratic countries do use first past the post.

The two things that are inevitable are Condorcet cycles and strategic voting (though Condorcet cycles are less of a problem as you scale up the population, and I have a sneaking suspicion that Condorcet cycles go away if we allow a real-numbered infinite amount of people).

Replies from: Douglas_Knight, Radamantis

↑ comment by Douglas_Knight · 2023-02-21T19:43:54.059Z · LW(p) · GW(p)

I think most democratic countries use proportional representation, not FPTP. But talking about "most" is an FPTP error. Enough countries use proportional representation that you can study the effect of voting systems. And the results are shocking to me. The theoretical predictions are completely wrong. Duverger's law is false in every FPTP country except America. On the flip side, while PR does lead to more parties, they still form a 1-dimensional spectrum. For example, a Green Party is usually a far-left party with slightly different preferences, instead of a single-issue party that is willing to form coalitions with the right.

If politics were two dimensional, why wouldn't you expect Condorcet cycles? Why would population get rid of them? If you have two candidates, a tie between them is on a razor's edge. The larger the population of voters, the less likely. But if you have three candidates and three roughly equally common preferences, the cyclic shifts of A > B > C, then this is a robust tie. You only get a Condorcet winner when one of the factions becomes as big as the other two combined. Of course I have assumed away the other three preferences, but this is robust to them being small, not merely nonexistent.
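The faction arithmetic above can be checked with a small sketch. It assumes only the three cyclic rankings are present, with faction sizes p, q and r; the function names `condorcet_winner` and `cyclic_ballots` are illustrative and not from the comment.

```python
def condorcet_winner(ballots):
    """Return the candidate who wins every pairwise contest, or None.

    ballots maps a ranking (tuple, best first) to the number of voters
    holding that ranking.
    """
    candidates = sorted({c for ranking in ballots for c in ranking})

    def beats(x, y):
        # x beats y if strictly more voters rank x above y than y above x.
        x_votes = sum(w for r, w in ballots.items() if r.index(x) < r.index(y))
        y_votes = sum(w for r, w in ballots.items() if r.index(y) < r.index(x))
        return x_votes > y_votes

    for c in candidates:
        if all(beats(c, other) for other in candidates if other != c):
            return c
    return None


def cyclic_ballots(p, q, r):
    """Three factions holding the cyclic shifts of A > B > C."""
    return {("A", "B", "C"): p, ("B", "C", "A"): q, ("C", "A", "B"): r}


# Roughly equal factions: a robust cycle, no Condorcet winner.
print(condorcet_winner(cyclic_ballots(34, 33, 33)))
# A plurality is not enough: 45 < 30 + 25.
print(condorcet_winner(cyclic_ballots(45, 30, 25)))
# One faction larger than the other two combined: that faction's favourite wins.
print(condorcet_winner(cyclic_ballots(51, 25, 24)))
```

In this sketch, exact equality (for example 50, 25, 25) produces a pairwise tie rather than a winner, so a winner only appears once the largest faction strictly exceeds the other two combined.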

I don't know what happens in the following model: there are three issues A, B, C. Everyone, both voter and candidate, is for all of them, but in a zero-sum way, represented by a vector (a,b,c), with a+b+c = 11 and a,b,c >= 0. Start with the voters as above, at (10,1,0), (0,10,1), (1,0,10). Then the candidates (11,0,0), (0,11,0), (0,0,11) form a Condorcet cycle. By symmetry there is no Condorcet winner over all possible candidates. Randomly shift the proportion of voters. Is there a candidate that beats the three given candidates? One that beats all possible candidates? I doubt it. Add noise to make the individual voters unique. Now, I don't know.
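A minimal sketch of the starting point of this model. The comment does not say how a voter ranks candidates, so the code assumes (hypothetically) that a voter prefers the candidate whose vector has the larger dot product with their own; the names `utility` and `beats` are illustrative. It only checks the initial three-voter cycle and does not attempt the open questions about shifted proportions or noise.

```python
# Voters and candidates as issue-weight vectors (a, b, c) with a + b + c = 11.
VOTERS = [(10, 1, 0), (0, 10, 1), (1, 0, 10)]
CANDIDATES = {"A": (11, 0, 0), "B": (0, 11, 0), "C": (0, 0, 11)}


def utility(voter, platform):
    # ASSUMPTION: preference is measured by the dot product of the two vectors.
    return sum(v * p for v, p in zip(voter, platform))


def beats(x, y, voters=VOTERS, weights=None):
    """True if platform x wins a strict weighted majority against platform y."""
    if weights is None:
        weights = [1] * len(voters)
    margin = 0
    for voter, w in zip(voters, weights):
        ux, uy = utility(voter, x), utility(voter, y)
        if ux > uy:
            margin += w
        elif uy > ux:
            margin -= w
    return margin > 0


A, B, C = CANDIDATES["A"], CANDIDATES["B"], CANDIDATES["C"]
# With equal voter weights the three candidates form the cycle A > B > C > A.
print(beats(A, B), beats(B, C), beats(C, A))
```

Passing a `weights` list is one way to explore what happens when the proportion of voters shifts, under the same dot-product assumption.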

↑ comment by NunoSempere (Radamantis) · 2023-02-21T18:56:56.013Z · LW(p) · GW(p)

You don't have strategic voting with probabilistic results. And the degree of strategic voting can also be mitigated.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2023-02-21T19:02:22.871Z · LW(p) · GW(p)

Hm, I remember Wikipedia talked about Hylland's theorem, which generalizes the Gibbard-Satterthwaite theorem to the probabilistic case, though Wikipedia might be wrong on that.

↑ comment by EJT (ElliottThornley) · 2023-02-21T01:21:15.733Z · LW(p) · GW(p)

comment by Charlie Steiner · 2023-02-21T12:34:07.846Z · LW(p) · GW(p)

It seems like you deliberately picked completeness because that's where Dutch book arguments are least compelling, and that you'd agree with the more usual Dutch book arguments.

But I think even the Dutch book for completeness makes some sense. You just have to separate "how the agent internally represents its preferences" from "what it looks like the agent is doing." You describe an agent that dodges the money-pump by simply acting consistently with past choices. Internally this agent has an incomplete representation of preferences, plus a memory. But externally it looks like this agent is acting like it assigns equal value to whatever indifferent things it thought of choosing between first. If humans don't get to control the order this agent considers options, or if we let it run for a long time and it's already experienced the things humans might try to present to it from then on, then it will look like it's acting according to complete preferences.

Replies from: ElliottThornley, Lblack, Dweomite, antimonyanthony↑ comment by EJT (ElliottThornley) · 2023-02-21T18:31:04.558Z · LW(p) · GW(p)

Great points. Thinking about these kinds of worries is my next project, and I’m still trying to figure out my view on them.

Replies from: JenniferRM↑ comment by JenniferRM · 2023-05-14T04:33:17.937Z · LW(p) · GW(p)

I don't know if you're still working on this, but if you don't already know of the literature on choice-supportive bias and similar processes that occur in humans, they look to me a lot like heuristics that probably harden a human agent into being "more coherent" over time (especially in proximity to other ways of updating value estimation processes), and likely have an adaptive role in improving (regularizing?) instrumental value estimates.

Your essay seemed consistent with the claim that "in the past, as verifiable by substantial scholarship, no one ever proved exactly X" but your essay never actually showed "X is provably false" that I noticed?

And, indeed, maybe you can prove it one way or the other for some X, where X might be (as you seem to claim) "naive coherence is impossible" or maybe where some X' or X'' are "sophisticated coherence is approached by algorithm L as t goes to infinity" (or whatever)?

For my money, the thing to do here might be to focus on Value-of-Information, since VoI seems to me like a super super super important concept, and potentially a way to bridge questions of choice and knowledge and costly information gathering actions.

Replies from: ElliottThornley↑ comment by EJT (ElliottThornley) · 2023-05-26T15:41:57.998Z · LW(p) · GW(p)

Thanks! I'll have a think about choice-supportive bias and how it applies.

I think it is provably false that any agent not representable as an expected-utility-maximizer is liable to pursue dominated strategies. Agents with incomplete preferences aren't representable as expected-utility-maximizers, and they can make themselves immune from pursuing dominated strategies by acting in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’
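A minimal sketch of the quoted policy, under stated assumptions: the agent strictly prefers A to A-, has a preferential gap between B and each of A and A-, and faces a two-node sequence (node 1: keep A or swap to B; node 2: keep B or swap to A-). The names `permissible` and `reachable_outcomes` are illustrative. The code enumerates every outcome the agent could reach rather than simulating one run.

```python
# Strict preferences as (better, worse) pairs. B is incomparable to both
# A and A-, which models the preferential gap.
STRICT_PREFS = {("A", "A-")}


def strictly_worse(x, y, prefs=STRICT_PREFS):
    """True if x is strictly dispreferred to y."""
    return (y, x) in prefs


def permissible(options, turned_down, use_policy):
    # Baseline rule: rule out an option only if some available option is
    # strictly preferred to it.
    allowed = [o for o in options
               if not any(strictly_worse(o, p) for p in options)]
    if use_policy:
        # The policy: never choose an option strictly dispreferred to
        # something previously turned down.
        allowed = [o for o in allowed
                   if not any(strictly_worse(o, t) for t in turned_down)]
    return allowed


def reachable_outcomes(use_policy):
    """All final holdings the agent could end up with."""
    outcomes = set()
    # Node 1: keep A, or swap to B.
    for first in permissible(["A", "B"], set(), use_policy):
        if first == "A":
            outcomes.add("A")
            continue
        turned_down = {"A"}  # the agent passed up A by swapping to B
        # Node 2: keep B, or swap to A-.
        for second in permissible(["B", "A-"], turned_down, use_policy):
            outcomes.add(second)
    return outcomes


print(reachable_outcomes(use_policy=False))  # A- is reachable: dominated by A
print(reachable_outcomes(use_policy=True))   # A- is no longer reachable
```

The sketch relies on the agent remembering what it turned down, which is the memory requirement raised elsewhere in this thread.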

Replies from: JenniferRM↑ comment by JenniferRM · 2023-05-30T23:06:15.657Z · LW(p) · GW(p)

I don't know about you, but I'm actually OK dithering a bit, and going in circles, and doing things that mere entropy can "make me notice regret based on syntactically detectable behavioral signs" (like not even active adversarial optimization pressure like that which is somewhat inevitably generated in predator prey contexts).

For example, in my twenties I formed an intent, and managed to adhere to the habit somewhat often, where I'd flip a coin any time I noticed decisions where the cost to think about it in an explicit way was probably larger than the difference in value between the likely outcomes.

(Sometimes I flipped coins and then ignored the coin if I noticed I was sad with that result, as a way to cheaply generate that mental state of having an intuitive internally accessible preference without having to put things into words or do math. When I noticed that that stopped working very well, I switched to flipping a coin, then "if regret, flip again, and follow my head on head, and follow the first coin on tails". The double flipping protocol seemed to help make ALL the first coins have "enough weight" for me to care about them sometimes, even when I always then stopped for a second to see if I was happy or sad or bored by the first coin flip. And of course I do such things much much much less now, and lately have begun to consider taking a personal vow to refuse to randomize, except towards enemies, for an experimental period of time.)

The plans and the hopes here sort of naturally rely on "getting better at preferring things wisely over time"!

And the strategy relies pretty critically on having enough MEMORY to hope to build up data on various examples of different ways that similar situations went in the past, such as to learn from mistakes and thereby rarely "lack a velleity" and to reduce the rate at which I justifiably regret past velleities or choices.

And a core reason I think that sentience and sapience reliably convergently evolve is almost exactly "to store and process memories to enable learning (including especially contextually sensitive instrumental preference learning) inside a single lifetime".

(Credit assignment and citation note: Christof Koch was the first researcher who I heard explain surprising experimental data that suggested that "minds are common" with stuff like bee learning, and eventually I stopped being surprised when I heard about low key bee numeracy or another crazy mental power of cuttlefish. I didn't know about EITHER of the experiments I just linked to, but I paused to find "the kinds of things one finds here if one actually looks". That I found such links, for me, was a teensy surprise, and slightly contributes to my posterior belief in a claim roughly like "something like 'coherence' is convergently useful and is what our minds were originally built, by evolution, to approximately efficiently implement".)

Basically, I encourage you, when you go try to prove that "Agents with incomplete preferences can make themselves immune from pursuing dominated strategies by following plan P" to consider the resource costs of those plans (like the cost in memory) and to ask whether those resources are being used optimally, or whether a different use of them could get better results faster.

Also... I expect that the proofs you attempt might actually succeed if you have "agents in isolation" or "agents surrounded only by agents that respect property rights" but fail if you consider the case of adversarial action space selection in an environment of more than one agent (like where wolves seek undominated strategies for eating sheep, and no sheep is able to simply 'turn down' the option of being eaten by an arbitrarily smart wolf without itself doing something clever and potentially memory-or-VNM-perfection-demanding).

I do NOT think you will prove "in full generality, nothing like coherence is pragmatically necessary to avoid dutch booking" but I grant that I'm not sure about this! I have noticed from experience that my mathematical intuitions are actually fallible. That's why real math is worth the elbow grease! <3

↑ comment by Lucius Bushnaq (Lblack) · 2023-03-21T08:41:32.168Z · LW(p) · GW(p)

That separation between internal preferences and external behaviour is already implicit in Dutch books. Decision theory is about external behaviour, not internal representations. It talks about what agents do, not how agents work inside. Within decision theory, a preference is about something the system does or does not do in a given situation. When decision theorists talk about someone preferring pizza without pineapple, it's about that person paying money to not have pineapple on their pizza in some range of situations, not some definition related to computations about pineapples and pizzas in that person's brain.

↑ comment by Dweomite · 2024-07-03T20:24:13.575Z · LW(p) · GW(p)

Making a similar point from a different angle:

The OP claims that the policy "if I previously turned down some option X, I will not choose any option that I strictly disprefer to X" escapes the money pump but "never requires them to change or act against their preferences".

But it's not clear to me what conceptual difference there is supposed to be between "I will modify my action policy to hereafter always choose B over A-" and "I will modify my preferences to strictly prefer B over A-, removing the preference gap and bringing my preferences closer to completeness".

Replies from: ElliottThornley↑ comment by EJT (ElliottThornley) · 2024-07-06T11:54:22.094Z · LW(p) · GW(p)

Ah yep, apologies, I meant to say "never requires them to change or act against their strict preferences."

Whether there's a conceptual difference will depend on our definition of 'preference.' We could define 'preference' as follows: 'an agent prefers X to Y iff the agent reliably chooses X over Y.' In that case, modifying the policy is equivalent to forming a preference.

But we could also define 'preference' so that it requires more than just reliable choosing. For example, we might also require that (when choosing between lotteries) the agent always take opportunities to shift probability mass away from Y and towards X.

On the latter definition, modifying the policy need not be equivalent to forming a preference, because it only involves the reliable choosing and not the shifting of probability mass.

And the latter definition might be more pertinent in this context, where our interest is in whether agents will be expected utility maximizers.

But also, even if we go with the former definition, I think it matters a lot whether money-pumps compel rational agents to complete all their preferences up front, or whether money-pumps just compel agents to resolve preferential gaps over time, conditional on them coming to face choices that are arranged like a money-pump (and only completing their preferences if and once they've faced a sufficiently diverse range of choices). In particular, I think it matters in the context of the shutdown problem. I talk a bit more about this here [LW(p) · GW(p)].

Replies from: Dweomite↑ comment by Dweomite · 2024-07-06T21:52:47.029Z · LW(p) · GW(p)

If it doesn't move probability mass, won't it still be vulnerable to probabilistic money pumps? e.g. in the single-souring pump, you could just replace the choice between A- and B with a choice between two lotteries that have different mixtures of A- and B.

I have also left a reply [LW(p) · GW(p)] to the comment you linked.

↑ comment by Anthony DiGiovanni (antimonyanthony) · 2024-05-15T07:27:43.109Z · LW(p) · GW(p)

You describe an agent that dodges the money-pump by simply acting consistently with past choices. Internally this agent has an incomplete representation of preferences, plus a memory. But externally it looks like this agent is acting like it assigns equal value to whatever indifferent things it thought of choosing between first.

Not sure I follow this / agree. Seems to me that in the "Single-Souring Money Pump" case:

- If the agent systematically goes down at node 1, all we learn is that the agent doesn't strictly prefer [B or A-] to A.

- If the agent systematically goes up at node 1 and down at node 2, all we learn is that the agent doesn't strictly prefer [A or A-] to B.

So this doesn't tell us what the agent would do if they were faced with just a choice between A and B, or A- and B. We can't conclude "equal value" here.

comment by Adele Lopez (adele-lopez-1) · 2023-02-21T02:04:34.862Z · LW(p) · GW(p)