
I hope that is a roughly correct rendition of your argument.

Thanks for the great summary, Kave!

So this argument doesn't bite for, say, shrimp welfare interventions, which could be arbitrarily more impactful than global health, or R&D developments.

Nitpick. SWP received 1.82 M 2023-$ (= 1.47*10^6*1.24) during the year ending on 31 March 2024, which is 1.72*10^-8 (= 1.82*10^6/(106*10^12)) of the gross world product (GWP) in 2023, and OP estimated R&D has a benefit-to-cost ratio of 45. So, since R&D also increases SWP's funding by increasing GWP, I estimate SWP can be at most 1.29 M (= 1/(1.72*10^-8)/45) times as cost-effective as R&D.
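The bound above can be re-run as a short Python sketch. It only uses the quoted figures, and the variable names are illustrative. The reasoning is that each $ of R&D raises GWP by about 45 $, of which SWP receives its share, so SWP cannot be more cost-effective than R&D by more than the inverse of that product.

```python
# Figures quoted in the comment above.
swp_funding_usd = 1.47e6 * 1.24   # SWP funding in the year to 31 March 2024, in 2023-$
gwp_2023_usd = 106e12             # gross world product (GWP) in 2023
rd_benefit_cost = 45              # OP's benefit-to-cost ratio for R&D

# SWP's share of GWP, assumed to scale with GWP when R&D grows the economy.
swp_share = swp_funding_usd / gwp_2023_usd

# Each $ of R&D adds about rd_benefit_cost $ to GWP, so it adds about
# rd_benefit_cost * swp_share $ to SWP's funding. SWP can therefore be at most
# 1 / (rd_benefit_cost * swp_share) times as cost-effective as R&D.
max_swp_vs_rd = 1 / (rd_benefit_cost * swp_share)

print(f"SWP share of GWP: {swp_share:.3g}")
print(f"Max SWP vs R&D multiplier: {max_swp_vs_rd:.3g}")
```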

Here are my even-assuming-outside-view criticisms:

- Even the Davidson model allows that the distribution for interventions that increase the rate/effectiveness of R&D (rather than just purchasing some at the same rate) could be much more effective. I think superresearchers (or even just a large increase in the number of top researchers) are such an intervention.

- To the extent we're allowing cause-hopping to enable large multipliers (which we must to think that there are potentially much more impactful opportunities than superbabies), I care about superbabies because of the cause of x-risk reduction! Which I think has much higher cost-effectiveness than growth-based welfare interventions.

Fair points, although I do not see how they would be sufficiently strong to overcome the large baseline difference between SWP and general R&D. I do not think reducing the nearterm risk of human extinction is astronomically cost-effective, and I am sceptical of longterm effects.

Thanks for the post! I think genetic engineering for increasing IQ can indeed be super valuable, and is quite neglected in society. However, I would be very surprised if it was among the areas where additional investment generates the most welfare per $:

- Open Philanthropy (OP) estimated that funding R&D (research and development) is 45 % as cost-effective as giving cash to people with 500 $/year.

- People in extreme poverty have around 500 $/year, and unconditional cash transfers to them are like 1/3 as cost-effective as GiveWell's (GW's) top charities. GW used to consider such transfers around 10 % as cost-effective as their top charities, but now thinks they are 3 to 4 times as cost-effective as previously.

- So I think R&D is like 15 % (= 0.45/3) as cost-effective as GW's top charities.

- I estimate the Shrimp Welfare Project (SWP) is 64.3 k times as cost-effective as GW's top charities.

- So I believe SWP is like 429 k (= 64.3*10^3/0.15) times as cost-effective as R&D (neglecting the beneficial or harmful effects of R&D on animals; it is unclear to me whether saving human lives is beneficial or harmful).

- Trusting these numbers, genetic engineering would have to be 429 k times as cost-effective as typical R&D for it to be as cost-effective as SWP. I can see it being 10 times as cost-effective as R&D, but this is not anything close to enough to make it competitive with SWP.
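The chain of ratios in the bullets above, as a minimal Python sketch using only the quoted estimates (the names are illustrative):

```python
# Estimates quoted in the bullets above.
rd_vs_cash = 0.45        # R&D relative to cash for people with 500 $/year (OP)
cash_vs_gw = 1 / 3       # cash transfers relative to GW's top charities
swp_vs_gw = 64.3e3       # SWP relative to GW's top charities

rd_vs_gw = rd_vs_cash * cash_vs_gw   # R&D relative to GW's top charities
swp_vs_rd = swp_vs_gw / rd_vs_gw     # multiplier genetic engineering would need over typical R&D

# How far short an intervention 10 times as cost-effective as R&D would still fall.
shortfall_at_10x = swp_vs_rd / 10

print(f"R&D vs GW top charities: {rd_vs_gw:.2f}")
print(f"SWP vs R&D: {swp_vs_rd:,.0f}")
print(f"Remaining gap at 10x R&D: {shortfall_at_10x:,.0f}")
```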

Thanks for the post, Dan and Elliot. I have not read the comments, but I do not think preferential gaps make sense in principle. If one were exactly indifferent between 2 outcomes, I believe any improvement/worsening of one of them must make one prefer one of the outcomes over the other. At the same time, if one is roughly indifferent between 2 outcomes, a sufficiently small improvement/worsening of one of them will still leave one practically indifferent between them. For example, although I think i) 1 $ plus a chance of 10^-100 of 1 $ is clearly better than ii) 1 $, I am practically indifferent between i) and ii), because the expected value of the extra chance, 10^-100 $, is negligible.

Thanks, JBlack. As I say in the post, "We can agree on another [later] resolution date such that the bet is good for you". Metaculus' changing the resolution criteria does not obviously benefit one side or the other. In any case, I am open to updating the terms of the bet such that, if the resolution criteria do change, the bet is cancelled unless both sides agree on maintaining it given the new criteria.

Thanks, Dagon. Below is how superintelligent AI is defined in the question from Metaculus related to my bet proposal. I think it very much points towards full automation.

"Superintelligent Artificial Intelligence" (SAI) is defined for the purposes of this question as an AI which can perform any task humans can perform in 2021, as well or superior to the best humans in their domain. The SAI may be able to perform these tasks themselves, or be capable of designing sub-agents with these capabilities (for instance the SAI may design robots capable of beating professional football players which are not successful brain surgeons, and design top brain surgeons which are not football players). Tasks include (but are not limited to): performing in top ranks among professional e-sports leagues, performing in top ranks among physical sports, preparing and serving food, providing emotional and psychotherapeutic support, discovering scientific insights which could win 2021 Nobel prizes, creating original art and entertainment, and having professional-level software design and AI design capabilities.

As an AI improves in capacity, it may not be clear at which point the SAI has become able to perform any task as well as top humans. It will be defined that the AI is superintelligent if, in less than 7 days in a non-externally-constrained environment, the AI already has or can learn/invent the capacity to do any given task. A "non-externally-constrained environment" here means, for instance, access to the internet and compute and resources similar to contemporaneous AIs.

Fair! I have now added a 3rd bullet, and clarified the sentence before the bullets:

I think the bet would not change the impact of your donations, which is what matters if you also plan to donate the profits, if:

- Your median date of superintelligent AI as defined by Metaculus was the end of 2028. If you believe the median date is later, the bet will be worse for you.

- The probability of me paying you if you win was the same as the probability of you paying me if I win. The former will be lower than the latter if you believe the transfer is less likely given superintelligent AI, in which case the bet will be worse for you.

- The cost-effectiveness of your best donation opportunities in the month the transfer is made is the same whether you win or lose the bet. If you believe it is lower if you win the bet, this will be worse for you.

We can agree on another resolution date such that the bet is good for you accounting for the above.

I agree the bet is not worth it if superintelligent AI as defined by Metaculus immediately implies donations can no longer do any good, but this seems like an extreme view. Even if AIs outperform humans in all tasks for the same cost, humans could still donate to AIs.

I think the Cuban Missile Crisis is a better analogy for the period right after Metaculus' question resolves non-ambiguously than mutually assured destruction. For the former, there were still good opportunities to decrease the expected damage of nuclear war. For the latter, the damage had already been done.

Thanks, Daniel. My bullet points are supposed to be conditions for the bet to be neutral "in terms of purchasing power, which is what matters if you also plan to donate the profits", not personal welfare. I agree a given amount of purchasing power will buy the winner less personal welfare given superintelligent AI, because then they will tend to have a higher real consumption in the future. Or are you saying that a given amount of purchasing power given superintelligent AI will buy not only less personal welfare, but also less impartial welfare via donations? If so, why? The cost-effectiveness of donations should ideally be constant across spending categories, including across worlds where there is or is not superintelligent AI by a given date. Funding should be moved from the least to the most cost-effective categories until their marginal cost-effectiveness is equalised. I understand the altruistic market is not efficient. However, to justify not taking my bet, I think one would have to argue about which concrete decisions major funders like Open Philanthropy are making badly, and why they imply spending more money on worlds where there is no superintelligent AI relative to what is being done at the margin.

Thanks, Richard! I have updated the bet to account for that.

If, by the end of 2028, Metaculus' question about superintelligent AI:

- Resolves non-ambiguously, I transfer to you 10 k January-2025-$ in the month after the one in which the question resolved.

- Does not resolve, you transfer to me 10 k January-2025-$ in January 2029. As before, I plan to donate my profits to animal welfare organisations.

The nominal amount of the transfer in $ is 10 k times the ratio between the consumer price index (CPI) for all urban consumers and all items in the United States, as reported by Federal Reserve Economic Data (FRED), in the month in which the bet resolved and the same CPI in January 2025.
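A minimal sketch of the inflation adjustment described above. The CPI values in the example are hypothetical placeholders (a 10 % price rise), not actual FRED readings, and the function name is illustrative.

```python
def nominal_transfer(real_amount, cpi_at_resolution, cpi_jan_2025):
    """Nominal $ owed for `real_amount` January-2025-$.

    Scales the real amount by the ratio of the US CPI (all urban consumers,
    all items) in the resolution month to the same CPI in January 2025.
    """
    return real_amount * cpi_at_resolution / cpi_jan_2025

# Hypothetical CPI readings: prices 10 % higher at resolution than in January 2025.
print(nominal_transfer(10_000, cpi_at_resolution=110.0, cpi_jan_2025=100.0))  # 11000.0
```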

Great discussion! I am open to the following bet.

If, by the end of 2028, Metaculus' question about superintelligent AI:

- Resolves non-ambiguously, I transfer to you 10 k January-2025-$ in the month after the one in which the question resolved.

- Does not resolve, you transfer to me 10 k January-2025-$ in January 2029. As before, I plan to donate my profits to animal welfare organisations.

The nominal amount of the transfer in $ is 10 k times the ratio between the consumer price index (CPI) for all urban consumers and all items in the United States, as reported by Federal Reserve Economic Data (FRED), in the month in which the bet resolved and the same CPI in January 2025.

I think the bet would not change the impact of your donations, which is what matters if you also plan to donate the profits, if:

- Your median date of superintelligent AI as defined by Metaculus was the end of 2028. If you believe the median date is later, the bet will be worse for you.

- The probability of me paying you if you win was the same as the probability of you paying me if I win. The former will be lower than the latter if you believe the transfer is less likely given superintelligent AI, in which case the bet will be worse for you.

- The cost-effectiveness of your best donation opportunities in the month the transfer is made is the same whether you win or lose the bet. If you believe it is lower if you win the bet, this will be worse for you.

We can agree on another resolution date such that the bet is good for you accounting for the above.

Sorry for the lack of clarity! "today-$" refers to January 2025. For example, assuming prices increased by 10 % from this month until December 2028, the winner would receive 11 k$ (= 10*10^3*(1 + 0.1)).

You are welcome!

I also guess the stock market will grow faster than suggested by historical data, so I would only want X to be roughly as far away as 2028.

Here is a bet which would be worth it for me even with more distant resolution dates. If, by the end of 2028, Metaculus' question about ASI:

- Resolves with a given date, I transfer to you 10 k January-2025-$.

- Does not resolve, you transfer to me 10 k January-2025-$.

- Resolves ambiguously, nothing happens.

This bet is denominated in constant (January-2025) prices, so I think it would be neutral for you in terms of purchasing power right after resolution if the end of 2028 were your median date of ASI. I would nominally transfer more money to you if you won than you would nominally transfer to me if I won, as there would tend to be more inflation if you won. I think your median date of ASI was mid 2028, so the bet resolving at the end of 2028 may make it worth it for you. If not, the resolution date can be moved later. It would still be the case that the purchasing power of a nominal amount of money would decrease faster after resolution if you won than if I did. However, you could mitigate this by investing your profits from the bet if you win.

The bet may still be worse than some loans, but you can always make the bet and ask for such loans?

Here is the link to join EA/Rationality Lisbon's WhatsApp community.

You could instead pay me $10k now, with the understanding that I'll pay you $20k later in 2028 unless AGI has been achieved in which case I keep the money... but then why would I do that when I could just take out a loan for $10k at low interest rate?

Have you or other people worried about AI taken such loans (e.g. to increase donations to AI safety projects)? If not, why?

If you have an idea for a bet that's net-positive for me I'm all ears.

Is your probability much higher than that of Metaculus' community for "Will ARC find that GPT-5 has autonomous replication capabilities?"

I gain money in expectation with loans, because I don't expect to have to pay them back.

I see. I was implicitly assuming a nearterm loan or one with an interest rate linked to economic growth, but you might be able to get a longterm loan with a fixed interest rate.

What specific bet are you offering?

I transfer 10 k today-€ to you now, and you transfer 20 k today-€ to me if there is no ASI as defined by Metaculus on date X, which has to be sufficiently far away for the bet to be better than your best loan. X could be 12.0 years (= LN(0.9*20*10^3/(10*10^3))/LN(1 + 0.050)) from now, assuming a 90 % chance that I win the bet and annual growth of my investment of 5.0 %. However, if the cost-effectiveness of my donations also decreases by 5 % per year, then I can only go as far as 6.00 years (= 12.0/2).

I also guess the stock market will grow faster than suggested by historical data, so I would only want X to be roughly as far away as 2028. So, at the end of the day, it looks like you are right that you would be better off getting a loan.
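The break-even horizon in that comment can be reproduced with a short Python sketch. It solves 0.9*20*10^3 = 10*10^3*(1 + r)^X for X. As an interpretation of the halving to 6.00 years, it treats a 5 % annual fall in cost-effectiveness as a second 5 % discount compounding with the investment growth. The function name is illustrative.

```python
import math

def break_even_years(p_win, payout, stake, annual_rate):
    """Years X at which the expected payout equals the stake grown at annual_rate.

    Solves p_win * payout = stake * (1 + annual_rate) ** X for X.
    """
    return math.log(p_win * payout / stake) / math.log(1 + annual_rate)

# 90 % chance of receiving 20 k later for 10 k transferred now, 5 % annual growth.
x_growth = break_even_years(0.9, 20e3, 10e3, 0.05)

# Adding a 5 % annual fall in cost-effectiveness, compounded with the 5 % growth
# (an assumption matching the comment's halving of the horizon).
x_growth_and_ce = break_even_years(0.9, 20e3, 10e3, 1.05**2 - 1)

print(f"{x_growth:.1f} years")         # 12.0 years
print(f"{x_growth_and_ce:.1f} years")  # 6.0 years
```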

You could instead pay me $10k now, with the understanding that I'll pay you $20k later in 2028 unless AGI has been achieved in which case I keep the money... but then why would I do that when I could just take out a loan for $10k at low interest rate?

We could set up the bet such that it would involve you losing/gaining no money in expectation under your views, whereas you would lose money in expectation with a loan? Also, note the bet I proposed above was about ASI as defined by Metaculus, not AGI.

Thanks, Daniel. That makes sense.

But it wasn't rational for me to do that, I was just doing it to prove my seriousness.

My offer was also in this spirit of you proving your seriousness. Feel free to suggest bets which would be rational for you to take. Do you think there is a significant risk of a large AI catastrophe in the next few years? For example, what do you think is the probability of human population decreasing from (mid) 2026 to (mid) 2027?

Thanks, Daniel!

To be clear, my view is that we'll achieve AGI around 2027, ASI within a year of that, and then some sort of crazy robot-powered self-replicating economy within, say, three years of that

Is your median date of ASI as defined by Metaculus around 1 July 2028 (it would be if your time until AGI was strongly correlated with your time from AGI to ASI)? If so, I am open to a bet where:

- I give you 10 k€ if ASI happens by the end of 2028 (slightly after your median, such that you have a positive expected monetary gain).

- Otherwise, you give me 10 k€, which I would donate to animal welfare interventions.

Thanks, Ryan.

Daniel almost surely doesn't think growth will be constant. (Presumably he has a model similar to the one here.)

That makes sense. Daniel, my terms are flexible. Just let me know your median fraction for 2027, and we can go from there.

I assume he also thinks that by the time energy production is >10x higher, the world has generally been radically transformed by AI.

Right. I think the bet is roughly neutral with respect to monetary gains under Daniel's view, but Daniel may want to go ahead despite that to show that he really endorses his views. Not taking the bet may suggest Daniel is worried about losing 10 k€ in a world where 10 k€ is still relevant.

Thanks for the update, Daniel! How about the predictions about energy consumption?

| In what year will the energy consumption of humanity or its descendants be 1000x greater than now? |

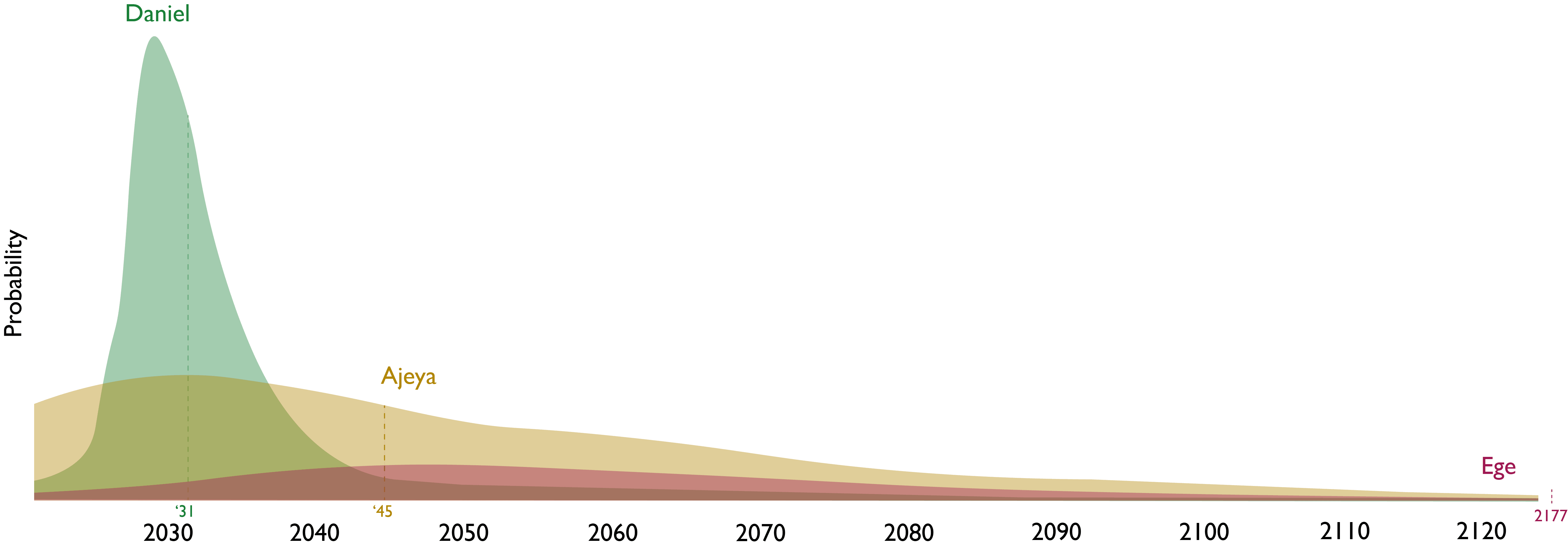

Your median date for humanity's energy consumption being 1 k times as large as now is 2031, whereas Ege's is 2177. What is your median primary energy consumption in 2027 as reported by Our World in Data as a fraction of that in 2023? Assuming constant growth from 2023 until 2031, your median fraction would be 31.6 (= (10^3)^((2027 - 2023)/(2031 - 2023))). I would be happy to set up a bet where:

- I give you 10 k€ if the fraction is higher than 31.6.

- You give me 10 k€ if the fraction is lower than 31.6. I would then use the 10 k€ to support animal welfare interventions.
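The 31.6 threshold comes from interpolating a 1000x increase over 2023 to 2031 at a constant growth rate. A minimal Python sketch of that interpolation (the function name is illustrative), which also shows the implied annual growth factor:

```python
def interpolated_factor(total_factor, start_year, end_year, year):
    """Growth factor reached by `year`, assuming constant exponential growth
    that multiplies the start_year level by total_factor by end_year."""
    return total_factor ** ((year - start_year) / (end_year - start_year))

threshold_2027 = interpolated_factor(1e3, 2023, 2031, 2027)
annual_factor = interpolated_factor(1e3, 2023, 2031, 2024)

print(f"2027 vs 2023: {threshold_2027:.1f}")          # 31.6
print(f"Implied annual factor: {annual_factor:.2f}")  # 2.37
```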

Hi there,

Assuming 10^6 bit erasures per FLOP (as you did; which source are you using?), 10^35 FLOP only need 8.06*10^13 kWh (= 2.9*10^(-21)*10^(35+6)/(3.6*10^6)), i.e. 2.83 (= 8.06*10^13/(2.85*10^13)) times global electricity generation in 2022, or 18.7 (= 8.06*10^13/(4.30*10^12)) times US electricity generation.
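The energy estimate can be re-run as below. This is a sketch: reading 10^35 as the total FLOP count follows from the formula, and the comparison of 2.9*10^-21 J per bit erasure with the Landauer limit, k_B*T*ln(2), at an assumed 300 K is an added check rather than something stated in the comment.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
J_PER_KWH = 3.6e6

# Landauer limit at an assumed 300 K, for comparison with the 2.9e-21 J used.
landauer_j_per_bit = K_B * 300 * math.log(2)

j_per_erasure = 2.9e-21
flop = 1e35
erasures_per_flop = 1e6

energy_kwh = j_per_erasure * flop * erasures_per_flop / J_PER_KWH

world_electricity_2022_kwh = 2.85e13
us_electricity_kwh = 4.30e12

print(f"Landauer limit at 300 K: {landauer_j_per_bit:.2e} J")
print(f"Energy needed: {energy_kwh:.3g} kWh")
print(f"vs global 2022 generation: {energy_kwh / world_electricity_2022_kwh:.3g}x")
print(f"vs US generation: {energy_kwh / us_electricity_kwh:.3g}x")
```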

Thanks!

Nice post, Luke!

with this handy reference table:

There is no table after this.

He also offers a chart showing how a pure Bayesian estimator compares to other estimators:

There is no chart after this.

Thanks for this clarifying comment, Daniel!

Great post!

The R-square measure of correlation between two sets of data is the same as the cosine of the angle between them when presented as vectors in N-dimensional space

Not R-square, just R:

Nice post! I would be curious to know whether significant thinking has been done on this topic since your post.

Great!

Thanks for writing this!

Have you considered crossposting to the EA Forum (although the post was mentioned here)?

With a loguniform distribution, the mean moral weight is stable and roughly equal to 2.

Thanks for the post!

I was trying to use the lower and upper estimates of 5*10^-5 and 10, guessed for the moral weight of chickens relative to humans, as the 10th and 90th percentiles of a lognormal distribution. This resulted in a mean moral weight of 1000 to 2000 (the result is not stable), which seems too high, and a median of 0.02.

1- Do you have any suggestions for a more reasonable distribution?

2- Do you have any tips for stabilising the results for the mean?

I think I understand the problems of taking expectations over moral weights (E(X) is not equal to 1/E(1/X)), but believe that it might still be possible to determine a reasonable distribution for the moral weight.
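A minimal Python sketch of the lognormal fit described above, assuming the two guesses are matched exactly to the 10th and 90th percentiles. It computes the mean in closed form, exp(mu + sigma^2/2), next to a few Monte Carlo estimates; the sample size and seeds are arbitrary choices for illustration.

```python
import math
import random
from statistics import NormalDist

# Guesses from the comment for the moral weight of chickens relative to humans,
# treated as the 10th and 90th percentiles of a lognormal distribution.
p10, p90 = 5e-5, 10.0
z90 = NormalDist().inv_cdf(0.9)   # standard normal 90th percentile

mu = (math.log(p10) + math.log(p90)) / 2
sigma = (math.log(p90) - math.log(p10)) / (2 * z90)

median = math.exp(mu)                 # about 0.022
mean = math.exp(mu + sigma**2 / 2)    # about 1.9e3

def sample_mean(seed, n=100_000):
    """Monte Carlo mean; dominated by rare huge draws, so it varies by seed."""
    rng = random.Random(seed)
    return sum(rng.lognormvariate(mu, sigma) for _ in range(n)) / n

print(f"median {median:.3g}, analytic mean {mean:.3g}")
for seed in range(3):
    print(f"seed {seed}: sample mean {sample_mean(seed):.3g}")
```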

"These two equations are algebraically inconsistent". Yes, combining them results into "0 < 0", which is false.