Experts' AI timelines are longer than you have been told?

post by Vasco Grilo (vascoamaralgrilo) · 2025-01-16T18:03:18.958Z · 4 comments

This is a link post for https://bayes.net/espai/

Contents

- How should we analyse survey forecasts of AI timelines?
- My bet proposal for people with short AI timelines
- 4 comments

This is a linkpost for "How should we analyse survey forecasts of AI timelines?" by Tom Adamczewski, which was published on 16 December 2024[1]. Below are some quotes from Tom's post, and a bet I would be happy to make with people whose AI timelines are much shorter than those of the median AI expert.

How should we analyse survey forecasts of AI timelines?

The Expert Survey on Progress in AI (ESPAI) is a large survey of AI researchers about the future of AI, conducted in 2016, 2022, and 2023. One main focus of the survey is the timing of progress in AI.

[...]

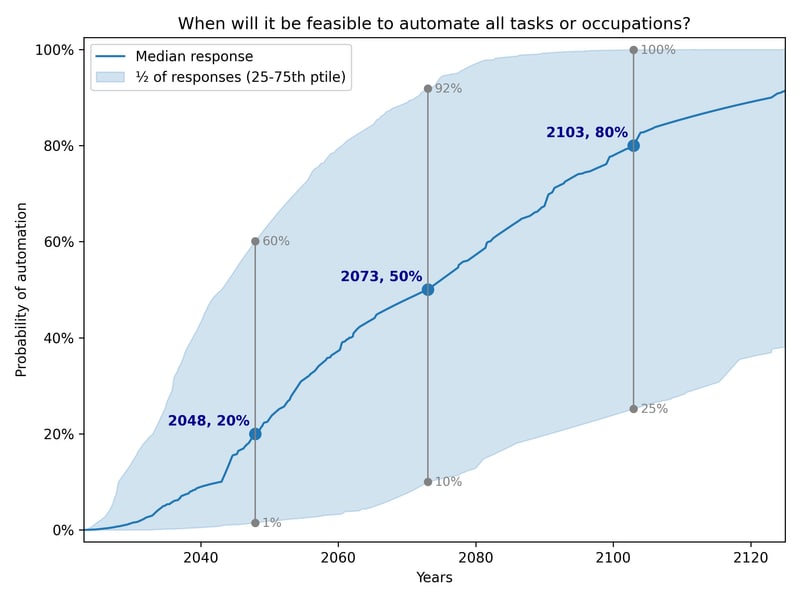

This plot represents a summary of my best guesses as to how the ESPAI data should be analysed and presented.

["Experts were asked when it will be feasible to automate all tasks or occupations. The median expert thinks this is 20% likely by 2048, and 80% likely by 2103".]

[...]

I differ from previous authors in four main ways:

- Show distribution of responses. Previous summary plots showed a random subset of responses, rather than quantifying the range of opinion among experts. I show a shaded area representing the central 50% of individual-level CDFs (25th to 75th percentile).

- Aggregate task and occupation questions. Previous analyses only showed task (HLMI) and occupation (FAOL) results separately, whereas I provide a single estimate combining both. By not providing a single headline result, previous approaches made summarization more difficult, and left room for selective interpretations. I find evidence that task automation (HLMI) numbers have been far more widely reported than occupation automation (FAOL).

- Median aggregation. I’m quite uncertain as to which method is most appropriate in this context for aggregating the individual distributions into a single distribution. The arithmetic mean of probabilities, used by previous authors, is a reasonable option. I choose the median merely because it has the convenient property that we get the same result whether we take the median in the vertical direction (probabilities) or the horizontal (years).

- Flexible distributions. I fit individual-level CDF data to “flexible” interpolation-based distributions that can match the input data exactly. The original authors use the Gamma distribution. This change (and distribution fitting in general) makes only a small difference to the aggregate results.
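A minimal sketch of the median-aggregation property described above, assuming each expert's CDF is a piecewise-linear interpolation through their stated (year, probability) points. That is one simple "flexible" interpolation, not necessarily the one Tom uses, and the three experts below are made up for illustration, not ESPAI responses. With an odd number of experts and strictly increasing CDFs, taking the median probability at a fixed year and then the median year at that probability returns the year we started from.

```python
from bisect import bisect_right
from statistics import median

# Hypothetical (year, cumulative probability) points for three experts.
# Made-up illustrative numbers, NOT ESPAI responses.
EXPERTS = [
    [(2025, 0.0), (2035, 0.10), (2050, 0.50), (2100, 0.90), (2200, 1.0)],
    [(2025, 0.0), (2040, 0.20), (2070, 0.50), (2150, 0.80), (2300, 1.0)],
    [(2025, 0.0), (2030, 0.30), (2045, 0.60), (2080, 0.95), (2120, 1.0)],
]


def interp(xs, ys, x):
    """Piecewise-linear interpolation of ys over strictly increasing xs, clamped at the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


def cdf(points, year):
    """One expert's probability that the milestone is reached by `year`."""
    years, probs = zip(*points)
    return interp(years, probs, year)


def quantile(points, p):
    """Inverse CDF: the year by which one expert assigns probability p."""
    years, probs = zip(*points)
    return interp(probs, years, p)


def median_prob_at(year):
    """Vertical aggregation: median across experts of P(milestone by year)."""
    return median(cdf(pts, year) for pts in EXPERTS)


def median_year_at(p):
    """Horizontal aggregation: median across experts of the year reaching probability p."""
    return median(quantile(pts, p) for pts in EXPERTS)


p = median_prob_at(2060)
print(f"Median P(by 2060): {p:.2f}")                      # Median P(by 2060): 0.58
print(f"Median year at P = {p:.2f}: {median_year_at(p):.0f}")  # Median year at P = 0.58: 2060
```

With an even number of experts, where the median is the mean of the two middle values, the two directions generally no longer agree exactly.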

[...]

If you need a textual description of the results in the plot, I would recommend:

Experts were asked when it will be feasible to automate all tasks or occupations. The median expert thinks this is 20% likely by 2048, and 80% likely by 2103. There was substantial disagreement among experts. For automation by 2048, the middle half of experts assigned it a probability between 1% and 60% (meaning ¼ assigned it a chance lower than 1%, and ¼ gave a chance higher than 60%). For automation by 2103, the central half of experts' forecasts ranged from a 25% chance to a 100% chance.

This description still contains big simplifications (e.g. using “the median expert thinks” even though no expert directly answered questions about 2048 or 2103). However, it communicates both:

- The uncertainty represented by the aggregated CDF (using the 60% belief interval from 20% to 80%)

- The range of disagreement among experts (using the central 50% of responses)
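To make the difference between these two summaries concrete, here is a minimal sketch with five made-up experts, each given a logistic CDF over years purely for convenience. That distribution family is an assumption for illustration; it is neither ESPAI data nor the distributions Tom fits. The belief interval is read off the median-aggregated CDF, while the disagreement band is the spread of individual probabilities at a fixed year.

```python
import math
from statistics import median, quantiles

# Hypothetical experts: (midpoint year, scale in years) of a logistic CDF.
# Made-up numbers chosen only to make the example concrete.
EXPERTS = [(2040, 8), (2055, 15), (2070, 20), (2090, 25), (2130, 40)]


def cdf(expert, year):
    """One expert's probability that the milestone is reached by `year`."""
    mid, scale = expert
    return 1 / (1 + math.exp(-(year - mid) / scale))


def inv_cdf(expert, p):
    """Year by which one expert assigns probability p (logistic quantile)."""
    mid, scale = expert
    return mid + scale * math.log(p / (1 - p))


def aggregate_year(p):
    """Year at which the median-aggregated CDF reaches p.

    For an odd number of strictly increasing CDFs this equals the
    median across experts of their individual p-quantiles.
    """
    return median(inv_cdf(e, p) for e in EXPERTS)


def central_half(year):
    """25th and 75th percentiles, across experts, of P(milestone by `year`)."""
    q1, _, q3 = quantiles([cdf(e, year) for e in EXPERTS], n=4)
    return q1, q3


# Uncertainty: 60% belief interval of the aggregate CDF (20% to 80%).
low, high = aggregate_year(0.2), aggregate_year(0.8)
print(f"Aggregate 20%-80% interval: {low:.0f} to {high:.0f}")

# Disagreement: middle half of individual probabilities at the 20% year.
q1, q3 = central_half(low)
print(f"Middle half of experts at {low:.0f}: {q1:.0%} to {q3:.0%}")
```

Note that `statistics.quantiles` uses its default "exclusive" method here; with only a handful of experts, other percentile conventions give somewhat different bands.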

In some cases, this may be too much information. If at all possible, I recommend that the results not be reduced to the single number of the year by which experts expect a 50% chance of advanced AI. Instead, emphasise that we have a probability distribution over years by giving two points on the distribution. So if a very concise summary is required, you could use:

Surveyed experts think it’s unlikely (20%) it will become feasible to automate all tasks or occupations by 2048, but it probably will (80%) by 2103.

If even greater simplicity is required, I would urge something like the following, over just using the median year:

AI experts think full automation is most likely to become feasible between 2048 and 2103.

My bet proposal for people with short AI timelines

If, by the end of 2028, Metaculus' question about superintelligent AI:

- Resolves non-ambiguously, I transfer to you 10k January-2025-$ in the month after the one in which the question resolved.

- Does not resolve, you transfer to me 10k January-2025-$ in January 2029. As before, I plan to donate my profits to animal welfare organisations.

The nominal amount of the transfer in $ is 10k times the ratio between the consumer price index for all urban consumers and all items in the United States, as reported by Federal Reserve Economic Data (FRED), in the month in which the bet resolved and its value in January 2025.
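The inflation adjustment is a single ratio. A minimal sketch, with the CPI readings left as inputs because the post does not say which FRED series is meant (for example, the seasonally adjusted CPIAUCSL or the non-seasonally-adjusted CPIAUCNS); the function name is hypothetical and the numbers in the usage line are made up, not actual CPI readings.

```python
def nominal_transfer(cpi_resolution_month: float, cpi_january_2025: float,
                     real_amount: float = 10_000.0) -> float:
    """Nominal dollars owed for `real_amount` January-2025 dollars.

    Scales the real amount by the ratio of the US CPI (all urban consumers,
    all items) in the month the bet resolved to its January 2025 value.
    """
    if cpi_january_2025 <= 0 or cpi_resolution_month <= 0:
        raise ValueError("CPI values must be positive")
    return real_amount * cpi_resolution_month / cpi_january_2025


# Hypothetical CPI readings: prices 10% higher than in January 2025.
print(nominal_transfer(cpi_resolution_month=330.0, cpi_january_2025=300.0))  # 11000.0
```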

I think the bet would not change the expected impact of your donations, which is what matters if you also plan to donate the profits, if:

- Your median date of superintelligent AI as defined by Metaculus is the end of 2028. If you believe the median date is later, the bet will be worse for you.

- The probability of me paying you if you win is the same as the probability of you paying me if I win. The former will be lower than the latter if you believe the transfer is less likely given superintelligent AI, in which case the bet will be worse for you.

- The cost-effectiveness of your best donation opportunities in the month the transfer is made is the same whether you win or lose the bet. If you believe it will be lower if you win, the bet will be worse for you.
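These conditions can be checked with a back-of-the-envelope expected-value calculation. The sketch below is a simplified model of my own framing, not a formula from the post: impact is the amount transferred times a relative cost-effectiveness multiplier, timing and discounting are ignored, and all parameter names are hypothetical. With a 50% chance of superintelligent AI by the end of 2028, equal payment probabilities, and equal cost-effectiveness, the expected change is zero; halving the chance of being paid after winning raises the break-even probability to about 2/3.

```python
def expected_impact_change(p_sai_by_2028: float,
                           p_paid_if_win: float = 1.0,
                           p_pay_if_lose: float = 1.0,
                           value_if_win: float = 1.0,
                           value_if_lose: float = 1.0,
                           stake: float = 10_000.0) -> float:
    """Expected change in donation impact for the short-timelines side.

    value_if_win / value_if_lose: relative cost-effectiveness of the best
    donation opportunities in the month the transfer is made, in each case.
    """
    gain = p_sai_by_2028 * p_paid_if_win * stake * value_if_win
    loss = (1 - p_sai_by_2028) * p_pay_if_lose * stake * value_if_lose
    return gain - loss


def break_even_probability(p_paid_if_win: float = 1.0, p_pay_if_lose: float = 1.0,
                           value_if_win: float = 1.0, value_if_lose: float = 1.0) -> float:
    """P(SAI by end of 2028) at which the expected impact change is zero."""
    win = p_paid_if_win * value_if_win
    lose = p_pay_if_lose * value_if_lose
    return lose / (win + lose)


print(expected_impact_change(0.5))                             # 0.0
print(expected_impact_change(0.5, p_paid_if_win=0.5))          # -2500.0
print(round(break_even_probability(p_paid_if_win=0.5), 2))     # 0.67
```

The stake cancels out of the break-even probability, so only the ratio of the two "win" and "lose" weights matters.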

We can agree on another resolution date such that the bet is good for you accounting for the above.

[1] There is "20241216" in the source code of the page.

4 comments


comment by Dagon · 2025-01-16T22:59:23.161Z

I don't think your bet has very much relationship to your post title or the first 2/3 of the body. The Metaculus question resolves based on "according to widespread media and historical consensus," which does not require any automation or feasible automation of tasks or occupations, let alone all of them.

↑ comment by Vasco Grilo (vascoamaralgrilo) · 2025-01-16T23:52:45.322Z

Thanks, Dagon. Below is how superintelligent AI is defined in the question from Metaculus related to my bet proposal. I think it very much points towards full automation.

"Superintelligent Artificial Intelligence" (SAI) is defined for the purposes of this question as an AI which can perform any task humans can perform in 2021, as well or superior to the best humans in their domain. The SAI may be able to perform these tasks themselves, or be capable of designing sub-agents with these capabilities (for instance the SAI may design robots capable of beating professional football players which are not successful brain surgeons, and design top brain surgeons which are not football players). Tasks include (but are not limited to): performing in top ranks among professional e-sports leagues, performing in top ranks among physical sports, preparing and serving food, providing emotional and psychotherapeutic support, discovering scientific insights which could win 2021 Nobel prizes, creating original art and entertainment, and having professional-level software design and AI design capabilities.

As an AI improves in capacity, it may not be clear at which point the SAI has become able to perform any task as well as top humans. It will be defined that the AI is superintelligent if, in less than 7 days in a non-externally-constrained environment, the AI already has or can learn/invent the capacity to do any given task. A "non-externally-constrained environment" here means, for instance, access to the internet and compute and resources similar to contemporaneous AIs.

comment by JBlack · 2025-01-17T09:38:23.854Z

I don't think you would get many (or even any) takers among people who have median dates for ASI before the end of 2028.

Many people, and particularly people with short median timelines, have a low estimate of the probability of civilization continuing to function in the event of emergence of ASI within the next few decades. That is, the second dot point in the last section, "the probability of me paying you if you win was the same as the probability of you paying me if I win", does not hold.

Even without that, suppose that things go very well and ASI exists in 2027. It doesn't do anything drastic and just quietly carries out increasingly hard tasks through 2028 and 2029 and is finally recognized as having been ASI all along in 2030. By this time everyone knows that it could have automated everything back in 2027, but Metaculus doesn't resolve until 2030 so you win despite being very wrong about timelines.

Other not-very-unlikely scenarios include Metaculus being shut down before 2029 for any reason whatsoever (violating increasingly broad online gambling laws, otherwise failing as a viable organization, etc.), or that specific question being removed or reworded more tightly.

So the bet isn't actually decided just by ASI timelines, but is one in which the short-timelines side of the bet only wins in the case of additional conjunctions with many clauses.

Operationalizing bets where at least one side believes that there is a significant probability of the end of civilization if they should win is already difficult. Tying one side of the bet but not the other to the continued existence of a very specific organization just makes it worse.

↑ comment by Vasco Grilo (vascoamaralgrilo) · 2025-01-17T11:53:21.668Z

Thanks, JBlack. As I say in the post, "We can agree on another [later] resolution date such that the bet is good for you". Metaculus' changing the resolution criteria does not obviously benefit one side or the other. In any case, I am open to updating the terms of the bet such that, if the resolution criteria do change, the bet is cancelled unless both sides agree on maintaining it given the new criteria.