Posts

Comments

"Richard Hanania was basically wrong, but so were the markets"

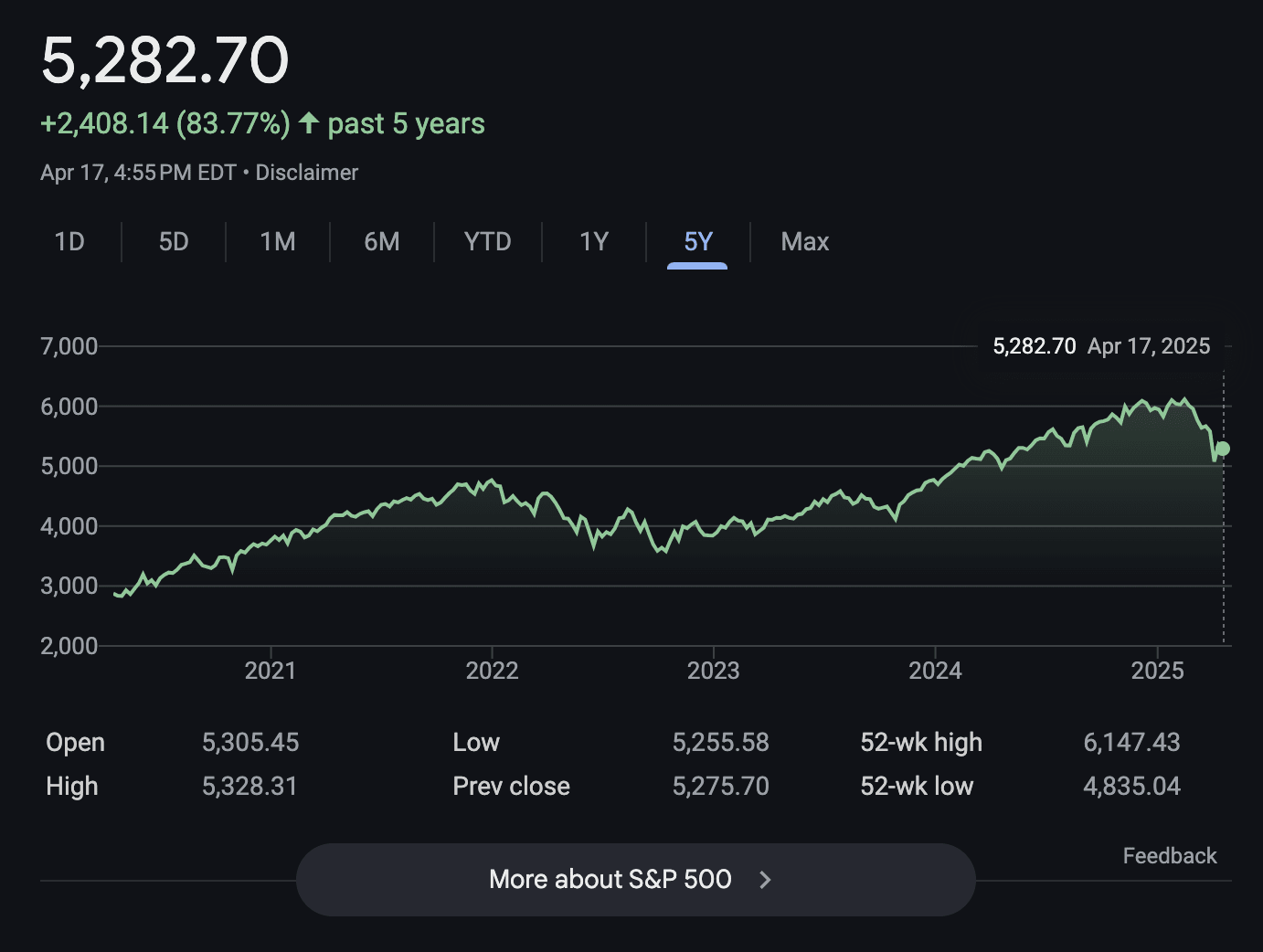

I do not see the markets giving a big vote in favour of Trump at the election. I cannot even pick out where the election is on the chart. To me, an equally plausible theory is that markets carried on seeing economic growth until the tariffs (which are very visible).

Group Link: https://chat.whatsapp.com/K54tAk9YyFPKJWAJQt7iTK

For the London group, this link didn't work for me.

I am excited about improvements to the wiki. Might write some.

Claims

The claims logo is ugly.

This piece was inspired partly by @KatjaGrace, who has a short story idea that I hope to co-write with her. It was also partly inspired by @gwern's discussion with @dwarkeshsp.

What would you conclude or do if

It's hard to know, because I feel this thing. I hope I might be tempted to follow the suggested breadcrumbs and see that humans really do talk about consciousness a lot. Perhaps I would try to build a biological brain and quiz it.

I was not at the session. Yes, Claude did write it. I assume the session was run by Daniel Kokotajlo or Eli Lifland.

If I had to guess, I would guess that the prompt shown is all it got (65%).

I wish we kept an upvotable list of journalists so we could track who is and isn't trusted in the community.

Seems not hard. Just a page with all the names as comments. I don't particularly want to add people myself, so make the top-level posts anonymous. Then anyone can add names, and everyone else can vote on whether they are trustworthy and add comments about their experiences with them.

This journalist wants to talk to me about the Zizian stuff.

https://www.businessinsider.com/author/rob-price

I know about as much as the median rat, but I generally think it's good to answer journalists on substantive questions.

Do you think this is a particularly good or bad idea? Do you have any comments about this particular journalist? Feel free to DM me.

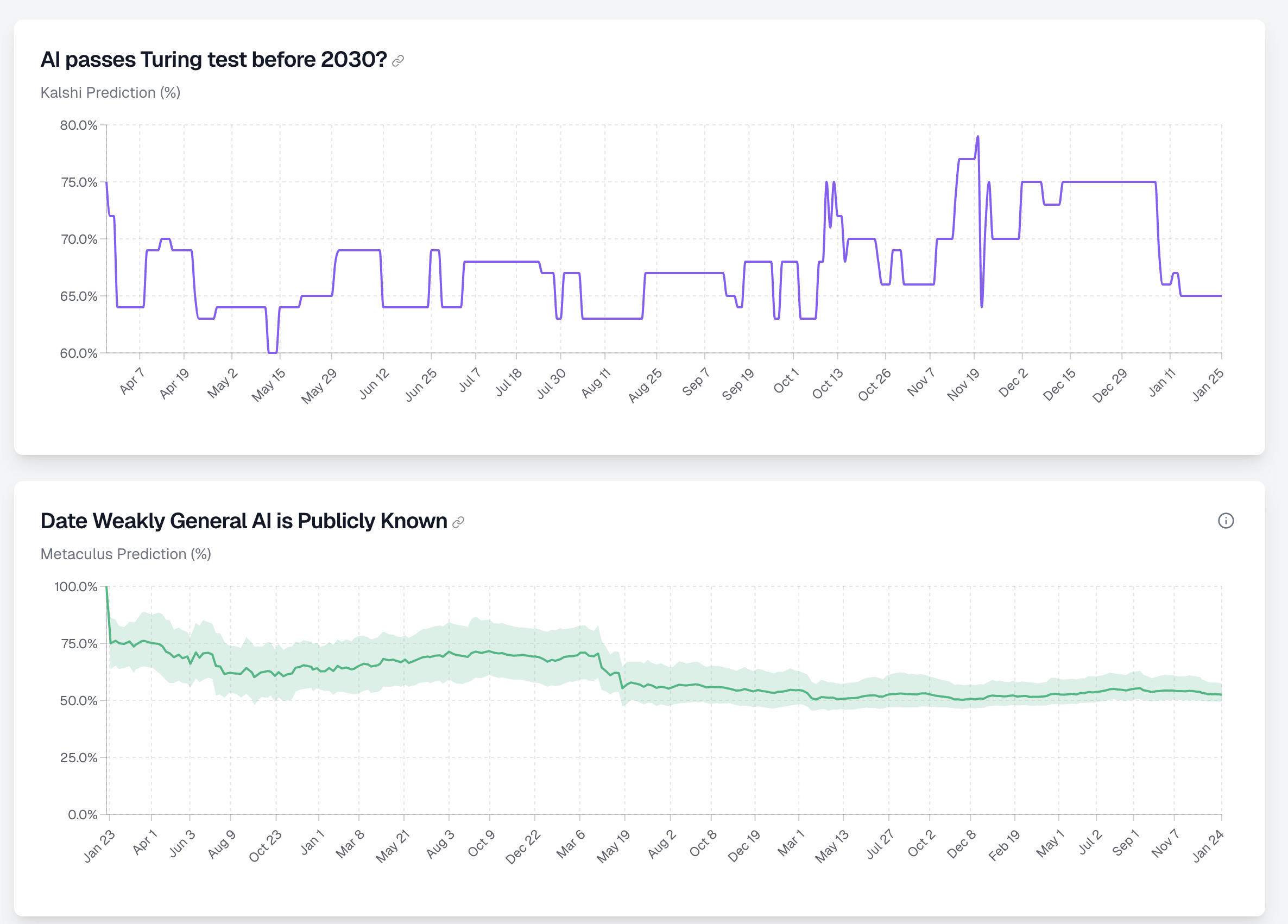

How might I combine these two datasets? One is a binary market, the other is a date market. So one gives a single probability, P(Turing test before 2030), while the other is a CDF across a range of dates, P(weakly general AI publicly known before that date).

Here are the two datasets.

Suggestions:

- Fit a normal distribution to the Turing test market such that the 1st percentile is at the current day and P(X<2030) matches the market's probability

- Mirror the second dataset, but raise each probability before 2030 so that P(X<2030) matches the probability from the first dataset
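A minimal sketch of the first suggestion in Python. It assumes dates are measured in decimal years, that "today" is 2025.0, and that the Turing test market reads 40%; both numbers are placeholders, not the real market data. Two quantile constraints, (today - mu) / sigma = Phi^-1(0.01) and (2030 - mu) / sigma = Phi^-1(p), fix both parameters of the normal, so they can be solved directly. `fit_normal` is a hypothetical helper name.

```python
from statistics import NormalDist


def fit_normal(today: float, p_before_2030: float, floor: float = 0.01) -> NormalDist:
    """Fit a normal distribution over the arrival date X (in decimal years)
    so that P(X < today) == floor and P(X < 2030) == p_before_2030."""
    if today >= 2030:
        raise ValueError("today must be before 2030")
    if not floor < p_before_2030 < 1:
        raise ValueError("p_before_2030 must lie strictly between floor and 1")
    std = NormalDist()  # standard normal, mean 0, sd 1
    z_today = std.inv_cdf(floor)         # roughly -2.33 for floor = 0.01
    z_2030 = std.inv_cdf(p_before_2030)
    # Solve (today - mu) / sigma = z_today and (2030 - mu) / sigma = z_2030.
    sigma = (2030 - today) / (z_2030 - z_today)
    mu = today - z_today * sigma
    return NormalDist(mu, sigma)


# Placeholder inputs, not real market data.
dist = fit_normal(today=2025.0, p_before_2030=0.40)
for year in (2026, 2028, 2030, 2035, 2040):
    print(year, round(dist.cdf(year), 3))
```

`dist.cdf(year)` can then be compared point by point with the date market. One caveat: a normal has a symmetric tail and a little mass before today, which may fit "when will X happen" questions poorly; a lognormal over years-from-now, fitted to the same two constraints, would be one alternative.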

Thoughts:

Overall the problem is that one doesn't know what distribution to fit the first dataset's single data point to. The second suggestion just borrows the shape of the second dataset's distribution for the first, but that seems quite complex.
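A sketch of the second suggestion, again in Python, with made-up points standing in for the date market and a placeholder 40% for the binary market. One assumption beyond the original suggestion: to keep the result a valid (non-decreasing) CDF, the points after 2030 also have to be squeezed into the range between the new P(X<2030) and 1, so this version rescales both sides of 2030 linearly. `interpolate_cdf` and `rescale_cdf` are hypothetical names.

```python
from bisect import bisect_left


def interpolate_cdf(points: list[tuple[float, float]], x: float) -> float:
    """Linearly interpolate a CDF given as (year, probability) pairs sorted by year."""
    years = [year for year, _ in points]
    if x <= years[0]:
        return points[0][1]
    if x >= years[-1]:
        return points[-1][1]
    i = bisect_left(years, x)  # years[i - 1] < x <= years[i]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def rescale_cdf(points, target, pivot=2030.0):
    """Rescale a CDF so its value at `pivot` equals `target`, keeping it non-decreasing.

    Points at or before the pivot are scaled by target / F(pivot); points after
    it are mapped linearly from [F(pivot), 1] onto [target, 1]."""
    f_pivot = interpolate_cdf(points, pivot)
    if not 0 < f_pivot < 1:
        raise ValueError("need 0 < F(pivot) < 1 to rescale")
    if not 0 <= target <= 1:
        raise ValueError("target must be a probability")
    rescaled = []
    for year, f in points:
        if year <= pivot:
            new_f = f * target / f_pivot
        else:
            new_f = target + (f - f_pivot) * (1 - target) / (1 - f_pivot)
        rescaled.append((year, new_f))
    return rescaled


# Made-up date-market points and a placeholder 40% from the binary market.
date_market = [(2026, 0.05), (2028, 0.15), (2030, 0.30), (2035, 0.60), (2040, 0.80)]
combined = rescale_cdf(date_market, target=0.40)
for year, p in combined:
    print(year, round(p, 3))
```

This keeps the date market's shape within each side of 2030 while forcing the 2030 value to agree with the binary market.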

"Why would you want to combine these datasets?"

Well, they are two different views on when something like AGI will appear. It seems good to combine them.

Suggested market. Happy to take suggestions on how to improve it:

https://manifold.markets/NathanpmYoung/will-o3-perform-as-well-on-the-fron?play=true

I guess I frame this as "vibes are signals too". Like if my body doesn't like someone, that's a signal. It might be that they smell or have an asymmetric face, but they might also have some untrustworthy trait that my body recognises (because detecting lying is really important evolutionarily).

I think it's good to analyse vibes and figure out whether unfair, judgemental factors are enough to account for most of the bad vibes, or whether there is a missing component that may be fair.

Seems fine, though this doesn't seem like the central crux.

Currently:

- Prediction markets are used

- Argument maps tend not to be.

My bird flu risk dashboard is here:

If you find it valuable, you could upvote it on HackerNews:

Yeah I wish someone would write a condensed and less onanistic version of Planecrash. I think one could get much of the benefit in a much shorter package.

Error checking in important works is moderately valuable.

I recall thinking this article got a lot right.

I remain confused about the non-linear stuff, but I have updated towards thinking the norm should be that stories are accurate, not merely informative with caveats given.

I am glad people come into this community to give critique like this.

Solid story. I like it. Contains a few useful frames and is memorable as a story.

I have listened to this essay about 3 times and I imagine I might do so again. Has been a valuable addition to my thinking about whether people have contact with reality and what their social goals might be.

I have used this dichotomy 5 to 100 times over the last few years. I am glad it was brought to my attention.

Sure, but again, to discuss what really happened: it wasn't that it wasn't prioritised, it was that I didn't realise it until late in the process.

That isn't prioritisation, in my view, that's half-assing. And I endorse having done so.

Or a coordination problem.

I think coordination problems are formed from many bad thinkers working together.

I mean the Democratic party insiders who resisted the idea that Biden was unsuitable for so long and counselled him to stay when he was pressed. I think those people were thinking badly.

Or perhaps I think they were thinking more about their own careers than about the next administration being Democratic.

Yes, this is one reason I really like forecasting. It forces me to see if my thinking was bad and learn what good thinking looks like.

I think it caused them to have much less time to choose a candidate, and so they chose a worse candidate than they otherwise could have.

If thinking is the process of coming to conclusions you reflectively endorse, I think they did bad thinking and that in time people will move to that view.

Thinking is about choosing the action that actually wins, not the one that is justifiable by social reality, right?

Do you mean this as a rebuke?

I feel a little defensive here, because I think the acknowledgement and subsequent actions were more accurate and information-preserving than any alternative I can think of. I didn't want to rewrite it, I didn't want to quickly hack useful chunks out, and I didn't want to pretend I thought things I didn't. I actually did hold these views once.

If you have suggestions for a better course of action, I'm open.

Do you find this an intuitive framework? I find the implication that conversation fits neatly into these boxes or that these are the relevant boxes a little doubtful.

Are you able to quickly give examples, in any setting, of what levels 1, 2, 3 and 4 would be?

I don't really understand the difference between simulacra levels 2 and 3.

- Discussing reality

- Attempting to achieve results in reality by inaccuracy

- Attempting to achieve results in social reality by inaccuracy

I've never really got 4 either, but let's stick to 1 - 3.

Also they seem more like nested circles rather than levels - the jump between 2 and 3 (if I understand it correctly) seems pretty arbitrary.

Upvote to signal: I would buy a button like this if it existed.

Physical object.

I might (20%) make a run of buttons that show how long it has been since you last pressed them, e.g. so I can push the button in the morning when I have put in my anti-baldness hair stuff and then not have to wonder whether I did.

Would you be interested in buying such a thing?

Perhaps they have a dry wipe section so you can write what the button is for.

If you would, please upvote the attached comment.

Politics is the Mindfiller

There are many things to care about and I am not good at thinking about all of them.

Politics has many many such things.

Do I know about:

- Crime stats

- Energy generation

- Hiring law

- University entrance

- Politicians' political beliefs

- Politicians' personal lives

- Healthcare

- Immigration

And can I confidently judge whether the things you say are actually the case? Or do I only have a surface-level, $100 understanding?

Politics may or may not be the mindkiller, whatever Yud meant by that, but for me it is the mindfiller: it's just a huge amount of work to stay on top of.

I think it would be healthier for me to focus on a few areas and then say I don't know about the rest.

Some thoughts on Rootclaim

Blunt, quick. Weakly held.

The platform has unrealized potential in facilitating Bayesian analysis and debate.

Either

- The platform could be a simple reference document

- The platform could be an interactive debate and truthseeking tool

- The platform could be a way to search the rootclaim debates

Currently it does none of these and is frustrating to me.

Heading to the site I expect:

- to be able to search the video debates

- to be able to input my own probability estimates into the current Bayesian framework

- Failing this, I would prefer to just have a reference document which doesn't promise these
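To make the second bullet concrete, here is a minimal sketch of what inputting my own estimates could look like, assuming a framework where each piece of evidence gets a P(evidence | hypothesis) for each side and the likelihood ratios are multiplied together. Multiplying them assumes the evidence items are conditionally independent, and the whole structure is my assumption, not a description of how Rootclaim's site actually works. `Evidence` and `posterior_h1` are hypothetical names, and the numbers are placeholders.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Evidence:
    """One piece of evidence plus the reader's own estimates (hypothetical fields)."""
    name: str
    p_if_h1: float  # reader's P(evidence | hypothesis 1)
    p_if_h2: float  # reader's P(evidence | hypothesis 2)

    @property
    def likelihood_ratio(self) -> float:
        return self.p_if_h1 / self.p_if_h2


def posterior_h1(prior_h1: float, evidence: list[Evidence]) -> float:
    """Posterior P(hypothesis 1) via odds-form Bayes, assuming the evidence
    items are conditionally independent given each hypothesis."""
    if not 0 < prior_h1 < 1:
        raise ValueError("prior must be strictly between 0 and 1")
    prior_odds = prior_h1 / (1 - prior_h1)
    posterior_odds = prior_odds * prod(e.likelihood_ratio for e in evidence)
    return posterior_odds / (1 + posterior_odds)


# Placeholder estimates a reader might type in.
my_estimates = [
    Evidence("evidence A", p_if_h1=0.8, p_if_h2=0.2),  # likelihood ratio 4
    Evidence("evidence B", p_if_h1=0.3, p_if_h2=0.6),  # likelihood ratio 0.5
]
posterior = posterior_h1(0.5, my_estimates)
print(round(posterior, 3))  # 0.667
```

Changing one `p_if_h1` or `p_if_h2` and rerunning is the kind of interaction I would hope the site offered.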

I am not sure most foodies are thinking about food with every new person. Maybe hardcore foodies?

Sure but then those things aren't due to an actual relationship with an actual God, they are for the reasons you state. Which is really really importantly different.

I find it pretty tiring to add all the footnotes in. If the post gets 50 karma or this gets 20 karma, I probably will.

@Ben Pace, do you folks have some kind of Substack upload tool? I know you upload Katja's stuff. If there were a thing I could put a Substack address into and get the footnotes imported properly, that would be great.

Is there a summary of the rationalist concept of lawfulness anywhere? I am looking for one and can't find it.

But isn't the point of karma to be a ranking system? Surely it's bad if it's a suboptimal one?

I would have a dialogue with someone on whether Piper should have revealed SBF's messages. Happy to take either side.

Thanks, appreciated.

Sure but shouldn't the karma system be a prioritisation ranking, not just "what is fun to read?"

I would say I took at least 10 hours to write it. I rewrote it about 4 times.

Yeah but the mapping post is about 100x more important/well informed also. Shouldn't that count for something? I'm not saying it's clearer, I'm saying that it's higher priority, probably.

Hmmmm. I wonder how common this is. This is not how I think of the difference. I think of mathematicians as dealing with coherent systems of logic and engineers dealing with building in the real world. Mathematicians are useful when their system maps to the problem at hand, but not when it doesn't.

I should say I have a maths degree, so it's possible that my view of mathematicians and the general view are not coincident.

Yeah this seems like a good point. Not a lot to argue with, but yeah underrated.

It is disappointing/confusing to me that of the two articles I recently wrote, the one that was much closer to reality got a lot less karma.

- A new process for mapping discussions is a summary of months of work that I and my team did on mapping discourse around AI. We built new tools and employed new methodologies. It got 19 karma.

- Advice for journalists is a piece that I wrote in about 5 hours after perhaps 5 hours of experience. It has 73 karma and counting.

I think this isn't much evidence, given it's just two pieces. But I do feel a pull towards coming up with theories rather than building and testing things in the real world. To the extent this pull is real, it seems bad.

If true, I would recommend both that more people build things in the real world and talk about them and that we find ways to reward these posts more, regardless of how alive they feel to us at the time.

(Aliveness being my hypothesis - many of us understand or have more live feelings about dealing with journalists than a sort of dry post about mapping discourse)

Hmmm, what is the picture that the analogy gives you? I struggle to imagine how it's misleading, but I want to hear.

A common criticism seems to be "this won't change anything" (see here and here). People often believe that journalists can't choose their headlines and so it is unfair to hold them accountable for them. I think this is wrong for about 3 reasons:

- We have a lot of journalists pretty near to us whose behaviour we absolutely can change. Zvi, Scott and Kelsey don't tend to print misleading headlines, but they are quite a big deal, and acting as if creating better incentives is pointless because we can't change everything seems to strawman my position.

- Journalists can control their headlines. I have seen journalists change headlines after pushback once or twice. I don't think it was the editors who read the comments and changed the headlines of their own accord; I imagine the journalists said they were taking too much pushback and asked for the change. This is therefore probably an existence proof that journalists can affect headlines. I think reality is even further in my direction. I imagine that journalists and their editors are involved in the same social transactions as exist between many employees and their bosses. If they ask to change a headline, they can often probably shift it a bit. Getting good sources might be enough to buy this from them.

- I am not saying that they must have good headlines, I am just holding the threat of their messages against them. I've only done this twice, but in one case a journalist was happy to give me this leverage. And having it, I felt more confident about the interview.

I think there is a failure mode where some rats hear a system described and imagine that reality matches it as they imagine it. In this case, I think that's mistaken - journalists have incentives to misdescribe their power over their own headlines. And reality is a bit messier than the simple model suggests. And we have more power than I think some commenters think.

I recommend trying this norm. It doesn't cost you much, it is a good red flag if someone gets angry when you suggest it, and if they agree you get leverage to use if they betray you. It seems like a good trade that only gets better the more of us do it. Rarely is reality so kind (and hence I may be mistaken).

I don't think that's the case, because the journalist you are speaking to is not the person who makes the decision.

I think this is incorrect. I imagine journalists have more latitude to influence headlines when they really care.

Why do you think it's stretched? It's about the difference between mathematicians and engineers. One group is about relating to the real world, the other is about logically consistent ideas that may be useful.

I exert influence where I can. I think if all of LessWrong took up this norm we could shift the headline-content accuracy gap.