Don't go bankrupt, don't go rogue

post by Nathan Young · 2025-02-06T10:31:14.312Z


Would you bet your life’s savings on a 50% chance to triple it? Now imagine betting your sanity, values and goals. How much should you be willing to put on the table?

You wanna bet?

Let’s say you go to a casino that offers a coin flip: heads, your stake is tripled; tails, you lose it. How much of your money should you bet? How many times should you play?

One might think this question is solely about expected value. Does the bet offer a better return than other possible investments? If so, bet everything; if not, bet nothing.

This seems like a myopic view. For most of us, it’s about expected utility. How much do I value very large amounts of money? What does it feel like to go bankrupt? Even if this is the highest-expected-value option, if I put all my money on it, I’ll go bankrupt half the time.

Financially, many people settle for betting only part of their wealth, even on outcomes they expect to return multiples of their stake. If you go bankrupt, you can’t play anymore; you’re stuck at zero. And for most people[1] the potential negatives outweigh the potential positives.
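The standard way to size a bet like this is the Kelly criterion (see footnote 1). Here is a minimal sketch, assuming a fair coin and reading "triple it" as a win returning three times the stake (net odds of 2 to 1). The function names are illustrative and not from any library.

```python
import math


def kelly_fraction(p: float, b: float) -> float:
    """Kelly stake as a fraction of wealth: f* = p - (1 - p) / b,
    for win probability p and net odds b (win b per unit staked)."""
    return p - (1 - p) / b


def log_growth(f: float, p: float, b: float) -> float:
    """Expected log-growth of wealth per bet when staking fraction f.
    Assumes 0 < p < 1, so a loss is always possible."""
    if f >= 1:
        # Staking everything means one loss leaves zero wealth; log(0) is -infinity.
        return -math.inf
    return p * math.log(1 + b * f) + (1 - p) * math.log(1 - f)


if __name__ == "__main__":
    p, b = 0.5, 2.0  # fair coin; a win triples the stake (net gain of 2x)
    print(f"Kelly fraction: {kelly_fraction(p, b):.2f}")
    for f in (0.1, 0.25, 0.5, 1.0):
        print(f"stake {f:.0%}: expected log growth {log_growth(f, p, b):+.4f}")
```

Under these assumptions the Kelly stake is 25% of your wealth. Staking half gives roughly zero long-run growth. Staking everything scores minus infinity, which is the formal version of "you can't play anymore". If "triple it" instead means a net gain of three times the stake, set `b = 3.0`.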

Your morality has a bank balance

I suggest there is a similar interplay between power and purpose. I can get more and more power, but I may be risking my integrity or goals, and all that power isn’t much use if I am unrecognisable to myself.

Famously, Sam Bankman-Fried said he would bet the whole world on a 51% chance of getting two worlds. Here is the tail end of that exchange[2]:

[Tyler] COWEN: Then you keep on playing the game. So, what’s the chance we’re left with anything? Don’t I just St. Petersburg paradox you into nonexistence?

BANKMAN-FRIED: Well, not necessarily. Maybe you St. Petersburg paradox into an enormously valuable existence. That’s the other option.

Bankman-Fried then perpetrated one of the largest frauds in history. He took a business worth billions and, in trying to make it worth tens of billions, ended up in jail.

I don’t understand Bankman-Fried, but I do wonder whether his past self would endorse this. If we could take him back in time and stand him in front of his teenage self, would that boy endorse, or be appalled by, the man he had become?[3]

Likewise, many look at Elon Musk and wonder where the autistic genius went, and how he came to be replaced by the right-wing, edgelord industrialist[4].

“What good is it for someone to gain the whole world, yet forfeit their soul?” The Bible, Mark 8:36.

How can we think about these risks of value drift? Of losing touch with one’s previous purpose?

Before we start, some definitions:

By power, I mean something like the ability to effect change, discounted by the amount of consent required from others. I don’t mean this as a mathematical function, but to me, doing stuff and not needing permission are the key elements. If you can spend a billion dollars without asking anyone, that's a lot of power. If you can spend $10 bn but need to go through a long and complex approvals process, that may be less power.

By value drift, I mean one’s goals and purposes changing over time. We see this in many people’s lives. People get married and seem sincere in claiming to be devoted and faithful, but sadly they later become absorbed in their careers or distracted by someone else. When a marriage breaks down, often one spouse or the other has had their values drift.

A theory of value drift

Money is very amenable to probability calculations, but power is hard to quantify and comes in many forms: money is one kind of power, as are political office and one’s career. So we’ll make a toy model.

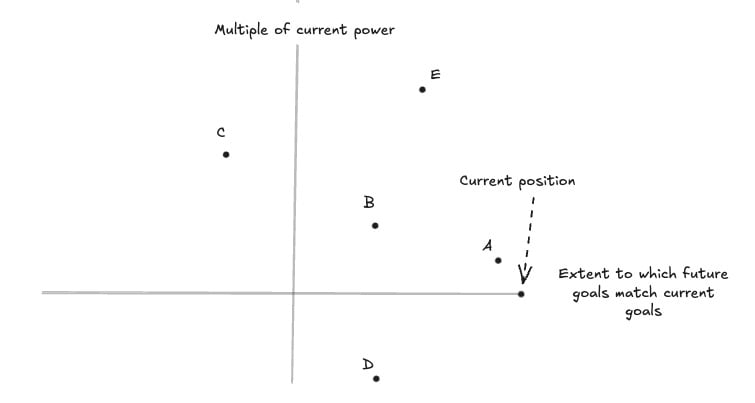

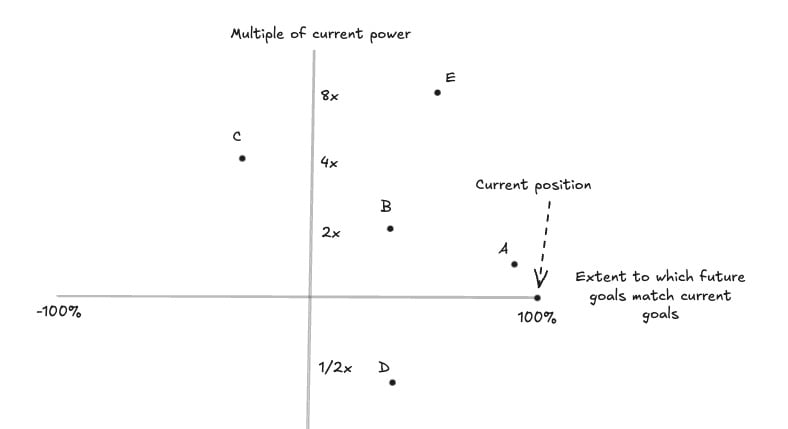

Let’s imagine a simple model in which I have some amount of power and some goals. How should I trade these off if I gain more power? Here, I can drift from my values by moving along the x-axis and gain (or lose) power by moving along the y-axis[5]. Which moves should I want to make? Let’s consider some positions, calling the new goals “future goals” and the ones we started with “current goals”.

Position A. Here, I gain a bit of power and am able to use it more or less totally for current goals. This person is recognisably me, but with a bit more leverage.

Position B. Here I perhaps double my power, but my goals have substantially shifted. Now maybe this is good, perhaps I will have learned of new, better goals, but perhaps not. I am a big fan of having children, but many people seem to assume children are a bit like this - I might develop a load of new priorities that lessen my attachment to my previous ones. I think the effect is overstated, but I use it to say these new goals may not be bad.

Position C. Here I am more powerful still, but now work moderately against my current goals. I trash positions I previously stood for, I stand against groups I used to ally with.

Position D. Here I am a chunk less powerful, and substantially less able to use my power for my current goals.

Position E. This seems better. Our future goals will change a bit, but we are just way more powerful, and even the smaller share of our power that matches our current priorities is much more than we have now.

I think it’s worth considering which of these moves we would make. E seems great; it’s hard to choose between A and B. C and D both seem pretty bad, though plausibly C is even worse than D, since we would be using our significantly greater power to push back against our current goals.

We can label the axes on this toy model and things become clearer. Remember, this is still a toy example. Now C works 25% against our current positions, with 4x the power: 4 × 25% = 1, so the equivalent of all our current power now pushes against our current goals. We will do nothing that we did before and will work significantly against our “current” self. In this example, even D looks better, where we move forward at perhaps ⅛ the pace we did before. E seems even more clearly good, with perhaps 4x the power to direct to our current goals after the reduction is taken into account. A seems an improvement, but our current position looks better than B.
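The arithmetic behind these readings can be made explicit. This is a minimal sketch, assuming the toy model scores a position as power multiple times alignment with current goals, where +1 is fully aligned, 0 is orthogonal and -1 is fully opposed. Only C's numbers (4x power, 25% against) come from the text. The components for D and E are hypothetical and were picked only to reproduce the ⅛ and roughly 4x net figures above.

```python
from fractions import Fraction


def net_push(power: Fraction, alignment: Fraction) -> Fraction:
    """Toy score (an assumption): power multiple x alignment with current goals,
    where alignment runs from -1 (fully opposed) through 0 (orthogonal) to +1."""
    return power * alignment


# (power multiple, alignment) relative to where we stand today.
POSITIONS = {
    "status quo": (Fraction(1), Fraction(1)),
    "C": (Fraction(4), Fraction(-1, 4)),    # from the text: 4x power, 25% against
    "D": (Fraction(1, 2), Fraction(1, 4)),  # hypothetical split giving a 1/8 net pace
    "E": (Fraction(8), Fraction(1, 2)),     # hypothetical split giving roughly 4x net
}


def ranked(positions: dict) -> list:
    """Position names sorted from most to least net push toward current goals."""
    return sorted(positions, key=lambda name: net_push(*positions[name]), reverse=True)


if __name__ == "__main__":
    for name in ranked(POSITIONS):
        print(f"{name:>10}: net push {net_push(*POSITIONS[name])}")
```

`Fraction` keeps the toy numbers exact, so C comes out at exactly -1, which is one whole "current self" working against us. That is why even D's slow ⅛ pace ranks above it.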

What are you talking about, Nathan?

The thing that caused me to write this article is watching many people go a bit crazy on Twitter. Some of them are quite powerful people. And I suggest they were making trades like this without realising it. Here are some examples:

- Elon’s shift to the right. Banning the word “cisgender” on X is hardly the free speech position he previously espoused. Trump attempted to get fake slates of electors created after the 2020 election. Would Musk have previously supported someone who did that?

- Dustin Moskovitz, the Facebook co-founder and philanthropist, regularly rails against Musk on Threads and has called Tesla the next Enron. I have my issues with Musk, but is he really a top priority? And like it or not, Tesla seems to produce good cars. I have driven in them; Autopilot is great.

- Marc Andreessen, the venture capitalist and tech booster, has become ever more tribal, creating lists of enemy ideas and quoting memes that he could tell are wrong if he chose to check.

- Many right-wing commentators were willing to call out January 6th when it happened, but have since moved to talking about ‘election irregularities’. Often their brands are built around integrity or hard truth-telling (eg Ben Shapiro’s “facts don’t care about your feelings”). How is that going?

Or some non-social media based examples:

- Green party politicians and nuclear power. In the UK, the Green Party has long stood against nuclear power. But France, which built nuclear power stations, has far lower emissions from its electricity. Which is better for the environment? How did the greens get here?

- Bill Gates used his Microsoft money to set up the Gates Foundation. I have no idea if this was his intention when he ran the company so hard. Note that not all shifts in goals are bad.

- Mike Pence accepting the Vice Presidency. I think he knew what kind of man Trump was, and perhaps he thought it was worth the risk to get Roe v. Wade overturned. But he seems shaken by the events of January 6th, which were largely caused by a man he helped elect.

- Sam Bankman-Fried wanted to become wealthy so he could help animal welfare causes. But by the time he was exceedingly wealthy, he wanted to get wealthier still. It’s possible Bankman-Fried really would endorse this; I don’t know.

- Effective altruist funder Open Philanthropy has seemingly shifted away from funding even slightly [right-wing organisations](https://80000hours.org/2025/01/it-looks-like-there-are-some-good-funding-opportunities-in-ai-safety-right-now/). I thought they were about effectiveness.

I have written previously about how not to go insane, but here I’d like to frame it as “sometimes power isn’t worth the tradeoffs, even for power’s sake”. We gain power for reasons. And even if those reasons are purely selfish (a life of sex, drugs and rock and roll), we still need a mind capable[6] of enjoying the sex, the drugs and the rock music.

If we could take them back to stand before their previous selves, before whatever bargains they made for that power, would their previous selves endorse the choice?

Don’t bet the farm

The conventional answer to the casino problem posed at the top has to do with bet sizing: don’t bet everything, no matter how confident you are.
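The case for not betting everything gets clearer when the game repeats. This is a minimal sketch, assuming a fair coin where a win triples the stake. It computes the exact distribution of outcomes over n rounds instead of simulating them. The function names are hypothetical.

```python
from math import comb


def wealth_distribution(f: float, n: int, p: float = 0.5, b: float = 2.0):
    """Exact (wealth, probability) pairs after n bets, each staking fraction f
    of current wealth, starting from wealth 1. A win multiplies wealth by
    (1 + b*f) and a loss by (1 - f)."""
    return [
        ((1 + b * f) ** wins * (1 - f) ** (n - wins),
         comb(n, wins) * p ** wins * (1 - p) ** (n - wins))
        for wins in range(n + 1)
    ]


def expected_wealth(f: float, n: int) -> float:
    """Mean final wealth across all outcomes."""
    return sum(w * pr for w, pr in wealth_distribution(f, n))


def prob_ruined(f: float, n: int) -> float:
    """Probability of ending with nothing (only possible when f == 1)."""
    return sum(pr for w, pr in wealth_distribution(f, n) if w == 0)


if __name__ == "__main__":
    n = 10
    for f in (0.25, 1.0):
        print(f"stake {f:.0%} for {n} rounds: "
              f"mean wealth {expected_wealth(f, n):.2f}, "
              f"chance of ruin {prob_ruined(f, n):.4f}")
```

Over ten rounds, going all in has by far the higher mean (1.5^10, about 58 times your starting wealth). However, it ends in ruin with probability 1 - 0.5^10, about 99.9%. Staking a quarter has a much lower mean (1.125^10, about 3.2x) but can never reach zero.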

I suggest the same is true here. No matter how confident I am that I have figured out the true nature of the world, I probably shouldn’t bet everything on it. Whether it’s climate change, wokeness or Trump (for or against), if you go all in on such things, eventually you’ll be wrong, and you may have lost yourself in the process. Even when I am pretty confident about something, eg the importance of AI or the badness of factory farms, I try not to let it subsume me. Sometimes it’s good to go dancing.

I think that my Christian friends often seem a bit healthier in this regard. Perhaps they bundle all their obsession into God and so aren’t so easily obsessed with this week’s political winds. But I also respect Sam Harris for leaving Twitter when he felt it was bad for him and Bryan Caplan for avoiding the news and cultivating a “beautiful bubble” for himself. And I guess that wisdom involves saying no. Turning down positions that will corrupt me. Saying awkward but true things. Upsetting powerful people. Just because the deal looks good, doesn’t mean I should invest everything in it.

More power doesn’t always mean more speed

I am not saying power is bad, and I think power speaks pretty loudly in its own defence. What I mean is that in pursuing power, I am pursuing it for goals. And it is worth asking whether, with this new power, I will actually reach those goals better. Do I trust the more powerful version of me? Do I trust him with my goals, my consciousness, my relationships with the people I love? And if I do not, perhaps for the sake of us both[7], I should be less willing to go rogue.

Thanks to Argon, Jens, Eleanor and Josh for their comments. If you have general suggestions for improving my blogs, I'd like to hear them. You can subscribe to these and less rationality-focused blogs here.

- ^

If you want to read more about this, google "Kelly betting". Though if you're anything like me, you might want to read something like Never Go Full Kelly too, to balance out your newfound confidence.

- ^

The full related section of the discussion between Tyler Cowen and Sam Bankman-Fried:

COWEN: Okay, but let’s say there’s a game: 51 percent, you double the Earth out somewhere else; 49 percent, it all disappears. Would you play that game? And would you keep on playing that, double or nothing?

BANKMAN-FRIED: With one caveat. Let me give the caveat first, just to be a party pooper, which is, I’m assuming these are noninteracting universes. Is that right? Because to the extent they’re in the same universe, then maybe duplicating doesn’t actually double the value because maybe they would have colonized the other one anyway, eventually.

COWEN: But holding all that constant, you’re actually getting two Earths, but you’re risking a 49 percent chance of it all disappearing.

BANKMAN-FRIED: Again, I feel compelled to say caveats here, like, “How do you really know that’s what’s happening?” Blah, blah, blah, whatever. But that aside, take the pure hypothetical.

COWEN: Then you keep on playing the game. So, what’s the chance we’re left with anything? Don’t I just St. Petersburg paradox you into nonexistence?

BANKMAN-FRIED: Well, not necessarily. Maybe you St. Petersburg paradox into an enormously valuable existence. That’s the other option.

- ^

Bankman-Fried might even think this gamble was worth it, for all those other Sams in other universes, who now have $50 bn to spend on their causes. Though many of them may gamble it on even larger frauds.

- ^

Some draft reviewers disliked that "right-wing, edgelord industrialist" is mixed valence - industrialist isn't bad. I dunno. Seems more accurate to how the median person views him.

- ^

A reviewer asked what the origin represents. Not much. Technically, it's the point at current power levels where one's new goals are entirely orthogonal to (neither for nor against) one's current goals.

- ^

There is an analogy to AI here, which is that one trains AI to achieve one's goals, but if the AI has different goals, then it may not be an improvement.

- ^

One might see this as a principal-agent problem where the agent is my future self. Do I trust him with all I have built up?

1 comment

comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2025-02-10T04:46:55.212Z

How did the greens get here?

Largely via opposition to nuclear weapons, and some cost-benefit analysis which assumes nuclear proponents are too optimistic about both costs and risks of nuclear power (further reading). Personally I think this was pretty reasonable in the 70s and 80s. At this point I'd personally prefer to keep existing nuclear running and build solar panels instead of new reactors, though if SMRs worked in a sane regulatory regime that'd be nice too.